Abstract

Much of our knowledge is acquired not from direct experience but through the speech of others. Speech allows rapid and efficient transfer of information that is otherwise not directly observable. Do infants recognize that speech, even if unfamiliar, can communicate about an important aspect of the world that cannot be directly observed: a person’s intentions? Twelve-month-olds saw a person (the Communicator) attempt but fail to achieve a target action (stacking a ring on a funnel). The Communicator subsequently directed either speech or a nonspeech vocalization to another person (the Recipient) who had not observed the attempts. The Recipient either successfully stacked the ring (Intended outcome), attempted but failed to stack the ring (Observable outcome), or performed a different stacking action (Related outcome). Infants recognized that speech could communicate about unobservable intentions, looking longer at Observable and Related outcomes than the Intended outcome when the Communicator used speech. However, when the Communicator used nonspeech, infants looked equally at the three outcomes. Thus, for 12-month-olds, speech can transfer information about unobservable aspects of the world such as internal mental states, which provides preverbal infants with a tool for acquiring information beyond their immediate experience.

Keywords: infant cognitive development, infant speech perception, knowledge acquisition, psychological reasoning, communication

Much of the knowledge humans have about the world—from physical properties of planets to the internal psychological states of others—is acquired indirectly through the speech of others (1–4). Adult humans use speech to transmit and acquire information about aspects of their environment that are directly observable (e.g., spilling coffee) but also about unobservable internal states such as beliefs, knowledge, and desires (e.g., wanting a refill). Speech is thus a powerful means of communication, which allows for rapid and efficient transfer of information that is otherwise not directly observable. Do preverbal infants recognize that speech can communicate information about another person’s internal states? Such recognition would allow infants to use others’ speech as a powerful mechanism for knowledge acquisition and provide a tool for acquiring information about what has not been or cannot be directly experienced (e.g., refs. 2, 5, and 6) even before they understand the meanings of particular words. Here, we examine whether 12-month-old infants understand that speech can communicate to a second person about an intention, an internal state that cannot be directly observed.

By their first birthday, infants treat speech and nonspeech as functionally distinct when individuating and categorizing objects (7–9). For example, distinct speech labels (but not distinct tones or emotional vocalizations) lead infants to expect distinct objects (9), whereas pairing multiple category members with the same speech label (but not the same tone) helps infants detect similarities among them and generalize to novel members (e.g., refs. 7 and 8). Further, when observing a third-party interaction between two people, 12-month-olds recognized that speech, but not nonspeech vocalizations, can communicate about a target object. That is, after a person (the Communicator) repeatedly grasped a target object, 12-month-olds expected that the Communicator could inform a second person (the Recipient) about that object by using speech, but not by coughing or producing emotional vocalizations (10).

Although infants seem to understand that speech is used for individuating, categorizing, and communicating about observable entities such as objects, no study has directly examined whether infants recognize that speech can communicate about an important unobservable aspect of the world: intentions. An understanding of the intentions of others is fundamental for human cognition, allowing us to go beyond perceptual and behavioral information to make inferences about the underlying causes of human action (e.g., refs. 11 and 12). By 12 months, infants understand that others’ behavior is driven by their internal states, inferring that successful and failed actions could nevertheless be motivated by the same underlying intention (11, 13, 14). Although an understanding that others have intentions seems to be in place by at least 12 months, whether infants understand that people can communicate to others about these unobservable intentions is not yet known.

Here, we examined whether 12-month-olds understand that one person can use speech, even novel speech, to inform a second person about her unobservable intention. In the current study, infants saw the Communicator, alone, attempt to stack a ring on a funnel but never succeed, because the funnel was out of her reach (Fig. 1). By 12 months, infants who see failed actions can infer the intentions underlying them (11, 13, 14; cf. 15); thus, infants should infer the Communicator’s goal (i.e., to stack the ring on the funnel) from these actions. By this age, infants also track their own knowledge separately from the information available to others (e.g., refs. 16 and 17); thus, infants should be sensitive to the fact that although they themselves know the Communicator’s intention, the Recipient (who was not present during these scenes) does not have access to the same information. Infants then saw the Recipient, also alone, interact neutrally with all of the objects.

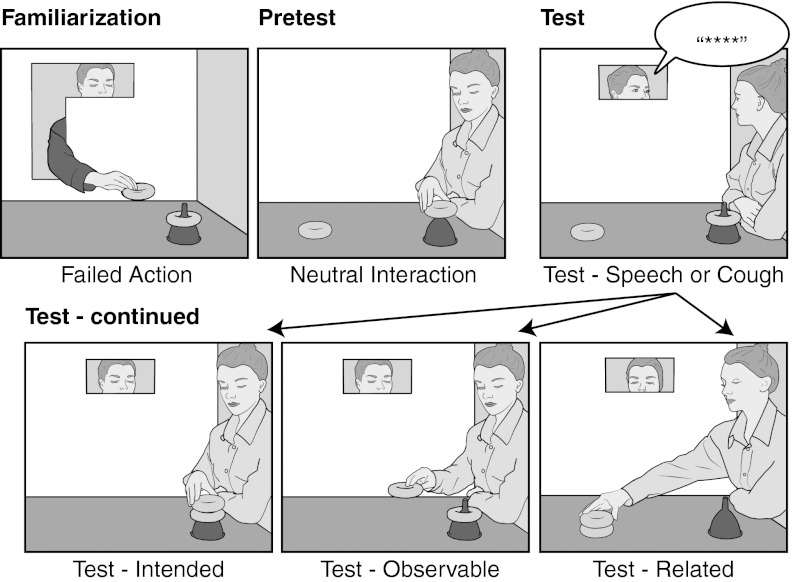

Fig. 1.

Procedure. During Familiarization trials (Upper Left), the Communicator attempted but failed to stack a ring on a funnel that already held a ring. During the Pretest trial (Upper Center), the Recipient interacted neutrally with all objects. In the Test trial (Upper Right), the Communicator vocalized, either speech or a cough, then the Recipient either performed the Intended (Lower Left), Observable (Lower Center), or Related (Lower Right) outcome.

During the test phase, the Communicator and Recipient were present together for the first time; however, a change in the scene prevented the Communicator from being able to reach the objects. The Communicator turned to the Recipient and uttered either a novel word unknown to the infants (“koba”) or a cough (“xhm-xhm-xhm”). Infants who heard the novel word should infer that its meaning was understood by both the Communicator and Recipient because infants understand that language is conventional and, thus, shared between people (10, 18, 19), and should expect the Recipient to be cooperative (e.g., refs. 20 and 21). Each infant then saw one of three test outcomes in which the Recipient: (i) completed the Communicator’s intended action (stacking the ring on the funnel; Intended), (ii) performed the Communicator’s observable movements (attempting to stack the ring but failing; Observable), or (iii) completed a perceptually distinct but related stacking action that, like the Communicator’s intended action, had never been shown (removing a ring already on the funnel and stacking it on the ring on the floor; Related).

In all conditions, infants should know the Communicator’s intention (from observing her prior failed stacking attempts), but the Recipient would have the information needed to accomplish that intended action only if the Communicator conveyed it with an appropriate vocalization (speech). If infants evaluate the Recipient’s knowledge of the Communicator’s intention from their own perspective rather than from the Recipient’s, or if they view any type of vocalization as able to communicate about intentions, they should expect the Recipient to fulfill the Communicator’s intention regardless of the vocalization. If, however, infants recognize that the Recipient’s knowledge differs from their own, and understand that some vocalizations (speech) but not others (coughing) can communicate about intentions, they should expect the Recipient to fulfill the Communicator’s intention only when the Communicator used speech.

Results

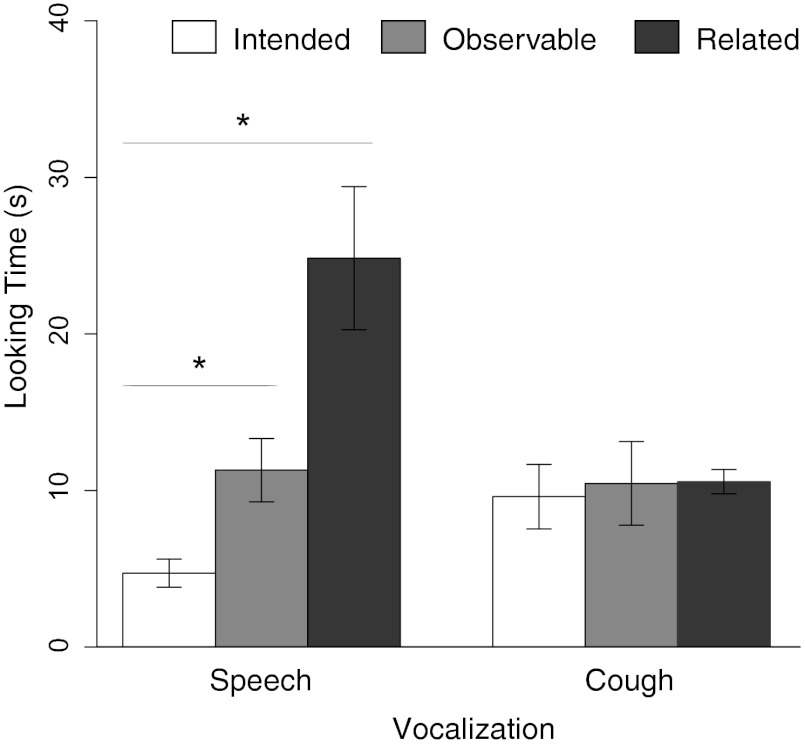

If infants understood that speech, but not nonspeech, could transfer information about an intended, but unachieved and unobservable, goal, they should expect the Recipient to perform the Intended outcome. They should therefore look longer at the Observable and Related outcomes than at the Intended outcome when the Communicator said “koba” but not when she said “xhm-xhm-xhm.” A factorial analysis of variance (ANOVA) revealed a reliable interaction between Vocalization (Speech, Cough) and Outcome (Intended, Observable, Related) [F(2,42) = 7.68, P = 0.001, η² = 0.20], with no main effect of Vocalization [F(1,42) = 2.64, P > 0.1]. Infants who heard speech responded differently across the three outcomes [F(2,21) = 12.24, P < 0.001, η² = 0.54], looking reliably longer at the Observable [t(14) = 2.98, P = 0.010, r = 0.62] and Related [t(14) = 4.32, P = 0.003, r = 0.76] outcomes than at the Intended outcome (Fig. 2). Infants also looked longer at the Related than at the Observable outcome [t(14) = 2.71, P = 0.017, r = 0.59]. This looking pattern suggests that, when an intention has been conveyed through speech, infants treat the Recipient’s removing the ring from the funnel (the Related outcome) as incongruent with, and possibly the opposite of, the Communicator’s intended goal of stacking a ring on the funnel. In contrast, infants who heard coughing looked equally at the three outcomes [F(2,21) < 1].
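The degrees of freedom and effect sizes reported above follow from the design (8 infants per cell) and standard conversions, and they can be checked with a short script. This is a sketch under stated assumptions, not the authors’ analysis code: it assumes that r was computed as r = √(t²/(t² + df)) for independent-samples t tests, and that η² for the one-way Speech ANOVA equals F·df₁/(F·df₁ + df₂); the function names are illustrative.

```python
import math

N_PER_CELL = 8  # infants per Vocalization x Outcome cell (from Participants)

def r_from_t(t: float, df: int) -> float:
    """Convert a t statistic to the effect size r = sqrt(t^2 / (t^2 + df))."""
    return math.sqrt(t * t / (t * t + df))

def eta_squared_from_f(f: float, df_effect: int, df_error: int) -> float:
    """Recover eta^2 = (F * df_effect) / (F * df_effect + df_error).

    For a one-way ANOVA this equals SS_between / SS_total; for an effect in a
    factorial design it gives partial eta^2, which may differ from plain eta^2.
    """
    return f * df_effect / (f * df_effect + df_error)

# Error degrees of freedom implied by the design (N minus number of groups).
df_factorial = 6 * N_PER_CELL - 6   # 2 x 3 ANOVA on 48 infants -> 42
df_speech = 3 * N_PER_CELL - 3      # one-way ANOVA on the 24 Speech infants -> 21
df_pairwise = 2 * N_PER_CELL - 2    # t test on two independent groups of 8 -> 14

# t values as reported in the Results (labels are descriptive only).
reported_t = {
    "Speech: Observable vs Intended": 2.98,
    "Speech: Related vs Intended": 4.32,
    "Speech: Related vs Observable": 2.71,
    "Intended: Cough vs Speech": 2.49,
    "Related: Speech vs Cough": 3.07,
    "Intended: Actor vs Communicator": 2.44,
}

for label, t in reported_t.items():
    print(f"{label}: r = {r_from_t(t, df_pairwise):.2f}")
print(f"Speech ANOVA: eta^2 = {eta_squared_from_f(12.24, 2, df_speech):.2f}")
```

Run as written, it prints r = 0.62, 0.76, 0.59, 0.55, 0.63, and 0.55 for the six comparisons and η² = 0.54 for the Speech ANOVA, matching the reported values. Applied to an effect in the factorial ANOVA, the same formula yields partial η², which need not equal a plain η².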

Fig. 2.

Results. Mean looking times and SEM for the Intended (white bars), Observable (gray bars), and Related (black bars) outcomes for Speech (Left) and Cough (Right) vocalizations. *P < 0.05.

To further explore infants' interpretation of the vocalizations, we next compared looking times for the two vocalization conditions within each outcome type. For the Intended outcome, infants looked longer when the Communicator had previously coughed than when she uttered speech [t(14) = 2.49, P = 0.026, r = 0.55]. In the Speech—but not the Cough—condition, infants seemed to expect the Recipient to cooperatively complete the Communicator's unfulfilled intention. For the Observable outcome, infants looked equally whether the Communicator had spoken or coughed [t(14) = 0.25, P = 0.803]. For the Related outcome, infants looked longer when the Communicator had previously spoken than when she coughed [t(14) = 3.07, P = 0.008, r = 0.63], consistent with treating this outcome as incongruent when the Communicator spoke.

We verified that infants were equally attentive in all conditions while the critical information was presented. First, looking times for the trials preceding the test trial were summed for each infant and analyzed in a Vocalization (Speech, Cough) by Outcome (Intended, Observable, Related) ANOVA. There were no main effects or interactions [all F values < 2.75], indicating that differences in looking time during the test trial were not driven by differential attention in the earlier trials. Second, infants looked almost continuously during the initial sections of the test trials (see Materials and Methods), during which all of the informative actions were presented (Intended: M = 19.2 s of a maximum of 20 s, SE = 0.31; Observable: M = 24.5 s of a maximum of 26 s, SE = 0.53; Related: M = 18.1 s of a maximum of 20 s, SE = 0.63).

Ruling Out an Alternative Hypothesis: That Speech Communicates Generic Knowledge.

Our results suggest that 12-month-old infants understand that one person can use speech to inform a second person about her unobservable intention. However, we must rule out an alternative explanation: that the use of speech in the test scenes induced infants to adopt a pedagogical learning stance (22), in which they interpreted the Communicator’s speech as conveying generic knowledge to the Recipient about what to do with funnels (e.g., stack rings on them), rather than as conveying her own intention to stack the ring.

If infants interpret speech as conveying generic information (“It’s a Koba! Use it as one ought to use a Koba”; ref. 22), then speech should allow the Recipient to fulfill the intended action regardless of the speaker. We tested this possibility with two conditions. During familiarization, a male Actor performed the action of trying but failing to stack the ring and, thus, gave evidence of having the intention to stack the ring on the funnel. In test trials (identical to the original conditions), a female Communicator said “koba” to the Recipient who then performed the Intended or Observable outcomes: (i) completing the male Actor’s intended action (stacking the ring on the funnel; Actor-Intended), or (ii) performing the male Actor’s observable movements (attempting to stack the ring but failing; Actor-Observable).

If infants interpret the Communicator’s speech as conveying generic information—such that hearing “koba” from anyone would inform the Recipient about what to do with the ring or the funnel—then infants should look equally at the original Communicator-Intended and the new Actor-Intended outcomes. If, instead, infants interpret the Communicator’s speech as transferring information about her own person-specific intention, then they should look longer at the Actor-Intended than the original Communicator-Intended outcome and treat the Actor-Intended outcome as similar to the Actor-Observable outcome.

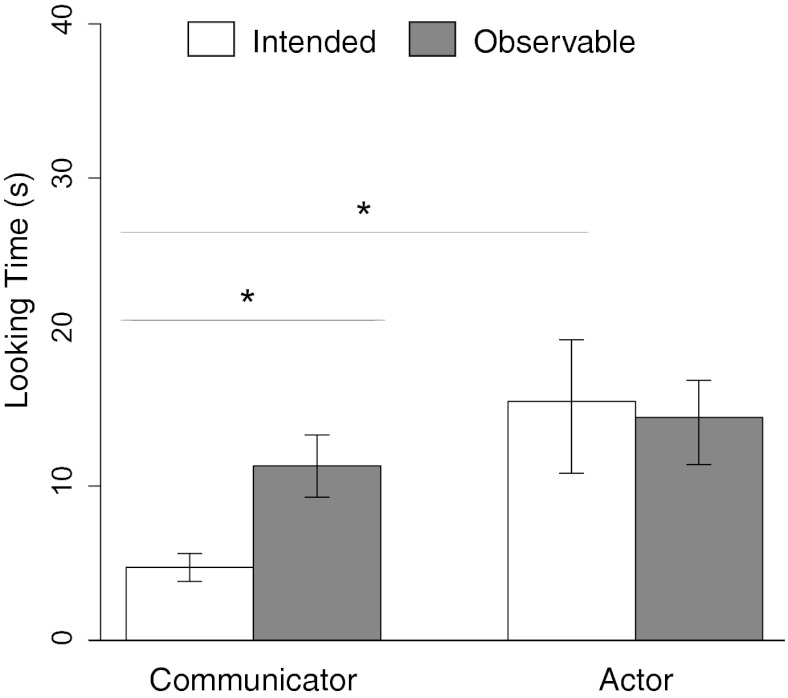

Infants looked reliably longer at the Actor-Intended than at the Communicator-Intended outcome [t(14) = 2.44, P = 0.029, r = 0.55], and looked equally at the Actor-Intended and Actor-Observable outcomes [t(14) = 0.20, P = 0.842] (Fig. 3). This pattern is consistent with infants understanding that speech from a Communicator who did not herself display the intention does not provide the Recipient with generic information about which action to perform. Finally, infants looked equally at the Actor-Observable and Communicator-Observable outcomes [t(14) = 0.93, P = 0.370], suggesting that the mere presence of a third person (the Actor) did not increase looking times overall. Together, these results are consistent with infants’ understanding that speech transfers information about one person’s unobservable intentions to another person.

Fig. 3.

Results of conditions ruling out the generic knowledge hypothesis. Shown are the mean looking times and SEM for the Intended (white bars) and Observable (gray bars) outcomes for the original conditions in which the Communicator both acted on the objects and spoke (Left) and the conditions in which the Actor acted on the objects and the Communicator subsequently spoke (Right). *P < 0.05.

Discussion

The current results demonstrate that 12-month-old infants understand that speech can communicate about unobservable intentions. Prior work had shown that infants understand that speech, but not nonspeech vocalizations, can be used to individuate and categorize objects (e.g., refs. 7 and 9) and to communicate about objects (10), but our results go beyond those findings by showing that infants realize that speech, but not a nonspeech vocalization, can transfer information to another person about an intended but unachieved goal. The current results highlight the privileged role that speech plays in communication and reveal a sophisticated understanding of the kind of information that can be transferred by using speech. Not only do infants understand that others’ behavior is driven by their internal states even when outcomes are unsuccessful (e.g., refs. 11, 13, and 14), but they also understand that people can communicate about internal states by using speech.

Infants did not infer that speech should allow the Recipient to fulfill an intended action regardless of the speaker. Instead, infants seem to have inferred that the specific content of speech recovered by the Recipient was the Communicator’s person-specific and episodically relevant intention. Thus, infants do not necessarily interpret speech directed to third parties as communicating generic kind-specifying referential information, unlike their interpretation of communicative acts directed toward the infants themselves (22).

The understanding that speech, even when it is unfamiliar, can communicate about intentions is in place by 12 months, before infants understand the meanings of many words, suggesting that this understanding is unlikely to arise from learning individual word-referent links (e.g., ref. 23). Infants’ abstract understanding of the communicative function of speech may serve as a powerful tool for knowledge acquisition by indexing opportunities for learning about the world around them, even about information that cannot be directly observed (see refs. 24–26 for related discussion). Furthermore, this recognition of speech as a signal for communication provides a mechanism for acquiring knowledge that may complement learning through teaching directed toward the infant (reviewed in ref. 22). Unlike natural pedagogical mechanisms, recognizing that speech is used for communication additionally allows infants to acquire information even when communicative signals are not directed toward them (as in third-party interactions in which the infant is an observer rather than a participant).

Finally, the current results add to our understanding of early communicative development. Communicative interactions are complex. To make sense of such interactions, an observer must understand, minimally, that the Communicator is sending an appropriate signal to the Recipient in a format they share (even if the observer does not; ref. 27). The results further demonstrate that infants are able to evaluate the effectiveness of communicative interactions between third parties that involve the transfer of information that has not been or cannot be observed (see also refs. 28 and 29). Humans are thus poised to detect communicative interactions by the end of their first year, recognizing that speech is a tool for acquiring knowledge beyond their immediate experience.

Materials and Methods

Participants.

Forty-eight infants participated in six conditions: Speech-Intended (4 females and 4 males; Mage = 12 mo, 10 d; range 11,26–12,20), Speech-Observable (4 females and 4 males; Mage = 12 mo, 12 d; range 12,06–12,20), Speech-Related (4 females and 4 males; Mage = 12 mo, 7 d; range 11,25–12,22), Cough-Intended (4 females and 4 males; Mage = 12 mo, 9 d; range 11,28–12,18), Cough-Observable (4 females and 4 males; Mage = 12 mo, 8 d; range 12,01–12,17), and Cough-Related (4 females and 4 males; Mage = 12 mo, 15 d; range 12,02–12,22). Data from 15 additional infants were excluded for fussiness (n = 8), never looking away from the display (n = 1), parental interference (n = 2), and experimenter error (n = 4).

An additional 16 infants participated in two conditions to rule out an alternative hypothesis based on generic knowledge: Actor-Intended (4 females and 4 males; Mage = 12 mo, 6 d; range 11,25–12,21), and Actor-Observable (4 females and 4 males; Mage = 12 mo, 11 d; range 11,27–12,23). Data from six additional infants were excluded for fussiness (n = 1), never looking away from the display (n = 3), and experimenter error (n = 2). Parents gave informed consent on behalf of their infants. Parents were compensated up to $20 for transportation costs, and infants received a small gift. All procedures were approved by New York University's Institutional Review Board.

Apparatus and Stimuli.

Events were presented in a display box (90 cm wide, 71 cm tall, 55 cm deep) that was covered with a beige curtain between trials. During the Familiarization trials, the back wall contained an inverted L-shaped window (51 cm tall, 45 cm wide), which allowed the Communicator’s right arm and the top of her face to be visible but hid her mouth and torso. During the test, the window was smaller (30 cm by 15 cm) so that only the top of the Communicator’s face was visible and she was no longer able to reach the objects. The Recipient was present on the right, through a side window covered with a yellow curtain.

Objects were a red-painted funnel (10 cm tall, 10 cm wide at the base) and two yellow rings (10 cm in diameter, 3 cm thick). The funnel (34 cm from the back, 26 cm from the right) had one of the rings stacked on it. The second ring was on the floor (34 cm from the back, 65 cm from the right). The placement of the funnel and rings ensured that during the Familiarization trials, the Communicator could easily reach the ring on the floor but not the funnel, whereas during subsequent trials, the Recipient could easily reach and manipulate both rings and the funnel.

Procedure.

Each infant was seated on a parent’s lap and saw five trials (three Familiarization, one Pretest, and one Test), presented live by two trained experimenters whose movements were timed to a metronome clicking once per second. Parents closed their eyes after the first trial. A hidden observer, who could not see the events, recorded whether infants were attending to the scene. Each trial began with the curtain rising to reveal the scene and ended when, during its main section (see below), infants had looked for at least 2 s and then either looked away for 2 consecutive seconds or reached the maximum trial length.
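The online stopping rule can be written as a small function over a frame-by-frame record of whether the infant is looking. This is a hypothetical sketch, not the lab’s software: it assumes that the 2-s minimum refers to cumulative looking within the main section, that looking is sampled at 30 frames per second (the frame rate of the offline video), and that a 40-s maximum (the value given for test trials) applies to the main section; `score_main_section` and its parameters are illustrative names.

```python
from typing import Sequence, Tuple

def score_main_section(looking: Sequence[bool], fps: int = 30,
                       min_look_s: float = 2.0, lookaway_s: float = 2.0,
                       max_s: float = 40.0) -> Tuple[int, float]:
    """Apply a look-away stopping rule to one trial's main section (a sketch).

    `looking` holds one entry per video frame (True = infant looking).
    Returns (number of frames until the trial ended, looking time in s).
    """
    min_look = round(min_look_s * fps)   # looking frames required before a look-away counts
    lookaway = round(lookaway_s * fps)   # consecutive look-away frames that end the trial
    max_frames = min(len(looking), round(max_s * fps))
    looked = 0      # cumulative looking frames so far
    away_run = 0    # current run of consecutive look-away frames
    for i in range(max_frames):
        if looking[i]:
            looked += 1
            away_run = 0
        else:
            away_run += 1
            # A look-away ends the trial only once the minimum look has been met.
            if looked >= min_look and away_run >= lookaway:
                return i + 1, looked / fps
    # Maximum length (or end of the record) reached without a qualifying look-away.
    return max_frames, looked / fps

# Example: 3 s of looking, then a 2-s look-away, then more looking (never scored).
print(score_main_section([True] * 90 + [False] * 60 + [True] * 30))
```

In this example the trial ends at frame 150 with 3.0 s of looking; an infant who never looks away is scored at the 40.0-s maximum. A 2-s look-away that occurs before 2 s of looking has accumulated does not end the trial.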

Test trial endings were verified offline by a coder who was blind to the vocalization condition and to the outcome. This coder scored infants’ looking times during test trials from video recordings containing visual chapter markers that indicated the start of the trial, the start of the main section, and the end of the trial, so that the videos could be coded without audio. The video was recorded by a camera just below the display box at 30 frames per second and was coded frame by frame. For trials in which the online and offline coders agreed on the 2-s look-away that ended the trial, we included the online looking time in the analyses. For trials in which they disagreed, a second independent blind coder coded the trial. For trials in which the two offline coders agreed that the infant had looked away for 2 s before the online coder indicated a 2-s look-away, we included the offline looking time in the analyses. Thus, offline coding was used for 2 of 48 test trials in the main experiment (one each in the Speech-Observable and Cough-Observable conditions) and for 2 of 16 test trials in the conditions ruling out the generic knowledge hypothesis (one each in the Actor-Intended and Actor-Observable conditions). There were no trials in which the two offline coders agreed that the online coder had indicated a 2-s look-away prematurely.
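The rule for deciding whether the online or the offline looking time enters the analysis can likewise be sketched as a decision function. Each argument is the time, in seconds, at which a coder placed the 2-s look-away that ended the trial. The agreement tolerance (`tol`, here one 30-fps video frame), the function name, and the handling of cases the procedure does not describe (returned as `"unresolved"`) are assumptions for illustration.

```python
from typing import Optional

FRAME_S = 1 / 30  # hypothetical agreement window: one frame of 30-fps video

def choose_looking_time(online_end: float, offline1_end: float,
                        offline2_end: Optional[float] = None,
                        tol: float = FRAME_S) -> str:
    """Decide which looking time enters the analysis for one test trial (a sketch).

    Returns "online", "offline", or "unresolved".
    """
    if abs(online_end - offline1_end) <= tol:
        # Online and first offline coder agree: keep the online looking time.
        return "online"
    if offline2_end is None:
        # A disagreement calls for a second independent blind offline coder.
        raise ValueError("coders disagree; a second blind offline coder is needed")
    offline_agree = abs(offline1_end - offline2_end) <= tol
    if offline_agree and offline1_end < online_end:
        # Both offline coders found an earlier 2-s look-away: use the offline time.
        return "offline"
    # Not covered by the described procedure (e.g., the offline coders disagree,
    # or both judge the online ending premature, which reportedly never occurred).
    return "unresolved"
```

For example, `choose_looking_time(12.0, 9.5, 9.5)` selects the offline looking time, because both offline coders placed the ending look-away earlier than the online coder did.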

Half of the infants in the main experiment heard speech and the other half heard coughing. Within each vocalization condition, one-third saw each outcome: Intended, Observable, or Related.

Trials.

Each trial was divided into an initial section, during which the informative actions were presented, followed by a main section, during which the scene was static or noninformative actions were presented. The main analyses were conducted on infant looking times during the main section.

Familiarization.

The Communicator was visible alone in the larger back window. She first looked at the ring on the funnel and then at the ring on the floor; she then grasped the latter, attempted three times to stack it on the funnel, and retracted her arm (12 s). She then looked at the funnel and at the ring in her hand and attempted the stack again (9 s), ending the trial’s initial section. The trial’s main section consisted of two additional repetitions of the 9-s sequence.

Pretest.

The Communicator was no longer present. The Recipient was visible in the side window. She looked at the funnel then the ring on the floor (4 s). Next she looked at the ring on the funnel, grasped and lifted the ring, tilted it toward and away from the infant, and then replaced it on the funnel (6 s). She then looked at the ring on the floor, grasped and lifted it, tilted it, and then replaced it on the floor (6 s), ending the trial’s initial section. In the main section, the Recipient interacted again with each ring (12 s) and retracted her hand (3 s).

Test.

Both the Communicator and Recipient were present in their respective locations, but the Communicator’s smaller window prevented her from reaching the objects. After the infant looked for 2 s, the Communicator looked at each ring (4 s), made eye contact with the Recipient and twice vocalized, producing either a nonsense word, “koba” (Speech condition; 4 s) or a coughing vocalization with matched intonation, “xhm-xhm-xhm” (Cough condition; 4 s). The Recipient performed different actions depending on the Outcome:

Intended outcome.

The Recipient looked at and grasped the floor ring (2 s), lifted and moved it toward the funnel (2 s), placed it on the funnel (2 s), and retracted her hand (1 s).

Observable outcome.

The Recipient looked at and grasped the floor ring (2 s), lifted and moved it toward the funnel (2 s), and three times attempted to stack it on the funnel (3 s). She returned the ring to the floor (5 s) and retracted her hand (1 s).

Related outcome.

The Recipient looked at and grasped the funnel ring (2 s), lifted and moved it toward the floor ring (2 s), placed it on top of the floor ring (2 s), and retracted her hand (1 s).

Each test trial’s main section began after the Recipient retracted her hand and consisted of both actors fixating on the displaced ring until the trial ended (40 s maximum trial length, or until the infant looked away from the scene for 2 consecutive seconds).

Apparatus, Stimuli, and Procedure for Conditions to Rule Out the Generic Knowledge Hypothesis.

For the Actor-Intended and Actor-Observable conditions, the apparatus, stimuli, and procedure were identical to those of the original Speech-Intended and Speech-Observable conditions, except that in the Familiarization trials it was not the Communicator who attempted and failed to stack the ring on the funnel. Instead, a male Actor, perceptually distinct from the female Communicator, performed the familiarization actions. Thus, for these two conditions, there were three trained experimenters: the Actor, the Communicator, and the Recipient.

An additional scene after the Familiarization trials and before the Pretest trial made the Communicator visible to the infants before the test scene. In this scene, the male Actor was no longer present. The female Communicator was visible in the smaller back window. She looked at the funnel (2 s), then looked at the ring on the floor (2 s), and then looked neutrally in the center (2 s). In the main section, the Communicator again looked at each of the objects in turn. Test trials were performed as in the main experiment.

Acknowledgments

We thank Alia Martin and Christina Starmans for discussion, Marjorie Rhodes and Gary Marcus for comments on the manuscript, all the members of the NYU Infant Cognition and Communication Lab, and the parents and infants who participated. This research was supported by a New York University National Science Foundation ADVANCE Research Challenge Grant (to A.V.) and the Social Sciences and Humanities Research Council of Canada (to K.H.O.).

Footnotes

The authors declare no conflict of interest.

*This Direct Submission article had a prearranged editor.

References

- 1. Clark HH. Using Language. Cambridge, UK: Cambridge Univ Press; 1996.

- 2. Gelman SA. Learning from others: Children’s construction of concepts. Annu Rev Psychol. 2009;60:115–140. doi: 10.1146/annurev.psych.59.103006.093659.

- 3. Searle JR. Speech Acts: An Essay in the Philosophy of Language. Cambridge, UK: Cambridge Univ Press; 1969.

- 4. Sperber D, Wilson D. Relevance: Communication and Cognition. Oxford: Blackwell; 1986.

- 5. Ganea PA, Harris PL. Not doing what you are told: Early perseverative errors in updating mental representations via language. Child Dev. 2010;81:457–463. doi: 10.1111/j.1467-8624.2009.01406.x.

- 6. Ganea PA, Shutts K, Spelke ES, DeLoache JS. Thinking of things unseen: Infants’ use of language to update mental representations. Psychol Sci. 2007;18:734–739. doi: 10.1111/j.1467-9280.2007.01968.x.

- 7. Balaban MT, Waxman SR. Do words facilitate object categorization in 9-month-old infants? J Exp Child Psychol. 1997;64:3–26. doi: 10.1006/jecp.1996.2332.

- 8. Fulkerson AL, Waxman SR. Words (but not tones) facilitate object categorization: Evidence from 6- and 12-month-olds. Cognition. 2007;105:218–228. doi: 10.1016/j.cognition.2006.09.005.

- 9. Xu F. The role of language in acquiring object kind concepts in infancy. Cognition. 2002;85:223–250. doi: 10.1016/s0010-0277(02)00109-9.

- 10. Martin A, Onishi KH, Vouloumanos A. Understanding the abstract role of speech in communication at 12 months. Cognition. 2012;123:50–60. doi: 10.1016/j.cognition.2011.12.003.

- 11. Brandone AC, Wellman HM. You can’t always get what you want: Infants understand failed goal-directed actions. Psychol Sci. 2009;20:85–91. doi: 10.1111/j.1467-9280.2008.02246.x.

- 12. Woodward AL. Infants selectively encode the goal object of an actor’s reach. Cognition. 1998;69:1–34. doi: 10.1016/s0010-0277(98)00058-4.

- 13. Hamlin JK, Newman G, Wynn K. 8-month-old infants infer unfulfilled goals, despite ambiguous physical evidence. Infancy. 2009;14:579–590. doi: 10.1080/15250000903144215.

- 14. Nielsen M. 12-month-olds produce others’ intended but unfulfilled acts. Infancy. 2009;14:377–389. doi: 10.1080/15250000902840003.

- 15. Meltzoff AN. Understanding the intentions of others: Re-enactment of intended acts by 18-month-old children. Dev Psychol. 1995;31:838–850. doi: 10.1037/0012-1649.31.5.838.

- 16. Luo Y, Baillargeon R. Do 12.5-month-old infants consider what objects others can see when interpreting their actions? Cognition. 2007;105:489–512. doi: 10.1016/j.cognition.2006.10.007.

- 17. Luo Y. Do 10-month-old infants understand others’ false beliefs? Cognition. 2011;121:289–298. doi: 10.1016/j.cognition.2011.07.011.

- 18. Buresh JS, Woodward AL. Infants track action goals within and across agents. Cognition. 2007;104:287–314. doi: 10.1016/j.cognition.2006.07.001.

- 19. Graham SA, Stock H, Henderson A. Nineteen-month-olds’ understanding of the conventionality of object labels versus desires. Infancy. 2006;9:341–350. doi: 10.1207/s15327078in0903_5.

- 20. Warneken F, Tomasello M. Helping and cooperation at 14 months of age. Infancy. 2007;11:271–294. doi: 10.1111/j.1532-7078.2007.tb00227.x.

- 21. Song HJ, Onishi KH, Baillargeon R, Fisher C. Can an agent’s false belief be corrected by an appropriate communication? Psychological reasoning in 18-month-old infants. Cognition. 2008;109:295–315. doi: 10.1016/j.cognition.2008.08.008.

- 22. Csibra G, Gergely G. Natural pedagogy. Trends Cogn Sci. 2009;13:148–153. doi: 10.1016/j.tics.2009.01.005.

- 23. Nazzi T, Bertoncini J. Before and after the vocabulary spurt: Two modes of word acquisition? Dev Sci. 2003;6:136–142.

- 24. Bloom P. How Children Learn the Meanings of Words. Cambridge, MA: MIT Press; 2000.

- 25. Carey S. The Origin of Concepts. New York: Oxford Univ Press; 2009.

- 26. Waxman SR, Gelman SA. Early word-learning entails reference, not merely associations. Trends Cogn Sci. 2009;13:258–263. doi: 10.1016/j.tics.2009.03.006.

- 27. Vouloumanos A, Onishi KH. In: Social Cognition and Social Neuroscience: Agency and Joint Attention. Terrace HS, Metcalfe J, editors. Oxford: Oxford Univ Press; in press.

- 28. Akhtar N, Jipson J, Callanan MA. Learning words through overhearing. Child Dev. 2001;72:416–430. doi: 10.1111/1467-8624.00287.

- 29. Floor P, Akhtar N. Can 18-month-old infants learn words by listening in on conversations? Infancy. 2006;9:327–339. doi: 10.1207/s15327078in0903_4.