Abstract

Biomarkers are critical to targeted therapies as they may identify patients more likely to benefit from a treatment. Several prospective designs for biomarker directed therapy have been previously proposed, differing primarily in the study population, randomization scheme, or both. Recognizing the need for randomization yet acknowledging the possibility of promising but inconclusive results after a Stage I cohort of randomized patients, we propose a two-stage Phase II design on marker-positive patients that allows for direct assignment in a Stage II cohort. In Stage I, marker-positive patients are equally randomized to receive experimental treatment or control. Stage II has the option to adopt “direct assignment” whereby all patients receive experimental treatment. Through simulation, we studied the power and type I error rate (T1ER) of our design compared to a balanced randomized two-stage design, and performed sensitivity analyses to study the effect of timing of Stage I analysis, population shift effects and unbalanced randomization. Our proposed design has minimal loss in power (<1.8%) and increase in T1ER (<2.1%) compared to a balanced randomized design. The maximum increase in T1ER in the presence of a population shift was between 3.1–5%; the loss in power across possible timings of Stage I analysis was <1.2%. Our proposed design has desirable statistical properties with potential appeal in practice. The direct assignment option, if adopted, provides for an “extended confirmation phase” as an alternative to stopping the trial early for evidence of efficacy in Stage I.

Keywords: phase II, biomarker, direct assignment

INTRODUCTION

Targeted therapies play an increasingly important role in the medical treatment of oncology patients. Biomarkers are a critical component of targeted therapies as they can be used to identify patients who are more likely to benefit from a particular treatment. A biomarker is a characteristic that is objectively measured and evaluated as an indicator of normal biological processes, pathogenic processes, or pharmacologic responses to a therapeutic intervention [1]. A biomarker can be useful for treatment either through the estimation of disease-related trajectories (i.e. prognostic signatures) and/or prediction of patient-specific benefit to a particular treatment (i.e. predictive tool). Clinical trials to validate biomarkers represent a crucial step in translating basic science research into improved clinical practice.

Several Phase III prospective clinical trial designs for biomarker-directed therapy have been previously proposed; we briefly describe three such design classes. All-comers designs enroll and randomize all patients, thus allowing for the testing of an interaction between treatment and marker, thereby facilitating validation of the marker’s predictive ability. All-comers designs can be broadly classified into two types that differ by randomization schema and analytical strategies: (1) the marker-stratified design [2] randomizes all patients, stratified by marker status, followed either by a test for interaction or separate tests for efficacy in each marker group; and (2) the sequential testing strategy design [3] randomizes all patients to treatment but allows for testing a treatment effect in both the overall population and the marker-positive group. The second set of designs, adaptive designs, are a class of designs that adapt design parameters during the course of a trial based on accumulating data. These adaptations can take the form of dropping a particular marker-defined subgroup based on initial futility [4] or changing randomization probabilities within marker-subgroups [5]. The enrichment design [6] aims to understand the safety and clinical benefit of a treatment in a subgroup of patients defined by a specific marker status. All patients are screened for the presence of a marker, and only those patients with certain molecular features are included in the trial and randomized to treatment or control. These designs are particularly pertinent when there is compelling preliminary evidence that treatment benefit, if any, will be restricted to a subgroup of patients who express a specific molecular feature.

Randomization remains the gold standard for testing the predictive ability of a marker and the only way to ensure an unbiased estimate of the treatment effect. As such, all of the designs mentioned above appropriately randomize patients to receive treatment or not throughout the entire duration of the trial. However, are there certain situations in which it may not be necessary to randomize until the end of a trial? For example, consider the setting of a binary biomarker and a targeted therapy believed to have a beneficial effect in the marker-positive group and an unknown effect in the marker-negative group. One such example is Sorafenib in combination with Ganetespib in non-small cell lung cancer (NSCLC) patients whose tumors harbor K-ras mutations. Preliminary evidence suggests that patients with K-ras mutations treated with single-agent Sorafenib achieve objective response, contrary to what is observed in the general NSCLC patient population [7, 8]. Preclinical data also suggest activity of Ganetespib in K-ras mutant NSCLC cell lines [9]. Suppose there is evidence for cytotoxic synergy between Sorafenib and Ganetespib. It may therefore be of interest to study the benefit of combination therapy versus single-agent therapy in K-ras mutant NSCLC, with the hypothesis that there will be enhanced activity associated with the combination therapy. To investigate this hypothesis, we could adopt one of the above designs, depending on strength of preliminary evidence, marker prevalence, and assay properties. However, suppose that in the course of the trial, promising though not definitive evidence of efficacy associated with the combination therapy becomes apparent. In that case, a design that allowed for a switch to direct assignment (i.e. all patients receive the combination therapy in our example) after an interim analysis could be considered. Such a design would likely have clinical and patient appeal, while still providing information on treatment benefit.
Colton (1963,1965; [10,11]) first proposed several designs that involved directly assigning patients to one of two treatment arms. He considered a cost function approach to clinical trial design for comparing two treatments, whereby the choice of design parameters was driven by minimization of the cost associated with treating patients. In his class of designs, the second stage always was a direct assignment.

In this paper, we propose a Phase II design that includes an option for direct assignment to the experimental treatment when there is promising but not definitive evidence of a treatment benefit at the end of an initial, randomized stage of the trial. Specifically we propose a two-stage enrichment design (i.e. screen all patients for marker status, but only enroll and randomize a particular marker subgroup, e.g. marker-positive or marker-negative for a binary marker) that may stop early for futility or efficacy. While we focus on an enrichment design to illustrate our proposal, the design can easily be extended to include the other marker group(s). Further, although we motivate this proposed design in the context of a targeted therapy, we note that this design could in fact be used for a cytotoxic agent since no decisions are made with respect to the targeted versus an overall hypothesis. If the trial does not stop early for futility after Stage I, then in Stage II the trial can continue in one of two ways: 1) continue with randomization as in Stage I; or 2) switch to “direct assignment,” where all patients are given the experimental treatment. The decision for direct assignment is based on observing promising, but not definitive, results indicating treatment benefit in Stage I. Through simulation, we study the empirical power and type I error rate of our design, compared with a balanced randomized design, for different treatment effect sizes.

METHODS

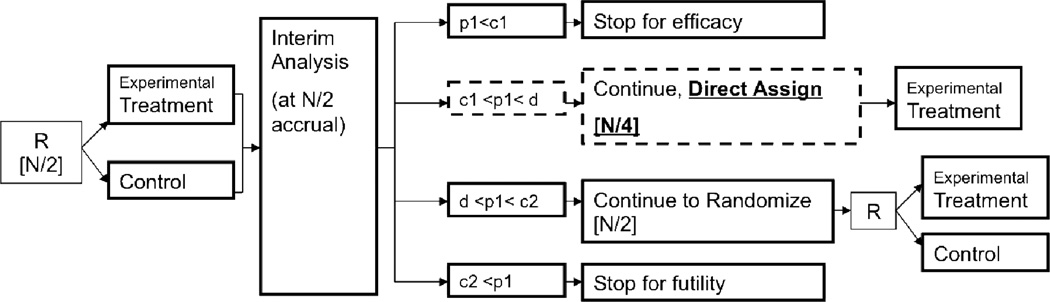

We consider a binary outcome, for example objective response, progression-free status (PFS) at a pre-defined time-point, or percent change in an expression level dichotomized as high or low. Figure 1 gives a schematic of our proposed design, which we describe in more detail below.

Figure 1.

Design schematic (among M+ patients only). Square brackets [] indicate number of patients enrolled at the given stage. R = randomize; N = total number of patients allocated at start of trial; p1 = p-value based on Stage I patient data; c1, c2, and d are O’Brien-Fleming stopping boundaries (d is the boundary for concluding overall trial efficacy, and is also used to decide between adopting the direct assignment option versus continuing with randomization in Stage II).

Design Framework

Patients who meet the trial eligibility criteria with a valid test result for the marker (M), and who belong to the specific marker group (say, marker-positive M+) are randomized (1:1) to receive either an experimental treatment or control. We assume that a planned interim analysis occurs after one-half of the patients are accrued. The interim analysis decisions are based on the p-values from a test comparing the experimental treatment to control using Stage I data. A decision is made to stop early for futility, continue with 1:1 randomization, continue with direct assignment, or stop early for efficacy. If accrual continues to Stage II, then at the end of Stage II, a decision is made regarding treatment efficacy. This decision is based on the p-value from a test comparing the experimental treatment versus control using accumulated data from both stages. Of note, if the trial continues into Stage II with direct assignment, then the final comparison is between all the patients treated in the experimental treatment (from Stages I and II) and the control (from Stage I) patients.

Design Parameters and Assumptions

We specify the overall type I error rate (α) and power (1-β); and the expected response rates in control (pcontrol) and in treated (ptreat) patients, with associated treatment effect (response rate ratio, RRR= ptreat / pcontrol). As we are assuming the preliminary evidence suggests strong benefit of the new treatment in the M+ group, we specify α to be one-sided, as is common for phase II trials.

The maximum sample size (N) is calculated based on α, β, and the expected effect size, using a two sample test of proportions for a two-stage design with a 1:1 randomization and O’Brien-Fleming (OF) stopping rules, allowing for early stopping for efficacy (c1) or futility (c2) after Stage I. Additionally, the decision to adopt direct assignment (vs. continue with balanced randomization) in Stage II uses the same boundary as for concluding efficacy at the end of the trial. That is, if d is the OF boundary for concluding efficacy at the end of the trial, then we set the boundary for deciding between adopting direct assignment (versus continuing with balanced randomization) for Stage II to be d. If the trial continues to Stage II with direct assignment, then we direct assign (i.e. enroll) half of the planned Stage II sample size. Thus the effective trial accrual depends on the interim analysis decisions: if the trial stops early for either efficacy or futility, then the total accrual is N/2; if the trial continues with randomization, then total accrual is N; and if the trial continues with the direct assignment option, then total accrual is 3N/4.
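The interim decision rule and the resulting accrual can be sketched in a few lines of Python. This is an illustrative sketch only: the function names and decision labels are hypothetical, and the handling of a p-value that falls exactly on a boundary (strict versus non-strict inequality) is an assumption, since the text does not specify it.

```python
def interim_decision(p1: float, c1: float, d: float, c2: float) -> str:
    """Map the Stage I p-value to one of the four interim decisions.

    Assumes c1 < d < c2, as with the O'Brien-Fleming boundaries described above.
    """
    if p1 < c1:
        return "stop_efficacy"      # definitive evidence after Stage I
    if p1 < d:
        return "direct_assignment"  # promising but not definitive
    if p1 < c2:
        return "randomize"          # continue 1:1 randomization
    return "stop_futility"


def total_accrual(decision: str, n_max: float) -> float:
    """Total accrual implied by the interim decision (interim at N/2)."""
    fraction = {
        "stop_efficacy": 0.5,
        "stop_futility": 0.5,
        "direct_assignment": 0.75,  # N/2 plus half of the planned Stage II size
        "randomize": 1.0,
    }[decision]
    return fraction * n_max
```

For example, with boundaries c1 = 0.02, d = 0.094 and c2 = 0.4566, a Stage I p-value of 0.05 maps to the direct assignment option, and a planned maximum of 100 patients would then give a total accrual of 75.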

Simulation Study: Parameter Values

We conducted a simulation study to evaluate the performance of the design in terms of power and type I error rate. We generated 6,000 trials and specified 1−β=0.80; and α=0.10 and 0.20. We considered a control response rate of pcontrol = 0.20. We considered experimental treatment response rates of ptreat = 0.40, 0.45, 0.50, and 0.60, with associated response rate ratios (RRR) of 2.00, 2.25, 2.50, and 3.00 respectively. These values were chosen to be consistent with what is commonly targeted in Phase II oncology trials. For each of the two α values, we used a fixed sample size that was calculated based on a RRR of 2.00 and 1−β=0.80, assuming a two-stage balanced randomized design based on a two-sample test of proportions using OF stopping rules. In particular, for α=0.10, 1−β=0.80, and RRR=2.00, the maximum total sample size is N=101; and for α=0.20, 1−β=0.80, and RRR=2.00, the maximum total sample size is N=65 (Table 1). Then, using the same sample sizes and for each of α=0.10 and 0.20, we calculated power and type I error rate for the different RRR as follows. Under the one-sided alternative hypothesis HA: ptreat > pcontrol, we calculated empirical power as the proportion of the 6,000 simulated trials in which the null hypothesis was (correctly) rejected using significance levels α=0.10 and 0.20. Under the null hypothesis H0: pcontrol = ptreat, we calculated the empirical type I error rate as the proportion of the 6,000 simulated trials in which the null hypothesis was (incorrectly) rejected using significance levels α=0.10 and 0.20. All tests of equal proportions were based on the normal-approximation z-test without correction for continuity. We compared our design against a balanced randomized design.
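A simulation of this kind could be set up roughly as follows. This is a sketch under stated assumptions, not the code used for the reported results: it assumes 25 patients per arm per stage (N=100 rather than 101), a pooled-variance one-sided z-test, the α=0.10 boundaries from Table 1, and a p-value of 1 when no variability is observed. All function names and the seed are hypothetical.

```python
import random
from math import sqrt
from statistics import NormalDist

C1, D, C2 = 0.0200, 0.0940, 0.4566  # boundaries for alpha = 0.10 (Table 1)


def p_value(x_t, n_t, x_c, n_c):
    """One-sided p-value of the pooled two-sample z-test (no continuity correction)."""
    pooled = (x_t + x_c) / (n_t + n_c)
    se = sqrt(pooled * (1 - pooled) * (1 / n_t + 1 / n_c))
    if se == 0:
        return 1.0  # assumption: no observed variability counts as no evidence
    z = (x_t / n_t - x_c / n_c) / se
    return 1 - NormalDist().cdf(z)


def responses(rng, n, p):
    """Number of responders among n patients with response probability p."""
    return sum(rng.random() < p for _ in range(n))


def simulate_trial(rng, p_c, p_t, n_arm=25, direct_option=True):
    """Simulate one two-stage trial; return True if efficacy is concluded."""
    x_t, n_t = responses(rng, n_arm, p_t), n_arm
    x_c, n_c = responses(rng, n_arm, p_c), n_arm
    p1 = p_value(x_t, n_t, x_c, n_c)
    if p1 < C1:
        return True   # stop early for efficacy
    if p1 >= C2:
        return False  # stop early for futility
    # Stage II: the experimental arm always gains n_arm patients.
    x_t += responses(rng, n_arm, p_t)
    n_t += n_arm
    if not (direct_option and p1 < D):
        # Continue 1:1 randomization: the control arm also gains n_arm patients.
        x_c += responses(rng, n_arm, p_c)
        n_c += n_arm
    return p_value(x_t, n_t, x_c, n_c) < D


def rejection_rate(p_c, p_t, n_trials=6000, seed=1, **kwargs):
    """Proportion of simulated trials that conclude efficacy."""
    rng = random.Random(seed)
    hits = sum(simulate_trial(rng, p_c, p_t, **kwargs) for _ in range(n_trials))
    return hits / n_trials


if __name__ == "__main__":
    print("power (RRR=2.0):", rejection_rate(0.20, 0.40))
    print("type I error   :", rejection_rate(0.20, 0.20))
    print("balanced power :", rejection_rate(0.20, 0.40, direct_option=False))
```

Setting `direct_option=False` gives the balanced randomized comparator, so the two designs can be compared on the same simulated scale. Exact values depend on the seed and on the simplifications listed above.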

Table 1.

O’Brien-Fleming Stopping Boundaries (for p-values) and Maximum Sample Sizes for 1-β = 0.80, RRR=2.0, interim analysis after f=0.50 accrual, and each of pre-specified Type I error rates, α = 0.10 and 0.20.

| α | Early efficacy (c1) | Early futility (c2) | Overall efficacy (d) | Max N |
|---|---|---|---|---|
| 0.10 | 0.0200 | 0.4566 | 0.0940 | 101 |
| 0.20 | 0.0699 | 0.5765 | 0.1803 | 65 |

Sensitivity Analyses

In addition to the parameter values specified above, we conducted sensitivity analyses to explore the effects on type I and II error of: (1) population shift, (2) timing of Stage I analysis, and (3) unbalanced randomization. We discuss these in detail below.

Population shift is a potential concern of our design as it is with other designs such as outcome-adaptive randomization or single-arm Phase II trials that use historical controls. Specifically with the direct assignment option, patients who enroll during Stage II know they will receive the experimental treatment and so may differ fundamentally with respect to outcomes from patients in Stage I, with whom comparisons are being made in the final analysis. To investigate this concern and the potential effect on the type I error rate, we conducted a sensitivity analysis in which we hypothesized the Stage II response rate for the experimental treatment group is shifted by an amount (δ) from the Stage I response rate if direct assignment is used for Stage II. We considered shift values of δ = +/− 0.025, 0.05, 0.10, 0.20 and +0.30 for α=0.10. The shift values of δ = +0.30, +/−0.20, +/−0.10 were included to examine what may happen in the unlikely case of an extreme population shift. We compared the empirical type I error rate and power under these possible population shifts, to the corresponding rates under no population shift (δ=0). In particular, we obtained the type I error rate by assuming the null hypothesis in Stage I (i.e. pcontrol = ptreat = 0.20), and then in Stage II if direct assignment option was adopted, we specify ptreat = 0.20 + δ. To obtain the power, we assumed the alternative hypothesis in Stage I (i.e. RRR=2.0, pcontrol = 0.20, ptreat = 0.40), and in Stage II if direct assignment option was adopted, we specify ptreat = 0.40 + δ.
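To see how a shift of size δ enters the final comparison, the expected response rate of the pooled experimental arm can be worked out directly. The sketch below is illustrative only: the function name is hypothetical, and the Stage I and direct assignment sizes of 25 patients each are assumed round numbers, not values taken from the study.

```python
def pooled_treatment_rate(p_stage1, delta, n_stage1, n_direct):
    """Expected response rate of the pooled experimental arm when the
    n_direct direct-assignment patients respond at rate p_stage1 + delta."""
    expected_responders = n_stage1 * p_stage1 + n_direct * (p_stage1 + delta)
    return expected_responders / (n_stage1 + n_direct)


if __name__ == "__main__":
    # Under the null (both arms at 0.20 in Stage I), any nonzero delta makes
    # the pooled experimental rate differ from the Stage I control rate.
    for delta in (-0.20, -0.10, -0.05, -0.025, 0.0, 0.025, 0.05, 0.10, 0.20, 0.30):
        rate = pooled_treatment_rate(0.20, delta, n_stage1=25, n_direct=25)
        print(f"delta={delta:+.3f}  pooled experimental rate={rate:.4f}")
```

With equal Stage I and direct assignment sizes, the pooled rate moves by δ/2, which is one way to see why positive shifts inflate the type I error rate and negative shifts reduce it.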

A second sensitivity analysis addresses the timing of stage I analysis. In the proposed design, we consider a single analysis at one-half (f=0.50) accrual. As a sensitivity analysis, we considered analyses at one-third (f=0.33) and two-thirds (f=0.67) of accrual.

Finally, a variation of our proposed design would be to allow for an unbalanced (e.g. 4:1 or 5:1 randomization for experimental treatment versus control) design in Stage II when Stage I results are promising but not definitive to stop trial for evidence of efficacy. We refer to this as the “unbalanced randomized design.” This would be an intermediate option between our proposed design and an adaptive design. We explored this possibility with the option for 4:1 randomization, instead of direct assignment, in Stage II; specifying an overall alpha level of α=0.10, response rate ratio of RRR=2.00, and with interim at one-half accrual.

RESULTS

The results from the simulation study are summarized in Tables 2 and 3. Table 2 summarizes the distribution of interim analysis decisions across the 6,000 trials: the proportion of trials that stopped early for either efficacy or futility, or continued with direct assignment or balanced randomization, under both the null (RRR=1.0) and the alternative (RRR=2.0). Table 3 summarizes the operating characteristics: power and type I error rate. In general, the operating characteristics of our proposed design with the option for direct assignment are comparable to those of a balanced randomized design. For α=0.10 and the range of RRR values considered, the power varied from 79.3% to 99.3% for a design with the direct assignment option, versus from 80.6% to 99.7% for a balanced randomized design. For α=0.20, the power varied from 78.0% to 98.7% and from 79.3% to 99.0% for a design with the direct assignment option and a balanced randomized design, respectively. The maximum loss in power associated with implementing a design with the direct assignment option vs. a balanced randomized design is 1.7% (88.0% vs. 86.3%) at α=0.20 and RRR=2.25. The effect of the direct assignment option on power decreases with larger treatment effects. For instance, at α=0.10, the decrease in power for detecting a RRR of 2.0 is 1.3% (80.6% vs. 79.3% for the balanced randomized design and the design with the direct assignment option, respectively), compared with 0.4% (99.7% vs. 99.3%) for detecting a RRR of 3.0. The type I error rate increased slightly for a design with the direct assignment option (11.5%, vs. 10.4% for a balanced randomized design at the nominal alpha-level of α=0.10, and 21.8%, vs. 19.7% for a balanced randomized design at α=0.20).

Table 2.

Distribution of Interim Analysis Decisions after Stage 1 for 1-β = 0.80, under null (RRR=1.0) and alternative (RRR=2.0), across 6,000 simulated trials.

Number of trials (%):

| Scenario | α | Stop early for efficacy | Continue to Stage II, Adopt Direct Assignment | Continue to Stage II, Randomize | Stop early for futility |
|---|---|---|---|---|---|
| RRR=1.0 (Null) | 0.10 | 138 (2) | 479 (8) | 2198 (37) | 3185 (53) |
| RRR=1.0 (Null) | 0.20 | 480 (8) | 704 (12) | 2278 (38) | 2538 (42) |
| RRR=2.0 (Alternative) | 0.10 | 1808 (30) | 1803 (30) | 1974 (33) | 415 (7) |
| RRR=2.0 (Alternative) | 0.20 | 2452 (41) | 1341 (22) | 1736 (29) | 471 (8) |
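The decision counts in Table 2 can be combined with the accrual rule from the design (N/2 on early stopping, 3N/4 with direct assignment, N with continued randomization) to approximate the average number of patients enrolled. This is a derived illustration rather than a result reported in the study; the names are hypothetical, and N/2 is treated as a fractional value for N=101.

```python
# Fraction of the maximum sample size accrued under each interim decision.
ACCRUAL_FRACTION = {
    "stop_efficacy": 0.5,
    "direct_assignment": 0.75,
    "randomize": 1.0,
    "stop_futility": 0.5,
}

# Decision counts for alpha = 0.10 across 6,000 simulated trials (Table 2).
COUNTS_NULL = {"stop_efficacy": 138, "direct_assignment": 479,
               "randomize": 2198, "stop_futility": 3185}
COUNTS_ALT = {"stop_efficacy": 1808, "direct_assignment": 1803,
              "randomize": 1974, "stop_futility": 415}


def expected_accrual(counts, n_max):
    """Average accrual implied by the observed distribution of decisions."""
    total = sum(counts.values())
    return sum(ACCRUAL_FRACTION[k] * n_max * c / total for k, c in counts.items())


if __name__ == "__main__":
    n_max = 101  # maximum sample size for alpha = 0.10 (Table 1)
    print("null       :", round(expected_accrual(COUNTS_NULL, n_max), 1))
    print("alternative:", round(expected_accrual(COUNTS_ALT, n_max), 1))
```

Under these assumptions the average accrual is roughly 71 patients under the null and roughly 75 under the alternative, compared with a maximum of 101.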

Table 3.

Comparison of Operating Characteristics for a Design with direct assignment option vs. a Balanced randomized design. 6,000 trial simulations; 1-β = 0.80; pcontrol = 0.20, Stage I analysis at half of accrual (f=0.50); Total sample size planned N = 101 and 65 for α = 0.10 and 0.20, respectively.

| Measure | Response Rate Ratio (ptreat / pcontrol) | α = 0.10: Balanced Randomized Design | α = 0.10: Design with Direct Assignment Option | α = 0.20: Balanced Randomized Design | α = 0.20: Design with Direct Assignment Option |
|---|---|---|---|---|---|
| Power (%) | 2.00 | 80.6 | 79.3 | 79.3 | 78.0 |
| Power (%) | 2.25 | 90.5 | 89.0 | 88.0 | 86.3 |
| Power (%) | 2.50 | 96.5 | 95.5 | 94.5 | 93.2 |
| Power (%) | 3.00 | 99.7 | 99.3 | 99.0 | 98.7 |
| Type I Error Rate (%) | - | 10.4 | 11.5 | 19.7 | 21.8 |

Sensitivity Analyses

Population Shift

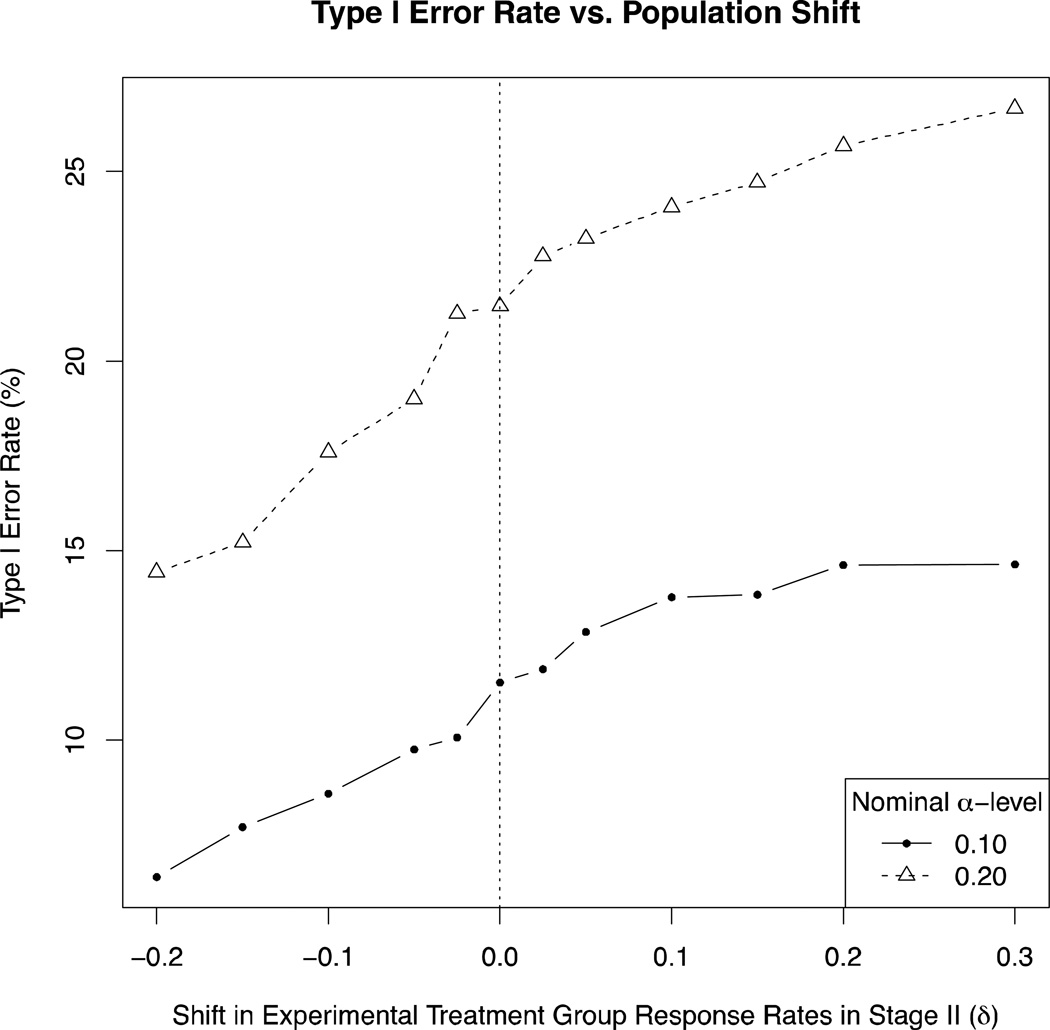

Type I error rate as a function of population shift size is presented in Figure 2. Since we only assumed a population shift in the case when the direct assignment option is adopted, we compared properties of a design with the direct assignment option across different population shift values; the balanced randomized design would not be affected by a population shift and thus is not included in comparisons. For α=0.10 (Figure 2, solid line, solid circles), the empirical type I error rate of a design with the direct assignment option, assuming no population shift (i.e. δ=0) was 11.5%. For small, but plausible, positive shifts (i.e. δ=+0.025 and +0.05), there was minimal effect on empirical type I error rate (type I error rates: 11.9% and 12.9%). As expected, the type I error rate decreased with negative population shifts (reaching a minimum of 6.4% for a shift of δ=−0.20), and increased with positive population shifts (reaching a maximum of 14.6% for the unlikely shift of δ=+0.30). The increase in type I error rate at a nominal α-level of 0.10 was at most 3.1% (from 11.5% to 14.6%, associated with a shift of δ=+0.30). For a nominal α-level of 0.20 (Figure 2, dotted line, open triangles), the empirical type I error rate of a design with the direct assignment option, assuming no population shift (i.e. δ=0) was 21.5%. The maximum increase in type I error rate was 5% (associated with a shift of δ=+0.30). Although the increase in type I error rate is, as expected, greater for higher nominal α-levels, it is still relatively minor.

Figure 2.

Type I error rate as a function of shift in response rate for experimental treatment group, only if Direct Assignment is adopted (vertical dotted line at δ=0, i.e. no population shift). Nominal alpha level = 0.10 (solid line, solid circles) and 0.20 (dotted line, open triangles).

We also considered the effect of population shift on power. For α=0.10, the empirical power of a design with the direct assignment option, assuming no population shift was 79.3%. The power substantially decreased with extreme negative population shifts (63.0% for a shift of δ=−0.20), and minimally increased with positive population shifts (79.2%, 80.6%, and 82.3% for shifts of δ=+0.025, +0.05, and +0.30, respectively).

Timing of Stage I analysis

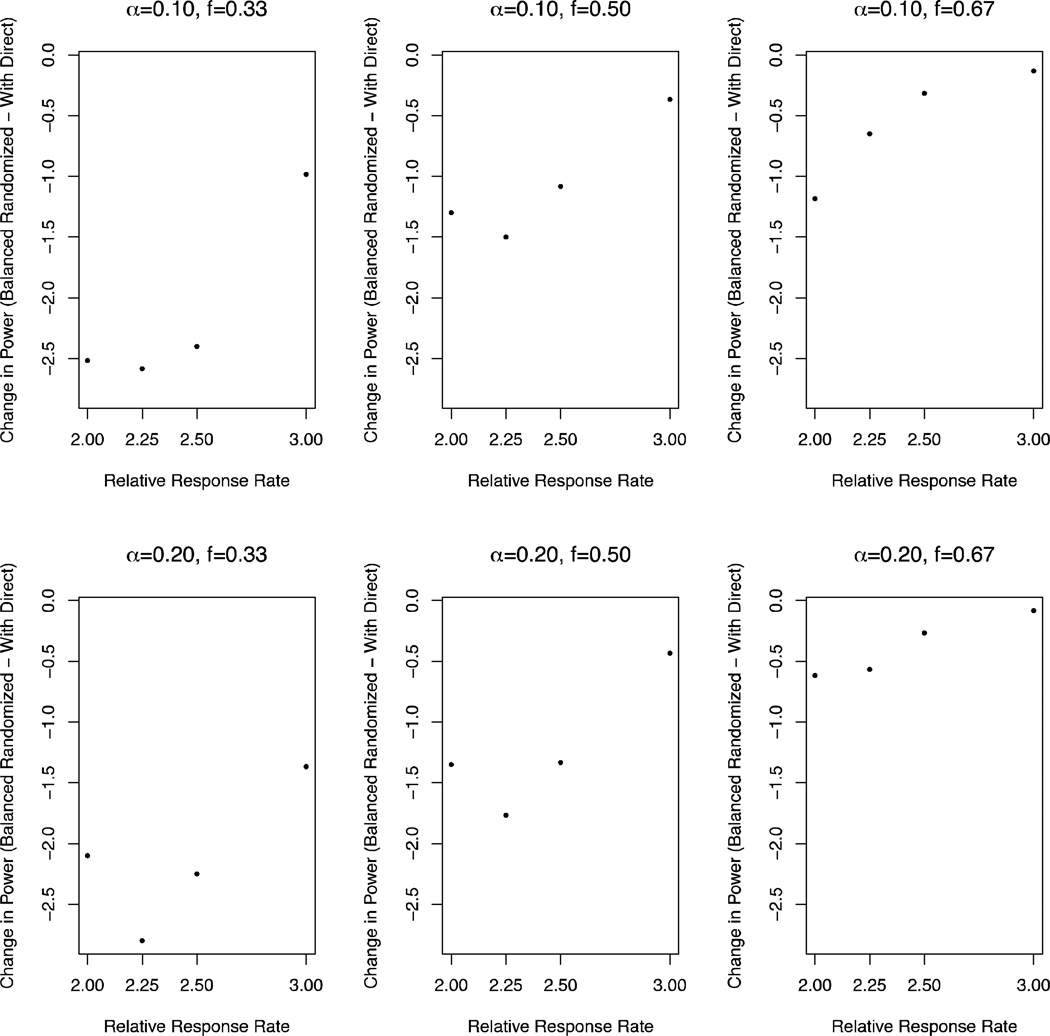

Figure 3 displays the change in power as a function of timing of Stage I analysis. In general, the later the interim analysis, the smaller the loss in power and the increase in type I error rate associated with a design with the direct assignment option compared to a balanced randomized design. For interim after 1/3 accrual (f=0.33), the loss in power (over RRR=2.0, 2.25, 2.5, 3.0; and α=0.10, 0.20) ranged from 1.0% to 2.8%; and for interim after 2/3 accrual (f=0.67), the loss in power ranged from 0.08% to 1.2%. For interim after 1/3 accrual, the increase in type I error rate was 3.0% and 5.0% for α=0.10 and 0.20, respectively; and for interim after 2/3 accrual, the increase was 0.6% and 0.4% for α=0.10 and 0.20, respectively.

Figure 3.

Change in power from a Balanced randomized design to a Design with direct assignment option, as a function of timing of Stage I analysis (f=0.33, 0.50, 0.67 accrual; by columns); overall alpha level (α=0.10, 0.20; by rows); and response rate ratio (RRR=2.0, 2.25, 2.5, 3.0; on x-axis).

Unbalanced randomization

The loss in power (to detect a RRR of 2.0 at α=0.10) associated with an unbalanced randomized (4:1) design compared to a balanced randomized design is 0.4% (80.2% vs. 80.6%). This is marginally less than the loss in power associated with a design with the direct assignment option compared to a balanced randomized design (1.3%; 79.3% vs. 80.6%). This result is expected, since unbalanced randomized designs are less powerful than balanced randomized designs, and the design with a direct assignment option is the most extreme case of an unbalanced randomized design. The unbalanced randomized (4:1) design has essentially no effect on the type I error rate at α=0.10 compared to a balanced randomized design (both 10.4%).
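The ordering of the three designs can be illustrated with the standard error of the difference in response rates for a fixed total sample size. The sketch below is a single-stage simplification with assumed values (100 patients in total, response rates 0.40 and 0.20) and a hypothetical function name; in the designs studied here the imbalance applies only to Stage II, so the actual effect on power is much smaller.

```python
from math import sqrt


def se_difference(p_t, p_c, n_total, ratio):
    """Standard error of the difference in response rates when n_total
    patients are allocated ratio:1 (experimental:control)."""
    n_t = n_total * ratio / (ratio + 1)
    n_c = n_total - n_t
    return sqrt(p_t * (1 - p_t) / n_t + p_c * (1 - p_c) / n_c)


if __name__ == "__main__":
    for ratio in (1, 2, 4, 5):
        se = se_difference(0.40, 0.20, n_total=100, ratio=ratio)
        print(f"{ratio}:1 allocation  SE = {se:.4f}")
```

A larger standard error for the same true difference means a smaller expected z statistic, which is why power falls as the allocation becomes more unbalanced.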

DISCUSSION

Testing of novel targeted agents with predictive biomarkers in clinical trials requires new strategies. In the case of a targeted agent with promising efficacy, it is desirable to allow as many patients as possible to receive the new treatment. Several studies have been conducted to investigate factors associated with participation in cancer clinical trials. Among breast cancer patients, one of the principal drawbacks of participating in clinical trials was the potential for receiving the less effective treatment [12]. The direct assignment phase of our proposed design removes the uncertainty regarding treatment assignment (associated with randomized treatment assignment) when an initial promising benefit has been observed. Such certainty regarding the treatment received in a clinical trial is only possible with direct assignment. Even outcome-adaptive designs with re-weighted randomization probabilities based on accumulating outcome data do not provide this level of certainty.

Colton [10,11] previously proposed several designs that involved directly assigning patients to one of two treatment arms, which differs from our proposed design in two key ways. First, the parameters in his design were driven by minimization of the cost associated with treating patients, which is in contrast to our objectives, which are to control for power and Type I error rates. Another key distinction is that in the Colton designs, the second stage always goes to direct assignment. In our proposed design, a data-derived decision is made at interim based on Stage I data regarding whether or not to direct assign in Stage II. We emphasize that our design does not always switch to direct assignment in Stage II. Only when there is convincing though not definitive evidence from Stage I does the trial design switch to direct assignment. In the absence of such evidence, the trial continues with randomization in Stage II. As such, the direct assignment option provides an “extended confirmation phase” as an alternative to stopping the trial early for efficacy, which may help to avoid possibly prematurely launching into a Phase III trial. This latter benefit of our design is especially important given the high failure rates of Phase III trials [13].

Our simulation studies demonstrate that the addition of a direct assignment option, in the absence of extreme population shift, results in similar statistical operating characteristics relative to a balanced randomized design. This relatively minimal loss in power (Table 3) is in part explained by the probability that a trial adopts the direct assignment option, since it is only with direct assignment that power is lost relative to balanced randomization. Table 2 presents the proportion of the 6,000 simulated trials that adopted direct assignment, under both the null (RRR=1.0) and the alternative (RRR=2.0). For α-levels 0.10 and 0.20, the probability that a trial adopts direct assignment is 0.30 and 0.22, respectively, under the alternative. This is high enough to make the design with a direct assignment option attractive yet, as we have found in our simulation, not so high as to lead to a substantial loss in power.

Based on our sensitivity analyses, we believe that the risk of false trial conclusions associated with a plausible population shift in our proposed design is minor. In addition, the timing of the Stage I analysis appears to have little effect on the loss in power. A design that allows for extremely unbalanced randomization (e.g. 4:1) in Stage II is slightly more powerful than a design with a direct assignment option in Stage II, and could be considered as an alternative to our design. Nevertheless, the direct assignment design has only a marginal loss of power and provides the advantage of certainty of treatment received.

One could imagine various extensions to our proposed design. For example, a design could plan for two interim analyses, for example at one-third and two-thirds accrual, but with the option for direct assignment only available after the second interim analysis (i.e. the first interim analysis is for futility only). Extensions to our design to incorporate continuous or time-to-event endpoints are also a possibility. Further, while we utilized an enrichment design in the marker-positive group as a framework to our design, it may be of interest to extend to a situation involving multiple marker group(s). A final possibility, if ethical, would be to use a placebo control throughout the trial, where both the patient and the treating physician are blinded to the treatment assignment. In this case, if in Stage II the direct assignment option is adopted, then while the randomization would continue at the patient level (so the patient and the treating physician are unaware that the design has switched to a direct assignment), the placebo arm would be discontinued and all patients would be assigned to the experimental arm.

Biomarker-based clinical trial design is a rapidly developing field. In the spectrum of designs proposed to date, with adaptive designs comprising one end and fixed balanced randomized designs the other, our proposed design with a direct assignment option in Stage II provides a possible middle ground. It provides (relative) logistical simplicity, while still allowing adaptation based on accumulated data, and maintaining desirable statistical properties. We believe that this design may have greater appeal to clinicians and patients, and could in fact be used for a cytotoxic agent since no decisions are made with respect to the targeted versus an overall hypothesis.

Acknowledgments

FINANCIAL SUPPORT: Mandrekar and Sargent: National Cancer Institute Grants No CA-15083 (Mayo Clinic Cancer Center) and CA-25224 (North Central Cancer Treatment Group)

Appendix

Interim (Stage I) decision based on number of responses

The interim decision is based on the cut-offs of the Stage I p-values. However, since we use a normal-based z-test, we can translate these decision cut-offs into the approximate number of excess responses needed in the experimental arm relative to the control arm. General expressions are derived below, but first consider an example with n=50 per arm in Stage I, overall Type I error rate α=0.10, the null hypothesis that pcontrol = ptreat = 0.2, and the OF stopping boundaries given in Table 1. In this case, if the number of excess responses in the experimental arm relative to the control arm is at least 8, then the trial stops early for efficacy; if the excess is either 6 or 7, then the trial continues into Stage II with direct assignment; if the excess is between 1 and 5, then the trial continues to Stage II with randomization; otherwise the trial stops early for futility. In other words, to stop for futility, the number of responses on the experimental arm exceeds those on the control arm by at most 1.

Here we derive general expressions for translating the Stage I stopping rules into numbers of responses. At the Stage I analysis, we test the null hypothesis that pcontrol = ptreat using data collected in Stage I. In particular, let n denote the Stage I sample size for each group and p denote the null hypothesized common response rate; then the test statistic calculated under the null hypothesis is:

$$Z = \frac{\hat{p}_1 - \hat{p}_2}{\sqrt{2p(1-p)/n}} = \frac{X_1 - X_2}{\sqrt{2np(1-p)}}$$

where p̂1, p̂2 denote the observed response rates in the treatment and control groups, respectively; and X1, X2 denote the observed number of responses in the treatment and control groups, respectively. At the end of Stage I, we “stop the trial early for efficacy” when the Stage I p-value is less than c1, which corresponds to

$$X_1 - X_2 > z_{c_1}\sqrt{2np(1-p)}$$

where z_q denotes the (1-q)*100th percentile of a standard normal distribution for 0≤q≤1. Similarly, we “continue to Stage II with direct assignment” when the Stage I p-value is between c1 and d, which corresponds to

$$z_{d}\sqrt{2np(1-p)} < X_1 - X_2 \le z_{c_1}\sqrt{2np(1-p)}$$

“continue to Stage II with randomization” when the Stage I p-value is between d and c2, which corresponds to

$$z_{c_2}\sqrt{2np(1-p)} < X_1 - X_2 \le z_{d}\sqrt{2np(1-p)}$$

and “stop the trial early for futility” when the Stage I p-value is greater than c2, which corresponds to

$$X_1 - X_2 \le z_{c_2}\sqrt{2np(1-p)}$$
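The translation from p-value cut-offs to excess responses can be checked numerically. The sketch below assumes the normal-approximation statistic (X1 − X2)/sqrt(2np(1−p)) with n patients per arm and null response rate p, and uses the α=0.10 boundaries from Table 1 with the n=50, p=0.2 example. The function name is hypothetical, and the thresholds are left unrounded because the text describes the integer cut-offs as approximate.

```python
from math import sqrt
from statistics import NormalDist


def excess_threshold(cutoff, n, p):
    """Excess responses X1 - X2 at which the one-sided p-value equals cutoff,
    under the normal approximation with n per arm and null response rate p."""
    z = NormalDist().inv_cdf(1 - cutoff)  # upper-tail standard normal quantile
    return z * sqrt(2 * n * p * (1 - p))


if __name__ == "__main__":
    n, p = 50, 0.2
    boundaries = {"c1 (early efficacy)": 0.0200,
                  "d (direct assignment)": 0.0940,
                  "c2 (early futility)": 0.4566}
    for name, cutoff in boundaries.items():
        print(f"{name}: excess above {excess_threshold(cutoff, n, p):.2f}")
```

With these inputs the thresholds come out near 8.2, 5.3 and 0.4 excess responses, broadly in line with the approximate integer cut-offs quoted in the example above.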

Footnotes

CONFLICTS OF INTEREST: None.

REFERENCES

1. Mandrekar SM, Grothey A, Goetz MP, Sargent DJ. Clinical trial designs for prospective validation. American Journal of Pharmacogenomics. 2005;5(5):317–325. doi: 10.2165/00129785-200505050-00004.
2. Sargent DJ, Conley BA, Allegra C, Collette L. Clinical trial designs for predictive marker validation in cancer treatment trials. J Clin Oncol. 2005;23(9):2020–2027. doi: 10.1200/JCO.2005.01.112.
3. Simon R, Wang SJ. Use of genomic signatures in therapeutics development in oncology and other diseases. The Pharmacogenomics Journal. 2006;6:166–173. doi: 10.1038/sj.tpj.6500349.
4. Wang SJ, O’Neill RT, Hung HMJ. Approaches to evaluation of treatment effect in RCT with genomic subset. Pharmaceut Statist. 2007;6:227–244. doi: 10.1002/pst.300.
5. Lee JJ, Gu X, Liu S. Bayesian adaptive randomization designs for targeted agent development. Clin Trials. 2010;7(5):584–596. doi: 10.1177/1740774510373120.
6. Simon R, Maitournam A. Evaluating the Efficiency of Targeted Designs for Randomized Clinical Trials. Clinical Cancer Research. 2004;10:6759–6763. doi: 10.1158/1078-0432.CCR-04-0496.
7. Smit EF, Dingemans AC, Thunnissen FB, Hochstenbach MM, van Suylen R-J, Postmus PE. Sorafenib in Patients with Advanced Non-small Cell Lung Cancer that Harbor K-Ras Mutations: A Brief Report. J Thoracic Oncol. 2010;5:719–720. doi: 10.1097/JTO.0b013e3181d86ebf.
8. Mellema WW, Dingemans AMC, Groen HJM, van Wijk A, Burgers S, Kunst PWA, et al. A phase II study of sorafenib in patients with stage IV non-small cell lung cancer (NSCLC) with KRAS mutation. AACR-IASLC. 2011. Abstract PR-5. doi: 10.1158/1078-0432.CCR-12-1779.
9. Acquaviva J, Smith D, Sang J, Wada Y, Proia DA. Targeting KRAS mutant NSCLC with the Hsp90 inhibitor ganetespib. AACR-IASLC. 2011. Abstract B1. doi: 10.1158/1535-7163.MCT-12-0615.
10. Colton T. A model for selecting one of two medical treatments. JASA. 1963;58(302):388–400.
11. Colton T. A two-stage model for selecting one of two treatments. Biometrics. 1965;21(1):169–180.
12. Avis NE, Smith KW, Link CL, Hortobagyi GN, Rivera E. Factors Associated with Participation in Breast Cancer Treatment Clinical Trials. J Clin Oncol. 2006;24(12):1860–1867. doi: 10.1200/JCO.2005.03.8976.
13. Kola I, Landis J. Can the pharmaceutical industry reduce attrition rates? Nat Rev Drug Discov. 2004;3:711–715. doi: 10.1038/nrd1470.