Abstract

The role of dopamine in reward is a topic of debate. For example, some have argued that phasic dopamine signaling provides a prediction-error signal necessary for stimulus-reward learning, whereas others have hypothesized that dopamine is not necessary for learning per se, but for attributing incentive motivational value (“incentive salience”) to reward cues. These psychological processes are difficult to tease apart, because they tend to change together. To disentangle them we took advantage of natural individual variation in the extent to which reward cues are attributed with incentive salience, and asked whether dopamine (specifically in the core of the nucleus accumbens) is necessary for the expression of two forms of Pavlovian conditioned approach behavior - one in which the cue acquires powerful motivational properties (sign-tracking) and another closely related one in which it does not (goal-tracking). After acquisition of these conditioned responses (CRs), intra-accumbens injection of the dopamine receptor antagonist flupenthixol markedly impaired the expression of a sign-tracking CR, but not a goal-tracking CR. Furthermore, dopamine antagonism did not produce a gradual extinction-like decline in behavior, but maximally impaired expression of a sign-tracking CR on the very first trial, indicating the effect was not due to new learning (i.e., it occurred in the absence of new prediction-error computations). The data support the view that dopamine in the accumbens core is not necessary for learning stimulus-reward associations, but for attributing incentive salience to reward cues, transforming predictive CSs into incentive stimuli with powerful motivational properties.

Keywords: rat, sign-tracking, goal-tracking, motivation, learning

Introduction

There is general agreement that dopamine signaling within mesolimbic brain circuitry contributes to reward, but its exact role is less clear. One view is that phasic dopamine activity provides a prediction-error signal necessary for learning stimulus-reward associations (Montague et al., 1996; Schultz et al., 1997; Bayer & Glimcher, 2005). In contrast, others have argued that dopamine is not necessary for learning, but for attributing incentive salience to reward cues (Berridge & Robinson, 1998; Berridge, 2012; Zhang et al., 2012). It is difficult to parse these psychological functions, because the predictive and incentive values of reward-associated stimuli are strongly correlated and often change together. However, individuals vary in the extent to which they attribute reward cues with motivational properties, and this variation can be exploited to dissociate these components of reward (Berridge & Robinson, 2003; Flagel et al., 2009; Robinson & Flagel, 2009).

When a Pavlovian conditional stimulus (CS) predicts delivery of a food reward (US), only in some animals (sign-trackers, STs; Hearst & Jenkins, 1974) does the cue become attractive, eliciting approach towards it, and become desired, in that animals will work to obtain it (Robinson & Flagel, 2009). For others (goal-trackers, GTs; Boakes, 1977) the cue itself is not attractive, and is less desirable, but nevertheless, it comes to reliably evoke conditioned approach towards the location of impending food delivery (Robinson & Flagel, 2009; Flagel et al., 2011b; Lomanowska et al., 2011). Thus, the cue is an equally predictive and effective CS for both STs and GTs, and it comes to evoke a conditioned response (CR) in both, but only in STs is the predictive CS attributed with incentive salience, rendering it an attractive and desirable “incentive stimulus” (Flagel et al., 2009).

Flagel et al. (2011b) took advantage of this variation to examine the role of dopamine in stimulus-reward learning. They reported that learning a ST CR, but not a GT CR, was associated with transfer of a phasic dopamine signal from the US to CS, and systemic injection of a dopamine antagonist prevented learning of a ST CR, but not a GT CR (see also Danna & Elmer, 2010). They suggested, therefore, that dopamine plays a selective role in attributing incentive salience to reward cues during learning. Flagel et al. (2011b) also reported that the performance of both sign and goal-tracking was impaired by dopamine antagonism. However, it is difficult to interpret this result, because the effects occurred at doses that also produced nonspecific reductions in motor activity. The purpose of this study was to further explore the role of dopamine in the performance of these two forms of Pavlovian conditioned approach, after they were acquired, using intracerebral drug administration to obviate nonspecific effects of dopamine antagonism on behavior. We focused on the core of the nucleus accumbens (NAcC), because of the considerable evidence this region is critical in mediating the learning and performance of motivated behaviors (Ikemoto & Panksepp, 1999; Cardinal et al., 2002), and because it shows dopamine prediction-error signals (Day et al., 2007).

Materials and Methods

Subjects

Male Sprague Dawley rats (N=53) (Harlan, IN) weighing 275–325 grams at surgery were individually housed in a temperature and humidity controlled colony room kept on a 12-hr light/12-hr dark cycle (lights on at 0800 hr). Water and food were available ad libitum (i.e., rats were not food deprived at any time). After arrival rats were given one week to acclimate to the colony room before testing began. During this period they were repeatedly handled by the experimenters. All procedures were approved by the University of Michigan Committee on the Use and Care of Animals (UCUCA).

Apparatus

Behavioral testing was conducted in standard (30.5 × 24.1 × 21 cm) test chambers (Med Associates Inc., St. Albans, VT, USA) located inside sound-attenuating cabinets. A ventilating fan masked background noise. For Pavlovian training each chamber had a food cup located in the center of one wall, 3 cm above a stainless steel grid floor. Head entries into the food cup were recorded by breaks of an infrared photobeam located inside. A retractable lever that could be illuminated from behind was located 2.5 cm to the left or right of the food cup, approximately 6 cm above the floor. The location of the lever with respect to the food cup was counterbalanced across rats. On the wall opposite the food cup, a red house light remained illuminated throughout all experimental sessions. Lever deflections and beam breaks were recorded using Med Associates software.

Surgery

Rats were anesthetized with ketamine hydrochloride (100 mg/kg i.p.) and xylazine (10 mg/kg i.p.) and positioned in a stereotaxic apparatus (David Kopf Instruments, Tujunga, CA). The skull of each rat was leveled and chronic guide cannulae (22 gauge stainless steel; Plastics One) were inserted bilaterally 2 mm above the target site in the NAcC (relative to Bregma: anterior +1.8 mm; lateral +1.6 mm; ventral −5.0 mm). Guide cannulae were secured with skull screws and acrylic cement, and wire stylets (28 gauge, Plastics One) were inserted to prevent occlusion. After surgery, rats received antibiotic and carprofen (5 mg/kg) for pain. Rats were allowed to recover from surgery for at least 7 days before testing began.

Microinjections

Dopamine receptor blockade was achieved with microinjections of the relatively nonspecific dopamine receptor antagonist, flupenthixol (Sigma, St. Louis, MO). We chose a nonspecific antagonist in order to block all actions of endogenous dopamine within the NAcC, to assess the general (i.e., not specific to a particular receptor) function of dopamine in the expression of different forms of Pavlovian conditioned approach behavior. Flupenthixol was administered in four doses: 0, 5, 10, and 20 μg in 0.9% sterile saline. Drug doses were based on previous studies (e.g., Di Ciano et al., 2001). Intracerebral microinjections were made through 28 gauge injector cannulae (Plastics One) lowered to the injection site in the NAcC (ventral −7.0 mm relative to skull), 2 mm below the ventral tip of the guide cannulae. During infusions, rats were gently held by the experimenter. All infusions were administered bilaterally in a volume of 0.5 μl/side, delivered over 90 s using a syringe pump (Harvard Apparatus, Holliston, MA) connected to microinjection cannulae via PE-20 tubing. After infusions, the injectors were left in place for 60 s to allow for drug diffusion before being withdrawn and replaced with wire stylets. All infusions were separated by at least 1 additional day of behavioral testing without treatment.

Procedure

Pavlovian training

Pavlovian training procedures were similar to those described previously (Flagel et al., 2007; Saunders & Robinson, 2010). For two days prior to the start of training, 10 banana-flavored pellets (45 mg, BioServe, #F0059; Frenchtown, NJ) were placed in the home cages to familiarize the rats with this food. Approximately one week after surgery, rats were placed in the test chambers, with the lever retracted, and trained to retrieve pellets from the food cup by presenting 25 45-mg banana pellets on a variable time (VT) 30-sec schedule. All rats retrieved the pellets and therefore the next day they began Pavlovian training. Each trial consisted of insertion (and simultaneous illumination) of the lever (CS) into the chamber for 8 s, after which time the lever was retracted and a single food pellet (US) was immediately delivered into the adjacent food cup. Each training session consisted of 25 trials in which CS-US pairings occurred on a variable time (VT) 90-s schedule (the time between CS presentations varied randomly between 30 and 150 s). Lever deflections, food cup entries during the 8-s CS period, latency to the first lever deflection, latency to first food cup entry during the CS period, and food cup entries during the inter-trial interval were measured.
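The trial timing described above can be illustrated with a minimal sketch. It assumes that the interval between CS presentations is drawn uniformly from 30 to 150 s (which gives the stated 90-s mean) and that it is measured from lever retraction to the next lever insertion; neither detail is specified in the text, and the function name `vt_schedule` is hypothetical.

```python
import random


def vt_schedule(n_trials=25, min_iti=30.0, max_iti=150.0, cs_duration=8.0, seed=None):
    """Return (cs_onset, us_delivery) times in seconds for one session.

    Assumptions (not stated in the Methods): intervals are uniform on
    [min_iti, max_iti] and run from lever retraction to the next insertion.
    """
    rng = random.Random(seed)
    t = 0.0
    trials = []
    for _ in range(n_trials):
        t += rng.uniform(min_iti, max_iti)  # wait before the next CS
        cs_onset = t
        us_delivery = cs_onset + cs_duration  # pellet delivered at lever retraction
        trials.append((cs_onset, us_delivery))
        t = us_delivery
    return trials


session = vt_schedule(seed=1)
print(len(session), "trials; session length (s):", round(session[-1][1], 1))
```

With 25 trials, a 90-s mean interval, and an 8-s CS, a session built this way lasts roughly 40 minutes on average.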

Quantification of behavior using an index of Pavlovian conditioned approach (PCA)

For some analyses rats were classed into three groups: (1) Those who preferentially interacted with the lever (“sign-trackers”, STs), (2) those who preferentially interacted with the food cup during lever presentation (“goal-trackers”, GTs), and (3) those who had no clear preference for the lever or food cup (“intermediates”, INs). The extent to which behavior was lever (CS) or food-cup directed was quantified using a composite index (Lovic et al., 2011; Saunders & Robinson, 2011; Meyer et al., In press) that incorporated three measures of Pavlovian conditioned approach: (1) the probability of either deflecting the lever or entering the food cup during each CS period [P(lever) − P(food cup)]; (2) the response bias for contacting the lever or the food cup during each CS period [(#lever deflections − #food-cup entries)/(#lever deflections + #food-cup entries)]; and (3) the latency to contact the lever or the food cup during the CS period [(food-cup entry latency − lever deflection latency)/8]. Thus, the Pavlovian conditioned approach index (PCA Index) score consisted of [(Probability difference score + Response bias score + Latency difference score)/3]. This formula produces values on a scale ranging from −1.0 to +1.0, where scores approaching −1.0 represent a strong food cup-directed bias and scores approaching +1.0 represent strong lever-directed bias. The average PCA Index score for days 4 and 5 of training was used to class rats. Rats were designated STs if they obtained an average index score of +0.5 or greater (which means they directed their behavior towards the lever at least twice as often as to the food cup), and as GTs if they obtained a score of −0.5 or less. The remaining rats within the −0.49/+0.49 range were classed as INs.
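As an illustration of how the composite index could be computed, a minimal sketch follows. The latency term is taken here as food-cup latency minus lever latency, so that faster lever contact yields a positive score consistent with the stated scale; this sign convention, the use of session-mean latencies, and the function names are assumptions made for the example.

```python
def pca_index(p_lever, p_cup, n_lever, n_cup, lat_lever, lat_cup, cs_duration=8.0):
    """Composite PCA Index in [-1, +1]; positive values mean lever-directed bias.

    p_*   : probability of a lever deflection / food-cup entry per CS period
    n_*   : total lever deflections / food-cup entries during CS periods
    lat_* : mean latency (s) to first contact (assumed <= cs_duration)
    """
    prob_diff = p_lever - p_cup
    total = n_lever + n_cup
    bias = (n_lever - n_cup) / total if total else 0.0
    # Assumed sign convention: short lever latency -> positive score.
    latency = (lat_cup - lat_lever) / cs_duration
    return (prob_diff + bias + latency) / 3.0


def classify(index):
    """Apply the cutoffs given in the text: ST >= +0.5, GT <= -0.5, else IN."""
    if index >= 0.5:
        return "ST"
    if index <= -0.5:
        return "GT"
    return "IN"


# Hypothetical rat that contacts the lever on every trial and never the cup.
score = pca_index(1.0, 0.0, 50, 0, 1.0, 8.0)
print(round(score, 3), classify(score))
```

In practice the index would be averaged over training days 4 and 5 before applying the cutoffs.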

Experiment 1: The effect of flupenthixol on two forms of Pavlovian conditioned approach behavior: 10 min between drug treatment and testing

Training and microinjection tests

Rats initially underwent Pavlovian training for 8 consecutive days, with no drug pretreatment, as described above. After the 8th day of training, by which time conditioned responding had stabilized, rats were given a vehicle microinjection before the next training session. Each rat was subsequently given an injection of each of three doses of flupenthixol (5, 10, and 20 μg) in a counterbalanced order, followed by a second vehicle injection before the final session. After all microinjections, rats were placed in holding boxes for approximately 10 minutes before being moved to the testing chambers for the start of the session. The days rats received microinjections were separated by 1–2 days of additional Pavlovian training without pretreatment to ensure conditioned responding was maintained between treatments.

Video coding of orienting behavior

A subset of STs (n=8) was video recorded during vehicle and flupenthixol administration sessions. Video was scored offline to quantify approach/contact and orientation to the CS. An orientation response was scored if the rat made a head and/or body movement in the direction of the lever during the period it was extended, even if it did not approach or contact the CS. A contact was scored if the rat approached and touched the lever with its nose, mouth, and/or forepaw, even if contact failed to produce a deflection of the lever.

Experiment 2: The effect of flupenthixol on lever (CS) directed Pavlovian conditioned approach behavior: 35 min between drug treatment and testing

Training and microinjection tests

A separate group of STs (n=11) were tested in order to investigate the time course of flupenthixol effects found in Experiment 1 (see below). These rats received Pavlovian training exactly as in Experiment 1, for 10 sessions, then received vehicle and flupenthixol (20 μg) injections, in a counterbalanced order, before separate test sessions. Following microinjections, rats were placed in holding chambers for 35 minutes and then moved to the testing chambers for the start of the session.

Extinction

After the last test with a drug injection all rats were trained for three additional days, to once again stabilize performance, and then all underwent extinction training over the next four consecutive days. For these four sessions, no food pellets were delivered upon lever retraction, but conditions were otherwise the same as during Pavlovian training. Rats received a vehicle microinjection before the first extinction session.

Histology

After the completion of all behavioral testing, rats were anesthetized with an overdose of sodium pentobarbital and their brains were removed and flash frozen in isopentane chilled to approximately −30 °C by a mixture of isopropyl alcohol and dry ice. Frozen brains were sectioned on a cryostat at a thickness of 60 μm, mounted on slides, air-dried, and stained with cresyl violet. Microinjection sites were verified by light microscopy and plotted onto drawings from a rat brain atlas (Paxinos & Watson, 2007).

Statistical Analyses

Linear mixed-models (LMM) analyses of variance (ANOVA) were used for all repeated-measures data. The best-fitting model of repeated measures covariance was determined by the lowest Akaike information criterion score (Verbeke, 2009). Depending on the model selected, the degrees of freedom were adjusted to a non-integer value; however, according to journal instructions, we report the unadjusted degrees of freedom alongside the LMM results. Significant interactions were followed by main effects and planned comparisons. Bonferroni corrections were used for planned comparisons between vehicle and each drug dose where appropriate. Paired t tests were used to compare mean order scores of IN rats and to compare mean orientation and contact behavior from video coded data. Statistical significance was set at p < 0.05.
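Two of the steps above, choosing a covariance structure by the lowest AIC and applying a Bonferroni correction to planned comparisons, can be sketched generically as follows. This is not the analysis code used in the study; the log-likelihoods, parameter counts, and p values are hypothetical numbers chosen only to show the arithmetic.

```python
def best_by_aic(models):
    """Pick the model with the lowest AIC, where AIC = 2k - 2*lnL.

    models maps a label to (log_likelihood, n_parameters).
    """
    aic = {name: 2 * k - 2 * ll for name, (ll, k) in models.items()}
    return min(aic, key=aic.get), aic


def bonferroni(p_values):
    """Multiply each p value by the number of comparisons, capped at 1.0."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical fits of two candidate covariance structures.
candidates = {"compound_symmetry": (-100.0, 2), "unstructured": (-98.0, 10)}
best, scores = best_by_aic(candidates)
print(best, scores)

# Hypothetical raw p values for vehicle vs. 5, 10, and 20 ug comparisons.
print(bonferroni([0.01, 0.02, 0.5]))
```

Equivalently, the Bonferroni step can be expressed as comparing each raw p value against 0.05 divided by the number of comparisons.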

Also note that in Experiment 1 we found that the drug did not start to have much effect until about halfway through the session, and so we conducted Experiment 2 to determine if this was because of a delayed onset of drug action. We determined that, indeed, there was a delay before the drug exerted its full effect. Therefore, the data shown for Experiment 1 (Figures 2, 4, 5, and 7) are from trials 13–25, because this is when we determined the drug exerted its full behavioral effects (see Figure 6A). The full session data were also analyzed for all measures described, and similar effects were found. For the sake of brevity, we chose not to present the full session data, but only data from trials 13–25 for Figures 2, 4, 5, and 7. Although both analyses produced a similar pattern of results, the effect is most clearly illustrated in the later trials, not surprisingly, because only in these trials was the drug exerting its full effect.

Figure 2.

Effects of flupenthixol on two types of Pavlovian conditioned approach. Lever-directed (A–C) and food cup-directed (D–F) CRs were quantified for all rats tested in Experiment 1 (n = 42). Data presented correspond to trials 13–25 of experimental sessions. A, The probability of lever-directed CR occurrence. The probability of lever-directed CRs was significantly reduced after flupenthixol doses 10 μg (p = 0.001) and 20 μg (p < 0.001). B, Number of lever-directed CRs. The number of lever-directed CRs was reduced following each dose of flupenthixol (ps < 0.004). C, Latency to make a lever-directed CR. The latency of lever-directed CRs was significantly longer after 5 μg (p = 0.021) as well as 10 and 20 μg (ps < 0.001) doses of flupenthixol. D, Probability of food cup-directed CR occurrence. E, Number of food cup-directed CRs. The highest flupenthixol dose (20 μg) produced a small but significant reduction in the number of food cup-directed CRs (p = 0.022). F, Latency to make a food cup-directed CR. *p < 0.05, **p < 0.01 (relative to vehicle). Error bars indicate SEM.

Figure 4.

Effects of flupenthixol on STs (n=16) and GTs (n=13). Data presented correspond to trials 13–25 of experimental sessions. A, Probability of making an ST or GT CR. B, Number of ST or GT CRs (Statistical analysis was done on the raw data, but in B the data are expressed as a % of vehicle, in order to directly illustrate the relative size of the effect of flupenthixol that would otherwise be obscured by group baseline differences in total responding). C, Latency to make an ST or GT CR. D, Number of non-CS food cup entries. *p < 0.05, **p < 0.01 (relative to vehicle). Error bars indicate SEM.

Figure 5.

Effects of flupenthixol on response topography and order of responding. Data presented correspond to trials 13–25 of experimental sessions. A, Proportion of CUP trials (CUP FIRST + CUP ONLY trials), LEVER trials (LEVER FIRST + LEVER ONLY trials), and NONE trials for STs, GTs, and INs following vehicle (top circles) or 20 μg flupenthixol (bottom circles). Flupenthixol specifically reduced LEVER trials, but not CUP trials, and increased NONE trials, in all groups. B, Effect of flupenthixol on the order of responding in INs. An order score was calculated as follows: [(# LEVER FIRST trials - # CUP FIRST trials)/total # BOTH trials]. Following vehicle administration, INs had a positive order score, indicating a bias towards making a lever-directed CR first. After flupenthixol administration, this score reversed, indicating a bias towards making a food cup-directed CR first. **p < 0.01 (relative to vehicle). Error bars indicate SEM.

Figure 7.

Lever orientation and approach behavior. Data presented correspond to trials 13–25 of experimental sessions. Left bars, Video-scored probability of making a conditioned orienting response for a subset of STs (n=8) in Experiment 1 following vehicle and 20 μg flupenthixol. Middle bars, Video-scored probability of approaching the lever following vehicle and 20 μg flupenthixol. Right bars, Probability of making a computer-scored lever deflection following vehicle and 20 μg flupenthixol. **p < 0.01 (relative to vehicle). Error bars indicate SEM.

Figure 6.

Time course of flupenthixol and extinction effects on lever-directed CRs. Experiment 1 (A–B): 10-min delay between drug administration and testing. A, The number of lever-directed CRs among STs (n=16) across the 25-trial test session and B, on the first and last two trials, after vehicle and 20 μg flupenthixol administration. Experiment 2 (C–F): 35-min delay between drug administration and testing. C, Number of lever-directed CRs among STs (n=11) across the 25-trial test session and D, on the first and last two trials, after vehicle and 20 μg flupenthixol administration. E, Number of lever-directed CRs across the 25-trial test session for vehicle and extinction days 1, 2, and 4. F, Average number of lever-directed CRs on the first and last two trials of the vehicle session and extinction day 1. Error bars indicate SEM.

Results

Experiment 1: Individual variation in Pavlovian conditioned approach behavior

Figure 1 (top) illustrates the degree of individual variation in conditioned responding in the rats used in Experiment 1, by plotting the distribution of individual PCA Index scores (n=42). There was wide variation in the type and prevalence of different forms of Pavlovian conditioned approach behavior. The type of response made on each single trial was categorized as: (1) Contact with the food cup only (CUP ONLY), (2) contact with the lever only (LEVER ONLY), (3) contact with the food cup first followed by a lever contact (CUP FIRST; i.e., both responses occurred within the same 8-s CS period), (4) contact with the lever first followed by a food cup contact (LEVER FIRST), and (5) no food cup or lever contacts (NONE). The percentage of trials with each type of response was averaged over training sessions 4–8, which corresponded to the emergence of stable PCA behavior (see below), and for illustrative purposes we grouped rats into 4 subgroups based on their PCA Index scores. As shown in Figure 1 (bottom), the distribution of response types varied markedly as a function of PCA Index score. Rats with Index scores in the most negative range (−1.0 to −0.5) made over 90% CUP ONLY and CUP FIRST trials (Figure 1, left pie graph). Rats with scores between −0.49 and 0 were also biased toward the food cup on a majority of trials, showing 59% CUP ONLY and CUP FIRST trials, but also exhibited substantial lever-directed behavior, with 30% LEVER ONLY and LEVER FIRST trials (Figure 1, second pie graph). Within the 0 to +0.49 score range, rats were biased towards the lever on a majority of trials, with 74% LEVER FIRST and LEVER ONLY trials, but still had a sizable proportion of food cup trials, with 20% CUP ONLY and CUP FIRST trials (Figure 1, third pie graph). Finally, rats with scores from +0.5 to +1.0 made over 90% LEVER ONLY and LEVER FIRST responses (Figure 1, right pie graph). 
Note that rats with scores on the extreme ends of the Index distribution almost exclusively made ONLY responses, whereas rats with intermediate scores had large numbers of BOTH trials (i.e., CUP FIRST or LEVER FIRST).
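The five-way trial categorization described above amounts to a simple decision rule on which contacts occurred during the 8-s CS period and in what order. The sketch below assumes each trial is summarized by the latency to the first lever contact and the first food-cup entry (`None` when no contact occurred); this data layout, the tie-breaking rule, and the function names are hypothetical.

```python
from collections import Counter


def classify_trial(lever_latency, cup_latency):
    """Label one trial from first-contact latencies (s from CS onset, or None)."""
    if lever_latency is None and cup_latency is None:
        return "NONE"
    if cup_latency is None:
        return "LEVER ONLY"
    if lever_latency is None:
        return "CUP ONLY"
    # Both responses occurred; an exact tie is treated as CUP FIRST (assumption).
    return "LEVER FIRST" if lever_latency < cup_latency else "CUP FIRST"


def response_percentages(labels):
    """Percentage of trials falling into each response type."""
    counts = Counter(labels)
    return {label: 100.0 * n / len(labels) for label, n in counts.items()}


trials = [(1.2, None), (0.8, 5.0), (None, 2.1), (None, None)]
labels = [classify_trial(lever, cup) for lever, cup in trials]
print(labels)
print(response_percentages(labels))
```

CUP FIRST and LEVER FIRST trials together correspond to the BOTH trials referred to in the text.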

Figure 1.

Individual variation in Pavlovian conditioned approach behavior. The top section shows PCA Index scores for individual rats (n=42) used in Experiment 1. Moving from left to right, scores range from −1.0 (food-cup biased) to +1.0 (lever biased). The bottom section illustrates the proportion of different response types for the rats with PCA Index scores within four different ranges. Five response types were possible on a given trial: (1) Contact with the food cup only (CUP ONLY), (2) contact with the lever only (LEVER ONLY), (3) contact with the food cup first followed by a lever contact (CUP FIRST; i.e., both responses occurred within the same 8-s CS period), (4) contact with the lever first followed by a food cup contact (LEVER FIRST), and (5) no food cup or lever contact (NONE).

Flupenthixol selectively impairs the expression of lever (CS) directed behavior

The effect of flupenthixol on two forms of Pavlovian conditioned approach behavior was analyzed in two different ways. In the first analysis rats were not subdivided into groups based on their behavior, but the analysis was based on the type of conditioned response (CR) observed on every individual trial, in each individual rat tested in Experiment 1 (i.e., independent of a rat’s PCA Index score). A lever-directed CR was defined as a trial on which a rat made either a LEVER ONLY or a LEVER FIRST response (Figure 2, panels A–C). A food cup-directed CR was defined as a trial on which a rat made either a CUP ONLY or CUP FIRST response (Figure 2, panels D–F). As noted in the Methods section (Statistical Analyses), the data presented in Figure 2 correspond to trials 13–25 of experimental sessions, to capture the period when the drug exerted its effect (see below).

Probability

A two-way ANOVA comparing the effect of flupenthixol (vehicle, 5, 10, or 20 μg) as a function of CR type (lever versus food cup-directed responses) showed that flupenthixol reduced the probability of responding during the CS period (effect of treatment, F(3,41) = 17.444, p < 0.001), but this effect varied depending on whether the CR was directed towards the lever or towards the food cup (CR type X treatment interaction, F(3,41) = 5.036, p = 0.005; Figure 2A and 2D). One-way ANOVAs revealed that flupenthixol dose-dependently reduced the probability of a lever-directed CR (effect of treatment, F(3,41) = 9.378, p < 0.001; Figure 2A), but had no significant effect on the probability of a food cup-directed CR (no effect of treatment, F(3,41) = 0.330, p = 0.803; Figure 2D).

Contacts

Similarly, flupenthixol reduced the total amount of responding, indicated by the total number of contacts made during CS presentation (effect of treatment, F(3,41) = 19.450, p < 0.001), but the magnitude of the effect again varied by type of CR (CR type X treatment interaction, F(3,41) = 3.988, p = 0.015; Figure 2B and 2E). Flupenthixol produced a reduction in number of lever deflections at all doses tested (effect of treatment, F(3,41) = 9.675, p < 0.001; Figure 2B). There was a significant effect of flupenthixol on the number of head entries into the food cup during the CS period (effect of treatment, F(3,41) = 4.305, p = 0.010), but this effect was more modest, as indicated by the significant interaction (above) and that the effect was significant only after treatment with the highest dose of flupenthixol (Figure 2E).

Latency

Finally, the latency to make a CR upon CS presentation was increased by flupenthixol (effect of treatment, F(3,41) = 19.540, p < 0.001), but only for lever-directed responses (CR type X treatment interaction, F(3,41) = 4.953, p = 0.005; Figure 2C and 2F). One-way ANOVAs revealed that the latency to contact the lever was increased (effect of treatment, F(3,41) = 13.779, p < 0.001; Figure 2C), but the latency to make a head entry into the food cup was unaffected (no effect of treatment, F(3,41) = 1.871, p = 0.151; Figure 2F).

Flupenthixol influences behavior to a greater extent in sign-trackers than goal-trackers

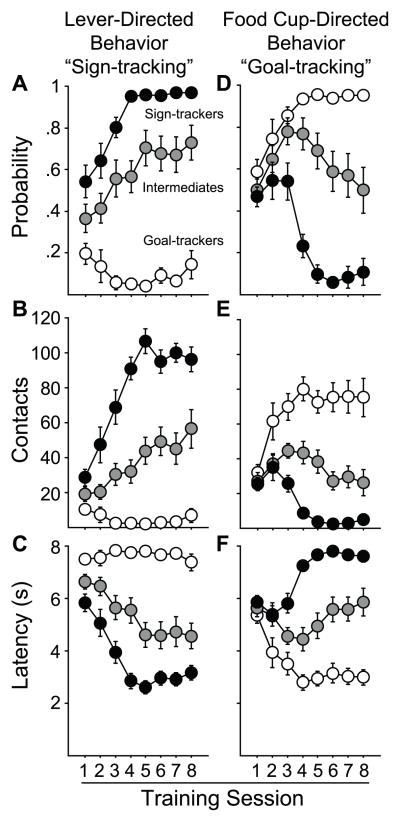

We next analyzed the data by classing rats as STs or GTs based on their PCA Index score, as described above and elsewhere (Saunders & Robinson, 2011). GTs were defined as rats with PCA scores between −1.0 and −0.5, who made over 90% CUP ONLY and CUP FIRST trials during training (Figure 1, left pie graph). STs were defined as rats with PCA scores between +0.5 and +1.0, who made over 90% LEVER ONLY and LEVER FIRST trials during training (Figure 1, right pie graph). Intermediates (INs) had PCA scores between −0.49 and +0.49 (Figure 1, middle pie graphs). Figure 3 shows the time course of learning Pavlovian conditioned approach responses in animals grouped in this fashion, across the initial 8 days of training. Similar to previous reports (Flagel et al., 2007), with training, rats classed as STs developed a high probability of rapidly approaching and vigorously engaging the lever (Figure 3A–C), rarely contacting the food cup. In contrast, rats classed as GTs learned to rapidly approach and enter the food cup upon CS onset, and rarely contacted the lever itself (Figure 3D–F). The approach behavior of INs vacillated between lever and food cup, as they showed a similar likelihood of contacting the lever or entering the food cup during lever extension, and did so with similar latencies. Thus, these data clearly illustrate that, as a function of CS-US pairing, both STs and GTs acquired a conditioned response (they both learned), as we have reported previously (Robinson & Flagel, 2009; Saunders & Robinson, 2010; Yager & Robinson, 2010); they simply directed their conditioned approach responses to different places.

Figure 3.

Pavlovian conditioned approach training. Lever-directed behavior (“sign-tracking”, A–C) and food cup-directed behavior (“goal-tracking”, D–F) across 8 days of training for rats classed as sign-trackers (STs, n=16), goal-trackers (GTs, n=13), or intermediates (INs, n=13). Error bars indicate SEM.

Figure 4 shows the effects of flupenthixol on STs and GTs. INs were excluded from this analysis because we wanted to directly compare two groups that differed markedly in the extent to which they attributed incentive salience to the CS. Additionally, the data presented in Figure 4 correspond to trials 13–25 of experimental sessions (see above).

Probability

A two-way ANOVA showed that flupenthixol significantly altered the probability of approach behavior (effect of treatment, F(3,28) = 25.834, p < 0.001; Figure 4A) but the magnitude of this effect was greater in STs than GTs (group X treatment interaction, F(3,28) = 8.384, p < 0.001). Independent one-way ANOVAs revealed that flupenthixol reduced the probability of approach in STs (effect of treatment, F(3,28) = 37.936, p < 0.001; Figure 4A) at doses of 10 and 20 μg. The effect in GTs (effect of treatment, F(3,28) = 3.174, p = 0.041; Figure 4A) was significant only at the highest dose tested (p = 0.04).

Contacts

Flupenthixol administration also influenced how avidly animals responded, as indicated by number of contacts (effect of treatment, F(3,28) = 31.451, p < 0.001; Figure 4B, data expressed as % of vehicle contacts), but only in STs (group X treatment interaction, F(3,28) = 14.677, p < 0.001). Independent one-way ANOVAs showed that flupenthixol decreased the number of times STs engaged the lever (effect of treatment, F(3,28) = 43.470, p < 0.001; Figure 4B), and did so at all doses tested (ps < 0.001). In contrast, flupenthixol had no significant effect on the number of head entries into the food cup in GTs (no effect of treatment, F(3,28) = 1.716, p = 0.183; Figure 4B).

Latency

Finally, flupenthixol administration influenced the rapidity of approach, measured as the latency from CS onset to the first lever deflection or head entry (effect of treatment, F(3,30.012) = 21.966, p < 0.001; Figure 4C), but again, this effect varied as a function of group (group X treatment interaction, F(3,28) = 9.751, p < 0.001). Independent one-way ANOVAs showed that flupenthixol increased the latency of approach to the CS in STs (effect of treatment, F(3,28) = 28.122, p < 0.001; Figure 4C), and did so at all doses tested (ps < 0.001). However, although the effect of flupenthixol on latency in GTs was statistically significant based on the one-way ANOVA (effect of treatment, F(3,28) = 3.048, p = 0.044; Figure 4C), none of the paired comparisons revealed a statistically significant effect of any given dose.

Figure 4D shows the number of food cup head entries rats made during the inter-trial interval (ITI), the period between CS presentations, which serves as an indirect measure of the effect of flupenthixol on general motor activity. GTs made more non-CS food cup entries than STs (effect of group, F(3,28) = 5.698, p = 0.024). However, by the end of training their rate of food cup entries during the CS period (mean = 0.376 entries/s, SEM = 0.055) was much higher than during non-CS periods (mean = 0.072 entries/s, SEM = 0.010) (see also Meyer et al., 2012), indicating that GTs discriminated between CS and non-CS periods. Importantly, there was no effect of flupenthixol administration on the number of inter-trial interval food cup entries made by either group (no effect of treatment, F(3,28) = 0.132, p = 0.94), indicating that flupenthixol did not impair general motor activity at the doses we used.

Effect of flupenthixol on the topography of the CR in STs, GTs and INs

Figure 5A shows a more detailed analysis of the effects of just the highest dose of flupenthixol (20 μg) on different types of CRs in STs, GTs and INs (as defined above and shown in Figure 1). For the sake of simplicity, CUP FIRST and CUP ONLY trials were grouped together (CUP trials) and LEVER FIRST and LEVER ONLY trials were grouped together (LEVER trials). Figure 5A shows that in all groups flupenthixol reduced the proportion of LEVER trials, but not CUP trials, and increased NONE trials. Goal-trackers: As expected, after vehicle GTs made mostly CUP trials (78% CUP, 16% LEVER, and 6% NONE trials). Following 20 μg flupenthixol, CUP trials were modestly reduced to 70% of trials, while LEVER trials were halved to 8% and NONE trials increased to 22% (Figure 5A, left pie graphs). Intermediates: After vehicle INs exhibited roughly equal proportions of CUP (42%) and LEVER (41%) trials, with 17% NONE trials. Administration of 20 μg flupenthixol selectively reduced LEVER trials to 19% and increased NONE trials to 41%, while leaving CUP trials unaffected, at 40% (Figure 5A, middle pie graphs). Sign-trackers: When treated with vehicle STs made LEVER responses 97% of the time, with 0% CUP, and 3% NONE trials. Flupenthixol administration altered this response pattern by reducing the proportion of LEVER trials by roughly half, to 43%, and increasing NONE trials to 42%. Interestingly, CUP trials in STs increased modestly, to 15% (Figure 5A, right pie graphs). In summary, the effect of flupenthixol was to primarily decrease LEVER trials and to increase NONE trials, with only modest effects on CUP trials, regardless of the phenotype.
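The grouping used for Figure 5A can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `trial_proportions` and the example session are hypothetical and are not taken from the study's analysis code or data; only the five trial-type labels and their grouping into CUP, LEVER and NONE follow the text.

```python
from collections import Counter

# Trial-type labels as named in the text, mapped onto the three grouped categories.
GROUPS = {
    "CUP FIRST": "CUP",
    "CUP ONLY": "CUP",
    "LEVER FIRST": "LEVER",
    "LEVER ONLY": "LEVER",
    "NONE": "NONE",
}

def trial_proportions(trial_types):
    """Return the percentage of CUP, LEVER and NONE trials in one session."""
    if not trial_types:
        raise ValueError("need at least one trial")
    counts = Counter(GROUPS[t] for t in trial_types)
    n = len(trial_types)
    return {g: 100.0 * counts[g] / n for g in ("CUP", "LEVER", "NONE")}

# Hypothetical 10-trial session, for illustration only (not data from this study).
session = (["LEVER ONLY"] * 4 + ["LEVER FIRST"] * 2
           + ["CUP FIRST", "CUP ONLY"] + ["NONE"] * 2)
print(trial_proportions(session))  # {'CUP': 20.0, 'LEVER': 60.0, 'NONE': 20.0}
```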

We next sought to further characterize the effect of flupenthixol on IN rats, which exhibit substantial numbers of both LEVER and CUP responses during a single CS period (i.e., BOTH responses). Thus, we analyzed trials in which rats both contacted the CS and entered the food cup during a single CS period (BOTH trials) and calculated an order score [(# LEVER FIRST trials − # CUP FIRST trials)/total # BOTH trials] ranging from −1.0 to +1.0, to determine which response occurred first. A positive order score represents a tendency to contact the lever first, followed by the food cup, on BOTH trials, whereas a negative score indicates a bias towards contacting the food cup first. Figure 5B shows the average order scores for INs following vehicle and flupenthixol administration (for simplicity, flupenthixol doses were collapsed). After vehicle, INs tended to approach the lever first on BOTH trials. After flupenthixol administration, this order bias reversed and INs showed a tendency to approach the food cup first. A paired t test revealed that this change in order score was significant (t(9) = 3.315, p = 0.009).
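As a worked illustration of the order score defined above, the following Python sketch computes it from trial counts. It assumes that the total number of BOTH trials equals the sum of LEVER FIRST and CUP FIRST trials (every BOTH trial has one response that came first); the function name and the example counts are hypothetical and are not data from this study.

```python
def order_score(lever_first: int, cup_first: int) -> float:
    """Order score = (# LEVER FIRST - # CUP FIRST) / total # BOTH trials.

    Assumes total BOTH trials = lever_first + cup_first.
    """
    both = lever_first + cup_first
    if both == 0:
        raise ValueError("order score is undefined when there are no BOTH trials")
    return (lever_first - cup_first) / both

# Hypothetical counts, for illustration only.
print(order_score(lever_first=6, cup_first=2))  # lever-first bias: 0.5
print(order_score(lever_first=2, cup_first=6))  # cup-first bias: -0.5
```

The score is bounded by −1.0 (all BOTH trials are CUP FIRST) and +1.0 (all BOTH trials are LEVER FIRST), and is 0 when the two orders are equally frequent.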

Experiment 2: Time course of flupenthixol effects on STs

We next examined the time course of flupenthixol’s effect on the amount of lever-directed behavior specifically in STs, on a trial-by-trial basis, during the test session when they were treated with the highest dose of flupenthixol (20 μg). In Experiment 1, after flupenthixol administration, there was no effect on lever contacts during the first few trials (relative to vehicle), but over the test session there was a gradual decrease in responding, as indicated by a significant trial X treatment interaction (F(24, 312) = 1.643, p = 0.031; Figure 6A). This interaction is clearly illustrated by comparing responding on the first two trials to the last two trials of the test session (Figure 6B). Two possible mechanisms could explain this time course. (1) Delayed drug effects: It is possible that flupenthixol had not yet reached the full extent of its action in vivo within 10 min post-injection. (2) Pavlovian ‘extinction mimicry’: Administration of dopamine antagonists to rats during an instrumental food-seeking paradigm causes a progressive decrease in responding over time, even when food remains available (Wise et al., 1978), which has been interpreted as an extinction-like effect (but see Phillips et al., 1981; Salamone et al., 2012). To test whether the delayed suppression of sign-tracking seen in Experiment 1 was due to delayed drug action or to an extinction-like effect, we conducted a second experiment in a separate group of STs (n=11). In this experiment we imposed a 35-min delay after vehicle and flupenthixol (20 μg) microinjections before beginning testing. This delay was chosen because it was approximately the amount of time before flupenthixol produced a marked reduction in sign-tracking behavior in Experiment 1 (see Figure 6A).

As Figures 6C and 6D show, following a 35-min post-injection delay, the number of lever contacts was maximally suppressed on the very first trial. Comparison of lever contacts during the first and last two trials clearly shows the immediacy of the flupenthixol effect (Figure 6D). Over the course of the session there was a gradual decrease in the number of lever contacts after both vehicle and flupenthixol treatment, but the rate of this decrease was the same under both conditions (no trial X treatment interaction, F(24,240) = 0.748, p = 0.799). Note that rats were not food deprived in these experiments, and so the gradual decline in responding per trial across the test session, even after vehicle, may be because the rats became increasingly sated as the session progressed. In conclusion, these data suggest that the delayed onset of flupenthixol’s effect evident in Experiment 1 was not due to an extinction-like effect, but to a delay in peak drug effect, which is why data from trials 13–25 were selected for analysis in Experiment 1 (see above).

Extinction of ST behavior

In order to more directly contrast the effects of flupenthixol with Pavlovian extinction, the STs in Experiment 2 were given four sessions of PCA extinction training, during which no food pellets were delivered following lever retraction. Figure 6E shows the time course of lever deflections, trial-by-trial, during these extinction sessions, relative to the vehicle test session, when pellets were delivered. In contrast to flupenthixol administration, when there was a 35-min delay in testing (Figure 6C), on day 1 of extinction, sign-tracking behavior was initially identical to vehicle, and decreased gradually across the session. Examination of the first and last two trials clearly shows that the pattern of behavior on day 1 of extinction differed from that seen following flupenthixol, when testing was delayed by 35 min (compare Figure 6F and 6D). Days 2 and 4 of extinction are also shown in Figure 6E. While within-session extinction was clear by day 2, sign-tracking during the first trials of the session remained similar to vehicle levels even on day 4. Thus, the effect of flupenthixol, when testing was delayed by 35 min, had a very different temporal profile than the effect of extinction training, further indicating that flupenthixol did not produce an extinction-like effect (see also Phillips et al., 1981; Salamone et al., 2012).

Flupenthixol does not affect an orienting CR in STs

To further examine the possibility that flupenthixol decreased sign-tracking behavior because dopamine transmission in the NAcC is necessary to maintain the learned association between lever extension and reward delivery, we looked at the effect of flupenthixol on another CR that develops in response to presentation of a CS: a conditioned orienting response. We operationally defined this as a head and/or body movement in the direction of the lever, even if it did not bring the rat into close proximity to it (which would be classed as an approach response; see below). Importantly, we have found that with lever(CS)-US pairing, both STs and GTs acquire a conditioned orienting response, and they do not differ in their rate of learning this CR, even though only STs develop a high probability of approaching the lever (L.M. Yager, unpublished observations). To examine the role of dopamine in the expression of the orienting CR in STs, a subset of STs (n=8) from Experiment 1 were video recorded during the vehicle and 20 μg flupenthixol test sessions. This video was scored offline to quantify the occurrence of conditioned orienting behavior. As shown in Figure 7, after training, the probability of a conditioned orienting response on each trial was very high (over 90%), and this was not influenced by treatment with the high dose of flupenthixol (paired t test relative to vehicle, t(7) = 0.168, p = 0.436; left bars). This is important, because it establishes that after flupenthixol administration the learned CS-US association is still intact in STs, even though they do not approach the CS. As before, for data presented in Figure 7 we restricted our analysis to trials 13–25 of experimental sessions.

As an aside, the conditioned orienting response described here should not be confused with the conditioned orienting response described by Holland and his colleagues in a series of papers (e.g., Holland, 1977; Han et al., 1997). That CR consists of rearing in close proximity to a visual stimulus, which by our criteria would be classed as an approach response.

We also used this video to determine if the effect of flupenthixol on the expression of a ST CR could be because more effort was required to physically deflect the lever (recorded as a ST CR) than to break a photobeam (recorded as a GT CR). To do this we scored the occurrence of CS-evoked approach responses, independent of whether the lever was deflected. Thus, in this analysis approach was defined as merely coming into close proximity to the lever, touching it, but not necessarily deflecting it. This approach response is therefore directly comparable to approach to the food cup, and there is no difference in the “effort” required for a ST versus a GT response. Figure 7 shows that in STs flupenthixol decreased the probability of approaching the lever regardless of whether an approach response was scored by simple proximity to it (paired t test relative to vehicle, t(7) = 3.910, p = 0.003; middle bars), or by physically deflecting the lever (paired t test relative to vehicle, t(7) = 4.437, p = 0.002; right bars). Thus, when controlling for potential differences in effort between ST and GT CRs, dopamine antagonism still had a selective effect in reducing sign-tracking. This suggests that our results cannot be explained by the view that dopamine is involved in effort-related processes necessary for motivated behavior to occur (Robbins & Everitt, 1992; Salamone et al., 2007).

Histological verification of cannulae placements

Figure 8 illustrates the location of microinjection tips within the NAcC for rats used in Experiment 1. Placements for rats in Experiment 2 were similar (data not shown).

Figure 8.

Location of microinjection tips within the NAcC relative to Bregma for STs, GTs, and INs used in Experiment 1.

Discussion

Flagel et al. (2011b) reported that dopamine plays a very selective role in stimulus-reward learning – it is necessary to attribute incentive salience to cues predictive of reward, but not to learn a CS-US association (see also Danna & Elmer, 2010; Shiner et al., 2012). We extend that notion here, and report that dopamine in the NAcC also plays a selective role in the performance of Pavlovian CRs already acquired. Dopamine blockade within the NAcC markedly degraded the expression of Pavlovian conditioned approach behavior directed towards the CS itself (sign-tracking), but not conditioned approach behavior directed towards the food cup (goal-tracking, see also Chang et al., 2012). These findings have a number of implications for thinking about the role of mesolimbic dopamine in reward.

Mesolimbic dopamine as a prediction-error signal necessary for learning

Electrophysiological recordings from dopamine neurons in the ventral tegmental area and substantia nigra, and direct measures of release events within the NAcC, have shown that a phasic dopamine response transfers from an unexpected reward (US) to the CS that predicts reward delivery, over the course of training (Schultz et al., 1997; Pan et al., 2005; Day et al., 2007; Cohen et al., 2012). These studies led to the hypothesis that phasic dopamine transmission provides a prediction-error signal, coding the discrepancy between actual and predicted events, that is required for learning stimulus-reward associations (Montague et al., 1996; Schultz et al., 1997; Bayer & Glimcher, 2005). Therefore, blocking dopamine transmission within NAcC could be functionally equivalent to reward omission, which produces a pause in dopamine neuron firing (i.e., a negative prediction error; Schultz et al., 1997; McClure et al., 2003; Pan et al., 2005; Cohen et al., 2012), leading to new learning. If, under dopamine blockade, the predictive value of the CS was negatively adjusted, trial by trial, this could produce a gradual reduction in sign-tracking behavior, similar to that seen in instrumental responding (Wise et al., 1978).

The results suggest, however, that the effect of flupenthixol on sign-tracking behavior was not due to updating a prediction-error signal (i.e., new learning). With an optimal delay between flupenthixol administration and testing, the expression of sign-tracking behavior was maximally suppressed on the very first trial. This indicates that dopamine antagonism altered the value of the CS in the absence of new learning (i.e., without new prediction-error computations; see also Shiner et al., 2012). This is in contrast to the gradual, multi-session-long decay in sign-tracking observed when actual extinction conditions were in effect (see also Phillips et al., 1981). These results are complementary to previous studies in which dopaminergic activity was increased by drugs or sensitization, also producing an immediate increase in responding (Wyvell & Berridge, 2001; Tindell et al., 2005; Tindell et al., 2009; Smith et al., 2011; for review see Berridge, 2012). Of course, a learning-based interpretation also cannot account for why the GT and conditioned orienting CRs were not similarly decreased. However, as put by Berridge (2012, p. 1139), “advocates of dopamine-learning theories may reply that only some forms of reward learning require dopamine”. But he goes on to say, “so what particular forms of learning would those advocates suggest need dopamine?” “Pavlovian reward learning was the original source of the dopamine prediction-error hypothesis” … “if not for Pavlovian reward learning, then for what learning is dopamine needed?”

Mesolimbic dopamine as an incentive salience signal

It has been argued that the primary role of mesolimbic dopamine in reward is to attribute incentive salience to rewards and their associated cues, making them attractive and desirable, and capable of exerting motivational control over behavior (Berridge & Robinson, 1998; Berridge, 2007; Berridge, 2012). That is, dopamine in the accumbens is involved in transforming a predictive, but “cold” informational CS, into a “hot” motivating incentive stimulus. This concept was formalized in a recent computational model of incentive salience (Zhang et al., 2009; Berridge, 2012; Zhang et al., 2012). In contrast to traditional “model-free” forms of stimulus-reward learning (see Sutton, 1988; Sutton & Barto, 1998; Daw et al., 2005), which require that the cached learned value of a Pavlovian CS be updated incrementally, via new dopamine prediction errors (Schultz et al., 1997), the incentive salience model predicts that dopamine’s role is specifically in transforming the motivational value of learned CSs ‘on-the-fly’, without the need to re-experience CS-US pairing, as observed here.
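The contrast between these two accounts can be made concrete with a deliberately simplified Python sketch. It is not the model of Zhang et al. (2009), whose exact form is not reproduced here: the learning rate `alpha`, the gain factor `kappa`, the function names, and all numerical values are assumptions chosen only for illustration. The first function updates a cached CS value incrementally from a prediction error; the second rescales that cached value on the fly, without any new learning.

```python
def cached_value_update(v, reward, alpha=0.1):
    """One incremental, prediction-error-driven update of a cached CS value."""
    delta = reward - v  # prediction error on this trial
    return v + alpha * delta

def incentive_value(v, kappa):
    """Motivational value of the CS, rescaled on the fly by a gain factor."""
    return kappa * v

# Acquisition: repeated CS-US pairings drive the cached value towards 1.0.
v = 0.0
for _ in range(200):
    v = cached_value_update(v, reward=1.0)

# (a) Prediction-error account: if each trial acts like reward omission,
#     the cached value can only decline gradually, trial by trial.
v_decay = v
trace = []
for _ in range(5):
    v_decay = cached_value_update(v_decay, reward=0.0)
    trace.append(round(v_decay, 3))

# (b) On-the-fly account: lowering the gain (hypothetical kappa = 0.3)
#     reduces motivational value on the very first trial, while the
#     cached value v itself is left unchanged.
first_trial_value = incentive_value(v, kappa=0.3)

print(trace)
print(round(first_trial_value, 3), round(v, 3))
```

Under these assumptions, account (a) yields a stepwise decline across trials, whereas account (b) yields a full reduction on trial 1 with the learned value intact, which is the qualitative pattern the two hypotheses predict for behavior under dopamine blockade.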

In thinking about the differential effect of dopamine antagonism on the learning and expression of a ST CR vs. a GT CR it is important to consider the distinction between a conditional stimulus (CS) and an incentive stimulus, a distinction long emphasized by learning theorists (Konorski, 1967; Bindra, 1978; Toates, 1986; Dickinson & Balleine, 1994; Berridge, 2001; Dickinson & Balleine, 2002). Our recent studies on individual variation in the attribution of incentive salience to reward cues indicate that a perfectly effective CS may or may not also function as an incentive stimulus. Only in STs is the CS transformed into a powerfully attractive and desirable “motivational magnet” (Flagel et al., 2007; Flagel et al., 2009; Robinson & Flagel, 2009; Saunders & Robinson, 2010; Yager & Robinson, 2010; Flagel et al., 2011b; Meyer et al., 2012), and our results suggest that it is this transformation that requires dopamine in the NAcC. We suggest, therefore, that dopamine antagonism attenuates the learning (Flagel et al., 2011b) and performance (present results) specifically of a ST CR because it degrades the motivational properties of the CS, which are required for the CS to become attractive, but without necessarily compromising the CS-US association (Berridge & Robinson, 1998; Berridge, 2012).

Of course, dopamine neurons in the midbrain project to many other forebrain regions, including the nucleus accumbens shell, dorsal striatum, amygdala, prefrontal cortex, and hippocampus, and they are not homogeneous in their pattern of activity (Fields et al., 2007; Bromberg-Martin et al., 2010; Lammel et al., 2011; Witten et al., 2011), raising the possibility that dopamine signaling within other regions is necessary for learning stimulus-reward associations. However, recording and release studies cannot establish whether dopamine activity in any structure is necessary for any particular function; by their nature such studies are only correlative. It is important to keep in mind, therefore, that the systemic administration of flupenthixol, which would block dopamine receptors in all brain regions, failed to prevent the learning of a GT CR (Flagel et al., 2011b). This suggests that dopamine in no brain region is necessary for learning all CS-US associations. The data reported here are consistent with this notion (although, of course, we can only draw strong conclusions about the NAcC based on the data reported here).

The fact that dopamine antagonism had little effect on the performance (and learning, Flagel et al., 2011b) of a GT CR suggests, of course, that for GTs the CS did not function as an incentive stimulus. At first glance this may seem inconsistent with recent reports that systemic dopamine antagonism (Wassum et al., 2011) or inactivation of the nucleus accumbens via GABA receptor agonists (Blaiss & Janak, 2009) can decrease goal-directed CRs. However, an important procedural difference may explain the discrepancy. These studies assessed goal approach in response to an auditory CS, and when a tone is used as the CS all rats develop a GT CR (i.e., rats do not approach a tone CS; Cleland & Davey, 1983). As mentioned above, when a discrete localizable cue is used as the CS it serves as a more effective conditioned reinforcer in STs compared to GTs (Robinson & Flagel, 2009), but if an auditory CS is used, we have found that it is an effective conditioned reinforcer in both STs and GTs, suggesting that a tone cue is attributed with motivational properties in both STs and GTs (P.J. Meyer, unpublished observations). Thus, for any given individual, whether a cue acquires motivational properties may vary depending on sensory modality, and perhaps even the extent to which it can be engaged and manipulated (Holland, 1977; Chang et al., 2012). This also suggests that the form of the CR alone may not always indicate whether a CS is attributed with motivational properties, or whether performance of the CR is dopamine-dependent. Sometimes a CR directed to the location of reward delivery may reflect activation of a Pavlovian conditioned motivational state (and be dopamine-dependent) and other times not. Additional tests are required to determine the psychological and neurobiological processes underlying what may otherwise appear to be exactly the same behavior.

Nevertheless, here, expression of a goal-tracking CR did not require dopamine in the NAcC, and so we should consider what psychological process might underlie goal-tracking under these conditions. We can only speculate, but one possibility is that it is governed by a cognitive reward expectancy process (Bindra, 1978; Toates, 1986; Dickinson & Balleine, 1994). If presentation of the CS evokes an explicit cognitive representation of the outcome (US), this could result in goal-directed approach to the food cup to await delivery of the expected reward. Goal-directed instrumental behavior governed by explicit cognitive expectations (“instrumental incentives”) does not require dopamine (Dickinson et al., 2000; Yin et al., 2006; Lex & Hauber, 2010; Wassum et al., 2011), but instead may depend on endogenous opioid signaling (Wassum et al., 2009), which has also been implicated in some aspects of goal-tracking behavior (Mahler & Berridge, 2009; Difeliceantonio & Berridge, 2012). Therefore, the goal-directed approach seen here may be akin to behavior governed by an act-outcome association, which is thought to be dependent more on corticostriatal than mesolimbic circuits (Balleine & Dickinson, 1998; Daw et al., 2005), and may be related to so-called ‘model-based’ forms of learning (Tolman, 1948; Dayan & Balleine, 2002; Daw et al., 2005; Glascher et al., 2010). Consistent with this notion, cue-induced c-fos mRNA expression in corticostriatal regions was correlated in GTs, but not STs (Flagel et al., 2011a), suggesting the possibility of greater “top-down” cortical regulation of behavior in GTs.

Finally, we should note that the distinction between STs and GTs is not absolute, and although each shows a strong propensity to make a specific CR on any given trial (for as yet unknown reasons) most rats will still make the non-preferred CR on some trials. Of course, this vacillation is most pronounced in INs. This raises the interesting possibility that the psychological process that governs behavior may shift dynamically on a trial-by-trial basis, from one that is dopamine-dependent to one that is not, a hypothesis that can be tested.

In conclusion, we suggest that the role of dopamine in the NAcC in stimulus-reward learning is to attribute incentive salience to reward cues, transforming predictive CSs into powerful incentives, which not only can motivate normal behavior but are also more likely to instigate maladaptive behavior (Robinson & Berridge, 1993).

Acknowledgments

This research was supported by National Institute on Drug Abuse grants to B.T.S. (F31 DA030801) and T.E.R. (R37 DA004294 and P01 DA031656). The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute on Drug Abuse or the National Institutes of Health. We thank Elizabeth O’Donnell and Taylor Swabash for assistance with behavioral testing and Kent Berridge, Shelly Flagel, Paul Meyer, and Jocelyn Richard for helpful comments on an earlier draft of the manuscript.

Footnotes

The authors report no financial conflict of interest.

References

- Balleine BW, Dickinson A. Goal-directed instrumental action: contingency and incentive learning and their cortical substrates. Neuropharmacology. 1998;37:407–419. doi: 10.1016/s0028-3908(98)00033-1.

- Bayer HM, Glimcher PW. Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron. 2005;47:129–141. doi: 10.1016/j.neuron.2005.05.020.

- Berridge KC. Reward learning: Reinforcement, incentives, and expectations. In: Medin DL, editor. Psychology of Learning and Motivation: Advances in Research and Theory. Academic Press; San Diego: 2001. pp. 223–278.

- Berridge KC. The debate over dopamine’s role in reward: the case for incentive salience. Psychopharmacology (Berl) 2007;191:391–431. doi: 10.1007/s00213-006-0578-x.

- Berridge KC. From prediction error to incentive salience: mesolimbic computation of reward motivation. Eur J Neurosci. 2012;35:1124–1143. doi: 10.1111/j.1460-9568.2012.07990.x.

- Berridge KC, Robinson TE. What is the role of dopamine in reward: hedonic impact, reward learning, or incentive salience? Brain Res Rev. 1998;28:309–369. doi: 10.1016/s0165-0173(98)00019-8.

- Berridge KC, Robinson TE. Parsing reward. Trends Neurosci. 2003;26:507–513. doi: 10.1016/S0166-2236(03)00233-9.

- Bindra D. How adaptive behavior is produced: A perceptual-motivation alternative to response reinforcement. Behav Brain Sci. 1978;1:41–91.

- Blaiss CA, Janak PH. The nucleus accumbens core and shell are critical for the expression, but not the consolidation, of Pavlovian conditioned approach. Behav Brain Res. 2009;200:22–32. doi: 10.1016/j.bbr.2008.12.024.

- Boakes RA. Performance on learning to associate a stimulus with positive reinforcement. In: Davis H, Hurwitz H, editors. Operant-Pavlovian Interactions. Erlbaum; Hillsdale, NJ: 1977. pp. 67–97.

- Bromberg-Martin ES, Matsumoto M, Hikosaka O. Dopamine in motivational control: rewarding, aversive, and alerting. Neuron. 2010;68:815–834. doi: 10.1016/j.neuron.2010.11.022.

- Cardinal RN, Parkinson JA, Hall J, Everitt BJ. Emotion and motivation: the role of the amygdala, ventral striatum, and prefrontal cortex. Neurosci Biobehav Rev. 2002;26:321–352. doi: 10.1016/s0149-7634(02)00007-6.

- Chang SE, Wheeler DS, Holland PC. Roles of nucleus accumbens and basolateral amygdala in autoshaped lever pressing. Neurobiol Learn Mem. 2012;97:441–451. doi: 10.1016/j.nlm.2012.03.008.

- Cleland GG, Davey GC. Autoshaping in the rat: The effects of localizable visual and auditory signals for food. J Exp Anal Behav. 1983;40:47–56. doi: 10.1901/jeab.1983.40-47.

- Cohen JY, Haesler S, Vong L, Lowell BB, Uchida N. Neuron-type-specific signals for reward and punishment in the ventral tegmental area. Nature. 2012;482:85–88. doi: 10.1038/nature10754.

- Danna CL, Elmer GI. Disruption of conditioned reward association by typical and atypical antipsychotics. Pharmacol Biochem Behav. 2010;96:40–47. doi: 10.1016/j.pbb.2010.04.004.

- Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat Neurosci. 2005;8:1704–1711. doi: 10.1038/nn1560.

- Day JJ, Roitman MF, Wightman RM, Carelli RM. Associative learning mediates dynamic shifts in dopamine signaling in the nucleus accumbens. Nat Neurosci. 2007;10:1020–1028. doi: 10.1038/nn1923.

- Dayan P, Balleine BW. Reward, motivation, and reinforcement learning. Neuron. 2002;36:285–298. doi: 10.1016/s0896-6273(02)00963-7.

- Di Ciano P, Cardinal RN, Cowell RA, Little SJ, Everitt BJ. Differential involvement of NMDA, AMPA/kainate, and dopamine receptors in the nucleus accumbens core in the acquisition and performance of Pavlovian approach behavior. J Neurosci. 2001;21:9471–9477. doi: 10.1523/JNEUROSCI.21-23-09471.2001.

- Dickinson A, Balleine B. Motivational control of goal-directed action. Anim Learn Behav. 1994;22:1–18.

- Dickinson A, Balleine B. The role of learning in the operation of motivational systems. In: Pashler H, Gallistel R, editors. Stevens’ handbook of experimental psychology: Learning, Motivation, and Emotion. Wiley; New York: 2002. pp. 497–533.

- Dickinson A, Smith J, Mirenowicz J. Dissociation of pavlovian and instrumental incentive learning under dopamine antagonists. Behav Neurosci. 2000;114:468–483. doi: 10.1037//0735-7044.114.3.468.

- Difeliceantonio AG, Berridge KC. Which cue to ‘want’? Opioid stimulation of central amygdala makes goal-trackers show stronger goal-tracking, just as sign-trackers show stronger sign-tracking. Behav Brain Res. 2012;230:399–408. doi: 10.1016/j.bbr.2012.02.032.

- Fields HL, Hjelmstad GO, Margolis EB, Nicola SM. Ventral tegmental area neurons in learned appetitive behavior and positive reinforcement. Annu Rev Neurosci. 2007;30:289–316. doi: 10.1146/annurev.neuro.30.051606.094341. [DOI] [PubMed] [Google Scholar]

- Flagel SB, Akil H, Robinson TE. Individual differences in the attribution of incentive salience to reward-related cues: Implications for addiction. Neuropharmacology. 2009;56:139–148. doi: 10.1016/j.neuropharm.2008.06.027. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Flagel SB, Cameron CM, Pickup KN, Watson SJ, Akil H, Robinson TE. A food predictive cue must be attributed with incentive salience for it to induce c-fos mRNA expression in cortico-striatal-thalamic brain regions. Neuroscience. 2011a;196:80–96. doi: 10.1016/j.neuroscience.2011.09.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Flagel SB, Clark JJ, Robinson TE, Mayo L, Czuj A, Willuhn I, Akers CA, Clinton SM, Phillips PE, Akil H. A selective role for dopamine in stimulus-reward learning. Nature. 2011b;469:53–57. doi: 10.1038/nature09588. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Flagel SB, Watson SJ, Robinson TE, Akil H. Individual differences in the propensity to approach signals vs goals promote different adaptations in the dopamine system of rats. Psychopharmacology (Berl) 2007;191:599–607. doi: 10.1007/s00213-006-0535-8. [DOI] [PubMed] [Google Scholar]

- Glascher J, Daw N, Dayan P, O’Doherty JP. States versus rewards: dissociable neural prediction error signals underlying model-based and model-free reinforcement learning. Neuron. 2010;66:585–595. doi: 10.1016/j.neuron.2010.04.016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Han JS, McMahan RW, Holland P, Gallagher M. The role of an amygdalo-nigrostriatal pathway in associative learning. J Neurosci. 1997;17:3913–3919. doi: 10.1523/JNEUROSCI.17-10-03913.1997. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hearst E, Jenkins H. Sign-tracking: The stimulus-reinforcer relation and directed action. Monograph of the Psychonomic Society; Austin: 1974. [Google Scholar]

- Holland PC. Conditioned stimulus as a determinant of the form of the Pavlovian conditioned response. J Exp Psychol Anim Behav Process. 1977;3:77–104. doi: 10.1037//0097-7403.3.1.77. [DOI] [PubMed] [Google Scholar]

- Ikemoto S, Panksepp J. The role of nucleus accumbens dopamine in motivated behavior: a unifying interpretation with special reference to reward-seeking. Brain Res Brain Res Rev. 1999;31:6–41. doi: 10.1016/s0165-0173(99)00023-5. [DOI] [PubMed] [Google Scholar]

- Konorski J. Integrative activity of the brain: An interdisciplinary approach. University of Chicago Press; Chicago: 1967. [Google Scholar]

- Lammel S, Ion DI, Roeper J, Malenka RC. Projection-specific modulation of dopamine neuron synapses by aversive and rewarding stimuli. Neuron. 2011;70:855–862. doi: 10.1016/j.neuron.2011.03.025. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lex B, Hauber W. The role of nucleus accumbens dopamine in outcome encoding in instrumental and Pavlovian conditioning. Neurobiol Learn Mem. 2010;93:283–290. doi: 10.1016/j.nlm.2009.11.002. [DOI] [PubMed] [Google Scholar]

- Lomanowska AM, Lovic V, Rankine MJ, Mooney SJ, Robinson TE, Kraemer GW. Inadequate early social experience increases the incentive salience of reward-related cues in adulthood. Behav Brain Res. 2011;220:91–99. doi: 10.1016/j.bbr.2011.01.033. [DOI] [PubMed] [Google Scholar]

- Lovic V, Saunders BT, Yager LM, Robinson TE. Rats prone to attribute incentive salience to reward cues are also prone to impulsive action. Behav Brain Res. 2011;223:255–261. doi: 10.1016/j.bbr.2011.04.006.

- Mahler SV, Berridge KC. Which Cue to “Want?” Central Amygdala Opioid Activation Enhances and Focuses Incentive Salience on a Prepotent Reward Cue. J Neurosci. 2009;29:6500–6513. doi: 10.1523/JNEUROSCI.3875-08.2009.

- McClure SM, Daw ND, Montague PR. A computational substrate for incentive salience. Trends Neurosci. 2003;26:423–428. doi: 10.1016/s0166-2236(03)00177-2.

- Meyer PJ, Lovic V, Saunders BT, Yager LM, Flagel SB, Morrow JD, Robinson TE. Quantifying individual variation in the propensity to attribute incentive salience to reward cues. PLoS One. doi: 10.1371/journal.pone.0038987. In press.

- Meyer PJ, Ma ST, Robinson TE. A cocaine cue is more preferred and evokes more frequency-modulated 50-kHz ultrasonic vocalizations in rats prone to attribute incentive salience to a food cue. Psychopharmacology (Berl) 2012;219:999–1009. doi: 10.1007/s00213-011-2429-7.

- Montague PR, Dayan P, Sejnowski TJ. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. J Neurosci. 1996;16:1936–1947. doi: 10.1523/JNEUROSCI.16-05-01936.1996.

- Pan WX, Schmidt R, Wickens JR, Hyland BI. Dopamine cells respond to predicted events during classical conditioning: evidence for eligibility traces in the reward-learning network. J Neurosci. 2005;25:6235–6242. doi: 10.1523/JNEUROSCI.1478-05.2005.

- Paxinos G, Watson C. The rat brain in stereotaxic coordinates. Academic Press; New York, NY: 2007.

- Phillips AG, McDonald AC, Wilkie DM. Disruption of autoshaped responding to a signal of brain-stimulation reward by neuroleptic drugs. Pharmacol Biochem Behav. 1981;14:543–548. doi: 10.1016/0091-3057(81)90315-4.

- Robbins TW, Everitt BJ. Functions of dopamine in the dorsal and ventral striatum. Sem Neurosci. 1992;4:119–127.

- Robinson TE, Berridge KC. The neural basis of drug craving: an incentive-sensitization theory of addiction. Brain Res Rev. 1993;18:247–291. doi: 10.1016/0165-0173(93)90013-p.

- Robinson TE, Flagel SB. Dissociating the predictive and incentive motivational properties of reward-related cues through the study of individual differences. Biol Psychiatry. 2009;65:869–873. doi: 10.1016/j.biopsych.2008.09.006.

- Salamone JD, Correa M, Farrar A, Mingote SM. Effort-related functions of nucleus accumbens dopamine and associated forebrain circuits. Psychopharmacology (Berl) 2007;191:461–482. doi: 10.1007/s00213-006-0668-9.

- Salamone JD, Correa M, Nunes EJ, Randall PA, Pardo M. The behavioral pharmacology of effort-related choice behavior: dopamine, adenosine and beyond. J Exp Anal Behav. 2012;97:125–146. doi: 10.1901/jeab.2012.97-125.

- Saunders BT, Robinson TE. A cocaine cue acts as an incentive stimulus in some but not others: Implications for addiction. Biol Psychiatry. 2010;67:730–736. doi: 10.1016/j.biopsych.2009.11.015.

- Saunders BT, Robinson TE. Individual variation in the motivational properties of cocaine. Neuropsychopharmacology. 2011;36:1668–1676. doi: 10.1038/npp.2011.48.

- Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

- Shiner T, Seymour B, Wunderlich K, Hill C, Bhatia KP, Dayan P, Dolan RJ. Dopamine and performance in a reinforcement learning task: evidence from Parkinson’s disease. Brain. 2012 doi: 10.1093/brain/aws1083.

- Smith KS, Berridge KC, Aldridge JW. Disentangling pleasure from incentive salience and learning signals in brain reward circuitry. Proc Natl Acad Sci U S A. 2011;108:E255–E264. doi: 10.1073/pnas.1101920108.

- Sutton RS. Learning to predict by the methods of temporal differences. Mach Learn. 1988;3:9–44.

- Sutton RS, Barto AG. Reinforcement learning: An introduction. MIT Press; Cambridge, MA: 1998.

- Tindell AJ, Berridge KC, Zhang J, Pecina S, Aldridge JW. Ventral pallidal neurons code incentive motivation: amplification by mesolimbic sensitization and amphetamine. Eur J Neurosci. 2005;22:2617–2634. doi: 10.1111/j.1460-9568.2005.04411.x.

- Tindell AJ, Smith KS, Berridge KC, Aldridge JW. Dynamic computation of incentive salience: “wanting” what was never “liked”. J Neurosci. 2009;29:12220–12228. doi: 10.1523/JNEUROSCI.2499-09.2009.

- Toates F. Motivational Systems. Cambridge University Press; Cambridge, UK: 1986.

- Tolman EC. Cognitive maps in rats and men. Psychol Rev. 1948;55:189–208. doi: 10.1037/h0061626.

- Verbeke G. Linear mixed models for longitudinal data. Springer; New York, NY: 2009.

- Wassum K, Ostlund S, Maidment N, Balleine B. Distinct opioid circuits determine the palatability and the desirability of rewarding events. Proc Natl Acad Sci U S A. 2009;106:12512–12517. doi: 10.1073/pnas.0905874106.

- Wassum KM, Ostlund SB, Balleine BW, Maidment NT. Differential dependence of Pavlovian incentive motivation and instrumental incentive learning processes on dopamine signaling. Learn Mem. 2011;18:475–483. doi: 10.1101/lm.2229311.

- Wise RA, Spindler J, deWit H, Gerberg GJ. Neuroleptic-induced “anhedonia” in rats: pimozide blocks reward quality of food. Science. 1978;201:262–264. doi: 10.1126/science.566469.

- Witten IB, Steinberg EE, Lee SY, Davidson TJ, Zalocusky KA, Brodsky M, Yizhar O, Cho SL, Gong S, Ramakrishnan C, Stuber GD, Tye KM, Janak PH, Deisseroth K. Recombinase-driver rat lines: tools, techniques, and optogenetic application to dopamine-mediated reinforcement. Neuron. 2011;72:721–733. doi: 10.1016/j.neuron.2011.10.028.

- Wyvell CL, Berridge KC. Incentive sensitization by previous amphetamine exposure: Increased cue-triggered “wanting” for sucrose reward. J Neurosci. 2001;21:7831–7840. doi: 10.1523/JNEUROSCI.21-19-07831.2001.

- Yager LM, Robinson TE. Cue-induced reinstatement of food seeking in rats that differ in their propensity to attribute incentive salience to food cues. Behav Brain Res. 2010;214:30–34. doi: 10.1016/j.bbr.2010.04.021.

- Yin H, Zhuang X, Balleine B. Instrumental learning in hyperdopaminergic mice. Neurobiol Learn Mem. 2006;85:283–288. doi: 10.1016/j.nlm.2005.12.001.

- Zhang J, Berridge KC, Aldridge JW. Computational models of incentive-sensitization in addiction: Dynamic limbic transformation of learning into motivation. In: Gutkin B, Ahmed SH, editors. Computational Neuroscience of Drug Addiction. Springer; New York, NY: 2012. pp. 189–203.

- Zhang J, Berridge KC, Tindell AJ, Smith KS, Aldridge JW. A neural computational model of incentive salience. PLoS Comput Biol. 2009;5:e1000437. doi: 10.1371/journal.pcbi.1000437.