Abstract

The combination of incessant advances in sequencing technology producing large amounts of data and innovative bioinformatics approaches, designed to cope with this data flood, has led to new interesting results in the life sciences. Given the magnitude of sequence data to be processed, many bioinformatics tools rely on efficient solutions to a variety of complex string problems. These solutions include fast heuristic algorithms and advanced data structures, generally referred to as index structures. Although the importance of index structures is generally known to the bioinformatics community, the design and potency of these data structures, as well as their properties and limitations, are less understood. Moreover, the last decade has seen a boom in the number of variant index structures featuring complex and diverse memory-time trade-offs. This article brings a comprehensive state-of-the-art overview of the most popular index structures and their recently developed variants. Their features, interrelationships, the trade-offs they impose, but also their practical limitations, are explained and compared.

INTRODUCTION

Developments in sequencing technology continue to produce data at higher speed and lower cost. The resulting sequence data form a large fraction of the data processed in life sciences research. For example, de novo genome assembly joins relatively short DNA fragments together into longer contigs based on overlapping regions, whereas in RNA-seq experiments, cDNA is mapped to a reference genome or transcriptome. Further down the analysis pipeline, DNA and protein sequences are aligned to one another and similarity between aligned sequences is estimated to infer phylogenies (1). Although the type of sequences and applications varies widely, they all require basic string operations, most notably search operations. Given the sheer number and size of the sequences under consideration and the number of search operations required, efficient search algorithms are important components of genome analysis pipelines. For this reason, specialized data structures, generally bundled under the term ‘index structures’, are required to speed up string searching.

The use of specialized algorithms and data structures is motivated by the fact that the data flow has already surpassed the flow of advances in computer hardware and storage capabilities. However, although index structures are already widely used to speed up bioinformatics applications, they too are challenged by the recent data flood. Index structures require an initial construction phase and impose extra storage requirements. In return, they provide a wide variety of efficient string searching algorithms. Traditionally, this has led to a dichotomy between search efficiency and reduced memory consumption. However, recent advances in index structures have shown that compression and fast string searching can be achieved simultaneously using a combination of compression and indexing, thus solving this dichotomy (2).

There are many types of index structures. The most commonly known index structures are inverted indexes and lookup tables. These work in a similar way to the indexes found at the back of books. However, biological sequences generally lack a clear division in words or phrases, a prerequisite for inverted indexes to function properly. Two alternative index structures are used in bioinformatics applications. k-mer indexes divide sequences into substrings of fixed length k and are used, among others, in the BLAST (3) alignment tool. ‘Full-text indexes’, on the other hand, allow fast access to substrings of any length. Full-text indexes come at a greater memory and construction cost compared with k-mer indexes and are also far more complex. However, they contain much more information and allow for faster and more flexible string searching algorithms (4).

Full-text index structures are widely used crucial black box components of many bioinformatics applications. Their success is illustrated by the number of bioinformatics tools that currently use them. Examples are tools for short read mapping (5–9), alignment (10,11), repeat detection (12), error correction (13,14) and genome assembly (15–17). The memory and time performance of many of these tools are directly affected by the type and implementation of the index structure used. The choice for a tool impacts the choice of index structures and vice versa. However, the description of these tools in scientific literature often bypasses a detailed description about the specifications of the index structures used. Concepts such as suffix trees, suffix arrays or FM-indexes are introduced in general terms in bioinformatics courses, but most of the time, these index structures are applied as black boxes having certain properties and allowing certain operations on strings at a given time. This does injustice to the vast and rich literature available on index structures and does not present their complex design, possibilities and limitations. Moreover, most tools are designed using basic implementations of these index structures, without taking full advantage of the latest advances in indexing technology.

The goal of this article is twofold. On the one hand, we offer a comprehensive review of the basic ideas behind classical index structures, such as suffix trees, suffix arrays and Burrows–Wheeler-based index structures, such as the FM-indexes. No prior knowledge about index structures is required. On the other hand, we give an overview of the limitations of these structures as well as the research done in the last decade to overcome these limitations. Furthermore, in light of recent advances made in both sequencing technology and computing technology, we give prospects on future developments in index structure research.

Overview

This article is structured according to the following outline. The first main section introduces basic concepts and notations which are used throughout the article. This section also clarifies the relationship between computer science string algorithms and sequence analysis applications. Furthermore, it explains some algorithmic performance measures which have to be taken into account when dealing with advanced data structures. Readers well acquainted with data structures and algorithms may easily skip this section.

The second section reviews some of the most popular index structures currently in use. These include suffix trees, enhanced and compressed suffix arrays and FM-indexes, which are based on the Burrows–Wheeler transform. Both representational and algorithmic aspects of basic search operations of these index structures are discussed using a running example. Furthermore, the features of these different index structures are compared on an abstract level and their interrelation is made clear.

The next section gives an overview of current state-of-the-art main (RAM) memory index structures, with a focus on memory-time trade-offs. Several memory saving techniques are discussed, including compression techniques utilized in ‘compressed index structures’. The aim of this section is to provide insight into the complexity of the design of these compressed index structures, rather than to give their full details. It is shown how their design is composed of auxiliary data structures that govern the performance of the main index structure. On a larger scale, practical results from the bioinformatics literature illustrate the performance gain and limitations of search algorithms. Furthermore, a comparison between index structures, together with an extensive literature list, acts as a taxonomy for the currently known main memory full-text index structures.

While main memory index structures are the main focus of the second section, the design, limitations and improvements of external memory index structures are also discussed. The difference between index structures for internal and external memory is most prominent in their use of compression techniques, which are (still) less important in external memory. However, because hard disk access is much slower than main memory access, data structure layout and access patterns are much more important.

The second biggest bottleneck of index structure usage is the initial construction phase, which is covered in the final section. Both main memory and secondary memory construction algorithms are reviewed. The main conceptual ideas used for construction of the index structures discussed in previous sections are provided together with examples of the best results of construction algorithms found in the literature.

Finally, a summary of the findings presented in this article and some prospects on future directions of the research on index structures and its impact on bioinformatics applications is given. These prospects include variants and extensions of classical index structures, designed to answer specific biological queries, such as the search for structural RNA patterns, but also the use of new computing paradigms, such as the Google MapReduce framework (18).

IMPORTANT CONCEPTS

Index structures originate from the field of theoretical computer science. This section introduces some important concepts for readers not familiar with the field. Readers with a background in data structures and algorithms may skip this section, except for the notations introduced at the end of this section.

Strings versus sequences

The term ‘sequence’ is used for different concepts in the field of computer science and biology. What is called a sequence in biology is usually a ‘string’ in standard computer science parlance. The distinction between strings and sequences becomes especially prominent in computer science when introducing the concepts of substrings and subsequences. The former refer to contiguous intervals from larger strings, whereas the latter do not necessarily need to be contiguous intervals from the original string. As index structures work with substrings and to avoid ambiguity, we will stick to the standard computer science term string throughout this article, unless we explicitly want to stress the biological origin of the sequence.
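The distinction between substrings and subsequences can be made concrete with a minimal Python sketch. The function names `is_substring` and `is_subsequence` are illustrative only and do not come from any tool discussed in this article.

```python
def is_substring(p, s):
    """True if p occurs in s as a contiguous interval."""
    return p in s


def is_subsequence(p, s):
    """True if the characters of p occur in s in order, not necessarily contiguously."""
    remaining = iter(s)
    # 'ch in remaining' consumes the iterator up to and including the first match,
    # so later characters of p can only match later positions of s.
    return all(ch in remaining for ch in p)


s = "ACATACAGATG"
print(is_substring("ACG", s), is_subsequence("ACG", s))  # False True
```

Here ACG is a subsequence of the example string (positions 0, 1 and 7) but not a substring, because those positions are not contiguous.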

String matching

Key components of genome analysis include statistical methods for scoring and comparing string hypotheses and string matching algorithms for efficient string comparisons. However, the former component falls beyond the scope of this review as our main focus lies on string matching algorithms studied in the field of computer science. This again gives rise to a terminology barrier between the two research fields. For nearly all index structures discussed in this review, efficient algorithms for exact and inexact string matching exist. These algorithms allow fast queries into sequence databases, similarity searches between sequences and DNA/RNA mapping. Inexact string matching is usually implemented using a backtracking algorithm on the suffix tree or a seed-and-extend approach. The latter approach may use maximal exact matches or other types of shared substrings. Maximal exact matches are examples of identical substrings shared between multiple strings and are frequently used as seeds in sequence alignment or in tools that determine sequence similarity (10). Searching for all maximal exact matches in an efficient way requires strong index structures that are fully expressive, i.e. allow for all suffix tree operations in constant time (19).

Index structures reaching full expressiveness are able to handle a multitude of string searching problems such as locating several types of repeats, finding overlapping strings and finding the longest common substring. These string matching algorithms are, among others, used in genome assembly (finding repeats and overlaps), error correction of sequencing reads (repeats), fast identification of DNA contaminants (longest common substring) and genealogical DNA testing (short tandem repeats).

In addition, some index structures are geared toward specific applications. ‘Affix index structures’, for example, allow bidirectional string searching. As a result, they can be used for searching RNA structure patterns (20) and for short read mapping (6). ‘Weighted suffix trees’ (21) can be used to find patterns in biological sequences that contain weights such as base probabilities, but are also applied in error correction (13). ‘Geometric suffix trees’ (22) have been used to index 3D protein structures. ‘Property suffix trees’ have additional data structures to efficiently answer property matching queries. This can be useful, for example, in retrieving all occurrences of patterns that appear in a repetitive genomic structure (23).

Theoretical complexity

As is the case for other data structures, the performance of algorithms working on index structures is usually expressed in terms of their theoretical complexity, indicated by the ‘big-O notation’. Although a theoretical measure of the worst-case scenario, it contains valuable practical information about the qualitative and quantitative performance of algorithms and data structures. For example, some index structures contain an alphabet-dependency, whereas others do not. Thus, alphabet-independent index structures theoretically perform string searches equally well on DNA sequences (4 different characters) as on protein sequences (20 different characters). The qualitative information of the theoretical complexity usually categorizes the dependency of input parameters in terms of logarithmic, linear, quasilinear, quadratic or exponential dependency. Intuitively, this means that even if several algorithms nearly have the same execution time or memory requirements for a given input sequence, the execution time and memory requirements of some algorithms will grow much faster than those of others when the input size increases. In practice, quasilinear algorithms [complexity O(n log n)] are sometimes much faster than linear algorithms [complexity O(n)], because of the lower order terms and constants involved. These are usually omitted in the big-O notation. In general, however, the big-O notation is a good guideline for algorithm and data structure performance. Furthermore, this measure of algorithm and data structure efficiency is timeless and is not dependent on hardware, implementation and data specifications, as opposed to benchmark test results which can be misleading and may quickly become obsolete over time.

Computer memory

Practical performance of index structures is not only governed by their algorithmic design, but also by the hardware that holds the data structure. Computer memory in essence is a hierarchical structure of layers, ordered from small, expensive, but fast memory to large, cheap and slow memory types. The hierarchy can roughly be divided into ‘main memory’, most notably RAM memory and caches, and secondary or ‘external memory’, which usually consists of hard disks or in the near future solid-state disks. Most index structures and applications are designed to run in main memory, because this allows for fast ‘random access’ to the data, whereas hard disks are usually 10^5–10^6 times slower for random access (24). As the price of biological data currently decreases much faster than the price of RAM memory and bioinformatics projects are becoming much larger, comparing more data than ever before, algorithms and data structures designed for cheaper external memory become more important (25). These external memory algorithms usually read data from external memory, process the information in main memory and output the result again to disk. As mentioned above, these ‘input/output’ (I/O) operations are very expensive. As a result, the algorithmic design needs to minimize these operations as much as possible, for example by keeping key information that is needed frequently in main memory. This technique, known as ‘caching’, is also used by file systems. File systems usually load more data into main memory than requested because it is physically located close to the requested data and may be predicted to become needed in the near future. The physical ‘locality’ of data organized by index structures is thus of great importance. Moreover, data that is often logically requested in sequential order should also be physically ordered sequentially, because sequential disk access is almost as fast as random access in main memory. More information about index structure design for the different memory settings is found in the ‘Popular index structures’, ‘Time-memory trade-offs’ and ‘Index structures in external memory’ sections.

Notations

The following notations are used throughout the rest of the text. Let the finite, totally ordered alphabet Σ be an array of size |Σ| (|·| will be used to denote the size of a string, set or array). The DNA-alphabet, for example, has size four and is given by Σ = {A,C,G,T}. Furthermore, let Σ^k and Σ*, respectively, be the set of all strings composed of k characters from Σ and the set of all strings composed of zero or more characters from Σ. The empty string will be denoted as ε. Let S ∈ Σ^n. All indexes in this article are zero-based. For every 0 ≤ i ≤ j < n, S[i] denotes the character at position i in S, S[i..j] denotes a substring that starts at position i and ends at position j and S[i..j] = ε for i > j. S[i..] is the i-th suffix of S and S[..i] is the i-th prefix of S, and S[n..] = S[..−1] = ε. Likewise, A[i..j] denotes an interval in an array A and the comma separator is used in 2D arrays, e.g. M[i, j] denotes the matrix element of M at the i-th row and j-th column.

S represents the indexed string which is usually very large, e.g. a chromosome or complete genome. Another string P denotes a pattern, which is searched in S. The length of P is m and usually m ≪ n holds, unless stated otherwise. For example, P can be a certain pattern, a sequencing read or a gene. The lexicographical order relation between two elements of Σ* is represented as <. The ‘longest common prefix’ LCP(S, P) of two strings S and P is the prefix S[..k], such that S[..k] = P[..k] and S[k + 1] ≠ P[k + 1].
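As a minimal sketch, the zero-based, inclusive notation above can be mapped onto Python slices as follows. The helper names `substring`, `suffix`, `prefix` and `lcp` are assumptions made for illustration, and non-negative indexes are assumed throughout.

```python
def substring(s, i, j):
    """S[i..j]: both ends inclusive; the empty string when i > j."""
    return s[i:j + 1] if i <= j else ""


def suffix(s, i):
    """S[i..]: the suffix of s that starts at position i."""
    return s[i:]


def prefix(s, i):
    """S[..i]: the prefix of s that ends at position i (inclusive)."""
    return s[:i + 1]


def lcp(a, b):
    """Longest common prefix of the strings a and b."""
    k = 0
    while k < len(a) and k < len(b) and a[k] == b[k]:
        k += 1
    return a[:k]


S = "ACATACAGATG$"
print(substring(S, 0, 1), suffix(S, 8), prefix(S, 2), lcp(suffix(S, 0), suffix(S, 4)))
```

Note that Python slices exclude their upper bound, which is why `j + 1` and `i + 1` appear in the helpers.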

As a final remark, note that all logarithms in this article have base two, unless stated otherwise.

POPULAR INDEX STRUCTURES

Index structures are data structures used to preprocess one or more strings to speed up string searches. As the examples in this section will illustrate, the types of searches can be quite diverse, yet some index structures manage to achieve an optimal performance for a broad class of search problems. The ultimate goal of index structures is to quickly capture maximal information about the string to be queried and to represent this information in a compact form. It turns out that both requirements often conflict in practice, with different types of index structures providing alternative trade-offs between speed and memory consumption. However, the speedup achieved over classical string searching algorithms often makes up for the extra construction and memory costs.

The type of index structures discussed here are ‘full-text index structures’. Unlike natural language, biological sequences do not show a clear structure of words and phrases, making popular ‘word-based’ index structures such as inverted files (26) and B-trees (27) less suited for indexing genomic sequences. Instead, full-text indexes that store information about all variable length substrings are better suited to analyze the complex nature of genome sequences.

The three most commonly used full-text index structures in bioinformatics today are suffix trees, suffix arrays and FM-indexes. The raison d'être of the latter two is the high-memory requirements of suffix trees. In this section, it is shown how those smaller indexes actually are reduced suffix trees and can be enhanced with auxiliary information to achieve complete suffix tree functionality.

Suffix trees

Suffix trees have become the archetypical index structure used in bioinformatics. Introduced by Weiner (28), who also gave a linear time construction algorithm, they are said to efficiently solve a myriad of string processing problems (29). Complex string problems such as finding the longest common substring can be solved in linear time using suffix trees. The suffix tree of a string S contains information about all suffixes of that string and gives access to all prefixes of those suffixes, thus effectively allowing fast access to all substrings of the string S.

The suffix tree ST(S) is formally defined as the radix tree (30), i.e. a compact string search tree data structure, built from all suffixes of S. The edges of ST(S) are labeled with substrings of S and the leaves are numbered 0 to n − 1. The one-to-one correspondence between leaf i of ST(S) and suffix i of S is found by concatenating all edge labels on the path from the root to the leaf: the concatenated string ending in leaf i equals suffix S[i..]. Moreover, internal nodes correspond to the LCP of suffixes of S, such that labels of all outgoing edges from an internal node start with a different character and every internal node has at least two children. This last property makes it possible to distinguish suffix trees from non-compact suffix ‘tries’, whose nodes can have single children because edge label lengths are all equal to one. In order for the above properties to hold for a string S, the last character of S has to appear uniquely in S. In practice, this problem is solved by appending a special end-character $ to the end of string S, with $ ∉ Σ and $ < c, ∀c ∈ Σ. This special end-character plays the same role as the virtual end-of-string symbol used in regular expressions (also represented as $ in that context). Hereafter, for every indexed string S it is assumed S[n − 1] = $ or, equivalently, S ∈ Σ*$ holds. As a running example, the suffix tree ST(S) for the string S = ACATACAGATG$ is given in Figure 1.

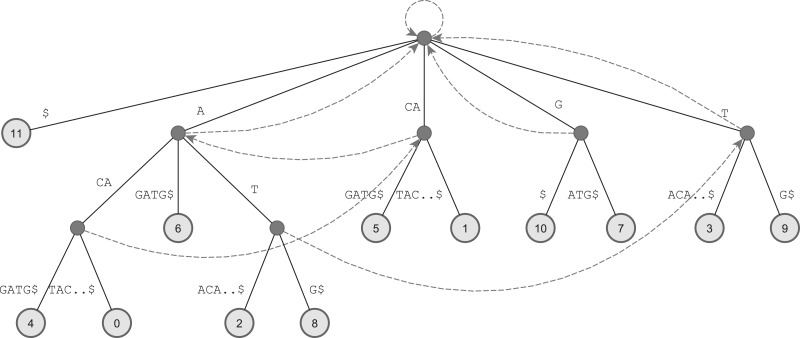

Figure 1.

Suffix tree for string S = ACATACAGATG$, where $ is the special end-character. Each number i inside a leaf represents suffix S[i..] of the string S. Dashed arrows correspond to suffix links. Edges are arranged in lexicographical order. For the sake of brevity, only the first characters followed by two dots and the special end-character $ are shown for edge labels that spell out the rest of the suffix corresponding to the leaf the edge is connected with.
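The definition of the suffix tree as a radix tree of all suffixes can be illustrated with a small Python sketch. It inserts the suffixes one by one and splits edges where needed, which takes quadratic time in the worst case and is therefore not the linear-time construction mentioned earlier. The class `Node` and the functions `build_suffix_tree` and `leaves_in_order` are hypothetical names chosen for this illustration, and suffix links are omitted.

```python
class Node:
    def __init__(self):
        self.children = {}  # first character of edge label -> (edge label, child)
        self.leaf = None    # start position of the suffix, for leaves only


def build_suffix_tree(s):
    """Naive radix-tree construction; s must end in a unique end-character $."""
    assert s.endswith("$") and s.count("$") == 1
    root = Node()
    for i in range(len(s)):
        node, rest = root, s[i:]
        while True:
            first = rest[0]
            if first not in node.children:
                # No edge starts with this character: hang a new leaf here.
                leaf = Node()
                leaf.leaf = i
                node.children[first] = (rest, leaf)
                break
            label, child = node.children[first]
            k = 0
            while k < len(label) and k < len(rest) and label[k] == rest[k]:
                k += 1
            if k < len(label):
                # Mismatch inside the edge: split it with a new internal node.
                mid = Node()
                mid.children[label[k]] = (label[k:], child)
                node.children[first] = (label[:k], mid)
                child = mid
            node, rest = child, rest[k:]
    return root


def leaves_in_order(node, path=""):
    """Yield (leaf number, concatenated edge labels), smallest edges first."""
    if node.leaf is not None:
        yield node.leaf, path
        return
    for first in sorted(node.children):
        label, child = node.children[first]
        yield from leaves_in_order(child, path + label)


S = "ACATACAGATG$"
for i, path in leaves_in_order(build_suffix_tree(S)):
    print(i, path)
```

For every leaf i, the printed path equals the suffix S[i..], which is the one-to-one correspondence described above. The sketch relies on $ sorting before the letters A, C, G and T, which holds for ASCII.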

The ‘label’ ℓ(v) of a node v of ST(S) is defined as the concatenation of edge labels on the path from the root to the node. From this definition it follows that ℓ(root) = ε. The ‘string depth’ of v is defined as |ℓ(v)|. The ‘suffix link’ sl(v) of an internal node v with label cw (c ∈ Σ and w ∈ Σ*) is the unique internal node with label w. Suffix links are represented as dashed lines in Figure 1.

Most suffix tree algorithms boil down to (partial or full) top-down or bottom-up traversals of the tree, or the following of suffix links (19). These different types of traversals are further illustrated using some classical string algorithms.

In the exact string matching problem, all positions of a substring P have to be found in string S. Exact string matching is an important problem on its own and is also used as a basis for more complex string matching problems. Since P is a substring of S if and only if P is a prefix of some suffix of S, it follows that matching every character of P along a path in ST(S) (starting at the root) gives the answer to the existential question. This algorithm thus requires a partial top-down traversal of ST(S) and has a time complexity of O(m). Since suffixes of S are grouped by common prefixes in ST(S), the set of leaves in the subtree below the path that spells out P represents all locations where P occurs in S. This set is denoted as occ(P, S) and can be obtained in O(|occ(P, S)|) time. As an example, consider matching pattern P = AC to the running example in Figure 1. The algorithm first finds the edge with label A going down from the root and then continues down the tree along the edge labeled CA. After matching the character C, the algorithm decides that P is a substring of S. Furthermore, occ(P, S) = {0, 4} and thus P = S[0..1] = S[4..5]. This classical example already demonstrates the true power of suffix trees: the time complexity for matching k patterns of length m to a string of length n is O(n + km). String matching algorithms that preprocess pattern P instead of string S [Boyer–Moore (31) and Knuth–Morris–Pratt (32), among others] require O(k(n + m)) time to solve the same problem. Since k and n are usually very large in most bioinformatics applications, for example in mapping millions (=k) short (=m) reads to the human genome (=n), this speedup is significant.
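The top-down traversal for exact matching can be sketched in a few lines of Python. For brevity, the sketch assumes a non-compact suffix trie stored as nested dictionaries rather than a true suffix tree, so it needs quadratic space and collecting the leaves is not bounded by O(|occ(P, S)|). The names `build_suffix_trie` and `occurrences` are illustrative.

```python
def build_suffix_trie(s):
    """Insert every suffix of s character by character (quadratic space)."""
    root = {}
    for i in range(len(s)):
        node = root
        for ch in s[i:]:
            node = node.setdefault(ch, {})
        node[None] = i  # the key None marks a leaf and stores its suffix number
    return root


def occurrences(trie, p):
    """Return occ(P, S) as a sorted list, or an empty list if P does not occur."""
    node = trie
    for ch in p:  # partial top-down traversal, one step per pattern character
        if ch not in node:
            return []
        node = node[ch]
    found, stack = [], [node]
    while stack:  # collect all leaves below the node that spells out P
        current = stack.pop()
        for key, child in current.items():
            if key is None:
                found.append(child)
            else:
                stack.append(child)
    return sorted(found)


trie = build_suffix_trie("ACATACAGATG$")
print(occurrences(trie, "AC"))  # [0, 4]
```

Once the trie is built, any number of patterns can be matched against it without touching the indexed string again, which is where the O(n + km) bound for k patterns comes from in the compact case.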

Bottom-up traversals through suffix trees are mainly required for the detection of highly similar patterns, such as common substrings or (approximate) repeats. This follows from the fact that internal nodes of ST(S) represent the LCP of suffixes in their subtree. Internal nodes with maximal string depth correspond to suffixes with the largest LCP, which makes it easy to find maximal repeats and LCPs using a full bottom-up search of ST(S). In detail, the longest common substring of two strings S1 and S2 of lengths n1 and n2 is found by first building a suffix tree for the concatenated string S1S2, called a ‘generalized suffix tree’ (GST), and then traversing the GST twice. During an initial top-down traversal, string depths are stored at the internal nodes [if this information is gathered during construction of ST(S1S2), the top-down traversal can be skipped]. A subsequent bottom-up traversal determines whether leaves in the subtree of an internal node all originate from S1, S2 or both. This information can percolate up to parent nodes. In case leaves from both S1 and S2 have the current node as their ancestor, the corresponding suffixes have a common prefix. Since every internal node is visited at most once during each traversal, and calculations at every internal node can be done in constant time, this algorithm requires O(n1 + n2) time. The details of the algorithm can be found in (29). Maximal repeats, such as those calculated by Vmatch (http://www.vmatch.de/), are found in a similar fashion. A maximal repeat is a substring of length l > 0 that occurs at least at two positions i1 < i2 in S and that is both left-maximal (S[i1 − 1] ≠ S[i2 − 1]) and right-maximal (S[i1 + l] ≠ S[i2 + l]). Labels of the internal nodes of ST(S) represent all repeated substrings that are right-maximal. There are, however, node labels that correspond to repeats that are not left-maximal. Similar to finding the longest common substring, a bottom-up traversal of ST(S) uses information in the leaves to check left-maximality and forwards this information to parent nodes. As an example, the maximal repeats in the running example (Figure 1) are ACA, AT, A and G. The first internal node v visited by a bottom-up traversal has ℓ(v) = ACA and v has two leaves: 0 and 4. Since leaf 0 is a child of v, left-maximality is guaranteed for v and every parent of v. The internal node w with label ℓ(w) = CA has leaves 5 and 1 as children, but because S[5 − 1] = S[1 − 1] = A, ℓ(w) = CA is not a maximal repeat.
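The bottom-up search for the longest common substring can be sketched as follows. To stay short, the sketch assumes a generalized suffix trie (one character per edge) instead of a compact GST, so it uses quadratic time and space rather than O(n1 + n2), and it recurses once per character of depth, which limits it to short strings. All names (`Node`, `build_generalized_suffix_trie`, `longest_common_substring`) are hypothetical.

```python
class Node:
    def __init__(self):
        self.children = {}  # character -> child node
        self.ends = set()   # ids of the input strings with a suffix ending here


def build_generalized_suffix_trie(strings):
    """Insert all suffixes of all input strings into one trie."""
    root = Node()
    for string_id, s in enumerate(strings):
        for i in range(len(s)):
            node = root
            for ch in s[i:]:
                node = node.children.setdefault(ch, Node())
            node.ends.add(string_id)
    return root


def longest_common_substring(s1, s2):
    root = build_generalized_suffix_trie([s1, s2])
    best = ""

    def visit(node, label):
        """Post-order visit: return the set of strings seen in this subtree."""
        nonlocal best
        owners = set(node.ends)
        for ch, child in node.children.items():
            owners |= visit(child, label + ch)  # information percolates upwards
        if owners == {0, 1} and len(label) > len(best):
            best = label  # deepest node so far with suffixes from both strings
        return owners

    visit(root, "")
    return best


print(longest_common_substring("ACATACAGATG", "TTACAGG"))  # TACAG
```

When several common substrings share the maximal length, this sketch returns only one of them.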

A final way of traversing suffix trees is by following suffix links. Suffix links can both be used in suffix tree construction and algorithms for searching maximal exact matches or matching statistics. Intuitively, suffix links maintain a sliding window when matching a pattern to the suffix tree. Furthermore, suffix links act as a memory-efficient alternative to GSTs. As constructing, storing and updating suffix trees is a costly operation, the utilization of suffix links offers an important trade-off. The following algorithm demonstrates how suffix links enable a quick comparison between all suffixes of string S1 and the suffix tree ST(S2) of another string S2. Suppose the first suffix S1[0..] has been compared up to a node v with ℓ(v) = S2[0..i]. After following sl(v) = w, the second suffix S1[1..] is already matched to ST(S2) up to w, with ℓ(w) = S2[1..i]. In this way, i = |ℓ(w)| characters do not have to be matched again for this suffix. This process can be repeated until all suffixes of S1 are matched to ST(S2). Hence, the maximal exact matches between S1 and S2 can be found again in  (n1 + n2) time, but using less memory to store only the suffix tree of S2 plus its suffix links.


Given enough fast memory, suffix trees are probably the best data structure ever invented to support string algorithms. For large-scale bioinformatics applications, however, memory consumption really becomes a bottleneck. Although the memory requirements of suffix trees are asymptotically linear, the constant factor involved is quite high, i.e. up to 10 (33) to 20 times (34) higher than the amount of memory required to store the input string. However, state-of-the-art suffix tree implementations are able to handle sequences of human chromosome size (10). During the last decade, a lot of research focused on tackling this memory bottleneck, resulting in many suffix tree variants that show interesting memory versus time trade-offs.

Suffix arrays

The most successful and well-known variants of suffix trees are the so-called suffix arrays (35). They are made up of a single array containing a permutation of the indexes of string S, making them extremely simple and elegant. In terms of performance, expressiveness is traded for lower memory footprint and improved locality. Suffix arrays in general only require four times the amount of storage needed for the input string, can be constructed in linear time and can exactly match all occurrences of pattern P in string S in O(m log n + |occ(P, S)|) time using a binary search.


Suffix array SA(S) stores the lexicographical ordering of all suffixes of string S as a permutation of its index positions: S[SA[i − 1]..] < S[SA[i]..], 0 < i < n. The last column of Table 1 shows the lexicographical ordering for the running example. SA(S) itself can be found in the second column. The uniqueness of the lexicographical order is determined by the fact that all suffixes have different lengths, and the use of the special end-character $ < c, c ∈ Σ. By definition, S[SA[0]..] always equals the string $. The relationship between suffix trees and suffix arrays becomes clear when traversing suffix trees depth-first and giving priority to edges with lexicographically smaller labels. Leaf numbers encountered in this order spell out the suffix array. All edges were lexicographically ordered on purpose in Figure 1, so that leaf numbers, read from left to right, form SA(S) as found in Table 1. Exact matching of substring P is done using two binary searches on SA(S), in which P is compared with the first m characters of each suffix. These binary searches locate PL = min{k | P ≤ S[SA[k]..SA[k] + m − 1]} and PR = max{k | P ≥ S[SA[k]..SA[k] + m − 1]}, which form the boundaries of the interval in SA(S) where occ(P, S) is found. Note that counting the occurrences requires O(m log n) time, but finding occ(P, S) only requires an additional O(|occ(P, S)|) time.

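The two binary searches can be sketched as follows. This is a minimal illustration, not the implementation of any particular tool: the suffix array is built by naive sorting (far from linear time), the function names `build_suffix_array` and `find_interval` are hypothetical, and the interval is returned half-open, so `right` equals PR + 1.

```python
def build_suffix_array(s):
    """Naive construction: sort suffix start positions by the suffix text."""
    return sorted(range(len(s)), key=lambda i: s[i:])


def find_interval(s, sa, p):
    """Return (left, right) such that sa[left:right] lists all starts of p."""
    m = len(p)
    # First binary search: leftmost suffix whose first m characters are >= p.
    lo, hi = 0, len(sa)
    while lo < hi:
        mid = (lo + hi) // 2
        if s[sa[mid]:sa[mid] + m] < p:
            lo = mid + 1
        else:
            hi = mid
    left = lo
    # Second binary search: leftmost suffix whose first m characters are > p.
    hi = len(sa)
    while lo < hi:
        mid = (lo + hi) // 2
        if s[sa[mid]:sa[mid] + m] <= p:
            lo = mid + 1
        else:
            hi = mid
    return left, lo


S = "ACATACAGATG$"
SA = build_suffix_array(S)
left, right = find_interval(S, SA, "CA")
print(SA)                      # suffix array of the running example
print(sorted(SA[left:right]))  # start positions of "CA" in S
```

Each comparison inspects at most m characters, so both searches together take O(m log n) time; reading the positions out of `SA[left:right]` adds time proportional to the number of occurrences.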

Table 1.

Arrays used by enhanced suffix arrays (columns 2–5), compressed suffix arrays (columns 2, 6 and 7) and FM-indexes (columns 8 – 14) for string S = ACATACAGATG$

| i | SA | LCP | child | sl | SA−1 | Ψ | BWT | rank $ | rank A | rank C | rank G | rank T | LF | S[SA[i]..] |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 11 | −1 | | | 2 | 2 | G | 0 | 0 | 0 | 1 | 0 | 8 | $ |
| 1 | 4 | 0 | 6 | [0..11] | 7 | 6 | T | 0 | 0 | 0 | 1 | 1 | 10 | ACAGATG$ |
| 2 | 0 | 3 | 2 | [6..7] | 4 | 7 | $ | 1 | 0 | 0 | 1 | 1 | 0 | ACATACAGATG$ |
| 3 | 6 | 1 | 4 | [0..11] | 10 | 9 | C | 1 | 0 | 1 | 1 | 1 | 6 | AGATG$ |
| 4 | 2 | 1 | 5 | | 1 | 10 | C | 1 | 0 | 2 | 1 | 1 | 7 | ATACAGATG$ |
| 5 | 8 | 2 | 3 | [10..11] | 6 | 11 | G | 1 | 0 | 2 | 2 | 1 | 9 | ATG$ |
| 6 | 5 | 0 | 8 | | 3 | 3 | A | 1 | 1 | 2 | 2 | 1 | 1 | CAGATG$ |
| 7 | 1 | 2 | 7 | [1..5] | 9 | 4 | A | 1 | 2 | 2 | 2 | 1 | 2 | CATACAGATG$ |
| 8 | 10 | 0 | 10 | | 5 | 0 | T | 1 | 2 | 2 | 2 | 2 | 11 | G$ |
| 9 | 7 | 1 | 9 | [0..11] | 11 | 5 | A | 1 | 3 | 2 | 2 | 2 | 3 | GATG$ |
| 10 | 3 | 0 | | | 8 | 1 | A | 1 | 4 | 2 | 2 | 2 | 4 | TACAGATG$ |
| 11 | 9 | 1 | 11 | [0..11] | 0 | 8 | A | 1 | 5 | 2 | 2 | 2 | 5 | TG$ |

From left to right: index position, suffix array, LCP array, child array, suffix link array, inverse suffix array, Ψ-array, BWT text, ‘rank’ array, LF-mapping array and suffixes of string S. FM-indexes also require an array C(S).

Although conceptually simple, suffix arrays are not just reduced versions of suffix trees (36,37). Optimal solutions for complex string processing problems can be achieved by algorithms on suffix arrays without simulating suffix tree traversals. An example is the all pairs suffix–prefix problem in which the maximal suffix–prefix overlap between all ordered pairs of k strings of total length n can be determined by both suffix trees (29) and suffix arrays (37) in O(n + k²) time.


Enhanced suffix arrays

Suffix arrays are not that information-rich compared with suffix trees, but require far less memory. They lack LCP information, constant time access to children and suffix links, which makes them less fit to tackle more complex string matching problems. Abouelhoda et al. (19) demonstrated how suffix arrays can be embellished with additional arrays to recover the full expressivity of suffix trees. These so-called ‘enhanced suffix arrays’ consist of three extra arrays that, together with a suffix array, form a more compact representation of suffix trees that can also be constructed in O(n) time. Furthermore, the next paragraphs demonstrate how the extra arrays of enhanced suffix arrays enable efficient simulation of all traversal types of suffix trees (19).


A first array LCP(S) supports bottom-up traversals on suffix array SA(S). It stores LCP lengths of consecutive suffixes from the suffix array, i.e. LCP[i] = | LCP(S[SA[i − 1]..],S[SA[i]..])|, 0 < i < n. By definition, LCP[0] = −1. An example LCP array for the running example is shown in the third column of Table 1. Originally, Manber and Myers (35) utilized LCP arrays to speed up exact substring matching on suffix arrays to achieve an  (m+logn+|occ(P, S)|) time bound. Recently, Grossi (36) proved that the

(m+logn+|occ(P, S)|) time bound. Recently, Grossi (36) proved that the  (m + logn + |occ(P, S)|) time bound for exact substring matching can be reached by using only S, SA(S) and

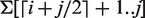

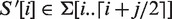

(m + logn + |occ(P, S)|) time bound for exact substring matching can be reached by using only S, SA(S) and  sampled LCP array entries. Furthermore, it is possible to encode those sampled LCP array entries inside a modified version of SA(S) itself. However, the details of this technique are rather technical and fall beyond the scope of this review. Later, Kasai et al. (38) showed how all bottom-up traversals of suffix trees can be mimicked on suffix arrays in linear time by traversing LCP arrays. In fact, LCP(S) represents the tree topology of ST(S). Recall that internal nodes of suffix trees group suffixes by their LCPs. In enhanced suffix arrays, internal nodes are represented by ‘LCP intervals’ ℓ-[i .. j]. Formally, an interval ℓ -[i .. j], 0 ≤ i < j < n is an LCP interval with ‘LCP value’ ℓ if for every i < k ≤ j: LCP[k] ≥ ℓ and there exists i < k ≤ j: LCP[k] = ℓ and LCP[i] < ℓ and LCP[j + 1] < ℓ. The LCP interval 0-[0..n − 1] is defined to correspond to the root of ST(S). Intuitively, an LCP interval is a maximal interval of minimal LCP length that corresponds to an internal node of ST(S). As an illustration, LCP interval 1-[1..5] with LCP value 1 of the example string S in Table 1 corresponds to internal node v with label ℓ(v) = A in Figure 1. Similarly, subinterval relations among LCP intervals relate to parent–child relationships in suffix trees. Abouelhoda et al. (19) have shown that the boundaries between LCP subintervals of LCP interval ℓ-[i .. j] are given by the ‘ℓ-indexes’ for which it holds that LCP[k] = ℓ, i < k ≤ j. Singleton intervals correspond to leaves in the suffix tree and non-singleton intervals correspond to internal nodes. Consider, for example, the LCP interval 1-[1..5] in the running example. Its ℓ-indexes are 3 and 4. The resulting subintervals are LCP intervals 3-[1..2] and 2-[4..5] and singleton interval [3..3]. The above definitions thus generate a virtual suffix tree called the ‘LCP interval tree’. 
Note that the topology of this tree is not stored in memory, but is traversed using the arrays SA(S) and LCP(S).

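As a small illustration of how LCP(S) encodes the tree topology, the sketch below computes the LCP array with a Kasai-style linear-time pass and then splits an LCP interval at its ℓ-indexes. The helper names `build_lcp` and `child_intervals` are hypothetical, the suffix array is built naively, and the child lookup scans the interval linearly rather than using a precomputed child array.

```python
def build_lcp(s, sa):
    """Kasai-style LCP construction; lcp[0] = -1 by convention."""
    n = len(s)
    inverse = [0] * n                 # inverse suffix array
    for i, pos in enumerate(sa):
        inverse[pos] = i
    lcp = [-1] * n
    h = 0                             # current number of matched characters
    for pos in range(n):              # visit suffixes in text order
        r = inverse[pos]
        if r == 0:                    # the smallest suffix has no predecessor
            h = 0
            continue
        prev = sa[r - 1]
        while pos + h < n and prev + h < n and s[pos + h] == s[prev + h]:
            h += 1
        lcp[r] = h
        if h > 0:                     # the next suffix loses at most one character
            h -= 1
    return lcp


def child_intervals(lcp, i, j):
    """Split LCP interval [i..j] (i < j) at its l-indexes."""
    ell = min(lcp[i + 1:j + 1])       # LCP value of the interval
    bounds = [k for k in range(i + 1, j + 1) if lcp[k] == ell]
    starts = [i] + bounds
    ends = [k - 1 for k in bounds] + [j]
    return ell, list(zip(starts, ends))


S = "ACATACAGATG$"
SA = sorted(range(len(S)), key=lambda i: S[i:])   # naive, for illustration
LCP = build_lcp(S, SA)
print(LCP)
print(child_intervals(LCP, 1, 5))
```

For the interval [1..5] the function reports LCP value 1 and the subintervals [1..2], [3..3] and [4..5], which matches the example in the text.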

Fast top-down searches of suffix trees not only require their tree topology, but also constant time access to child nodes. For an LCP interval ℓ-[i .. j], this means constant time access to its ℓ-indexes. This information can be precomputed in linear time for the entire LCP interval tree and stored in another array of enhanced suffix arrays, the ‘child array’. The first ℓ-index is either stored in i or j [the exact location can be determined in constant time (19)] and the next ℓ-index is stored at the location of the previous ℓ-index. The child array for the running example is given in the fourth column of Table 1. As an example, again consider LCP interval 1-[1..5]. The first ℓ-index (3) is stored at position 5 and the second ℓ-index (4) is stored at position 3. Since child[4] = 5 is equal to the right boundary of the interval (which cannot equal ℓ by definition), 4 is the last ℓ-index. The child array allows enhanced suffix arrays to simulate top-down suffix tree traversals.

As a final step towards complete suffix tree expressiveness, suffix arrays can be enhanced with ‘suffix link arrays’ that store suffix links as pointers to other LCP intervals. These pointers are stored at the position of the first ℓ-index of an LCP interval because no two LCP intervals share the same position as their first ℓ-index (19). This property and the suffix link array for the running example can be checked in Table 1.

With three extra arrays added, enhanced suffix arrays support all operations and traversals on suffix trees with the same time complexity. However, the simple modular structure allows memory savings if not all traversals are required for an application. Furthermore, array representations generally show better locality than most standard suffix tree representations, which is important when converting the index to disk, but also improves cache usage in memory (39). Practical implementation improvements have further reduced the memory consumption of enhanced suffix arrays (40) and have sped up substring matching for larger alphabets (41). In practice, several state-of-the-art bioinformatics tools make use of enhanced suffix arrays for finding repeated structures in genomes (Vmatch), short read mapping (5) and genome assembly (16). If memory is a concern, enhanced suffix arrays occupy about the same amount of memory as regular suffix trees and are thus equally inapplicable for large strings. Suffix arrays (without enhancement) are preferred for exact substring matching in very large strings.

Compressed suffix arrays

Although suffix arrays are much more compact than suffix trees, their memory footprint is still too high for extremely large strings. The main reason stems from the fact that suffix arrays (and suffix trees) store pointers to string positions. The largest pointer takes O(log n) bits, which means that suffix arrays require O(n log n) bits of storage. This is large compared with the O(n log|Σ|) bits needed for storing uncompressed strings. A demand for smaller indexes that remain efficient gave rise to the development of ‘succinct indexes’ and ‘compressed indexes’. Succinct indexes require O(n) bits of space, whereas the memory requirements of compressed indexes are in the order of magnitude of the compressed string (42).


Many types of compressed suffix arrays (43) have already been proposed [see Navarro and Mäkinen for a recent review (42)]. They are usually centered around the idea of storing ‘suffix array samples’, complemented with a highly compressible ‘neighbor array’ Ψ(S). To understand the role of the array Ψ(S), the concept of ‘inverse suffix arrays’ SA−1(S) is introduced, for which it holds that SA−1[SA[i]] ≡ SA[SA−1[i]] = i. Ψ(S) can then be defined as Ψ[i] ≡ SA−1[(SA[i] + 1) mod n] for 0 ≤ i < n. This definition closely resembles that of suffix links and it will thus come as no surprise that in practice Ψ can be used to recover suffix links (44). Moreover, the array Ψ can be used to recover suffix array values from a sparse, sampled representation of SA(S). This is illustrated using the running example string from Table 1. Assume that only SA[0], SA[6] and SA[11] are stored and that the value of SA[10] is unknown. Note that Ψ[10] = 1 and SA[1] = 4 = 3 + 1, i.e. the requested value plus one. A sampled value of SA(S) is reached by repeatedly calculating Ψ[Ψ[..Ψ[10]]] = Ψk[10]. In the example k = 2, because Ψ[Ψ[10]] = 6. Consequently, SA[10] = SA[6] − k = 5 − 2 = 3. A more detailed discussion about compressed suffix arrays is given in the next section.
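The sampling example can be reproduced with a few lines of code. The sketch below is only an illustration under simplifying assumptions: SA, SA−1 and Ψ are materialized as plain lists (a real compressed suffix array would store Ψ in compressed form), and the names `lookup` and `SAMPLES` are hypothetical.

```python
S = "ACATACAGATG$"
n = len(S)

# Naive suffix array and its inverse, built only to derive Psi.
SA = sorted(range(n), key=lambda i: S[i:])
ISA = [0] * n
for i, pos in enumerate(SA):
    ISA[pos] = i

# Psi[i] is the suffix array index of the suffix that starts one position later.
PSI = [ISA[(SA[i] + 1) % n] for i in range(n)]

# Keep only three suffix array entries, as in the example in the text.
SAMPLES = {0: SA[0], 6: SA[6], 11: SA[11]}


def lookup(i, samples, psi):
    """Recover SA[i] by following Psi until a sampled index is reached."""
    k = 0
    while i not in samples:
        i = psi[i]
        k += 1
    # Each Psi step moved one position to the right in S, so step back k.
    return (samples[i] - k) % len(psi)


print(PSI)
print(lookup(10, SAMPLES, PSI))
```

The number of Ψ steps needed depends on the distance to the next sampled text position, which is where the space-time trade-off of the sampling rate comes from.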

The Burrows–Wheeler transform

Several compressed index structures, most notably the FM-index (45), are based on the Burrows–Wheeler transform (46) BWT(S). This reversible permutation of the string S is also known to lie at the core of compression tools such as the fast ‘bzip2’ compression tool.

The Burrows–Wheeler transform does not compress a string itself, rather it enables an easier and stronger compression of the original string by exploiting regularities found in the string. Unlike SA(S), which is a permutation of the index positions of S, BWT(S) is a permutation of the characters of S. As a result, BWT(S) only occupies O(n log|Σ|) bits of memory in contrast to the O(n log n) bits needed for storing SA(S). As it contains the original string itself, the Burrows–Wheeler transform does not require an additional copy of S for string searching algorithms. Index structures having this property are called ‘self-indexes’.


Intuitively, the Burrows–Wheeler transformation orders the characters of S by the context following the characters. Thus, characters followed by similar substrings will be close together. A simple way to formally define BWT(S) uses a conceptual n × n matrix M whose rows are formed by the characters of the lexicographically sorted n cyclic shifts of S. BWT(S) is the string represented by the last column of M, or BWT[i] ≡ M[i, n − 1], 0 ≤ i < n. Note that the rows of M up to the character $ also represent the suffixes in lexicographical order, or, equivalently, in suffix array order. Thus, the first column of M equals the first characters of the suffixes in suffix array order, from which it follows that BWT(S) can also be defined as BWT[i] ≡ S[(SA[i] − 1) mod n], 0 ≤ i < n, where the modulo operator is used for the case SA[i] = 0. From this definition it immediately follows that BWT(S) can be constructed in linear time using SA(S). BWT(S) for the running example can be found in Table 1, column 8, whereas the complete matrix M is given in Table 2.
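Both definitions of BWT(S) can be checked against each other on the running example. The following sketch is illustrative only: it builds the suffix array and the matrix M by naive sorting, which is far from the linear-time construction mentioned above, and the variable names are hypothetical.

```python
S = "ACATACAGATG$"
n = len(S)

# Naive suffix array (illustrative only): sort start positions by suffix text.
SA = sorted(range(n), key=lambda i: S[i:])

# Definition via the suffix array: BWT[i] = S[(SA[i] - 1) mod n].
BWT_FROM_SA = "".join(S[(pos - 1) % n] for pos in SA)

# Conceptual definition: last column of the sorted cyclic shifts of S.
M = sorted(S[i:] + S[:i] for i in range(n))
BWT_FROM_M = "".join(row[-1] for row in M)

print(BWT_FROM_SA)
print(BWT_FROM_SA == BWT_FROM_M)
```

Because the end-character $ is unique and smaller than every other character, sorting the cyclic shifts yields the same order as sorting the suffixes, so both strings coincide.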

Table 2.

Conceptual matrix M containing the lexicographically ordered n cyclic shifts of S = ACATACAGATG$

| i | S[SA[i]] | M[i, 1..10] | BWT[i] | offset[i] | LF[i] |
|---|---|---|---|---|---|
| 0 | $ | ACATACAGAT | G | 0 | 8 |
| 1 | A | CAGATG$ACA | T | 0 | 10 |
| 2 | A | CATACAGATG | $ | 0 | 0 |
| 3 | A | GATG$ACATA | C | 0 | 6 |
| 4 | A | TACAGATG$A | C | 1 | 7 |
| 5 | A | TG$ACATACA | G | 1 | 9 |
| 6 | C | AGATG$ACAT | A | 0 | 1 |
| 7 | C | ATACAGATG$ | A | 1 | 2 |
| 8 | G | $ACATACAGA | T | 1 | 11 |
| 9 | G | ATG$ACATAC | A | 2 | 3 |
| 10 | T | ACAGATG$AC | A | 3 | 4 |
| 11 | T | G$ACATACAG | A | 4 | 5 |

M[0..11,0] contains the lexicographically ordered characters of S and M[0..11,11] equals BWT(S). The last two columns are required for the inverse transformation. offset[i] stores the number of times BWT[i] has appeared earlier in BWT(S). The last column LF[i] contains pointers used during the inverse transformation algorithm: if S[i] = BWT[j], then BWT[LF[j]] = S[i − 1].

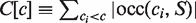

The inverse transformation that reconstructs S from BWT(S) is key to decompression algorithms and to the string matching algorithm utilized in compressed index structures. It recovers S back-to-front and is based on a few simple observations. First, although BWT(S) only stores the last column of M, the first column of M is easily retrieved from BWT(S) because it is the lexicographical ordering of the characters of S [and thus also of BWT(S)]. Moreover, the first column of M can be represented in compact form as an array C(S) that stores the number of characters in S that are lexicographically smaller than character c ∈ Σ. More precisely: C[ci] ≡ |{k : 0 ≤ k < n, S[k] < ci}|, ci ∈ Σ. For the running example, C(S) = [0,1,6,8,10] can be retrieved from Table 2. A second observation is that BWT(S) stores the order of characters preceding the suffixes in suffix array order. As a result, if the character at position i (S[i]) has been decoded and the lexicographical order of suffix S[i..] is known to be j, character S[i − 1] is found in BWT[j]. Finally, the most important observation that allows for the retrieval of S from BWT(S) is that identical characters preserve their relative order in the first and last columns of M. To see the correctness of this observation, let BWT[i] = BWT[j] = c for i < j. The lexicographical ordering of the cyclic permutations means that the suffix in row i of M corresponding to SA[i] is lexicographically smaller than the suffix in row j corresponding to SA[j]. From cS[SA[i]..] < cS[SA[j]..] it then follows that the location of character c corresponding to BWT[i] precedes the location of character c corresponding to BWT[j] in the first column of M. The relative order of identical characters in BWT(S) is captured in the array offset(S): offset[i] stores the number of times that character BWT[i] occurs in BWT(S) before position i, i.e. offset[i] ≡ |occ(BWT[i], BWT[..i − 1])|, 0 < i < n. Given a position i in BWT(S), the corresponding character in the first column of M can then be found at position LF[i] = C[BWT[i]] + offset[i]. The array LF(S) is called the ‘last-to-first column mapping’.


The above observations allow the back-to-front recovery of S from BWT(S) utilizing a zig-zag algorithm. Starting in row i0 of BWT(S) containing character $, the position of the previous character of S is found in row LF[i0] = i1. The next preceding character is found on row i2 = LF[i1] in BWT(S), and so on. Thus, to find the row of the next preceding character, the algorithm looks horizontally in Table 2 and the actual character is retrieved from the BWT column on that row in Table 2. Note that neither M nor its first column are ever used explicitly during the algorithm. They only serve to understand the procedure for the inverse transformation. In practice, C(S) and offset(S) are first constructed from BWT(S). During each step, LF[ik] is calculated using C(S) and offset(S) and BWT[LF[ik]] is returned as the preceding character. As an example, M, offset(S) and LF(S) for the running example can be found in Table 2 and C(S) is given above. S[SA[0]] = $ is preceded by the character BWT[i0] = G in the running example. Consequently, G$ is the lexicographical first suffix that starts with G, which translates into offset[i0] = 0. The first row of M whose corresponding suffix starts with G has row number C[G] = 8. Adding the number of suffixes that also start with G, but are lexicographically smaller than G$ (=0), returns the position in BWT(S) of the next character that will be decoded. BWT[8 + 0] = BWT[LF[0]] = T = S[9]. In the next step, S[8] is retrieved by computing LF[8] = 11 and BWT[11] = A. Eventually, S is retrieved in O(n) time using the LF-mapping.

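The inverse transformation can be sketched directly from C(S), offset(S) and LF(S). The code below is a minimal illustration that assumes the end-character `$` is unique and lexicographically smallest, so that row 0 of M starts with `$`; the function names `lf_mapping` and `inverse_bwt` are hypothetical.

```python
def lf_mapping(bwt):
    """Return C (dict: character -> count of smaller characters) and LF (list)."""
    counts = {}
    for ch in bwt:
        counts[ch] = counts.get(ch, 0) + 1
    C, total = {}, 0
    for ch in sorted(counts):
        C[ch] = total
        total += counts[ch]
    seen, lf = {}, []
    for ch in bwt:
        offset = seen.get(ch, 0)      # occurrences of ch before this row
        lf.append(C[ch] + offset)     # LF[i] = C[BWT[i]] + offset[i]
        seen[ch] = offset + 1
    return C, lf


def inverse_bwt(bwt):
    """Recover S back-to-front, assuming '$' is the unique smallest character."""
    _, lf = lf_mapping(bwt)
    out = ["$"]
    row = 0                           # row 0 of M corresponds to the suffix '$'
    for _ in range(len(bwt) - 1):
        out.append(bwt[row])          # character preceding the current suffix
        row = lf[row]                 # row whose suffix starts with that character
    return "".join(reversed(out))


BWT = "GT$CCGAATAAA"
C, LF = lf_mapping(BWT)
print(C)
print(LF)
print(inverse_bwt(BWT))
```

The first characters decoded are G, T and A, taken from rows 0, 8 and 11, which follows the walk through Table 2 described above.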

The Burrows–Wheeler transform by itself only permutes strings without compressing them. It is, however, easier to compress BWT(S) than the original string S, as the order of the characters in BWT(S) is determined by similar contexts following the characters, analogous to the way suffixes are grouped by LCPs in suffix trees. An immediate consequence is that run-length encoding, which encodes runs of identical characters by their length, shows good compression results for BWT(S). Apart from run-length encoding (45,47), move-to-front lists (45), wavelet trees (42,47,48) and several entropy encoders, such as Huffman codes (49,50), have also been used successfully to compress BWT(S). For a complete overview on compression techniques based on the Burrows–Wheeler transform, we refer to the book of Adjeroh et al. (51).

Analogous to suffix arrays, BWT(S) can be used to find exact matches of substrings by applying binary search. Similar to compressed suffix arrays, binary searching BWT(S) requires auxiliary data structures, including Ψ(S) and (sampled) SA(S) (51), resulting in compressed suffix arrays. Given the relation between BWT(S) and SA(S), BWT(S) can also be utilized for constructing other compressed suffix arrays (52). Moreover, suffix trees, suffix arrays and other non-self-indexes require a copy of the indexed string S, which can be replaced by a compressed form of BWT(S) to reduce space.

FM-indexes

Another search method for exact string matching can be applied to Burrows–Wheeler transformed strings, using ideas from the inverse transformation algorithm. This method is referred to as ‘backward searching’ and forms the basic search mechanism of ‘FM-indexes’ (45). FM-index is the short name given by Ferragina and Manzini to their full-text self-indexes that require a ‘minute amount of space’. The space requirement is proportional to and sometimes even smaller than that of the indexed string. FM-indexes can be constructed in O(n) time and all occurrences of pattern P can be located in O(m + |occ(P, S)| log n) time. Note that finding |occ(P, S)| only requires O(m) time, which means that FM-indexes have theoretically optimal time and space requirements for counting the number of occurrences of a pattern in a string.


The backward search algorithm employed by FM-indexes requires BWT(S), C(S) and a 2D n × |Σ| array rank(S) [In many papers, rank(S) is referred to as Occ(S), but to avoid confusion with occ(P, S), the name ‘rank’ is used]. This array is defined as rank[i, c] ≡ |occ(c, BWT[..i])|, 0 ≤ i < n, c ∈ Σ. For the running example, rank(S) is shown as columns 9 – 13 in Table 1. The role of rank(S) is similar to the role offset(S) plays in the inverse transformation of BWT(S). However, while offset(S) only stores information on the number of occurrences of one character for each index position, rank(S) contains this information for all the characters in the alphabet in all index positions. The extra information contained in rank(S) compared with offset(S) gives it the advantage of granting random access to LF(S). Furthermore, rank(S) is easier to compress than offset(S) or LF(S) (51).

During the course of the search algorithm, P is matched from right to left. For every step i, 0 ≤ i < m, an interval BWT[si .. ei] is maintained that contains all occurrences of P[m − i..]. Initially, [s0 .. e0] ≡ [0..n − 1], and after m steps [sm .. em] contains the suffix array interval corresponding to occ(P, S). Given [si .. ei] and c = P[m − i − 1], the next interval is found using the formulas si+1 = C[c] + rank[si − 1, c] and ei+1 = C[c] + rank[ei, c] − 1, where rank[−1, c] is taken to be 0. Here, array C(S) is used to locate the interval of suffixes starting with c in SA(S) and array rank(S) is used to find the number of suffixes starting with c that are lexicographically smaller and larger than the ones prefixed by cP[m − i..]. As an example of backward searching, again consider matching P = CA to the running example in Table 1. Initially, the backward search interval is [0..11]. Since C[A] = 1 and C[C] = 6, the backward search interval narrows down to [s1..e1] = [1..5] in the next step, which corresponds to the suffix array interval containing suffixes starting with A. Note that BWT[3] = BWT[4] = C, so there are two suffixes starting with A that are preceded by C. Consequently, s2 = C[C] + rank[0, C] = 6 + 0 = 6 and e2 = C[C] + rank[5, C] − 1 = 6 + 2 − 1 = 7. The answer |occ(P, S)| = 7 − 6 + 1 = 2 is found in O(m) time. rank[0, C] = 0 means that none of the suffixes in SA[0..0] is preceded by C and rank[5, C] = 2 means that 2 of the suffixes in SA[0..5] are preceded by C. Also note the resemblance between LF-mapping and backward search: s2 also could have been found as the first occurrence of C in BWT[1..5], which is 3: LF[3] = 6 = s2. Likewise, e2 could have been found as the last occurrence of C in BWT[1..5]. However, instead of locating these occurrences, note that offset[3] = rank[3, C] − 1 = rank[0, C]. Thus, the offset(S) values are stored in rank(S) at the boundaries of every interval, allowing search intervals to be narrowed down in constant time. As a result, the backward search algorithm of the FM-index simulates a top-down search in a suffix ‘trie’, i.e. a suffix tree where every edge label contains only a single character.

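Backward searching can be sketched as follows. This is an uncompressed illustration, not the layout used by actual FM-index implementations: the hypothetical table `occ[c][k]` stores the number of occurrences of c in BWT[0..k − 1], so that rank[i, c] from the text equals `occ[c][i + 1]` and the case rank[−1, c] = 0 needs no special handling. The function names are assumptions as well.

```python
def build_fm_index(bwt):
    """Return C (dict) and occ (dict of prefix-count lists) for a BWT string."""
    alphabet = sorted(set(bwt))
    C, total = {}, 0
    for ch in alphabet:
        C[ch] = total                 # characters in bwt smaller than ch
        total += bwt.count(ch)
    occ = {ch: [0] * (len(bwt) + 1) for ch in alphabet}
    for k, ch in enumerate(bwt):
        for a in alphabet:
            occ[a][k + 1] = occ[a][k] + (1 if a == ch else 0)
    return C, occ


def backward_search(p, bwt, C, occ):
    """Return the suffix array interval (s, e) of p, or None if p is absent."""
    s, e = 0, len(bwt) - 1
    for ch in reversed(p):            # match the pattern from right to left
        if ch not in C:
            return None
        s = C[ch] + occ[ch][s]            # C[c] + rank[s - 1, c]
        e = C[ch] + occ[ch][e + 1] - 1    # C[c] + rank[e, c] - 1
        if s > e:
            return None
    return s, e


BWT = "GT$CCGAATAAA"                  # BWT of ACATACAGATG$
C, OCC = build_fm_index(BWT)
interval = backward_search("CA", BWT, C, OCC)
print(interval)
print(interval[1] - interval[0] + 1)  # number of occurrences of "CA"
```

Each pattern character costs two table lookups, so counting takes O(m) time regardless of the length of the indexed string.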

After backward searching has terminated, occ(P, S) is still unknown. Using LF-mapping, this set can be retrieved from the interval BWT[sm .. em]. One possibility is to count the number of LF-mapping steps it takes to reach character $ for every sm ≤ i ≤ em. However, this would require too much time. To achieve better performance, FM-indexes mark additional positions with suffix array values in BWT(S). The number of suffix array values stored constitutes a time-space trade-off. Recall that LF[i] returns the position in SA(S) of suffix S[SA[i] − 1..]. Thus SA[LF[i]] = SA[i] − 1, such that LF(S) can be used to find the next smaller suffix array value. The ability of LF(S) to find smaller suffix array values is used as an argument to classify FM-indexes as compressed suffix arrays (45). Moreover, LF(S) and Ψ(S) are each other's inverses: SA[LF[i]] = SA[i] − 1 and SA[Ψ[i]] = SA[i] + 1, hence LF[Ψ[i]] = Ψ[LF[i]] = i.
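The locate step with marked positions can be sketched as below. The sampling scheme is an assumption made for illustration (every text position that is a multiple of a hypothetical rate `STEP` is marked, which always includes position 0 and so avoids wrap-around), and the full suffix array is built naively only to create the samples and to verify the result.

```python
S = "ACATACAGATG$"
n = len(S)
SA = sorted(range(n), key=lambda i: S[i:])     # used only to build the samples
BWT = "".join(S[(pos - 1) % n] for pos in SA)

# LF-mapping computed from C and the running offsets.
C, total = {}, 0
for ch in sorted(set(BWT)):
    C[ch] = total
    total += BWT.count(ch)
seen, LF = {}, []
for ch in BWT:
    LF.append(C[ch] + seen.get(ch, 0))
    seen[ch] = seen.get(ch, 0) + 1

STEP = 4                                       # hypothetical sampling rate
SAMPLES = {i: pos for i, pos in enumerate(SA) if pos % STEP == 0}


def locate(i):
    """Recover SA[i] by following LF until a marked row is reached."""
    steps = 0
    while i not in SAMPLES:
        i = LF[i]                              # SA[LF[i]] = SA[i] - 1
        steps += 1
    return SAMPLES[i] + steps


# Rows 6..7 form the backward search interval of P = CA.
print(sorted(locate(i) for i in range(6, 8)))
```

A larger `STEP` stores fewer suffix array values but requires more LF steps per reported occurrence, which is the time-space trade-off mentioned above.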

FM-indexes combine fast string matching with low memory requirements. Their original design (45) compresses BWT(S) using move-to-front lists, run-length encoding and a variable-length prefix code. In the original paper, rank(S) was compressed using the ‘Four-Russians’ technique (53). Roughly speaking, this technique comes down to subdividing the problem into small enough subproblems and indexing all solutions to these small problems in a global table. The subdivision into smaller subproblems is done by recursively splitting arrays into equally sized blocks and storing answers to queries relative to the larger parent block. Other compression methods have been proposed that show better performance in practice (49) or that give different space-time trade-offs (47,48,50,54,55).

Since they allow fast pattern matching while having small memory requirements, FM-indexes have become a very popular tool for different types of genome analyses. Compressed full-text index structures are mainly used for exact string matching, but algorithms for inexact string matching exist (51,56). FM-indexes have started to be used as part of de novo genome assembly algorithms (17) and support popular tools for mapping reads to reference sequences such as Bowtie (7), BWA (8) and SOAP2 (9).

TIME-MEMORY TRADE-OFFS

The increase in sequencing data requires efficient algorithms and data structures to form the backbone of computational tools for storing, processing and analyzing these sequences. Without the use of index structures, many algorithms that rely on string searching would become infeasible due to their long execution time. However, index structures also add a memory overhead to sequence analysis.

Over the last decade, much energy has been put into decreasing the memory consumption of index structures. The proposals differ in the performance overhead incurred by lowering the memory footprint. Some index structures suffer from a logarithmic slowdown, while others allow for the tuning of the space-time trade-off. There are indexes that have been especially designed for certain types of data, whereas others are tweaked for particular hardware architectures. An example of a data-specific property influencing index structure performance is the alphabet size of the sequences. Another major factor that allows classifying index structures is their expressiveness. Suffix trees are considered to have full expressiveness (29), supporting a large variety of string algorithms. Conversely, the bulk of recent compressed self-index structures are limited to performing mainly (in)exact string matching. These string matching self-indexes are often compared on the basis of four criteria: their size and their performance in extracting a random substring of S, calculating |occ(P, S)| and calculating occ(P, S). An overview of the memory taken by several index structures discussed in this section can be found in Table 3. This table represents memory requirements both in terms of the number of bits required per indexed character and in terms of the size required for indexing full genomes. Note, however, that the list of index structures in Table 3 is not complete, nor does it give a full overview of the memory-time trade-offs. For example, external memory index structures were omitted, but can be found in the ‘Index structures in external memory’ section. Additionally, peak memory requirements during construction can be much higher than the figures described here (see ‘Construction’ section). Furthermore, index structures contain parameters that allow manual tuning of the memory-time trade-off. Finally, because the expressiveness differs greatly between index structures, Table 3 does not include any time-related results. Partial results for some algorithms can be found elsewhere (39,54,57,58).

Table 3. Representative memory requirements for different index structure implementations, expressed both as bits per indexed character (column 2) and estimated size in megabytes for several known genomes (columns 3–5)

| Name index structure | Bits/char | Yeast (MB) | Fruit fly (MB) | Human (MB) | Reference |
|---|---|---|---|---|---|
| **2-bit encoded string** | 2 | 3 | 35 | 775 | NCBIᵃ |
| CSA Grossi et al. | 2.4 | 4 | 42 | 931 | (59,60) |
| FM-index | 3.36 | 5 | 59 | 1302 | (45,39) |
| SSA (best) | 4 | 6 | 70 | 1551 | (47,57) |
| CST Russo et al.ᵇ | 5 | 8 | 87 | 1939 | (61,62) |
| CSA Sadakane (best) | 5.6 | 8 | 98 | 2171 | (63,64) |
| LZ-index (best) | 6.64 | 10 | 116 | 2574 | (57) |
| **byte encoded string** | 8 | 12 | 139 | 3102 | NCBIᵃ |
| CST Navarroᵇ | 12 | 18 | 209 | 4653 | (62) |
| SSA (worst) | 20 | 30 | 349 | 7754 | (47,57) |
| CST Sadakaneᵇ | 30 | 45 | 523 | 11 632 | (44,62) |
| LZ-index (worst) | 35.2 | 53 | 614 | 13 648 | (65,39) |
| Suffix array | 40 | 60 | 697 | 15 509 | (35) |
| Enhanced SA | 72 | 109 | 1255 | 27 916 | (19) |
| WOTD suffix tree | 76 | 115 | 1325 | 29 467 | (33) |
| ST McCreight | 232 | 350 | 4045 | 89 952 | (34,33) |

Column 6 contains references to the original theoretical proposals and an additional reference to the articles from which these practical estimates originate. For ease of comparison, the index structures are sorted by increasing memory requirements. As a reference, the original (non-indexed) sequence is also included (bold), both stored using 2-bit encoding and byte encoding.

ᵃ Genome sizes were taken from the NCBI genome information pages http://www.ncbi.nlm.nih.gov/genome of Saccharomyces cerevisiae (yeast), Drosophila melanogaster (fruit fly) and Homo sapiens (human).

ᵇ Mean of the interval of possible memory requirements given in (62).
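The relation between the bits-per-character column and the size columns of Table 3 is a simple proportionality, sketched below. Two assumptions are made here and are not taken from the article: one MB is interpreted as 10^6 bytes, and the human genome is approximated by a round 3.1 × 10^9 characters, so the results only come close to the table entries rather than reproducing them exactly.

```python
BYTES_PER_MB = 10**6  # assumption: decimal megabytes


def index_size_mb(bits_per_char, n_chars):
    """Estimated index size in MB for n_chars indexed characters."""
    return bits_per_char * n_chars / 8 / BYTES_PER_MB


# Assumed round figure for the number of characters in the human genome.
HUMAN_GENOME_CHARS = 3_100_000_000

for name, bits in [("2-bit encoded string", 2),
                   ("FM-index", 3.36),
                   ("Suffix array", 40),
                   ("ST McCreight", 232)]:
    print(f"{name}: {index_size_mb(bits, HUMAN_GENOME_CHARS):.0f} MB")
```

The same function makes the ordering in the table tangible: going from 2 to 232 bits per character multiplies the footprint by a factor of 116, regardless of the genome indexed.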

The remainder of this section focuses on the basic principles behind these index structures and the memory-time trade-offs induced by design choices and confounding factors such as application and data types.

Uncompressed index structures

Choosing appropriate data structures for implementing the different components of suffix trees forms a basic step in lowering their memory requirements. These components include nodes, edges, edge labels, leaf numbers and suffix links. The topological information of ST(S) and the edge labels are traditionally stored as pointers, resulting in suffix trees that require O(n) words of usually 32 bits. Note that for very large strings (n > 2^32 ≈ 4·10^9) 32 bits is insufficient for storing the pointers, thus larger representations are required. This factor is often overlooked when presenting theoretical results.

There is only one major O(|Σ|)-sized memory-time trade-off in this traditional representation. This trade-off comes from the data structure that handles access to child vertices. Most implementations make use of (roughly ordered from high memory requirements and fast access to low memory requirements and slow access) static arrays, dynamic arrays (39), hash tables, linked lists and layouts with only pointers toward the first child and next sibling. Furthermore, mixed data structures that represent vertices with different numbers of children have also been proposed (66). Note that for DNA sequences, |Σ| is very small, turning array implementations into a workable solution. Also note that algorithms that perform full suffix tree traversals, such as repeat finding and many other string problems (29), do not suffer from a performance loss when implemented with more memory-efficient data structures.
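The two extremes of this trade-off can be contrasted in a small Python sketch. For brevity it uses an uncompacted trie with single-character edges rather than a real suffix tree, and the class and function names (`ArrayNode`, `SiblingNode`, `insert`, `contains_path`) are assumptions made for this illustration only.

```python
ALPHABET = "ACGT"
SLOT = {c: i for i, c in enumerate(ALPHABET)}


class ArrayNode:
    """Static array of |Σ| child slots: constant-time access, |Σ| pointers per node."""

    def __init__(self):
        self.children = [None] * len(ALPHABET)

    def child(self, c):
        return self.children[SLOT[c]]

    def add_child(self, c):
        if self.children[SLOT[c]] is None:
            self.children[SLOT[c]] = ArrayNode()
        return self.children[SLOT[c]]


class SiblingNode:
    """First-child/next-sibling layout: two pointers per node, O(|Σ|) access."""

    def __init__(self, label=None):
        self.label = label
        self.first_child = None
        self.next_sibling = None

    def child(self, c):
        node = self.first_child
        while node is not None and node.label != c:
            node = node.next_sibling  # walk the sibling list
        return node

    def add_child(self, c):
        node = self.child(c)
        if node is None:
            node = SiblingNode(c)
            node.next_sibling = self.first_child
            self.first_child = node
        return node


def insert(root, word):
    node = root
    for c in word:
        node = node.add_child(c)


def contains_path(root, word):
    node = root
    for c in word:
        node = node.child(c)
        if node is None:
            return False
    return True
```

Both layouts answer the same queries; they differ only in the number of pointers stored per node and in the cost of finding one particular child, which is why a full traversal is unaffected by the choice.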

In practice, suffix trees and suffix arrays require between 34n and 152n bits of memory. The suffix tree implementations described by Kurtz (66) perform very well and are implemented in the latest release of MUMmer (10), an open-source sequence analysis tool. The implementation in MUMmer allows indexing DNA sequences up to 250 Mbp on a computer with 4 GB of memory. Single human chromosomes are thus well within reach of standard suffix trees. Another implementation by Giegerich et al. (33) is even smaller, but lacks suffix links. Enhanced suffix arrays (19) also reach full expressiveness of suffix trees, as described in the previous section. When carefully implemented, they require anything between 40n and 72n bits. Enhanced suffix arrays use a linked list to represent the vertices of the tree. However, the O(|Σ|) performance penalty for string matching can be reduced to O(log|Σ|) (41). Furthermore, enhanced suffix arrays form the basis of the Vmatch program that finds different types of exact and approximate repeats in sequences of several hundreds of Mbp in a few seconds. Moreover, according to a comparison between several implementations of suffix trees and enhanced suffix arrays (39), enhanced suffix arrays show the best overall performance for both the memory footprint and the traversal times. Finally, their modular design allows replacing some arrays by a compressed counterpart to further reduce space.

Sparse indexes

An intuitive solution for decreasing index structure memory requirements is sparsification or sampling of suffixes or array indexes. ‘Sparse suffix trees’ (67) and ‘sparse suffix arrays’ (68) adopt the idea of utilizing a sparse set of suffixes, whereas compressed suffix arrays and trees sample values in Ψ(S), C(S), rank(S) and other arrays involved in their design. As a consequence of sparsification, more string comparisons and sequential string searches are required. This, however, gives the opportunity to optionally tweak the size of the index structure based on the available memory. Although compressed index structures have received more attention in bioinformatics applications, sparse suffix arrays have been successfully used for exact pattern matching, retrieval of maximal exact matches (69) and read alignment (70). Furthermore, splitting indexes over multiple sparse index structures has been used for index structures that reside on disk (71) and for distributed query processing (72).
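How sparsification trades memory for extra comparisons can be illustrated with a sparse suffix array that keeps only every k-th suffix. The sketch below is an assumption-laden illustration, not the method of any cited tool: the function names are invented, construction uses naive sorting, and patterns are required to be at least k characters long so that every occurrence overlaps a sampled suffix.

```python
def build_sparse_sa(text, k):
    """Sorted start positions of the suffixes at text positions 0, k, 2k, ..."""
    return sorted(range(0, len(text), k), key=lambda i: text[i:])


def _matching_rows(text, ssa, query):
    """Half-open range of rows in ssa whose suffix starts with query."""
    m = len(query)
    lo, hi = 0, len(ssa)
    while lo < hi:                      # first row with prefix >= query
        mid = (lo + hi) // 2
        if text[ssa[mid]:ssa[mid] + m] < query:
            lo = mid + 1
        else:
            hi = mid
    first, hi = lo, len(ssa)
    while lo < hi:                      # first row with prefix > query
        mid = (lo + hi) // 2
        if text[ssa[mid]:ssa[mid] + m] <= query:
            lo = mid + 1
        else:
            hi = mid
    return first, lo


def find_all(text, ssa, k, pattern):
    """All start positions of pattern in text; requires len(pattern) >= k."""
    if len(pattern) < k:
        raise ValueError("pattern must be at least k characters long")
    hits = []
    for shift in range(k):
        # Search the pattern with its first `shift` characters removed ...
        first, last = _matching_rows(text, ssa, pattern[shift:])
        for pos in ssa[first:last]:
            begin = pos - shift
            # ... and verify the removed characters directly against the text.
            if begin >= 0 and text[begin:pos] == pattern[:shift]:
                hits.append(begin)
    return sorted(hits)
```

The index holds roughly n / k entries instead of n, at the price of k binary searches plus a sequential verification per candidate, which mirrors the extra string comparisons mentioned above.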

Word-based index structures are special cases of sparse index structures which only sample one suffix per word. Although word-based index structures are most popular in the form of inverted files, word-based suffix trees (73,74) and suffix arrays (68) also exist. Although it is possible to divide biological sequences into ‘words’, word-based index structures are generally designed to answer pattern matching queries on natural language data. On natural language data, Transier and Sanders (75) found that inverted files outperformed full-text indexes by a wide margin. Unfortunately, the inverted files were not compared against word-based implementations of suffix trees and suffix arrays. A somewhat dual approach was taken by Puglisi et al. (76), who adapted inverted files to become full-text indexes able to perform substring queries. They found compressed suffix arrays to generally outperform inverted files for DNA sequences, but the opposite conclusion was drawn for protein sequences. It turns out that compressed suffix arrays perform relatively better compared with inverted files when searching for patterns having fewer occurrences. Note that both comparative studies were performed in primary memory.

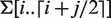

Compressed index structures

Compressed and succinct index structures are currently the most popular forms of index structures used in bioinformatics. Index structures such as compressed suffix arrays and FM-indexes are gradually built into state-of-the-art read mapping tools and other bioinformatics applications. Where traditional index structures require  (nlog n) bits of storage, succinct index structures require

(nlog n) bits of storage, succinct index structures require  (n) bits and the memory footprint of compressed index structures is defined relative to the ‘empirical entropy’ (77) of a string. Furthermore, these self-indexes contain S itself, thus saving again

(n) bits and the memory footprint of compressed index structures is defined relative to the ‘empirical entropy’ (77) of a string. Furthermore, these self-indexes contain S itself, thus saving again  (n) bits. Theoretically, this means that the size of compressed index structures can become a fraction of S itself. In practice, however, DNA and protein sequences do not compress very well (2,70). For this reason, the size of compressed index structures is roughly similar to the size of storing S using a compact bit representation. The major disadvantage of compressed index structures is the logarithmic increase in computation time for many string algorithms. This is, however, not the case for all string algorithms. For example, calculating |occ(P, S)| can still be done in

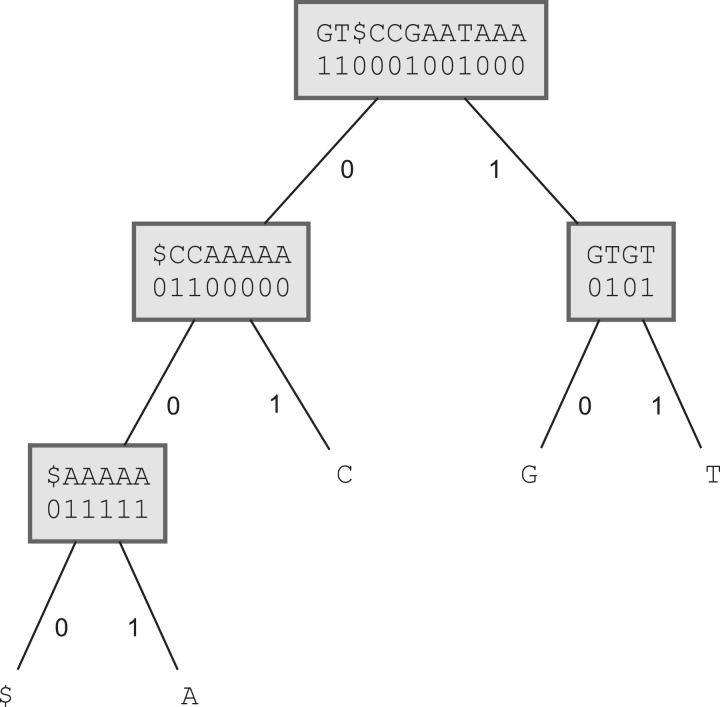

(n) bits. Theoretically, this means that the size of compressed index structures can become a fraction of S itself. In practice, however, DNA and protein sequences do not compress very well (2,70). For this reason, the size of compressed index structures is roughly similar to the size of storing S using a compact bit representation. The major disadvantage of compressed index structures is the logarithmic increase in computation time for many string algorithms. This is, however, not the case for all string algorithms. For example, calculating |occ(P, S)| can still be done in  (m) time for some compressed indexes. These internal differences between compressed index structures result from their complex nature, as they combine ideas from classical index structures, compression algorithms, coding strategies and other research fields. In the following paragraphs, the conceptual differences of state-of-the-art compressed index structures are surveyed, illustrated with theoretical and practical comparisons wherever possible. A more technical review is found in (42).
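To make the notion of empirical entropy concrete, the sketch below computes the zeroth-order and k-th order empirical entropy of a string using the standard definitions; the function names and the toy inputs are assumptions made for this example and do not come from the article. A string over four equally frequent characters has a zeroth-order entropy of 2 bits per character, which is the same budget as a plain 2-bit encoding and illustrates why entropy-bounded indexes gain little on sequences with near-uniform composition.

```python
from collections import Counter, defaultdict
from math import log2


def h0(sequence):
    """Zeroth-order empirical entropy in bits per character."""
    n = len(sequence)
    if n == 0:
        return 0.0
    return -sum(count / n * log2(count / n)
                for count in Counter(sequence).values())


def hk(sequence, k):
    """k-th order empirical entropy: H0 of the characters following each
    length-k context, weighted by context frequency and divided by n."""
    if k == 0:
        return h0(sequence)
    followers = defaultdict(list)
    for i in range(len(sequence) - k):
        followers[sequence[i:i + k]].append(sequence[i + k])
    return sum(len(chars) * h0(chars) for chars in followers.values()) / len(sequence)
```

Higher-order entropy can be much lower than H0 when the context predicts the next character well, as in highly repetitive strings; the hedged point of the example is only that this gain depends entirely on the data.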