Abstract

The ability to perceive important features of electrical stimulation varies across stimulation sites within a multichannel implant. The aim of this study was to optimize speech processor MAPs for bilateral implant users by identifying and removing sites with poor psychophysical performance. The psychophysical assessment involved amplitude-modulation detection with and without a masker, and a channel interaction measure quantified as the elevation in modulation detection thresholds in the presence of the masker. Three experimental MAPs were created on an individual-subject basis using data from one of the three psychophysical measures. These experimental MAPs improved the mean psychophysical acuity across the electrode array and provided additional advantages such as increasing spatial separations between electrodes and/or preserving frequency resolution. All 8 subjects showed improved speech recognition in noise with one or more experimental MAPs over their everyday-use clinical MAP. For most subjects, phoneme and sentence recognition in noise were significantly improved by a dichotic experimental MAP that provided better mean psychophysical acuity, a balanced distribution of selected stimulation sites, and preserved frequency resolution. The site-selection strategies serve as useful tools for evaluating the importance of psychophysical acuities needed for good speech recognition in implant users.

INTRODUCTION

Variation in perception across stimulation sites within an individual subject’s cochlear implant has been demonstrated by psychophysical measures that assess stimulus detection, loudness, and temporal or spatial discrimination at individual stimulation sites (Zwolan et al., 1997; Donaldson and Nelson, 2000; Pfingst et al., 2004; Pfingst and Xu, 2005; Pfingst et al., 2008; Zhou et al., 2012; Garadat et al., 2012). The patterns of across-site variation are subject-specific, suggesting that they are not based on the normal organization of the auditory system but rather depend, at least in part, on peripheral site-specific conditions, such as pathology, in an individual’s implanted ear. Histological analysis of human temporal bones shows that these peripheral conditions are not distributed uniformly in the implanted ear but occur in localized areas (Johnsson et al., 1981; Nadol, 1997), suggesting a likely cause of the across-site variation in perception. In addition, animal studies comparing psychophysical and electrophysiological responses to histological data support the idea that conditions in the cochlea near the cochlear-implant electrodes have a significant impact on the functional responses to electrical stimulation (Pfingst et al., 1981; Shepherd et al., 1993; Shepherd and Javel, 1997; Shepherd et al., 2004; Chikar et al., 2008; Kang et al., 2010; Pfingst et al., 2011b).

The uneven perceptual acuity along the tonotopic axis warrants fitting strategies based on selecting the optimal stimulation sites within the patient’s multisite electrode array, assuming that stimulation sites with poor perceptual acuity could negatively affect perception with the whole electrode array. Furthermore, across-site variation itself might be detrimental, since studies have shown that subjects with less variance across stimulation sites in psychophysical detection thresholds have better speech outcomes (Pfingst et al., 2004; Pfingst and Xu, 2005; Bierer, 2007). Outcomes can therefore potentially be improved by avoiding stimulation sites with poor acuity, which both reduces across-site variation and improves the mean psychophysical performance across the array. We call this approach a site-selection strategy. Zwolan et al. (1997) were among the first to use a site-selection strategy to create an experimental MAP, which excluded electrodes that were not discriminable from their nearest neighbors. Speech recognition was significantly improved on at least one speech test for 7/9 subjects, while 2/9 subjects showed a significant decrease on at least one speech test. In a recent study, individual implant users’ clinical MAPs were optimized by removing the 5 electrodes with the poorest modulation detection sensitivity, one from each of 5 tonotopic regions segmented evenly along the electrode array (Garadat et al., 2011). All 12 subjects in that study achieved improved speech reception thresholds with the optimized MAP compared with the clinical MAP that they had used every day for at least 12 months.

The pattern in which perceptual responses vary along the electrode array differs across psychophysical measures, probably because the various psychophysical tasks are mediated by different mechanisms and characterize different aspects of perception (Pfingst et al., 2008; Pfingst et al., 2011a). To maximize the potential benefit of a site-selection strategy for speech recognition, it is therefore important to determine which psychophysical measures are correlated with speech recognition. Modern multichannel cochlear implants typically use temporal-envelope-based speech processing strategies that transmit the low-frequency envelope components of speech by using them to modulate constant-rate pulse trains (Wilson et al., 1995). Because current cochlear implant designs inherently provide low spectral resolution, successful transmission of speech information depends largely on coding of the slowly varying envelope amplitudes of the signal (Eddington et al., 1978; Shannon et al., 1995). Studies have shown that amplitude-modulation detection thresholds (MDTs), which measure a listener’s ability to detect changes in current in the envelope, are correlated with speech recognition performance, although the strength of this relationship has not been consistent across experiments (Cazals et al., 1994; Fu, 2002; Luo et al., 2008).

Accurate coding of the speech envelopes from the output of each frequency channel also depends on the independence of the frequency channels. Speech recognition performance of cochlear implant users typically saturates when the number of channels is increased to 8, or fewer in some cases (Friesen et al., 2001). This is thought to be due to channel interaction. In cochlear implants, there is likely to be spatial overlap in the neural populations that are activated by individual channels of stimulation. The amount of channel interaction has been attributed to the sparseness or unevenness in the distribution of surviving and stimulatable neural fibers, positioning of the implant electrodes in the scala tympani, and other variables sometimes referred to collectively as the electrode-neuron interface (Long et al., 2010; Bierer and Faulkner, 2010). In psychophysical tasks, channel interactions can manifest as reduced sensitivity in amplitude modulation detection (i.e., elevated MDTs) in the presence of a masker interleaved in time on an adjacent electrode (Richardson et al., 1998; Chatterjee, 2003; Garadat et al., 2012).

In the present study, we explored site-selection strategies in bilateral implant users using three psychophysical measures that assess temporal acuity (MDTs in quiet), spatial acuity (channel interaction), or both (masked MDTs). For each bilateral implant subject, one criterion measure for site selection was chosen by relating the subject’s ear differences in the three psychophysical measures to the ear difference in speech recognition: we identified the measure for which the ear with the better across-site mean (ASM) was also the ear with better speech recognition. We hypothesized that site selection using measures chosen on an individual basis would be the most effective. Customized site-selection strategies applied across two ears offer several advantages over the options available with unilateral implants. With bilateral implants and dichotic stimulation, stimulation sites can be selected without changing the frequency allocation, the number of spectral channels, or the processing strategy, as detailed in the Methods section.

The aims of the study therefore were (1) to examine the across-site patterns of performance for MDTs in quiet, MDTs in the presence of an unmodulated masker, and channel interaction (the amount of MDT elevation in the presence of a masker) across ears and subjects, (2) to create customized MAPs using site-selection strategies on an individual-subject basis, based on the psychophysical measure whose ASM ear difference was in the same direction as the ear difference in speech recognition, and (3) to examine the effectiveness of the customized MAPs by comparing them with the listeners’ everyday-use clinical MAPs. The site-selection strategies used in this within-subject design can help elucidate the relationship between psychophysical and speech recognition performance in cochlear implant users, a relationship that previous across-subject studies did not establish conclusively. These strategies also have strong clinical implications because they could be used to guide the fitting of cochlear implants in clinical practice.

METHODS

Subjects and hardware

Eight bilaterally implanted, post-lingually deafened adults were recruited from the University of Michigan, Ann Arbor Cochlear Implant clinic and surrounding areas. All were native English speakers. They were users of Nucleus CI24M, CI24R, CI24RE, or CI512 implants, and all were programmed with the ACE speech-processing strategy and a monopolar (MP1+2) electrode configuration. The subjects used Freedom or CP810 speech processors on both ears, or one of each. All subjects had been implanted sequentially and showed ear differences in speech recognition of varying degrees. None of the subjects showed signs of cognitive disabilities. Demographic information for the eight subjects is shown in Table I. The use of human subjects for this study was reviewed and approved by the University of Michigan Medical School Institutional Review Board.

TABLE I.

Demographic information of the bilaterally implanted subjects.

| Subject | Gender | Age (years) | Duration of deafness prior to implantation, L (years) | Duration of deafness prior to implantation, R (years) | Duration of CI use, L (years) | Duration of CI use, R (years) | Implant type (L/R) | Etiology (L/R) |
|---|---|---|---|---|---|---|---|---|
| S52 | F | 58.5 | 54.0 | 21.5 | 1.9 | 12.0 | CI24R(CA)/CI24M | Trauma/Hereditary |
| S60 | M | 71.4 | 0.2 | 6.2 | 8.2 | 2.2 | CI24R(CS)/CI24RE(CA) | Hereditary/Hereditary |
| S69 | M | 70.8 | 4.4 | 9.2 | 6.4 | 1.6 | CI24R(CA)/CI512 | Noise exposure/Noise exposure |
| S81 | F | 59.8 | 2.5 | 4.0 | 5.2 | 3.7 | CI24RE(CA)/CI24RE(CA) | Hereditary/Hereditary |
| S86 | F | 64.2 | 31.0 | 0.7 | 3.2 | 3.5 | CI24RE(CA)/CI24RE(CA) | Hereditary/Hereditary |
| S88 | M | 60.0 | 4.5 | 33.5 | 8.5 | 8.5 | CI24RE(CA)/CI24R(CS) | Hereditary/Hereditary |
| S89 | M | 66.4 | 64.1 | 59.9 | 2.3 | 6.5 | CI24RE(CA)/CI24R(CA) | Hereditary/Hereditary |
| S90 | F | 74.0 | 45.6 | 51.3 | 7.4 | 1.7 | CI24R(CA)/CI512 | Hereditary/Hereditary |

Laboratory-owned Cochlear speech processors (Cochlear Corporation, Englewood, CO) were used for the psychophysical and speech recognition tests. Two Freedom processors, one for each ear, were used to collect the psychophysical data. For the speech recognition tests with both the experimental MAPs and the subjects’ clinical MAPs, laboratory-owned Freedom or CP810 processors were used, matching the processors that the subjects wore daily with their clinical MAPs.

Psychophysical measures

Stimuli and stimulation method

Stimuli for the following psychophysical tests were 500 ms trains of symmetric-biphasic pulses with a mean phase duration of 50 μs and an inter-phase interval of 8 μs, presented at a rate of 900 pps using a monopolar (MP1+2) electrode configuration. The labels for the stimulation sites were the same as those of the scala tympani electrodes, which were numbered 1 to 22 from base to apex. Psychophysical data were not collected at stimulation sites that were turned off in the subject’s everyday-use clinical MAP. The number of sites turned off for this reason ranged from 0 to 3 per ear.

Measure of dynamic range

Dynamic range was obtained by measuring the threshold (T) and maximum comfortable (C) levels for each stimulation site in both ears using Cochlear Custom Sound Suite 3.1 software. T levels were obtained using the method of adjustment: the stimulus level was varied up and down until the subject could just hear the beep(s). To measure C levels, the stimulus level was raised to the highest level that the subject judged could be tolerated for a long period of time.
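The T and C levels measured here set the stimulus levels used later: both the MDT probe and the masker were presented at 50% of the dynamic range, T + (C − T) × 0.5 in clinical units. A minimal sketch of that computation follows; the function name and the example T/C values are hypothetical, and rounding to whole clinical units is an assumption.

```python
def level_at_fraction(t_level, c_level, fraction=0.5):
    """Return the level T + (C - T) * fraction in clinical units.

    Assumes T and C are in the same clinical current-level units with C >= T.
    Rounding to the nearest whole unit is an assumption (note that Python's
    round() sends exact halves to the nearest even integer).
    """
    if c_level < t_level:
        raise ValueError("C level must not be below T level")
    return round(t_level + (c_level - t_level) * fraction)


# Hypothetical T and C levels (clinical units), keyed by electrode number.
tc_levels = {10: (120, 180), 11: (115, 175), 12: (130, 190)}
stim_levels = {site: level_at_fraction(t, c) for site, (t, c) in tc_levels.items()}
```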

Amplitude-modulation detection

Single-channel MDTs were measured at each available stimulation site in random order. Electrical stimuli were presented through a research interface (Cochlear Corporation NICII) controlled by Matlab software. The stimulus level was set at 50% of the dynamic range [i.e., T + (C−T) × 0.5 in clinical units] at the tested stimulation site. The phase durations of both the positive and negative phases of the pulses were modulated by a 10 Hz sinusoid that started and ended at zero phase; modulating phase duration rather than current amplitude allows stimulus charge to be controlled in finer steps. A four-alternative forced-choice (4AFC) paradigm was used: the four 500 ms pulse trains were separated by 500 ms silent intervals, and only one of them, chosen at random on each trial, was amplitude modulated. Modulation depth started at 50% and was adapted using a two-down, one-up adaptive-tracking procedure (Levitt, 1971), which converges on the 70.7%-correct point of the psychometric function. The step size was 6 dB until the first reversal, 4 dB for the next two reversals, and 1 dB for the remaining reversals. The MDT was defined as the mean of the modulation depths at the last 6 reversals. Feedback was given after each trial.
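The stimulus and tracking rules above can be sketched as follows. This is an illustration under stated assumptions, not the study’s Matlab code: the function names are hypothetical, the listener is abstracted as a `respond(depth)` callback that returns True for a correct 4AFC choice, modulation depth is tracked in dB as 20·log10(m), and the total of 9 reversals (3 at the larger steps plus 6 averaged at 1 dB) is assumed because the text gives only the number averaged.

```python
import math


def modulated_phase_durations(depth, mean_pd_us=50.0, rate_pps=900,
                              dur_s=0.5, fm_hz=10.0):
    """Per-pulse phase durations (us) for a sinusoidally modulated pulse train.

    Phase duration follows mean_pd_us * (1 + depth * sin(2*pi*fm_hz*t)); with a
    10 Hz modulator and a 500 ms train this spans 5 whole cycles, starting and
    ending at zero phase. The same duration applies to both pulse phases.
    """
    n_pulses = round(rate_pps * dur_s)
    return [mean_pd_us * (1 + depth * math.sin(2 * math.pi * fm_hz * k / rate_pps))
            for k in range(n_pulses)]


def run_mdt_track(respond, start_depth=0.5, n_reversals=9, n_avg=6,
                  max_trials=1000):
    """Two-down, one-up track on modulation depth in dB; returns the MDT in dB.

    Steps are 6 dB before the first reversal, 4 dB until the third reversal,
    then 1 dB. The MDT is the mean depth (dB) at the last n_avg reversals.
    """
    level_db = 20 * math.log10(start_depth)
    correct_in_row = 0
    direction = 0                      # -1: harder (smaller depth), +1: easier
    reversals = []
    trials = 0
    while len(reversals) < n_reversals:
        if trials == max_trials:
            raise RuntimeError("track did not reach the required reversals")
        trials += 1
        if respond(10 ** (level_db / 20)):
            correct_in_row += 1
            if correct_in_row < 2:
                continue               # two correct in a row needed to step down
            correct_in_row = 0
            new_direction = -1
        else:
            correct_in_row = 0
            new_direction = +1
        if direction and new_direction != direction:
            reversals.append(level_db)  # the track changed direction here
        direction = new_direction
        n = len(reversals)
        step = 6.0 if n < 1 else 4.0 if n < 3 else 1.0
        level_db = min(level_db + direction * step, 0.0)  # depth capped at 100%
    return sum(reversals[-n_avg:]) / n_avg


# Usage with a hypothetical deterministic listener who detects depths >= 10%.
mdt_db = run_mdt_track(lambda depth: depth >= 0.1)
```

With this idealized listener the track settles into 1 dB oscillations around −20 dB (10% depth), so the estimated MDT falls about 0.5 dB from that value; a probabilistic `respond` would be needed to reproduce the 70.7%-correct convergence.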

To measure MDTs in the presence of a masker and assess channel interaction, dual-channel stimulation was used: the MDT was measured on a probe electrode in the presence of an unmodulated masker on an adjacent electrode. The masker was presented on the adjacent electrode apical to the probe (or, for electrode 22, basal to the probe) at 50% of the dynamic range of the masking electrode. A previous study of forward-masked excitation patterns (Lim et al., 1989) showed that the amount of masking decreases more rapidly with electrode separation in the apical direction than in the basal direction, which provided the rationale for choosing the apical neighbor as the masker in this study and in others (Richardson et al., 1998). The masker was unmodulated to minimize informational masking at the central level (Chatterjee, 2003). The two channels were interleaved in time to achieve concurrent but non-simultaneous stimulation, as in a standard cochlear implant speech processor.
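A short sketch of the probe/masker pairing and of interleaved (non-simultaneous) timing is given below. The function names are hypothetical; the half-period masker offset is an assumption, since the text states only that the two channels were interleaved, and the case of an apical neighbor that is disabled in the clinical MAP is not described in the text and is not handled here.

```python
def masker_electrode(probe, n_electrodes=22):
    """Masker electrode for a given probe, following the rule in the text.

    Electrodes are numbered 1 (base) to 22 (apex), so the apical neighbour is
    probe + 1; for the most apical electrode the basal neighbour is used.
    """
    if not 1 <= probe <= n_electrodes:
        raise ValueError(f"probe must be between 1 and {n_electrodes}")
    return probe + 1 if probe < n_electrodes else probe - 1


def interleaved_onsets(rate_pps=900, dur_s=0.5, offset_fraction=0.5):
    """Pulse onset times (s) for probe and masker channels interleaved in time.

    Both channels run at rate_pps; the masker is delayed by offset_fraction of
    a pulse period (a half-period offset is an assumption).
    """
    period = 1.0 / rate_pps
    n_pulses = round(rate_pps * dur_s)
    probe = [k * period for k in range(n_pulses)]
    masker = [t + offset_fraction * period for t in probe]
    return probe, masker
```

At 900 pps the pulse period is about 1111 μs, whereas a biphasic pulse at the mean phase duration lasts 50 + 8 + 50 = 108 μs, so a half-period offset leaves a wide gap between probe and masker pulses.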

The MDTs in quiet and the masked MDTs were both measured twice for each stimulation site and the means of the two MDTs were taken as the final measurements. The amount of masking (i.e., masked MDT minus MDT in quiet) was calculated as a measure of channel interaction for each stimulation site. The ASMs and across-site variances (ASVs) were calculated for each ear for all three measures.
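The per-site averaging and the per-ear summaries described above can be sketched as follows. The function name and data layout are hypothetical, and computing the ASV as the sample variance across sites (rather than the population variance) is an assumption.

```python
from statistics import fmean, variance


def summarize_ear(quiet_runs, masked_runs):
    """Per-site means of two runs, amount of masking, and ASM/ASV per measure.

    quiet_runs, masked_runs: dict mapping electrode -> (run1_dB, run2_dB).
    Only sites with both measurements are used. ASV is the sample variance
    across sites (an assumption).
    """
    sites = sorted(set(quiet_runs) & set(masked_runs))
    quiet = {s: fmean(quiet_runs[s]) for s in sites}
    masked = {s: fmean(masked_runs[s]) for s in sites}
    # Positive values mean the masker elevated the MDT (more interaction).
    masking = {s: masked[s] - quiet[s] for s in sites}
    summary = {}
    for name, per_site in (("mdt_quiet", quiet), ("mdt_masked", masked),
                           ("masking", masking)):
        values = list(per_site.values())
        summary[name] = {"per_site": per_site, "asm": fmean(values),
                         "asv": variance(values)}
    return summary


# Hypothetical two-run MDTs (dB) for three electrodes in one ear.
ear = summarize_ear(
    {1: (-20.0, -22.0), 2: (-18.0, -18.0), 3: (-25.0, -23.0)},
    {1: (-15.0, -17.0), 2: (-16.0, -16.0), 3: (-20.0, -20.0)},
)
```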

Constructing experimental MAPs

Determining a psychophysical measure for the site-selection strategy

Following the psychophysical assessment of the whole electrode array in both ears and the measurement of the speech reception threshold (SRT) for each ear alone (procedure described below), the measure for site selection was chosen on an individual basis. For each subject, the psychophysical measure whose ASM ear difference was in the same direction as the ear difference in speech recognition was chosen as the criterion measure for site selection. Table II summarizes, for each subject, the better ear on speech recognition and the ear with the better ASM on each of the three psychophysical measures. One-sample Z tests were performed to examine whether the ASM ear differences were significantly different from zero; the p values of these tests are also included in the table.
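The text does not specify how the one-sample Z test was set up. One plausible reading, sketched below purely as an assumption, pairs the two ears’ values by electrode number, tests whether the mean of the per-site ear differences departs from zero, and uses the sample standard deviation in place of a known population value. The function name and example data are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, fmean, stdev


def ear_difference_z_test(left, right):
    """Two-sided one-sample z test of H0: mean(left - right) == 0.

    left, right: dict mapping electrode -> value for one measure in one ear.
    Differences are paired by electrode number using only sites tested in
    both ears, and the sample SD stands in for the population SD; both are
    assumptions.
    """
    sites = sorted(set(left) & set(right))
    diffs = [left[s] - right[s] for s in sites]
    mean_diff = fmean(diffs)
    z = mean_diff / (stdev(diffs) / sqrt(len(diffs)))
    p = 2.0 * (1.0 - NormalDist().cdf(abs(z)))
    return mean_diff, z, p


# Hypothetical per-site MDTs (dB); here the left ear has the lower (better) MDTs.
left_mdt = {1: -20.0, 2: -22.0, 3: -21.0, 4: -19.0}
right_mdt = {1: -18.0, 2: -21.0, 3: -19.0, 4: -18.0}
mean_diff, z, p = ear_difference_z_test(left_mdt, right_mdt)
```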

TABLE II.

The better ear on speech reception threshold (SRT) and on each of the three psychophysical measures.

| Measure | S52 | S60 | S69 | S81 | S86 | S88 | S89 | S90 |
|---|---|---|---|---|---|---|---|---|
| MDTs in quiet | R (p < 0.01) | R (p < 0.01) | R (p < 0.01) | L (p < 0.01) | L (p < 0.01) | R (p < 0.01) | L (p < 0.01) | L (p < 0.01) |
| Masked MDTs | R (p < 0.01) | R (p < 0.01) | R (p < 0.01) | L (p < 0.01) | R (p = 0.42) | R (p < 0.01) | L (p < 0.01) | L (p < 0.01) |
| Amount of masking | R (p = 0.93) | L (p < 0.01) | L (p < 0.01) | R (p < 0.01) | R (p < 0.01) | R (p < 0.01) | R (p < 0.01) | R (p = 0.12) |
| SRT, left ear (dB) | 15.000 | 2.565 | 10.425 | 0.670 | 4.555 | 20.280 | 3.995 | 21.565 |
| SRT, right ear (dB) | 4.830 | 7.710 | 7.285 | 0.665 | 8.000 | 16.140 | 5.425 | 11.565 |
| Better ear on SRT | R | L | R | R | L | R | L | R |

For four subjects (S60, S81, S86, and S90), only one measure showed an ear difference in the same direction as the SRT ear difference, and that measure was used for site selection. For two subjects (S69 and S89), MDTs in quiet and masked MDTs were highly correlated with one another across the array (p < 0.001), and both showed ear differences in the same direction as the SRT ear differences. In these two cases, masked MDT was chosen as the measure for site selection because it presumably reflects both the temporal and the spatial acuity of a site. For the remaining two subjects (S52 and S88), the ear differences of all three measures were consistent with the SRT ear differences; the amount of masking was chosen because it showed the largest across-site variance, which facilitates site selection. In summary, the measure of channel interaction (i.e., amount of masking) was used for five subjects, masked MDT for two subjects, and MDT in quiet for one. The measure for site selection (MSS) for each subject is labeled “MSS” in the corresponding panel of Fig. 1.
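The selection rules above can be checked against Table II with a small sketch. The better-ear entries and SRTs are transcribed from Table II; the function name is hypothetical, and the ASV values are placeholders, since Fig. 1 values are not given here: only their ordering (amount of masking largest, as reported for S52 and S88, the only subjects for whom the tie-break is used) comes from the text. The sketch applies only the matching rules and does not re-test the across-site correlation cited for S69 and S89.

```python
from collections import Counter

# Better ear per measure and SRTs (dB, left then right), from Table II.
TABLE_II = {
    "S52": ({"mdt_quiet": "R", "mdt_masked": "R", "masking": "R"}, 15.000, 4.830),
    "S60": ({"mdt_quiet": "R", "mdt_masked": "R", "masking": "L"}, 2.565, 7.710),
    "S69": ({"mdt_quiet": "R", "mdt_masked": "R", "masking": "L"}, 10.425, 7.285),
    "S81": ({"mdt_quiet": "L", "mdt_masked": "L", "masking": "R"}, 0.670, 0.665),
    "S86": ({"mdt_quiet": "L", "mdt_masked": "R", "masking": "R"}, 4.555, 8.000),
    "S88": ({"mdt_quiet": "R", "mdt_masked": "R", "masking": "R"}, 20.280, 16.140),
    "S89": ({"mdt_quiet": "L", "mdt_masked": "L", "masking": "R"}, 3.995, 5.425),
    "S90": ({"mdt_quiet": "L", "mdt_masked": "L", "masking": "R"}, 21.565, 11.565),
}


def choose_measure(better_ears, srt_left, srt_right, asv):
    """Pick the site-selection measure using the rules stated in the text."""
    srt_better = "L" if srt_left < srt_right else "R"   # lower SRT is better
    matches = {m for m, ear in better_ears.items() if ear == srt_better}
    if len(matches) == 1:
        return next(iter(matches))
    if matches == {"mdt_quiet", "mdt_masked"}:
        return "mdt_masked"          # reflects both temporal and spatial acuity
    if len(matches) == 3:
        return max(matches, key=asv.__getitem__)  # largest across-site variance
    raise ValueError(f"no rule in the text covers the set {sorted(matches)}")


# Placeholder ASVs: only the ordering (masking largest) is taken from the text.
ASV_PLACEHOLDER = {"mdt_quiet": 1.0, "mdt_masked": 1.0, "masking": 2.0}
chosen = {s: choose_measure(ears, l, r, ASV_PLACEHOLDER)
          for s, (ears, l, r) in TABLE_II.items()}
counts = Counter(chosen.values())
```

Applied to Table II, the rules reproduce the reported split: amount of masking for five subjects, masked MDT for two, and MDT in quiet for one.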

Figure 1.

Psychophysical measures for all available stimulation sites in the left (open symbols) and right (filled symbols) ears. The first column shows means and ranges of modulation detection thresholds (MDTs) in quiet. The second column shows means and ranges of masked MDTs. The third column shows the amount of masking (masked MDTs minus MDTs in quiet). Correlation coefficients (r) for the across-site correlations between two ears on the psychophysical measure are shown in the upper right corner of each panel. Asterisks indicate statistically significant correlations between the two ears in the across-site patterns (p < 0.05). The ASMs of the left and right ears for the three measures are shown in the upper left corner of each panel. For each subject, the psychophysical measure chosen for site selection is indicated by text “MSS” shown at the lower right corner of the corresponding panel.

Experimental MAPs

Three experimental MAPs (A, B, and C) were constructed for each subject using the chosen psychophysical measure to select stimulation sites. Here, MAP refers to the set of stimulation parameters, including the speech processing strategy, level settings, SmartSound setting, sites selected for stimulation, and frequency allocation. The three experimental MAPs were identical to the subject’s clinical MAP except for site selection and frequency allocation. Examples of the site-selection strategies for the three experimental MAPs are shown in Fig. 2 using MDT data from S86. Note that for each ear, the stimulation sites are numbered 1 to 22 from base to apex. With all electrodes on and functioning, a site in one ear is considered to match the site with the same number in the opposite ear, despite a possible ear difference in insertion depth. This is based on the assumption that subjects with over a year of experience with bilateral implants had learned to match correspondingly numbered sites in the two ears on the basis of frequency allocation, as detailed in the Discussion section below.

Figure 2.

Schematic representation of site selection and frequency allocation for the three experimental MAPs. MDTs measured from S86 are shown above the implant diagrams. The filled symbols stand for the right ear, and the open ones stand for the left ear. The stimulation sites marked with squares were those that were turned off. In the implant diagrams, ellipses represent electrodes, with filled ones representing those that were turned off in the MAP. Open symbols represent electrodes that were used. Numbers in the open ellipses stand for the numbers designating the analysis bands assigned to the electrodes. In MAP A, for each stimulation site, the ear with better MDT was selected while the site on the opposite ear was turned off. In MAP B, the electrode array was divided into 5 segments. Within each segment, two sites with relatively poor MDT, each from a different ear, were turned off. In MAPs A and B, there were spectral “holes” in each ear at the stimulation sites that were turned off. However, these spectral contents were presented in the opposite ear. In MAP C, the same stimulation sites were turned off as in MAP B, but the frequencies of the electrode arrays in both implants were re-adjusted, so each implant contained 17 contiguous frequency bands with widened bandwidths.

In MAP A, for each of the 22 stimulation sites along the electrode array, the ear with the better acuity (lower MDT in this example) was selected, while the corresponding site in the contralateral ear was turned off. Frequency allocation was not adjusted after electrodes were turned off.¹ As a result, each implant transmitted only a subset of the channels, but the two subsets were complementary across ears. Combined across ears, the total number of spectral channels transmitted in this dichotic MAP was the same as in the clinical diotic MAP,² as was the frequency content allocated to each selected site. Thus, spectral resolution was preserved without requiring the listeners to adapt to a new frequency-place allocation or new analysis bandwidths. In addition, MAP A increased the mean spatial separation between active electrodes in each ear relative to the diotic case, because on average half of the electrodes in each implant were not stimulated.
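The MAP A rule can be sketched as a per-site comparison between ears. This is a minimal illustration with a hypothetical function name; it assumes lower scores are better (true for MDTs in dB and for the amount of masking), and the handling of sites missing in one ear (assigned to the other ear) and of ties (assigned to the left ear) are assumptions not stated in the text.

```python
def build_map_a(left, right, n_electrodes=22):
    """Assign each electrode to the ear with the better (lower) score.

    left, right: dict mapping electrode -> score for the criterion measure,
    lower is better. Sites without data in one ear (e.g., off in the clinical
    MAP) go to the other ear, and ties go to the left ear (assumptions).
    Returns (active_left, active_right) as sorted electrode lists.
    """
    active_left, active_right = [], []
    for site in range(1, n_electrodes + 1):
        l_score, r_score = left.get(site), right.get(site)
        if l_score is None and r_score is None:
            continue                      # not available in either ear
        if r_score is None or (l_score is not None and l_score <= r_score):
            active_left.append(site)
        else:
            active_right.append(site)
    return active_left, active_right


# Hypothetical four-electrode example (electrode 4 untested in the right ear).
map_a = build_map_a({1: -20.0, 2: -15.0, 3: -18.0, 4: -17.0},
                    {1: -18.0, 2: -19.0, 3: -18.0}, n_electrodes=4)
```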

In cases where the subject showed better acuity in one ear at most stimulation sites, as in the example at the top of Fig. 1 (15 sites in the right ear versus 7 in the left), the number of activated electrodes would differ considerably between the two ears, leading to an imbalance of loudness percept across ears; some subjects did report such an imbalance. MAP B was designed to avoid this limitation. In MAP B, the tonotopic axis was divided into 5 segments of 4 to 5 electrodes each (5 electrodes in the apical- and basal-end segments and 4 in each of the remaining 3 segments). Within each segment, the worst-performing site across both ears was turned off first. The worst-performing site in the opposite ear, excluding the site number already turned off, was then turned off second. Thus, each implant had 5 unstimulated sites, and a total of 10 different sites were turned off across the two ears. Again, the frequency allocation was not adjusted after electrodes were turned off, so the frequency content of a site turned off in one ear was transmitted by the corresponding site in the contralateral ear. This MAP created moderate spatial separation between electrodes and had the additional advantages of balancing, across ears, the number of activated stimulation sites and their distribution along the tonotopic axis.
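The segment-based MAP B rule can be sketched as follows. The function name and the example scores are hypothetical; lower scores are taken to be better, and electrodes missing from an ear’s data are simply treated as unavailable, which is an assumption.

```python
# Five tonotopic segments of 5, 4, 4, 4 and 5 electrodes (1 = base, 22 = apex).
SEGMENTS = (range(1, 6), range(6, 10), range(10, 14), range(14, 18), range(18, 23))


def build_map_b(left, right, segments=SEGMENTS):
    """Return the electrodes turned off in each ear for MAP B.

    left, right: dict mapping electrode -> score (lower is better). In each
    segment the worst site across both ears is turned off first; then the
    worst site in the opposite ear, excluding that electrode number, is
    turned off. Electrodes missing from a dict are treated as unavailable.
    """
    scores = {"L": left, "R": right}
    off = {"L": set(), "R": set()}
    for segment in segments:
        candidates = [(scores[ear][s], ear, s)
                      for ear in ("L", "R") for s in segment if s in scores[ear]]
        _, first_ear, first_site = max(candidates)
        off[first_ear].add(first_site)
        other = "R" if first_ear == "L" else "L"
        remaining = [(scores[other][s], s)
                     for s in segment if s in scores[other] and s != first_site]
        off[other].add(max(remaining)[1])
    return off


# Hypothetical scores: worse toward the apex, and slightly worse in the right ear.
left_scores = {s: float(s) for s in range(1, 23)}
right_scores = {s: s + 0.5 for s in range(1, 23)}
map_b_off = build_map_b(left_scores, right_scores)
```

Because the second site is always drawn from the opposite ear and excludes the first site’s number, each ear loses exactly one site per segment and no site is turned off in both ears.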

It is common for a subject to have one or more electrodes turned off in the clinical MAP because of shorted electrodes or discomfort. A difference in the number of activated electrodes between ears results in mismatched analysis bands across ears in the subject’s diotic clinical MAP. This might compromise the benefit of dichotic MAPs A and B, because the sites turned off in one ear would then not be precisely complemented by the opposite ear. MAP C was therefore created as an alternative to MAP B. It used the same sites as MAP B, but the frequencies were reallocated across the selected sites along the whole array so that each implant transmitted 17 contiguous but widened frequency bands (note the distribution of the analysis bands in the diagram for MAP C in Fig. 2).
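The reallocation in MAP C can be illustrated by dividing one overall analysis range into as many contiguous bands as there are active electrodes. The sketch below is only an illustration: the function name is hypothetical, logarithmic spacing is a swapped-in simplification, and the 188 to 7938 Hz defaults are placeholder values rather than the frequency tables actually used in the subjects’ MAPs.

```python
def reallocate_bands(active_sites, f_low=188.0, f_high=7938.0):
    """Divide [f_low, f_high] into contiguous log-spaced bands, one per active site.

    The lowest band goes to the most apical active electrode (highest number).
    Log spacing and the default edge frequencies are placeholders.
    """
    n = len(active_sites)
    edges = [f_low * (f_high / f_low) ** (k / n) for k in range(n + 1)]
    apex_to_base = sorted(active_sites, reverse=True)
    return {site: (edges[i], edges[i + 1]) for i, site in enumerate(apex_to_base)}


# Hypothetical left-ear MAP C: 22 electrodes minus 5 turned off as in MAP B.
off_left = {4, 8, 12, 16, 21}
bands = reallocate_bands([s for s in range(1, 23) if s not in off_left])
```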

Selected electrodes for MAPs A and B are listed for each subject in Table III. Electrodes chosen for MAP C were identical to those for MAP B.

TABLE III.

Selected electrodes for MAPs A and B. The “x” indicates that the electrode was not activated. MAP C utilized the same electrodes as MAP B.

MAP A

| Electrode | S52 L | S52 R | S60 L | S60 R | S69 L | S69 R | S81 L | S81 R | S86 L | S86 R | S88 L | S88 R | S89 L | S89 R | S90 L | S90 R |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | x | 1 | 1 | x | 1 | x | 1 | x | 1 | x | 1 | 1 | ||||
| 2 | 2 | 2 | x | x | 2 | x | 2 | x | 2 | 2 | 2 | 2 | ||||
| 3 | 3 | x | x | 3 | x | 3 | x | 3 | 3 | x | 3 | 3 | x | x | 3 | |
| 4 | 4 | x | x | 4 | 4 | x | x | 4 | 4 | x | x | 4 | x | 4 | 4 | x |
| 5 | x | 5 | 5 | x | 5 | x | 5 | x | 5 | x | 5 | x | 5 | x | x | 5 |
| 6 | x | 6 | 6 | x | 6 | x | x | 6 | 6 | x | x | 6 | 6 | x | 6 | x |
| 7 | 7 | x | 7 | x | 7 | x | 7 | 7 | x | x | 7 | 7 | x | x | 7 | |
| 8 | 8 | x | x | 8 | x | 8 | x | 8 | x | 8 | x | 8 | 8 | x | x | 8 |
| 9 | x | 9 | 9 | x | x | 9 | x | 9 | x | 9 | x | 9 | 9 | x | 9 | x |
| 10 | 10 | x | 10 | x | x | 10 | x | 10 | x | 10 | x | 10 | 10 | x | x | 10 |
| 11 | 11 | x | 11 | x | x | 11 | x | 11 | x | 11 | 11 | x | 11 | x | x | 11 |
| 12 | 12 | x | x | 12 | 12 | x | x | 12 | x | 12 | x | 12 | 12 | x | 12 | x |
| 13 | x | 13 | 13 | x | 13 | x | x | 13 | x | 13 | 13 | x | 13 | x | 13 | x |
| 14 | 14 | x | 14 | x | 14 | x | 14 | x | 14 | x | x | 14 | 14 | x | x | 14 |
| 15 | 15 | x | x | 15 | x | 15 | x | 15 | 15 | x | x | 15 | 15 | x | 15 | x |
| 16 | 16 | x | 16 | x | x | 16 | x | 16 | 16 | x | x | 16 | 16 | x | x | 16 |
| 17 | x | 17 | 17 | x | x | 17 | 17 | x | 17 | x | x | 17 | 17 | x | 17 | x |
| 18 | x | 18 | 18 | x | x | 18 | 18 | x | 18 | x | x | 18 | 18 | x | 18 | x |
| 19 | x | 19 | 19 | x | x | 19 | 19 | 19 | x | x | 19 | x | 19 | x | 19 | |
| 20 | x | 20 | x | 20 | x | 20 | 20 | 20 | x | x | 20 | 20 | x | 20 | x | |
| 21 | 21 | x | 21 | x | 21 | 21 | x | 21 | x | 21 | x | 21 | x | 21 | x | |
| 22 | x | 22 | 22 | 22 | 22 | x | 22 | x | 22 | x | 22 | x | x | 22 | ||

MAP B

| Electrode | S52 L | S52 R | S60 L | S60 R | S69 L | S69 R | S81 L | S81 R | S86 L | S86 R | S88 L | S88 R | S89 L | S89 R | S90 L | S90 R |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 1 | 1 | 1 | 1 | 1 | x | 1 | x | 1 | 1 | x | 1 | ||||
| 2 | x | 2 | 2 | x | 2 | x | 2 | x | 2 | 2 | x | 2 | ||||
| 3 | 3 | 2 | x | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | 3 | x | x | 3 | |
| 4 | 4 | x | 4 | x | 4 | 4 | 4 | 4 | 4 | x | 4 | 4 | 4 | 4 | 4 | x |
| 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | 5 | x | 5 | 5 | 5 | 5 |
| 6 | 6 | 6 | 6 | x | 6 | x | 6 | 6 | 6 | x | 6 | x | 6 | 6 | 6 | x |
| 7 | 7 | x | 7 | 7 | 7 | 7 | 7 | 7 | 7 | 7 | 7 | 7 | 7 | x | 7 | |
| 8 | 8 | x | 8 | 8 | 8 | 8 | x | 8 | x | 8 | 8 | 8 | 8 | x | 8 | 8 |
| 9 | x | 9 | 9 | 9 | x | 9 | 9 | x | 9 | 9 | x | 9 | x | 9 | 9 | 9 |
| 10 | 10 | x | 10 | 10 | 10 | 10 | x | 10 | 10 | x | 10 | 10 | 10 | 10 | 10 | 10 |
| 11 | 11 | 11 | 11 | x | x | 10 | 11 | 11 | x | 11 | 11 | x | 11 | 11 | x | 11 |
| 12 | 12 | 12 | x | 12 | 12 | 11 | 12 | 12 | 12 | 12 | x | 12 | 12 | x | 12 | x |
| 13 | x | 13 | 13 | 13 | 13 | x | 13 | x | 13 | 13 | 13 | 13 | x | 13 | 13 | 13 |
| 14 | 14 | 14 | 14 | 14 | 14 | 13 | 14 | x | 14 | 14 | 14 | x | 14 | x | 14 | 14 |
| 15 | 15 | x | x | 15 | 15 | 14 | x | 15 | 15 | 15 | x | 15 | 15 | 15 | 15 | x |
| 16 | 16 | 16 | 16 | 16 | 16 | x | 16 | 16 | x | 16 | 16 | 16 | x | 16 | x | 16 |
| 17 | x | 17 | 17 | x | x | 16 | 17 | 17 | 17 | x | 17 | 17 | 17 | 17 | 17 | 17 |
| 18 | x | 18 | 18 | x | 18 | x | x | 18 | x | 18 | 18 | 18 | 18 | x | 18 | x |
| 19 | 19 | 19 | 19 | 19 | 19 | 18 | 19 | 19 | x | x | 19 | 19 | 18 | 19 | 19 | |
| 20 | 20 | 20 | x | 20 | 21 | 19 | 20 | 20 | 20 | 20 | 20 | 21 | 20 | 20 | 20 | |
| 21 | 21 | 21 | 21 | x | 20 | 21 | x | 21 | 21 | 21 | x | 21 | 21 | 21 | 21 | |
| 22 | 22 | x | 22 | 21 | 22 | 22 | 22 | 22 | 22 | 22 | x | 22 | x | 22 | ||

Speech recognition tests

Speech tests consisted of phoneme recognition tests in quiet and in noise and a test that measured speech reception thresholds (SRTs) for sentences in amplitude-modulated noise. The speech stimuli were presented in a double-walled sound-attenuating booth through a loudspeaker positioned about 1 m from the subject at 0° azimuth. The levels of the speech stimuli were calibrated, and the stimuli were presented at approximately 60 dB SPL.

Consonant and vowel recognition were measured in quiet and in speech-shaped noise at signal-to-noise ratios (SNRs) of 5 dB and 0 dB. Consonant materials were taken from Shannon et al. (1999) and presented in a consonant-/a/ context; vowel materials were taken from Hillenbrand et al. (1995) and presented in an /h/-vowel-/d/ context.

SRTs were obtained using an adaptive-tracking procedure. Each stimulus consisted of a target CUNY sentence (Boothroyd et al., 1985) presented with broadband noise that was amplitude modulated by a 4 Hz sinusoid. The SNR was set at 20 dB at the beginning of the test and was lowered by 2 dB if the subject correctly repeated the sentence, or raised by 2 dB if the subject missed any words. On each trial, after the SNR was adjusted according to the subject’s response, the mixed signal (sentence + noise) was normalized to its peak amplitude. This one-down, one-up procedure converges on the 50%-correct point of the psychometric function. The threshold was taken as the mean of the SNRs at the last 6 of a total of 12 reversals.
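The SRT procedure can be sketched as a stimulus-mixing step plus a one-down, one-up track. The sketch below uses NumPy; the function names are hypothetical, the noise is Gaussian with a 100% modulation depth (the text gives only the 4 Hz rate), the SNR is defined on RMS levels (an assumption), and the listener is abstracted as a `respond(snr)` callback that returns True when every word is repeated correctly.

```python
import numpy as np


def am_noise(n_samples, fs, fm_hz=4.0, rng=None):
    """Broadband Gaussian noise, amplitude modulated by a 4 Hz sinusoid.

    A 100% modulation depth is an assumption.
    """
    rng = np.random.default_rng() if rng is None else rng
    t = np.arange(n_samples) / fs
    return rng.standard_normal(n_samples) * (1.0 + np.sin(2 * np.pi * fm_hz * t))


def _rms(x):
    return np.sqrt(np.mean(np.square(x)))


def mix_at_snr(speech, noise, snr_db):
    """Scale noise to the requested RMS-based SNR, add it, then peak-normalize."""
    gain = _rms(speech) / (_rms(noise) * 10 ** (snr_db / 20))
    mix = speech + gain * noise
    return mix / np.max(np.abs(mix))


def run_srt_track(respond, start_snr=20.0, step_db=2.0, n_reversals=12,
                  n_avg=6, max_trials=200):
    """One-down, one-up SNR track; returns the mean SNR at the last n_avg reversals."""
    snr, direction, reversals, trials = start_snr, 0, [], 0
    while len(reversals) < n_reversals:
        if trials == max_trials:
            raise RuntimeError("track did not reach the required reversals")
        trials += 1
        new_direction = -1 if respond(snr) else +1
        if direction and new_direction != direction:
            reversals.append(snr)       # the track changed direction here
        direction = new_direction
        snr += direction * step_db
    return sum(reversals[-n_avg:]) / n_avg


# Usage with a hypothetical listener who succeeds at SNRs of 5 dB or more.
srt_db = run_srt_track(lambda snr: snr >= 5.0)
```

Peak normalization rescales speech and noise together, so it changes the overall level but not the SNR set on that trial.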

Prior to testing, the subjects were familiarized with the speech materials and testing procedure. SRTs were first measured without training for each ear alone using the subject’s everyday-use clinical MAP, in order to determine the psychophysical measure for site selection as described above. Phoneme recognition and SRTs were then measured for the three experimental MAPs and the subject’s clinical diotic MAP in random order. Training was provided only the first time each experimental MAP was tested. Training for the phoneme recognition test consisted of one repetition of the test, with feedback, in each of the three listening conditions (quiet, 5 dB SNR, and 0 dB SNR). The SRT test was run once, without feedback, for training. For actual testing, phoneme recognition and SRTs were measured twice for each MAP without feedback, and the mean scores were used.

RESULTS

MDTs in quiet, masked MDTs, and the amount of masking (masked MDTs minus MDTs in quiet) for the left and right ears are shown for individual subjects in Fig. 1. Data were not collected at stimulation sites that were turned off in the subject’s everyday-use clinical MAP, so those sites are not included in the psychophysical assessment.

Across-ear correlations and differences

For each subject, psychophysical data were compared between the two ears to determine whether the two ears showed similar across-site patterns. Significant across-ear correlations were observed for MDTs in quiet and masked MDTs for 6 of the 8 subjects. Correlation coefficients are shown in each panel of Fig. 1. The exceptions were S90, for whom neither MDTs in quiet nor masked MDTs were significantly correlated between ears; S81, for whom MDTs in quiet were not significantly correlated between ears; and S88, for whom masked MDTs were not significantly correlated between ears. Interestingly, the amount of masking was not significantly correlated between ears for any of the subjects.

The ASM ear differences in each of the three psychophysical measures were tested for a significant difference from zero (Table II). Twenty-one of the 24 ASM ear differences were significantly different from zero. Ear differences were also present in demographic variables such as duration of deafness. The ear differences in duration of deafness prior to implantation were in the same direction as the ear differences in the amount of masking in 6 of the 8 subjects, but in the same direction as the ear differences in modulation sensitivity in only 3 subjects.

Across-ear and across-site correlations among the psychophysical measures

The relationships between the three psychophysical measures were examined across the 16 ears, and separately for the left and right ears. Results for the 16 ears pooled as a group are reported below, because they were the same as those for the left and right ears analyzed separately. The ASMs of MDTs in quiet and with a masker showed a strong positive correlation across ears (r = 0.99, p < 0.0001). The ASMs of the modulation sensitivity measures either in quiet or with a masker were negatively correlated with the amount of masking (r = −0.83, p = 0.0001; r = −0.76, p = 0.0006) (Fig. 3). The ASVs of the three measures showed positive correlations with one another. Thus, ears that showed high ASV on one measure tended to show high ASV on all measures (Fig. 3), although the correlation was not significant for MDT in quiet versus the amount of masking after a Bonferroni correction.
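With three pairwise correlations tested for each summary statistic, a Bonferroni correction divides the significance criterion by 3 (0.05 / 3, about 0.0167). The sketch below applies that rule. The p values are invented for illustration and are not the values obtained in this study, and `bonferroni_significant` is a hypothetical helper name.

```python
def bonferroni_significant(p_values, alpha=0.05):
    """Flag which tests remain significant after a Bonferroni correction.

    p_values maps a test label to its uncorrected p value; each p value is
    compared with alpha divided by the number of tests in the family.
    """
    criterion = alpha / len(p_values)
    return {label: p < criterion for label, p in p_values.items()}


# Hypothetical uncorrected p values for the three ASV pairings.
example = {
    "quiet vs masked": 0.001,
    "quiet vs amount of masking": 0.03,
    "masked vs amount of masking": 0.004,
}
print(bonferroni_significant(example))
```

A correlation with an uncorrected p of 0.03 would count as significant on its own but not after the correction, which is the kind of outcome described for MDT in quiet versus the amount of masking.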

Figure 3.

Scatter plots showing the correlations between pairings of the three psychophysical measures. The left column shows correlations of the across-site means (ASMs), and the right column shows correlations of the across-site variances (ASVs), for each pairing of the three measures. The three rows show correlations between MDTs in quiet and masked MDTs, between MDTs in quiet and the amount of masking, and between masked MDTs and the amount of masking, respectively. Symbols denote different subjects; filled symbols represent right ears and open symbols represent left ears. Regression lines represent linear fits to the data. Correlation coefficients (r) and p values are shown in each panel.

The relationships among the three measures were also examined within ears to determine whether these measures varied in correlated patterns across stimulation sites. MDTs in quiet and masked MDTs were highly positively correlated across stimulation sites for all 16 ears tested (all p < 0.05). Although the masker usually elevated the MDT at the probe electrode, the effect of masking was larger for some sites than for others. This non-uniform effect of masking is most clearly seen in the across-site patterns of the amount of masking (Fig. 1, right column). The negative correlation between the amount of masking and MDTs observed across ears, reported above, was also observed across stimulation sites in 3 of the 16 ears (all p < 0.05), whereas the other 13 ears showed no significant correlation (all p > 0.05).

Correlation between psychophysics and speech recognition

Correlations between psychophysical performance and speech recognition were examined across subjects. The ASM of each psychophysical measure was correlated with the SRTs obtained with each of the 16 ears alone. None of the ASMs showed a significant correlation with SRTs, with or without controlling for pre-implant duration of deafness (p > 0.05). Across the 8 subjects, SRTs obtained with bilateral stimulation or averaged across the two ears did not correlate with psychophysical acuity averaged across the two ears (p > 0.05 in all cases). Also, the ear differences in SRT did not correlate with the ear differences in any of the three psychophysical measures across subjects (p > 0.05 in all cases). Because previous studies that established a relationship between modulation detection sensitivity and speech recognition across subjects typically measured MDT at a single site (Cazals et al., 1994; Fu, 2002; Luo et al., 2008), the correlations were repeated using regional means, i.e., psychophysical data averaged within each of the five tonotopic segments. Again, none of the regional measures in any of the five tonotopic segments correlated significantly with speech recognition across subjects.

Benefit of individually customized stimulation MAPs

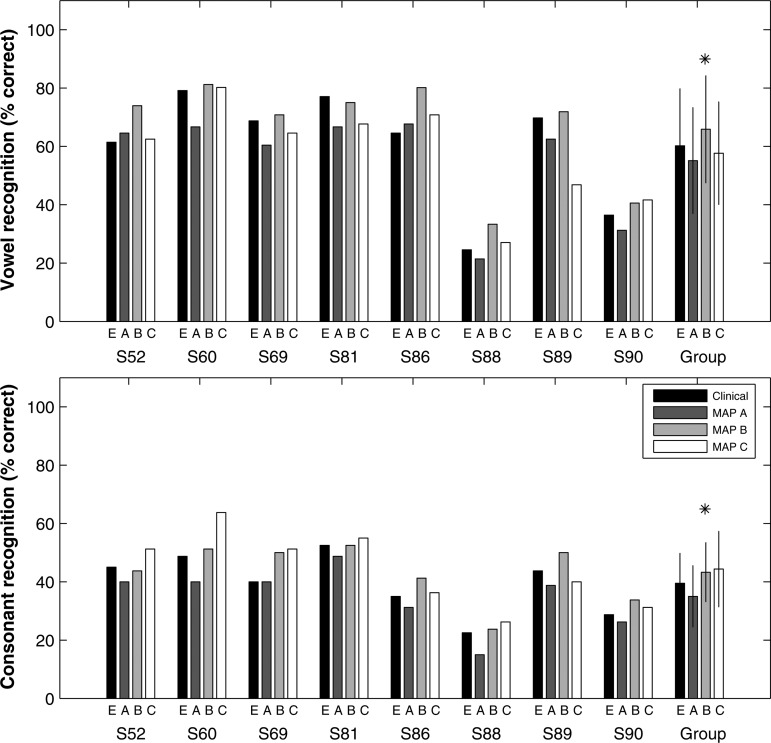

Group mean vowel and consonant recognition scores for the four MAPs are summarized in Fig. 4 as a function of SNR.

Figure 4.

Phoneme recognition using four MAPs as a function of SNR. Group mean (left) vowel and (right) consonant recognition with the subjects’ everyday-use clinical MAP and the three experimental MAPs, shown as a function of SNR. Performance for different MAPs is shown with different symbols. Error bars represent ±1 standard deviation.

For vowel recognition, results of a two-way repeated-measures ANOVA showed a significant effect of SNR [F (2, 14) = 37.78, p < 0.001] and MAP [F (3, 21) = 4.02, p = 0.02]. The interaction between the two factors was also significant [F (6, 42) = 4.44, p < 0.005], indicating that the difference between the MAPs was larger at some SNRs than at others. Paired comparisons indicated that at 0 dB SNR, experimental MAP B produced significantly higher group mean vowel recognition than the everyday-use clinical MAP (MAP E) and the other two experimental MAPs (MAPs A and C) (all p < 0.05). Individual data for the 0 dB SNR condition are shown in Fig. 5 (top). For 7 of the 8 subjects, one or more experimental MAPs improved vowel recognition relative to the everyday-use clinical MAP. Of these 7 subjects, 6 benefited most from MAP B.
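The degrees of freedom reported for these ANOVAs follow directly from the design (8 subjects, 3 SNRs, 4 MAPs). The hypothetical helper below assumes a fully within-subjects design with no sphericity correction; under that assumption it reproduces the F (2, 14), F (3, 21), and F (6, 42) values above.

```python
def rm_anova_df(n_subjects, levels_a, levels_b):
    """Degrees of freedom for a two-way repeated-measures ANOVA.

    Returns (numerator df, error df) for factor A, factor B, and the A x B
    interaction, assuming every subject is tested in every cell and no
    sphericity correction is applied.
    """
    s = n_subjects - 1
    df_a, df_b = levels_a - 1, levels_b - 1
    df_ab = df_a * df_b
    return {
        "A": (df_a, df_a * s),
        "B": (df_b, df_b * s),
        "A x B": (df_ab, df_ab * s),
    }


# 8 subjects, 3 SNRs (factor A), 4 MAPs (factor B).
print(rm_anova_df(8, 3, 4))
```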

Figure 5.

Individual and group mean (top) vowel recognition and (bottom) consonant recognition at 0 dB SNR. Error bars represent ±1 standard deviation. On the x axis, the MAP letters are shown for each subject, with the letter E representing the subject’s everyday-use clinical MAP. The asterisk indicates that MAP B produced significantly better group mean performance than the subjects’ everyday-use clinical MAP.

For consonant recognition, results of a two-way repeated-measures ANOVA showed a significant effect of SNR [F (2, 14) = 153.81, p < 0.001] and MAP [F (3, 21) = 15.32, p < 0.001]. The interaction between the two factors, however, was not significant [F (6, 42) = 0.52, p = 0.79]. As for vowel recognition, paired comparisons indicated that at 0 dB SNR, only experimental MAP B produced significantly better group mean scores than the clinical MAP [t (7) = 2.80, p = 0.026]. Although performance with MAP C was comparable to that with MAP B [t (7) = −0.44, p = 0.67], it was not significantly better than with the clinical MAP [t (7) = 2.32, p = 0.053]. Individual data for the 0 dB SNR condition are shown in Fig. 5 (bottom). For S86, S89, and S90, the largest improvement relative to the clinical MAP was achieved with MAP B, while for the rest of the group, the largest improvement was achieved with MAP C.
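The t (7) statistics above come from paired comparisons across the 8 subjects, so the degrees of freedom are n − 1 = 7. A minimal sketch of the paired t statistic is shown here with made-up scores and the hypothetical helper name `paired_t`; obtaining p values would additionally require the t distribution (for example via `scipy.stats.ttest_rel`), which is omitted to keep the example dependency-free.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(scores_a, scores_b):
    """Paired t statistic and degrees of freedom for two conditions.

    scores_a and scores_b hold one score per subject, in the same subject
    order; the test is computed on the per-subject differences.
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("each subject needs a score in both conditions")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))  # mean difference / its standard error
    return t, n - 1


# Hypothetical percent-correct scores for 8 subjects with two MAPs.
map_b = [51, 53, 52, 54, 53, 55, 52, 54]
clinical = [50] * 8
t, df = paired_t(map_b, clinical)
print(round(t, 2), df)
```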

Individual and group mean SRTs for CUNY sentences in amplitude-modulated noise are shown in Fig. 6. Note that for this test, lower SRTs are better because they indicate the ability to recognize sentences at lower, more challenging, signal to noise ratios. Consistent with the phoneme recognition results, the group mean SRT for MAP B was significantly better than that for the everyday-use clinical MAP [t (7) = 3.76, p = 0.007]. In contrast to the phoneme recognition results, which showed essentially no benefit of MAP A at 0 dB SNR, 4 of the 8 subjects showed improved sentence recognition in noise with MAP A relative to the clinical MAP. Because half of the electrodes were turned off in MAP A, it produced the largest mean spatial separation between electrodes among the three experimental MAPs. A control MAP was tested for the four subjects who benefited from MAP A. This control dichotic MAP assigned the odd-indexed analysis bands to the left implant and the even-indexed bands to the right implant (the Odd/Even MAP). It created the same mean electrode separation as MAP A but did not take the sites’ psychophysical acuity into consideration. These subjects (S52, S60, S69, and S86) did not appear to benefit from the Odd/Even MAP; rather, their SRTs were worse than with their everyday-use clinical MAP. Although some subjects improved over the clinical MAP with more than one experimental MAP, the best performance, indicated by a labeled arrow in Fig. 6, was obtained with MAP B in 6 of the 8 subjects and in the group statistics. Vowel and consonant recognition scores at 0 dB SNR were highly correlated with the subjects’ SRTs (p < 0.001).
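The Odd/Even control assignment is simple enough to state as code. The sketch below assumes, for illustration only, that analysis band k drives electrode k in each ear and that there are 22 bands; both the band count and the helper names are hypothetical. It shows why this MAP yields a uniform 2-electrode spacing in each ear regardless of site acuity, whereas an acuity-based selection such as MAP A can produce irregular spacing.

```python
def odd_even_map(n_bands):
    """Split bands 1..n_bands: odd bands to the left implant, even to the right."""
    bands = range(1, n_bands + 1)
    left = [b for b in bands if b % 2 == 1]
    right = [b for b in bands if b % 2 == 0]
    return left, right


def mean_separation(active_electrodes):
    """Mean distance, in electrode positions, between neighboring active electrodes."""
    ordered = sorted(active_electrodes)
    gaps = [hi - lo for lo, hi in zip(ordered, ordered[1:])]
    return sum(gaps) / len(gaps)


left, right = odd_even_map(22)  # 22 bands is an assumed, illustrative count
print(mean_separation(left), mean_separation(right))
# A hypothetical acuity-based selection with uneven gaps:
print(mean_separation([1, 2, 5, 9]))
```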

Figure 6.

Individual and group mean speech reception thresholds (SRTs). SRTs represent the signal to noise ratio required for a listener to repeat 50% of the sentences accurately. Lower scores indicate better ability to recognize sentences in noise. For subjects who benefited from MAP A, an additional control MAP (Odd/Even) was tested and results are shown in the fifth (open) bar. For each subject, the best MAP is indicated by a labeled arrow. In the group data, the asterisk indicates statistically significantly lower SRTs using MAP B compared to the SRTs using the subjects’ clinical MAP.

DISCUSSION

This study examined modulation detection sensitivity and channel interaction for individual stimulation sites along the tonotopic axis and their importance for speech recognition in 8 bilaterally implanted subjects. Three experimental MAPs were created on an individual basis. Stimulation sites for these MAPs were selected so as to reduce the number of sites with poor psychophysical acuity. The effectiveness of these site-selection strategies in improving speech recognition was evaluated for each MAP.

Psychophysical acuity

Modulation detection sensitivity was measured at each available stimulation site, and the measurement was repeated with an un-modulated masker. Overall, the masker on the adjacent site reduced modulation detection sensitivity, consistent with previous studies that used un-modulated or modulated maskers (Richardson et al., 1998; Chatterjee, 2003; Garadat et al., 2012). The amount of masking, i.e., the elevation of the modulation detection threshold in the presence of the masker, was larger for some stimulation sites than for others. Although the masking effect varied across sites, it was small relative to the across-site variation in MDT, so the masker did not appreciably alter the overall across-site patterns of the MDT functions. Consequently, masked MDTs varied across stimulation sites and across ears in a pattern correlated with MDTs in quiet (Figs. 1–3).

The negative correlation between the amount of masking and the MDT measures, observed across ears and in some cases across stimulation sites, was mathematically driven by the positive correlation between the two MDT measures. The amount of masking is simply the difference between MDTs with and without a masker. When the slope of the relationship between masked MDTs and MDTs in quiet is less than 1, this difference necessarily shrinks as the MDTs increase, so each MDT measure is forced to vary in the opposite direction from the amount of masking.
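The coupling argument can be checked numerically. In this sketch the MDT values are hypothetical: masked MDTs are generated as 5 + 0.8 × (MDT in quiet) plus small fixed deviations, so the slope is below 1 by construction. No masking mechanism is modeled at all, yet the amount of masking comes out negatively correlated with both MDT measures. `pearson_r` is a plain textbook Pearson correlation written out to avoid dependencies.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


# Hypothetical MDTs in quiet (dB) for five ears or sites.
mdt_quiet = [-30.0, -25.0, -20.0, -15.0, -10.0]
# Masked MDTs rise with MDTs in quiet, but with a slope of 0.8 (< 1),
# plus small fixed deviations so the relation is not perfectly linear.
jitter = [0.5, -0.3, 0.2, -0.4, 0.1]
mdt_masked = [5.0 + 0.8 * q + j for q, j in zip(mdt_quiet, jitter)]
# Amount of masking = masked MDT minus MDT in quiet.
amount = [m - q for m, q in zip(mdt_masked, mdt_quiet)]

r_quiet_masked = pearson_r(mdt_quiet, mdt_masked)   # strongly positive
r_amount_quiet = pearson_r(amount, mdt_quiet)       # negative
r_amount_masked = pearson_r(amount, mdt_masked)     # negative
print(r_quiet_masked, r_amount_quiet, r_amount_masked)
```

Algebraically, if masked = a + b × quiet with b < 1, then amount = a + (b − 1) × quiet, which decreases as either MDT measure increases.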

Amplitude modulation detection is presumably strongly related to intensity resolution, because modulation detection relies on a listener’s ability to detect amplitude fluctuations in the envelope of a signal (Wojtczak and Viemeister, 1999; Galvin and Fu, 2009). Neural processing of amplitude-modulated acoustic and electrical signals is based on a temporal mechanism in which spike timing in the auditory nerve encodes the modulation, with the magnitude of synchronization varying with modulation depth, modulation frequency, and stimulus level (Joris and Yin, 1992; Joris et al., 2004; Goldwyn et al., 2010). The amount of masking, although not completely independent of the MDT measures, is probably determined by the interplay of several factors that are primarily spatial. Modulation detection interference decreases as the spatial separation between the probe and masker electrodes increases (Richardson et al., 1998), which indicates that the neural excitation produced by adjacent electrodes overlaps spatially. Psychophysical studies have commonly described spatial interaction between channels in terms of threshold elevation in the presence of maskers located close to the probe on the array, as in forward-masked excitation patterns (Chatterjee and Shannon, 1998; Cohen et al., 2003), across-channel gap detection (Hanekom and Shannon, 1998), or shifts in absolute detection threshold in the presence of a masker interleaved on adjacent electrodes (Bierer, 2007). Spatial interaction between channels results from factors including the distance between the electrode and the nerve fibers and the density of surviving nerve fibers (Long et al., 2010; Bierer, 2007). Large current spread, which leads to channel interaction, is thought to be more likely at stimulation sites with sparse nerve fibers or a poor electrode-neuron interface than at sites close to the modiolus with a high density of nerve fibers.

Studies have suggested that the density of nerve fibers is closely related to the duration of hearing loss in the deafened ear (e.g., Nadol et al., 1989); auditory nerve fibers degenerate over time without the trophic support provided by hair cells. Subjects in the present study differed between ears in duration of deafness, and interestingly, for 6 of the 8 subjects, the ear with the longer duration of deafness also showed a larger amount of channel interaction but did not necessarily show poorer modulation detection. A larger sample would be needed to confirm the generalizability of these observations.

Psychophysical acuity and speech recognition

Previous studies have provided evidence that modulation detection sensitivity measured at single stimulation sites predicts speech recognition across implant users (Cazals et al., 1994; Fu, 2002; Luo et al., 2008). In contrast, our data from 16 ears did not support such a relationship, whether MDTs were averaged across all stimulation sites or across restricted tonotopic regions. Nor was speech recognition across ears related to channel interaction. Speech recognition performance is undoubtedly influenced by cognitive ability and other subject variables in addition to psychophysical acuity, and the relationship between psychophysical and speech recognition data can be difficult to interpret when these confounding variables are not controlled in across-subject designs. A relationship that is observed in some samples but not in others is probably not generalizable to the population of all cochlear implant listeners. In the within-subject design used in the current study, where ear differences in speech recognition were compared with ear differences in ASM psychophysical performance within the same subject, the effects of across-subject differences in cognitive and other subject variables were minimized.

However, the direction of the ASM ear difference in a psychophysical measure is by no means sufficient for establishing that measure’s relationship with speech recognition, since by chance alone each measure had a 50% probability of agreeing in direction with the ear difference in speech recognition. Within-subject site-selection experiments such as those used here and by Garadat et al. (2011, 2012), by contrast, serve to test whether the selected measures are indeed important for speech recognition.
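The 50% chance argument can be made concrete with a one-sided sign (binomial) test. For example, the ear differences in duration of deafness agreed in direction with the ear differences in the amount of masking in 6 of 8 subjects; if each agreement had a 50% chance, at least 6 of 8 agreements would occur by chance with probability 37/256, about 0.14. This calculation is offered only as an illustration and is not part of the reported analyses; `sign_test_p` is a hypothetical name.

```python
from math import comb


def sign_test_p(k, n, p=0.5):
    """One-sided probability of k or more agreements out of n by chance.

    Each of the n subjects independently agrees in direction with
    probability p (0.5 under the chance hypothesis).
    """
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))


print(sign_test_p(6, 8))  # prints 0.14453125, i.e., 37/256
```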

The results of these within-subject studies suggest that both spatial and temporal acuity at individual stimulation sites in the multisite prosthesis significantly affect speech recognition. However, in these studies, the across-subject differences in speech recognition were often larger than the speech-recognition differences created within subjects by the experimental MAPs (e.g., Figs. 5 and 6). This suggests that across-subject differences, such as cognitive variables, also play a large role in the benefit that subjects receive from their prostheses.

Spectral fusion

The principle of the site-selection strategies was to improve mean psychophysical performance by turning off stimulation sites that showed poor acuity. Dichotic MAPs were created to preserve frequency resolution despite the removal of stimulation sites: the frequency band of an electrode that was turned off in one ear could be conveyed by the corresponding site in the opposite ear. This approach assumes that the complementary spectral information in the two ears can be correctly integrated centrally to form a cohesive auditory image, a process known as spectral fusion (Cutting, 1976). Studies have shown that spectral fusion can be disrupted by differences between the dichotic signals in onset timing (Broadbent, 1955) or fundamental frequency (Broadbent and Ladefoged, 1957), or by coarse spectral resolution of the original signal (Loizou et al., 2003). None of these detrimental factors was introduced by the two dichotic MAPs (MAPs A and B) used here. MAP A, however, could produce an imbalance in the distribution of the activated electrodes, leading to an imbalance in signal intensity between ears. Experiments on dichotic listening have provided evidence that a 5 dB ear difference in intensity is sufficient to cause a reversal in ear advantage. With larger intensity differences, speech perception relies entirely on the un-attenuated ear (Cullen et al., 1974). Subjects whose better-performing sites were located mostly in one ear, for example S89 (Fig. 1) and S88, did report a large difference in loudness between ears when using MAP A. This imbalance may have prevented these listeners from using information from the ear that received considerably less power, which could explain the lack of benefit from this MAP for some listeners. There was a trend toward a negative correlation between performance with MAP A and the degree of imbalance, although the trend did not reach statistical significance.

Cohesive fusion could also be affected by binaural frequency-place mismatch due to differences in the insertion depths of the two implants. Acoustic simulation studies have indicated that when two implants transmit complementary spectral information of a speech signal but are not aligned tonotopically, the order of the analysis bands can be disrupted, leading to incorrectly represented or reversed spectral peaks in the integrated sound (Siciliano et al., 2010). However, there is also evidence that the central auditory system of normal-hearing listeners as well as cochlear implant listeners can adapt to frequency-place mismatch with experience (Rosen et al., 1999; Fu and Galvin, 2003; Fu et al., 2002; Faulkner, 2006; Reiss et al., 2011). Reiss et al. (2011) showed that, over time, the pitch evoked at a tonotopic place becomes closer to the frequency allocated by the speech processor than to the characteristic frequency of the place of stimulation. Speech recognition results from the present study suggest that frequency-place mismatch did not impair fusion of the dichotic signals, since our subjects achieved better or equivalent speech recognition with one or both dichotic MAPs compared with their diotic clinical MAP. Note that the two dichotic MAPs (A and B) did not alter the frequency allocations used for each ear in the clinical MAP. Because all of the listeners in our experiments had at least one year of experience with their two implants, they had probably already adapted to any mismatch while using their clinical MAP.

Efficacy of the experimental MAPs

The group data suggest that MAP B provided significantly better phoneme recognition at a very difficult SNR (0 dB) than the subjects’ clinical MAP. With MAP B, the listeners could also understand spoken sentences at a significantly more challenging (lower) SNR than with their everyday-use clinical MAP. Each subject showed improved speech recognition relative to the clinical MAP with at least one experimental MAP. Although the magnitude of improvement that each MAP provided was highly subject dependent, the majority of the subjects benefited most from MAP B for sentence recognition and vowel recognition in noise.

All three experimental MAPs removed sites with relatively poor acuity, so they all improved the mean psychophysical performance for each ear and for the two ears combined. Among the three MAPs, MAP A created the largest mean spatial separation among stimulation sites because half of the electrodes were not used. However, removing half of the electrodes compromised the summation effect important for binaural benefit (Litovsky et al., 2006), because no spectral information was repeated in the second ear. In addition, the distribution of the activated electrodes in MAP A depended on the pattern of across-site acuity in the two ears. As noted above, this could introduce an imbalance in loudness, as observed for S88 and S89, which we hypothesized could affect central integration. Evidence supporting this hypothesis came from subject S52, whose better-performing sites were evenly distributed across the two ears (Fig. 1). For sentence recognition in noise (Fig. 6), S52 performed much better with MAP A than with the clinical MAP. The Odd/Even MAP created a mean spatial separation comparable to that of MAP A, but produced worse performance not only relative to MAP A but also relative to the subject’s clinical MAP. This suggests either that the 2-electrode separation provided by the Odd/Even MAP was not sufficient at some tonotopic places (Mani et al., 2004), or that site selection based on psychophysical acuity contributed to the improved performance with MAP A.

MAPs A and B were dichotic MAPs that contained “spectral holes” in one ear that were compensated for by the corresponding sites in the opposite ear. The advantage of dichotic stimulation was that frequency resolution could be preserved: the number of spectral bands and their widths were unchanged, and the frequency allocation to the electrodes was the same as that to which the subject had already adapted. In theory, the “spectral holes” can be filled precisely only if the two implants use exactly the same number of analysis bands with the same bandwidths. If additional electrodes in one implant had to be turned off because of shorting or uncomfortable sensations, the analysis bands of the two implants would not match exactly, because the analysis bands on that side are broadened in the clinical MAP. MAP C was designed to overcome this problem by not leaving holes after the removal of stimulation sites. Instead, the frequency allocations to the electrode array were readjusted so that each implant was assigned 17 frequency bands that were contiguous in frequency but wider in bandwidth. Most subjects in this study (7 of 8) had one or more electrodes turned off in their clinical MAP, which is also common in the broader implant-user population. However, the speech recognition results did not show a consistent benefit of MAP C over MAP B; as discussed previously, most subjects showed the largest improvement with MAP B. These findings suggest that the reduced frequency resolution of MAP C offset the benefit of aligned frequency allocation across ears. The need to adapt to a new, albeit only slightly changed, frequency allocation with MAP C might also explain the difference between MAPs B and C. This explanation could be tested with long-term training using MAP C.
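Whether a dichotic MAP leaves any band unrepresented can be checked mechanically. The hypothetical helper below is a sketch that assumes both ears use the same numbered set of analysis bands with identical band edges (the condition identified above as necessary for holes to be filled precisely). It lists bands delivered to neither ear, to exactly one ear, or to both ears; bands in the last group are the only ones that could contribute binaural summation.

```python
def dichotic_coverage(n_bands, left_active, right_active):
    """Classify the analysis bands of a dichotic MAP.

    Assumes both implants use the same numbered analysis bands (1..n_bands)
    with identical band edges, so band k in one ear can fill a hole in
    band k of the other ear.
    """
    bands = set(range(1, n_bands + 1))
    left, right = set(left_active), set(right_active)
    if not (left | right) <= bands:
        raise ValueError("active band outside 1..n_bands")
    return {
        "holes": sorted(bands - (left | right)),   # sent to neither ear
        "one_ear": sorted(left ^ right),           # sent to exactly one ear
        "both_ears": sorted(left & right),         # repeated in both ears
    }


# Hypothetical 6-band example: each ear's holes are filled by the other ear.
print(dichotic_coverage(6, left_active=[1, 2, 4, 5], right_active=[1, 3, 4, 6]))
```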

In conclusion, MAP B appeared to be the best choice for the majority of the subjects because it preserved spectral resolution, provided balance across ears, and did not require a change in frequency allocation, whereas neither of the other two experimental MAPs offered all three advantages. Although the group-mean effect size for MAP B was relatively small, i.e., a 5–6% improvement in phoneme recognition and a 2 dB improvement in speech reception threshold, one subject voluntarily reported that this MAP improved communication in his everyday listening environment. Moreover, the benefit of the experimental MAPs, which were tested acutely, might have been underestimated because they were compared with the everyday-use clinical MAPs that the subjects had used for a much longer time. More broadly, the site-selection approach used in these experiments provides a useful tool for evaluating various psychophysical and electrophysiological measures of implant function and for determining their importance for speech recognition.

CONCLUSIONS AND IMPLICATIONS

The present study showed that psychophysical acuity, assessed by modulation detection thresholds with and without a masker and by channel interaction (quantified as the MDT elevation in the presence of a masker), varied across stimulation sites along the tonotopic axis in an implanted ear. Speech recognition in noise was significantly improved when the mean psychophysical performance was improved by removing stimulation sites with relatively poor acuity. Of the three experimental MAPs, site selection was most effective when the selected stimulation sites were distributed in a balanced way across the two ears and frequency resolution was preserved by dichotic stimulation. These findings have important implications for fitting bilateral implant users in clinical practice: our data suggest that bilateral implant fitting should take into consideration the perceptual strengths of individual stimulation sites in the two ears. With regard to sound localization, dichotic stimulation may result in the loss of spectral cues provided by the head-related transfer function in each ear, but these MAPs may still preserve interaural level cues useful for sound localization. The site-selection strategies could be implemented more easily in clinical practice if simpler, less time-intensive psychophysical or electrophysiological measures were found to be effective for identifying the optimal stimulation sites.

ACKNOWLEDGMENTS

We thank our dedicated cochlear implant subjects for their participation in the experiments, Soha Garadat for assisting with data collection, and Jennifer Benson and Gina Su for editorial assistance. This work was supported by NIH-NIDCD grants R01 DC010786, T32 DC00011, and P30 DC05188.

Footnotes

The T and C levels were set to zero for these electrodes so that the corresponding sites were not stimulated but the frequency allocation originally assigned was maintained.

We use the term “diotic” for cases where the same frequency coverage was applied to the two implants. In the diotic MAPs, allocated bandwidths would be identical between ears with two full electrode arrays and bandwidths would be slightly different when the two arrays had different numbers of electrodes.

References

- Bierer, J. A. (2007). “Threshold and channel interaction in cochlear implant users: Evaluation of the tripolar electrode configuration,” J. Acoust. Soc. Am. 121, 1642–1653. 10.1121/1.2436712

- Bierer, J. A., and Faulkner, K. F. (2010). “Identifying cochlear implant channels with poor electrode-neuron interface: Partial tripolar, single-channel thresholds and psychophysical tuning curves,” Ear Hear. 31, 247–258. 10.1097/AUD.0b013e3181c7daf4

- Boothroyd, A., Hanin, L., and Hnath, T. (1985). A Sentence Test of Speech Perception: Reliability, Set Equivalence, and Short Term Learning (Speech and Hearing Sciences Research Center, City University, New York).

- Broadbent, D. E. (1955). “A note on binaural fusion,” Quart. J. Exp. Psychol. 7, 46–47. 10.1080/17470215508416672

- Broadbent, D. E., and Ladefoged, P. (1957). “On the fusion of sounds reaching different sense organs,” J. Acoust. Soc. Am. 29, 708–710. 10.1121/1.1909019

- Cazals, Y., Pelizzone, M., Saudan, O., and Boex, C. (1994). “Low-pass filtering in amplitude modulation detection associated with vowel and consonant identification in subjects with cochlear implants,” J. Acoust. Soc. Am. 96, 2048–2054. 10.1121/1.410146

- Chatterjee, M. (2003). “Modulation masking in cochlear implant listeners: Envelope versus tonotopic components,” J. Acoust. Soc. Am. 113, 2042–2053. 10.1121/1.1555613

- Chatterjee, M., and Shannon, R. (1998). “Forward masked excitation patterns in multielectrode electrical stimulation,” J. Acoust. Soc. Am. 103, 2565–2572. 10.1121/1.422777

- Chikar, J. A., Colesa, D. J., Swiderski, D. L., Polo, A. D., Raphael, Y., and Pfingst, B. E. (2008). “Over-expression of BDNF by adenovirus with concurrent electrical stimulation improves cochlear implant thresholds and survival of auditory neurons,” Hear. Res. 245, 24–34. 10.1016/j.heares.2008.08.005

- Cohen, L., Richardson, L., Saunders, E., and Cowan, R. (2003). “Spatial spread of neural excitation in cochlear implant recipients: Comparison of improved ECAP method and psychophysical forward masking,” Hear. Res. 179, 72–87. 10.1016/S0378-5955(03)00096-0

- Cullen, J. K., Jr., Thompson, C. L., Hughes, L. F., Berlin, C. L., and Samson, D. S. (1974). “The effects of varied acoustic parameters on performance in dichotic speech perception tasks,” Brain Lang. 1, 307–322. 10.1016/0093-934X(74)90009-1

- Cutting, J. E. (1976). “Auditory and linguistic processes in speech perception: Inferences from six fusions in dichotic listening,” Psychol. Rev. 83, 114–140. 10.1037/0033-295X.83.2.114

- Donaldson, G. S., and Nelson, D. A. (2000). “ Place-pitch sensitivity and its relation to consonant recognition by cochlear implant listeners using the MPEAK and SPEAK speech processing strategies,” J. Acoust. Soc. Am. 107, 1645–1658. 10.1121/1.428449 [DOI] [PubMed] [Google Scholar]

- Eddington, D. K., Dobelle, W. H., Brackmann, D. E., Mladejovsky, M. G., and Parkin, J. L. (1978). “ Auditory prostheses research with multiple channel intracochlear stimulation in man,” Ann. Otol. Rhinol. Laryngol. 87, 1–39. [PubMed] [Google Scholar]

- Faulkner, A. (2006). “ Adaptation to distorted frequency-to-place maps: Implications of simulations in normal listeners for cochlear implants and electroacoustic stimulation,” Audiol. Neurotol. 11, 21–26. 10.1159/000095610 [DOI] [PubMed] [Google Scholar]

- Friesen, L. M., Shannon, R.V., Baskent. D., and Wang, X. (2001). “ Speech recognition in noise as a function of the number of spectral channels: Comparison of acoustic hearing and cochlear implants,” J. Acoust. Soc. Am. 110, 1150–1163. 10.1121/1.1381538 [DOI] [PubMed] [Google Scholar]

- Fu, Q.-J. (2002). “Temporal processing and speech recognition in cochlear implant users,” Neuroreport 13, 1635–1639. 10.1097/00001756-200209160-00013

- Fu, Q.-J., and Galvin, J. J., III (2003). “The effects of short-term training for spectrally mismatched noise-band speech,” J. Acoust. Soc. Am. 113, 1065–1072. 10.1121/1.1537708

- Fu, Q.-J., Shannon, R. V., and Galvin, J. J., III (2002). “Perceptual learning following changes in the frequency-to-electrode assignment with the Nucleus-22 cochlear implant,” J. Acoust. Soc. Am. 112, 1664–1674. 10.1121/1.1502901

- Galvin, J. J., III, and Fu, Q.-J. (2009). “Influence of stimulation rate and loudness growth on modulation detection and intensity discrimination in cochlear implant users,” Hear. Res. 250, 46–54. 10.1016/j.heares.2009.01.009

- Garadat, S. N., Zwolan, T. A., and Pfingst, B. E. (2011). “Speech recognition in cochlear implant users: Selection of sites of stimulation based on temporal modulation sensitivity,” Conference on Implantable Auditory Prostheses Meeting Abstr., 12.

- Garadat, S. N., Zwolan, T. A., and Pfingst, B. E. (2012). “Across-site patterns of modulation detection: Relation to speech recognition,” J. Acoust. Soc. Am. 131, 4030–4041. 10.1121/1.3701879

- Goldwyn, J. H., Shea-Brown, E., and Rubinstein, J. T. (2010). “Encoding and decoding amplitude-modulated cochlear implant stimuli-a point process analysis,” J. Comput. Neurosci. 28, 405–424. 10.1007/s10827-010-0224-9

- Hanekom, J. J., and Shannon, R. V. (1998). “Gap detection as a measure of electrode interaction in cochlear implants,” J. Acoust. Soc. Am. 104, 2372–2384. 10.1121/1.423772

- Hillenbrand, J., Getty, L. A., Clark, M. J., and Wheeler, K. (1995). “Acoustic characteristics of American English vowels,” J. Acoust. Soc. Am. 97, 3099–3111. 10.1121/1.411872

- Johnsson, L. G., Hawkins, J. E., Jr., Kingsley, T. C., Black, F. O., and Matz, G. J. (1981). “Aminoglycoside-induced cochlear pathology in man,” Acta Otolaryngol. Suppl. 383, 1–19.

- Joris, P. X., Schreiner, C., and Rees, A. (2004). “Neural processing of amplitude-modulated sounds,” Physiol. Rev. 84, 541–577. 10.1152/physrev.00029.2003

- Joris, P. X., and Yin, T. C. (1992). “Responses to amplitude-modulated tones in the auditory nerve of cat,” J. Acoust. Soc. Am. 91, 215–232. 10.1121/1.402757

- Kang, S. Y., Colesa, D. J., Swiderski, D. L., Su, G. L., Raphael, Y., and Pfingst, B. E. (2010). “Effects of hearing preservation on psychophysical responses to cochlear implant stimulation,” J. Assoc. Res. Otolaryngol. 11, 245–265. 10.1007/s10162-009-0194-7

- Levitt, H. (1971). “Transformed up-down methods in psychoacoustics,” J. Acoust. Soc. Am. 49, 467–477. 10.1121/1.1912375

- Lim, H. H., Tong, T. C., and Clark, G. M. (1989). “Forward masking patterns produced by intracochlear electrical stimulation of one and two electrode pairs in the human cochlea,” J. Acoust. Soc. Am. 86, 971–980. 10.1121/1.398732

- Litovsky, R. Y., Parkinson, A., Arcaroli, J., and Sammeth, C. (2006). “Simultaneous bilateral cochlear implantation in adults: a multicenter clinical study,” Ear Hear. 27, 714–731. 10.1097/01.aud.0000246816.50820.42

- Loizou, P. C., Mani, A., and Dorman, M. F. (2003). “Dichotic speech recognition in noise using reduced spectral cues,” J. Acoust. Soc. Am. 114, 475–483. 10.1121/1.1582861

- Long, C. J., Holden, T. A., McClelland, G. H., Parkinson, W. S., and Smith, Z. M. (2010). “Towards a measure of neural survival using psychophysics and CT scans,” Objective Measures in Auditory Implants, 6th International Symposium Abstr., 23–25.

- Luo, X., Fu, Q.-J., Wei, C. G., and Cao, K. L. (2008). “Speech recognition and temporal amplitude modulation processing by Mandarin-speaking cochlear implant users,” Ear Hear. 29, 957–970. 10.1097/AUD.0b013e3181888f61

- Mani, A., Loizou, P. C., Shoup, A., Roland, P., and Kruger, P. (2004). “Dichotic speech recognition by bilateral cochlear implant users,” Int. Congr. Ser. 1273, 466–469. 10.1016/j.ics.2004.09.007

- Nadol, J. B., Jr. (1997). “Patterns of neural degeneration in the human cochlea and auditory nerve: Implications for cochlear implantation,” Otolaryngol. Head Neck Surg. 117, 220–228. 10.1016/S0194-5998(97)70178-5

- Nadol, J. B., Jr., Young, Y.-S., and Glynn, R. J. (1989). “Survival of spiral ganglion cells in profound sensorineural hearing loss: implications for cochlear implantation,” Ann. Otol. Rhinol. Laryngol. 98, 411–416.

- Pfingst, B. E., Bowling, S. A., Colesa, D. J., Garadat, S. N., Raphael, Y., Shibata, S. B., Strahl, S. B., Su, G. L., and Zhou, N. (2011a). “Cochlear infrastructure for electrical hearing,” Hear. Res. 281, 65–73. 10.1016/j.heares.2011.05.002

- Pfingst, B. E., Burkholder-Juhasz, R. A., Xu, L., and Thompson, C. S. (2008). “Across-site patterns of modulation detection in listeners with cochlear implants,” J. Acoust. Soc. Am. 123, 1054–1062. 10.1121/1.2828051

- Pfingst, B. E., Colesa, D. J., Hembrador, S., Kang, S. Y., Middlebrooks, J. C., Raphael, Y., and Su, G. L. (2011b). “Detection of pulse trains in the electrically stimulated cochlea: Effects of cochlear health,” J. Acoust. Soc. Am. 130, 3954–3968. 10.1121/1.3651820

- Pfingst, B. E., Sutton, D., Miller, J. M., and Bohne, B. A. (1981). “Relation of psychophysical data to histopathology in monkeys with cochlear implants,” Acta Otolaryngol. 92, 1–13. 10.3109/00016488109133232

- Pfingst, B. E., and Xu, L. (2005). “Psychophysical metrics and speech recognition in cochlear implant users,” Audiol. Neurotol. 10, 331–341. 10.1159/000087350

- Pfingst, B. E., Xu, L., and Thompson, C. S. (2004). “Across-site threshold variation in cochlear implants: Relation to speech recognition,” Audiol. Neurotol. 9, 341–352. 10.1159/000081283

- Reiss, L. A., Lowder, M. W., Karsten, S. A., Turner, C. W., and Gantz, B. J. (2011). “Effects of extreme tonotopic mismatches between bilateral cochlear implants on electric pitch perception: A case study,” Ear Hear. 32, 536–540. 10.1097/AUD.0b013e31820c81b0

- Richardson, L. M., Busby, P. A., and Clark, G. M. (1998). “Modulation detection interference in cochlear implant subjects,” J. Acoust. Soc. Am. 104, 442–453. 10.1121/1.423248

- Rosen, S., Faulkner, A., and Wilkinson, L. (1999). “Adaptation by normal listeners to upward spectral shifts of speech: Implications for cochlear implants,” J. Acoust. Soc. Am. 106, 3629–3636. 10.1121/1.428215

- Shannon, R. V., Jensvold, A., Padilla, M., Robert, M. E., and Wang, X. (1999). “Consonant recordings for speech testing,” J. Acoust. Soc. Am. 106, L71–L74. 10.1121/1.428150

- Shannon, R. V., Zeng, F. G., Kamath, V., Wygonski, J., and Ekelid, M. (1995). “Speech recognition with primarily temporal cues,” Science 270, 303–304. 10.1126/science.270.5234.303

- Shepherd, R. K., Hatsushika, S., and Clark, G. M. (1993). “Electrical stimulation of the auditory nerve: The effect of electrode position on neural excitation,” Hear. Res. 66, 108–120. 10.1016/0378-5955(93)90265-3

- Shepherd, R. K., and Javel, E. (1997). “Electrical stimulation of the auditory nerve. I. Correlation of physiological responses with cochlear status,” Hear. Res. 108, 112–144. 10.1016/S0378-5955(97)00046-4

- Shepherd, R. K., Roberts, L. A., and Paolini, A. G. (2004). “Long-term sensorineural hearing loss induces functional changes in the rat auditory nerve,” Eur. J. Neurosci. 20, 3131–3140. 10.1111/j.1460-9568.2004.03809.x

- Siciliano, C. M., Faulkner, A., Rosen, S., and Mair, K. (2010). “Resistance to learning binaurally mismatched frequency-to-place maps: Implications for bilateral stimulation with cochlear implants,” J. Acoust. Soc. Am. 127, 1645–1660. 10.1121/1.3293002

- Wilson, B. S., Lawson, D. T., Zerbi, M., Finley, C. C., and Wolford, R. D. (1995). “New processing strategies in cochlear implantation,” Am. J. Otol. 16, 669–675.

- Wojtczak, M., and Viemeister, N. F. (1999). “Intensity discrimination and detection of amplitude modulation,” J. Acoust. Soc. Am. 106, 1917–1924. 10.1121/1.427940

- Zhou, N., Xu, L., and Pfingst, B. E. (2012). “Characteristics of detection thresholds and maximum comfortable loudness levels as a function of pulse rate in human cochlear implant users,” Hear. Res. 284, 25–32. 10.1016/j.heares.2011.12.008

- Zwolan, T. A., Collins, L. M., and Wakefield, G. H. (1997). “Electrode discrimination and speech recognition in postlingually deafened adult cochlear implant subjects,” J. Acoust. Soc. Am. 102, 3673–3685. 10.1121/1.420401