Abstract

To behave adaptively, we must learn from the consequences of our actions. Studies using event-related potentials (ERPs) have been informative with respect to the question of how such learning occurs. These studies have revealed a frontocentral negativity termed the feedback-related negativity (FRN) that appears after negative feedback. According to one prominent theory, the FRN tracks the difference between the values of actual and expected outcomes, or reward prediction errors. As such, the FRN provides a tool for studying reward valuation and decision making. We begin this review by examining the neural significance of the FRN. We then examine its functional significance. To understand the cognitive processes that occur when the FRN is generated, we explore variables that influence its appearance and amplitude. Specifically, we evaluate four hypotheses: (1) the FRN encodes a quantitative reward prediction error; (2) the FRN is evoked by outcomes and by stimuli that predict outcomes; (3) the FRN and behavior change with experience; and (4) the system that produces the FRN is maximally engaged by volitional actions.

Keywords: Feedback-related negativity (FRN), Error-related negativity (ERN), Event-related potentials (ERPs), Temporal difference learning, Anterior cingulate cortex

1. Introduction

To cope with the unique demands of different tasks, the cognitive system must maintain information about current goals and the means for achieving them. Equally important is the ability to monitor performance and, when necessary, to adjust ongoing behavior. Studies of error detection show that people do monitor their performance. After committing errors, they exhibit compensatory behaviors such as spontaneous error correction and post-error slowing (Rabbitt, 1966, 1968). Experiments using event-related potentials (ERPs) have provided insight into the neural basis of these behavioral phenomena. Most of this research has focused on the error-related negativity (ERN), an ERP component that closely follows error commission (Falkenstein et al., 1991; Gehring et al., 1993). In a seminal study, Gehring et al. (1993) demonstrated that the ERN was enhanced when instructions stressed accuracy. Additionally, as the amplitude of the ERN increased, so too did the frequency of spontaneous error correction and the extent of post-error slowing. These findings support the claim that the ERN is a manifestation of error detection or compensation (Coles et al., 2002; Gehring et al., 1993).1

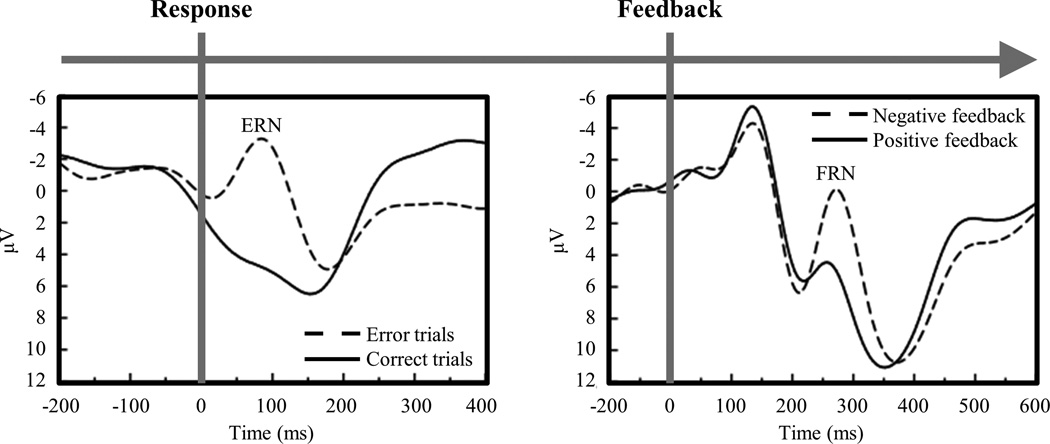

The ERN typically appears in speeded reaction time tasks. In such tasks, errors are due to impulsive responding. A representation of the correct response can be derived from ongoing stimulus processing. In other tasks, errors are due to uncertainty rather than to impulsivity. In such tasks, individuals must rely on external feedback to determine whether responses are correct. Another component called the feedback-related negativity (FRN) follows the display of negative feedback (Miltner et al., 1997). Owing to their many similarities, the ERN and FRN are thought to arise from the same system but in different circumstances (Gentsch et al., 2009; Holroyd & Coles, 2002; Miltner et al., 1997). The ERN follows response errors, and the FRN follows negative feedback (Figure 1). We highlight these components’ similarities throughout this review.

Figure 1.

The error-related negativity (ERN) appears in response-locked waveforms as the difference between error trials and correct trials. The ERN emerges at the time of movement onset and peaks 100 ms after response errors. The feedback-related negativity (FRN) appears in feedback-locked waveforms as the difference between negative feedback and positive feedback. The FRN emerges at 200 ms and peaks 300 ms after negative feedback (adapted from Nieuwenhuis et al., 2002).

Since the discovery of the FRN, over two hundred studies of the component have been published. Table 1 contains the subset most pertinent to this review. These studies seek to clarify the cognitive processes that occur when the FRN is generated, and to identify the brain regions that implement these processes. Because so many of these studies are motivated by the idea that the FRN is a neural substrate of error-driven learning, we begin by describing the principles of reinforcement learning (Sutton & Barto, 1998). We then examine the neural significance of the FRN. Specifically, we evaluate the claim that the FRN arises in the anterior cingulate cortex. In the remainder of the paper, we explore the cognitive significance of the FRN by considering its antecedent conditions, that is, the variables that affect its appearance and amplitude. Existing FRN research centers on four themes, which we develop in turn: (1) the FRN encodes a quantitative reward prediction error; (2) the FRN is evoked by outcomes and by stimuli that predict outcomes; (3) the FRN and behavior change with experience; and (4) the system that produces the FRN is maximally engaged by volitional actions.

Table 1.

Major Themes and Representative Findings in FRN Research

Source localization results revealed an additional generator in the anterior cingulate

High probability losses < low probability losses

High probability wins > low probability wins

Data on constituent win and loss waveforms not included

Manipulations of reward probability that failed to influence FRN amplitude

Manipulations of reward magnitude that failed to influence FRN amplitude

Block-wise analysis

Model-based analysis

Parametric analysis

Observing adversary’s outcomes

Observing partner’s outcomes

Observing neutral outcomes

2. Principles of reinforcement learning

2.1. Temporal difference learning

To behave adaptively, we must learn from the consequences of our actions (Thorndike, 1911). Reinforcement learning theories formalize how such learning occurs (Sutton & Barto, 1998). According to these theories, differences between actual and expected outcomes, or reward prediction errors, provide teaching signals. Upon experiencing an outcome, the individual computes a prediction error:

$$\text{prediction error} = \left[\text{reward}_{t+1} + \gamma \, V(\text{state}_{t+1})\right] - V(\text{state}_t) \tag{1}$$

$\text{reward}_{t+1}$ denotes immediate reward, $V(\text{state}_{t+1})$ denotes the estimated value of the new world state (i.e., future reward), and $V(\text{state}_t)$ denotes the estimated value of the previous state. The temporal discount rate (γ) controls the weighting of future reward. Discounting future reward ensures that when state values are equal, the individual will favor states that are immediately rewarding.

The prediction error is calculated as the difference between the value of the outcome, $[\text{reward}_{t+1} + \gamma \, V(\text{state}_{t+1})]$, and the value of the previous state, $V(\text{state}_t)$. The individual uses the prediction error to update the estimated value of the previous state,

$$V(\text{state}_t) \leftarrow V(\text{state}_t) + \alpha \times \text{prediction error} \tag{2}$$

The learning rate (α) scales the size of updates. By revising expectations in this way, the individual learns to associate states with the sum of the immediate and future rewards that follow. This is called temporal difference learning.
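The update described above can be sketched in a few lines of Python. This is a minimal illustration rather than a model from any cited study: the state names, the parameter values (α = 0.1, γ = 0.9), and the helper `td_update` are assumptions chosen for the example. The prediction error is the outcome value (immediate reward plus discounted value of the new state) minus the value of the previous state, and the previous state's value moves a fraction α toward the outcome value.

```python
def td_update(values, state, next_state, reward, alpha=0.1, gamma=0.9):
    """Apply one temporal difference update and return the prediction error.

    `values` maps hypothetical state names to estimated values.
    """
    # Outcome value: immediate reward plus discounted value of the new state.
    outcome_value = reward + gamma * values[next_state]
    # Prediction error: outcome value minus the value of the previous state.
    prediction_error = outcome_value - values[state]
    # Move the previous state's value a fraction alpha toward the outcome value.
    values[state] += alpha * prediction_error
    return prediction_error


# Illustrative three-state chain: cue -> outcome -> end (all names hypothetical).
values = {"cue": 0.0, "outcome": 0.0, "end": 0.0}

# A rewarded transition out of "outcome" raises the value of "outcome".
first_error = td_update(values, "outcome", "end", reward=1.0)

# A later unrewarded transition from "cue" to "outcome" passes some of that
# value back to "cue", even though "cue" itself was never rewarded.
second_error = td_update(values, "cue", "outcome", reward=0.0)
```

The second call illustrates why states come to be associated with the sum of immediate and future rewards: value acquired by a later state leaks back to the states that precede it.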

Physiological studies provided early support for temporal difference learning by showing that firing rates of monkey midbrain dopamine neurons scaled with differences between actual and expected rewards (Schultz, 2007). Additionally, when a conditioned stimulus reliably preceded reward, the dopamine response transferred back in time from the reward to the conditioned stimulus, as predicted by temporal difference learning. Neuroimaging experiments have extended these results to humans by demonstrating that blood-oxygen level-dependent (BOLD) responses in the striatum and prefrontal cortex, regions innervated by dopamine neurons, mirror reward prediction errors as well (McClure et al., 2004; O’Doherty, 2004).
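The backward transfer of the prediction error from reward to conditioned stimulus can be reproduced in a small simulation. The sketch below makes several simplifying assumptions that are not taken from the cited studies: each trial is a fixed chain of `n_steps` time steps from cue onset to reward, the pre-cue baseline has a fixed value of zero because cue onset cannot be anticipated, and there is no discounting (γ = 1). The function name and parameter values are illustrative.

```python
def simulate_conditioning(n_trials=200, n_steps=5, alpha=0.2, gamma=1.0, reward=1.0):
    """Return (cue_error, reward_error) for each trial of a cue -> reward chain."""
    # One value per time step between cue onset and reward delivery.
    values = [0.0] * n_steps
    history = []
    for _ in range(n_trials):
        # Cue onset: transition from the baseline (value fixed at 0, no reward)
        # into the first cue state. The baseline value is not updated because
        # the cue is assumed to arrive unpredictably.
        cue_error = gamma * values[0] - 0.0
        reward_error = 0.0
        for t in range(n_steps):
            last = t == n_steps - 1
            r = reward if last else 0.0                  # reward only at the end
            next_value = 0.0 if last else values[t + 1]  # trial ends after reward
            error = r + gamma * next_value - values[t]
            values[t] += alpha * error
            if last:
                reward_error = error
        history.append((cue_error, reward_error))
    return history


history = simulate_conditioning()
first_cue_error, first_reward_error = history[0]
last_cue_error, last_reward_error = history[-1]
```

On the first trial the prediction error occurs entirely at reward delivery. After training, the error at reward delivery approaches zero and the error at cue onset approaches the reward magnitude, which is the qualitative pattern described for dopamine neurons.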

2.2. Actor-critic model

Temporal difference learning allows the individual to predict immediate and future rewards. Prediction is only useful insofar as it allows the individual to select advantageous behaviors. The actor-critic model provides a two-process account of how humans and animals solve this control problem (Sutton & Barto, 1998). One component, the critic, computes and uses prediction errors to learn state values (Eqs. 1 & 2). The other component, the actor, uses the critic’s prediction error signal to adjust the action selection policy, p(state, action), so that actions that increase state values are repeated,

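$$p(\text{state}_t, \text{action}_t) \leftarrow p(\text{state}_t, \text{action}_t) + \alpha \times \text{prediction error} \tag{3}$$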

The actor and critic components have been associated with the dorsal and ventral striatum. Following the analogy between dopamine responses and temporal difference learning, dopamine neurons in the substantia nigra pars compacta (SNc) project to the dorsal striatum, and dopamine neurons in the ventral tegmental area (VTA) project to the ventral striatum (Amalric & Koob, 1993). Physiological and lesion studies implicate the dorsal striatum in the acquisition of action values, and the ventral striatum in the acquisition of state values (Cardinal et al., 2002; Packard & Knowlton, 2002). In accord with these data, neuroimaging studies have found that instrumental conditioning tasks, which require behavioral responses, engage the dorsal and ventral striatum. Classical conditioning tasks, which do not require behavioral responses, only engage the ventral striatum (Elliott et al., 2004; O’Doherty et al., 2004; Tricomi et al., 2004). These findings have led to the proposal that the dorsal striatum, like the actor, learns action preferences, while the ventral striatum, like the critic, learns state values (Joel et al., 2002; O’Doherty et al., 2004).
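The division of labor between critic and actor can be illustrated with a one-state, two-action task. The sketch below is an assumption-laden toy, not an implementation from the cited work: it uses a single step size for both components, a softmax rule to turn action preferences into choice probabilities, and a one-step task with no successor state. The function name `run_actor_critic` and all parameter values are hypothetical.

```python
import math
import random


def run_actor_critic(reward_probs, n_trials=500, alpha=0.1, seed=0):
    """Learn a state value (critic) and action preferences (actor)."""
    rng = random.Random(seed)
    state_value = 0.0                          # critic: value of the single state
    preferences = [0.0] * len(reward_probs)    # actor: one preference per action
    for _ in range(n_trials):
        # Actor: choose an action with probability proportional to exp(preference).
        weights = [math.exp(p) for p in preferences]
        action = rng.choices(range(len(preferences)), weights=weights)[0]
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        # Critic: prediction error for a one-step task (no future state value).
        error = reward - state_value
        state_value += alpha * error
        # Actor: the same error signal strengthens or weakens the chosen action.
        preferences[action] += alpha * error
    return state_value, preferences


# Illustrative run: action 0 is rewarded on 80% of trials, action 1 on 20%.
state_value, preferences = run_actor_critic(reward_probs=(0.8, 0.2))
```

The key design point is that the actor never sees rewards directly. It learns only from the critic's prediction error, so an action is reinforced when its outcome is better than the critic expected, not merely when it is rewarded.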

2.3. The reinforcement learning theory of the error-related negativity

The principles of reinforcement learning have been instantiated in the reinforcement learning theory of the error-related negativity (RL-ERN; Holroyd & Coles, 2002; Nieuwenhuis et al., 2004a). This theory builds on the idea that the dopamine system monitors outcomes to determine whether things have gone better or worse than expected. Positive prediction errors induce phasic increases in dopamine firing rates, and negative prediction errors induce phasic decreases in dopamine firing rates. The SNc and VTA send prediction errors to the basal ganglia where they are used to revise expectations. The VTA also sends prediction errors to cortical structures such as the anterior cingulate where they are used to integrate reward information with action selection.

The FRN is thought to reflect the impact of dopamine signals on neurons in the anterior cingulate. Phasic decreases in dopamine activity disinhibit anterior cingulate neurons, producing a more negative FRN. Phasic increases in dopamine activity inhibit anterior cingulate neurons, producing a more positive FRN. Several lines of evidence support the idea that dopamine responses modulate the FRN. For example, dopamine functioning in the prefrontal cortex shows protracted maturation into adolescence and marked decline during adulthood (Bäckman et al., 2010; Benes, 2001). Paralleling this observation, the FRN distinguishes most strongly between losses and wins in young adults and less strongly in children and older adults (Eppinger et al., 2008; Hämmerer et al., 2011; Nieuwenhuis et al., 2002; Wild-Wall et al., 2009). Additionally, patients with Parkinson’s disease and Huntington’s disease exhibit decreased dopamine in the basal ganglia. Although the FRN has not been studied in these populations, the closely related ERN is attenuated in patients with advanced Parkinson’s and Huntington’s disease (Beste et al., 2006; Falkenstein et al., 2001; Stemmer et al., 2007).2 Lastly, amphetamine, a dopamine agonist, increases the amplitude of the ERN (de Bruijn et al., 2004), whereas haloperidol, a dopamine antagonist, attenuates the ERN (de Bruijn et al., 2006; Zirnheld et al., 2004). Pramipexole, a dopamine agonist that at low doses is thought to reduce phasic dopamine signaling, dampens neural responses to reward (Santesso et al., 2009). Collectively, these results point to the involvement of dopamine in the FRN, although they do not preclude the potential impact of other neurotransmitter systems on its expression (Jocham & Ullsperger, 2009).

2.4. Alternate accounts

RL-ERN accounts for the FRN in terms of reward prediction errors that arise from the dopamine system and arrive at the anterior cingulate. According to other accounts, the FRN and related components (i.e., the ERN and the N2) reflect response conflict (Cockburn & Frank, 2011; Yeung et al., 2004), surprise (Alexander & Brown, 2011; Jessup et al., 2010; Oliveira et al., 2007), or evaluation of the motivational impact of events (Gehring & Willoughby, 2002; Luu et al., 2003). We return to these alternatives in the discussion.

3. Neural significance of the FRN

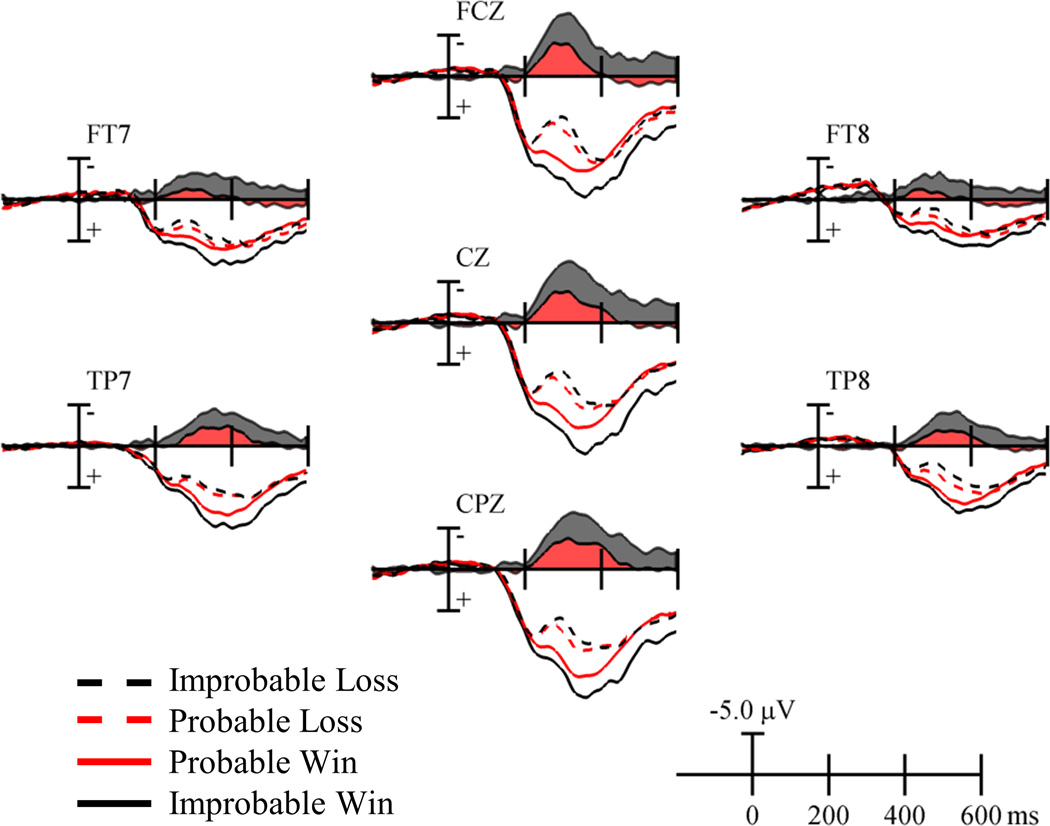

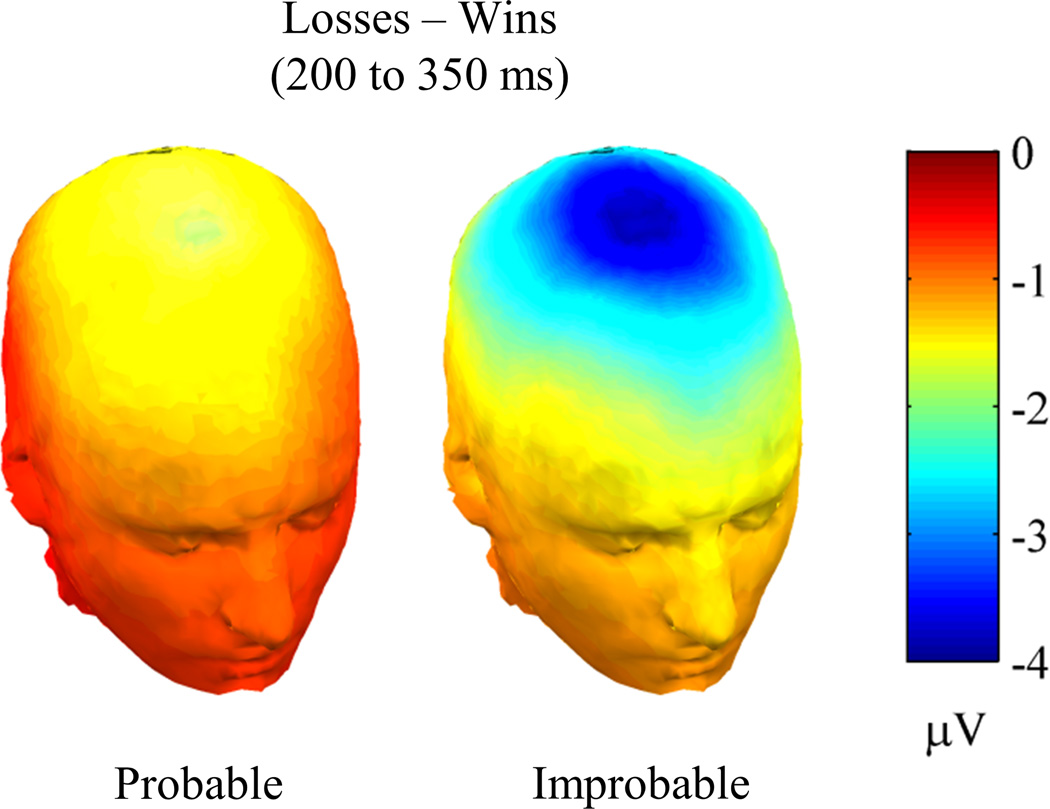

Figure 2 presents ERP waveforms from a probabilistic learning experiment conducted in our laboratory (Walsh & Anderson, 2011a). In each trial, participants selected between two stimuli. The experiment contained three stimuli that were rewarded with different probabilities, P = {0%, 33%, and 66%}. The FRN is computed as the difference in voltages following losses and wins that occurred with low probability (losses | 66% Cue – wins | 33% Cue) and with high probability (losses | 33% Cue – wins | 66% Cue).3 The FRN appears as a negativity following losses and is maximal from 200 to 350 ms.4 Although waveforms are relatively more negative following losses, they do not literally drop below zero. This is because the FRN is superimposed upon the larger, positive-going P300, which is evoked by stimulus processing (Johnson, 1986). Figure 3 shows the topography of the FRN following probable and improbable outcomes. For both outcome types, the FRN has a frontocentral focus. These results coincide with other studies in showing that the FRN is maximal over the frontocentral scalp and from 200 to 350 ms.

Figure 2.

Feedback-locked ERPs for probable and improbable wins and losses (colored lines), and FRN difference waves (colored regions) (data from the no instruction condition of Walsh & Anderson, 2011a).

Figure 3.

Topography of the FRN following probable outcomes (losses | 33% Cue – wins | 66% Cue) and improbable outcomes (losses | 66% Cue – wins | 33% Cue) (data from the no instruction condition of Walsh & Anderson, 2011a).
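The difference-wave definition of the FRN used for Figures 2 and 3 (voltage after losses minus voltage after wins, summarized over the 200 to 350 ms window) can be written as a short function. This is a minimal sketch: the function name, the use of a mean over the window, and the toy waveforms are assumptions for illustration, and real analyses would start from averaged, baseline-corrected epochs at a frontocentral electrode.

```python
def frn_amplitude(loss_erp, win_erp, times_ms, window=(200, 350)):
    """Mean loss-minus-win voltage within a time window (inclusive bounds).

    loss_erp, win_erp: averaged voltages, one value per sample.
    times_ms: sample times in ms relative to feedback onset.
    """
    start, end = window
    differences = [
        loss - win
        for loss, win, t in zip(loss_erp, win_erp, times_ms)
        if start <= t <= end
    ]
    if not differences:
        raise ValueError("no samples fall inside the window")
    return sum(differences) / len(differences)


# Toy waveforms (arbitrary units). Both are positive-going, as when the FRN
# rides on a larger positivity, but the loss waveform is relatively more negative.
times_ms = [0, 100, 200, 300, 400]
loss_erp = [0.0, 1.0, 2.0, 3.0, 4.0]
win_erp = [0.0, 1.0, 4.0, 6.0, 4.0]
amplitude = frn_amplitude(loss_erp, win_erp, times_ms)  # mean of -2.0 and -3.0
```

The toy data also show why the FRN need not drop below zero in the raw waveforms: only the difference between conditions is negative.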

The topography of the FRN is compatible with a generator in the anterior cingulate. Investigators have used equivalent current dipole localization techniques (e.g., BESA; Scherg & Berg, 1995), and distributed source localization techniques (e.g., LORETA; Pascual-Marqui et al., 2002) to identify the FRN’s source. The former approach involves modeling the observed distribution of voltages over the scalp using a small number of dipoles with variable locations, orientations, and strengths. The latter approach involves modeling observed voltages using a large number of voxels with fixed locations and orientations but with variable strengths.

Dipole source models indicate that the topography of the FRN is consistent with a source in the anterior cingulate (Gehring & Willoughby, 2002; Hewig et al., 2007; Miltner et al., 1997; Nieuwenhuis et al., 2005a; Potts et al., 2006; Ruchsow et al., 2002; Tucker et al., 2003; Zhou et al., 2010). Similarly, distributed source models indicate that the topography of the FRN is consistent with graded activation in the anterior cingulate (Bellebaum & Daum, 2008; Cohen & Ranganath, 2007; Gruendler et al., 2011; Mathewson et al., 2008). The response-locked ERN also has a frontocentral distribution that, like the FRN, is consistent with a source in the anterior cingulate (Dehaene et al., 1994; Gruendler et al., 2011).

The anterior cingulate receives inputs from the limbic system and from cortical structures including the prefrontal cortex and motor cortex (Paus, 2001). Pyramidal neurons in the anterior cingulate, in turn, project to motor structures including the basal ganglia, the primary and supplementary motor areas, and the spinal cord (van Hoesen et al., 1993). Thus, the anterior cingulate is in a prime position to transform motivational and cognitive inputs into actions. The foci of activation in ERP studies overlap with the rostral cingulate zone, the human analog of the monkey cingulate motor area (Picard & Strick, 1996; Ridderinkhof et al., 2004). The proposal that the ERN and FRN originate from the anterior cingulate coincides with this region’s role in planning and executing behavior (Bush et al., 2000; Kennerley et al., 2006; Ridderinkhof et al., 2004).

Source localization results must be regarded with caution because different configurations of neural generators can produce identical voltage distributions (i.e., the inverse problem). Nevertheless, neuroimaging studies have reported anterior cingulate activation following negative feedback and response errors (Bush et al., 2002; Holroyd et al., 2004b; Jocham et al., 2009; Mathalon et al., 2000; Ullsperger & von Cramon, 2003).

Paralleling these neuroimaging results, local field potentials in the human anterior cingulate are sensitive to losses and negative feedback (Halgren et al., 2002; Pourtois et al., 2010), as are the responses of individual anterior cingulate neurons (Williams et al., 2004). Thus, there is a convergence of evidence at the levels of individual neuron responses, local field potentials, and scalp-recorded ERPs. Likewise, extracranial EEG recordings from monkeys reveal an analog to the human ERN and FRN (Godlove et al., 2011; Vezoli & Procyk, 2009). Local field potentials in the monkey anterior cingulate are sensitive to errors and negative feedback (Emeric et al., 2008; Gemba et al., 1986), as are the responses of individual anterior cingulate neurons (Ito et al., 2003; Niki & Watanabe, 1979; Shima & Tanji, 1998). The onset of the FRN coincides with the timing of local field potentials and of individual neuron responses, and is somewhat earlier in monkeys than humans as would be expected given the shorter latencies of monkey ERP components (Schroeder et al., 1995). The electrophysiological response of dopamine neurons begins 60 to 100 ms after reward delivery (Schultz, 2007). That the FRN emerges slightly later is not surprising given that it reflects the summation of postsynaptic potentials caused by dopamine release, rather than the responses of dopamine neurons themselves.

Given its purported role in reward learning, one might expect that ablation of the anterior cingulate would disrupt responses to errors and feedback. Indeed, the ERN is attenuated in neurological patients with lesions to the anterior cingulate (Swick & Turken, 2002; Ullsperger et al., 2002). It would be interesting to see whether the FRN is also reduced in these patients, as ablation of the anterior cingulate impairs feedback-driven learning of action values (Camille et al., 2011; Williams et al., 2004).5

Source localization studies have identified alternative (or additional) neural generators for the FRN. Some studies indicate that the FRN arises in the posterior cingulate cortex (Badgaiyan & Posner, 1998; Cohen & Ranganath, 2007; Doñamayor et al., 2011; Luu et al., 2003; Müller et al., 2005; Nieuwenhuis et al., 2005a). Many of these studies identified an additional source in the anterior cingulate, suggesting that the anterior and posterior cingulate jointly contribute to the FRN. This is plausible given that the anterior and posterior cingulate are reciprocally connected, and that the posterior cingulate also signals reward properties (Hayden et al., 2008; McCoy et al., 2003; Nieuwenhuis et al., 2004b, 2005a; van Veen et al., 2004) and response errors (Menon et al., 2001). Still other studies indicate that the FRN arises in the ventral and dorsal striatum (Carlson et al., 2011; Foti et al., 2011; Martin et al., 2009). These regions are densely innervated by dopamine neurons, and striatal BOLD responses mirror reward prediction errors (O’Doherty et al., 2004). Researchers traditionally thought that subcortical structures such as the striatum contribute little to scalp-recorded EEG signals. This view has been challenged, however, raising the possibility that the striatum contributes to the FRN (Foti et al., 2011). These results notwithstanding, the anterior cingulate has most consistently been associated with the FRN. Although other regions are undoubtedly involved in reward learning, their contributions to scalp-recorded ERPs remain less studied.

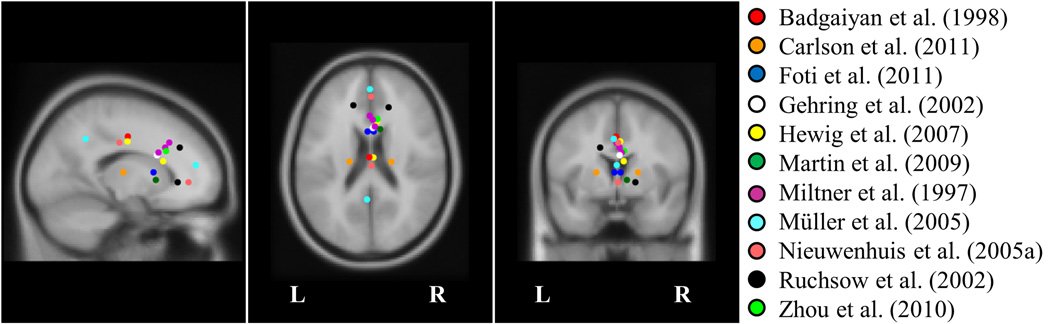

As the focus of the FRN along the anterior-posterior axis of the scalp varies between studies, so too do the locations of the modeled generators within the anterior cingulate (Figure 4). This spatial variability is expected for three reasons. First, some studies do not freely fit dipoles, raising the possibility that a different source would account equally well for the observed voltage distribution. Second, source models that localize components using the difference-wave approach (i.e., the difference between voltage topographies following losses and wins) are associated with a spatial error on the order of tens of millimeters (Dien, 2010). Third, neuroimaging techniques with far greater spatial resolution than EEG also reveal activation in extensive and variable portions of the anterior cingulate during error processing (Bush et al., 2000; Ridderinkhof et al., 2004). The anterior cingulate can be subdivided into its dorsal and rostral-ventral aspects. Neuroimaging experiments and lesion studies implicate the dorsal anterior cingulate in cognitive processing and the rostral-ventral anterior cingulate in affective processing (Bush et al., 2000). Localization of the FRN to the dorsal and rostral-ventral subdivisions of the anterior cingulate might reflect the multifaceted roles of feedback in engaging cognitive and affective processes.

Figure 4.

Equivalent dipole solutions from source localization studies. Miltner et al. (1997) fit dipoles for three experiment conditions, and Hewig et al. (2007) fit dipoles for two experiment contrasts. Several studies modeled the FRN using two-dipole solutions (Carlson et al., 2011; Foti et al., 2011; Müller et al., 2005; Nieuwenhuis et al., 2005a; Ruchsow et al., 2002).

4. Cognitive significance of the FRN

4.1. The FRN reflects a quantitative reward prediction error

Phasic responses of dopamine neurons scale with differences between actual and expected outcomes (Schultz, 2007). A central claim of RL-ERN is that the amplitude of the FRN also depends on the difference between the actual and the expected value of an outcome. Expected value, in turn, depends on the probability and magnitude of rewards,

$$\text{expected value} = \text{probability} \times \text{magnitude} \tag{4}$$

4.1.1. Reward probability

Investigators have examined the relationship between reward probability and FRN amplitude. Figure 2 presents ERPs from a probabilistic learning experiment conducted in our laboratory (Walsh & Anderson, 2011a). If the FRN tracks quantitative reward prediction errors, then FRN amplitude, defined as the difference between losses and wins, should be greater for improbable outcomes than for probable outcomes. This is because improbable losses yield more negative prediction errors than probable losses, and improbable wins yield more positive prediction errors than probable wins. Thus, the difference between improbable losses and wins should exceed the difference between probable losses and wins. As expected, the FRN was greater for improbable outcomes than for probable outcomes. Although many other studies have found that FRN amplitude is inversely related to outcome likelihood (Eppinger et al., 2008, 2009; Hewig et al., 2007; Holroyd & Coles, 2002; Holroyd et al., 2003, 2009; Nieuwenhuis et al., 2002; Potts et al., 2006, 2010; Walsh & Anderson, 2011a, 2011b), some have not (Hajcak et al., 2005, 2007). In these cases, participants may have received insufficient experience to develop strong expectations. Indeed, when participants rated their confidence immediately before outcomes were revealed, the FRN related to their expectations (Hajcak et al., 2007).
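The reasoning in this paragraph can be checked with simple arithmetic. The sketch below assumes a unit reward magnitude, codes losses as a reward of zero, treats expected value as probability times magnitude, and uses the cue probabilities 0.33 and 0.66 from the experiment described above. The function names are hypothetical, and the numbers are predictions of the prediction-error account, not measured voltages.

```python
def prediction_error(reward, reward_probability, magnitude=1.0):
    """Actual outcome minus expected value (probability x magnitude)."""
    expected_value = reward_probability * magnitude
    return reward - expected_value


def predicted_frn(loss_cue_probability, win_cue_probability, magnitude=1.0):
    """Predicted loss-minus-win difference in prediction error."""
    loss_error = prediction_error(0.0, loss_cue_probability, magnitude)
    win_error = prediction_error(magnitude, win_cue_probability, magnitude)
    return loss_error - win_error


# Improbable outcomes: losses after the 66% cue, wins after the 33% cue.
improbable = predicted_frn(loss_cue_probability=0.66, win_cue_probability=0.33)

# Probable outcomes: losses after the 33% cue, wins after the 66% cue.
probable = predicted_frn(loss_cue_probability=0.33, win_cue_probability=0.66)
```

Under these assumptions the improbable contrast is about -1.33 and the probable contrast about -0.67, so the predicted difference wave is roughly twice as large for improbable outcomes.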

RL-ERN further predicts that ERPs will be more positive after improbable wins than probable wins, and that ERPs will be more negative after improbable losses than probable losses. Figure 2 shows such a valence-by-likelihood interaction. Studies that report difference waves along with the constituent win and loss ERPs lend mixed support to this prediction. In many cases, outcome likelihood influences win and loss waveforms in opposite directions as predicted by RL-ERN, but in other cases, outcome likelihood only affects win waveforms. To determine whether outcome likelihood consistently affects win and loss waveforms, we examined the direction of the effects in 25 studies of neurotypical adults that manipulated reward probability (Table 1).6 Waveforms were more positive after unexpected than expected wins in 84% of studies (sign test: p < .001). Conversely, waveforms were more negative after unexpected than expected losses in 76% of studies (sign test: p < .01). Although the numbers of experiments showing the expected effects for wins and for losses do not differ significantly by McNemar’s test, p > .1, the magnitude of the effect is typically larger for wins.
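For readers unfamiliar with the sign test, the sketch below shows how such a p-value is obtained from a count of studies. It rests on assumptions that the text does not state: that 84% and 76% of 25 studies correspond to 21 and 19 studies, that no studies were excluded as ties, and that the test is one-sided. It is an illustration of the method, not a reconstruction of the exact analysis reported here.

```python
from math import comb


def sign_test_p(successes, n):
    """One-sided sign test: P(X >= successes) for X ~ Binomial(n, 0.5)."""
    if not 0 <= successes <= n:
        raise ValueError("successes must lie between 0 and n")
    return sum(comb(n, k) for k in range(successes, n + 1)) / 2 ** n


# Counts inferred from the reported percentages (an assumption, see above).
p_wins = sign_test_p(21, 25)    # studies showing the expected effect for wins
p_losses = sign_test_p(19, 25)  # studies showing the expected effect for losses
```

Under the null hypothesis that each study is equally likely to show an effect in either direction, the probability of 21 or more of 25 studies agreeing is below .001, and the probability of 19 or more agreeing is below .01.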

These results confirm that outcome likelihood affects win and loss waveforms, but they also point to a win/loss asymmetry: outcome likelihood modulates neural responses to wins more strongly than to losses. Such an asymmetry could arise for two reasons. First, because of their low baseline rate of activity, dopamine neurons exhibit a greater range of responses to positive events than to negative events. As such, the phasic increase in dopamine firing rates that follows improbable positive outcomes exceeds the phasic decrease that follows improbable negative outcomes (Bayer & Glimcher, 2005; Mirenowicz & Schultz, 1996). Amplifying this effect, dopamine concentration increases in a non-linear, accelerated manner with firing rate (Chergui et al., 1994). For these reasons, positive prediction errors could disproportionately influence neural activity in concomitant structures like the anterior cingulate. According to this account, the impact of negative outcomes on the FRN, though real, is slight. The greater source of variance comes from the superposition of a reward positivity on EEG activity after positive outcomes (Holroyd et al., 2008).

Second, the effect of outcome likelihood on waveforms following losses may be obscured by the P300, a positive component that follows low probability events (Johnson, 1986). According to this view, improbable wins produce a reward positivity that summates with the P300. Improbable losses produce an FRN, but a still-larger P300 obscures the FRN. Although the P300 is maximal at posterior sites, the P300 extends to central and frontal sites. When outcome probabilities are not equal, as when directly comparing probable losses and improbable losses, measures of the FRN from frontal sites and especially from central and posterior sites are likely to be confounded by the P300.

To distinguish between these accounts, Foti et al. (2011) used principal components analysis (PCA), a data reduction technique that decomposes ERP waveforms into their latent factors. They identified a reward-related factor with a latency and topography that matched the FRN. The factor displayed a positive deflection following rewards, and no change following non-rewards. This result is consistent with the idea that the FRN arises from the superposition of a reward positivity on EEG activity after positive outcomes. These results do not unambiguously establish that outcome likelihood only affects neural activity following wins, however. Foti et al. (2011) did not vary reward probability, so it is unclear whether the reward-related factor is sensitive to outcome likelihood or just to outcome valence. Additionally, other PCA decompositions have revealed separate reward- and loss-related factors (Potts et al., 2010), or a single factor that distinguishes between losses and rewards (Boksem et al., 2012; Carlson et al., 2011; Foti & Hajcak, 2009). None of these studies manipulated outcome likelihood either, so the same ambiguity applies to the factors they identified.

4.1.2. Reward magnitude

In addition to examining the effect of reward probability on the FRN, investigators have examined the relationship between reward magnitude and FRN amplitude. RL-ERN predicts that the difference between large magnitude losses and wins will exceed the difference between small magnitude losses and wins. In contrast to this prediction, the FRN is typically sensitive to reward valence, whereas the P300 is sensitive to reward magnitude (i.e., the independent coding hypothesis; Kamarajan et al., 2009; Sato et al., 2005; Toyomaki & Murohashi, 2005; Yeung & Sanfey, 2004; Yu & Zhou, 2006). RL-ERN also predicts that ERPs will be more positive after large wins than small wins, and that ERPs will be more negative after large losses than small losses. Few studies have reported such a valence-by-magnitude interaction (but for partial support, see Bellebaum et al., 2010b; Goyer et al., 2008; Hajcak et al., 2006; Holroyd et al., 2004a; Kreussel et al., 2012; Marco-Pallarés et al., 2008; Masaki et al., 2006; Santesso et al., 2011). These results might indicate that separate brain systems represent reward probability and magnitude, and that the FRN is sensitive to the former but not the latter dimension of expected value. This interpretation is at odds with the finding that anterior cingulate neurons and the BOLD response in the anterior cingulate are sensitive to outcome magnitude, however (Amiez et al., 2005; Fujiwara et al., 2009; Sallet et al., 2007).

In most FRN studies that manipulated reward magnitude, outcome values were known in advance. In such circumstances, the brain displays adaptive scaling. Neural firing rates and BOLD responses adapt to the range of outcomes such that maximum deviations from baseline remain constant regardless of absolute reward values (Bunzeck et al., 2010; Nieuwenhuis et al., 2005b; Tobler et al., 2005). Failure to find an effect of reward magnitude on FRN amplitude might indicate that the FRN also scales with the range of reward values (i.e., the adaptive scaling hypothesis). In two studies that permit evaluation of this hypothesis, trial values were not known in advance (Hajcak et al., 2006; Holroyd et al., 2004a). In both studies, large magnitude wins produced more positive waveforms than small magnitude wins, whereas large and small magnitude losses produced identical waveforms.7 These results indicate that the FRN is sensitive to reward magnitude when trial values are not known in advance, and they replicate the win/loss asymmetry characteristic of reward probability.
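One simple way to formalize the adaptive scaling hypothesis is to divide the prediction error by the range of outcomes available on a trial. This normalization rule is an assumption made for illustration. The cited studies establish that responses adapt to the outcome range, not that they follow this particular formula, and the function name and reward values below are hypothetical.

```python
def scaled_prediction_error(reward, expected_value, outcome_range):
    """Prediction error divided by the width of the known outcome range."""
    low, high = outcome_range
    if high <= low:
        raise ValueError("outcome_range must be (low, high) with high > low")
    return (reward - expected_value) / (high - low)


# Small-stakes trial: outcomes known in advance to be -5 or +5.
small_win = scaled_prediction_error(5, 0, (-5, 5))

# Large-stakes trial: outcomes known in advance to be -25 or +25.
large_win = scaled_prediction_error(25, 0, (-25, 25))
```

Both wins yield the same scaled error even though the raw errors differ fivefold, which is the pattern that would hide a magnitude effect when trial values are announced beforehand. When the range is not known in advance, no such rescaling is possible and raw magnitude should matter.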

Understanding the effect of outcome magnitude on FRN activity is complicated by the fact that subjective values may differ from objective utilities. For instance, participants may adopt a nonlinear value function (Tversky & Kahneman, 1981). In the extreme case, they may encode all outcomes that exceed an aspiration level as wins, and all outcomes that fall below an aspiration level as losses (Simon, 1955). Although no ERP study has attempted to infer subjective value functions, one study did find that the FRN is sensitive to how participants code feedback (Nieuwenhuis et al., 2004b). In that study, participants chose between two alternatives, and the valence and magnitude of each alternative were revealed. When feedback emphasized the valence of the selection (greater than or less than zero), choosing a negative outcome produced an FRN. When feedback emphasized the correctness of the selection (greater than or less than the alternative), choosing the lesser outcome produced an FRN. These results confirm that the FRN is sensitive to subjective interpretations of feedback, and therefore depends on participants’ representation of outcomes.
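The two coding schemes described in this paragraph can be stated as a small decision rule. The sketch below is a schematic of the logic only: the function name, the string labels, and the example values are assumptions, and the rule predicts when an outcome is coded as unfavorable, not the size of any ERP effect.

```python
def is_coded_negative(chosen, alternative, emphasis):
    """Return True if the chosen outcome is coded as unfavorable.

    emphasis="valence": compare the chosen outcome with zero.
    emphasis="correctness": compare the chosen outcome with the alternative.
    """
    if emphasis == "valence":
        return chosen < 0
    if emphasis == "correctness":
        return chosen < alternative
    raise ValueError("emphasis must be 'valence' or 'correctness'")


# Same objective outcome, opposite codings: a small gain when a larger gain
# was available is favorable by valence but unfavorable by correctness.
small_gain_valence = is_coded_negative(5, 25, "valence")
small_gain_correctness = is_coded_negative(5, 25, "correctness")

# A small loss when a larger loss was avoided shows the reverse pattern.
small_loss_valence = is_coded_negative(-5, -25, "valence")
small_loss_correctness = is_coded_negative(-5, -25, "correctness")
```

The example makes the point of the paragraph concrete: an identical monetary outcome can fall on either side of the reference point depending on which feature of the feedback is emphasized.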

4.2. The FRN is evoked by stimuli that predict outcomes

The dopamine response transfers back in time from outcomes to the earliest events that predict outcomes (Schultz, 2007). RL-ERN also holds that outcomes and events that predict outcomes will evoke a frontal negativity. To test this hypothesis, investigators first examined the relationship between the ERN and the FRN. These components differ with respect to their eliciting events: the ERN immediately follows response errors, and the FRN follows negative feedback (Figure 1). When responses determine outcomes (i.e., the correct response is rewarded with certainty), the response itself provides complete information about future reward. When responses do not determine outcomes (i.e., reward is delivered randomly), the response provides no information about future reward. By varying the reliability of stimulus-response mappings, investigators have demonstrated an inverse relationship between the amplitude of the ERN and the FRN (Eppinger et al., 2008, 2009; Holroyd & Coles, 2002; Nieuwenhuis et al., 2002). The ERN is larger when responses strongly determine outcomes (i.e., punishment can be anticipated from the response), and the FRN is larger when responses weakly determine outcomes (i.e., punishment cannot be anticipated from the response).

The inverse relationship between the ERN and the FRN also holds as the detectability of response errors varies (i.e., the first-indicator hypothesis). For example, in a task where participants had to respond within an allocated time interval, large timing errors produced an ERN, but subsequent negative feedback did not produce an FRN. Response errors committed marginally beyond the response deadline did not produce an ERN, but subsequent negative feedback did produce an FRN (Heldmann et al., 2008). This presumably reflects the fact that participants could more readily detect large timing errors than marginal timing errors.

More recently, researchers have examined whether stimulus cues that predict outcomes also evoke an FRN. In some studies, cues provided complete information about forthcoming outcomes. Cues that predicted future losses produced more-negative waveforms than cues that predicted future rewards (Baker & Holroyd, 2009; Dunning & Hajcak, 2008). In other studies, cues provided probabilistic information about forthcoming outcomes. Again, waveforms were more negative after cues that predicted probable future losses than after cues that predicted probable future rewards (Holroyd et al., 2011; Liao et al., 2011; Walsh & Anderson, 2011b). In all of these cases, the topography of the negativity produced by cues that predicted future losses coincided with the topography of the negativity produced by losses themselves.

The relative magnitude of cue-locked and feedback-locked FRNs varies considerably across studies. According to RL-ERN, the size of cue-locked prediction errors should vary with the amount of information that the cue conveys about the outcome (i.e., reward probability). As the amount of information conveyed by cues increases, so too do their predictive values and the resulting cue-locked FRN. The predictive values of cues also shape neural responses to feedback. Outcomes that confirm expectations induced by cues produce smaller prediction errors (and feedback-locked FRNs), and outcomes that violate expectations induced by cues produce larger prediction errors (and feedback-locked FRNs).
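The dependence of cue-locked and feedback-locked prediction errors on cue information can be made concrete with a minimal sketch. It assumes a three-step trial (pre-cue, cue, outcome), outcome values of +1 for wins and -1 for losses, and fully learned cue values. The function name, the baseline reward probability, and the value of γ are illustrative assumptions, not quantities taken from the studies reviewed here.

```python
def cue_and_feedback_errors(p_win, p_baseline=0.5, gamma=0.9, win=1.0, loss=-1.0):
    """Temporal difference errors at cue onset and at feedback.

    Assumes values are fully learned: the cue's value is the expected outcome,
    and the pre-cue value is the discounted expectation across all cues.
    """
    v_cue = p_win * win + (1.0 - p_win) * loss
    v_pre = gamma * (p_baseline * win + (1.0 - p_baseline) * loss)
    delta_cue = gamma * v_cue - v_pre   # no reward is delivered at cue onset
    delta_win = win - v_cue             # the outcome ends the trial
    delta_loss = loss - v_cue
    return delta_cue, delta_win, delta_loss


# Illustrative reward probabilities (hypothetical, not from any data set).
for p in (0.5, 0.75, 1.0):
    d_cue, d_win, d_loss = cue_and_feedback_errors(p)
    print(f"p(win)={p:.2f}  cue={d_cue:+.2f}  win={d_win:+.2f}  loss={d_loss:+.2f}")
```

Under these assumptions, an uninformative cue (p = 0.5) yields no cue-locked error and the largest feedback-locked errors, whereas a fully predictive win cue moves the error for the win from feedback to cue onset.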

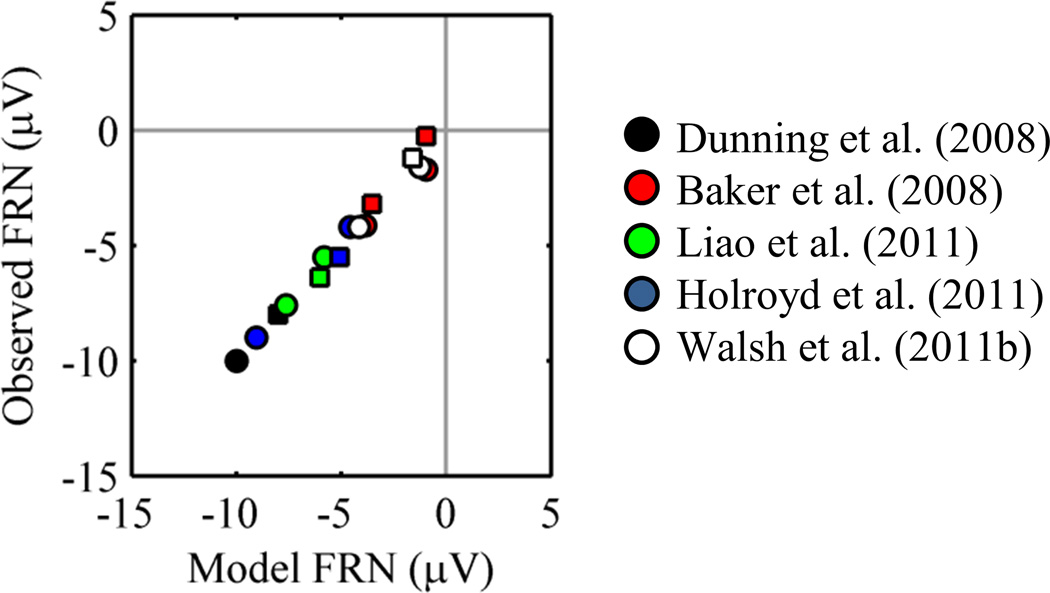

For data sets that included cue- and feedback-locked FRNs, we calculated model prediction errors (Baker & Holroyd, 2009; Dunning & Hajcak, 2008; Holroyd et al., 2011; Liao et al., 2011; Walsh & Anderson, 2011b). Cue values depended on the amount of information the cue conveyed about the outcome (i.e., reward probability). We estimated the value of the temporal discount parameter (γ) that minimized the sum of the squared errors between observed FRNs and model FRNs across the five data sets. We also estimated slope and intercept terms to scale model FRNs to observed FRNs for each data set (Appendix). A value of γ near one would indicate that the FRN is sensitive to future reward. Figure 5 plots observed FRNs against model FRNs for the best-fitting value of γ (0.86).8 The results of this analysis make clear two points. First, the magnitudes of cue- and feedback-locked FRNs are consistent with a temporal difference learning model. Second, the FRN is sensitive to future reward.

Figure 5.

Model FRNs and observed FRNs. Squares correspond to cue-locked FRNs, and circles correspond to feedback-locked FRNs.
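The fitting procedure described above (a shared γ, with a slope and intercept estimated separately for each data set) can be sketched as follows. This is a sketch under stated assumptions, not the model specified in the Appendix: the prediction-error function is a simplified stand-in, the "observed" values are synthetic and generated from that same function, and all names are hypothetical. The synthetic data serve only to check that the procedure recovers a known γ.

```python
import numpy as np


def model_errors(p_wins, gamma):
    """Model prediction errors for one data set.

    For each cue, returns [cue-locked, win feedback, loss feedback] errors,
    assuming outcome values of +1/-1 and a neutral pre-cue expectation of 0.
    """
    errors = []
    for p in p_wins:
        v_cue = 2.0 * p - 1.0
        errors.extend([gamma * v_cue, 1.0 - v_cue, -1.0 - v_cue])
    return np.array(errors)


def total_sse(gamma, datasets):
    """Sum of squared errors across data sets, each with its own slope and intercept."""
    sse = 0.0
    for p_wins, observed in datasets:
        model = model_errors(p_wins, gamma)
        slope, intercept = np.polyfit(model, observed, 1)
        sse += np.sum((observed - (slope * model + intercept)) ** 2)
    return sse


# Synthetic "observed" values built from a known gamma (not real FRN data).
true_gamma = 0.8
datasets = [
    ((0.8, 0.2), 2.0 * model_errors((0.8, 0.2), true_gamma) + 1.0),
    ((0.66, 0.33), 4.0 * model_errors((0.66, 0.33), true_gamma) - 0.5),
]

# Grid search over the shared discount parameter.
grid = np.linspace(0.0, 1.0, 101)
best_gamma = grid[np.argmin([total_sse(g, datasets) for g in grid])]
print("best-fitting gamma:", best_gamma)
```

Because the slope and intercept absorb each data set's overall scale, γ is identified only by the size of cue-locked errors relative to feedback-locked errors within a data set.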

Some researchers have incorporated eligibility traces into models of dopamine responses (Pan et al., 2005). When a state is visited or an action is selected, a trace is initiated. The trace marks the state or action as eligible for update and gradually decays. Traces permit prediction errors to bridge gaps between states, actions, and rewards (Sutton & Barto, 1998). In one study of sequential choice, we found that behavior was most consistent with a model that used eligibility traces (Walsh & Anderson, 2011b). The ERP results were not conclusive with respect to this issue, however. One avenue for future research is to understand how temporal delays and intervening events between actions and outcomes affect the FRN.
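A minimal sketch of how eligibility traces let a single prediction error update earlier states, assuming accumulating traces with decay parameter λ (the TD(λ) formulation of Sutton & Barto, 1998). The three-state chain, parameter values, and function name are hypothetical and chosen only for illustration.

```python
import numpy as np


def run_episode(values, rewards, alpha=0.5, gamma=1.0, lam=0.0):
    """One pass through states 0..n-1 in order; rewards[t] follows state t."""
    values = np.asarray(values, dtype=float).copy()
    traces = np.zeros_like(values)
    n = len(values)
    for t in range(n):
        v_next = values[t + 1] if t + 1 < n else 0.0   # terminal value is 0
        delta = rewards[t] + gamma * v_next - values[t]
        traces *= gamma * lam        # all existing traces decay
        traces[t] += 1.0             # the visited state becomes eligible
        values += alpha * delta * traces
    return values


rewards = [0.0, 0.0, 1.0]            # reward arrives only after the last state
no_traces = run_episode(np.zeros(3), rewards, lam=0.0)
with_traces = run_episode(np.zeros(3), rewards, lam=0.5)
print("lambda = 0.0:", no_traces)
print("lambda = 0.5:", with_traces)
```

With λ = 0, only the state immediately preceding reward is updated after one episode. With λ > 0, the same prediction error also updates earlier states in proportion to their decayed traces.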

4.3. The FRN and behavior change with experience

4.3.1. Block-wise analyses

Reinforcement learning seeks to explain how experience influences ongoing behavioral responses. Likewise, RL-ERN is a theory of how experience influences ongoing neural responses. As such, it is informative to ask how behavior and the FRN change over time. Many studies report concomitant behavioral and neural adaptation. For example, in one condition of Eppinger et al. (2008), the correct response to a cue was rewarded 100% of the time. Response accuracy in adults was initially low and positive feedback evoked a reward positivity. As response accuracy increased, the feedback positivity decreased, indicating that participants came to expect reward after correct responses. At the same time, the amplitude of the response-locked ERN following errors increased, indicating that participants came to expect punishment after incorrect responses. Other studies have found that as participants learn which responses are likely to be rewarded and which are not, the FRN develops increasing sensitivity to outcome likelihood in parallel (Cohen et al., 2007; Morris et al., 2008; Müller et al., 2005; Pietschmann et al., 2008; Walsh & Anderson, 2011a, 2011b).

Interestingly, the FRN only changes in participants who exhibit behavioral learning (Bellebaum & Daum, 2008; Krigolson et al., 2009; Sailer et al., 2010). One recent study used a blocking paradigm to explore this issue (Luque et al., 2012). In the first phase of the experiment, participants learned to predict whether different stimuli would produce an allergy. One stimulus did (conditioned stimulus), and the other did not (neutral stimulus). In the second phase of the experiment, novel stimuli appeared in compounds with the conditioned stimulus and the neutral stimulus. In the test phase, participants predicted whether the novel stimuli alone would produce the allergy. Participants predicted “allergy” more frequently for the novel stimulus that appeared with the neutral stimulus than for the novel stimulus that appeared with the conditioned stimulus, replicating the standard blocking effect. More critically, the FRN was greater when participants received punishment for responding “allergy” to the predictive stimulus than when they received punishment for responding “allergy” to the blocked stimulus, indicating that they came to expect reward in the former case but not the latter.

Collectively, these results are consistent with the hypothesis that the neural system that generates the FRN influences behavior. These results are also consistent with the hypothesis that expectations, which shape behavior, influence the system that generates the FRN. Thus, these results do not unambiguously demonstrate that the FRN contributes to behavior.

Not all studies report concomitant behavioral and neural adaptation. For example, the FRN sometimes remains constant as response accuracy increases (Bellebaum et al., 2010a; Eppinger et al., 2009; Holroyd & Coles, 2002).9 Additionally, participants sometimes learn despite the absence of any clear FRN (Groen et al., 2007; Hämmerer et al., 2010; Nieuwenhuis et al., 2002). Finally, response accuracy sometimes remains constant as the FRN becomes more sensitive to outcome likelihood. In one study that demonstrated such a dissociation, participants performed a probabilistic learning task (Walsh & Anderson, 2011a). In the instruction condition, they were told how frequently each of three stimuli was rewarded (0%, 33%, and 66%). In the no instruction condition, they were not. Two stimuli appeared in each trial. Participants selected a stimulus and received feedback about whether their selection was rewarded. Although response accuracy began and remained at asymptote in the instruction condition, the FRN only distinguished between probable and improbable outcomes after participants experienced the consequences of several choices. Collectively, these results demonstrate that behavioral and neural adaptation can occur independently.

4.3.2. Verbal reports

Establishing a relationship between the FRN and reward prediction errors is complicated by the fact that prediction errors depend on participants’ ongoing experience. Block-wise analyses eschew this issue by assuming that expectations gradually converge to true reward values. Such analyses may be too coarse to detect rapid neural adaptation, however. To overcome this limitation, researchers have examined the trial-by-trial correspondence between EEG activity and participants’ verbally reported expectations. For example, in Hajcak et al. (2007), participants guessed which of four doors contained a reward. Before the outcome was revealed, participants predicted whether they would be rewarded in that trial. Waveforms were more negative after unpredicted losses than after predicted losses, and waveforms were more positive after unpredicted wins than after predicted wins. Other studies have since confirmed that trial-by-trial changes in FRN amplitude relate to participants’ reported expectations (Ichikawa et al., 2010; Moser & Simons, 2009).

4.3.3. Model-based analyses

Verbal reports, though informative, are obtrusive. An alternate approach is to construct a computational model of the task the participant must solve. Free parameters like temporal discounting rate (γ) and learning rate (α) are estimated from observable behavioral responses. One can then simulate how latent model variables like reward prediction error change over time (Mars et al., 2012).
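The model-based approach can be sketched in three steps: specify a learning model, estimate its free parameters from choices, and read out the latent prediction errors. The sketch below assumes a two-option task, a softmax choice rule with inverse temperature β, and a grid search for the maximum-likelihood estimates. All function names, parameter values, and the simulated choice data are hypothetical. Because each trial in this task is a single step, the discount parameter γ plays no role here.

```python
import numpy as np


def replay_model(alpha, beta, choices, rewards, n_options=2):
    """Replay a choice sequence through a value-learning model.

    Returns the negative log-likelihood of the choices under a softmax rule
    and the trial-by-trial reward prediction errors.
    """
    q = np.zeros(n_options)
    nll = 0.0
    deltas = np.empty(len(choices))
    for t, (c, r) in enumerate(zip(choices, rewards)):
        logits = beta * q
        log_p = logits - np.logaddexp.reduce(logits)   # log softmax
        nll -= log_p[c]
        deltas[t] = r - q[c]                           # reward prediction error
        q[c] += alpha * deltas[t]
    return nll, deltas


def simulate_choices(alpha, beta, p_reward, n_trials, seed=0):
    """Generate synthetic choices and rewards from the same model."""
    rng = np.random.default_rng(seed)
    q = np.zeros(len(p_reward))
    choices = np.empty(n_trials, dtype=int)
    rewards = np.empty(n_trials)
    for t in range(n_trials):
        p = np.exp(beta * q)
        p /= p.sum()
        choices[t] = rng.choice(len(q), p=p)
        rewards[t] = float(rng.random() < p_reward[choices[t]])
        q[choices[t]] += alpha * (rewards[t] - q[choices[t]])
    return choices, rewards


# Synthetic behavior standing in for a participant (not real data).
choices, rewards = simulate_choices(0.3, 4.0, [0.8, 0.2], n_trials=200)

# Maximum-likelihood estimates by grid search over alpha and beta.
candidates = [(replay_model(a, b, choices, rewards)[0], a, b)
              for a in np.linspace(0.05, 0.95, 19)
              for b in np.linspace(0.5, 10.0, 20)]
best_nll, best_alpha, best_beta = min(candidates)

# Latent prediction errors under the fitted parameters.
_, deltas = replay_model(best_alpha, best_beta, choices, rewards)
print(best_alpha, best_beta, deltas[:5])
```

The resulting `deltas` series is the kind of latent variable that could then be related to single-trial or binned FRN amplitudes.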

Studies have increasingly employed this model-based approach (Cavanagh et al., 2010; Chase et al., 2011; Ichikawa et al., 2010; Philiastides et al., 2010; Walsh & Anderson, 2011a, 2011b). For example, Walsh and Anderson (2011a) fit computational models to participants’ behavioral and neural data in two experimental conditions. Behavior in the instruction condition was consistent with a model that only learned from instruction, whereas behavior in the no instruction condition was consistent with a model that only learned from feedback. In both conditions, changes in the FRN were consistent with a model that only learned from feedback. Besides establishing a relationship between the FRN and trial-by-trial prediction errors, these results demonstrated that behavioral and neural responses could arise from separate processes as evidenced by the different computational models that best characterized each in the instruction condition. Other model-based analyses have found a relationship between negative prediction errors and FRN amplitude (Cavanagh et al., 2010; Chase et al., 2011; Ichikawa et al., 2010), while one study found that the FRN was only sensitive to the valence of prediction errors (Philiastides et al., 2010). These model-based analyses establish a link between behavior and the FRN by showing that prediction errors, which guide behavior, influence neural responses as well.

4.3.4. Sequential effects

Researchers have also used traditional signal averaging techniques to examine trial-by-trial changes in FRN amplitude. These analyses show that previous outcomes affect FRN amplitude. For example, when wins and losses occurred with equal probability (Holroyd & Coles, 2002), FRN amplitude was greater after outcomes that disconfirmed expectations induced by the immediately preceding trial (e.g., losses following wins). These analyses also show that FRN amplitude predicts subsequent behavioral adaptation. For example, as the size of the FRN following negative outcomes increases, so too does the probability that participants will not repeat the punished response in the next trial (Cohen & Ranganath, 2007; van der Helden et al., 2010; Yasuda et al., 2004). Lastly, time-frequency analyses reveal associations between neural oscillations and behavioral adaptation. Increases in midline frontal theta following negative feedback predict post-error slowing and error correction, whereas increases in midline frontal beta following positive feedback predict response repetition (Cavanagh et al., 2010; van de Vijver et al., 2011).

4.3.5. Integration

Theories of behavioral control propose that choices can arise from a habitual system situated in the basal ganglia, or a goal-directed system situated in the prefrontal cortex and medial temporal lobes (Daw et al., 2005). The habitual system uses temporal difference learning to select actions that have been historically advantageous. The goal-directed system learns about rewards contained in different world states and the probability that actions will lead to those states. The goal-directed system uses this internal world model to prospectively identify actions that result in goal attainment. The FRN is thought to arise from the reward signals of dopamine neurons in the basal ganglia, which are conveyed to the anterior cingulate. As such, the FRN and behavior may coincide when the habitual system controls responses. When behavior is goal-directed, dopamine neurons may continue to compute reward prediction errors even though these signals do not impact behavior. As such, the FRN and behavior may dissociate when the goal-directed system controls responses.

4.4. The system that produces the FRN is maximally engaged by volitional actions

According to RL-ERN, the anterior cingulate maps onto the actor element in the actor-critic architecture (Holroyd & Coles, 2002). As such, the anterior cingulate should be maximally engaged when participants must learn action values. Physiological studies show that neurons in the anterior cingulate do respond more strongly when monkeys must learn action-outcome contingencies as compared to when rewards are passively delivered (Matsumoto et al., 2007; Michelet et al., 2007). Likewise, the FRN is larger when instrumental responses are required than when rewards are passively delivered (Itagaki & Katayama, 2008; Marco-Pallarés et al., 2010; Martin & Potts, 2011; Yeung et al., 2005). Although it is not a requisite of the actor-critic architecture, anterior cingulate activation is also greater when participants monitor outcomes of freely selected responses as compared to fixed responses (Walton et al., 2004). Likewise, the FRN is larger when outcomes are attributed to one’s own actions (Holroyd et al., 2009; Li et al., 2010, 2011). Collectively, these findings indicate that the FRN tracks values of volitional actions.
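For readers unfamiliar with the actor-critic architecture, the following is a generic, minimal sketch and not the RL-ERN implementation. It assumes a single-state, two-action task in which a critic learns the expected reward and broadcasts its prediction error to an actor, which uses that error to adjust action preferences. The function name, learning rates, and reward probabilities are hypothetical.

```python
import numpy as np


def actor_critic(p_reward, n_trials=500, alpha_critic=0.1, alpha_actor=0.1, seed=0):
    """Single-state actor-critic learner for a task with probabilistic rewards."""
    rng = np.random.default_rng(seed)
    v = 0.0                                   # critic: expected reward
    prefs = np.zeros(len(p_reward))           # actor: action preferences
    deltas = np.empty(n_trials)
    for t in range(n_trials):
        policy = np.exp(prefs - prefs.max())  # softmax over preferences
        policy /= policy.sum()
        a = rng.choice(len(prefs), p=policy)
        r = float(rng.random() < p_reward[a])
        delta = r - v                         # critic's prediction error
        v += alpha_critic * delta             # critic update
        prefs[a] += alpha_actor * delta       # actor update, driven by the same error
        deltas[t] = delta
    return v, prefs, deltas


v, prefs, deltas = actor_critic(p_reward=[1.0, 0.0])
print("state value:", v)
print("action preferences:", prefs)
```

The design point is that only the critic computes a prediction error, whereas the actor is the element that stores action-specific quantities. This is why the distinction matters for interpreting whether the FRN depends on response selection.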

These results notwithstanding, the FRN has been observed in tasks that do not feature overt responses (Donkers & van Boxtel, 2005; Holroyd et al., 2011; Martin et al., 2009; Potts et al., 2006, 2010; Yeung et al., 2005). For example, in Martin et al. (2009), participants passively viewed a cue followed by an outcome. The cue indicated whether the trial was likely to result in reward. Participants exhibited an FRN that scaled with outcome likelihood even though they made no response. Additionally, in studies that reported neural responses to cues that predicted future losses or wins, cue-locked FRNs were not preceded by responses (Dunning & Hajcak, 2008; Holroyd et al., 2011). These results challenge the notion that response selection is necessary for FRN generation.

These results can be reconciled with RL-ERN in three ways. First, the FRN could reflect the critic’s prediction error signal. By this view, the FRN appears in instrumental and classical conditioning tasks alike. Physiological and neuroimaging studies show that the anterior cingulate is especially engaged in tasks that involve learning action-outcome associations, however, whereas other regions such as the orbitofrontal cortex and ventral striatum show equal or greater activation in tasks that involve learning stimulus-outcome associations (Kennerley et al., 2006; Ridderinkhof et al., 2004; Walton et al., 2004). Thus, the profile of anterior cingulate activation across tasks is more consistent with the actor element than the critic element.

Second, the anterior cingulate could represent and credit abstract actions not included in the task set (e.g., the decision to enter the experiment). Alternatively, the anterior cingulate could compute fictive error signals to learn the values of selecting different cues in the absence of actual choices. It is unclear why other tasks that fail to produce anterior cingulate activation would not also evoke such action representations, however.

Third, the FRN could reflect separate signals arising from distinct actor and critic elements. These elements could be instantiated in heterogeneous populations of anterior cingulate neurons or in separate divisions of the prefrontal cortex and basal ganglia. This proposal is in line with existing data that highlight the multifaceted responses of anterior cingulate neurons to different tasks (Bush et al., 2002; Shima & Tanji, 1998).

The FRN is evoked in another scenario that does not involve behavioral responses: observation of aversive outcomes administered to others (Leng & Zhou, 2010; Marco-Pallarés et al., 2010; Yu & Zhou, 2006). This is true even when outcomes do not affect the observer (Leng & Zhou, 2010; Marco-Pallarés et al., 2010). The anterior cingulate represents affective dimensions of pain (Singer et al., 2004). Experiencing and observing pain produce overlapping activation in the anterior cingulate (Singer et al., 2004). The finding that the FRN is also evoked when people observe aversive outcomes dovetails with this result. The relationship between the observer and performer mediates the direction of the FRN, however. When the observer is punished for the performer’s wins, outcomes produce an inverted FRN (Fukushima & Hiraki, 2006; Itagaki & Katayama, 2008; Marco-Pallarés et al., 2010). The experience of aversive outcomes apparently outweighs empathetic responses.

5. Discussion

To behave adaptively, the cognitive system must monitor performance and regulate ongoing behavior. Studies of error detection provided early evidence of such monitoring (Rabbitt, 1966, 1968). The discovery of the error-related negativity (ERN) provided further insight into the neural basis of error detection and cognitive control. More recently, experiments have revealed a frontocentral component that appears after negative feedback (Miltner et al., 1997). Converging evidence indicates that this feedback-related negativity (FRN) arises in the anterior cingulate, a region that transforms motivational and cognitive inputs into actions. Four features of the FRN suggest that it tracks a reinforcement learning process: (1) the FRN represents a quantitative prediction error; (2) the FRN is evoked by rewards and by reward-predicting stimuli; (3) the FRN and behavior change with experience; and (4) the system that produces the FRN is maximally engaged by volitional actions.

5.1. Alternate accounts

RL-ERN is but one account of the FRN (Holroyd & Coles, 2002). According to another proposal, the anterior cingulate monitors response conflict (Botvinick et al., 2001; Yeung et al., 2004). Upon detecting activation of mutually incompatible responses, the anterior cingulate signals the need to increase control to the prefrontal cortex in order to resolve the conflict. The conflict monitoring hypothesis accounts for the ERN in the following manner. Activation of the incorrect response quickly reaches the decision threshold, causing the participant to commit an error. Ongoing stimulus processing increases activation of the correct response. The ERN reflects co-activation of the correct and incorrect responses immediately following errors.

The conflict monitoring hypothesis also accounts for the no-go N2, a frontocentral negativity that appears when participants must inhibit a response (Pritchard et al., 1991). Source localization studies indicate that the N2, like the ERN, arises from the anterior cingulate (Nieuwenhuis et al., 2003; van Veen & Carter, 2002; Yeung et al., 2004). The N2 is maximal when participants must inhibit a prepotent response, as with incongruent trials in the flanker and Stroop tasks. According to the conflict monitoring hypothesis, incongruent trials concurrently activate correct and incorrect responses. The N2 reflects co-activation of the correct and incorrect responses prior to successful resolution.

RL-ERN and the conflict monitoring hypothesis are difficult to compare because RL-ERN focuses on the ERN and the FRN, whereas the conflict monitoring hypothesis focuses on the N2 and the ERN. RL-ERN can be augmented to account for the N2, however, by assuming that conflict resolution incurs cognitive costs, penalizing high conflict states (Botvinick, 2007). Alternatively, high conflict states may have lower expected value because they engender greater error likelihoods (Brown & Braver, 2005).

The conflict monitoring hypothesis has been augmented to account for the FRN (Cockburn & Frank, 2011). In the augmented model, negative feedback decreases activation of the selected response, which reduces lateral inhibition of the unselected response. The FRN reflects co-activation of the selected and unselected responses following negative feedback. The function of such a post-feedback conflict signal is unclear, however. When errors are due to impulsive responding, augmenting cognitive control will improve performance by facilitating stimulus processing. When errors are due to response uncertainty, however, augmenting cognitive control will not directly improve performance. Even if stimuli are fully processed, response uncertainty will remain.

According to another account, the ERN and FRN are evoked by all outcomes, positive and negative alike, that violate expectations (Alexander & Brown, 2011; Jessup et al., 2010; Oliveira et al., 2007). By this view, errors produce an ERN because they are rare. Similarly, negative feedback produces an FRN because participants learn which responses reduce the frequency of losses. Even when outcome likelihoods are equated, losses may be more subjectively surprising because people are overly optimistic (Miller & Ross, 1975). This theory appears to be inconsistent with key findings, however. For example, in a challenging time interval estimation task where participants received negative feedback on 70% of trials (Holroyd & Krigolson, 2007), losses produced an FRN even though they were more likely than wins. Additionally, in probabilistic learning tasks that manipulate outcome likelihoods, ERPs are more negative after high probability losses than after low probability wins (Cohen et al., 2007; Holroyd et al., 2009, 2011; Walsh & Anderson, 2011a, 2011b). In these examples, positive outcomes that violate expectations do not produce negativities, while negative outcomes that confirm expectations do.

According to a final account, the ERN and FRN reflect affective responses of the limbic system to errors and negative feedback (Gehring & Willoughby, 2002; Hajcak & Foti, 2008; Luu et al., 2003). It is not clear where this account’s predictions diverge from RL-ERN and the conflict-monitoring hypothesis. Prediction errors and conflict could trigger negative affect, or negative affect could signal the need to adjust behavior.

5.2. Outstanding questions

In addition to synthesizing research on the neural basis and cognitive significance of the FRN, this review raises several questions. First, does the FRN win/loss asymmetry reflect the limited firing range of dopamine neurons, the superposition of a P300 upon loss waveforms, or something else entirely? Techniques like PCA seem ideal for distinguishing among these accounts, but the results of PCA analyses to date have been conflicting. Careful manipulations aimed at disentangling the N2, the P300, and the FRN (e.g., Donkers & van Boxtel, 2005) will provide insight into this question. Interestingly, fMRI studies have also revealed asymmetries in neural responses to rewards and punishments (Robinson et al., 2010; Seymour et al., 2007; Yacubian et al., 2006). This raises the possibility that the win/loss asymmetry is a general feature of neural reward processing (Daw et al., 2002).

Second, might some FRN results actually arise from component overlap (Holroyd et al., 2008)? Folstein and van Petten (2008) proposed an N2 classification schema that included two classes of anterior N2 components. The first class, to which the ERN and FRN belong, relates to cognitive control. The second class relates to perceptual mismatch detection. Several studies that manipulate perceptual properties of outcome stimuli have shown that neural responses are sensitive to the content and form of feedback (Donkers & van Boxtel, 2005; Jia et al., 2007; Liu & Gehring, 2009). For example, waveforms were most negative following feedback stimuli that conveyed losses and that deviated from an established stimulus template (Donkers & van Boxtel, 2005; Jia et al., 2007). One puzzling feature of the FRN in several studies is that its amplitude is greater following uninformative feedback than negative feedback (Hirsh & Inzlicht, 2008; Holroyd et al., 2006; Nieuwenhuis et al., 2005a). This may reflect the fact that perceptual features of uninformative feedback deviated most from positive and negative feedback, and thus evoked a larger perceptual mismatch N2.

Third, when do behavior and the FRN coincide, and when do they differ? Theories of behavioral control posit that choices can arise from a habitual system or a goal-directed system (Balleine & O’Doherty, 2010; Daw et al., 2005). If the habitual system produces the FRN, experimental manipulations that favor goal-directed control should weaken the association between the FRN and behavior. For example, humans and animals display sensitivity to assays of goal-directedness early in training, but not after extended training (Balleine & O’Doherty, 2010). Consequently, the strength of the association between the FRN and behavior should increase over the course of training. Additionally, instruction promotes goal-directed control by minimizing uncertainty in the goal-directed system’s value estimates. As such, instruction should weaken the association between the FRN and behavior. The results of Walsh & Anderson (2011a) support this prediction.10 Lastly, pharmacological challenges that disrupt the goal-directed system (e.g., midazolam; Frank et al., 2006) should enhance the association between the FRN and behavior. This prediction has not yet been tested.

Fourth, how do heterogeneous signals in the anterior cingulate contribute to the FRN? Although most studies report punishment-sensitive neurons within the anterior cingulate, some neurons show elevated responses to reward, and still others show elevated responses to punishment and reward alike (Fujiwara et al., 2009; Matsumoto et al., 2007; Sallet et al., 2007). Likewise, neuroimaging experiments have shown that while the dorsal anterior cingulate codes negative outcomes, the closely adjacent rostral anterior cingulate and posterior cingulate code positive outcomes (Liu et al., 2011). The anterior cingulate is also sensitive to abstract costs and benefits (Bush et al., 2002; Shima & Tanji, 1998). For example, anterior cingulate neurons signal the value of information conveyed by events (Matsumoto et al., 2007), and the physical costs of performing actions (Kennerley et al., 2009).

Fifth, and finally, how is the FRN affected by other functions of the anterior cingulate? In our research, we have often observed anterior cingulate activity when participants must update internal goal states (Anderson et al., 2008). Unexpected outcomes could conceivably signal the need to update goal states. Additionally, the relationship between FRN activity and behavioral adaptation is logically consistent with this function. Yet RL-ERN ascribes the FRN a separate role in updating action values rather than updating goal states. Future experiments that vary task demands and reward properties will help to characterize the diverse signals that arise in the anterior cingulate, and to understand their impact on the FRN.

Highlights.

The FRN encodes a quantitative reward prediction error.

The FRN shifts back in time from outcomes to stimuli that predict outcomes.

The FRN and behavior change with experience.

The system that produces the FRN is maximally engaged by volitional actions.

Acknowledgements

This project was supported by the National Center for Research Resources and the National Institute of Mental Health through grant T32MH019983 to the first author and the National Institute of Mental Health grant MH068243 to the second author.

Footnotes

1. These findings also support the claim that the ERN is a manifestation of conflict monitoring, a possibility that we return to.

2. Mood disorders (e.g., depression), anxiety disorders (e.g., obsessive compulsive disorder), and schizophrenia are also associated with abnormal ERNs and FRNs (for a review, see Weinberg et al., 2012). Because these disorders have complex pharmacological etiologies, the pathways by which they affect the ERN and FRN are not clear.

3. The P300 is also sensitive to outcome likelihood (Johnson, 1986). By comparing outcomes that are equally likely, one can control for the P300 and isolate the FRN (Holroyd et al., 2009).

4. The same events that produce an FRN cause changes in neural oscillatory activity. Time-frequency analyses show that negative feedback and response errors are accompanied by increased power in the theta (5 to 7 Hz) frequency band (Cavanagh et al., 2010; Cohen et al., 2007; Marco-Pallarés et al., 2008; van de Vijver et al., 2011), and positive feedback is accompanied by increased power in the beta (15 to 30 Hz) frequency band (Cohen et al., 2007; Marco-Pallarés et al., 2008; van de Vijver et al., 2011).

5. Neurological patients with lesions to the lateral prefrontal cortex and basal ganglia also show attenuated responses to errors relative to correct trials (Gehring & Knight, 2000; Ullsperger & von Cramon, 2006). The reduced ERN is thought to arise indirectly from impaired inputs from the lateral prefrontal cortex and basal ganglia to the anterior cingulate.

6. Because few studies report results separately for wins and losses, we classified effects using peak values from grand-averaged waveforms.

7. Hajcak et al. (2006) may not have detected an effect of outcome magnitude on neural activity following wins because they only measured the amplitude of negative deflections in the ERP waveforms.

8. As a further test, we found the value of γ that maximized the correlation between observed and model FRNs for each of the five data sets. This analysis eliminates the need to estimate slope and intercept terms, which do not affect correlations between model and observed FRNs. Consistent with our earlier analysis, the value of γ that maximized the correlation was 0.90.

9. Such null effects are difficult to interpret. Binning trials to create learning curves leaves few observations per time point, reducing statistical power.

10. When instruction dictates how participants must respond, the anterior cingulate becomes less responsive (Walton et al., 2004). The results of Walsh & Anderson (2011a) suggest that when instruction dictates how participants should respond, the anterior cingulate remains engaged.

References

- Alexander WH, Brown JW. Medial prefrontal cortex as an action-outcome predictor. Nat. Neurosci. 2011;14:1338–1344. doi: 10.1038/nn.2921.

- Amalric M, Koob GF. Functionally selective neurochemical afferents and efferents of the mesocorticolimbic and nigrostriatal dopamine system. Prog. Brain Res. 1993;99:209–226. doi: 10.1016/s0079-6123(08)61348-5.

- Amiez C, Joseph JP, Procyk E. Anterior cingulate error-related activity is modulated by predicted reward. Eur. J. Neurosci. 2005;21:3447–3452. doi: 10.1111/j.1460-9568.2005.04170.x.

- Anderson JR, Fincham JM, Qin Y, Stocco A. A central circuit of the mind. Trends Cogn. Sci. 2008;12:136–143. doi: 10.1016/j.tics.2008.01.006.

- Bäckman L, Lindenberger U, Li SC, Nyberg L. Linking cognitive aging to alterations in dopamine neurotransmitter functioning: Recent data and future avenues. Neurosci. Biobehav. Rev. 2010;34:670–677. doi: 10.1016/j.neubiorev.2009.12.008.

- Badgaiyan RD, Posner MI. Mapping the cingulate cortex in response selection and monitoring. Neuroimage. 1998;7:255–260. doi: 10.1006/nimg.1998.0326.

- Baker TE, Holroyd CB. Which way do I go? Neural activation in response to feedback and spatial processing in a virtual T-Maze. Cereb. Cortex. 2009;19:1708–1722. doi: 10.1093/cercor/bhn223.

- Balleine BW, O’Doherty JP. Human and rodent homologies in action control: Corticostriatal determinants of goal-directed and habitual action. Neuropsychopharmacology. 2010;35:48–69. doi: 10.1038/npp.2009.131.

- Bayer HM, Glimcher PW. Midbrain dopamine neurons encode a quantitative reward prediction error signal. Neuron. 2005;47:129–141. doi: 10.1016/j.neuron.2005.05.020.

- Bellebaum C, Daum I. Learning-related changes in reward expectancy are reflected in the feedback-related negativity. Eur. J. Neurosci. 2008;27:1823–1835. doi: 10.1111/j.1460-9568.2008.06138.x.

- Bellebaum C, Kobza S, Thiele S, Daum I. It was not MY fault: Event-related brain potentials in active and observational learning from feedback. Cereb. Cortex. 2010a;20:2874–2883. doi: 10.1093/cercor/bhq038.

- Bellebaum C, Kobza S, Thiele S, Daum I. Processing of expected and unexpected monetary performance outcomes in healthy older subjects. Behav. Neurosci. 2011;125:241–251. doi: 10.1037/a0022536.

- Bellebaum C, Polezzi D, Daum I. It is less than you expected: The feedback-related negativity reflects violations of reward magnitude expectations. Neuropsychologia. 2010b;48:3343–3350. doi: 10.1016/j.neuropsychologia.2010.07.023.

- Benes FM. The development of prefrontal cortex: The maturation of neurotransmitter systems and their interactions. In: Nelson CA, Luciana M, editors. Handbook of Developmental Cognitive Neuroscience. Cambridge: MIT Press; 2001. pp. 79–92.

- Beste C, Saft C, Andrich J, Gold R, Falkenstein M. Error processing in Huntington’s disease. PLOS One. 2006;1:1–5. doi: 10.1371/journal.pone.0000086.

- Boksem MAS, Kostermans E, Milivojevic B, De Cremer D. Social status determines how we monitor and evaluate our performance. Soc. Cogn. Affect. Neurosci. 2012;7:304–313. doi: 10.1093/scan/nsr010.

- Botvinick M. Conflict monitoring and decision making: Reconciling two perspectives on anterior cingulate function. Cogn. Affect. Behav. Neurosci. 2007;7:356–366. doi: 10.3758/cabn.7.4.356.

- Botvinick MM, Braver TS, Barch DM, Carter CS, Cohen JD. Conflict monitoring and cognitive control. Psychol. Rev. 2001;108:624–652. doi: 10.1037/0033-295x.108.3.624.

- Brown JW, Braver TS. Learned predictions of error likelihood in the anterior cingulate cortex. Science. 2005;307:1118–1121. doi: 10.1126/science.1105783.

- Bunzeck N, Dayan P, Dolan RJ, Duzel E. A common mechanism for adaptive scaling of reward and novelty. Hum. Brain Mapp. 2010;31:1380–1394. doi: 10.1002/hbm.20939.

- Bush G, Luu P, Posner MI. Cognitive and emotional influences in anterior cingulate cortex. Trends Cogn. Sci. 2000;4:215–222. doi: 10.1016/s1364-6613(00)01483-2.

- Bush G, Vogt BA, Holmes J, Dale AM, Greve D, Jenike MA, Rosen BR. Dorsal anterior cingulate cortex: A role in reward-based decision making. Proc. Natl. Acad. Sci. U.S.A. 2002;99:523–528. doi: 10.1073/pnas.012470999.

- Camille N, Tsuchida A, Fellows LK. Double dissociation of stimulus-value and action-value learning in humans with orbitofrontal or anterior cingulate cortex damage. J. Neurosci. 2011;31:15048–15052. doi: 10.1523/JNEUROSCI.3164-11.2011.

- Cardinal RN, Parkinson JA, Hall J, Everitt BJ. Emotion and motivation: The role of the amygdala, ventral striatum, and prefrontal cortex. Neurosci. Biobehav. Rev. 2002;26:321–352. doi: 10.1016/s0149-7634(02)00007-6.

- Carlson JM, Foti D, Mujica-Parodi LR, Harmon-Jones E, Hajcak G. Ventral striatal and medial prefrontal BOLD activation is correlated with reward-related electrocortical activity: A combined ERP and fMRI study. Neuroimage. 2011;57:1608–1616. doi: 10.1016/j.neuroimage.2011.05.037.

- Cavanagh JF, Frank MJ, Klein TJ, Allen JJB. Frontal theta links prediction errors to behavioral adaptation in reinforcement learning. Neuroimage. 2010;49:3198–3209. doi: 10.1016/j.neuroimage.2009.11.080.

- Chase HW, Swainson R, Durham L, Benham L, Cools R. Feedback-related negativity codes prediction error but not behavioral adjustment during probabilistic reversal learning. J. Cogn. Neurosci. 2011;23:936–946. doi: 10.1162/jocn.2010.21456.

- Chergui K, Suaud-Chagny MF, Gonon F. Nonlinear relationship between impulse flow, dopamine release and dopamine elimination in the rat brain in vivo. Neuroscience. 1994;62:641–645. doi: 10.1016/0306-4522(94)90465-0.

- Cockburn J, Frank M. Reinforcement learning, conflict monitoring, and cognitive control: An integrative model of cingulate-striatal interactions and the ERN. In: Mars R, Sallet J, Rushworth M, Yeung N, editors. Neural Basis of Motivational and Cognitive Control. Cambridge: MIT Press; 2011. pp. 311–331.

- Cohen MX, Elger CE, Ranganath C. Reward expectation modulates feedback-related negativity and EEG spectra. Neuroimage. 2007;35:968–978. doi: 10.1016/j.neuroimage.2006.11.056.

- Cohen MX, Ranganath C. Reinforcement learning signals predict future decisions. J. Neurosci. 2007;27:371–378. doi: 10.1523/JNEUROSCI.4421-06.2007.

- Coles MGH, Scheffers MK, Holroyd CB. Why is there an ERN/Ne on correct trials? Response representations, stimulus-related components, and the theory of error-processing. Biol. Psychol. 2002;56:173–189. doi: 10.1016/s0301-0511(01)00076-x.

- Daw ND, Kakade S, Dayan P. Opponent interactions between serotonin and dopamine. Neural Netw. 2002;15:603–616. doi: 10.1016/s0893-6080(02)00052-7.

- Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat. Neurosci. 2005;8:1704–1711. doi: 10.1038/nn1560.

- de Bruijn ERA, Hulstijn W, Verkes RJ, Ruigt GSF, Sabbe BGC. Drug-induced stimulation and suppression of action monitoring in healthy volunteers. Psychopharmacology (Berl.) 2004;177:151–160. doi: 10.1007/s00213-004-1915-6.

- de Bruijn ERA, Sabbe BGC, Hulstijn W, Ruigt GSF, Verkes RJ. Effects of antipsychotic and antidepressant drugs on action monitoring in healthy volunteers. Brain Res. 2006;1105:122–129. doi: 10.1016/j.brainres.2006.01.006.

- Dehaene S, Posner MI, Tucker DM. Localization of a neural system for error detection and compensation. Psychol. Sci. 1994;5:303–305.

- Dien J. Evaluating two-step PCA of ERP data with Geomin, Infomax, Oblimin, Promax, and Varimax rotations. Psychophysiology. 2010;47:170–183. doi: 10.1111/j.1469-8986.2009.00885.x.

- Doñamayor N, Marco-Pallarés J, Heldmann M, Schoenfeld MA, Münte TF. Temporal dynamics of reward processing revealed by magnetoencephalography. Hum. Brain Mapp. 2011;32:2228–2240. doi: 10.1002/hbm.21184.

- Donkers FCL, van Boxtel GJM. Mediofrontal negativities to averted gains and losses in the slot-machine task: A further investigation. J. Psychophysiol. 2005;19:256–262.

- Dunning JP, Hajcak G. Error-related negativities elicited by monetary loss and cues that predict loss. Neuroreport. 2007;18:1875–1878. doi: 10.1097/WNR.0b013e3282f0d50b.

- Elliott R, Newman JL, Longe OA, Deakin JFW. Instrumental responding for rewards is associated with enhanced neuronal response in subcortical reward systems. Neuroimage. 2004;21:984–990. doi: 10.1016/j.neuroimage.2003.10.010.

- Emeric EE, Brown JW, Leslie M, Pouget P, Stuphorn V, Schall JD. Performance monitoring local field potentials in the medial frontal cortex of primates: Anterior cingulate cortex. J. Neurophysiol. 2008;99:759–772. doi: 10.1152/jn.00896.2006.

- Eppinger B, Kray J, Mock B, Mecklinger A. Better or worse than expected? Aging, learning, and the ERN. Neuropsychologia. 2008;46:521–539. doi: 10.1016/j.neuropsychologia.2007.09.001.

- Eppinger B, Mock B, Kray J. Developmental differences in learning and error processing: Evidence from ERPs. Psychophysiology. 2009;46:1043–1053. doi: 10.1111/j.1469-8986.2009.00838.x.

- Falkenstein M, Hielscher H, Dziobek I, Schwarzenau P, Hoormann J, Sundermann B, Hohnsbein J. Action monitoring, error detection, and the basal ganglia: An ERP study. Neuroreport. 2001;12:157–161. doi: 10.1097/00001756-200101220-00039.

- Falkenstein M, Hohnsbein J, Hoormann J, Blanke L. Effects of crossmodal divided attention on late ERP components. II. Error processing in choice reaction tasks. Electroencephalogr. Clin. Neurophysiol. 1991;78:447–455. doi: 10.1016/0013-4694(91)90062-9.

- Folstein JR, van Petten CV. Influence of cognitive control and mismatch on the N2 component of the ERP: A review. Psychophysiology. 2008;45:152–170. doi: 10.1111/j.1469-8986.2007.00602.x.

- Foti D, Hajcak G. Depression and reduced sensitivity to non-rewards versus rewards: Evidence from event-related potentials. Biol. Psychol. 2009;81:1–8. doi: 10.1016/j.biopsycho.2008.12.004.

- Foti D, Weinberg A, Dien J, Hajcak G. Event-related potential activity in the basal ganglia differentiates rewards from non-rewards: Temporospatial principal components analysis and source localization of the feedback negativity. Hum. Brain Mapp. 2011;32:2207–2216. doi: 10.1002/hbm.21182.

- Frank MJ, O’Reilly RC, Curran T. When memory fails, intuition reigns. Midazolam enhances implicit inference in humans. Psychol. Sci. 2006;17:700–707. doi: 10.1111/j.1467-9280.2006.01769.x.

- Fujiwara J, Tobler PN, Taira M, Iijima T, Tsutsui KI. Segregated and integrated coding of reward and punishment in the cingulate cortex. J. Neurophysiol. 2009;101:3284–3293. doi: 10.1152/jn.90909.2008.

- Fukushima H, Hiraki K. Perceiving an opponent’s loss: Gender-related differences in the medial-frontal negativity. Soc. Cogn. Affect. Neurosci. 2006;1:149–157. doi: 10.1093/scan/nsl020.

- Gehring WJ, Goss B, Coles MGH, Meyer DE, Donchin E. A neural system for error detection and compensation. Psychol. Sci. 1993;4:385–390.

- Gehring WJ, Knight RT. Prefrontal-cingulate interactions in action monitoring. Nat. Neurosci. 2000;3:516–520. doi: 10.1038/74899.

- Gehring WJ, Willoughby AR. The medial frontal cortex and the rapid processing of monetary gains and losses. Science. 2002;295:2279–2282. doi: 10.1126/science.1066893.

- Gemba H, Sasaki K, Brooks VB. “Error” potentials in limbic cortex (anterior cingulate area 24) of monkeys during motor learning. Neurosci. Lett. 1986;70:223–227. doi: 10.1016/0304-3940(86)90467-2.

- Gentsch A, Ullsperger P, Ullsperger M. Dissociable medial frontal negativities from a common monitoring system for self- and externally caused failure of goal achievement. Neuroimage. 2009;47:2023–2030. doi: 10.1016/j.neuroimage.2009.05.064.

- Godlove DC, Emeric EE, Segovis CM, Young MS, Schall JD, Woodman GF. Event-related potentials elicited by errors during the stop-signal task. I. Macaque monkeys. J. Neurosci. 2011;31:15640–15649. doi: 10.1523/JNEUROSCI.3349-11.2011.

- Goyer JP, Woldorff MG, Huettel SA. Rapid electrophysiological brain responses are influenced by both valence and magnitude of monetary reward. J. Cogn. Neurosci. 2008;20:2058–2069. doi: 10.1162/jocn.2008.20134.