Abstract

It is well documented that the human amygdala responds strongly to human faces, especially when they depict negative emotions. The extent to which the amygdala also responds to other animate entities - as well as to inanimate objects - and how that response is modulated by the object's perceived affective valence and arousal value remains unclear. To address these issues, subjects performed a repetition detection task on photographs of negative, neutral, and positive faces, animals, and manipulable objects equated for emotional valence and arousal level. Both the left and right amygdala responded more to animate entities than to manipulable objects, especially for negative stimuli (fearful faces and threatening animals versus weapons) and for neutral stimuli (faces with neutral expressions and neutral animals versus tools). Thus, in the absence of contextual cues, the human amygdala responds to threat associated with some object categories (animate things) but not others (weapons). Although viewing weapons failed to activate the amygdala, it did elicit an enhanced response, relative to viewing other manipulable objects, in dorsal stream regions linked to object action. Thus, our findings suggest two circuits underpinning an automatic response to threatening stimuli: an amygdala-based circuit for animate entities, and a cortex-based circuit for responding to manmade, manipulable objects.

Keywords: Amygdala, fMRI, object category, faces, affect

1. Introduction

A substantial body of evidence has established that the human amygdala responds strongly to material judged to be arousing or emotion laden, particularly for negative and threatening stimuli (for reviews, see Adolphs, 2008; Phelps & LeDoux, 2005; Zald, 2003). It has also been well documented that the amygdala responds selectively to visual depictions of humans, irrespective of the affective valence – ranging from images of human faces with neutral expressions (e.g., Breiter et al., 1996; Fitzgerald et al., 2005; Wright & Liu, 2006), to more abstract depictions of biological motion (point-light stimuli, Bonda et al., 1996) and social interactions (moving geometric shapes interpreted as social; Castelli et al., 2000, 2002; Martin & Weisberg, 2003; Wheatley et al., 2007). Thus, the human amygdala appears to be particularly tuned to processing threat and visual information about others.

One possibility is that the response of the human amygdala to images of other individuals is related to a more general advantage for detecting any animate entity, perhaps due, at least in part, to a bias for detecting threat. If so, then this threat-detection bias should extend to non-human animals that have posed a threat to our survival throughout our evolutionary history. In support of this conjecture, studies have shown that fear is more readily learned, and more resistant to extinction, in response to animals that threaten survival than to objects that have only recently emerged in our cultural history (for review, see Ohman & Mineka, 2001; Mineka & Ohman, 2002).

It has also been suggested that the human visual attention system is better tuned to detecting animals than other objects (New et al., 2007). Moreover, this detection advantage may be particularly strong for threatening humans and animals. For example, using visual search paradigms, a number of studies have found that we are quicker to detect angry faces than happy faces (e.g., Fox et al., 2000; Ohman et al., 2001a), and faster to detect fear-relevant (e.g., spiders) than non-fear relevant objects (e.g., flowers) (Ohman et al., 2001b). These and related findings have been used to support the idea that evolution has provided us with a hardwired “fear module”, assumed to depend on the amygdala, that allows us to quickly and automatically detect threatening stimuli (Ohman & Mineka, 2001; Seligman, 1970). Such an amygdala-based neural mechanism would undoubtedly provide a survival advantage. Thus, a strong prediction from this view is that we should not only be faster to detect threatening relative to non-threatening objects (the threat superiority effect), we also should be faster to detect evolutionary-relevant than non-evolutionary threatening objects. Contrary to this prediction, however, Blanchette (2006) found that, although subjects were quicker to detect fear-relevant stimuli (e.g., snakes) than non-fear-relevant objects (e.g., flowers), subjects were not faster to detect evolutionary-relevant (snakes, spiders) than modern (guns, syringes) threats, supporting a general, rather than a category-specific threat-superiority effect. Clearly, evolution could not have equipped us to detect potential threats from recently created objects (Blanchette, 2006). Thus, Blanchette's findings suggest the critical property that guides visual detection under these conditions is perceived threat, not evolutionary significance (Blanchette, 2006).

Despite these behavioral findings, studies on neural underpinnings of the interaction between an object category and perceived emotional valence and arousal are lacking. Here, we addressed this issue using fMRI while subjects viewed images of faces, animals, and manipulable objects equated for affective valence and arousal level. To evaluate the amygdala's automatic response to different object categories, subjects performed a simple repetition detection task while viewing pictures from different object categories displayed against a neutral background (i.e., devoid of contextual information). Categories included faces with fearful, neutral, and happy expressions, animals judged to be threatening, neutral, and pleasant, and threatening, neutral, and pleasant manipulable objects. We predicted that threatening objects would yield an enhanced amygdala response regardless of object category, and that this threat-superiority effect would be greater for animate entities (human faces, animals) than for manmade objects.

2. Methods

2.1 Subjects

Sixteen individuals (8 male; mean ± SD age = 32.58 ± 7.10 years) participated in the fMRI experiment. A separate group of 14 subjects (6 male; mean ± SD age = 30.33 ± 7.03 years) participated in the emotional rating of the stimuli that were used in the brain imaging study. All subjects were right-handed, native English speakers, and gave written informed consent in accordance with procedures and protocols approved by the NIH Institutional Review Board.

2.2 Stimuli

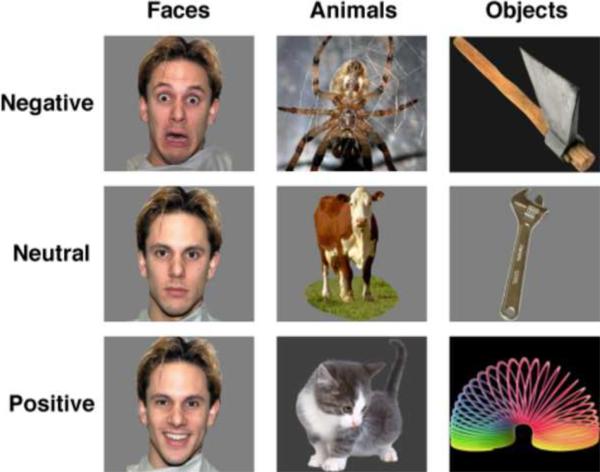

The stimuli consisted of photographs of human faces, animals, and manipulable objects, displayed against a uniform gray or black background (Figure 1). Faces included 48 individuals (24 males, 24 females) who posed with fearful, neutral and happy expressions. Of these, 44 were drawn from the NimStim Emotional Face Stimuli set (Tottenham et al., 2009) and 4 others were selected from the Karolinska Directed Emotional Faces (KDEF) set (Lundqvist et al., 1998). Photographs of 144 animals and 144 manipulable objects were selected from a larger picture corpus following a rating procedure that allowed us to equate the categories on affective valence and level of arousal (see below). Based on these ratings the animals were classified as negative (threatening, e.g., spider, snake), neutral (e.g., cow, sheep) and positive (e.g., kitten, puppy); manipulable objects were classified as negative (weapons, e.g., gun, knife), neutral (common tools, e.g., hammer, wrench), and positive (toys, e.g., slinky, spinning top) (see the Appendix for a complete list of animals and manipulable objects). Control stimuli consisted of phase-scrambled images of each object picture that preserved the color and the spatial frequency of the original image.

Figure 1.

Examples of stimuli used in the fMRI study.
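The phase-scrambled control images described above keep the amplitude spectrum of the original picture (and hence its spatial-frequency content) while destroying recognizable structure. The following is a minimal one-dimensional sketch of that idea in plain Python. It is not the procedure actually used in the study, which is not described in detail; the function names `dft`, `idft`, and `phase_scramble` and the sample pixel row are hypothetical.

```python
import cmath
import math
import random


def dft(x):
    """Naive discrete Fourier transform of a real or complex sequence."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum):
    """Naive inverse discrete Fourier transform."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def phase_scramble(signal, rng):
    """Randomize Fourier phases while keeping every amplitude unchanged.

    Phases are assigned in conjugate-symmetric pairs so the output stays real.
    The DC term (and the Nyquist term for even lengths) is left untouched,
    which preserves the mean intensity.
    """
    n = len(signal)
    spectrum = dft(signal)
    scrambled = list(spectrum)
    for k in range(1, (n + 1) // 2):
        phase = rng.uniform(-math.pi, math.pi)
        scrambled[k] = cmath.rect(abs(spectrum[k]), phase)
        scrambled[n - k] = scrambled[k].conjugate()
    return [value.real for value in idft(scrambled)]


# Hypothetical row of pixel intensities (illustration only)
row = [0.1, 0.5, 0.9, 0.4, 0.3, 0.8, 0.2, 0.6]
scrambled_row = phase_scramble(row, random.Random(0))
```

For a color photograph, one option would be to apply the same random phases to each color channel of the two-dimensional transform; that choice is an assumption here, not a detail reported in the text.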

2.3 Picture Ratings

Subjects were presented with an initial picture set that included 221 animal photographs (31 different basic level animal categories; e.g., dog, cat) and 219 manipulable object photographs (34 different basic level object categories; e.g., hammer, saw). There were 6–12 exemplars of each basic level object (e.g., six pictures of different hammers). For each photograph, subjects (N = 14) provided a rating of affective valence (1 = extremely negative; 9 = extremely positive) and arousal (1 = extremely calm; 9 = extremely excited/aroused). When rating affective valence, subjects were instructed to use the “negative” end of the scale to indicate how much “fear or threat” they associated with the object. They were also told to rate the object type (i.e., the basic object category; gun, knife, etc.), not the specific photograph. During the rating tasks, all of the photographs, including the 144 faces (fearful, neutral and happy expressions of 48 individuals), were presented in random order. Each picture remained visible on the screen until the subject completed his/her rating. Based on these ratings, the faces, animals, and manipulable objects were divided into three emotional valence sets: unpleasant, neutral, and pleasant. The final stimulus set included eight basic level animals and eight basic level objects in each valence condition, with each object type represented, on average, by 6 exemplars (range 3–9), and with categories balanced for emotional valence and arousal ratings (Figure 1). Animals and manipulable objects were also equated for name word length, word frequency, familiarity and imageability based on the MRC Psycholinguistic Database (Coltheart, 1981).

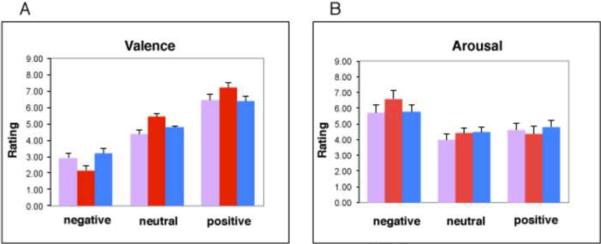

An ANOVA confirmed that the final set of items used to construct the object categories were equated, overall, with regard to ratings of affective valence and arousal (valence ratings, main effect of Category, p > 0.10; arousal ratings, main effect of Category, p > 0.15) (Figure 2). By design, the main effect of Affect was significant for the ratings of affective valence (F = 58.2, p < 0.0001), with significant differences between affect levels (negative, neutral, positive) for each stimulus category (faces, animals, objects) (all p's < 0.0001). By design, there was also a main effect of Affect for the arousal ratings (F = 16.22, p < 0.0001). Negative stimuli had higher arousal rating scores than positive (p < 0.01) and neutral stimuli (p < 0.0001). Positive and neutral stimuli had comparable ratings of arousal (p = 0.83).

Figure 2.

Mean (±SEM) ratings of affective valence (A) and arousal (B) for the photographs assigned to the negative, neutral, and positive blocks for each category (faces = pink, animals = red, manipulable objects = blue).

In addition, the Category × Affect and the Category × Arousal interactions were significant (F = 11.99, p < .001, and F = 3.22, p < .05, respectively). However, nearly all of the specific contrasts between categories at each valence and arousal level were non-significant. The only exceptions were that the threatening animals were rated as more negative than the fearful faces, and the neutral animals were rated as more pleasant than the neutral faces (p < .05; Bonferroni corrected). Overall, as illustrated in Figure 2, the ratings of the animals tended to be a bit more extreme (more negative, more positive) than the ratings of the faces and manipulable objects. Subjects simply find bats, spiders, etc. more negative than faces expressing fear; dogs, cats, etc. more positive than faces with neutral expressions; and puppies, kittens, etc. more positive than smiling faces.
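As a reminder of what the Bonferroni correction applied to these contrasts does, here is a minimal sketch: each uncorrected p-value is multiplied by the number of comparisons and capped at 1. The number of comparisons in the family is not stated in the text, so the three contrasts and their p-values below are purely hypothetical, as is the helper name `bonferroni`.

```python
def bonferroni(p_values):
    """Return Bonferroni-adjusted p-values: p * m, capped at 1.0."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical uncorrected p-values for three between-category contrasts
raw = {
    "animals vs faces": 0.004,
    "animals vs objects": 0.030,
    "faces vs objects": 0.400,
}
adjusted = dict(zip(raw, bonferroni(list(raw.values()))))

# Contrasts that remain significant at the .05 level after correction
significant = [name for name, p in adjusted.items() if p < 0.05]
```

With these made-up inputs, a contrast that looks significant uncorrected (0.030) no longer passes once the family of three tests is taken into account.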

2.4 fMRI procedure

Pictures were blocked by category and affect. Each block consisted of 20 pictures (16 different objects, 4 repeats) and lasted 30 s, with each picture presented for 1000 ms followed by a 500 ms fixation cross. To ensure that subjects attended to each image, they were instructed to press a button whenever the exact same picture was repeated (repetition detection). Each of the nine runs contained six blocks, alternating between picture blocks and their corresponding scrambled-image blocks, for a total of 27 picture blocks (3 unique blocks for each of the 9 category × affect combinations) and 27 scrambled image blocks.
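The block arithmetic in this section can be checked with a few lines of Python. All numbers come from the text (the 3000 ms TR is taken from section 2.5); the variable names are illustrative, and the sketch assumes the reading in which each run holds six blocks in total, which is the reading consistent with the stated totals of 27 picture and 27 scrambled blocks.

```python
PICTURE_MS = 1000        # picture duration
FIXATION_MS = 500        # fixation cross after each picture
PICTURES_PER_BLOCK = 20  # 16 different objects + 4 repeats
BLOCKS_PER_RUN = 6       # picture and scrambled blocks combined (assumed reading)
RUNS = 9
CONDITIONS = 9           # 3 categories x 3 affect levels
TR_MS = 3000             # EPI repetition time from section 2.5

block_seconds = PICTURES_PER_BLOCK * (PICTURE_MS + FIXATION_MS) / 1000
total_blocks = BLOCKS_PER_RUN * RUNS
picture_blocks = total_blocks // 2              # half pictures, half scrambled
blocks_per_condition = picture_blocks // CONDITIONS
volumes_per_block = int(block_seconds * 1000) // TR_MS

print(block_seconds, total_blocks, picture_blocks, blocks_per_condition, volumes_per_block)
```

Each 30 s block therefore spans 10 EPI volumes, and each category × affect combination is sampled in 3 blocks.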

2.5 MRI acquisition

MR data were collected on a General Electric (GE) 3 Tesla Signa scanner. Functional data were acquired using a gradient echo, echo-planar imaging (EPI) sequence. Anatomical data were acquired using a high-resolution MP-RAGE sequence (TR = 7.6 ms, flip angle = 6°, FOV = 22 cm, 224 × 224 matrix, resolution = 0.98 × 0.98 × 1.2 mm³) after functional scanning. A GE 8-channel arrayed head coil was used. The parameters for the EPI sequence were TR = 3000 ms, TE = 40 ms, flip angle = 90°, FOV = 24 cm, matrix = 96 × 96, 34 slices, resolution = 2.5 × 2.5 × 3 mm³. These imaging parameters have been shown to yield sufficient tSNR to detect significant activity in the amygdala, as well as in entorhinal and perirhinal cortices (Bellgowan et al., 2006, 2009).

2.6 Image analysis

AFNI was used for imaging data pre-processing and statistical analysis. The first three EPI volumes in each run were discarded to allow the magnetization to reach equilibrium. The remaining volumes were registered, smoothed with an RMS width of 3 mm (FWHM = 4.08 mm), and scaled to a mean of 100. Multiple regression was used to calculate the response to each condition compared with the scrambled baseline for each subject. The regression model included 9 regressors of interest (a gamma-variate function for each condition) and 6 regressors of non-interest (motion parameters). Anatomical and statistical volumes were then warped into the standard stereotaxic space of the Talairach and Tournoux atlas (1988).
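The two smoothing widths quoted above describe the same kernel. A minimal sketch of the conversion follows, assuming that "RMS width" denotes the three-dimensional root-mean-square radius of an isotropic Gaussian (σ·√3); this is an assumption about the convention, though it reproduces the reported 4.08 mm. The sketch also shows the scaling of a voxel time series to a mean of 100, after which regression coefficients can be read as percent signal change. The function names and the sample time series are hypothetical.

```python
import math


def rms_to_sigma(rms_mm):
    """Per-axis sigma of an isotropic 3D Gaussian from its RMS radius (assumed convention)."""
    return rms_mm / math.sqrt(3.0)


def sigma_to_fwhm(sigma_mm):
    """Full width at half maximum of a Gaussian with the given sigma."""
    return 2.0 * math.sqrt(2.0 * math.log(2.0)) * sigma_mm


def scale_to_mean_100(series):
    """Rescale a voxel time series so that its mean equals 100."""
    mean = sum(series) / len(series)
    return [100.0 * value / mean for value in series]


fwhm_mm = sigma_to_fwhm(rms_to_sigma(3.0))
print(f"RMS 3 mm corresponds to FWHM {fwhm_mm:.2f} mm")

# Hypothetical raw signal values for one voxel
scaled = scale_to_mean_100([980.0, 1000.0, 1020.0])
```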

To evaluate activity in the amygdala, an anatomical mask was created for each subject and placed into standardized stereotaxic space. For each subject, the left and right amygdalae were manually drawn on the subject's T1 image. The amygdala-hippocampal boundary was first identified and marked in each hemisphere using the sagittal view. Then, in the coronal view, the amygdala was traced starting from its inferolateral border (for details see Doty et al., 2008). These anatomical masks were averaged to create a group anatomical mask that consisted of all voxels showing overlap for at least 75% of the standardized individual subject amygdala masks (Volume = 1302 mm³ in the left and 1672 mm³ in the right). Beta weights were extracted from each subject, averaged across all voxels in the amygdala mask, and evaluated with a mixed-effects ANOVA, with subject as a random factor, and Category (Faces, Animals, Objects) and Affect (Positive, Neutral, Negative) as fixed factors. Activity outside the amygdala was evaluated with a voxelwise mixed-effects ANOVA, with subject as a random factor, and Category and Affect as fixed factors. These activations were corrected to p < 0.01 using the AlphaSim program in AFNI (Volume = 234 mm³).
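The group-mask step described above (keep voxels that fall inside the amygdala for at least 75% of subjects, then average beta weights within that mask) can be sketched as follows. This is an illustration in plain Python on flattened toy data, not the AFNI commands used in the study; the function names and all values are hypothetical.

```python
def group_mask(subject_masks, min_fraction=0.75):
    """Keep voxels labeled as amygdala in at least `min_fraction` of subjects."""
    n_subjects = len(subject_masks)
    n_voxels = len(subject_masks[0])
    counts = [sum(mask[v] for mask in subject_masks) for v in range(n_voxels)]
    return [count / n_subjects >= min_fraction for count in counts]


def roi_mean(beta_map, mask):
    """Average one subject's beta weights over the voxels inside the mask."""
    inside = [beta for beta, keep in zip(beta_map, mask) if keep]
    return sum(inside) / len(inside)


# Hypothetical binary masks: 4 subjects x 5 voxels, already in standard space
masks = [
    [1, 1, 1, 0, 0],
    [1, 1, 0, 0, 0],
    [1, 1, 1, 1, 0],
    [1, 0, 1, 0, 0],
]
mask = group_mask(masks)

# Hypothetical beta weights for one subject and one condition
betas = [0.2, 0.4, 0.6, 9.0, 9.0]
mean_beta = roi_mean(betas, mask)
```

In this toy case the first three voxels reach the 75% criterion, so the two outlying values outside the mask do not affect the ROI average that would enter the ANOVA.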

3. Results

3.1 Behavioral data

During scanning, subjects responded quickly and accurately to stimulus repetitions. Analyses of the accuracy and reaction time data failed to reveal any effect of category, affect, or their interaction (all F's < 1.0). Thus, the imaging data could be interpreted without concern about behavioral differences across conditions.

3.2 Imaging data

3.2.1 Evaluating amygdala activity

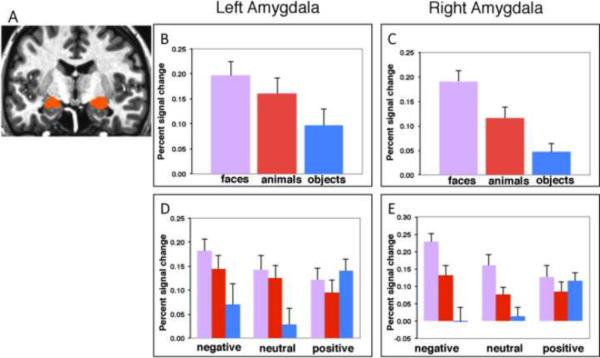

A repeated-measures ANOVA of the BOLD responses averaged across all amygdala voxels revealed a main effect of Category, bilaterally (p < 0.005 in each hemisphere), with faces and animals each evoking stronger activity than the manipulable objects in the left (faces > objects, p < 0.005; animals > objects, p < 0.015) and right (faces > objects, p < 0.0001; animals > objects, p < 0.05) amygdala. In addition, faces evoked stronger activity than animals in the right (p < 0.01), but not in the left amygdala (p > 0.20) (Figure 3 B & C). Although the main effect of Affective Valence was not significant (p > 0.15 in the left and in the right amygdala), responses to the different categories were modulated by affective valence, especially in the right amygdala (Category × Affect, p < 0.01 in the right, p < 0.07 in the left). As illustrated in Figure 3 D & E, fearful faces elicited a stronger response than did threatening animals (p < .005) and neutral faces elicited a stronger response than common animals (p < .06) in the right amygdala (no significant face versus animal differences were noted in the left amygdala). In addition, fearful faces and threatening animals elicited stronger amygdala responses than weapons (fearful faces > weapons, p < 0.0001 in the right, p = 0.015 in the left amygdala; threatening animals > weapons, p < 0.05 in the right, p = 0.085 in the left amygdala), and neutral faces and common animals elicited stronger responses than tools (neutral faces > tools, p < 0.001 in the right, p < 0.01 in the left amygdala; neutral animals > tools, p < 0.06 in the right and p = 0.05 in the left amygdala).

Figure 3.

(A) Group anatomical amygdala mask. (B–E) Histograms show mean (±SEM) percent signal change in the left and right amygdala in response to faces (pink), animals (red), and manipulable objects (blue) for the main effect of Category (B & C), and the Category × Affect interaction (D & E). The percent signal change to the weapons (negative objects), relative to the scrambled object baseline, was −.002 (see text for details).

This enhanced response to animate entities, however, did not hold for stimuli assigned a positive valence (happy faces, pleasant animals, and toys). The lack of category differences for positive stimuli was largely due to the aberrant response to toys, relative to the other object categories (Figure 3 D & E). Specifically, whereas faces and animals assigned a positive valence produced the weakest amygdala activity relative to the same-category stimuli assigned a negative or neutral valence, viewing toys produced the strongest amygdala activity relative to weapons and tools.

3.2.2 Evaluating responses to toys

We performed two post-hoc analyses to explore possible factors contributing to the enhanced amygdala response to toys relative to the other manmade, manipulable objects. The first analysis focused on the influence of object color and potential spatial frequency differences between the photographs of toys and the other manipulable objects. Analysis of the responses to the phase-scrambled images from each category (which preserved color and spatial frequency information of the original objects) failed to support this possibility. No amygdala activity was found for the scrambled images of the toys relative to the scrambled images of weapons or tools (p's > 0.05). A second set of analyses focused on the possibility that the toys were more associated with social factors than the other manipulable objects. To explore this possibility, we asked subjects questions about the different types of manipulable objects used in our study (N = 14, 6 male, none of whom participated in the functional imaging study or in the initial stimulus ratings study). The questions were designed to probe manipulability (How much hand movement is needed to use this object?), self-propelled motion (Once set in motion, how much do the actions of this object appear to continue on their own?), and social interaction (When you think about this object, to what extent is this object associated with people interacting with each other?). Each question was answered by giving a rating ranging from 1 (“not at all”) to 9 (“very much”). Analysis of these data indicated that the toys were judged to be less manipulable than tools (p < 0.05) and were rated as being more likely to move on their own relative to tools (p < 0.001); toys and weapons did not differ on either probe (p's > 0.20). The toys were also judged to be more associated with people interacting with each other than were either the tools (p < 0.001) or the weapons (p < .05).

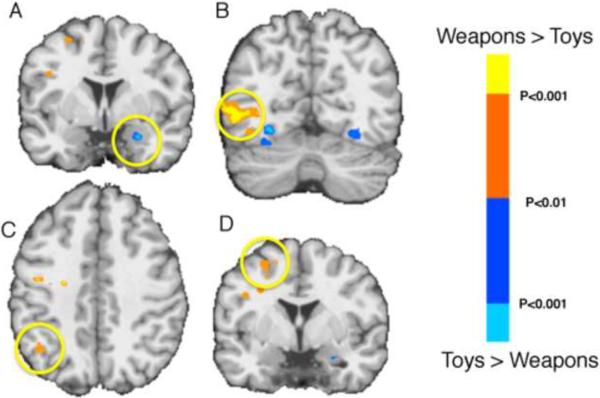

3.2.3 Evaluating responses to weapons

The amygdala's response to the weapons was also particularly noteworthy because these objects were judged to be as threatening/negative, and as arousing, as the fearful faces and the threatening animals. Nevertheless, neither the weapons nor the tools produced a significant response in either the left or right amygdala, even when compared to their scrambled object images (p > 0.13 in the left and p > 0.90 in the right for weapons, p > 0.40 in the left and p > 0.60 in the right for tools, relative to their scrambled image baseline). To explore the response to the manipulable objects in greater detail, we performed a whole-brain, voxelwise analysis. Consistent with previous findings (e.g., Chao et al., 2002; Mahon et al., 2007), relative to viewing animals and faces, the manipulable objects yielded activity in the left medial region of the fusiform gyrus (−30 −26 −17 for objects > animals, and −30 −30 −16 for objects > faces), as well as in regions of the dorsal stream in the left hemisphere: a posterior region of the middle temporal gyrus (−47 −63 −5 for objects > animals, and −39 −60 −3 for objects > faces) and the intraparietal sulcus (−47 −42 +35 for objects > animals, and −45 −33 +37 for objects > faces). In each of these dorsal stream regions there was a stronger response to viewing the weapons than the toys, whereas viewing the toys yielded greater activity in the amygdala, consistent with the ROI analysis of the amygdala (Figure 4).

Figure 4.

Responses to weapons and toys. Regions showing enhanced responses to weapons (relative to toys; orange/yellow spectrum) and toys (relative to weapons; blue spectrum) (p < .01). Yellow circle indicates greater activity for viewing photographs of toys than weapons in the right amygdala (A), and greater activity for viewing weapons in left middle temporal gyrus (B) (−38 −63 −6), left posterior parietal cortex (C) (−20 −55 +43), and left motor/premotor cortex (D) (−33 −6 +34). The left middle temporal and parietal regions were also identified by contrasting viewing photographs of tools to viewing photographs of neutral animals. Activity associated with viewing weapons was significantly greater than toys in these independently localized regions (p's < .01).

4. Discussion

There were four main findings. (1) Although matched overall on ratings of affective valence and arousal, human faces and animals produced stronger activity than manipulable objects in the left and right amygdala. (2) Faces produced stronger activity than animals in the right, but not left, amygdala. In particular, in the right amygdala, viewing fearful faces elicited a stronger response than viewing threatening animals, and neutral faces elicited a stronger response than common animals. (3) Viewing weapons failed to elicit a response in the amygdala, but did produce a stronger response than other manipulable objects in regions of the dorsal stream typically associated with object manipulation and action. (4) The enhanced amygdala response to animate entities was not found for stimuli equated for positive valence. Viewing manipulable objects associated with positive affect (toys) elicited a response in the left and right amygdala that was greater than the response to viewing tools and viewing weapons, and comparable to the response to happy faces and animals associated with a positive affect.

Overall, our results provided relatively strong support for the idea that the amygdala is part of the domain-specific circuitry for quickly responding to and representing information about animate entities (Adolphs, 2009; Caramazza & Shelton, 1998; Martin, 2009; Pessoa & Adolphs, 2010; Phelps & LeDoux, 2005; Simmons & Martin, 2011). Relative to the manipulable objects, not only was there a stronger response in the amygdala to viewing photographs of faces and animals, but neither the weapons nor the tools elicited an amygdala response over and above the response to the scrambled images. Moreover, for animate stimuli, the amygdala seems to be particularly tuned to images of human faces, showing enhanced responses to faces relative to animals, especially in the right amygdala. This face-superiority effect occurred even though the animal stimuli were assigned a more negative rating than the fearful faces and a more positive rating than the neutral faces. The right hemisphere superiority for faces is consistent with both neuropsychological (e.g., Bouvier & Engel, 2006; Drane et al., 2008) and neuroimaging (e.g., Kriegeskorte et al., 2007) investigations of face recognition in posterior and anterior temporal cortices. Our findings show that for the amygdala, the right hemisphere face recognition bias holds not only in comparison to inanimate objects, but also in comparison to another category of animate things.

The amygdala response to the faces and animals was strongest for the stimuli judged to have the most negative valence and the highest arousal, with faces expressing fear and threatening animals producing significantly enhanced amygdala responses relative to weapons. This finding is consistent with the notion that the amygdala functions, in part, as a hardwired “fear module”, responding faster to threats from evolutionary-relevant animate entities (conspecific and heterospecific) than to equally threatening, but manmade, modern objects (Ohman & Mineka, 2001; Mineka & Ohman, 2002). Threatening manmade objects (weapons), however, were not without effect. Indeed, viewing photographs of weapons yielded enhanced responses throughout a number of regions in the dorsal visual processing stream that typically respond when viewing tools and other manipulable objects (e.g., Chao et al., 2002; Mahon et al., 2007). This finding, in turn, may help to explain the failure to find a detection speed advantage for threatening, evolutionary-relevant versus non-evolutionary-relevant objects in behavioral studies (Blanchette, 2006). Specifically, all fear-relevant, threatening objects may be detected more quickly than non-threatening objects, but via different circuitry: an amygdala-based circuit for evolutionary-relevant entities, and a cortex-based circuit for threatening modern, manmade objects.

The most unexpected finding was the bilateral amygdala response to toys. Not only was this response stronger than the response to the other manipulable objects – tools and weapons – it was equal to the response to the animate entities, faces and animals, that received comparable positive affective and arousal ratings. Clearly, many factors potentially could have contributed to this result. One possibility was that the response to the toys was driven by differences in lower level visual features, such as object shape, spatial frequencies, and object color, relative to the other manipulable objects. For example, the photographs of the toys contained more and brighter colors than those of the tools or the weapons, which may have served as an amygdala-alerting signal. However, this possibility received no support from a post-hoc analysis of the responses to the phase-scrambled images from each category (which preserved color, as well as spatial frequency information, of the original object photographs). Another possibility was that the response to toys was related to social factors. There is an extensive literature associating amygdala activity with social perception and understanding of social interactions (e.g., for reviews see Adolphs, 2010; Frith, 2009). Viewing toys might be associated with amygdala activity because toys are particularly effective in automatically eliciting inferences of a social nature. Consistent with this hypothesis, toys were found to be more strongly associated with 'animacy' and with social interactions. Specifically, the toys were seen as more likely to move on their own relative to tools, and more associated with people interacting with each other than either tools or weapons. Thus, although post-hoc, these rating data were consistent with the possibility that the amygdala response to toys reflected the automatic generation of social inferences elicited when viewing these objects.

When interpreting these findings, it is important to keep in mind that comparing objects belonging to different conceptual categories always has an unavoidable, apples-to-oranges quality. This is especially so when one of the categories is faces. Animals and the manipulable objects have distinct, basic level names whereas faces do not (cats and dogs, hammers and knives, versus 'face'). Similarly, animals and manipulable objects vary greatly in their physical properties whereas faces do not (distinct shapes, colors, and textures versus ovals, all with similar color, shape, and spatial arrangement of their features). These categories also have an unavoidable difference in affective context. Facial expressions signal how another individual is feeling. Although animal faces also can signal how they are feeling, in the context of our experiment, the animal pictures were chosen because they were representative of objects that would elicit an emotional response in the viewer in the real world. The same held for the manipulable objects. One could argue that faces displaying different emotions also would elicit an emotional response in the viewer based on our propensity for empathy. Nevertheless, overall, it is unlikely that our stimuli elicited the exact same emotions. This is another example of the unavoidable problem associated with comparing objects from different conceptual categories. However, this fact alone does not invalidate our findings any more so than it invalidates other studies that directly compare different object categories using pictures or names.

Investigations in human and non-human primates have documented that the amygdala is a complex structure that serves multiple functions in the service of social processing, including modulating the influence of emotion on attention, perception, learning, and long-term memory (Adolphs, 2010; Dolan, 2007; Murray, 2007; Phelps, 2006; Vuilleumier, 2005). The amygdala is also critically involved in detecting salient stimuli (e.g., Phelps & LeDoux, 2005). Our findings suggest that with regard to saliency detection, the amygdala has a strong bias for animate entities (Mormann et al., 2011; Rutishauser et al., 2011), especially when they are perceived as threatening, presumably because threatening animate things share certain properties. Although the nature of these properties remains to be determined, one possibility is that the amygdala contains a mechanism that allows us to more readily learn to associate threat with certain object properties (e.g., curvy things that move on their own) than with other properties (e.g., angular objects that are stationary).

In contrast, rather than eliciting an amygdala response, threat associated with manmade objects appears to be signaled via a cortical response in regions associated with object action. Although these findings are consistent with a relatively 'hardwired', evolutionary perspective on the role of the amygdala in visual detection, the response to toys and the lack of an animate category bias for objects assigned a positive affect clearly are not. One possibility, supported by our post-hoc rating data, is that toys are more associated with social interaction. Within this view, the amygdala activation would have reflected the automatic inference of social interaction. This possibility deserves further study.

Acknowledgement

This work was supported by the NIMH Intramural Research Program at the National Institutes of Health.

Appendix

Complete list of animals and manipulable objects

| Category | Negative | Neutral | Positive |
|---|---|---|---|
| Animal | Alligator | Cow | Bunny |
| | Bat | Elephant | Butterfly |
| | Lion | Frog | Chicken |
| | Roach | Guinea pig | Dolphin |
| | Shark | Ladybug | Kitten |
| | Snake | Pig | Panda |
| | Spider | Sheep | Penguin |
| | Wolf | Turtle | Puppy |
| Object | Axe | Broom | Ball |
| | Chainsaw | Hammer | Blocks |
| | Dental drill | Pliers | Cart |
| | Machine Gun | Pump | Piano |
| | Hand Gun | Scissors | Rings |
| | Knife | Screwdriver | Slinky |
| | Rifle | Tweezers | Spin top |
| | Syringe | Wrench | Toy Train |

References

- Adolphs R. Fear, faces, and the human amygdala. Current Opinion in Neurobiology. 2008;18(2):166–172. doi: 10.1016/j.conb.2008.06.006.

- Adolphs R. The social brain: Neural basis of social knowledge. Annual Review of Psychology. 2009;60:693–716. doi: 10.1146/annurev.psych.60.110707.163514.

- Adolphs R. What does the amygdala contribute to social cognition? Year in Cognitive Neuroscience 2010. 2010;1191:42–61. doi: 10.1111/j.1749-6632.2010.05445.x.

- Bellgowan PSF, Bandettini PA, van Gelderen P, Martin A, Bodurka J. Improved BOLD detection in the medial temporal region using parallel imaging and voxel volume reduction. NeuroImage. 2006;29:1244–1251. doi: 10.1016/j.neuroimage.2005.08.042.

- Bellgowan PSF, Buffalo EA, Bodurka J, Martin A. Lateralized spatial and object memory encoding in entorhinal and perirhinal cortices. Learning & Memory. 2009:433–438. doi: 10.1101/lm.1357309.

- Blanchette I. Snakes, spiders, guns, and syringes: How specific are evolutionary constraints on the detection of threatening stimuli? Quarterly Journal of Experimental Psychology. 2006;59(8):1484–1504. doi: 10.1080/02724980543000204.

- Bonda E, Petrides M, Ostry D, Evans A. Specific involvement of human parietal systems and the amygdala in the perception of biological motion. Journal of Neuroscience. 1996;16(11):3737–3744. doi: 10.1523/JNEUROSCI.16-11-03737.1996.

- Bouvier SE, Engel SA. Behavioral deficits and cortical damage loci in cerebral achromatopsia. Cerebral Cortex. 2006;16(2):183–191. doi: 10.1093/cercor/bhi096.

- Breiter HC, Etcoff NL, Whalen PJ, Kennedy WA, Rauch SL, Buckner RL, et al. Response and habituation of the human amygdala during visual processing of facial expression. Neuron. 1996;17(5):875–887. doi: 10.1016/s0896-6273(00)80219-6.

- Caramazza A, Shelton JR. Domain-specific knowledge systems in the brain: The animate-inanimate distinction. Journal of Cognitive Neuroscience. 1998;10(1):1–34. doi: 10.1162/089892998563752.

- Castelli F, Happe F, Frith U, Frith C. Movement and mind: A functional imaging study of perception and interpretation of complex intentional movement patterns. NeuroImage. 2000;12(3):314–325. doi: 10.1006/nimg.2000.0612.

- Chao LL, Weisberg J, Martin A. Experience-dependent modulation of category-related cortical activity. Cerebral Cortex. 2002;12(5):545–551. doi: 10.1093/cercor/12.5.545.

- Coltheart M. The MRC Psycholinguistic Database. Quarterly Journal of Experimental Psychology Section A-Human Experimental Psychology. 1981;33(NOV):497–505.

- Dolan RJ. The human amygdala and orbital prefrontal cortex in behavioural regulation. Philosophical Transactions of the Royal Society B-Biological Sciences. 2007;362(1481):787–799. doi: 10.1098/rstb.2007.2088.

- Doty TJ, Payne ME, Steffens DC, Beyer JL, Krishnan KRR, Labar KS. Age-dependent reduction of amygdala volume in bipolar disorder. Psychiatry Research-Neuroimaging. 2008:84–94. doi: 10.1016/j.pscychresns.2007.08.003.

- Drane DL, Ojemann GA, Aylward E, Ojemann JG, Johnson LC, Silbergeld DL, et al. Category-specific naming and recognition deficits in temporal lobe epilepsy surgical patients. Neuropsychologia. 2008;46(5):1242–1255. doi: 10.1016/j.neuropsychologia.2007.11.034.

- Fitzgerald DA, Angstadt M, Jelsone LM, Nathan PJ, Phan KL. Beyond threat: Amygdala reactivity across multiple expressions of facial affect. NeuroImage. 2006;30(4):1441–1448. doi: 10.1016/j.neuroimage.2005.11.003.

- Fox E, Lester V, Russo R, Bowles RJ, Pichler A, Dutton K. Facial expressions of emotion: Are angry faces detected more efficiently? Cognition & Emotion. 2000;14(1):61–92. doi: 10.1080/026999300378996.

- Frith C. Role of facial expressions in social interactions. Philosophical Transactions of the Royal Society B-Biological Sciences. 2009;364(1535):3453–3458. doi: 10.1098/rstb.2009.0142.

- Kriegeskorte N, Formisano E, Sorger B, Goebel R. Individual faces elicit distinct response patterns in human anterior temporal cortex. Proceedings of the National Academy of Sciences of the United States of America. 2007;104(51):20600–20605. doi: 10.1073/pnas.0705654104.

- Lundqvist D, Flykt A, Öhman A. The Karolinska Directed Emotional Faces - KDEF. CD ROM from Department of Clinical Neuroscience, Psychology section, Karolinska Institutet; 1998. ISBN 91-630-7164-9.

- Mahon BZ, Milleville SC, Negri GAL, Rumiati RI, Caramazza A, Martin A. Action-related properties shape object representations in the ventral stream. Neuron. 2007;55(3):507–520. doi: 10.1016/j.neuron.2007.07.011.

- Martin A. Circuits in mind: The neural foundations for object concepts. In: Gazzaniga M, editor. The Cognitive Neurosciences. 4th Edition. MIT Press; 2009. pp. 1031–1045.

- Martin A, Weisberg J. Neural foundations for understanding social and mechanical concepts. Cognitive Neuropsychology. 2003;20(3–6):575–587. doi: 10.1080/02643290342000005.

- Mineka S, Öhman A. Phobias and preparedness: The selective, automatic, and encapsulated nature of fear. Biological Psychiatry. 2002;52(10):927–937. doi: 10.1016/s0006-3223(02)01669-4.

- Mormann F, Dubois J, Kornblith S, Milosavljevic M, Cerf M, Ison M, et al. A category-specific response to animals in the right human amygdala. Nature Neuroscience. 2011;14:1247–1249. doi: 10.1038/nn.2899.

- Murray EA. The amygdala, reward and emotion. Trends in Cognitive Sciences. 2007;11(11):489–497. doi: 10.1016/j.tics.2007.08.013.

- New J, Cosmides L, Tooby J. Category-specific attention for animals reflects ancestral priorities, not expertise. Proceedings of the National Academy of Sciences of the United States of America. 2007;104:16598–16603. doi: 10.1073/pnas.0703913104.

- Öhman A, Mineka S. Fears, phobias, and preparedness: Toward an evolved module of fear and fear learning. Psychological Review. 2001;108(3):483–522. doi: 10.1037/0033-295x.108.3.483.

- Öhman A, Lundqvist D, Esteves F. The face in the crowd revisited: A threat advantage with schematic stimuli. Journal of Personality and Social Psychology. 2001a;80(3):381–396. doi: 10.1037/0022-3514.80.3.381.

- Öhman A, Flykt A, Esteves F. Emotion drives attention: Detecting the snake in the grass. Journal of Experimental Psychology-General. 2001b;130(3):466–478. doi: 10.1037/0096-3445.130.3.466.

- Pessoa L, Adolphs R. Emotion processing and the amygdala: from a 'low road' to 'many roads' of evaluating biological significance. Nature Reviews Neuroscience. 2010;11(11):773–782. doi: 10.1038/nrn2920.

- Phelps EA. Emotion and cognition: Insights from studies of the human amygdala. Annual Review of Psychology. 2006;57:27–53. doi: 10.1146/annurev.psych.56.091103.070234.

- Phelps EA, LeDoux JE. Contributions of the amygdala to emotion processing: From animal models to human behavior. Neuron. 2005;48(2):175–187. doi: 10.1016/j.neuron.2005.09.025.

- Rutishauser U, Tudusciuc O, Neumann D, Mamelak AN, Heller AC, Ross IB, et al. Single-unit responses selective for whole human faces in the human amygdala. Current Biology. 2011;21:1654–1660. doi: 10.1016/j.cub.2011.08.035.

- Seligman ME. On the generality of the laws of learning. Psychological Review. 1970;77(5):406–418.

- Simmons WK, Martin A. Spontaneous resting-state BOLD fluctuations reveal persistent domain-specific neural networks. Social Cognitive and Affective Neuroscience. 2011. doi: 10.1093/scan/nsr018. In press.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. 3-dimensional proportional system: An approach to cerebral imaging. New York: Thieme; 1988.

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, et al. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research. 2009;168(3):242–249. doi: 10.1016/j.psychres.2008.05.006.

- Vuilleumier P. How brains beware: neural mechanisms of emotional attention. Trends in Cognitive Sciences. 2005;9(12):585–594. doi: 10.1016/j.tics.2005.10.011.

- Wheatley T, Milleville SC, Martin A. Understanding animate agents - Distinct roles for the social network and mirror system. Psychological Science. 2007;18(6):469–474. doi: 10.1111/j.1467-9280.2007.01923.x.

- Wright P, Liu YJ. Neutral faces activate the amygdala during identity matching. NeuroImage. 2006;29(2):628–636. doi: 10.1016/j.neuroimage.2005.07.047.

- Zald DH. The human amygdala and the emotional evaluation of sensory stimuli. Brain Research Reviews. 2003;41(1):88–123. doi: 10.1016/s0165-0173(02)00248-5.