Abstract

Cochlear implant (CI) users differ in their ability to perceive and recognize speech sounds. Two possible reasons for such individual differences may lie in their ability to discriminate formant frequencies or to adapt to the spectrally shifted information presented by cochlear implants, a basalward shift related to the implant’s depth of insertion in the cochlea. In the present study, we examined these two alternatives using a method-of-adjustment (MOA) procedure with 330 synthetic vowel stimuli varying in F1 and F2 that were arranged in a two-dimensional grid. Subjects were asked to label the synthetic stimuli that matched ten monophthongal vowels in visually presented words. Subjects then provided goodness ratings for the stimuli they had chosen. The subjects’ responses to all ten vowels were used to construct individual perceptual “vowel spaces.” If CI users fail to adapt completely to the basalward spectral shift, then the formant frequencies of their vowel categories should be shifted lower in both F1 and F2. However, with one exception, no systematic shifts were observed in the vowel spaces of CI users. Instead, the vowel spaces differed from one another in the relative size of their vowel categories. The results suggest that differences in formant frequency discrimination may account for the individual differences in vowel perception observed in cochlear implant users.

I. INTRODUCTION

Although cochlear implants allow profoundly deaf people to hear, cochlear implant users show a very wide range of speech perception skills. The most successful cochlear implant users can easily hold a face-to-face conversation, and they can even communicate on the telephone, a difficult task because there are no visual cues available and because the acoustic signal itself is highly degraded (Gstoettner et al., 1997). On the other hand, the least successful cochlear implant users have a difficult time communicating even in a face-to-face situation, and can barely perform above chance on auditory-alone speech perception tasks (Dorman, 1993).

One long term goal of our research is to understand the mechanisms that underlie speech perception by cochlear implant (CI) users and, in so doing, gain an understanding of the individual differences in psychophysical characteristics which may explain individual differences in speech perception with a CI. It is important to remember that electrical hearing as provided by a cochlear implant is quite different from normal acoustic hearing. One important difference lies in listeners’ ability to discriminate formant frequencies. Kewley-Port and Watson (1994) report difference limens between 12 and 17 Hz in the F1 frequency region for highly practiced normal hearing listeners. In the F2 frequency region, they found a frequency resolution of approximately 1.5%. For cochlear implant users, formant frequency discrimination depends on two factors: the frequency-to-electrode map that is programmed into their speech processor, and the individual’s ability to discriminate stimulation pulses delivered to different electrodes. It is not uncommon for some cochlear implant users to have formant frequency difference limens that are one order of magnitude larger than those of listeners with normal hearing, or even more (Nelson et al., 1995; Kewley-Port and Zheng, 1998). It is reasonable to hypothesize that cochlear implant users with such limited frequency discrimination skills will find it quite difficult to identify vowels accurately because formant frequencies are important cues for vowel recognition.

Another important difference between acoustic and electric hearing is related to the finding that cochlear implants do not stimulate the entire neural population of the cochlea but only the most basal 25 mm at best, because the electrode array cannot be inserted completely into the cochlea. Therefore, cochlear implants stimulate cochlear locations that are more basal and thus elicit higher pitched percepts than normal acoustic stimuli. For example, when the input speech signal has a low frequency peak (e.g., 300 Hz), the most apical electrode is stimulated, regardless of the particular frequency-to-electrode table employed. The neurons stimulated in response to this signal may have characteristic frequencies of 1000 Hz or even higher. This represents a rather extreme modification of the peripheral frequency map. To the extent that the auditory nervous system of CI users is adaptable enough to successfully “re-map” the place frequency code in the cochlea, the basalward shift provided by a CI should not hinder speech perception. On the other hand, an inability to adapt and re-map the place frequency code may severely limit speech perception in CI users and may be an important source of individual differences in speech perception (Fu and Shannon, 1999a, b). Although there is consensus in the literature that cochlear implants stimulate neurons with higher characteristic frequencies than those stimulated by the same sound in normal ears, there is controversy about the amount of this possible basalward shift. For example, Blamey et al. (1996), in a study where CI users with some residual hearing were asked to match the electrical percepts in one ear to the acoustic percepts in the other ear, concluded that the electrode positions that matched acoustic pure tones were more basal than predicted from the characteristic frequency coordinates of the basilar membrane in a normal human cochlea. However, Blamey et al. 
(1996) also acknowledge that “the listeners may have adapted to the sounds that they hear through the implant and hearing aid in everyday life so that simultaneously occurring sounds in the two ears are perceived as having the same pitch.” On the other hand, Eddington et al. (1978) conducted the only experiment we are aware of, where a unilaterally deaf volunteer received a cochlear implant and was asked to match the pitch of acoustic and electric stimuli while still in the operating room, before he had much of a chance to adapt to the basalward shift in the way described by Blamey et al. (1996). Eddington et al. (1978) concluded that their pitch-matching data were “consistent with frequency versus distance relationships derived from motion of the basilar membrane.”

Several previous studies have addressed the issue of adaptation to changes in frequency-to-electrode assignments for cochlear implant users. Skinner et al. (1995) showed that users of the SPEAK stimulation strategy identified vowels better with a frequency-to-electrode table that mapped a more restricted acoustic range into the subject’s electrodes than the default frequency-to-electrode table. The experimental table that resulted in better vowel perception represented a more extreme basalward shift in spectral information than the default table, suggesting that listeners with cochlear implants can indeed adapt to such shifts, at least within certain limits. Another study that demonstrates the adaptation ability of human listeners in response to spectral shifts was conducted by Rosen et al. (1999), who used acoustic simulations of the information received by a hypothetical cochlear implant user who had a basalward spectral shift of 6.5 mm on the basilar membrane (equivalent to 1.3–2.9 octaves, depending on frequency). Initially, the spectral shift reduced word identification in normal-hearing subjects (1% correct, as compared to 64% for the unshifted condition), but after only three hours of training, subjects’ performance improved to 30% correct. Whether or not such performance represents the maximum possible by CI users was not addressed in this study, given the relatively short time spent in training.

Recently, Fu et al. (submitted) performed an experiment in which the frequency-to-electrode tables of three cochlear implant users were shifted one octave with respect to the table they had been using daily for at least three years. It is important to note that this one-octave shift was in addition to the original shift imposed by the cochlear implant. After three months of experience with the new table, it was apparent that adaptation was not complete because, on average, subjects did not reach the same levels of speech perception that they had achieved before the table change. Taken together, these previous studies show that auditory adaptation to a modified frequency map is possible but it may be limited, depending on the size of the spectral shift that listeners are asked to adapt to.

In the present study, we investigated the adaptation of human listeners to a basalward shift using a new paradigm, a method-of-adjustment (MOA) procedure. This methodology was used to obtain maps of the perceptual vowel spaces of adult, postlingually deafened cochlear implant users. Similar tasks have been used with normal hearing listeners as well as CI users (Johnson et al., 1993; Hawks and Fourakis, 1998). In this task, subjects select the region of the F1 – F2 plane that sounds to them like a given vowel, and the procedure is repeated for ten English vowels. This task simultaneously assesses a cochlear implant user’s auditory adaptation ability, by comparing the locations of his/her selected regions to those selected by normal hearing listeners, and his/her frequency discrimination skills, by examining the spread of the selected regions. More specifically, a listener who was unable to adapt to the basalward spectral shift introduced by their cochlear implant would be expected to select regions whose centers are systematically shifted to lower frequencies with respect to the regions of the vowel space selected by normal hearing listeners in mapping their vowel categories. The extent to which cochlear implant listeners show relatively normal vowel category centers could be used as a measure of their adaptation to basalward spectral shift.

An alternative to this hypothesis predicts that the spread of selected regions (category sizes), as well as category centers, would increase (or “smear”) as a result of perceptual adaptation. The resulting vowel categories may be larger (show greater spread) reflecting the cochlear implant listener’s need to map a greater range of frequencies to a given vowel. To differentiate between the spectral smearing hypothesis and the frequency discrimination explanation would require a longitudinal study of the changes in vowel spaces and frequency discrimination. For the purposes of this study, a simple shift hypothesis limited to vowel category centers was tested and compared with the frequency discrimination hypothesis.

In addition to the MOA task, two other perceptual tests were administered to CI users: an F1 jnd test with synthetic vowel stimuli and a closed-set identification test with naturally produced vowels. Taken together, these measures were intended to investigate the role of formant frequency discrimination and auditory adaptation in vowel perception by CI users.

II. EXPERIMENT

A. Methods

1. Participants

Forty-three Indiana University undergraduates with no reported history of speech or hearing problems and eight adult cochlear implant (CI) users, all monolingual speakers of English, participated in this experiment. The normal-hearing participants consisted of 20 males and 23 females ranging in age from 18 to 28 years. The normal-hearing participants were recruited to represent the dialect of American English spoken in central Indiana with a common inventory of vowels. Only normal-hearing listeners who reported living their entire lives in central Indiana were included in this experiment. Central Indiana was defined in terms of a 60-mile radius around Indianapolis, roughly covering the Midland dialect as described by Wolfram and Schilling-Estes (1998). This criterion was used to exclude two other regional dialects found at the northern and southern extremes of the state. These other regional dialects are reported to differ from the Midland dialect in terms of vowel quality and degree and type of diphthongization (Labov, 1991). For participating in two 1-h sessions, the participants received either $7.50 per hour or two credits towards their research requirement if they were enrolled in an undergraduate psychology class.

The CI users were recruited from the population of adult patients served by the Department of Otolaryngology-Head and Neck Surgery at the Indiana University School of Medicine in Indianapolis. The demographics of the CI users are given in Table I, while information concerning their cochlear implants is provided in Table II. All of the CI users were native speakers of American English, with the exception of CI1, who was a native speaker of British English. British and Midland American English are not reported to differ substantively from one another in vowel quality for the ten vowels used in this study (Gimson, 1962; Pilch, 1994). Thus the American English vowel spaces were deemed an acceptable benchmark for CI1 as well as the other CI users.

TABLE I.

Demographic and other information for patients with cochlear implants.

| Patient | Age | Gender | Age at onset of profound deafness | Age at implantation | Implant use (years) | CNC |
|---|---|---|---|---|---|---|
| CI1 | 67 | F | 43 | 61 | 6 | 52% |
| CI2 | 35 | M | 29 | 31 | 3 | 68% |
| CI3 | 37 | M | 34 | 36 | 1 | 46% |
| CI4 | 74 | F | 27 | 71 | 2 | 25% |
| CI5 | 63 | M | 56 | 57 | 5 | 11% |
| CI6 | 70 | M | * | 66 | 3 | 32% |
| CI7 | 68 | F | * | 65 | 2 | 1% |
| CI8 | 58 | M | * | 52 | 6 | 0% |

CNC=Percentage of correctly identified words on CNC word lists (male talker). Each patient was administered three 50-word lists.

* Onset of profound deafness: CI6, CI7, progressive; CI8, progressive (childhood).

TABLE II.

Information concerning the patients’ cochlear implants. Insertion depth indicates the distance from the most apical electrode to the round window. Frequency range indicates the acoustic range that was mapped into the available stimulation channels.

| Patient | Implant type | Insertion depth (mm) | Frequency range (Hz) | Number of active channels | Stimulation mode |
|---|---|---|---|---|---|
| CI1 | Clarion 1.0 | 25 | 350–5500 | 8 | Bipolar |
| CI2 | Clarion 1.2 | 25 | 350–6800 | 8 | Bipolar |
| CI3 | Nucleus 24 | 21.25 | 120–7800 | 21 | Monopolar |
| CI4 | Nucleus 22 | 19.75 | 170–4800 | 14 | BP+1 |
| CI5 | Nucleus 22 | 22 | 300–4000 | 20 | BP+1 |
| CI6 | Nucleus 22 | 19.75 | 150–9200 | 19 | BP+1 |
| CI7 | Nucleus 22 | 19 | 120–6300 | 18 | BP+3 |
| CI8 | Clarion 1.0 | 25 | 350–5500 | 8 | Bipolar |

Note: All patients with Clarion devices used the CIS stimulation strategy, while those patients with Nucleus devices used the SPEAK strategy, with the exception of CI5 who used the MPEAK strategy.

All of the CI users had received their cochlear implants at least one year prior to participating in this study. The CI users differed from one another in terms of the type of cochlear implant they received: five were users of the Nucleus-22 or Nucleus-24 device, programmed with either the SPEAK strategy or the MPEAK strategy, while three were users of the Clarion device, programmed with the CIS strategy. The SPEAK strategy (Skinner et al., 1994) filters the incoming speech signal into a maximum of 20 frequency bands, which are associated with different intracochlear stimulation channels. Typically, six channels are sequentially stimulated in a cycle, and this cycle is repeated 250 times per second. The channels to be stimulated during each cycle are chosen based on the frequency bands with the highest output amplitude. In contrast, the CIS strategy (Wilson et al., 1991) as implemented in the Clarion device filters the signal into eight bands, one for each stimulation channel. All channels are sequentially stimulated with pulses whose amplitudes are determined by the filters’ outputs. The stimulation cycle is repeated at a rate of at least 833 times per second. The CIS strategy differs from the SPEAK strategy in its use of a different stimulation rate, fewer stimulation channels, and in its stimulation of all channels in a cycle rather than only a subset of the available channels.
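The difference in channel selection between the two strategies can be illustrated with a minimal sketch. It assumes one frame of filter-bank output amplitudes is already available; the function names, the example amplitudes, and the ascending-band return order are illustrative assumptions, not details taken from the device documentation.

```python
def speak_select(band_amplitudes, n_maxima=6):
    """Pick the n_maxima bands with the largest output amplitude (SPEAK-like).

    Indices are returned in ascending band order; the actual firing order
    within a cycle is not described in the text and is an assumption here.
    """
    ranked = sorted(range(len(band_amplitudes)),
                    key=lambda i: band_amplitudes[i], reverse=True)
    return sorted(ranked[:n_maxima])


def cis_select(band_amplitudes):
    """CIS-like selection: every channel is stimulated on every cycle."""
    return list(range(len(band_amplitudes)))


# Hypothetical filter-bank output for one analysis frame (20 bands).
frame = [0.1, 0.9, 0.8, 0.2, 0.1, 0.7, 0.6, 0.1, 0.0, 0.5,
         0.4, 0.1, 0.0, 0.0, 0.3, 0.1, 0.0, 0.0, 0.0, 0.0]

print(speak_select(frame))    # the six bands with the highest output
print(cis_select(frame[:8]))  # all eight channels of an 8-band processor
```

In this sketch only the subset of bands carrying the most energy is passed on under the SPEAK-like rule, whereas the CIS-like rule always returns every channel.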

The CI users also differed from one another in terms of the depth of insertion of the electrode array in the cochlea. The array’s depth of insertion, in turn, determines the magnitude of the basalward spectral shift induced by the implant. It is possible to roughly estimate the size of this basalward shift for an individual CI user with three pieces of information: the location of the electrodes, the frequency to electrode mapping used by the cochlear implant’s speech processor, and estimates of the characteristic frequency of the neurons stimulated by a given electrode pair. The speech processors of the participants in this study divide the acoustic frequency spectrum into channels. Each channel is specified by an acoustic frequency range that is assigned to a pair of intra-cochlear electrodes. Low frequencies are mapped to the apical electrodes, while high frequencies are mapped to the basal electrodes.

In the present study, the basalward spectral shift was calculated for two channels for every subject, the channels corresponding to 475 Hz and 1500 Hz. These frequencies correspond to the F1 and F2 of a neutral vowel for an average male speaker. First, the place of stimulation for a specific channel was estimated. When stimulation was bipolar (i.e., both electrodes were intracochlear), the place of stimulation was defined as occurring halfway between the electrodes for that channel. For patients who received monopolar stimulation (i.e., the return electrode was extracochlear), the location of electrical stimulation was considered to be at the intracochlear electrode. Second, the intraoperative report of insertion depth was used to adjust this place estimate based on the depth of electrode insertion. Cochlear lengths of the subjects were not measured individually. Instead, the basalward shift was calculated assuming that all subjects had average-sized cochleas with a length of 35 mm (Hinojosa and Marion, 1983). Third, the electrically assigned frequency for this location was calculated as the geometric mean of the frequency boundaries defining the channel being studied in the speech processor. Finally, the characteristic frequency of the neurons stimulated by a given electrode was calculated from Greenwood (1961) and compared to the frequency calculated in step three. This discrepancy for each CI user, measured in Hz and octaves, is shown in Table III. The shift is also reported in terms of the location difference between electrical and acoustic stimulation, in mm. The use of Greenwood’s equation is based on the assumption that the average characteristic frequency of neurons stimulated by an electrode pair placed x mm from the round window is the frequency that would cause maximum displacement of the basilar membrane at the same x mm from the round window. 
Clearly, these are only rough estimates of the amount of basalward shift. In particular, the estimate of a 35-mm cochlea may lead to substantial overestimates or underestimates of the actual basalward shift. Future studies may improve the precision of these estimates by measuring the length of each individual cochlea using 3D reconstructions of CAT scans (Skinner et al., 1994; Ketten et al., 1998); by using the same 3D reconstructions to obtain more precise estimates of electrode location in the cochlea; and by obtaining physiological and behavioral data that may help determine the characteristic frequency of the neurons stimulated by different electrode pairs.
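The four steps above can be sketched as follows. This is an illustration under stated assumptions, not the authors' computation: the constants are the human parameters from Greenwood's later (1990) formulation, which the text does not confirm were the ones used, and the electrode place and channel boundaries in the usage lines are hypothetical.

```python
import math

# Assumed human constants (Greenwood, 1990); the paper cites Greenwood (1961)
# and does not list the constants it actually used.
A, ALPHA, K = 165.4, 2.1, 0.88
COCHLEA_MM = 35.0  # average cochlear length assumed in the text


def greenwood_cf(dist_from_round_window_mm, length_mm=COCHLEA_MM):
    """Characteristic frequency (Hz) at a place measured from the round window."""
    x = (length_mm - dist_from_round_window_mm) / length_mm  # proportion from apex
    return A * (10 ** (ALPHA * x) - K)


def greenwood_place(freq_hz, length_mm=COCHLEA_MM):
    """Inverse map: distance from the round window (mm) whose CF is freq_hz."""
    x = math.log10(freq_hz / A + K) / ALPHA
    return length_mm * (1.0 - x)


def basalward_shift(place_mm, band_lo_hz, band_hi_hz):
    """Shift between the CF at the stimulation place and the channel's frequency."""
    assigned = math.sqrt(band_lo_hz * band_hi_hz)  # geometric mean of the band edges
    cf = greenwood_cf(place_mm)
    return {
        "hz": cf - assigned,
        "octaves": math.log2(cf / assigned),
        # Positive when the electrode lies basal to the acoustic place.
        "mm": greenwood_place(assigned) - place_mm,
    }


# Hypothetical channel: stimulation place 20 mm from the round window,
# band edges 400-564 Hz (geometric mean close to 475 Hz).
shift = basalward_shift(20.0, 400.0, 564.0)
print({k: round(v, 2) for k, v in shift.items()})
```

Because the result depends directly on the assumed 35-mm length and on the chosen constants, the sketch inherits the same uncertainty that the text attributes to the estimates in Table III.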

TABLE III.

The estimated basalward shift for the eight CI users for two cochlear locations corresponding to 475 Hz and 1500 Hz. Shifts are listed either as a frequency shift (Hz), an octave shift, or as the location difference in electrical and acoustic stimulation (mm).

| Patient | 475 Hz: Hz | 475 Hz: Octave | 475 Hz: mm | 1500 Hz: Hz | 1500 Hz: Octave | 1500 Hz: mm |
|---|---|---|---|---|---|---|
| CI1 | 235 | 0.7 | 2.7 | 409 | 0.3 | 1.4 |
| CI2 | 235 | 0.7 | 2.7 | 409 | 0.3 | 1.4 |
| CI3 | 1301 | 1.9 | 8.1 | 2320 | 1.3 | 6.1 |
| CI4 | 1719 | 2.2 | 9.2 | 2068 | 1.3 | 6.0 |
| CI5 | 645 | 1.3 | 5.2 | 1669 | 1.1 | 5.1 |
| CI6 | 1128 | 1.8 | 7.6 | 1542 | 1.2 | 5.3 |
| CI7 | 1707 | 1.6 | 9.1 | 2334 | 2.1 | 7.4 |
| CI8 | 235 | 0.7 | 2.7 | 409 | 0.3 | 1.4 |

2. Stimulus materials

a. Method-of-adjustment task

The stimulus set consisted of 330 synthetic, steady-state isolated vowels that varied from one another in their first and second formants in 0.377 Bark increments. The vowels were generated using the Klatt 88 synthesizer (Klatt and Klatt, 1990). The Bark increment size was chosen as a close approximation of the just noticeable difference for vowel formants reported by Flanagan (1957). F1 ranged from 2.63 Z (250 Hz) to 7.91 Z (900 Hz), and F2 ranged from 7.25 Z (800 Hz) to 15.17 Z (2800 Hz). These ranges were chosen to represent the full range of possible values for speakers of American and British English, and were successfully used in piloting the present experiment and in an earlier method-of-adjustment study of vowel perception in normal-hearing listeners (Johnson et al., 1993). All of the other synthesis parameters for this stimulus set also followed Johnson et al. (1993). The formulas for calculating the values of the most relevant synthetic parameters are summarized in Table IV. The F0 parameter was varied to generate two sets of the 330 stimuli, one representing a male voice and one representing a female voice. All of the synthetic speech sounds were presented at a 70 dB C-weighted SPL listening level. The stimuli were presented over Beyer Dynamic DT-100 headphones for normal-hearing listeners, and over an Acoustic Research loudspeaker for CI users.
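As a rough check on the grid dimensions, the stimulus set can be regenerated from the stated step size and ranges. The sketch assumes the Hz-to-Bark conversion z = 7 asinh(f/650), which reproduces the endpoint values quoted above but is not named in the text; it also assumes that the 15 F1 steps form the rows and the 22 F2 steps the columns.

```python
import math

STEP_BARK = 0.377
F1_MIN_BARK, F1_STEPS = 2.63, 15  # assumed to be the grid rows
F2_MIN_BARK, F2_STEPS = 7.25, 22  # assumed to be the grid columns


def hz_to_bark(f_hz):
    """Assumed conversion; it matches the Hz/Bark pairs quoted in the text."""
    return 7.0 * math.asinh(f_hz / 650.0)


def bark_to_hz(z):
    """Inverse of hz_to_bark."""
    return 650.0 * math.sinh(z / 7.0)


def build_grid():
    """Return a list of (F1, F2) pairs in Hz, one per synthetic stimulus."""
    grid = []
    for row in range(F1_STEPS):
        f1 = bark_to_hz(F1_MIN_BARK + row * STEP_BARK)
        for col in range(F2_STEPS):
            f2 = bark_to_hz(F2_MIN_BARK + col * STEP_BARK)
            grid.append((f1, f2))
    return grid


grid = build_grid()
print(len(grid))                               # number of stimuli
print([round(v) for v in grid[0]])             # lowest F1 and F2 in Hz
print([round(v) for v in grid[-1]])            # highest F1 and F2 in Hz
```

Fifteen F1 values and 22 F2 values in 0.377-Bark steps span the stated ranges and yield the 330 stimuli.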

TABLE IV.

The formulas for an important subset of the parameters used in generating the synthetic stimulus sets. Bn=the bandwidth of formants F1 – F4.

| Parameter | Formulas |
|---|---|
| Duration | 250 ms |
| F0 | Male: 120 Hz over the first half, dropping to 105 Hz at the end; Female: 186 Hz over the first half, dropping to 163 Hz at the end |
| F3 | {(0.522*F1)+(1.197*F2)+57} or {(0.7866*F1)−(0.365*F2)+2341} Hz (see note a) |
| F4 | 3500 Hz or (F3+300 Hz), whichever is higher |
| B1 | {29.27+(0.061*F1)−(0.027*F2)+(0.02*F3)} Hz |
| B2 | {−120.22−(0.116*F1)+(0.107*F3)} Hz |
| B3 | {−432.1+(0.053*F1)+(0.142*F2)+(0.151*F3)} Hz |
| B4 | 200 Hz |

Note a: The first formula was used for the half of the grid with higher F2 values, while the second was used for the half of the grid with lower F2 values.
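The formulas in Table IV translate directly into a small helper. In this sketch the caller states which half of the grid a stimulus belongs to, because the exact F2 boundary between the two halves is not given in the text; the function name and the `high_f2_half` flag are illustrative.

```python
def synthesis_params(f1, f2, high_f2_half):
    """Derive F3, F4 and B1-B4 (Hz) from F1 and F2 (Hz) using Table IV.

    high_f2_half: True for the half of the grid with higher F2 values.
    The boundary between the halves is not specified, so it is left to the caller.
    """
    if high_f2_half:
        f3 = 0.522 * f1 + 1.197 * f2 + 57
    else:
        f3 = 0.7866 * f1 - 0.365 * f2 + 2341
    f4 = max(3500.0, f3 + 300.0)  # whichever is higher
    b1 = 29.27 + 0.061 * f1 - 0.027 * f2 + 0.02 * f3
    b2 = -120.22 - 0.116 * f1 + 0.107 * f3
    b3 = -432.1 + 0.053 * f1 + 0.142 * f2 + 0.151 * f3
    b4 = 200.0
    return {"F3": f3, "F4": f4, "B1": b1, "B2": b2, "B3": b3, "B4": b4}


# Two illustrative grid points, one from each half.
high = synthesis_params(500.0, 1500.0, high_f2_half=True)
low = synthesis_params(500.0, 1000.0, high_f2_half=False)
print({k: round(v, 1) for k, v in high.items()})
print({k: round(v, 1) for k, v in low.items()})
```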

b. Vowel identification task

The vowel identification task employed a closed-set procedure that used nine vowels in an “h-vowel-d” format. The stimuli were digitized from the female vowel tokens of the Iowa laserdisc (Tyler et al., 1987). Only steady-state vowels (no diphthongs) were used from this stimulus set. There were three separate productions of each vowel. Listeners were administered three lists that consisted of five repetitions of each vowel and three practice tokens. The stimuli were presented at a level of 70 dB C-weighted SPL over an Acoustic Research loudspeaker.

c. F1 jnd task

The stimuli for this task were seven synthetic three-formant vowels, with an F2 value of 1500 Hz and an F3 of 2500 Hz. F1 varied linearly, from 250 Hz for stimulus 1 to 850 Hz for stimulus 7. Three-formant rather than two-formant vowels were used in order to measure place-pitch discrimination with more realistic and speechlike stimuli. The stimuli were created using the Klatt 88 (Klatt and Klatt, 1990) speech synthesizer software. Voicing amplitude increased linearly in dB from zero to steady state over the first 10 ms and back to zero over the last 10 ms of the stimulus. Total stimulus duration was 1 s. Stimuli were presented at a level of 70–72 dB C-weighted SPL over an Acoustic Research loudspeaker.1

3. Procedures

a. Method-of-adjustment task

The procedures varied slightly for each participant group in the study. Normal-hearing participants were tested in a quiet room in two 1-h sessions. The second session took place approximately one week after the first session. In the first session, normal-hearing participants completed the method-of-adjustment task using one of the synthetic stimulus sets, either the male or the female voice set. In the second session, participants completed the method-of-adjustment task with the remaining stimulus set. The experiment was balanced for the order in which the two stimulus sets were presented to the normal-hearing participants. In contrast, the CI users were tested in a quiet room or a sound-attenuated chamber, in a single test session, varying in length by individual CI user between 1 and 3 h. Given the length of time CI users required to complete the MOA task and other demands on their time, they received only one of the two stimulus sets, the male voice set.

Each participant was presented with a two-dimensional (15 rows and 22 columns) visual grid centered in a computer display screen. The grid consisted of the 330 synthetic stimuli described above. A single English word appeared above this grid, constituting the target stimulus for a given trial. The visual target stimulus for a given trial was one of ten words, “heed,” “hid,” “aid,” “head,” “had,” “who’d,” “hood,” “owed,” “odd,” and “hut,” each of which contained one of the ten vowels under study, respectively /i/, /ɪ/, /e/, /ɛ/, /æ/, /u/, /ʊ/, /o/, /ɑ/, and /ʌ/. Subjects were instructed to search the grid, playing out individual sounds until they located one or more synthetic sounds that matched the vowel in the visual target stimulus. Subjects were not required to search the grid exhaustively before making any selections. After making their choice(s), subjects were asked to give each synthetic sound a rating on a 1–7 scale, grading how close a match the synthetic sound was to the target. One repetition of the visual target stimulus set was presented to listeners. The order of presentation of the stimulus set varied randomly from participant to participant.

The particular stimuli chosen and their respective ratings were used to calculate category centers and category sizes for each vowel type for each listener group. The category center for a given vowel was determined by averaging the F1 and F2 frequencies of the stimuli selected to match the given vowel, with their contribution to the average weighted by their rating. The category sizes were computed from the standard deviation of the selected stimuli in both dimensions, taking the weights into account. Normal-hearing listeners’ category centers were expected to appear in the F1 by F2 space in a similar arrangement to that observed in earlier vowel production studies with American English (e.g., Peterson and Barney, 1952; Hillenbrand et al., 1995). If the category centers of CI patients deviated from those of normal-hearing listeners, the extent of that deviation was expected to depend on the magnitude of the basalward shift that individual listeners had to adapt to (see Table III). Failure to adapt completely to this kind of stimulation would result in an overall space that is shifted lower in F1 and F2 (i.e., the subject would select synthetic stimuli that were lower in F1 and F2 than those chosen by normal-hearing subjects for the same target vowel).
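The rating-weighted center and size of a vowel category can be sketched as below. The text does not spell out the exact weighted standard deviation, so the population form (weights normalized by their sum) is an assumption, as are the function name, the `min_rating` filter, and the example selections.

```python
import math


def category_stats(selections, min_rating=1):
    """Rating-weighted center and spread of one vowel category.

    selections: iterable of (f1, f2, rating) for the stimuli a listener chose.
    min_rating: discard selections rated below this value.
    Returns ((center_f1, center_f2), (size_f1, size_f2)).
    """
    kept = [(f1, f2, r) for f1, f2, r in selections if r >= min_rating]
    total = sum(r for _, _, r in kept)
    if total == 0:
        raise ValueError("no selections left after filtering")
    c1 = sum(f1 * r for f1, _, r in kept) / total
    c2 = sum(f2 * r for _, f2, r in kept) / total
    # Assumed form: weighted population standard deviation in each dimension.
    s1 = math.sqrt(sum(r * (f1 - c1) ** 2 for f1, _, r in kept) / total)
    s2 = math.sqrt(sum(r * (f2 - c2) ** 2 for _, f2, r in kept) / total)
    return (c1, c2), (s1, s2)


# Hypothetical selections for one vowel: (F1, F2, goodness rating).
picks = [(3.0, 10.0, 7), (4.0, 12.0, 1)]
center, size = category_stats(picks)
print(center, size)
print(category_stats(picks, min_rating=4))  # keeps only the highly rated stimulus
```

A highly rated stimulus pulls the center toward itself, and raising `min_rating` shrinks the category toward its best exemplars.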

b. Vowel identification task

The vowel identification task, administered only to the CI users, was a closed-set speech perception task in which three separate tokens of each of nine /hVd/ tokens were presented in random order, one at a time. The CI users had to say which one of the nine stimuli they thought they heard by responding verbally. They were instructed to guess if they did not know which vowel was presented. All subjects heard a total of at least 15 presentations of each vowel (except CI5 who heard 10). The subjects’ responses were tabulated and scored for total percentage of correct responses.

c. F1 jnd task

The F1 jnd task, administered only to the CI users, required listeners to make an absolute judgment. The stimuli for this task were labeled “1” through “7” in order of increasing F1. In this task, all stimuli were played in sequence several times, so the subjects could become familiar with the stimuli. The stimuli were then presented ten times each in random order and subjects were asked to identify the stimulus that was presented using one of the seven responses. The subject’s response and the correct response were displayed on the computer monitor before the presentation of the next stimulus. After each block of 70 trials (10 presentations of each of 7 stimuli), the mean and standard deviation of the responses to each of the 7 stimuli were calculated. The d′ for each pair of successive stimuli was calculated as the difference of the two means divided by the average of the two standard deviations. These d′ measurements were then cumulated to calculate a cumulative d′ curve, which provided an overall measure of the subject’s ability to discriminate and pitch rank the seven stimuli (Durlach and Braida, 1969; Levitt, 1972). To calculate the cumulative d′ curves, we followed the common assumption that the maximum possible value of d′ was three. The average jnd (defined as the mean stimulus difference resulting in d′=1) was calculated based on the cumulative d′ curve. Given that the F1 range spanned by the seven stimuli was 600 Hz, the jnd was defined as (600/cumulative d′). At least eight blocks of 70 trials were administered in order for all subjects to reach a plateau in performance as measured by the cumulative d′. The cumulative d′ reported here is the average of the best two blocks for each subject. The normative mean jnd from this procedure was calculated to be 53 Hz, based on a pilot study with six normal-hearing listeners.
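The scoring of one block can be sketched as follows. Several details are assumptions rather than statements from the text: the population standard deviation is used, negative pairwise d′ values are clamped to zero, and a pair with zero response variance is assigned the cap of three.

```python
from statistics import mean, pstdev

F1_RANGE_HZ = 600.0  # F1 range spanned by the seven stimuli
D_PRIME_CAP = 3.0    # assumed maximum d' for any pair of successive stimuli


def block_stats(responses_per_stimulus):
    """Mean and standard deviation of the responses given to each stimulus."""
    means = [mean(r) for r in responses_per_stimulus]
    sds = [pstdev(r) for r in responses_per_stimulus]
    return means, sds


def cumulative_dprime(means, sds, cap=D_PRIME_CAP):
    """Sum the d' values of successive stimulus pairs, each limited to [0, cap]."""
    total = 0.0
    for i in range(len(means) - 1):
        diff = means[i + 1] - means[i]
        avg_sd = (sds[i] + sds[i + 1]) / 2.0
        if avg_sd == 0:
            d = cap if diff > 0 else 0.0  # no response variance at all
        else:
            d = diff / avg_sd
        total += min(max(d, 0.0), cap)
    return total


def jnd_hz(cum_dprime, f1_range_hz=F1_RANGE_HZ):
    """Average stimulus difference (Hz) corresponding to d' = 1."""
    return f1_range_hz / cum_dprime


# A listener whose responses never vary: every pair reaches the cap.
perfect = [[s] * 10 for s in range(1, 8)]
m, sd = block_stats(perfect)
print(cumulative_dprime(m, sd), jnd_hz(cumulative_dprime(m, sd)))

# Summary statistics with unit spread: each successive pair has d' = 1.
print(jnd_hz(cumulative_dprime([1, 2, 3, 4, 5, 6, 7], [1.0] * 7)))
```

With the cap of three and six successive pairs, the cumulative d′ cannot exceed 18, so under these assumptions the smallest jnd the procedure can report is 600/18, or about 33 Hz.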

B. Results

1. Normal-hearing participants

The normal-hearing listeners were expected to select vowel category centers with first and second formant values that corresponded to those typical in vowel production in American English. Figure 1 shows the mean vowel categories obtained from the group of normal-hearing subjects, for the male-voice stimulus set.2 Each category center is shown along with error bars denoting the “size” of each category, that is, the relative spread of the category in both formant dimensions. The error bars represent the standard deviation from the category centers in both formant dimensions. In panel (A) of this figure, on the left, all of the ratings have been used to calculate the center and size of all ten vowel categories. Panel (B) of this figure, on the right, shows the vowel space of normal-hearing subjects calculated using only ratings of four and above. The rating of four was chosen because it was the highest rating that still allowed for category sizes to be calculated for all ten vowels of all of the normal-hearing and CI participants. The center of each category was determined by weighting the Bark values (in each dimension) of all synthetic stimuli that were chosen by their goodness ratings, and then averaging the weighted values.

FIG. 1.

The mean vowel space of normal-hearing listeners, calculated (A) using all of the ratings provided and (B) using only ratings of four or above.

The perceptual spaces shown in Fig. 1 for the normal-hearing listeners demonstrate that the method-of-adjustment technique for measuring vowel categories can be successfully used to generate vowel spaces that display the typical intervowel relationships that have been observed in F1 by F2 spaces generated from vowel production data (Peterson and Barney, 1952; Hillenbrand et al., 1995). For instance, front vowel category centers have a higher F2 than back vowel centers; high vowel category centers have a lower F1 than low vowel category centers. While no vowel production data have been reported for English speakers from central Indiana,3 Hillenbrand et al. (1995) examined the vowel production spaces of 45 men, 48 women, and 46 children who were native speakers of American English of the variety spoken in southern Michigan. The study by Hillenbrand et al. (1995) was a replication and extension of the classic work on the acoustics of American English vowels carried out by Peterson and Barney (1952). The two panels in Fig. 2 show the plots of the vowel production centers from these two studies compared with the MOA vowel category centers obtained from the normal-hearing subjects in the present study (calculated using ratings of 4 and above). Panel (A) shows the vowel category centers from the male talkers in Hillenbrand et al. (1995). Panel (B) shows the vowel category centers from the male talkers in Peterson and Barney (1952). While there are differences in the exact locations of the vowel perception and vowel production centers between the two studies, all three vowel spaces (the MOA perceptual vowel space, and the vowel production spaces of Peterson and Barney (1952) and Hillenbrand et al. (1995)) show a common set of F1 and F2 relations between vowels. The first and second formants from the normal-hearing listeners were significantly correlated with their counterparts in both the Hillenbrand et al. 
(1995) set (r=+0.98, p<0.01 for F1; r=+0.89, p<0.01 for F2) and the Peterson and Barney (1952) set (r=+1.0, p<0.01 for F1; r=+0.83, p<0.05 for F2).

FIG. 2.

A comparison of the vowel category centers from the MOA task (in bold, connected by straight lines) with the vowel production centers (in plain text, connected by dotted lines) from (A) Hillenbrand et al. (1995) and (B) Peterson and Barney (1952).

2. Cochlear implant patients

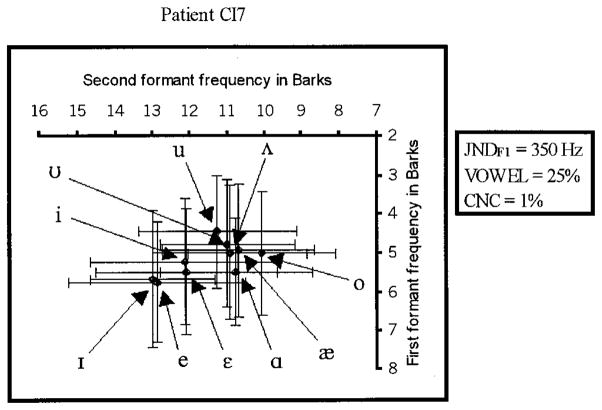

The results of the MOA, F1 jnd, and vowel perception tasks for the vowel spaces of the eight CI users are shown in Figs. 3–10. Unlike Figs. 1 and 2, Figs. 3–10 show individual CI user spaces, rather than an averaged group space. Individual spaces for CI users were presented separately given the large potential individual differences in the CI vowel spaces and the stated goal of this research in examining individual differences in the CI user population. Each figure shows the labeled centers of the ten vowel categories. The size of each category in each dimension is indicated by error bars. The centers of the categories were computed using the mean in each dimension weighted by the rating given to each synthetic stimulus, using ratings of only four or above, just as in the normal-hearing listeners’ vowel space in panel (B) of Fig. 1. The category sizes represent the standard deviation from the category center in the F1 and F2 dimensions. To the right of each CI user’s vowel space, the results of his/her F1 jnd (jndF1), vowel perception (VOWEL), and CNC word list (CNC) tests are listed.
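The category-center and category-size computation described above can be sketched as follows. This is a minimal illustration rather than the analysis code used in the study: the function name `category_stats`, the tuple layout, and the example values are hypothetical, and the sketch assumes that the category size is the rating-weighted standard deviation about the weighted center (the text does not state whether the standard deviation was weighted).

```python
import math

def category_stats(selections, min_rating=4):
    """Return ((f1_center, f2_center), (f1_sd, f2_sd)) for one vowel category.

    selections: iterable of (f1_bark, f2_bark, rating) tuples, one per
    synthetic stimulus chosen for the vowel (hypothetical layout).
    """
    # Keep only stimuli rated at or above the threshold (four in the text).
    kept = [(f1, f2, r) for f1, f2, r in selections if r >= min_rating]
    if not kept:
        raise ValueError("no stimuli at or above the rating threshold")
    total = sum(r for _, _, r in kept)
    # Rating-weighted mean in each formant dimension.
    c1 = sum(f1 * r for f1, _, r in kept) / total
    c2 = sum(f2 * r for _, f2, r in kept) / total
    # Assumption: rating-weighted standard deviation about the center.
    sd1 = math.sqrt(sum(r * (f1 - c1) ** 2 for f1, _, r in kept) / total)
    sd2 = math.sqrt(sum(r * (f2 - c2) ** 2 for _, f2, r in kept) / total)
    return (c1, c2), (sd1, sd2)

# Illustrative values only (not data from the study).
example = [(3.0, 13.0, 5), (3.5, 13.5, 4), (5.0, 11.0, 2)]
center, size = category_stats(example)
print(center, size)
```

In this example the third stimulus is excluded because its rating falls below four, so the center is pulled toward the stimulus with the higher rating.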

FIG. 3.

The vowel space of patient CI1.

FIG. 10.

The vowel space of patient CI8.

An examination of all eight vowel spaces reveals little evidence of any systematic shift due to a lack of adaptation to a basalward spectral shift; the one possible exception is CI1’s space. Instead, we find individual CI user spaces that differ from the normal space in terms of the sizes of perceptual categories, their degree of overlap, and the region of perceptual space that particular categories occupy. These observations are supported by measures of the differences between CI and normal vowel categories. Table V lists the signed differences, in Bark, between the category centers of the normal-hearing listeners and those of each individual CI user, in both F1 and F2. Positive differences indicate that the formant frequency (F1 or F2) of the normal-hearing listeners for that vowel was greater than the equivalent formant frequency of the CI patient. If a shift were observable with a particular CI user, one would expect to see positive differences in one or both of the formants of most of the vowels of that user. Of the eight CI users, seven showed both positive and negative differences, depending on the vowel and formant in question. Only one subject, CI1, showed systematically lowered formants for her category centers, as would be expected for a listener who has not adapted completely to the spectral shift introduced by the cochlear implant. In Fig. 3, CI1’s categories are clearly shifted toward the lowest formant values in the upper right corner, resulting in a more compressed space. However, the magnitude of this shift was not the same for all ten vowels, or for both formants. The magnitude of the shift varied from 0.28 Z to 3.53 Z, with a greater shift observed, on average, in F1 than in F2. Surprisingly, this shift was not accompanied by a high jndF1 or low scores on the vowel identification and CNC word recognition tests. This subject did differ from the other seven in terms of her native dialect (British English) and in her professional background as a speech-language pathologist. 
It is possible, but difficult to determine, that her background biased her in some manner to provide a shifted vowel space in the MOA task.

TABLE V.

The differences in Bark between normal and CI vowel categories, for each formant (F), for individual vowel categories, and the mean difference across all categories.

| Patient | F | i | ɪ | e | ɛ | æ | ʌ | ɑ | u | U | o | Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CI1 | F1 | 0.43 | 1.06 | 1.79 | 2.37 | 3.53 | 0.62 | 2.82 | 0.45 | 1.32 | 1.52 | 1.59 |
| | F2 | 0.28 | 0.8 | 1.09 | 1.46 | 1.46 | 0.39 | 0.57 | 1.41 | 2.35 | 0.66 | 1.05 |
| CI2 | F1 | 0.05 | −1.7 | 0.02 | 0.14 | −0.86 | −1.16 | −0.93 | −0.52 | 0.07 | −0.92 | −0.58 |
| | F2 | −0.13 | 0.31 | −0.77 | −1.13 | 0.52 | −0.19 | 0.57 | 0.24 | 1.97 | −4.1 | −0.27 |
| CI3 | F1 | −0.62 | −0.57 | −1.47 | −1.65 | −0.75 | 0.62 | −0.15 | 0.2 | 0.09 | 1.15 | −0.32 |
| | F2 | 0.27 | 0.2 | 0.06 | 0.29 | 1.16 | 0.64 | 0.43 | −1.29 | 1.54 | 0.21 | 0.35 |
| CI4 | F1 | −0.47 | 0.39 | −0.35 | 0.08 | 0.09 | −0.44 | 1.04 | −0.11 | 0.89 | 0.63 | 0.18 |
| | F2 | 0.24 | −1.3 | −0.59 | −0.81 | −0.53 | −0.57 | −1.83 | 0.46 | −0.6 | 0 | −0.55 |
| CI5 | F1 | −2.01 | −2.91 | −0.01 | −0.89 | −0.31 | 0.29 | −0.1 | −0.55 | −0.69 | 0.12 | −0.71 |
| | F2 | 0.05 | 2.08 | −0.1 | 0.13 | 4.31 | −0.38 | −0.26 | −1.07 | −0.14 | −2.07 | 0.26 |
| CI6 | F1 | −2.03 | −1.23 | −1.16 | −1.2 | 0.44 | 0.51 | 0.6 | −0.14 | −0.65 | 0.86 | −0.4 |
| | F2 | 0.3 | −0.45 | 0.38 | −1.04 | 0.9 | 0.75 | −1.08 | 0.1 | 0.6 | −0.38 | 0.01 |
| CI7 | F1 | −2.09 | −1.74 | −0.94 | −0.27 | 1.97 | 0.67 | 1.49 | −1.25 | −0.62 | −0.49 | −0.33 |
| | F2 | 2.57 | 0.15 | 1.33 | 1.35 | 2.73 | −0.27 | −2.09 | −2.49 | −1.01 | −2.15 | 0.01 |
| CI8 | F1 | −2.18 | −0.6 | −0.06 | −0.05 | 0.65 | −0.08 | 0.93 | −2.22 | −1.28 | −1 | −0.59 |
| | F2 | 3.42 | 1.23 | 2.72 | 1.1 | 1.85 | −1.89 | −2.47 | −2.71 | −2.34 | −2.93 | −0.2 |
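The sign pattern in Table V can be checked with a short script. The sketch below simply transcribes the per-vowel differences from the table (vowel order as in the table header) and counts, for each CI user, how many of the 20 differences are positive. The names `table_v` and `positive_count` are illustrative and not part of the original analysis.

```python
# Table V differences (normal-hearing minus CI, in Bark); ten vowels per row.
table_v = {
    "CI1": {"F1": [0.43, 1.06, 1.79, 2.37, 3.53, 0.62, 2.82, 0.45, 1.32, 1.52],
            "F2": [0.28, 0.8, 1.09, 1.46, 1.46, 0.39, 0.57, 1.41, 2.35, 0.66]},
    "CI2": {"F1": [0.05, -1.7, 0.02, 0.14, -0.86, -1.16, -0.93, -0.52, 0.07, -0.92],
            "F2": [-0.13, 0.31, -0.77, -1.13, 0.52, -0.19, 0.57, 0.24, 1.97, -4.1]},
    "CI3": {"F1": [-0.62, -0.57, -1.47, -1.65, -0.75, 0.62, -0.15, 0.2, 0.09, 1.15],
            "F2": [0.27, 0.2, 0.06, 0.29, 1.16, 0.64, 0.43, -1.29, 1.54, 0.21]},
    "CI4": {"F1": [-0.47, 0.39, -0.35, 0.08, 0.09, -0.44, 1.04, -0.11, 0.89, 0.63],
            "F2": [0.24, -1.3, -0.59, -0.81, -0.53, -0.57, -1.83, 0.46, -0.6, 0]},
    "CI5": {"F1": [-2.01, -2.91, -0.01, -0.89, -0.31, 0.29, -0.1, -0.55, -0.69, 0.12],
            "F2": [0.05, 2.08, -0.1, 0.13, 4.31, -0.38, -0.26, -1.07, -0.14, -2.07]},
    "CI6": {"F1": [-2.03, -1.23, -1.16, -1.2, 0.44, 0.51, 0.6, -0.14, -0.65, 0.86],
            "F2": [0.3, -0.45, 0.38, -1.04, 0.9, 0.75, -1.08, 0.1, 0.6, -0.38]},
    "CI7": {"F1": [-2.09, -1.74, -0.94, -0.27, 1.97, 0.67, 1.49, -1.25, -0.62, -0.49],
            "F2": [2.57, 0.15, 1.33, 1.35, 2.73, -0.27, -2.09, -2.49, -1.01, -2.15]},
    "CI8": {"F1": [-2.18, -0.6, -0.06, -0.05, 0.65, -0.08, 0.93, -2.22, -1.28, -1],
            "F2": [3.42, 1.23, 2.72, 1.1, 1.85, -1.89, -2.47, -2.71, -2.34, -2.93]},
}

def positive_count(user):
    """Number of the 20 differences (10 vowels x 2 formants) that are > 0."""
    return sum(d > 0 for formant in table_v[user].values() for d in formant)

# Users whose category centers are lower than normal for every vowel and formant.
all_positive = [u for u in table_v if positive_count(u) == 20]
print(all_positive)  # -> ['CI1']

# Range of CI1's shift across both formants.
ci1 = table_v["CI1"]["F1"] + table_v["CI1"]["F2"]
print(min(ci1), max(ci1))  # -> 0.28 3.53
```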

The results shown in Figs. 3–10 indicate that most CI users adapted to their frequency-shifted input, although they varied widely in the degree to which their vowel spaces were similar to the vowel spaces of normal-hearing listeners. Moreover, this adaptation by CI users did not seem to be related to the depth of insertion of the electrode array of their implant. Table III listed the predicted basalward spectral shifts of the eight CI users in F1 and F2. If the individual differences in the magnitude of the predicted shifts were responsible for individual differences in vowel spaces, then we would expect to see a correlation between the actual differences between the normal and CI user category centers and the predicted shifts in each formant. In fact, neither of these two correlations was significant using Spearman rank order correlations. Thus despite the different “starting points” of individual CI users in learning to normalize for spectrally shifted input, most patients seem to manage to completely adapt after, at the most, one year of experience.

While most CI users showed little evidence of a lack of adaptation to the basalward spectral shift, many of their vowel category centers differed from those of the normal-hearing listeners in nonsystematic ways. For example, the /e/ and /ɛ/ categories of CI3 are shifted lower with respect to the equivalent normal categories; /i/ and /ɪ/ of CI4 overlap almost completely with one another; and all of CI7 and CI8’s categories are centralized and involve massive overlap. There are no obvious explanations for such discrepant category centers. For some categories at the periphery of the space, the centers may have differed from those of normal-hearing listeners if the grid did not encompass the entire relevant F1*F2 space for that listener. However, that would not account for the “interior” categories (mapped entirely out in the grid provided) that still differ from those of normal-hearing listeners (e.g., CI5’s /U/).

The CI users’ vowel spaces also differed from the average normal vowel space in terms of vowel category size. This pattern indicates differences in frequency discrimination ability. Among the CI users’ vowel spaces, we find several that are composed of categories that overlap very little, and that appear to be in roughly the same regions in perceptual space as those obtained from normal-hearing listeners. Examples of such “normal” CI spaces are those from CI2 and CI3. In these spaces, front and back vowels occupy their “normal” regions of perceptual space. The sizes of the categories in these spaces are not much greater than those of normal-hearing listeners. In contrast, the vowel spaces for CI7 and CI8 show much larger categories and a great deal of overlap between front and back vowels. The size of these categories and their arrangement in perceptual space suggest that these users might have great difficulty in using spectral information to discriminate among vowel sounds, or in identifying particular vowels in running speech. The vowel spaces of the four other CI users fall between these two extremes on a continuum characterized by category location and size.

These observations are supported by the difference measures displayed in Table V, and also by the mean sizes of categories (in Bark) for each CI space. These category sizes are shown in Table VI, for both the F1 and F2 dimensions, along with the mean sizes averaged over both F1 and F2. The sizes for each perceptual dimension were calculated by averaging the Bark distances encompassed by one error bar in each formant dimension of each category center to get a mean category size for a given subject. Below the individual CI user measures are the averages across all CI users (“CI”) and the equivalent averages for all normal-hearing listeners (“NH”). The differences in all three measures between the CI users and the normal-hearing listeners were on the order of a 3:1 ratio, and all were significant at the p<0.01 level (F1: t=9.1, df=81.1; F2: t=6.9, df=81; F1 and F2 combined: t=10.6, df=163.6).

TABLE VI.

The mean size of individual CI user’s categories in the F1 dimension, the F2 dimension, and both dimensions, along with the mean category sizes across all normal-hearing (NH) and CI users’ categories. Size refers to one standard deviation (one error bar) from the vowel category center.

| Patient | F1 (Z) | F2 (Z) | F1 and F2 (Z) |
|---|---|---|---|
| CI1 | 0.23 | 0.17 | 0.2 |
| CI2 | 0.22 | 0.36 | 0.29 |
| CI3 | 0.37 | 0.37 | 0.37 |
| CI4 | 0.75 | 0.65 | 0.7 |
| CI5 | 0.7 | 1.05 | 0.88 |
| CI6 | 1.01 | 0.98 | 0.99 |
| CI7 | 1.6 | 2.1 | 1.85 |
| CI8 | 1.33 | 2.48 | 1.9 |
| Mean CI | 0.78 | 1.02 | 0.9 |
| Mean NH | 0.25 | 0.40 | 0.33 |
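As a quick arithmetic check on Table VI, the per-user sizes can be averaged and compared with the normal-hearing means. The sketch below uses only the values printed in the table; the variable names are illustrative, and the ratios are computed from the rounded table entries, so they are approximate.

```python
# Per-user mean category sizes from Table VI, in Bark: (F1, F2).
ci_sizes = {
    "CI1": (0.23, 0.17), "CI2": (0.22, 0.36), "CI3": (0.37, 0.37),
    "CI4": (0.75, 0.65), "CI5": (0.7, 1.05), "CI6": (1.01, 0.98),
    "CI7": (1.6, 2.1), "CI8": (1.33, 2.48),
}
nh_mean = (0.25, 0.40)  # "Mean NH" row of Table VI

n = len(ci_sizes)
ci_mean_f1 = sum(f1 for f1, _ in ci_sizes.values()) / n
ci_mean_f2 = sum(f2 for _, f2 in ci_sizes.values()) / n

# Ratio of CI to normal-hearing category size in each dimension.
ratio_f1 = ci_mean_f1 / nh_mean[0]
ratio_f2 = ci_mean_f2 / nh_mean[1]

print(round(ci_mean_f1, 2), round(ci_mean_f2, 2))  # -> 0.78 1.02
print(round(ratio_f1, 1), round(ratio_f2, 1))
```

Both ratios fall between roughly 2.5 and 3.1, in line with the approximate 3:1 relation stated in the text.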

The vowel spaces of CI2 and CI3, the relatively “normal” spaces, have the lowest mean category sizes (along with CI1), while CI7 and CI8 have the highest. Large vowel categories typically overlap with one or more neighboring categories in perceptual space, which may be predictive of potential problems in the perception of vowels in natural speech. These findings are consistent with the results of the F1 jnd and vowel identification tests: the worst performers on these tests were also the subjects whose vowel spaces included the largest, overlapping categories. The pattern of results is reflected in Spearman rank order correlations between the category size measures and the F1 jnd and vowel identification tests. The F1 jnd score correlated significantly with category size in F1 (r=+0.76, p<0.05), while the vowel identification score correlated significantly with all three measures (F1: r=−0.91, p<0.05; F2: r=−0.98, p<0.01; F1 and F2: r=−0.95, p<0.05). All of the other correlations were only marginally significant. Thus there was a significant positive correlation between category size, as measured in this study, and performance on F1 discrimination, and a significant negative correlation between category size and vowel identification.
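For readers unfamiliar with the statistic, a Spearman rank order correlation can be sketched as the Pearson correlation of the ranked values. The function names below are illustrative, the handling of ties by average ranks is an assumption about the procedure, and the example vectors are toy values, not data from this study (the individual jnd and identification scores are reported elsewhere in the paper).

```python
import math

def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def spearman_rho(x, y):
    """Spearman correlation: Pearson correlation computed on the ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    return cov / math.sqrt(vx * vy)  # undefined if either input is constant

# Toy values only (not data from the study).
rho = spearman_rho([1, 2, 3, 4, 5], [2, 1, 4, 3, 5])
print(round(rho, 2))  # -> 0.8
```

Because only ranks enter the computation, the statistic is insensitive to the scale of either measure, which suits a comparison between category sizes in Bark and percent-correct scores.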

In addition, the three category size measures also correlated very strongly with the CNC word recognition scores obtained from the CI users (F1: r=−0.88, p<0.05; F2: r=−0.95, p<0.05; F1 and F2: r=−0.91, p<0.05). In other words, there was a significant negative correlation between category size and performance on the word identification test. The CNC word lists measure listeners’ ability to perform open-set word identification. They thus require listeners to access their mental lexicons, making the task more similar to speech perception in natural settings than the MOA or phonetic identification tests reported here. The correlations between the CNC word recognition scores and the category size results indicate that the MOA procedure provides useful information for characterizing the speech perception abilities of CI users.

III. DISCUSSION AND CONCLUSIONS

Despite the large individual differences observed among cochlear implant users in all three tests used in this study, the results revealed an orderly relationship between the perceptual vowel spaces of these listeners and their performance on the F1 jnd and the vowel identification tests. In Sec. I, we discussed two potential factors that may limit vowel identification by cochlear implant users: reduced formant frequency discrimination and lack of adaptation to basalward spectral shift. Overall, the results show that the major problem in vowel identification in these listeners appears to involve formant frequency discrimination. Only one of the eight CI users, CI1, showed a systematic shift of her vowel space that was consistent with limited auditory adaptation. For each vowel, she selected regions with lower F1 and F2 than those selected by normal-hearing listeners. This result is consistent with the hypothesis that she has not completely adapted to the basalward spectral shift and thus selects vowels with very low formants as the best exemplars, to compensate for the frequency shift imposed by the cochlear implant.

Other than CI1, none of the CI subjects showed evidence of any systematic shifts in their perceptual vowel spaces, suggesting that they were able to adapt to the frequency shift introduced by the cochlear implant. However, CI users clearly differ in the size of their vowel categories, reflecting differences in their ability to discriminate small differences in formant frequency. For example, CI7 and CI8 were the two CI users with the poorest jndF1 values, vowel identification scores, and CNC word recognition scores. Their vowel spaces showed substantial overlap among most vowel regions. In contrast, CI users such as CI1 and CI2, with the best (smallest) jndF1 values and high vowel identification and CNC word recognition scores, tended to have little overlap among vowel regions.

Our results on perceptual vowel spaces are consistent with those reported recently by Hawks and Fourakis (1998). Their cochlear implant subjects also showed widely divergent amounts of overlap among vowel categories obtained using an identification task with synthetic stimuli. In terms of adaptation to basalward spectral shift, the present results are interesting because they suggest that most cochlear implant users are able to adapt to the frequency shifts typically introduced by their devices. However, although CI1’s space was abnormal and thus not the best example of incomplete adaptation, her results do remind us that not all listeners may be able to adapt in a similar way. In addition, perhaps more listeners would find it difficult to adapt if the spectral shift were greater than that estimated for the cochlear implant subjects in this study. Moreover, the larger categories of the CI users could themselves reflect incomplete adaptation to basalward spectral shift, as the spectral smear hypothesis suggests.

In summary, the data reported in this paper suggest that vowel perception by cochlear implant users may be limited by the listener’s formant frequency discrimination skills, in combination with his/her ability to adapt to basalward spectral shift. The present findings also have implications for understanding the perception of consonant sounds by cochlear implant users, because formant frequencies not only provide cues for vowel perception but also provide important information for consonant recognition. In future studies we intend to explore longitudinal changes in adaptation to basalward shift and in frequency discrimination, in order to investigate the nature of the improvement in speech perception observed in most postlingually deaf cochlear implant users after they receive their devices. An additional avenue for research may involve applying the same methods used in this study to prelingually deafened pediatric CI users. In this case, however, we would not expect to see major frequency shifts in the vowel spaces of children because they would not have prior expectations concerning the location of vowels in the vowel space. Instead, the frequency discrimination skills of prelingually deafened pediatric CI users may be, on average, even worse than those of postlingually deafened adult CI users. Finally, it may be interesting to determine whether the listeners who show incomplete adaptation have less behavioral plasticity than the other listeners, or whether their lack of adaptation is due to a shallower insertion, which would result in a greater spectral shift to be overcome by the listener. Taken together, these studies should help us account for the enormous individual differences in speech perception by CI users, which should provide the theoretical rationale for improved devices and intervention strategies in this clinical population.

FIG. 4.

The vowel space of patient CI2.

FIG. 5.

The vowel space of patient CI3.

FIG. 6.

The vowel space of patient CI4.

FIG. 7.

The vowel space of patient CI5.

FIG. 8.

The vowel space of patient CI6.

FIG. 9.

The vowel space of patient CI7.

Acknowledgments

This work was supported by NIH-NIDCD Training Grant No. DC00012, NIH-NIDCD Grant No. R01-DC00111, and NIH-NIDCD Grant No. R01-DC03937. We would like to thank Dr. Richard Miyamoto for providing the electrode insertion information for the cochlear implant users. We would also like to thank two anonymous reviewers for their helpful comments, as well as Chris Quillet for his technical assistance.

Footnotes

1. Some stimuli were slightly louder than others, but these small differences in loudness should be very hard to perceive for most CI users.

2. The female stimulus set results were not included in the normal-hearing listeners’ averaged space because the CI users were only presented with the male stimulus set.

3. However, work is in progress in our laboratory on a vowel production study of Central Indiana English, using as talkers the same normal-hearing listeners that participated in this study.

References

- Blamey PJ, Dooley GJ, Parisi ES, Clark GM. Pitch comparisons of acoustically and electrically evoked auditory sensations. Hear Res. 1996;99:139–150. doi: 10.1016/s0378-5955(96)00095-0.

- Dorman MF. Speech perception by adults. In: Tyler RS, editor. Cochlear Implants. Singular Publishing; San Diego: 1993. pp. 145–190.

- Durlach NI, Braida LD. Intensity perception. I. Preliminary theory of intensity resolution. J Acoust Soc Am. 1969;46:372–383. doi: 10.1121/1.1911699.

- Eddington DK, Dobelle WH, Brackmann DE, Mladejovsky MG, Parkin JL. Auditory prostheses research with multiple channel intracochlear stimulation in man. Ann Otol Rhinol Laryngol. 1978;53:5–39.

- Flanagan J. Estimates of the maximal precision necessary in quantizing certain ‘dimensions’ of vowel sounds. J Acoust Soc Am. 1957;29:533–534.

- Fu QJ, Shannon RV. Effects of electrode location and spacing on phoneme recognition with the Nucleus-22 Cochlear Implant. Ear Hear. 1999a;20:321–331. doi: 10.1097/00003446-199908000-00005.

- Fu QJ, Shannon RV. Effects of electrode configuration and frequency allocation on vowel recognition with the Nucleus-22 Cochlear Implant. Ear Hear. 1999b;20:332–344. doi: 10.1097/00003446-199908000-00006.

- Fu Q-J, Shannon RV, Galvin J. Performance over time of Nucleus-22 cochlear implant listeners wearing a speech processor with a shifted frequency-to-electrode assignment. J Acoust Soc Am submitted.

- Gimson AC. An Introduction to the Pronunciation of English. Edward Arnold; London: 1962.

- Greenwood DD. Critical bandwidth and the frequency coordinates of the basilar membrane. J Acoust Soc Am. 1961;33:1344–1356.

- Gstoettner W, Hamzavi J, Czerny C. Rehabilitation of patients with hearing loss by cochlear implants. Radiologe. 1997;37:991–994. doi: 10.1007/s001170050312.

- Hawks JW, Fourakis MS. Perception of synthetic vowels by cochlear implant recipients. J Acoust Soc Am. 1998;104:1854–1855.

- Hillenbrand J, Getty LA, Clark MJ, Wheeler K. Acoustic characteristics of American English vowels. J Acoust Soc Am. 1995;97:3099–3111. doi: 10.1121/1.411872.

- Hinojosa R, Marion M. Histopathology of profound sensorineural deafness. Ann NY Acad Sci. 1983;405:459–484. doi: 10.1111/j.1749-6632.1983.tb31662.x.

- Johnson K, Flemming E, Wright R. The hyperspace effect: Phonetic targets are hyperarticulated. Language. 1993;69:505–528.

- Ketten DR, Skinner MW, Wang G, Vannier MW, Gates GA. In vivo measures of cochlear length and insertion depth of nucleus cochlear implant electrode arrays. Ann Otol Rhinol Laryngol. 1998;175:1–16.

- Kewley-Port D, Watson CS. Formant-frequency discrimination for isolated English vowels. J Acoust Soc Am. 1994;95:485–496. doi: 10.1121/1.410024.

- Kewley-Port D, Zheng Y. Auditory models of formant frequency discrimination for isolated vowels. J Acoust Soc Am. 1998;103:1654–1666. doi: 10.1121/1.421264.

- Klatt DH, Klatt LC. Analysis, synthesis, and perception of voice quality variations among female and male talkers. J Acoust Soc Am. 1990;87:820–857. doi: 10.1121/1.398894.

- Labov W. The three dialects of English. In: Eckert P, editor. New Ways of Analyzing Sound Change. Academic; New York: 1991. pp. 1–44.

- Levitt H. Decision theory, signal-detection theory, and psychophysics. In: David EE, Denes PB, editors. Human Communication: A Unified View. McGraw-Hill; New York: 1972. pp. 114–174.

- Nelson DA, Van Tasell DJ, Schroder AC, Soli S, Levine S. Electrode ranking of ‘place pitch’ and speech recognition in electrical hearing. J Acoust Soc Am. 1995;98:1987–1999. doi: 10.1121/1.413317.

- Peterson GE, Barney HL. Control methods used in the study of vowels. J Acoust Soc Am. 1952;24:175–184.

- Pilch H. Manual of English Phonetics. W. Fink; Munchen: 1994.

- Rosen S, Faulkner A, Wilkinson L. Adaptation by normal listeners to upward spectral shifts of speech: Implications for cochlear implants. J Acoust Soc Am. 1999;106:3629–3636. doi: 10.1121/1.428215.

- Skinner MW, Holden LK, Holden TA. Effect of frequency boundary assignment on speech recognition with the SPEAK speech-coding strategy. Ann Otol Rhinol Laryngol. 1995;166:307–311.

- Skinner MW, Ketten DR, Vannier MW, Gates GA, Yoffie RL, Kalender WA. Determination of the position of nucleus cochlear implant electrodes in the inner ear. Am J Otolaryngol. 1994a;15:644–651.

- Skinner MW, Clark GM, Whitford LA, Seligman PM, Staller SJ, Shipp DB, Shallop JK, Everingham C, Menapace CM, Arndt PL, Antognelli T, Brimacombe JA, Pijl S, Daniels P, George CR, McDermott H, Beiter AL. Evaluation of a new spectral peak coding strategy for the Nucleus 22 channel cochlear implant system. Am J Otolaryngol. 1994b;15 (Suppl 2):15–27.

- Tyler RS, Preece JP, Lowder MW. Laser Videodisk and Laboratory Report. University of Iowa at Iowa City, Department of Otolaryngology-Head and Neck Surgery; 1987. The Iowa audiovisual speech perception laser video disk.

- Wilson BS, Finley CC, Lawson DT, Wolford RD, Eddington DK, Rabinowitz WM. Better speech recognition with cochlear implants. Nature (London) 1991;352:236–238. doi: 10.1038/352236a0.

- Wolfram W, Schilling-Estes N. American English. Blackwell; Malden, MA: 1998.