Summary

Objective

We adapted a behavioral procedure that has been used extensively with normal-hearing (NH) infants, the visual habituation (VH) procedure, to assess deaf infants’ attention to speech and their ability to discriminate speech sounds.

Methods

Twenty-four NH 6-month-olds, 24 NH 9-month-olds, and 16 deaf infants at various ages before and following cochlear implantation (CI) were tested in a sound booth on their caregiver’s lap in front of a TV monitor. During the habituation phase, each infant was presented with a repeating speech sound (e.g. ‘hop hop hop’) paired with a visual display of a checkerboard pattern on half of the trials (‘sound trials’) and only the visual display on the other half (‘silent trials’). When the infant’s looking time decreased and reached a habituation criterion, a test phase began. This consisted of two trials: an ‘old trial’ that was identical to the ‘sound trials’ and a ‘novel trial’ that consisted of a different repeating speech sound (e.g. ‘ahhh’) paired with the same checkerboard pattern.

Results

During the habituation phase, NH infants looked significantly longer during the sound trials than during the silent trials. However, deaf infants who had received cochlear implants (CIs) displayed a much weaker preference for the sound trials. On the other hand, both NH infants and deaf infants with CIs attended significantly longer to the visual display during the novel trial than during the old trial, suggesting that they were able to discriminate the speech patterns. Before receiving CIs, deaf infants did not show any preferences.

Conclusions

Taken together, the findings suggest that deaf infants who receive CIs are able to detect and discriminate some speech patterns. However, their overall attention to speech sounds may be less than NH infants’. Attention to speech may impact other aspects of speech perception and spoken language development, such as segmenting words from fluent speech and learning novel words. Implications of the effects of early auditory deprivation and age at CI on speech perception and language development are discussed.

Keywords: Speech perception, Deaf infants, Cochlear implantation

1. Introduction

Recent advances in cochlear implant (CI) technology have given an increasing number of deaf individuals access to sound. For prelingually deaf children, CIs provide a novel sensory input and a means of learning spoken language. The success of cochlear implantation in enabling deaf children to learn spoken language has led to a broadening of candidacy criteria to include increasingly young children. In 2000, the US Food and Drug Administration approved cochlear implantation for children as young as 1 year of age, and some surgeons are providing CIs to infants under 1 year of age when circumstances warrant it, such as the possibility of cochlear ossification or a clear lack of benefit from conventional hearing aids.

The population of early-implanted deaf infants is likely to increase substantially because of the broadening of candidacy criteria and because hearing loss can now be detected at younger ages due to new screening methods. Position statements and guidelines from the Joint Committee on Infant Hearing [1] and the American Academy of Pediatrics [2] have persuaded most state lawmakers to implement Universal Newborn Hearing Screening (UNHS), which requires hospitals to test the hearing of all newborns. Currently, 38 states in the US and several countries throughout Europe and the rest of the world have adopted or will soon adopt UNHS.

The trend to detect and identify hearing loss and to intervene at younger ages is driven by the general belief that the earlier in development a child has access to sound and hearing, the better the chances that he or she will acquire spoken language skills comparable to those of normal-hearing (NH) children. Investigations conducted at a number of CI research centers support this view. Several investigators have shown that deaf children who receive CIs at younger ages tend to perform better on spoken language comprehension and production tasks than deaf children who receive CIs at older ages [3–5]. For example, in one recent study, Kirk et al. [6] measured children’s receptive and expressive language skills every 6 months for up to 2 years following cochlear implantation. They found that the rate of improvement on the language measures was greater for children implanted before 2 years of age than for children implanted between 2 and 4 years of age. Given these and similar findings, it is reasonable to expect that providing CIs to deaf infants at an earlier age (i.e. before 12 months of age) will yield even greater benefits for prelingually deaf children.

The new population of very young CI recipients presents challenges to both researchers and clinicians who are concerned with evaluating the benefits of CIs in children. While steady progress has been made in developing behavioral techniques to evaluate the speech and language skills of children aged 2 years or older, measuring these skills in infants who are too young to follow verbal instructions is extremely difficult. The only current methods of assessing speech and language outcomes in infants who use sensory aids rely exclusively on parental-report questionnaires [7]. At the present time, it is not known if providing CIs at even younger ages will lead to even greater benefits in speech and language outcomes. It is important that researchers and clinicians develop new behavioral methodologies to measure the perceptual and linguistic skills of these young infants before and after they receive their CIs and track, longitudinally, how these abilities develop and change over time.

In order to understand what kinds of speech perception and language skills we might expect from deaf infants following cochlear implantation, it is useful to consider how these skills typically develop in NH infants. Over the last 30 years, developmental scientists have used several behavioral procedures to investigate the perceptual and linguistic capacities of NH infants [8,9]. We briefly review some of these skills and discuss how attention to speech might be important for acquiring these skills. Then we report preliminary data on deaf infants’ attention to and discrimination of speech sounds. The results reported below represent the first effort to measure and describe some of the fundamental speech perception capacities of deaf infants following cochlear implantation.

2. Speech perception skills during the first year of life

2.1. Speech discrimination

The speech perception capacities that infants exhibit during the first 6 months of life appear to be general rather than language specific [8]. Infants are born equipped to learn any of the world’s languages. During the first half-year, NH infants are able to detect and discriminate fine-grained differences in speech sounds that differentiate words in any of the world’s languages. Numerous investigations have shown that young infants are able to discriminate vowels [10–12] and consonants that differ with respect to voicing [13], place [14–16], and manner [17,18] of articulation. Moreover, up to about 8 months of age, infants are able to detect and discriminate many phonetic contrasts that are not phonologically relevant in the ambient language but are relevant in other languages [11,19–22] (see [8] for a review).

During the second half of the first year of life, the initial, language-general speech perception capacities develop into language-specific speech perception skills [11,19,22,23]. For example, Werker and Tees [22] tested the ability of English-learning 6–8-month-olds and 10–12-month-olds to detect sound contrasts that were distinctive in Hindi but not in English. Only the younger infants could discriminate these contrasts. These findings suggest that sometime during the second 6 months of life, NH infants become less sensitive to acoustic-phonetic characteristics of speech that are not distinctive in their native language. This attenuation of perceptual sensitivity to nonnative speech contrasts reflects an important shift from language-general to language-specific speech perception skills based on early experience in the language-learning environment. Learning about the organization and properties of speech sounds and speech patterns in the ambient language helps infants discover how to segment continuous speech into words and provides the fundamental basis for learning words and acquiring a grammar [8].

2.2. Segmentation of words from fluent speech

In written language, words are separated by unambiguous spaces on a page. By contrast, words in spoken language are not reliably marked by pauses or by acoustic cues that are consistent across talkers and speaking rates. Speech is a continuous acoustic signal, whether it is directed to adults or to children and infants. Investigations of caregivers’ speech to infants have confirmed this: caregivers tend to address infants in fluent speech rather than speaking each word in isolation [24,25]. Although adults automatically use their knowledge of words in the ‘mental lexicon’ to facilitate word recognition in fluent speech [26], infants may not yet have any words in memory to rely on. To build a vocabulary of the language, infants must develop perceptual skills that allow them to recognize and extract the sound patterns of words from fluent speech and to organize them systematically in long-term lexical memory.

Over the past 10 years, developmental scientists have investigated the problem of segmentation in NH infants and the role of various linguistic cues to segmentation, such as rhythmic [27,28], statistical/distributional [29–31], coarticulatory [32], phonotactic [33,34], and allophonic [35] cues. Some of these cues, such as statistical/distributional and coarticulatory information, may be similar across languages, while others vary substantially from language to language. Infants learn language-specific segmentation cues by discovering the organization of sounds in the ambient language. Peter Jusczyk and colleagues have focused on when infants begin segmenting words from fluent speech and when they begin to use language-specific information in their segmentation strategies. In their seminal study, Jusczyk and Aslin [36] tested 6- and 7.5-month-olds’ ability to recognize words in fluent speech. During a familiarization phase, infants heard repetitions of two words presented in isolation (cup and dog, or bike and feet). During the test phase, the infants were presented with four passages: two contained the familiarized words, and two contained the other, unfamiliarized target words. The 7.5-month-olds, but not the 6-month-olds, attended significantly longer to the passages containing the familiarized words. Jusczyk and Aslin interpreted these results as evidence that by 7.5 months of age, infants are able to segment and recognize familiar words in fluent speech, even after only a brief period of exposure.

During the second half of the first year of life, infants develop greater sensitivity to language-specific attributes of speech that may facilitate speech segmentation. In one study, Jusczyk, Cutler, and Redanz [37] investigated English-learning infants’ sensitivity to the rhythmic properties of English words. Approximately 90% of content words in English begin with a stressed (or ‘strong’) syllable [38]. Jusczyk et al. [37] tested English-learning infants’ preferences for lists of bisyllabic words that follow the predominant strong/weak stress pattern of English (e.g. doctor, candle) versus lists of bisyllabic words that follow a weak/strong stress pattern (e.g. guitar, surprise). They found that 9-month-olds, but not 6-month-olds, attended significantly longer to lists of words that followed the predominant strong/weak stress pattern of English. In a subsequent study, Jusczyk, Houston, and Newsome [28] discovered that 7.5-month-old English-learning infants were able to segment strong/weak words, but not weak/strong words, from fluent speech (also see [27]). Taken together, these findings suggest that English-learning infants’ sensitivity to the rhythmic properties of words in their language plays an important role in their ability to segment words from fluent speech.

In addition to the rhythmic properties of spoken language, infants also attend to other language-specific aspects of speech that are useful for word segmentation. For example, by 9 months of age, infants appear to be sensitive to the phonotactic properties of speech [39,40]. Phonotactics refers to how sound segments (i.e. the phonemes of a language) are sequenced and ordered in different contexts. For example, the sequence /mt/ occurs more often between words than within words in English. Attention to these sequential properties can further inform infants about which sounds or sequences of sounds are more likely to occur within or between words, contributing to more mature and sophisticated speech segmentation skills. Indeed, in a recent study, Mattys and Jusczyk [33] found that by 9 months of age, English-learning infants can use phonotactic information to locate word boundaries.

At a somewhat more fine-grained level, variants (or allophones) of the same phoneme can also serve as word boundary cues [41,42]. For example, in English, aspirated stop consonants (such as the /tʰ/ in ‘top’) mark word beginnings because they do not occur in other word positions [43]. Jusczyk, Hohne, and Bauman [35] found that 10.5-month-old, but not 9-month-old, English-learning infants treat a two-syllable sequence as either ‘nitrates’ or ‘night rates,’ depending on the variant of /t/ they hear. Together with the results reported by Mattys and Jusczyk [33], the findings of Jusczyk et al. [35] suggest that infants’ sensitivity to language-specific properties of phonemes, their variants, and the constraints on their ordering influences how they segment words from fluent speech.

Recently, Jusczyk [8,44] has proposed that English-learning infants who have normal hearing may initially begin segmenting the speech stream using rhythmic information, simply assuming that every stressed syllable is a word onset. This may be a good ‘first-pass’ strategy for word segmentation: breaking the input signal into smaller, more manageable sound patterns allows infants to notice the internal organization of segments and other language-specific properties (e.g. phonotactic and allophonic properties) at different locations within words. As infants integrate multiple cues and learn to segment words from fluent speech, they begin the process of learning words and acquiring a grammar of the language. To do this, they must not only hear the speech in their immediate environment, but also attend to speech patterns so that they can encode their organization and structure and begin to recognize the repetition of similar patterns on different occasions. NH infants attend to and learn about the organization of sounds and sound patterns in their native language naturally, without any formal or explicit training. However, the same might not be true for congenitally deaf infants who have received CIs after some period of deafness. Because the early part of their development occurred with little if any auditory input, the neural mechanisms involved in speech perception, attention, and learning may be quite different from those of NH infants.

3. Consequences of early auditory deprivation on development of speech perception skills

The absence of sound during the first few months of life may affect neurobiological development at several points along the peripheral auditory pathway as well as in higher-level cortical areas. In a recent paper, Shepherd and Hardie [45] reviewed findings on changes in the auditory pathway caused by deafness. At the level of the cochlea, deafness leads to degeneration of spiral ganglion cells as well as reductions in the efficiency, spontaneous activity, and temporal resolution of auditory nerve fibers [45]. At the level of the central auditory pathway, bilateral hearing loss results in a reduction of synaptic density in the inferior colliculus. It is possible that auditory deprivation also affects auditory acuity after cochlear implantation. Leake et al. investigated this possibility using cats that were deafened for different lengths of time and then given cochlear implants [46,47]. They found that the spatial selectivity of electrode impulses in the inferior colliculus was affected by the duration of deafness and the amount of spiral ganglion cell degeneration.

At higher cortical levels, numerous studies over the last 40 years have shown that early sensory experience plays a critical role in the organization of the sensory cortices (see [48] for a review). When input from one sensory modality is unavailable, brain regions that normally subserve that modality appear to become more responsive to inputs from other sensory modalities [49]. Helen Neville et al. have investigated neural reorganization in humans using a variety of neuroimaging techniques. They have found that some regions of the auditory cortex that respond only to auditory information in NH individuals respond to some types of visual information in deaf individuals who have learned sign language [50].

Intercortical projections also appear to be affected by sensory deprivation. In deaf cats, Kral et al. recorded responses from different layers of the auditory cortex and found reduced synaptic activity in the infragranular layers, which output to other cortical regions [51]. Ponton and Eggermont [52] collected auditory evoked potentials from children who had a history of early-onset, profound bilateral hearing loss and who used cochlear implants. They found responses consistent with immature superficial cortical layers, which are important for intracortical and interhemispheric communication [52]. Taken together, these findings on neural development suggest that early auditory deprivation may impair or attenuate the development of neural pathways connecting the auditory cortex to other cortical areas of the brain. Connections between the auditory cortex and other cortices, particularly the frontal and prefrontal cortices, are important for establishing higher-level attentional and cognitive neural networks linked to auditory processing. Thus, to understand the effects of auditory deprivation on language development in young prelingually deaf infants, it is important to investigate not only auditory acuity after CI but also the processes involved in perception, attention, learning, and other cognitive skills that may be affected by the absence of sound during early development.

4. Assessing speech perception skills of deaf infants after cochlear implantation

Infants’ attention to and discrimination of speech sounds are crucial for further language acquisition. To assess these skills in infant CI users, we have constructed a new research laboratory within the ENT Clinic at the Indiana University School of Medicine (IUSM) for testing the speech perception and language skills of deaf infants before implantation and at regular intervals following cochlear implantation. One of the procedures we have adapted for this research program is the visual habituation (VH) procedure, which has been used extensively for the past three decades to assess the linguistic skills of NH infants [11,53,54]. Our goals in this initial research were to: (1) validate VH with this population of deaf and hard-of-hearing infants, and (2) use VH to track and assess infants’ attention to speech and measure their speech discrimination skills before and after receiving a cochlear implant.

Measuring and tracking the perceptual and linguistic development of young prelingually deaf infants who receive CIs are important for both clinical and theoretical reasons. From a clinical perspective, it is essential that new behavioral techniques be developed to assess the benefits of implanting deaf infants with CIs at very young ages and to measure changes in benefit and outcomes over time after implantation. From a theoretical perspective, this research provides a unique opportunity to compare language development of normally hearing infants to deaf infants who have been deprived of auditory input and then have their hearing restored at a later age via a CI. Do these deaf children follow the same developmental time course as NH infants, even though their early auditory experience was radically different? Also, how does the initial absence of auditory information affect infants’ subsequent ability to attend to and acquire spoken language? These are important fundamental questions that address neural development and behavior.

5. Experiment: visual habituation procedure

The VH procedure has been used extensively over the years to assess NH infants’ ability to discriminate speech contrasts [11,53]. In the standard implementation of VH, infants are first habituated to several trials of a repeating speech sound presented simultaneously with a visual display (e.g. a checkerboard pattern) during a habituation phase. The same stimuli are presented on each trial, and the infant’s looking times to the visual display are measured. When the infant’s looking time decreases and he or she reaches a predetermined habituation criterion, a novel auditory stimulus is presented with the same visual display that was used during habituation. An increase in looking time to the visual display when the novel auditory stimulus is presented is taken as evidence that the infant was able to detect the difference in the speech stimuli and respond to the novelty of the new sound pattern.

We have modified the VH procedure to assess infants’ attention to speech as well as their speech discrimination skills. During the initial habituation phase, half of the trials include an auditory stimulus (‘sound trials’). On the other half of the trials, the infants are presented with only the visual display (‘silent trials’). By comparing infants’ looking times to the visual display on sound and silent trials, we can obtain an objective measure of their attention to speech. In this study, both NH infants (6- and 9-month-olds) and deaf infants before and following cochlear implantation were tested to assess their attention to sound and to measure their speech discrimination skills. We believe that these basic skills are clinically relevant and important for understanding deaf infants’ potential for perceiving speech and learning spoken language. Another important goal of this investigation was to validate the VH procedure, that is, to determine whether it is a viable tool for use with a clinical population of infants whose speech perception and language-processing skills are completely unknown.

6. Method

6.1. Participants

To date, we have tested 16 prelingually deaf infants (eight female, eight male) who were enrolled in the IUSM’s cochlear implant program. Eleven deaf infants used the Nucleus 24 CI system, three used the MedEl CI system, and one used the Clarion CI system. Inclusion criteria were profound bilateral hearing loss, cochlear implantation before 2 years of age, and evidence of auditory detection at a pure-tone average of 50 dB within the first 3 months after CI (measured by visual reinforcement audiometry). The data from two infants (one female, one male) were excluded because they did not demonstrate auditory detection within 3 months after CI. Of the remaining 14 infants, eight were tested before cochlear implantation, seven were tested at least once at approximately 1 month post-CI, eight were tested at least once at approximately 3 months post-CI, and eight were tested at approximately 6 months post-CI. The deaf infants’ mean ages and age ranges are displayed in Table 1. One participant (CI01), the youngest cochlear implant recipient at IUSM, received a CI at 6 months of age. We have followed CI01 closely and report his individual data collected across multiple testing sessions. Finally, for comparison, we also tested 24 NH 6-month-olds and 24 NH 9-month-olds.

Table 1.

Age of deaf infants at test intervals

| Interval (n) | Mean age at testing (months) | Age range at testing (months) |
|---|---|---|
| Pre-implant (8) | 10.9 | 5.8–20.7 |
| 1 month post-CI (7) | 16.4 | 8.7–24.6 |
| 3 months post-CI (8) | 18.4 | 9.6–27.3 |
| 6 months post-CI (8) | 20.4 | 13.9–29.9 |

6.2. Apparatus

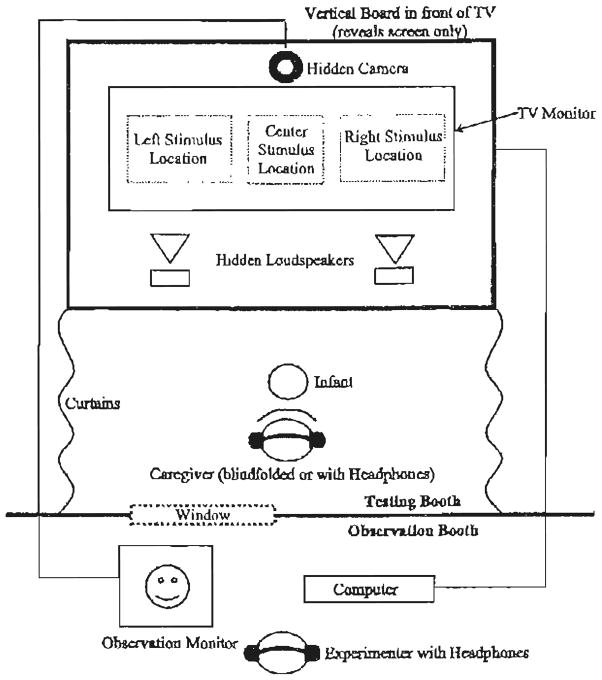

The testing was conducted in a custom-made, double-walled IAC sound booth. As shown in Fig. 1, infants sat on their caregiver’s lap in front of a large 55″ wide-aspect TV monitor, which was used to present all of the visual and auditory stimuli. The experimenter observed the infant via a hidden closed circuit TV camera and coded how long and in which direction infants looked by pressing keys on a computer keyboard. The experiments were implemented on the computer using the habit software package [55].

Fig. 1.

Apparatus. During the VH, the caregiver wears headphones playing masking music. The visual stimuli for the VH appear at the ‘Center Stimulus Location’.

6.3. Stimulus materials

To validate VH with this population of infants, we selected two very simple speech contrasts. These particular speech sounds are used clinically and have been found to be among the first sound contrasts that hearing-impaired children can detect and discriminate. One contrast was a 4-s continuous vowel (‘ahh’) versus a 4-s discontinuous CVC pattern (‘hop hop hop’). The other was an intonation contrast between a 4-s vowel /i/ with rising intonation and a 4-s vowel /i/ with falling intonation. At each testing session, the infant was presented with one of the two contrast pairs. All of the stimuli were produced by a female talker and recorded digitally into sound files. The stimuli were presented to the infants at 70±5 dB SPL via loudspeakers on the TV monitor. A computer-generated red and white checkerboard pattern served as the visual display. Using VH, we assessed the ability to detect and discriminate these simple speech sounds in a group of congenitally deaf infants with CIs and a group of typically developing NH infants.

6.4. Procedure

The procedure we used was similar to the standard VH speech discrimination experiment. There was a habituation phase followed by a test phase. During the habituation phase, two types of trials were presented. Sound trials consisted of a pairing of the visual display and one of the sound stimuli (e.g. ‘hop hop hop’ or ‘ahh’). Silent trials consisted of the visual display only with no sound presented. Two sound and two silent trials were presented, in random order, in each block of four trials. Infants’ attention was initially drawn to the TV monitor using an ‘attention getter’ (i.e. a small dynamic video display of a laughing baby’s face).
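The block structure described above (two sound and two silent trials per block of four, presented in random order) can be illustrated with a short Python sketch. It is not the Habit software [55] used in the study; the function name is hypothetical, and we assume that each block is shuffled independently of the others.

```python
import random

def make_habituation_block(rng: random.Random) -> list[str]:
    """One habituation block: two 'sound' and two 'silent' trials in random order.

    Hypothetical helper; assumes each block is shuffled independently.
    """
    block = ["sound", "sound", "silent", "silent"]
    rng.shuffle(block)  # in-place random permutation of the four trials
    return block

# Example: generate three blocks with a seeded generator for reproducibility.
rng = random.Random(1)
blocks = [make_habituation_block(rng) for _ in range(3)]
```

Shuffling a fixed list guarantees the two-and-two balance within every block, which independent coin flips per trial would not.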

Each trial was initiated when the infant looked to the visual display. The trial continued until the infant looked away from the checkerboard display for 1 s or more. The infant’s looking time toward the checkerboard was measured on each trial. During the habituation phase, blocks of trials continued until the infant’s average looking time across a block of four trials (two sound, two silent) was 50% or less of the average looking time across the first block of four trials. When this habituation criterion was met, the infant was presented with two more trials during a test phase: an old trial and a novel trial (trial order was counterbalanced across participants). The old trial was identical to the sound trials that the infant heard during the habituation phase. The novel trial consisted of the other speech sound of the pair (e.g. ‘ahh’) paired with the same visual display. Based on previous research with NH infants, we predicted that if speech sounds elicited infants’ attention, they would look longer to the visual display during the sound trials than during the silent trials. We also predicted that if the deaf infants could discriminate differences between speech sounds, they would exhibit longer looking times during the novel trial than during the old trial.
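The two timing rules in the procedure above (a trial ends after a look-away of 1 s or more; habituation is reached when a block’s mean looking time falls to 50% or less of the first block’s mean) can be sketched in Python. This is a minimal illustration under stated assumptions, not the study’s software: the input format (sorted onset/offset pairs in seconds) and the function names are hypothetical, and we assume consecutive, non-overlapping blocks rather than a sliding window.

```python
from statistics import mean

LOOKAWAY_S = 1.0  # a trial ends after a look-away of 1 s or more (from the text)
CRITERION = 0.5   # habituated when a block mean is <= 50% of the first block mean
BLOCK_SIZE = 4    # two sound + two silent trials per block

def trial_looking_time(looks, lookaway_s=LOOKAWAY_S):
    """Total looking time (s) on one trial.

    `looks` is a hypothetical input format: sorted, non-overlapping
    (onset, offset) pairs in seconds from trial onset. Looking is summed
    until the first gap between looks that is >= lookaway_s, or until
    the last recorded look ends.
    """
    total = 0.0
    for i, (onset, offset) in enumerate(looks):
        total += offset - onset
        is_last = i + 1 == len(looks)
        if is_last or looks[i + 1][0] - offset >= lookaway_s:
            break  # the infant looked away long enough: the trial is over
    return total

def habituation_block(trial_times, block_size=BLOCK_SIZE, criterion=CRITERION):
    """Index of the first block whose mean looking time is <= criterion times
    the first block's mean, or None if the criterion is never met.

    Assumes consecutive, non-overlapping blocks (not a sliding window).
    """
    block_means = []
    for start in range(0, len(trial_times) - block_size + 1, block_size):
        block_means.append(mean(trial_times[start:start + block_size]))
        if len(block_means) > 1 and block_means[-1] <= criterion * block_means[0]:
            return len(block_means) - 1
    return None
```

With hypothetical looking times of 10, 12, 8, 10 / 7, 6, 8, 7 / 4, 5, 5, 4 s, the block means are 10, 7, and 4.5 s, so `habituation_block` returns 2: the third block is the first at or below 5 s.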

7. Results

We obtained two measures of performance. Attention was measured as the difference in the infants’ looking times to the sound versus the silent trials. Speech discrimination was measured as the difference in the infants’ looking times to the novel versus the old trial. NH infants were tested only once each, at either 6 or 9 months of age. Deaf infants who received CIs were tested at several intervals before and after implantation. Post-CI data were grouped into three intervals: ‘1 month’ (1-day, 2-week, and 1-month post-CI sessions), ‘3 months’ (2- and 3-month post-CI sessions), and ‘6 months’ (5- and 6-month post-CI sessions). Some of the deaf infants were tested more than once within a single interval group. In the final data analyses, each session was treated as an independent sample rather than averaged with other sessions in the same post-CI interval group.
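The two performance measures and the post-CI grouping described above reduce to simple difference scores and a lookup table. The following Python sketch is illustrative only: the session-label strings (e.g. "2 weeks") and function names are hypothetical, while the mapping from sessions to interval groups follows the text. As in the analyses above, each session is kept as a separate observation rather than averaged within its interval group.

```python
from statistics import mean

# Hypothetical session labels mapped to the interval groups given in the text.
INTERVAL_GROUP = {
    "1 day": "1 month", "2 weeks": "1 month", "1 month": "1 month",
    "2 months": "3 months", "3 months": "3 months",
    "5 months": "6 months", "6 months": "6 months",
}

def attention_score(sound_times, silent_times):
    """Mean looking time on sound trials minus mean on silent trials (s).

    Positive values mean longer looking when speech was present.
    """
    return mean(sound_times) - mean(silent_times)

def discrimination_score(novel_time, old_time):
    """Looking time on the novel test trial minus the old test trial (s)."""
    return novel_time - old_time

def group_sessions(sessions):
    """Group (session_label, score) pairs by post-CI interval.

    Every session stays a separate observation; nothing is averaged here.
    """
    grouped = {}
    for label, score in sessions:
        grouped.setdefault(INTERVAL_GROUP[label], []).append(score)
    return grouped
```

For example, `group_sessions([("2 weeks", 1.0), ("1 month", 2.0)])` places both hypothetical scores in the ‘1 month’ group as two observations.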

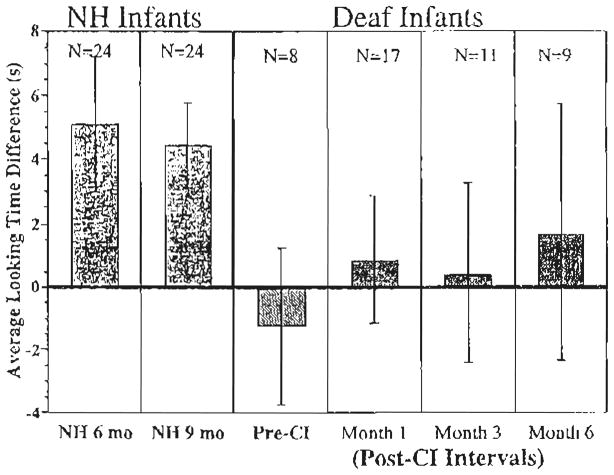

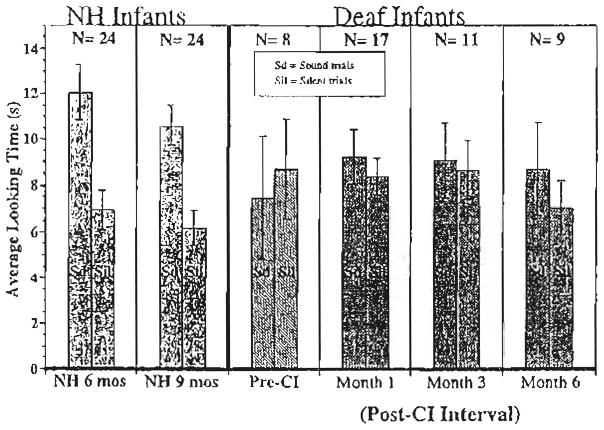

7.1. Attention to speech sounds

The looking times to the sound and silent trials were averaged separately for each infant. The average looking times were then subjected to a 3-way repeated-measures analysis of variance (ANOVA) with Auditory Condition (sound vs. silence) as a within-subjects factor and Stimulus Condition (‘ahh’, ‘hop hop hop’, rising /i/, and falling /i/) and Group (pre-implantation, post-CI, and NH)¹ as between-subjects factors. There was no main effect of Stimulus Condition (F < 1) and no interaction of Stimulus Condition with any of the other factors (all Fs < 1), suggesting that the infants’ looking times were similar across the stimulus conditions. Based on these findings, we combined the data across the four stimulus conditions and re-analyzed them using a 2-way repeated-measures ANOVA. Fig. 2 displays the average difference in looking times (and 95% confidence intervals) between the sound and silent trials for the NH 6- and 9-month-olds (solid bars, left), the deaf infants pre-CI (striped bar, middle), and the deaf infants at the 1-, 3-, and 6-month post-CI intervals (patterned bars, right). Bars above zero represent longer looking times to the sound trials than to the silent trials.

Fig. 2.

Attention to speech sounds. The mean difference in looking times (and 95% confidence intervals) between the sound and silent trials for NH 6- and 9-month-olds (solid bars), deaf infants pre-CI (striped bar), and deaf infants at the 1-, 3-, and 6-month post-CI intervals (patterned bars). The number of observations is given for each interval. Some deaf infants were tested more than once after implantation, yielding more observations than participants.

Overall, infants looked longer during the sound trials than during the silent trials (F(1, 90) = 6.79, P < 0.05). There was no main effect of Group (F(5, 90) < 1), but there was a significant Group × Auditory Condition interaction (F(2, 90) = 13.21, P < 0.001), indicating that not all of the groups looked significantly longer to the sound than to the silent trials. Additional analyses were conducted to determine the nature of the Group × Auditory Condition interaction. Comparing the two deaf groups, a 2-way repeated-measures ANOVA revealed no statistically significant interaction between Deaf Group (pre-CI vs. post-CI) and Auditory Condition (F(1, 43) = 1.89, P > 0.1). In contrast, comparing the looking times of the NH infants with those of the deaf infants post-CI revealed a significant Group (NH, post-CI) × Auditory Condition interaction (F(1, 83) = 17.55, P < 0.001). This finding indicates that the difference in looking times between sound and silent trials was significantly greater for the NH infants than for the deaf infants who received CIs.

Further analyses assessed differences between subgroups within the post-CI group (months 1, 3, and 6 post-CI) and within the NH group (6- and 9-month-olds), and determined which groups (pre-implantation, post-CI, and NH) attended reliably longer to the sound than to the silent trials. The looking times to the sound and silent trials were compared separately for each group. For deaf infants before CI, a paired t-test showed that the difference in looking times to sound and silent trials did not approach statistical significance (t(7) = −1.17, P > 0.2). The looking times of the deaf infants after CI were subjected to a 2-way repeated-measures ANOVA with Auditory Condition as the repeated measure and Post-CI Interval (months 1, 3, and 6) as the between-subjects factor. Following CI, deaf infants attended longer to the sound than to the silent trials, but the difference did not reach statistical significance (F(1, 34) = 1.79, P > 0.1). The interaction between Auditory Condition and Post-CI Interval also did not approach significance (F(2, 34) < 1), suggesting that the pattern of looking times to the sound versus silent trials was similar across the post-CI intervals. The looking times of the NH infants were subjected to a 2-way repeated-measures ANOVA with Auditory Condition as the repeated measure and Age Group (6 and 9 months) as the between-subjects factor. NH infants attended significantly longer to the sound than to the silent trials (F(1, 46) = 62.51, P < 0.001), and there was no interaction with Age Group (F(1, 46) < 1).
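The paired t-test used for the pre-CI group compares each infant's sound-trial and silent-trial looking times. It presumably also underlies the comparisons across CI01's test sessions. A minimal standard-library sketch of the statistic follows. The function name and argument layout are hypothetical.

```python
from math import sqrt
from statistics import fmean, stdev


def paired_t(first, second):
    """Paired-samples t statistic and degrees of freedom.

    `first` and `second` hold matched looking times in the same order
    (e.g. each infant's mean sound-trial and silent-trial looking time).
    Raises ZeroDivisionError if all differences are identical.
    """
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need at least two matched pairs")
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    # t = mean difference divided by its standard error
    t = fmean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1
```

Eight matched pairs give df = 7, the form of the t(7) reported above. If SciPy is available, `scipy.stats.ttest_rel(first, second)` returns the same statistic along with a two-tailed P value.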

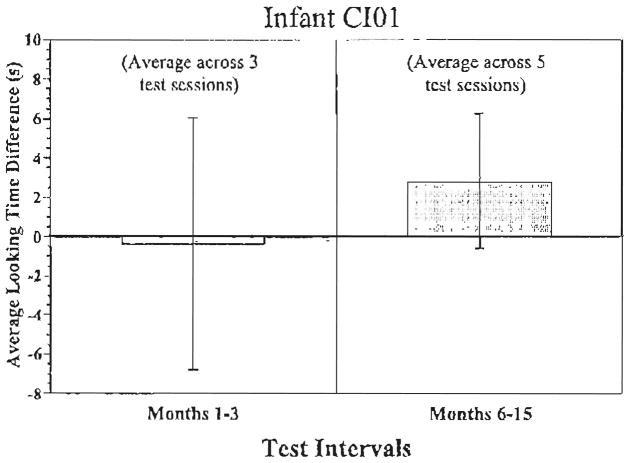

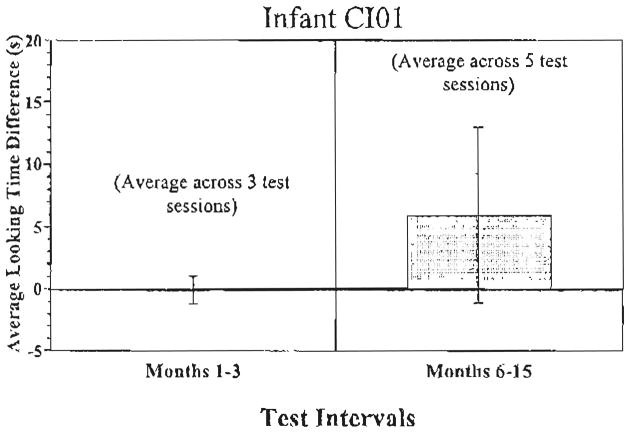

Fig. 3 displays the data from deaf infant CI01, who was tested three times between 1 and 3 months after receiving his cochlear implant. Over this period, he showed very little difference in looking times between sound and silent trials. He was also tested five times between 6 and 15 months after implantation. Over this later period, he showed a trend toward longer looking during the sound trials than during the silent trials. This trend approached statistical significance (t(4) = 2.30, P = 0.08).

Fig. 3.

CI01 attention to speech sounds. Looking time differences to the sound vs. the silent trials for participant CI01.

In summary, NH infants exhibited a strong preference for sound trials over silent trials, whereas deaf infants showed no such preference before CI. After CI, deaf infants attended longer to the sound trials than to the silent trials, but the difference was not statistically reliable, and their preference for the sound trials was significantly smaller than that of the NH infants. In contrast, CI01, the deaf infant implanted at 6 months of age, showed a preference for sound trials at his later post-CI intervals that was similar in magnitude to the NH infants’ preference.

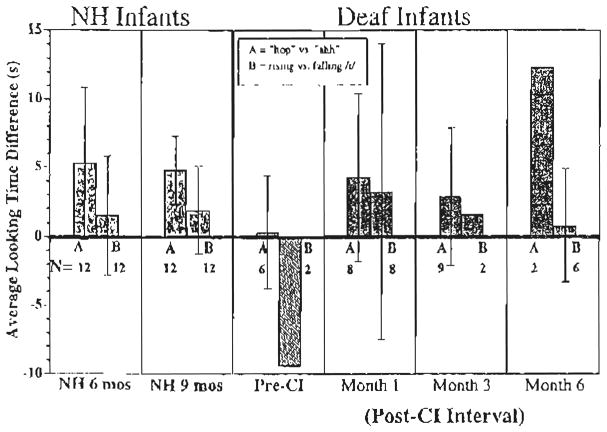

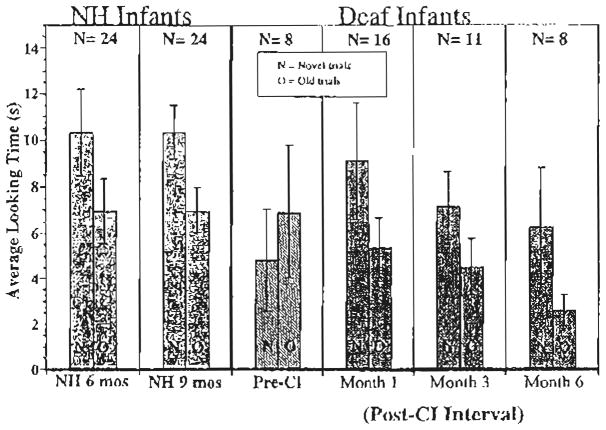

7.2. Speech discrimination

The looking times to the novel and old trials were subjected to a 3-way repeated-measures ANOVA. Discrimination Condition (novel vs. old) was a within-subjects factor. Stimulus Condition (‘ahh’ vs. ‘hop hop hop’, and rising vs. falling /i/) and Group (pre-CI, post-CI, and NH) were between-subjects factors. Fig. 4 displays the differences in looking times to the novel versus old trials for the NH infants (left), the deaf infants pre-CI (middle), and the deaf infants following CI (right). The looking time differences are further divided by stimulus condition. In each panel, the left bar shows the ‘hop hop hop’ versus ‘ahh’ condition and the right bar shows the rising versus falling /i/ condition.

Fig. 4.

Speech discrimination. The mean differences in looking times to the novel vs. old trials for the NH infants (solid bars), the deaf infants pre-CI (striped bars), and the deaf infants following CI (patterned bars). The looking time differences are further divided by stimulus condition. The ‘hop hop hop’ vs. ‘ahh’ condition is indicated by the left bar in each panel (A), and the rising vs. falling /i/ condition is indicated by the right bar (B). The number of observations is given for each interval. Ninety-five percent confidence intervals are displayed where there were 6 or more observations.

Across all three groups, infants did not look significantly longer to the novel trials than to the old trials (F(1, 85) < 1). However, there was a significant Group × Discrimination Condition interaction (F(2, 85) = 3.45, P < 0.05), suggesting that one or more of the groups may have discriminated the contrasts. There was also a significant Stimulus Condition × Discrimination Condition interaction (F(1, 85) = 5.49, P < 0.05). This interaction reflected a larger discrimination effect for the ‘hop hop hop’ versus ‘ahh’ condition than for the rising versus falling /i/ condition. Pairwise group comparisons showed that the discrimination effect differed significantly between the pre-CI and post-CI groups (F(1, 39) = 4.98, P < 0.05) and between the pre-CI and NH groups (F(1, 52) = 8.63, P < 0.01). The Group × Discrimination Condition interaction for the post-CI and NH groups did not approach significance (F(1, 79) < 1). These results suggest that NH infants and deaf infants with CIs discriminated the sound contrasts to similar degrees, whereas the deaf infants pre-CI did not reliably discriminate them.

Additional analyses were conducted for each group to determine which groups discriminated the two speech contrasts and to test for differences between subgroups. The looking times of the deaf infants before CI were subjected to a 2-way repeated-measures ANOVA with Discrimination Condition as the repeated measure and Stimulus Condition as the between-subjects factor. The deaf infants before CI looked longer to the old than to the novel trials, but this difference did not reach significance (F(1, 6) = 3.22, P > 0.1). There was no significant main effect of Stimulus Condition (F(1, 6) < 1), and the interaction of these factors did not reach significance (F(1, 6) = 3.66, P > 0.1). In contrast, a 3-way repeated-measures ANOVA was run on the looking times of deaf infants at the three post-CI intervals (months 1, 3, and 6). It showed significantly longer looking times to the novel than to the old trials (F(1, 29) = 5.85, P < 0.05). There was no significant interaction with Post-CI Interval (F(2, 29) < 1) or with Stimulus Condition (F(1, 29) = 1.80, P > 0.1). Likewise, a 3-way repeated-measures ANOVA showed that the NH infants attended significantly longer to the novel than to the old trials (F(1, 44) = 13.57, P < 0.001), with no significant interaction with Age Group (F(1, 44) < 1). However, the interaction between Discrimination Condition and Stimulus Condition approached significance (F(1, 44) = 3.31, P < 0.08), reflecting a larger discrimination effect in the ‘hop hop hop’ versus ‘ahh’ condition.
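The error degrees of freedom in these analyses can be checked with a short worked calculation. The check rests on three assumptions. Each infant contributed one set of looking times to a given analysis. The between-subjects factors were fully crossed. All 48 NH infants listed in the Methods (24 6-month-olds and 24 9-month-olds) contributed data. Under those assumptions, the error degrees of freedom equal the number of infants N minus the number of between-subjects cells C:

$$
\mathrm{df}_{\mathrm{error}} = N - C, \qquad
\mathrm{df}_{\mathrm{error}}^{\mathrm{NH}} = 48 - (2 \times 2) = 44
$$

Here C = 2 age groups × 2 stimulus conditions for the NH discrimination analysis, matching F(1, 44). In the NH attention analysis, Age Group was the only between-subjects factor, so the same relation gives 48 − 2 = 46, matching F(1, 46).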

Fig. 5 displays the looking times of deaf infant CI01 during early (1–3 month) and later (6–15 month) post-implantation intervals. Infant CI01 showed no preference for the novel stimulus during the early test intervals. At the later intervals, he showed a trend toward longer looking during the novel trials (t(4) = 2.34, P = 0.08).

Fig. 5.

CI01 speech discrimination. Looking time differences to the novel vs. the old stimulus trial for participant CI01.

7.3. Mean looking times

The difference scores plotted in Fig. 2 provide a simple way to see infants’ stimulus preferences in the VH procedure. However, difference scores do not reveal how long the infants actually looked at the visual displays on each type of trial. To gain another perspective on the results, Fig. 6 displays mean looking times (and standard errors) rather than difference scores. In each panel, the left bar shows the mean looking time to the sound trials, and the right bar shows the mean looking time to the silent trials. The overall looking times of the NH and deaf infants during the habituation trials were quite similar. Their patterns, however, differed in two important ways. NH infants looked longer than the deaf infants during the sound trials, and they looked less than the deaf infants during the silent trials. During the test phase, both trials were sound trials. NH infants showed a trend to look longer than the deaf infants during both the novel and old trials, although this trend did not reach statistical significance. The infants’ mean looking times during the test phase are displayed in Fig. 7.

Fig. 6.

Attention to speech sounds. Mean looking times to the sound and the silent trials for NH controls, for deaf infants before cochlear implantation, and for deaf infants at several intervals after CI. This figure represents the same looking time data as in Fig. 2 but shows mean looking times rather than mean difference in looking times.

Fig. 7.

Speech discrimination. Mean looking times to the novel and the old trial for NH controls and for deaf infants before and at several intervals after CI. This figure represents the same looking time data as in Fig. 4 but shows mean looking times rather than mean difference in looking times, and, in this figure, looking times are combined across stimulus conditions.

8. Discussion

The attrition rates observed in the VH task were similar for the deaf and NH infants (about 20–25%). These rates are low to average compared with other speech perception experiments with NH infants [9]. Hence, the VH procedure appears to be a viable behavioral technique for deaf infants before and after cochlear implantation. It can be used to assess benefit and to measure changes in auditory attention and speech discrimination skills over time.

In the present investigation, deaf and NH infants’ attention to speech sounds was assessed during a habituation phase. On half of the trials, one of four repeating speech sounds was paired with a checkerboard pattern. The other half of the trials consisted of the checkerboard pattern with no sound. We observed no significant effect of stimulus type for any group of infants, suggesting that, relative to silence, the infants were similarly interested in each type of speech stimulus. However, preference for the sound trials over the silent trials differed across groups. Both 6- and 9-month-old NH infants looked significantly longer at the checkerboard pattern when it was accompanied by a repeating speech sound. Before implantation, deaf infants did not look longer during the sound trials. After implantation, deaf infants did look longer during the sound trials, although the difference in looking times between sound and silent trials did not reach statistical significance².

The NH infants’ preference for the sound trials was significantly greater than that of the deaf infants with CIs. This was true even though the deaf infants’ auditory detection thresholds (measured using visual reinforcement audiometry) were far below the intensity level of the stimuli (70 ± 5 dB). In other words, both groups of infants could detect the repeating speech stimuli, but the NH infants increased their attention (i.e. looking times) in the presence of speech sounds much more than the deaf infants did following CI. These findings suggest that deaf infants’ attention to speech may increase slightly during the first 6 months following CI but does not reach the levels observed in NH infants. However, CI01 was our youngest CI recipient, implanted at 6 months of age. At later post-CI intervals, his preference for the sound trials was similar to the NH infants’ preference. This suggests that age at implantation and duration of CI use may contribute to the development of attention to speech.

During the test phase of the VH experiment, we assessed infants’ discrimination of two gross-level speech contrasts. NH infants looked significantly longer to novel sound trials than to old sound trials, validating this version of the VH procedure as a measure of speech discrimination. Deaf infants who received CIs also showed evidence of speech discrimination. Before receiving a CI, deaf infants did not look longer during novel sound trials than during old sound trials. After receiving CIs, they did. This suggests that deaf infants’ discrimination of some speech patterns improves with access to sound within the first few months after CI. For both the NH infants and the deaf infants with CIs, the contrast between continuous (‘ahh’) and discontinuous (‘hop hop hop’) speech appeared much more salient than the contrast between rising and falling intonation on /i/. Further investigations will examine whether deaf infants with CIs also discriminate speech sounds with smaller acoustic differences, such as minimal pairs of words. Such studies will provide more detailed information about their ability to discriminate fine phonetic details in speech and spoken words.

9. Attention to speech

Comparing two speech perception skills in deaf infants before and after CI with those of NH infants provides valuable new information about the immediate benefits deaf infants are likely to gain from their CIs. These comparisons may also help researchers and clinicians understand the challenges these infants will face when acquiring spoken language. The results of this initial investigation provide some clues about deaf infants’ speech perception skills after CI. After CI, their looking-time response to gross-level changes in speech sounds was similar to that of NH infants but different from that of deaf infants pre-CI. This indicates the development of fundamental attention and speech discrimination skills. In contrast, the pattern of looking behavior to sound versus silent trials in the deaf infants after CI differed significantly from that of NH infants. Importantly, these differences do not appear to reflect group differences in general arousal or attention. Overall looking times were similar, and the group differences depended on whether the trials contained sound (Fig. 6). These findings suggest that even after receiving a CI, deaf infants were not as interested in speech as NH infants were in the VH procedure. However, several factors may explain why the deaf infants post-CI did not respond like the NH infants in the attention task.

One factor that may have contributed to the differences in looking times between NH infants and deaf infants with CIs is the difference in chronological age between the two groups. All of the NH infants in this study were younger than the deaf infants at their post-CI intervals, except for CI01 at his month 1 post-CI interval. Thus, the NH infants were more closely matched to the deaf infants in ‘hearing age’ than in chronological age. This allowed us to compare, for example, NH 6-month-olds with older deaf infants who had had 6 months of auditory experience with a CI. However, older infants may not show the same preferences for sound trials as younger infants, regardless of hearing status. Hence, we cannot be sure whether the differences in looking times between deaf and NH infants were due to differences in early auditory experience or simply to age differences. Comparisons with older NH infants would provide more information about the differences between deaf infants and their age-matched NH peers. Nevertheless, the data collected so far from the deaf infants with CIs suggest that attention to speech will not diminish with age. Infants who had used their CIs for longer periods were, on average, somewhat older than infants tested at earlier intervals, yet they showed an increased preference for sound trials. CI01’s preference for sound trials also increased consistently with age and CI experience.

Another factor that may have played a role in the pattern of results is the deaf infants’ auditory acuity following CI. CIs provide deaf individuals with access to sound, but not with nearly the richness of information that a healthy cochlea provides. As a result, deaf infants with CIs may not be able to detect and discriminate as many fine-grained details in speech as NH infants. The impoverished speech signal provided by a CI may therefore be inherently less interesting than the signal processed by a healthy cochlea. Deaf infants may be unable to detect, discriminate, and encode the fine acoustic–phonetic features that represent linguistically significant sound contrasts in the target language. Unfortunately, very little is currently known about the auditory sensitivity and acuity of deaf infants in discriminating speech sounds. Further studies of speech discrimination by deaf infants with CIs will clarify their speech perception skills and how discriminability and distinctiveness contribute to attention to speech sounds.

Developmental factors may also contribute to the differences observed between the groups. The deaf infants studied here developed with little, if any, exposure to sound until they received their CIs. Early sensory deprivation and lack of auditory experience with meaningful sounds, including speech, may have a significant impact on how infants learn to interact with objects and sound sources in their environment [56]. Neurophysiological studies provide evidence that sensory cortices are reorganized by early sensory experience [49,50] and that intercortical projections from the auditory cortex are affected by auditory deprivation [51,52].

At present, little is known about the extent of neural reorganization in human infants caused by early auditory deprivation. Nothing is known about how the absence of sound during early development might affect attention to speech after a child receives a CI. However, infants’ ability to orient their attention to sensory input develops during the first year of life [57]. It is therefore quite possible that, after a period of auditory deprivation, the neural connections linking sensory perception to attention and to other aspects of perception and cognition do not develop normally. Moreover, before intervention, deaf infants are unlikely to respond to sound with behaviors such as visual orientation and vocal imitation. This lack of active response to sound may also affect the development of neural connections between auditory and other cortices.

Infants’ attention to speech may have consequences for acquiring other speech perception skills that are important for learning spoken language. Perception of and attention to fine-grained acoustic–phonetic details in speech are known to be important for distinguishing spoken words. Attention to the ordering of sounds in speech may play a role in learning how sound patterns are organized in the native language. Deaf infants who do not maintain the same level of attention to speech as NH infants may not develop normal sensitivity to language-specific properties. These include rhythmic, distributional, coarticulatory, phonotactic, and allophonic cues, all of which have been shown to be important sources of information for segmenting words from fluent speech and acquiring the vocabulary of a language [8,44]. Because infant-directed speech is typically continuous [24,25], difficulties in segmenting words from fluent speech may cascade into atypical word learning and lexical development. They may also lead to morphological irregularities that affect syntax and language comprehension [58,59].

10. Some future directions

The present investigation, using the VH procedure, provides preliminary data suggesting that deaf infants who have received CIs are able to discriminate gross-level speech sound contrasts but appear to pay less attention to speech than NH 6- and 9-month-olds. However, one deaf infant, CI01, who was tested repeatedly over time, did pay more attention to sound trials than to silent trials. Deaf infants who receive CIs at very young ages, as CI01 did at 6 months of age, may also show attention to speech that is more similar to that of NH infants. We hope to assess the effects of age at implantation on attention and speech discrimination skills more thoroughly once we have collected data from additional infants who received CIs at different ages.

The sound contrasts studied in this investigation were gross-level, simple discriminations used to validate the VH procedure. Now that the VH procedure has been established as a viable measure of speech discrimination, it will be important to test deaf infants with the acoustic–phonetic contrasts that distinguish words in their native language. Measures of infants’ perceptual sensitivity to and acuity for speech will be helpful, together with other speech perception measures. They will clarify how the quality of infants’ speech perception affects the development of other speech perception skills.

Several speech perception skills are important for segmenting words from fluent speech. Many of these skills involve acquiring sensitivity to language-specific statistical properties that are informative about the organization of sounds in the native language. Assessing deaf infants’ sensitivity to these sequential properties will provide valuable new information about the kinds of information they attend to in speech. If attention to speech underlies the sensitivity to language-specific properties, then we might expect infants who show poor attention to speech to also display poor sensitivity to language-specific properties relative to NH peers, even when the NH infants are matched for the amount of time they have had access to sound. Moreover, sensitivity to language-specific properties may be important for speech segmentation and later word learning. Assessing these skills in deaf infants at several intervals after implantation and comparing individual infants’ skills using different speech perception and word-learning measures may inform us about the links between attention to speech, sensitivity to language-specific properties, word segmentation, and word learning and about the effects of auditory deprivation on the development of these skills.

Finally, while it is important to acquire detailed knowledge about the development of the receptive skills necessary for segmenting and identifying words in fluent speech, these skills alone are not sufficient for learning language. Children must also develop the productive skills necessary for forming and articulating intelligible utterances. The linguistic environment plays an important role in the development of NH infants’ early productive skills. Investigations have shown that by the end of the first year of life, the particular language NH infants are exposed to influences the segmental [60] and rhythmic [61] characteristics of their babbling. The language learner’s ability to produce language is also influenced by input from the visual modality. Kuhl and Meltzoff [62] presented 20-week-old infants with two videos of the same speaker. The infants attended longer to the video in which the speaker articulated the vowel they were hearing than to the video in which she articulated a different vowel. This suggests that NH infants are able to detect and use cross-modal correspondences between the auditory and visual properties of speech. Noticing auditory and visual correspondences may be integral to NH infants’ imitation of sounds in their environment. Their own vocal imitations may, in turn, provide infants with auditory feedback that they can use to adjust their productions to more closely resemble utterances in the target language.

For profoundly deaf infants, the opportunity to integrate auditory and visual information is absent until auditory information is made available by CI. Consequently, their ability to integrate auditory and visual information may be delayed or impaired, which may impact their early speech production skills. Indeed, Lachs et al. [63] recently found that deaf children who were better at integrating auditory and visual information of spoken words in sentences produced more intelligible speech. However, the participants in the Lachs et al. study were much older children than the deaf infants we are studying now. Investigating the audiovisual speech perception skills of deaf infants who receive CIs would provide valuable information about how providing CIs at an early age may affect audiovisual integration skills and may be useful for predicting their later speech and language production skills [64].

11. Conclusions

We have adapted the VH procedure to assess the speech perception skills of deaf infants who have received CIs. So far, the results are very encouraging. The attrition rates are relatively low, and the looking times of deaf infants with CIs show trends similar to findings obtained with NH infants. This pattern was most pronounced in one participant who received his CI at 6 months of age and was studied repeatedly over time. We suspect that earlier implantation may facilitate the development of attention to speech sounds. With earlier implantation, sound and the information specified by sound sources in the environment become available at an earlier point in neural and perceptual development. Attention to speech and spoken language is an important prerequisite for learning about the organization of sounds in the ambient language and for developing knowledge of its sound patterns and regularities. The initial results obtained with the VH procedure are consistent with the general hypothesis that early exposure to sound, and especially to speech, underlies the development of auditory attention and speech discrimination skills. Data from more deaf and NH infants will be needed to determine whether these trends are reliable.

Acknowledgments

Research supported by the American Hearing Research Foundation, IU Intercampus Research Grant, IU Strategic Directions Charter Initiative Fund, the David and Mary Jane Sursa Perception Laboratory Fund, and by NIH-NIDCD Training Grant DC00012 and NIH-NIDCD Research Grant DC00064 to Indiana University. We would like to thank Riann Mohar, Marissa Schumacher, and Steve Fountain for assistance recruiting and testing infants; the faculty and staff at Wishard Memorial Hospital, Methodist Hospital, and Indiana University Hospital for their help in recruiting participants; and the infants and their parents for their participation. Finally, we would like to thank Dr Robert Ruben and anonymous reviewers for helpful comments on an earlier version of this paper.

Footnotes

¹ For this analysis, the data from the 6- and 9-month-old NH infants were grouped together into the ‘NH’ group, and the data from the deaf infants at the three post-CI intervals were grouped together into the ‘post-CI’ group.

² We expect that with a larger sample size, the difference in looking times between sound and silent trials for deaf infants who use cochlear implants would reach statistical significance. However, if the pattern of looking times remained consistent with what has been observed so far, their preference for sound trials would still be significantly smaller than that of the NH infants.

References

- 1.Joint Committee on Infant Hearing: year 2000 position statement: principles and guidelines for early hearing detection and intervention programs. Am J Audiol. 2000;9:9–29. [PubMed] [Google Scholar]

- 2.American Academy of Pediatrics Task Force on Newborn and Infant Hearing: newborn and infant hearing loss: detection and intervention. Pediatrics. 1999;103(2):527–530. doi: 10.1542/peds.103.2.527. [DOI] [PubMed] [Google Scholar]

- 3.Fryauf-Bertschy H, Tyler RS, Kelsay DMR, Gantz BJ. Cochlear implant use by prelingually deafened children: the influences of age at implant use and length of device use. J Speech Hear Res. 1997;40:183–199. doi: 10.1044/jslhr.4001.183. [DOI] [PubMed] [Google Scholar]

- 4.Tobey E, Pancamo S, Staller S, Brimacombe J, Beiter A. Consonant production in children receiving a multichannel cochlear implant. Ear Hear. 1991;12:23–31. doi: 10.1097/00003446-199102000-00003. [DOI] [PubMed] [Google Scholar]

- 5.Waltzman SB, Cohen NL. Cochlear implantation in children younger than 2-years-old. Am J Otol. 1998;19:158–162. [PubMed] [Google Scholar]

- 6.Kirk KI, Miyamoto RT, Ying EA, Perdew AE. Zuganelis: cochlear implantation in young children: effects of age at implantation and communication mode. Volta Review. 2002;102:127–144. (monograph) [Google Scholar]

- 7.Hayes D, Northern JL. Infants and Hearing. Singular; San Diego, CA: 1996. [Google Scholar]

- 8.Jusczyk PW. The Discovery of Spoken Language. MIT Press; Cambridge, MA: 1997. [Google Scholar]

- 9.Werker JF, Shi R, Desjardins R, Pegg JE, Polka L, Patterson M. Three methods for testing infant speech perception. In: Slater A, editor. Perceptual Development: Visual, Auditory, and Speech Perception in Infancy. Psychology Press; East Sussex, UK: 1998. pp. 389–420. [Google Scholar]

- 10.Kuhl PK. Perception of auditory equivalence classes for speech in early infancy. Infect Behav Dev. 1983;6:263–285. [Google Scholar]

- 11.Polka L, Werker JF. Developmental changes in perception of non-native vowel contrasts. J Exp Psychol Hum Percept Perform. 1994;20:421–435. doi: 10.1037//0096-1523.20.2.421. [DOI] [PubMed] [Google Scholar]

- 12.Trehub SE. Infants’ sensitivity to vowel and tonal contrasts. Dev Psychol. 1973;9:91–96. [Google Scholar]

- 13.Eimas PD, Siqueland ER, Jusczyk P, Vigorito J. Speech perception in infants. Science. 1971;171(968):303–306. doi: 10.1126/science.171.3968.303. [DOI] [PubMed] [Google Scholar]

- 14.Bertoncini J, Bijeljac-Babic R, Jusczyk PW, Kennedy LJ, Mehler J. An investigation of young infants’ perceptual representations of speech sounds. J Exp Psychol Gen. 1988;117:21–33. doi: 10.1037//0096-3445.117.1.21. [DOI] [PubMed] [Google Scholar]

- 15.Jusczyk PW, Copan H, Thompson E. Perception by 2-month-old infants of glide contrasts in multisyllabic utterances. Percept Psychophys. 1978;24(6):515–520. doi: 10.3758/bf03198777. [DOI] [PubMed] [Google Scholar]

- 16.Moffitt AR. Consonant cue perception by 20–24-week old infants. Child Dev. 1971;42:717–731. [PubMed] [Google Scholar]

- 17.Hillenbrand JM, Minifie FD, Edwards TJ. Tempo of spectrum change as a cue in speech sound discrimination by infants. J Speech Hear Res. 1979;22:147–165. doi: 10.1044/jshr.2201.147. [DOI] [PubMed] [Google Scholar]

- 18.Miller JL, Eimas PD. Studies on the categorization of speech by infants. Cognition. 1983;13:135–165. doi: 10.1016/0010-0277(83)90020-3. [DOI] [PubMed] [Google Scholar]

- 19.Best CT. Learning to perceive the sound patterns of English. In: Rovee-Collier C, Lipsitt LP, editors. Advances in Infancy Research. Vol. 9. Ablex; Norwood, NJ: 1995. pp. 217–304. [Google Scholar]

- 20.Trehub SE. The discrimination of foreign speech contrasts by infants and adults. Child Dev. 1976;47:466–472. [Google Scholar]

- 21.Tsushima T, Takizawa O, Sasaki M, et al. Discrimination of English/r-l/and/w-y/by Japanese infants at 6–12 months: language specific developmental changes in speech perception abilities. Paper presented at: International Conference on Spoken Language Processing; Yoko-hama, Japan. October, 1994. [Google Scholar]

- 22.Werker JF, Tees RC. Cross-language speech perception: evidence for perceptual reorganization during the first year of life. Infant Behav Dev. 1984;7:49–63. [Google Scholar]

- 23.Werker JF, Lalonde CE. Cross-language speech perception: initial capabilities and developmental change. Dev Psychol. 1988;24:672–683. [Google Scholar]

- 24.van de Weijer J. Language Input for Word Discovery. Vol. 9. Max Planck Institute; Nijmegen, The Netherlands: 1998. [Google Scholar]

- 25.Woodward JZ, Aslin RN. Segmentation cues in maternal speech to infants. Paper presented at: Seventh Biennial Meeting of the International Conference on Infant Studies; Montreal, Quebec, Canada. April 1990. [Google Scholar]

- 26.Suomi K. An outline of a developmental model of adult phonological organization and behavior. J Phonetics. 1993;21:29–60. [Google Scholar]

- 27.Echols CH, Crowhurst MJ, Childers J. Perception of rhythmic units in speech by infants and adults. J Mem Lang. 1997;36:202–225. [Google Scholar]

- 28.Jusczyk PW, Houston DM, Newsome M. The beginnings of word segmentation in English-learning infants. Cognit Psychol. 1999;39:159–207. doi: 10.1006/cogp.1999.0716. [DOI] [PubMed] [Google Scholar]

- 29.Goodsitt JV, Morgan JL, Kuhl PK. Perceptual strategies in prelingual speech segmentation. J Child Lang. 1993;20:229–252. doi: 10.1017/s0305000900008266. [DOI] [PubMed] [Google Scholar]

- 30.Morgan JL, Saffran JR. Emerging integration of sequential and suprasegmental information in preverbal speech segmentation. Child Dev. 1995;66(4):911–936. [PubMed] [Google Scholar]

- 31.Saffran JR, Aslin RN, Newport EL. Statistical learning by 8-month-old infants. Science. 1996;274:1926–1928. doi: 10.1126/science.274.5294.1926. [DOI] [PubMed] [Google Scholar]

- 32.Johnson EK, Jusczyk PW. Word segmentation by 8-month-olds: when speech cues count more than statistics. J Mem Lang. 2001;44:548–567. [Google Scholar]

- 33.Mattys SL, Jusczyk PW. Phonotactic cues for segmentation of fluent speech by infants. Cognition. 2001;78:91–121. doi: 10.1016/s0010-0277(00)00109-8. [DOI] [PubMed] [Google Scholar]

- 34.Mattys SL, Jusczyk PW, Luce PA, Morgan JL. Phonotactic and prosodic effects on word segmentation in infants. Cognit Psychol. 1999;38(4):465–494. doi: 10.1006/cogp.1999.0721. [DOI] [PubMed] [Google Scholar]

- 35.Jusczyk PW, Hohne EA, Bauman A. Infants’ sensitivity to allophonic cues for word segmentation. Percept Psychophys. 1999;61:1465–1476. doi: 10.3758/bf03213111. [DOI] [PubMed] [Google Scholar]

- 36.Jusczyk PW, Aslin RN. Infants’ detection of the sound patterns of words in fluent speech. Cognit Psychol. 1995;29(1):1–23. doi: 10.1006/cogp.1995.1010. [DOI] [PubMed] [Google Scholar]

- 37.Jusczyk PW, Cutler A, Redanz NJ. Infants’ preference for the predominant stress patterns of English words. Child Dev. 1993;64(3):675–687. [PubMed] [Google Scholar]

- 38.Cutler A, Carter DM. The predominance of strong initial syllables in the English vocabulary. Comput Speech Lang. 1987;2:133–142. [Google Scholar]

- 39.Friederici AD, Wessels JMI. Phonotactic knowledge and its use in infant speech perception. Percept Psychophys. 1993;54:287–295. doi: 10.3758/bf03205263. [DOI] [PubMed] [Google Scholar]

- 40.Jusczyk PW, Luce PA, Charles-Luce J. Infants’ sensitivity to phonotactic patterns in the native language. J Mem Lang. 1994;33(5):630–645. [Google Scholar]

- 41.Bolinger DL, Gerstman LJ. Disjuncture as a cue to constraints. Word. 1957;13:246–255. [Google Scholar]

- 42.Church K. Phonological parsing and lexical retrieval. Cognition. 1987;25:53–69. doi: 10.1016/0010-0277(87)90004-7. [DOI] [PubMed] [Google Scholar]

- 43.Church KW. Phonological Parsing in Speech Recognition. Kluwer Academic Publishers; Dordrecht: 1987. [Google Scholar]

- 44.Jusczyk PW. How infants adapt speech-processing capacities to native-language structure. Curr Direct Psychol Sci. 2002;11(1):18. [Google Scholar]

- 45.Shepherd RK, Hardie NA. Deafness-induced changes in the auditory pathway: implications for cochlear implants. Audiol Neurootol. 2001;6:305–318. doi: 10.1159/000046843. [DOI] [PubMed] [Google Scholar]

- 46.Leake PA, Hradek GT. Cochlear pathology of long-term neomycin-induced deafness in cats. Hear Res. 1988;33:11–34. doi: 10.1016/0378-5955(88)90018-4. [DOI] [PubMed] [Google Scholar]

- 47.Rebscher SJ, Snyder RL, Leake PA. The effect of electrode configuration and duration of deafness on threshold and selectivity of responses to intracochlear electrical stimulation. J Acoust Soc Am. 2001;109(5):2035–2048. doi: 10.1121/1.1365115. [DOI] [PubMed] [Google Scholar]

- 48.Kujala T, Alho K, Näätänen R. Cross-modal reorganization of human cortical functions. Trends Neurosci. 2000;23(3):115–120. doi: 10.1016/s0166-2236(99)01504-0. [DOI] [PubMed] [Google Scholar]

- 49.Rauschecker JP, Korte M. Auditory compensation for early blindness in cat cerebral cortex. J Neurosci. 1993;13:4538–4548. doi: 10.1523/JNEUROSCI.13-10-04538.1993. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Neville HJ, Bruer JT. Language processing: how experience affects brain organization. In: Bailey DB, Bruer JT, editors. Critical Thinking about Critical Periods. Paul H. Brookes; Baltimore: 2001. pp. 151–172. [Google Scholar]

- 51.Kral A, Hartmann R, Tillein J, Held S, Klinke R. Congenital auditory deprivation reduces synaptic activity within the auditory cortex in a layer-specific manner. Cereb Cortex. 2000;10:714–726. doi: 10.1093/cercor/10.7.714. [DOI] [PubMed] [Google Scholar]

- 52.Ponton CW, Eggermont JJ. Of kittens and kids: altered cortical maturation following profound deafness and cochlear implant use. Audiol Neurootol. 2001;6:363–380. doi: 10.1159/000046846. [DOI] [PubMed] [Google Scholar]

- 53.Best CT, McRoberts GW, Sithole NM. Examination of the perceptual re-organization for speech contrasts: Zulu click discrimination by English-speaking adults and infants. J Exp Psychol Hum Percept Perform. 1988;14:345–360. doi: 10.1037//0096-1523.14.3.345. [DOI] [PubMed] [Google Scholar]

- 54.Horowitz FD. Infant attention and discrimination: Methodological and substantive issues. Monogr Soc Res Child Dev. 1975;39 (Serial no. 158) [PubMed] [Google Scholar]

- 55.Cohen LB, Atkinson DJ, Chaput HH. HABIT 2000: A New Program for Testing Infant Perception and Cognition, Version 1.0 [computer program]. The University of Texas; Austin: 2000. [Google Scholar]

- 56.Gaver WW. What in the world do we hear? An ecological approach to auditory event perception. Ecol Psychol. 1993;5:1–29. [Google Scholar]

- 57.Posner MI, Rothbart MK. Developing mechanisms of self-regulation. Dev Psychopathol. 2000;12:427–441. doi: 10.1017/s0954579400003096. [DOI] [PubMed] [Google Scholar]

- 58.Houston DM, Carter AK, Pisoni DB, Kirk KI, Ying EA. Word learning skills of deaf children following cochlear implantation: a first report. In: Research on Spoken Language Processing Progress Report No. 25. Indiana University; Bloomington, IN: 2002. pp. 35–60. [Google Scholar]

- 59.Svirsky MA, Stallings LM, Ying E, Lento CL, Leonard LB. Grammatical morphologic development in pediatric cochlear implant users may be affected by the perceptual prominence of the relevant markers. Ann Otol Rhinol Laryngol. 2002;111(suppl 189):109–112. doi: 10.1177/00034894021110s522. [DOI] [PubMed] [Google Scholar]

- 60.Boysson-Bardies BD, Vihman MM, Roug-Hellichius L, Durand C, Landberg I, Arao F. Material evidence of selection from the target language. In: Ferguson CA, Menn L, Stoel-Gammon C, editors. Phonological Development: Models, Research, Implications. York Press; Timonium, MD: 1992. pp. 369–391. [Google Scholar]

- 61.Levitt AG, Utman J, Aydelott J. From babbling towards the sound systems of English and French: a longitudinal two-case study. J Child Lang. 1992;19:19–49. doi: 10.1017/s0305000900013611. [DOI] [PubMed] [Google Scholar]

- 62.Kuhl PK, Meltzoff AN. The bimodal perception of speech in infancy. Science. 1982;218:1138–1141. doi: 10.1126/science.7146899. [DOI] [PubMed] [Google Scholar]

- 63.Lachs L, Pisoni DB, Kirk KI. Use of audiovisual information in speech perception by prelingually deaf children with cochlear implants: a first report. Ear Hear. 2001;22:236–251. doi: 10.1097/00003446-200106000-00007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Bergeson TR, Pisoni DB. Audiovisual speech perception in deaf adults and children following cochlear implantation. In: Calvert G, Spence C, Stein BE, editors. Handbook of Multisensory Integration. in press. [Google Scholar]