Abstract

The functional specialization and hierarchical organization of multiple areas in rhesus monkey auditory cortex were examined with various types of complex sounds. Neurons in the lateral belt areas of the superior temporal gyrus were tuned to the best center frequency and bandwidth of band-passed noise bursts. They were also selective for the rate and direction of linear frequency modulated sweeps. Many neurons showed a preference for a limited number of species-specific vocalizations (“monkey calls”). These response selectivities can be explained by nonlinear spectral and temporal integration mechanisms. In a separate series of experiments, monkey calls were presented at different spatial locations, and the tuning of lateral belt neurons to monkey calls and spatial location was determined. Of the three belt areas the anterolateral area shows the highest degree of specificity for monkey calls, whereas neurons in the caudolateral area display the greatest spatial selectivity. We conclude that the cortical auditory system of primates is divided into at least two processing streams, a spatial stream that originates in the caudal part of the superior temporal gyrus and projects to the parietal cortex, and a pattern or object stream originating in the more anterior portions of the lateral belt. A similar division of labor can be seen in human auditory cortex by using functional neuroimaging.

The visual cortex of nonhuman primates is organized into multiple, functionally specialized areas (1, 2). Among them, two major pathways or “streams” can be recognized that are involved in the processing of object and spatial information (3). Originally postulated on the basis of behavioral lesion studies (4), these “what” and “where” pathways both originate in primary visual cortex V1 and are, respectively, ventrally and dorsally directed. Already in V1 neurons are organized in a domain-specific fashion, and separate pathways originate from these domains before feeding into the two major processing streams (5). Neurons in area V4, which is part of the “what” pathway or ventral stream, are highly selective for the color and size of visual objects (6, 7) and, in turn, project to inferotemporal areas containing complex visual object representations (8, 9). Neurons in area V5 (or MT), as part of the “where” pathway or dorsal stream, are highly selective for the direction of motion (10) and project to the parietal cortex, which is crucially involved in visual spatial processing (11–13). Both pathways eventually project to prefrontal cortex, where they end in separate target regions (14) but may finally converge (15). A similar organization has been reported recently for human visual cortex on the basis of neuroimaging studies (16, 17).

Compared with this elaborate scheme that has been worked out for visual cortical organization, virtually nothing has been known about the functional organization of higher auditory cortical pathways, even though a considerable amount of anatomical information had been collected early on (18–23). Around the same time, initial electrophysiological single-unit mapping studies with tonal stimuli were also undertaken (24). These studies described several areas on the supratemporal plane within rhesus monkey auditory cortex. Primary auditory cortex A1 was found to be surrounded by several other auditory areas. A rostrolateral area (RL, later renamed rostral area, R) shares its low-frequency border with A1, whereas a caudomedial area (CM) borders A1 at its high-frequency end. All three of these areas are tonotopically organized and mirror-symmetric to each other along the frequency axis. In addition, medial and lateral regions were reported as responsive to auditory stimuli but could not be characterized further with tonal stimuli.

Organization of Thalamocortical Auditory Pathways

Interest in the macaque's auditory cortical pathways was revived with the advent of modern histochemical techniques in combination with the use of tracers to track anatomical connections (25–28). Injection of these tracers into physiologically identified and characterized locations further strengthens this approach. Thus, after determining the tonotopic maps on the supratemporal plane with single-unit techniques, three different tracers were injected into identical frequency representations in areas A1, R, and CM (29). As a result of these injections, neurons in the medial geniculate nucleus (MGN) became retrogradely labeled. Label from injections into A1 and R was found in the ventral division of the MGN, which is the main auditory relay nucleus, whereas injections into CM labeled only the dorsal and medial divisions. This means that A1 and R both receive input from the ventral part of the MGN in parallel, whereas CM does not.

As a consequence, making lesions in primary auditory cortex A1 has different effects on responses in areas R and CM (29). When auditory responses in area R of the same animal before and after the A1 lesion were compared, they were essentially unchanged. By contrast, auditory responses in area CM, especially those to pure tones, were virtually abolished after the lesion. Thus, area R seems to receive its input independently of A1, whereas CM responses do depend on the integrity of A1. In other words, the rhesus monkey auditory system, beginning at the level of the medial geniculate nucleus (or even the cochlear nuclei), is organized both serially and in parallel, with A1 and R both receiving direct input from the ventral part of the medial geniculate nucleus.

As part of the mapping studies of the supratemporal plane, numerous examples of spatially tuned neurons were discovered in area CM (30), although this was not systematically explored at that time. However, Leinonen et al. (31) had earlier described auditory spatial tuning in neurons of area Tpt of Pandya and Sanides (19), which is adjacent to or overlapping with CM. The lateral areas receiving input from both A1 and R, on the other hand, may be the beginning of an auditory pattern or object stream: as we will see in the following, these areas contain neurons responsive to species-specific vocalizations and other complex sounds.

Use of Complex Sound Stimuli in Neurons of the Lateral Belt

Band-Passed Noise (BPN) Bursts.

From the earlier comparison with the visual system it becomes clear almost immediately that stimulation of neurons in higher visual areas with small spots of light is doomed to failure, because neurons integrate over larger areas of the sensory epithelium. By analogy, neurons in higher auditory areas should not and will not respond well to pure tones of a single frequency. The simplest step toward designing effective auditory stimuli for use on higher-order neurons is, therefore, to increase the bandwidth of the sound stimuli. BPN bursts centered at a specific frequency (Fig. 1A) are the equivalent of spots or bars of light in the visual system. It turns out that such stimuli are indeed highly effective in evoking neuronal responses from lateral belt (Fig. 1B). Virtually every neuron can now be characterized, and a tonotopic (or better cochleotopic) organization becomes immediately apparent (Fig. 1C). On the basis of reversals of best center frequency within these maps, three lateral belt areas can be defined, which we termed anterolateral (AL), middle lateral (ML), and caudolateral (CL) areas (32). These three areas are situated adjacent to and in parallel with areas R, A1, and CM, respectively. The availability of these lateral belt areas for detailed exploration brings with it the added bonus that they are situated on the exposed surface of the superior temporal gyrus (STG), an advantage that should not be underestimated. A parabelt region even more lateral and ventral on the STG has been defined on histochemical grounds (25, 33, 34), but belt and parabelt regions have not yet been distinguished electrophysiologically.

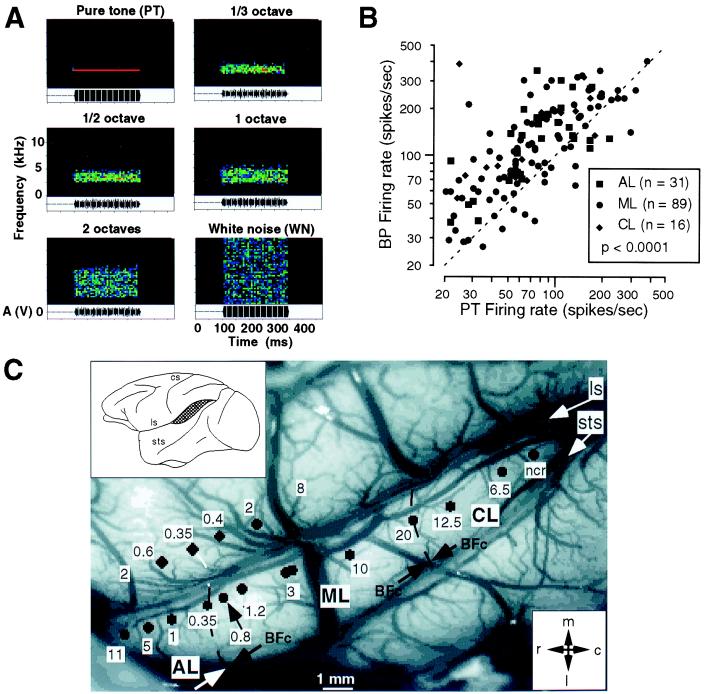

Figure 1.

Mapping of lateral belt areas in the rhesus monkey. (A) Band-passed noise (BPN) bursts of various bandwidths and constant center frequency are displayed as spectrograms. (B) Scattergram comparing responses to BPN and pure-tone (PT) stimuli in the same neurons. (C) Reconstruction of best center frequency maps showing cochleotopic organization of anterolateral (AL), middle lateral (ML), and caudolateral (CL) areas on the STG of one monkey (32). BFc, best center frequency; cs, central sulcus; ls, lateral sulcus; sts, superior temporal sulcus; ncr, no clear response.

Responses of lateral belt neurons to BPN bursts are usually many times stronger than responses to pure tones (Fig. 1B). Facilitation of several hundred percent is the rule. Furthermore, the neurons often respond best to a particular bandwidth of the noise bursts, a property referred to as bandwidth tuning (32), and the response does not increase simply with bandwidth. Only a few neurons respond less well to the BPN bursts than to the pure tones. The peak in the bandwidth tuning curve was generally unaffected by changes in intensity of the stimulus, which is important if one considers the involvement of such neurons in the decoding of auditory patterns. Best bandwidth seems to vary along the mediolateral axis of the belt areas, orthogonally to the best center frequency axis.
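To make the stimulus class concrete, the following is a minimal sketch of how a BPN burst with a given center frequency and bandwidth might be synthesized. It is an illustration only, not the procedure used in the experiments: the function name `bpn_burst`, the specification of bandwidth in octaves, the sampling rate, the burst duration, and the approximation of noise by a sum of random-phase sinusoids are all assumptions made here.

```python
import math
import random

def bpn_burst(center_hz, bandwidth_oct, dur_s=0.05, fs=44100, n_comp=200, seed=0):
    """Approximate a band-passed noise burst as a sum of random-phase sinusoids.

    All parameter choices are illustrative assumptions, not values from the text.
    Returns the normalized waveform and the (lower, upper) band edges in Hz.
    """
    rng = random.Random(seed)
    lo = center_hz * 2.0 ** (-bandwidth_oct / 2.0)  # lower band edge
    hi = center_hz * 2.0 ** (bandwidth_oct / 2.0)   # upper band edge
    # Component frequencies are drawn log-uniformly inside the band.
    comps = [(lo * (hi / lo) ** rng.random(), rng.uniform(0.0, 2.0 * math.pi))
             for _ in range(n_comp)]
    n = int(round(dur_s * fs))
    burst = [sum(math.sin(2.0 * math.pi * f * i / fs + ph) for f, ph in comps)
             for i in range(n)]
    peak = max(abs(x) for x in burst) or 1.0
    return [x / peak for x in burst], (lo, hi)

# One-octave burst centered at 4 kHz; a bandwidth of 0 reduces to a pure tone.
waveform, (low_edge, high_edge) = bpn_burst(4000.0, 1.0, dur_s=0.01)
```

Sweeping `bandwidth_oct` at a fixed `center_hz` would give the kind of stimulus series against which a bandwidth tuning curve could be measured.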

Frequency-Modulated (FM) Sweeps.

Adding temporal complexity to a pure-tone stimulus creates an FM sweep. Neurons in the lateral belt respond vigorously to linear FM sweeps and are highly selective to both their rate and direction (30, 35, 36). Selectivity to FM is already found in primary auditory cortex or even the inferior colliculus (37–40), but is even more pronounced in the lateral belt. FM selectivity differs significantly between areas on the lateral belt (35), with AL neurons responding better to slow FM rates (≈10 kHz/s) and neurons in CL responding best to high rates (≈100 kHz/s).
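As an illustration of the two stimulus parameters at issue (rate and direction), a linear FM sweep can be sketched as below. The function names, sampling rate, start frequencies, and durations are assumptions chosen for the example; only the approximate rates of 10 and 100 kHz/s come from the text.

```python
import math

def linear_fm_sweep(f_start_hz, rate_hz_per_s, dur_s, fs=44100):
    """Linear FM sweep with instantaneous frequency f(t) = f_start + rate * t.

    A positive rate gives an upward sweep, a negative rate a downward sweep.
    """
    n = int(round(dur_s * fs))
    samples = []
    for i in range(n):
        t = i / fs
        # The phase is the time integral of 2*pi*f(t).
        phase = 2.0 * math.pi * (f_start_hz * t + 0.5 * rate_hz_per_s * t * t)
        samples.append(math.sin(phase))
    return samples

def end_frequency(f_start_hz, rate_hz_per_s, dur_s):
    """Frequency reached at the end of the sweep."""
    return f_start_hz + rate_hz_per_s * dur_s

# Slow upward sweep (about 10 kHz/s) and fast downward sweep (about 100 kHz/s).
slow_up = linear_fm_sweep(1000.0, 10_000.0, 0.05)
fast_down = linear_fm_sweep(8000.0, -100_000.0, 0.05)
```

With these hypothetical settings, the slow sweep covers 500 Hz in 50 ms, whereas the fast sweep covers 5 kHz in the same time.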

It is attractive to use FM sweeps as stimuli in the auditory cortex for another reason: as argued previously (35), FM sweeps are equivalent to moving light stimuli, which have proven so highly effective for neurons of the visual cortex (41). The comparable selectivity in both sensory modalities suggests that cortical modules across different areas could apply the same temporal–spatial algorithm onto different kinds of input.

Monkey Vocalizations.

A third class of complex sounds that we have used extensively for auditory stimulation in the lateral belt are vocalizations from the rhesus monkey's own repertoire. Digitized versions of such calls, recorded from free-ranging monkeys on the island of Cayo Santiago, were available to us from a sound library assembled by Marc Hauser at Harvard University. Hauser (42) classifies rhesus monkey vocalizations into roughly two dozen different categories, which can be subdivided phonetically into three major groups: tonal, harmonic, and noisy calls (35). Tonal calls are characterized by their concentration of energy into a narrow band of frequencies that can be modulated over time. Harmonic calls contain large numbers of higher harmonics in addition to the fundamental frequency. Noisy calls, often uttered in aggressive social situations, are characterized by broadband frequency spectra that are temporally modulated. The semantics of these calls have been studied extensively (42).

A standard battery of seven different calls, which were representative on the basis of both phonetic and semantic properties, was routinely used for stimulation in single neurons of the lateral belt. A great degree of selectivity of neuronal responses was found for different types of calls. Despite a similar bandwidth of some of the calls, many neurons respond better to one type of call than to another, obviously because of the different fine structure in both the spectral and the temporal domain of the different calls. A preference index (PI) was established by measuring the peak firing rate in response to each of the seven calls and counting the number of calls that elicit a significant increase in firing rate. PI 1 refers to neurons that respond only to a single call, an index of 7 refers to neurons that respond to all seven calls, and indices of 2 to 6 refer to the corresponding numbers in between. The PI distribution in most animals reveals that few neurons respond only to a single call, few respond to all seven calls, but most respond to a number in between, usually 3, 4, or 5 of the calls. This suggests that the lateral belt areas are not yet the end stage of the pathway processing monkey vocalizations. Alternatively, monkey calls (MCs) could be processed by a network of neurons rather than single cells, a suggestion that is of course not mutually exclusive with the first.
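The counting rule behind the PI can be sketched in a few lines. The text does not specify the significance criterion, so the one used here (peak rate above a fixed multiple of the spontaneous rate) is a placeholder assumption, as are the function name and the firing rates, which are invented for illustration.

```python
from collections import Counter

def preference_index(peak_rates, spontaneous_rate, criterion=1.5):
    """Number of calls whose peak firing rate exceeds criterion * spontaneous rate.

    The criterion stands in for the (unspecified) significance test.
    """
    return sum(1 for rate in peak_rates if rate > criterion * spontaneous_rate)

# Hypothetical peak rates (spikes/s) for three neurons, seven calls each.
neurons = {
    "unit_a": [40, 12, 35, 11, 30, 10, 9],
    "unit_b": [22, 25, 31, 28, 19, 24, 27],
    "unit_c": [9, 48, 10, 11, 8, 12, 10],
}
indices = {name: preference_index(rates, spontaneous_rate=10.0)
           for name, rates in neurons.items()}
# Tally of how many neurons fall at each PI value from 1 to 7.
distribution = Counter(indices.values())
```

In this toy data set, `unit_c` would be a PI 1 neuron (a single effective call), `unit_b` a PI 7 neuron, and `unit_a` falls in between.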

Spectral and Temporal Integration in Lateral Belt Neurons

The next step in our analysis was to look for the mechanisms that make neurons in the lateral belt selective for certain calls rather than others. One pervasive mechanism that we found was “spectral integration.” MCs can, for example, be broken down into two spectral components, a low-pass and a high-pass filtered version. The neuron in the example of Fig. 2A, which responded well to the total call with the full spectrum, did not respond as well to the low-pass-filtered version and not at all to the high-pass-filtered version. When both spectral components were combined again, the response was restored to the full extent. Thus, neurons in the lateral belt combine information from different frequency bands, and the nonlinear combination of information in the spectral domain leads to response selectivity. In some instances, however, suppression instead of facilitation of the response by combining two spectral components was also found.
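One way to express nonlinear spectral summation quantitatively is to compare the response to the full call with the responses to its filtered components. The sketch below is an assumption-laden illustration rather than the analysis used in the original studies: the function name, the tolerance, and the decision rules (facilitation when the combined response exceeds the linear sum of the component responses, suppression when it falls below the better component alone) are all choices made here.

```python
def classify_integration(r_combined, r_low, r_high, tol=0.1):
    """Classify how a neuron combines two spectral components.

    Inputs are driven firing rates (spontaneous rate already subtracted).
    The thresholds are illustrative assumptions.
    """
    linear_sum = r_low + r_high
    if r_combined > (1.0 + tol) * linear_sum:
        return "facilitation"  # more than the sum of the parts
    if r_combined < (1.0 - tol) * max(r_low, r_high):
        return "suppression"   # less than the better component alone
    return "approximately linear or sub-additive"

# Hypothetical rates patterned on Fig. 2A: a strong response to the whole
# call, a weak response to the low-pass version, none to the high-pass one.
label = classify_integration(r_combined=50.0, r_low=10.0, r_high=0.0)
```

The same comparison could be applied in the time domain by substituting the responses to the individual syllables for the responses to the two spectral components.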

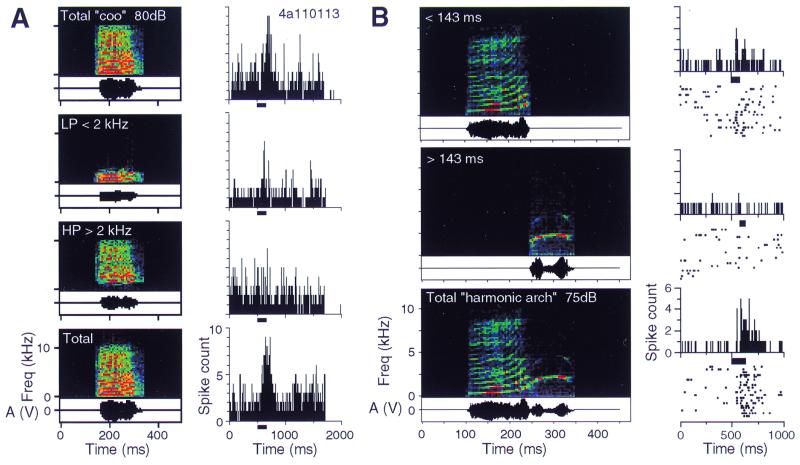

Figure 2.

Spectral and temporal integration in single neurons of the lateral belt in primates. Digitized monkey vocalizations were presented as stimuli, either as complete calls or as components manipulated in the spectral or temporal domain. (A) Nonlinear spectral summation. The “coo” call consists of a number of harmonic components and elicits a good response. If the call is low-pass-filtered with a cutoff frequency of 2 kHz, a much smaller response is obtained. The same is true for the high-pass-filtered version. Stimulation with the whole signal is repeated to demonstrate the reliability of the result (Bottom) (32). (B) Nonlinear temporal summation. The “harmonic arch” call consists of two “syllables.” Each of them alone elicits a much smaller response than the complete call.

A similar combination sensitivity is found in the time domain. If an MC with two syllables is used for stimulation, it is often found that the response to each syllable alone is minimal, whereas the combination of the two syllables in the right temporal order leads to a large response (Fig. 2B). Temporal integration will have to occur within a window as long as several hundreds of milliseconds. The neural and synaptic mechanisms that can implement such integration times have yet to be clarified.

Neurons of the kind reported here are much more frequently found in lateral belt than in A1 (P < 0.001). They also must be at a higher hierarchical level than the types of bandpass-selective neurons described earlier. Combining inputs from different frequency bands (“formants”) or with different time delays in a specific way could thus lead to the creation of certain types of “call detectors” (35). Similar models have been suggested for song selectivity in songbirds (43) and selectivity to specific echo delay combinations in bats (44).

Origins of “What” and “Where” Streams in the Lateral Belt

In our next study we compared the response selectivity of single neurons in the lateral belt region of rhesus macaque auditory cortex simultaneously to species-specific vocalizations and spatially localized sounds (45). The purpose of this study was not only to learn more about the functional specialization of the lateral belt areas, but more specifically to test the hypothesis that the cortical auditory system divides into two separate streams for the processing of “what” and “where.”

After mapping the lateral belt areas in the usual manner, using BPN bursts centered at different frequencies (32), the same set of MCs as in our previous studies was used to determine the selectivity of the neurons for MCs. A horizontal speaker array was used to test the spatial tuning of the same neurons in 20°-steps of azimuth. To determine a neuron's selectivity for both MCs and spatial position, 490 responses were evaluated quantitatively in every neuron.
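Spatial tuning measured with such a speaker array can be summarized by a half width, the measure plotted in Fig. 3. The sketch below assumes one plausible definition (the span of azimuths around the best position over which the response stays at or above half of its maximum); the exact definition, the set of speaker positions, and the firing rates shown are assumptions for illustration and are not taken from the study.

```python
def spatial_half_width(azimuths_deg, rates):
    """Span of azimuths (deg) over which the response is at least half-maximal.

    Walks outward from the best azimuth until the response drops below
    half of the peak rate on each side.
    """
    peak = max(rates)
    best = rates.index(peak)
    half = 0.5 * peak
    lo = best
    while lo > 0 and rates[lo - 1] >= half:
        lo -= 1
    hi = best
    while hi < len(rates) - 1 and rates[hi + 1] >= half:
        hi += 1
    return azimuths_deg[hi] - azimuths_deg[lo]

# Hypothetical speaker positions in 20-degree steps and two tuning curves.
azimuths = [-60, -40, -20, 0, 20, 40, 60]
sharp = spatial_half_width(azimuths, [2, 3, 10, 20, 12, 4, 1])
broad = spatial_half_width(azimuths, [15, 16, 18, 20, 19, 17, 15])
```

Under this definition a smaller half width corresponds to sharper spatial tuning, so the first hypothetical neuron would count as more spatially selective than the second.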

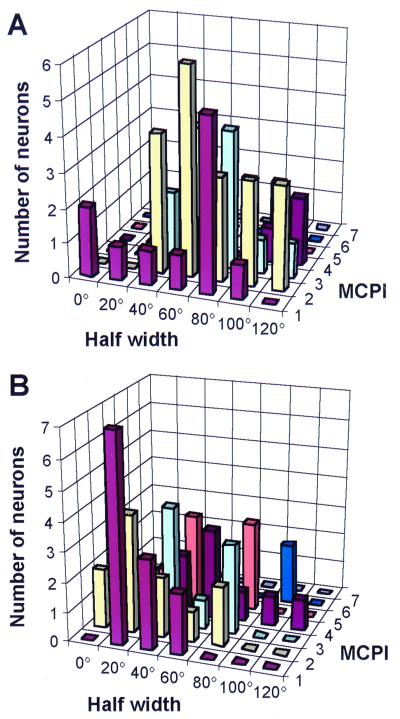

The results of the study from a total of 170 neurons in areas AL, ML, and CL can be summarized as follows (Fig. 3):

Figure 3.

Monkey-call preference index (MCPI) and spatial half width in the same neurons of rhesus monkey lateral belt. Results from the AL and CL areas are plotted in A and B, respectively.

(i) Greatest spatial selectivity was found in area CL.

(ii) Greatest selectivity for MCs was found in area AL.

(iii) In CL, monkey call selectivity often covaried with spatial selectivity.

In terms of processing hierarchies in rhesus monkey auditory cortex the following can be determined:

Spatial selectivity increases from ML → CL, and is lowest in AL.

MC selectivity increases from ML → AL, but also from ML → CL.

We can conclude, therefore, that the caudal belt region is the major recipient of auditory spatial information from A1 (and subcortical centers). This spatial information is relayed from the caudal belt to posterior parietal cortex and to dorsolateral prefrontal cortex (46). The caudal part of the STG (areas CL and CM) can thus be considered the origin of a “where”-stream for auditory processing. The anterior areas of the STG, on the other hand, are major recipients of information relevant for auditory object or pattern perception. Projecting on to orbitofrontal cortex, they can thus be thought of as forming an auditory “what”-stream (46). As can be seen in the following section, recent results of human imaging strongly support this view. However, more traditional theories of speech perception have emphasized a role of posterior STG in phonological decoding. It is important to note, therefore, that selectivity for communication signals is also relayed to the caudal STG, where it is combined with information about the localization of sounds (see Comparison of Monkey and Human Data below for further discussion).

“What” and “Where” in Human Auditory Cortex

Processing of Speech-Like Stimuli in the Superior Temporal Cortex.

How can research on nonhuman primates be relevant to the understanding of human speech perception? First, there is a striking resemblance of the spectrotemporal phonetic structure of human speech sounds to those of other species-specific vocalizations (35). Looking at human speech samples, one can recognize BPN portions contained in the coding of different types of consonants, e.g., fricatives or plosives. In addition, the presence of FM sweeps in certain phonemes and formant transitions is noticeable. Fast FM sweeps are critical for the encoding and distinction of consonant/vowel combinations such as “ba,” “da,” and “ga” (47, 48). It appears more than likely that human speech sounds are decoded by types of neurons similar to the ones found in macaque auditory cortex, perhaps with even finer tuning to the relevant parameter domains.

Second, there are intriguing similarities between the two species in terms of anatomical location. The STG in humans has been known for some time to be involved in the processing of speech or phonological decoding. This evidence stems from a number of independent lines of investigation. Lesions of the STG by stroke lead to sensory aphasia (49) and word deafness (50). Electrical stimulation of the cortical surface in the STG leads to temporary “functional lesions” used during presurgical screening in epileptic patients (51). Using this approach, it can be shown that the posterior superior temporal region is critically involved in phoneme discrimination. In addition, Zatorre et al. (52) have shown, using positron-emission tomography (PET) techniques, that the posterior superior temporal region lights up with phoneme stimulation.

Using techniques of functional magnetic resonance imaging (MRI), we are able to map the activation of auditory cortical areas directly in the human brain. Functional MRI gives much better resolution than PET and is therefore capable of demonstrating such functional organizational features as tonotopic organization (53). The same types of comparisons as in our monkey studies were used and clearly demonstrate that pure tones activate only limited islands within the core region of auditory cortex on the supratemporal plane, whereas BPN stimulation leads to more extensive zones of activation, particularly in the lateral belt. Several discrete cortical maps can be discerned with certainty, and they correspond to the maps identified in monkey auditory cortex (35, 54).

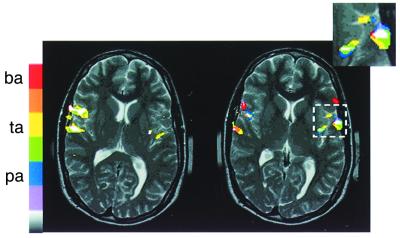

In a next step, the comparison of BPN stimulation with stimulation by consonant/vowel (CV) combinations shows that the latter leads to yet more extensive activation. Different CV tokens (corrected for fundamental frequency) lead to distinct but overlapping activations (Fig. 4), which suggest the existence of a “phonetic mapping.” In some subjects, stimulation with phonemes leads to asymmetry between the left and right hemispheres, with CV combinations often leading to more prominent activation on the left, although this is not always consistently the case (55, 56).

Figure 4.

Functional MRI study of the STG in a human subject while listening to speech sounds. A phonemic map may be recognized anterior of Heschl's gyrus resulting from superposition of activation by three different consonant/vowel combinations [ba, da, ga (64)†; courtesy of Brandon Zielinski, Georgetown University].

One should also emphasize that experimental set-up and instructions are critical for the success of functional MRI studies. Asking subjects to pay specific attention to target words within a list of words presented to them during the functional MRI scan greatly enhances the signal that can be collected as compared with simple passive listening (57).

Auditory Spatial Processing in the Inferior Parietal Cortex.

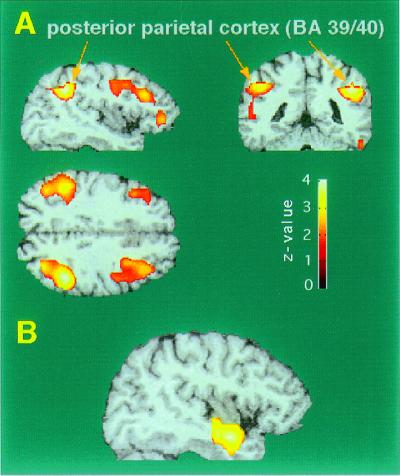

Evidence for the existence of a dorsal stream in humans, as in the visual system, having to do with the processing of spatial information comes from PET studies of activation by virtual auditory space stimuli (58, 59). In these studies, the stimuli were generated by a computer system (Tucker-Davis Technologies, Gainesville, FL, Power Dac PD1) based on head-related transfer functions established by Wightman and Kistler (60), and presented over headphones. The sounds had characteristic interaural time and intensity differences, as well as spectral cues encoding different azimuth locations. Use of these stimuli led to specific activation in a region of the inferior parietal lobe (Fig. 5A). The latter is normally associated with spatial analysis of visual patterns (61). However, the activation by auditory spatial analysis led to a focus that was about 8 mm more inferior than the location usually found with visual stimuli. Furthermore, when visual and auditory space stimuli were tested in the same subjects, there were clearly distinct activation foci stemming from the two modalities (59).

Figure 5.

PET activation of the human brain during localization of virtual auditory space stimuli. (A) Statistical parametric mapping (SPM) projections of significant areas of activation from sagittal, coronal, and axial directions. PET areas are superimposed onto representative MRI sections. (B) Area of de-activation in the right anterior STG caused by auditory localization. [Based on data from Weeks et al. (58).]

There was also a slight bias toward activation in the right hemisphere, which is consistent with the idea that the right hemisphere is more involved in spatial analysis than the left hemisphere. At the same time as we observed activation of inferior parietal areas by auditory space stimuli, we also observed a de-activation in temporal areas bilaterally (Fig. 5B), which supports the idea that these areas are involved in auditory tasks other than spatial ones, for example, those involved in the decoding of auditory patterns, including speech.

Comparison of Monkey and Human Data

In comparing monkey and human data, one apparent paradox may be noted: Speech perception in humans is traditionally associated with the posterior portion of the STG region, often referred to as “Wernicke's area.” In rhesus monkeys, on the other hand, we and others (31, 62) have found neurons in this region (areas Tpt, CM, and CL) that are highly selective for the spatial location of sounds in free field, which suggests a role in auditory localization. Neurons in the anterior belt regions, by contrast, are most selective for MCs. Several explanations for this paradox, which are not mutually exclusive, appear possible: (i) Speech processing in humans may be localized not only in posterior but also in anterior STG. Evidence for this comes from recent imaging studies (56, 63). (ii) In evolution, the anterior part of the temporal lobe may have grown disproportionately, as has also been argued with regard to prefrontal cortex (17). The precursor of Wernicke's area in the monkey may thus be situated relatively more anterior and encompass a good portion of the anterolateral belt (area AL) or even more anterior regions of the monkey's STG. (iii) Spatial selectivity in the caudal belt may play a dual role in sound localization as well as identification of sound sources on the basis of location (“cocktail party effect”). Hypothetically, its medial portion (CM) may be more specialized in localization (62) than its lateral portion (CL).

Conclusions

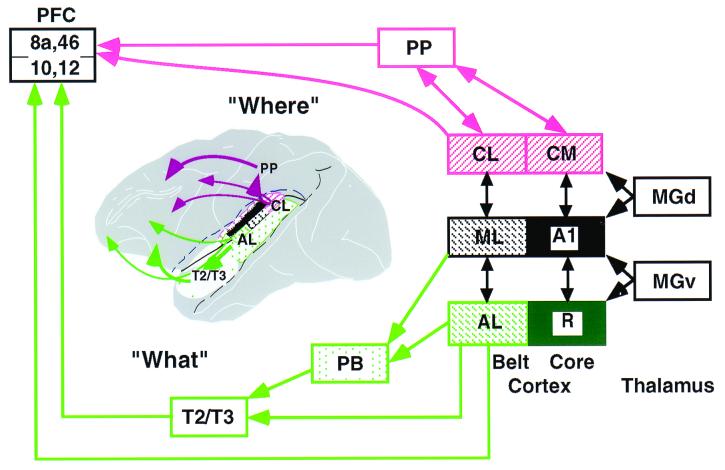

In summary, we have collected evidence from studies in nonhuman as well as human primates that the auditory cortical pathways are organized in parallel as well as serially. The lateral belt areas of the STG seem to be critically involved in the early processing of species-specific vocalizations as well as human speech. By contrast, a pathway originating from the caudal or caudomedial part of the supratemporal plane and involving the inferior parietal areas seems to be an important way station for the processing of auditory spatial information (Fig. 6). As we have emphasized before, it is important that investigations in human and nonhuman primates continue concurrently, using both functional brain imaging techniques noninvasively in humans and microelectrode studies in nonhuman primates. Although direct homologies between the two species have to be drawn with care, only the combination of both techniques can eventually reveal the mechanisms and functional organization of higher auditory processing in humans and lead to effective therapies for higher speech disorders.

Figure 6.

Schematic flow diagram of “what” and “where” streams in the auditory cortical system of primates. The ventral “what”-stream is shown in green, the dorsal “where”-stream, in red. [Modified and extended from Rauschecker (35); prefrontal connections (PFC) based on Romanski et al. (46).] PP, posterior parietal cortex; PB, parabelt cortex; MGd and MGv, dorsal and ventral parts of the MGN.

Acknowledgments

We deeply appreciate the collaborative spirit of Patricia Goldman-Rakic (Yale University), Mark Hallett (National Institute of Neurological Disorders and Stroke), Marc Hauser (Harvard University), and Mortimer Mishkin (National Institute of Mental Health). The help of the following individuals is acknowledged gratefully: Jonathan Fritz (National Institute of Mental Health), Khalaf Bushara and Robert Weeks (National Institute of Neurological Disorders and Stroke), Liz Romanski (Yale University), and Amy Durham, Alexander Kustov, Aaron Lord, David Reser, Jenny VanLare, Mark Wessinger, and Brandon Zielinski (all Georgetown University). This work was supported by Grants R01 DC 03489 from the National Institute on Deafness and Other Communicative Disorders and DAMD17–93-V-3018 from the U.S. Department of Defense.

Abbreviations

- R: rostral area

- CM: caudomedial area

- AL: anterolateral area

- ML: middle lateral area

- CL: caudolateral area

- MGN: medial geniculate nucleus

- STG: superior temporal gyrus

- BPN: band-passed noise

- FM: frequency modulated

- MC: monkey call

- PET: positron-emission tomography

Footnotes

This paper was presented at the National Academy of Sciences colloquium “Auditory Neuroscience: Development, Transduction, and Integration,” held May 19–21, 2000, at the Arnold and Mabel Beckman Center in Irvine, CA.

† Zielinski, B. A., Liu, G., and Rauschecker, J. P. (2000) Soc. Neurosci. Abstr. 26, 737.3 (abstr.).

References

- 1.Zeki S M. Nature (London) 1978;274:423–428. doi: 10.1038/274423a0. [DOI] [PubMed] [Google Scholar]

- 2.DeYoe E A, Van Essen D C. Trends Neurosci. 1988;11:219–226. doi: 10.1016/0166-2236(88)90130-0. [DOI] [PubMed] [Google Scholar]

- 3.Ungerleider L G, Mishkin M. In: Analysis of Visual Behaviour. Ingle D J, Goodale M A, Mansfield R J W, editors. Cambridge, MA: MIT Press; 1982. pp. 549–586. [Google Scholar]

- 4.Mishkin M, Ungerleider L G, Macko K A. Trends Neurosci. 1983;6:414–417. [Google Scholar]

- 5.Livingstone M S, Hubel D H. Science. 1988;240:740–749. doi: 10.1126/science.3283936. [DOI] [PubMed] [Google Scholar]

- 6.Zeki S. Neuroscience. 1983;9:741–765. doi: 10.1016/0306-4522(83)90265-8. [DOI] [PubMed] [Google Scholar]

- 7.Desimone R, Schein S J. J Neurophysiol. 1987;57:835–868. doi: 10.1152/jn.1987.57.3.835. [DOI] [PubMed] [Google Scholar]

- 8.Desimone R. J Cogn Neurosci. 1991;3:1–8. doi: 10.1162/jocn.1991.3.1.1. [DOI] [PubMed] [Google Scholar]

- 9.Tanaka K. Curr Opin Neurobiol. 1997;7:523–529. doi: 10.1016/s0959-4388(97)80032-3. [DOI] [PubMed] [Google Scholar]

- 10.Movshon J A, Newsome W T. J Neurosci. 1996;16:7733–7741. doi: 10.1523/JNEUROSCI.16-23-07733.1996. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Colby C L, Goldberg M E. Annu Rev Neurosci. 1999;22:319–349. doi: 10.1146/annurev.neuro.22.1.319. [DOI] [PubMed] [Google Scholar]

- 12.Andersen R A, Snyder L H, Bradley D C, Xing J. Annu Rev Neurosci. 1997;20:303–330. doi: 10.1146/annurev.neuro.20.1.303. [DOI] [PubMed] [Google Scholar]

- 13.Sakata H, Kusunoki M. Curr Opin Neurobiol. 1992;2:170–174. doi: 10.1016/0959-4388(92)90007-8. [DOI] [PubMed] [Google Scholar]

- 14.O'Scalaidhe S P, Wilson F A, Goldman-Rakic P S. Science. 1997;278:1135–1138. doi: 10.1126/science.278.5340.1135. [DOI] [PubMed] [Google Scholar]

- 15.Rao S C, Rainer G, Miller E K. Science. 1997;276:821–824. doi: 10.1126/science.276.5313.821. [DOI] [PubMed] [Google Scholar]

- 16.Ungerleider L G, Haxby J V. Curr Opin Neurobiol. 1994;4:157–165. doi: 10.1016/0959-4388(94)90066-3. [DOI] [PubMed] [Google Scholar]

- 17.Courtney S M, Petit L, Maisog J M, Ungerleider L G, Haxby J V. Science. 1998;279:1347–1351. doi: 10.1126/science.279.5355.1347. [DOI] [PubMed] [Google Scholar]

- 18. Woolsey C N, Walzl E M. Bull Johns Hopkins Hosp. 1942;71:315–344.

- 19. Pandya D N, Sanides F. Z Anat Entwicklungsgesch. 1973;139:127–161. doi: 10.1007/BF00523634.

- 20. Mesulam M M, Pandya D N. Brain Res. 1973;60:315–333. doi: 10.1016/0006-8993(73)90793-2.

- 21. Galaburda A M, Pandya D N. J Comp Neurol. 1983;221:169–184. doi: 10.1002/cne.902210206.

- 22. Burton H, Jones E G. J Comp Neurol. 1976;168:249–302. doi: 10.1002/cne.901680204.

- 23. Pandya D N, Seltzer B. Trends Neurosci. 1982;5:386–390.

- 24. Merzenich M M, Brugge J F. Brain Res. 1973;50:275–296. doi: 10.1016/0006-8993(73)90731-2.

- 25. Morel A, Garraghty P E, Kaas J H. J Comp Neurol. 1993;335:437–459. doi: 10.1002/cne.903350312.

- 26. Jones E G, Dell'Anna M E, Molinari M, Rausell E, Hashikawa T. J Comp Neurol. 1995;362:153–170. doi: 10.1002/cne.903620202.

- 27. Molinari M, Dell'Anna M E, Rausell E, Leggio M G, Hashikawa T, Jones E G. J Comp Neurol. 1995;362:171–194. doi: 10.1002/cne.903620203.

- 28. Kosaki H, Hashikawa T, He J, Jones E G. J Comp Neurol. 1997;386:304–316.

- 29. Rauschecker J P, Tian B, Pons T, Mishkin M. J Comp Neurol. 1997;382:89–103.

- 30. Rauschecker J P. Acta Otolaryngol Suppl. 1997;532:34–38. doi: 10.3109/00016489709126142.

- 31. Leinonen L, Hyvärinen J, Sovijärvi A R A. Exp Brain Res. 1980;39:203–215. doi: 10.1007/BF00237551.

- 32. Rauschecker J P, Tian B, Hauser M. Science. 1995;268:111–114. doi: 10.1126/science.7701330.

- 33. Hackett T A, Stepniewska I, Kaas J H. J Comp Neurol. 1998;394:475–495. doi: 10.1002/(sici)1096-9861(19980518)394:4<475::aid-cne6>3.0.co;2-z.

- 34. Kaas J H, Hackett T A, Tramo M J. Curr Opin Neurobiol. 1999;9:164–170. doi: 10.1016/s0959-4388(99)80022-1.

- 35. Rauschecker J P. Audiol Neurootol. 1998;3:86–103. doi: 10.1159/000013784.

- 36. Rauschecker J P. Curr Opin Neurobiol. 1998;8:516–521. doi: 10.1016/s0959-4388(98)80040-8.

- 37. Whitfield I C, Evans E F. J Neurophysiol. 1965;28:655–672. doi: 10.1152/jn.1965.28.4.655.

- 38. Suga N. J Physiol (London). 1968;198:51–80. doi: 10.1113/jphysiol.1968.sp008593.

- 39. Suga N. J Physiol (London). 1969;200:555–574. doi: 10.1113/jphysiol.1969.sp008708.

- 40. Mendelson J R, Cynader M S. Brain Res. 1985;327:331–335. doi: 10.1016/0006-8993(85)91530-6.

- 41. Hubel D H, Wiesel T N. J Physiol (London). 1962;160:106–154. doi: 10.1113/jphysiol.1962.sp006837.

- 42. Hauser M D. The Evolution of Communication. Cambridge, MA: MIT Press; 1996.

- 43. Margoliash D, Fortune E S. J Neurosci. 1992;12:4309–4326. doi: 10.1523/JNEUROSCI.12-11-04309.1992.

- 44. Suga N, O'Neill W E, Manabe T. Science. 1978;200:778–781. doi: 10.1126/science.644320.

- 45. Rauschecker J P, Durham A, Kustov A, Lord A, Tian B. Soc Neurosci Abstr. 1999;25:394.

- 46. Romanski L M, Tian B, Fritz J, Mishkin M, Goldman-Rakic P S, Rauschecker J P. Nat Neurosci. 1999;2:1131–1136. doi: 10.1038/16056.

- 47. Liberman A M, Cooper F S, Shankweiler D P, Studdert-Kennedy M. Psychol Rev. 1967;74:431–461. doi: 10.1037/h0020279.

- 48. Tallal P, Piercy M. Nature (London). 1973;241:468–469. doi: 10.1038/241468a0.

- 49. Wernicke C. Der aphasische Symptomenkomplex. Breslau, Poland: Cohn & Weigert; 1874.

- 50. Barrett A M. J Nerv Ment Dis. 1910;37:73–92.

- 51. Boatman D, Lesser R P, Gordon B. Brain Lang. 1995;51:269–290. doi: 10.1006/brln.1995.1061.

- 52. Zatorre R J, Evans A C, Meyer E, Gjedde A. Science. 1992;256:846–849. doi: 10.1126/science.1589767.

- 53. Wessinger C M, Buonocore M, Kussmaul C L, Mangun G R. Hum Brain Mapp. 1997;5:18–25. doi: 10.1002/(SICI)1097-0193(1997)5:1<18::AID-HBM3>3.0.CO;2-Q.

- 54. Wessinger C M, Tian B, VanMeter J W, Platenberg R C, Pekar J, Rauschecker J P. J Cogn Neurosci. 2000; in press.

- 55. Binder J R, Frost J A, Hammeke T A, Cox R W, Rao S M, Prieto T. J Neurosci. 1997;17:353–362. doi: 10.1523/JNEUROSCI.17-01-00353.1997.

- 56. Binder J R, Frost J A, Hammeke T A, Bellgowan P S F, Springer J A, Kaufman J N, Possing E T. Cereb Cortex. 2000;10:512–528. doi: 10.1093/cercor/10.5.512.

- 57. Grady C L, VanMeter J W, Maisog J M, Pietrini P, Krasuski J, Rauschecker J P. Neuroreport. 1997;8:2511–2516. doi: 10.1097/00001756-199707280-00019.

- 58. Weeks R A, Aziz-Sultan A, Bushara K O, Tian B, Wessinger C M, Dang N, Rauschecker J P, Hallett M. Neurosci Lett. 1999;262:155–158. doi: 10.1016/s0304-3940(99)00062-2.

- 59. Bushara K O, Weeks R A, Ishii K, Catalan M-J, Tian B, Rauschecker J P, Hallett M. Nat Neurosci. 1999;2:759–766. doi: 10.1038/11239.

- 60. Wightman F L, Kistler D J. J Acoust Soc Am. 1989;85:858–867. doi: 10.1121/1.397557.

- 61. Haxby J V, Horwitz B, Ungerleider L G, Maisog J M, Pietrini P, Grady C L. J Neurosci. 1994;14:6336–6353. doi: 10.1523/JNEUROSCI.14-11-06336.1994.

- 62. Recanzone G H, Guard D C, Phan M L, Su T K. J Neurophysiol. 2000;83:2723–2739. doi: 10.1152/jn.2000.83.5.2723.

- 63. Belin P, Zatorre R J, Lafaille P, Ahad P, Pike B. Nature (London). 2000;403:309–312. doi: 10.1038/35002078.

- 64. Morad A, Perez C V, Van Lare J E, Wessinger C M, Zielinski B A, Rauschecker J P. Neuroimage. 1999;9:S996.