Abstract

How does visual perception shape the way we coordinate movements? Recent studies suggest that the brain organizes movements based on minimizing reaching errors in the presence of motor and sensory noise. We present an alternative hypothesis in which movement trajectories also result from acquired knowledge about the geometrical properties of the object that the brain is controlling. To test this hypothesis, we asked human subjects to control a simulated kinematic linkage by continuous finger motion, a completely novel experience. This paradigm removed all biases arising from influences of limb dynamics and past experience. Subjects were exposed to two different types of visual feedback; some saw the entire simulated linkage and others saw only the moving extremity. Consistent with our hypothesis, subjects learned to move the simulated linkage along geodesic lines corresponding to the geometrical structure of the observed motion. Thus, optimizing final accuracy is not the unique determinant of trajectory formation.

Introduction

How do we move our arms to reach the things we need? Goal-directed reaches, predominantly in the transverse plane, are good candidate movements to study this issue due to their relative simplicity. Early studies report that we move our hand, typically displayed as a cursor-like point, in straight lines and with a bell-shaped velocity profile (Morasso, 1981; Soechting and Lacquaniti, 1981; Abend et al., 1982; Flash and Hogan, 1985). This result has been repeated many times and persists through unusual visual distortions (Flanagan and Rao, 1995; Scheidt and Ghez, 2007), perturbing forces (Lackner and Dizio, 1994; Shadmehr and Mussa-Ivaldi, 1994), and experimentally imposed reaching strategies (Mazzoni and Krakauer, 2006; Taylor et al., 2010). It seems hard to refute the statement by Flanagan and Rao (1995) that “reaching movements are adapted so as to produce straight lines in visually perceived space.” An emerging trend is to consider this empirical observation to be a result of a particular type of optimal controller (Uno et al., 1989; Wolpert et al., 1995; Dingwell et al., 2004). According to this view, the brain is not concerned about the trajectory of a movement, but instead, only about reaching a final goal in the most efficient way. This could be done, for example, by minimizing the effect of motor noise on the final accuracy of a reaching movement (Todorov and Jordan, 2002).

We hypothesize that, when we execute movements of a controlled endpoint, we explicitly plan a trajectory, which would require an expansion of some common optimal control-based descriptions of movement beyond cost functions based on final errors. Furthermore, we propose that the planned trajectory is based on the available information about the geometry of the entire system, not just the endpoint. Therefore, the resulting movements need not necessarily be straight-line trajectories as previously thought. Instead, they may be curved paths corresponding to minimum length segments within the inherent geometry of the system.

To test this hypothesis, subjects remotely controlled objects of different shape presented on a computer screen via movements of their fingers. The finger-to-object mapping was novel, eliminating experience confounds associated with reaching. Subjects who controlled a planar arm-like linkage, but were only shown a cursor at its endpoint, attempted to move in straight lines. Subjects who were shown the complete linkage moved in curved arcs of the endpoint rather than straight paths. Moreover, these arcs are consistent with the geometrical structure of the linkage, suggesting that the nervous system develops an implicit understanding of the linkage geometry that causes these particular trajectories.

Our results may explain observed movement curvatures (Atkeson and Hollerbach, 1985), as well as extend computational work suggesting that “[trajectory] geodesics are an emergent property of the motor system” (Biess et al., 2007). Moreover, subject trajectories cannot be accounted for by task demands or error feedback alone, suggesting an adjustment must be made to the standard formulation of optimal control theory. Together, our experiments demonstrate that the machinery of visual perception is capable of identifying the geometry of the controlled device from visual observation of its motion and adapts to produce maximally efficient movements in that geometry.

Materials and Methods

Experiment design.

Fifty-nine subjects (27 females; 32 males) consented to participate in this experiment, which was approved by Northwestern University's Institutional Review Board. Subjects wore a CyberGlove (Immersion Corporation) on their hand that captured the movements of each finger joint, palm arch, thumb rotation, and separation between fingers via 19 resistive sensors. Data from the glove were sampled at a rate of 50 Hz (Simulink). Only adult subjects whose hands fit comfortably (and without noticeable slip) inside the CyberGlove, did not have hand tremors, had no history of neurological disorders, and had no prior knowledge of the experimental procedure were allowed to participate.

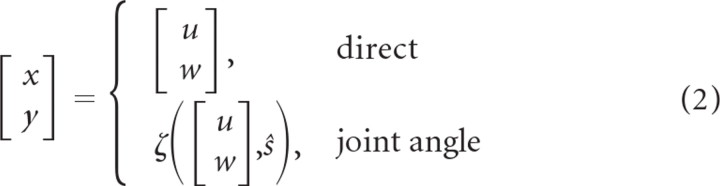

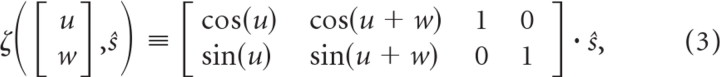

All groups of subjects used finger manipulation to execute movements of a remotely controlled endpoint on a computer monitor directly in front of them (see Fig. 1, panel 2). The posture of the hand was represented as a vector in the 19-dimensional space of glove sensors, h. The h vector was continuously projected from hand space to a two-dimensional command plane by the 2 × 19 linear mapping, A (see Fig. 1, panel 1). The location on the command plane, u and w (Eq. 1), either directly determined the Cartesian coordinates of the controlled endpoint (Experiment III) or it was the joint angle inputs (Experiments I and II) that defined the configuration of a two-link simulated planar linkage via the forward kinematics, ζ (Eqs. 2, 3):

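$$\begin{bmatrix} u \\ w \end{bmatrix} = A\,h \tag{1}$$

$$\begin{bmatrix} x \\ y \end{bmatrix} = \begin{cases} [u, w]^T & \text{(direct)} \\ \zeta([u, w]^T, \hat{s}) & \text{(joint angle)} \end{cases} \tag{2}$$

$$\zeta([u, w]^T, \hat{s}) = \begin{bmatrix} x_0 + l_1\cos(w) + l_2\cos(w + u) \\ y_0 + l_1\sin(w) + l_2\sin(w + u) \end{bmatrix} \tag{3}$$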

where ŝ = [l₁, l₂, x₀, y₀]ᵀ is a constant parameter vector that includes the link lengths and the origin of the proximal (shoulder) joint.
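The chain from glove signals to the controlled endpoint can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, and the forward kinematics assume the standard two-link planar convention in which w is the shoulder angle measured from the x-axis and u is the elbow angle measured relative to the first link.

```python
import math

def command_from_hand(A, h):
    """Project a glove vector h onto the command plane: [u, w] = A h (Eq. 1).
    A is a 2 x 19 nested list, h a list of 19 sensor values."""
    return [sum(a * x for a, x in zip(row, h)) for row in A]

def forward_kinematics(u, w, s):
    """Endpoint of a planar two-link linkage.
    ASSUMPTION: w is the shoulder angle from the x-axis and u is the
    elbow angle relative to the first link; s = (l1, l2, x0, y0)."""
    l1, l2, x0, y0 = s
    x = x0 + l1 * math.cos(w) + l2 * math.cos(w + u)
    y = y0 + l1 * math.sin(w) + l2 * math.sin(w + u)
    return x, y

def endpoint(A, h, s, joint_angle_control=True):
    """Joint angle control (Experiments I and II) passes the command through
    the kinematics; direct control (Experiment III) uses it as x, y."""
    u, w = command_from_hand(A, h)
    return forward_kinematics(u, w, s) if joint_angle_control else (u, w)

# Toy example: a fully extended arm with unit links reaches x = 2.
print(forward_kinematics(0.0, 0.0, (1.0, 1.0, 0.0, 0.0)))
```

Keeping the control mode as a flag mirrors the design of the experiments: the same command vector drives both conditions, and only the final stage differs.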

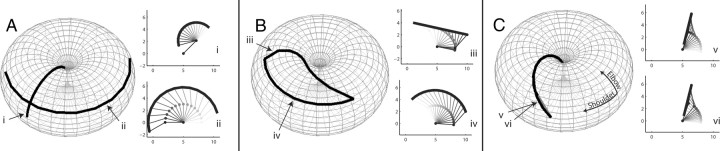

Figure 1.

Experiment design. (1) Subjects wore an instrumented data glove that captured the angles of 19 joints on their hand and fingers. These joint angles were collected into a vector, h(t), that varied through time as subjects moved their fingers. h(t) was continuously multiplied by the linear operator, A, to produce the command vector [u,w]ᵀ. The command vector was then treated as the joint angles of a simulated planar two-link mechanism to determine the position of the linkage's free-moving endpoint (Experiments I and II), or the command vector directly determined the endpoint position (Experiment III). (2) The experimental setup: the subject's hand was completely decoupled from the simulated object it controlled. (3) The four calibration postures for participants in all experiments. (4) In Experiment I, subjects controlled the joint angles of the simulated mechanism and were divided into two groups. One group had complete vision of the simulated linkage (CV) and the other had only minimal vision of the linkage endpoint (MV). Both the CV and MV groups used finger manipulations to move the linkage endpoint into targets presented at vertices of a rectangle. In panels 4–6, the command vector elements are shown in gray and what subjects actually saw is shown in black. (5) Experiment II followed the protocol of Experiment I, but used an irregular target set designed to increase the separation between straight-line paths joining targets over the screen and straight-line paths joining the targets over the torus (for explanation, see Fig. 4). (6) Experiment III followed the protocol of Experiment I, but implemented direct endpoint control, bypassing the kinematics of the linkage. The linkage displayed to the CV group in Experiment III was determined via the inverse kinematics (Eq. 4) and had no contribution to the movement of the controlled endpoint.

We used this paradigm for greater experimental control and to eliminate confounds. To determine whether trajectory planning is influenced by the perception of geometry of the moving object, we required the ability to control the subject's perception of that geometry. Therefore, we avoided common reaching movement paradigms that carry the heavy confounds of past experience. Everyone who can make reaches has done so millions of times throughout their lives and has acquired so much information about their arms that any experimental condition that attempts to alter their knowledge of the geometry of their arm would be in a losing battle with that enormous database. This finger–object interface is a novel scheme that should have carried minimal control bias for the subjects. The specific choice to use the cursor-like object (Eq. 2, direct) was for comparison with the reaching paradigms currently in use that display the hand as a point on a monitor during movement. The possible choices for the second object were vast, virtually infinite, since in principle it could have had any kinematic structure. To have well defined curvilinear geodesics, the controlled kinematics needed to be smoothly nonlinear. Another practical constraint was that the object be sufficiently simple, so that the brain could develop a model of its kinematics within the time span of the experimental protocol. We therefore chose the two-linkage object (Eq. 2, joint angle) because our previous work had shown that it was a transformation that was learnable within 10 training blocks.

Subjects were divided into three experiments and two conditions per experiment: subjects received either complete vision of the simulated linkage (CV) or minimal vision of the linkage endpoint only (MV). In Experiment I, the CV group (n = 16) had joint angle control and saw a line rendering of the mechanism attached to the circular controlled endpoint. The MV group (n = 16) had joint angle control and saw only the controlled endpoint (a 17th MV subject was excluded from all analysis because no improvement was shown throughout training). Both groups trained on four targets presented at the vertices of a rectangle (see Fig. 1, panel 4).

Subjects in Experiment II were likewise divided into CV (n = 5) and MV (n = 5) groups. They performed the same protocol as those in Experiment I with the exception that the four targets on which they trained were in different locations in the workspace (see Fig. 1, panel 5). These targets were chosen so as to increase the separation between joint angle geodesics (curved dashed lines) and straight paths joining the targets. The target layout was created via a genetic algorithm whose fitness function was the mean Hausdorff distance (see below, Geodesic similarity measure) of the six path pairs joining all targets.

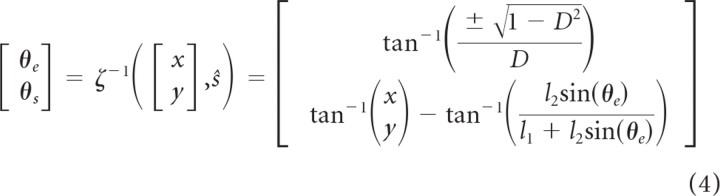

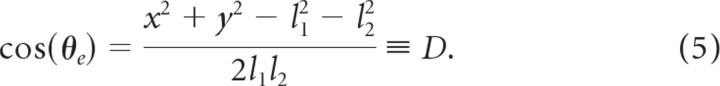

In Experiment III, the MV group (n = 8) had direct control and saw only the controlled endpoint. The CV group (n = 8) had direct control and saw the same rendering as the CV groups from Experiments I and II, which was determined through the inverse kinematics, ζ⁻¹ (Eq. 4) (Spong et al., 2006). Here, θe and θs are the “elbow” and “shoulder” joint angles of the mechanism (which for the CV group in Experiments I and II were simply u and w, respectively):

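$$\theta_e = \operatorname{atan2}\left(\pm\sqrt{1 - D^2},\ D\right), \qquad \theta_s = \operatorname{atan2}(y - y_0,\ x - x_0) - \operatorname{atan2}(l_2\sin\theta_e,\ l_1 + l_2\cos\theta_e) \tag{4}$$

and where

$$D = \frac{(x - x_0)^2 + (y - y_0)^2 - l_1^2 - l_2^2}{2\,l_1 l_2} \tag{5}$$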

The command plane for direct control groups allowed movement over a (theoretically) infinite x–y space on the monitor, while the joint angle control groups were limited to the range of motion of the simulated linkage. To enforce consistent visual feedback across experiments, and to prevent imaginary solutions to the inverse kinematics in Equation 4, boundaries were placed on the direct control groups. If the controlled endpoint passed beyond the reach of the kinematics (Eq. 3), its location would be projected back onto a circle defined by the maximum reach of the linkage. The location of the projection on the circle was calculated as the intersection of the circle with the line connecting the linkage shoulder location, [x₀,y₀]ᵀ, and the location [u,w]ᵀ. This looked like a hard boundary to MV groups across all experiments at the limit of the reach of the mechanism, and ensured that feedback remained parsimonious for the CV group in Experiment III (see Fig. 1, panel 6).
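The boundary projection and the inverse kinematics can be illustrated together. The sketch below is hedged: the function names are hypothetical, the inverse kinematics follow the textbook two-link solution (as in Spong et al., 2006), and the positive square-root branch is an assumption, since the text does not state which elbow configuration was displayed.

```python
import math

def clamp_to_reach(u, w, s):
    """Project a commanded point lying beyond the maximum reach back onto the
    reach circle, along the line joining it to the shoulder at (x0, y0)."""
    l1, l2, x0, y0 = s
    dx, dy = u - x0, w - y0
    r = math.hypot(dx, dy)
    reach = l1 + l2
    if r <= reach:
        return u, w
    scale = reach / r
    return x0 + dx * scale, y0 + dy * scale

def inverse_kinematics(x, y, s):
    """Return (theta_e, theta_s) for a planar two-link linkage.
    ASSUMPTION: the positive square-root (one elbow configuration) is used."""
    l1, l2, x0, y0 = s
    dx, dy = x - x0, y - y0
    D = (dx * dx + dy * dy - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    D = max(-1.0, min(1.0, D))  # guard against rounding just outside [-1, 1]
    theta_e = math.atan2(math.sqrt(1.0 - D * D), D)
    theta_s = math.atan2(dy, dx) - math.atan2(l2 * math.sin(theta_e),
                                              l1 + l2 * math.cos(theta_e))
    return theta_e, theta_s

s = (1.0, 1.0, 0.0, 0.0)           # unit links, shoulder at the origin
x, y = clamp_to_reach(3.0, 0.0, s)  # commanded point outside the reach circle
print((x, y), inverse_kinematics(x, y, s))
```

Clamping before inverting keeps |D| ≤ 1 at the outer boundary, which is what prevents the square root from becoming imaginary.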

The mapping matrix, A, was created by having the subject generate four preset hand gestures. These were identical for all subjects and were a flat spread open posture, a pistol-like posture, a slightly curled posture of the fingers as though one were unscrewing a cap, and a lightly clenched fist with the thumb curled behind the middle phalanges (see Fig. 1, panel 3). The mapping matrix was then calculated as follows: A = θ · H⁺, where θ is a 2 × 4 matrix of either angle pairs at the extrema of the linkage workspace or corners of the x, y workspace, depending on the group. H⁺ is the Moore–Penrose pseudoinverse of H, the 19 × 4 matrix whose columns are signal vectors corresponding to the calibration postures. As a result of this redundant geometry, the controlled endpoint could reach all points on the rectangular workspace with many anatomically feasible gestures for both direct and joint angle control groups. The initial calibration postures were chosen empirically such that all points in a large convex workspace were reachable. During calibration, subjects did not see the linkage or the endpoint and thus had no information about the correspondence between hand postures and the endpoint positions (Danziger et al., 2009).
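As a rough illustration of the calibration step, the sketch below computes A = θ · H⁺ with plain Python lists. It assumes H has full column rank, so the pseudoinverse reduces to (HᵀH)⁻¹Hᵀ; the helper names and the toy posture matrix are hypothetical and stand in for real glove recordings.

```python
def matmul(X, Y):
    """Product of two matrices stored as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]

def transpose(X):
    return [list(col) for col in zip(*X)]

def solve(M, B):
    """Solve M X = B by Gauss-Jordan elimination with partial pivoting."""
    n = len(M)
    aug = [list(M[i]) + list(B[i]) for i in range(n)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[p] = aug[p], aug[c]
        piv = aug[c][c]
        aug[c] = [v / piv for v in aug[c]]
        for r in range(n):
            if r != c:
                f = aug[r][c]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]

def calibration_map(theta, H):
    """A = theta * pinv(H), with pinv(H) = (H^T H)^-1 H^T.
    ASSUMPTION: the four calibration postures are linearly independent."""
    Ht = transpose(H)
    H_pinv = solve(matmul(Ht, H), Ht)  # 4 x 19
    return matmul(theta, H_pinv)        # 2 x 19

# Toy data: 19 sensors x 4 postures, and four command-plane corners.
H = [[1.0 if i == j else (0.5 if i == j + 4 else 0.0) for j in range(4)]
     for i in range(19)]
theta = [[0.0, 1.0, 1.0, 0.0],
         [0.0, 0.0, 1.0, 1.0]]
A = calibration_map(theta, H)
print(matmul(A, H))  # reproduces theta up to rounding
```

By construction, each calibration posture maps exactly onto its assigned corner, while the remaining 17 dimensions of hand space are left redundant.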

Protocol.

Subjects moved the controlled endpoint to four different targets (whose locations differed by experiment) that appeared on the screen. Reaching error, the Euclidean distance from the moving endpoint location to target center, was calculated 800 ms after movement onset, at which point the target changed color. Subjects were instructed to minimize this error by positioning the endpoint as close as possible to the target before it changed color. The next trial was initiated only after the subject acquired the target and remained inside for 2 s. Once a new target appeared, the subject had unlimited time to plan the movement, and the countdown to endpoint error initiated only after the subject left the previous target.

For all six experimental groups, the four targets were presented in pseudorandom order such that each of the six paths connecting the targets was traversed heading in each direction two times for a total of 24 movements per epoch. Subjects performed 12 epochs, after each of which they were allowed to rest if they desired.
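The constraint on target order (every directed path between the four targets traversed exactly twice, in one continuous sequence of 24 movements) can be met by drawing a random Eulerian circuit on the directed target graph. The source does not say how the sequences were actually generated, so the sketch below is only one possible approach, with a hypothetical function name.

```python
import random

def epoch_sequence(n_targets=4, repeats=2, rng=None):
    """Random target order in which every ordered pair of distinct targets
    appears as a consecutive pair exactly `repeats` times.
    Uses Hierholzer's algorithm on a directed multigraph with shuffled edges."""
    rng = rng or random.Random()
    out = {a: [b for b in range(n_targets) if b != a] * repeats
           for a in range(n_targets)}
    for edges in out.values():
        rng.shuffle(edges)
    stack, circuit = [rng.randrange(n_targets)], []
    while stack:
        v = stack[-1]
        if out[v]:
            stack.append(out[v].pop())   # follow an unused path from v
        else:
            circuit.append(stack.pop())  # dead end: emit vertex
    circuit.reverse()
    return circuit

seq = epoch_sequence(rng=random.Random(0))
print(len(seq) - 1, "movements:", seq)
```

Because every target has as many outgoing as incoming paths, such a circuit always exists, and it starts and ends on the same target.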

During the final two epochs, visual feedback of the linkage was suppressed for all CV groups such that the CV and MV groups terminated the experiment in the same feedback condition, that is, seeing only the controlled endpoint. This change is marked by vertical dashed lines on appropriate figures.

Statistics.

Significance tests on populations were done using repeated-measures ANOVA (NCSS and PASS 97 software) unless otherwise specified. The points on line graphs were plotted as the mean values for each subject, averaged across subjects. All statistical tests other than the ANOVA tests were performed using the MATLAB statistics toolbox, version 7.8.0.347 (R2009a). Confidence intervals were calculated using Student's t statistic over subject averages. Power analysis of the error metrics in Figures 2 and 10 was calculated post hoc by estimating the effect size, 0.66, from data collected in the study by Danziger et al. (2009), a task very similar to Experiment I in this paper, and only from the first 10 epochs of training to match the amount of training subjects had in this experiment (G*Power 3.1).

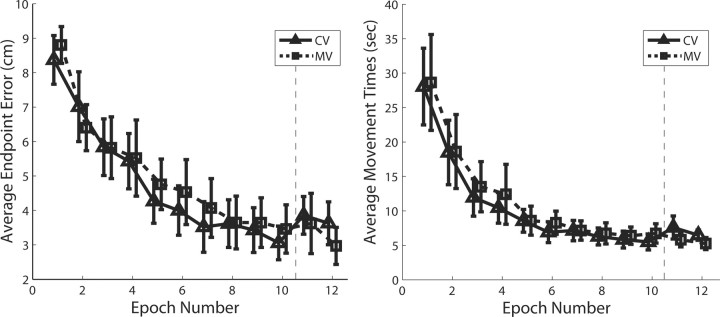

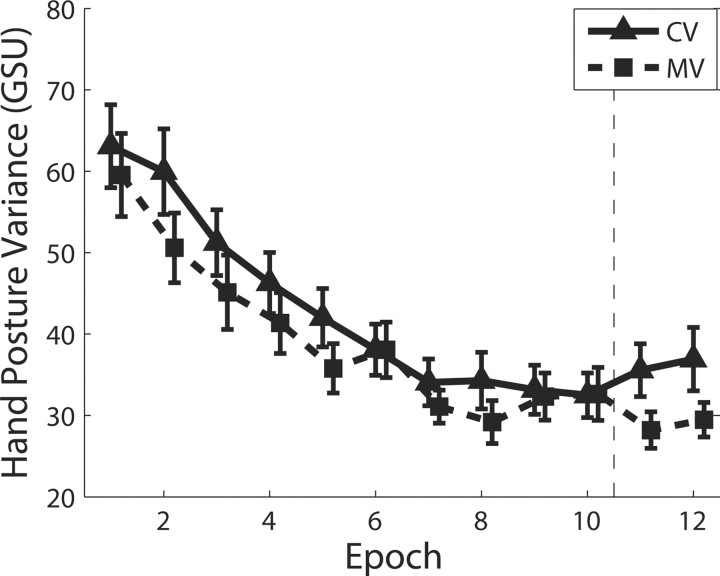

Figure 2.

Performance evaluation metrics for Experiment I. Subjects were instructed to move quickly and accurately to displayed targets. Average endpoint error (left) is the distance of the endpoint to the target center after 800 ms (see Materials and Methods). Errors were measured as on-screen distances in centimeters, and targets had a 2 cm radius. Average movement time (right) is the mean total time taken to reach a target in a given epoch. Results indicate commensurate execution of the explicit task goals for the CV and MV groups. This result is mirrored by the CV and MV groups from Experiments II and III (Fig. 10). The vertical dashed line denotes when the feedback of the simulated linkage was discontinued for the CV group, which resulted in a significant increase in errors and movement times. All error bars are 95% confidence intervals.

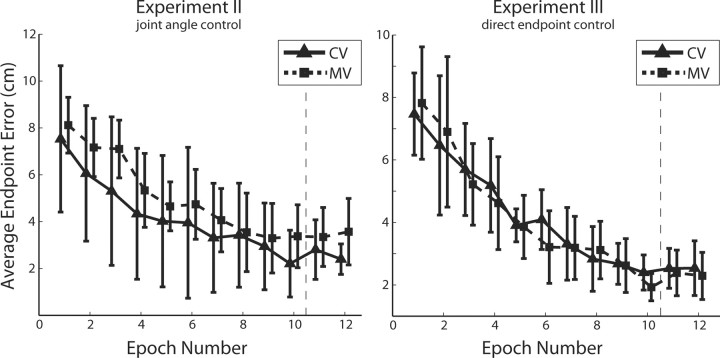

Figure 10.

Endpoint errors throughout training for Experiments II and III. Performance differences between CV and MV groups for both experiments were not found to be statistically significant. The slightly steeper learning rates seen in Experiment III may be attributed to controlling a completely linear, and therefore less challenging, system. The vertical dashed line denotes when the feedback of the simulated linkage was discontinued for the CV groups.

Rectilinearity was measured by the aspect ratio, the maximum lateral deviation from a straight line joining the start and end of the movement divided by the length of that line. Only a completely collinear set of points has an aspect ratio of zero, and a perfect semicircle is one example of a set of points having an aspect ratio of 0.5.
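The aspect ratio is straightforward to compute from sampled endpoint positions. The following is a minimal sketch with a hypothetical function name; it reproduces the two reference values given above (zero for collinear points, 0.5 for a semicircle).

```python
import math

def aspect_ratio(path):
    """Maximum lateral deviation from the chord joining the first and last
    samples, divided by the chord length. `path` is a list of (x, y) pairs."""
    (x0, y0), (x1, y1) = path[0], path[-1]
    chord = math.hypot(x1 - x0, y1 - y0)
    if chord == 0:
        raise ValueError("start and end of the movement coincide")
    # Perpendicular distance of each sample from the chord (cross product / length).
    max_dev = max(abs((x1 - x0) * (y - y0) - (y1 - y0) * (x - x0))
                  for x, y in path) / chord
    return max_dev / chord

line = [(t / 10.0, 2.0 * t / 10.0) for t in range(11)]
semicircle = [(math.cos(math.pi * k / 180), math.sin(math.pi * k / 180))
              for k in range(181)]
print(aspect_ratio(line), aspect_ratio(semicircle))
```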

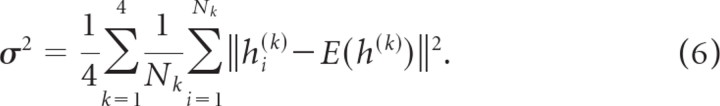

If a hand posture can be represented as a 19-dimensional vector (the position along each dimension defined by a glove sensor value), then typically a 19 × 19 covariance matrix would be required to present the variability of hand postures in the 19-dimensional vector space. We distill this matrix down to a scalar to show the data trends in a manageable way as follows:

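$$\text{variability} = \frac{1}{4}\sum_{k=1}^{4}\sum_{i=1}^{N_k}\left\lVert h_i^{(k)} - E\!\left(h^{(k)}\right)\right\rVert_2 \tag{6}$$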

For each target, k (k = 1, …, 4), there are Nk movements, and each movement, i, terminates with a 19-dimensional hand configuration vector hi(k). From each configuration vector, we subtract the expected value at target k, E(h(k)), calculated as the mean of all h-vectors at the moment that the subjects hit the target. The L2-norm of this difference is then taken and summed over all such movements. This is done separately for each target (because of their different expected values) and then averaged across targets. Between-group and within-group differences were detected using repeated-measures ANOVA.
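The reduction of posture variability to a scalar can be sketched as follows. The function name is hypothetical, and the code follows the verbal description literally (norms summed within each target, then averaged across targets); whether the original analysis also normalized by the number of movements is not stated and is not assumed here.

```python
import math

def posture_variability(final_postures_by_target):
    """final_postures_by_target[k] is a list of final hand vectors for target k.
    For each target, sum the L2 distances of the final postures from their
    mean posture; then average those sums across targets."""
    per_target = []
    for postures in final_postures_by_target:
        n = len(postures)
        mean = [sum(col) / n for col in zip(*postures)]
        per_target.append(sum(math.dist(h, mean) for h in postures))
    return sum(per_target) / len(per_target)

# Toy example with 2-dimensional "postures" instead of 19-dimensional ones.
data = [
    [[0.0, 0.0], [2.0, 0.0]],  # spread around the mean [1, 0]: distances 1 + 1
    [[1.0, 1.0], [1.0, 1.0]],  # identical postures: no variability
]
print(posture_variability(data))
```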

The geodesic similarity measure was calculated as the proportion of a subject's distinguishable movements closer to the joint angle geodesic than to the screen geodesic at the end of training (epochs 9 and 10; Eq. 10). The Lilliefors normality test fails to reject the null hypothesis for both the CV and MV subject distributions in Experiment I at p = 0.22 and p = 0.32, respectively. Therefore, a two-sample t test was used and rejects the null hypothesis that the MV and CV subject data were drawn from the same distribution at p < 0.005.

Likewise, in Experiment II, the Lilliefors test fails to reject the null for CV and MV subject distributions at p > 0.5 for both. A two-sample t test rejects the null that MV and CV subject data were drawn from the same distribution at p = 0.046.

For Experiment III data, the Lilliefors test rejected that the MV group was drawn from a normal distribution (p = 0.02). Therefore, the Mann–Whitney–Wilcoxon test was used and rejected that MV and CV groups had the same distribution (p = 0.049). One MV subject was >2 SDs above the mean in geodesic similarity measure, errors, and times, and was therefore excluded from the statistics.

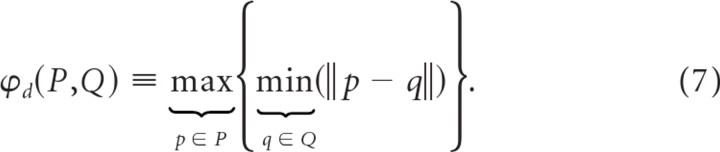

Geodesic similarity measure.

The similarity between two trajectories was measured with the Hausdorff distance between the two sets of sampled x–y endpoint pairs comprising the trajectories. The directed Hausdorff distance from set P to Q is defined as follows:

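$$\varphi(P, Q) = \max_{p \in P}\ \min_{q \in Q}\ \lVert p - q \rVert \tag{7}$$

The Hausdorff distance is then the following:

$$\Phi(P, Q) = \max\{\varphi(P, Q),\ \varphi(Q, P)\} \tag{8}$$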

The Hausdorff distance is zero if and only if the two sets are identical and generally becomes more positive as the sets become more different.
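For finite sets of sampled points, the Hausdorff distance follows directly from its definition. The brute-force sketch below uses hypothetical function names and is meant only to make the definition concrete; it is quadratic in the number of samples, which is acceptable for short trajectories.

```python
import math

def directed_hausdorff(P, Q):
    """Largest distance from any point of P to its nearest point in Q."""
    return max(min(math.dist(p, q) for q in Q) for p in P)

def hausdorff(P, Q):
    """Symmetric Hausdorff distance between two finite point sets."""
    return max(directed_hausdorff(P, Q), directed_hausdorff(Q, P))

P = [(0.0, 0.0), (1.0, 0.0)]
Q = [(0.0, 0.0), (1.0, 0.0), (1.0, 2.0)]
print(directed_hausdorff(P, Q), directed_hausdorff(Q, P), hausdorff(P, Q))
```

The example shows why the symmetric form is needed: every point of P lies in Q, so the directed distance from P to Q is zero even though the sets differ.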

An actual trajectory (T) was determined to be more similar to one of two geodesics: either it was more similar to (1) the straight-line path on the screen between the beginning and ending points of the movement (Gs), or (2) the trajectory of the controlled endpoint on the screen resulting from a geodesic in the configuration space of the simulated linkage (Ga). (Note that the starting and ending points on the torus were taken to be simply the initial and final values on the command plane, [u,w]ᵀ, for the joint angle control groups. However, the direct endpoint control groups did not control joint angles, and so the position in the configuration space was calculated via the angle pairs resulting from the inverse kinematics.) A trajectory was determined to be more similar to Gs than Ga if Φ(T,Gs) < Φ(T,Ga), and more similar to Ga otherwise.

There are some cases in which deciding whether T is more similar to one geodesic than another would be either unsubstantiated or meaningless. Specifically, there are cases in which Gs and Ga are themselves so similar that declaring that T is closer to one than another is meaningless (see Fig. 8, top right). In cases like these, Ga is approximately equivalent to a straight-line anyway; therefore, even if we were to force a similarity decision, it would not enhance the ability of the geodesic similarity metric to discern which types of trajectories a subject was making most frequently.

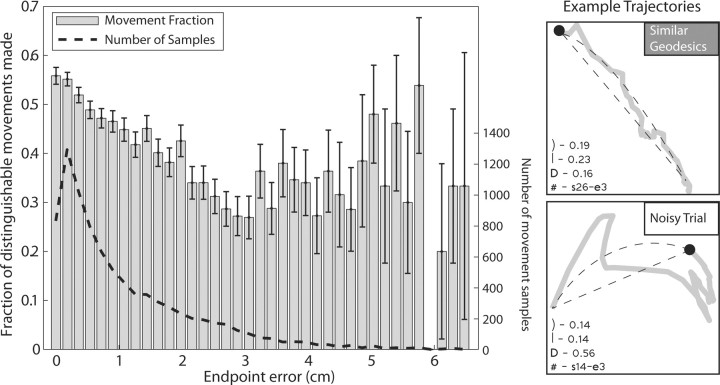

Figure 8.

The fraction of trajectories that conformed to a geodesic path (called “distinguishable” trajectories) increases as a function of decreasing endpoint error in Experiment I (left). The gray histogram illustrates a clear trend showing that, with increasing accuracy, there is increased distinguishability (left axis). Each bar corresponds to the number of distinguishable movements divided by the total number of movements that fell into that endpoint error bin. Therefore, the measure represents the fraction of movements with a given endpoint error that were distinguishable. Beyond ∼3 cm of endpoint error, noise in our estimate of the true fraction of distinguishable movements dominates the trend, which can be seen in the error bars (1 sample SD). Estimates for these bins are noisy because subjects made few movements with very large errors. The number of movements in each error bin is overlaid as the dashed line (right axis) and drops off rapidly for large errors. Because the sample SD for proportions shrinks only as 1/√N, where N is the number of samples, many samples are needed for accurate estimates. Trajectories were not distinguishable for one of two reasons. First, the toroidal geodesic corresponding to the observed trajectory could itself be very close to a straight line, thereby making a judgment on which geodesic the trajectory was most similar to uninformative (top right). Second, if the trajectory was “noisy” (in the sense that it was very nonsmooth), it would not correspond well to either geodesic, making a determination arbitrary (bottom right). Trajectories that were not distinguishable did not impact the geodesic similarity measure (Fig. 7). Formal classification is given in Equation 10.

Another difficulty arises when, rather than T being too similar to Gs and Ga, it is too dissimilar. Due to the difficulty of this learning task, many trajectories were spatially noisy and had such large errors that T resembled neither Gs nor Ga (see Fig. 8, bottom right). Deciding such a movement was similar to either geodesic seemed to be not only arbitrary, but to actually be wrong, a misclassification.

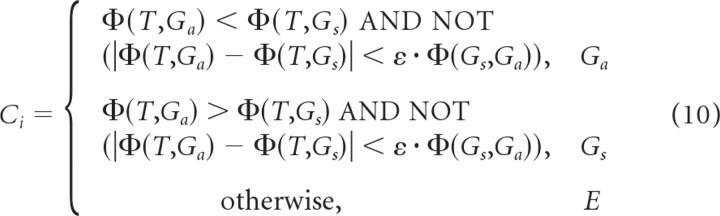

Therefore, we were left with three determinations or “classes”: T was either more similar to the Euclidean straight-path geodesic, Gs, more similar to the joint angle configuration space geodesic, Ga, or of equivalent similarity (or dissimilarity) to both, E (called indistinguishable in the text). We assign a class, Ci, to each trajectory i from the set of our three possible classes, Ci ∈ {Ga, Gs, E}. We designate Ci as class E when

$$\left|\Phi(T, G_s) - \Phi(T, G_a)\right| < \varepsilon\,\Phi(G_s, G_a) \tag{9}$$

If T is similar to one geodesic and different from the other, the left-hand side of Equation 9 will be large, and the likelihood of an E classification being made will be smaller. However, if the geodesics are very similar to each other, the left-hand side of Equation 9 will be small, even if T is identical with Gs or Ga. To account for this, we include the Hausdorff distance between the two geodesics on the right-hand side of Equation 9, which will be smaller when the two geodesics are more similar, and thus the likelihood of an E classification will decrease. ε is a scalar that determines the burden of distinguishability between T and the geodesics; a larger value of ε implies that T must be decisively more similar to one geodesic than the other. Considering our entire dataset of trajectories from Experiment I, the number of Ga or Gs classifications is linear with the magnitude of ε (r² = 0.93), such that when ε = 0, 100% of trajectories are thus classified, and when ε = 1, 0.07% are.

To summarize the entire classification process, we have the following:

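$$C_i = \begin{cases} E & \text{if } \left|\Phi(T_i, G_s) - \Phi(T_i, G_a)\right| < \varepsilon\,\Phi(G_s, G_a) \\ G_s & \text{else if } \Phi(T_i, G_s) < \Phi(T_i, G_a) \\ G_a & \text{otherwise} \end{cases} \tag{10}$$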
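The classification logic can be sketched as below. This is an illustration under stated assumptions rather than the authors' code: the function names are hypothetical, the E criterion is taken to be the comparison described in the text (the difference between the two Hausdorff distances against ε times the distance between the geodesics), and ε is left as a parameter because the value used in the study is not given in this section.

```python
import math

def hausdorff(P, Q):
    """Symmetric Hausdorff distance between two finite point sets."""
    d_pq = max(min(math.dist(p, q) for q in Q) for p in P)
    d_qp = max(min(math.dist(q, p) for p in P) for q in Q)
    return max(d_pq, d_qp)

def classify(T, Gs, Ga, eps):
    """Return 'E' (indistinguishable), 'Gs' (screen geodesic) or
    'Ga' (joint angle geodesic) for trajectory T."""
    d_s, d_a = hausdorff(T, Gs), hausdorff(T, Ga)
    if abs(d_s - d_a) < eps * hausdorff(Gs, Ga):
        return "E"
    return "Gs" if d_s < d_a else "Ga"

def geodesic_similarity(labels):
    """Proportion of distinguishable movements classified as 'Ga'."""
    kept = [c for c in labels if c != "E"]
    return sum(c == "Ga" for c in kept) / len(kept) if kept else float("nan")

Gs = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]   # straight screen path
Ga = [(0.0, 0.0), (1.0, 1.0), (2.0, 0.0)]   # stand-in for a curved path
mid = [(0.0, 0.0), (1.0, 0.5), (2.0, 0.0)]  # halfway between the two
labels = [classify(T, Gs, Ga, eps=0.5) for T in (Gs, Ga, mid)]
print(labels, geodesic_similarity(labels))
```

Since the Hausdorff distance satisfies the triangle inequality, the left-hand side of the E criterion can never exceed Φ(Gs, Ga), which is why almost every trajectory becomes indistinguishable as ε approaches 1.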

Results

Experiment I

Proficiency with explicit task goals

Thirty-two healthy adult subjects without history of neural or motor deficits participated in this learning task. Subjects wore an instrumented glove that recorded 19 joint angles throughout their hand. These signals were continuously mapped into two command variables, which were used as the joint angles of a remotely operated two-link planar kinematic chain—a simplified arm model—displayed to them on a computer screen (Eqs. 1–3; Fig. 1, panels 1, 2) (Danziger et al., 2009).

Subjects were asked to shape their fingers so as to position the endpoint cursor into displayed targets within 800 ms and hold it there for 2 s. The four targets were presented in pseudorandom order such that each of the six paths connecting the targets was traversed heading in each direction two times for a total of 24 movements per epoch (Fig. 1, panel 4, bottom). After each training epoch, subjects were allowed to rest if needed. To prevent reaction time effects, subjects were allowed unlimited planning time before moving. Finally, subjects were divided into two groups, those with complete vision of the entire simulated kinematic linkage (CV, n = 16) and those with minimal vision of the linkage extremity only (MV, n = 16). We stress that both CV and MV groups controlled the identical system and differed only in the visual feedback presented of that system.

The subjects' task was to minimize endpoint error, the distance from the endpoint of the linkage to the center of the target 800 ms after movement onset (Fig. 2, left). The time-to-completion metric (Fig. 2, right) measures the entire movement time: the initial 800 ms phase plus any additional time taken during the corrective movements needed to acquire the target. Subjects were able to occasionally reach and hold position in the target in 800 ms or less (this happened 4.8% of the time in epochs 1 and 2 and 27.4% of the time by the end of training in epochs 9 and 10), but all subjects were required to hold position in the target for 2 s for a hit to register.

Neither endpoint errors nor the time taken to acquire the targets indicates that being exposed to less visual information about the underlying system has an effect on the rate at which subjects improve performance. A repeated-measures ANOVA finds the effect of feedback on error and times to be not significant (p = 0.88, F(1,381) = 0.02, and p = 0.73, F(1,381) = 0.12, respectively) with overall group means differing by very small margins, 4.69 − 4.64 cm = 0.05 cm in error and 10.68 − 10.27 s = 0.41 s in movement times (for MV-CV). Post hoc power analysis based on effect size from a similar study in which between-group differences were observed estimates our power at 99% for N = 32 (Danziger et al., 2009). As expected, the ANOVA confirms that the effect of training is significant for both measures (p < 10⁻⁵; F(1,11) > 50) and that the interaction effect between training and feedback is not significant for errors (p = 0.35; F(1,381) = 1.1) or times (p = 0.93; F(1,381) = 0.45).

After 10 training epochs, visual feedback of the linkage was suppressed for the CV group, and both the CV and MV groups performed the experiment under identical conditions for epochs 11 and 12. Two-sample t tests show that the feedback change between epochs 10 and 11 induced a significant adverse change in endpoint error (p = 0.041) and in time to completion (p = 0.037) in the CV group, whereas no significant difference was seen in the MV group between epochs 10 and 11 (p = 0.651, error, and p = 0.277, time to completion). This suggests that CV subjects integrated the supplemental visual feedback, the entire graphical structure of the simulated linkage, into the development of their learning strategy during training because its absence negatively affected performance.

As a consequence of engaging in a completely novel and very unusual task, subjects struggled to make accurate movements and acquire targets. This effect is particularly pronounced in the early stages of training in which errors averaged 9 cm on an 11 cm workspace and trial times could be over 1 min for a single target. This result is not unexpected, and in fact, it is welcome because it shows that this paradigm succeeds in implementing a system whose geometry is initially foreign to subjects. Even at the end of this learning task, subjects were not landing inside the targets on every attempt, and large on-line corrections during these movements made some of these trajectories nonsmooth.

Effects of visual feedback on endpoint trajectories

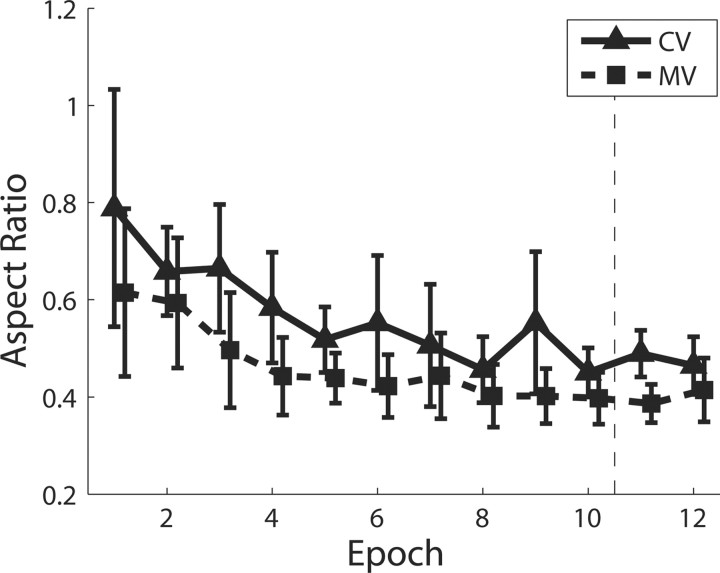

Evidence has been presented that reaching movements and other similar motor tasks are planned in a visually perceived coordinate system (Hogan, 1984; Flash and Hogan, 1985; Wolpert et al., 1995) and that subjects attempt to execute straight trajectories in that visual reference frame (Morasso, 1981; Flanagan and Rao, 1995; Mosier et al., 2005). Figure 3 shows the aspect ratio, a measure of straightness, in both the CV and MV groups. We found that, with practice, both groups executed increasingly straight movements of the cursor. A repeated-measures ANOVA confirms that the decrease in aspect ratio over training is significant for both groups (p < 10⁻⁵; F(1,11) = 6.14). The analysis also revealed a persistent and significant difference of aspect ratio between CV and MV groups (p = 0.005; F(1,381) = 9.11), meaning that MV subjects executed straighter movement trajectories than CV subjects. This finding presents two possibilities: either the MV group was able to learn the mapping more effectively, and thus make straighter movements than the CV group, or the CV group developed a distinct strategy from the MV group, one whose goal was not to produce straight-line trajectories of the endpoint. The strong initial decrease in the aspect ratio in both groups reflects subjects' learning; the task was initially novel and difficult, and as both groups improved they produced smoother trajectories, which this measure registers as straighter movements. The convergence of the CV group to a higher aspect ratio at the end of training and the consistently higher aspect ratio throughout training suggest the possibility of a meaningful difference in trajectory shape between groups.

Figure 3.

Trajectory straightness for Experiment I. Endpoint trajectories in visually perceived space were significantly more rectilinear for the MV group than for the CV group. The aspect ratio is used to measure the Euclidean straightness of the endpoint trajectory and is the maximum lateral deviation divided by the straight line path length joining the start and end of the movement. A zero aspect ratio is a perfectly straight line trajectory of the endpoint. The vertical dashed line indicates where the feedback of the simulated arm was suppressed for the CV group. All error bars are 95% confidence intervals.

We considered the possibility that CV subjects controlled the motion of the simulated linkage so as to follow paths that were consistent with the observed linkage geometry. The intrinsic geometry of a double pendulum has the structure of a two-dimensional torus (Fig. 4). Paths of locally minimum length (i.e., arcs on a torus, circle segments on a sphere, or straight lines on a plane) are termed “geodesics” of their respective surfaces. The shortest line joining two points on the torus defined by the two-joint linkage maps into a curved line on the plane in which the linkage endpoint moves. Therefore, we considered the hypothesis that by observing the motions of the simulated linkage while practicing to make reaching movements, the subjects formed an implicit representation of the geometric structure of the linkage.

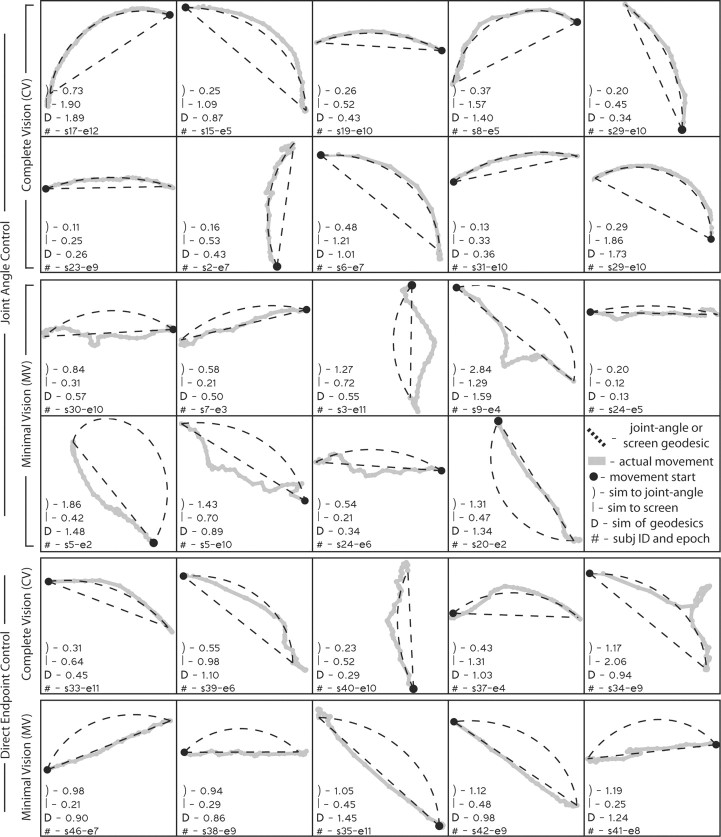

Figure 4.

The endpoint of a two-joint planar linkage is completely represented by a point on the surface of a torus that spans the joint angle space of the linkage, embedded isometrically in a three-dimensional Euclidean space. Artificial endpoint trajectories i–vi (right) are mapped onto the configuration space of the two-link revolute-joint kinematic chain (left). The light gray mesh forms orthogonal geodesics (minimum pathlength excursions) spanning the torus. A shows a trajectory corresponding to a fixed “shoulder” angle and a linear excursion of the “elbow” angle (i), an outstretched flexing movement, and a trajectory of fixed “elbow” angle with a linear excursion of the “shoulder” angle (ii). B shows iii and iv, two trajectories with the same start and end location, iii with a zero curvature movement of the linkage endpoint and iv with a linear excursion of both shoulder and elbow angle. It is shown that linear joint angle excursions (i, ii, iv) follow geodesics on the torus and curved endpoint trajectories, while straight endpoint trajectories (iii) do not generally follow geodesics on the torus. C shows a case in which a zero curvature movement of the endpoint (v) corresponds to a geodesic on the torus (vi). This is true for all straight-line endpoint movements projecting radially outward from the fixed base of the linkage.

To test this hypothesis, we compared the endpoint trajectories during the initial 800 ms movement phase to both a straight line and the endpoint trajectory that would have resulted if the movement followed a geodesic path in the joint angle space of the simulated kinematic linkage. Figure 5, top four rows, contains selected examples from the CV and MV subject groups that illustrate the behavioral differences we want to highlight. CV subjects followed paths of the endpoint that correspond to minimum length excursions of the simulated linkage joint angles, namely joint space geodesics, and MV subjects attempted to move the controlled endpoint along straight lines on the screen more often than CV subjects.
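The comparison between a screen-space straight line and a joint-space geodesic can be illustrated with a small sketch. It assumes a flat metric on the joint-angle torus, so that a geodesic is a linear excursion of both joint angles taken the short way around, and it assumes equal link lengths; the link lengths, function names, and example angles are hypothetical and are not given in this section.

```python
import math

L1, L2 = 1.0, 1.0  # hypothetical, equal link lengths (not specified here)

def forward_kinematics(q1, q2):
    """Endpoint of a planar two-link chain; q2 is measured relative to link 1."""
    x = L1 * math.cos(q1) + L2 * math.cos(q1 + q2)
    y = L1 * math.sin(q1) + L2 * math.sin(q1 + q2)
    return x, y

def wrap(delta):
    """Map an angle difference into [-pi, pi) so the short way around is used."""
    return (delta + math.pi) % (2.0 * math.pi) - math.pi

def joint_geodesic(q_start, q_end, n=50):
    """Endpoint path produced by interpolating both joint angles linearly."""
    d1 = wrap(q_end[0] - q_start[0])
    d2 = wrap(q_end[1] - q_start[1])
    return [forward_kinematics(q_start[0] + d1 * k / (n - 1),
                               q_start[1] + d2 * k / (n - 1))
            for k in range(n)]

def max_lateral_deviation(path):
    """Largest perpendicular distance of the path from its start-end chord."""
    (x0, y0), (x1, y1) = path[0], path[-1]
    dx, dy = x1 - x0, y1 - y0
    chord = math.hypot(dx, dy)
    return max(abs(dx * (y - y0) - dy * (x - x0)) / chord for x, y in path)

# A generic joint-space geodesic maps to a curved endpoint path ...
generic = joint_geodesic((0.2, 1.0), (1.0, 2.0))
# ... while one that keeps q1 + q2/2 constant moves radially from the base.
radial = joint_geodesic((0.5, 2.0), (1.0, 1.0))
print(max_lateral_deviation(generic) > 0.1)  # True
print(max_lateral_deviation(radial) < 1e-9)  # True
```

With equal link lengths the endpoint direction is q1 + q2/2, which is why the second example stays on a radial straight line, in keeping with the special case illustrated in Figure 4C.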

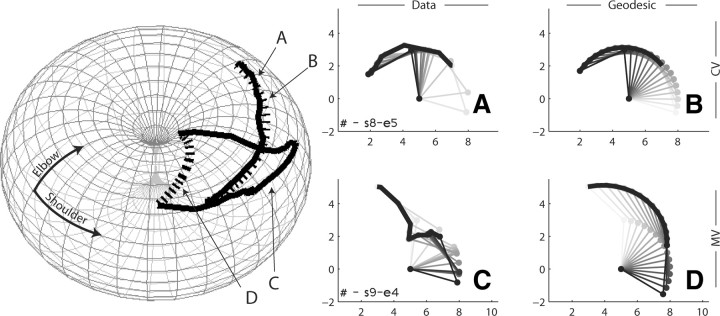

Figure 5.

Endpoint trajectories taken from all subject groups over various epochs illustrate the different control strategies used by the CV and MV groups. Each panel contains a single solid gray trajectory (T) beginning from the black circle (the previous target) and truncated after 800 ms. The curved dashed trace is the trajectory that sweeps out a geodesic in the configuration space of the simulated kinematic linkage, or the joint angle geodesic (Ga), associated with the starting and ending points of T. The straight dashed trace is the geodesic of the screen (Gs) associated with T. The “)” value indicates the similarity between T and Ga. The “|” value indicates similarity between T and Gs. The “D” value indicates the similarity between Ga and Gs. The similarity measure is the Hausdorff distance, and smaller values correspond to more similar trajectories. The “#” gives the subject identifier followed by the epoch number the movement was taken from. Trials are segregated by rows that correspond to specific experimental conditions, visual feedback, and control type. Trajectory sizes were scaled for clarity.
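For readers unfamiliar with the similarity measure named in this caption, the following is a minimal sketch of a symmetric Hausdorff distance between two sampled curves. It is not the paper's Eq. 10, which is not reproduced in this section; the function names and sample curves are illustrative, and a distance computed on samples only approximates the distance between continuous curves.

```python
import math

def directed_hausdorff(a, b):
    """Largest distance from a point of `a` to its nearest point in `b`."""
    return max(min(math.dist(p, q) for q in b) for p in a)

def hausdorff(a, b):
    """Symmetric Hausdorff distance between two sampled curves."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))

# Two made-up parallel segments, 0.5 units apart.
line_a = [(k / 10, 0.0) for k in range(11)]
line_b = [(k / 10, 0.5) for k in range(11)]
print(hausdorff(line_a, line_b))  # 0.5
```

Smaller values mean that every point on each curve has a close neighbor on the other curve, which is the sense in which smaller values correspond to more similar trajectories.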

Two of these movements are shown over the joint angle torus of the simulated kinematic linkage (Fig. 6) as illustrative examples. It is apparent that straight-line movements of the endpoint on the screen (Fig. 6C), executed by MV subjects more often than CV subjects, result in large deviations from geodesics on the torus (Fig. 6D). It can also be seen that typical curved CV movements (Fig. 6A) correspond well to geodesics over the torus (Fig. 6B).

Figure 6.

Trajectory examples in the configuration space. The configuration space of a two-link revolute-joint kinematic chain forms a torus (left), a two-dimensional curved manifold embedded in the Euclidean R3. The light gray mesh forms orthogonal geodesics (minimum pathlength excursions) spanning the torus. The panels on the right contain trajectories of the endpoint of the kinematic chain, marked by the thick black traces. The time course of the linkage is parameterized by shading, where lighter shading is earlier in the movement. The left column contains trajectories taken from actual data (A from a CV subject and C from a MV subject), and the right column depicts the corresponding linkage movement resulting from ideal geodesic trajectories in the simulated linkage configuration space (geodesic B corresponds to movement A and geodesic D corresponds to movement C). The trajectories have been mapped onto the torus and labeled; on the torus, actual trajectories are rendered in solid black, and geodesics joining the start and end of the actual trajectories are rendered in dashed lines. It is shown that the linear joint angle excursions executed by CV subjects followed geodesics on the torus and curved endpoint trajectories, while the quasi-straight endpoint trajectories of MV subjects diverged considerably from geodesics on the torus. Movements A and C are reproduced from Figure 5 and can be located by their identification number, marked with #. Units are in 2.82 cm per screen unit or radians where appropriate.

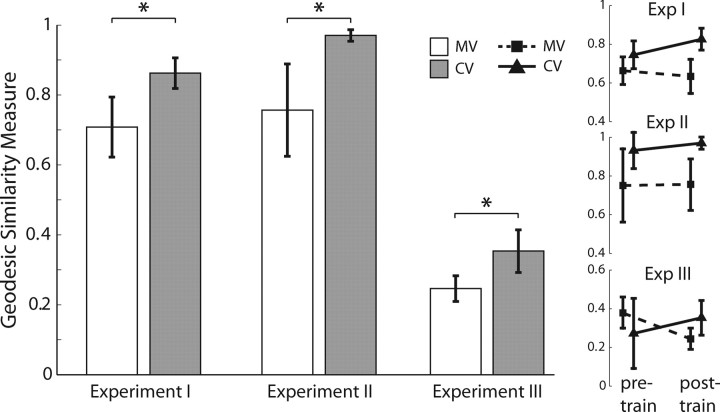

Figure 7, leftmost bars, confirms that, at the end of training, CV and MV groups created categorically different types of endpoint trajectories. The geodesic similarity measure represents the ratio of distinguishable trajectories made by subjects that are “more similar” to geodesics over the torus than the straight-line paths (Euclidean geodesics of the planar screen). A higher value indicates more trajectories corresponding to the torus. CV subjects executed significantly more endpoint trajectories that were more similar to geodesics over the torus than to straight lines on the monitor (p < 0.001).

Figure 7.

Average subject geodesic similarity index for Experiments I, II, and III (left). The geodesic similarity index is defined as the proportion of distinguishable endpoint trajectories closer to geodesics in the simulated linkage configuration space than to straight lines on the screen (the Euclidean geodesic). In Experiment I, subjects with feedback of the linkage execute more trajectories along geodesics of the torus (μ = 0.86 and se = 0.08 for CV, and μ = 0.70 and se = 0.16 for MV). Experiment II shows an increase in this effect size and a large reduction in CV between-subject variability due to the specialized target set (μ = 0.97 and se = 0.03 for CV, and μ = 0.75 and se = 0.24 for MV). In Experiment III, the effect of the visual perception of geometry persists even when the controlled system is operated directly as a point moving on a Euclidean plane (μ = 0.35 and se = 0.11 for CV, and μ = 0.24 and se = 0.06 for MV). Data shown are from the end of training (epochs 9 and 10), using ε = 0.5, and similarity is determined by the Hausdorff distance (Eq. 10). The effects of visually perceived geometry on trajectory formation persist across all three experiments. The pretraining (epochs 1 and 2) and posttraining (epochs 9 and 10) trends for Experiments I, II, and III (right) show the evolution of the geodesic similarity measure through training; x-axis offsets are for clarity only. All error bars are 95% confidence intervals, and asterisks indicate statistical comparisons with p < 0.05.

Because of the difficulty of the task (Fig. 2), subjects in both groups were often unable to precisely control the endpoint. This resulted in movements that were noisy (or included large corrections) and so dissimilar to either geodesic that it rendered a choice between them arbitrary (Fig. 8, right bottom). Additionally, the geodesic pairs corresponding to some movements made by subjects were so close to one another that a distinction between the two was meaningless (Fig. 8, right top). Therefore, trajectories that were equally similar or dissimilar to the geodesics, ∼50% when using ε = 0.5, did not impact the geodesic similarity score (Eqs. 9, 10; see Materials and Methods). These trajectories are termed indistinguishable movements. It should be noted that ε was chosen heuristically by matching as closely as possible the algorithm results to the similarity judgments made by the authors (blinded to the feedback groups). However, because of the large sample size in Experiment I, statistical tests retained significance at the α = 0.05 level for values of ε as low as 0.02 (classifying only 2% of movements as indistinguishable).
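The role of ε can be made concrete with a rough sketch. Eqs. 9 and 10 are not reproduced in this section, so the classification rule below is an assumption made purely for illustration: a movement is treated as indistinguishable when its distances to the two geodesics differ by no more than ε times the distance between the geodesics themselves. All names and numbers are hypothetical.

```python
def classify(d_a, d_s, d_geo, eps=0.5):
    """Label one trajectory from three Hausdorff distances.

    d_a: trajectory vs. joint-angle geodesic; d_s: trajectory vs. straight
    line; d_geo: distance between the two geodesics. The tolerance rule is
    an assumed stand-in, not the paper's Eq. 9.
    """
    if abs(d_a - d_s) <= eps * d_geo:
        return "indistinguishable"
    return "torus" if d_a < d_s else "straight"

def similarity_index(trials, eps=0.5):
    """Proportion of distinguishable trajectories that are closer to the torus geodesic."""
    labels = [classify(d_a, d_s, d_geo, eps) for d_a, d_s, d_geo in trials]
    n_torus = labels.count("torus")
    n_straight = labels.count("straight")
    if n_torus + n_straight == 0:
        return None  # no distinguishable movements
    return n_torus / (n_torus + n_straight)

# Made-up (d_a, d_s, d_geo) triples for four movements.
trials = [(0.1, 0.6, 0.6), (0.5, 0.1, 0.5), (0.3, 0.35, 0.4), (0.2, 0.7, 0.7)]
index = similarity_index(trials)
print(round(index, 3))  # 0.667
```

Under this assumed rule, lowering ε shrinks the indistinguishable class, consistent with the statement that ε = 0.02 classifies only a small fraction of movements as indistinguishable; indistinguishable movements are simply left out of the ratio.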

Figure 8 demonstrates that the fraction of movements made by subjects that were indistinguishable as either geodesic increased with error. In other words, as subjects learned the task and were able to more accurately move the endpoint to the location they were aiming for, they more often moved along geodesic trajectories. This suggests that the reason many trajectories are not distinguishable as being similar to their corresponding geodesic is that subjects find the task difficult and produce errors, not that subjects deliberately move along nongeodesic paths. Thus, the fact that noisy movements do not impact the geodesic similarity measure reflects the fact that they represent, in general, either poor movement execution or simply geodesics that are very similar to each other. The relationship between error and geodesic distinguishability can be clearly seen in the bar plot as the distinguishability decreases steadily from 0 to 3 cm of error. There were too few movements made (dashed line shows the number of samples in each bar; right axis) with >3 cm of error to have a good approximation of the true fraction of distinguishable movements, and the trend is lost in the noise. Unlike traditional upper-arm reaching experiments that produce smooth and well controlled movements, the deliberately unfamiliar and challenging control problem that subjects faced here caused these aberrant trajectories.

The difference in aspect ratio and the distribution of trajectory shapes appears to reflect a relevant distinction in motor behavior between CV and MV groups, one that became more pronounced as training progressed. At the start of training, the geodesic similarity scores were indistinguishable for CV and MV groups (p = 0.23), but by the final training epochs, the group behaviors were distinct (p < 0.001); the trend is shown in Figure 7, right top panel. A matched-pairs t test comparing the difference between initial and final epochs also finds differences in the group behavior (p = 0.018). This suggests that the difference in behavior is a function of motor learning. It is evidence against the hypothesis that both groups were attempting to produce straight lines of the endpoint and that the MV group was simply more adept at this. Instead, it favors the hypothesis that the groups are implementing distinct strategies.

Hand posture variability

The 19 dimensions of control available in the subjects' hand operated the endpoint on the screen, which was described completely by only two variables (x and y coordinates). This dimensionality reduction defines a null space of 19 − 2 = 17 dimensions, within which different hand postures correspond to the same position of the controlled cursor. This gap in dimensionality implies that there are potentially infinitely many hand postures that map the endpoint directly onto each target. Since all signals are digitally sampled, the number of equivalent postures is finite but may be quite large. Yet, from this vast set of postures, limited only by the physiology of the hand, subjects chose only a small subset.
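The dimension count follows from the rank-nullity theorem, which a short sketch can check numerically. The example assumes a linear hand-to-screen map represented by a random 2 × 19 matrix; the matrix, the seed, and the helper `rank` are hypothetical and stand in for whatever calibration was actually used.

```python
import random

def rank(matrix, tol=1e-9):
    """Rank of a small dense matrix by Gaussian elimination with row pivoting."""
    rows = [list(r) for r in matrix]
    n_rows, n_cols = len(rows), len(rows[0])
    r = 0
    for c in range(n_cols):
        if r == n_rows:
            break
        pivot = max(range(r, n_rows), key=lambda i: abs(rows[i][c]))
        if abs(rows[pivot][c]) < tol:
            continue  # no usable pivot in this column
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, n_rows):
            f = rows[i][c] / rows[r][c]
            rows[i] = [a - f * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

random.seed(0)
# Hypothetical linear map from 19 glove signals to 2 screen coordinates.
A = [[random.gauss(0.0, 1.0) for _ in range(19)] for _ in range(2)]
null_dim = 19 - rank(A)
print(null_dim)  # 17
```

Any posture change that lies in this 17-dimensional null space leaves the cursor where it is, which is why many distinct hand postures can reach the same target.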

Our results indicate that variability in subjects' chosen postures (those used to reach targets) decreased as training proceeded (p < 10⁻⁵) for both experimental groups (Fig. 9). The data show a strong trend suggesting that the MV group performed this task with lower final posture variability than the CV group: the CV group had higher average variability at every epoch, and the Wilcoxon signed-rank test rejects the null hypothesis at p = 0.007. This effect was, however, not large enough to reach significance in ANOVA tests and did not appear to influence the overall proficiency that subjects attained during the task (Fig. 2).

Figure 9.

Comparison of terminal hand posture variability. MV subjects exhibited slightly but significantly less hand posture variability when acquiring targets than CV subjects (Eq. 4). The vertical dashed line indicates where the feedback of the simulated arm was suppressed for the CV group. The (significant) increase in variability in the CV group after the feedback switch indicates that continuous visual feedback of the system kinematics had an influence on control strategy.

The variability in the postures used to acquire targets does not correspond to task proficiency; it is only a measure of consistency in the chosen solutions. However, the result of hand posture variability corresponding to any given cursor location is relevant to the construction of an effective inverse model. From the subject's point of view, developing a map of the motor space corresponds to creating a well defined function, which transforms each position on the screen into a corresponding configuration of the hand. In effect, this amounts to finding an inverse of the hand-to-screen mapping by regularizing an ill posed problem. The data imply that subjects that received the most parsimonious feedback were slightly better in forming a function-like representation of the task. The greater variability in CV subjects' hand postures associated with each 2D target suggests a less well formed inverse map from target space to control space. This observation further supports the hypothesis that the different types of visual feedback between groups caused the development of distinct motor learning and control strategies.

Experiment II

The results of Experiment I provided evidence for the hypothesis that subjects learned to execute trajectories in accordance with the geometrical properties of the controlled system shown to them. CV subjects, viewing the entire linkage, demonstrated a propensity to move along minimum-length paths in the linkage joint angle space. MV subjects, viewing only a circular cursor, moved along straight-line paths more often than CV subjects, corresponding to geodesics on a Euclidean plane (Fig. 7).

If the hypothesis is true, then we would predict a larger difference in the geodesic similarity measure between CV and MV groups if the difference between Euclidean and toroidal geodesics connecting the targets were larger. Experiment II tested this by replicating Experiment I with a new target set in which geodesics joining targets had greater (Hausdorff) distances between them (Fig. 1, panel 5). For reference, the Euclidean (straight solid lines) and toroidal (dashed curves) geodesics joining targets are shown in Figure 1. As measured by the Hausdorff distance, geodesics joining targets from Experiment I had a mean distance of 0.69 and geodesics from Experiment II had a mean distance of 1.37.

The results of Experiment II offered further support of the hypothesis. Ten subjects participated in Experiment II, and Figure 7 displays the mean geodesic similarity measure for the CV (n = 5) and MV (n = 5) groups. The Student t test shows a significant difference between groups (p = 0.046). This difference (0.21) was greater than the difference between the CV and MV groups of Experiment I (0.15). Strikingly, every one of the CV subjects in Experiment II had a geodesic similarity measure of >0.92. This across-subject consistency resulted in a very low SD (0.03) and a very narrow confidence interval (Fig. 7), which is remarkable for such a difficult learning task and relatively small sample size compared with Experiment I. Statistical tests retained significance at the α = 0.05 level for values of ε as low as 0.36, a narrower range than Experiment I likely due to a smaller sample size.

An ANOVA of data collected in Experiment II confirmed the performance trends seen in Experiment I. Differences in endpoint errors (p = 0.41; Fig. 10) and trial times (p = 0.10) were not statistically significant for CV and MV groups, and the effects of training on errors and times were significant (p < 10⁻⁵). Power estimates are 73% for N = 10.

Experiment III

All subjects in Experiments I and II controlled the simulated linkage (Fig. 1) whether they were shown the full mechanism (CV) or only the linkage endpoint (MV). The CV and MV groups displayed differing control strategies that led to different trajectory shapes. Interestingly, both groups executed a preponderance of their trajectories along straight lines in the joint angle space of the linkage defined by the geometry of that linkage, which represent minimum-length paths from start to end of the movement.

In Experiment III, hand postures were mapped directly (linearly) into the Cartesian coordinates of the controlled cursor location (Fig. 1, panel 6). The MV (n = 8) group was again given visual feedback of the cursor moving on the monitor. The CV (n = 8) group was given visual feedback of the simulated linkage used in Experiments I and II. In this experiment, the joint angles of the displayed mechanism were derived by the standard inverse kinematics transformation of the cursor location (Eq. 4) and were completely irrelevant to the hand-to-screen map. This created a dual situation to Experiments I and II, in which visual feedback conditions across groups were preserved, but the control variables were changed.
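The inverse kinematics step mentioned here can be sketched for a planar two-link chain. This is the textbook closed-form solution, not necessarily the exact form of the equation the paper cites; the link lengths, the function names, and the choice of elbow branch (q2 in [0, π]) are assumptions.

```python
import math

L1, L2 = 1.0, 1.0  # hypothetical link lengths

def forward_kinematics(q1, q2):
    """Endpoint of a planar two-link chain; q2 is measured relative to link 1."""
    x = L1 * math.cos(q1) + L2 * math.cos(q1 + q2)
    y = L1 * math.sin(q1) + L2 * math.sin(q1 + q2)
    return x, y

def inverse_kinematics(x, y):
    """Joint angles (q1, q2) that place the endpoint at (x, y).

    Assumption: of the two mirror-image solutions, the one with q2 in
    [0, pi] is returned.
    """
    c2 = (x * x + y * y - L1 * L1 - L2 * L2) / (2.0 * L1 * L2)
    if not -1.0 <= c2 <= 1.0:
        raise ValueError("target is outside the reachable workspace")
    q2 = math.acos(c2)
    q1 = math.atan2(y, x) - math.atan2(L2 * math.sin(q2), L1 + L2 * math.cos(q2))
    return q1, q2

# Round trip: a cursor position generated from known angles recovers them.
x, y = forward_kinematics(0.3, 1.2)
q1, q2 = inverse_kinematics(x, y)
print(abs(q1 - 0.3) < 1e-9 and abs(q2 - 1.2) < 1e-9)  # True
```

In this arrangement the displayed linkage is computed from the cursor rather than the other way around, so its joint angles carry no extra control information, as the paragraph above notes.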

Perhaps the most notable effect in Figure 7 is the drop in geodesic similarity of Experiment III subjects compared with Experiments I and II for both MV and CV groups. Subjects exposed to the direct map from hand to cursor were naturally producing straighter trajectories from the very beginning of the experiment, before learning could take place. To understand this effect, one should consider that subjects tended to produce finger-joint motions in a synchronous manner. This is consistent with the concept that, in the absence of other task constraints, the motor control system follows simple patterns in joint coordinates: when subjects were first exposed to the task of moving the cursor from point A to point B, they tended to perform synchronous transitions between a starting and an ending posture. These transitions produced quasi-rectilinear motions in the space of glove signals. In fact, the data glove signals represent a good linear approximation of the finger joint angles (Kessler et al., 1995) and the glove-to-cursor map in Experiment III was linear by construction. Therefore, under constraints of linearity, synchronous interpolated motions of the finger joints would map naturally onto quasi-straight lines on the screen. By the same argument, synchronous joint-interpolated motions in Experiments I and II would have a tendency to match the geodesic motions in joint space. We believe that this bias is primarily responsible for the gap displayed in Figure 7 between Experiment III and Experiments I and II. The purpose of Experiment III was to consider and possibly rule out the hypothesis that the trend toward joint-space geodesics observed in Experiments I and II was a consequence of this bias.

We found that subjects in Experiment III tended to increase their similarity to the geodesics of the respective visual geometries (Fig. 7): subjects who saw the full two-joint linkage ended up producing movements that were more similar to the joint angle geodesics than subjects who saw only the moving endpoint. Importantly, the learning trends for the two groups progressed in opposite directions. With practice, MV movements became more rectilinear (less similar to joint-geodesics) and CV movements became more curvilinear (Fig. 7, bottom right panel). These results, illustrated also by trajectory examples (Fig. 5, bottom two panels), confirmed and strengthened the conclusions drawn from the joint angle control groups.

Both endpoint errors (Fig. 10) and movement times were statistically indistinguishable between MV and CV groups in Experiment III (p = 0.20 and p = 0.40, respectively). Training effects were significant for both measures (p < 10⁻⁵), and interaction effects between training and feedback were not significant for errors (p = 0.88) or times (p = 0.92). Power estimates are 93% for N = 16.

Discussion

In each experiment, subjects guided a controlled endpoint into targets (Fig. 1) and were either given visual feedback of a two-joint planar linkage (CV) or a free-moving cursor (MV), regardless of the object they actually controlled. This design was used to tightly control the information subjects received about the controlled objects and to eliminate preexisting influences of earlier practice present in natural reaching tasks. Neither feedback condition influenced learning rate, and both groups became commensurately skilled in the performance of the task (Fig. 2). Yet in all experiments and in all training epochs, the MV group learned to move the controlled endpoint along more rectilinear paths than the CV group. Illustrative examples are shown in Figure 5 and the pattern is grossly quantified by the aspect ratio (Fig. 3). These data support the hypothesis that subjects in these groups executed categorically different types of trajectories.

The observed differences in trajectory shapes can be explained by the geometries presented to subjects during the task (cursor or linkage), suggesting that the information was used in the development of subjects' control policies. Rectilinear endpoint movements are consistent with the isotropy of space surrounding a circular point-like cursor moving on a Euclidean plane. As pointed out by Flanagan and Rao (1995), straight movements in joint angle space do not generally correspond to straight lines over the monitor. However, while the concept of a “straight line in joint space” appears occasionally in the motor control literature, it is mathematically ambiguous. This concept is based on drawing a Cartesian plane with the two axes representing the two joint angles. Angles, however, do not map to straight lines but to circles, since angles differing by 2π are congruent. Therefore, while it is possible to place pairs of real numbers over pairs of Cartesian axes, this is not always geometrically meaningful. And it is not meaningful, in particular, for the two joint angles of a double pendulum, which are better represented over the topology of a torus (Fig. 4). This work demonstrates that the nervous system, through practice, is capable of capturing this geometrical structure based on the information contained in the visual feedback.

Three additional observations point to MV and CV subjects using different control strategies. (1) Higher performance error among CV subjects between epochs 10 and 11, when linkage feedback was suppressed, indicates CV subjects incorporated that information into their movement strategy. This did not have to be the case because feedback of the simulated linkage is supplementary in the sense that only feedback of the endpoint is strictly required to complete the explicit task goals. (2) CV subjects showed greater finger variability, suggesting a difference in their representation of the map. We ruled out this additional variability as a symptom of incomplete learning because all performance metrics between groups were equivalent (Figs. 2, 10). (3) In Experiment II, a target set was chosen that emphasized the trajectory differences we would expect to see between straight paths in screen space and straight paths in joint angle space if subjects were incorporating the vision of geometry into their movement strategies. The results confirmed that CV subjects in Experiment II executed more straight trajectories over the torus than those from Experiment I, and the between-subject variability for the CV group dropped markedly.

The effects of visually perceived geometry on trajectory formation persisted across all three variants of the experiment. This result appears inconsistent with the hypothesis that coordinated movements with multiple degrees of freedom tend always to occur in straight lines of the controlled endpoint (Morasso, 1981; Flash and Hogan, 1985; Flanagan and Rao, 1995) and suggests that the outcome of past experiments may have been influenced by the particular form of visual feedback offered to the subjects. Namely, when presented with a point on a Euclidean plane, subjects move it in straight paths, but when presented with feedback of more complex kinematics, subjects favor geodesic paths over its configuration space.

How can we reconcile our results with the observation that trajectories are straight during normal reaching movements of the hand? First, one needs to stress that hand trajectories are, in fact, not always straight. Furthermore, experiments that reported curved trajectories during hand movements typically studied large reaches without a fixed robot (Atkeson and Hollerbach, 1985; Boessenkool et al., 1998). The overwhelming majority of reaching studies reporting straight reaches examined movement in the proximal transverse plane toward and away from the shoulder. In that workspace, regardless of feedback, straight paths in joint angle space correspond to straight lines of the hand (Fig. 4C), thereby masking differences between control strategies. Finally, even when subjects are allowed vision of their arm during reaching, the arm occupies only a small portion of their visual field, and it has been shown that gaze leads the hand during reaching (Johansson et al., 2001), further reducing attention to the arm. In contrast, in our study, the small size of the monitor allowed subjects with complete vision to maintain their attention focused on the entire moving mechanism.

There may be a concern that subjects memorized hand postures rather than improving their control through understanding the finger-to-endpoint map. Had subjects memorized postures we would expect trajectory differences between experiments and not between CV and MV groups within any one experiment (Fig. 7) because trajectories would be a by-product of the transition from one hand posture to the next. Furthermore, in similar protocols, subjects were able to easily acquire unpracticed targets (generalization), indicating the absence of memorization tactics. For example, subjects training with a linkage, similar to the Experiment I MV group, showed complete generalization (Danziger et al., 2009), subjects training with direct endpoint control, similar to the Experiment III MV group, showed generalization in both extrapolations and interpolations (Mosier et al., 2005), and subjects acquiring targets corresponding to sign language characters showed clear generalization in continuous-feedback conditions (Liu and Scheidt, 2008).

Our results suggest that theoretical frameworks that treat motor behaviors as coordination patterns emerging from maximizing the final accuracy of movements offer an incomplete view. For example, in some formulations of optimal feedback control, in which the cost function might be jerk minimization (Flash and Hogan, 1985), control of variability (Todorov and Jordan, 2002), or minimization of effort and variability combinations (O'Sullivan et al., 2009), optimizing these functions under the constraint of task error produces the required trajectory without having to specify it explicitly. In this study, trajectory differences cannot be explained by error feedback because all groups had the same task-relevant feedback and reached the same performance levels. Neither is it possible to explain the difference by appealing to effort or other dynamical costs because the controlled objects had no dynamics. The only dynamical component in our framework is the musculoskeletal dynamics of the hand, which we assume that all subjects were expert at controlling. The novel map from finger kinematics to object kinematics (cursor or two-link system) is strictly of the static/algebraic type. Appealing to the slight differences in variability between groups is also insufficient because that variability did not impact task performance as we would expect in some optimal control models. Folding these results into an optimal control theory would require the assumption that trajectories could be planned explicitly or that some additional optimization criterion, such as smoothness, be considered.

Finally, our results extend the ongoing investigation into neural control of reaching. It is known that trajectories are curved during both normal (Arce et al., 2009) and novel (Mosier et al., 2005) reaching tasks performed without continuous visual feedback of the endpoint. The curvature observed in these studies could have resulted from the absence of an error feedback signal rather than from geometrical information. We unambiguously show that perceived geometry is a factor by maintaining continuous feedback throughout all trials. Recently, a three-dimensional dynamic arm model has been used to explain the curvatures noted in normal pointing based on geodesics of kinetic energy (Biess et al., 2007). The model of Biess and coworkers considers the metric structure of kinetic energy as a basis to explain movement trajectories. The concept is simple and elegant: kinetic energy is a quadratic form in the space of velocities, in which limb inertia plays a role analogous to a metric tensor. Following this approach, minimization of kinetic energy is a way to derive optimal trajectories. In our case, the controlled system, either the cursor or the simulated linkage, has no inertia and there is no kinetic energy cost associated with its motion. Nevertheless, the geometrical structure associated with the simulated linkage (the torus) provides us with a framework to compute kinetic energy. Therefore, we speculate that the observed ability of the neural control system to extract the geometry of a controlled object may be the basis for the optimization of quantities such as kinetic energy, whenever the controlled object has an effective mass. Yet it is no straightforward task to equate the control of a moving endpoint with the control processes in natural reaching, in which dynamics certainly play a role. Ultimately, the manifestation of the effects of geometry on learning and adaptation will be different in cursor, hand gesticulation, reaching, and multijoint movements (Liu et al., 2011). We expect that many of the same high-level controllers that plan hand movements through space will also govern movements of other controlled objects, such as the cursor in our task or the devices operated by a brain–computer interface.

Footnotes

This work was supported by NINDS Grant 1R01 NS03581-01A2, the Neilsen Foundation, and the Davee Foundation. We wish to thank Jim Patton, Lee Miller, and Eric Perreault for many insightful comments on this work.

The authors declare no competing financial interests.

References

- Abend W, Bizzi E, Morasso P. Human arm trajectory formation. Brain. 1982;105:331–348. doi: 10.1093/brain/105.2.331.

- Arce F, Novick I, Shahar M, Link Y, Ghez C, Vaadia E. Differences in context and feedback result in different trajectories and adaptation strategies in reaching. PLoS One. 2009;4:e4214. doi: 10.1371/journal.pone.0004214.

- Atkeson CG, Hollerbach JM. Kinematic features of unrestrained vertical arm movements. J Neurosci. 1985;5:2318–2330. doi: 10.1523/JNEUROSCI.05-09-02318.1985.

- Biess A, Liebermann DG, Flash T. A computational model for redundant human three-dimensional pointing movements: integration of independent spatial and temporal motor plans simplifies movement dynamics. J Neurosci. 2007;27:13045–13064. doi: 10.1523/JNEUROSCI.4334-06.2007.

- Boessenkool JJ, Nijhof EJ, Erkelens CJ. A comparison of curvatures of left and right hand movements in a simple pointing task. Exp Brain Res. 1998;120:369–376. doi: 10.1007/s002210050410.

- Danziger Z, Fishbach A, Mussa-Ivaldi FA. Learning algorithms for human-machine interfaces. IEEE Trans Biomed Eng. 2009;56:1502–1511. doi: 10.1109/TBME.2009.2013822.

- Dingwell JB, Mah CD, Mussa-Ivaldi FA. Experimentally confirmed mathematical model for human control of a non-rigid object. J Neurophysiol. 2004;91:1158–1170. doi: 10.1152/jn.00704.2003.

- Flanagan JR, Rao AK. Trajectory adaptation to a nonlinear visuomotor transformation: evidence of motion planning in visually perceived space. J Neurophysiol. 1995;74:2174–2178. doi: 10.1152/jn.1995.74.5.2174.

- Flash T, Hogan N. The coordination of arm movements: an experimentally confirmed mathematical model. J Neurosci. 1985;5:1688–1703. doi: 10.1523/JNEUROSCI.05-07-01688.1985.

- Hogan N. An organizing principle for a class of voluntary movements. J Neurosci. 1984;4:2745–2754. doi: 10.1523/JNEUROSCI.04-11-02745.1984.

- Johansson RS, Westling G, Bäckström A, Flanagan JR. Eye–hand coordination in object manipulation. J Neurosci. 2001;21:6917–6932. doi: 10.1523/JNEUROSCI.21-17-06917.2001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kessler GD, Hodges LF, Walker N. Evaluation of the CyberGlove as a whole hand input device. ACM Trans Comput Hum Interact. 1995;2:20. [Google Scholar]

- Lackner JR, Dizio P. Rapid adaptation to Coriolis force perturbations of arm trajectory. J Neurophysiol. 1994;72:299–313. doi: 10.1152/jn.1994.72.1.299. [DOI] [PubMed] [Google Scholar]

- Liu X, Scheidt RA. Contributions of online visual feedback to the learning and generalization of novel finger coordination patterns. J Neurophysiol. 2008;99:2546–2557. doi: 10.1152/jn.01044.2007. [DOI] [PubMed] [Google Scholar]

- Liu X, Mosier KM, Mussa-Ivaldi FA, Casadio M, Scheidt RA. Reorganization of finger coordination patterns during adaptation to rotation and scaling of a newly learned sensorimotor transformation. J Neurophysiol. 2011;105:454–473. doi: 10.1152/jn.00247.2010. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mazzoni P, Krakauer JW. An implicit plan overrides an explicit strategy during visuomotor adaptation. J Neurosci. 2006;26:3642–3645. doi: 10.1523/JNEUROSCI.5317-05.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Morasso P. Spatial control of arm movements. Exp Brain Res. 1981;42:223–227. doi: 10.1007/BF00236911. [DOI] [PubMed] [Google Scholar]

- Mosier KM, Scheidt RA, Acosta S, Mussa-Ivaldi FA. Remapping hand movements in a novel geometrical environment. J Neurophysiol. 2005;94:4362–4372. doi: 10.1152/jn.00380.2005. [DOI] [PubMed] [Google Scholar]

- O'Sullivan I, Burdet E, Diedrichsen J. Dissociating variability and effort as determinants of coordination. PLoS Comput Biol. 2009;5:e1000345. doi: 10.1371/journal.pcbi.1000345. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Scheidt RA, Ghez C. Separate adaptive mechanisms for controlling trajectory and final position in reaching. J Neurophysiol. 2007;98:3600–3613. doi: 10.1152/jn.00121.2007. [DOI] [PubMed] [Google Scholar]

- Shadmehr R, Mussa-Ivaldi FA. Adaptive representation of dynamics during learning of a motor task. J Neurosci. 1994;14:3208–3224. doi: 10.1523/JNEUROSCI.14-05-03208.1994. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Soechting JF, Lacquaniti F. Invariant characteristics of a pointing movement in man. J Neurosci. 1981;1:710–720. doi: 10.1523/JNEUROSCI.01-07-00710.1981. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Spong MW, Hutchinson S, Vidyasagar M. Robot modeling and control. Hoboken, NJ: Wiley; 2006. [Google Scholar]

- Taylor JA, Klemfuss NM, Ivry RB. An explicit strategy prevails when the cerebellum fails to compute movement errors. Cerebellum. 2010;9:580–586. doi: 10.1007/s12311-010-0201-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Todorov E, Jordan MI. Optimal feedback control as a theory of motor coordination. Nat Neurosci. 2002;5:1226–1235. doi: 10.1038/nn963. [DOI] [PubMed] [Google Scholar]

- Uno Y, Kawato M, Suzuki R. Formation and control of optimal trajectory in human multijoint arm movement. Minimum torque-change model. Biol Cybern. 1989;61:89–101. doi: 10.1007/BF00204593. [DOI] [PubMed] [Google Scholar]

- Wolpert DM, Ghahramani Z, Jordan MI. An internal model for sensorimotor integration. Science. 1995;269:1880–1882. doi: 10.1126/science.7569931. [DOI] [PubMed] [Google Scholar]