Abstract

The term “sensory reinforcer” has been used to refer to sensory stimuli (e.g., light onset) that function as primary reinforcers, in order to differentiate them from other, more biologically important primary reinforcers (e.g., food and water). Acquisition of snout poke responding for a visual stimulus (5 s light onset) on fixed ratio 1 (FR 1), variable-interval 1 minute (VI 1 min), or variable-interval 6 minute (VI 6 min) schedules of reinforcement was tested in three groups of rats (n = 8/group). The VI 6 min schedule of reinforcement produced a higher response rate than either the FR 1 or the VI 1 min schedule of visual stimulus reinforcement. One explanation for greater responding on the VI 6 min schedule is that the reinforcing effectiveness of light onset habituated more rapidly in the FR 1 and VI 1 min groups than in the VI 6 min group. This inverse relationship between response rate and the rate of visual stimulus reinforcement is opposite to results from studies with biologically important reinforcers, which indicate a positive relationship between response rate and reinforcement rate. Rapid habituation of reinforcing effectiveness may be a fundamental characteristic of sensory reinforcers that differentiates them from biologically important reinforcers, which are required to maintain homeostatic balance.

Keywords: Learning, Novelty, Operant Conditioning, Rat, Sensory Reinforcement

1 Introduction

Recently, it has been reported that the primary reinforcing effects of visual stimuli play an important role in nicotine self-administration experiments (Caggiula, Donny, Chaudhri, et al. 2002; Caggiula et al. 2009; Caggiula et al. 2001; Caggiula, Donny, White, et al. 2002; Palmatier et al. 2007; Raiff and Dallery 2009). This work led us to study the interaction of visual stimulus (VS) reinforcement with the reinforcing effectiveness of other stimulant drugs such as methamphetamine (Gancarz, Ashrafioun, et al. 2011; Gancarz, San George, et al. 2011). With this goal in mind, we set out to measure the reinforcing effectiveness of visual stimuli. Although there is a large older literature on sensory reinforcement (for reviews see Berlyne 1969; Eisenberger 1972; Kish 1966; Lockard 1963; Tapp 1969), we found that measuring the reinforcing effectiveness of light onset was more nuanced than expected. Through trial and error we learned that variable-interval (VI) schedules seemed to produce more reliable responding for visual stimuli than a continuous (fixed ratio 1, FR 1) schedule of reinforcement. The work described in this paper was undertaken to help us better understand why VI schedules of sensory reinforcement produced more robust responding than FR 1 schedules.

McSweeney and Murphy (2009) have argued that habituation to the sensory properties of biologically important reinforcers may be an important regulator of reinforcing effectiveness. We suspect that habituation may play an even more important role in determining the reinforcing effectiveness of VS reinforcers, because they are purely sensory and, unlike biologically important reinforcers such as food and water, their reinforcing effectiveness is not regulated by homeostatic mechanisms.

A recent review paper described ten empirically derived common characteristics of habituation (Rankin et al. 2009). The first common characteristic was that repeated application of a stimulus results in a progressive decrease in response. A progressive decrease in responding is also a characteristic of VS reinforced responding. Previous studies of VS reinforcement have consistently reported large within-session decrements of responding with the highest rates of responding for the light stimulus occurring during the first minutes of the test session, and thereafter falling off to lower levels (Gancarz, Ashrafioun, et al. 2011; McCall 1966; Premack and Collier 1962; Roberts, Marx, and Collier 1958; Tapp and Simpson 1966).

The second common characteristic was that when the stimulus is withheld after a response decrease due to habituation, the response recovers (spontaneous recovery). Recovery of responding following long intersession intervals is also a characteristic of VS reinforced responding. Previous studies have shown that increasing the between-session interval attenuates or prevents the decreases in response rate that occur with repeated testing (Eisenberger 1972; Forgays and Levin 1961; Fox 1962; Premack and Collier 1962).

A third characteristic of habituation is that more frequent stimulation causes more rapid and/or more pronounced decrements in responding. If the reinforcing effectiveness of visual stimuli is governed by the common characteristics of habituation, then this characteristic predicts that more frequent presentations of the VS reinforcer could result in a decreased rate of responding. In contrast, a large body of empirical data from operant experiments with biologically important reinforcers indicates that response rate generally increases as a function of reinforcer frequency (de Villiers and Herrnstein 1976; Herrnstein 1974; Heyman 1983). The functional relationship between reinforcer rate and response rate may therefore differ for sensory and biologically important reinforcers.

Based on our experience with VS reinforcers, both published (Gancarz, Ashrafioun, et al. 2011; Gancarz et al. 2012; Gancarz, San George, et al. 2011) and unpublished, we have formed the impression that longer VI schedules of VS reinforcement produce more robust responding than FR 1 or even shorter VI schedules. The goal of the research reported in this paper was to explore this informal observation more systematically by comparing both within- and between-session changes in light-contingent responding on FR 1, VI 1 min, and VI 6 min schedules of reinforcement. Based on our past experience and a habituation interpretation of the effectiveness of VS reinforcement, we expected that the VI 6 min schedule would produce higher rates of responding than either the FR 1 or the VI 1 min schedule of reinforcement.

2 Materials and Methods

2.1 General Methods

2.1.1 Subjects

Twenty-four naïve male Holtzman Sprague-Dawley rats (Harlan) weighing 250–400 g at the time of testing were used. The rats were housed in pairs in plastic cages in a colony room on a 12-h light-dark cycle. Testing took place once per day, during the light phase, six days a week. The rats were water-restricted but had constant access to food. Twenty-minute access to water was given in the home cage at the end of each test session. The study was conducted in accordance with the guidelines set up by the Institutional Animal Care and Use Committee of the State University of New York at Buffalo.

2.1.2 Apparatus

Twenty-four experimental chambers, previously described in detail (Richards et al. 1997), were used for the experiment. The chambers had stainless steel grid floors, aluminum front and back panels, and Plexiglas sides and tops. One wall had two snout poke apertures, each 4 cm in diameter, located 3 cm from the left and right edges of the wall. The test chambers were located inside sound- and light-attenuating boxes with wall-mounted fans that provided ventilation and masking noise. The VS reinforcer used in the experiment was the onset of two lights: one was located above and midway between the two snout poke apertures, and the other was located in the middle of the back wall of the test chamber. Onset of the VS reinforcer produced an illuminance of 68 lux, as measured from the center of the test chamber. The VS reinforcer was illuminated for five seconds each time it was presented. Snout pokes were monitored with infrared photo sensors located in the snout poke apertures. The chambers were connected to a computer through a MED Associates interface, and the experimental contingencies were programmed in the MED PC® programming language.

2.2 Procedure

2.2.1 Pre-exposure (Sessions 1–10)

During pre-exposure, rats were placed in dark experimental chambers for ten 30-min sessions. Responses to both snout poke apertures were recorded but had no programmed consequences.

2.2.2 Response-contingent light testing (Sessions 11–20)

During testing, rats were placed in dark experimental chambers, and snout pokes into the aperture designated as ‘active’ resulted in a five-second illumination of the two chamber lights. The ‘active’ aperture was the poke hole that received fewer pokes, averaged over the last two days of pre-exposure. Pokes into the other aperture, designated ‘inactive’, were recorded but had no programmed consequence. The rats were assigned to one of three groups, each experiencing a different schedule of response-contingent light presentation. For the fixed ratio 1 (FR 1) group (n = 8), any poke into the active hole resulted in light presentation. For the variable-interval 1 minute (VI 1 min) group (n = 8), snout poking into the active hole resulted in light presentation according to a VI schedule with a mean interval of one minute, and for the variable-interval 6 minute (VI 6 min) group (n = 8), according to a VI schedule with a mean interval of six minutes. The VI schedules were produced from a list of 20 intervals generated using Fleshler-Hoffman progressions (Fleshler and Hoffman 1962). During the test session, the computer selected intervals from this list without replacement. The first response after the interval elapsed resulted in presentation of the VS, and another VI value was then selected from the list. While the light was on, the VI timer was paused until light offset. Testing took place over ten 30-min sessions.
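The VI contingency described above can be illustrated with a short Python sketch. It assumes the standard Fleshler-Hoffman (1962) formula for the 20 intervals and treats the paused VI timer as the next interval starting to time at light offset. The function names, the random shuffling, and the reuse of the interval list if it is exhausted within a session are illustrative assumptions, not a description of the MED PC® program actually used.

```python
import math
import random


def fleshler_hoffman(mean_s, n=20):
    """Return n intervals (s) from the Fleshler-Hoffman (1962) progression.

    t_k = mean_s * [1 + ln n + (n - k) ln(n - k) - (n - k + 1) ln(n - k + 1)],
    k = 1..n, with 0 * ln 0 taken as 0. The intervals average exactly mean_s.
    """
    def xlogx(x):
        return 0.0 if x == 0 else x * math.log(x)

    return [mean_s * (1 + math.log(n) + xlogx(n - k) - xlogx(n - k + 1))
            for k in range(1, n + 1)]


def vi_light_onsets(poke_times_s, intervals, light_s=5.0, session_s=1800.0, seed=0):
    """Hypothetical VI controller returning the times (s) of light onset.

    Intervals are drawn without replacement from `intervals` (the list is
    reshuffled and reused if exhausted, an assumption). The first poke after the
    current interval elapses turns on the light, and the next interval only
    starts timing at light offset (timer paused while the light is on).
    """
    rng = random.Random(seed)
    pool = []

    def draw():
        if not pool:
            pool.extend(intervals)
            rng.shuffle(pool)
        return pool.pop()

    onsets = []
    timer_start = 0.0
    current = draw()
    for t in sorted(poke_times_s):
        if t >= session_s:
            break
        # Pokes during the light fall before timer_start, so they cannot count.
        if t - timer_start >= current:
            onsets.append(t)
            timer_start = t + light_s
            current = draw()
    return onsets


if __name__ == "__main__":
    vi6 = fleshler_hoffman(360)
    print([round(x, 1) for x in vi6])
    print(sum(vi6) / len(vi6))  # mean interval in seconds
```

Because the timer is paused during the light, each reinforcer on a VI schedule costs at least one interval plus the 5 s light period in this sketch.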

2.3 Data Analysis

2.3.1 Dependent variables

Snout pokes into the active and inactive holes were the dependent measures of responding. A snout poke was operationally defined as an interruption of the infrared photobeam, and only one response was recorded for each photobeam interruption. Although pokes had no programmed consequences during the pre-exposure phase, pokes into the holes that would later be designated active and inactive during the testing phase were recorded. An additional dependent variable used in the statistical analysis was the number of reinforcers presented, defined as the total number of light presentations earned during a given session.
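The rule that each photobeam interruption counts as one response amounts to counting clear-to-broken transitions in the beam record. The sketch below assumes a hypothetical record of beam states sampled at a fixed rate; it is not a description of how the MED Associates hardware reports responses.

```python
def count_pokes(beam_broken):
    """Count snout pokes in a sampled photobeam record.

    `beam_broken` is a hypothetical sequence of booleans sampled at a fixed rate
    (True = beam interrupted). Each clear-to-broken transition is one poke, so a
    single long interruption is counted only once.
    """
    pokes = 0
    previous = False  # assume the beam is clear before the first sample
    for state in beam_broken:
        if state and not previous:
            pokes += 1
        previous = state
    return pokes


if __name__ == "__main__":
    record = [False, True, True, True, False, False, True, False, True, True]
    print(count_pokes(record))  # 3 pokes
```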

2.3.2 Statistical analysis

Data were analyzed as averages of two-session blocks. Statistical analysis was conducted using IBM SPSS 19. One rat in the FR 1 condition had a rate of responding to the inactive hole that was 25 standard deviations above the mean of the other seven rats in the group. This rat was identified as an extreme outlier, and its data were excluded from statistical testing. An alpha criterion of 0.05 was used for all statistical tests.
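The two-session averaging and the outlier screen can be sketched as follows. The kind of standard deviation used for the screen is not specified above; this sketch assumes the sample SD of the remaining rats in the group (a leave-one-out comparison), and the function names and example counts are hypothetical.

```python
from statistics import mean, stdev


def two_session_blocks(per_session):
    """Average consecutive session pairs (1&2, 3&4, ...); needs an even count."""
    if len(per_session) % 2:
        raise ValueError("expected an even number of sessions")
    return [(a + b) / 2 for a, b in zip(per_session[0::2], per_session[1::2])]


def sd_above_others(values, index):
    """SDs by which values[index] exceeds the mean of the other animals.

    Uses the sample SD of the remaining animals (an assumption; the exact
    criterion used in the analysis is not specified).
    """
    others = list(values[:index]) + list(values[index + 1:])
    return (values[index] - mean(others)) / stdev(others)


if __name__ == "__main__":
    sessions = [40, 36, 30, 28, 25, 27, 22, 20, 21, 19]  # hypothetical counts
    print(two_session_blocks(sessions))
```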

3 Results

3.1 Between-session analysis of active and inactive responding

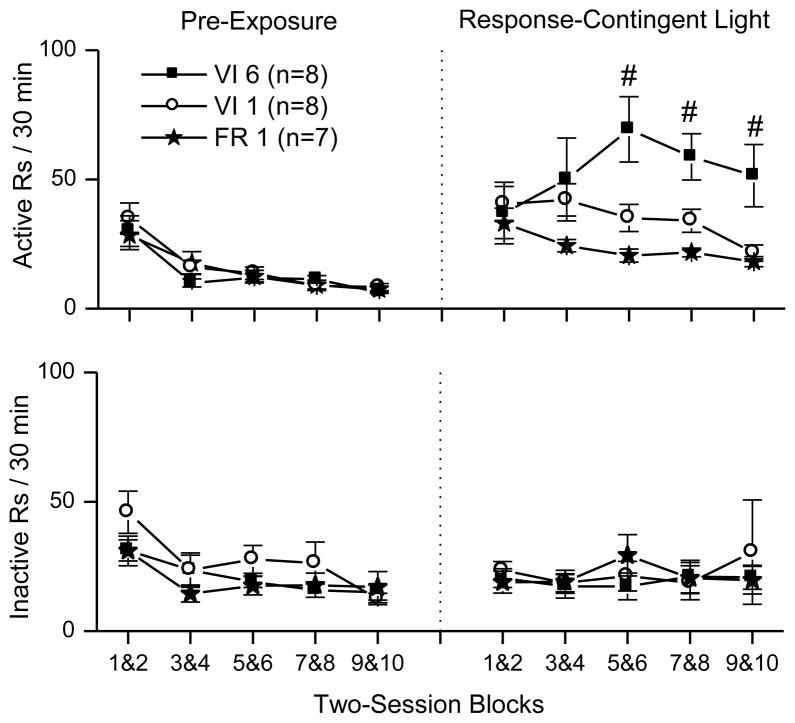

The data for active responding (top row), and inactive responding (bottom row) are shown in Fig. 1.

Fig. 1.

These plots show between-session patterns of responding during the pre-exposure and testing phases. The measures depicted are active responding (top row), and inactive responding (bottom row). Symbols to the left of the dotted line show responding during the pre-exposure phase, and symbols to the right of the line show responding during the response-contingent light testing phase. Data are plotted as two-session blocks calculated by taking the average of the responding over two consecutive sessions. During the response-contingent light testing phase, light onset was available according to three reinforcement schedules: squares indicate variable-interval 6 min (VI 6), circles indicate variable-interval 1 min (VI 1), and stars indicate fixed ratio 1 (FR 1). Pound signs designate significant Tukey HSD tests (p < 0.05), indicating that the VI 6 group had greater responding than the FR 1 or VI 1 groups during the last three two-session blocks. Data are expressed as means with error bars indicating standard error of the mean.

3.1.1 Pre-exposure phase

Responding to the snout poke holes that would be designated as active and inactive during the test phase was analyzed using an analysis of variance (ANOVA) with the five two-session blocks as the within-subject factor and schedule (FR 1, VI 1 min, and VI 6 min) as the between-subject factor. This analysis produced a significant main effect of session block for both active F(4,80) = 37.03, p < 0.001 and inactive F(4,80) = 9.68, p < 0.001 responding. There was no significant main effect of schedule and no significant interaction. Follow-up paired t-tests with Bonferroni correction indicated that responding during the first two-session block of the pre-exposure phase was higher than responding during the last two-session block of the pre-exposure phase for both active t(22) = 7.203, p < 0.001 and inactive t(22) = 4.98, p < 0.001 responding.
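For readers less familiar with the follow-up test, the paired t statistic compares each rat's first-block and last-block responding and has n - 1 degrees of freedom (23 rats after the outlier exclusion, hence t(22)). The sketch assumes two comparisons (active and inactive) for the Bonferroni adjustment; the number of comparisons actually corrected for is not stated above. Computing the p value itself requires the t distribution (e.g., from a statistics package) and is omitted here.

```python
import math
from statistics import mean, stdev


def paired_t(first_block, last_block):
    """Paired t statistic and degrees of freedom for per-rat block means."""
    if len(first_block) != len(last_block):
        raise ValueError("need one value per rat in each block")
    diffs = [a - b for a, b in zip(first_block, last_block)]
    n = len(diffs)
    # Mean difference divided by its standard error.
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison alpha after Bonferroni correction."""
    return alpha / n_comparisons


if __name__ == "__main__":
    # Hypothetical per-rat means for illustration only.
    t, df = paired_t([5, 7, 9], [4, 5, 6])
    print(t, df, bonferroni_alpha(0.05, 2))
```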

3.1.2 Introduction of response-contingent light

In order to evaluate the effects of introducing the response-contingent light after the pre-exposure phase for FR 1, VI 1 min, and VI 6 min schedules of reinforcement, a mixed-factor ANOVA with phase (last two-session block of the pre-exposure phase, and ten-session average of response-contingent light testing phase) as the within-subject factor, and schedule (FR 1, VI 1 min, and VI 6 min) as the between-subject factor, was used to analyze both active and inactive responding. For active responding, there was a significant main effect of phase F(1,20) = 51.532, p < 0.001 and a phase by schedule interaction F(2,20) = 4.768, p < 0.05. There were no significant effects of schedule or phase on inactive responding. The main effect of phase, observed for active responding, indicates that response-contingent light increased active responding. Additionally, the phase by schedule interaction indicates that the effectiveness of light reinforcement depended upon reinforcement schedule.

3.1.3 Reinforcement schedule and responding for response-contingent light

In order to evaluate the effect of schedule (FR 1, VI 1 min, and VI 6 min) on the rate of responding during the test phase, mixed two-factor ANOVAs with two-session blocks as the within-subject factor, and schedule as the between-subject factor, were used to analyze both active and inactive responding. One-factor ANOVAs were used as tests of simple effects in order to identify sources of significant interactions. When interactions were found, Tukey Honestly Significant Difference (HSD) tests were used to identify homogeneous subsets.

For active responding, the ANOVA indicated main effects of both block F(4,80) = 2.685, p < 0.05, and schedule F(2,20) = 4.071, p < 0.05, and a block by schedule interaction F(8,80) = 4.884, p < 0.001. Tests of simple effects showed that active responding changed over sessions in all three schedules (FR 1 F(4,24) = 5.280, p < 0.01; VI 1 min F(4,28) = 6.246, p < 0.01; VI 6 min F(4,28) = 3.713, p < 0.05). Tukey HSD tests indicated that in session blocks 5&6, 7&8, and 9&10, the VI 6 min group had significantly more active responding than the other groups, while the FR 1 and VI 1 min groups did not differ significantly.

For inactive responding, the ANOVA indicated that there were no significant effects of schedule or session.

3.1.4 Reinforcer frequency

The average number of light presentations during each two-session block of the test phase is presented in Table 1. A mixed-factor ANOVA, with block as the within-subject factor and schedule as the between-subject factor, was used to evaluate the number of reinforcers presented during the testing phase. There were main effects of both block F(4,80) = 5.471, p < 0.01, and schedule F(2,20) = 200.010, p < 0.001, and a block by schedule interaction F(8,80) = 4.229, p < 0.001. Follow-up ANOVAs revealed a significant change over two-session blocks in the number of reinforcers presented for all schedules (FR 1 F(4,24) = 3.984, p < 0.05; VI 1 min F(4,28) = 5.599, p < 0.01; VI 6 min F(4,28) = 3.094, p < 0.05). Visual inspection of the data indicated that the number of reinforcers generally decreased for the FR 1 and VI 1 min schedules across the ten sessions of testing. In contrast, the number of reinforcers increased across test sessions for the VI 6 min schedule, peaking at sessions 5&6.

Table 1.

This table lists the mean (in bold) and standard error of the mean (in italics) of the number of reinforcers (5 s light onset) earned on each of the three schedules of reinforcement, (fixed ratio 1 - FR 1; variable-interval 1 minute - VI 1 min; and variable-interval 6 minute - VI 6 min), during the test phase.

| Schedule | Statistic | Block 1&2 | Block 3&4 | Block 5&6 | Block 7&8 | Block 9&10 |
|---|---|---|---|---|---|---|
| FR 1 | Mean | **20.643** | **15.929** | **13.643** | **13.857** | **12.357** |
| | SEM | *4.318* | *1.942* | *1.828* | *1.025* | *1.234* |
| VI 1 min | Mean | **9.000** | **9.625** | **8.563** | **7.875** | **6.688** |
| | SEM | *1.290* | *1.281* | *1.222* | *1.168* | *1.092* |
| VI 6 min | Mean | **2.813** | **2.875** | **4.188** | **4.063** | **3.125** |
| | SEM | *0.721* | *0.621* | *0.462* | *0.326* | *0.645* |
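As a rough check on Table 1, the mean counts can be compared with the approximate number of reinforcers each VI schedule makes available in a 30-min session. This sketch assumes that a rat responding immediately after every interval elapsed would need, on average, one mean interval plus the 5 s light period (during which the VI timer was paused) per reinforcer; response latency and the particular intervals drawn are ignored, so the result is only an approximation, and the names are hypothetical.

```python
SESSION_S = 30 * 60  # 30-min test sessions
LIGHT_S = 5          # light duration; the VI timer was paused while it was on

# Mean reinforcers per session from Table 1 (blocks 1&2 through 9&10).
TABLE1_MEANS = {
    "FR 1": [20.643, 15.929, 13.643, 13.857, 12.357],
    "VI 1 min": [9.000, 9.625, 8.563, 7.875, 6.688],
    "VI 6 min": [2.813, 2.875, 4.188, 4.063, 3.125],
}


def approx_vi_ceiling(mean_interval_s):
    """Approximate reinforcers available per session if every interval were
    collected immediately (one mean interval plus the light period each)."""
    return SESSION_S / (mean_interval_s + LIGHT_S)


if __name__ == "__main__":
    for schedule, mean_interval in [("VI 1 min", 60), ("VI 6 min", 360)]:
        ceiling = approx_vi_ceiling(mean_interval)
        peak = max(TABLE1_MEANS[schedule])
        print(f"{schedule}: peak {peak:.2f} of ~{ceiling:.1f} ({peak / ceiling:.0%})")
```

Under these assumptions the VI 6 min group collected roughly 85% of the available reinforcers at its peak (block 5&6), whereas the VI 1 min group collected roughly 35% at its peak (block 3&4).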

3.2 Within-session analysis of active and inactive responding

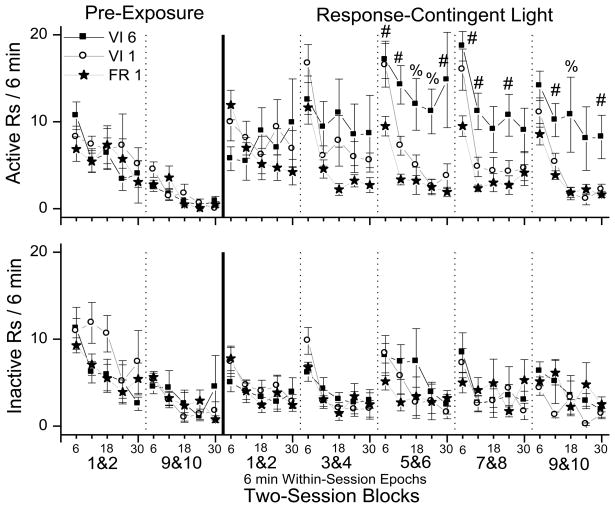

Fig. 2 shows the pattern of within-session active and inactive responding during the pre-exposure and test phases of the study. The first and last two-session blocks of pre-exposure are shown along with the five two-session blocks of the test phase. The active responding (top row), and inactive responding (bottom row) are shown for the three schedules of reinforcement.

Fig. 2.

These plots show the within-session patterns of responding for response-contingent light. Active responding is plotted in the top row and inactive responding is plotted in the bottom row. Each plot shows the data for three schedules of response-contingent light onset: squares indicate variable-interval 6 min (VI 6), circles indicate variable-interval 1 min (VI 1), and stars indicate fixed ratio 1 (FR 1). The first two columns on the left side of the solid vertical lines show the first and last two-session blocks of the pre-exposure phase. The five columns to the right of the solid line show all five two-session blocks of the testing phase. The individual two-session blocks are indicated by vertical dashed lines. Active and inactive responding are plotted in five six-minute epochs within the 30 min test sessions. Pound signs designate significant Tukey HSD tests (p < 0.05), indicating that the VI 6 group had greater responding than the FR 1 group. The percent signs indicate that the VI 6 group had greater responding than both the FR 1 and VI 1 groups. Data are expressed as means with error bars indicating standard error of the mean.

3.2.1 Pre-exposure phase

A three-way mixed-factor ANOVA was used to evaluate active and inactive responding during the pre-exposure phase. The five six-minute epochs that made up each 30-min session and the first and last two-session blocks of the pre-exposure phase were the within-subject factors, and schedule was the between-subject factor. Separate ANOVAs were used to analyze active and inactive responding. There was a significant main effect of epoch for both active F(4,80) = 7.34, p < 0.001 and inactive F(4,80) = 8.50, p < 0.001 responding, as well as a significant main effect of session block for active F(1,20) = 47.50, p < 0.001 and inactive F(1,20) = 27.29, p < 0.001 responding. There were no other significant effects. This analysis indicates that there was a within-session decline in responding, with peak responding occurring during the first six-minute epoch of each pre-exposure session. This pattern of responding was observed in both the first and last two-session blocks of the pre-exposure phase. Additionally, the overall rate of responding during each session was lower during the last two-session block than during the first two-session block (see Fig. 1).
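The within-session analysis divides each 30-min session into five six-minute epochs. A minimal sketch of that binning is shown below, assuming hypothetical poke timestamps in seconds from session start; pokes at or after 1800 s are ignored.

```python
def epoch_counts(poke_times_s, session_s=1800, epoch_s=360):
    """Count pokes in consecutive epochs (five 6-min epochs for a 30-min session)."""
    n_epochs = session_s // epoch_s
    counts = [0] * n_epochs
    for t in poke_times_s:
        if 0 <= t < session_s:
            counts[int(t // epoch_s)] += 1
    return counts


if __name__ == "__main__":
    pokes = [3.2, 40.5, 200.0, 410.7, 1500.1]  # hypothetical timestamps (s)
    print(epoch_counts(pokes))
```

Comparing the first and last entries of such counts is one way to express the within-session decline described above.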

This pattern of responding is consistent with the interpretation that the animals showed both within- and between-session habituation to the test chamber during the pre-exposure phase. Between-session habituation is indicated by greater levels of responding occurring during the first two-session block of the pre-exposure phase compared to the last two-session block of the pre-exposure phase. Within-session habituation is indicated by greater levels of responding occurring in the first six-minute epoch of the session than in the last six-minute epoch of the session.

3.2.2 Response-contingent light testing phase

A three-way mixed-factor ANOVA was used to evaluate active and inactive responding during the test phase. The five six-minute epochs that made up each 30-min session and the five two-session blocks of the test phase were the within-subject factors, and schedule was the between-subject factor. For active responding, there were significant main effects of block F(4,80) = 2.69, p < 0.05, epoch F(4,80) = 23.26, p < 0.001, and schedule F(2,20) = 4.07, p < 0.05. There were also significant interactions between block and schedule F(8,80) = 4.884, p < 0.001, and between block and epoch F(16,320) = 2.675, p < 0.01.

In order to interpret the significant effects of the overall ANOVA on active responding, each of the five two-session blocks of the test phase was analyzed with a separate mixed-factor ANOVA, with epoch as the within-subject factor and schedule as the between-subject factor. These ANOVAs produced no significant effects at session block 1&2. At session block 3&4, there was a main effect of epoch only, F(4,80) = 14.62, p < 0.001. During the last three two-session blocks, there were significant main effects of both schedule and epoch and no significant interaction: at 5&6, epoch F(4,80) = 12.67, p < 0.001, and schedule F(2,20) = 8.94, p < 0.01; at 7&8, epoch F(4,80) = 21.08, p < 0.001, and schedule F(2,20) = 9.47, p < 0.01; and at 9&10, epoch F(4,80) = 15.31, p < 0.001, and schedule F(2,20) = 5.91, p < 0.01. Based on these results, differences between the three schedules were evaluated using Tukey HSD tests conducted on active responding at each epoch during session blocks 5&6, 7&8, and 9&10. The results of these tests are shown in the top row of Fig. 2. In general, this analysis indicates that rats in the VI 6 min group had higher levels of responding, greater between-session recovery, and less within-session habituation than rats in the FR 1 and VI 1 min groups.

3.2.3 Inactive responding

The same overall ANOVA described above for active responding was performed on inactive responding. This analysis produced a significant main effect of epoch F(4,80) = 16.71, p < 0.001 and no other significant effects.

4 Discussion

Examination of total responding across sessions (Fig. 1) indicated that the operant level of snout poking decreased across the ten sessions of pre-exposure to the test chamber. Introduction of the response-contingent VS increased active responding in all three schedule groups relative to the last two-session block of pre-exposure, and had no significant effect on inactive responding. The VI 6 min schedule produced a higher rate of responding than both the FR 1 and VI 1 min schedules. The session block in which total responding peaked depended on the light presentation schedule: schedules permitting more frequent presentations reached maximal levels of responding in earlier test sessions. Responding peaked during test sessions 1&2 for the FR 1 schedule, during sessions 3&4 for the VI 1 min schedule, and during sessions 5&6 for the VI 6 min schedule. These increases occurred in active but not inactive responding, indicating that the VS reinforced active snout poke responses. One explanation for the lower rate of responding in the FR 1 and VI 1 min groups compared with the VI 6 min group is decreased reinforcer effectiveness due to habituation: more frequent occurrence of the VS reinforcer in the FR 1 and VI 1 min groups may have resulted in greater habituation to the reinforcing properties of the VS. The large differences in reinforcer frequency generated by the three schedules (Table 1) support this interpretation.

In a recent review of habituation (Rankin et al. 2009), the ten most commonly cited characteristics of habituation were described. The results described in this paper and results from past research on VS reinforcement indicate that habituation may be an important determinant of VS reinforcer effectiveness. The data from the current paper and from past research are well described by the first four of the ten common characteristics of habituation.

The first common characteristic of habituation is that, “Repeated application of a stimulus results in a progressive decrease in some parameter of a response to an asymptotic level.” For all three reinforcement schedules, responding was greatest during the first six minutes of the test session and then decreased. The FR 1 schedule produced reliable within-session decreases in responding for all ten test sessions. The VI 1 min schedule produced reliable within-session decreases in responding for test sessions 3–10. The VI 6 min schedule produced reliable within-session decreases in responding for test sessions 5–10. This pattern of results is consistent with responding being reduced to “asymptotic levels”. Fig. 2 suggests that the asymptotic level of responding would be greater for the VI 6 min schedule than for the FR 1 or VI 1 min schedules; however, longer periods of testing would be needed to establish this with certainty. In addition to the present data, previous studies have consistently shown both between- and within-session decrements of responding for visual stimuli (Gancarz, Ashrafioun, et al. 2011; McCall 1966; Premack and Collier 1962; Roberts, Marx, and Collier 1958; Tapp and Simpson 1966). The data are consistent with the interpretation that repeated response-contingent presentations of visual stimuli result in a progressive decrease in the reinforcing effectiveness of visual stimuli.

The second common characteristic of habituation is that, “If the stimulus is withheld after response decrement, the response recovers at least partially (spontaneous recovery).” Following the increases in responding due to the initial primary reinforcing effects of the VS, there was clear evidence of spontaneous recovery from the decrements in responding that occurred during the previous test session. In all cases, responding during the first six-minute epoch of a test session was greater than responding during the last six-minute epoch of the previous test session. In addition, previous studies of the reinforcing effectiveness of visual stimuli have shown that increasing the intersession interval results in greater recovery of responding (Eisenberger 1972; Forgays and Levin 1961; Fox 1962; Premack and Collier 1962). These data are consistent with the interpretation that longer intervals between test sessions result in greater spontaneous recovery of the reinforcing effectiveness of the VS.

The third common characteristic is that, “After multiple series of stimulus repetitions and spontaneous recoveries, the response decrement becomes successively more rapid and/or more pronounced.” For the FR 1 schedule, between-session decreases in total session responding were observed for the eight test sessions following test sessions 1&2. For the VI 1 min schedule, between-session decreases in responding were observed for the six test sessions following test sessions 3&4. For the VI 6 min schedule, between-session decreases in total session responding were observed for the four test sessions following test sessions 5&6.

For all three schedules the within-session pattern of responding generally indicates that the decrease in responding from the first six-minute epoch to the second six-minute epoch became larger with repeated testing. However, the data in Fig. 2 do not unambiguously indicate that the decrement in responding was more rapid. More precise testing methods, with a larger number of rats tested for a longer period of time would be needed for an unambiguous test. In terms of the present data, the between-session decreases in total session responding (Fig. 1) suggest more rapid or pronounced response decrements. Taken together, the data are consistent with the interpretation that within-session decreases in reinforcer effectiveness due to habituation are accelerated by repeated cycles of testing and recovery.

The fourth common characteristic is that, “Other things being equal, more frequent stimulation results in more rapid and/or more pronounced response decrement, and more rapid spontaneous recovery.” The schedule that produced the highest rate of VS presentation was the FR 1 schedule, which also produced the lowest rate of responding. The schedule that produced the lowest rate of VS presentation was the VI 6 min schedule, which also produced the highest rate of responding. This inverse relationship is consistent with the interpretation that the initial reinforcing effectiveness of the VS was decreased by the frequency of its occurrence. One interpretation of these data is that the initial primary reinforcing effectiveness of the visual stimuli was equivalent for all three schedule groups and that reinforcer effectiveness was decreased by habituation. The degree of habituation was determined by the schedule of reinforcement, with schedules that permitted higher frequencies of response-contingent VS presentation resulting in more rapid habituation.

The fifth common characteristic is that, “The less intense the stimulus, the more rapid and/or more pronounced the behavioral response decrement. Very intense stimuli may yield no significant observable response decrement.” In a previous experiment we tested thirsty rats with a concurrent choice procedure in which water (25 μL) was available according to a VI 12 min schedule and light reinforcement (5 s onset) was available according to a VI 1 min schedule. On this schedule, rats made approximately 300 responses for the water reinforcer and approximately 40 responses for the light reinforcer in 40-min test sessions (see Figs. 6 and 7 in Gancarz, Ashrafioun, et al. 2011). Responding for the sensory reinforcer was so low in comparison to the water reinforcer that it was difficult to detect a light reinforcement effect. These results, in conjunction with the small number of responses for sensory reinforcers reported in the current paper, support the idea that visual stimuli are weak reinforcers which may habituate rapidly, in contrast to stronger biologically important reinforcers.

Finally, it is notable that the patterns of between- and within-session changes in responding are strikingly similar for the active and inactive snout poke holes and for both the pre-exposure (sessions 1–10) and response-contingent light (sessions 11–20) phases (see Fig. 2). One implication of the concept of sensory reinforcement is that stimuli in the testing chamber other than (or in addition to) the programmed VS reinforcers may function as reinforcers. For example, the sensory consequences of snout poking into the inactive alternative may be reinforcing. The between- and within-session changes in inactive responding may reflect habituation to non-programmed sensory reinforcers associated with inactive responding. The similarities in the patterns of between- and within-session changes in responding suggest that the mechanism that produces these changes is robust, highly general, and present in all of these different situations. Habituation provides a good candidate for such a mechanism.

4.2 Habituation of the effectiveness of sensory and biologically important reinforcers

According to McSweeney and Murphy (2009), habituation to the sensory properties of biologically important reinforcers influences reinforcer effectiveness. They argue that physiological indicators of satiation such as stomach distension and blood sugar levels cannot completely account for observed within-session decreases in operant responding. Manipulations that would be expected to affect habituation, but not physiological satiation, can be used to increase operant responding for food and water. For example, presenting a strong irrelevant stimulus (dishabituation) or changing the properties of the controlling stimulus (stimulus specificity) increases operant responding for food and alcohol reinforcers (Murphy et al. 2006; Murphy, McSweeney, and Kowal 2007). Unlike visual stimuli, biologically important reinforcers, such as food and water, are important for maintaining homeostasis. Since VS reinforcers are purely sensory and have no immediate, obvious role in maintaining homeostasis, it may be that the reinforcing effectiveness of visual stimuli is even more strongly determined by habituation than is that of biologically important reinforcers.

The habituation explanation of the schedule differences does not account for the initial primary reinforcing effectiveness of visual stimuli. One attribute that has been shown to modify the reinforcing effectiveness of visual stimuli is novelty. There is much evidence that novel stimuli are reinforcing (Bardo, Donohew, and Harrington 1996; Bevins 2001), and novel visual stimuli are more effective reinforcers than familiar visual stimuli (Berlyne, Koenig, and Hirota 1966; Kish and Baron 1962; Lloyd et al. submitted). There is also evidence that unpredictable, intermittent stimuli are, in general, slower to habituate (Aoyama and McSweeney 2001; Broster and Rankin 1994; Sasaki et al. 2001). It is arguable that the occurrence of a response-contingent stimulus may retain some elements of novelty relative to an otherwise uniform, unchanging environment. It is also possible that a relatively unpredictable, intermittent, response-contingent VS would retain some degree of novelty (or surprise) for prolonged periods, which may prevent or attenuate habituation-driven decreases in reinforcer effectiveness. This “short-term novelty” explanation (Berlyne 1955) is consistent with spontaneous recovery of reinforcing effectiveness after a longer-than-usual between-session interval.
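The claim that intermittent stimuli habituate more slowly can be illustrated with a toy model. The sketch below is an assumption, not a model fitted to the present data: each VS presentation removes a fixed fraction (`decrement`) of the current reinforcing effectiveness, and between presentations effectiveness recovers exponentially toward its initial value with time constant `tau_s`. The function name and both parameter values are hypothetical, and the model captures only the spacing of presentations, not their predictability.

```python
import math


def effectiveness_at_presentations(gaps_s, decrement=0.3, tau_s=120.0):
    """Toy habituation model (hypothetical parameters, not fitted to data).

    gaps_s: seconds between successive VS presentations (len = presentations - 1).
    decrement: fraction of current effectiveness lost at each presentation.
    tau_s: time constant (s) of exponential recovery toward full effectiveness.
    Returns the effectiveness at the moment of each presentation (1.0 = initial).
    """
    v = 1.0
    values = [v]  # the first presentation occurs at full effectiveness
    for gap in gaps_s:
        v *= 1.0 - decrement  # decrement produced by the previous presentation
        v = 1.0 - (1.0 - v) * math.exp(-gap / tau_s)  # recovery during the gap
        values.append(v)
    return values


massed = effectiveness_at_presentations([60.0] * 20)   # VS about once per minute
spaced = effectiveness_at_presentations([360.0] * 20)  # VS about once per 6 min
print(round(massed[-1], 2), round(spaced[-1], 2))      # prints 0.68 0.98
```

With these illustrative values, effectiveness settles at about two thirds of its initial value when presentations are 1 min apart, but stays near its initial value when they are 6 min apart, because most of each decrement recovers before the next presentation.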

However, a simple novelty explanation for the initial primary reinforcing effectiveness of the VS has several problems. First, a novelty explanation of the reinforcing effectiveness of visual stimuli cannot be operationally differentiated from a habituation explanation, since the operations required to make a novel stimulus familiar (i.e., pre-exposure) are the same operations used to cause habituation. Second, with repeated testing the VS becomes familiar, and further responding to produce the VS cannot easily be explained by the reinforcing effects of novelty. Third, a novelty explanation ignores the nature of the particular sensory stimulus being tested. In general, habituation provides a more detailed and testable explanation of changes in responding for visual stimuli than does novelty.

4.3 Limitations

The goals of the present study, which compared responding on FR 1, VI 1 min, and VI 6 min schedules, were limited. Nevertheless, the results have important theoretical implications, suggesting that further studies of sensory reinforcement may increase understanding of operant responding in general. The finding that the rate of responding on the VI 6 min schedule of VS reinforcement was higher than the rate of responding on the FR 1 and VI 1 min schedules is theoretically important because it is opposite to much previous research indicating a direct relationship between rate of responding and rate of reinforcement on VI schedules (de Villiers and Herrnstein 1976; Herrnstein 1974; Heyman 1983). However, because only a highly restricted range of reinforcement rates (approximately 3 to 20 reinforcers per 30 min session) was characterized in the present study, these results need to be followed up with parametric studies that systematically vary the rate of sensory reinforcement over a wide range before a strong conclusion can be drawn.
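For reference, the direct relationship cited above is often summarized by Herrnstein's hyperbola, B = kR / (R + Re), where B is response rate, R is obtained reinforcement rate, k is the asymptotic response rate, and Re is reinforcement from extraneous sources (de Villiers and Herrnstein 1976). The sketch below evaluates it alongside a rough ceiling on the number of reinforcers a VI schedule can deliver in a 30 min session. The values of k and Re are hypothetical and the function names are illustrative; the point is only that the hyperbola increases monotonically, so it predicts more responding on VI 1 min than on VI 6 min, the opposite of the VS result.

```python
def herrnstein_hyperbola(r, k, r_e):
    """Herrnstein's hyperbola B = k*R / (R + Re).

    r: obtained reinforcement rate (reinforcers/h)
    k: asymptotic response rate (responses/h)
    r_e: extraneous reinforcement rate (reinforcers/h)
    """
    return k * r / (r + r_e)


def vi_ceiling(session_min, mean_interval_min):
    """Approximate maximum reinforcers a VI schedule can deliver per session,
    assuming each reinforcer is collected as soon as it is set up."""
    return session_min / mean_interval_min


# Hypothetical parameter values, chosen only for illustration.
K, R_E = 100.0, 20.0
for vi in (1.0, 6.0):
    per_session = vi_ceiling(30.0, vi)  # reinforcers per 30 min session
    per_hour = per_session * 2.0        # convert to reinforcers per hour
    print(f"VI {vi:g} min: <= {per_session:g}/session, "
          f"predicted B = {herrnstein_hyperbola(per_hour, K, R_E):.1f} responses/h")
```

The ceiling is about 30 reinforcers per session on VI 1 min and about 5 on VI 6 min (60 and 10 per hour); with the hypothetical k = 100 responses/h and Re = 20 reinforcers/h, the hyperbola predicts 75.0 versus 33.3 responses/h.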

Furthermore, the relationship between rate of responding and rate of reinforcement may be bitonic rather than monotonic (Baum 1981; Staddon 1979). The relationship between response and reinforcer rates may be bitonic for both sensory and biologically important reinforcers, and the higher rates of responding produced by the VI 6 min schedule (which would not be expected with a biologically important reinforcer) may result from studying different segments of the bitonic function relating response rate to reinforcement rate. If so, the difference between sensory and biologically important reinforcers would be quantitative rather than qualitative. However, the decreasing limb of the bitonic function would still occur at a much lower rate of reinforcement for sensory than for biologically important reinforcers, which would remain consistent with the overall argument that habituation is stronger for sensory than for biologically important reinforcers.
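To make the "different segments" argument concrete, the sketch below uses a hypothetical bitonic function, B = aR*exp(-R/c), which rises for R < c, peaks at R = c, and falls for R > c. This particular form is an illustrative assumption, not the model of Baum (1981) or Staddon (1979). Placing the peak at a low reinforcement rate (small c, standing in for a rapidly habituating sensory reinforcer) puts 10 and 60 reinforcers per hour on the decreasing limb, whereas a large c puts both rates on the increasing limb.

```python
import math


def bitonic_rate(r, a, c):
    """Hypothetical bitonic function B = a*R*exp(-R/c).

    dB/dR = a*exp(-R/c)*(1 - R/c), so B rises for R < c, peaks at R = c,
    and falls for R > c.
    """
    return a * r * math.exp(-r / c)


low, high = 10.0, 60.0  # reinforcers per hour
for label, c in (("hypothetical sensory, early peak", 10.0),
                 ("hypothetical food, late peak", 200.0)):
    b_low, b_high = bitonic_rate(low, 1.0, c), bitonic_rate(high, 1.0, c)
    limb = "decreasing" if b_high < b_low else "increasing"
    print(f"{label}: B(10)={b_low:.2f}, B(60)={b_high:.2f} -> {limb} limb")
```

Only the location of the peak differs between the two cases, which is the sense in which the difference between reinforcer types would be quantitative rather than qualitative.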

It is important to acknowledge that habituation has generally been associated with Pavlovian conditioning or with unconditioned reflexive responses. Only recently has habituation been hypothesized to be a factor in determining reinforcer effectiveness for “voluntary” or “goal-directed” operant behavior (McSweeney and Murphy 2009). These authors note that most studies of habituation use neutral stimuli, such as light onset, rather than biologically important reinforcers such as food. Notably, sensory reinforcement includes only “neutral” or “indifferent” sensory stimuli and does not include biologically important reinforcers. One implication is that previous research on habituation may be particularly applicable to sensory reinforcement.

As described above, the patterns of responding reported in this paper are consistent with an important role for habituation in determining the reinforcing effectiveness of visual stimuli. However, further studies are needed to confirm this role. The first common characteristic of habituation is that responding should reach asymptotic levels. In the current data, both between- and within-session responding appear to approach asymptotic levels; testing over more sessions is needed to confirm this. The second common characteristic predicts that the degree of spontaneous recovery observed at the start of each test session should be increased by longer intersession intervals and decreased by shorter ones. Explicitly manipulating the intersession interval could confirm or refute this prediction. Notably, previous work has carried out precisely this manipulation with sensory reinforcement and demonstrated the predicted intersession interval effect (Premack and Collier 1962). The third common characteristic is that the response decrement should become more rapid with repeated series of stimulus presentations and recoveries. The pattern of results depicted in Fig. 2 appears to support this prediction, although testing over a greater number of sessions would allow a better quantitative assessment of this characteristic.
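The first two characteristics can be illustrated with a toy model; all parameter values and names below are hypothetical and are not estimates from the present data. Within a session, each VS presentation moves reinforcing effectiveness a fixed fraction of the way toward an asymptotic floor; during the intersession interval, effectiveness recovers exponentially toward its initial value. The function returns effectiveness at the start of each session, where spontaneous recovery would be measured. The model does not attempt to capture the third characteristic.

```python
import math


def session_start_values(n_sessions, presentations_per_session, isi_h,
                         decrement=0.2, floor=0.2, tau_h=48.0):
    """Toy model of effectiveness at the start of each session.

    Within a session, each presentation moves effectiveness a fraction
    `decrement` of the way toward `floor` (the within-session asymptote).
    Between sessions, effectiveness recovers toward 1 with time constant
    `tau_h` hours. All parameter values are hypothetical.
    """
    v = 1.0
    starts = []
    for _ in range(n_sessions):
        starts.append(v)
        for _ in range(presentations_per_session):
            v = floor + (v - floor) * (1.0 - decrement)
        # Spontaneous recovery during the intersession interval.
        v = 1.0 - (1.0 - v) * math.exp(-isi_h / tau_h)
    return starts


short_isi = session_start_values(10, 20, isi_h=24.0)
long_isi = session_start_values(10, 20, isi_h=72.0)
print([round(v, 2) for v in short_isi[:3]], [round(v, 2) for v in long_isi[:3]])
```

With these illustrative values, session-start effectiveness falls from 1 to an asymptote of roughly 0.52 with a 24 h interval and roughly 0.82 with a 72 h interval, the direction predicted by the second characteristic.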

Perhaps the strongest evidence for habituation is provided by tests of dishabituation and stimulus specificity. Such tests would convincingly rule out other explanations of the results, such as fatigue of the snout-poke response or within- and between-session variation in the subjects’ attention to the operant task. Dishabituation could easily be tested by presenting a tone after within-session responding has decreased to low asymptotic levels; habituation predicts that responding would then increase. Stimulus specificity could be tested in a similar way, except that a property of the VS itself would be changed. Several previous studies have varied the location and/or pattern (blinking) of the VS (Barnes and Baron 1961; Gomer and Jakubczak 1974; Harrington 1963), and all found that making the properties of the VS more variable increased its reinforcing effectiveness. Based on these results, changing similar properties of a previously habituated VS reinforcer seems likely to increase responding. Further studies that include tests of dishabituation and stimulus specificity are necessary before a strong inference can be made about the role of habituation in determining the reinforcing effectiveness of sensory reinforcers. A major strength of the habituation hypothesis of sensory reinforcement is that it makes strong, testable predictions.
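A dishabituation or stimulus-specificity test of the kind proposed above could be scored by comparing responding in the bins immediately before and after the manipulation. The sketch below assumes, hypothetically, that active snout pokes are recorded in consecutive 1 min bins and that the tone (or the change in the VS) occurs at a known bin boundary; the function name and default window size are illustrative. A positive score, meaning more responding after the manipulation than before, is the outcome habituation predicts.

```python
from statistics import mean


def dishabituation_score(counts_per_bin, change_bin, window=5):
    """Mean responses/bin after a manipulation minus mean responses/bin before it.

    counts_per_bin: active snout pokes in consecutive 1 min bins (hypothetical format).
    change_bin: index of the first bin after the tone or VS change.
    window: number of bins averaged on each side of change_bin.
    A positive score is the direction habituation predicts.
    """
    pre = counts_per_bin[max(0, change_bin - window):change_bin]
    post = counts_per_bin[change_bin:change_bin + window]
    if not pre or not post:
        raise ValueError("need at least one bin on each side of change_bin")
    return mean(post) - mean(pre)
```

Because within-session responding is already declining when the manipulation is made, a comparison session without the tone or VS change would be needed to attribute any positive score to the manipulation itself.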

4.4 Conclusions

The initial goal of the experiment described in this paper was to investigate informal observations that VI schedules seemed to provide better evidence for the reinforcing effectiveness of visual stimuli than a continuous (FR 1) schedule of reinforcement. The results confirmed that VI schedules produced more robust responding for visual stimuli and suggested that rapid habituation of reinforcer effectiveness may explain this effect. The data are consistent with the interpretation that habituation due to the rapid, predictable presentation of visual stimuli on the FR 1 and VI 1 min schedules caused a rapid loss of reinforcer effectiveness. In contrast, the slower, less predictable presentation of visual stimuli on the VI 6 min schedule resulted in slower habituation of reinforcer effectiveness and higher rates of responding. An important outcome of this study is that it provides good reason for further studies that (1) more directly test the hypothesis that habituation is an important determinant of the reinforcing effectiveness of visual stimuli and (2) systematically characterize the functional relationship between response rate and the rate of sensory reinforcement.
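The contrast between rapid presentation on the VI 1 min schedule and slower, more variable presentation on the VI 6 min schedule can be made concrete by generating VI intervals. The sketch below assumes intervals drawn from the Fleshler and Hoffman (1962) progression listed in the references, with a hypothetical list length of 12, and assumes the rat collects each VS the moment it becomes available, so the counts are ceilings rather than obtained values. The function names are illustrative.

```python
import math
import random


def fleshler_hoffman(mean_s, n=12):
    """Intervals (s) from the Fleshler and Hoffman (1962) progression:
    t_k = T * [1 + ln N + (N - k) ln(N - k) - (N - k + 1) ln(N - k + 1)],
    with 0 * ln 0 taken as 0. The list is ascending and averages T."""
    def xlnx(x):
        return x * math.log(x) if x > 0 else 0.0

    return [mean_s * (1 + math.log(n) + xlnx(n - k) - xlnx(n - k + 1))
            for k in range(1, n + 1)]


def presentations_in_session(mean_s, session_s=1800.0, n=12, seed=0):
    """Count VS presentations in one session, assuming (hypothetically) that the
    rat responds the instant each interval elapses, so obtained = programmed."""
    rng = random.Random(seed)
    intervals = fleshler_hoffman(mean_s, n)
    elapsed, count = 0.0, 0
    while True:
        rng.shuffle(intervals)  # each pass samples the list without replacement
        for t in intervals:
            elapsed += t
            if elapsed > session_s:
                return count
            count += 1


for vi_min in (1, 6):
    print(f"VI {vi_min} min: {presentations_in_session(vi_min * 60.0)} presentations")
```

Under this assumption a 30 min session yields at least 24 (typically about 30) presentations on VI 1 min but at most 9 (on average roughly 5) on VI 6 min, and because every VI 6 min interval is six times the corresponding VI 1 min interval, the timing of the next presentation is also more variable in absolute terms. On FR 1, by contrast, every response produces the VS, so presentation rate is limited only by the rat's responding.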

Highlights.

The reinforcing effectiveness of light onset rapidly habituates

Response rate was inversely related to rate of visual stimulus reinforcement

Sensory reinforcers habituate more rapidly than biologically important reinforcers

Acknowledgments

The initial experiments that led to the experiment described in this report were completed by Lisham Ashrafioun in partial fulfillment of his undergraduate honors thesis and master’s degree in the Department of Psychology at the State University of New York at Buffalo. We would like to acknowledge the help of Kathy Hausknecht and Linda Beyley in data collection. This work was supported by DA10588 and DA026600 awarded to Jerry B. Richards.

Abbreviations

- ANOVA: analysis of variance

- FR: fixed ratio

- Tukey HSD: Tukey honestly significant difference

- VI: variable-interval

- VS: visual stimulus

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Aoyama K, McSweeney FK. Habituation may contribute to within-session decreases in responding under high-rate schedules of reinforcement. Animal Learning & Behavior. 2001;29:79–91.

- Bardo MT, Donohew RL, Harrington NG. Psychobiology of novelty seeking and drug seeking behavior. Behav Brain Res. 1996;77:23–43. doi: 10.1016/0166-4328(95)00203-0.

- Barnes GW, Baron A. Stimulus complexity and sensory reinforcement. J Comp Physiol Psychol. 1961;54:466–9. doi: 10.1037/h0046708.

- Baum WM. Optimization and the matching law as accounts of instrumental behavior. Journal of the Experimental Analysis of Behavior. 1981;36:387–403. doi: 10.1901/jeab.1981.36-387.

- Berlyne DE. The arousal and satiation of perceptual curiosity in the rat. J Comp Physiol Psychol. 1955;48:238–46. doi: 10.1037/h0042968.

- Berlyne DE. The Reward-Value of Indifferent Stimulation. In: Tapp JT, editor. Reinforcement and Behavior. Academic Press; New York: 1969.

- Berlyne DE, Koenig ID, Hirota T. Novelty, arousal, and the reinforcement of diversive exploration in the rat. Journal of Comparative and Physiological Psychology. 1966;62:222–226. doi: 10.1037/h0023681.

- Bevins RA. Novelty seeking and reward: Implications for the study of high-risk behaviors. Current Directions in Psychological Science. 2001;10:189–193.

- Broster BS, Rankin CH. Effects of changing interstimulus interval during habituation in Caenorhabditis elegans. Behavioral Neuroscience. 1994;108:1019–1029. doi: 10.1037//0735-7044.108.6.1019.

- Caggiula AR, Donny EC, Chaudhri N, Perkins KA, Evans-Martin FF, Sved AF. Importance of nonpharmacological factors in nicotine self-administration. Physiol Behav. 2002;77:683–7. doi: 10.1016/s0031-9384(02)00918-6.

- Caggiula AR, Donny EC, Palmatier MI, Liu X, Chaudhri N, Sved AF. The role of nicotine in smoking: a dual-reinforcement model. Nebr Symp Motiv. 2009;55:91–109. doi: 10.1007/978-0-387-78748-0_6.

- Caggiula AR, Donny EC, White AR, Chaudhri N, Booth S, Gharib MA, Hoffman A, Perkins KA, Sved AF. Cue dependency of nicotine self-administration and smoking. Pharmacol Biochem Behav. 2001;70:515–30. doi: 10.1016/s0091-3057(01)00676-1.

- Caggiula AR, Donny EC, White AR, Chaudhri N, Booth S, Gharib MA, Hoffman A, Perkins KA, Sved AF. Environmental stimuli promote the acquisition of nicotine self-administration in rats. Psychopharmacology (Berl) 2002;163:230–7. doi: 10.1007/s00213-002-1156-5.

- de Villiers PA, Herrnstein RJ. Toward a law of response strength. Psychological Bulletin. 1976;83:1131–1153.

- Eisenberger R. Explanation of rewards that do not reduce tissue needs. Psychol Bull. 1972;77:319–39. doi: 10.1037/h0032483.

- Fleshler M, Hoffman HS. A progression for generating variable-interval schedules. J Exp Anal Behav. 1962;5:529–530. doi: 10.1901/jeab.1962.5-529.

- Forgays DG, Levin H. Learning as a function of change of sensory stimulation: Distributed vs. massed trials. Journal of Comparative and Physiological Psychology. 1961;54:59–62. doi: 10.1037/h0043396.

- Fox SS. Self-maintained sensory input and sensory deprivation in monkeys: A behavioral and neuropharmacological study. Journal of Comparative and Physiological Psychology. 1962;55:438–444. doi: 10.1037/h0041679.

- Gancarz AM, Ashrafioun L, San George MA, Hausknecht KA, Hawk LW, Richards JB. Exploratory studies in sensory reinforcement in male rats: Effects of Methamphetamine. Exp Clin Psychopharmacol. 2011;20:16–27. doi: 10.1037/a0025701.

- Gancarz AM, Robble MA, Kausch MA, Richards JB. Association between locomotor response to novelty and light reinforcement: Sensory reinforcement as an animal model of sensation seeking. Behavioural Brain Research. 2012;230:380–388. doi: 10.1016/j.bbr.2012.02.028.

- Gancarz AM, San George MA, Ashrafioun L, Richards JB. Locomotor activity in a novel environment predicts both responding for a visual stimulus and self-administration of a low dose of methamphetamine in rats. Behav Processes. 2011;86:295–304. doi: 10.1016/j.beproc.2010.12.013.

- Gomer FE, Jakubczak LF. Dose-dependent selective facilitation of response-contingent light-onset behavior by d-amphetamine. Psychopharmacologia. 1974;34(3):199–208. doi: 10.1007/BF00421961.

- Harrington GM. Stimulus intensity, stimulus satiation, and optimum stimulation with light-contingent bar-press. Psychological Reports. 1963;13:107–111.

- Herrnstein RJ. Formal properties of the matching law. Journal of the Experimental Analysis of Behavior. 1974;21:159–164. doi: 10.1901/jeab.1974.21-159.

- Heyman GM. A parametric evaluation of the hedonic and motoric effects of drugs: Pimozide and amphetamine. Journal of the Experimental Analysis of Behavior. 1983;40:113–122. doi: 10.1901/jeab.1983.40-113.

- Kish GB. Studies of Sensory Reinforcement. In: Honig W, editor. Operant Behavior: Areas of research and application. Appleton-Century-Crofts; New York: 1966.

- Kish GB, Baron A. Satiation of sensory reinforcement. Journal of Comparative and Physiological Psychology. 1962;55:1007–1010. doi: 10.1037/h0040362.

- Lloyd DR, Kausch MA, Gancarz AM, Beyley LJ, Richards JB. Effects of novelty and methamphetamine on conditioned and sensory reinforcement. Behav Brain Res. doi: 10.1016/j.bbr.2012.07.012. Submitted.

- Lockard RB. Some Effects of Light Upon the Behavior of Rodents. Psychol Bull. 1963;60:509–29. doi: 10.1037/h0046113.

- McCall RB. Initial-consequent-change surface in light-contingent bar pressing. Journal of Comparative and Physiological Psychology. 1966;62:35–42. doi: 10.1037/h0023490.

- McSweeney FK, Murphy ES. Sensitization and habituation regulate reinforcer effectiveness. Neurobiol Learn Mem. 2009;92:189–98. doi: 10.1016/j.nlm.2008.07.002.

- Murphy ES, McSweeney FK, Kowal BP. Motivation to consume alcohol in rats: The role of habituation. In: O’Neal PW, editor. Motivation of Health Behavior. Nova Science; Hauppauge, NY: 2007.

- Murphy ES, McSweeney FK, Kowal BP, McDonald J, Wiediger RV. Spontaneous recovery and dishabituation of ethanol-reinforced responding in alcohol-preferring rats. Experimental and Clinical Psychopharmacology. 2006;14:471–482. doi: 10.1037/1064-1297.14.4.471.

- Palmatier MI, Matteson GL, Black JJ, Liu X, Caggiula AR, Craven L, Donny EC, Sved AF. The reinforcement enhancing effects of nicotine depend on the incentive value of non-drug reinforcers and increase with repeated drug injections. Drug Alcohol Depend. 2007;89:52–9. doi: 10.1016/j.drugalcdep.2006.11.020.

- Premack D, Collier G. Analysis of nonreinforcement variables affecting response probability. Psychological Monographs: General and Applied. 1962;76:1–20.

- Raiff BR, Dallery J. Responding maintained by primary reinforcing visual stimuli is increased by nicotine administration in rats. Behav Processes. 2009;82:95–9. doi: 10.1016/j.beproc.2009.03.013.

- Rankin CH, Abrams T, Barry RJ, Bhatnagar S, Clayton DF, Colombo J, Coppola G, Geyer MA, Glanzman DL, Marsland S, McSweeney FK, Wilson DA, Wu CF, Thompson RF. Habituation revisited: an updated and revised description of the behavioral characteristics of habituation. Neurobiol Learn Mem. 2009;92:135–8. doi: 10.1016/j.nlm.2008.09.012.

- Richards JB, Mitchell SH, de Wit H, Seiden LS. Determination of discount functions in rats with an adjusting-amount procedure. J Exp Anal Behav. 1997;67:353–66. doi: 10.1901/jeab.1997.67-353.

- Roberts CL, Marx MH, Collier G. Light onset and light offset as reinforcers for the albino rat. Journal of Comparative and Physiological Psychology. 1958;51:575–579. doi: 10.1037/h0042974.

- Sasaki A, Wetsel WC, Rodriguiz RM, Meck WH. Timing of the acoustic startle response in mice: Habituation and dishabituation as a function of the interstimulus interval. International Journal of Comparative Psychology. 2001;14:258–268.

- Staddon JE. Operant behavior as adaptation to constraint. Journal of Experimental Psychology: General. 1979;108:48–67.

- Tapp JT. Activity, Reactivity, and the Behavior-Directing Properties of Stimuli. In: Tapp JT, editor. Reinforcement and Behavior. Academic Press; New York: 1969.

- Tapp JT, Simpson LL. Motivational and response factors as determinants of the reinforcing value of light onset. Journal of Comparative and Physiological Psychology. 1966;62:143–146. doi: 10.1037/h0023501.