Abstract

Human hearing depends on a combination of cognitive and sensory processes that function by means of an interactive circuitry of bottom-up and top-down neural pathways, extending from the cochlea to the cortex and back again. Given that similar neural pathways are recruited to process sounds related to both music and language, it is not surprising that the auditory expertise gained over years of consistent music practice fine-tunes the human auditory system in a comprehensive fashion, strengthening neurobiological and cognitive underpinnings of both music and speech processing. In this review we argue not only that common neural mechanisms for speech and music exist, but that experience in music leads to enhancements in sensory and cognitive contributors to speech processing. Of specific interest is the potential for music training to bolster neural mechanisms that undergird language-related skills, such as reading and hearing speech in background noise, which are critical to academic progress, emotional health, and vocational success.

Keywords: musicians, language, attention, memory, brain

Debates concerning music training’s impact on general cognitive and perceptual abilities are easily sparked, partly due to the widespread popular interest that has stemmed from poorly controlled research. Effects of musical experience have been proposed through comparing musician and nonmusician groups without first ensuring that these groups do not differ according to overarching factors such as IQ, socioeconomic status, and level of education, to name a few (Schellenberg, 2005). Even amidst this lack of scientific control, compelling evidence has arisen to support the power of music practice to shape basic sensory and cognitive auditory function (Kraus & Chandrasekaran, 2010). In addition to contributing to great amusement and well-being, practicing music does, in fact, appear to make you smarter – at least, smarter when it comes to how you hear. In this review, we provide neurobiological evidence that music training shapes human auditory function not only as it relates to music, but also as it relates to speech and other language-related abilities, such as reading. This evidence is presented in the context of discussions of common neural mechanisms for processing speech and music.

Music Training Makes us Better Listeners: No Easy Feat

Although cortical specializations for music and speech have each been established (Abrams et al., 2010; Brown, Martinez, & Parsons, 2006; Rogalsky, Rong, Saberi, & Hickok, 2011; Zatorre, Belin, & Penhune, 2002), there is no doubt that the human brain also recruits similar cortical mechanisms for processing sound in both domains (Koelsch et al., 2002; Patel, 2003; Rogalsky et al., 2011; Zatorre & Gandour, 2008). Research has substantiated direct links between musicianship and human sound processing, both within and outside of the domain of music. This work suggests that musicians are “better listeners” than nonmusicians with regard to how they perceive and neurally process sound in any domain. From a neurobiological standpoint, that music training has the power to make people better listeners is no simple feat. Here, we review evidence for musicians’ auditory processing enhancements in the context of a brief description of their underlying neuronal mechanisms, emphasizing the potential of music training to strengthen cognitive control over sensory function.

Perceptually, musicians demonstrate heightened auditory acuity, as evidenced by their enhanced ability (compared to nonmusicians) to discriminate pitch discrepancies and temporal gaps between sounds (Kishon-Rabin, Amir, Vexler, & Zaltz, 2001; Micheyl, Delhommeau, Perrot, & Oxenham, 2006; Parbery-Clark, Strait, Anderson, Hittner, & Kraus, 2011; Strait, Kraus, Parbery-Clark, & Ashley, 2010). Musicians’ fine-tuned auditory perception may account for their increased sensitivity to the pitch and temporal components of language and music and their enhanced cortical evoked potentials to deviations in the pitch and meter of a sound stream (Chobert, Marie, Francois, Schön, & Besson, 2011; Marie, Magne, & Besson, 2010; Marques, Moreno, Castro, & Besson, 2007; Schön, Magne, & Besson, 2004; Tervaniemi, Ilvonen, Karma, Alho, & Naatanen, 1997; van Zuijen, Sussman, Winkler, Naatanen, & Tervaniemi, 2005). Even subcortically, musicians demonstrate faster and more robust auditory brainstem responses to music (Lee, Skoe, Kraus, & Ashley, 2009; Musacchia, Sams, Skoe, & Kraus, 2007), speech (Bidelman, Gandour, & Krishnan, 2009; Bidelman & Krishnan, 2010; Musacchia et al., 2007; Parbery-Clark, Skoe, & Kraus, 2009; Wong, Skoe, Russo, Dees, & Kraus, 2007), and emotional communication sounds (Strait, Kraus, Skoe, & Ashley, 2009).

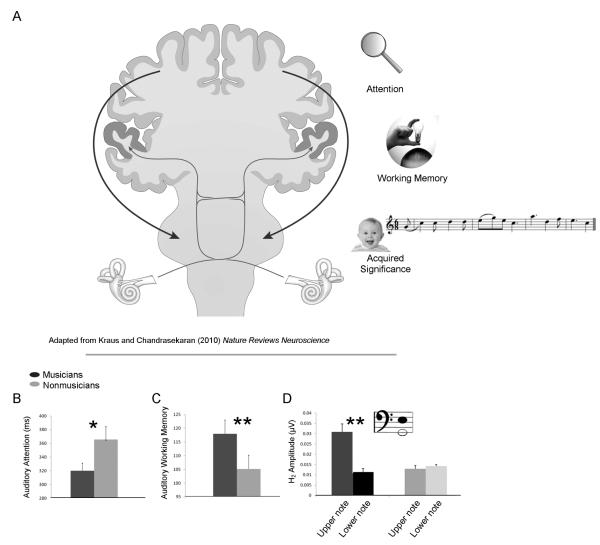

Musicians’ subcortical enhancements do not reflect a simple “volume knob” effect, resulting in overall larger and faster responses. Rather, musicians demonstrate selective enhancements for the most behaviorally relevant aspects of sound (e.g., the upper note of a musical interval, which often carries the melody; Figure 1d), a finding that is consistent with animal work indicating selective neural tuning to behaviorally relevant stimuli (Fritz, Elhilali, & Shamma, 2007; Suga, Xiao, Ma, & Ji, 2002; Woolley, Gill, & Theunissen, 2006). In fact, the ability of the nervous system to modify auditory function based on survival-promoting interactions with its environment is a fundamental operating principle seen throughout the animal kingdom. Selective tuning is likely dependent, at least in part, on cortico-subcortico reciprocity facilitated by activation of the neuromodulatory system (notably, acetylcholine release from the basal forebrain; Ji & Suga, 2009; Suga & Ma, 2003).

FIGURE 1.

The human auditory system is interconnected by a complex circuitry of bottom-up (thin gray lines) and top-down (thick black lines) neural fibers that extend from the cochlea to the cortex and back again (A). Together, these pathways facilitate the modulation of neural function according to parameters that include directed attention to particular sounds or sound features, recent experiences being held in temporary memory storage sites, and a sound or sound pattern’s acquired behavioral relevance, such as through associations gained with training. Evidence suggests that music training refines human auditory processing in each of these domains. With regard to attention (B), adult musicians demonstrate faster reaction times during a sustained attention task than nonmusicians. Similarly, musicians demonstrate increased auditory working memory capacity compared to nonmusicians (C), which is thought to contribute to musicians’ enhanced speech-in-noise perception (see Figure 2). Music training also facilitates the subcortical differentiation of the upper and lower notes of musical intervals (D), with musicians demonstrating enhanced representations of the upper note of a musical interval compared to the lower note. Musically untrained participants, by contrast, do not show selective subcortical enhancements to either tone. *p < .05, **p < .01.

Even beyond subcortical and cortical functional enhancements, music training may shape auditory function in structures as peripheral as the cochlea, with musicians demonstrating a greater degree of efferent control over outer hair cell activity along the basilar membrane than nonmusicians (Brashears, Morlet, Berlin, & Hood, 2003; Perrot, Micheyl, Khalfa, & Collet, 1999). Such comprehensive perceptual and neural enhancements may be driven, at least in part, by strengthened cognitive control over basic auditory processing, as engendered by auditory attention (Strait et al., 2010; Tervaniemi et al., 2009) and working memory (Ho, Cheung, & Chan, 2003; Pallesen et al., 2010; Parbery-Clark, Skoe, Lam, & Kraus, 2009; Parbery-Clark et al., 2011) – two auditory cognitive skills that are enhanced in musicians (Figure 1b, c). In fact, it has been repeatedly demonstrated that how we hear (e.g., how well we are able to hear speech in noisy environments, discriminate frequencies, or separate rapidly occurring sounds temporally) cannot be predicted by hearing thresholds alone (He, Dubno, & Mills, 1998; Killion & Niquette, 2000). Auditory acuity can be predicted by prefrontal cortical activation (Wong, Ettlinger, Sheppard, Gunasekera, & Dhar, 2010) and is shaped not only by cognitive control over auditory processing (Conway, Cowan, & Bunting, 2001; Hafter, Sarampalis, & Loui, 2008; Strait et al., 2010) but also by the languages we speak (Bent, Bradlow, & Wright, 2006; Krishnan, Gandour, & Bidelman, 2010; Krishnan, Xu, Gandour, & Cariani, 2005; So & Best, 2010; Van Engen & Bradlow, 2007), the activities we perform (Marques et al., 2007; Parbery-Clark, Skoe, Lam, & Kraus, 2009; Schön et al., 2004), and auditory training (Alain, Campeanu, & Tremblay, 2010; Song, Skoe, Wong, & Kraus, 2008; Tremblay, Shahin, Picton, & Ross, 2009; Wright & Zhang, 2009).

Hearing does not solely rely on an auditory relay system that passes sound from the cochlea to the cortex. As described above, it is influenced by cognitive function, behavioral significance, and experience. Such influences are facilitated by the human auditory system’s complex symphony of afferent (i.e., bottom-up) and efferent (i.e., top-down) neuronal pathways that interact to shape how we experience our auditory world (Figure 1a). That the brain responds to sound in a bottom-up manner is intuitive: sound waves are converted into mechanical vibrations followed by their transduction along the basilar membrane into a neural code. This neural code then gets passed along in quasi-relay fashion, up the auditory pathway from the cochlea to the cortex. A more holistic and perhaps less intuitive view of the human auditory system must consider how auditory function is guided both by local transformation of an incoming signal at different processing sites and by efferent neural activity. The human top-down auditory system is both vast and intricate, matching the volume of ascending fibers (Winer, 2005) and demonstrating more complex inter-connectivity with subcortical processing sites than other sensory domains, such as vision. This is due to the sheer number of innervated nuclei between the cochlea and the cortex and the alternative pathways by which signals can travel from one nucleus to the next. Furthermore, efferent neuronal paths originate not only in the auditory cortex but also in non-auditory areas such as the limbic system and cognitive centers of memory and attention. By means of these pathways, the efferent network changes basic response properties at the aforementioned auditory relay sites through top-down modulation.

A central contribution of this top-down system to auditory function is to improve sound processing at low-level sensory centers by tuning them to relevant auditory input (Bajo, Nodal, Moore, & King, 2010; Suga, 2008). The strength of the efferent system predicts how well humans hear in challenging listening environments (e.g., in background noise), and individuals with stronger efferent control over auditory processing are more apt to improve at an auditory task with brief experience (de Boer & Thornton, 2008). This bodes well for musicians, who, we argue, demonstrate enhanced top-down control over auditory processing (Kraus & Chandrasekaran, 2010; Strait et al., 2010). Although individuals who pursue music training may innately possess stronger efferent auditory control mechanisms than those who do not, we suggest that auditory advantages in musicians stem from focused and consistent interactive experience with sound. We base this argument on correlations between years of music practice and auditory task performance (Kishon-Rabin et al., 2001; Parbery-Clark, Skoe, Lam, & Kraus, 2009; Strait et al., 2010).

A Spotlight on Attention and Memory for Sound

Even in the quietest of rooms, our senses are inundated with a barrage of sound. From air ventilation systems to the hum of traffic, the human auditory system must adapt to a variety of listening conditions and home in on signals of interest. What we hear is determined by how well we listen and by our capacity to retrieve what we’ve just heard from working memory, directing our attention to the input of highest interest while monitoring our surroundings for changes that require immediate attention. Focusing on a single auditory stream of interest becomes particularly challenging in the context of increasing noise levels, such as in the presence of multiple ongoing conversations (a cocktail party) or amidst environmental noise (a busy street). The adaptation of the human auditory system to a variety of listening environments is both impressive and essential for everyday human communication and auditory function. Deficits in auditory working memory and attention have been associated with a wide range of functional impairments such as auditory processing disorders (Moore, Ferguson, Edmondson-Jones, Ratib, & Riley, 2010), specific language impairment (Montgomery, 2002; Stevens, Sanders, & Neville, 2006) and developmental dyslexia (Facoetti et al., 2003; Jeffries & Everatt, 2004). In Principles of Psychology, William James asserts that “an education which should improve [attention] would be the education par excellence” (1890, p. 424). Our work and the work of others indicate that music training provides a mechanism for that very education in the auditory domain, enhancing our ability to direct our attentional spotlight, to remember what was recently heard, and to separate a target sound stream from other auditory input – not just for music but for other auditory domains as well.

Findings from our laboratory have demonstrated enhanced auditory attention and working memory in musicians (Figures 1b and c; Parbery-Clark, Skoe, Lam, & Kraus, 2009; Parbery-Clark et al., 2011; Strait et al., 2010). Increased auditory working memory in musicians has also been observed by others (Chan, Ho, & Cheung, 1998). The extent to which music training engenders an enhancement of general versus domain-specific (i.e., auditory-specific) working memory mechanisms is of interest. Whereas some studies report memory enhancements among musicians only for auditory tasks (Ho et al., 2003), others have observed carryover effects of music training in the visual domain (Jakobson, Lewycky, Kilgour, & Stoesz, 2008; see also Schellenberg, 2009). Through testing adult musicians and nonmusicians on auditory and visual working memory tasks, we found that adult musicians demonstrate enhanced auditory but not visual working memory compared to nonmusicians (Parbery-Clark, Skoe, Lam, & Kraus, 2009; Parbery-Clark et al., 2011). This finding could not be attributed to a non-verbal IQ advantage in musicians. We have reported similar auditory-specific enhancements in musicians with regard to attention in that musicians demonstrate faster reaction times over the course of a sustained auditory attention task than nonmusicians (Figure 1b). They perform no better than nonmusicians, however, on an analogous visual attention task (Strait et al., 2010).

Although we have not demonstrated visual working memory or visual attention enhancements in musicians compared to nonmusicians, some degree of visual cognitive enhancement would not be surprising given the multisensory nature of music practice and performance. In fact, our work and the work of others have revealed audiovisual perceptual (Petrini et al., 2009) and neural processing enhancements (Musacchia et al., 2007; Musacchia, Strait, & Kraus, 2008) in musically trained adults. In considering the domain specificity of working memory and attention enhancements in musicians, it is possible that auditory and visual working memory enhancements are each a matter of degree. Although music training may strengthen both processes, auditory cognitive abilities might be strengthened to a greater extent. Moreover, audiovisual processing enhancements in musicians may indicate that music training strengthens the perception and neural encoding of auditory and visual information, particularly when they co-occur. Given that memory and attention are multifaceted cognitive constructs with numerous subdivisions (e.g., attention can be sustained, phasic, selective, divided, or focused), considerable work is needed to tease apart the nature of music training’s impact on both cognitive functions and to determine potential carryover effects in other sensory domains.

How musicians perform on tasks that place a premium on auditory attention motivates hypotheses concerning the impact of music training on brain mechanisms that underlie auditory attention and working memory. During such tasks (e.g., when subjects are instructed to listen for certain target tones or timbres), musicians demonstrate heightened recruitment of cortical areas associated with sustained auditory attention and working memory (Baumann et al., 2007; Baumann, Meyer, & Jäncke, 2008; Gaab & Schlaug, 2003; Haslinger et al., 2005; Pallesen et al., 2010; Stewart et al., 2003), such as the superior parietal cortex, as well as more consistent activation of prefrontal control regions (Strait & Kraus, 2011). Indications that music training increases contributions of the superior parietal and prefrontal cortices to active auditory processing and their roles in sustaining auditory attention and working memory may support the hypothesis that music training tunes the brain’s auditory cognitive networks for cross-domain auditory processing.

Not surprisingly, cortical networks invoked during attention to music are similar to those that underlie the activation of attention in other auditory domains, such as language. In addition to primary auditory areas (the superior temporal gyrus), these sites include the frontoparietal attention and working memory networks, which comprise the intraparietal sulcus, supplementary and presupplementary motor areas, and the precentral gyrus (Janata, Tillmann, & Bharucha, 2002). These findings corroborate previous results suggesting that a combination of modality-specific (e.g., superior temporal gyrus) and general attention and working memory centers (e.g., frontoparietal cortex) contribute to sustained auditory attention (Petkov et al., 2004; Zatorre, Mondor, & Evans, 1999). If music training increases musicians’ neural capacity for directing and sustaining auditory attention, music may prove to be the holy grail of auditory training, providing an avenue for the prevention, habilitation, and remediation of a wide range of auditory processing deficits.

Enhanced Processing of Speech in Noise in Musicians

Attention and working memory: Key ingredients

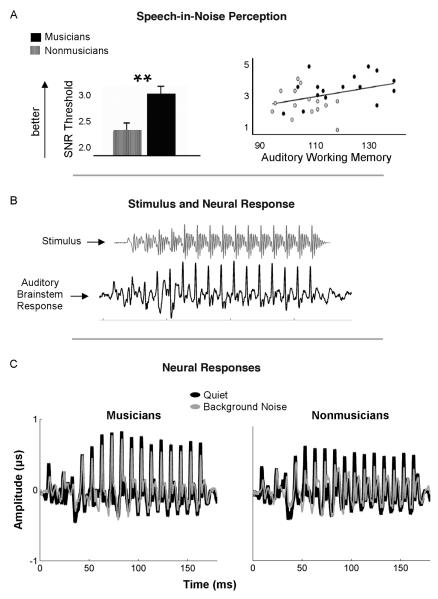

Given the frequency with which the human auditory system must encode signals of interest in challenging listening environments, the ability of the nervous system to process a target signal and suppress competing noise is essential for everyday human communication and auditory function. As noted, auditory attention and auditory working memory contribute to the ability to focus one’s auditory spotlight. Our laboratory has demonstrated associations between auditory attention/working memory performance and the ability to perceive speech in background noise (Figure 2a; Parbery-Clark, Skoe, Lam, & Kraus, 2009; Parbery-Clark et al., 2011; Strait & Kraus, 2011; see also Heinrich, Schneider, & Craik, 2008). Listening to speech in increasing levels of background noise requires augmented attentional resources, resulting in a decreased buffer capacity for auditory working memory storage and making quick recall of an entire sentence more difficult. Therefore, the greater one’s auditory attention and working memory, the better one’s ability to perceive speech in noise. Because of musicians’ enhanced auditory cognitive skills and their vast experience attending to and remembering distinct elements in complex soundscapes, we were not surprised to find that musicians demonstrate better perception of speech in background noise than nonmusicians. Specifically, musicians demonstrate an enhanced ability to repeat speech presented amidst louder levels of background noise (Figure 2a; Parbery-Clark, Skoe, Lam, & Kraus, 2009). That musicians’ speech-in-noise perceptual enhancement persists into the later decades of life is particularly relevant for older adults, who experience difficulty hearing speech in noise due to aging (Parbery-Clark et al., 2011; Zendel & Alain, 2011).

FIGURE 2.

Compared to nonmusicians, musicians’ speech processing is more resistant to the degradative effects of background noise. For example, musicians are better able to repeat sentences correctly when they are presented in noise at lower signal-to-noise ratios (A, left panel); this benefit may be partially driven by enhanced auditory cognitive abilities (A, right panel). Musicians also demonstrate decreased neural response degradation by background noise (C), as revealed in musicians’ and nonmusicians’ auditory brainstem responses (ABRs) to the speech sound /da/ with and without background noise. Because the ABR physically resembles the acoustic properties of incoming sounds, the elicited ABR waveform in each subject (B, lower waveform) resembles the waveform of the evoking stimulus (B, upper waveform). Although both musicians and nonmusicians demonstrate robust neural responses to the speech sound when presented in quiet, nonmusicians’ responses are particularly degraded by the addition of background noise (C). **p < .01.
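The signal-to-noise ratio (SNR) manipulation referred to in this caption can be illustrated with a minimal Python sketch that scales a noise track so that a target and the noise are mixed at a chosen SNR in decibels. The sine-wave stand-in for speech, the Gaussian noise, the sampling rate, and the function names `rms` and `mix_at_snr` are illustrative assumptions only; they are not the stimuli or procedures used in the studies cited here.

```python
import math
import random

def rms(samples):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(v * v for v in samples) / len(samples))

def mix_at_snr(signal, noise, snr_db):
    """Scale `noise` so that rms(signal)/rms(noise) matches `snr_db`, then mix.

    Returns the mixture and the scaled noise (hypothetical helper).
    """
    gain = rms(signal) / (rms(noise) * 10 ** (snr_db / 20))
    scaled_noise = [gain * v for v in noise]
    mixture = [s + n for s, n in zip(signal, scaled_noise)]
    return mixture, scaled_noise

random.seed(0)
FS = 10_000  # assumed sampling rate in Hz
signal = [math.sin(2 * math.pi * 100 * i / FS) for i in range(1000)]  # stand-in for speech
noise = [random.gauss(0.0, 1.0) for _ in range(1000)]                 # stand-in for babble

# A negative SNR means the noise is more intense than the target.
mixture, scaled_noise = mix_at_snr(signal, noise, snr_db=-3.0)
achieved_snr_db = 20 * math.log10(rms(signal) / rms(scaled_noise))
```

Lower (more negative) SNR values correspond to the harder listening conditions described above, in which the noise is more intense relative to the target.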

Neurobiological evidence

Neurobiological underpinnings of musicians’ enhanced perception of speech in noise have been documented in subcortical auditory processing. The human auditory brainstem response (ABR) provides a window into how complex sounds such as speech or music are transcribed into a neural code by the brain. Because ABRs preserve temporal and spectral components of evoking stimuli with exquisite temporal precision (on the order of fractions of a millisecond, as shown in Figure 3; see color plate section), they have proven useful for quantifying impairments and enhancements in human auditory processing, including enhancements in musicians. Because auditory brainstem neurons can phase lock to the frequency components of incoming sound up to ~2,000 Hz, auditory brainstem responses physically resemble the acoustic waveforms of evoking stimuli (see Figure 2b; Chandrasekaran & Kraus, 2010a). Subcortical encoding of the temporal features of incoming sound can be assessed by measuring the timing (i.e., latencies and phase) of neural response peaks that correspond to a sound’s onset, offset, rapid spectrotemporal changes, or sudden changes in level that occur with mid-stimulus amplitude bursts. The neural encoding of spectral components of incoming sound, such as its fundamental frequency and harmonics, and how these spectral components change over time can be assessed by measuring their representation within the ABR itself (via Fourier analysis, autocorrelation, or cross-correlations between the time-frequency components of the stimulus versus those in the ABR; Skoe & Kraus, 2010; Skoe, Nicol, & Kraus, 2011). Compared to quiet, ABRs to speech in background noise are slower and less robust (Burkard & Sims, 2002; Russo, Nicol, Musacchia, & Kraus, 2004). Notably, the amount of noise-induced degradation observed in ABRs is associated with behavior, with good speech-in-noise perceivers demonstrating less of a timing delay and more robust spectral encoding in noise (Anderson, Chandrasekaran, Skoe, & Kraus, 2010; Parbery-Clark, Skoe, & Kraus, 2009; Song, Skoe, Banai, & Kraus, 2010).
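One of the timing measures mentioned above, stimulus-to-response cross-correlation, can be sketched in a few lines of Python. The example below estimates the delay of a simulated response by finding the lag at which it best matches the stimulus. Everything here is an assumption made for illustration: the synthetic two-component stimulus, the 8 ms delay, the sampling rate, and the helper name `best_lag`. It is a toy version of the general technique, not the analysis pipeline of the cited studies.

```python
import math

def best_lag(stimulus, response, max_lag):
    """Return the lag (in samples) that maximizes the stimulus-response cross-correlation."""
    n = len(stimulus)
    best, best_score = 0, float("-inf")
    for lag in range(max_lag + 1):
        segment = response[lag:lag + n]
        score = sum(s * r for s, r in zip(stimulus, segment))
        if score > best_score:
            best, best_score = lag, score
    return best

FS = 10_000      # assumed sampling rate in Hz
N = 400          # 40 ms synthetic stimulus
TRUE_DELAY = 80  # simulated neural delay: 80 samples = 8 ms

# Windowed 100 Hz tone plus one harmonic; the window makes the match unambiguous.
stimulus = [
    (0.5 - 0.5 * math.cos(2 * math.pi * i / (N - 1)))
    * (math.sin(2 * math.pi * 100 * i / FS) + 0.5 * math.sin(2 * math.pi * 200 * i / FS))
    for i in range(N)
]

# Simulated "response": an attenuated, delayed copy of the stimulus.
response = [0.0] * TRUE_DELAY + [0.5 * x for x in stimulus] + [0.0] * 120

lag = best_lag(stimulus, response, max_lag=150)
latency_ms = 1000 * lag / FS
```

In this sketch a longer estimated lag would correspond to a more delayed response, which is the kind of timing difference that background noise is reported to produce.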

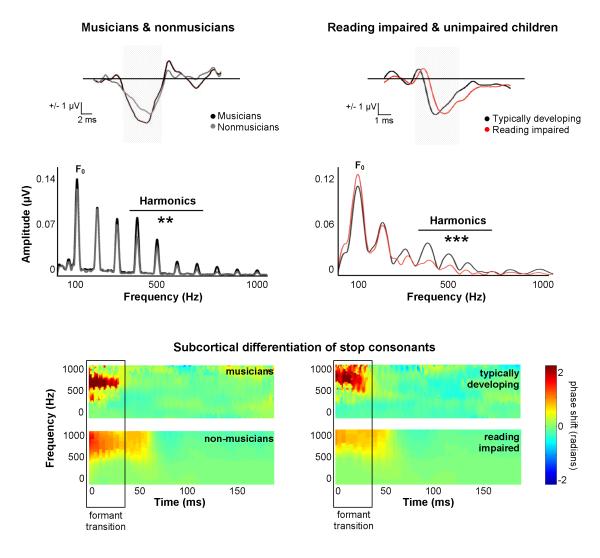

Figure 3. Core neural mechanisms that are deficient in poor readers are enhanced in musicians.

We recorded auditory brainstem responses (ABRs) to the speech sound /da/ in children with a wide range of reading ability and in musician and nonmusician adults. The timing of individual responses was analyzed by measuring the latencies (i.e., the timing) of individual neural response peaks relative to the presentation of the stimulus. The neural encoding of the frequency components of speech was also quantified. The neural encoding of spectral components of an evoking stimulus can be assessed by applying a fast Fourier transform to the brainstem response, facilitating the analysis of auditory brainstem responses in the spectral domain (lower panel). Auditory brainstem responses represent the pitch (i.e., fundamental frequency) and higher harmonics (H2-H10 plotted here) of evoking stimuli. With regard to timing, musicians demonstrate faster ABRs to speech than nonmusicians (A, upper panel). Musicians also demonstrate enhanced neural encoding of the harmonics of speech compared to nonmusicians (H4–H7 in the quiet condition; A, lower panel) (F(1,29)=6.63, p<0.01). In contrast, poor readers demonstrate delayed responses (B, upper panel) as well as decreased neural encoding of the harmonics of speech when compared with typically developing children (B, lower panel) (F(1,40)=14.67, p<0.001).
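The spectral analysis described in this caption can be illustrated with a short Python sketch that measures the amplitude of each harmonic in a response. To stay within the standard library, it projects the signal onto each harmonic frequency with a direct discrete Fourier sum rather than running a full fast Fourier transform. The two synthetic "responses", the 100 Hz fundamental, the harmonic amplitudes, and the function names are hypothetical values chosen for illustration; they are not data from the recordings described here.

```python
import cmath
import math

def amplitude_at(signal, freq_hz, fs):
    """Amplitude of the `freq_hz` component, via a single-frequency Fourier sum."""
    n = len(signal)
    acc = sum(x * cmath.exp(-2j * math.pi * freq_hz * i / fs) for i, x in enumerate(signal))
    return 2 * abs(acc) / n

def synthesize(harmonic_amps, f0, fs, n):
    """Sum of sinusoids at f0, 2*f0, ... with the given amplitudes (toy response)."""
    return [
        sum(a * math.sin(2 * math.pi * f0 * (k + 1) * i / fs) for k, a in enumerate(harmonic_amps))
        for i in range(n)
    ]

def mean_h4_h7(harmonic_amps):
    """Average amplitude across harmonics H4 through H7."""
    return sum(harmonic_amps[3:7]) / 4

FS, F0, N = 10_000, 100, 1000  # assumed sampling rate, fundamental, and length (0.1 s)

# Hypothetical amplitudes for H1..H10; response B has stronger H4-H7 than response A.
amps_a = [1.0, 0.5, 0.4, 0.3, 0.3, 0.2, 0.2, 0.1, 0.1, 0.1]
amps_b = [1.0, 0.5, 0.4, 0.6, 0.6, 0.4, 0.4, 0.1, 0.1, 0.1]

measured_a = [amplitude_at(synthesize(amps_a, F0, FS, N), F0 * (k + 1), FS) for k in range(10)]
measured_b = [amplitude_at(synthesize(amps_b, F0, FS, N), F0 * (k + 1), FS) for k in range(10)]

mean_a = mean_h4_h7(measured_a)
mean_b = mean_h4_h7(measured_b)
```

Because the analysis window here contains a whole number of cycles of every harmonic, the measured amplitudes recover the synthesized ones; with real recordings, windowing and noise would make the estimates approximate.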

Compared to nonmusicians, musicians demonstrate decreased neural degradation to a speech stimulus (/da/) presented in background noise. Less neural degradation is seen in musicians’ responses in both temporal and spectral dimensions (Figure 2c). Related results have been reported by Bidelman and Krishnan (2010) in response to reverberated speech, with reverberation similarly complicating accurate speech perception and degrading speech-evoked ABRs. Musicians’ ABRs are more resistant to the degradative effects of stimulus reverberation on the subcortical encoding of speech, which points to their heightened ability to overcome challenging listening environments. That the degree of musicians’ speech-in-noise enhancement correlates with their duration of music training suggests that music training improves the ability of sensory systems to encode sound in challenging listening environments (Parbery-Clark, Skoe, Lam, & Kraus, 2009).

A wealth of neurobiological studies have used correlational analyses to conclude that functional differences between the brains of musicians and nonmusicians are a consequence, at least in part, of music practice. In addition to predicting the perception of speech in noise, duration of training and its age of onset predict subcortical responses to speech, music, and emotional communication sounds (Musacchia et al., 2007; Strait et al., 2009; Wong et al., 2007), cortical structure (Gaser & Schlaug, 2003; Hutchinson, Lee, Gaab, & Schlaug, 2003) and cortical function (Elbert, Pantev, Wienbruch, Rockstroh, & Taub, 1995; Ohnishi et al., 2001; Pantev et al., 1998; Strait & Kraus, 2011; Trainor, Desjardins, & Rock, 1999). Although causality cannot be inferred from correlation, cortical (Margulis, Mlsna, Uppunda, Parrish, & Wong, 2009; Pantev, Roberts, Schulz, Engelien, & Ross, 2001; Shahin, Bosnyak, Trainor, & Roberts, 2003; Shahin, Roberts, & Trainor, 2004) and subcortical enhancements that are specific to the sound of one’s instrument of practice (Strait, Chan, Ashley, & Kraus, 2011) implicate the power of music training to shape brain function. Because experience-related and innate factors of musicianship undoubtedly coexist, future research needs to delineate their respective roles in shaping brain function. Longitudinal studies that assess different aspects of brain function before and after music training have been useful in this regard (Fujioka, Ross, Kakigi, Pantev, & Trainor, 2006; Hyde et al., 2009; Moreno et al., 2009; Norton et al., 2005; Schlaug, Forgeard, Zhu, Norton, & Winner, 2009) and are likely to provide the most informative outcomes.

Speech, Music, Rhythm and the Brain

Attentional involvement with sound can be mediated by sequential cueing, or rhythm (Jones, Kidd, & Wetzel, 1981). Rhythmic regularity provides listeners with a predictive framework, directing their attentional spotlight to the point in time at which the next stimulus is most likely to occur. That the human nervous system tracks the occurrence of rhythmic signals (Snyder & Large, 2005), even when subjects are instructed to ignore them (Elhilali, Xiang, Shamma, & Simon, 2009), is not surprising because the human brain functions in inherently rhythmic ways. Rhythm, or periodicity, in neural activity is observed in electrophysiological recordings in the form of neural oscillations that are thought to drive a variety of cognitive functions and the synthesis of multisensory input (for review see Ward, 2003). Speech and music are likewise organized in an oscillatory manner. We now turn to a discussion of the importance of rhythm for the perception of both music and language. The available findings suggest that musicians’ experience producing, manipulating, and attending to musical sound promotes the brain’s ability to track regularities in sequential signals, even outside the domain of music (Conway, Pisoni, & Kronenberger, 2009).

Rhythm is a structural hallmark of both music and language. In music, rhythm has the power to drive our perception of phrase structure and tonality (Boltz, 1989) as well as to guide our anticipation of structural boundaries and cadences. Although less periodic than music, language is also structured metrically, with rhythm guiding the perception of speech prosody (e.g., syllabic stress; Marie et al., 2010) at rates that are consistent across all languages studied to date (Greenberg, 2003). Changes in speech at the syllable rate are fundamental to accurate speech perception (Drullman, Festen, & Plomp, 1994), with sensitivity to syllabic stress being a prerequisite for normal speech production and perception, as well as for the development of language-related skills. Indeed, the accurate perception of metrical structure in language is crucial to phonological development and, consequently, to the development of language-related skills such as reading (Corriveau, Pasquini, & Goswami, 2007; Goswami et al., 2002).

Cross-cultural study of the rhythmic structures of language and music suggests that the rhythm of a culture’s music is reflected in the rhythm of native-language prosody (Iversen, Patel, & Ohgushi, 2008; Patel, Iversen, & Rosenberg, 2006), implying that the two domains are inherently connected. Because music practice requires sustained attention to rhythm during the production and manipulation of instrumental output, cross-domain effects of music training on language perception would not be surprising. Enhanced sensitivity to the rhythmic components of speech could, in turn, promote syllabic discrimination and prosodic perception—two fundamental features of language processing that, when deficient, are associated with language and literacy dysfunction (Abrams, Nicol, Zecker, & Kraus, 2009; Goswami et al., 2002; cf. Tallal & Gaab, 2006).

Recent evidence points to an association between music perception—more specifically, rhythmic discrimination—and perceptual sensitivity to the metrical components of speech, such as syllabic stress (Huss, Verney, Fosker, Mead, & Goswami, 2010). This association points to the possibility of common mechanisms for processing sound in domains that depend on the sequential parsing of incoming information, enhancing that which is consistent (i.e., the metrical regularities of a target signal) to facilitate perception and stream segregation. In fact, sensitivity to regularities in sensory input is crucial for accurate perception in challenging listening environments. In a restaurant, for example, one must be sensitive to the regularities in an individual speaker’s voice, such as their rhythmic cadence, in order to focus on it amidst other simultaneously occurring conversations.

It is thought that the brain shapes perception according to higher-level predictions that it makes based on sensory regularities, sharpening sensory encoding at sequentially lower levels of the auditory pathway in a top-down manner (Ahissar, Lubin, Putter-Katz, & Banai, 2006; Ahissar, Nahum, Nelken, & Hochstein, 2009; Nahum, Nelken, & Ahissar, 2008). Recent work from our laboratory shows neural enhancements to regularities in an ongoing speech stream. Moreover, the extent of this enhancement relates to better performance on both music and language-related tasks, such as hearing speech in noise, reading, and music aptitude (more specifically, rhythm aptitude; Chandrasekaran, Hornickel, Skoe, Nicol, & Kraus, 2009; Strait, Hornickel, & Kraus, 2011). We propose that, in combination with auditory cognitive abilities (working memory and attention), neural sensitivity to rhythmic components and regularities in ongoing sound streams provides a common mechanism that underlies music and reading abilities, potentially contributing to the observed covariance in child music and literacy skills (Anvari, Trainor, Woodside, & Levy, 2002; Atterbury, 1985; Forgeard, Schlaug, Norton, Rosam, & Iyengar, 2008; Overy, 2003; Strait, Hornickel, et al., 2011).

On the Association Between Music Training and Child Literacy

In our view, the auditory expertise gained over years of music training fine-tunes the auditory system in a comprehensive fashion, strengthening the neurobiological and cognitive underpinnings of both speech and music processing. Because of this, music training may promote the sensory and cognitive mechanisms that underlie child literacy (Besson, Schön, Moreno, Santos, & Magne, 2007; Chandrasekaran & Kraus, 2010b, in press; Gaab et al., 2005; Huss et al., 2010). In fact, a growing body of work reports covariance in music and reading abilities (Forgeard et al., 2008; Overy, 2003), even after controlling for nonverbal IQ and phonological awareness (Anvari et al., 2002). Rhythm aptitude may be a better predictor of reading ability than pitch-based aptitude (Douglas & Willatts, 1994; Huss et al., 2010; Strait, Hornickel, et al., 2011), although earlier studies have been inconclusive in this regard (see Huss et al., 2010, for discussion; Forgeard et al., 2008; Overy, Nicolson, Fawcett, & Clarke, 2003; cf. Anvari et al., 2002).

In contrast to Hyde and Peretz’s (2004) classification of amusic brains as “out of tune but in time,” Goswami and colleagues have proposed that children with reading impairment have brains that are “in tune but out of time.” The connection between rhythm and reading abilities may reflect the fundamental importance of rhythm for both music and language production and perception. Indeed, we now have evidence for selective impairments in rhythm processing among individuals with dyslexia and Specific Language Impairment (SLI), including deficits in keeping a beat (Corriveau et al., 2007; Corriveau & Goswami, 2009; Goswami et al., 2002; Wolff, Michel, & Ovrut, 1990). Deficits in processing the fast temporal components of slow rhythmic events (i.e., syllables) have long been linked with reading impairment (Goswami, Fosker, Huss, Mead, & Szucs, 2011; Tallal, 1980; Tallal, Miller, & Fitch, 1993; Tallal & Stark, 1981), and the neural processing of rapid, nonlinguistic sound sequences appears to be impaired in poor readers and children with SLI (Benasich & Tallal, 2002; Temple et al., 2000). Wisbey (1980) was one of the first to propose that music training, by facilitating the development of multisensory awareness and auditory acuity, could facilitate reading in impaired children. Although this proposal has been verified by a number of experiments (Douglas & Willatts, 1994; Moreno et al., 2009), more research using pre- and post-training paradigms is necessary to determine the specific impact of music training on child reading skill and underlying brain function, as well as to unravel contributions of tonal versus rhythmic aspects of music training.

Core neural and cognitive mechanisms that are deficient in poor readers are enhanced in musicians (Figure 3 in color plate section; Chandrasekaran & Kraus, 2010b; Kraus & Chandrasekaran, 2010; Kraus, Skoe, Parbery-Clark, & Ashley, 2009; Tzounopoulos & Kraus, 2009). Such mechanisms include auditory cognitive skills (working memory and attention; Figure 1b, c; Gathercole, Alloway, Willis, & Adams, 2006; Gathercole & Baddeley, 1990; Siegel, 1994), neural timing in response to speech (Figure 3 in color plate section, upper panel; Abrams et al., 2009; Banai et al., 2009; Hornickel, Skoe, Nicol, Zecker, & Kraus, 2009; Musacchia et al., 2007, 2008; Parbery-Clark, Skoe, & Kraus, 2009; Strait et al., 2009), the neural encoding of the spectral components of speech (Figure 3 in color plate section, middle panel), the neural discrimination of closely related speech syllables (Figure 3 in color plate section, lower panel; Chobert et al., 2011; Hornickel et al., 2009; Tierney, Parbery-Clark, Strait, & Kraus, 2011), and the perception and neural encoding of speech in noise (Figure 2; Anderson et al., 2010; Banai et al., 2009; Parbery-Clark, Skoe, & Kraus, 2009; Parbery-Clark, Skoe, Lam, & Kraus, 2009).

Because reading requires rapid and automatic mapping of written phonemes onto words stored in auditory memory, it is not surprising that how well the nervous system encodes speech sounds relates to reading ability (Banai et al., 2009; Chandrasekaran et al., 2009; Hornickel et al., 2009). Furthermore, increasing evidence indicates that some children with reading impairment are particularly susceptible to neural response degradation to speech in noise. For example, Anderson et al. (2010) demonstrated that, compared to good readers, children with reading impairment exhibit increased background noise-induced degradation in their neural responses to speech. By contrast, musicians demonstrate a relatively small impact of background noise on speech-evoked neural responses (Figure 2c; Parbery-Clark, Skoe, & Kraus, 2009). In light of connections between child reading impairment, the inability of the auditory system to encode a target signal of interest in the presence of noise (Sperling, Lu, Manis, & Seidenberg, 2005, 2006), impaired auditory cognitive skills (Gathercole et al., 2006; Gathercole & Baddeley, 1990; Siegel, 1994), and deficits in processing the fast temporal components of slow rhythmic events (i.e., syllables; Goswami et al., 2011; Tallal, 1980; Tallal et al., 1993; Tallal & Stark, 1981), we suggest that music may provide an efficient mechanism for auditory training, aiding in the prevention and remediation of speech-in-noise and reading impairments (Chandrasekaran & Kraus, 2010b; Kraus & Chandrasekaran, 2010).

Conclusions

Due to its multisensory nature, attentional demands, complex sound structure, rhythmic organization, and reliance on rapid audio-motor feedback, music is a powerful tool for shaping neuronal structure and function, especially with regard to auditory processing (Norton et al., 2005; Schlaug, 2001; Schlaug et al., 2009; Schlaug, Norton, Overy, & Winner, 2005). Its effects are not constrained to the brain’s music networks but apply to general auditory processing, including the processing of speech. We have herein defined common mechanisms that underlie music and language skills such as reading and hearing speech in noise. These mechanisms include auditory attention, working memory, neural function in challenging listening environments (e.g., in background noise), sequential sound processing, and neural sensitivity to temporal and spectral aspects of complex sounds and sound regularities. Given these common mechanisms, music may be useful for promoting the development and maintenance of auditory skills and for improving the efficacy of remedial attempts for individuals with auditory impairments.

Acknowledgments

This research is supported by National Science Foundation grants 0921275 and 1057556, National Institutes of Health grant F31DC011457-01, and the Grammy Research Foundation.

References

- Abrams DA, Bhatara A, Ryali S, Balaban E, Levitin DJ, Menon V. Decoding temporal structure in music and speech relies on shared brain resources but elicits different fine-scale spatial patterns. Cerebral Cortex. 2010;21:1507–1518. doi: 10.1093/cercor/bhq198.

- Abrams DA, Nicol T, Zecker S, Kraus N. Abnormal cortical processing of the syllable rate of speech in poor readers. Journal of Neuroscience. 2009;29:7686–7693. doi: 10.1523/JNEUROSCI.5242-08.2009.

- Ahissar M, Lubin Y, Putter-Katz H, Banai K. Dyslexia and the failure to form a perceptual anchor. Nature Neuroscience. 2006;9:1558–1564. doi: 10.1038/nn1800.

- Ahissar M, Nahum M, Nelken I, Hochstein S. Reverse hierarchies and sensory learning. Philosophical Transactions of the Royal Society B: Biological Sciences. 2009;364:285–299. doi: 10.1098/rstb.2008.0253.

- Alain C, Campeanu S, Tremblay K. Changes in sensory evoked responses coincide with rapid improvement in speech identification performance. Journal of Cognitive Neuroscience. 2010;22:392–403. doi: 10.1162/jocn.2009.21279.

- Anderson S, Chandrasekaran B, Skoe E, Kraus N. Neural timing is linked to speech perception in noise. Journal of Neuroscience. 2010;30:4922–4926. doi: 10.1523/JNEUROSCI.0107-10.2010.

- Anvari SH, Trainor LJ, Woodside J, Levy BA. Relations among musical skills, phonological processing, and early reading ability in preschool children. Journal of Experimental Child Psychology. 2002;83:111–130. doi: 10.1016/s0022-0965(02)00124-8.

- Atterbury MJ. Musical differences in learning-disabled and normal-achieving readers aged seven, eight and nine. Psychology of Music. 1985;13:114–123.

- Bajo VM, Nodal FR, Moore DR, King AJ. The descending corticocollicular pathway mediates learning-induced auditory plasticity. Nature Neuroscience. 2010;13:253–260. doi: 10.1038/nn.2466.

- Banai K, Hornickel JM, Skoe E, Nicol T, Zecker S, Kraus N. Reading and subcortical auditory function. Cerebral Cortex. 2009;19:2699–2707. doi: 10.1093/cercor/bhp024.

- Baumann S, Koeneke S, Schmidt CF, Meyer M, Lutz K, Jäncke L. A network for audio-motor coordination in skilled pianists and non-musicians. Brain Research. 2007;1161:65–78. doi: 10.1016/j.brainres.2007.05.045.

- Baumann S, Meyer M, Jäncke L. Enhancement of auditory-evoked potentials in musicians reflects an influence of expertise but not selective attention. Journal of Cognitive Neuroscience. 2008;20:2238–2249. doi: 10.1162/jocn.2008.20157.

- Benasich AA, Tallal P. Infant discrimination of rapid auditory cues predicts later language impairment. Behavioural Brain Research. 2002;136:31–49. doi: 10.1016/s0166-4328(02)00098-0.

- Bent T, Bradlow AR, Wright BA. The influence of linguistic experience on the cognitive processing of pitch in speech and nonspeech sounds. Journal of Experimental Psychology: Human Perception and Performance. 2006;32:97–103. doi: 10.1037/0096-1523.32.1.97.

- Besson M, Schön D, Moreno S, Santos A, Magne C. Influence of musical expertise and musical training on pitch processing in music and language. Restorative Neurology and Neuroscience. 2007;25:399–410.

- Bidelman GM, Gandour JT, Krishnan A. Cross-domain effects of music and language experience on the representation of pitch in the human auditory brainstem. Journal of Cognitive Neuroscience. 2009;23:425–434. doi: 10.1162/jocn.2009.21362.

- Bidelman GM, Krishnan A. Effects of reverberation on brainstem representation of speech in musicians and non-musicians. Brain Research. 2010;1355:112–125. doi: 10.1016/j.brainres.2010.07.100.

- Boltz M. Rhythm and “good endings”: Effects of temporal structure on tonality judgments. Perception and Psychophysics. 1989;46:9–17. doi: 10.3758/bf03208069.

- Brashears SM, Morlet TG, Berlin CI, Hood LJ. Olivocochlear efferent suppression in classical musicians. Journal of the American Academy of Audiology. 2003;14:314–324.

- Brown S, Martinez MJ, Parsons LM. Music and language side by side in the brain: a PET study of the generation of melodies and sentences. European Journal of Neuroscience. 2006;23:2791–2803. doi: 10.1111/j.1460-9568.2006.04785.x.

- Burkard RF, Sims D. A comparison of the effects of broadband masking noise on the auditory brainstem response in young and older adults. American Journal of Audiology. 2002;11:13–22. doi: 10.1044/1059-0889(2002/004).

- Chan AS, Ho YC, Cheung MC. Music training improves verbal memory. Nature. 1998;396:128. doi: 10.1038/24075.

- Chandrasekaran B, Hornickel J, Skoe E, Nicol T, Kraus N. Context-dependent encoding in the human auditory brainstem relates to hearing speech in noise: Implications for developmental dyslexia. Neuron. 2009;64:311–319. doi: 10.1016/j.neuron.2009.10.006.

- Chandrasekaran B, Kraus N. The scalp-recorded brainstem response to speech: Neural origins and plasticity. Psychophysiology. 2010a;47:236–246. doi: 10.1111/j.1469-8986.2009.00928.x.

- Chandrasekaran B, Kraus N. Music, noise-exclusion, and learning. Music Perception. 2010b;27:297–306.

- Chandrasekaran B, Kraus N. Biological factors contributing to reading ability: subcortical auditory function. In: Benasich AA, Fitch RH, editors. Developmental Dyslexia: Early Precursors, Neurobehavioral Markers and Biological Substrates. (in press)

- Chobert J, Marie C, Francois C, Schön D, Besson M. Enhanced passive and active processing of syllables in musician children. Journal of Cognitive Neuroscience. 2011;23:3874–3887. doi: 10.1162/jocn_a_00088.

- Conway AR, Cowan N, Bunting MF. The cocktail party phenomenon revisited: The importance of working memory capacity. Psychonomic Bulletin and Review. 2001;8:331–335. doi: 10.3758/bf03196169.

- Conway CM, Pisoni DB, Kronenberger WG. The importance of sound for cognitive sequencing abilities: The auditory scaffolding hypothesis. Current Directions in Psychological Science. 2009;18:275–279. doi: 10.1111/j.1467-8721.2009.01651.x.

- Corriveau K, Pasquini E, Goswami U. Basic auditory processing skills and specific language impairment: A new look at an old hypothesis. Journal of Speech, Language and Hearing Research. 2007;50:647–666. doi: 10.1044/1092-4388(2007/046).

- Corriveau KH, Goswami U. Rhythmic motor entrainment in children with speech and language impairments: Tapping to the beat. Cortex. 2009;45:119–130. doi: 10.1016/j.cortex.2007.09.008.

- de Boer J, Thornton AR. Neural correlates of perceptual learning in the auditory brainstem: Efferent activity predicts and reflects improvement at a speech-in-noise discrimination task. Journal of Neuroscience. 2008;28:4929–4937. doi: 10.1523/JNEUROSCI.0902-08.2008.

- Douglas S, Willatts P. The relationship between musical ability and literacy skills. Journal of Research in Reading. 1994;17:99–107.

- Drullman R, Festen JM, Plomp R. Effect of temporal envelope smearing on speech reception. Journal of the Acoustical Society of America. 1994;95:1053–1064. doi: 10.1121/1.408467.

- Elbert T, Pantev C, Wienbruch C, Rockstroh B, Taub E. Increased cortical representation of the fingers of the left hand in string players. Science. 1995;270:305–307. doi: 10.1126/science.270.5234.305.

- Elhilali M, Xiang J, Shamma SA, Simon JZ. Interaction between attention and bottom-up saliency mediates the representation of foreground and background in an auditory scene. PLoS Biology. 2009;7:e1000129. doi: 10.1371/journal.pbio.1000129.

- Facoetti A, Lorusso ML, Paganoni P, Cattaneo C, Galli R, Umilta C, et al. Auditory and visual automatic attention deficits in developmental dyslexia. Cognitive Brain Research. 2003;16:185–191. doi: 10.1016/s0926-6410(02)00270-7.

- Forgeard M, Schlaug G, Norton A, Rosam C, Iyengar U. The relation between music and phonological processing in normal-reading children and children with dyslexia. Music Perception. 2008;25:383–390.

- Fritz JB, Elhilali M, Shamma SA. Adaptive changes in cortical receptive fields induced by attention to complex sounds. Journal of Neurophysiology. 2007;98:2337–2346. doi: 10.1152/jn.00552.2007.

- Fujioka T, Ross B, Kakigi R, Pantev C, Trainor LJ. One year of musical training affects development of auditory cortical-evoked fields in young children. Brain. 2006;129:2593–2608. doi: 10.1093/brain/awl247.

- Gaab N, Schlaug G. The effect of musicianship on pitch memory in performance matched groups. Neuroreport. 2003;14:2291–2295. doi: 10.1097/00001756-200312190-00001.

- Gaab N, Tallal P, Kim H, Lakshminarayanan K, Archie JJ, Glover GH, et al. Neural correlates of rapid spectrotemporal processing in musicians and nonmusicians. Annals of the New York Academy of Sciences. 2005;1060:82–88. doi: 10.1196/annals.1360.040.

- Gaser C, Schlaug G. Brain structures differ between musicians and non-musicians. Journal of Neuroscience. 2003;23:9240–9245. doi: 10.1523/JNEUROSCI.23-27-09240.2003.

- Gathercole SE, Alloway TP, Willis C, Adams AM. Working memory in children with reading disabilities. Journal of Experimental Child Psychology. 2006;93:265–281. doi: 10.1016/j.jecp.2005.08.003.

- Gathercole SE, Baddeley A. Phonological memory deficits in language disordered children: Is there a causal connection? Journal of Memory and Language. 1990;29:336–360.

- Goswami U, Fosker T, Huss M, Mead N, Szucs D. Rise time and formant transition duration in the discrimination of speech sounds: the Ba-Wa distinction in developmental dyslexia. Developmental Science. 2011;14:34–43. doi: 10.1111/j.1467-7687.2010.00955.x.

- Goswami U, Thomson J, Richardson U, Stainthorp R, Hughes D, Rosen S, et al. Amplitude envelope onsets and developmental dyslexia: A new hypothesis. Proceedings of the National Academy of Sciences, U.S.A. 2002;99:10911–10916. doi: 10.1073/pnas.122368599.

- Greenberg S. Temporal properties of spontaneous speech: A syllable-centric perspective. Journal of Phonetics. 2003;31:465–485.

- Hafter ER, Sarampalis A, Loui P. Auditory attention and filters. In: Yost WA, Popper AN, Fay RR, editors. Auditory Perception of Sound Sources. Springer; New York: 2008. pp. 115–142.

- Haslinger B, Erhard P, Altenmüller E, Schroeder U, Boecker H, Ceballos-Baumann AO. Transmodal sensorimotor networks during action observation in professional pianists. Journal of Cognitive Neuroscience. 2005;17:282–293. doi: 10.1162/0898929053124893.

- He N, Dubno JR, Mills JH. Frequency and intensity discrimination measured in a maximum-likelihood procedure from young and aged normal-hearing subjects. Journal of the Acoustical Society of America. 1998;103:553–565. doi: 10.1121/1.421127.

- Heinrich A, Schneider BA, Craik FI. Investigating the influence of continuous babble on auditory short-term memory performance. Quarterly Journal of Experimental Psychology. 2008;61:735–751. doi: 10.1080/17470210701402372.

- Ho YC, Cheung MC, Chan AS. Music training improves verbal but not visual memory: Cross-sectional and longitudinal explorations in children. Neuropsychology. 2003;17:439–450. doi: 10.1037/0894-4105.17.3.439.

- Hornickel J, Skoe E, Nicol T, Zecker S, Kraus N. Subcortical differentiation of voiced stop consonants: Relationships to reading and speech in noise perception. Proceedings of the National Academy of Sciences, U.S.A. 2009;106:13022–13027. doi: 10.1073/pnas.0901123106.

- Huss M, Verney JP, Fosker T, Mead N, Goswami U. Music, rhythm, rise time perception and developmental dyslexia: Perception of musical meter predicts reading and phonology. Cortex. 2010;47:674–689. doi: 10.1016/j.cortex.2010.07.010.

- Hutchinson S, Lee LH, Gaab N, Schlaug G. Cerebellar volume of musicians. Cerebral Cortex. 2003;13:943–949. doi: 10.1093/cercor/13.9.943.

- Hyde KL, Lerch J, Norton A, Forgeard M, Winner E, Evans AC, et al. Musical training shapes structural brain development. Journal of Neuroscience. 2009;29:3019–3025. doi: 10.1523/JNEUROSCI.5118-08.2009.

- Hyde KL, Peretz I. Brains that are out of tune but in time. Psychological Science. 2004;15:356–360. doi: 10.1111/j.0956-7976.2004.00683.x.

- Iversen JR, Patel AD, Ohgushi K. Perception of rhythmic grouping depends on auditory experience. Journal of the Acoustical Society of America. 2008;124:2263–2271. doi: 10.1121/1.2973189.

- Jakobson LS, Lewycky ST, Kilgour AR, Stoesz BM. Memory for verbal and visual material in highly trained musicians. Music Perception. 2008;26:41–55.

- James W. The principles of psychology. H. Holt and Company; New York: 1890.

- Janata P, Tillmann B, Bharucha JJ. Listening to polyphonic music recruits domain-general attention and working memory circuits. Cognitive, Affective and Behavioral Neuroscience. 2002;2:121–140. doi: 10.3758/cabn.2.2.121.

- Jeffries S, Everatt J. Working memory: its role in dyslexia and other specific learning difficulties. Dyslexia. 2004;10:196–214. doi: 10.1002/dys.278.

- Ji W, Suga N. Tone-specific and nonspecific plasticity of inferior colliculus elicited by pseudo-conditioning: Role of acetylcholine and auditory and somatosensory cortices. Journal of Neurophysiology. 2009;102:941–952. doi: 10.1152/jn.00222.2009.

- Jones MR, Kidd G, Wetzel R. Evidence for rhythmic attention. Journal of Experimental Psychology: Human Perception and Performance. 1981;7:1059–1073. doi: 10.1037//0096-1523.7.5.1059.

- Killion MC, Niquette PA. What can the pure-tone audiogram tell us about a patient’s SNR loss? Hearing Journal. 2000;53:46–53.

- Kishon-Rabin L, Amir O, Vexler Y, Zaltz Y. Pitch discrimination: Are professional musicians better than nonmusicians? Journal of Basic and Clinical Physiology and Pharmacology. 2001;12:125–143. doi: 10.1515/jbcpp.2001.12.2.125.

- Koelsch S, Gunter TC, v Cramon DY, Zysset S, Lohmann G, Friederici AD. Bach speaks: A cortical “language-network” serves the processing of music. Neuroimage. 2002;17:956–966.

- Kraus N, Chandrasekaran B. Music training for the development of auditory skills. Nature Reviews Neuroscience. 2010;11:599–605. doi: 10.1038/nrn2882.

- Kraus N, Skoe E, Parbery-Clark A, Ashley R. Training-induced malleability in neural encoding of pitch, timbre and timing: Implications for language and music. Annals of the New York Academy of Sciences. 2009;1169:543–557. doi: 10.1111/j.1749-6632.2009.04549.x.

- Krishnan A, Gandour JT, Bidelman GM. The effects of tone language experience on pitch processing in the brainstem. Journal of Neurolinguistics. 2010;23:81–95. doi: 10.1016/j.jneuroling.2009.09.001.

- Krishnan A, Xu Y, Gandour J, Cariani P. Encoding of pitch in the human brainstem is sensitive to language experience. Cognitive Brain Research. 2005;25:161–168. doi: 10.1016/j.cogbrainres.2005.05.004.

- Lee KM, Skoe E, Kraus N, Ashley R. Selective subcortical enhancement of musical intervals in musicians. Journal of Neuroscience. 2009;29:5832–5840. doi: 10.1523/JNEUROSCI.6133-08.2009.

- Margulis E, Mlsna LM, Uppunda AK, Parrish TB, Wong PCM. Selective neurophysiologic responses to music in instrumentalists with different listening biographies. Human Brain Mapping. 2009;30:267–275. doi: 10.1002/hbm.20503.

- Marie C, Magne C, Besson M. Musicians and the metric structure of words. Journal of Cognitive Neuroscience. 2010;23:294–305. doi: 10.1162/jocn.2010.21413.

- Marques C, Moreno S, Castro SL, Besson M. Musicians detect pitch violation in a foreign language better than nonmusicians: Behavioral and electrophysiological evidence. Journal of Cognitive Neuroscience. 2007;19:1453–1463. doi: 10.1162/jocn.2007.19.9.1453.

- Micheyl C, Delhommeau K, Perrot X, Oxenham AJ. Influence of musical and psychoacoustical training on pitch discrimination. Hearing Research. 2006;219:36–47. doi: 10.1016/j.heares.2006.05.004.

- Montgomery JW. Working memory and comprehension in children with specific language impairment: What we know so far. Journal of Communication Disorders. 2002;36:221–231. doi: 10.1016/s0021-9924(03)00021-2.

- Moore DR, Ferguson MA, Edmondson-Jones AM, Ratib S, Riley A. Nature of auditory processing disorder in children. Pediatrics. 2010;126:e382–390. doi: 10.1542/peds.2009-2826.

- Moreno S, Marques C, Santos A, Santos M, Castro SL, Besson M. Musical training influences linguistic abilities in 8-year-old children: More evidence for brain plasticity. Cerebral Cortex. 2009;19:712–723. doi: 10.1093/cercor/bhn120.

- Musacchia G, Sams M, Skoe E, Kraus N. Musicians have enhanced subcortical auditory and audiovisual processing of speech and music. Proceedings of the National Academy of Sciences, U.S.A. 2007;104:15894–15898. doi: 10.1073/pnas.0701498104.

- Musacchia G, Strait D, Kraus N. Relationships between behavior, brainstem and cortical encoding of seen and heard speech in musicians and non-musicians. Hearing Research. 2008;241:34–42. doi: 10.1016/j.heares.2008.04.013.

- Nahum M, Nelken I, Ahissar M. Low-level information and high-level perception: The case of speech in noise. PLoS Biology. 2008;6:e126. doi: 10.1371/journal.pbio.0060126.

- Norton A, Winner E, Cronin K, Overy K, Lee DJ, Schlaug G. Are there pre-existing neural, cognitive, or motoric markers for musical ability? Brain and Cognition. 2005;59:124–134. doi: 10.1016/j.bandc.2005.05.009.

- Ohnishi T, Matsuda H, Asada T, Aruga M, Hirakata M, Nishikawa M, et al. Functional anatomy of musical perception in musicians. Cerebral Cortex. 2001;11:754–760. doi: 10.1093/cercor/11.8.754.

- Overy K. Dyslexia and music: From timing deficits to musical intervention. Annals of the New York Academy of Sciences. 2003;999:497–505. doi: 10.1196/annals.1284.060.

- Overy K, Nicolson RI, Fawcett AJ, Clarke EF. Dyslexia and music: Measuring musical timing skills. Dyslexia. 2003;9:18–36. doi: 10.1002/dys.233.

- Pallesen KJ, Brattico E, Bailey CJ, Korvenoja A, Koivisto J, Gjedde A, et al. Cognitive control in auditory working memory is enhanced in musicians. PLoS ONE. 2010;5:e11120. doi: 10.1371/journal.pone.0011120.

- Pantev C, Oostenveld R, Engelien A, Ross B, Roberts LE, Hoke M. Increased auditory cortical representation in musicians. Nature. 1998;392:811–814. doi: 10.1038/33918.

- Pantev C, Roberts LE, Schulz M, Engelien A, Ross B. Timbre-specific enhancement of auditory cortical representations in musicians. Neuroreport. 2001;12:169–174. doi: 10.1097/00001756-200101220-00041.

- Parbery-Clark A, Skoe E, Kraus N. Musical experience limits the degradative effects of background noise on the neural processing of sound. Journal of Neuroscience. 2009;29:14100–14107. doi: 10.1523/JNEUROSCI.3256-09.2009.

- Parbery-Clark A, Skoe E, Lam C, Kraus N. Musician enhancement for speech-in-noise. Ear and Hearing. 2009;30:653–661. doi: 10.1097/AUD.0b013e3181b412e9.

- Parbery-Clark A, Strait DL, Anderson S, Hittner E, Kraus N. Musical experience and the aging auditory system: Implications for cognitive abilities and hearing speech in noise. PLoS ONE. 2011;6:e18082. doi: 10.1371/journal.pone.0018082.

- Patel AD. Language, music, syntax and the brain. Nature Neuroscience. 2003;6:674–681. doi: 10.1038/nn1082.

- Patel AD, Iversen JR, Rosenberg JC. Comparing the rhythm and melody of speech and music: The case of British English and French. Journal of the Acoustical Society of America. 2006;119:3034–3047. doi: 10.1121/1.2179657.

- Perrot X, Micheyl C, Khalfa S, Collet L. Stronger bilateral efferent influences on cochlear biomechanical activity in musicians than in non-musicians. Neuroscience Letters. 1999;262:167–170. doi: 10.1016/s0304-3940(99)00044-0.

- Petkov CI, Kang X, Alho K, Bertrand O, Yund EW, Woods DL. Attentional modulation of human auditory cortex. Nature Neuroscience. 2004;7:658–663. doi: 10.1038/nn1256.

- Petrini K, Dahl S, Rocchesso D, Waadeland CH, Avanzini F, Puce A, et al. Multisensory integration of drumming actions: Musical expertise affects perceived audiovisual asynchrony. Experimental Brain Research. 2009;198:339–352. doi: 10.1007/s00221-009-1817-2.

- Rogalsky C, Rong F, Saberi K, Hickok G. Functional anatomy of language and music perception: Temporal and structural factors investigated using functional magnetic resonance imaging. Journal of Neuroscience. 2011;31:3843–3852. doi: 10.1523/JNEUROSCI.4515-10.2011.

- Russo N, Nicol T, Musacchia G, Kraus N. Brainstem responses to speech syllables. Clinical Neurophysiology. 2004;115:2021–2030. doi: 10.1016/j.clinph.2004.04.003.

- Schellenberg EG. Music and cognitive abilities. Current Directions in Psychological Science. 2005;14:317–320.

- Schellenberg EG. Music training and nonmusical abilities: Commentary on Stoesz, Jakobson, Kilgour, and Lewycky (2007) and Jakobson, Lewycky, Kilgour, and Stoesz (2008). Music Perception. 2009;27:139–143.

- Schlaug G. The brain of musicians. A model for functional and structural adaptation. Annals of the New York Academy of Sciences. 2001;930:281–299. [PubMed] [Google Scholar]

- Schlaug G, Forgeard M, Zhu L, Norton A, Winner E. Training-induced neuroplasticity in young children. Annals of the New York Academy of Sciences. 2009;1169:205–208. doi: 10.1111/j.1749-6632.2009.04842.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Schlaug G, Norton A, Overy K, Winner E. Effects of music training on the child’s brain and cognitive development. Annals of the New York Academy of Sciences. 2005;1060:219–230. doi: 10.1196/annals.1360.015. [DOI] [PubMed] [Google Scholar]

- Schön D, Magne C, Besson M. The music of speech: Music training facilitates pitch processing in both music and language. Psychophysiology. 2004;41:341–349. doi: 10.1111/1469-8986.00172.x. [DOI] [PubMed] [Google Scholar]

- Shahin A, Bosnyak DJ, Trainor LJ, Roberts LE. Enhancement of neuroplastic P2 and N1c auditory evoked potentials in musicians. Journal of Neuroscience. 2003;23:5545–5552. doi: 10.1523/JNEUROSCI.23-13-05545.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Shahin A, Roberts LE, Trainor LJ. Enhancement of auditory cortical development by musical experience in children. Neuroreport. 2004;15:1917–1921. doi: 10.1097/00001756-200408260-00017.

- Siegel LS. Working memory and reading: A life-span perspective. International Journal of Behavioral Development. 1994;17:109–124.

- Skoe E, Kraus N. Auditory brain stem response to complex sounds: A tutorial. Ear and Hearing. 2010;31:302–324. doi: 10.1097/AUD.0b013e3181cdb272.

- Skoe E, Nicol T, Kraus N. Cross-phaseogram: Objective neural index of speech sound differentiation. Journal of Neuroscience Methods. 2011;196:308–317. doi: 10.1016/j.jneumeth.2011.01.020.

- Snyder JS, Large EW. Gamma-band activity reflects the metric structure of rhythmic tone sequences. Cognitive Brain Research. 2005;24:117–126. doi: 10.1016/j.cogbrainres.2004.12.014.

- So CK, Best CT. Cross-language perception of non-native tonal contrasts: Effects of native phonological and phonetic influences. Language and Speech. 2010;53:273–293. doi: 10.1177/0023830909357156.

- Song J, Skoe E, Banai K, Kraus N. Perception of speech in noise: Neural correlates. Journal of Cognitive Neuroscience. 2010;23:2268–2279. doi: 10.1162/jocn.2010.21556.

- Song JH, Skoe E, Wong PC, Kraus N. Plasticity in the adult human auditory brainstem following short-term linguistic training. Journal of Cognitive Neuroscience. 2008;20:1892–1902. doi: 10.1162/jocn.2008.20131.

- Sperling AJ, Lu ZL, Manis FR, Seidenberg MS. Deficits in perceptual noise exclusion in developmental dyslexia. Nature Neuroscience. 2005;8:862–863. doi: 10.1038/nn1474.

- Sperling AJ, Lu ZL, Manis FR, Seidenberg MS. Motion-perception deficits and reading impairment: It’s the noise, not the motion. Psychological Science. 2006;17:1047–1053. doi: 10.1111/j.1467-9280.2006.01825.x.

- Stevens C, Sanders L, Neville H. Neurophysiological evidence for selective auditory attention deficits in children with specific language impairment. Brain Research. 2006;1111:143–152. doi: 10.1016/j.brainres.2006.06.114.

- Stewart L, Henson R, Kampe K, Walsh V, Turner R, Frith U. Brain changes after learning to read and play music. Neuroimage. 2003;20:71–83. doi: 10.1016/s1053-8119(03)00248-9.

- Strait DL, Chan K, Ashley R, Kraus N. Specialization among the specialized: Auditory brainstem function is tuned in to timbre. Cortex. 2011. doi: 10.1016/j.cortex.2011.03.015 (epub ahead of print).

- Strait DL, Hornickel J, Kraus N. Subcortical processing of speech regularities predicts reading and music aptitude in children. Behavioral and Brain Functions. 2011;7:44. doi: 10.1186/1744-9081-7-44.

- Strait DL, Kraus N. Can you hear me now? Musical training shapes functional brain networks for selective auditory attention and hearing speech in noise. Frontiers in Psychology. 2011;2:113. doi: 10.3389/fpsyg.2011.00113.

- Strait DL, Kraus N, Parbery-Clark A, Ashley R. Musical experience shapes top-down auditory mechanisms: Evidence from masking and auditory attention performance. Hearing Research. 2010;261:22–29. doi: 10.1016/j.heares.2009.12.021.

- Strait DL, Kraus N, Skoe E, Ashley R. Musical experience and neural efficiency: Effects of training on subcortical processing of vocal expressions of emotion. European Journal of Neuroscience. 2009;29:661–668. doi: 10.1111/j.1460-9568.2009.06617.x.

- Suga N. Role of corticofugal feedback in hearing. Journal of Comparative Physiology. A, Neuroethology, Sensory, Neural and Behavioral Physiology. 2008;194:169–183. doi: 10.1007/s00359-007-0274-2.

- Suga N, Ma X. Multiparametric corticofugal modulation and plasticity in the auditory system. Nature Reviews Neuroscience. 2003;4:783–794. doi: 10.1038/nrn1222.

- Suga N, Xiao Z, Ma X, Ji W. Plasticity and corticofugal modulation for hearing in adult animals. Neuron. 2002;36:9–18. doi: 10.1016/s0896-6273(02)00933-9.

- Tallal P. Auditory temporal perception, phonics, and reading disabilities in children. Brain and Language. 1980;9:182–198. doi: 10.1016/0093-934x(80)90139-x.

- Tallal P, Gaab N. Dynamic auditory processing, musical experience and language development. Trends in Neurosciences. 2006;29:382–390. doi: 10.1016/j.tins.2006.06.003.

- Tallal P, Miller S, Fitch RH. Neurobiological basis of speech: A case for the preeminence of temporal processing. Annals of the New York Academy of Sciences. 1993;682:27–47. doi: 10.1111/j.1749-6632.1993.tb22957.x.

- Tallal P, Stark RE. Speech acoustic-cue discrimination abilities of normally developing and language-impaired children. Journal of the Acoustical Society of America. 1981;69:568–574. doi: 10.1121/1.385431.

- Temple E, Poldrack RA, Protopapas A, Nagarajan S, Salz T, Tallal P, et al. Disruption of the neural response to rapid acoustic stimuli in dyslexia: Evidence from functional MRI. Proceedings of the National Academy of Sciences, U.S.A. 2000;97:13907–13912. doi: 10.1073/pnas.240461697.

- Tervaniemi M, Ilvonen T, Karma K, Alho K, Naatanen R. The musical brain: Brain waves reveal the neurophysiological basis of musicality in human subjects. Neuroscience Letters. 1997;226:1–4. doi: 10.1016/s0304-3940(97)00217-6.

- Tervaniemi M, Kruck S, De Baene W, Schroger E, Alter K, Friederici AD. Top-down modulation of auditory processing: Effects of sound context, musical expertise and attentional focus. European Journal of Neuroscience. 2009;30:1636–1642. doi: 10.1111/j.1460-9568.2009.06955.x.

- Tierney A, Parbery-Clark A, Strait DL, Kraus N. Neural differentiation of speech sounds is more precise in musicians. Paper presented at The Neurosciences and Music IV; Edinburgh, Scotland. June 2011.

- Trainor LJ, Desjardins RN, Rockel C. A comparison of contour and interval processing in musicians and nonmusicians using event-related potentials. Australian Journal of Psychology. 1999;51:147–153.

- Tremblay KL, Shahin AJ, Picton T, Ross B. Auditory training alters the physiological detection of stimulus-specific cues in humans. Clinical Neurophysiology. 2009;120:128–135. doi: 10.1016/j.clinph.2008.10.005.

- Tzounopoulos T, Kraus N. Learning to encode timing: Mechanisms of plasticity in the auditory brainstem. Neuron. 2009;62:463–469. doi: 10.1016/j.neuron.2009.05.002.

- Van Engen KJ, Bradlow AR. Sentence recognition in native- and foreign-language multi-talker background noise. Journal of the Acoustical Society of America. 2007;121:519–526. doi: 10.1121/1.2400666.

- van Zuijen TL, Sussman E, Winkler I, Naatanen R, Tervaniemi M. Auditory organization of sound sequences by a temporal or numerical regularity—A mismatch negativity study comparing musicians and nonmusicians. Cognitive Brain Research. 2005;23:270–276. doi: 10.1016/j.cogbrainres.2004.10.007.

- Ward LM. Synchronous neural oscillations and cognitive processes. Trends in Cognitive Sciences. 2003;7:553–559. doi: 10.1016/j.tics.2003.10.012.

- Weissman DH, Roberts KC, Visscher KM, Woldorff MG. The neural bases of momentary lapses in attention. Nature Neuroscience. 2006;9:971–978. doi: 10.1038/nn1727.

- Winer JA. Decoding the auditory corticofugal systems. Hearing Research. 2005;212:1–8. doi: 10.1016/j.heares.2005.06.007.

- Wisbey AS. Music as the source of learning. M.T.P. Press, Ltd; Lancaster, UK: 1980.

- Wolff PH, Michel GF, Ovrut M. The timing of syllable repetitions in developmental dyslexia. Journal of Speech and Hearing Research. 1990;33:281–289. doi: 10.1044/jshr.3302.281.

- Wong PC, Ettlinger M, Sheppard JP, Gunasekera GM, Dhar S. Neuroanatomical characteristics and speech perception in noise in older adults. Ear and Hearing. 2010;31:471–479. doi: 10.1097/AUD.0b013e3181d709c2.

- Wong PC, Skoe E, Russo NM, Dees T, Kraus N. Musical experience shapes human brainstem encoding of linguistic pitch patterns. Nature Neuroscience. 2007;10:420–422. doi: 10.1038/nn1872.

- Woolley SM, Gill PR, Theunissen FE. Stimulus-dependent auditory tuning results in synchronous population coding of vocalizations in the songbird midbrain. Journal of Neuroscience. 2006;26:2499–2512. doi: 10.1523/JNEUROSCI.3731-05.2006.

- Wright BA, Zhang Y. A review of the generalization of auditory learning. Philosophical Transactions of the Royal Society B: Biological Sciences. 2009;364:301–311. doi: 10.1098/rstb.2008.0262.

- Zatorre RJ, Belin P, Penhune VB. Structure and function of auditory cortex: Music and speech. Trends in Cognitive Sciences. 2002;6:37–46. doi: 10.1016/s1364-6613(00)01816-7.

- Zatorre RJ, Gandour JT. Neural specializations for speech and pitch: Moving beyond the dichotomies. Philosophical Transactions of the Royal Society B: Biological Sciences. 2008;363:1087–1104. doi: 10.1098/rstb.2007.2161.

- Zatorre RJ, Mondor TA, Evans AC. Auditory attention to space and frequency activates similar cerebral systems. Neuroimage. 1999;10:544–554. doi: 10.1006/nimg.1999.0491.

- Zendel BR, Alain C. Musicians experience less age-related decline in auditory processing. Psychology and Aging. 2011. doi: 10.1037/a0024816 (epub ahead of print).