Abstract

Background

Community engagement has been a cornerstone of NIAID's HIV/AIDS clinical trials programs since 1990. Stakeholders now consider this critical to success, hence the impetus to develop evaluation approaches.

Objectives

The purpose was to assess the extent to which community advisory boards (CABs) at HIV/AIDS trials sites are being integrated into research activities.

Methods

CABs and research staff (RS) at NIAID research sites were surveyed for how each viewed: a) the frequency of activities indicative of community involvement; b) the means for identifying, prioritizing and supporting CAB needs; c) mission and operational challenges.

Results

Overall, CABs and RS share similar views about the frequency of community involvement activities. Cluster analysis reveals three groups of sites based on activity frequency ratings, including a group notable for CAB-RS discordance.

Conclusions

Assessing differences between community and researcher perceptions about the frequency of, and challenges posed by specific engagement activities, may prove useful in developing evaluation tools for assessing community engagement in collaborative research settings.

Introduction

In 2006, the U.S. National Institute of Allergy and Infectious Diseases (NIAID) restructured its clinical trials networks for HIV/AIDS, and initiated development of a comprehensive utilization-focused evaluation system for this program. A collaboratively-authored evaluation framework identified factors critical to the success of this large research initiative (1), among which community involvement and the relevance of network research to community were ranked highly, across a broad array of stakeholders. This generated the impetus to develop measures to meaningfully assess the activities that constitute community engagement.

Historically, community participation has been a cornerstone of NIAID's research activities since early in the HIV/AIDS epidemic (2, 3). In 1990, addressing demands of AIDS activists, NIAID established Community Advisory Boards (CABs) to provide community input to network trial design and prioritization. Soon thereafter NIAID made community involvement a requirement for clinical research site funding (2). To support community representatives and enhance their meaningful contribution, NIAID developed training programs for CAB members throughout the networks. In 2006 Community Partners was formed with the mission to: 1) improve intra- and inter-network community input; 2) support training for local CABs; 3) increase representation of vulnerable populations and communities in resource-limited areas; 4) address challenges to trial participation, and; 5) harmonize best practices for community participation.

With more than 20 years of experience fostering community partnerships, NIAID recognizes an opportunity to begin evaluating community-researcher engagement. Whereas early assessments were limited to determining whether or not a network or site had a CAB, we are now exploring the extent to which CABs are being effectively integrated into research activities. This report details results from a survey of CABs and research staff (RS) at NIAID sites. The survey was designed by Community Partners, in association with the Office of HIV/AIDS Network Coordination (HANC) and NIAID staff. Iterations of the survey were critiqued and modified by each group, individually and together, until consensus was reached. The survey was intended to assess: 1) the frequency of activities indicative of community involvement in protocol selection and implementation, efforts to assure research relevance, and communication/collaboration; 2) the means by which community needs are identified, prioritized and supported; and 3) mission-related and operational challenges faced in community-researcher collaborations.

Materials and Methods

Survey purpose and design

HANC used a self-administered survey questionnaire to reach the globally distributed clinical trials sites. Both CABs and RS were queried about the frequency of occurrence of specific activities and the types of challenges faced in their engagement. The rationale for this approach was to learn how similarly (or dissimilarly) these partners viewed the functioning of their engagement. Respondents were asked about: 1) the frequency of activities reflective of community involvement in protocol selection and implementation, assuring research relevance to community, and community-researcher communication and collaboration; 2) mission and operational challenges; and 3) means for identifying, prioritizing and supporting CAB needs. The community involvement activities and the mission and operational challenges assessed in the survey were derived from a guideline and best practices document authored by the Community Partners, “Recommendations for Community Involvement in NIAID HIV/AIDS Clinical Trials Research” (4). Given the importance of this document in guiding how sites should approach collaboration and interaction with the community, framing our assessment around these activities was viewed as a logical first step toward a more evolved understanding of community engagement. RS were asked to consult site research colleagues and complete a single survey per site. CABs were asked to meet, review the survey, and designate an individual to submit a single response for their CAB. Respondents were given six weeks to complete the survey and enter their data directly online, using SurveyMonkey (5).

Sampling

From 163 clinical research sites and 138 CABs, 105 RS (64%) and 92 CAB (66%) responses were received. Fifty-six sites submitted both a CAB and an RS response, six of which were excluded for missing data, leaving a final analysis set of 50 paired responses. Within pairs, CAB and RS reported on the same community-researcher collaboration, providing a unique opportunity to learn how researchers and community members working together view their collaboration. Whether the 50 sites selected for analysis are representative of all sites is uncertain. The subset selected for the paired analysis appeared similar in several respects to the overall set of responding sites (n=105; 64%), but may have differed somewhat from the non-responding sites (n=58; 36%), among which a slightly higher proportion were affiliated with prevention research, and a slightly lower proportion were involved in both treatment and prevention research (Table 1).
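The counts in the paragraph above can be checked with a few lines of arithmetic. The following is a minimal sketch in Python; the variable and function names are illustrative only, and the rates are printed to one decimal place so they can be compared with the whole-number percentages given in the text.

```python
# Counts as reported in the Sampling paragraph.
SITES_TOTAL = 163        # clinical research sites surveyed
CABS_TOTAL = 138         # CABs surveyed
RS_RESPONSES = 105       # research staff responses received
CAB_RESPONSES = 92       # CAB responses received
PAIRED_SUBMITTED = 56    # sites with both a CAB and an RS response
PAIRED_EXCLUDED = 6      # pairs dropped for missing data


def percent(part, whole):
    """Return part/whole as an unrounded percentage."""
    return 100.0 * part / whole


rs_rate = percent(RS_RESPONSES, SITES_TOTAL)
cab_rate = percent(CAB_RESPONSES, CABS_TOTAL)
non_responding_sites = SITES_TOTAL - RS_RESPONSES
paired_analyzed = PAIRED_SUBMITTED - PAIRED_EXCLUDED

print(f"RS response rate:      {rs_rate:.1f}%")
print(f"CAB response rate:     {cab_rate:.1f}%")
print(f"Non-responding sites:  {non_responding_sites}")
print(f"Paired responses used: {paired_analyzed}")
```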

Table 1. Site Demographics.

| Site Descriptor | Sites Submitting a CAB¹ Response (n = 92) | Sites Submitting a RS² Response (n = 105) | Sites Submitting Complete CAB and RS Responses (n = 50) | Sites Not Submitting a RS Response (n = 58) |
|---|---|---|---|---|
| US-based | 54.9 | 52.0 | 66.0 | 65.5 |
| Non US-based | 45.1 | 48.0 | 34.0 | 34.5 |
| Affiliation with treatment research | 58.7 | 58.1 | 60.0 | 62.0 |
| Affiliation with prevention research | 16.3 | 14.3 | 12.0 | 24.1 |
| Affiliation with both treatment and prevention research | 22.8 | 26.7 | 28.0 | 13.9 |
| > 50% former/current clinical trial participants on CAB | 34.2 | 34.0 | 31.8 | NA³ |

All results are expressed as percentages of the column total (n).

¹ CAB: Community Advisory Board; ² RS: Research Staff; ³ NA: Not Available.

Data analysis

Our analyses focused on three main questions: 1) what, if any, similarities exist in the frequency rating patterns across different best practice activities? 2) how are sites grouped in terms of how CABs and RS rate activity frequencies? and 3) based on this grouping, are there operational or mission-related challenges that are associated with particular groups of sites? First, to evaluate the similarity of CAB and RS responses on the activities for community involvement, research relevance, and communication and collaboration, we conducted a multivariate profile analysis for each activity set. This enabled us to determine whether different patterns of ratings were present and, if so, on which activities the ratings differed. To identify variation of specific activities from the mean frequency within each set, we evaluated the profiles in terms of activities for which group averages fell outside the confidence interval of the pooled profile. We compensated for multiple testing of activities within each set by setting alpha at .01, which yields a 99% confidence interval. Second, a case-wise hierarchical cluster analysis using Ward's algorithm (6) was conducted to classify groups of sites, based on the CAB and RS frequency ratings across the different activity areas. Finally, we examined the mission-related and operational challenge assessments in relation to the clustered sites through a separate univariate analysis of variance of the overall number of challenges by cluster of sites. Subsequent to these overall mean difference tests, we examined which challenges, if any, differed significantly by cluster.
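To illustrate the clustering step, the following is a minimal sketch of Ward's agglomerative criterion (6) in plain Python. It is not the software used in this study. The six-value site profiles (CAB and RS mean ratings for each of the three activity areas), the function name `ward_clusters`, and the choice to stop at a fixed number of groups are all assumptions made for illustration.

```python
def ward_clusters(points, k):
    """Merge clusters until k remain, using Ward's minimum-variance criterion.

    At each step the pair of clusters whose merger gives the smallest
    increase in within-cluster sum of squares is joined. That increase is
    (n_a * n_b / (n_a + n_b)) * squared distance between the two centroids.
    Returns a list of clusters, each a sorted list of row indices.
    """
    # Each cluster is stored as (member indices, centroid).
    clusters = [([i], list(p)) for i, p in enumerate(points)]
    while len(clusters) > k:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                members_a, centroid_a = clusters[a]
                members_b, centroid_b = clusters[b]
                n_a, n_b = len(members_a), len(members_b)
                dist2 = sum((x - y) ** 2 for x, y in zip(centroid_a, centroid_b))
                cost = n_a * n_b / (n_a + n_b) * dist2
                if best is None or cost < best[0]:
                    best = (cost, a, b)
        _, a, b = best
        members_a, centroid_a = clusters[a]
        members_b, centroid_b = clusters[b]
        n_a, n_b = len(members_a), len(members_b)
        merged_centroid = [
            (n_a * x + n_b * y) / (n_a + n_b)
            for x, y in zip(centroid_a, centroid_b)
        ]
        merged = (members_a + members_b, merged_centroid)
        clusters = [c for i, c in enumerate(clusters) if i not in (a, b)]
        clusters.append(merged)
    return [sorted(members) for members, _ in clusters]


# Hypothetical site profiles: three CAB means followed by three RS means.
sites = [
    [6.2, 6.0, 6.3, 6.1, 5.9, 6.2],  # high CAB, high RS
    [6.0, 6.1, 6.4, 5.8, 6.0, 6.1],
    [3.8, 3.6, 4.0, 3.9, 3.7, 4.1],  # low CAB, low RS
    [4.0, 3.5, 3.9, 3.6, 3.8, 4.0],
    [6.4, 6.3, 6.4, 4.3, 4.5, 4.7],  # high CAB, lower RS
    [6.3, 6.4, 6.4, 4.5, 4.4, 4.6],
]

groups = ward_clusters(sites, k=3)
print(sorted(groups))
```

Because each site contributes both its CAB and RS ratings to one profile, sites where the two partners disagree sit far from sites where both rate high or both rate low, which is how a discordant group can emerge as its own cluster.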

Results

Comparison of best practice frequency ratings

CABs and RS rated, on a seven-point Likert-type scale (1=never; 7=always), the perceived frequency of occurrence of 25 best practice activities, eleven of which were CAB-based and fourteen of which described RS functions. In using this scale, we assumed that frequency could be measured as an interval variable, our primary interest being to determine whether the two groups differ in their responses. Analysis of data from the 50 paired responses revealed a striking degree of similarity between the two groups. We detected no difference in the multivariate profiles of CAB and RS frequency ratings for the sets of activities related to community involvement, relevance of research, or communication and collaboration (Figures 1-3). Also, activity frequency ratings by both groups appear unrelated to whether an activity is specific to CABs or RS. We did observe significant variation between the activities reported to occur most frequently and least frequently within the community involvement [F(10, 89) = 13.30, p < .001], relevance of research [F(5, 94) = 5.36, p < .001], and communication and collaboration activities [F(7, 92) = 35.64, p < .001]. Given the variation (non-flatness) of these rating profiles, we assessed which of the rated activities deviated significantly from the pooled means for the respective set of activities. Applying a 99% confidence limit to account for multiple testing, we examined the marginal means for each activity in a set relative to the estimated parameters of the pooled means for each set.
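The flagging step described above can be sketched as follows. This is a simplified illustration, not the procedure used in the study: it treats all ratings in a set as independent observations, uses a normal (z) critical value rather than the parameter estimates from the profile analysis, and runs on hypothetical ratings. The function name `flag_activities` and the activity labels are assumptions.

```python
from statistics import NormalDist, mean, stdev


def flag_activities(ratings, alpha=0.01):
    """Flag activities whose mean falls outside the pooled-mean interval.

    ratings maps an activity label to a list of 1-7 frequency ratings.
    Returns ((lower, upper), flags), where flags maps each label to
    "above", "below", or "within".
    """
    pooled = [r for values in ratings.values() for r in values]
    pooled_mean = mean(pooled)
    # Two-sided critical value; alpha = .01 gives a 99% interval.
    z = NormalDist().inv_cdf(1 - alpha / 2)
    half_width = z * stdev(pooled) / len(pooled) ** 0.5
    lower, upper = pooled_mean - half_width, pooled_mean + half_width
    flags = {}
    for label, values in ratings.items():
        activity_mean = mean(values)
        if activity_mean > upper:
            flags[label] = "above"
        elif activity_mean < lower:
            flags[label] = "below"
        else:
            flags[label] = "within"
    return (lower, upper), flags


# Hypothetical ratings from five sites for three activities in one set.
example = {
    "activity_a": [7, 7, 6, 7, 7],
    "activity_b": [5, 5, 5, 5, 5],
    "activity_c": [3, 3, 4, 3, 3],
}

(lower, upper), flags = flag_activities(example)
print(f"99% interval for pooled mean: {lower:.2f} to {upper:.2f}")
print(flags)
```

Tightening alpha from .05 to .01 widens the interval, so fewer activities are flagged; this is the sense in which the 99% limit guards against spurious findings when many activities are tested within a set.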

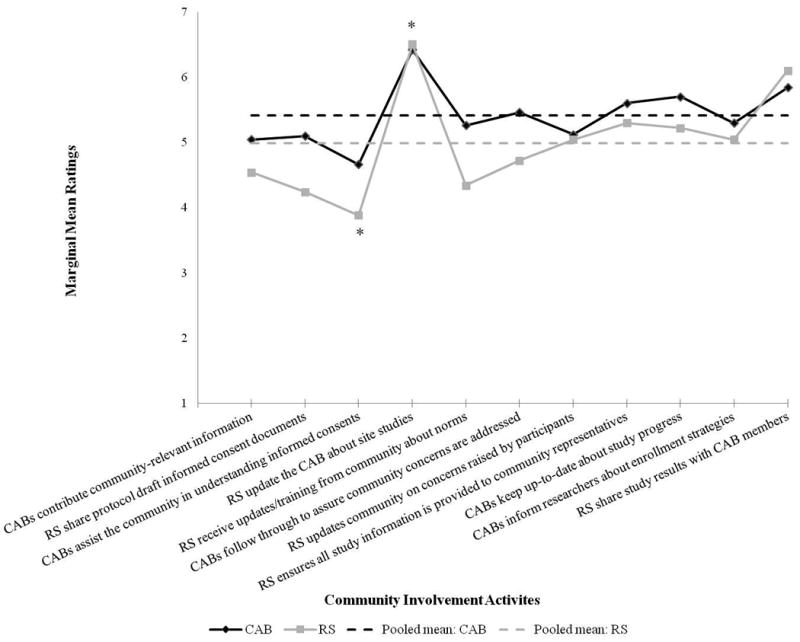

Figure 1.

Community Involvement activities. Marginal mean frequency ratings, by CAB and RS. Dotted lines represent the pooled mean ratings for all activities in the set. Rating Scale: 1=Never; 7=Always. Abbreviations: CAB = Community Advisory Boards; RS = Research Staff. * For each pair, activity rating significantly different than the pooled mean at p < .01.

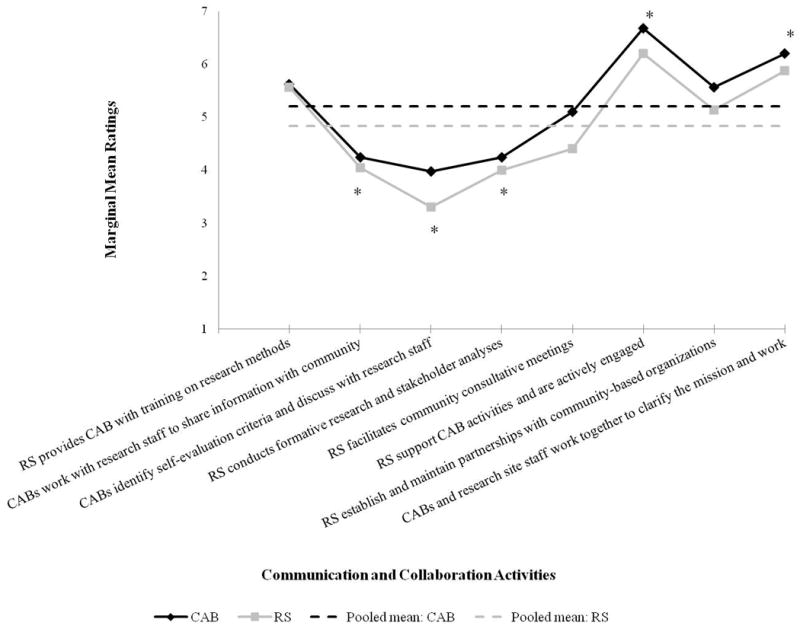

Figure 3.

Communication and Collaboration activities. Marginal mean frequency ratings, by CAB and RS. Dotted lines represent the pooled mean ratings for all activities in the set. Rating Scale: 1=Never; 7=Always. Abbreviations: CAB = Community Advisory Boards; RS = Research Staff. * For each pair, activity rating significantly different than the pooled mean at p < .01.

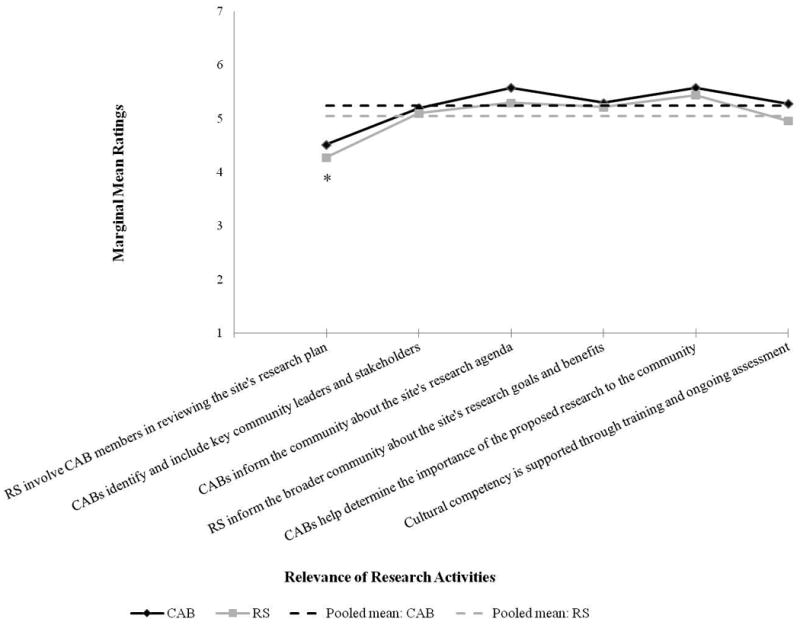

Figure 1 shows that CABs and RS rated the frequency of the activity “RS update the CAB about site studies” significantly higher than the respective pooled means for the entire set of community involvement activities. In contrast, RS and CABs indicated that the activity “CABs assist the community in understanding informed consents” occurred significantly less frequently than their respective pooled means for the set of community involvement activities. Figure 2 shows that CABs and RS rated the activity “RS involve CAB in reviewing site research plan” significantly lower than their respective pooled means for the set of relevance of research activities. Figure 3 shows that CABs and RS indicated that the activities “RS support CAB activities and are actively engaged” and “CAB and RS work together to clarify the mission and the work” occur significantly more frequently than the respective pooled means for the entire set of communication and collaboration activities. In contrast, CABs and RS rated the activities “RS performs formative research and stakeholder analyses,” “CABs identify self-evaluation criteria and discuss with research staff,” and “CABs work with research staff to share information with community” as occurring significantly less frequently than their respective pooled mean frequencies for the entire set of communication and collaboration activities.

Figure 2.

Relevance of Research activities. Marginal mean frequency ratings, by CAB and RS. Dotted lines represent the pooled mean ratings for all activities in the set. Rating Scale: 1=Never; 7=Always. Abbreviations: CAB = Community Advisory Boards; RS = Research Staff. * For each pair, activity rating significantly different than the pooled mean at p < .01.

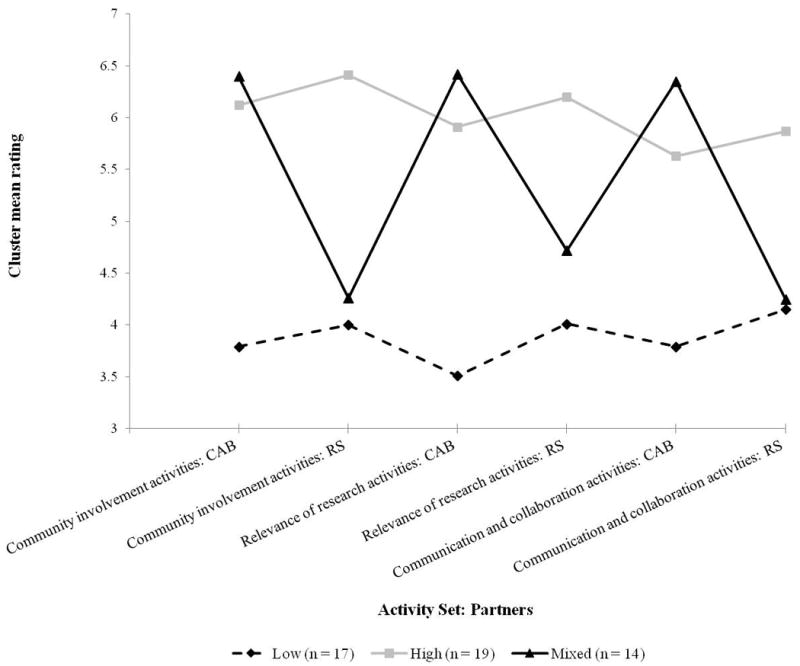

Although highly comparable patterns of responses were found between CAB and RS overall, the range of frequency ratings from CAB and RS (data not shown) suggested that there may be substantial variation among individual sites. We therefore sought to determine whether there were subgroups of sites. Using individual sites' summed total frequency ratings for the community involvement, relevance of research, and communication and collaboration activities, from both CAB and RS responses, a hierarchical cluster analysis produced three groups. Figure 4 shows the plot of average CAB and RS ratings for the three groups of sites, across the three activity areas. The largest group of sites (n = 19) was characterized by high average frequency ratings (mean range = 5.62 to 6.41) across all three areas of activity, from both CABs and RS (High group). A second group of sites (n = 17) was characterized by substantially lower average frequency ratings across all three areas, from both CAB and RS (mean range = 3.51 to 4.14) (Low group). A third group (n = 14) was characterized by high frequency ratings for activities related to community involvement, relevance of research, and communication and collaboration as assessed by CABs (mean range = 6.34 to 6.42), but substantially lower frequency ratings for all three activity areas as assessed by the RS (mean range = 4.25 to 4.75). This group (Mixed group), representing 28% of the sites, appears quite distinct from the rest, with a level of apparent discordance between CAB and RS not seen in the other 72% of sites.

Figure 4.

Site clusters: Mean frequency ratings, by CAB and RS, by site cluster (High, Mixed, Low). Data represent the mean rating for all activities within each of three sets: Community Involvement; Relevance of Research; Communication and Collaboration. Rating Scale: 1=Never; 7=Always. Abbreviations: CAB = Community Advisory Boards; RS = Research Staff.

Mission-related and operational challenge assessment

CABs and RS also provided information indicating which activities, or aspects of community-researcher interactions, posed significant challenges. Challenges were categorized in the survey as either mission-related or operational-related, with respondents selecting all that applied from a checklist. Among the three site groupings identified in the cluster analysis, there were no differences in the overall mean number of mission-related challenges by group as reported by either CAB or RS. However, the presence of two particular challenges, when indicated by both CABs and RS as significant, was associated with a specific group. “CAB member recruitment and participation” and “Effective communication between CAB and broader community” were indicated as challenges more often by the Low group than by either the High or Mixed group. There were no differences in the overall mean number of operational-related challenges by group, as reported by either CAB or RS, and none of the operational-related challenges was significantly associated with any of the groups.
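The comparison of the mean number of challenges across the three site groups is a one-way analysis of variance. The following is a minimal sketch of how the F statistic for such a comparison is formed; the function name and the challenge counts are hypothetical, and no p-value is computed because that would require the F distribution, which the Python standard library does not provide.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric groups."""
    all_values = [v for g in groups for v in g]
    grand_mean = mean(all_values)
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual values around their own group mean.
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical number of challenges checked per site, by cluster.
high_group = [1, 2, 3]
mixed_group = [2, 3, 4]
low_group = [3, 4, 5]

f_stat, df_between, df_within = one_way_anova_f(
    [high_group, mixed_group, low_group]
)
print(f"F({df_between}, {df_within}) = {f_stat:.2f}")
```

With three clusters and 50 sites, a one-way comparison of this kind would have 2 and 47 degrees of freedom.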

CAB needs assessment, budget awareness, resource prioritization

We also gathered information regarding: a) the frequency and means by which CAB support needs are assessed; b) budgets for CAB activities; and c) the means and impact of prioritizing CAB resources. The strong overall agreement between CAB and RS persisted. Both reported that almost two-thirds (CAB: 63.8%; RS: 64%) of sites use more than one method to assess CAB needs, and nearly all CABs have their needs assessed at least yearly. Similarly, CABs and RS agreed on CAB budget awareness, with 32% of CABs and 42% of RS (difference NS) reporting that their CAB was aware of the budget for CAB activities. Finally, regarding CAB expenditure prioritization, 44.9% of CABs and 46.0% of RS indicated a joint effort involving both partners. Sixteen percent of CABs and 20% of RS (difference NS) reported that the means by which CAB resources are prioritized pose obstacles to CAB functioning. None of these variables differed significantly among the three groups of sites.

Discussion

Since its initiation in 1990, community engagement has emerged to take its place among the factors now considered as critical to the success of NIAID's HIV/AIDS clinical trials networks (1). However, whereas other factors (e.g., scientific objectives, operations and management, resource utilization) have been subject to evaluation, little has been done to research the practices associated with effective community engagement or its meaningful integration into research activities. This study reports on our initial effort to explore this vital yet complex aspect of NIAID's HIV/AIDS clinical trials programs at the research site level, to better understand the extent to which community engagement is occurring.

Our most salient finding was that, overall, CABs and research staff had a high degree of concurrence about the frequency with which a diverse array of activities took place at their sites (Figures 1-3). This agreement was robust: it was observed in all three activity categories and across a broad range of frequency ratings, and it was not related to whether an activity was CAB- or RS-specific. We presume that the observed congruence indicates a common view of the extent to which the recommended best practices for community engagement are carried out at research sites. We surmise that our results signify, for most CABs and RS, a good awareness of the engagement activities at their sites.

Despite strong overall CAB-RS agreement, cluster analysis revealed a subset of sites (28%) in which CAB and RS activity assessments were discordant. Within this group, CABs consistently gave higher frequency ratings than RS for all three activity sets. At this time, we are unable to identify the reason(s) for the discordance. None of the following factors correlated with any of the clusters: 1) US vs. non-US; 2) prevention vs. treatment research; 3) proportion of CAB members with experience as trial participants; 4) means or frequency of CAB needs assessment; 5) budget awareness among CAB members; or 6) means of CAB expenditure prioritization. Future research will investigate other potential correlates, such as site longevity, CAB membership turnover, and funding for community activities (including paying CAB members), to determine if any of these distinguish discordant sites from the others. We observed one cluster-specific association, albeit for the “Low” frequency group, in their joint (both CAB and RS) designation of two significant mission-related challenges: “CAB member recruitment and participation” and “Effective communication between CAB and broader community.” These findings bear some resemblance to those of Cox et al. (2), who described a subset of AIDS research unit-affiliated CABs with lower participation.

We found variability in the perceived frequency of different activities, with several occurring at above-average rates. Why these activities are reported to take place more frequently than others is unknown. Several explanations are plausible, including CAB and RS skills in addressing the activities, the general nature of the activities (with multiple elements contributing to the frequency), and the importance of the activities to CABs and RS. By contrast, we also saw four low-frequency activities, potentially signifying their relatively lower importance or ineffectiveness in the CAB-research staff interaction. In the case of the evaluative activities by CAB and RS that received the lowest frequency ratings, it may be that sites lack the expertise or resources to support such work, that these activities happen at much longer intervals, or that they hold a lower priority. Collaborative, participatory analysis of these findings, together with future studies, will seek to extend these results and to identify activities that should be given priority for community engagement.

There are limitations to our approach and results. For instance, the use of a self-administered survey, the research partners' objectivity, the collection of a single, consensus-type response from each site, and the assessment of the perceived frequency with which activities ‘usually’ occur at sites (7, 8) constrained our ability to fully understand the complexity of community engagement. At this early juncture, it would be premature to generalize our findings to all sites. Though the subset of sites selected for analysis appears similar in several respects to the overall set of responding sites, slightly more than a third of sites did not return a survey. If the non-responding sites were lower functioning (or differed in some other material way), their absence may have biased our findings. Also, our analytic treatment of the 7-point response scale as interval data (rather than ordinal) could be subject to debate. Our primary interest was in seeing if CABs and RS differ, rather than precisely estimating the central tendency of the frequencies. Thus, even if the data were ordinal, we believe that they would be similarly ordinal for both groups. Unless the nature of the “misinterpretation” of intervals differed systematically between the two groups, similar non-normal distributions in both groups would not bias a test of mean differences. As such, given the intent of our analysis, we believe that our treatment of the data as interval is justified in this context. Our survey may also have had an observable process use effect (9), as indicated by respondent comments reflecting the stimulation of evaluative thinking and learning from the survey questions. Further research is needed to determine the replicability of findings or their relevance to other contexts, given the many different models of community engagement in health research (10-15). We studied CAB-RS partnerships because this has been the primary form of engagement in our programs (2, 16-18) for many years. Though CABs certainly can vary in many ways (2, 16-21), the NIAID site CABs are joined in a well-established long-term partnership framework, operate in similar contexts, and follow a shared set of community-authored best practice guidelines (4). As such, we believe that their comparison is reasonable.
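One way to probe the interval-scale assumption discussed above is to repeat a CAB-RS comparison using only the direction of each paired difference, which requires nothing more than ordinal data. The following is a minimal sketch of an exact two-sided sign test. It was not part of the analysis reported here; the function name and the example ratings are hypothetical.

```python
from math import comb


def sign_test(cab, rs):
    """Exact two-sided sign test for paired ratings; ties are dropped.

    Returns the p-value for the null hypothesis that a CAB rating is as
    likely to exceed its paired RS rating as to fall below it.
    """
    diffs = [c - r for c, r in zip(cab, rs) if c != r]
    n = len(diffs)
    if n == 0:
        return 1.0
    positives = sum(d > 0 for d in diffs)
    tail = min(positives, n - positives)
    # Binomial(n, 0.5) probability of a split at least this uneven.
    p_value = 2 * sum(comb(n, i) for i in range(tail + 1)) / 2 ** n
    return min(1.0, p_value)


# Hypothetical paired ratings for one activity at six sites.
cab_ratings = [6, 5, 7, 4, 6, 5]
rs_ratings = [5, 6, 6, 5, 6, 4]
p_balanced = sign_test(cab_ratings, rs_ratings)

# Hypothetical case in which the CAB rating is higher at every site.
p_one_sided = sign_test([7, 6, 7, 6, 7, 6], [4, 5, 4, 5, 4, 5])

print(f"balanced differences:   p = {p_balanced:.3f}")
print(f"CAB consistently higher: p = {p_one_sided:.3f}")
```

If a rank- or sign-based comparison leads to the same conclusion as the test of mean differences, the choice between interval and ordinal treatment matters little for the question of whether the two groups differ.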

Many have pointed to the challenges of evaluating the effectiveness of community engagement in health research, including the complexities of defining community and specifying what is meant by effectiveness (10, 13, 20, 22-26). The National Institutes of Health Clinical and Translational Science Awards (CTSAs) have outlined fundamental principles and guidelines (27). Our approach is distinct in that it used a collaborative, participatory process as the basis for conceptualizing not only the survey described here, but a larger, integrated evaluation framework that identified several categories of success factors, outputs and measures for evaluating the NIAID HIV/AIDS clinical trials networks (1). We also utilized a systems thinking perspective (28, 29) in the way that we integrated different success factors (e.g., scientific agenda setting, relevance of research to communities, communication and collaboration) with the evaluation of community engagement. Rather than looking at community engagement in isolation as a ‘stand alone’ activity, we seek to study and understand it in the context of other key success factors so we can learn how they interrelate. To date, we are unaware of any comparable efforts to assess community engagement in the context of such a large, complex research initiative. In our context, community engagement is represented in the form of CAB-RS partnerships, with effectiveness seen primarily in terms of the beneficial impact on the research. Green and Mercer (15) have indicated that, in such partnerships, the benefits to research are usually in the form of improved applicability and usability in the settings in which the studies take place. Our study gathered quantifiable data about three sets of activities that relate closely to these benefits. However, since these activities are processes and not outcomes, they do not measure the effectiveness of CAB-RS partnerships on the research, but with further study, may be shown to be useful indicators.

We plan to explore other means of assessing the impact of community engagement in our research. Presuming that effective community-researcher partnerships result in studies with greater relevance and fit to local communities, we plan to investigate trial parameters (e.g., screening, enrollment, and retention rates, meeting demographic targets, etc.) which may be indicators of quality engagement (if other influences are accounted for). Systematic case studies of select protocols, especially if integrated with process modeling, could provide a detailed picture of how CABs and RS engage in protocol selection and implementation, and point towards better or additional measures. We also seek collaboration with experts in theory-based approaches (30), especially if these can be adapted to address ways in which partnerships influence research.

Because most research does not get translated into health improvements (31-35), researchers and funders are increasingly accountable for demonstrating impact (36, 37) and assessing “payback” (38). Community partnerships can play a vital role in knowledge dissemination for policy making and implementation (39, 40). These and subsequent efforts to improve evaluation will be a key component in the effectiveness of these partnerships.

Acknowledgments

This project has been funded in whole or in part with funds from the United States Government Department of Health and Human Services, National Institutes of Health (NIH), National Institute of Allergy and Infectious Diseases (NIAID), under: 1) Grant U01AI068614, “Leadership Group for a Global HIV Vaccine Clinical Trials Network” (Office of HIV/AIDS Network Coordination) to the Fred Hutchinson Cancer Research Center, and; 2) a subcontract to Concept Systems, Inc. under Contract N01AI-50022, “HIV Clinical Research Support Services”. The project also received support under Grant UL1RR024996, “Clinical Translational Science Award” (CTSA), from the NIH National Center for Research Resources (NCRR), to Weill Cornell Medical College.

The authors would like to thank the Community Coordinators of the six NIAID HIV/AIDS Clinical Trials Networks who assisted with the design, dissemination and interpretation of this survey: Allegra Cermak (AIDS Clinical Trials Group); Jonathan Lucas (HIV Prevention Trials Network); Carrie Schonwald (HIV Vaccine Trials Network); Claire Schuster (International Maternal Pediatric Adolescent AIDS Clinical Trials Group); Claire Rappaport (International Network for Strategic Initiatives in Global HIV Trials); and Rhonda White (Microbicide Trials Network). Thanks also to Brendan Cole (NIAID Division of AIDS) for project support, and special recognition to Daniel Montoya for his vision in establishing the Community Partners.

References

- 1.Kagan JM, Kane M, Quinlan KM, Rosas S, Trochim WMK. Developing a conceptual framework for an evaluation system for the NIAID HIV/AIDS clinical trials networks. Health Res Policy Syst. 2009;7:12. doi: 10.1186/1478-4505-7-12. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Cox LE, Rouff JR, Svendsen KH, Markowitz M. Abrams DI and the Terry Beirn Community Programs for Clinical Research on AIDS: Community advisory boards: Their role in AIDS clinical trials. Health Soc Work. 1998;23(4):290–297. doi: 10.1093/hsw/23.4.290. [DOI] [PubMed] [Google Scholar]

- 3.Fauci AS. After 30 Years Of HIV/AIDS, Real Progress And Much Left To Do. [Accessed 05 Aug 2011];Washington Post. 2011 May 27; http://www.washingtonpost.com/opinions/after-30-years-of-hivaids-real-progress-and-much-left-to-do/2011/05/27/AGbimyCH_story.html.

- 4.Recommendations for Community Involvement in National Institute of Allergy and Infectious Diseases HIV/AIDS Clinical Trials Research. [Accessed 05 Aug 2011];2009 http://www.niaid.nih.gov/about/organization/daids/Networks/Documents/cabrecommendations.pdf.

- 5.www.surveymonkey.com

- 6.Ward JH. Hierarchical grouping to optimize an objective function. J Am Stat Assoc. 1963;58:236–244. [Google Scholar]

- 7.Hayward LM, Demarco R, Lynch MM. Interprofessional collaborative alliances: Health Care Educators Sharing and Learning from Each Other. J Allied Health. 2000;29(4):220–226. [PubMed] [Google Scholar]

- 8.Kegler MC, Steckler A, McLeroy K, Malek SH. Factors that Contribute to Effective Community Health Promotion Coalitions: A Study of 10 Project ASSIST Coalitions in North Carolina. Health Educ Behav. 1998;25(3):338–353. doi: 10.1177/109019819802500308. [DOI] [PubMed] [Google Scholar]

- 9.Patton MQ. Utilization Focused Evaluation. 4th. Thousand Oaks, CA: Sage Publications; 2008. [Google Scholar]

- 10.Israel BA, Schultz AJ, Parker EA, Becker AB. Review of community-based research: assessing partnership approaches to improve public health. Ann Rev Pub Health. 1998;19:173–202. doi: 10.1146/annurev.publhealth.19.1.173. [DOI] [PubMed] [Google Scholar]

- 11.Leung MW, Yen IH, Minkler M. Community-based participatory research: a promising approach for increasing epidemiology's relevance in the 21st century. Int J Epidemiol. 2004;33:499–506. doi: 10.1093/ije/dyh010. [DOI] [PubMed] [Google Scholar]

- 12.Australian Government, National Health and Medical Research Council. A Model Framework for Consumer and Community Participation in Health and Medical Research. [Accessed 05 Aug 2011];2004 Dec; http://www.nhmrc.gov.au/_files_nhmrc/publications/attachments/r33.pdf.

- 13.Tindana PO, Singh JA, Tracy CS, Upshur REG, Daar AS, Singer PA, Frohlich J, Lavery JV. Grand Challenges in Global Health: Community Engagement in Research in Developing Countries. PLoS Med. 2007;4(9):e273. doi: 10.1371/journal.pmed.0040273. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Brenner BL, Manice MP. Community Engagement in Children's Environmental Health Research. Mt Sinai J Med. 2011;78:85–97. doi: 10.1002/msj.20231. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Green LW, Mercer SL. Can Public Health Researchers and Agencies Reconcile the Push from Funding Bodies and the Pull from Communities? Am J Public Health. 2001;91(12):1926–1929. doi: 10.2105/ajph.91.12.1926. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Morin SF, Maiorana A, Koester KA, Sheon NM, Richards TA. Community Consultation in HIV Prevention Research: A Study of Community Advisory Boards at 6 Research Sites. JAIDS. 2003;33:513–520. doi: 10.1097/00126334-200308010-00013. [DOI] [PubMed] [Google Scholar]

- 17.Strauss RP, Sengupta S, Quinn SC, Goeppinger J, Spaulding C, Kegeles SM, Millett G. The Role of Community Advisory Boards: Involving Communities in the Informed Consent Process. Am J Public Health. 2001;91(12):1938–1943. doi: 10.2105/ajph.91.12.1938. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Strauss RP. Community Advisory Board-Investigator Relationships in Community-Based HIV/AIDS Research. In: King NMP, Henderson GE, Stein J, editors. Beyond Regulations: Ethics in Human Subjects Research. Chapel Hill, NC: University of North Carolina Press; 1999.

- 19.MacQueen KM, McLellan E, Metzger DS, Kegeles S, Strauss RP, Scotti R, Blanchard L, Trotter R. What is Community? An Evidence-Based Definition for Participatory Public Health. Am J Public Health. 2001;91(12):1929–1938. doi: 10.2105/ajph.91.12.1929.

- 20.Quinn SC. Protecting Human Subjects: The Role of Community Advisory Boards. Am J Public Health. 2004;94(6):918–922. doi: 10.2105/ajph.94.6.918.

- 21.Merzel C, D'Afflitti J. Reconsidering Community-Based Health Promotion: Promise, Performance, and Potential. Am J Public Health. 2003;93(4):557–574. doi: 10.2105/ajph.93.4.557.

- 22.Newman PA. Towards a Science of Community Engagement. Lancet. 2006;367:302. doi: 10.1016/S0140-6736(06)68067-7.

- 23.Page-Shafer K, Saphonn V, Sun LP, Vun MC, Cooper DA, Kaldor JM. HIV Prevention Research in a Resource-Limited Setting: The Experience of Planning a Trial in Cambodia. Lancet. 2005;366:1499–1503. doi: 10.1016/S0140-6736(05)67146-2.

- 24.Dickert N, Sugarman J. Ethical Goals of Community Consultation in Research. Am J Public Health. 2005;95(7):1123–1127. doi: 10.2105/AJPH.2004.058933.

- 25.Christoffel KK, Eder M, Kleinman LC, Hacker K. Developing a Logic Model to Guide Community Engagement Metrics. [Accessed 05 Aug 2011];2011 https://www.dtmi.duke.edu/about-us/organization/duke-center-for-community-research/community-engagement-consultative-service-cecs/ce-literary-resources/LogicModel.pdf.

- 26.Rainwater J. Evaluation and Community Engagement. [Accessed 05 Aug 2011];2011 http://wiki.labctsi.org/download/attachments/1639943/Rainwater_CE_Prog_Eval_Workshop__062111.pdf?version=1&modificationDate=1309303800000.

- 27.Clinical and Translational Science Awards Consortium: Community Engagement Key Function Committee Task Force on Principles of Community Engagement. Principles of Community Engagement. Second Edition. NIH; Bethesda, MD: 2011. [Accessed 05 Aug 2011]. NIH Publication No. 11-7782. http://www.atsdr.cdc.gov/communityengagement/pdf/PCE_Report_508_FINAL.pdf.

- 28.Best A, Clark PI, Leischow SJ, Trochim WMK, editors. Greater Than the Sum: Systems Thinking in Tobacco Control. Bethesda, MD: U.S. Department of Health and Human Services, National Institutes of Health, National Cancer Institute; Apr, 2007. [Accessed 05 Aug 2011]. Tobacco Control Monograph No. 18. NIH Pub. No. 06-6085. http://cancercontrol.cancer.gov/tcrb/monographs/18/m18_complete.pdf.

- 29.Trochim W, Cabrera DA, Milstein B, Gallagher RS, Leischow SJ. Practical Challenges of Systems Thinking and Modeling in Public Health. Am J Public Health. 2006;96(3):538–546. doi: 10.2105/AJPH.2005.066001.

- 30.King G, Servais M, Kertoy M, Specht J, Currie M, Rosenbaum P, Law M, Forchuk C, Chalmers H, Willoughby T. A measure of community members' perceptions of the impacts of research partnerships in health and social services. Eval Program Plann. 2009;32:289–299. doi: 10.1016/j.evalprogplan.2009.02.002.

- 31.Graham ID, Logan J, Harrison MB, Straus SE, Tetroe J, Caswell W, et al. Lost in knowledge translation: time for a map? J Contin Educ Health Prof. 2006;26(1):13–24. doi: 10.1002/chp.47.

- 32.Kerner JF. Knowledge translation versus knowledge integration: a “funder's” perspective. J Contin Educ Health Prof. 2006;26(1):72–80. doi: 10.1002/chp.53.

- 33.Lavis JN. Research, public policymaking, and knowledge translation processes: Canadian efforts to build bridges. J Contin Educ Health Prof. 2006;26(1):37–45. doi: 10.1002/chp.49.

- 34.Pablos-Mendez A, Shademani R. Knowledge translation in global health. J Contin Educ Health Prof. 2006;26(1):81–86. doi: 10.1002/chp.54.

- 35.Trochim W, Kane C, Graham MJ, Pincus HA. Evaluating Translational Research: A Process Marker Model. Clin Transl Sci. 2011;4(3):153–162. doi: 10.1111/j.1752-8062.2011.00291.x.

- 36.Kuruvilla S, Mays N, Walt G. Describing the impact of health services and policy research. J Health Serv Res Policy. 2007;12(Suppl 1):23–31. doi: 10.1258/135581907780318374.

- 37.Lavis J, Ross S, McLeod C, Gildiner A. Measuring the impact of health research. J Health Serv Res Policy. 2003;8(3):165–170. doi: 10.1258/135581903322029520.

- 38.Buxton M, Hanney S. How can payback for health services research be assessed? J Health Serv Res Policy. 1996;1:35–43.

- 39.Lairumbi GM, Molyneux S, Snow RW, Marsh K, Peshu N, English M. Promoting the social value of research in Kenya: Examining the practical aspects of collaborative partnerships using an ethical framework. Soc Sci Med. 2008;67:734–747. doi: 10.1016/j.socscimed.2008.02.016.

- 40.Fernández-Peña JR, Moore L, Goldstein E, DeCarlo PM, Grinstead O, Hunt C, Bao D, Wilson H. Making Sure Research Is Used: Community-Generated Recommendations for Disseminating Research. Progress in Community Health Partnerships: Research, Education, and Action. 2008;2(2):171–176. doi: 10.1353/cpr.0.0013.