Abstract

The conditions under which we identify entities as animate agents and the neural mechanisms supporting this ability are central questions in social neuroscience. Prior studies have focused upon 2 perceptual cues for signaling animacy: 1) surface features representing body forms such as faces, torsos, and limbs and 2) motion cues associated with biological forms. Here, we consider a third cue—the goal-directedness of an action. Regions in the social brain network, such as the right posterior superior temporal sulcus (pSTS) and fusiform face area (FFA), are activated by human-like motion and body form perceptual cues signaling animacy. Here, we investigate whether these same brain regions are activated by goal-directed motion even when performed by entities that lack human-like perceptual cues. We observed an interaction effect whereby the presence of either human-like perceptual cues or goal-directed actions was sufficient to activate the right pSTS and FFA. Only stimuli that lacked human-like perceptual cues and goal-directed actions failed to activate the pSTS and FFA at the same level.

Keywords: animacy, fMRI

Introduction

Differentiating animate and inanimate agents is an early-emerging ability that is critical for adaptive social behavior. Recognizing that an entity is animate is crucial for informing whether we should allocate attentional resources toward it, for anticipating how it might behave, for determining whether we should approach or avoid it, and for determining how to appropriately interact with it. Given the importance of animacy detection for ensuring adaptive responses to living beings, it is not surprising that infants as young as 2 days old exhibit a spontaneous preference for biological versus nonbiological motion (Simion et al. 2008). Indeed, visually inexperienced chicks show similar preferences, suggesting that the detection of animate motion is an evolutionarily well-conserved ability biasing animals to attend to other living things (Vallortigara et al. 2005). Yet, despite the centrality of animacy detection in social life, there is still much debate about the conditions under which we attribute animacy to an entity and the neural mechanisms that support this ability.

Here, we use functional magnetic resonance imaging (fMRI) to examine the conditions under which we perceive or classify entities in the environment as animate. One position is that entities are characterized as animate on the basis of 2 kinds of perceptual cues: 1) surface features, such as a human’s face or limbs (Carey and Spelke 1994, 1996; Baron-Cohen 1995; Guajardo and Woodward 2004) and 2) biological motion cues, such as self-propelled motion (Premack 1990; Leslie 1994, 1995; Baron-Cohen 1995), nonrigid transformation (Gibson et al. 1978), and the ability to react contingently and reciprocally with other entities (Premack 1990, 1991; Spelke et al. 1995). Thus, in this view, we attribute animacy to entities only insofar as their surface features and motion trajectories are human- or animal-like and characteristic of animate agents (henceforth, we will refer to human-like surface features and motion while recognizing that our argument includes the motions and features of non-human animals). Research shows that young infants use these perceptual cues to discriminate animate and inanimate entities and will exclusively imitate the goals of animate agents. For example, infants will imitate goals performed by human actors but not those performed by entities lacking perceptual animacy cues such as a robotic device (Meltzoff 1995; Woodward 1998; Hamlin et al. 2009) or even a human hand whose surface properties are obscured by a metallic glove (Guajardo and Woodward 2004).

A second and nonmutually exclusive view is that we attribute animacy to entities that behave in a meaningful goal-directed way. In other words, an action that is purposeful and efficient within the constraints of the surrounding environment is perceived as being initiated by an animate agent (Csibra et al. 1999, 2003; Gergely and Csibra 2003; Biro et al. 2007). In this view, human-like surface features and biological motion may both be sufficient for signaling animacy, but neither perceptual cue may be necessary. Rather, animacy is attributed to entities performing goal-directed actions, regardless of their surface features or motion trajectories. In support of this view, infants have been shown to attribute goals to computer-animated shapes (Gergely et al. 1995; Csibra et al. 2003; Wagner and Carey 2005), inanimate boxes (Csibra 2008), and even to biomechanically impossible actions (Southgate et al. 2008) if the observed actions are purposeful and efficient given the constraints of the surrounding environment. This strategy of attributing goal-directed actions to animate agents may be a functional evolutionary adaptation; before the advent of machines and mechanical devices, all goal-directed actions were initiated by animate agents.

Neuroimaging studies have shown that a network of brain regions, including the right fusiform gyrus, right posterior superior temporal sulcus (pSTS) and the adjacent temporoparietal junction (TPJ), and medial prefrontal cortex, is activated by perceptual cues signaling animacy. For instance, viewing static representations of human surface features, such as faces and body parts, activates components of this network including the right fusiform gyrus (Haxby et al. 1994; Puce et al. 1995; Kanwisher et al. 1997), right pSTS (Puce et al. 1995), and a region inferior to the right pSTS and adjacent to the motion-sensitive middle temporal (MT) area, known as the extrastriate body area (EBA) (Downing et al. 2001; Grossman and Blake 2002).

This same network of brain regions also responds to entities that move in ways that are characteristic of humans (self-propelled motion, nonrigid transformation, and contingent reactivity), even in the absence of human-like surface features. Regions of the right pSTS and fusiform gyrus show equivalent levels of activation in response to the motion of a human actor and that of a robot (Pelphrey et al. 2003; Shultz et al. 2010). In addition, regions of the medial prefrontal cortex, TPJ, pSTS, and fusiform gyrus respond to animated non-human shapes that move in a contingent and self-propelled manner (Castelli et al. 2000; Blakemore et al. 2003; Schultz et al. 2003). Furthermore, a recent study (McCarthy et al. 2009) examined the role of motion cues in animacy detection using the “wolfpack” effect (Gao et al. 2010), wherein the percept of animacy is evoked by randomly moving shapes that consistently point toward a moving target. The authors demonstrated that the independently localized fusiform face area (FFA) and pSTS were activated when darts were perceived as animate but not in a control condition in which the darts were oriented 90° away from the target, thereby destroying the percept of animacy. The patterns of activation in the fusiform gyrus and pSTS for static face perception and biological motion perception are strikingly similar (e.g., cf. the results of Puce et al. (1996) and Bonda et al. (1996)). As form and motion are mutually constrained and highly correlated, this similarity may be expected.

The aforementioned neuroimaging studies demonstrate that viewing perceptual cues signaling animacy (human-like surface features and human-like motion) activates similar brain regions involved in social perception. Less is known about whether these same brain regions are activated by purposeful motion performed by entities that have neither human surface features nor motion characteristics. It could be argued that activation in response to clearly non-human stimuli, such as robotic arms or animated shapes, provides evidence that perceptual animacy cues are not necessary to evoke activity in these brain regions. However, it is important to note that the stimuli used in these studies, while not always human-like in their surface features, did have other perceptual characteristics of animacy such as self-propelled motion, nonrigid transformation, and the ability to adaptively alter their path of motion to accommodate changes in the environment. A stronger test of whether goal-directed actions signal animacy in the absence of perceptual animacy cues requires the use of inanimate motion whose surface features and motion trajectories do not resemble those of an animate entity.

The present study used fMRI to investigate whether brain regions involved in the perception of animacy are activated by these 2 different cues for animacy: human-like perceptual cues (human-like surface features combined with human-like motion trajectories) and purposeful goal-directed actions. Adult participants were presented with movies depicting machines engaged in different motions. Machines were chosen as stimuli because their properties can vary along our 2 dimensions of interest: 1) how human-like they appear in terms of surface features and motion and 2) whether their actions are goal-directed. For example, some machines, such as welding machines on a car assembly line, mimic human joints and their range of motions while carrying out goal-directed acts. Other machines, such as the pinsetter at a bowling alley, neither look nor move as a human would, but can nevertheless carry out a goal-directed act.

In the present study, machines were classified as human-like insofar as their surface features and motion trajectories resembled those of a human. Non-human-like machines did not have human-like surface features and moved in ways that were biomechanically impossible for humans. Goal-directed actions were operationalized as actions that have an immediate effect on the surrounding environment, such as moving an object or cutting an object. Non-goal-directed actions were defined as actions that had no immediate effect on the surrounding environment, such as simply moving through space. By selecting movies that varied along these 2 dimensions, humanness and goal-directedness, we presented participants with movies comprising 4 conditions: 1) human-like machines producing goal-directed actions, 2) human-like machines producing non-goal-directed actions, 3) non-human-like machines producing goal-directed actions, and 4) non-human-like machines producing non-goal-directed actions (see Fig. 1 for example still frames from each condition). These 4 conditions allowed us to test whether brain regions involved in animacy perception are driven exclusively by human-like perceptual cues signaling animacy or whether they are also sensitive to purposeful goal-directed actions, even in the absence of human-like surface and motion features.

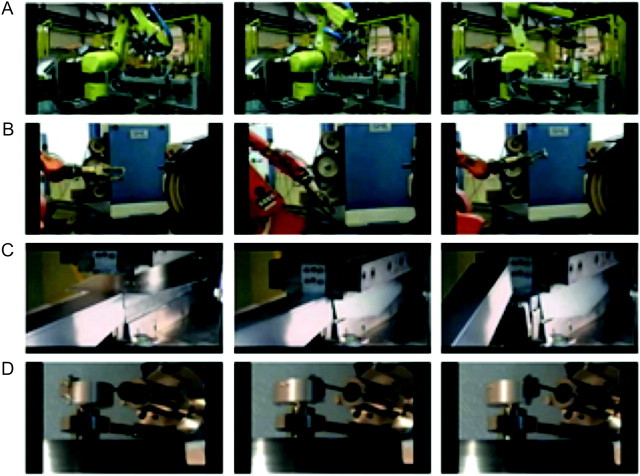

Figure 1.

Example still frames from the 4 experimental conditions. (A) Human-like goal-directed actions: An arm-like machine picks up an object and moves it to another location. (B) Human-like non-goal-directed actions: An arm-like machine moves through space without completing a goal. (C) Non-human-like goal-directed actions: A metal beam descends in a rigid linear manner and cuts a piece of metal. (D) Non-human-like non-goal-directed actions: The machine rotates and pumps without completing a goal. Images courtesy of Science Channel/DCL.

We were particularly interested in the response of the right pSTS and right fusiform gyrus to human-like and goal-directed actions given the consistency with which these regions have been reported in previous studies of animacy perception (Castelli et al. 2000; Blakemore et al. 2003; Schultz et al. 2003). In addition, it was recently demonstrated that the spontaneous blood oxygen level–dependent signals from the pSTS and fusiform gyrus temporally covary at rest, suggesting that these regions may be key nodes in a latent network for social processing (Turk-Browne et al. 2010). Consistent with prior studies indicating that the right pSTS and right fusiform gyrus activate in response to perceptual animacy cues, we predicted that these regions would respond more strongly to human-like machine motion. In addition, if goal-directedness is an effective animacy cue, even in the absence of human-like perceptual cues, then we would predict increased activation in the right pSTS and right FFA in response to goal-directed actions. Thus, if the right pSTS and right FFA are sensitive to both types of animacy cues, human-like perceptual cues and goal-directedness, then we expect to find an interaction whereby the pSTS and FFA respond more strongly to human-like goal-directed actions, human-like non-goal-directed actions, and non-human-like goal-directed actions compared with non-human-like non-goal-directed actions.

Materials and Methods

Subjects

Twenty-five subjects (14 female, 11 male, average age = 24 years, all right-handed) with normal vision and no history of neurological or psychiatric illness participated in this study. All subjects gave written informed consent and the Yale Human Investigations Committee approved the protocol.

Stimuli

Stimuli consisted of 112 movies comprising 4 conditions: 1) human-like machines producing goal-directed actions, 2) human-like machines producing non-goal-directed actions, 3) non-human-like machines producing goal-directed actions, and 4) non-human-like machines producing non-goal-directed actions. Movies were selected from a variety of online sources and edited into 3-s segments. We selected 28 unique movies per condition. The machine types, backgrounds, and type of motion varied among stimuli, and we attempted to distribute these variations in perceptual features equally across conditions. We also performed a frame-by-frame analysis of luminance and motion for each movie. Two-way analyses of variance (ANOVAs) on luminance and on motion revealed no main effect of humanness (luminance: F(1,108) = 0.053, P = 0.82; motion: F(1,108) = 0.90, P = 0.35), no main effect of goal-directedness (luminance: F(1,108) = 0.007, P = 0.94; motion: F(1,108) = 0.004, P = 0.95), and no interaction between humanness and goal-directedness (luminance: F(1,108) = 0.436, P = 0.51; motion: F(1,108) = 0.46, P = 0.50).

To assess the validity of our classification of movies as human-like or non-human-like and goal-directed or non-goal-directed, participants rated the movies along the 2 dimensions of interest (humanness and goal-directedness) after completing the fMRI protocol. Participants were given the following verbal instructions: “You will be shown the movies of machines that you saw in the scanner. The movies will be presented one at a time. After a movie is shown, you will be asked 2 questions about it. The first question is “how human-like was the action performed by the machine?” Some of the machines may have seemed more human-like because they moved in a way that a human can move (e.g., machines that had an “arm-like” structure), whereas other machines may have seemed non-human-like because they moved in a way that would be impossible for a human to move. A bar will appear on the screen with one end labeled “most human-like imaginable” and the other end labeled “least human-like imaginable.” Make your rating by clicking anywhere along the bar. The second question is “was this action goal-directed?” By goal-directed we mean an action that has an immediate effect on the surrounding environment, such as moving an object or cutting an object. A non-goal-directed action would be when something moves through space without actually doing anything. You can respond to this question by clicking yes or no.”

Movie ratings were analyzed from 24 of the 25 subjects (one subject’s ratings were accidentally overwritten). The ratings confirmed our classification of the movies into the 4 conditions of interest (human-like goal-directed actions, human-like non-goal-directed actions, non-human-like goal-directed actions, non-human-like non-goal-directed actions). Movies classified as human-like were rated as being significantly more human-like than movies classified as non-human-like (t(23) = 14.98, P < 0.001). In addition, movies classified as goal-directed were rated significantly higher in goal-directedness compared with movies classified as non-goal-directed (t(23) = 19.97, P < 0.001).

Task and Procedure

Main Experiment

The 112 movies (28 movies per condition) were presented over the course of 4 runs. Each movie lasted 3 s and the presentation of successive movies was separated by a randomly chosen 3-, 5-, or 7-s rest period. Movies were presented in pseudorandom order such that 7 movies per condition were played in each run. On 3 randomly selected trials per run, a movie repeated on the immediately following trial (one-back task) and participants were instructed to respond with a button press to the repeated movie. All participants responded correctly on at least 8 of the 12 repeated trials. The repeated trials were excluded from subsequent analyses.
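The presentation scheme described above can be sketched in a few lines. This is only an illustration under stated assumptions, not the authors' presentation code: the function name `build_runs`, the condition labels, and the movie identifiers are hypothetical, "pseudorandom order" is implemented as a plain shuffle, and the 3 repeated (one-back) trials are assumed to be added on top of the 28 unique movies in each run.

```python
import random

# Hypothetical labels for the 4 conditions (humanness x goal-directedness).
CONDITIONS = ["human_goal", "human_nongoal", "nonhuman_goal", "nonhuman_nongoal"]

def build_runs(n_runs=4, per_condition=28, repeats_per_run=3, seed=0):
    """Return a list of runs; each run is a list of trial dictionaries."""
    rng = random.Random(seed)
    per_run = per_condition // n_runs  # 7 movies per condition in each run

    # Made-up movie identifiers, shuffled within each condition.
    pools = {}
    for cond in CONDITIONS:
        ids = [f"{cond}_{i:02d}" for i in range(per_condition)]
        rng.shuffle(ids)
        pools[cond] = ids

    runs = []
    for r in range(n_runs):
        movies = [(cond, m)
                  for cond in CONDITIONS
                  for m in pools[cond][r * per_run:(r + 1) * per_run]]
        rng.shuffle(movies)
        # Positions whose movie is shown again on the next trial (one-back).
        repeat_at = set(rng.sample(range(len(movies)), repeats_per_run))

        trials = []
        for i, (cond, movie) in enumerate(movies):
            trials.append({"condition": cond, "movie": movie,
                           "is_repeat": False,
                           "rest_s": rng.choice([3, 5, 7])})
            if i in repeat_at:
                trials.append({"condition": cond, "movie": movie,
                               "is_repeat": True,
                               "rest_s": rng.choice([3, 5, 7])})
        runs.append(trials)
    return runs

runs = build_runs()
```

Trials flagged with `is_repeat` would be the ones dropped before analysis, mirroring the exclusion of repeated trials described above.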

Localizer Tasks

In addition to the main experiment, participants completed 2 localizer tasks to independently identify our a priori functional regions of interest, the FFA and the pSTS. A face-scene localizer task was used to identify face-sensitive regions of the ventral occipitotemporal cortex (VOTC) including the FFA. A biological motion localizer task was used to localize regions of lateral occipitotemporal cortex (LOTC) including the pSTS activated by biological motion.

The face-scene localizer was presented to 17 of the 25 subjects. In the face-scene task, subjects were presented with a block of 10 black and white faces (1 per 1.6 s for 16 s), followed by a 12 s fixation rest block, and then by a block of 10 black and white scene trials (1 per 1.6 s for 16 s). This block pattern repeated 6 times (using different faces and different scenes) in a single run.

The biological motion localizer was presented to all 25 subjects. In the biological motion task, subjects viewed point light displays (PLDs) of moving humans and scrambled control PLDs. Scrambled PLDs were created by taking the human PLDs and randomly permuting the initial positions of the dots to eliminate the percept of human motion. There were 16 animations of human motion PLDs in a 32 s block (1 animation per 2 s), followed by a 12 s fixation rest block, and then 16 scrambled animations in a 32 s block. This pattern repeated 4 times in a single run.
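One way to read the scrambling procedure is that each dot keeps its own frame-to-frame motion but starts from another dot's initial position. The sketch below illustrates that reading only; the function name `scramble_pld` and the data layout (one list of `(x, y)` positions per dot) are assumptions made for illustration, not a description of the software actually used.

```python
import random

def scramble_pld(trajectories, seed=None):
    """Scramble a point-light display.

    trajectories: list of dots, each a list of (x, y) positions per frame.
    Each dot keeps its own displacements over time, but its starting
    position is swapped with that of a randomly chosen dot.
    """
    rng = random.Random(seed)
    starts = [traj[0] for traj in trajectories]
    shuffled = starts[:]
    rng.shuffle(shuffled)

    scrambled = []
    for traj, (sx, sy) in zip(trajectories, shuffled):
        x0, y0 = traj[0]
        # Re-anchor the dot's trajectory at its new starting position.
        scrambled.append([(sx + x - x0, sy + y - y0) for x, y in traj])
    return scrambled

# Toy 3-dot, 3-frame display with made-up integer coordinates.
human = [
    [(0, 0), (1, 0), (2, 1)],
    [(5, 5), (5, 6), (4, 7)],
    [(9, 2), (8, 2), (8, 3)],
]
control = scramble_pld(human, seed=0)
```

Local motion of every dot is preserved while the global body configuration is disrupted, which is the property a scrambled control is meant to have.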

Image Acquisition and Preprocessing

Brain images were acquired at the Magnetic Resonance Research Center at Yale University using a 3.0 T TIM Trio Siemens scanner with a 12-channel head coil. Functional images were acquired using an echo planar pulse sequence (repetition time [TR] = 2 s, echo time [TE] = 25 ms, flip angle α = 90°, matrix = 64 × 64, field of view [FOV] = 224 mm, slice thickness = 3.5 mm, 36 slices). Two sets of structural images were collected for registration: coplanar images, acquired using a T1 Flash sequence (TR = 300 ms, TE = 2.47 ms, α = 60°, FOV = 224 mm, matrix = 256 × 256, slice thickness = 3.5 mm, 36 slices); and high-resolution images acquired using a 3D MPRAGE sequence (TR = 2530 ms, TE = 2.4 ms, α = 9°, FOV = 256 mm, matrix = 256 × 256, slice thickness = 1 mm, 176 slices).
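As a quick arithmetic check on these acquisition parameters, the in-plane voxel size is the field of view divided by the matrix size. The sketch below assumes a square FOV and a square matrix (reading the matrix values as 64 × 64 and 256 × 256); the helper name `voxel_size_mm` is hypothetical.

```python
def voxel_size_mm(fov_mm, matrix, slice_thickness_mm):
    """Voxel dimensions (x, y, z) in mm, assuming square FOV and matrix."""
    in_plane = fov_mm / matrix
    return (in_plane, in_plane, slice_thickness_mm)

functional = voxel_size_mm(224, 64, 3.5)   # echo planar images
coplanar = voxel_size_mm(224, 256, 3.5)    # T1 Flash images
mprage = voxel_size_mm(256, 256, 1.0)      # high-resolution anatomical images
```

Under these assumptions the functional voxels come out isotropic at 3.5 mm, and the MPRAGE voxels isotropic at 1 mm.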

Analyses were performed using the FMRIB Software Library (FSL, http://www.fmrib.ox.ac.uk/fsl). All images were skull-stripped using FSL’s brain extraction tool. The first 4 volumes (8 s) of each functional data set were discarded to diminish MR equilibration effects. Data were temporally realigned to correct for interleaved slice acquisition and spatially realigned to correct for head motion using FSL’s MCFLIRT linear realignment tool. Images were spatially smoothed with a 5 mm full-width-half-maximum isotropic Gaussian kernel. Each time series was high-pass filtered (0.01 Hz cutoff) to eliminate low-frequency drift. Functional images were registered to structural coplanar images, which were then registered to high-resolution anatomical images and then normalized to the Montreal Neurological Institute’s MNI152 template.

fMRI Data Analysis—Main Experiment

Whole-brain voxelwise regression analyses were performed using FSL’s fMRI expert analysis tool (FEAT). Each condition within each preprocessed run was modeled with a boxcar function convolved with a single-gamma hemodynamic response function. The model included explanatory variables for the 2 factors of interest: humanness (human-like, non-human-like) and goal-directedness (goal-directed, non-goal-directed). Given our interest in brain regions involved in animacy perception, we report activation in regions exhibiting either a main effect of humanness (human-like > non-human-like) or a main effect of goal-directedness (goal-directed > non-goal-directed).
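To make the modeling step concrete, the following sketch builds a regressor by convolving a boxcar (1 during each 3-s movie, 0 otherwise) with a single-gamma response function. It is a pure-Python illustration, not FEAT's implementation. The gamma parameters (mean lag 6 s, standard deviation 3 s) and the onset times are assumptions chosen for the example; the text does not report them.

```python
import math

def gamma_hrf(t, mean_lag=6.0, sd=3.0):
    """Gamma probability density with the given mean and SD (assumed values)."""
    if t <= 0:
        return 0.0
    shape = (mean_lag / sd) ** 2
    scale = sd ** 2 / mean_lag
    return t ** (shape - 1) * math.exp(-t / scale) / (math.gamma(shape) * scale ** shape)

def boxcar(onsets, duration, n_samples, dt):
    """1.0 while a stimulus is on screen, 0.0 otherwise."""
    return [1.0 if any(on <= i * dt < on + duration for on in onsets) else 0.0
            for i in range(n_samples)]

def convolve(signal, kernel, dt):
    """Causal discrete convolution, scaled by dt to approximate the integral."""
    out = []
    for n in range(len(signal)):
        acc = 0.0
        for k in range(min(n + 1, len(kernel))):
            acc += signal[n - k] * kernel[k]
        out.append(acc * dt)
    return out

dt = 0.1                                               # time step in seconds
kernel = [gamma_hrf(i * dt) for i in range(int(30 / dt))]
box = boxcar(onsets=[0.0, 10.0], duration=3.0, n_samples=int(40 / dt), dt=dt)
regressor = convolve(box, kernel, dt)                  # predicted response shape
```

In a real analysis the regressor would be sampled at the scan times (one value per TR) before entering the regression; that step is omitted here.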

Group-level analyses were performed using a mixed effects model, with the random effects component of variance estimated using FSL’s FLAME stage 1 + 2 procedure. For both first and higher level analyses, clusters of active voxels were identified using FSL’s 2-stage procedure to correct for multiple comparisons. Voxels were first thresholded at an entry level of z = 2.3 and the significance of the resulting cluster was then evaluated at a corrected P < 0.05 using a Gaussian random field (GRF) theory approach.
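The first stage of this procedure (thresholding voxels at z = 2.3 and grouping neighbors into clusters) can be illustrated with a toy example. The sketch below converts a z threshold to a one-tailed P value and finds contiguous runs above threshold in a 1-dimensional list of made-up z values. It does not implement the second stage, the GRF-based test of cluster significance, and the function names are hypothetical.

```python
import math

def one_tailed_p(z):
    """Upper-tail probability of a standard normal at z."""
    return 0.5 * math.erfc(z / math.sqrt(2))

def clusters_above(z_values, threshold=2.3):
    """Group indices of contiguous values exceeding the threshold."""
    clusters, current = [], []
    for i, z in enumerate(z_values):
        if z > threshold:
            current.append(i)
        elif current:
            clusters.append(current)
            current = []
    if current:
        clusters.append(current)
    return clusters

p_entry = one_tailed_p(2.3)                    # roughly 0.011
toy_map = [0.0, 2.5, 3.0, 1.0, 2.4, 0.0]       # made-up z values
found = clusters_above(toy_map)                # [[1, 2], [4]]
```

In the actual 3-dimensional analysis, each cluster's spatial extent is then compared against what GRF theory predicts by chance, and only clusters with corrected P < 0.05 are retained.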

Regions of Interest Analyses

Additional analyses were conducted to study the time course of activation differences among the conditions in independently localized regions previously associated with animacy perception (the right pSTS and right FFA). For both localizers, whole-brain voxelwise regression analyses were performed using the same approach as described for the main experiment.

The mean signal averaged time course for each condition was calculated for each region of interest (ROI) (right pSTS and right FFA) for each subject and statistically compared. A repeated measures ANOVA was conducted using humanness (human-like, non-human-like) and goal-directedness (goal-directed, non-goal-directed) as within-subject factors. Given our a priori hypotheses that the right pSTS and right FFA would activate in response to 2 types of animacy cues, human-like movement and goal-directedness, we were particularly interested in testing for interaction effects revealing increased signal change in response to both human-like conditions and non-human-like goal-directed actions compared with non-human-like non-goal-directed actions.
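For a 2 × 2 design in which both factors are within-subject, each ANOVA effect has 1 degree of freedom, and its F statistic equals the squared one-sample t statistic of a per-subject contrast score, with (1, n − 1) degrees of freedom. The sketch below uses that equivalence. The data are made-up peak signal-change values for 4 hypothetical subjects, and the names (`contrast_F`, `rm_anova_2x2`, and the keys `HG`, `HN`, `NG`, `NN` for the four conditions) are illustrative assumptions.

```python
import math
from statistics import mean, stdev

def contrast_F(scores):
    """F and degrees of freedom for a 1-df within-subject contrast."""
    n = len(scores)
    t = mean(scores) / (stdev(scores) / math.sqrt(n))
    return t * t, (1, n - 1)

def rm_anova_2x2(data):
    """data: one dict per subject with keys HG, HN, NG, NN.

    H/N = human-like / non-human-like, G/N = goal-directed / non-goal-directed.
    """
    humanness = [(s["HG"] + s["HN"]) - (s["NG"] + s["NN"]) for s in data]
    goal = [(s["HG"] + s["NG"]) - (s["HN"] + s["NN"]) for s in data]
    interaction = [(s["HG"] - s["HN"]) - (s["NG"] - s["NN"]) for s in data]
    return {
        "humanness": contrast_F(humanness),
        "goal_directedness": contrast_F(goal),
        "interaction": contrast_F(interaction),
    }

# Made-up values, for illustration only.
toy = [
    {"HG": 0.50, "HN": 0.48, "NG": 0.42, "NN": 0.12},
    {"HG": 0.61, "HN": 0.55, "NG": 0.47, "NN": 0.20},
    {"HG": 0.44, "HN": 0.47, "NG": 0.39, "NN": 0.05},
    {"HG": 0.58, "HN": 0.50, "NG": 0.51, "NN": 0.18},
]
results = rm_anova_2x2(toy)
```

With 25 subjects, this approach gives degrees of freedom of (1, 24) for each effect. Follow-up simple-effects tests that pool error terms across conditions are not covered by this sketch.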

Results

Main Effect of Humanness and Goal-Directedness

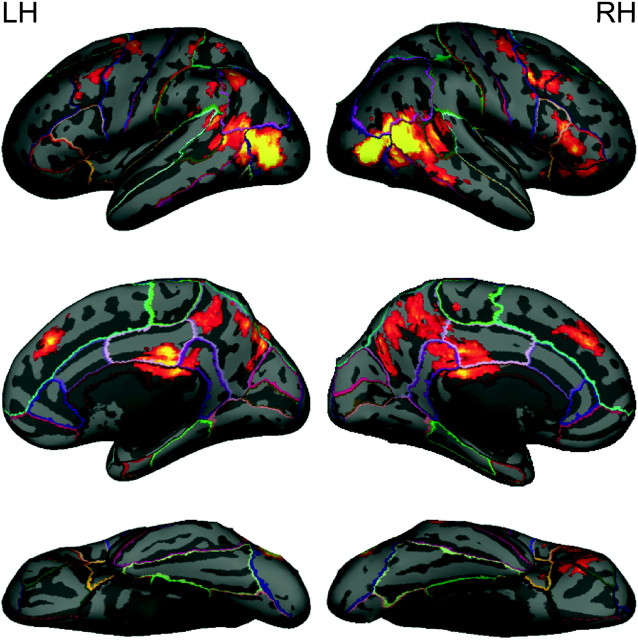

Figure 2 presents the cluster-corrected contrast for human-like > non-human-like motion. Activation occurred bilaterally over a large region of temporal occipital cortex. This region extended dorsally to the posterior continuation and ascending limb of the STS and ventrally to the middle temporal gyrus and lateral occipital cortex. Activation was also observed bilaterally in the superior, middle, and inferior frontal gyri, paracingulate gyrus, precentral gyrus, posterior cingulate gyrus, and precuneus. Finally, activation was seen in the left superior parietal lobule, right frontal orbital cortex, and right frontal pole. Peak coordinates and z-scores of significant clusters from the human-like > non-human-like contrast are given in Table 1.

Figure 2.

Activation map for human-like versus non-human-like contrast, displayed on a cortical surface representation. The color ranges from z = 2.3 (dark red) to 3.8 (bright yellow).

Table 1.

Peak coordinates (in MNI space) from the human-like versus non-human-like contrast.

| Region | x (mm) | y (mm) | z (mm) | z-score |
| --- | --- | --- | --- | --- |
| Right lateral occipital cortex | 54 | −64 | 8 | 6.55 |
| Left lateral occipital cortex | −58 | −68 | 8 | 5 |
| Posterior cingulate gyrus | −2 | −24 | 30 | 4.1 |
| Left precuneus cortex | −6 | −72 | 44 | 4.08 |
| Right precuneus cortex | 8 | −70 | 40 | 4.03 |
| Paracingulate gyrus | −2 | 40 | 34 | 4 |
| Left superior frontal gyrus | −22 | 0 | 66 | 3.96 |
| Left precentral gyrus | −26 | −10 | 50 | 3.85 |
| Right precentral gyrus | 34 | −6 | 56 | 3.54 |
| Right middle frontal gyrus | 40 | 6 | 40 | 3.78 |
| Left middle frontal gyrus | −42 | 12 | 48 | 3.3 |
| Right superior temporal sulcus | 50 | −40 | 10 | 3.72 |
| Right inferior frontal gyrus | 54 | 26 | −2 | 3.7 |
| Left middle temporal gyrus | −56 | −54 | 10 | 3.93 |
| Right middle temporal gyrus | 68 | −34 | −14 | 3.63 |
| Right frontal orbital cortex | 40 | 30 | −12 | 3.36 |
| Right frontal pole | 48 | 36 | −14 | 3.32 |
| Left superior parietal lobule | −38 | −46 | 64 | 3.07 |
| Right fusiform gyrus (a) | 44 | −42 | −22 | 5.45 |

(a) No clusters in the right fusiform gyrus survived cluster-correction in the human-like > non-human-like contrast. However, clusters did survive voxel-based correction, a more conservative method for correcting for multiple comparisons.

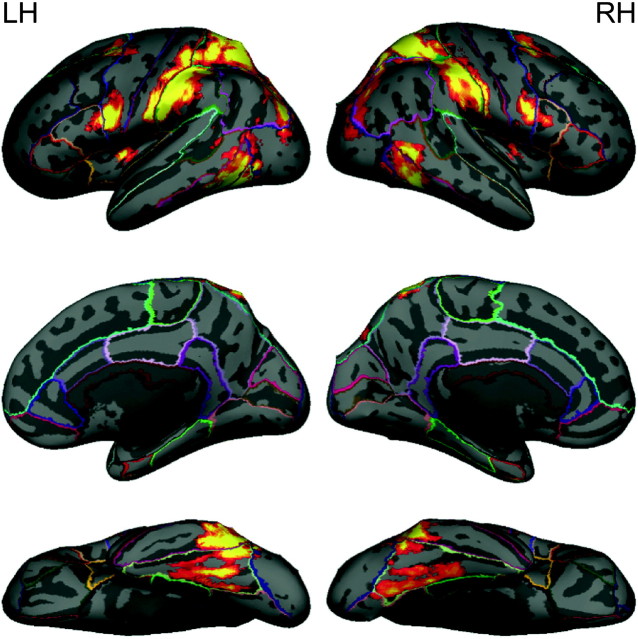

Figure 3 presents the cluster-corrected contrast for goal-directed > non-goal-directed motion. Activation occurred bilaterally in the parietal lobe, comprising the superior parietal lobule and supramarginal gyrus. Activation was also observed in the precentral gyrus, superior frontal gyrus, insula, postcentral gyrus, temporal occipital fusiform cortex, middle temporal gyrus, inferior temporal gyrus, and lateral occipital cortex. Peak coordinates and z-scores of significant clusters from the goal-directed > non-goal-directed contrast are given in Table 2.

Figure 3.

Activation map for goal-directed versus non-goal-directed contrast, displayed on a cortical surface representation. The color ranges from z = 2.3 (dark red) to 3.8 (bright yellow).

Table 2.

Peak coordinates (in MNI space) from the goal-directed versus non-goal-directed contrast.

| Region | x (mm) | y (mm) | z (mm) | z-score |
| --- | --- | --- | --- | --- |
| Left superior parietal lobule | −24 | −56 | 58 | 6.26 |
| Right superior parietal lobule | 24 | −60 | 62 | 6.2 |
| Left postcentral gyrus | −66 | −20 | 30 | 5.73 |
| Right postcentral gyrus | 56 | −24 | 48 | 5.24 |
| Left inferior temporal gyrus | −44 | −56 | −10 | 5.65 |
| Right inferior temporal gyrus | 56 | −54 | −14 | 4.9 |
| Left precentral sulcus | −56 | 6 | 24 | 5.33 |
| Right precentral sulcus | 60 | 14 | 26 | 4.5 |
| Left lateral occipital cortex | −32 | −74 | 30 | 5.23 |
| Right lateral occipital cortex | 36 | −78 | 32 | 3.93 |
| Left superior frontal gyrus | −26 | 4 | 54 | 4.4 |
| Right superior frontal gyrus | 26 | 4 | 50 | 4.35 |
| Right middle temporal gyrus | 62 | −54 | 2 | 4.38 |
| Left middle temporal gyrus | −52 | −56 | −2 | 3.98 |
| Left insula | −40 | −4 | 6 | 4.32 |
| Right insula | 40 | −2 | 8 | 3.5 |
| Left fusiform gyrus | −30 | −46 | −18 | 4.31 |
| Right fusiform gyrus | 34 | −42 | −14 | 4.05 |
| Right superior temporal sulcus | 68 | −42 | 14 | 2.9 |

Localizer Analyses

The cluster-corrected contrast for biological motion > scrambled motion revealed an expansive region of activation in occipitotemporal cortex (Fig. 4, panel A). In our experience, using cluster correction in FSL with large subject samples often results in the fusing of discrete peaks of activation into a large cluster with multiple peaks. To identify the peaks within these large clusters, we applied a more conservative voxel-based threshold to these clusters (see Fig. 4, panel B). Two ROIs were defined within the right STS: a cluster within the posterior continuation and ascending limb of the STS and a cluster anterior to the crux of the ascending limb and posterior continuation of the STS (see Fig. 4, panel B for locations of ROIs).

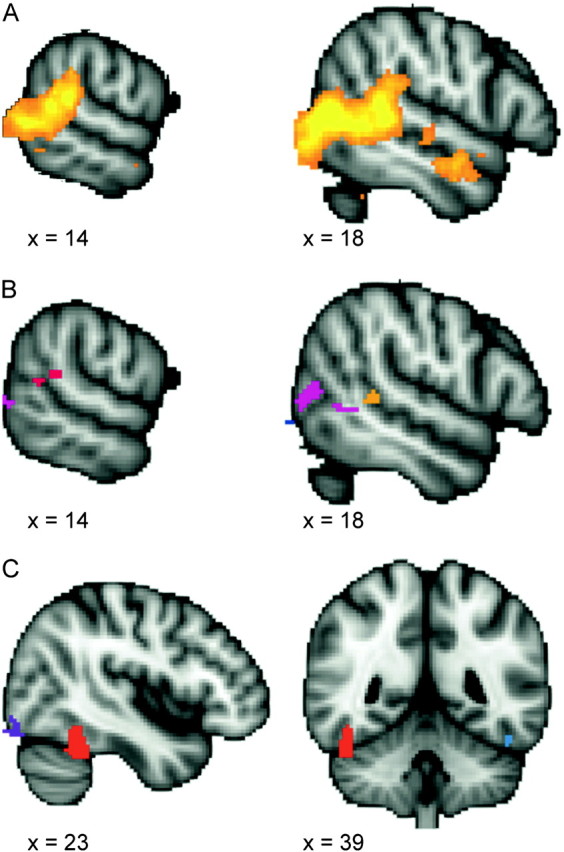

Figure 4.

Activation maps from localizer tasks. The top panel (A) shows the cluster-corrected contrast for the biological motion localizer task (biological > scrambled motion contrast). The color ranges from z = 2.3 (dark red) to 4.2 (bright yellow). Panel (B) shows the voxel-corrected contrast for the biological motion localizer task (biological > scrambled motion contrast). Two regions in the right STS were identified: a cluster within the posterior continuation and ascending limb of the STS (shown in red) and a cluster anterior to the crux of the STS (shown in orange). Panel (C) shows the uncorrected activation map from the face localizer task (face > scene contrast), thresholded at z = 2.57. The right FFA, used in subsequent ROI analyses, is shown in red.

In the face > scene contrast, the right FFA was clearly evident using a conservative whole-brain GRF voxelwise correction for multiple comparisons (P < 0.05, corrected). However, when applying the more liberal 2-stage FSL cluster correction, this FFA activation was too small to pass threshold. Because the right FFA identified in the whole-brain voxelwise correction consisted of only a few voxels, we enlarged the ROI prior to its application in our main experiment by including all immediately contiguous voxels where face > scene with z > 2.57 (P < 0.01, uncorrected) (see Fig. 4).

ROI Analyses of pSTS

To address our primary question of whether the right pSTS and right FFA would be activated when either human-like perceptual cues or goal-directedness are present, we examined the time course of the fMRI signal averaged for each voxel within our ROIs for each condition. The repeated measures ANOVA conducted on peak percent signal change in the cluster anterior to the crux of the STS revealed a significant main effect of humanness (F(1,24) = 12.08, P < 0.01), indicating that this region was more active in response to human-like compared with non-human-like motion. Neither the main effect of goal-directedness nor the interaction between humanness and goal-directedness was significant (F(1,24) = 0.283, P = 0.6 and F(1,24) = 0.012, P = 0.91, respectively) (see Fig. 5).

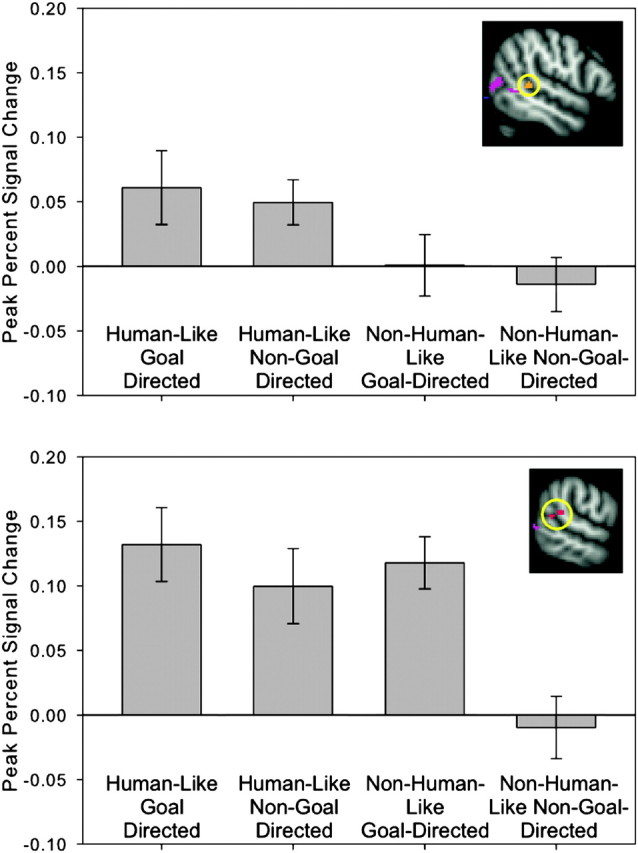

Figure 5.

ROI analysis results for the pSTS. The top panel shows results from the ROI anterior to the crux of the STS (circled). The bottom panel shows ROI analysis results from the crux of the STS (circled). Bar graphs show blood oxygen level–dependent signal change averaged at the time point of peak activation (3 s post stimulus onset) for each condition. Error bars indicate standard error of blood oxygen level–dependent signal at a given time point. In the region anterior to the crux of the STS, there is a main effect of humanness: blood oxygen level–dependent signal change is greater in response to the human-like compared with the non-human-like condition. In the crux of the STS, there is a significant interaction: blood oxygen level–dependent signal change is greater in response to human-like conditions and the non-human-like/goal-directed condition compared with the non-human-like/non-goal-directed condition.

The repeated measures ANOVA conducted on peak percent signal change in the pSTS cluster revealed a significant main effect of humanness (F(1,24) = 17.74, P < 0.001), a significant main effect of goal-directedness (F(1,24) = 7.71, P < 0.01), and a significant interaction between humanness and goal-directedness (F(1,24) = 4.11, P = 0.054). Simple main effect analyses revealed that the pSTS responded more strongly to non-human-like goal-directed actions compared with non-human-like non-goal-directed actions (F(1,48) = 16.35, P < 0.001). There was no difference in pSTS response to human-like goal-directed compared with human-like non-goal-directed actions (F(1,48) = 0.62, P = 0.43) (see Fig. 5).

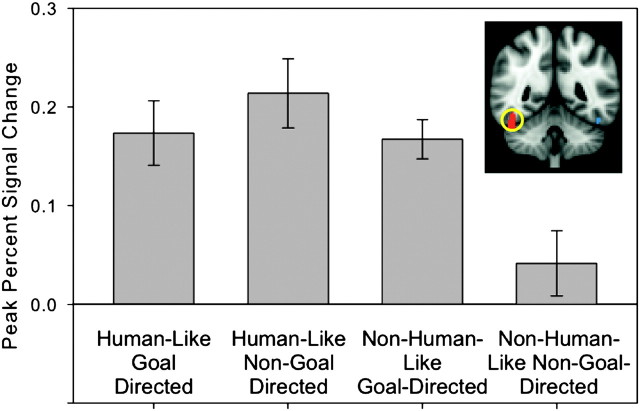

ROI Analyses of FFA

The repeated measures ANOVA conducted on peak percent signal change in the right FFA revealed a significant main effect of humanness (F(1,24) = 12.56, P < 0.01), a significant main effect of goal-directedness (F(1,24) = 7.82, P < 0.05), and a significant interaction between humanness and goal-directedness (F(1,24) = 5.07, P < 0.05). Simple main effect analyses revealed that the FFA responded more strongly to non-human-like goal-directed actions compared with non-human-like non-goal-directed actions (F(1,48) = 10.67, P < 0.01). There was no difference in FFA response to human-like goal-directed compared with human-like non-goal-directed actions (F(1,48) = 0.7, P = 0.41) (see Fig. 6).

Figure 6.

ROI analysis results from the right FFA (circled). Bar graphs show blood oxygen level–dependent signal change averaged at the time point of peak activation (3 s post stimulus onset) for each condition. Error bars indicate standard error of blood oxygen level–dependent signal at a given time point. There is a significant interaction effect: blood oxygen level–dependent signal change is greater in response to human-like conditions and the non-human-like/goal-directed condition compared with the non-human-like/non-goal-directed condition.

Discussion

We have shown that viewing machines that resemble humans in form and motion activates the right FFA and a region within the right pSTS, regardless of whether the action of the machine was goal-directed. However, if the machine did not resemble a human in form and motion, it still activated the right FFA and right pSTS if it performed a goal-directed action. Put another way, the only stimuli that evoked little or no activity in these 2 regions were machines that did not have human-like surface features or motion and did not appear goal-directed. This supports our hypothesis that human-like perceptual cues or goal-directed actions are sufficient to identify an entity as animate. We shall provide a fuller discussion of this interpretation following a review of the results of our whole-brain analyses.

Neural Responses to Human-Like Compared with Non-human-Like Motion

As expected, we found that regions of the social brain network are sensitive to stimuli that have human-like surface features, such as arm-like structures, and human-like motion trajectories, such as self-propelled motion, nonrigid transformation, and contingent reactivity. As in previous studies of animacy detection (Castelli et al. 2000; Blakemore et al. 2003; Schultz et al. 2003), our human-like > non-human-like contrast revealed activation in the superior temporal sulcus, right TPJ, and medial prefrontal cortex—a series of structures sometimes referred to as the mentalizing network. While the FFA activation was not identified in FSL’s 2-stage cluster correction due to its small spatial extent, it emerged as highly significant using the (more conservative) standard GRF whole-brain voxel-based correction. These results are consistent with studies investigating neural correlates of human-like perceptual cues (Castelli et al. 2000; Blakemore et al. 2003; Schultz et al. 2003) and confirm previous findings indicating that we mentalize about the actions of non-human objects whose surface features and motion trajectories resemble those of humans.

Neural Responses to Goal-Directed Compared with Non-goal-Directed Actions

Activation in response to goal-directed compared with non-goal-directed actions was greater in the superior parietal lobule, supramarginal gyrus, precentral gyrus, superior frontal gyrus, insula, postcentral gyrus, fusiform gyrus, inferior temporal gyrus, middle temporal gyrus, lateral occipital cortex, and right superior temporal sulcus. As mentioned previously, goal-directed actions were defined as actions that caused an immediate change in the surrounding environment (e.g., cutting an object), whereas non-goal-directed actions did not cause an immediate change in the environment (e.g., simply moving through space). Although the machines in both conditions moved, and while an assumption could be made that in all cases there was an eventual goal of completing some task, only machines that effected a change in the immediate environment activated the aforementioned brain regions, suggesting that these regions are sensitive to this relatively subtle distinction between goal-directed and non-goal-directed actions. Interestingly, there was little overlap between the regions sensitive to human-like perceptual cues and those sensitive to goal-directed actions, suggesting that these cues to animacy invoke relatively distinct networks of regions. For instance, a large portion of parietal cortex demonstrated sensitivity to goal-directed actions but not to human-like perceptual cues. Importantly, however, the STS and the FFA were both responsive to either the presence of human-like perceptual cues or goal-directed actions, suggesting that these may be critical regions for connecting these cues to animacy.

Goal-Directedness as a Cue for Animacy Detection

Our main question was whether the pSTS and FFA can be activated by viewing goal-directed actions, even in the absence of human-like perceptual cues. Our ROI analysis revealed that both goal-directed actions and human-like perceptual cues independently activated the right pSTS. Moreover, we observed an interaction between these cues—only the stimuli that lacked both human-like perceptual cues and goal-directed action failed to activate the right pSTS at the same level. An important implication of these results is that the role of the pSTS in reasoning about intentions is not restricted to the analysis of agents with human-like perceptual cues. Rather, the pSTS can be activated by goal-directed motion, even in the absence of human-like perceptual cues.

Interestingly, our ROI analysis revealed a second cluster of activity that was situated anterior to the crux of the STS. This anterior region revealed a sensitivity to human-like motion compared with non-human-like motion but showed no difference when comparing goal-directed to non-goal-directed actions and no interaction between humanness and goal-directedness. This pattern of results suggests that this more anterior aspect of the STS is sensitive to human-like perceptual cues signaling animacy but not to goal-directed actions as a cue for animacy. This raises the intriguing possibility that different regions of the STS may be sensitive to different types of animacy cues. However, further studies are needed to replicate these results and explore regional differences in sensitivity to animacy cues.

Perhaps our most important finding is that both human-like perceptual cues and goal-directed actions activated the independently localized FFA. The FFA’s role in processing static faces (and bodies in the partially overlapping fusiform body area) is well known (Kanwisher et al. 1997), and studies of biological motion frequently demonstrate strong activation of the FFA by human-like motion of animate shapes (Castelli et al. 2000; Blakemore et al. 2003; Schultz et al. 2003). Here, we demonstrate for the first time that the FFA can be comparably activated by goal-directed motion from entities that possess neither human-like surface features nor motion trajectories. The goal-directed motion of machines completely devoid of human-like perceptual features or human-like motion trajectories, such as a metal beam descending in a rigid, linear motion that slices another piece of metal, activated the FFA just as strongly as the motion of human-like machines, such as the movement of a robotic arm-like structure. Non-human-like non-goal-directed actions failed to similarly activate the FFA. These results suggest that the role of the FFA in social perception extends beyond the detection of human-like perceptual features, by demonstrating the remarkable sensitivity of the FFA to the goal-directedness of action.

Our findings indicate that both the pSTS and the FFA are sensitive to 2 different cues that signal animacy: human-like perceptual cues and goal-directed actions. What, then, is the functional difference between these 2 brain regions? Based on these results and previous work on the perception of animacy, we propose that animate agents can be detected through processing streams that are differentially sensitive to cues signaling animacy: human-like surface features, biological motion, and goal-directed actions. While the current study focused upon the goal-directedness of action as a signal for animacy detection, our proposed model of animacy detection hypothesizes the existence of 3 different processing streams that are differentially sensitive to human-like surface features, biological motion, and goal-directed actions. The first stream involves the VOTC and includes the occipital face area and the fusiform face and body areas. The second stream involves LOTC and includes the middle occipital gyrus, the EBA, and the pSTS. The third stream involves the parietal lobe and comprises the superior parietal lobule and supramarginal gyrus. According to our model, these processing streams comprise 3 routes to animacy detection and work together to detect the presence of animate agents and their underlying intentions. We posit that the VOTC processing stream is specialized for the detection of human-like surface features, such as faces and body forms. By contrast, the LOTC processing stream is specialized for the detection of biological or animate motion. We hypothesize that the parietal system plays a role in the detection of goal-directed actions. Given the large extent of parietal activation unique to the goal-directed > non-goal-directed contrast, and the role of the parietal lobe in understanding action and intentions (Fogassi et al. 2005), we posit that the parietal processing stream is sensitive to goal-directed actions, even in the absence of biological motion or human-like surface features. Finally, we propose that the pSTS and its connection to the fusiform (Turk-Browne et al. 2010) is critical for integrating information from these processing streams to detect and reason about animate agents.

Although our model posits distinct neural routes that are differentially sensitive to cues signaling animacy, it is important to note that in the natural world, cues signaling the presence of animacy typically co-occur and are inherently linked: animate agents have a human form, move in biologically plausible ways, and engage in meaningful goal-directed behavior. As such, we propose that the VOTC, LOTC, and parietal processing streams are interdependent and act in concert to identify particular animate agents from their form and motion and infer their intentions from their actions. For example, a human face detected by the VOTC is likely to be attached to an agent that moves in a biologically plausible way and behaves in a goal-directed manner, thereby activating the LOTC and parietal systems. Likewise, meaningful goal-directed actions detected by the parietal system are likely to have been produced by animate agents with human-like surface features and biological motion, thereby activating the VOTC and LOTC. A further prediction of this model is that the directional flow of activation between the VOTC, LOTC, and parietal system may depend on the characteristics of the particular stimulus presented. For example, viewing static human faces may activate the VOTC, which then drives activation in the LOTC and parietal lobe. Similarly, viewing goal-directed actions may activate the parietal system, thereby driving activation in the VOTC and LOTC. As mentioned, the pSTS may serve as a site for integrating information from these multiple processing streams.

This proposed model may help explain functional differences and similarities between the pSTS and FFA. Despite the putative involvement of the pSTS and FFA in dissociable aspects of social perception, both regions are often found to be active in response to a range of social stimuli, such as human faces and biological motion. In the present study, activation was observed in both the pSTS and FFA in response to 2 types of stimuli: machines with human-like features and machines performing goal-directed actions. According to our model, viewing human-like machines activates the FFA, which may then drive activation in the STS. Similarly, input from LOTC and parietal processing streams may activate the pSTS, which may then drive activation in the FFA. This model explains why we observed activation in both the pSTS and FFA in response to stimuli that were either human-like or goal-directed. Future studies utilizing directed connectivity analyses are needed to test our hypotheses regarding the direction of causality between these different processing streams when animate agents are detected via human-like perceptual cues or goal-directed motion cues.

Funding

Yale University FAS Imaging Fund and National Science Foundation Graduate Research Fellowship to S.S.

Acknowledgments

Conflict of Interest: None declared.

References

- Baron-Cohen S. Mindblindness: an essay on autism and theory of mind. Cambridge (MA): MIT Press; 1995.

- Biro S, Csibra G, Gergely G. The role of behavioral cues in understanding goal-directed actions in infancy. Prog Brain Res. 2007;164:303–323. doi: 10.1016/S0079-6123(07)64017-5.

- Blakemore SJ, Boyer P, Pachot-Clouard M, Meltzoff A, Segebarth C, Decety J. The detection of contingency and animacy from simple animations in the human brain. Cereb Cortex. 2003;13:837–844. doi: 10.1093/cercor/13.8.837.

- Bonda E, Petrides M, Ostry D, Evans A. Specific involvement of human parietal systems and the amygdala in the perception of biological motion. J Neurosci. 1996;16:3737–3744. doi: 10.1523/JNEUROSCI.16-11-03737.1996.

- Carey S, Spelke ES. Domain-specific knowledge and conceptual change. In: Hirschfeld LA, Gelman SA, editors. Mapping the mind: domain specificity in cognition and culture. New York: Cambridge University Press; 1994. pp. 169–200.

- Carey S, Spelke ES. Science and core knowledge. Philos Sci. 1996;63:515–533.

- Castelli F, Happe F, Frith U, Frith C. Movement and mind: a functional imaging study of perception and interpretation of complex intentional movement patterns. Neuroimage. 2000;12:314–325. doi: 10.1006/nimg.2000.0612.

- Csibra G. Goal attribution to inanimate agents by 6.5-month-old infants. Cognition. 2008;107:705–717. doi: 10.1016/j.cognition.2007.08.001.

- Csibra G, Biro S, Koos O, Gergely G. One-year-old infants use teleological representations of actions productively. Cognitive Sci. 2003;27:111–113.

- Csibra G, Gergely G, Biro S, Koos O, Brockbank M. Goal attribution without agency cues: the perception of ‘pure reason’ in infancy. Cognition. 1999;72:237–267. doi: 10.1016/s0010-0277(99)00039-6.

- Downing PE, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science. 2001;293:2405–2407. doi: 10.1126/science.1063414.

- Fogassi L, Ferrari PF, Gesierich B, Rozzi S, Chersi F, Rizzolatti G. Parietal lobe: from action organization to intention understanding. Science. 2005;308:662–667. doi: 10.1126/science.1106138.

- Gao T, McCarthy G, Scholl BJ. The wolfpack effect: perception of animacy irresistibly influences interactive behavior. Psychol Sci. 2010;21:1845–1853. doi: 10.1177/0956797610388814.

- Gergely G, Csibra G. Teleological reasoning in infancy: the naïve theory of rational action. Trends Cogn Sci. 2003;7:287–292. doi: 10.1016/s1364-6613(03)00128-1.

- Gergely G, Nadasdy Z, Csibra G, Biro S. Taking an intentional stance at 12 months of age. Cognition. 1995;56:165–193. doi: 10.1016/0010-0277(95)00661-h.

- Gibson EJ, Owsley CJ, Johnson J. Perception of invariants by 5-month-old infants: differentiation of two types of motion. Dev Psychol. 1978;14:407–416.

- Grossman ED, Blake R. Brain areas active during visual perception of biological motion. Neuron. 2002;35:1167–1175. doi: 10.1016/s0896-6273(02)00897-8.

- Guajardo J, Woodward A. Is agency skin deep? Surface attributes influence infants’ sensitivity to goal-directed actions. Infancy. 2004;6:361–384.

- Hamlin JK, Newman G, Wynn K. Eight-month-old infants infer unfulfilled goals, despite ambiguous physical evidence. Infancy. 2009;14:579–590. doi: 10.1080/15250000903144215.

- Haxby JV, Horwitz B, Ungerleider LG, Maisog JM, Pietrini P, Grady CL. The functional organization of human extrastriate cortex: a PET-rCBF study of selective attention to faces and locations. J Neurosci. 1994;14:6336–6353. doi: 10.1523/JNEUROSCI.14-11-06336.1994.

- Kanwisher N, McDermott J, Chun M. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Leslie AM. ToMM, ToBy, and agency: core architecture and domain specificity. In: Hirschfeld LA, Gelman SA, editors. Mapping the mind: domain specificity in cognition and culture. New York: Cambridge University Press; 1994. pp. 119–148.

- Leslie AM. A theory of agency. In: Sperber D, Premack D, Premack AJ, editors. Causal cognition: a multidisciplinary debate. Oxford: Clarendon Press; 1995. pp. 121–141.

- McCarthy G, Gao T, Scholl B. Processing animacy in the posterior superior temporal sulcus. J Vision. 2009;9:775.

- Meltzoff AN. Understanding the intentions of others: re-enactments of intended acts by 18-month-old children. Dev Psychol. 1995;31:838–850. doi: 10.1037/0012-1649.31.5.838.

- Pelphrey KA, Mitchell TV, McKeown MJ, Goldstein J, Allison T, McCarthy G. Brain activity evoked by the perception of human walking: controlling for meaningful coherent motion. J Neurosci. 2003;23:6819–6825. doi: 10.1523/JNEUROSCI.23-17-06819.2003.

- Premack D. The infant’s theory of self-propelled objects. Cognition. 1990;36:1–16. doi: 10.1016/0010-0277(90)90051-k.

- Premack D. The infant’s theory of self-propelled objects. In: Frye D, Moore C, editors. Children’s theories of mind: mental states and social understanding. Hillsdale (NJ): Lawrence Erlbaum Associates; 1991. pp. 39–48.

- Puce A, Allison T, Asgari M, Gore JC, McCarthy G. Differential sensitivity of human visual cortex to faces, letterstrings, and textures: a functional magnetic resonance imaging study. J Neurosci. 1996;16:5205–5215. doi: 10.1523/JNEUROSCI.16-16-05205.1996.

- Puce A, Allison T, Gore JC, McCarthy G. Face-sensitive regions in human extrastriate cortex studied by functional MRI. J Neurophysiol. 1995;74:1192–1199. doi: 10.1152/jn.1995.74.3.1192.

- Schultz RT, Grelotti DJ, Klin A, Kleinman J, Van der Gaag C, Marois R, Skudlarski P. The role of the fusiform face area in social cognition: implications for the pathobiology of autism. Philos Trans R Soc Lond B Biol Sci. 2003;358:415–427. doi: 10.1098/rstb.2002.1208.

- Shultz S, Lee SM, Pelphrey K, McCarthy G. The posterior superior temporal sulcus is sensitive to the outcome of human and non-human goal-directed actions. Soc Cogn Affect Neur. 2010. Advance Access published November 22, 2010. doi: 10.1093/scan/nsq087.

- Simion F, Regolin L, Bulf H. A predisposition for biological motion in the newborn baby. Proc Natl Acad Sci U S A. 2008;105:809–813. doi: 10.1073/pnas.0707021105.

- Southgate V, Johnson MH, Csibra G. Infants attribute goals even to biomechanically impossible actions. Cognition. 2008;107:1059–1069. doi: 10.1016/j.cognition.2007.10.002.

- Spelke ES, Phillips AT, Woodward AL. Infants' knowledge of object motion and human action. In: Sperber D, Premack D, Premack AJ, editors. Causal cognition: a multidisciplinary debate. Oxford: Clarendon Press; 1995. pp. 44–77.

- Turk-Browne NB, Norman-Haignere SV, McCarthy G. Face-specific resting functional connectivity between the fusiform gyrus and posterior superior temporal sulcus. Front Hum Neurosci. 2010;4:1–14. doi: 10.3389/fnhum.2010.00176.

- Vallortigara G, Regolin L, Marconato F. Visually inexperienced chicks exhibit spontaneous preference for biological motion patterns. PLoS Biol. 2005;3:e208. doi: 10.1371/journal.pbio.0030208.

- Wagner L, Carey S. 12-month-old infants represent probable endings of motion events. Infancy. 2005;7:73–83. doi: 10.1207/s15327078in0701_6.

- Woodward AL. Infants selectively encode the goal object of an actor’s reach. Cognition. 1998;69:1–34. doi: 10.1016/s0010-0277(98)00058-4.