Abstract

Deaf individuals rely on facial expressions for emotional, social, and linguistic cues. To test the hypothesis that specialized experience with faces can alter typically observed gaze patterns, twelve hearing adults and twelve deaf early users of American Sign Language judged the emotion and identity of expressive faces (whole faces and isolated top and bottom halves) while accuracy and fixations were recorded. Both groups recognized individuals more accurately from top than bottom halves, and emotional expressions more accurately from bottom than top halves. Hearing adults directed the majority of fixations to the top halves of faces in both tasks, but fixated the bottom half slightly more often when judging emotion than identity. In contrast, deaf adults often split fixations evenly between the top and bottom halves regardless of task demands. These results suggest that deaf adults have habitual fixation patterns that may maximize their ability to gather information from expressive faces.

1 Introduction

When we look at the faces of others, the eyes possess a privileged status. Because eye contact is critical for social interactions (Emery 2000), typically developing individuals focus most often on the eyes of others (Henderson et al 2005; Janik et al 1978; Schwarzer et al 2005), and often rely on the eyes for recognition and discrimination of faces (Lacroce et al 1993; McKelvie 1976; O’Donnell and Bruce 2001; Schyns et al 2002; Vinette et al 2004). Furthermore, neural mechanisms involved in the structural encoding of faces may be sensitive to information contained in the eye region (Eimer 1998; Itier et al 2006, 2007). The bias of gaze and attention toward the top half of the face is so robust that its absence has been proposed as a diagnostic symptom for social disorders such as autism and social phobias (Horley et al 2003; Klin et al 2002; Moukheiber et al 2010; Pelphrey et al 2002).

Although social developmental disorders that result in reduced attention toward faces have been associated with impairments in face perception (Dawson et al 2005; Teunisse and de Gelder 2003), the effects of increased attention or specialized experience on the development of face perception mechanisms remain unknown. Deaf users of American Sign Language (ASL) are a candidate population with which to explore this possibility. Deaf signers must pay special attention to faces and facial expressions for two reasons. First, in the absence of tone-of-voice information, the face conveys the majority of affective information in social contexts. Second, ASL uses facial expressions to convey linguistic cues typically carried by the voice in spoken languages. Rapid and precisely timed changes in facial expression (often mouth or eyebrow shape) demarcate clauses and questions, as well as adverbs and other semantic information (see Baker-Shenk 1985; Corina et al 1999 for reviews). Furthermore, although signers maintain their fixations on their conversation partner’s face (De Filippo and Lansing 2006; Emmorey et al 2009), they must perceive manual signs and facial expressions simultaneously. Because deaf signers must attend to the face for both affective and linguistic input, they may develop unique processing mechanisms that maximize their ability to gather information from expressive faces.

During ASL perception, signers have been shown to rest their gaze on the lower part of the face, rather than the upper part, allowing them to gather information from the hands and upper body in peripheral vision (Agrafiotis et al 2003; Muir and Richardson 2005; Siple 1978). However, there have been very few studies of attention or gaze patterns in this population during nonlinguistic tasks. Therefore, we do not know whether attending to the lower part of the face during daily communication shapes deaf adults’ gaze patterns in all face-perception tasks, or only those that are explicitly involved in sign language perception (ie perception of linguistic facial expressions). Such a population difference across tasks would suggest that specialized expertise with facial expressions can influence the development of face-perception mechanisms. Given that some aspects of face perception are so robust that researchers have debated whether they may be innate (Batki et al 2000; Gauthier and Nelson 2001; Johnson and Morton 1991; Park et al 2009), such plasticity would have important implications for our understanding of the development of face perception, and for the creation of interventions to aid populations with social developmental disorders.

Nevertheless, the effects of task demands on gaze and attention during face perception tasks have not been well established even in control populations. While the top half of the face is salient when subjects judge the identity of neutral faces or faces with unchanging expressions (Lacroce et al 1993; McKelvie 1976; O’Donnell and Bruce 2001; Schyns et al 2002; Vinette et al 2004), the bottom half may be more salient when subjects judge the emotion of faces with varying emotional expressions (Prodan et al 2001; Schyns et al 2002; Smith et al 2004). A controlled comparison using identical stimuli across identity and emotion tasks has not yet been made.

Therefore, in this study, we wished to determine not only the effects of deafness and early sign language use on behavior and gaze patterns in response to expressive faces, but also the effect of task demands. We compared the performance of deaf and hearing adults using a behavioral procedure that employed identical stimuli in emotion and identity tasks (as described by Calder et al 2000), and allowed measurement of the relative salience of information in the top versus bottom halves of the face.

2 Methods

2.1 Subjects

Twelve hearing adults (two male) aged 21 – 39 years (median age 26 years) and twelve deaf adults (four male) aged 23 – 51 years (median age 30 years) participated. Deaf subjects were severely to profoundly deaf, had been deaf since infancy, learned sign language as their first language (either natively from deaf family members or from school personnel by the age of 5 years), and used ASL daily as their primary form of communication (table 1). Hearing subjects had no experience with ASL. No subject had a neurological or psychological diagnosis, and all had normal or corrected-to-normal vision. Subjects provided informed consent under protocols approved by the University of Massachusetts Medical School and Brandeis University IRBs and were paid for their participation. Instructions were given in ASL for deaf participants.

Table 1.

Deaf participant demographics.

| Subject | Sex | Age/years | Deaf family members | Cause of deafness | Age when diagnosed/years | Age of ASL acquisition* |
|---|---|---|---|---|---|---|
| 1 | M | 47 | parents, sister | genetic | <1 | birth |
| 2 | F | 47 | parents, siblings (4th generation deaf family) | genetic | <1 | birth |
| 3 | M | 51 | parents, siblings (4th generation deaf family) | genetic | <1 | birth |
| 4 | F | 28 | parents (5th generation deaf family) | genetic | <1 | birth |
| 5 | F | 24 | older sister | unknown | 2 | birth |
| 6 | M | 45 | hard-of-hearing sisters | one ear deaf at birth (unknown cause); mumps at 10 months deafened the other ear | <1 | 1 year |
| 7 | F | 23 | none | Kniest dysplasia | <1 | 1 year |
| 8 | F | 29 | none | spinal meningitis | 1 | 1 year |
| 9 | M | 29 | none | unknown | 1 | 1 year |
| 10 | F | 35 | none | unknown | 1.5 | Chinese SL: 3 years; ASL: adult |
| 11 | F | 30 | none | unknown | 3 | 4 years |
| 12 | F | 26 | none | unknown | 1.5 | SEE: 3 years; ASL: 5 years |

Notes:

Deaf individuals with deaf parents or older siblings were exposed to ASL at home from birth. For deaf individuals without deaf family members, the age at which they began learning ASL is listed. This usually took place at deaf community centers and deaf schools.

SEE = Signed Exact English. Chinese SL = Chinese Sign Language; this participant learned ASL as an adult. All deaf participants used ASL daily as their primary means of communication.

2.2 Stimuli

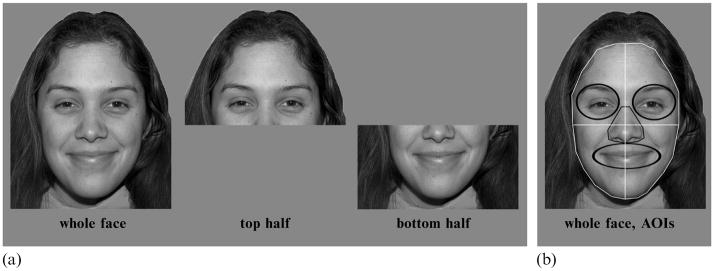

Stimuli were created using 56 images from the NimStim set (http://www.macbrain.org/resources.htm), including 4 male and 4 female models (with high validity ratings, similar hair length/color within each gender, and no facial hair), each posing happy, sad, fearful, angry, surprised, disgusted, and neutral facial expressions. Isolated top and bottom face halves were generated by splitting the faces horizontally across the middle of the nose (figure 1). Whole faces were 20 cm wide × 30 cm high (12.7 deg × 19.1 deg visual angle). Isolated top and bottom halves were 20 cm × 15 cm (12.7 deg × 9.6 deg) in size. Stimuli were presented in the center of the screen, in grayscale on a medium-gray background. Face halves were presented where they would have appeared on whole-face trials (see figure 1).
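The visual angles above follow from the stimulus sizes and the 90 cm viewing distance given in section 2.3. A minimal sketch of the conversion is shown below; the source does not state which formula was used, so both the exact expression and the small-angle approximation (size divided by distance, in radians) are included. At 90 cm the exact formula gives about 12.7 × 18.9 deg for a whole face, whereas the small-angle approximation gives 12.7 × 19.1 deg, so the two differ by only a few tenths of a degree at this stimulus size.

```python
import math

VIEWING_DISTANCE_CM = 90.0  # subject-to-monitor distance stated in section 2.3


def visual_angle_exact(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (deg) of an object centered on the line of sight."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


def visual_angle_small(size_cm: float, distance_cm: float) -> float:
    """Small-angle approximation: size / distance in radians, converted to deg."""
    return math.degrees(size_cm / distance_cm)


if __name__ == "__main__":
    for label, width, height in [("whole face", 20.0, 30.0), ("face half", 20.0, 15.0)]:
        ex_w = visual_angle_exact(width, VIEWING_DISTANCE_CM)
        ex_h = visual_angle_exact(height, VIEWING_DISTANCE_CM)
        ap_w = visual_angle_small(width, VIEWING_DISTANCE_CM)
        ap_h = visual_angle_small(height, VIEWING_DISTANCE_CM)
        print(f"{label}: exact {ex_w:.1f} x {ex_h:.1f} deg, "
              f"small-angle {ap_w:.1f} x {ap_h:.1f} deg")
```

The exact form is preferable for large stimuli; the approximation error grows with the ratio of size to distance.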

Figure 1.

Sample stimuli and areas of interest (AOIs) for eye-tracking analysis.

2.3 Procedure

Subjects sat 90 cm from a 21-inch computer monitor, and responded by pressing labeled keys on a keyboard.

2.3.1 Familiarization

To allow subjects to complete identity and emotion recognition tasks using identical stimuli, subjects first learned names for the models. Subjects viewed each model’s face five times above their assigned names (Jill, Ann, Meg, Kate, Bill, Dave, Jeff, Tom). The faces appeared for 4000 ms each, in random order. Facial expressions of the models varied during this familiarization, so subjects were exposed to all facial expressions during the training. We then presented each model’s face with a neutral expression and asked subjects to choose the correct name. If subjects did not correctly identify every model during this test, they repeated the familiarization. All subjects reached 100% accuracy within two familiarization rounds. Thus, all subjects began the study with similar levels of familiarity with the models.

2.3.2 Experimental blocks

Subjects viewed the same set of stimuli in each of two experimental blocks. The 56 images (8 models, each posing 7 facial expressions) were presented three times within each block: once as a whole face, once with only the top half visible, and once with only the bottom half visible. These 168 stimuli were presented in random order, and each image appeared on the screen for 2000 ms. Subjects were instructed to look at the face until it disappeared from the screen before responding. During the intertrial interval, a fixation cross was presented in the center of the screen, roughly corresponding to the location of the center of the nose, slightly below eye level. Subjects completed two forced-choice tasks: in the identity (ID) block, they chose the name of the person in each picture by selecting one of 8 labeled keys on a keyboard; in the emotion (EM) block, they chose the emotion in each picture by selecting one of 7 labeled keys. Prior to the EM block, subjects were shown an exemplar of each emotional expression and its appropriate label on a novel model. The order of these two tasks was counterbalanced between subjects, and accuracy was recorded. Because responses were delayed until after the 2000 ms presentation time, and because subjects chose from several labeled keys to make their responses, reaction time was not analyzed.
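The block structure described above can be generated as in the following sketch. The model names and expression labels come from sections 2.2 and 2.3.1; the alternating task order is a hypothetical counterbalancing scheme (the source says only that order was counterbalanced between subjects), and the seeded `random.Random` generator is an implementation choice for reproducibility.

```python
import random
from itertools import product

MODELS = ["Jill", "Ann", "Meg", "Kate", "Bill", "Dave", "Jeff", "Tom"]
EXPRESSIONS = ["happy", "sad", "fearful", "angry", "surprised", "disgusted", "neutral"]
FACE_TYPES = ["whole", "top", "bottom"]
TASKS = ["ID", "EM"]


def make_block(rng: random.Random) -> list:
    """One block: every model x expression image in each of the three face types, shuffled."""
    trials = [
        {"model": m, "expression": e, "face_type": f}
        for m, e, f in product(MODELS, EXPRESSIONS, FACE_TYPES)
    ]  # 8 x 7 x 3 = 168 trials
    rng.shuffle(trials)
    return trials


def task_order(subject_index: int) -> list:
    """Hypothetical counterbalancing: even-numbered subjects do ID first, odd do EM first."""
    return TASKS if subject_index % 2 == 0 else TASKS[::-1]


def make_session(subject_index: int, seed=None) -> list:
    """Return [(task, trials), (task, trials)] for one subject."""
    rng = random.Random(seed)
    return [(task, make_block(rng)) for task in task_order(subject_index)]
```

For example, `make_session(0, seed=1)` yields an ID block followed by an EM block, each containing the same 168 image-by-face-type combinations in a different random order.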

2.3.3 Behavioral data analysis

Accuracies were analyzed using a mixed repeated-measures ANOVA with face type (whole face, top half, bottom half) and task (EM, ID) as within-subjects factors, and group (hearing, deaf) as a between-subjects factor.

2.4 Eye-tracking procedure and data analysis

During both experimental blocks, eye-tracking data were gathered with an ISCAN® ETL-300 system, consisting of an infrared (IR) beam illuminating the eyes and an IR camera, positioned in front of and beneath the stimulus-presentation monitor, approximately 65 cm from the subject. Eye position was recorded by tracking the position of binocular pupil IR sinks and corneal IR reflections at a sample rate of 60 Hz, with a spatial resolution of 0.3 deg. A 5-point grid calibration was performed prior to each experimental block, and subjects rested their heads in a chin-rest during calibration and all experimental tasks to minimize head movements.

Eye-tracking data were collected and analyzed only for whole-face presentations. For analysis, several areas of interest (AOIs) were defined using ISCAN® Point of Regard (PRZ) Software (figure 1b). Each whole-face stimulus was divided into four quadrants (top right/left, bottom right/left), and smaller AOIs were defined for individual features (right eye, left eye, nose, mouth).

Fixations were defined as eye position remaining at one location (within 0.3 deg) for 100 ms or longer. Only fixations that fell on the interior of the face were considered; fixations on external features of the face (eg hair, ears, neck), clothing, or the image background were discarded. Gaze data consisted of:

(i) Fixation time (in milliseconds), and the percentage of total fixation time on the face that fell within each AOI during each whole-face trial. Percentages were analyzed using mixed repeated-measures ANOVAs, with task (EM, ID), half (top, bottom), and side (left, right) as within-subjects factors, and group (hearing, deaf) as a between-subjects factor. No differences in fixation time were found between right and left sides, so this factor was dropped from further analyses.

(ii) Number of fixations on each AOI, and the percentage of the total number of fixations that fell on the top half of the face during each whole-face trial. This latter measure served as a second indicator of subjects’ bias toward the top half relative to the bottom half of the face: a value above 50% indicates a top-half preference, whereas a value below 50% indicates a bottom-half preference. Two-tailed one-sample t-tests against a test value of 50% were used to determine whether subjects deviated significantly from this point.
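The fixation criterion and the two per-trial top-half measures can be sketched as follows. In the study this step was performed with ISCAN's software, whose internal algorithm is not described here, so the sketch swaps in the standard dispersion-threshold (I-DT) method using the stated parameters (60 Hz sampling, 0.3 deg, 100 ms). Gaze coordinates are assumed to be in degrees relative to the face center with y positive upward; because each face half is exactly half the face height, the split through the middle of the nose is placed at `split_y = 0`. The `on_face` predicate is a hypothetical stand-in for the interior-of-face AOI test.

```python
import math
from dataclasses import dataclass
from statistics import mean


@dataclass
class Fixation:
    onset_ms: float     # time of first sample, relative to stimulus onset
    duration_ms: float
    x: float            # mean horizontal position, deg from face center
    y: float            # mean vertical position, deg from face center (positive = up)


def _dispersion(xs, ys):
    """I-DT dispersion: horizontal range plus vertical range of a sample window."""
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(xs, ys, rate_hz=60.0, max_dispersion_deg=0.3, min_duration_ms=100.0):
    """Dispersion-threshold (I-DT) fixation detection on evenly sampled gaze data."""
    min_samples = math.ceil(min_duration_ms * rate_hz / 1000.0)  # 6 samples at 60 Hz
    fixations, i, n = [], 0, len(xs)
    while i + min_samples <= n:
        j = i + min_samples
        if _dispersion(xs[i:j], ys[i:j]) <= max_dispersion_deg:
            # Grow the window while the samples stay within the dispersion limit.
            while j < n and _dispersion(xs[i:j + 1], ys[i:j + 1]) <= max_dispersion_deg:
                j += 1
            fixations.append(Fixation(i * 1000.0 / rate_hz, (j - i) * 1000.0 / rate_hz,
                                      mean(xs[i:j]), mean(ys[i:j])))
            i = j
        else:
            i += 1  # no fixation starts at this sample; slide the window forward
    return fixations


def top_half_measures(fixations, split_y=0.0, on_face=lambda f: True):
    """Return (% of face fixation time on top half, % of face fixations on top half)."""
    face = [f for f in fixations if on_face(f)]
    if not face:
        return None
    top = [f for f in face if f.y >= split_y]  # assumption: midline counts as top
    total_time = sum(f.duration_ms for f in face)
    pct_time = 100.0 * sum(f.duration_ms for f in top) / total_time
    pct_count = 100.0 * len(top) / len(face)
    return pct_time, pct_count
```

At 60 Hz the 100 ms minimum corresponds to 6 consecutive samples, so any glance shorter than that is not counted as a fixation.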

3 Results

3.1 Behavior

Recognition of individual emotions and models was similar between the two groups. Both groups recognized emotions with accuracies above 80% [95% confidence interval of the mean (CI) across all facial expressions: 84 ± 2.98] and identities with accuracies above 90% (95% CI across models: 98 ± 0.77). Therefore, the training paradigm was equally successful for both groups.
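The 95% confidence intervals in this section are reported as mean ± half-width. The source does not state how they were computed; the sketch below assumes the conventional t-based interval for a mean, with critical values hard-coded (rounded to three decimals) so that only the standard library is needed. Under this assumption the half-widths in table 2 agree with its t values: for example, 81.7 ± 14.9 with df = 11 implies a standard error of about 6.8 and t of about (81.7 - 50)/6.8, or 4.7, close to the tabulated 4.695.

```python
import math
import statistics

# Two-sided 95% critical values of Student's t (0.975 quantile), keyed by degrees of
# freedom. df = 11 corresponds to one group of 12 subjects; df = 7 to 8 models.
T_CRIT_975 = {6: 2.447, 7: 2.365, 11: 2.201, 22: 2.074, 23: 2.069}


def mean_ci95(values):
    """Return (mean, half_width) of a t-based 95% confidence interval for the mean."""
    n = len(values)
    df = n - 1
    if df not in T_CRIT_975:
        raise ValueError(f"no critical value tabulated for df={df}")
    m = statistics.mean(values)
    se = statistics.stdev(values) / math.sqrt(n)  # sample SD / sqrt(n)
    return m, T_CRIT_975[df] * se
```

For other sample sizes, `scipy.stats.t.ppf(0.975, df)` gives the critical value if SciPy is available.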

Both groups had higher accuracies in the ID task (95% CI: 94 ± 1.7) than in the EM task (95% CI: 75.3 ± 1.9; task main effect: F1,22 = 390.6, p < 0.001). In addition, both groups showed higher accuracies for whole faces than for either isolated half in both tasks (95% CIs, whole faces: 91.5 ± 1.8, top halves: 80.8 ± 1.7, bottom halves: 83.0 ± 1.8; face-part main effect: F2,44 = 110.6, p < 0.001). There was no main effect of group in response to whole faces.

3.1.1 Relative salience of top versus bottom halves

Emotion was recognized better from isolated bottom halves (95% CI: 74.8 ± 2.2) than top halves (65.4 ± 2.6, pairwise comparison, p < 0.001), while identity was recognized better from top halves (96.2 ± 1.9) than bottom halves (95% CI: 91.2 ± 3.1, p = 0.004; face part × task interaction: F2,44 = 58.1, p < 0.001). This pattern was present in both groups of subjects.

Nevertheless, the deaf group was less accurate than the hearing group when judging isolated top halves (face part × group interaction: F2,44 = 3.857, p = 0.029; 95% CI for top halves, hearing subjects: 81.4 ± 2.6, deaf subjects: 77.5 ± 2.5; pairwise comparison, p = 0.031). This effect appeared to be driven by the emotion task: the face part × group interaction was significant only for the EM task (F2,44 = 4.582, p = 0.017), not the ID task (F2,44 = 0.227, p = 0.710). A posteriori pairwise comparisons for the EM task revealed lower accuracies in response to isolated top halves for the deaf group (95% CI: 58.9 ± 3.7) than for the hearing group (95% CI: 65.9 ± 3.8; group comparison, p = 0.019).

3.1.2 Individual emotions

Subjects in both groups recognized emotional expressions most accurately when shown a whole face, compared to either isolated half (face-part main effect: F2,44 = 161.9, p < 0.001). However, the two groups showed slightly different recognition patterns for individual emotional expressions (face-part × emotion × group interaction: F10,220 = 2.499, p = 0.029). Specifically, hearing adults recognized fear and anger expressions better from the top half than the bottom half (fear: 95% CI, top: 55.1 ± 17, bottom: 20.8 ± 8.5, half comparison, p < 0.001; anger: top: 76.0 ± 9.8, bottom: 62.5 ± 6.8, p = 0.019), while deaf adults recognized these expressions similarly well from the top and the bottom (fear: 95% CI, top: 37.5 ± 15.5, bottom: 22.9 ± 7.4, half comparison, p = 0.186; anger: top: 67.7 ± 12.9, bottom: 60.4 ± 10, p = 0.352). The two groups differed only in response to top halves, with deaf subjects performing less accurately than hearing subjects (face-part × group interaction: F2,44 = 4.873, p = 0.012).

This ‘equalizing’ of the top and bottom halves for these expressions suggests that deaf subjects’ performance may drop if any part of the face is missing. To test this hypothesis, a part-to-whole ratio was calculated: accuracy in response to either the isolated top or bottom halves, divided by the accuracy in response to whole faces. A value of 1 on this measure indicated that subjects were as accurate in response to an isolated face half as to the whole face. A value of less than 1 indicated better accuracies in response to the whole face than to the isolated half. Part-to-whole ratios were analyzed using a repeated-measures ANOVA with face half (ratio for top versus bottom) as a within-subjects factor, and group as a between-subjects factor. If deaf adults show a greater reliance on whole faces rather than face parts, then their ratios should be lower than those of hearing adults.
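The part-to-whole ratio can be computed per subject as in the sketch below. The `Accuracy` record is a hypothetical data layout. The source does not state whether ratios were formed per subject or from group means; the sketch assumes per subject, since the ratios enter a repeated-measures ANOVA with group as a between-subjects factor.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Accuracy:
    """Proportion correct for one subject in one task (hypothetical record layout)."""
    whole: float
    top: float
    bottom: float


def part_to_whole(acc: Accuracy) -> dict:
    """Ratios of isolated-half accuracy to whole-face accuracy (1 = no loss)."""
    if acc.whole <= 0:
        raise ValueError("whole-face accuracy must be positive")
    return {"top": acc.top / acc.whole, "bottom": acc.bottom / acc.whole}


def group_mean_ratios(subjects) -> dict:
    """Average each subject's top and bottom ratios across a group."""
    ratios = [part_to_whole(s) for s in subjects]
    return {half: mean(r[half] for r in ratios) for half in ("top", "bottom")}
```

For example, a subject who is 80% correct with whole faces and 60% correct with isolated top halves has a top ratio of 0.75, indicating a loss of accuracy when the bottom half is removed.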

Indeed, the deaf group had significantly lower part-to-whole ratios than the hearing group during the EM task (group main effect: F1,22 = 7.037, p = 0.015; 95% CI, deaf subjects: 0.76 ± 0.03, hearing subjects: 0.83 ± 0.04). The group difference was larger when isolated top halves were presented (top: 95% CI, hearing subjects: 0.78 ± 0.06, deaf subjects: 0.67 ± 0.05, group comparison, p = 0.008; bottom: 95% CI, hearing subjects: 0.89 ± 0.05, deaf subjects: 0.85 ± 0.04, p = 0.2), indicating that the absence of information in the bottom half resulted in a drop in performance for deaf subjects. These group differences were not observed during the ID task.

3.2 Eye tracking results for whole-face trials

3.2.1 Fixations to the top versus bottom half of the face

Across the length of the trial, the hearing group spent an average of 86% of their total fixation time on the face looking at the top half (95% confidence interval of the mean, CI: 76.8 – 96.7%), while the deaf group spent only 65% of their total fixation time looking at the top half (95% CI: 55.8 – 74.7%). No significant differences between expressions were found in either the percentage of fixations (F6, 126 = 1.465, p = 0.210) or the percentage of total fixation time (F6, 126 = 1.930, p = 0.10) directed to the top half of the face relative to the bottom, and this was true for both subject groups. The variability across stimulus images may have accounted for the lack of observable differences across expressions, as subjects viewed each image only once in each block. We collapsed gaze data across expressions for all remaining analyses in order to examine the primary hypotheses that fixations to the top relative to the bottom of the face differed between the two tasks and between the two subject groups.

A half × group interaction for fixation time (F1,22 = 12.541, p = 0.002) indicated that, compared to the hearing group, the deaf group spent less fixation time on the top half of the face (hearing subjects: 857 ± 123 ms; deaf subjects: 549 ± 158 ms, p = 0.003) and more on the bottom half (hearing subjects: 134 ± 65 ms; deaf subjects: 260 ± 73 ms, p = 0.010). The deaf group also directed a greater number of fixations to the bottom half of the face than did the hearing group (half × group interaction: F1,22 = 9.865, p = 0.005; fixations to bottom half: hearing subjects: 0.75 ± 0.22, deaf subjects: 1.12 ± 0.22, p = 0.015). The two groups directed a similar number of fixations to the top half of the face (hearing subjects: 1.64 ± 0.1, deaf subjects: 1.53 ± 0.16, p = 0.189). The number of fixations directed at the face overall was not significantly different between the two groups (hearing subjects: 2.35 ± 0.24 fixations per trial, deaf subjects: 2.65 ± 0.25, F1,22 = 2.31, p = 0.143).

3.2.2 Fixations to the eyes versus mouth

Compared with the hearing group, the deaf group spent more fixation time on the mouth across tasks (one-way ANOVA: F1,23 = 4.436, p = 0.047, hearing subjects: 19 ± 11 ms, deaf subjects: 96 ± 80 ms). The deaf group also spent a larger percentage of total face fixation time on the mouth in the emotion task (F1,22 = 4.857, p = 0.038, 95% CI, hearing subjects: 1.5 ± 1.1, deaf subjects: 13.6 ± 11), and the difference in the identity task was similar in size but only marginally significant (F1,22 = 4.144, p = 0.054, 95% CI, hearing subjects: 2.5 ± 1.7, deaf subjects: 12.9 ± 11). These differences were not at the cost of attending to the eyes, however, as there was no difference between groups in the amount of fixation time spent on the eyes (F1,23 = 1.614, p = 0.217, hearing subjects: 282 ± 89 ms, deaf subjects: 215 ± 76 ms) or in the percentage of total face fixation time spent in this area.

3.2.3 Task effects

Although the two groups directed a similar number of fixations to the top half of the face (one-way ANOVAs, EM task: F1,23 = 0.347, p = 0.562, ID task: F1, 23 = 1.966, p = 0.175), the deaf group spent less time than the hearing group fixating the top half in both tasks (EM task: one-way ANOVA: F1,23 = 9.245, p = 0.006, hearing subjects: 808 ± 122 ms, deaf subjects: 547 ± 145 ms; ID task: F1,23 = 10.334, p = 0.004, hearing subjects: 905 ± 157 ms, deaf subjects: 552 ± 184 ms). During the ID task, the deaf group directed both more fixations (F1,23 = 9.447, p = 0.005, hearing subjects: 0.615 ± 0.26, deaf subjects: 1.106 ± 0.24) and a greater amount of fixation time (F1,23 = 9.992, p = 0.002, hearing subjects: 120 ± 81 ms, deaf subjects: 272 ± 68 ms) to the bottom half of the face, as compared with the hearing group. In contrast, hearing and deaf groups were similar in the number of fixations (F1, 23 = 2.316, p = 0.142, hearing subjects: 0.88 ± 0.24, deaf subjects: 1.14 ± 0.29) and total fixation time (F1,23 = 2.932, p = 0.10, hearing subjects: 148 ± 76 ms, deaf subjects: 248 ± 103 ms) directed to the bottom half of the face during the EM task.

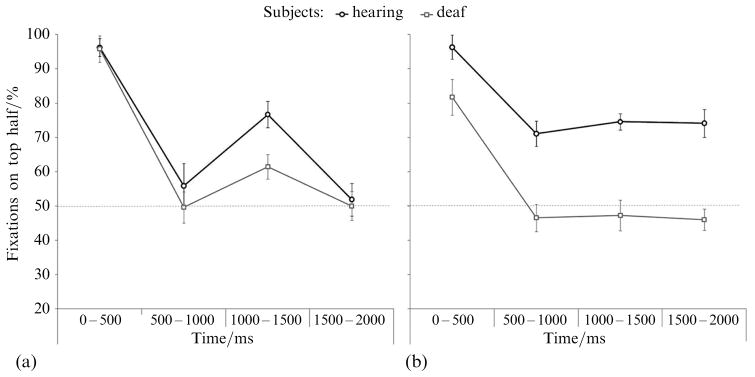

3.2.4 Fixations across the trial

To determine how gaze patterns evolved over the course of each trial, fixation data from each 2000 ms trial were divided into four 500 ms bins. The percentage of fixations made to the top half of the face within each bin is shown in table 2. One-sample t-tests against a test value of 50% were used to determine whether subjects directed significantly more or less than 50% of their fixations to the top half of the face.
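The binning and the test against 50% can be sketched as follows. The source does not say how a fixation spanning two bins was assigned or whether percentages were pooled over trials or averaged per trial; the sketch assumes that each fixation is counted in the bin containing its onset and that counts are pooled over a subject's whole-face trials within a task. A subject with no fixations in a bin gets `None` and would have to be excluded from that bin's test.

```python
import math
import statistics

BIN_EDGES_MS = (0, 500, 1000, 1500, 2000)  # four 500 ms bins over a 2000 ms trial


def percent_top_by_bin(fixations, edges=BIN_EDGES_MS):
    """Percentage of fixations on the top half of the face in each time bin.

    `fixations` holds (onset_ms, is_top) pairs for one subject in one task, pooled
    over that subject's whole-face trials (assumed). Each fixation is counted in the
    bin containing its onset. Bins with no fixations return None.
    """
    counts = [[0, 0] for _ in range(len(edges) - 1)]  # [top, total] per bin
    for onset_ms, is_top in fixations:
        for k in range(len(edges) - 1):
            if edges[k] <= onset_ms < edges[k + 1]:
                counts[k][0] += int(is_top)
                counts[k][1] += 1
                break
    return [100.0 * top / total if total else None for top, total in counts]


def one_sample_t(values, test_value=50.0):
    """One-sample t statistic of `values` against `test_value`; returns (t, df)."""
    n = len(values)
    se = statistics.stdev(values) / math.sqrt(n)
    return (statistics.mean(values) - test_value) / se, n - 1
```

With 12 subjects per group, each bin's test has df = 11, as in table 2. If SciPy is available, `scipy.stats.ttest_1samp(values, 50)` returns the same t statistic together with its two-tailed p value.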

Table 2.

Percentage of fixations directed to the top half of the face within each time bin: significance values and 95% confidence intervals of the mean.

| Task | Bin/ms | Hearing subjects: t | Hearing subjects: 95% CI | Deaf subjects: t | Deaf subjects: 95% CI | Group comparison p |
|---|---|---|---|---|---|---|
| Emotion | 0 – 500 | 31.396** | 96.3 ± 3.2 | 32.315** | 95.8 ± 3.1 | 0.8 (ns) |
| | 500 – 1000 | 0.748 | 55.9 ± 17.4 | −0.05 | 49.6 ± 15.6 | 0.6 (ns) |
| | 1000 – 1500 | 7.941** | 76.7 ± 7.4 | 2.363* | 61.5 ± 10.7 | 0.02 |
| | 1500 – 2000 | 0.304 | 51.9 ± 13.8 | 0.003 | 50.0 ± 16.2 | 0.8 (ns) |
| Identity | 0 – 500 | 22.210** | 96.3 ± 4.6 | 4.695** | 81.7 ± 14.9 | 0.05 |
| | 500 – 1000 | 3.679** | 71.1 ± 12.7 | −0.420 | 46.6 ± 18.0 | 0.02 |
| | 1000 – 1500 | 5.469** | 74.6 ± 9.9 | −0.389 | 47.3 ± 15.4 | 0.003 |
| | 1500 – 2000 | 3.727** | 74.1 ± 14.3 | −0.562 | 46.0 ± 15.6 | 0.008 |

Notes: Two-tailed one-sample t-tests (df = 11) compared the percentage of fixations to the top half of the face with a test value of 50%. In the t columns, * marks significance at the p = 0.05 level and ** marks significance at the p = 0.001 level.

Group comparisons are pairwise a posteriori t-tests (hearing subjects versus deaf subjects), with Bonferroni adjustments for multiple comparisons.

As shown in figure 2, during the first 500 ms of the trial, both groups directed a larger percentage of their fixations to the top half of the face than the bottom half during both EM judgments (hearing subjects: t11 = 31.396, p < 0.001, deaf subjects: t11 = 32.315, p < 0.001) and ID judgments (hearing subjects: t11 = 22.210, p < 0.001, deaf subjects: t11 = 4.695, p = 0.001). However, during the ID task, the deaf group directed an average of only 81.7% (±14.9%) of their fixations to the top half of the face, while the hearing group directed 96.3% (±4.6%), and this group difference was significant (table 2).

Figure 2.

Percentage of fixations directed toward the top half of the face in each time bin during emotion judgments (a) and identity judgments (b). Error bars represent the within-subjects standard error.

During the remainder of the ID trials, the hearing group remained biased toward the top half of the face in all time bins, while the deaf group did not show any bias toward the top half after the first 500 ms (table 2). Importantly, the deaf group also did not show a significant bias toward the bottom half of the face; they directed a similar percentage of fixations to the top and the bottom.

In contrast, fixation patterns during the EM task were similar between the two groups. Both groups fixated the top half of the face more than the bottom half during the first 500 ms of the trial. Between 500 and 1000 ms and between 1500 and 2000 ms, both groups split their fixations evenly between the top and bottom halves. Between 1000 and 1500 ms, both groups shifted their fixations slightly toward the top half of the face. However, this shift was smaller in the deaf group (group comparison, table 2). Therefore, although both hearing and deaf subjects fixated the top half of the face initially and shifted their gaze toward the bottom half later in the trial, the deaf group was less likely to revisit the top half of the face.

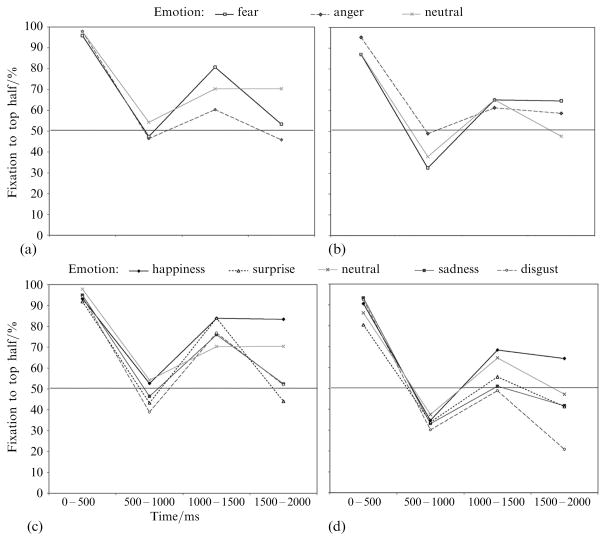

As shown in figure 3, fixation patterns across the trial were similar for all expressions within each group, in contrast to the behavioral findings that the hearing group recognized fear and anger better from the top half of the face than the bottom half, and both groups recognized the remaining emotions better from the bottom than the top half.

Figure 3.

Percentage of fixations directed to the top half of the face in the hearing group [(a) and (c)] and deaf group [(b) and (d)] for each emotional expression. (a) and (c) Gaze data for the emotions that subjects better recognized from the top half of the face than the bottom (fear and anger). (b) and (d) Gaze data for emotions better recognized from the bottom half of the face (happiness, sadness, surprise, and disgust). Fixation patterns were qualitatively similar for both categories of emotional expressions. Gaze data in response to neutral expressions are shown on both graphs for comparison.

4 Discussion

In this study we examined gaze patterns during emotion and identity tasks, and the relative salience of the top versus bottom half of the face during each type of judgment. This study was also the first to examine the effects of deafness and sign language use on gaze patterns in face processing during nonlinguistic tasks, and to conduct a controlled comparison of performance during identity and emotion judgments in deaf signers.

Both hearing and deaf subjects judged identity more accurately from isolated top than bottom halves. This result is in line with previous research, which has demonstrated the importance of the eyes for identity judgments (McKelvie 1976; O’Donnell and Bruce 2001) and for structural encoding of faces (Itier et al 2006; Taylor et al 2001). In contrast, the majority of emotional expressions were recognized more accurately from bottom than top halves (with the exception of fear and anger in the hearing group). This is also in line with previous work, which documented that the mouth region is of greater importance when processing emotional expressions than when processing identity (Calder et al 2000; Schyns et al 2002).

Deafness and the use of sign language did affect the relative distribution of attention to information in the top versus bottom halves of the face, specifically when emotion judgments were being made. Although both groups were given equal exposure to information in the top half of the face, deaf signers were less accurate when responding to isolated top halves. Analyses of part-to-whole ratios showed further that deaf signers’ accuracy in identifying emotions suffered particularly from the loss of information in the bottom half of the face. We hypothesize that the bottom half of the face contains more salient emotion information than the top half for deaf signers in their daily life, and that this results in reduced attention to emotion information provided in the top half.

By comparing behavioral and eye-tracking measures in the current study, we were also able to determine whether subjects fixated the areas of the face that were most salient in each task. During the initial 500 ms of each whole-face trial, both groups of subjects directed the majority of their fixations to the top half of the stimulus in both tasks. Note that the fixation cross presented during the intertrial interval was located in the middle of the nose, slightly below eye level, which would draw at least the initial fixations to this area. However, 500 ms is sufficient time to make several saccades, and the results indicated that subjects rarely shifted their fixation away from the top half of the face during the first 500 ms. Other eye-tracking studies with neutral-face stimuli have demonstrated a similar concentration of early fixations on the eye region of the face (Barton et al 2006; Bindemann et al 2009; Henderson et al 2005; Hsiao and Cottrell 2008).

Fixation data from later portions of the trial indicated that task demands affected hearing subjects’ gaze patterns. Compared with identity judgments, emotion judgments elicited more frequent fixations to the bottom half of the face. Nevertheless, the majority of hearing subjects’ fixations were directed to the top half of the face across the trial in both tasks. This was the case even during the emotion task, when the most salient information was contained in the bottom half of the face for the majority of emotional expressions.

Because the stimuli used in the emotion and identity tasks were identical, these results indicate that behavior and fixation patterns were affected specifically by attention to emotional expression, rather than the mere presence of emotion in the stimuli. Research using the ‘bubbles’ technique (in which small portions of the face are revealed on each trial) has also demonstrated that the eyes and upper face are most informative for identity judgments, and the mouth for expressiveness or emotion judgments (Schyns et al 2002). The current study extends these results by demonstrating that, even when a whole face is visible, subjects allocate gaze—and perhaps attention—to different regions of the face depending on task demands. Research on visual attention has demonstrated that gaze and attention can be directed to different parts of visual stimuli (Posner 1980, 1987), and recent studies have found similar effects during face processing specifically (Blais et al 2008; Caldara et al 2010). Similarly, our results suggest that adults can attend to emotion information in the bottom half of the face while fixating the top half. Future studies may directly address this hypothesis by requiring subjects to maintain fixation on individual parts of whole faces while judging identity or emotion.

Compared with the hearing group, the deaf group showed an increased tendency to fixate the bottom half of the face in both tasks. These fixation data are directly in line with the behavioral results and indicate that deaf signers' increased attention to the bottom half of the face is achieved, at least in part, by increased fixation to that portion of the stimulus and reduced fixation to the top half, in comparison with hearing nonsigners. The behavioral results also suggested that there may be a cost to deaf signers' unique pattern of eye gaze: when emotional expressions had to be identified solely on the basis of information contained in the top half of the face, deaf subjects were less accurate than hearing subjects, possibly owing to deaf subjects' increased reliance on emotional information normally present in the bottom half of the face.

The fact that these results were obtained with a relatively long stimulus presentation time suggests that deaf signers' shift in gaze and attention to the bottom half of the face is robust and habitual. Nevertheless, it is unclear whether these patterns would hold under speeded conditions. Both groups fixated steadily on the top half of the face during the first 500 ms of each trial, and responses in speeded paradigms would tend to occur within this window. If time pressure prevented deaf subjects from making saccades to the lower half of the face, they would be limited to processing information in the top half, which could diminish the behavioral group effects observed in this study. On the other hand, if deaf subjects truly rely on information in the bottom half of the face, a speeded paradigm would restrict their access to this information and could amplify the effects documented here.

Previous eye-tracking research with deaf signers has focused on gaze behavior during ASL comprehension, and has found that signers fixate the lower part of the face more often than other parts of the face, the upper body, or the hands (Agrafiotis et al 2003; Muir and Richardson 2005). Our results suggest that this fixation pattern generalizes to faces containing no linguistic or dynamic information. While hearing adults varied their fixation patterns depending on task demands (directing more fixations across the trial to the top half when judging identity than emotion, in line with their behavior), deaf adults used similar fixation patterns in both tasks (directing the majority of fixations to the top half at first, and then evenly distributing them to the top and bottom thereafter). The robustness of this gaze pattern suggests that it may be habitual in lifelong deaf signers.

Fixating the lower part of the face may allow signers to see the hands and upper body in peripheral visual space while maintaining social gaze on the other signer’s face (Siple 1978). In line with this hypothesis, deaf adults have also shown enhanced detection of and attention to movement in peripheral visual space (Armstrong et al 2002; Bavelier et al 2000; Neville and Lawson 1987), an adaptation which would improve their perception of manual signs in the periphery while looking at the faces of others. In the current study, deaf and hearing subjects did not differ in the percentage of fixations directed to the eyes themselves, even though the deaf group directed fewer fixations to the top half of the face overall. This suggests that deaf signers adjusted their fixation patterns in order to both maintain as much eye contact as possible and increase attention to relevant areas of the bottom half of the face.

Recent findings with hearing and deaf adults in East Asia also support the importance of eye contact for deaf signers across cultures, and demonstrate the influence of both social conventions and visual experience on gaze behaviors toward faces. Compared with hearing Western Caucasian adults, hearing East Asians direct more fixations to the center of the face (in particular, the area near the nose), in line with cultural norms that discourage direct and extended eye contact (Blais et al 2008). Watanabe et al (2011) recently demonstrated that, when judging the emotional valence of static faces, deaf signers in Japan actually fixate the eyes more often than their hearing counterparts do, suggesting that the importance of eye contact for deaf signers can override the cultural tendency to avoid direct eye contact. Together, these studies suggest that deafness and sign language use can affect attention toward faces differently in different cultures. Deaf signers in the current study showed reduced attention to the eye region compared with hearing adults in the US, whereas deaf signers in the study by Watanabe et al (2011) showed enhanced attention to the eye region compared with hearing adults in Japan. In both cases, deaf signers' need to both maintain eye contact and attend to relevant information in the lower part of the face may have played a part in shaping their gaze behaviors toward faces, just as social conventions across cultures may have shaped hearing adults' fixation patterns.

In conclusion, the results of this study provide evidence that gaze patterns toward faces are affected by deafness and/or ASL experience, possibly as a result of acquired skill with a visual language that emphasizes the use of facial expression for affective as well as linguistic information. Future studies may determine the role of deafness and ASL experience individually by examining hearing adults who learned ASL natively, or deaf adults who rely on lipreading rather than ASL to communicate. More generally, our results suggest that habitual gaze patterns toward faces can be altered in response to functional pressures and perhaps explicit training. If fixation patterns and face perception strategies are indeed gradually shaped over the course of development and affected by acquired skills, these results may have important implications for research not only on the deaf, but also on populations with social developmental disabilities.

Acknowledgments

This research was supported by NIDCD NRSA grant F31-DC009351. Many thanks to Leslie Zebrowitz, Arthur Wingfield, Charles Nelson, Giordana Grossi, and Melissa Maslin for their helpful feedback on this research.

Contributor Information

Susan M Letourneau, Email: suzy.letourneau@gmail.com, Brandeis University, 415 South Street, Waltham, MA 02453, USA.

Teresa V Mitchell, Eunice Kennedy Shriver Center, University of Massachusetts Medical School, 200 Trapelo Road, Waltham, MA 02452, USA.

References

- Agrafiotis D, Canagarajah N, Bull DR, Dye M, Twyford H, Kyle J, Chung-How J. Optimised sign language video coding based on eye-tracking analysis. Visual Communications and Image Processing. 2003;5150:1244–1252.

- Armstrong BA, Neville HJ, Hillyard SA, Mitchell TV. Auditory deprivation affects processing of motion, but not color. Cognitive Brain Research. 2002;14:422–434. doi: 10.1016/s0926-6410(02)00211-2.

- Baker-Shenk C. The facial behavior of deaf signers: evidence of a complex language. American Annals of the Deaf. 1985;130:297–304. doi: 10.1353/aad.2012.0963.

- Barton JJ, Radcliffe N, Cherkasova MV, Edelman J, Intriligator JM. Information processing during face recognition: the effects of familiarity, inversion, and morphing on scanning fixations. Perception. 2006;35:1089–1105. doi: 10.1068/p5547.

- Batki A, Baron-Cohen S, Wheelwright S, Connellan J, Ahluwalia J. Is there an innate gaze module? Evidence from human neonates. Infant Behavior and Development. 2000;23:223–229.

- Bavelier D, Tomann A, Hutton C, Mitchell T, Corina D, Neville H. Visual attention to the periphery is enhanced in congenitally deaf individuals. Journal of Neuroscience. 2000;20:1–6. doi: 10.1523/JNEUROSCI.20-17-j0001.2000.

- Bindemann M, Scheepers C, Burton AM. Viewpoint and center of gravity affect eye movements to human faces. Journal of Vision. 2009;9:1–16. doi: 10.1167/9.2.7.

- Blais C, Jack RE, Scheepers C, Fiset D, Caldara R. Culture shapes how we look at faces. PLoS One. 2008;3:e3022. doi: 10.1371/journal.pone.0003022.

- Caldara R, Zhou X, Miellet S. Putting culture under the ‘spotlight’ reveals universal information use for face recognition. PLoS One. 2010;5:e9708. doi: 10.1371/journal.pone.0009708.

- Calder AJ, Young AW, Keane J, Dean M. Configural information in facial expression perception. Journal of Experimental Psychology: Human Perception and Performance. 2000;26:527–551. doi: 10.1037//0096-1523.26.2.527.

- Corina DP, Bellugi U, Reilly J. Neuropsychological studies of linguistic and affective facial expressions in deaf signers. Language and Speech. 1999;42(parts 2–3):307–331. doi: 10.1177/00238309990420020801.

- Dawson G, Webb SJ, McPartland J. Understanding the nature of face processing impairment in autism: insights from behavioral and electrophysiological studies. Developmental Neuropsychology. 2005;27:403–424. doi: 10.1207/s15326942dn2703_6.

- De Filippo CL, Lansing CR. Eye fixations of deaf and hearing observers in simultaneous communication perception. Ear & Hearing. 2006;27:331–352. doi: 10.1097/01.aud.0000226248.45263.ad.

- Eimer M. Does the face-specific N170 component reflect the activity of a specialized eye processor? NeuroReport. 1998;9:2945–2948. doi: 10.1097/00001756-199809140-00005.

- Emery NJ. The eyes have it: the neuroethology, function and evolution of social gaze. Neuroscience and Biobehavioral Reviews. 2000;24:581–604. doi: 10.1016/s0149-7634(00)00025-7.

- Emmorey K, Thompson R, Colvin R. Eye gaze during comprehension of American Sign Language by native and beginning signers. Journal of Deaf Studies and Deaf Education. 2009;14:237–243. doi: 10.1093/deafed/enn037.

- Gauthier I, Nelson CA. The development of face expertise. Current Opinion in Neurobiology. 2001;11:219–224. doi: 10.1016/s0959-4388(00)00200-2.

- Henderson JM, Williams CC, Falk RJ. Eye movements are functional during face learning. Memory & Cognition. 2005;33:98–106. doi: 10.3758/bf03195300.

- Horley K, Williams LM, Gonsalvez C, Gordon E. Social phobics do not see eye to eye: a visual scanpath study of emotional expression processing. Journal of Anxiety Disorders. 2003;17:33–44. doi: 10.1016/s0887-6185(02)00180-9.

- Hsiao JH, Cottrell G. Two fixations suffice in face recognition. Psychological Science. 2008;19:998–1006. doi: 10.1111/j.1467-9280.2008.02191.x.

- Itier RJ, Alain C, Sedore K, McIntosh AR. Early face processing specificity: it’s in the eyes. Journal of Cognitive Neuroscience. 2007;19:1815–1826. doi: 10.1162/jocn.2007.19.11.1815.

- Itier RJ, Latinus M, Taylor MJ. Face, eye and object early processing: what is the face specificity? NeuroImage. 2006;29:667–676. doi: 10.1016/j.neuroimage.2005.07.041.

- Janik S, Wellens A, Goldberg M, Dell’Osso L. Eyes as the center of focus in the visual examination of human faces. Perceptual and Motor Skills. 1978;47:857–858. doi: 10.2466/pms.1978.47.3.857.

- Johnson MH, Morton J. Biology and Cognitive Development: The Case of Face Recognition. Cambridge, MA: Blackwell Press; 1991.

- Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Archives of General Psychiatry. 2002;59:809–816. doi: 10.1001/archpsyc.59.9.809.

- Lacroce MS, Brosgole L, Stanford RG. Discriminability of different parts of faces. Bulletin of the Psychonomic Society. 1993;31:329–331.

- McKelvie SJ. The role of eyes and mouth in the memory of a face. American Journal of Psychology. 1976;89:311–323.

- Moukheiber A, Rautureau G, Perez-Diaz F, Soussignan R, Dubal S, Jouvent R, Pelissolo A. Gaze avoidance in social phobia: objective measure and correlates. Behaviour Research and Therapy. 2010;48:147–151. doi: 10.1016/j.brat.2009.09.012.

- Muir LJ, Richardson EG. Perception of sign language and its application to visual communications for deaf people. Journal of Deaf Studies and Deaf Education. 2005;10:390–401. doi: 10.1093/deafed/eni037.

- Neville HJ, Lawson D. Attention to central and peripheral visual space in a movement detection task: an event-related potential and behavioral study. II. Congenitally deaf adults. Brain Research. 1987;405:268–283. doi: 10.1016/0006-8993(87)90296-4.

- O’Donnell C, Bruce V. Familiarisation with faces selectively enhances sensitivity to changes made to the eyes. Perception. 2001;30:755–764. doi: 10.1068/p3027.

- Park J, Newman LI, Polk TA. Face processing: the interplay of nature and nurture. Neuroscientist. 2009;15:445–449. doi: 10.1177/1073858409337742.

- Pelphrey KA, Sasson NJ, Reznick JS, Paul G, Goldman BD, Piven J. Visual scanning of faces in autism. Journal of Autism and Developmental Disorders. 2002;32:249–261. doi: 10.1023/a:1016374617369.

- Posner MI. Orienting of attention. Quarterly Journal of Experimental Psychology. 1980;32:3–25. doi: 10.1080/00335558008248231.

- Posner MI. Cognitive neuropsychology and the problem of selective attention. Electroencephalography and Clinical Neurophysiology. 1987;39(Supplement):313–316.

- Prodan C, Orbelo D, Testa J, Ross E. Hemispheric differences in recognizing upper and lower facial displays of emotion. Neuropsychiatry, Neuropsychology, & Behavioral Neurology. 2001;14:206–212.

- Schwarzer G, Huber S, Dummler T. Gaze behavior in analytical and holistic face processing. Memory & Cognition. 2005;33:344–354. doi: 10.3758/bf03195322.

- Schyns PG, Bonnar L, Gosselin F. Show me the features! Understanding recognition from the use of visual information. Psychological Science. 2002;13:402–409. doi: 10.1111/1467-9280.00472.

- Siple P. Visual constraints for sign language communication. Sign Language Studies. 1978;19:95–110.

- Smith ML, Gosselin F, Schyns PG. Receptive fields for flexible face categorizations. Psychological Science. 2004;15:753–761. doi: 10.1111/j.0956-7976.2004.00752.x.

- Taylor MJ, Edmonds GE, McCarthy G, Allison T. Eyes first! Eye processing develops before face processing in children. NeuroReport. 2001;12:1671–1676. doi: 10.1097/00001756-200106130-00031.

- Teunisse J-P, de Gelder B. Face processing in adolescents with autistic disorder: The inversion and composite effects. Brain and Cognition. 2003;52:285–294. doi: 10.1016/s0278-2626(03)00042-3.

- Vinette C, Gosselin F, Schyns PG. Spatio-temporal dynamics of face recognition in a flash: It’s in the eyes. Cognitive Science. 2004;28:289–301.

- Watanabe K, Matsuda T, Nishioka T, Namatame M. Eye gaze during observation of static faces in deaf people. PLoS One. 2011;6:e16919. doi: 10.1371/journal.pone.0016919.