Abstract

The number of economic evaluations and methodological studies in the field of spinal disorders has increased in the last decade. The objective of this paper is to provide an overview of current views on economic evaluations in the field of spinal disorders and to help clinicians interpret and use the results of these studies. A full economic evaluation compares both the costs and the effects of two or more interventions. Key elements of economic evaluations, such as identifying adequate alternatives, analytical perspective, cost methodology, missing values and sensitivity analyses, are addressed. Further emphasis is placed on the interpretation of results of economic evaluations conducted alongside randomised clinical trials. Incremental cost–effectiveness ratios, cost–effectiveness planes, acceptability curves and cost–effectiveness thresholds are discussed. The contents may help in clearing the efficacy ‘hurdle’ in the field of spinal disorders.

Keywords: Economic evaluations, Spinal disorders, Methodology, Assessment

Introduction

Ever-rising healthcare expenditures force not only policymakers but also healthcare providers, health insurance companies and patients to make choices in healthcare. Efficient interventions are especially needed in spinal disorders, where a small group of patients accounts for a large share of the costs. In an economic evaluation, both the costs and the consequences of two or more interventions are compared [15]. The evaluation aims to answer the question of whether an intervention is worth doing compared with other strategies that could be performed within a certain budget. Economic evaluations may help to identify ‘value for money’ interventions; they do not necessarily identify the cheapest intervention. An intervention that is more effective than another but associated with higher costs may still be used by healthcare providers, and patients may still be reimbursed for it by insurance companies.

During the last decade, the number of economic evaluations and of methodological papers concerning economic evaluations has increased. Knowledge of the methodology is necessary to value and use economic evaluations in the decision-making process. This paper discusses the interpretation and practical application of economic evaluations in the field of spinal disorders. Although economic evaluations can also be performed in modelling studies, this paper focuses on economic evaluations conducted alongside randomised controlled trials.

Types of economic evaluations

A distinction is made between full and partial economic evaluations. The criteria for a full evaluation are (1) two or more interventions are being considered and (2) both costs and consequences of the interventions are assessed. Evaluations not meeting both criteria are considered partial evaluations and are often outcome and/or cost descriptions, effectiveness analyses or cost analyses. Although partial evaluations may contribute to understanding effectiveness or costs involved in an intervention, full economic evaluations are the most useful in resource allocation questions.

The most commonly used full economic evaluations are [15]:

Cost–minimisation analysis (CMA)

In this analysis, the consequences (or effects) are considered to be equal, and therefore only the costs of the interventions are compared. For instance, in the study by Seferlis et al., the costs of three conservative treatment programmes for acute low back pain were compared to identify the least costly alternative [39]. Although the relative simplicity of this analysis may be appealing, circumstances that justify it are rare [8].

Cost–benefit analysis (CBA)

In a CBA, both costs and consequences are expressed in monetary terms. An intervention may be considered efficient when the benefits outweigh the costs. However, translating outcomes into monetary terms is challenging. One method for this translation is ‘willingness-to-pay’: patients are asked what they would be willing to pay for an intervention given certain changes in, for example, their low back pain, physical functioning or quality of life [15].
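A minimal sketch of the CBA decision rule, assuming benefits have already been elicited as willingness-to-pay amounts. The function name and all figures are hypothetical, and a real CBA would also value benefits and costs incrementally against the comparator rather than for one intervention in isolation.

```python
from statistics import mean


def net_benefit(wtp_per_patient, cost_per_patient):
    """Mean monetary benefit minus mean cost per patient (euros).

    Benefits are stated willingness-to-pay amounts; a positive result
    means the benefits outweigh the costs.
    """
    return mean(wtp_per_patient) - mean(cost_per_patient)


# Hypothetical data: what each patient would pay for the expected
# improvement, and what the intervention costs for each patient.
wtp = [400.0, 650.0, 300.0, 900.0, 500.0]
costs = [450.0, 500.0, 480.0, 520.0, 550.0]

nb = net_benefit(wtp, costs)
print(f"Net benefit per patient: EUR {nb:.2f}")
print("Benefits outweigh costs" if nb > 0 else "Costs outweigh benefits")
```

Here the mean willingness to pay (EUR 550) exceeds the mean cost (EUR 500), so under these invented figures the intervention would pass the CBA criterion.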

Cost–effectiveness analysis (CEA)

In a CEA, consequences are expressed as disease-specific effects. A core set of outcome measures, including pain, functioning and work disability, is recommended for spinal disorders [5]. The incremental effects of an intervention are related to its incremental costs in a so-called incremental cost–effectiveness ratio. In the study by Kovacs et al., for example, neuroreflexotherapy was compared with routine general practice in patients with sub-acute and chronic low back pain. Low back pain intensity and disability were included as outcome measures and related to the healthcare and indirect costs in both intervention groups [28].

Cost–utility analysis (CUA)

In a CUA, outcomes are valued using preferences for health states and are expressed as quality-adjusted life-years (QALYs) or disability-adjusted life-years (DALYs). For instance, the EQ-5D was used to estimate how many QALYs participants had experienced in the UK BEAM trial, which compared best care in general practice, an exercise programme, a spinal manipulation package and combined treatment. The results were expressed as costs per QALY gained [1].
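The sketch below shows one common way of turning repeated utility measurements into QALYs: the area under the utility curve, computed with the trapezoidal rule. It assumes the EQ-5D responses have already been converted into utility values with a national value set; the measurement times and utilities are hypothetical, and this is not necessarily the exact procedure used in the UK BEAM trial.

```python
def qalys_area_under_curve(times_years, utilities):
    """QALYs as the area under a utility curve (trapezoidal rule).

    times_years: measurement times in years since baseline, ascending.
    utilities: utility at each time point (1 = full health, 0 = dead).
    """
    if len(times_years) != len(utilities) or len(times_years) < 2:
        raise ValueError("need at least two paired time points and utilities")
    total = 0.0
    for i in range(1, len(times_years)):
        interval = times_years[i] - times_years[i - 1]
        # Average utility over the interval multiplied by its length in years.
        total += interval * (utilities[i] + utilities[i - 1]) / 2
    return total


# Hypothetical patient measured at baseline, 3, 6 and 12 months.
times = [0.0, 0.25, 0.5, 1.0]
utils = [0.50, 0.60, 0.70, 0.80]
print(round(qalys_area_under_curve(times, utils), 4))
```

In a trial, the mean QALYs per arm would then be compared, and the difference used as the effect in the cost per QALY gained.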

Because QALYs represent generic health status, interventions for different disorders can, in theory, be ranked against each other. In practice, however, it is difficult to obtain information on the full costs and benefits of all health problems and alternative interventions [35]. Consequently, policymakers rarely use such a ranking system.

In the field of spinal disorders, CEA and CUA are the most commonly used full economic evaluations.

Key elements of economic evaluations

In order to use and interpret economic evaluations, knowledge of key elements is essential.

Alternatives

To determine the efficiency or cost–effectiveness of an intervention, a comparison must be made with one or more alternatives. The alternative is generally ‘usual care’ or the best or most widely used alternative, since these are the most informative comparisons for policymakers. In the case of a new intervention in a field where no other interventions are available, a ‘doing nothing’ alternative is possible. A ‘placebo intervention’ is not recommended as an alternative in economic evaluations, since it is not a real treatment option and the information derived from the evaluation is therefore not useful for policymakers. For example, economic evaluations in spinal disorders have compared surgery with usual care [19], and manual therapy with exercise therapy, usual care by the general practitioner and combined therapy [1].

Perspective

Different perspectives can be chosen for an economic evaluation, such as the societal perspective, the patient’s perspective, the health insurance perspective, the healthcare provider perspective or the perspective of companies. The chosen perspective determines which costs and outcomes are considered in the evaluation and whether the results can be generalised to other settings. If, for instance, the economic evaluation is performed from the perspective of the healthcare provider, effects such as patient satisfaction and functioning may be the most relevant outcomes, and the relevant costs are the costs of the therapy. However, economic evaluations are usually performed from a societal perspective. In that case, all relevant outcomes and costs are measured, regardless of who bears the costs and who benefits from the effects. Since the chosen perspective has large implications for the design of the economic evaluation, it should be clearly stated.

Identifying, measuring and valuing outcomes

The outcomes should be relevant to the type of disorder. Bombardier et al. have recommended a core set of outcome measures for intervention studies in the field of spinal disorders [5]. Although this element is very important in economic evaluations, other papers in the current issue address this topic extensively and therefore further details are not provided here.

Identifying, measuring and valuing costs

One of the main challenges in economic evaluations is to decide which costs should be included and how these costs should be measured and valued. The types of costs that are relevant in a specific economic evaluation depend on the chosen perspective. A distinction can be made between direct and indirect costs, within and outside the healthcare sector [33]. Direct costs within the healthcare sector include all costs of healthcare services; for spinal disorders these include, for example, the costs of general practitioner care, physical therapy and hospitalisation. Direct costs outside the healthcare sector include, for example, out-of-pocket costs and travel expenses. Indirect costs within the healthcare sector are healthcare costs incurred during life years gained, such as the costs of treating unrelated heart problems several years after life-saving surgery for spinal cord trauma. In spinal disorder studies, these costs are usually not relevant, because most interventions do not prolong life. Indirect costs outside the healthcare sector include costs of productivity loss [33]. The costs of work absenteeism have been estimated to amount to 93% of the total costs of back pain [48]. Because absenteeism has such a substantial impact on the total costs of spinal disorders, this cost category should be included in economic evaluations in this field. Table 1 provides an overview of the different cost categories with examples of relevant costs within each category.

Table 1.

Different cost categories in economic evaluations in spinal disorders

| Cost type | Within healthcare sector | Outside healthcare sector |
|---|---|---|
| Direct costs | Visit to general practitioner | Alternative healers |
| | Medication | Out-of-pocket costs |
| | Homecare | Travel expenses |
| | Treatment physical therapist, back school, etc. | Informal care |
| | X-ray, MRI-scan, CT-scan | |
| | Operation | |
| | Hospitalisation day | |
| | Outpatient visit | |
| Indirect costs | Healthcare costs during life years gained | Productivity losses: absenteeism from paid and unpaid work |

Usually, costs cannot be measured directly: resource use is measured first and subsequently valued. When an economic evaluation is performed alongside a randomised clinical trial, resource use can be measured with different instruments. Data on healthcare use can be collected in patient interviews, or patients can be asked to fill in questionnaires or to keep a cost diary [22]. Databases of insurance companies, healthcare providers or employers, as well as patient files, can also be used to measure resource use. However, none of these methods is perfect. Patients may not adequately remember healthcare visits or days of absenteeism (recall bias), and databases do not always provide the necessary information (information bias). Using several methods simultaneously in one study generates a large amount of information but can be time consuming and expensive. It also raises the question of which method is the gold standard when results from different sources conflict.

When all relevant resource data have been collected, the next step is valuing the costs. Direct costs are determined by valuing resource use with valid unit prices. Five different ways of obtaining unit prices are distinguished: (1) prices derived from national registries; (2) prices derived from health economics literature and previous research; (3) standard costs; (4) tariffs and charges; (5) calculation of unit costs [33]. These methods are summarised in Table 2. Two approaches are used to value indirect costs due to absenteeism: the Human Capital Approach [15] and the Friction Cost Method [26]. In the Human Capital Approach, the production losses for an individual worker are calculated from the moment of absence until full recovery or, in the absence of recovery, until death or retirement. This is the most frequently used approach. The Friction Cost Method takes into account that sick workers are replaced after a certain period of time, the friction period, whose length depends on the elasticity of the labour market. For example, suppose a worker is on sick leave for more than 6 months due to low back pain and the estimated friction period is 4 months. Under the Friction Cost Method, the cost of absenteeism is calculated for 4 months only, because in theory the worker can be replaced after this period.
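To make the two valuation steps concrete, the following sketch values hypothetical resource use with hypothetical unit prices, and then applies both absenteeism approaches to a 6-month absence with a 4-month friction period, as in the example. The unit prices, the monthly wage and the function names are invented for illustration. The friction cost function is simplified: some applications also apply a correction factor for the elasticity of labour time, which is omitted here.

```python
def direct_costs(resource_use, unit_prices):
    """Value resource use: sum over items of quantity x unit price."""
    return sum(quantity * unit_prices[item] for item, quantity in resource_use.items())


def absenteeism_human_capital(months_absent, monthly_wage):
    """Human Capital Approach: the whole period of absence is counted."""
    return months_absent * monthly_wage


def absenteeism_friction_cost(months_absent, monthly_wage, friction_months):
    """Simplified Friction Cost Method: losses count only up to the friction
    period, after which the worker is assumed to be replaced."""
    return min(months_absent, friction_months) * monthly_wage


# Hypothetical unit prices (EUR) and one patient's resource use.
prices = {"gp_visit": 30.0, "physio_session": 35.0, "mri_scan": 200.0}
use = {"gp_visit": 3, "physio_session": 10, "mri_scan": 1}
print("Direct healthcare costs:", direct_costs(use, prices))

# 6 months off work, 4-month friction period, and a hypothetical
# gross monthly wage of EUR 3,000.
print("Human Capital Approach:", absenteeism_human_capital(6, 3000.0))
print("Friction Cost Method:", absenteeism_friction_cost(6, 3000.0, 4))
```

The gap between the two absenteeism figures grows with the length of absence, which is why the choice of approach should be reported and, where relevant, examined in a sensitivity analysis.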

Table 2.

Advantages, disadvantages and recommendations for different methods of valuing resource use [33]

| Unit prices | Advantages | Disadvantages | Recommendation |
|---|---|---|---|
| (1) Prices from national registries | Limited efforts to collect data | Availability is often limited and prices may not be valid for the patient group being studied | Apply with care |
| (2) Prices from literature and previous research | Limited efforts to collect data | Availability is often limited, and it is often unclear how prices have been determined | Apply with care |
| (3) Standard costs | Represent average unit costs of standard resource units. When widely used it may eliminate price differences between studies | Standard costs do not yet take into account differences between patient groups or disease severity | Use when available |
| (4) Tariffs or charges | Largely available | Charges are the result of negotiations and do not necessarily represent the real costs | Apply with care |
| (5) Calculation of unit costs | Represents the real costs | Often difficult and time consuming to calculate | Use in units that have a substantial impact on the costs |

Analysing cost data

Reviews assessing the statistical methods used in economic evaluations have shown that cost analyses need improvement [2, 24]. Analysing and interpreting cost data from a randomised clinical trial can be challenging because of the highly skewed distribution of cost data and relatively small sample sizes. The skewness is caused by a relatively small number of patients with high costs; for example, there may be a few subjects with long periods of absenteeism from paid work. Also, because of the large variance in cost data, economic evaluations need larger sample sizes than are usually required in a randomised clinical trial [2]. Sample size calculations are usually based on expected differences in effects, not costs. Consequently, results from economic evaluations should be interpreted with caution, because the study may have been underpowered to detect differences in costs.

For the comparison of mean costs, non-parametric rank-based tests and data transformation are not regarded as appropriate, because these methods do not necessarily compare arithmetic mean costs [3, 11, 46]. For policymakers, the arithmetic mean is the most informative measure, since the total costs of implementing the intervention can be calculated from it [3]. The non-parametric bootstrap involves repeatedly drawing samples with replacement from the original data [30]. For example, from an original dataset containing the costs of 50 patients, 50 values are drawn at random with replacement to create a new dataset (a bootstrap dataset) of 50 observations; this process can be repeated as many times as required. Although the bootstrap makes no assumptions about the shape of the distribution, it does assume that the original dataset is representative of the true distribution of the data. The non-parametric bootstrap is recommended for analysing cost data or as a check on the robustness of standard parametric methods [3, 12, 46]. However, O’Hagan and Stevens have argued that non-parametric methods, such as the bootstrap based on the sample mean, may be inappropriate because the sample mean is not robust to skewed data [31]. A recent study has shown that a single parametric or non-parametric form for the distribution of costs cannot be assumed, and that modelling the tail of the distribution is problematic. Sample sizes should therefore be large enough for accurate modelling of the tail of the cost distribution, and sensitivity analysis should be performed to address model uncertainty [29].
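A minimal sketch of a non-parametric bootstrap for the difference in arithmetic mean costs between two trial arms, with a simple percentile 95% interval. The cost values and the function name are hypothetical, and the data are deliberately skewed. The number of replicates and the percentile method are illustrative choices; other interval methods, such as bias-corrected intervals, also exist.

```python
import random
from statistics import mean


def bootstrap_mean_cost_difference(costs_new, costs_old, n_boot=2000, seed=1):
    """Non-parametric bootstrap of the difference in arithmetic mean costs.

    Returns the observed difference and a percentile 95% interval.
    """
    rng = random.Random(seed)  # fixed seed so the example is reproducible
    diffs = []
    for _ in range(n_boot):
        # Resample each arm with replacement, keeping the original arm sizes.
        resample_new = rng.choices(costs_new, k=len(costs_new))
        resample_old = rng.choices(costs_old, k=len(costs_old))
        diffs.append(mean(resample_new) - mean(resample_old))
    diffs.sort()
    # Integer arithmetic for the 2.5th and 97.5th percentile positions.
    lower = diffs[n_boot * 25 // 1000]
    upper = diffs[n_boot * 975 // 1000 - 1]
    return mean(costs_new) - mean(costs_old), (lower, upper)


# Hypothetical, right-skewed costs (EUR): one high-cost patient per arm.
new_arm = [120, 150, 90, 200, 130, 110, 2500, 160, 140, 95]
old_arm = [100, 80, 130, 90, 110, 70, 85, 1200, 95, 105]

observed, (lo, hi) = bootstrap_mean_cost_difference(new_arm, old_arm)
print(f"Mean cost difference: EUR {observed:.1f} (95% CI {lo:.1f} to {hi:.1f})")
```

With only ten patients per arm and one extreme value in each, the interval is wide, which illustrates why cost comparisons in trials powered on effects are often inconclusive.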

Missing values

When economic data are collected alongside a randomised clinical trial, drop-outs and missing data will occur. However, few studies report drop-out rates and missing values in cost data [2]. In case of missing values, both a complete case analysis and an analysis with imputed data are recommended. Recent studies have shown that the method chosen for dealing with missing data may influence the outcomes of an economic evaluation, which stresses the importance of reporting the completeness of economic data and the methods used to deal with missing data [32, 34]. For values missing at random, the bootstrap Expectation Maximisation algorithm, multiple imputation regression and multiple imputation Markov chain Monte Carlo are recommended as methods for analysing incomplete data [32]. However, as Briggs et al. have stated, ‘imputation methods are not a cure for poor study design and/or a poor data collection process’ [10].

Discounting

Discounting means computing equivalent present values of future costs or benefits. Costs should be discounted in studies with a time horizon longer than 1 year. Although the appropriate discount rate is debated, discount rates usually vary between 3 and 5% [14, 21]. Discounting effects is more controversial; both the same rate as for costs and a lower rate have been proposed [21, 23]. The discount rates used should be clearly stated.
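Discounting divides an amount incurred in year t by (1 + r)^t, where r is the discount rate. The sketch below applies this to a hypothetical cost stream at 0%, 3% and 5%. It assumes amounts fall at the start of each year, so the first year is left undiscounted; this is one convention among several, and the function name is illustrative.

```python
def present_value(amounts_by_year, rate):
    """Discount yearly amounts to present value.

    amounts_by_year[t] is the amount incurred in year t (t = 0 is the first
    year, left undiscounted under the convention assumed here).
    """
    return sum(amount / (1 + rate) ** t for t, amount in enumerate(amounts_by_year))


# Hypothetical: EUR 1,000 of treatment costs in each of three years.
yearly_costs = [1000.0, 1000.0, 1000.0]
for r in (0.0, 0.03, 0.05):
    print(f"rate {r:.0%}: present value EUR {present_value(yearly_costs, r):,.2f}")
```

The same function could be applied to effects such as QALYs, at the same or a lower rate, depending on which position on discounting effects is adopted.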

Sensitivity analysis

Different assumptions and choices made during economic evaluations cause uncertainty in the outcomes. Sensitivity analyses investigate how robust the results are to these assumptions and choices. For example, in a cost–effectiveness study of physiotherapy, manual therapy and general practitioner care for neck pain, a sensitivity analysis was performed that excluded two patients who had been hospitalised and had generated considerably more costs than the remaining patients [27]. Different types of sensitivity analysis can be distinguished, including one-way sensitivity analysis, extreme scenarios and probabilistic sensitivity analysis [7]. In a one-way sensitivity analysis, the impact of each variable is assessed by varying it across its range of plausible values. Extreme scenarios examine the most optimistic and/or the most pessimistic cost and effectiveness estimates. In a probabilistic sensitivity analysis, Monte Carlo simulation is used to vary the variables simultaneously [7, 37]. Sensitivity analyses enhance the interpretability and quality of an economic evaluation and should always be performed and reported.
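As an illustration of a one-way sensitivity analysis, the sketch below varies a single hypothetical unit price (the price of a therapy session) over a plausible range while holding every other input at its base-case value, and recomputes the incremental cost per point gained on a disability scale each time. All quantities and function names are invented for illustration.

```python
def incremental_cost(extra_sessions, session_price, other_cost_difference):
    """Extra cost per patient of the new treatment versus the comparator."""
    return other_cost_difference + extra_sessions * session_price


def one_way_sensitivity(session_prices, extra_sessions, other_cost_difference, effect_difference):
    """Recompute the incremental cost per unit of effect for each plausible
    price, holding all other inputs at their base-case values."""
    results = []
    for price in session_prices:
        delta_cost = incremental_cost(extra_sessions, price, other_cost_difference)
        results.append((price, delta_cost / effect_difference))
    return results


# Hypothetical base case: 6 extra therapy sessions per patient, other costs
# EUR 50 higher, and a gain of 2 points on a disability scale.
for price, ratio in one_way_sensitivity([25.0, 35.0, 45.0], 6, 50.0, 2.0):
    print(f"session price EUR {price:.0f}: EUR {ratio:.0f} per point gained")
```

If the conclusion of the study does not change anywhere in the plausible price range, the result can be regarded as robust to that assumption.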

Interpreting results

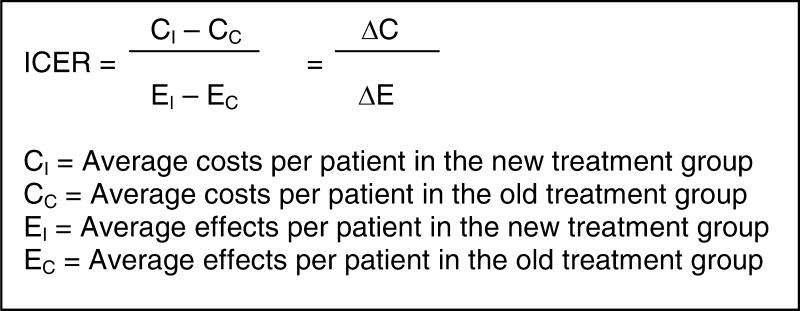

Interpreting the results of a CEA and/or CUA is challenging. For each outcome measure, an incremental cost–effectiveness ratio (ICER) can be calculated. In the incremental approach, the additional costs of an intervention over another intervention are compared with its additional effects [15]. The costs and effects of two interventions can be related directly, since the ratio is the difference in costs divided by the difference in effects.

The ICER indicates the additional investment needed to gain one extra unit of effect; for example, the additional costs of surgical treatment compared with non-surgical treatment per point gained on the Roland Morris Disability Questionnaire, or per QALY gained. Table 3 shows why the ICER on its own is difficult to interpret. An ICER of € 2,000 could mean that the intervention is € 10,000 more expensive and five points more effective (situation A), but it could also indicate that the intervention is € 10,000 less costly and five points less effective (situation B). Without the values of the difference in costs and the difference in effects, the ICER is uninformative. To determine which treatment is to be preferred, measures of precision and the policymaker’s maximum willingness to pay (in the literature often referred to as λ) are needed.

Table 3.

Examples of incremental cost–effectiveness ratios (ICERs)

| Situation | Cost difference between new and existing intervention (ΔC) | Effect difference between new and existing intervention (ΔE) | ICER (ΔC/ΔE) |
|---|---|---|---|
| (A) | € 10,000 (new treatment more costly) | 5 (new treatment more effective) | € 2,000 |
| (B) | € -10,000 (new treatment less costly) | -5 (new treatment less effective) | € 2,000 |
| (C) | € -10,000 (new treatment less costly) | 5 (new treatment more effective) | € -2,000 |
| (D) | € 10,000 (new treatment more costly) | -5 (new treatment less effective) | € -2,000 |

Note that the ICERs in situations A and B are identical; however, in situation A the new treatment is both more costly and more effective, whereas in situation B it is less costly and less effective.
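The ambiguity in Table 3 can be reproduced in a few lines. The sketch below computes the ICER as ΔC/ΔE for situations A to D and reports where each pair lies on the cost–effectiveness plane. The quadrant labels (NE, NW, SE, SW, with effects on the x-axis and costs on the y-axis) are the conventional compass names; the function names are illustrative.

```python
def icer(delta_cost, delta_effect):
    """Incremental cost-effectiveness ratio: ΔC / ΔE."""
    if delta_effect == 0:
        raise ValueError("ICER is undefined when the effect difference is zero")
    return delta_cost / delta_effect


def quadrant(delta_cost, delta_effect):
    """Position of (ΔE, ΔC) on the cost-effectiveness plane
    (x-axis: difference in effects, y-axis: difference in costs)."""
    if delta_cost == 0 or delta_effect == 0:
        return "on an axis"
    if delta_effect > 0:
        return "NE: more costly, more effective" if delta_cost > 0 else "SE: new treatment dominates"
    return "SW: less costly, less effective" if delta_cost < 0 else "NW: new treatment is dominated"


# Situations A-D from Table 3 (ΔC in euros, ΔE in outcome points).
situations = {"A": (10_000, 5), "B": (-10_000, -5), "C": (-10_000, 5), "D": (10_000, -5)}
for label, (d_cost, d_effect) in situations.items():
    print(f"{label}: ICER = EUR {icer(d_cost, d_effect):,.0f}; {quadrant(d_cost, d_effect)}")
```

Situations A and B give the same ratio but lie in opposite quadrants. For a willingness to pay λ, a NE result favours the new treatment when the ICER is below λ, whereas a SW result favours it only when the ICER (the savings per unit of effect forgone) is above λ.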

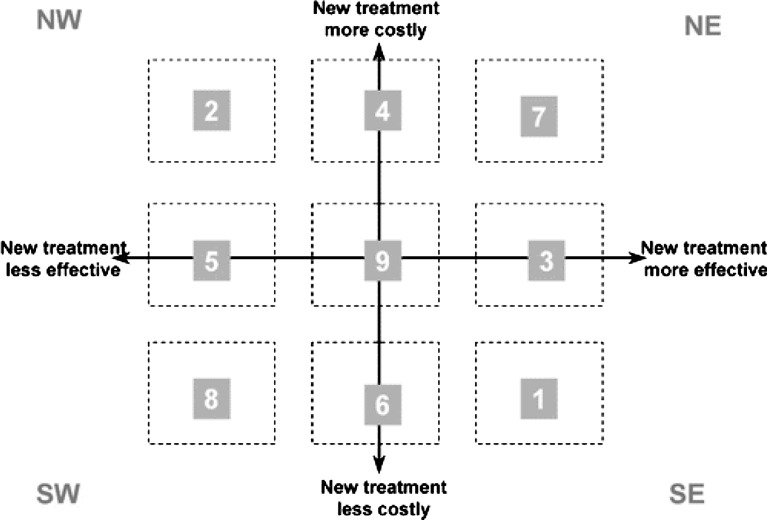

Different methods have been proposed for estimating confidence intervals for the ICER [6, 36]. The bootstrap estimates can be displayed on a cost–effectiveness plane [30], in which the x-axis represents the difference in effects and the y-axis the difference in costs. Four quadrants can be distinguished (see Fig. 1). In situations 1 and 2, one treatment dominates the other, which poses no problems for interpretation. Situations 7 and 8 require information on the threshold value for determining cost–effectiveness. Interpreting the ICER is challenging when the confidence surface of the bootstrapped ratios overlaps one of the axes. This is the case in situations 3, 4, 5 and 6 in Fig. 1, in which there is no statistically significant difference in either the costs or the effects.

Fig. 1.

Cost–effectiveness plane with nine possible situations resulting from an economic evaluation [9]. Reprinted, with permission, from the Annual Review of Public Health, Volume 23 ©2002 by Annual Reviews www.annualreviews.org
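A sketch of how bootstrapped results can be summarised on the cost–effectiveness plane: patients are resampled with replacement within each arm, keeping each patient's cost and effect together so that their correlation is preserved, and the share of replicates falling in each quadrant is counted. The patient-level data and function names are hypothetical, and replicates lying exactly on an axis are assigned to the north or east side purely by convention in this sketch.

```python
import random
from statistics import mean


def bootstrap_increments(arm_new, arm_old, n_boot=1000, seed=7):
    """Bootstrap (ΔC, ΔE) pairs. Each arm is a list of (cost, effect) tuples,
    one per patient; patients are resampled whole so cost and effect stay paired."""
    rng = random.Random(seed)
    replicates = []
    for _ in range(n_boot):
        new = rng.choices(arm_new, k=len(arm_new))
        old = rng.choices(arm_old, k=len(arm_old))
        delta_cost = mean(c for c, _ in new) - mean(c for c, _ in old)
        delta_effect = mean(e for _, e in new) - mean(e for _, e in old)
        replicates.append((delta_cost, delta_effect))
    return replicates


def quadrant_shares(replicates):
    """Share of replicates per quadrant; points exactly on an axis are counted
    as north (ΔC = 0) or east (ΔE = 0) by convention in this sketch."""
    counts = {"NE": 0, "NW": 0, "SE": 0, "SW": 0}
    for delta_cost, delta_effect in replicates:
        key = ("N" if delta_cost >= 0 else "S") + ("E" if delta_effect >= 0 else "W")
        counts[key] += 1
    return {key: n / len(replicates) for key, n in counts.items()}


# Hypothetical patient-level data: (cost in EUR, effect in outcome points).
new_arm = [(900, 6), (1200, 8), (700, 4), (1500, 9), (1100, 5), (800, 7)]
old_arm = [(600, 3), (500, 5), (900, 4), (400, 2), (700, 6), (550, 3)]

shares = quadrant_shares(bootstrap_increments(new_arm, old_arm))
print(shares)
```

A large share in a single quadrant corresponds to a confidence surface that stays away from the axes; shares spread over several quadrants correspond to the harder-to-interpret situations in Fig. 1.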

Negative ratios are also problematic for decision-making, since they have no meaningful ordering [45]. Van Hout et al. introduced the cost–effectiveness acceptability curve (CEAC) to overcome some of the difficulties of the ICER [47]. For each value of the policymakers’ maximum willingness to pay, the curve represents the proportion of the joint sampling distribution of cost and effect differences that lies below the line through the origin with a slope equal to that willingness to pay. It thus shows the probability that an intervention is cost-effective for a wide range of threshold ratios [40]. Because ICERs and their confidence surfaces can lie in different quadrants, the acceptability curve can take different forms [18]. Stinnett and Mullahy introduced another approach for the analysis of uncertainty, the net health benefit framework, in which the cost–effectiveness decision rule is reformulated as a net benefit that is positive when an intervention is cost-effective [43].
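The acceptability curve and the net benefit framework can both be computed directly from bootstrap replicates of (ΔC, ΔE). In the sketch below, a replicate counts as cost-effective at threshold λ when its net monetary benefit λ·ΔE − ΔC is positive, which is equivalent to lying below the line through the origin with slope λ; the net health benefit ΔE − ΔC/λ gives the same decision for any positive λ. The five replicates and the function names are hypothetical; in practice there would be hundreds or thousands of replicates, generated by bootstrapping the trial data.

```python
def net_monetary_benefit(delta_cost, delta_effect, wtp):
    """λ·ΔE − ΔC: positive when the new intervention is cost-effective at λ = wtp."""
    return wtp * delta_effect - delta_cost


def net_health_benefit(delta_cost, delta_effect, wtp):
    """ΔE − ΔC/λ (λ > 0): the same decision rule expressed in health units."""
    return delta_effect - delta_cost / wtp


def acceptability_curve(replicates, thresholds):
    """Probability of cost-effectiveness at each threshold: the share of
    (ΔC, ΔE) replicates with a positive net monetary benefit."""
    curve = []
    for wtp in thresholds:
        favourable = sum(1 for d_cost, d_effect in replicates
                         if net_monetary_benefit(d_cost, d_effect, wtp) > 0)
        curve.append((wtp, favourable / len(replicates)))
    return curve


# Hypothetical bootstrap replicates of (ΔC in EUR, ΔE in outcome points).
replicates = [(300, 2.0), (500, 1.5), (-100, 0.5), (800, 3.0), (200, -0.5)]
for wtp, prob in acceptability_curve(replicates, [0, 100, 200, 400, 1000]):
    print(f"λ = EUR {wtp}: P(cost-effective) = {prob:.2f}")
```

At λ = 0 the curve equals the share of replicates in which the new intervention saves money (here 0.2); as λ grows it approaches the share with ΔE > 0 (here 0.8), which is why the curve does not necessarily reach 1.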

Interpretation of an economic evaluation: an example

An example of an economic evaluation in the field of spinal disorders is the cost–effectiveness study of lumbar fusion versus non-surgical treatment by Fritzell et al. [19]. A total of 284 patients with severe and therapy-resistant chronic low back pain of unknown origin for at least 2 years were included in the study. Patients were randomly assigned to four treatment groups; three included different surgical procedures and one consisted of commonly used non-surgical treatments.

The authors clearly describe almost all of the key elements mentioned above. The study was not originally designed as an economic evaluation, which has consequences for the sample size and the collection of cost data, but this is clearly discussed in the paper. However, missing data and the choice of cost analysis methods were not explained.

The ICERs and their confidence intervals all fall within the north-east (NE) quadrant of the cost–effectiveness plane: lumbar fusion is both more costly and more effective than non-surgical treatment. Whether lumbar fusion is cost-effective therefore depends on the policymakers’ willingness to pay. The acceptability curves show that the more policymakers are willing to pay, the higher the probability that lumbar fusion is more cost-effective than non-surgical treatment.

Cost–effectiveness threshold

Acceptability curves and net benefit only indicate the probability that a certain therapy is cost-effective. Whether the therapy is regarded as cost-effective depends on the policymakers’ maximum willingness to pay. Recent papers have focussed on cost–effectiveness thresholds [13, 16, 25]. Eichler et al. identified different types of thresholds: those proposed by individuals or institutions, thresholds estimated from willingness-to-pay or related studies, thresholds inferred from past allocation decisions, and cost–effectiveness ratios of other (non-medical) programmes [16]. However, the use of threshold values is debated. Gafni and Birch have argued that thresholds might lead to uncontrolled growth in healthcare expenditure and that the assumptions necessary for applying thresholds are not met in practice [4, 20]. The ‘affordability curve’ [40] combines budget constraints with cost–effectiveness: it shows the probability that the therapy under study is affordable over a wide range of threshold budgets. It is unlikely that policymakers will use a single threshold value in deciding whether to implement an intervention. Other factors, such as the overall budget impact of the intervention and the absence of adequate alternatives, also influence policy decisions [44].

Progress to date

Several guidelines and recommendations have been developed to assess the quality of economic evaluations for systematic reviews [14, 41, 42]. Recently, the Consensus on Health Economic Criteria (CHEC) list was designed [17]. The CHEC-list consists of 19 items and focuses on the methodological quality of economic evaluations. Table 4 summarises the 19 items of the CHEC-list. In a recent systematic review, economic evaluations in non-specific low back pain studies were assessed using the CHEC-list. Due to the heterogeneity of interventions, controls and study populations, no definite conclusions could be drawn about the most cost-effective non-operative treatment in patients with low back pain [38].

Table 4.

Items of the Consensus on Health Economic Criteria (CHEC)-list

| CHEC-list item |
|---|
| 1. Is the study population clearly described? |
| 2. Are competing alternatives clearly described? |
| 3. Is a well-defined research question posed in answerable form? |
| 4. Is the economic study design appropriate to the stated objective? |
| 5. Is the chosen time horizon appropriate in order to include relevant costs and consequences? |
| 6. Is the actual perspective chosen appropriate? |
| 7. Are all important and relevant costs for each alternative identified? |
| 8. Are all costs measured appropriately in physical units? |
| 9. Are costs valued appropriately? |
| 10. Are all important and relevant outcomes for each alternative identified? |
| 11. Are all outcomes measured appropriately? |
| 12. Are outcomes valued appropriately? |
| 13. Is an incremental analysis of costs and outcomes of alternatives performed? |
| 14. Are all future costs and outcomes discounted appropriately? |
| 15. Are all important variables, whose values are uncertain, appropriately subjected to sensitivity analysis? |
| 16. Do the conclusions follow from the data reported? |
| 17. Does the study discuss the generalisability of the results to other settings and patient/client groups? |
| 18. Does the article indicate that there is no potential conflict of interest of study researcher(s) and funder(s)? |
| 19. Are ethical and distributional issues discussed appropriately? |

Conclusion and recommendations

It is often argued that decisions in clinical practice should be based not on cost issues but on medical necessity or clinical effectiveness. With rising healthcare expenditure and its consequences for budgets, however, choices have to be made. These choices need not be based on cost considerations alone, but basing decisions solely on medical necessity would also be insufficient. Choices in clinical practice are already implicitly based on cost considerations: simply providing all available care to one group of patients implies that other groups of patients are left with nothing. Economic evaluations can provide valuable information, but the methodology, and especially the cost methodology, needs to improve. To be able to critically appraise economic evaluations and consequently use these studies, knowledge of the methodological aspects is of utmost importance. Although the ‘perfect’ study is a utopia, the specific assumptions, choices and methods used should be clearly described to provide insight into the quality and practical use of the evaluation. In the near future, economic assessment may thus play an increasingly important role in the outcome evaluation of spinal interventions.

References

- 1.(2004) United Kingdom back pain exercise and manipulation (UK BEAM) randomised trial: cost effectiveness of physical treatments for back pain in primary care. BMJ 329:1381 [DOI] [PMC free article] [PubMed]

- 2.Barber JA, Thompson SG. Analysis and interpretation of cost data in randomised controlled trials: review of published studies. BMJ. 1998;317:1195–1200. doi: 10.1136/bmj.317.7167.1195. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Barber JA, Thompson SG. Analysis of cost data in randomized trials: an application of the non-parametric bootstrap. Stat Med. 2000;19:3219–3236. doi: 10.1002/1097-0258(20001215)19:23<3219::AID-SIM623>3.0.CO;2-P. [DOI] [PubMed] [Google Scholar]

- 4.Birch S, Gafni A. Changing the problem to fit the solution: Johannesson and Weinstein’s (mis) application of economics to real world problems. J Health Econ. 1993;12:469–476. doi: 10.1016/0167-6296(93)90006-Z. [DOI] [PubMed] [Google Scholar]

- 5.Bombardier C. Outcome assessments in the evaluation of treatment of spinal disorders: summary and general recommendations. Spine. 2000;25:3100–3103. doi: 10.1097/00007632-200012150-00003. [DOI] [PubMed] [Google Scholar]

- 6.Briggs A, Fenn P. Confidence intervals or surfaces? Uncertainty on the cost–effectiveness plane. Health Econ. 1998;7:723–740. doi: 10.1002/(SICI)1099-1050(199812)7:8<723::AID-HEC392>3.0.CO;2-O. [DOI] [PubMed] [Google Scholar]

- 7.Briggs AH, Gray AM. Handling uncertainty in economic evaluations of healthcare interventions. BMJ. 1999;319:635–638. doi: 10.1136/bmj.319.7210.635. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Briggs AH, O’Brien BJ. The death of cost–minimization analysis? Health Econ. 2001;10:179–184. doi: 10.1002/hec.584. [DOI] [PubMed] [Google Scholar]

- 9.Briggs AH, O’Brien BJ, Blackhouse G. Thinking outside the box: recent advances in the analysis and presentation of uncertainty in cost–effectiveness studies. Annu Rev Public Health. 2002;23:377–401. doi: 10.1146/annurev.publhealth.23.100901.140534. [DOI] [PubMed] [Google Scholar]

- 10.Briggs A, Clark T, Wolstenholme J, Clarke P. Missing presumed at random: cost-analysis of incomplete data. Health Econ. 2003;12:377–392. doi: 10.1002/hec.766. [DOI] [PubMed] [Google Scholar]

- 11.Coyle D. Statistical analysis in pharmacoeconomic studies. A review of current issues and standards. Pharmacoeconomics. 1996;9:506–516. doi: 10.2165/00019053-199609060-00005. [DOI] [PubMed] [Google Scholar]

- 12.Desgagne A, Castilloux AM, Angers JF, LeLorier J. The use of the bootstrap statistical method for the pharmacoeconomic cost analysis of skewed data. Pharmacoeconomics. 1998;13:487–497. doi: 10.2165/00019053-199813050-00002. [DOI] [PubMed] [Google Scholar]

- 13.Devlin N, Parkin D. Does NICE have a cost–effectiveness threshold and what other factors influence its decisions? A binary choice analysis. Health Econ. 2004;13:437–452. doi: 10.1002/hec.864. [DOI] [PubMed] [Google Scholar]

- 14.Drummond MF, Jefferson TO. Guidelines for authors and peer reviewers of economic submissions to the BMJ The BMJ Economic Evaluation Working Party. BMJ. 1996;313:275–283. doi: 10.1136/bmj.313.7052.275. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Drummond MF, O’Brien B, Stoddart GL, Torrance GW. Methods for the economic evaluation of health care programmes, 2nd edn. Oxford: Oxford University Press; 1997.

- 16. Eichler HG, Kong SX, Gerth WC, Mavros P, Jonsson B. Use of cost–effectiveness analysis in health-care resource allocation decision-making: how are cost–effectiveness thresholds expected to emerge? Value Health. 2004;7:518–528. doi: 10.1111/j.1524-4733.2004.75003.x.

- 17. Evers S, Goossens M, de Vet HC, van Tulder M, Ament A. Assessment of methodological quality of economic evaluations-CHEC. Int J Technol Assess Health Care. 2005.

- 18. Fenwick E, O’Brien BJ, Briggs A. Cost–effectiveness acceptability curves—facts, fallacies and frequently asked questions. Health Econ. 2004;13:405–415. doi: 10.1002/hec.903.

- 19. Fritzell P, Hagg O, Jonsson D, Nordwall A. Cost–effectiveness of lumbar fusion and nonsurgical treatment for chronic low back pain in the Swedish Lumbar Spine Study: a multicenter, randomized, controlled trial from the Swedish Lumbar Spine Study Group. Spine. 2004;29:421–434. doi: 10.1097/01.BRS.0000102681.61791.12.

- 20. Gafni A, Birch S. Guidelines for the adoption of new technologies: a prescription for uncontrolled growth in expenditures and how to avoid the problem. CMAJ. 1993;148:913–917.

- 21. Gold MR, Siegel JE, Russell LB, Weinstein MC. Cost–effectiveness in health and medicine. New York: Oxford University Press; 1996.

- 22. Goossens ME, Rutten-van Molken MP, Vlaeyen JW, Linden SM. The cost diary: a method to measure direct and indirect costs in cost–effectiveness research. J Clin Epidemiol. 2000;53:688–695. doi: 10.1016/S0895-4356(99)00177-8.

- 23. Gravelle H, Smith D. Discounting for health effects in cost–benefit and cost–effectiveness analysis. Health Econ. 2001;10:587–599. doi: 10.1002/hec.618.

- 24. Graves N, Walker D, Raine R, Hutchings A, Roberts JA. Cost data for individual patients included in clinical studies: no amount of statistical analysis can compensate for inadequate costing methods. Health Econ. 2002;11:735–739. doi: 10.1002/hec.683.

- 25. Hirth RA, Chernew ME, Miller E, Fendrick AM, Weissert WG. Willingness to pay for a quality-adjusted life year: in search of a standard. Med Decis Making. 2000;20:332–342. doi: 10.1177/0272989X0002000310.

- 26. Koopmanschap MA, Rutten FF, van Ineveld BM, van Roijen L. The friction cost method for measuring indirect costs of disease. J Health Econ. 1995;14:171–189. doi: 10.1016/0167-6296(94)00044-5.

- 27. Korthals-de Bos IB, Hoving JL, van Tulder MW, Rutten-van Molken MP, Ader HJ, de Vet HC, et al. Cost effectiveness of physiotherapy, manual therapy, and general practitioner care for neck pain: economic evaluation alongside a randomised controlled trial. BMJ. 2003;326:911. doi: 10.1136/bmj.326.7395.911.

- 28. Kovacs FM, Llobera J, Abraira V, Lazaro P, Pozo F, Kleinbaum D. Effectiveness and cost–effectiveness analysis of neuroreflexotherapy for subacute and chronic low back pain in routine general practice: a cluster randomized, controlled trial. Spine. 2002;27:1149–1159. doi: 10.1097/00007632-200206010-00004.

- 29. Nixon RM, Thompson SG. Parametric modelling of cost data in medical studies. Stat Med. 2004;23:1311–1331. doi: 10.1002/sim.1744.

- 30. O’Brien BJ, Briggs AH. Analysis of uncertainty in health care cost–effectiveness studies: an introduction to statistical issues and methods. Stat Methods Med Res. 2002;11:455–468. doi: 10.1191/0962280202sm304ra.

- 31. O’Hagan A, Stevens JW. Assessing and comparing costs: how robust are the bootstrap and methods based on asymptotic normality? Health Econ. 2003;12:33–49. doi: 10.1002/hec.699.

- 32. Oostenbrink JB, Al MJ. The analysis of incomplete cost data due to dropout. Health Econ. 2005.

- 33. Oostenbrink JB, Koopmanschap MA, Rutten FF. Standardisation of costs: the Dutch Manual for Costing in economic evaluations. Pharmacoeconomics. 2002;20:443–454. doi: 10.2165/00019053-200220070-00002.

- 34. Oostenbrink JB, Al MJ, Rutten-van Molken MP. Methods to analyse cost data of patients who withdraw in a clinical trial setting. Pharmacoeconomics. 2003;21:1103–1112. doi: 10.2165/00019053-200321150-00004.

- 35. Palmer S, Byford S, Raftery J. Economics notes: types of economic evaluation. BMJ. 1999;318:1349. doi: 10.1136/bmj.318.7194.1349.

- 36. Polsky D, Glick HA, Willke R, Schulman K. Confidence intervals for cost–effectiveness ratios: a comparison of four methods. Health Econ. 1997;6:243–252. doi: 10.1002/(SICI)1099-1050(199705)6:3<243::AID-HEC269>3.0.CO;2-Z.

- 37. Robinson R. Cost–effectiveness analysis. BMJ. 1993;307:793–795. doi: 10.1136/bmj.307.6907.793.

- 38. van der Roer N, Goossens M, Evers S, van Tulder M. What is the most cost-effective treatment for patients with low back pain? A systematic review. Best Pract Res Clin Rheumatol. 2005.

- 39. Seferlis T, Lindholm L, Nemeth G. Cost-minimisation analysis of three conservative treatment programmes in 180 patients sick-listed for acute low-back pain. Scand J Prim Health Care. 2000;18:53–57. doi: 10.1080/02813430050202578.

- 40. Sendi PP, Briggs AH. Affordability and cost–effectiveness: decision-making on the cost–effectiveness plane. Health Econ. 2001;10:675–680. doi: 10.1002/hec.639.

- 41. Siegel JE, Weinstein MC, Russell LB, Gold MR. Recommendations for reporting cost–effectiveness analyses. Panel on cost–effectiveness in health and medicine. JAMA. 1996;276:1339–1341. doi: 10.1001/jama.276.16.1339.

- 42. Siegel JE, Torrance GW, Russell LB, Luce BR, Weinstein MC, Gold MR. Guidelines for pharmacoeconomic studies. Recommendations from the panel on cost effectiveness in health and medicine. Panel on cost effectiveness in health and medicine. Pharmacoeconomics. 1997;11:159–168. doi: 10.2165/00019053-199711020-00005.

- 43. Stinnett AA, Mullahy J. Net health benefits: a new framework for the analysis of uncertainty in cost–effectiveness analysis. Med Decis Making. 1998;18:S68–S80. doi: 10.1177/0272989X9801800209.

- 44. Taylor RS, Drummond MF, Salkeld G, Sullivan SD. Inclusion of cost effectiveness in licensing requirements of new drugs: the fourth hurdle. BMJ. 2004;329:972–975. doi: 10.1136/bmj.329.7472.972.

- 45. Thompson S. Statistical issues in cost–effectiveness analyses. Stat Methods Med Res. 2002;11:453–454. doi: 10.1191/0962280202sm303ed.

- 46. Thompson SG, Barber JA. How should cost data in pragmatic randomised trials be analysed? BMJ. 2000;320:1197–1200. doi: 10.1136/bmj.320.7243.1197.

- 47. van Hout BA, Al MJ, Gordon GS, Rutten FF. Costs, effects and C/E-ratios alongside a clinical trial. Health Econ. 1994;3:309–319. doi: 10.1002/hec.4730030505.

- 48. van Tulder MW, Koes BW, Bouter LM. A cost-of-illness study of back pain in The Netherlands. Pain. 1995;62:233–240. doi: 10.1016/0304-3959(94)00272-G.