Abstract

Image labeling is an essential step for quantitative analysis of medical images. Many image labeling algorithms require seed identification in order to initialize segmentation algorithms such as region growing, graph cuts, and the random walker. Seeds are usually placed manually by human raters, which makes these algorithms semi-automatic and can be prohibitive for very large datasets. In this paper, an automatic algorithm for placing seeds using multi-atlas registration and statistical fusion is proposed. Atlases containing the centers of mass of a collection of neuroanatomical objects are deformably registered in a training set to determine where these centers of mass go after the labels are transformed by registration. The biases of these transformations are determined and incorporated in a continuous form of Simultaneous Truth And Performance Level Estimation (STAPLE) fusion, thereby improving the estimates (on average) over a single registration strategy that does not incorporate bias or fusion. We evaluate this technique using real 3D brain MR image atlases and demonstrate its efficacy in correcting the data bias and reducing the fusion error.

Keywords: Labeling, seed, continuous, STAPLE, atlas, statistics, fusion, bias, registration

1. INTRODUCTION

Image labeling is an important step in automated analysis of medical images. Several segmentation algorithms that can be used for labeling, such as region growing, the random walker[1], and graph cuts[2], require the identification of one or more initial seeds. Typically, seeds are picked manually by human raters, which means that these algorithms are not fully automatic, and the burden of providing human raters to pick seeds on a very large database of images can become prohibitive in time or cost. In some cases, multiple raters may be desirable for robustness in the face of human error, which is even more costly. It has become increasingly common to use human raters to establish an initial atlas of validated training data, from which a fully automated, multi-atlas algorithm is built to mimic the human raters and carry out the desired task on an otherwise prohibitively large dataset. The statistical fusion of object labels carried over from multiple registrations of a set of labeled atlases has been well explored and is being actively investigated and improved[3, 4, 5, 6]. In this paper, we explore the statistical fusion of seeds that are intended to initialize a segmentation algorithm that would run in the native subject space. At this stage of development, we consider seeds that are defined as object centers of mass, but the basic technique we present can be easily modified to estimate seeds that are optimized for a particular segmentation algorithm or to establish multiple seeds within each object.

2. METHODS

Suppose an atlas of labeled objects in a standardized space (such as affine-registered MNI space) is available. A subject image is then registered into this standardized space, and the centers of mass of each of its objects are desired. A "standard" approach might be to register all objects into the subject space, fuse the labels using (for example) the classic discrete Simultaneous Truth And Performance Level Estimation (STAPLE) algorithm[3], and then recompute the centers of mass. In this work, we are looking for a general approach in which centers of mass that are known in the atlas spaces can be fused directly after registration, without requiring a recomputation based on an estimated label fusion.

Therefore, we first deformably register[7] each atlas from the training set into the subject, establishing a collection of estimates of the center of mass of each object. These estimates are fused with our previously reported MAP-CSTAPLE method[6], which requires both the continuous STAPLE algorithm (CSTAPLE)[4, 5] and prior information on rater (training atlas) performance (bias and variance). Before the data can be fused, we therefore need to establish a prior model of rater performance. For this purpose we consider only the training set, in which the centers of mass of all labeled objects are known. Every training atlas is deformably registered to all other training atlases, and the transformed centers of mass are compared to the true positions, generating a set of error vectors. Some atlases will perform well on some datasets and not so well on others, and in general each object within each atlas will have an a priori performance[8] characterized statistically by its bias and variance. With this prior information incorporated, MAP-CSTAPLE performs continuous data fusion with reduced error, because the expected bias and variance of each training atlas are taken into account.

To explain mathematically, let the subject atlas be denoted At and the R training atlases be denoted {A1, A2, …, AR}, with corresponding labels {I1(x), I2(x), …, IR(x)}. Each label set contains the same number of object labels, i.e., the jth label of atlas Am is denoted by an indicator function that is 1 when coordinate x belongs to the label and 0 elsewhere. The center of mass of this object label is defined as

(1)

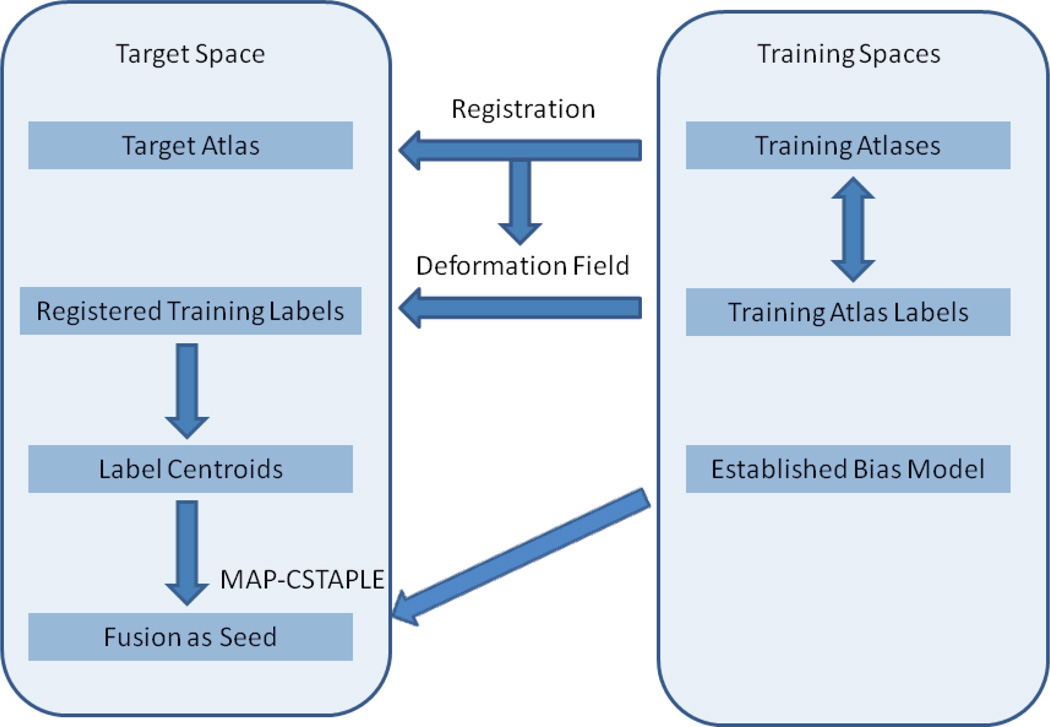
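As an illustration of this definition, the center of mass of a binary label can be computed as the mean coordinate of the voxels where the indicator function equals 1. The following is a minimal NumPy sketch, not the authors' implementation; the function name `center_of_mass` and the toy volume are assumptions made for illustration, and coordinates are expressed in voxel indices.

```python
import numpy as np

def center_of_mass(indicator):
    """Center of mass (in voxel coordinates) of a binary label volume.

    `indicator` is an array that is nonzero where the voxel belongs to the
    label and zero elsewhere (hypothetical input format).
    """
    indicator = np.asarray(indicator, dtype=bool)
    coords = np.argwhere(indicator)  # (N, ndim) coordinates of label voxels
    if coords.shape[0] == 0:
        raise ValueError("label is empty; center of mass is undefined")
    return coords.mean(axis=0)

# Toy 3D label: a 2x2x2 cube occupying voxels 1..2 along each axis.
label = np.zeros((4, 4, 4), dtype=bool)
label[1:3, 1:3, 1:3] = True
com = center_of_mass(label)
```

For a uniformly weighted binary label this is equivalent to dividing the sum of the label coordinates by the number of label voxels.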

Our approach has the following steps, as illustrated using a flowchart in Figure 1.

- Register every training atlas to the subject atlas. Deformable registration is required to properly align different atlases. In our experiments we apply the FNIRT algorithm[7] for the registration. The deformation fields from each atlas Am to At are calculated and stored as {φ1t, φ2t, …, φRt}.

- Deform the labels of all training atlases with the deformation fields, which results in R registered label sets in the subject space {I1t(x), I2t(x), …, IRt(x)}, where

(2)

- For every label j, calculate the centers of mass after the labels are transformed by registration, i.e., the centers of mass of {I1t(x), I2t(x), …, IRt(x)}, by

(3)

- The bias of each center of mass must be learned as prior information to be applied within MAP-CSTAPLE. Both forward and backward registrations are performed on every pair of training atlases, so that we know the location of every center of mass j in its own space Am as well as its location in the space of any other training atlas An. Under the assumption that the biases of all atlases lie in the same standardized space after registration, the bias of center of mass j is computed by the equation below.

(4)

- Now we can apply the MAP-CSTAPLE algorithm as described in our previous report[6]. In contrast to STAPLE, which simultaneously estimates a fusion of discrete labels and rater performance parameters (sensitivity and specificity), CSTAPLE deals with multi-dimensional continuous variables. The labeled point location is written as a vector, which is the decision of rater m for point j. A fusion of these decisions, denoted tj, is to be found for each point j. For each rater m, the performance parameters, i.e., the bias μm and the variance Σm, are estimated simultaneously. It can be proved that, under the same maximum likelihood estimation framework as discrete STAPLE, CSTAPLE yields equal likelihood for an arbitrary rater bias parameter μm, so that the bias is indeterminate[6]. If a rater has a systematic bias, the resulting fusion may not be accurate unless this bias is accounted for. We therefore previously proposed the MAP-CSTAPLE algorithm, which uses prior information to restrict the rater bias and guide the iterations to converge to a proper bias estimate through a maximum a posteriori estimation framework. The proposed EM iteration[9, 10] equations are

- E-Step:

(5)

- M-Step:

(6)

- E-Step:

(7)

- M-Step:

(8)
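The indeterminacy of the rater bias noted in the last step can be illustrated with a short derivation. This is only a sketch: it assumes the Gaussian rater model commonly used for continuous STAPLE, in which a decision equals the true location plus a rater bias plus zero-mean noise. The symbols $d_{mj}$, $\delta$, $\mu_m^{0}$, and the isotropic prior variance $\sigma_m^{2}$ are notation introduced here for illustration and do not reproduce equations (5) to (8).

```latex
\begin{align}
% Assumed rater model: decision = truth + bias + Gaussian noise
d_{mj} &= t_j + \mu_m + \varepsilon_{mj},
  \qquad \varepsilon_{mj} \sim \mathcal{N}(0, \Sigma_m) \\
% Log-likelihood depends on the data only through the residual d - t - mu
\log L(t, \mu, \Sigma) &= -\tfrac{1}{2} \sum_{m} \sum_{j}
  \left[ (d_{mj} - t_j - \mu_m)^{\top} \Sigma_m^{-1} (d_{mj} - t_j - \mu_m)
  + \log \det (2\pi \Sigma_m) \right] \\
% A common shift leaves every residual, and hence the likelihood, unchanged
(t_j, \mu_m) &\mapsto (t_j + \delta,\; \mu_m - \delta)
  \;\Longrightarrow\; d_{mj} - t_j - \mu_m \ \text{is unchanged for all } m, j \\
% A Gaussian prior on the bias penalizes such shifts
\log p(\mu_m) &= -\frac{1}{2\sigma_m^{2}} \lVert \mu_m - \mu_m^{0} \rVert^{2} + \text{const}
\end{align}
```

Under these assumptions the likelihood is flat along any common shift $\delta$, whereas adding the log-prior changes the objective whenever $\delta \neq 0$, which is why a prior on the bias can make the estimate well determined.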

Figure 1.

A flowchart for the proposed seed identification technique.

Note that the new bias updating equation avoids averaging over all the points and instead treats each point differently, with a specific bias, even for the same rater. Using the symbols from the previous steps, for any target atlas t, we substitute the transformed centers of mass from equation (3) as the rater decisions and the biases from equation (4) as the bias prior means. For the bias prior variance, we use the scalar variance of the corresponding error vectors over all targets n. After equations (7) and (8) converge[11], the estimated fusion tj(∞) can be used as the seed for label point j.
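The role of the point-specific bias prior can be sketched in code. The example below is a simplified stand-in and not the EM iteration of equations (5) to (8): it estimates a prior mean and a scalar variance of the error vectors from pairwise registrations of the training atlases, then forms a single bias-corrected, inverse-variance-weighted average. The array layouts, the function names `bias_prior` and `fuse_bias_corrected`, the definition of the scalar variance (per-coordinate variance averaged over coordinates), and the noise-free toy data are all assumptions made for illustration.

```python
import numpy as np

def bias_prior(transformed, truth):
    """Per-rater, per-point bias prior from pairwise training registrations.

    transformed[m, n, j] : center of mass j of atlas m registered to atlas n
    truth[n, j]          : true center of mass j in atlas n
    Returns the prior mean (R, J, D) and a scalar prior variance (R, J).
    """
    transformed = np.asarray(transformed, dtype=float)
    truth = np.asarray(truth, dtype=float)
    num_atlases = transformed.shape[0]
    errors = transformed - truth[None, ...]          # error vectors e[m, n, j]
    off_diagonal = ~np.eye(num_atlases, dtype=bool)  # skip self-registration
    prior_mean = np.empty(transformed.shape[0:1] + transformed.shape[2:])
    prior_var = np.empty(prior_mean.shape[:-1])
    for m in range(num_atlases):
        e = errors[m][off_diagonal[m]]               # (R-1, J, D)
        prior_mean[m] = e.mean(axis=0)
        # Assumed scalar variance: per-coordinate variance over targets n,
        # averaged over the D coordinates.
        prior_var[m] = e.var(axis=0).mean(axis=-1)
    return prior_mean, prior_var

def fuse_bias_corrected(decisions, prior_mean, prior_var, eps=1e-6):
    """Subtract the prior bias, then average with inverse-variance weights."""
    corrected = np.asarray(decisions, dtype=float) - prior_mean
    weights = 1.0 / (np.asarray(prior_var, dtype=float) + eps)
    weights /= weights.sum(axis=0, keepdims=True)    # normalize over raters
    return (weights[..., None] * corrected).sum(axis=0)

# Noise-free toy data: each rater m has a fixed bias at each point j.
rng = np.random.default_rng(0)
R, J, D = 5, 38, 3
truth = rng.uniform(0, 100, size=(R, J, D))
true_bias = rng.normal(0, 2, size=(R, J, D))
transformed = truth[None, ...] + true_bias[:, None, ...]
prior_mean, prior_var = bias_prior(transformed, truth)

target = rng.uniform(0, 100, size=(J, D))            # unknown subject truth
decisions = target[None, ...] + true_bias            # rater decisions d[m, j]
seeds = fuse_bias_corrected(decisions, prior_mean, prior_var)
naive = decisions.mean(axis=0)                       # plain averaging
```

In this idealized setting the bias-corrected average recovers the target points, while plain averaging retains the mean rater bias at each point. With real registrations the errors are noisy, which is the situation the iterative MAP estimation is designed for.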

3. RESULTS

A dataset of 148 complete, real 3D brain atlases was evaluated in the experiment. On each atlas, 38 labels that together segment the entire brain volume were identified and validated. In correspondence with our model, the total number of points J was therefore 38 for any target atlas. First, 147 of the atlases were used as the training dataset to obtain the bias prior for the raters, and the one remaining atlas was evaluated as the subject. We explored fusion with the number of raters ranging from 1 to 147. For any fixed number of raters, the raters were drawn randomly from the training dataset. Every atlas was in turn regarded as the subject atlas, with the rest used for training. The entire setup was repeated in 50 Monte Carlo trials with random raters and a random subject atlas. We then computed the mean squared error (MSE) of the fusion from the truth for every subject atlas and obtained an average MSE of 1.9613±1.0565 voxel². For comparison, we repeated the evaluation on the same data with the fusion technique replaced by simple averaging and by MAP-CSTAPLE with a zero prior, and obtained average MSEs of 2.2881±1.0230 voxel² and 2.3126±1.1153 voxel², respectively. The MSE of one subject atlas is shown in Figure 2(d).
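The evaluation quantities in this experiment can be sketched as follows. This is a hedged illustration rather than the authors' evaluation code: it assumes the MSE is the squared Euclidean distance between fused and true points averaged over the J points, and that raters are drawn without replacement. The function names `fusion_mse` and `draw_raters` are hypothetical.

```python
import numpy as np

def fusion_mse(fused, truth):
    """Mean over points of the squared Euclidean distance (in voxel^2)."""
    diff = np.asarray(fused, dtype=float) - np.asarray(truth, dtype=float)
    return float(np.mean(np.sum(diff ** 2, axis=-1)))

def draw_raters(num_training, num_raters, rng):
    """Randomly pick distinct training atlases to act as raters."""
    return rng.choice(num_training, size=num_raters, replace=False)

rng = np.random.default_rng(0)
truth = np.zeros((38, 3))                  # J = 38 points in 3D
fused = truth + np.array([1.0, 1.0, 0.0])  # every point is off by (1, 1, 0)
mse = fusion_mse(fused, truth)             # squared distance is 2 at every point
raters = draw_raters(147, 10, rng)         # 10 of the 147 training atlases
```

Repeating the draw for each rater count and each Monte Carlo trial, and averaging the resulting MSE values, gives curves of the kind summarized in Figure 2(d).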

Figure 2.

(a) The bias model of one training atlas that has a symmetrical spatial pattern. (b) The MSE of every fusion point from the truth, which demonstrates MAP-CSTAPLE has an ability to reduce the fusion errors with the bias prior model. (c) The number of fusion points close to the truth. (d) The MSE of the fusion of one subject with respect to rater numbers. Training data has 147 atlases.

We also used 50 of the atlases as training data and the remaining 98 atlases as testing data. Fifty Monte Carlo trials were performed with the same setup as in the previous experiment. For fixed training and testing datasets, the fusion on every subject atlas was evaluated with both MAP-CSTAPLE and simple averaging. The average MSE was 1.6734±1.3962 voxel² for MAP-CSTAPLE and 2.0130±1.4050 voxel² for averaging. Figure 2(c) shows the number of fusion points as a function of distance from the truth. In general, for a given distance from the truth (a ball of that radius centered at the truth), MAP-CSTAPLE is more likely to capture points than simple averaging.
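The count plotted in Figure 2(c) can be illustrated with a short sketch that counts, for each radius, how many fused points fall inside a ball of that radius centered at the corresponding true point. The function name `count_within_radius` and the toy points are assumptions made for illustration.

```python
import numpy as np

def count_within_radius(fused, truth, radii):
    """Number of fused points within each radius of their true location."""
    fused = np.asarray(fused, dtype=float)
    truth = np.asarray(truth, dtype=float)
    dist = np.linalg.norm(fused - truth, axis=-1)  # one distance per point
    return np.array([int((dist <= r).sum()) for r in radii])

# Three toy points at distances 0.5, 1.5, and 2.5 voxels from the truth.
truth = np.zeros((3, 3))
fused = np.array([[0.5, 0.0, 0.0],
                  [0.0, 1.5, 0.0],
                  [0.0, 0.0, 2.5]])
counts = count_within_radius(fused, truth, radii=[1.0, 2.0, 3.0])
```

Computing these counts for two fusion methods on the same subjects allows a direct comparison of how many seeds each method places near the truth at every radius.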

4. CONCLUSION AND DISCUSSION

In this work we described a new approach to automatically determine seed locations for segmentation algorithms that require initial seeds. The approach is based on the STAPLE framework for statistical fusion. We designed our model on the basis of a previously proposed method for continuous vector fusion problems, MAP-CSTAPLE, and modified its equations and assumptions to fit the real brain atlas data we evaluated, i.e., we augmented the algorithm to handle the case of spatially varying bias. Experimental results demonstrated improved performance compared to simple averaging. With this approach, semi-automatic segmentation algorithms can become fully automatic, which provides an alternative multi-atlas approach to image labeling over conventional label fusion approaches.

By directly modifying the bias parameters to vary spatially across label points, we may have introduced a problem of data insufficiency. In particular, since each rater performs only once at each label point, it is difficult to reliably estimate a spatially varying bias for the rater at that point. In the future, therefore, we will develop and investigate a model that incorporates measurement repetitions.

ACKNOWLEDGEMENTS

This project was supported by NIH/NINDS 1R01NS056307 and NIH/NINDS 1R21NS064534.

REFERENCES

- 1. Grady L. Random walks for image segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2006;28(11):1768–1783. doi: 10.1109/TPAMI.2006.233.

- 2. Freedman D, Zhang T. Interactive graph cut based segmentation with shape priors. Computer Vision and Pattern Recognition 2005. 2005;1:755–762.

- 3. Warfield SK, Zou KH, Wells WM. Simultaneous truth and performance level estimation (STAPLE): an algorithm for the validation of image segmentation. IEEE Trans Med Imaging. 2004;23(7):903–921. doi: 10.1109/TMI.2004.828354.

- 4. Warfield S, Zou K, Wells W. Validation of image segmentation by estimating rater bias and variance. Medical Image Computing and Computer-Assisted Intervention. 2006;4190:839–847. doi: 10.1007/11866763_103.

- 5. Commowick O, Warfield SK. A Continuous STAPLE for Scalar, Vector, and Tensor Images: An Application to DTI Analysis. IEEE Trans Med Imaging. 2009;28(6):838–846. doi: 10.1109/TMI.2008.2010438.

- 6. Xing F, Soleimanifard S, Prince JL, Landman BA. Statistical Fusion of Continuous Labels: Identification of Cardiac Landmarks. Proceedings of SPIE. 2011;7962:796206. doi: 10.1117/12.877884.

- 7. Woolrich MW, Jbabdi S, Patenaude B, Chappell M, Makni S, Behrens T, Beckmann C, Jenkinson M, Smith SM. Bayesian analysis of neuroimaging data in FSL. NeuroImage. 2009;45:S173–S186. doi: 10.1016/j.neuroimage.2008.10.055.

- 8. Gauvain JL, Lee CH. Maximum a posteriori estimation for multivariate Gaussian mixture observations of Markov chains. IEEE Transactions on Speech and Audio Processing. 1994;2:291–298.

- 9. McLachlan GJ, Krishnan T. The EM algorithm and extensions. New York: John Wiley and Sons Ltd; 1997.

- 10. Rohlfing T, Russakoff DB, Maurer CR. Expectation maximization strategies for multi-atlas multi-label segmentation. Proc. Int. Conf. Information Processing in Medical Imaging; 2003. pp. 210–221.

- 11. Wu CFJ. On the convergence properties of the EM algorithm. The Annals of Statistics. 1983;11(1):95–103.