Abstract

Background

Evidence is accumulating to suggest that clinical guidelines should be modified for patients with comorbidities, yet there is no quantitative and objective approach that considers benefits together with risks.

Methods

We outline a framework using a payoff time, which we define as the minimum elapsed time until the cumulative incremental benefits of a guideline exceed its cumulative incremental harms. If the payoff time of a guideline exceeds a patient’s comorbidity-adjusted life expectancy, then the guideline is unlikely to offer a benefit and should be modified. We illustrate the framework by applying it to colorectal cancer screening guidelines for 50-year-old men with human immunodeficiency virus (HIV) infection and 60-year-old women with congestive heart failure (CHF).

Results

We estimated that colorectal cancer screening payoff times for 50-year-old men with HIV would range from 1.9 to 5.0 years and that colorectal cancer screening payoff times for 60-year-old women with CHF would range from 0.7 to 2.9 years. Because the payoff times for 50-year-old men with HIV were shorter than their life expectancies (12.5–24.0 years), colorectal cancer screening may be beneficial for these patients. In contrast, because payoff times for 60-year-old women with CHF were sometimes longer than their life expectancies (0.6 to >5 years), colorectal cancer screening is likely to be harmful for some of these patients.

Conclusion

Use of a payoff time calculation may be a feasible framework to tailor clinical guidelines to the comorbidity profiles of individual patients.

Evidence is accumulating to suggest that some clinical guidelines should be modified for patients with severe comorbidities.1–5 Patients with comorbid illness have higher competing risks of death and therefore may be unlikely to survive long enough to benefit from disease-specific guidelines that have delayed benefits but immediate harms.2,3 For example, it seems unwise to recommend colorectal cancer screening for a woman with severe congestive heart failure (CHF) because she may die before the benefits from colonoscopy outweigh the harms. Data supporting clinical guidelines usually exclude patients with substantial comorbidity burdens and therefore do not suggest how guidelines should be individualized for patients with comorbidities that substantially affect life expectancy.1–3,5

Although providers have the option of using clinical judgment to withhold interventions when harm is likely to exceed benefit, a substantial body of evidence suggests that providers do not make this assessment accurately. In a study of nearly 600 000 veterans in care, 33% of very elderly patients (aged ≥ 85 years) were screened for prostate cancer, even though few of them were likely to survive until the benefits of screening could exceed the harms.6 Also, those who had multiple chronic illnesses were screened as often as those who were well. Among patients in whom early-stage colorectal cancer was detected by screening, many had 3 or more chronic conditions and did not survive long enough to benefit from the early diagnosis.7 The advent of incentives and reinforcement mechanisms (eg, pay for performance, quality “benchmarks,” and rudimentary clinical reminder systems) will likely reinforce inclinations to apply guidelines rigidly.4,8

Approaches have been advocated for tailoring guidelines based on the scope and severity of comorbidities.1–5 However, these approaches may be difficult to apply at the point of care because they do not weigh benefits and harms quantitatively and therefore may not offer concise and transparent support for clinical decision making. We investigated whether we could develop a framework for individualizing guidelines that was simple enough to be used within a clinical decision aid at the point of care, yet complex enough to weigh harms and benefits quantitatively. To illustrate our framework, we focus on 1 particular guideline (colorectal cancer screening) and 2 common comorbidities (human immunodeficiency virus [HIV] infection and CHF); however, our approach can be applied broadly to other guidelines and other chronic diseases.

METHODS

Our framework is based on the observation that potential benefits from guidelines often occur subsequent to potential harms. If a particular patient is unlikely to survive until the cumulative benefits from a guideline exceed its cumulative harms, the guideline is unlikely to help that patient.1–3 We define benefits as any outcomes that lessen morbidity and/or mortality, and these outcomes are generally the desired consequences of a guideline (eg, risk reduction of adverse outcomes such as colorectal cancer incidence). We define harms as any outcomes that increase morbidity and/or mortality, and these outcomes are generally the undesired consequences of a guideline (eg, side effects or complications such as perforation of the colon).

We define payoff time as the minimum time until the cumulative incremental benefits that are attributable to a guideline exceed its cumulative incremental harms. When the payoff time of a guideline is longer than a patient’s estimated life expectancy, then that guideline is unlikely to benefit that patient. The concept of a payoff time is applicable to any clinical guideline for which the expected harms occur over a shorter time horizon than the expected benefits. For example, using colonoscopy to screen for colorectal cancer is likely to have a payoff time because its harms (eg, chance of colon perforation or discomfort) occur quickly, at the time of the procedure, whereas its benefits (eg, decreased mortality risk from colon cancer) occur later, over a longer period of time. In contrast, other guidelines (eg, lipid-lowering treatment) may not have payoff times because their harms (adverse effects and inconvenience of medications) occur more contemporaneously with their benefits (decreased risk of cardiovascular events) or because their benefits never exceed their harms. It is rarely possible to identify every possible benefit and harm; therefore, only those benefits and harms that are of particular importance are likely to be considered in payoff time calculations. For payoff times to be clinically meaningful, benefits and harms should encompass events of comparable clinical impact, much like composite end points in clinical trials.
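Stated in symbols (the notation here is introduced for illustration and is not taken from the original analysis), let $B(t)$ and $H(t)$ be a guideline's cumulative incremental benefit and cumulative incremental harm up to time $t$, measured on the same scale (eg, absolute risk of death), and let $L$ be a patient's comorbidity-adjusted life expectancy. The payoff time and the decision rule described above can then be written as:

$$
T_{\text{payoff}} = \inf\{\, t \ge 0 : B(t) > H(t) \,\}, \qquad \text{guideline unlikely to benefit the patient if } L < T_{\text{payoff}}.
$$

If $B(t) \le H(t)$ for every $t$, the set is empty and no payoff time exists, which corresponds to the case above in which a guideline's benefits never exceed its harms.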

The comorbid populations that we chose to illustrate our approach were 50-year-old men with chronic HIV infection and 60-year-old women with CHF, and the clinical guideline that we chose to evaluate was colorectal cancer screening. Colorectal cancer screening may take a variety of forms. For this analysis, we decided to evaluate screening colonoscopy once every 10 years. We calculated payoff times based on 2 specifications of benefits and harms: the first includes only effects on mortality (mortality payoff time), and the second includes only effects on serious clinical events (adverse event payoff time).

CALCULATING THE PAYOFF TIME

We calculated the payoff time by determining when the cumulative incremental benefit of the guideline first becomes larger than the cumulative incremental harm. Correspondingly, the mortality payoff time for colorectal cancer screening occurs when the cumulative mortality decrease from fatal colorectal cancers first becomes larger than the cumulative mortality increase from fatal complications (eg, fatal perforation of the colon). Similarly, the adverse event payoff time for colorectal cancer screening occurs when the cumulative risk reduction of colorectal cancer incidence first becomes larger than the cumulative risk increase from serious complications (eg, perforation of the colon).
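The search for the payoff time described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' code: it assumes that cumulative incremental benefit and harm have already been tabulated on a shared, increasingly sorted time grid in the same units (eg, absolute risk of death for the mortality payoff time, or absolute risk of serious events for the adverse event payoff time). The function name `payoff_time` is hypothetical.

```python
from typing import Optional, Sequence


def payoff_time(times: Sequence[float],
                cum_benefit: Sequence[float],
                cum_harm: Sequence[float]) -> Optional[float]:
    """Return the first grid time at which cumulative incremental benefit
    exceeds cumulative incremental harm, or None if it never does.

    The sequences are aligned: cum_benefit[i] and cum_harm[i] are the
    cumulative incremental benefit and harm (same units) accrued by times[i].
    times is assumed to be sorted in increasing order.
    """
    if not (len(times) == len(cum_benefit) == len(cum_harm)):
        raise ValueError("times, cum_benefit and cum_harm must be the same length")
    for t, benefit, harm in zip(times, cum_benefit, cum_harm):
        if benefit > harm:  # strict comparison: a tie is not yet a payoff
            return t
    # No crossing within the supplied horizon: no payoff time on this grid.
    return None
```

For example, with purely illustrative values, `payoff_time([0, 1, 2, 3], [0.0, 1e-4, 2e-4, 3e-4], [1.5e-4] * 4)` returns `2`, because an up-front harm of 0.015% is first exceeded by the accumulating benefit at year 2. The resolution of the answer is limited by the spacing of the time grid.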

In this report, we estimate payoff times based on mortality and significant adverse events; however, it should be noted that payoff times may also be formulated using alternative specifications for outcomes (eg, considering decrements in quality as well as quantity of life), in which case they may take into consideration other consequences, including the discomfort associated with screening.

DATA SOURCES

Estimating the Payoff Time

We estimated age- and sex-specific colorectal cancer incidence and death rates from SEER (Surveillance, Epidemiology, and End Results) data (2000–2003).9 Because we sought to design a framework that could be linked to existing clinical guidelines, we based our analysis on the same data sources that were used by expert panels to formulate guidelines. In particular, we estimated the risk reduction for colorectal cancer based on the data sources used by the US Preventive Services Task Force to construct colorectal cancer screening guidelines.10 Because these studies11–17 did not agree on the magnitude of risk reduction (range, 49%–90%), we used an intermediate estimate (70%) for this analysis.

Similarly, we estimated the likelihood of colon perforation and the likelihood of death from colon perforation based on the data sources used by the US Preventive Services Task Force.11–17 Because we were applying these data to populations with significant comorbidities, we selected risk estimates at or near the upper end of the ranges reported in these studies (risk of colon perforation per colonoscopy, 0.20% [range, 0.07%–0.20%]; risk of death from colon perforation per colonoscopy, 0.020% [range, 0.005%–0.024%]).
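The inputs above can be combined into a closed-form approximation under simplifying assumptions that the article does not make. Because screening colonoscopy is repeated only once every 10 years, any payoff time shorter than 10 years involves the harm of a single procedure, which occurs at time 0. If, in addition, the outcome that screening prevents is assumed to occur at a constant annual rate (the article instead used age- and sex-specific SEER rates), cumulative benefit grows linearly and the payoff time is the up-front harm divided by the risk reduction times that annual rate. The constants below come from the text; the function name and any annual rate passed to it are hypothetical, so this sketch is not expected to reproduce the payoff times reported in Figures 1 and 2.

```python
# Inputs taken from the text above.
RISK_REDUCTION = 0.70          # intermediate estimate of risk reduction from screening
P_PERFORATION = 0.0020         # risk of colon perforation per colonoscopy (0.20%)
P_DEATH_PERFORATION = 0.00020  # risk of death from perforation per colonoscopy (0.020%)


def constant_rate_payoff_time(upfront_harm: float,
                              annual_event_rate: float,
                              risk_reduction: float = RISK_REDUCTION) -> float:
    """Payoff time in years, ASSUMING all harm occurs at t = 0 and the benefit
    accrues linearly as risk_reduction * annual_event_rate * t.

    Solves risk_reduction * annual_event_rate * t = upfront_harm for t.
    """
    if annual_event_rate <= 0 or risk_reduction <= 0:
        raise ValueError("benefit must accrue at a positive rate")
    return upfront_harm / (risk_reduction * annual_event_rate)
```

For example, with a hypothetical annual colorectal cancer death rate of 20 per 100 000 (0.0002), `constant_rate_payoff_time(P_DEATH_PERFORATION, 0.0002)` gives about 1.43 years. The same function applied to `P_PERFORATION` and an annual incidence rate gives an adverse event analogue.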

Estimating Life Expectancy

Estimating comorbidity-specific life expectancy may be accomplished by a multitude of methods, including natural history models of particular diseases, estimations by the “declining exponential approximation of life expectancy” (DEALE),18 or approximation based on age and quartile of relative health.3 For the patient groups that are the focus of this report, we estimated the life expectancy for patients with HIV based on the mechanistic model of HIV progression developed by Braithwaite et al,19–21 which predicts survival and time to failure of antiretroviral regimens based on patient characteristics including age, CD4 lymphocyte count, and viral load; and we made inferences regarding the life expectancy for patients with CHF based on the Seattle Heart Failure Model,22 which predicts survival over a 3-year period based on a wide spectrum of patient and treatment characteristics, including age, sex, New York Heart Association class, ejection fraction, blood pressure, and medications.

We chose these particular prediction models because they have been extensively validated. The HIV model’s internal validity has been demonstrated by the agreement of predicted and observed times to treatment failure and survival in the 3545-patient sample on which it was calibrated. Its external validity has been demonstrated by the agreement of predicted and observed mortality rates in a sample of 12 574 patients who were almost entirely distinct from the derivation cohort,19 as well as by its ability to predict the rate at which highly active antiretroviral therapy resistance mutations accumulate20 and the U-shaped relationship between antiretroviral adherence and resistance accumulation.21 Patients with HIV were stratified by CD4 lymphocyte count and viral load. The Seattle Heart Failure Model’s validity has been demonstrated by its accurate prediction of survival in 5 separate cohorts that were distinct from the derivation cohort, totaling 9942 patients with heart failure and 17 307 person-years of follow-up.22 Patients with heart failure were stratified by their Seattle Heart Failure Model score, ranging from −1 (the most favorable score) to +4 (the worst score). (The model is available online free of charge at www.SeattleHeartFailureModel.org.)

Interpreting the payoff time requires estimating life expectancy, whereas some predictive models may instead estimate median survival or the probability of survival over a designated time horizon (eg, the Seattle Heart Failure Model). In these circumstances, the DEALE can be used to estimate life expectancy from alternate survival metrics.18 Accordingly, we used the DEALE to estimate life expectancy from the Seattle Heart Failure Model’s survival predictions for those stratifications with sufficiently high predicted mortality (> 10% per year).
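The conversion described above can be sketched as follows. The DEALE approximates survival as a declining exponential, S(t) = exp(−μt), for which life expectancy is 1/μ; a predicted survival probability over a fixed horizon therefore yields μ = −ln S(t)/t. This sketch shows only that step and assumes the predicted survival already reflects all-cause mortality; the full DEALE described by Beck and colleagues can also combine a baseline age-, sex-, and race-specific mortality rate with disease-specific excess mortality. The function name is hypothetical.

```python
import math


def deale_life_expectancy(survival_prob: float, horizon_years: float) -> float:
    """Declining exponential approximation of life expectancy (DEALE).

    Assumes S(t) = exp(-mu * t) with a constant mortality rate mu, so
    mu = -ln(S(horizon)) / horizon and life expectancy = 1 / mu.
    """
    if not 0.0 < survival_prob < 1.0:
        raise ValueError("survival_prob must be strictly between 0 and 1")
    if horizon_years <= 0:
        raise ValueError("horizon_years must be positive")
    mu = -math.log(survival_prob) / horizon_years  # constant annual mortality rate
    return 1.0 / mu
```

For example, a predicted 1-year survival of 50% implies a rate of ln 2 (about 0.69 per year) and a life expectancy of about 1.44 years. The constant-rate assumption is more tenable when disease-specific mortality dominates, which is consistent with restricting its use to strata with high predicted mortality.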

RESULTS

We first estimated the payoff times for colorectal cancer screening for our 2 target groups (50-year-old men with HIV and 60-year-old women with CHF). We then estimated the life expectancy for each group, stratifying these estimates based on important prognostic indicators. By comparing their life expectancies with our estimates of the payoff times, we were able to determine whether colorectal cancer screening should be advised for these groups.

ESTIMATING PAYOFF TIMES

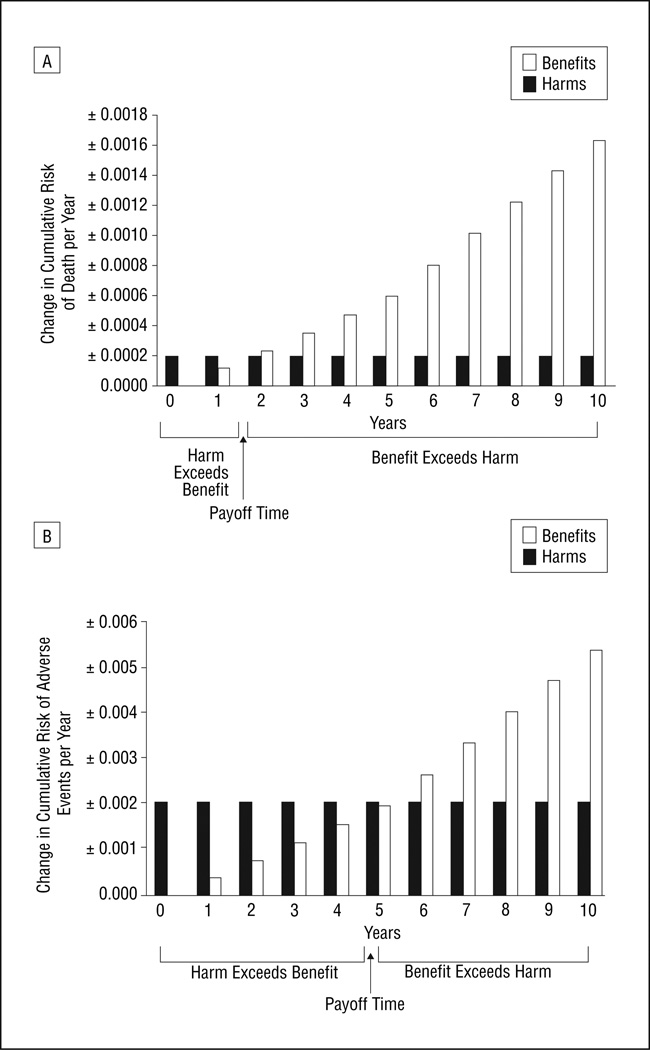

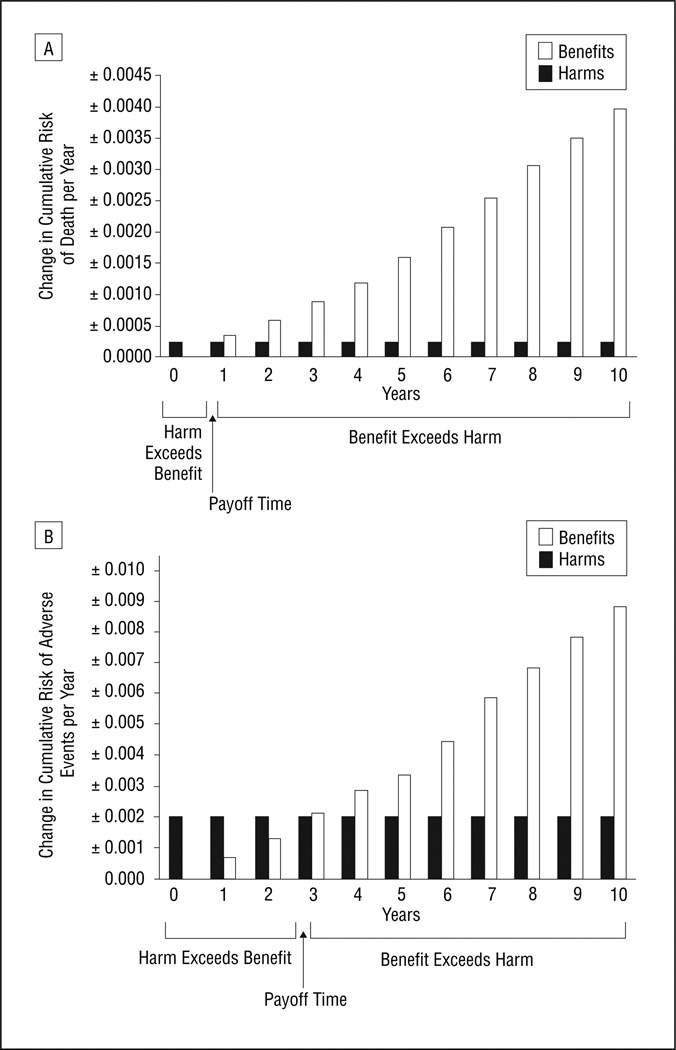

For 50-year-old men, the mortality payoff time was 1.9 years; at that point, the mortality decrease from the benefits of colorectal cancer screening began to outweigh the mortality increase from its harms (Figure 1A). The adverse event payoff time was 5.0 years; at that point, the reduction in adverse events from the benefits of screening began to outweigh the increase in adverse events from its harms (Figure 1B). Because 60-year-old women have a higher colon cancer risk than 50-year-old men, screening offers them greater potential benefits and therefore earlier payoff times. For 60-year-old women, the mortality payoff time was 0.7 years (Figure 2A), and the adverse event payoff time was 2.9 years (Figure 2B).

Figure 1.

Payoff time for colorectal cancer screening for 50-year-old men, considering (A) mortality and (B) adverse events. The payoff time is the earliest time when the likelihood of benefit (decrease in cumulative risk of adverse outcomes mitigated by screening) exceeds the likelihood of harm (increase in cumulative risk of adverse outcomes induced by screening, ie, its complications and side effects). The payoff time, which occurs when the height of the open bars first exceeds the height of the solid bars, is approximately 1.9 years for mortality and 5.0 years for adverse events.

Figure 2.

Payoff times for colorectal cancer screening for 60-year-old women, considering (A) mortality and (B) adverse events. The payoff time is the earliest time when the likelihood of benefit (decrease in cumulative risk of adverse outcomes mitigated by screening) exceeds the likelihood of harm (increase in cumulative risk of adverse outcomes induced by screening, ie, its complications and side effects). The payoff time, which occurs when the height of the open bars first exceeds the height of the solid bars, is approximately 0.7 years for mortality and 2.9 years for adverse events.

ESTIMATING LIFE EXPECTANCY

Based on the survival model of Braithwaite and colleagues, 50-year-old men with HIV may be grouped into 9 separate risk strata according to pretreatment viral load and CD4 lymphocyte count (Table 1). Individuals in these strata have life expectancies ranging from 12.5 years (highest viral load, lowest CD4 lymphocyte count) to 24.0 years (lowest viral load, highest CD4 lymphocyte count).

Table 1.

Comparison of Payoff Times for Colorectal Cancer Screening With Life Expectancy for 50-Year-Old Men With Human Immunodeficiency Virus<sup>a</sup>

| Variable | Duration, y |
|---|---|
| Payoff time | |
| Considering mortality | 1.9 |
| Considering adverse events | 5.0 |
| Life expectancy | |
| CD4 lymphocyte count, 800/µL; RNA, 10 000 copies/mL | 24.0 |
| CD4 lymphocyte count, 800/µL; RNA, 100 000 copies/mL | 21.1 |
| CD4 lymphocyte count, 800/µL; RNA, 1 000 000 copies/mL | 16.4 |
| CD4 lymphocyte count, 500/µL; RNA, 10 000 copies/mL | 22.6 |
| CD4 lymphocyte count, 500/µL; RNA, 100 000 copies/mL | 19.5 |
| CD4 lymphocyte count, 500/µL; RNA, 1 000 000 copies/mL | 14.8 |
| CD4 lymphocyte count, 200/µL; RNA, 10 000 copies/mL | 19.2 |
| CD4 lymphocyte count, 200/µL; RNA, 100 000 copies/mL | 16.6 |
| CD4 lymphocyte count, 200/µL; RNA, 1 000 000 copies/mL | 12.5 |

<sup>a</sup>All strata have longer life expectancies than either payoff time; therefore, screening may be beneficial for this group.

Based on the Seattle Heart Failure Model, patients with CHF may be grouped into 6 separate risk strata (Table 2). The 3 most favorable risk strata (−1, 0, and 1) have estimated life expectancies of greater than 5 years, whereas the 3 least favorable strata (2, 3, and 4) have estimated life expectancies of 4.0, 1.4, and 0.6 years, respectively.

Table 2.

Comparison of Payoff Times for Colorectal Cancer Screening With Life Expectancy for 60-Year-Old Women With Congestive Heart Failure<sup>a</sup>

| Variable | Duration, y |
|---|---|
| Payoff time | |
| Considering mortality | 0.7 |
| Considering adverse events | 2.9 |
| Median survival, by Seattle Heart Failure Model score | |
| −1 | >5 |
| 0 | >5 |
| 1 | >5 |
| 2 | 4.0 |
| 3 | 1.4 |
| 4 | 0.6 |

<sup>a</sup>Some strata (scores 3 and 4) have shorter life expectancies than one or both payoff times; therefore, colorectal cancer screening may not be advised for these groups.

COMPARING PAYOFF TIMES WITH LIFE EXPECTANCY

The life expectancies of 50-year-old men with HIV (12.5–24.0 years) exceed the payoff times for colorectal cancer screening, regardless of risk stratum and regardless of whether the payoff time reflected mortality (1.9 years) or adverse events (5.0 years). Correspondingly, colorectal cancer screening may be favorable for 50-year-old men with HIV. The life expectancies of 60-year-old women with CHF (0.6 to >5 years) do not always exceed the payoff times for colorectal cancer screening; therefore, colorectal cancer screening is likely to be unfavorable for some of these patients. In particular, women in risk strata 3 and 4 have life expectancies (1.4 and 0.6 years, respectively) that are shorter than one or both payoff times (0.7 years for mortality and 2.9 years for adverse events): stratum 3 falls short of the adverse event payoff time, and stratum 4 falls short of both.
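The comparison above is mechanical enough to sketch in code, in the spirit of a point-of-care decision aid. The values are those of Table 2; the function names are hypothetical, and because Table 2 reports only ">5" years for scores −1, 0, and 1, the sketch uses 5.0 as a lower bound, which is sufficient here because both payoff times are well under 5 years. As with the framework itself, the output is decision support, not a clinical dictum.

```python
from typing import Dict, List

# Payoff times (years) for 60-year-old women, from Table 2.
PAYOFF_TIMES: Dict[str, float] = {"mortality": 0.7, "adverse events": 2.9}

# Life expectancy (years) by Seattle Heart Failure Model score, from Table 2.
# ">5" is encoded as its lower bound, 5.0 (enough, since both payoffs are < 5).
LIFE_EXPECTANCY: Dict[int, float] = {-1: 5.0, 0: 5.0, 1: 5.0, 2: 4.0, 3: 1.4, 4: 0.6}


def payoffs_not_reached(life_expectancy: float,
                        payoff_times: Dict[str, float]) -> List[str]:
    """Names of the payoff times that are longer than the life expectancy."""
    return [name for name, t in payoff_times.items() if t > life_expectancy]


def screen_strata(life_expectancy_by_stratum: Dict[int, float],
                  payoff_times: Dict[str, float]) -> Dict[int, List[str]]:
    """Map each risk stratum to the payoff times its life expectancy falls short of."""
    return {stratum: payoffs_not_reached(le, payoff_times)
            for stratum, le in life_expectancy_by_stratum.items()}
```

Calling `screen_strata(LIFE_EXPECTANCY, PAYOFF_TIMES)` flags only score 3 (adverse events) and score 4 (both payoff times), matching the comparison in the text; every other stratum maps to an empty list. Applied to the men with HIV, `payoffs_not_reached(12.5, {"mortality": 1.9, "adverse events": 5.0})` is empty even for the least favorable stratum.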

COMMENT

We developed a framework for individualizing guidelines based on comorbidity. This framework is designed “from the ground up” to be compatible with clinical decision aids because it quantitatively weighs guidelines’ benefits and harms and therefore can offer clear guidance for decision making. It is substantially different from other published approaches for individualizing guidelines, which require qualitative valuations that are often complex and are less likely to be applicable at the point of care.1–5 The recent development of comorbidity-based prognostic models that are simple6 or have user-friendly Web-based interfaces22 has greatly facilitated the development of this framework. Although some clinicians may wonder why any decision rule is necessary when it is theoretically possible to recalculate risks and benefits each time a guideline is applied, it would be prohibitively difficult (if not impossible) to enumerate all possible combinations of guidelines and comorbidities and to perform a distinct, evidence-based analysis for each combination.

Our approach estimates the time until a guideline’s incremental benefits are likely to exceed its incremental harms and then asks whether this time is longer than a patient’s comorbidity-adjusted life expectancy. In our illustration of the payoff time framework, we found that individuals with HIV are likely to benefit from screening because of the relatively long life expectancy conferred by current therapies. In contrast, individuals with severe CHF may be unlikely to benefit from colorectal cancer screening because of their relatively short life expectancies.

An important strength of our framework is its potential to affect health policy. Because the payoff time provides an objective method of inferring when a guideline may confer harm rather than benefit, it may be used to delineate circumstances in which guideline compliance should not count toward quality benchmarks or toward pay for performance. For example, we would argue that if a clinician elects not to screen for colorectal cancer in a 60-year-old woman with risk stratum 3 or 4 CHF, that decision should not harm the clinician’s quality “report card” or “performance” portfolio.

The concept of a payoff time is likely to be intuitive, as expert panels sometimes specify a minimum life expectancy that is a prerequisite for a guideline’s expected benefits to exceed its expected harms. For example, the US Preventive Services Task Force advocates waiving colorectal cancer screening when a patient’s estimated life expectancy is less than 5 years. These recommendations can be thought of as payoff times that are estimated by expert opinion rather than the more data-based approach of our framework.

An important limitation of our work is that we did not use harm and benefit data specific to the comorbidities under consideration. For example, CHF is likely to increase the harm from colorectal cancer screening by increasing susceptibility to complications, and our payoff time calculations did not account for this likely effect. However, reliance on data that are not comorbidity specific is not an intrinsic limitation of the payoff time approach but rather a limitation of the available data sources. Once data on the interaction between CHF and harm from colonoscopy become available, they may be incorporated into the payoff time estimation. Furthermore, even when comorbidity-specific data are unavailable, payoff time inferences may still be valid if ignoring the comorbidity is likely to underestimate the payoff time. CHF is one such case: it is likely to increase the chance of harm (eg, a higher complication rate) more than the chance of benefit, so payoff times calculated without CHF-specific data are likely to be too short. We may therefore infer that patients with risk stratum 3 or 4 CHF should not undergo colorectal cancer screening, because their life expectancies are already shorter than one or both of these underestimated payoff times and would therefore also be shorter than the true payoff times.

Our framework has other limitations. It does not consider patient preferences, which are an important consideration in any clinical decision. Indeed, the payoff time should not be interpreted as a clinical dictum but rather as a clinical decision support tool, one among many information sources in a shared decision between patient and clinician. Another limitation of our approach is that it does not consider costs. However, it would be straightforward to consider costs for any particular willingness-to-pay threshold (eg, $100 000 per life-year saved) by determining when a guideline first confers enough life expectancy benefit to justify its incremental cost at that threshold. As more stringent (ie, lower) willingness-to-pay thresholds are specified, the associated payoff times would lengthen.
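One way to read the cost extension described above is sketched below; it is an illustration under stated assumptions, not an analysis the authors performed. At a willingness-to-pay threshold of λ dollars per life-year, a guideline with incremental cost C must confer at least C/λ life-years, so the cost-based payoff time is the first time the cumulative life-years gained reach that amount. The sketch assumes the incremental cost is a single fixed amount and that the cumulative gain curve is tabulated on a sorted time grid; the function name and all numbers in the example are hypothetical.

```python
from typing import Optional, Sequence


def cost_payoff_time(times: Sequence[float],
                     cum_life_years_gained: Sequence[float],
                     incremental_cost: float,
                     wtp_per_life_year: float) -> Optional[float]:
    """First time at which cumulative life-years gained are worth at least the
    (ASSUMED fixed) incremental cost at the given willingness-to-pay threshold.

    The required gain is incremental_cost / wtp_per_life_year life-years, so a
    lower (more stringent) threshold demands a larger gain and, for a
    nondecreasing gain curve, a later payoff time.
    """
    if wtp_per_life_year <= 0:
        raise ValueError("wtp_per_life_year must be positive")
    required_gain = incremental_cost / wtp_per_life_year
    for t, gained in zip(times, cum_life_years_gained):
        if gained >= required_gain:
            return t
    return None  # the required gain is never reached within the horizon
```

With hypothetical inputs of a $1000 incremental cost and cumulative gains of 0, 0.004, 0.008, and 0.012 life-years at years 0 to 3, a $100 000 threshold gives a payoff time of 3 years, whereas a $50 000 threshold (requiring 0.02 life-years) gives no payoff within the horizon, illustrating how stricter thresholds lengthen payoff times.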

In conclusion, we present a practical framework for tailoring clinical guidelines to comorbid populations that may be applied at the point of care. This method has the potential to reduce morbidity and mortality, while decreasing use of resources, and therefore has implications for health policy and clinical care.

Acknowledgments

Funding/Support: This study was supported by grant K23 AA014483-04 from the National Institute on Alcohol Abuse and Alcoholism, National Institutes of Health, Bethesda, Maryland.

Footnotes

Author Contributions: Study concept and design: Braithwaite, Roberts, and Justice. Acquisition of data: Braithwaite and Justice. Analysis and interpretation of data: Braithwaite, Concato, Chang, Roberts, and Justice. Drafting of the manuscript: Braithwaite, Chang, Roberts, and Justice. Critical revision of the manuscript for important intellectual content: Braithwaite, Concato, Chang, Roberts, and Justice. Statistical analysis: Chang, Roberts, and Justice. Obtained funding: Braithwaite and Justice. Administrative, technical, and material support: Justice. Study supervision: Roberts and Justice.

Financial Disclosure: None reported.

REFERENCES

1. Durso SC. Using clinical guidelines designed for older adults with diabetes mellitus and complex health status. JAMA. 2006;295(16):1935–1940. doi: 10.1001/jama.295.16.1935.

2. Holmes HM, Hayley DC, Alexander GC, Sachs GA. Reconsidering medication appropriateness for patients late in life. Arch Intern Med. 2006;166(6):605–609. doi: 10.1001/archinte.166.6.605.

3. Walter LC, Covinsky KE. Cancer screening in elderly patients: a framework for individualized decision making. JAMA. 2001;285(21):2750–2756. doi: 10.1001/jama.285.21.2750.

4. Boyd CM, Darer J, Boult C, Fried LP, Boult L, Wu AW. Clinical practice guidelines and quality of care for older patients with multiple comorbid diseases: implications for pay for performance. JAMA. 2005;294(6):716–724. doi: 10.1001/jama.294.6.716.

5. Tinetti ME, Bogardus ST, Jr, Agostini JV. Potential pitfalls of disease-specific guidelines for patients with multiple conditions. N Engl J Med. 2004;351(27):2870–2874. doi: 10.1056/NEJMsb042458.

6. Lee SJ, Lindquist K, Segal MR, Covinsky KE. Development and validation of a prognostic index for 4-year mortality in older adults. JAMA. 2006;295(7):801–808. doi: 10.1001/jama.295.7.801.

7. Gross CP, McAvay GJ, Krumholz HM, Paltiel AD, Bhasin D, Tinetti ME. The effect of age and chronic illness on life expectancy after a diagnosis of colorectal cancer: implications for screening. Ann Intern Med. 2006;145(9):646–653. doi: 10.7326/0003-4819-145-9-200611070-00006.

8. Armour BS, Friedman C, Pitts MM, Wike J, Alley L, Etchason J. The influence of year-end bonuses on colorectal cancer screening. Am J Manag Care. 2004;10(9):617–624.

9. SEER Cancer Statistics Review 1975–2003. National Cancer Institute; [Accessed August 21, 2007]. Table VI-7: colon and rectum cancer (invasive): SEER incidence and U.S. death rates, age-adjusted and age-specific rates, by race and sex. Web site. http://seer.cancer.gov/csr/1975_2003/results_merged/sect_06_colon_rectum.pdf.

10. Pignone M, Saha S, Hoerger T, Mandelblatt J. Cost-effectiveness analyses of colorectal cancer screening: a systematic review for the U.S. Preventive Services Task Force. Ann Intern Med. 2002;137(2):96–104. doi: 10.7326/0003-4819-137-2-200207160-00007.

11. Wagner J, Tunis S, Brown M, Ching A, Almeida R. Cost-effectiveness of colorectal cancer screening in average-risk adults. In: Young G, Rozen P, Levin B, editors. Prevention and Early Detection of Colorectal Cancer. Philadelphia, PA: WB Saunders Co; 1996. pp. 321–356.

12. Frazier AL, Colditz GA, Fuchs CS, Kuntz KM. Cost-effectiveness of screening for colorectal cancer in the general population. JAMA. 2000;284(15):1954–1961. doi: 10.1001/jama.284.15.1954.

13. Khandker RK, Dulski JD, Kilpatrick JB, Ellis RP, Mitchell JB, Baine WB. A decision model and cost-effectiveness analysis of colorectal cancer screening and surveillance guidelines for average-risk adults. Int J Technol Assess Health Care. 2000;16(3):799–810. doi: 10.1017/s0266462300102077.

14. Sonnenberg A, Delco F, Inadomi JM. Cost-effectiveness of colonoscopy in screening for colorectal cancer. Ann Intern Med. 2000;133(8):573–584. doi: 10.7326/0003-4819-133-8-200010170-00007.

15. Vijan S, Hwang EW, Hofer TP, Hayward RA. Which colon cancer screening test? a comparison of costs, effectiveness, and compliance. Am J Med. 2001;111(8):593–601. doi: 10.1016/s0002-9343(01)00977-9.

16. Loeve F, Brown ML, Boer R, van Ballegooijen M, van Oortmarssen GJ, Habbema JD. Endoscopic colorectal cancer screening: a cost-savings analysis. J Natl Cancer Inst. 2000;92(7):557–563. doi: 10.1093/jnci/92.7.557.

17. Ness RM, Holmes AM, Klein R, Dittus R. Cost-utility of one-time colonoscopic screening for colorectal cancer at various ages. Am J Gastroenterol. 2000;95(7):1800–1811. doi: 10.1111/j.1572-0241.2000.02172.x.

18. Beck JR, Kassirer JP, Pauker SG. A convenient approximation of life expectancy (the “DEALE”), I: validation of the method. Am J Med. 1982;73(6):883–888. doi: 10.1016/0002-9343(82)90786-0.

19. Braithwaite RS, Justice AC, Chang CC, et al. Estimating the proportion of patients infected with HIV who will die of comorbid diseases. Am J Med. 2005;118(8):890–898. doi: 10.1016/j.amjmed.2004.12.034.

20. Braithwaite RS, Schechter S, Chung CC, Schaefer A, Roberts MS. Estimating the rate of accumulating drug resistance mutations in the HIV genome. Value Health. 2007;10(3):204–213. doi: 10.1111/j.1524-4733.2007.00170.x.

21. Braithwaite RS, Shechter S, Roberts MS, et al. Explaining variability in the relationship between antiretroviral adherence and HIV mutation accumulation. J Antimicrob Chemother. 2006;58(5):1036–1043. doi: 10.1093/jac/dkl386.

22. Levy WC, Mozaffarian D, Linker DT, et al. The Seattle Heart Failure Model: prediction of survival in heart failure. Circulation. 2006;113(11):1424–1433. doi: 10.1161/CIRCULATIONAHA.105.584102.