Abstract

Objective

Randomized controlled trials remain the gold standard for evaluating cancer intervention efficacy. However, they are not always feasible, practical, or timely, and they often do not adequately reflect patient heterogeneity and real-world clinical practice. Comparative effectiveness research can leverage secondary data to help fill the knowledge gaps that randomized trials leave unaddressed; however, it also faces shortcomings. The goal of this project was to develop a new model and inform an evolving framework articulating cancer comparative effectiveness research data needs.

Study Design and Setting

We examined prevalent models and conducted semi-structured discussions with 76 clinicians and comparative effectiveness researchers affiliated with the Agency for Healthcare Research and Quality’s cancer comparative effectiveness research programs.

Results

A new model was developed iteratively. It presents cancer comparative effectiveness research and important measures in a patient-centered, longitudinal chronic care model that better reflects contemporary cancer care in the context of the cancer care continuum, rather than a single-episode, acute-care perspective.

Conclusion

Immediately relevant for federally-funded comparative effectiveness research programs, the model informs an evolving framework articulating cancer comparative effectiveness research data needs, including evolutionary enhancements to registries and epidemiologic research data systems. We discuss elements of contemporary clinical practice, methodology improvements, and related needs affecting comparative effectiveness research’s ability to yield findings clinicians, policymakers, and stakeholders can confidently act on.

Keywords: cancer, comparative effectiveness research, data models, outcomes, registries

Introduction

The accelerated pace of scientific discovery has yielded rapid advances in cancer care, underscoring the need for timely, evidence-based information to guide implementation of new interventions into clinical practice. Randomized controlled trials are valid tools for conducting comparative effectiveness research and remain the gold standard in determining efficacy of new interventions;1 however, this research design is not always feasible, practical, or sufficiently timely. Moreover, because of their very selective inclusion criteria and limited enrollment, randomized trials are commonly unable to characterize the myriad combinations of interventions and heterogeneous patient characteristics, and thus fall short of informing “real-world” clinical practice.2–6

Thanks to advances in information technology and recently developed statistical methods, ever-growing repositories of observational data may be leveraged to conduct cancer comparative effectiveness research. Drawing on secondary data collected through patient registries, electronic health records, administrative data, interventional clinical trials, and elsewhere, non-experimental cancer comparative effectiveness research holds great promise for addressing many of the shortcomings of randomized trials and filling the knowledge gaps they leave unaddressed.7–10 However, a primary challenge in using secondary data is comprehensively and confidently characterizing important processes of care and outcomes while effectively controlling for potential confounders. As such, it remains a challenge for cancer comparative effectiveness research to generate valid, timely, and broadly generalizable new information reflecting diverse, “real-world” patients and meaningful outcomes, and many cancer care stakeholders remain skeptical or suspicious of non-experimental comparative effectiveness research.11–13 To overcome these challenges and generate findings with sufficient confidence to meet different evidentiary standards, we must clearly understand data shortcomings and use this knowledge to improve future data and methods.

To inform an evolving framework for understanding cancer comparative effectiveness research data needs, we reviewed prevalent data models and incorporated the feedback of over 70 cancer comparative effectiveness research and outcomes researchers, clinicians, and other stakeholders to develop a conceptual model for examining secondary data in cancer comparative effectiveness research. This model provides a template for informing future data collection and methods development efforts relevant to not only secondary data but also prospective research, with the ultimate goal of advancing the utility and acceptability of cancer comparative effectiveness research for clinical and policy-relevant decision-making.

Methods

Definitions

Comparative effectiveness research can take numerous forms, but for this discussion, we focus on the use of secondary data (i.e., data used for purposes other than those for which they were originally collected) and non-experimental studies in the context of the Institute of Medicine’s definition of comparative effectiveness research:

Comparative effectiveness research is the generation and synthesis of evidence that compares the benefits and harms of alternative methods to prevent, diagnose, treat, and monitor a clinical condition or to improve the delivery of care. The purpose of CER is to assist consumers, clinicians, purchasers, and policy makers to make informed decisions that will improve health care at both the individual and population levels.14,15

Examination of Prevalent Models

Grounded in this definition, members of the study team developed a baseline conceptual model from prevalent models in the recent literature. Models from multiple specialties were considered, though the focus was on the cancer-specific context. Search topics included multiple forms of the following concepts: cancer care continuum, data requirements of non-experimental studies, and models of cancer care, with an emphasis on framework, quality of care, outcomes, measures, measure inter-relationships, and key points of assessment. These topics were examined with regard to not only non-experimental studies, but also randomized trials because they are perceived to be of the highest evidentiary standard, they have been central in informing evidence-based practice and policy, and it is important to understand how models using secondary data may, of necessity, contrast to randomized trials or evolve from them. We concentrated primarily on developing an extensive model of data elements and explicit measures associated with clinical status, treatment selection, and outcomes to inform non-experimental comparative effectiveness research.

Perspectives of Cancer Outcomes Researchers

The primary study team included an epidemiologist, pharmacoepidemiologist, biostatistician, health services researcher, and two cancer-focused physician researchers, all of whom conduct patient-centered cancer outcomes research. First, we met with a convenience sample of cancer outcomes researchers associated with the Agency for Healthcare Research and Quality (AHRQ)’s Cancer-focused Developing Evidence to Inform Decisions about Effectiveness (Can-DEcIDE) Comparative Effectiveness Research Consortium.16 Discussants were from multiple disciplines and included clinicians, clinical trials experts, epidemiologists, health services researchers, biostatisticians, clinical data managers, state public health workers, and informaticians. Applying snowball sampling, we asked discussants to identify other researchers who might provide additional insight. The final sample consisted of 41 discussants.† Discussions covered the important measures and outcomes in specific datasets relevant to cancer comparative effectiveness research, as well as each dataset’s strengths and weaknesses, population/sample, linking capabilities with other data, and data structure and accessibility.

The study team reviewed findings from the literature and notes from key-informant discussions, and organized and integrated these to develop and refine the conceptual model. The model was shared with the key informants and evolved over multiple iterations. Subsequently, it was presented at a meeting of the Agency for Healthcare Research and Quality-funded Registry of Patient Registries (RoPR) project, the goal of which is to promote the use of standard health outcome measures and other data elements across registries.17,18 At this meeting, the model was dissected and further refined through intensive discussion with 35 stakeholders including providers, payers, researchers, government officials, industry representatives, and journal editors.‡

Results

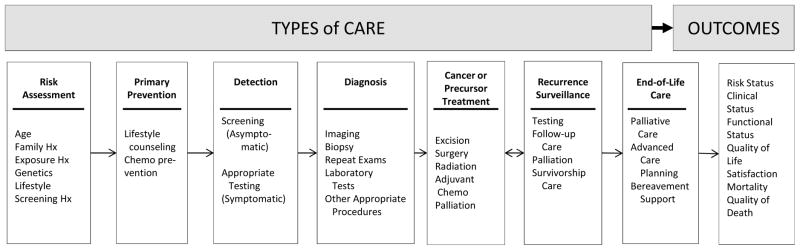

From a large but fragmented literature, 20 references were found to be most useful for this study and were examined closely.8,19–37 The model by Zapka and colleagues (Figure 1)25 characterizes the spectrum of cancer care well and illustrates the overarching context of the cancer care continuum on which comparative effectiveness research studies focus.5,38,39 Although developed as a process model, it is useful for identifying relevant interventions, data elements, and intermediate outcomes important for effectiveness studies. Survivorship care is not specifically described, though it is inherent in the longitudinality of cancer care presented. Its categories help demonstrate the broad range of stakeholders whose interests are relevant to this discussion, extending far beyond primary cancer treatment.

Figure 1.

The cancer care continuum and context of quality in which comparative effectiveness research and patient-centered outcomes research is conducted.

Adapted and reprinted with permission from the American Association for Cancer Research: JG Zapka, SH Taplin, LI Solberg, MM Manos. (2003) “A Framework for Improving the Quality of Cancer Care: The Case of Breast and Cervical Cancer Screening.” Cancer Epidemiology, Biomarkers, and Prevention. 12(1):4–13.

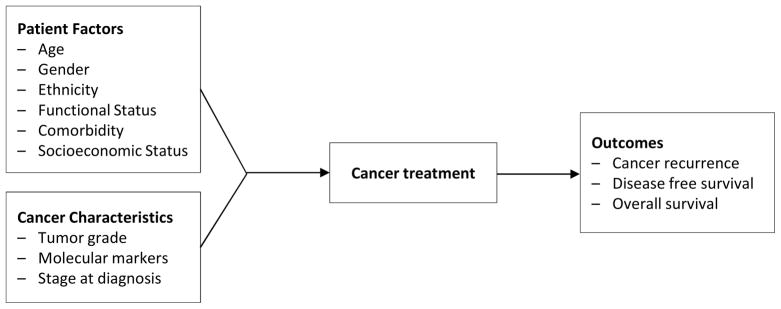

Geraci and colleagues40 present a model reflecting randomized trials of cancer treatment efficacy, which provides a foundation from which to build an analogous model for assessing intervention effectiveness using secondary data (Figure 2). Though underspecified compared to the measures actually collected in most modern randomized trials, this model captures the fact that minimal specification is adequate in randomized trials since randomization controls for confounding in treatment selection and outcomes. It also serves as a foil against which to conceptually contrast the extensively specified model required when examining non-experimental data. Its simple temporal unidirectionality also reflects the “intention to treat” principle prevalent in randomized trials, wherein the treatment or intervention (i.e., exposure) is defined by the first care episode or arm to which the person was randomized, regardless of whether that person subsequently changed their course of care.41

Figure 2.

A prevailing model, cancer outcome predictors.

Originally presented in: Geraci, JM, et al. (2005). “Comorbid Disease and Cancer: The Need for More Relevant Conceptual Models in Health Services Research.” Journal of Clinical Oncology. 23(30):7399-404. Reprinted with permission. © 2008 American Society of Clinical Oncology.

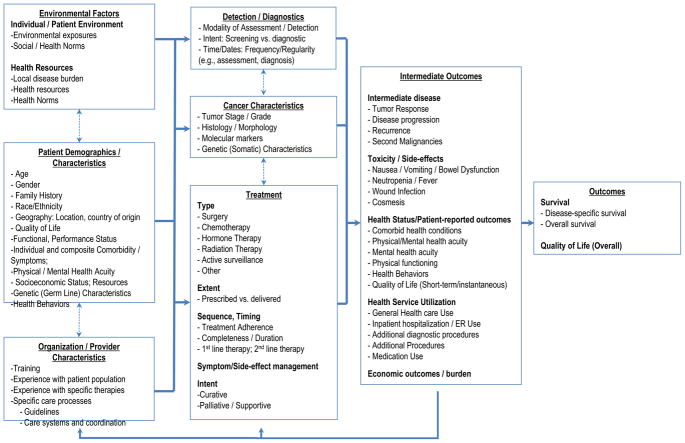

Figure 3 represents this study’s newly developed conceptual model for examining data for non-experimental cancer comparative effectiveness research, integrating concepts presented in the prior two models, elsewhere in the literature,11–32,40 and our discussions with comparative effectiveness researchers and stakeholders. Among predictor variables, it extends well beyond traditional measures of the person and the cancer by characterizing a hierarchical set of factors relevant to treatment selection and outcomes, including those at the patient level, the health care provider level, and the environment level. Each level contains its own subcategories of measures. These multi-level measures feed into a model reflecting the simultaneously longitudinal and cyclical nature of cancer development, detection, and treatment. Among outcomes, the model extends beyond the traditional and familiar measure of survival or mortality by articulating intermediate outcomes, including treatment selection, and richer long-term outcomes such as overall quality of life. It can help identify confounders, mediators, and the multiple pathways driving treatments and outcomes, and thus serve as a guidepost for researchers to develop study designs that mitigate many of the biases inherent in comparative effectiveness research.42

Figure 3.

Proposed new model: Measures for patient-centered cancer outcomes research using observational data.

The model reflects the need for more extensive variable specification in the absence of randomization, and enumerates multiple data elements necessary for rigorous analyses that control for bias. Originally oriented towards treatment-focused interventions, it also reflects other points on the cancer care continuum including prevention, diagnosis, palliation, and supportive care. Cancer survivorship fits into this framework, as the measures can be tailored to fit each of the three stages of survivorship (acute, extended, and permanent)43 as the patient’s cancer experience continuously evolves and changes over time. Recognizing that the most relevant measures may vary substantially by the specific study question being examined, the model includes illustrative, though not exhaustive, examples of measures relevant to cancer, their interrelationships, and temporality, and specifies the relevance of intermediate outcomes both as important endpoints and also as they may moderate treatments. In taking this approach, the model moves conceptually beyond the intent-to-treat assumption. While intent to treat has its merits, this new model better reflects the realities of clinical practice by addressing the notion of multiple lines of therapy, heterogeneous populations, and the interactive and reciprocal feedback among multiple levels of relevant factors including different modalities of care, treatments and their sequencing, and intermediate outcomes, all of which may evolve and change over time. In doing so, it presents cancer and cancer comparative effectiveness research examinations in the context of a patient-centered, longitudinal chronic care perspective rather than a single-episode, acute-care perspective.
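
To make the contrast with a single-episode view concrete, the following is a minimal sketch of how one patient's multi-level measures and successive care episodes might be held in a longitudinal record. It is an illustration only, not part of the model in Figure 3: the class names, field names, and example values are all hypothetical, and taking the earliest recorded episode as the exposure is used here merely as an observational stand-in for the intent-to-treat idea, which strictly refers to the randomized arm.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class CareEpisode:
    """One line of therapy or period of surveillance (hypothetical structure)."""
    start_day: int                      # days since diagnosis
    modality: str                       # e.g. "surgery", "chemotherapy", "surveillance"
    intermediate_outcomes: Dict[str, str] = field(default_factory=dict)


@dataclass
class PatientRecord:
    """Multi-level predictors plus a longitudinal sequence of care episodes."""
    patient_id: str
    patient_level: Dict[str, str] = field(default_factory=dict)
    provider_level: Dict[str, str] = field(default_factory=dict)
    environment_level: Dict[str, str] = field(default_factory=dict)
    episodes: List[CareEpisode] = field(default_factory=list)
    survival_days: Optional[int] = None
    quality_of_life: Optional[float] = None


def intent_to_treat_exposure(record: PatientRecord) -> Optional[str]:
    """Single-episode view: exposure is the earliest recorded episode only."""
    if not record.episodes:
        return None
    return min(record.episodes, key=lambda e: e.start_day).modality


def treatment_sequence(record: PatientRecord) -> List[str]:
    """Longitudinal view: every episode, ordered by time since diagnosis."""
    ordered = sorted(record.episodes, key=lambda e: e.start_day)
    return [e.modality for e in ordered]


# Illustrative record with invented values.
example = PatientRecord(
    patient_id="P001",
    patient_level={"stage": "II", "comorbidity": "diabetes"},
    provider_level={"facility_type": "community hospital"},
    environment_level={"residence": "rural"},
    episodes=[
        CareEpisode(14, "surgery", {"margin_status": "negative"}),
        CareEpisode(60, "chemotherapy", {"toxicity": "grade 2"}),
        CareEpisode(240, "surveillance"),
        CareEpisode(900, "chemotherapy", {"response": "partial"}),
    ],
)

print(intent_to_treat_exposure(example))
print(treatment_sequence(example))
```

In this sketch the single-episode view reduces the patient to "surgery", while the longitudinal view retains the later chemotherapy, surveillance, and second-line therapy along with the intermediate outcomes that may have driven each transition.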

Discussion

The goal of this study was to inform a framework for understanding cancer comparative effectiveness research data needs to help advance comparative effectiveness research and its ability to meet different evidentiary standards and thus be confidently utilized in clinical and policy decision-making. The model developed by the study team (Figure 3) serves as a baseline guide for addressing these goals. Compared to prior models, it better reflects the contemporary practice of cancer care by articulating measures and outcomes of growing importance and the iterative longitudinal process of cancer care. By doing so, it informs the evolution of current data sources such as cancer registries and the development of future data resources, and it serves as a useful template for future study design and analysis.

The model articulates multiple factors that have grown in relevance as cancer care has evolved. Historically, cancer treatment options were few, and survival was relatively short. Data collected in our national system of cancer registries reflect this legacy, in that they accommodate limited information beyond basic demographics, diagnosis, initial treatment, and duration of survival. Currently, cancer continues to be detected at earlier, more treatable stages, there are commonly multiple treatment options among different modalities, and many different clinical and non-clinical factors demark these treatments’ use. With these advances, cancer patients can often expect to live for many years after diagnosis.44 As such, additional measures must be developed and incorporated into existing systems. For example, genetic markers such as the KRAS test are increasingly able to provide predictive insight into treatment response, disease progression, or recurrence risk.45,46 The measures presented in Figure 3 are illustrative rather than exhaustive, and are not intended to represent the key ingredients in a recipe for a single, definitive dataset. Nor does the model imply that all of these measures are a prerequisite for valid inferences from non-experimental comparative effectiveness research studies. Rather, they are categorically representative of measures that should be tailored to the context of specific cancers and populations, since each cancer is unique.

The model also reflects contemporary cancer care in that it characterizes cancer as a chronic disease experienced through distinguishable phases rather than as a single acute-care event. Reflecting the multiple transitions of each individual cancer patient’s illness trajectory, data elements and pathways in the model can accommodate disease activity and remission, multiple modalities and waves of treatment and testing, equally relevant periods of non-treatment with varying levels of surveillance,47 and other patient outcomes and needs, all of which can occur through recurrent, iterative steps and change over the span of many years.48–51 Prior therapies, coexisting conditions, time, treatment sequence, and physical and psychosocial characteristics associated with aging can influence the choice of subsequent therapies and outcomes. As people live longer and have more options, the model moves beyond the familiar outcome of survival to include intermediate outcomes that are important in and of themselves and as they modulate a longitudinal care process. It also includes measures of the structure, process, and outcomes of care, which collectively compose quality of care.52–54 Overall quality of life joins survival at the end of the model to reflect the tradeoff between quality and quantity of life that many people make.55,56 In sum, in contrast to focusing on a particular intervention, outcome, or even stage of the cancer care continuum, by placing the patient at the center of the model, multiple levels of measures are evident as being relevant.

Although the model proved intuitive to practicing oncologists and outcomes researchers, it is critical to point out that almost no research datasets exist that capture all of these measures, the longitudinal course of care, and feedback among measures. Those that are closest were exceptionally expensive to create and maintain, and remain limited in size and/or difficult to access. Examples include myriad experimental and non-experimental studies, as well as the HMO Cancer Research Network,57 the Cancer Care Outcomes Research and Surveillance study,58 and the Women’s Health Initiative-linked Medicare data.59

Linkages among datasets will play an important role in bringing necessary measures into a single dataset for examination. Though not without its own limitations, the National Cancer Institute’s Surveillance, Epidemiology, and End Results (SEER)-Medicare data exemplify how the linked data “whole” can be greater than the sum of its constituent parts. Electronic health records or clinical patient-reported data systems that rely on standardized diagnosis and procedure coding systems, such as the International Classification of Diseases (ICD) and the Healthcare Common Procedure Coding System (HCPCS), can also add important measures and detail. It is helpful that these are nearly ubiquitous systems; however, it will be increasingly important to capture not only diagnoses and the performance of procedures or tests, but also the indication for the procedures and even test results. To some degree, this is captured by electronic health records, though commonly in large text fields that must be abstracted to be useful for researchers. Advances in information technology and migrating from ICD version 9 to ICD version 10 will help address some of these shortcomings.

With an adequate trust fabric protecting patient privacy, data linkage at the individual patient level can longitudinally couple detailed information such as ICD codes with important markers such as stage and performance status. The next generation of linked data includes distributed research networks, which are more dynamic and perpetually updated, helping address many such shortcomings of SEER-Medicare and similarly static linked data.60,61 Such networks may be the key to success for national quality of care improvement efforts, such as have been proposed under the Affordable Care Act,62,63 as well as the foundation for rapid learning healthcare as outlined by the Institute of Medicine, National Cancer Policy Forum, and the Office of the National Coordinator.62–66

Dataset linkage will thus be of central importance, but growing privacy and confidentiality concerns and increasingly restrictive policies governing protected health information (PHI) severely limit access to unique identifiers (e.g., Social Security numbers, full names), which have been the mainstays of quality data linkages. Indeed, facing these restrictions and privacy concerns, many registries, health care organizations, and public health programs have stopped collecting or transmitting important protected health information elements as a matter of policy. For example, federal agencies now prohibit collection of Social Security numbers for contract research, and Institutional Review Boards are increasingly adopting such restrictions for Social Security numbers and even participant names.36,67 These policies have created substantially greater burden for both health care providers and researchers, and have even been indicted as contributory to research bias.68–74 As a result, there is an urgent and growing need to develop novel linkage methods to preserve and perpetuate the viability of secondary data for cancer comparative effectiveness research.
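
As one illustration of what linkage without direct identifiers can look like, the sketch below derives a keyed hash from normalized quasi-identifiers so that two data holders can compare keys without exchanging names or Social Security numbers. This is a simplified, hypothetical example rather than a method proposed in this paper: the choice of fields (birth date, sex, ZIP code), the shared secret, the function names, and the records are all assumptions, and such a field combination is not unique to one person, so exact-match keys of this kind can produce both false matches and missed matches.

```python
import hashlib
import hmac
import unicodedata


def normalize(value: str) -> str:
    """Strip accents, punctuation, and whitespace, then upper-case the rest."""
    decomposed = unicodedata.normalize("NFKD", value)
    return "".join(ch for ch in decomposed if ch.isalnum()).upper()


def linkage_key(secret: bytes, birth_date: str, sex: str, zip_code: str) -> str:
    """Keyed SHA-256 digest of the normalized quasi-identifiers."""
    message = "|".join(normalize(v) for v in (birth_date, sex, zip_code))
    return hmac.new(secret, message.encode("utf-8"), hashlib.sha256).hexdigest()


def link(records_a, records_b, secret: bytes):
    """Return (id_a, id_b) pairs whose linkage keys match exactly."""
    index = {}
    for rec in records_b:
        key = linkage_key(secret, rec["birth_date"], rec["sex"], rec["zip_code"])
        index.setdefault(key, []).append(rec["id"])
    pairs = []
    for rec in records_a:
        key = linkage_key(secret, rec["birth_date"], rec["sex"], rec["zip_code"])
        for other_id in index.get(key, []):
            pairs.append((rec["id"], other_id))
    return pairs


# Invented records from two hypothetical sources.
shared_secret = b"example-secret-agreed-by-both-data-holders"
registry = [
    {"id": "R1", "birth_date": "1950-03-07", "sex": "f", "zip_code": "27599"},
    {"id": "R2", "birth_date": "1962-11-30", "sex": "M", "zip_code": "27701"},
]
claims = [
    {"id": "C9", "birth_date": "1950/03/07", "sex": "F", "zip_code": " 27599 "},
    {"id": "C3", "birth_date": "1971-01-15", "sex": "M", "zip_code": "27701"},
]

print(link(registry, claims, shared_secret))
```

Normalization absorbs only superficial differences such as separators, case, and stray spaces; a date recorded in a different field order, or a patient who has moved, would not match. Production linkages typically add probabilistic scoring across more fields to tolerate such discrepancies.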

Linkages will address some challenges and help strengthen data for cancer comparative effectiveness research; however, they will not necessarily address the challenge of data standardization.75 There are many stakeholders (e.g., researchers, providers, payers) collecting clinical, population, and health services data in numerous ways that often do not interdigitate well. Moreover, even among the multiple disciplines comprising the cancer comparative effectiveness research community, there are substantial differences of opinion on essential variables and how they should be measured. This lack of standardization in measure definitions and data coding can inhibit comparability across and even within health datasets, and threaten the generalizability of findings in the context of population heterogeneity, sometimes even for simple measures such as race and ethnicity. Discussions with key informants and Registry of Patient Registries project participants reflected this variation in disciplinary perspectives, which led to challenges in developing mutually exclusive data categories, their constituent data elements, and definitive empirical definitions. Nonetheless, the overall model withstood this scrutiny.

Finally, many of the challenges facing cancer comparative effectiveness research data cannot be solved through more measures and more data alone. A greater quantity of data will not necessarily make comparative effectiveness research studies more generalizable or reproducible; rather, the design issues of existing studies need to be better understood and overcome, resulting in better quality data to address these needs. Future work should focus on methods to iteratively upgrade data quality, triangulate results to confirm findings, and refine expectations in response to reality. In parallel, the ongoing advancement of complex analytic methods and guidelines for their application is necessary.13 The majority of data currently used for comparative effectiveness research are collected for non-research purposes, yielding several potential sources of significant bias. Advanced statistical approaches such as propensity score trimming76 and instrumental variable analysis77 can help address these structural limitations to extend the utility of current data. While advanced methods alone cannot resolve many limitations of currently available secondary datasets, continued investment in developing and applying better analytic methods can help address design limitations and further enhance what we may learn from non-experimental cancer comparative effectiveness research.
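
For readers unfamiliar with propensity score trimming, the sketch below shows the basic idea in its simplest form: after each patient's probability of receiving treatment has been estimated, patients with extreme scores, who have few or no comparable counterparts in the other treatment group, are excluded before outcomes are compared. The fixed cutoffs of 0.05 and 0.95, the function name, and the scores are assumptions chosen for illustration; published trimming rules, including those in the work cited above, may instead derive cutoffs from percentiles of the score distribution within each treatment group.

```python
def trim_by_propensity(scores, lower=0.05, upper=0.95):
    """Return indices of observations whose propensity score lies in [lower, upper].

    `scores` holds previously estimated probabilities of treatment; estimating
    them (e.g., by logistic regression) is outside the scope of this sketch.
    """
    if not 0.0 <= lower < upper <= 1.0:
        raise ValueError("cutoffs must satisfy 0 <= lower < upper <= 1")
    return [i for i, p in enumerate(scores) if lower <= p <= upper]


# Invented scores: the first and fifth patients fall in the tails.
scores = [0.01, 0.20, 0.50, 0.80, 0.99, 0.05]
treated = [False, False, True, True, True, False]

kept = trim_by_propensity(scores)
kept_treated = [i for i in kept if treated[i]]
kept_untreated = [i for i in kept if not treated[i]]

print(kept)
print(len(kept_treated), len(kept_untreated))
```

Trimming narrows the analysis to patients for whom either treatment was plausible, which can reduce bias from the tails at the cost of a smaller and less broadly representative sample.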

Conclusions

By leveraging secondary data, we can fill knowledge gaps and provide timely and valid scientific knowledge to inform health care decisions for people with cancer or at risk for it. The model we present here serves as a starting point for an evolving framework to systematically address cancer comparative effectiveness research data needs, accelerate the pace of cancer comparative effectiveness research, and ultimately enhance the adoption of comparative effectiveness research findings by the multiple stakeholders interested in improving patient care and outcomes. While the focus of this examination was on the use of secondary data, many of the key elements here are also relevant for prospective comparative effectiveness research studies, both with regard to their primary research focus and inasmuch as their data may subsequently be reexamined to address another study question. There are no doubt many challenges facing the comparative effectiveness research community as it seeks to advance this model and the data needed to fulfill its potential.42 However, for the cancer community to leverage and experience the benefit of these data, policy changes will be required before healthcare providers and other data holders will be willing or legally able to make these data available for research, an issue of substantial ongoing discussion.42,78–81 The engagement and substantial coordination of multiple organizations, agencies, and individuals from multiple disciplines to address several ongoing challenges will also be essential.42

What is new?

This conceptual model presents cancer and comparative effectiveness research in the context of a patient-centered, longitudinal chronic care model that better reflects contemporary cancer care, rather than the traditional single-episode, acute-care perspective.

The model is grounded in the context of the cancer care continuum, and specifies the relevance of intermediate outcomes both as important endpoints and also as they moderate treatments.

The model moves beyond the intent-to-treat principle by recognizing multiple modalities and lines of therapy, their interaction, and feedback, all of which change over time. It includes illustrative examples of multiple factors associated with treatment decisions and outcomes, and the extensive measure specification required in the context of non-experimental data.

Going forward, the model serves as a template for current researchers and a roadmap for future improvements to registries and other comparative effectiveness research data, pointing out at least two central needs:

Systematically identified, standardized measures to fill data gaps and enhance transferability.

Targets for important, continued investment in improving study design, population sampling, and analytic methods.

Acknowledgments

We thank Timothy Carey, MD; Lisa DiMartino, MPH; Janet Freburger, PhD; and Deborah Schrag, MD, for their review and feedback, as well as Richard Gliklich and Daniel Campion from Outcome Sciences (AHRQ Contract HHSA290200500351; “American Recovery and Reinvestment Act: Developing a Registry of Registries”). Work on this study was supported in part by the Integrated Cancer Information and Surveillance System (ICISS), UNC Lineberger Comprehensive Cancer Center, with funding provided by the University Cancer Research Fund (UCRF) via the State of North Carolina.

Research Support:

This work was supported by funding from AHRQ through the Cancer DEcIDE Comparative Effectiveness Research Consortium, contract HHSA290-205-0040-I-TO4-WA5 – Data Committee for the DEcIDE Cancer Consortium.

Footnotes

† Associated with UNC Can-DEcIDE from: the University of North Carolina at Chapel Hill, Duke University, the Brigham and Women’s Hospital and the Dana Farber Cancer Institute, the University of Virginia, the Epidemiologic Research and Information Center at the Durham Veterans Affairs Medical Center, the North Carolina Central Cancer Registry, Blue Cross and Blue Shield of NC, Agency for Healthcare Research and Quality (AHRQ), the National Cancer Institute (NCI), and the Centers for Disease Control and Prevention (CDC).

‡ Associated with the RoPR Project from: AHRQ, the American College of Surgeons Commission on Cancer (CoC), American Society of Clinical Oncology (ASCO), American College of Radiology (ACR), Blue Cross and Blue Shield, the Centers for Medicare and Medicaid Services, Children’s Tumor Foundation, College of American Pathologists, Duke University, the NCI, Johns Hopkins University, National Comprehensive Cancer Network (NCCN), the National Institutes of Health (NIH), North American Association of Central Cancer Registries, Northwestern University Feinberg School of Medicine, Outcome Sciences, Patient Advocate Foundation, RTI Health Solutions, University of California at San Francisco, University of Colorado at Denver, and the University of North Carolina at Chapel Hill.

Disclaimer:

The views expressed in this article are those of the authors, and no official endorsement by the Agency for Healthcare Research and Quality or the U.S. Department of Health and Human Services is intended or should be inferred.

References

- 1.Sullivan P, Goldmann D. The promise of comparative effectiveness research. JAMA. 2011 Jan 26;305(4):400–401. doi: 10.1001/jama.2011.12. [DOI] [PubMed] [Google Scholar]

- 2.Clancy CM, Slutsky JR. Commentary: a progress report on AHRQ’s Effective Health Care Program.(AHRQ Update) Health Services Research. 2007;42(5):xi(9). doi: 10.1111/j.1475-6773.2007.00784.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Smith S. Preface. Medical Care. 2007;45(10 Suppl 2):S1–S2. [Google Scholar]

- 4.Bach PB. Limits on Medicare’s ability to control rising spending on cancer drugs. N Engl J Med. 2009 Feb 5;360(6):626–633. doi: 10.1056/NEJMhpr0807774. [DOI] [PubMed] [Google Scholar]

- 5.Institute of Medicine. Initial National Priorities for Comparative Effectiveness Research. Washington DC: National Academies Press; 2009. [Google Scholar]

- 6.Congressional Budget Office. Pub No 2975. Washington DC: 2007. Research on the Comparative Effectiveness of Medical Treatments: Issues and Options for an Expanded Federal Role. [Google Scholar]

- 7. Dreyer NA, Tunis SR, Berger M, Ollendorf D, Mattox P, Gliklich R. Why observational studies should be among the tools used in comparative effectiveness research. Health Aff (Millwood). 2010 Oct;29(10):1818–1825. doi: 10.1377/hlthaff.2010.0666.

- 8. Etheredge LM. Medicare’s future: cancer care. Health Aff (Millwood). 2009 Jan-Feb;28(1):148–159. doi: 10.1377/hlthaff.28.1.148.

- 9. Tinetti ME, Studenski SA. Comparative effectiveness research and patients with multiple chronic conditions. N Engl J Med. 2011 Jun 30;364(26):2478–2481. doi: 10.1056/NEJMp1100535.

- 10. Fung V, Brand RJ, Newhouse JP, Hsu J. Using Medicare data for comparative effectiveness research: opportunities and challenges. Am J Manag Care. 2011;17(7):488–496.

- 11. Keyhani S, Woodward M, Federman AD. Physician views on the use of comparative effectiveness research: a national survey. Ann Intern Med. 2010 Oct 19;153(8):551–552. doi: 10.7326/0003-4819-153-8-201010190-00023.

- 12. Sox HC. Comparative effectiveness research: a progress report. Ann Intern Med. 2010 Oct 5;153(7):469–472. doi: 10.7326/0003-4819-153-7-201010050-00269.

- 13. Dreyer NA. Making observational studies count: shaping the future of comparative effectiveness research. Epidemiology. 2011 May;22(3):295–297. doi: 10.1097/EDE.0b013e3182126569.

- 14. Sox HC. Defining comparative effectiveness research: the importance of getting it right. Med Care. 2010 Jun;48(6 Suppl):S7–8. doi: 10.1097/MLR.0b013e3181da3709.

- 15. Institute of Medicine. Initial National Priorities for Comparative Effectiveness Research. Washington DC: National Academies Press; 2009.

- 16. Lohr KN. Comparative effectiveness research methods: symposium overview and summary. Med Care. 2010 Jun;48(6 Suppl):S3–6. doi: 10.1097/MLR.0b013e3181e10434.

- 17. Outcome Sciences. Registry of Patient Registries (RoPR). 2010. http://www.outcome.com/ropr.htm. Accessed January 27, 2011.

- 18. U.S. Agency for Healthcare Research and Quality. Recovery Act Awards for Data Infrastructure. 9. Registry of Patient Registries. 2010. http://www.ahrq.gov/fund/recoveryawards/osawinfra.htm. Accessed February 6, 2011.

- 19. Aday L, Begley C, Lairson D, Balkrishnan R. Evaluating the Healthcare System: Effectiveness, Efficiency, and Equity. 3rd ed. Chicago: Health Administration Press; 2004.

- 20. McDowell I. Measuring Health. 3rd ed. New York: Oxford University Press; 2006.

- 21. Lipscomb J, Gotay C, Snyder C. Outcomes Assessment in Cancer: Measures, Methods, and Applications. Cambridge: Cambridge University Press; 2005.

- 22. National Cancer Policy Board of the Institute of Medicine. Ensuring Quality Cancer Care. Washington DC: National Academies Press; 1999.

- 23. National Cancer Policy Board of the Institute of Medicine. Assessing the Quality of Cancer Care: An Approach to Measurement in Georgia. Washington DC: National Academies Press; 2005.

- 24. National Cancer Policy Board of the Institute of Medicine. Enhancing Data Systems to Improve the Quality of Cancer Care. Washington DC: National Academies Press; 2000.

- 25. Zapka JG, Taplin SH, Solberg LI, Manos MM. A framework for improving the quality of cancer care: the case of breast and cervical cancer screening. Cancer Epidemiol Biomarkers Prev. 2003 Jan;12(1):4–13.

- 26. Shah NR, Stewart WF. Clinical effectiveness: leadership in comparative effectiveness and translational research: the 15th Annual HMO Research Network Conference; April 26–29, 2009; Danville, Pennsylvania. Clin Med Res. 2010 Mar:28–29.

- 27. Aiello Bowles EJ, Tuzzio L, Ritzwoller DP, et al. Accuracy and complexities of using automated clinical data for capturing chemotherapy administrations: implications for future research. Med Care. 2009 Oct;47(10):1091–1097. doi: 10.1097/MLR.0b013e3181a7e569.

- 28. Sox HC, Greenfield S. Comparative effectiveness research: a report from the Institute of Medicine. Ann Intern Med. 2009 Aug 4;151(3):203–205. doi: 10.7326/0003-4819-151-3-200908040-00125.

- 29. Hoffman A, Pearson SD. ‘Marginal medicine’: targeting comparative effectiveness research to reduce waste. Health Aff (Millwood). 2009 Jul-Aug;28(4):w710–718. doi: 10.1377/hlthaff.28.4.w710.

- 30. Donabedian A. Evaluating the quality of medical care. 1966. Milbank Q. 2005;83(4):691–729. doi: 10.1111/j.1468-0009.2005.00397.x.

- 31. Mandelblatt JS, Ganz PA, Kahn KL. Proposed agenda for the measurement of quality-of-care outcomes in oncology practice. J Clin Oncol. 1999 Aug;17(8):2614–2622. doi: 10.1200/JCO.1999.17.8.2614.

- 32. Brookhart MA, Sturmer T, Glynn RJ, Rassen J, Schneeweiss S. Confounding control in healthcare database research: challenges and potential approaches. Med Care. 2010 Jun;48(6 Suppl):S114–120. doi: 10.1097/MLR.0b013e3181dbebe3.

- 33. Bloomrosen M, Detmer D. Advancing the framework: use of health data--a report of a working conference of the American Medical Informatics Association. J Am Med Inform Assoc. 2008 Nov-Dec;15(6):715–722. doi: 10.1197/jamia.M2905.

- 34. Bloomrosen M, Detmer DE. Informatics, evidence-based care, and research; implications for national policy: a report of an American Medical Informatics Association health policy conference. J Am Med Inform Assoc. 2010 Mar 1;17(2):115–123. doi: 10.1136/jamia.2009.001370.

- 35. Manion FJ, Robbins RJ, Weems WA, Crowley RS. Security and privacy requirements for a multi-institutional cancer research data grid: an interview-based study. BMC Med Inform Decis Mak. 2009;9:31. doi: 10.1186/1472-6947-9-31.

- 36. Safran C, Bloomrosen M, Hammond WE, et al. Toward a national framework for the secondary use of health data: an American Medical Informatics Association White Paper. J Am Med Inform Assoc. 2007 Jan-Feb;14(1):1–9. doi: 10.1197/jamia.M2273.

- 37. Wallace PJ. Reshaping cancer learning through the use of health information technology. Health Aff (Millwood). 2007 Mar-Apr;26(2):w169–177. doi: 10.1377/hlthaff.26.2.w169.

- 38. Agency for Healthcare Research and Quality. Effective Health Care Program: What is Comparative Effectiveness Research. http://www.effectivehealthcare.ahrq.gov/index.cfm/what-is-comparative-effectiveness-research1/ Accessed May 29, 2010.

- 39. Smith SR. Advancing methods for comparative effectiveness research. Med Care. 2010 Jun;48(6 Suppl):S1–2. doi: 10.1097/MLR.0b013e3181e1cde5.

- 40. Geraci JM, Escalante CP, Freeman JL, Goodwin JS. Comorbid disease and cancer: the need for more relevant conceptual models in health services research. J Clin Oncol. 2005 Oct 20;23(30):7399–7404. doi: 10.1200/JCO.2004.00.9753.

- 41. Moher D, Hopewell S, Schulz KF, et al. CONSORT 2010 Explanation and Elaboration: Updated guidelines for reporting parallel group randomised trials. J Clin Epidemiol. 2010 Aug;63(8):e1–37. doi: 10.1016/j.jclinepi.2010.03.004.

- 42. Meyer AM, Carpenter WR, Abernethy AP, Sturmer T, Kosorok MR. Data for cancer comparative effectiveness research: Past, present, and future potential. Cancer. 2012 Apr 19; doi: 10.1002/cncr.27552.

- 43. Mullan F. Seasons of survival: reflections of a physician with cancer. N Engl J Med. 1985 Jul 25;313(4):270–273. doi: 10.1056/NEJM198507253130421.

- 44. Ganz PA. Cancer Survivorship: Today and Tomorrow. New York: Springer; 2007.

- 45. Lievre A, Bachet JB, Boige V, et al. KRAS mutations as an independent prognostic factor in patients with advanced colorectal cancer treated with cetuximab. J Clin Oncol. 2008 Jan 20;26(3):374–379. doi: 10.1200/JCO.2007.12.5906.

- 46. Lievre A, Bachet JB, Le Corre D, et al. KRAS mutation status is predictive of response to cetuximab therapy in colorectal cancer. Cancer Res. 2006 Apr 15;66(8):3992–3995. doi: 10.1158/0008-5472.CAN-06-0191.

- 47. Salz T, Weinberger M, Ayanian JZ, et al. Variation in use of surveillance colonoscopy among colorectal cancer survivors in the United States. BMC Health Serv Res. 2010;10:256. doi: 10.1186/1472-6963-10-256.

- 48. Miller K, Merry B, Miller J. Seasons of survivorship revisited. Cancer J. 2008 Nov-Dec;14(6):369–374. doi: 10.1097/PPO.0b013e31818edf60.

- 49. Mullan F. Seasons of survival: reflections of a physician with cancer. N Engl J Med. 1985 Jul 25;313(4):270–273. doi: 10.1056/NEJM198507253130421.

- 50. Basch E, Abernethy AP. Supporting clinical practice decisions with real-time patient-reported outcomes. J Clin Oncol. 2011 Mar 10;29(8):954–956. doi: 10.1200/JCO.2010.33.2668.

- 51. Basch E, Jia X, Heller G, et al. Adverse symptom event reporting by patients vs clinicians: relationships with clinical outcomes. J Natl Cancer Inst. 2009 Dec 2;101(23):1624–1632. doi: 10.1093/jnci/djp386.

- 52. Donabedian A. Evaluating the quality of medical care. Milbank Mem Fund Q. 1966 Jul;44(3 Suppl):166–206.

- 53. Donabedian A. The quality of care. How can it be assessed? JAMA. 1988 Sep 23–30;260(12):1743–1748. doi: 10.1001/jama.260.12.1743.

- 54. Cooperberg MR, Birkmeyer JD, Litwin MS. Defining high quality health care. Urol Oncol. 2009 Jul-Aug;27(4):411–416. doi: 10.1016/j.urolonc.2009.01.015.

- 55. Wilke DR, Krahn M, Tomlinson G, Bezjak A, Rutledge R, Warde P. Sex or survival: short-term versus long-term androgen deprivation in patients with locally advanced prostate cancer treated with radiotherapy. Cancer. 2010 Apr 15;116(8):1909–1917. doi: 10.1002/cncr.24905.

- 56. Singer PA, Tasch ES, Stocking C, Rubin S, Siegler M, Weichselbaum R. Sex or survival: trade-offs between quality and quantity of life. J Clin Oncol. 1991 Feb;9(2):328–334. doi: 10.1200/JCO.1991.9.2.328.

- 57. National Cancer Institute. Cancer Research Network. 2012. http://crn.cancer.gov/ Accessed May 1, 2012.

- 58. Ayanian JZ, Chrischilles EA, Fletcher RH, et al. Understanding cancer treatment and outcomes: the Cancer Care Outcomes Research and Surveillance Consortium. J Clin Oncol. 2004 Aug 1;22(15):2992–2996. doi: 10.1200/JCO.2004.06.020.

- 59. Women’s Health Initiative. Women’s Health Initiative Scientific Resources Website. 2012. https://cleo.whi.org/SitePages/Home.aspx. Accessed May 7, 2012.

- 60. Maro J, Platt R, Holmes J, et al. Design of a National Distributed Health Data Network. Ann Intern Med. 2009;151(5):341–344. doi: 10.7326/0003-4819-151-5-200909010-00139.

- 61. Pace W, Cifuentes M, Valuck R, Staton E. An electronic practice-based network for observational comparative effectiveness research. Ann Intern Med. 2009;151(5):338–340. doi: 10.7326/0003-4819-151-5-200909010-00140.

- 62. Berwick DM. Launching Accountable Care Organizations - The Proposed Rule for the Medicare Shared Savings Program. N Engl J Med. 2011 Mar 31; doi: 10.1056/NEJMp1103602.

- 63. Centers for Medicare and Medicaid Services. Shared Savings Program. 2011. http://www.cms.gov/sharedsavingsprogram/ Accessed April 12, 2011.

- 64. Institute of Medicine. A Foundation for Evidence-Driven Practice: A Rapid Learning System for Cancer Care. Washington DC: National Academies Press; 2010.

- 65. Abernethy AP, Etheredge LM, Ganz PA, et al. Rapid-learning system for cancer care. J Clin Oncol. 2010 Sep 20;28(27):4268–4274. doi: 10.1200/JCO.2010.28.5478.

- 66. Office of the National Coordinator for Health Information Technology (ONC). Federal Health Information Technology Strategic Plan 2011–2015. 2011. http://healthit.hhs.gov/portal/server.pt/community/fed_health_it_strategic_plan/1211/home/15583. Accessed April 25, 2011.

- 67. Bradley CJ, Penberthy L, Devers KJ, Holden DJ. Health services research and data linkages: issues, methods, and directions for the future. Health Serv Res. 2010 Oct;45(5 Pt 2):1468–1488. doi: 10.1111/j.1475-6773.2010.01142.x.

- 68. Pentecost MJ. HIPAA and the law of unintended consequences. J Am Coll Radiol. 2004 Mar;1(3):164–165. doi: 10.1016/j.jacr.2003.12.023.

- 69. Dracup K, Bryan-Brown CW. The law of unintended consequences. Am J Crit Care. 2004 Mar;13(2):97–99.

- 70. Kulynych J, Korn D. The new HIPAA (Health Insurance Portability and Accountability Act of 1996) Medical Privacy Rule: help or hindrance for clinical research? Circulation. 2003 Aug 26;108(8):912–914. doi: 10.1161/01.CIR.0000080642.35380.50.

- 71. Salem DN, Pauker SG. The adverse effects of HIPAA on patient care. N Engl J Med. 2003 Jul 17;349(3):309. doi: 10.1056/NEJM200307173490324.

- 72. Kulynych J, Korn D. The new federal medical-privacy rule. N Engl J Med. 2002 Oct 10;347(15):1133–1134. doi: 10.1056/NEJMp020113.

- 73. Beebe TJ, Ziegenfuss JY, StSauver JL, et al. Health Insurance Portability and Accountability Act (HIPAA) Authorization and Survey Nonresponse Bias. Med Care. 2011 Mar 1; doi: 10.1097/MLR.0b013e318202ada0.

- 74. Institute of Medicine. Beyond the HIPAA Privacy Rule: Enhancing Privacy, Improving Health Through Research. Washington DC: National Academies Press; 2009.

- 75. Hirsch BR, Giffin RB, Esmail LC, Tunis SR, Abernethy AP, Murphy SB. Informatics in action: lessons learned in comparative effectiveness research. Cancer J. 2011 Jul-Aug;17(4):235–238. doi: 10.1097/PPO.0b013e31822c3944.

- 76. Sturmer T, Rothman KJ, Avorn J, Glynn RJ. Treatment effects in the presence of unmeasured confounding: dealing with observations in the tails of the propensity score distribution--a simulation study. Am J Epidemiol. 2010 Oct 1;172(7):843–854. doi: 10.1093/aje/kwq198.

- 77. Brookhart MA, Wang PS, Solomon DH, Schneeweiss S. Instrumental variable analysis of secondary pharmacoepidemiologic data. Epidemiology. 2006 Jul;17(4):373–374. doi: 10.1097/01.ede.0000222026.42077.ee.

- 78. Burke T. The health information technology provisions in the American Recovery and Reinvestment Act of 2009: implications for public health policy and practice. Public Health Rep. 2010 Jan-Feb;125(1):141–145. doi: 10.1177/003335491012500119.

- 79. Goldstein MM. The health privacy provisions in the American Recovery and Reinvestment Act of 2009: implications for public health policy and practice. Public Health Rep. 2010 Mar-Apr;125(2):343–349. doi: 10.1177/003335491012500225.

- 80. Lee LM, Gostin LO. Ethical collection, storage, and use of public health data: a proposal for a national privacy protection. JAMA. 2009 Jul 1;302(1):82–84. doi: 10.1001/jama.2009.958.

- 81. Etheredge LM. Creating a high-performance system for comparative effectiveness research. Health Aff (Millwood). 2010 Oct;29(10):1761–1767. doi: 10.1377/hlthaff.2010.0608.