Abstract

Normal aging has been associated with an increased propensity to wait for rewards. When this is tested experimentally, rewards are typically offered at increasing delays. In this setting, persistent responding for delayed rewards in aged rats could reflect either changes in the evaluation of delayed rewards or diminished learning, perhaps due to the loss of subcortical teaching signals induced by changes in reward; the loss or diminution of such teaching signals would result in slower learning with progressive delay of reward, which would appear as persistent responding. Such teaching signals have commonly been reported in phasic firing of midbrain dopamine neurons; however, similar signals have also been found in reward-responsive neurons in the basolateral amygdala (ABL). Unlike dopaminergic teaching signals, those in ABL seem to reflect surprise, increasing when reward is either better or worse than expected. Accordingly, activity is correlated with attentional responses and with the speed of learning after surprising increases or decreases in reward. Here we examined whether these attention-related teaching signals might be altered in normal aging. Young (3–6 months) and aged (22–26 months) male Long–Evans rats were trained on a discounting task used previously to demonstrate these signals. As expected, aged rats were less sensitive to delays, and this change was associated with a loss of attentional changes in orienting behavior and neural activity. These results indicate that normal aging alters teaching signals in the ABL. Changes in these teaching signals may contribute to a host of age-related cognitive changes.

Introduction

Normal aging is associated with a host of cognitive changes, including increased wisdom and patience, reduced impulsivity, perseverance in the face of criticism, and a greater ability to forgo short-term benefits in favor of more distant gratification. Many of these attributes are potentially captured by so-called delay discounting tasks; in these tasks, the subject is given a choice between an immediate, typically smaller reward and a delayed, larger reward (Herrnstein, 1961; Rachlin and Green, 1972; Thaler, 1981; Kahneman and Tversky, 1984; Rodriguez and Logue, 1988; Loewenstein, 1992; Evenden and Ryan, 1996; Green et al., 1996; Richards et al., 1997; Ho et al., 1999; Kalenscher et al., 2005; Kalenscher and Pennartz, 2008). Subjects are normally willing to wait some amount of time for a significantly larger reward. However, subjects' willingness to wait declines as the delay to reward increases, evidenced by their increased selection of the small, immediate reward at longer delays. Recently, it has been shown that normal aging is associated with diminished discounting of, or an increased willingness to wait for, delayed rewards (Green et al., 1996; Petry, 2001a,b; Dixon et al., 2003; Simon et al., 2010).

This diminished discounting has been linked to prefrontal and particularly orbitofrontal cortex. The orbitofrontal cortex is implicated in discounting behavior (Mobini et al., 2002; Winstanley et al., 2004; Rudebeck et al., 2006), and some aged rats show changes in orbitofrontal function in other settings. For example, aged rats are slower to acquire reversals, and slower reversal learning has been linked to changes in processing in the orbitofrontal cortex (Schoenbaum et al., 2002, 2006). Consistent with this idea, we have recently shown that the increased selection of delayed rewards in aged rats is associated with the over-representation, relative to what is observed in young rats, of the delayed reward in the orbitofrontal cortex (Roesch et al., 2012). This relative over-representation suggests that the orbitofrontal cortex may be maintaining stronger and more persistent representations of the delayed reward, thereby driving stronger and more persistent responding for the delayed reward in aged rats.

However, while normal discounting requires the appropriate representation of the delayed reward, it also requires learning, particularly when discounting is assessed across blocks of trials in which the time to the larger reward is progressively increased. In this setting, the normal pattern of behavior (switching to the small, immediate reward) also depends upon the ability of the subject to recognize the reduced value of the delayed reward and learn to avoid it. In the design we have used, this occurs via titration of the delayed reward. In other words, the reward is progressively delayed until the rat begins to avoid it. We have found that this reward titration and changes in reward size drive teaching signals in reward-responsive dopaminergic and amygdala neurons (Roesch et al., 2007, 2010). The loss of one or both of these normal teaching signals might lead to a greater propensity to wait for the delayed reward, secondary to over-representation of delayed outcomes (due to a failure to learn their new, reduced value) in areas involved in discounting, such as the orbitofrontal cortex (Winstanley et al., 2004; Roesch et al., 2006). Here we tested this hypothesis by searching in aged rats for the attentional teaching signal we and others (Belova et al., 2007; Roesch et al., 2010; Li et al., 2011) have previously identified in the basolateral amygdala (ABL) of young animals.

Materials and Methods

Subjects.

Male Long–Evans rats in the aged group (n = 4) were acquired at ∼10 months of age (Charles River Laboratories) and housed for ∼1 year in preparation for the experiment. During this time they were handled weekly. Testing began when they were >22–24 months of age. Young controls (n = 12) were acquired at ∼3 months of age ∼2 weeks before testing (Charles River Laboratories). During this time they were handled daily. Rats were tested at the University of Maryland School of Medicine in accordance with SOM and National Institutes of Health guidelines.

Surgical procedures, inactivation, and histology.

Surgical procedures followed guidelines for aseptic technique. Electrodes were manufactured and implanted as in prior recording experiments. Rats had a drivable bundle of ten 25-μm-diameter FeNiCr wires (Stablohm 675; California Fine Wire) chronically implanted in the left hemisphere dorsal to ABL (n = 7; 3.0 mm posterior to bregma, 5.0 mm laterally, and 7.5 mm ventral to the brain surface). Immediately before implantation, these wires were freshly cut with surgical scissors to extend ∼1 mm beyond the cannula and electroplated with platinum (H2PtCl6; Aldrich) to an impedance of ∼300 kΩ. Cephalexin (15 mg/kg, p.o.) was administered twice daily for 2 weeks postoperatively to prevent infection. At the end of recording, the final electrode position was marked by passing a 15 μA current through each electrode. The rats were then perfused, and their brains were removed and processed for histology using standard techniques.

Behavioral task.

Recording was conducted in aluminum chambers ∼18″ on each side with sloping walls narrowing to an area of 12 × 12″ at the bottom. A central odor port was located above two adjacent fluid wells on a panel in the right wall of each chamber. Two lights were located above the panel. The odor port was connected to an air-flow dilution olfactometer to allow the rapid delivery of olfactory cues. Task control was implemented via computer. Port entry and licking were monitored by disruption of photobeams. Odors were chosen from compounds obtained from International Flavors and Fragrances. The basic design of a trial is illustrated in Figure 1. Trials were signaled by illumination of the panel lights inside the box. When these lights were on, nose poke into the odor port resulted in delivery of the odor cue to a small hemicylinder located behind this opening. One of three different odors was delivered to the port on each trial, in a pseudorandom order. At odor offset, the rat had 3 s to make a response at one of the two fluid wells located below the port. One odor (Verbena Oliffac) instructed the rat to go to the left to get reward, a second odor (Camekol DH) instructed the rat to go to the right to get reward, and a third odor (Cedryl Acet Trubek) indicated that the rat could obtain reward at either well. Odors were presented in a pseudorandom sequence such that the free-choice odor was presented on 7/20 trials and the left/right odors were presented in equal numbers (±1 over 250 trials). In addition, the same odor could be presented on no more than three consecutive trials. Odor identity did not change over the course of the experiment. Once the rats were shaped to perform this basic task, we introduced blocks in which we independently manipulated the size of the reward delivered at a given side or the length of the delay preceding reward delivery. Once the rats were able to maintain accurate responding through these manipulations, we began recording sessions.
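
The sequencing constraints described above (free-choice odor on 7 of every 20 trials, balanced left/right forced-choice odors, and no odor on more than three consecutive trials) can be illustrated with a short sketch. This is not the task-control code used in the study; the 20-trial chunking, the rejection-sampling approach, and the names `make_chunk`, `max_run`, and `odor_sequence` are assumptions made only for illustration.

```python
import random

FREE_PER_CHUNK = 7    # free-choice odor on 7 of every 20 trials (from the text)
CHUNK_SIZE = 20       # assumption: constraints are enforced per 20-trial chunk
MAX_REPEATS = 3       # same odor on no more than three consecutive trials


def make_chunk(extra_left, rng):
    """Return one shuffled 20-trial chunk of odor labels."""
    n_forced = CHUNK_SIZE - FREE_PER_CHUNK           # 13 forced-choice trials
    n_left = n_forced // 2 + (1 if extra_left else 0)
    n_right = n_forced - n_left
    chunk = ["free"] * FREE_PER_CHUNK + ["left"] * n_left + ["right"] * n_right
    rng.shuffle(chunk)
    return chunk


def max_run(seq):
    """Length of the longest run of identical consecutive labels."""
    longest = run = 0
    prev = None
    for label in seq:
        run = run + 1 if label == prev else 1
        prev = label
        longest = max(longest, run)
    return longest


def odor_sequence(n_chunks, seed=None):
    """Build a sequence chunk by chunk, re-shuffling any chunk that would
    create a run longer than MAX_REPEATS (including across chunk borders)."""
    rng = random.Random(seed)
    seq = []
    for i in range(n_chunks):
        while True:
            # Alternate which side gets the odd forced trial to stay within +/-1.
            chunk = make_chunk(extra_left=(i % 2 == 0), rng=rng)
            if max_run(seq[-MAX_REPEATS:] + chunk) <= MAX_REPEATS:
                seq.extend(chunk)
                break
    return seq


if __name__ == "__main__":
    trials = odor_sequence(n_chunks=12, seed=1)
    print(len(trials), trials.count("free"), trials.count("left"), trials.count("right"))
```

Alternating which side receives the odd forced-choice trial keeps the left/right counts within one of each other over any whole number of chunks, which is one simple way to satisfy the balance constraint.
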
For recording, one well was randomly designated as short (500 ms) and the other long (1–7 s) at the start of the session (Fig. 1a, block 1). In the second block of trials these contingencies were switched (Fig. 1a, block 2). The length of the delay under long conditions was set by the following algorithm. The side designated as long started at 1 s and increased by 1 s every time that side was chosen until it reached 3 s. If the rat continued to choose that side, the length of the delay increased by 1 s up to a maximum of 7 s. If the rat chose the side designated as long on fewer than 8 of the last 10 choice trials, the delay was reduced by 1 s, to a minimum of 3 s. The reward delay for long forced-choice trials was yoked to the delay in free-choice trials during these blocks. In later blocks (blocks 3 and 4) we held the delay preceding reward delivery constant (500 ms) while manipulating the size of the expected reward (Fig. 1a). The reward was a 0.05 ml bolus of 10% sucrose solution. For big reward, an additional bolus was delivered after 500 ms. At least 60 trials per block were collected for each neuron. Rats were water deprived (∼30 min of ad libitum water per day) with ad libitum access on weekends.
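
The delay titration rule can be sketched as a small state machine. The text leaves some details open, so the following is one possible reading rather than the actual task code: it assumes the delay is updated once per free-choice trial, that the 8-of-10 criterion is only evaluated once ten free-choice trials have accumulated, and that a decrease takes precedence over an increase on the same trial. The class name `LongDelayTitrator` is hypothetical.

```python
from collections import deque

START_DELAY_S = 1   # long side starts at 1 s
FLOOR_DELAY_S = 3   # delay is never reduced below 3 s once reached
MAX_DELAY_S = 7     # delay never exceeds 7 s
WINDOW = 10         # "last 10 choice trials"
CRITERION = 8       # fewer than 8 long choices in the window lowers the delay


class LongDelayTitrator:
    """Tracks the delay (in seconds) on the well designated as long."""

    def __init__(self):
        self.delay_s = START_DELAY_S
        self.recent = deque(maxlen=WINDOW)   # True where the long well was chosen

    def update(self, chose_long):
        """Register one free-choice trial and return the updated delay."""
        self.recent.append(chose_long)

        # Ramp-up phase: 1 s -> 3 s, one step per choice of the long well.
        if self.delay_s < FLOOR_DELAY_S:
            if chose_long:
                self.delay_s += 1
            return self.delay_s

        # Titration phase (assumed precedence: decrease is checked first).
        window_full = len(self.recent) == WINDOW
        if window_full and sum(self.recent) < CRITERION:
            self.delay_s = max(FLOOR_DELAY_S, self.delay_s - 1)
        elif chose_long:
            self.delay_s = min(MAX_DELAY_S, self.delay_s + 1)
        return self.delay_s


if __name__ == "__main__":
    titrator = LongDelayTitrator()
    persistent = [titrator.update(True) for _ in range(10)]
    print(persistent)   # delay climbs to the 7 s ceiling and stays there
    avoiding = [titrator.update(False) for _ in range(7)]
    print(avoiding)     # delay falls back toward the 3 s floor
```

Under this reading, a rat that keeps choosing the long well drives the delay to the 7 s ceiling, whereas a rat that begins avoiding it lets the delay settle back toward 3 s; persistent responding therefore shows up directly as longer titrated delays.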

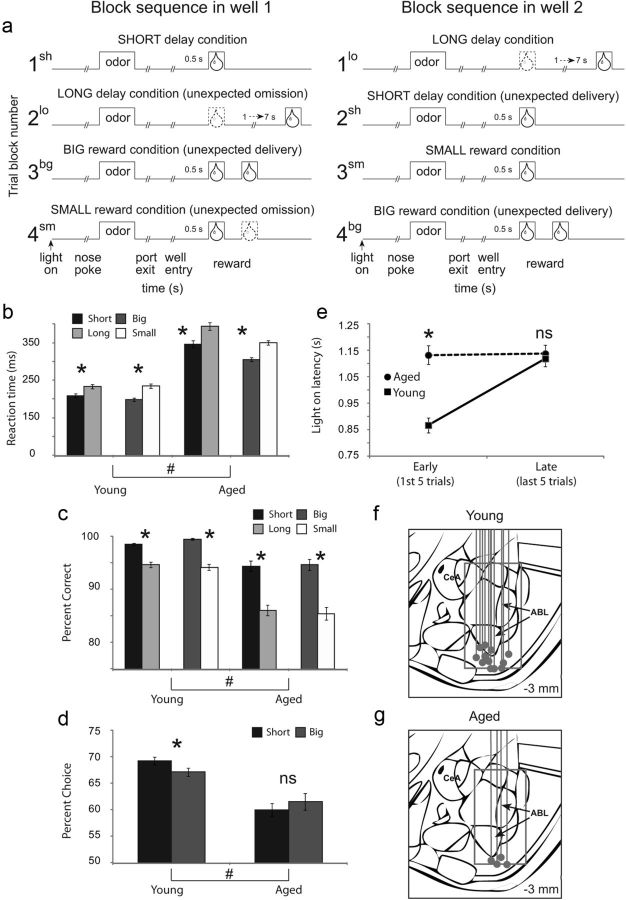

Figure 1.

Task, behavior, and recording sites. a, The sequence of events in each trial block. At the beginning of each recording session, one well was arbitrarily designated as short (a short 500 ms delay before reward) and the other designated as long (a relatively long 1–7 s delay before reward) (block 1). After the first block of trials (∼60 trials), the wells unexpectedly reversed contingencies (block 2). With the transition to block 3, the delays to reward were held constant across wells (500 ms), but the size of the reward was manipulated. The well designated as long during the previous block now offered two fluid boli whereas the opposite well offered one bolus. The reward contingencies again reversed in block 4. b, c, Height of each bar indicates the reaction time (port exit minus odor offset) and percentage correct for short (black), long (light gray), big (dark gray), and small (white) forced-choice trials for young and aged rats. d, The height of each bar indicates the percentage choice of short-delay (black) and big-reward (dark gray) taken over all free-choice trials. e, Latency to nose poke after onset of house lights during the first and last five trials in each block for aged (circles) and young (squares) rats. Asterisks, planned comparisons revealing statistically significant differences (t test, p < 0.05). Error bars indicate SEs. #, main effect of age in ANOVA. f, g, Recording sites for each rat. Gray dot represents the final position of the electrode as verified by histology. Box estimates the extent of the recording over all rats.

Single-unit recording.

Procedures were the same as described previously (Roesch et al., 2012). Wires were screened for activity daily; if no activity was detected, the rat was removed, and the electrode assembly was advanced 40 or 80 μm. Otherwise active wires were selected to be recorded, a session was conducted, and the electrode was advanced at the end of the session. Neural activity was recorded using two identical Plexon Multichannel Acquisition Processor systems, interfaced with odor discrimination training chambers. Signals from the electrode wires were amplified 20× by an op-amp headstage (Plexon HST/8o50-G20-GR), located on the electrode array. Immediately outside the training chamber, the signals were passed through a differential pre-amplifier (Plexon Inc PBX2/16sp-r-G50/16fp-G50), where the single unit signals were amplified 50× and filtered at 150–9000 Hz. The single unit signals were then sent to the Multichannel Acquisition Processor box, where they were further filtered at 250–8000 Hz, digitized at 40 kHz, and amplified at 1–32×. Waveforms (>2.5:1 signal-to-noise) were extracted from active channels and recorded to disk by an associated workstation with event timestamps from the behavior computer.

Data analysis.

Units were sorted using Offline Sorter software from Plexon, using a template-matching algorithm. Sorted files were then processed in Neuroexplorer to extract unit timestamps and relevant event markers. These data were subsequently analyzed in MATLAB (MathWorks). In the current study, we focused on activity during the 1000 ms after reward delivery. Wilcoxon tests were used to measure significant shifts from zero in distribution plots (p < 0.05), and t tests or ANOVAs were used to measure within-cell differences in firing rate (p < 0.05). Pearson χ2 tests (p < 0.05) were used to compare the proportions of neurons.
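
As an illustration of the proportion comparison, the sketch below computes a Pearson χ2 statistic for a 2 × 2 table (responsive vs nonresponsive neurons in two groups). The analyses in this study were run in MATLAB; this Python version, the function name `pearson_chi2_2x2`, the absence of a continuity correction, and the example counts are all assumptions chosen for illustration, not values or settings taken from the study.

```python
import math


def pearson_chi2_2x2(hits_a, n_a, hits_b, n_b):
    """Pearson chi-square test (1 df, no continuity correction) comparing
    the proportions hits_a/n_a and hits_b/n_b.

    Assumes every expected cell count is nonzero.
    """
    observed = [
        [hits_a, n_a - hits_a],
        [hits_b, n_b - hits_b],
    ]
    total = n_a + n_b
    row_totals = [n_a, n_b]
    col_totals = [hits_a + hits_b, total - hits_a - hits_b]

    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed[i][j] - expected) ** 2 / expected

    # With 1 degree of freedom, the upper tail of chi-square equals erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value


if __name__ == "__main__":
    # Hypothetical counts: 30 of 100 neurons in one group, 50 of 100 in the other.
    chi2, p = pearson_chi2_2x2(30, 100, 50, 100)
    print(f"chi2 = {chi2:.2f}, p = {p:.4f}")
```

Whether a continuity correction is applied changes the statistic for small samples, so a reimplementation may not match reported values exactly.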

Results

ABL neurons in aged and young rats were recorded in a choice task, illustrated in Figure 1a. On each trial, rats responded to one of two adjacent wells after sampling an odor at a central port. Rats were trained to respond to three different odor cues: one that signaled reward in the right well (forced-choice), a second that signaled reward in the left well (forced-choice), and a third that signaled reward in either well (free-choice). At the start of different blocks of trials, we manipulated the timing or size of the reward, thereby increasing (Fig. 1a, blocks 2sh, 3bg, and 4bg) or decreasing (Fig. 1a, blocks 2lo and 4sm) its value unexpectedly.

Both groups changed their behavior in response to these value manipulations. A two-factor ANOVA with age (young vs aged) and value manipulation (delay vs size blocks) as factors showed that, on forced-choice trials, both groups were faster (Fig. 1b) and more accurate (Fig. 1c) for cues that predicted high-value reward (main effect of value: F > 22.2; p < 0.0001); however, older rats were generally slower and less accurate overall (main effect of age: F > 98.5; p < 0.0001). Nevertheless, the difference between high and low value was significant for each post hoc comparison (t test; t > 4.45; p < 0.0001). Thus, like young rats, aged rats perceived the differently delayed and sized rewards as having different values. On free-choice trials, however, this analysis showed that aged rats chose the more valued reward less often than young rats (Fig. 1d; F(1,432) = 50.5; p < 0.0001). While there was no main effect of value manipulation (F(1,432) = 0.08; p = 0.77), there was a trend toward an interaction (F(1,432) = 3.02; p = 0.08). This effect is consistent with prior work showing that aged rats tend to be more willing to wait for delayed reward (Green et al., 1996; Petry, 2001a,b; Dixon et al., 2003; Simon et al., 2010).

Notably, performance differences in aged rats were associated with a loss of attentional shifts that we normally observe immediately after changes in reward. These shifts are evident in changes in rats' latency to orient to and approach the odor port at the start of each trial. Speed of orienting to the odor port precedes knowledge of the upcoming reward and thus cannot reflect the value of the upcoming reward; instead this measure, like changes in other measures of orienting (Kaye and Pearce, 1984; Pearce et al., 1988; Swan and Pearce, 1988), may reflect error-driven increases in the processing of trial events (e.g., cues and/or reward). Accordingly, young rats showed faster orienting at the start of blocks when the value of the expected rewards changed, responding significantly faster during early trials (first five trials) compared with later trials (last five trials) (Fig. 1e). This was true after either an upshift or downshift of reward value. In contrast, aged rats showed no significant difference in their speed of orienting immediately after a shift in reward versus later in these blocks (Fig. 1e). This pattern was confirmed by a three-factor ANOVA with shift type (up vs down), learning (early vs late), and age (young vs old) as factors, which revealed significant main effects of age, shift type, and learning, and a significant interaction between age and learning (F > 7.78; p < 0.05). Post hoc t tests demonstrated that young, but not old, rats were significantly faster during early compared with late trials after both upshifts and downshifts (t test; t > 6.02; p < 0.0001). There was no significant interaction between shift and learning or age and shift type (F < 2.68; p > 0.10).

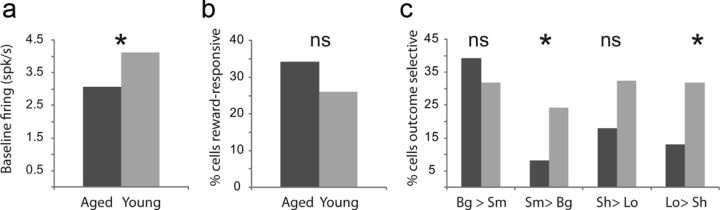

Against this backdrop, we recorded 193 neurons in 4 aged rats and 619 neurons in 12 young rats (Fig. 1f,g). Control data included 335 neurons from 5 rats run concurrently with the aged rats and 284 neurons from 7 rats recorded in a prior study (Roesch et al., 2010). There were no differences in the neural effects reported between the prior and the new controls. We focused our analysis on changes in reward-evoked firing, which we have previously shown correlates with surprise or attentional shifts after upshifts or downshifts in reward (Roesch et al., 2010; Esber et al., 2012). This signal is specifically evident in reward-responsive neurons; thus we began by screening for these cells in young and aged rats, comparing activity during reward to activity at baseline. Firing during baseline (1 s before nose poke) was significantly reduced in aged rats (t test; t = 2.01; p < 0.05; Fig. 2a); however, the number of neurons that increased firing to reward delivery (1 s after reward compared with 1 s before nose poke) was not significantly different between groups (young: 157 neurons (25%); aged: 61 neurons (32%); χ2 = 3.7; p = 0.05; Fig. 2b). Moreover, consistent with reports that ABL encodes value (Nishijo et al., 1988; Schoenbaum et al., 1998; Sugase-Miyamoto and Richmond, 2005; Belova et al., 2007, 2008; Tye et al., 2008; Fontanini et al., 2009), neurons in both groups exhibited significant differential firing (t test; p < 0.05) based on either the timing or size of the reward after learning, although significantly fewer neurons showed reward value effects in aged rats (Fig. 2c). This was particularly evident for low-value outcomes (i.e., long delay and small reward; Fig. 2c).

Figure 2.

Outcome selectivity in ABL is reduced in aged animals. a, Baseline firing as computed during 1 s before nose poke. Baseline firing was reduced in aged ABL (t test; p < 0.05). b, The number of reward-responsive neurons in ABL (higher firing 1 s after reward compared with baseline; t test; p < 0.05) was not significantly different between aged and young rats (χ2 = 3.7; p = 0.05). c, Height of each bar indicates the percentage of neurons that showed a significant effect of delay or size in an ANOVA during the 1 s after reward delivery (p < 0.05). Asterisks reflect significant difference between counts of neurons in each group as measured by χ2 (p < 0.05).

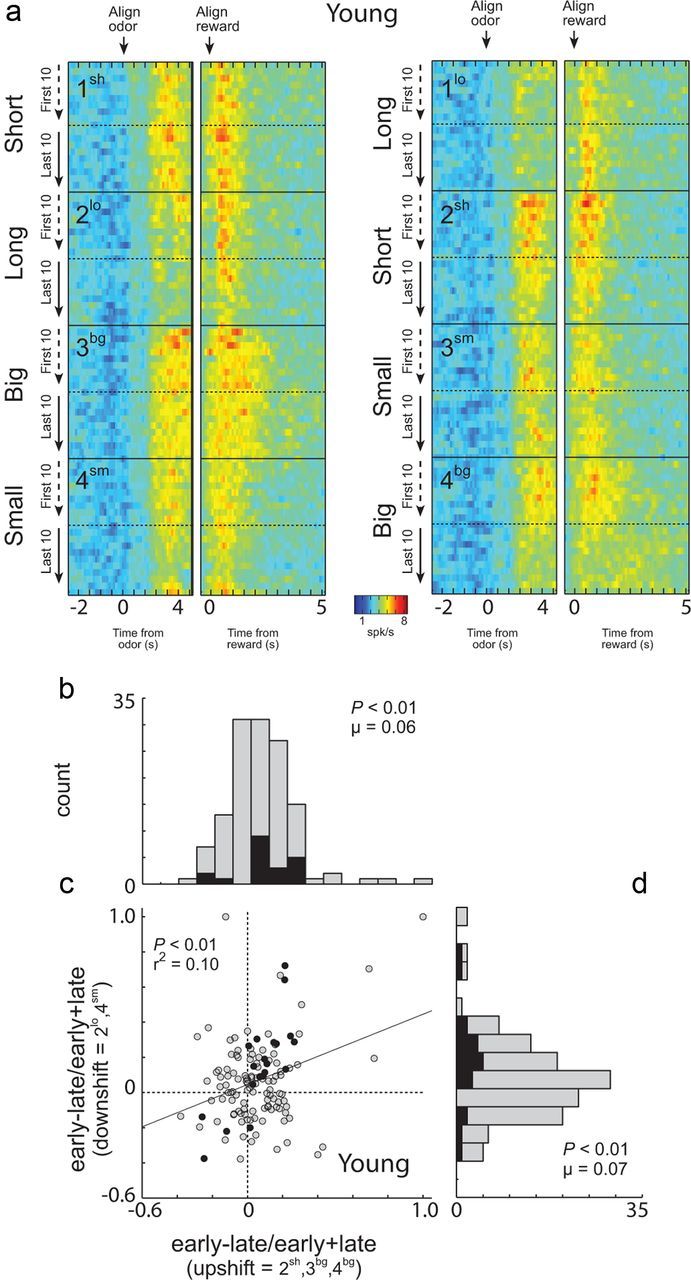

The outcome-selective ABL neurons in young rats also exhibited changes in reward-related firing between the beginning and end of each block of trials, consistent with registration of surprise in response to reward shifts. Differences were apparent when reward was delivered unexpectedly, as at the start of blocks 2sh, 3bg, and 4bg (Fig. 3a). Average reward-evoked activity appears higher at the start of these blocks, when choice performance was poor, than at the end, when performance had improved, even though the actual reward being delivered was the same. At the same time, reward-evoked activity also appears higher at the start of blocks 2lo and 4sm (Fig. 3a), when the value of the reward declined unexpectedly. This impression was confirmed by a two-factor ANOVA comparing activity at the time of reward and reward omission (1 s) in each neuron across learning (early vs late) and shift type (upshift vs downshift). According to this analysis, 16 of the 131 outcome-selective neurons (12%) fired significantly more strongly early in a block, after a change in reward, than later, after learning (ANOVA; p < 0.05; main effect of learning). Only four neurons showed the opposite firing pattern (χ2; p < 0.05).

Figure 3.

Neural activity in ABL of young animals was increased in response to unexpected reward delivery and omission. a, Heat plots showing average activity over all ABL neurons from young animals (n = 131) that showed a significant effect of delay or size in the ANOVA during the 1 s after reward delivery. Activity over the course of the trials is plotted during the first and last 20 (10 per direction) trials in each training block (Fig. 1a; blocks 1–4). Activity is shown, aligned on odor onset (“align odor”) and reward delivery (“align reward”). Blocks 1–4 are shown in the order performed (top to bottom). Thus, during block 1, rats responded after a “long” delay or a “short” delay to receive reward (actual starting direction, left or right, was counterbalanced in each block and is collapsed here). In block 2, the locations of the short delay and long delay were reversed. In blocks 3 and 4, delays were held constant, but the size of the reward (“big” or “small”) varied. Line display between heat plots shows the rats' behavior on free-choice trials that were interleaved within the forced-choice trials. Value of 50% means that rats responded the same to both wells. b–d, Distribution of indices representing the difference in firing in individual neurons to reward delivery (b) or reward omission (d) early versus late in the relevant trial blocks. Correlation between indices shown in b and d is shown in c. Filled bars (b, d) and dots (c) indicate the values of neurons that showed a significant main effect (p < 0.05) of learning (early vs late) with no interaction in the two-factor ANOVA described in the text.

These effects were also apparent across the population as quantified in the distribution plots showing the contrast in activity (early vs late) for each neuron in response to upshift (Fig. 3b) or downshift in reward (Fig. 3d). These distributions were shifted significantly above zero, indicating higher maximal firing immediately after a change in reward than later after learning (Fig. 3b, Wilcoxon, p < 0.01, μ = 0.06; Fig. 3d, Wilcoxon, p < 0.01, μ = 0.07). The two distributions did not differ significantly (Wilcoxon; p = 0.57) and, in fact, were positively correlated (p < 0.01; r2 = 0.10; Fig. 3c), indicating that the neurons that tended to increase their firing at the start of upshift blocks also increased their firing at the start of downshift blocks.
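
To make the population analysis concrete, the sketch below computes a per-neuron early-versus-late index for upshifts and downshifts and then the correlation between the two sets of indices. The exact index formula is not given in the text; a normalized contrast, (early − late)/(early + late), is assumed here purely for illustration, and the firing rates are invented. The helper names `contrast_index` and `pearson_r` are hypothetical.

```python
from statistics import mean


def contrast_index(early_rate, late_rate):
    """Assumed index: (early - late) / (early + late); 0.0 for a silent neuron."""
    total = early_rate + late_rate
    if total == 0:
        return 0.0
    return (early_rate - late_rate) / total


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


if __name__ == "__main__":
    # Invented reward-evoked rates (spikes/s) for three neurons.
    up_early, up_late = [6.0, 5.0, 8.0], [4.0, 5.0, 6.0]
    down_early, down_late = [3.0, 2.0, 4.5], [2.0, 2.0, 3.5]

    up_idx = [contrast_index(e, l) for e, l in zip(up_early, up_late)]
    down_idx = [contrast_index(e, l) for e, l in zip(down_early, down_late)]

    print("mean upshift index:", round(mean(up_idx), 3))
    print("mean downshift index:", round(mean(down_idx), 3))
    print("r^2 between indices:", round(pearson_r(up_idx, down_idx) ** 2, 3))
```

A distribution of such indices centered above zero corresponds to stronger firing early in a block than late, and a positive correlation between upshift and downshift indices corresponds to the same neurons responding to both kinds of surprise.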

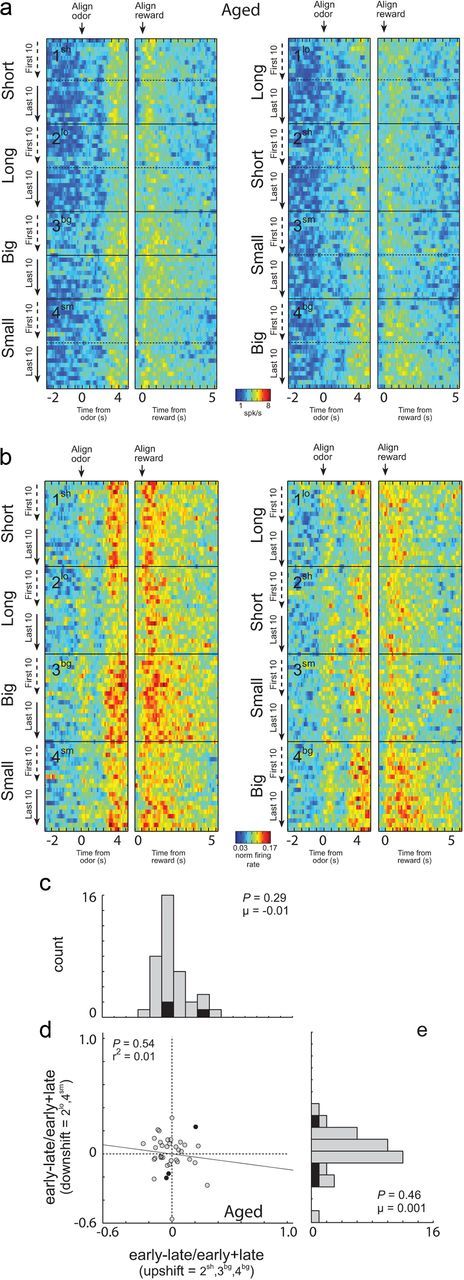

These effects were entirely absent in outcome-selective neurons (n = 72) recorded in aged rats. Average activity appeared similar at the start and the end of blocks in which reward was changed, in both the raw and normalized data (Fig. 4a,b); the distributions comparing firing early versus late in the relevant blocks were centered on zero for both upshifts and downshifts (Fig. 4c,e; Wilcoxon, p > 0.2); and there was no relationship between changes in firing for individual neurons in response to upshifts and downshifts (Fig. 4d; p = 0.54; r2 = 0.01).

Figure 4.

Neural activity in ABL of aged animals was not increased in response to unexpected reward delivery and omission. a, b, Heat plots showing average raw (a) and normalized (b) activity over all ABL neurons from aged animals (n = 72) that showed a significant effect of delay or size in the ANOVA during the 1 s after reward delivery. Contingencies are the same as in Figure 3a. Data normalized by dividing firing rate by the maximal firing during the trial. c–e, Distribution of indices representing the difference in firing in individual neurons to reward delivery (c) or reward omission (e) early versus late in the relevant trial blocks. Correlation between indices shown in c and e is shown in d. Filled bars (c, e) and dots (d) indicate the values of neurons that showed a significant main effect (p < 0.05) of learning (early vs late) with no interaction in the two-factor ANOVA described in the text.

Discussion

Normal aging is associated with changes in the relative weight given to immediate versus more remote or delayed rewards. These effects are evident experimentally as diminished discounting of or an increased willingness to wait for delayed rewards (Green et al., 1996; Petry, 2001a,b; Dixon et al., 2003; Simon et al., 2010). While such changes have typically been associated with prefrontal—and particularly orbitofrontal—changes (Roesch et al., 2012), the apparent prefrontal changes could be secondary to age-related shifts in teaching mechanisms in other structures, such as the midbrain dopamine system or amygdala. This is particularly true when discounting is run in a blocked design in which reward is progressively delayed. In this setting, the normal pattern of behavior depends upon preserved learning mechanisms to update the declining value of the delayed reward. Viewed from this perspective, encoding in an area like orbitofrontal cortex, which we have recently shown to over-represent delayed rewards in aged rats (Roesch et al., 2012), might be abnormal because of primary changes in how this region represents associative information, or it might be abnormal because afferent regions are not propagating the appropriate teaching signals when rewards are delayed (i.e., omitted or delivered at unexpected times) in these settings. The distinction is potentially critical because under the former hypothesis the orbitofrontal cortex is functioning differently in aged subjects, whereas under the latter hypothesis, the functional changes may reside elsewhere.

Here we found evidence consistent with the latter hypothesis. Specifically, normal aging was associated with an increased propensity to wait for delayed reward and also with a weakening of the normal effect of larger rewards on choice behavior. These behavioral changes were associated with a loss of attentional orienting responses, which we have previously shown to be ABL dependent (Roesch et al., 2010), and attention-related teaching signals in ABL, which we have previously found to be associated with facilitated learning in this task (Roesch et al., 2010). Notably, we did not find a general loss of phasic firing to reward. Thus aging does not seem to cause overt loss of associative or reward-related signaling in ABL; rather its effects are more selective. This is relevant, since frank lesions of ABL have been shown to reduce responding for delayed reward (Winstanley et al., 2004), which would be the opposite of the age-related behavioral effect observed here and in earlier studies.

These results suggest that persistent responding to and encoding of delayed rewards in prefrontal regions could be secondary to altered modulation of learning rate due to an inability to appropriately signal errors in the prediction of reward. They also suggest the need for a re-evaluation of other age-related cognitive changes that have heretofore been ascribed to prefrontal dysfunction. As with discounting, in many of these cases the procedures used confound associative representation and decision making with the need to recognize and learn from shifts in rewards (or punishments including reward omission). For example, normal aging has been associated with slower reversal learning (Barense et al., 2002; Lamar and Resnick, 2004; Brushfield et al., 2008), and this alteration has been linked to changes in processing in the orbitofrontal cortex (Schoenbaum et al., 2002, 2006). Yet reversal performance obviously depends on an underlying ability to recognize and learn rapidly from shifts in rewards. Normal aging has also been associated with poor performance in working memory tasks and in the ability to acquire discriminations using previously irrelevant stimuli (Zyzak et al., 1995; Rypma and D'Esposito, 2000; Barense et al., 2002; Lamar et al., 2004; Gazzaley et al., 2005; Wang et al., 2011), again changes that could reflect slower learning just as well as changes in the ultimate representation and use of associative information. Viewed in light of the current data, it is reasonable to ask whether these age-related shifts in behavior truly reflect primary changes in the representation of associative information in the relevant prefrontal regions or whether they too may derive, at least in part, from changes in these subcortical teaching signals.

With regard to orbitofrontal function specifically, we have failed to find effects of normal aging on reinforcer devaluation (Singh et al., 2011). This result was surprising, since devaluation is closely associated with orbitofrontal function (Gallagher et al., 1999; Gottfried et al., 2003; Izquierdo et al., 2004). However, the change in conditioned responding after devaluation is not particularly dependent on subtle learning effects, since animals intentionally receive significant training on the fundamental underlying associations. Indeed, the critical probe test is designed to directly assess the use of the previously acquired and presumably stable associative representations without any interference from new learning (Holland and Rescorla, 1975), and the orbitofrontal cortex has been repeatedly shown as necessary in this probe test (Pickens et al., 2003, 2005; West et al., 2011). Aged rats failed to show any evidence of a deficit in this task, suggesting they do not lack the ability to represent and use associative information and favoring changes in teaching signals as an explanation for the aforementioned deficits.

Of course subcortical teaching signals depend, in turn, on afferent input from other regions. Most immediately, we have reported recently that the attention-related signal demonstrated here in amygdala is disrupted by 6-OHDA lesions (Esber et al., 2012). Thus changes in the signal identified here may reflect changes in dopaminergic error signals with aging (Samanez-Larkin et al., 2011). And of course these error signals require input regarding the expected and actual rewards. Any changes in these inputs would be expected to modulate these signals. This includes input from orbitofrontal cortex (Takahashi et al., 2011), which appears to contribute to the accuracy of the reward expectancies upon which the dopaminergic error signal depends. As noted earlier, we have found heightened representation of delayed rewards in this task in orbitofrontal cortex of aged rats (Roesch et al., 2012). Based on our prior results (Takahashi et al., 2011), this heightened firing should result in larger rather than smaller error signals. One possible explanation for our observation of smaller teaching signals here, in light of the earlier study, is that aging disrupted the normal interactions between prefrontal regions and the subcortical error signaling systems. Such a selective effect on prefrontal contributions to learning would be consistent with evidence, discussed earlier, indicating that aging has its most profound effects on prefrontal-dependent tasks that require learning. It would also be consistent with the self-evident observation that normal aging is unlikely to be well modeled by lesions or other point effects; rather it is a circuit phenomenon and thus likely to affect functions of circuits rather than discrete areas. Changes in the ability of prefrontal information to access subcortical teaching mechanisms would represent just such a circuit-level effect.

Footnotes

This work was supported by grants from the National Institute on Aging (G.S.) and the National Institute on Drug Abuse (M.R.R.). This article was prepared in part while G.S. was employed at the University of Maryland, Baltimore. The opinions expressed in this article are the author's own and do not reflect the view of the National Institutes of Health, the Department of Health and Human Services, or the United States government.

References

- Barense MD, Fox MT, Baxter MG. Aged rats are impaired on an attentional set-shifting task sensitive to medial frontal cortex damage in young rats. Learn Mem. 2002;9:191–201. doi: 10.1101/lm.48602.

- Belova MA, Paton JJ, Morrison SE, Salzman CD. Expectation modulates neural responses to pleasant and aversive stimuli in primate amygdala. Neuron. 2007;55:970–984. doi: 10.1016/j.neuron.2007.08.004.

- Belova MA, Paton JJ, Salzman CD. Moment-to-moment tracking of state value in the amygdala. J Neurosci. 2008;28:10023–10030. doi: 10.1523/JNEUROSCI.1400-08.2008.

- Brushfield AM, Luu TT, Callahan BD, Gilbert PE. A comparison of discrimination and reversal learning for olfactory and visual stimuli in aged rats. Behav Neurosci. 2008;122:54–62. doi: 10.1037/0735-7044.122.1.54.

- Dixon MR, Marley J, Jacobs EA. Delay discounting by pathological gamblers. J Appl Behav Anal. 2003;36:449–458. doi: 10.1901/jaba.2003.36-449.

- Esber GR, Roesch MR, Bali S, Trageser J, Bissonette GB, Puche AC, Holland PC, Schoenbaum G. Attention-related Pearce-Kaye-Hall signals in basolateral amygdala require the midbrain dopamine system. Biol Psychiatry. 2012, in press. doi: 10.1016/j.biopsych.2012.05.023.

- Evenden JL, Ryan CN. The pharmacology of impulsive behaviour in rats: the effects of drugs on response choice with varying delays of reinforcement. Psychopharmacology (Berl) 1996;128:161–170. doi: 10.1007/s002130050121.

- Fontanini A, Grossman SE, Figueroa JA, Katz DB. Distinct subtypes of basolateral amygdala neurons reflect palatability and reward. J Neurosci. 2009;29:2486–2495. doi: 10.1523/JNEUROSCI.3898-08.2009.

- Gallagher M, McMahan RW, Schoenbaum G. Orbitofrontal cortex and representation of incentive value in associative learning. J Neurosci. 1999;19:6610–6614. doi: 10.1523/JNEUROSCI.19-15-06610.1999.

- Gazzaley A, Cooney JW, Rissman J, D'Esposito M. Top-down suppression deficit underlies working memory impairment in normal aging. Nat Neurosci. 2005;8:1298–1300. doi: 10.1038/nn1543.

- Gottfried JA, O'Doherty J, Dolan RJ. Encoding predictive reward value in human amygdala and orbitofrontal cortex. Science. 2003;301:1104–1107. doi: 10.1126/science.1087919.

- Green L, Myerson J, Lichtman D, Rosen S, Fry A. Temporal discounting in choice between delayed rewards: the role of age and income. Psychol Aging. 1996;11:79–84. doi: 10.1037//0882-7974.11.1.79.

- Herrnstein RJ. Relative and absolute strength of response as a function of frequency of reinforcement. J Exp Anal Behav. 1961;4:267–272. doi: 10.1901/jeab.1961.4-267.

- Ho MY, Mobini S, Chiang TJ, Bradshaw CM, Szabadi E. Theory and method in the quantitative analysis of “impulsive choice” behaviour: implications for psychopharmacology. Psychopharmacology (Berl) 1999;146:362–372. doi: 10.1007/pl00005482.

- Holland PC, Rescorla RA. The effects of two ways of devaluing the unconditioned stimulus after first and second-order appetitive conditioning. J Exp Psychol Anim Behav Process. 1975;1:355–363. doi: 10.1037//0097-7403.1.4.355.

- Izquierdo A, Suda RK, Murray EA. Bilateral orbital prefrontal cortex lesions in rhesus monkeys disrupt choices guided by both reward value and reward contingency. J Neurosci. 2004;24:7540–7548. doi: 10.1523/JNEUROSCI.1921-04.2004.

- Kahneman D, Tversky A. Choices, values, and frames. Am Psychol. 1984;39:341–350.

- Kalenscher T, Pennartz CM. Is a bird in the hand worth two in the future? The neuroeconomics of intertemporal decision-making. Prog Neurobiol. 2008;84:284–315. doi: 10.1016/j.pneurobio.2007.11.004.

- Kalenscher T, Windmann S, Diekamp B, Rose J, Güntürkün O, Colombo M. Single units in the pigeon brain integrate reward amount and time-to-reward in an impulsive choice task. Curr Biol. 2005;15:594–602. doi: 10.1016/j.cub.2005.02.052.

- Kaye H, Pearce JM. The strength of the orienting response during Pavlovian conditioning. J Exp Psychol Anim Behav Process. 1984;10:90–109.

- Lamar M, Resnick SM. Aging and prefrontal functions: dissociating orbitofrontal and dorsolateral abilities. Neurobiol Aging. 2004;25:553–558. doi: 10.1016/j.neurobiolaging.2003.06.005.

- Lamar M, Yousem DM, Resnick SM. Age differences in orbitofrontal activation: an fMRI investigation of delayed match and nonmatch to sample. Neuroimage. 2004;21:1368–1376. doi: 10.1016/j.neuroimage.2003.11.018.

- Li J, Schiller D, Schoenbaum G, Phelps EA, Daw ND. Differential roles of human striatum and amygdala in associative learning. Nat Neurosci. 2011;14:1250–1252. doi: 10.1038/nn.2904.

- Loewenstein G, Elster J. Choice over time. New York: Russell Sage Foundation; 1992.

- Mobini S, Body S, Ho MY, Bradshaw CM, Szabadi E, Deakin JF, Anderson IM. Effects of lesions of the orbitofrontal cortex on sensitivity to delayed and probabilistic reinforcement. Psychopharmacology. 2002;160:290–298. doi: 10.1007/s00213-001-0983-0.

- Nishijo H, Ono T, Nishino H. Single neuron responses in alert monkey during complex sensory stimulation with affective significance. J Neurosci. 1988;8:3570–3583. doi: 10.1523/JNEUROSCI.08-10-03570.1988.

- Pearce JM, Wilson P, Kaye H. The influence of predictive accuracy on serial conditioning in the rat. Q J Exp Psychol. 1988;40B:181–198.

- Petry NM. Delay discounting of money and alcohol in actively using alcoholics, currently abstinent alcoholics, and controls. Psychopharmacology (Berl) 2001a;154:243–250. doi: 10.1007/s002130000638.

- Petry NM. Pathological gamblers, with and without substance use disorders, discount delayed rewards at high rates. J Abnorm Psychol. 2001b;110:482–487. doi: 10.1037//0021-843x.110.3.482.

- Pickens CL, Saddoris MP, Setlow B, Gallagher M, Holland PC, Schoenbaum G. Different roles for orbitofrontal cortex and basolateral amygdala in a reinforcer devaluation task. J Neurosci. 2003;23:11078–11084. doi: 10.1523/JNEUROSCI.23-35-11078.2003.

- Pickens CL, Saddoris MP, Gallagher M, Holland PC. Orbitofrontal lesions impair use of cue-outcome associations in a devaluation task. Behav Neurosci. 2005;119:317–322. doi: 10.1037/0735-7044.119.1.317.

- Rachlin H, Green L. Commitment, choice and self-control. J Exp Anal Behav. 1972;17:15–22. doi: 10.1901/jeab.1972.17-15.

- Richards JB, Mitchell SH, de Wit H, Seiden LS. Determination of discount functions in rats with an adjusting-amount procedure. J Exp Anal Behav. 1997;67:353–366. doi: 10.1901/jeab.1997.67-353.

- Rodriguez ML, Logue AW. Adjusting delay to reinforcement: comparing choice in pigeons and humans. J Exp Psychol Anim Behav Process. 1988;14:105–117.

- Roesch MR, Taylor AR, Schoenbaum G. Encoding of time-discounted rewards in orbitofrontal cortex is independent of value representation. Neuron. 2006;51:509–520. doi: 10.1016/j.neuron.2006.06.027.

- Roesch MR, Calu DJ, Schoenbaum G. Dopamine neurons encode the better option in rats deciding between differently delayed or sized rewards. Nat Neurosci. 2007;10:1615–1624. doi: 10.1038/nn2013.

- Roesch MR, Calu DJ, Esber GR, Schoenbaum G. Neural correlates of variations in event processing during learning in basolateral amygdala. J Neurosci. 2010;30:2464–2471. doi: 10.1523/JNEUROSCI.5781-09.2010.

- Roesch MR, Bryden DW, Cerri DH, Haney ZR, Schoenbaum G. Willingness to wait and altered encoding of time-discounted reward in the orbitofrontal cortex with normal aging. J Neurosci. 2012;32:5525–5533. doi: 10.1523/JNEUROSCI.0586-12.2012.

- Rudebeck PH, Walton ME, Smyth AN, Bannerman DM, Rushworth MF. Separate neural pathways process different decision costs. Nat Neurosci. 2006;9:1161–1168. doi: 10.1038/nn1756.

- Rypma B, D'Esposito M. Isolating the neural mechanisms of age-related changes in human working memory. Nat Neurosci. 2000;3:509–515. doi: 10.1038/74889.

- Samanez-Larkin GR, Mata R, Radu PT, Ballard IC, Carstensen LL, McClure SM. Age differences in striatal delay sensitivity during intertemporal choice in healthy adults. Front Neurosci. 2011;5:126. doi: 10.3389/fnins.2011.00126.

- Schoenbaum G, Chiba AA, Gallagher M. Orbitofrontal cortex and basolateral amygdala encode expected outcomes during learning. Nat Neurosci. 1998;1:155–159. doi: 10.1038/407.

- Schoenbaum G, Nugent S, Saddoris MP, Gallagher M. Teaching old rats new tricks: age-related impairments in olfactory reversal learning. Neurobiol Aging. 2002;23:555–564. doi: 10.1016/s0197-4580(01)00343-8.

- Schoenbaum G, Setlow B, Saddoris MP, Gallagher M. Encoding changes in orbitofrontal cortex in reversal-impaired aged rats. J Neurophysiol. 2006;95:1509–1517. doi: 10.1152/jn.01052.2005.

- Simon NW, LaSarge CL, Montgomery KS, Williams MT, Mendez IA, Setlow B, Bizon JL. Good things come to those who wait: attenuated discounting of delayed rewards in aged Fischer 344 rats. Neurobiol Aging. 2010;31:853–862. doi: 10.1016/j.neurobiolaging.2008.06.004.

- Singh T, Jones JL, McDannald MA, Haney RZ, Cerri DH, Schoenbaum G. Normal aging does not impair orbitofrontal-dependent reinforcer devaluation effects. Front Aging Neurosci. 2011;3:4. doi: 10.3389/fnagi.2011.00004.

- Sugase-Miyamoto Y, Richmond BJ. Neuronal signals in the monkey basolateral amygdala during reward schedules. J Neurosci. 2005;25:11071–11083. doi: 10.1523/JNEUROSCI.1796-05.2005.

- Swan JA, Pearce JM. The orienting response as an index of stimulus associability in rats. J Exp Psychol Anim Behav Process. 1988;14:292–301.

- Takahashi YK, Roesch MR, Wilson RC, Toreson K, O'Donnell P, Niv Y, Schoenbaum G. Expectancy-related changes in firing of dopamine neurons depend on orbitofrontal cortex. Nat Neurosci. 2011;14:1590–1597. doi: 10.1038/nn.2957.

- Thaler R. Some empirical evidence on dynamic inconsistency. Econ Lett. 1981;8:201–207.

- Tye KM, Stuber GD, de Ridder B, Bonci A, Janak PH. Rapid strengthening of thalamo-amygdala synapses mediates cue-reward learning. Nature. 2008;453:1253–1257. doi: 10.1038/nature06963.

- Wang M, Gamo NJ, Yang Y, Jin LE, Wang XJ, Laubach M, Mazer JA, Lee D, Arnsten AF. Neuronal basis of age-related working memory decline. Nature. 2011;476:210–213. doi: 10.1038/nature10243.

- West EA, DesJardin JT, Gale K, Malkova L. Transient inactivation of orbitofrontal cortex blocks reinforcer devaluation in macaques. J Neurosci. 2011;31:15128–15135. doi: 10.1523/JNEUROSCI.3295-11.2011.

- Winstanley CA, Theobald DE, Cardinal RN, Robbins TW. Contrasting roles of basolateral amygdala and orbitofrontal cortex in impulsive choice. J Neurosci. 2004;24:4718–4722. doi: 10.1523/JNEUROSCI.5606-03.2004.

- Zyzak DR, Otto T, Eichenbaum H, Gallagher M. Cognitive decline associated with normal aging in rats: a neuropsychological approach. Learn Mem. 1995;2:1–16. doi: 10.1101/lm.2.1.1.