SYNOPSIS

Objective

We compared the quality of data reported to the Centers for Disease Control and Prevention (CDC) from sites that received funding for acute viral hepatitis surveillance through CDC’s Emerging Infections Program (EIP) with sites that have electronic infrastructure to collect data but do not receive funding from CDC to support viral hepatitis surveillance.

Methods

Descriptive analysis was conducted on acute hepatitis A, B, and C cases reported from EIP sites and National Electronic Disease Surveillance System (NEDSS)-based states (NBS) sites from 2005 to 2007. Data were compared for (1) completeness of demographic and risk behavior/exposure information; (2) adherence to CDC/Council of State and Territorial Epidemiologists (CSTE) case definition for confirmed cases of acute hepatitis A, B, and C; and (3) timeliness of reporting to the health department.

Results

Data reported for sex and age were at least 98% complete for both EIP and NBS sites, and race/ethnicity data were more complete for EIP sites. For acute hepatitis A, B, and C, case reports from EIP sites were more likely than those from NBS sites to include a “yes” response to at least one risk behavior/exposure variable and were more likely to meet the CDC/CSTE case definition. EIP sites received case reports in a more timely fashion than did NBS sites. The case definition for acute hepatitis C proved problematic for both EIP and NBS sites.

Conclusions

Data from the EIP sites were more complete and reported in a more timely way to health departments than data from the NBS sites. Funding for follow-up activities is essential to providing surveillance data of higher quality for decision-making and public health response.

One of the main objectives of national hepatitis surveillance is to measure the burden of disease in the population. Since 1966, the Centers for Disease Control and Prevention (CDC) has monitored viral hepatitis through the National Notifiable Diseases Surveillance System (NNDSS).1 This system enables all states to collect and report data on demographics, laboratory results, clinical findings, and exposure history for cases of acute viral hepatitis identified in their jurisdictions. However, because data needs shift over time, the attributes of surveillance systems should be periodically evaluated to ensure the best use of scarce public health resources.2

Surveillance for viral hepatitis is critical, not only to measure the burden of disease, but also to identify and control outbreaks and to facilitate targeted vaccination and other prevention initiatives. Various aspects of viral hepatitis pose unique challenges to conducting population-based surveillance, including the absence of signs and symptoms in many infections, the large number of positive laboratory results indicating potential infections, and the complexity of the viruses that cause viral hepatitis. Hepatitis A virus (HAV), hepatitis B virus (HBV), and hepatitis C virus (HCV) cause most viral hepatitis infections in the United States; however, these viruses have differing modes of transmission, necessitating distinct prevention strategies. In 2008, more than 8,000 cases of acute viral hepatitis A, B, and C were reported to CDC; however, CDC estimates that more than 30,000 people were newly infected.3 Infection with HBV and HCV can progress to chronic infection, leading to substantial morbidity and mortality. Although acute infection can result in clinical disease and death, most severe health outcomes are associated with persistent or chronic infection. Because people infected with HBV or HCV are typically asymptomatic, chronic infection can persist undetected for decades before causing life-threatening liver disease, including cirrhosis and liver cancer.

CDC funds select sites for viral hepatitis surveillance through its Emerging Infections Program (EIP), a network comprising state health departments, academic institutions, local health departments, and other federal agencies.4 Since 2004, EIP sites have been conducting enhanced population-based surveillance for acute infection with HAV, HBV, and HCV. Surveillance efforts include follow-up on cases and outbreak investigations to collect basic clinical and demographic information and information regarding risk behaviors and exposures.5 Sites submitted a cumulative dataset of cases without personal identifying information to CDC’s Division of Viral Hepatitis (DVH) on a monthly basis via a secure file transfer protocol site.

In 2003, CDC developed the National Electronic Disease Surveillance System (NEDSS), a passive, patient-centric surveillance system built on an Internet-based infrastructure for exchanging public health surveillance data according to specific data standards.6 By 2007, 15 states were using NEDSS to conduct viral hepatitis surveillance. Although none of these NEDSS-based states (NBS) received viral hepatitis surveillance funding from CDC, NBS sites voluntarily transmitted case reports to CDC weekly via NNDSS, which can receive the same information as the EIP-funded hepatitis surveillance sites. EIP and NBS sites use identical case reporting forms, which allow for the collection of demographic data, clinical information, laboratory information, and risk exposures.

The federal government has provided few resources—in the form of guidance, funding, and oversight—to local and state health departments to perform surveillance for viral hepatitis.7 To optimize use of available funding and resources, the adequacy of existing viral hepatitis surveillance mechanisms must be evaluated. This article assesses and compares CDC EIP-funded and non-funded surveillance mechanisms, with a focus on three core attributes of viral hepatitis surveillance: (1) completeness of demographic data (i.e., sex, age, and race/ethnicity) and risk behavior/exposure information; (2) adherence to the CDC/Council of State and Territorial Epidemiologists (CSTE) case definition for a confirmed case of acute hepatitis A, B, and C; and (3) timeliness of reporting to the health department.

METHODS

We selected laboratory-confirmed cases of acute hepatitis A, B, and C reported from six EIP demonstration sites (n=52,273 cases reported from Colorado, Connecticut, Minnesota, New York City, 34 counties in New York State, and Oregon) and 15 NBS sites (n=56,314 cases reported from Alabama, Idaho, Maine, Maryland, Montana, Nebraska, Nevada, New Mexico, Rhode Island, South Carolina, Tennessee, Texas, Vermont, Virginia, and Wyoming), all of which had collected at least one full year of data during 2005–2007. To measure the strength of association between surveillance program (EIP vs. NBS) and data quality, we calculated and tested ratio estimates for the completeness of demographic and risk information and for the proportion of cases meeting the case definition. P-values <0.01 were considered statistically significant. All analyses were conducted using SAS® (version 9.2).8
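The article does not state which method was used to compute the ratio estimates, their 95% CIs, or the p-values. Purely as an illustration, the sketch below assumes a ratio of two independent proportions (EIP proportion divided by NBS proportion) with a Wald confidence interval on the log scale (the Katz method) and a normal-approximation test of the null hypothesis that the ratio equals 1. The function name and the counts in the usage note are hypothetical.

```python
import math


def ratio_estimate(x1, n1, x0, n0, z_crit=1.959964):
    """Ratio of two proportions, (x1/n1) / (x0/n0), with a log-scale Wald 95% CI.

    Assumption: the Katz log method is used here for illustration only; the
    source does not name its method. Requires x1 > 0 and x0 > 0.
    """
    if x1 <= 0 or x0 <= 0:
        raise ValueError("both groups need at least one event")
    p1, p0 = x1 / n1, x0 / n0
    rr = p1 / p0
    # Standard error of ln(ratio) for two independent binomial proportions
    se = math.sqrt(1 / x1 - 1 / n1 + 1 / x0 - 1 / n0)
    lower = math.exp(math.log(rr) - z_crit * se)
    upper = math.exp(math.log(rr) + z_crit * se)
    # Two-sided p-value for H0: ratio = 1, from the normal approximation
    z = math.log(rr) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rr, (lower, upper), p_value
```

For example, with hypothetical counts of 897 of 1,000 EIP reports and 684 of 1,000 NBS reports having complete race/ethnicity data, `ratio_estimate(897, 1000, 684, 1000)` gives a ratio of about 1.31 with a p-value well below the 0.01 threshold.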

Completeness of demographic and risk information

We assessed completeness of reporting for sex, age, and race/ethnicity by calculating the percentage of reported cases with a valid response for these variables. Responses for sex were considered valid if either “male” or “female” was indicated on the reporting form. For age, responses that included a date of birth were considered valid. For race/ethnicity, responses were valid only if “yes” was marked on the reporting form for American Indian/Alaska Native, black, white, Asian/Pacific Islander, other race, or Hispanic ethnicity. Any other response for these demographic variables (e.g., unknown or missing) was not counted as valid.
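The validity rules above can be expressed as simple predicates over a case record. This is a minimal sketch assuming each case report is a dictionary with hypothetical field names (the actual layout of the case report form is not given in the source); unknown or missing responses stay in the denominator and count as incomplete.

```python
from typing import Callable

# Hypothetical field names for the race/ethnicity check boxes
RACE_ETHNICITY_FIELDS = (
    "american_indian_alaska_native", "black", "white",
    "asian_pacific_islander", "other_race", "hispanic",
)


def sex_is_valid(case: dict) -> bool:
    # Valid only if "male" or "female" was indicated
    return case.get("sex") in ("male", "female")


def age_is_valid(case: dict) -> bool:
    # Valid if a date of birth was recorded
    return case.get("date_of_birth") is not None


def race_ethnicity_is_valid(case: dict) -> bool:
    # Valid only if "yes" was marked for at least one race/ethnicity category
    return any(case.get(f) == "yes" for f in RACE_ETHNICITY_FIELDS)


def percent_complete(cases: list, is_valid: Callable[[dict], bool]) -> float:
    """Percentage of all reported cases with a valid response."""
    if not cases:
        raise ValueError("no cases supplied")
    return 100.0 * sum(1 for c in cases if is_valid(c)) / len(cases)
```

Keeping every reported case in the denominator reflects the description above, in which completeness is the percentage of reported cases with a valid response.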

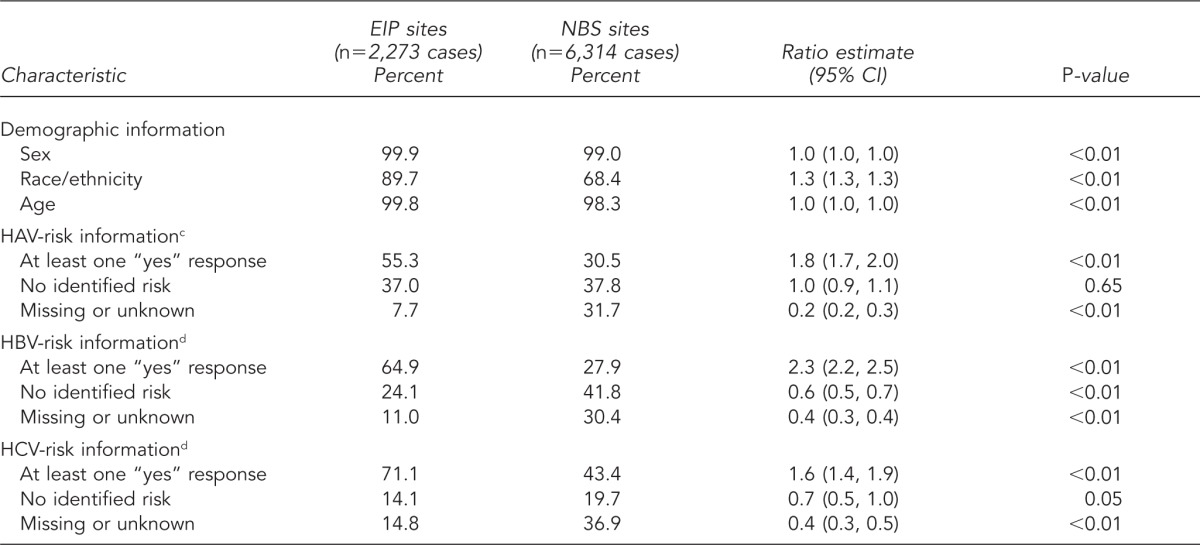

Completeness of reporting for risk behavior/exposure information (Table 1) was classified in one of three ways: (1) case reports indicating at least one risk behavior/exposure (i.e., at least one “yes” response to a risk behavior/exposure variable), (2) reports indicating no identified risk behavior/exposure (i.e., responses to all questions about risk behavior/exposure variables were “no”), and (3) reports with either unknown or missing information for all risk behavior/exposure variables. We determined the percentage of cases within each category and compared them using ratio estimates and 95% confidence intervals (CIs).
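The three-way classification of risk behavior/exposure information can be sketched as follows, assuming each report maps hypothetical variable names to "yes", "no", "unknown", or None (missing). The source does not say how reports that mix "no" answers with unknown or missing answers (and have no "yes") were handled, so this sketch places them in a separate "other" bucket rather than guessing.

```python
from collections import Counter


def classify_risk(responses: dict) -> str:
    """Assign one case report to a risk-information category."""
    values = list(responses.values())
    if any(v == "yes" for v in values):
        return "at_least_one_yes"
    if values and all(v == "no" for v in values):
        return "all_no"
    if all(v in ("unknown", None) for v in values):
        return "unknown_or_missing"
    # Mix of "no" and unknown/missing answers: not covered by the three
    # categories as described in the source
    return "other"


def category_percentages(reports: list) -> dict:
    """Percentage of reports falling in each category."""
    counts = Counter(classify_risk(r) for r in reports)
    return {cat: 100.0 * n / len(reports) for cat, n in counts.items()}
```

The category percentages for EIP and NBS sites could then be compared with a ratio estimate such as the one sketched after the Methods overview.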

Table 1.

Percentage of viral hepatitis case reports with complete demographic and risk behavior/exposure information, EIP demonstration sites (a) and NBS sites (b), 2005–2007

(a) The six EIP sites used in this analysis were Colorado, Connecticut, Minnesota, New York City, 34 counties in New York State, and Oregon.

(b) The 15 NBS sites used in this analysis were Alabama, Idaho, Maine, Maryland, Montana, Nebraska, Nevada, New Mexico, Rhode Island, South Carolina, Tennessee, Texas, Vermont, Virginia, and Wyoming.

(c) Risk behavior/exposure variables for HAV (two to six weeks before symptom onset) included being a household, sexual, or other contact of someone diagnosed with HAV; having multiple sex partners; being a man who has sex with men; being a child in daycare or a daycare employee; traveling outside the United States and Canada, or having a household contact with such travel, in the three months before symptom onset; and having been exposed as part of a foodborne or waterborne common-source outbreak.

(d) Risk behavior/exposure variables for HBV (six weeks to six months before symptom onset) and HCV (two weeks to six months before symptom onset) included having sexual, household, or other contact with an infected person; using injection drugs or non-injection street drugs; having multiple sex partners; being a man who has sex with men; undergoing dialysis; having had a needlestick injury or received a blood transfusion; being employed in the medical field or public safety; having received a tattoo, piercing, dental work, or surgery; and being a resident of a long-term care facility.

EIP = Emerging Infections Program

NBS = National Electronic Disease Surveillance System-based states

CI = confidence interval

HAV = hepatitis A virus

HBV = hepatitis B virus

HCV = hepatitis C virus

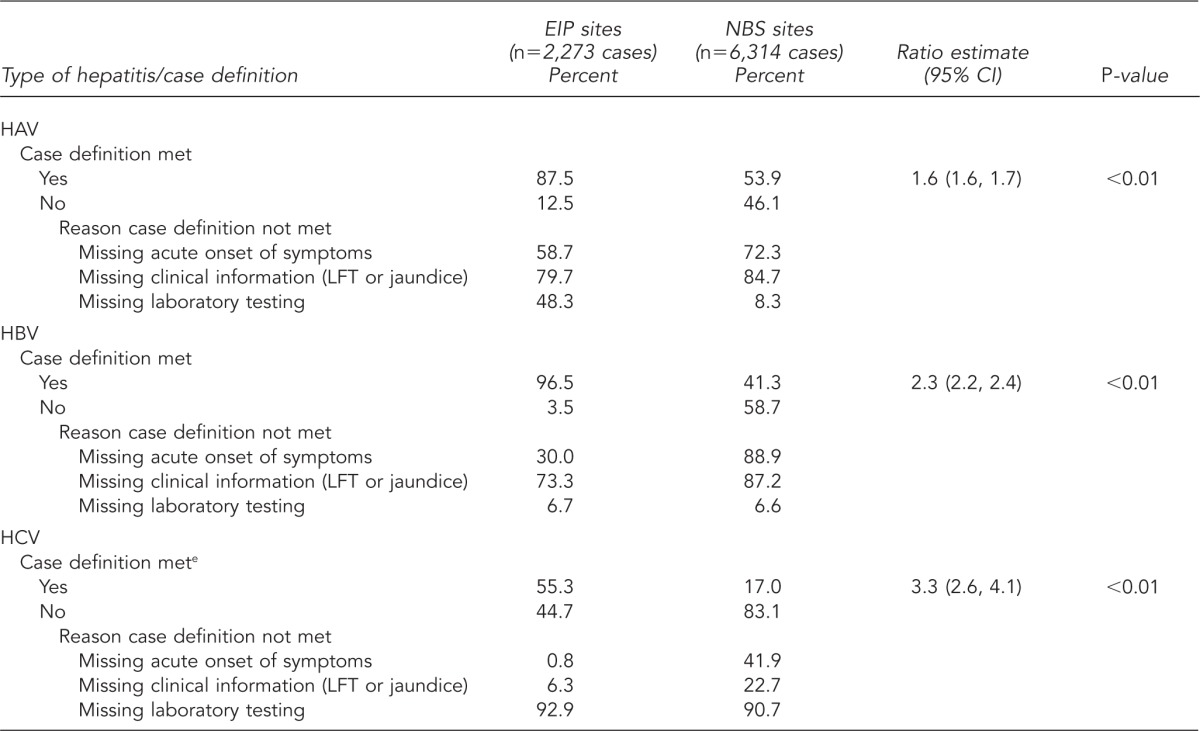

Meeting the case definition

We evaluated cases of acute viral hepatitis reported from EIP and NBS sites to determine whether they met the CDC/CSTE definition for a confirmed case. For hepatitis A, we used the 2000 case definition; for hepatitis B and C, we used the 2004 case definitions. The case definitions for all three types of acute viral hepatitis share the following clinical criteria: acute illness with (1) discrete onset of symptoms and (2) jaundice or elevated serum alanine aminotransferase (ALT) levels. For acute hepatitis A and B, elevated ALT is defined as two times the upper limit of normal; for acute hepatitis C, ALT levels >400 International Units per liter are considered elevated. Laboratory criteria, however, vary by type of viral hepatitis. Acute hepatitis A requires a positive assay for immunoglobulin M (IgM) antibody to hepatitis A virus, and acute hepatitis B requires a positive test for IgM antibody to hepatitis B core antigen or for hepatitis B surface antigen. Acute hepatitis C requires a positive screening test for antibody to hepatitis C virus (anti-HCV) (repeat reactive), verified by an additional, more specific assay (e.g., recombinant immunoblot assay for anti-HCV, nucleic acid testing for HCV ribonucleic acid, or a positive anti-HCV screening test with a signal-to-cutoff ratio predictive of a true positive as determined for the particular assay).

Cases meeting both the clinical and laboratory criteria associated with the CDC/CSTE case definition were considered confirmed. Cases that did not meet the case definition were further assessed to determine gaps in information, including missing data for acute onset of symptoms, clinical criteria, and laboratory testing. The percentage of cases meeting the definition and the reasons that cases did not meet the definition were determined and compared using ratio estimates and 95% CIs.
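The classification logic described in the two paragraphs above can be sketched as follows. This is a simplified illustration, not the full CDC/CSTE case definitions: record fields and laboratory marker names are hypothetical, "not reported" is represented as None, and treating an ALT of exactly two times the upper limit of normal as elevated is an assumption. Failure reasons are recorded independently, so one case can have several, as noted for Table 2.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Hypothetical marker names. Presence of any listed marker means the laboratory
# data needed for the case definition were reported; for hepatitis C these are
# the more specific results that verify a repeat-reactive anti-HCV screen.
LAB_MARKERS = {
    "A": ("igm_anti_hav",),
    "B": ("igm_anti_hbc", "hbsag"),
    "C": ("anti_hcv_riba", "hcv_rna", "anti_hcv_high_s_co"),
}


@dataclass
class CaseReport:
    hep_type: str                             # "A", "B", or "C"
    discrete_onset: Optional[bool] = None     # None = not reported
    jaundice: Optional[bool] = None
    alt: Optional[float] = None               # serum ALT, IU/L
    alt_uln: Optional[float] = None           # laboratory upper limit of normal, IU/L
    labs: dict = field(default_factory=dict)  # marker -> True (positive) / False


def alt_elevated(case: CaseReport) -> Optional[bool]:
    """True/False, or None when ALT data are insufficient to decide."""
    if case.alt is None:
        return None
    if case.hep_type == "C":
        return case.alt > 400                # >400 IU/L for acute hepatitis C
    if case.alt_uln is None:
        return None
    return case.alt >= 2 * case.alt_uln      # assumption: inclusive 2x ULN cutoff


def lab_criteria_met(case: CaseReport) -> bool:
    labs = case.labs
    if case.hep_type == "A":
        return labs.get("igm_anti_hav") is True
    if case.hep_type == "B":
        return labs.get("igm_anti_hbc") is True or labs.get("hbsag") is True
    # Hepatitis C: positive screen verified by a more specific result
    verified = any(labs.get(m) is True for m in LAB_MARKERS["C"])
    return labs.get("anti_hcv_screen") is True and verified


def evaluate(case: CaseReport) -> Tuple[bool, List[str]]:
    """Return (confirmed, reasons); reasons are not mutually exclusive."""
    elevated = alt_elevated(case)
    reasons = []
    if case.discrete_onset is None:
        reasons.append("missing onset")
    if case.jaundice is None and elevated is None:
        reasons.append("missing clinical data")
    if not any(m in case.labs for m in LAB_MARKERS[case.hep_type]):
        reasons.append("missing laboratory data")
    clinical = case.discrete_onset is True and (
        case.jaundice is True or elevated is True)
    return clinical and lab_criteria_met(case), reasons
```

For instance, a hepatitis C report with discrete onset, an ALT of 450 IU/L, and only a positive anti-HCV screen would satisfy the clinical criteria but fail with "missing laboratory data", the pattern the Results describe for most HCV reports.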

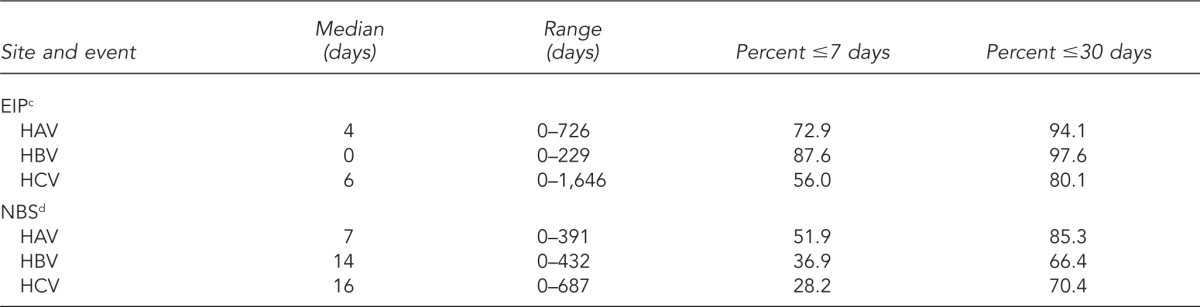

Timeliness of reporting

We assessed timeliness of case reporting by calculating the number of days between the date of diagnosis and the date the health department received the case report. Only case reports with non-missing values for both the diagnosis date and the report-received date were included in this analysis. The percentages of cases reported within one week and within 30 days of diagnosis were determined and compared for EIP and NBS sites.
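A minimal sketch of these timeliness measures, assuming each case is a hypothetical (diagnosis date, report-received date) pair with None for a missing date. The source does not state how "within one week" was bounded or how reports dated before diagnosis were handled; here "within one week" is assumed to mean a delay of at most 7 days, and no special handling is applied to negative delays.

```python
from datetime import date
from statistics import median
from typing import List, Optional, Tuple

DatePair = Tuple[Optional[date], Optional[date]]


def timeliness(cases: List[DatePair]) -> Optional[dict]:
    """Summarize days from diagnosis to receipt of the case report."""
    # Only reports with both dates present are valid for this analysis
    delays = [(received - diagnosed).days
              for diagnosed, received in cases
              if diagnosed is not None and received is not None]
    if not delays:
        return None
    n = len(delays)
    return {
        "pct_with_both_dates": 100.0 * n / len(cases),
        "median_days": median(delays),
        # Assumption: "within one week" means a delay of at most 7 days
        "pct_within_7_days": 100.0 * sum(d <= 7 for d in delays) / n,
        "pct_within_30_days": 100.0 * sum(d <= 30 for d in delays) / n,
    }
```

The `pct_with_both_dates` field corresponds to the share of reports for which timeliness could be determined (69% for EIP sites and 49% for NBS sites in Table 3).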

RESULTS

Completeness of reporting

Reporting of sex and age was 99.9% and 99.8% complete, respectively, for EIP sites and 99.0% and 98.3% complete, respectively, for NBS sites. Race/ethnicity was more completely reported by EIP sites (89.7%) than by NBS sites (68.4%); EIP sites were 1.3 times as likely as NBS sites to have complete race/ethnicity data (Table 1).

For hepatitis A, B, and C, case reports from EIP sites were more likely than those from NBS sites to include a “yes” response to at least one risk behavior/exposure variable (1.8, 2.3, and 1.6 times as likely for HAV, HBV, and HCV, respectively) (Table 1). However, when examining the percentage of case reports with a “no” response to all risk behavior/exposure variables, results varied by type of viral hepatitis. EIP and NBS sites were equally likely to report cases of HAV infection with no risk behavior/exposure, whereas for HBV and HCV infections, reports from EIP sites were less likely to have a “no” response to all risk behavior/exposure variables (0.6 and 0.7 times as likely for HBV and HCV, respectively). For all three types of viral hepatitis, case reports from EIP sites were less likely to have missing or unknown information about risk behaviors/exposures (0.2, 0.4, and 0.4 times as likely for HAV, HBV, and HCV, respectively) (Table 1).

Meeting the case definition

Compared with NBS sites, cases of hepatitis A, B, and C reported through EIP sites were more likely to meet the CDC/CSTE case definition (1.6, 2.3, and 3.3 times as likely, respectively) (Table 2). Failure to meet the case definition was attributable to different factors, depending on the type of viral hepatitis and the surveillance program. For both EIP and NBS sites, reports of HAV and HBV infections failed to meet the case definition primarily because of missing clinical information (i.e., data on ALT levels and jaundice). For NBS sites, missing information on acute onset of symptoms also caused reports of HAV and HBV infections to fail the case definition. Regardless of surveillance program, most cases of HCV infection failed to meet the case definition because required laboratory testing data were absent.

Table 2.

Percentage of viral hepatitis cases meeting the case definition (a) and reasons cases did not meet the case definition (b), EIP demonstration sites (c) and NBS sites (d), 2005–2007

(a) Elevated alanine aminotransferase (ALT) levels are defined as two times the upper limit of normal.

(b) Not mutually exclusive; multiple reasons could explain why the case definition was not met.

(c) The six EIP sites used in this analysis were Colorado, Connecticut, Minnesota, New York City, 34 counties in New York State, and Oregon.

(d) The 15 NBS sites used in this analysis were Alabama, Idaho, Maine, Maryland, Montana, Nebraska, Nevada, New Mexico, Rhode Island, South Carolina, Tennessee, Texas, Vermont, Virginia, and Wyoming.

(e) The case definition used for acute HCV was a modified version of the 2005 Centers for Disease Control and Prevention/Council of State and Territorial Epidemiologists case definition; ALT levels >400 International Units per liter were considered elevated.

EIP = Emerging Infections Program

NBS = National Electronic Disease Surveillance System-based states

CI = confidence interval

HAV = hepatitis A virus

LFT = liver function test

HBV = hepatitis B virus

HCV = hepatitis C virus

Timeliness of reporting

Timeliness of reporting could be determined for 69% of case reports from EIP sites and 49% of case reports from NBS sites (i.e., reports that contained both the diagnosis date and the date the case was reported) (Table 3). For all three types of viral hepatitis, health departments within EIP sites received case reports more quickly than did those in NBS sites. For EIP sites, most cases of HAV, HBV, and HCV infection were reported within seven days of diagnosis (median of four, zero, and six days, respectively). For NBS sites, although about one-half of HAV cases were reported within seven days of diagnosis, reporting was slower for HBV and HCV cases (14 and 16 days, respectively). By 30 days after diagnosis, health departments in both surveillance systems had received a larger share of reports for all types of viral hepatitis. For EIP sites, 94.1% of HAV, 97.6% of HBV, and 80.1% of HCV cases were reported within 30 days of diagnosis. In NBS sites, 85.3% of HAV, 66.4% of HBV, and 70.4% of HCV cases were reported within 30 days of diagnosis.

Table 3.

Timeliness of reporting cases of viral hepatitis, from diagnosis date to receipt of the report by the state health department, EIP demonstration sites (a) and NBS sites (b), 2005–2007

(a) The six EIP sites used in this analysis were Colorado, Connecticut, Minnesota, New York City, 34 counties in New York State, and Oregon.

(b) The 15 NBS sites used in this analysis were Alabama, Idaho, Maine, Maryland, Montana, Nebraska, Nevada, New Mexico, Rhode Island, South Carolina, Tennessee, Texas, Vermont, Virginia, and Wyoming.

(c) 69% of case reports from EIP sites had both a diagnosis date and a report date.

(d) 49% of case reports from NBS sites had both a diagnosis date and a report date.

EIP = Emerging Infections Program

NBS = National Electronic Disease Surveillance System-based states

HAV = hepatitis A virus

HBV = hepatitis B virus

HCV = hepatitis C virus

DISCUSSION

For the three categories examined in this study, the quality of data was higher for EIP sites than NBS sites. Compared with NBS sites, demographic and risk behavior/exposure data were more completely reported, significantly more cases met the case definition, and reporting was more timely in EIP sites. The success of any effort to measure the burden of viral hepatitis, identify and respond to outbreaks in a timely fashion, and develop and evaluate prevention strategies hinges on the availability of (1) complete, accurate, and reliable de-duplicated case data; and (2) the consistent application of case definitions, which provide uniform criteria for reporting cases that increase the specificity of reporting and improve the comparability of disease reports from different geographical areas.9 Timeliness of reporting is also an integral surveillance component, particularly for outbreak detection, to enable prompt public health response to changes in disease occurrence.10

The case definition for acute hepatitis C proved problematic for both surveillance programs. Slightly more than half (55.3%) of cases reported through EIP sites and only 17.0% of cases from NBS sites met the case definition for acute hepatitis C. Several factors contribute to case-definition challenges, including lack of a laboratory marker to distinguish between acute and chronic HCV infections. In the absence of such a marker, acute and chronic cases can only be distinguished by clinical information. Further, for both EIP and NBS sites, more than 90.0% of acute hepatitis C case reports were missing the laboratory test information needed to meet the case definition (e.g., signal-to-cutoff ratios) (Table 2).11 Lack of clinical information also is problematic—a substantial number of case reports did not include the basic clinical data needed to determine whether the case definition was met. Health departments are tasked with conducting follow-up for these case reports, which can be burdensome and particularly challenging for those with scarce resources.

The overwhelming number of laboratory reports of hepatitis C received by health departments serves as another barrier to reporting. Because chronic hepatitis C is highly prevalent in the United States, health departments process four laboratory reports for every new case of HCV infection.12 With numerous laboratory results and limited electronic laboratory reporting (ELR), health departments have a difficult time entering cases into their data systems. This difficulty is particularly problematic for EIP sites, which are in need of an enhanced infrastructure for electronic reporting. Although NBS sites have an adequate electronic infrastructure in place for collecting multiple laboratory results through ELR, lack of federal funding has rendered these sites understaffed to provide needed follow-up on potential cases.

A recent Institute of Medicine report suggested that completeness of reporting for hepatitis C could be improved by eliminating clinical requirements from the case definition.7 Such an approach would facilitate the identification of all HCV infections, whether or not they are symptomatic. It would be particularly helpful in identifying chronic cases, because clinical symptoms are not a criterion in the case definition for chronic hepatitis C. However, because clinical characteristics are the only criteria that distinguish acute from chronic cases, excluding clinical information from the case definition would compromise efforts to identify new cases of hepatitis C. Early recognition of HCV infection is critical to the health outcomes of infected individuals, because treatment is more effective when initiated during the acute phase of infection.13

Limitations

The findings in this article are subject to several limitations. The results represent only cases reported from selected sites, and data quality and timeliness may not be comparable for other U.S. populations, which may have different viral hepatitis surveillance resources. Also, overall population coverage may differ between the two types of sites; these differences were not assessed. Additionally, we did not assess qualitative processes, such as case ascertainment and follow-up methods, used by NBS sites, as there were no site visits or follow-up with NBS sites on the specific methods they used for viral hepatitis surveillance. Therefore, differences in qualitative processes between the two surveillance programs could not be determined. For both EIP and NBS sites, data were not evaluated by individual site; consequently, observed differences between the two reporting systems may reflect data collection at particular sites rather than the system as a whole. Furthermore, to facilitate comparison of the two surveillance methods, only data obtained from the reporting forms were analyzed. Although both EIP and NBS sites use the same forms to collect viral hepatitis data, NBS sites routinely collect additional information that might have provided further insight into acute viral hepatitis in these states.

CONCLUSIONS

Overall, viral hepatitis surveillance data from EIP sites were more completely reported; more often met the CDC/CSTE case definitions for hepatitis A, B, and C; and were reported in a more timely way than comparable surveillance data from NBS sites. Additional efforts are needed to better understand the factors that promote the timely collection of quality viral hepatitis data in the U.S. For instance, two NBS sites have received viral hepatitis surveillance funding through EIP since 2009; analyses are needed to determine how additional funding affects data quality and completeness of reporting. Another important next step would be to evaluate the criteria required to meet the case definitions, modify the current case report forms as appropriate, and analyze the impact of a new case definition on case reporting. Finally, because electronic data systems provide increased opportunities for efficient data collection, development and expansion of electronic infrastructure are critical components of future viral hepatitis surveillance efforts. Providing funding to sites, such as NBS sites, that already have electronic infrastructure in place would be beneficial. Taken together, these steps can help improve the quality of viral hepatitis surveillance data, even among sites with limited resources.

Acknowledgments

The authors acknowledge the contributions of the Centers for Disease Control and Prevention’s (CDC’s) Emerging Infections Program project investigators and surveillance staff; National Electronic Disease Surveillance System-based states staff; John Abellera from the Office of Surveillance, Epidemiology, and Laboratory Services at CDC; and Dr. Henry Roberts from the Division of Viral Hepatitis at CDC. The data used in this study were exempt from Institutional Review Board approval.

Footnotes

The findings and conclusions in this article are those of the authors and do not necessarily represent the official position of CDC.

REFERENCES

- 1. Daniels D, Grytdal S, Wasley A. Surveillance for acute viral hepatitis—United States, 2007. MMWR Surveill Summ. 2009;58(3):1–27.

- 2. German RR, Lee LM, Horan JM, Milstein RL, Pertowski CA, Waller MN. Updated guidelines for evaluating public health surveillance systems: recommendations from the Guidelines Working Group. MMWR Recomm Rep. 2001;50(RR-13):1–35.

- 3. Centers for Disease Control and Prevention (US). Estimates of disease burden from viral hepatitis. Atlanta: CDC; 2008 [cited 2012 Jul 3]. Available from: URL: http://www.cdc.gov/hepatitis/PDFs/disease_burden.pdf

- 4. Schuchat A, Hilger T, Zell E, Farley MM, Reingold A, Harrison L, et al. Active bacterial core surveillance of the emerging infections program network. Emerg Infect Dis. 2001;7:92–9. doi: 10.3201/eid0701.010114.

- 5. Klevens RM, Miller JT, Iqbal K, Thomas A, Rizzo EM, Hanson H, et al. The evolving epidemiology of hepatitis A in the United States: incidence and molecular epidemiology from population-based surveillance, 2005-2007. Arch Intern Med. 2010;170:1811–8. doi: 10.1001/archinternmed.2010.401.

- 6. Status of state electronic disease surveillance systems—United States, 2007. MMWR Morb Mortal Wkly Rep. 2009;58(29):804–7.

- 7. Institute of Medicine. Hepatitis and liver cancer: a national strategy for prevention and control of hepatitis B and C. Washington: National Academies Press; 2010.

- 8. SAS Institute, Inc. SAS®: Version 9.2. Cary (NC): SAS Institute, Inc; 2008.

- 9. Wharton M, Chorba TL, Vogt RL, Morse DL, Buehler JW. Case definitions for public health surveillance. MMWR Recomm Rep. 1990;39(RR-13):1–43.

- 10. Thacker SB, Choi K, Brachman PS. The surveillance of infectious diseases. JAMA. 1983;249:1181–5.

- 11. Alter MJ, Kuhnert WL, Finelli L. Guidelines for laboratory testing and result reporting of antibody to hepatitis C virus. MMWR Recomm Rep. 2003;52(RR-3):1–13, 15.

- 12. Klevens RM, Miller J, Vonderwahl C, Speers S, Alelis K, Sweet K, et al. Population-based surveillance for hepatitis C virus, United States, 2006-2007. Emerg Infect Dis. 2009;15:1499–502. doi: 10.3201/eid1509.081050.

- 13. Jaeckel E, Cornberg M, Wedemeyer H, Santantonio T, Mayer J, Zankel M, et al. Treatment of acute hepatitis C with interferon alfa-2b. N Engl J Med. 2001;345:1452–7. doi: 10.1056/NEJMoa011232.