Abstract

Deterministic chaos has been implicated in numerous natural and man-made complex phenomena ranging from quantum to astronomical scales and in disciplines as diverse as meteorology, physiology, ecology, and economics. However, the lack of a definitive test of chaos vs. random noise in experimental time series has led to considerable controversy in many fields. Here we propose a numerical titration procedure as a simple “litmus test” for highly sensitive, specific, and robust detection of chaos in short noisy data without the need for intensive surrogate data testing. We show that the controlled addition of white or colored noise to a signal with a preexisting noise floor results in a titration index that: (i) faithfully tracks the onset of deterministic chaos in all standard bifurcation routes to chaos; and (ii) gives a relative measure of chaos intensity. Such reliable detection and quantification of chaos under severe conditions of relatively low signal-to-noise ratio is of great interest, as it may open potential practical ways of identifying, forecasting, and controlling complex behaviors in a wide variety of physical, biomedical, and socioeconomic systems.

A hallmark of chaotic dynamics is its sensitive dependence on initial conditions, as indicated by the presence of a positive Lyapunov exponent (LE)—a measure of the exponential divergence of nearby states (1–4). Although a positive LE provides definitive proof of chaos in ideal model systems, its application to real systems faces severe limitations. In particular, LE fails to specifically distinguish chaos from noise (5) and cannot detect chaos reliably (2, 3, 6, 7) unless the data series is inordinately lengthy and virtually free of noise—requirements that are difficult (if not impossible) to fulfill for most empirical data. Similar problems of specificity and reliability (7, 8) also plague other approaches to chaos detection that rely on certain topological or information measures of attractors reconstructed from the data (9, 10). The lack of a definitive test of chaos in experimental time series has thwarted the application of nonlinear dynamics theory (4, 11) to a variety of physical (6, 12–15), biomedical (16–24), and socioeconomic systems (25–30) where chaos is thought to play a role. Overcoming these hurdles may open exciting possibilities for practical applications such as the improved forecasting of weather (31) and economic (30) patterns, novel strategies for diagnosis and control of pathological states in biomedicine (23, 24, 32–35) or the unmasking of chaotically encrypted electronic or optical communication signals (36–39).

A different strategy, widely accepted at present, is to infer the presence of nonlinear determinism in a noisy signal on the basis of its nonlinear short-term predictability (11, 40–45) or its conformance with certain regularity patterns (22, 44, 46–48), often through a statistical comparison with randomly generated “surrogate” data (42, 43). However, these approaches are hampered by their high computational cost and, more importantly, by the fact that nonlinearity and determinism are only necessary conditions for chaos but do not by themselves constitute a sufficient proof of chaotic dynamics (44, 47, 48). The improper use of these and other techniques for chaos detection in experimental data has caused widespread controversy (49–53) as to the validity of such claims.

At the core of the current dilemma lies the inevitable noise contamination in experimental data. On the one hand, classic tests that could in principle provide sufficient proof of chaos, such as LE, are highly sensitive to noise. On the other hand, current algorithms that are robust to noise could at best verify some partial (necessary) conditions of chaos. None of these techniques, singly or in combination, provide a conclusive test for chaos in empirical data. Moreover, this critical problem cannot be resolved by filtering of the attendant noise (54, 55), because it is unclear whether the underlying dynamics (chaotic or otherwise) is well preserved by such noise-reduction procedures (56).

In contrast to these previous approaches, we propose here a radically different analytical technique that provides a sufficient and robust numerical test of chaos and a relative measure of chaotic intensity, even in the presence of significant noise contamination. The method itself is surprisingly simple and may seem deceptively trivial and counterintuitive at first sight: rather than remove or separate noise from the data, we deliberately add incremental amounts of noise as a contrasting agent for chaos. In essence, our approach is analogous to a chemical titration process in which the concentration of acid in a solution is determined by neutralization of the acid with added base, rather than by direct extraction of the acid. An essential component in such a titration is a sensitive chemical indicator that specifically reveals the changes in pH around the equivalence point of acid-base neutralization. In our case, the “indicator” for the numerical titration of chaos (“acid”) with random noise (“base”) could in theory be any noise-tolerant technique that can reliably detect nonlinear dynamics in short noisy series (40, 45, 57, 58). Used in this manner, the numerical titration described below empowers these techniques to provide a reliable test for chaos.

Methods

The Algorithm.

The heart of the proposed technique can be thought of as a numerical titration of the data: We add white (or linearly correlated) noise of increasing standard deviation (σ) to the data until its nonlinearity goes undetected (within a prescribed level of statistical confidence) by a particular indicator at a limiting value of σ = noise limit (NL). We denote this value as the “noise ceiling” of the signal and the corresponding limiting condition the “equivalence point.”

Under this numerical titration scheme, NL > 0 indicates chaos, and the value of NL gives an estimate of its relative intensity. Conversely, if NL = 0, then it may be inferred that either the series is not chaotic or its chaotic component has already been neutralized by the background noise in the data (the “noise floor”). The condition NL > 0 therefore provides a simple sufficient test for chaos.
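A minimal sketch of the titration loop, assuming NumPy and a caller-supplied indicator `is_nonlinear(series) -> bool` (any noise-tolerant nonlinearity test could be plugged in). Following the Fig. 1 legend, a single white-noise realization is scaled by a bisection search, the noise level is expressed relative to the signal's standard deviation, and NL is averaged over several realizations. The function names, the search ceiling `alpha_max`, and the number of bisection steps are illustrative assumptions, and the search presumes that detection fades monotonically as noise is added.

```python
import numpy as np
from typing import Callable

Detector = Callable[[np.ndarray], bool]   # hypothetical interface: True = "nonlinear detected"


def titrate(y: np.ndarray, is_nonlinear: Detector, rng: np.random.Generator,
            alpha_max: float = 2.0, n_bisect: int = 12) -> float:
    """Noise limit for one white-noise realization, in units of std(y).

    Returns the largest relative noise level at which `is_nonlinear` still
    flags y + level * std(y) * xi, assuming detection fades monotonically.
    """
    sigma_y = np.std(y)
    xi = rng.standard_normal(len(y))      # unit-variance titrant, fixed for this titration

    def detected(level: float) -> bool:
        return bool(is_nonlinear(y + level * sigma_y * xi))

    if not detected(0.0):
        return 0.0                        # NL = 0: no detectable nonlinearity to titrate
    if detected(alpha_max):
        return alpha_max                  # noise ceiling lies beyond the search range
    lo, hi = 0.0, alpha_max               # invariant: detected at lo, not detected at hi
    for _ in range(n_bisect):
        mid = 0.5 * (lo + hi)
        if detected(mid):
            lo = mid
        else:
            hi = mid
    return lo


def noise_limit(y: np.ndarray, is_nonlinear: Detector, n_realizations: int = 10,
                seed: int = 0, alpha_max: float = 2.0, n_bisect: int = 12) -> float:
    """NL averaged over independent noise realizations (10 in the Fig. 1 legend)."""
    rng = np.random.default_rng(seed)
    y = np.asarray(y, dtype=float)
    return float(np.mean([titrate(y, is_nonlinear, rng, alpha_max, n_bisect)
                          for _ in range(n_realizations)]))
```

For example, `noise_limit(y, my_detector)` returns 0.0 whenever `my_detector` already fails on the unaltered data, which is the NL = 0 outcome described above.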

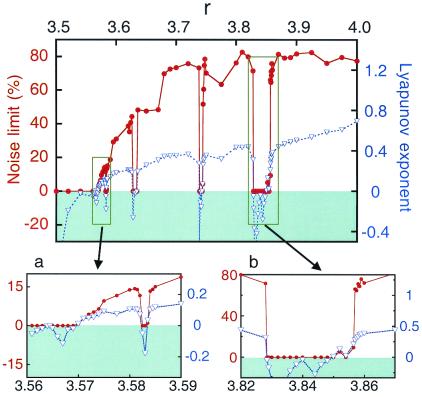

We demonstrate the validity of this numerical titration technique in a bifurcation diagram of the logistic map (Fig. 1). A close correlation between NL and the largest LE of the map is evident over the full bifurcation range, with NL > 0 wherever LE > 0.
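For the logistic map, the largest LE of the noiseless map can be computed as the orbit average of log|f′(y_k)| = log|r(1 − 2y_k)|. The sketch below uses only the standard library; the initial condition, transient length, and orbit length are illustrative assumptions. At r = 3.7 it should come out close to the LE = 0.357 quoted in the Fig. 2 legend, and it is negative inside periodic windows.

```python
import math


def logistic_lyapunov(r: float, y0: float = 0.3, n_transient: int = 1_000,
                      n_iter: int = 100_000) -> float:
    """Largest Lyapunov exponent of the noiseless map y_{k+1} = r * y_k * (1 - y_k).

    Estimated as the orbit average of log|f'(y_k)|, with f'(y) = r * (1 - 2y).
    """
    y = y0
    for _ in range(n_transient):          # let the orbit settle onto the attractor
        y = r * y * (1.0 - y)
    total = 0.0
    for _ in range(n_iter):
        slope = abs(r * (1.0 - 2.0 * y))
        total += math.log(slope) if slope > 0.0 else -math.inf   # superstable point
        y = r * y * (1.0 - y)
    return total / n_iter
```

Comparing `logistic_lyapunov(r)` with the titrated NL over a grid of r values reproduces the kind of comparison plotted in Fig. 1.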

Figure 1.

Standard bifurcation diagram of the logistic map $y_{k+1} = r\,y_k(1 - y_k)$. Chaos is indicated by a positive Lyapunov exponent LE (blue, right scale), as derived analytically from the noiseless map. The noise limit NL (red, left scale) is the minimum amount of added white noise that prevents the detection of nonlinearity in the data. Note that NL is an indicator of chaos: NL > 0 when LE is positive, and NL varies roughly in proportion to LE in the chaotic regions. NL is calculated as follows: first, we generate a 1,000-point series $y_k$ from the map, and a white noise series $\xi_k$ of equal length and unit variance. Then we apply a particular nonlinear identification algorithm in a bisection scheme to determine the maximum $\alpha$ at which the noisy series $y_k + \alpha\,\xi_k$ is still classified as nonlinear at the 1% significance level. The noise limit for that particular realization of the noise is $\mathrm{NL} = \alpha/\sigma_y$, where $\sigma_y$ is the standard deviation of the series $y_k$. (See Fig. 2 for a more visual description of NL through the titration curve.) This procedure is repeated for 10 different examples of white noise to obtain the averaged NL plotted in the figure. Insets a and b show two different routes to chaos in the logistic map: (a) onset of chaos through a period-doubling bifurcation; (b) intermittency bifurcation from a period-3 region back into chaos below the critical value $r_c = 1 + \sqrt{8}$. All lines in these and the following figures are guides to the eye.


The Mathematical Basis of Numerical Titration.

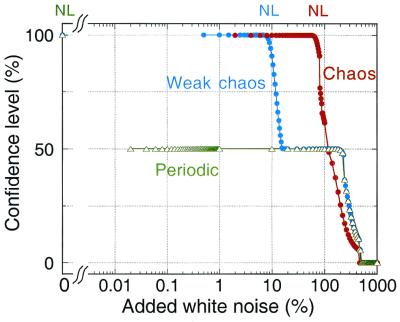

A key question remains as to why the condition NL > 0 represents a sufficient test for chaos even though nonlinear dynamics in a signal could also signify other (less complex) dynamics of oscillatory type, such as limit cycles or quasiperiodicity (4). To answer this question, consider a numerical titration curve (Fig. 2) where time series data are likened to acid-base solutions—with pure chaos being most “acidic” and white noise most “alkaline.” In this analogy, deterministic data such as periodic and quasiperiodic signals or random data such as linearly correlated noise are both considered “neutral,” because they all lie between chaos and white noise in an acid-base scale roughly corresponding to the degree of nonlinear predictability of the system (Fig. 2). Within this framework, only chaotic dynamics has appreciable titration power against white noise under a noise-resistant nonlinear indicator. The NL test is therefore highly selective for chaotic dynamics in that it effectively distinguishes chaos from all other forms of linear and nonlinear dynamics that have little or no resistance to added noise in a numerical titration assay.

Figure 2.

Titration curves of the logistic map at three values of the bifurcation parameter corresponding to periodic behavior, weak chaos, and strong chaos. Note how the addition of white noise buries the nonlinearity in the data. The noise limit NL (shown in the legend, Top) indicates when the titration curve crosses a prescribed (e.g., 99%) confidence level for the statistical rejection of the linear hypothesis; essentially, NL is the analog of the equivalence point in a chemical titration. In this case, NL ∼ 75% for the strongly chaotic signal (r = 3.7, LE = 0.357); NL ∼ 9% for the weakly chaotic series (r = 3.575, LE = 0.088); and NL ∼ 0% for the periodic signal (r = 3.565, LE = −0.018). NL can thus serve as an indicator for chaos. The confidence level on the ordinate is defined as the difference of the confidence levels of two F-tests: one comparing nonlinear vs. linear models (relevant in the 100 to 50% range) and the other comparing linear vs. white noise (relevant between 50 and 0%). In this scale, the 100 and 0% confidence levels represent deterministic chaos (acid) and white noise (base), respectively, whereas at the 50% level (the equivalence point in the titration analog), the data are best described by linear models (neutral). Note how periodic and quasiperiodic signals rapidly drop from the 100 to 50% level, because a nonlinear limit cycle can be described both by nonlinear models and by linear models of enough memory. The final drop of the statistical criterion to the 0% level signals the point where the linear models have no more prediction power than white noise. Similar results (not shown) are obtained if linearly correlated noise (e.g., randomized surrogate data) is used as titrant instead of white noise, except that the curves then shift slightly to the right (with higher NL) and never drop below the 50% level.

The above acid-base analogy of nonlinear dynamics is based on the mathematical observation that certain nonlinear modes can be equally well described through linear models. For example, a nonlinear limit cycle with M harmonics has a Fourier series expansion similar to that of a linear oscillatory model of memory 2M, whereas a quasiperiodic torus $y_k = \prod_{i=1}^{N} [a_i + b_i \sin(\omega_i k)]$ with N incommensurate frequencies $\omega_i$ can be described by a linear autoregressive model of memory $3^N$. Such ambiguity for periodic and quasiperiodic signals is exacerbated by the presence of measurement noise, which tends to obscure the distinction between linear and nonlinear models. In contrast, chaotic signals are generally not expressible as sums of sinusoids, and hence are not prone to misdetection except under overwhelming levels of attendant noise and/or periodicity.
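The claim can be checked numerically with a short sketch, assuming NumPy. A zero-mean signal with M harmonics is a sum of 2M complex exponentials and therefore obeys an exact linear recurrence of order 2M; expanding the torus product gives 3^N exponentials (each factor contributes the frequencies −ω_i, 0 and +ω_i), hence an exact linear recurrence of order 3^N. The helper below fits a least-squares AR(p) model without intercept and reports its one-step residual relative to the signal's standard deviation; the frequencies and amplitudes are arbitrary illustrative choices.

```python
import numpy as np


def ar_relative_residual(y: np.ndarray, p: int) -> float:
    """RMS one-step error of the least-squares AR(p) fit y_k = sum_m phi_m y_{k-m},
    relative to std(y). No intercept: a constant term counts as one of the exponentials."""
    n = len(y)
    X = np.column_stack([y[p - m:n - m] for m in range(1, p + 1)])   # column m-1 holds y_{k-m}
    target = y[p:]
    phi, *_ = np.linalg.lstsq(X, target, rcond=None)
    resid = target - X @ phi
    return float(np.sqrt(np.mean(resid ** 2)) / np.std(y))


k = np.arange(1000)
# Limit cycle with M = 3 harmonics and no mean: 2M = 6 exponentials, so AR(6) is exact
omega = 0.37
cycle = sum(np.sin(m * omega * k + 0.5 * m) / m for m in range(1, 4))
# Torus with N = 2 incommensurate frequencies: 3**N = 9 exponentials, so AR(9) is exact
w1, w2 = 0.7, 0.7 * (np.sqrt(5.0) - 1.0) / 2.0
torus = (1.0 + 0.5 * np.sin(w1 * k)) * (1.0 + 0.5 * np.sin(w2 * k))

print(ar_relative_residual(cycle, 6), ar_relative_residual(torus, 9),
      ar_relative_residual(torus, 8))
```

With these settings the AR(6) fit of `cycle` and the AR(9) fit of `torus` should leave residuals at round-off level, while the lower-order AR(8) fit of `torus` leaves a noticeably larger one.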

On the other hand, linearly correlated random signals (colored noise) are also best represented by linear stochastic models (45) despite their lack of a Fourier series expansion. Consequently, for both oscillatory signals and colored noise the null hypothesis (linear dynamics) cannot be rejected readily by statistical model testing, especially in the presence of additive noise, unless the data have a significant chaotic component.

The Choice of Nonlinear Indicator.

The power of the numerical titration procedure depends critically on the choice of a suitable nonlinear indicator. As basic requirements, such an indicator must be specific to nonlinear dynamics (vs. linear dynamics) and tolerant to measurement noise, i.e., it should be capable of providing reliable nonlinear detection under the adverse condition of significant noise contamination. Our preliminary work suggested at least two possible candidates: a time-reversibility measure (57, 58), and the Volterra–Wiener nonlinear identification method (24, 45). Here we report the application of the latter in the titration procedure.

Details of the Volterra–Wiener method are described in refs. 24 and 45. Briefly, the Volterra–Wiener algorithm produces a family of polynomial (linear and nonlinear) autoregressive models with varying memory and dynamical order optimally fitted to predict the data. The best linear and nonlinear models are chosen according to an information–theoretic (Akaike) criterion, and subsequently the null hypothesis (best linear model) is tested against the alternative hypothesis (best nonlinear model) using parametric (F-test) or nonparametric (Mann–Whitney) statistics. It should be emphasized that, unlike other nonlinear detection techniques, the Volterra–Wiener algorithm does not rely on the heavy use of surrogate data for hypothesis testing. The Volterra–Wiener algorithm provides a sensitive and robust indicator of nonlinear dynamics in short, noisy time series, thereby establishing a necessary (though not sufficient) condition for chaos.
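The sketch below is a heavily simplified stand-in for this indicator, assuming NumPy; it is not the authors' implementation. It fits every polynomial autoregressive model up to a chosen memory and degree by least squares, scores each with an Akaike-type criterion assumed here to take the form log ε(r) + r/N (ε the normalized residual variance, r the number of terms, N the number of fitted samples), and declares the series nonlinear when the best nonlinear model scores lower than the best linear one. The F-test (or Mann–Whitney) step and the 1% significance level are replaced by this plain comparison, and `max_memory`, `max_degree` and the residual floor are hypothetical settings. The indicator is then used to titrate a 1,000-point logistic-map series as in the Fig. 1 legend.

```python
import itertools

import numpy as np


def poly_ar_design(x, memory, degree, max_memory):
    """Regressors of a polynomial AR model: constant, lags 1..memory, and lag products
    up to `degree`. Targets start at max_memory so all candidates share the same samples."""
    n = len(x)
    lags = [x[max_memory - m:n - m] for m in range(1, memory + 1)]   # lags[m-1] = x_{k-m}
    cols = [np.ones(n - max_memory)]
    for d in range(1, degree + 1):
        for combo in itertools.combinations_with_replacement(range(memory), d):
            cols.append(np.prod([lags[j] for j in combo], axis=0))
    return np.column_stack(cols), x[max_memory:]


def is_nonlinear(x, max_memory=4, max_degree=3, eps_floor=1e-12):
    """Hypothetical simplified indicator: True if the best nonlinear model outscores the best linear one."""
    x = (x - np.mean(x)) / np.std(x)      # assumes x is not constant
    best = {False: np.inf, True: np.inf}  # key: does the model contain nonlinear terms?
    for memory in range(1, max_memory + 1):
        for degree in range(1, max_degree + 1):
            X, t = poly_ar_design(x, memory, degree, max_memory)
            coef, *_ = np.linalg.lstsq(X, t, rcond=None)
            eps = np.mean((t - X @ coef) ** 2) / np.var(t)   # normalized residual variance
            # Akaike-type score log(eps) + r/N; the floor lets exact fits compete on size alone
            score = np.log(max(eps, eps_floor)) + X.shape[1] / len(t)
            best[degree > 1] = min(best[degree > 1], score)
    return bool(best[True] < best[False])


def logistic_series(r, n=1000, y0=0.3, n_transient=500):
    """n consecutive points of y_{k+1} = r * y_k * (1 - y_k) after a discarded transient."""
    y, v = np.empty(n), y0
    for k in range(n_transient + n):
        if k >= n_transient:
            y[k - n_transient] = v
        v = r * v * (1.0 - v)
    return y


def noise_limit(y, n_realizations=10, alpha_max=2.0, n_bisect=10, seed=0):
    """Average NL (noise std relative to std(y)) over independent white-noise realizations."""
    rng = np.random.default_rng(seed)
    sigma_y = np.std(y)
    limits = []
    for _ in range(n_realizations):
        xi = rng.standard_normal(len(y))  # unit-variance titrant for this realization

        def detected(level):
            return is_nonlinear(y + level * sigma_y * xi)

        if not detected(0.0):
            limits.append(0.0)            # NL = 0: nothing to titrate
            continue
        lo, hi = 0.0, alpha_max           # detected at lo; if still detected at hi, NL saturates near alpha_max
        for _ in range(n_bisect):
            mid = 0.5 * (lo + hi)
            lo, hi = (mid, hi) if detected(mid) else (lo, mid)
        limits.append(lo)
    return float(np.mean(limits))


y = logistic_series(3.7)
print(is_nonlinear(y), noise_limit(y))
```

For the chaotic setting r = 3.7 this yields a positive NL, whereas the period-2 orbit at r = 3.2 is not flagged as nonlinear at all. Absolute NL values will differ from Fig. 1, because this indicator is cruder than the Volterra–Wiener test.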

The following simulation experiments demonstrate that, when used in conjunction with the above titration scheme, this nonlinear test can indeed serve to detect chaotic dynamics in a variety of systems.

Results

To examine the generality of this method, we have applied the above titration procedure to benchmark model systems representing the four standard routes to chaos (4): period doubling, intermittency, subcritical, and quasiperiodic. The examples include both discrete-time and continuous-time models, the latter discretized at fixed intervals that yielded maximum NL (45). In each case, we computed NL from a short (1,000-point) series of the model (averaging over several noise realizations; see Fig. 1 legend) and compared the average NL with the largest LE (1) or Poincaré sections obtained from long (>50,000 points) error-free series in order to validate the results of the NL test.

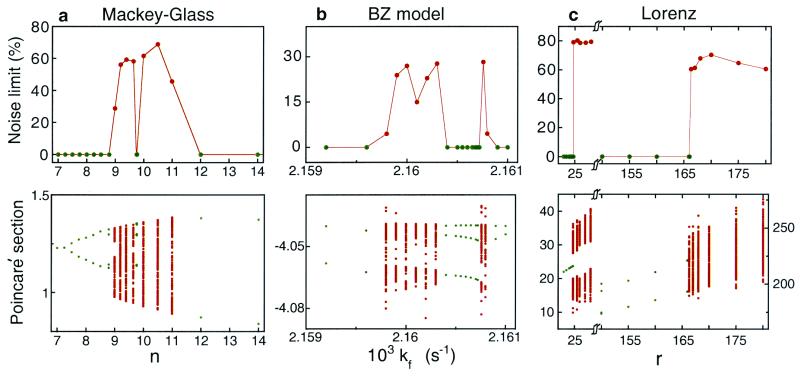

The detection of the first three routes to chaos with the titration procedure is demonstrated in Figs. 1 and 3. First, we studied the emergence of chaos through period doubling in two examples: the logistic map for population growth (Fig. 1, Inset a) and the Mackey–Glass delay–differential equation (59) (Fig. 3a), a model intended to mimic certain physiologic behaviors, which is also relevant to experimental feedback laser setups (60). Second, we considered two examples of intermittency. In the logistic map, as the bifurcation parameter r is decreased from the period-3 window, stretches of periodicity are interspersed with increasingly frequent surges of chaos until the full-blown chaotic regime ensues (Fig. 1, Inset b). Another example of intermittency is a three-variable continuous model of the Belousov–Zhabotinsky chemical reaction (61) (Fig. 3b). Third, in the subcritical route, chaos appears directly from a fixed point or a limit cycle. Fig. 3c shows that NL detects both subcritical bifurcations in the standard Lorenz system (4).

Figure 3.

Detection of three different routes to chaos in continuous systems. In all cases, regions with NL > 0 (red circles, Upper) closely match the chaotic regimes (red dots) in Poincaré sections of the corresponding noise-free systems (lower panels), whereas regions with NL = 0 (green circles, Upper) match the periodic regimes (green dots). (a) Period-doubling in the Mackey–Glass (59) delay differential equation $\dot{x}(t) = -0.1\,x(t) + 0.2\,x(t-\tau)/[1 + x(t-\tau)^{n}]$, with τ = 20 and bifurcation parameter n. The series was discretized at intervals of τs = 4. (b) Intermittency in a three-dimensional model of the Belousov–Zhabotinsky reaction: high flow regime (figure 2 of ref. 61), sampled with τs = 0.012. In this region, a limit cycle turns chaotic through intermittency. (c) Two subcritical bifurcations in the standard Lorenz model with abrupt transitions from a fixed point to chaos at r ≃ 24.74, and from a limit cycle to chaos at r ≃ 166. We use the standard Lorenz system (4) with b = 8/3 and σ = 10, discretized with τs = 0.075.
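Producing the sampled Mackey–Glass series of panel a requires integrating a delay differential equation. The minimal sketch below assumes NumPy, fixed-step Euler integration (step `dt`), a constant initial history, a default exponent n = 10 and a discarded transient, none of which are specified here, and it samples the solution every τs time units. It also assumes that τ and τs are integer multiples of `dt`.

```python
import numpy as np


def mackey_glass(n_samples=1000, n=10.0, tau=20.0, tau_s=4.0, dt=0.1,
                 x0=0.5, n_transient=500):
    """Samples of dx/dt = -0.1 x(t) + 0.2 x(t - tau) / (1 + x(t - tau)**n), taken every tau_s.

    Fixed-step Euler integration from a constant history x0 on [-tau, 0]; dt, x0, the
    default n, and the number of discarded transient samples are illustrative assumptions.
    """
    delay = int(round(tau / dt))          # delay expressed in integration steps
    stride = int(round(tau_s / dt))       # integration steps between samples
    total = (n_transient + n_samples) * stride
    x = np.empty(delay + total + 1)
    x[:delay + 1] = x0                    # x[delay] corresponds to t = 0
    for i in range(delay, delay + total):
        lagged = x[i - delay]             # x(t - tau)
        x[i + 1] = x[i] + dt * (-0.1 * x[i] + 0.2 * lagged / (1.0 + lagged ** n))
    samples = x[delay::stride]            # t = 0, tau_s, 2 tau_s, ...
    return samples[n_transient:n_transient + n_samples]
```

With τ = 20 and τs = 4 the sampling stride is 40 Euler steps; sweeping `n` and titrating each series mirrors panel a, although a higher-order integrator would be advisable for quantitative comparison with the Poincaré sections.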

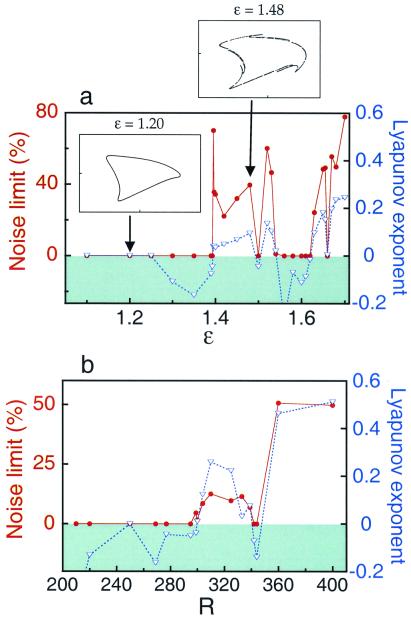

The fourth route to chaos involves a succession of quasiperiodic intermediates (tori) that precede the emergence of chaos. This phenomenon has been proposed to explain the onset of turbulence in fluid flows (4, 62) or of chaotic fluctuations in some coupled neural oscillators (63). The bifurcation via this route is typically assessed through a portrayal of the Poincaré sections of the attractor, which change from a smooth closed curve to a fractal pattern at the onset of chaos (Fig. 4a Insets). However, such fine-grained fractalization could be easily muddled by noise contamination in the data. In Fig. 4a, we show how the NL technique reliably detects chaos in a canonical example of torus fractalization (64) in the presence of additive noise, when the Poincaré sections become too fuzzy. Another difficulty with Poincaré sections arises when the system has no distinct intrinsic or extrinsic (forced) periodicity to which the stroboscopic sampling can be synchronized. This is exemplified by a fluid flow model (65) presented in Fig. 4b, where the ambiguity of Poincaré sampling is circumvented through direct titration of the data to accurately track the quasiperiodic route to chaos.

Figure 4.

Chaos detection in two examples of the quasiperiodic route: (a) Curry–Yorke discrete map (64), which mimics how the Poincaré section of a torus (a closed curve) becomes fractal (see Insets, which show the corresponding noise-free data). For noisy data, continuous and fractal Poincaré sections are difficult to distinguish. (b) Seven-variable continuous fluid mechanics model (65), sampled at τs = 0.03. This system passes from a periodic state to chaos through a complex cascade of quasiperiodic intermediates (two-frequency tori). In this case, the titration method reveals chaos without the need for Poincaré sampling of the data (which is difficult for a system that lacks external forcing, especially in the presence of measurement noise). The minor misdetection close to the onset of chaos (R = 299) is due to the extreme wrinkling of the torus and can be avoided if more computationally intensive models are used.

The preceding examples are all relatively low dimensional, with attractors of dimension less than 3. It has been suggested that an increase in the dimensionality of the dynamics has a detrimental effect similar to an increase in noise, in that they both tend to degrade the reliability of nonlinear detection (47, 48). We have addressed this issue by analyzing another bifurcation route of the Mackey–Glass equation, which leads to very high-dimensional dynamics (66). Remarkably, the results in Fig. 5 indicate that the titration algorithm detects chaos even when the dimension is as high as 20. In addition, we have performed analyses (not shown) of bifurcations towards high-dimensional chaos (“hyperchaos”) in two types of systems: discrete models of ecological dispersion (67, 68) and networks of interconnected Rössler chaotic systems relevant to chaos communications (38, 39, 67, 68). In both cases, the NL algorithm performed equally well.

Figure 5.

Detection of high-dimensional chaos in the Mackey–Glass delay differential equation. The model equation is the same as in Fig. 3a, but now we fix n = 10 and study τ as the bifurcation parameter (66). The continuous signal is sampled at intervals τs = 12.5. As the delay τ grows, the dimension of the system increases roughly from 2 to 20, as shown by the Kaplan–Yorke dimension (DKY)—a standard estimator of the attractor dimension. The titration algorithm shows a remarkable effectiveness for chaos detection even when the dimension is high. Note how the periodic windows, marked by a value of DKY = 1, are detected with NL = 0.
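The Kaplan–Yorke dimension is computed from the ordered Lyapunov spectrum λ1 ≥ λ2 ≥ … as D_KY = j + (λ1 + … + λj)/|λ(j+1)|, where j is the largest index whose partial sum is non-negative. A short standard-library sketch, assuming the full spectrum has already been estimated (for example from long noise-free simulations):

```python
def kaplan_yorke_dimension(spectrum):
    """D_KY = j + (lambda_1 + ... + lambda_j) / |lambda_{j+1}|, where j is the largest
    index for which the partial sum of the descending-ordered exponents is >= 0."""
    lyap = sorted(spectrum, reverse=True)
    partial = 0.0
    for j, lam in enumerate(lyap):
        if partial + lam < 0.0:
            # the first j exponents have a non-negative sum; lam is lambda_{j+1}
            return j + partial / abs(lam)
        partial += lam
    return float(len(lyap))   # partial sums never turn negative: D_KY is the full dimension
```

A periodic orbit (one zero exponent, the rest negative) gives D_KY = 1, consistent with the periodic windows in the figure, and the commonly quoted Lorenz spectrum (0.906, 0, −14.572) gives about 2.06.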

Discussion

Comparison to Other Chaos Detection Methods.

The foregoing represents, to our knowledge, the most comprehensive study reported so far of chaos detection and estimation in the presence of measurement noise. The concept of numerical titration and its demonstrated applicability in all standard routes to chaos substantiate the NL test as a sensitive, robust, and sufficient measure of chaos even when the data are short and noisy.

The key step in this procedure is the controlled addition of noise to the data, which is continuously gauged by a nonlinear indicator. It is important to note that such added noise serves as a numeric titrant—unlike conventional surrogate data methods (40, 42–44), where noise is used solely as a statistical bootstrap for nonlinear detection. In this manner, the titration procedure fundamentally transcends the detection of nonlinearity in previous methods, thereby allowing not only a sufficient (rather than necessary) test of chaos but also a measure of its relative intensity. Such a test (NL > 0) has an intrinsic safety margin for measurement noise, in contrast to standard chaos detection methods (2, 3, 9, 10), which have virtually zero noise tolerance (NL = 0) unless the dataset is exceedingly long. The titration procedure thus provides a powerful combination of high sensitivity and specificity to chaos, immunity to noise, as well as simplicity and computational efficiency.

Detection of High-Dimensional Chaos.

Although the titration procedure based on a Volterra–Wiener nonlinear detection scheme has demonstrated excellent performance on certain high-dimensional problems, it might benefit from improvements in the nonlinear detector in other cases. For instance, a model by Lorenz (31) suggested that the weather system could be viewed as a high-dimensional collection of coupled nonlinear subsystems. If local variables are observed, the dynamics seems to take place on a lower dimension than that of the total system. However, the true dimension becomes apparent when analyzing a global variable obtained by averaging over all distinct subsystems. We have studied this model and successfully detected chaos in local variables with a correlation dimension of around 8. However, the NL test was unable to detect chaos in the averaged global variable of dimension roughly equal to 18. We believe this discrepancy stemmed not so much from the high dimension of the data as from the fact that averaging over loosely coupled asynchronous subsystems may produce a state variable with very weak autoregressive structure. If so, the solution to this problem might lie in the use of higher-order autoregressive models (at increased computation cost) or of a suitable nonlinear detector that does not rely on a strong autoregressive assumption.

Noise Limit as a Measure of Chaos Intensity in Noisy Data.

It should be emphasized that NL provides only a relative measure of chaos intensity. Its absolute value may depend on the extrinsic characteristics of the signal (such as the data length and the noise floor) or intrinsic properties of the system (such as its functional form, its nonlinear dimension, and the distribution of its Lyapunov spectrum). On the other hand, for any given dataset, the magnitude of NL is determined by the sensitivity and robustness of the nonlinear indicator being used. Thus, an important open task is a systematic evaluation and refinement of current nonlinear detection algorithms (40, 44–48) or the development of other algorithms, with a view to maximizing the value of NL for a general class of nonlinear systems, thereby elevating the ceiling of noise tolerance for chaos detection.

Our results show that NL faithfully follows LE in all standard routes to chaos. This feature of the titration technique should be especially useful for experiments designed to track the bifurcation to chaos (and its ensuing development) in relation to certain system parameters of interest. In practice, because empirical data are inevitably admixed with noise, any intrinsic chaos would be titrated a priori by the attendant noise floor—a phenomenon we refer to as autotitration. In this event, nonlinear detection with a suitable robust indicator would automatically imply NL > 0 because of the presence of a nonzero noise floor. This observation is reassuring for many previous studies on empirical data, in that such robust nonlinear detection can now be taken as satisfying a sufficient (rather than necessary) condition of chaos under the usual assumptions of autonomy (time invariance) and stationarity.

On the other hand, as a consequence of autotitration of empirical data, numerical titration would consistently underestimate the actual NL by an amount equal to the noise floor, with added variability that cannot be removed through repeated titrations on a single dataset alone. To reduce such variability, an ensemble (and/or temporal) average of the NL estimate from multiple independent data samples should be obtained where possible. The average NL so obtained gives (assuming NL > 0 in all samples) a refined estimate of the apparent noise limit, defined here as the difference between the noise ceiling and the noise floor of the data.

Conversely, a failure to detect nonlinearity (or NL = 0) in an empirical dataset does not necessarily constitute a disproof of chaos, because the NL test is sufficient but not necessary. Even with chaotic data, the null hypothesis of linearity could prevail by chance, particularly if the noise floor is near or above the equivalence point, making the apparent noise limit effectively zero or even negative. Because NL cannot track negative values of LE or of the apparent noise limit, the titration procedure is inapplicable once nonlinearity is not detected. In this event, it may be helpful to obtain some statistical characterization of the NL test, such as a histogram of nonlinear vs. linear detections in multiple, distinct data sections (see ref. 24), rather than analyze a single dataset. A nonzero frequency of nonlinear detection would indicate NL > 0, at least in some sections. Furthermore, because this frequency should increase with an increase in the apparent noise limit, such a histogram affords an indirect measure of NL, even though direct numerical titration would be difficult in those data sections in which NL = 0. Thus, a decrease in the frequency of nonlinear detection with varying system conditions or parameter values would imply a corresponding reduction of the apparent noise limit (under similar assumptions of autonomy and stationarity). Such an implicit relation between numerical titration and the likelihood of nonlinear detection lends further support to the previous application of such statistical tools (24) to measure the frequency of nonlinear detection as an indication of corresponding changes in the intensity of chaos or other complex behaviors under varying system conditions.
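A sketch of this statistical characterization, assuming a caller-supplied detector `is_nonlinear(section) -> bool` and non-overlapping sections of a fixed, hypothetical length (ref. 24 may section the data differently). It works on any sliceable sequence and simply reports the fraction of sections in which nonlinearity is detected:

```python
def detection_frequency(x, is_nonlinear, section_len=1000):
    """Fraction of non-overlapping sections of x in which `is_nonlinear` reports nonlinearity.

    A trailing remainder shorter than section_len is ignored.
    """
    n_sections = len(x) // section_len
    if n_sections == 0:
        raise ValueError("series shorter than one section")
    hits = [bool(is_nonlinear(x[i * section_len:(i + 1) * section_len]))
            for i in range(n_sections)]
    return sum(hits) / n_sections
```

Tracking this fraction across recordings made under different conditions gives the indirect, relative measure of the apparent noise limit discussed above.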

Nonautonomous Nonlinear Dynamical Systems.

Although the present technique opens a promising avenue toward chaos detection in short datasets under measurement noise, a major remaining challenge is the discrimination of signals resulting from nonautonomous systems, namely systems that are perturbed by time-varying (deterministic or stochastic) drives. This is a difficult issue because, contrary to common belief, external perturbations could fundamentally alter the complexity of a nonlinear dynamical system, making it difficult to correlate the resultant behavior to that of the unperturbed system. For example, the literature on chaos control and anticontrol (34, 35, 46, 69–71) has demonstrated that judiciously timed inputs to a nonlinear system may serve to suppress or induce the occurrence of chaos, respectively. In another classic example, periodic drives may cause certain nonlinear oscillators (e.g., Duffing, van der Pol) to bifurcate from periodicity to chaos (4). Further, recent studies (72, 73) have shown that certain quasiperiodically driven systems may lapse into a peculiar state called “strange nonchaotic attractor,” which has fractal properties yet with an entirely negative Lyapunov spectrum. The increase in complexity (chaotic or nonchaotic fractal) induced by such periodic or quasiperiodic inputs translates into the condition NL > 0, consistent with our previous finding of a finite noise tolerance of nonlinear detection in these nonautonomous systems (45).

The effects of stochastic inputs (i.e., “dynamic noise”) on system complexity are less well characterized, although some similarities to the deterministic case may be drawn. A moderate level of dynamic noise could induce “chaos-like” (74) or other complex behaviors. Our preliminary results (45) indicate the possibility of detecting such noise-induced complexity with the NL test. On the other hand, if the dynamic noise is excessive, or if the system is heavily filter-like (30), the resultant behavior could reduce to linear dynamics or random noise. Indeed, rigorous mathematical analysis (75) has shown that any nonlinear process (chaotic or otherwise) perturbed by dynamic noise can be approximated arbitrarily closely by a linear moving-average model of sufficiently high order. It is important to recognize, however, that for finite time series, such functional approximation with linear or nonlinear models should take into account the parsimony principle, enforced through objective criteria such as those described previously (24, 45), which are integral to the present NL test. These observations suggest possible extensions of the NL test to characterize the complex behaviors (inclusive of chaos) in a general class of autonomous and nonautonomous systems, although further work is necessary to delineate the limits of its applicability.

Conclusions and Precautions

As with any analytical procedure, some precaution is necessary for the proper application of the titration technique. In particular, care should be exercised under certain extreme conditions such as highly wrinkled tori on the verge of turning into strange attractors (see caption of Fig. 4b) or nonlinear oscillations with very high harmonic content or very long periods relative to the data length. Aside from such anomalies, the titration procedure offers a simple yet conclusive test for chaos in short and noisy time series from time-invariant autonomous systems. Because additive noise is used as a titrant for chaos, the NL test is intrinsically robust to measurement noise. The titration curve graphically depicts why chaos is often obscured by measurement noise, and how it can be reliably assessed when the noise floor is below the equivalence point.

Acknowledgments

We thank R. J. Field (University of Montana) for providing the computer code for his model of the Belousov–Zhabotinsky system. This research was supported in part by U.S. National Science Foundation Grant DMI-9760971 and National Institutes of Health Grant HL50614 (C.-S.P.). M.B. was the recipient of a postdoctoral fellowship from the Ministry of Education and Culture of Spain.

Abbreviations

- LE: Lyapunov exponent
- NL: noise limit

Footnotes

This paper was submitted directly (Track II) to the PNAS office.

References

- 1. Wolf A, Swift J B, Swinney H L, Vastano J A. Physica D. 1985;16:285–317.

- 2. Briggs K. Phys Lett A. 1990;151:27–32.

- 3. Rosenstein M T, Collins J J, De Luca C J. Physica D. 1993;65:117–134.

- 4. Drazin P G. Nonlinear Systems. Cambridge, U.K.: Cambridge Univ. Press; 1992.

- 5. Dämmig M, Mitschke F. Phys Lett A. 1993;178:385–394.

- 6. Palus M, Novotna D. Phys Rev Lett. 1999;83:3406–3409.

- 7. Eckmann J-P, Ruelle D. Physica D. 1992;56:185–187.

- 8. Provenzale A, Smith L A, Vio R, Murante G. Physica D. 1992;58:31–49.

- 9. Grassberger P, Procaccia I. Physica D. 1983;9:189–208.

- 10. Grassberger P, Procaccia I. Phys Rev A. 1983;28:2591–2593.

- 11. Sugihara G, May R M. Nature (London) 1990;344:734–741. doi: 10.1038/344734a0.

- 12. Wilkinson P B, Fromhold T M, Eaves L, Sheard F W, Miura N, Takamasu T. Nature (London) 1996;380:608–610.

- 13. Gaspard P, Briggs M E, Francis M K, Sengers J V, Gammon R W, Dorfman J R, Calabrese R V. Nature (London) 1998;394:865–868.

- 14. Rind D. Science. 1999;284:105–107. doi: 10.1126/science.284.5411.105.

- 15. Murray N, Holman M. Science. 1999;283:1877–1881. doi: 10.1126/science.283.5409.1877.

- 16. Epstein I R. Nature (London) 1995;374:321–327. doi: 10.1038/374321a0.

- 17. Faure P, Korn H. Proc Natl Acad Sci USA. 1997;94:6506–6511. doi: 10.1073/pnas.94.12.6506.

- 18. Costantino R F, Desharnais R A, Cushing J M, Dennis B. Science. 1997;275:389–391. doi: 10.1126/science.275.5298.389.

- 19. Makarenko V, Llinas R. Proc Natl Acad Sci USA. 1998;95:15747–15752. doi: 10.1073/pnas.95.26.15747.

- 20. Coffey D S. Nat Med. 1998;4:882–885. doi: 10.1038/nm0898-882.

- 21. Lindner J F, Meadows B K, Marsh T L, Ditto W L, Bulsara A R. Int J Bifurcat Chaos. 1998;8:767–781.

- 22. Pei X, Moss F. Nature (London) 1996;379:618–621. doi: 10.1038/379618a0.

- 23. Sugihara G, Allan W, Sobel D, Allan K D. Proc Natl Acad Sci USA. 1996;93:2608–2613. doi: 10.1073/pnas.93.6.2608.

- 24. Poon C-S, Merrill C K. Nature (London) 1997;389:492–495. doi: 10.1038/39043.

- 25. Decoster G P, Labys W C, Mitchell D W. J Futures Markets. 1992;12:291–305.

- 26. Kopel M. J Evol Econ. 1997;7:269–289.

- 27. Smith R D. Soc Res Online. 1998;3:U89–U109.

- 28. Bolland K A, Atherton C R. Fam Soc J Contemp Hum Serv. 1999;80:367–373.

- 29. Hayward T, Preston J. J Inform Sci. 1999;25:173–182.

- 30. Barnett W A, Serletis A. J Econ Dyn Control. 2000;24:703–724.

- 31. Lorenz E N. Nature (London) 1991;353:241–244.

- 32. Skinner J E, Pratt C M, Vybiral T A. Am Heart J. 1993;125:731–743. doi: 10.1016/0002-8703(93)90165-6.

- 33. Martinerie J, Adam C, Le Van Quyen M, Baulac M, Clemenceau S, Renault B, Varela F J. Nat Med. 1998;4:1173–1176. doi: 10.1038/2667.

- 34. Garfinkel A, Spano M L, Ditto W L, Weiss J N. Science. 1992;257:1230–1235. doi: 10.1126/science.1519060.

- 35. Schiff S J, Jerger K, Duong D H, Chang T, Spano M L, Ditto W L. Nature (London) 1994;370:615–620. doi: 10.1038/370615a0.

- 36. Cuomo K M, Oppenheim A V. Phys Rev Lett. 1993;71:65–68. doi: 10.1103/PhysRevLett.71.65.

- 37. VanWiggeren G D, Roy R. Science. 1998;279:1198–1200. doi: 10.1126/science.279.5354.1198.

- 38. Pecora L M, Carroll T L, Johnson G A, Mar D J, Heagy J F. Chaos. 1997;7:520–543. doi: 10.1063/1.166278.

- 39. Pecora L. Phys World. 1998;11:25–26.

- 40. Kennel M B, Isabelle S. Phys Rev A. 1992;46:3111–3118. doi: 10.1103/physreva.46.3111.

- 41. Weigend A S, Gershenfeld N A, editors. Time Series Prediction, Santa Fe Institute Studies in Complexity. Reading, MA: Addison-Wesley; 1994.

- 42. Theiler J, Eubank S, Longtin A, Galdrikian B, Farmer J D. Physica D. 1992;58:77–94.

- 43. Schreiber T. Phys Rev Lett. 1998;80:2105–2108.

- 44. Chang T, Sauer T, Schiff S J. Chaos. 1995;5:118–126. doi: 10.1063/1.166093.

- 45. Barahona M, Poon C-S. Nature (London) 1996;381:215–217.

- 46. So P, Ott E, Sauer T, Gluckman B J, Grebogi C, Schiff S J. Phys Rev E. 1997;55:5398–5417.

- 47. Christini D J, Collins J J. Phys Rev Lett. 1995;75:2782–2785. doi: 10.1103/PhysRevLett.75.2782.

- 48. Pierson D, Moss F. Phys Rev Lett. 1995;75:2124–2127. doi: 10.1103/PhysRevLett.75.2124.

- 49. Theiler J, Rapp P E. Electroencephalogr Clin Neurophysiol. 1993;98:213–222. doi: 10.1016/0013-4694(95)00240-5.

- 50. Perry J N, Woiwod I P, Smith R H, Morse D. Science. 1997;276:1881.

- 51. Dettmann C P, Cohen E G D, van Beijeren H. Nature (London) 1999;401:875.

- 52. Grassberger P, Schreiber T. Nature (London) 1999;401:875–876.

- 53. Poon C-S. J Cardiovasc Electrophysiol. 2000;11:235. doi: 10.1111/j.1540-8167.2000.tb00329.x.

- 54. Kostelich E J, Schreiber T. Phys Rev E. 1993;48:1752–1763. doi: 10.1103/physreve.48.1752.

- 55. Walker D M. Phys Lett A. 1998;249:209–217.

- 56. Theiler J, Eubank S. Chaos. 1993;3:771–782. doi: 10.1063/1.165936.

- 57. Diks C, Vanhouwelingen J C, Takens F, Degoede J. Phys Lett A. 1995;201:221–228.

- 58. Stone L, Landan G, May R M. Proc R Soc London Ser B. 1996;263:1509–1513.

- 59. Glass L, Mackey M C. Ann NY Acad Sci. 1979;316:214–235. doi: 10.1111/j.1749-6632.1979.tb29471.x.

- 60. Bünner M J, Ciofini M, Giaquinta A, Hegger R, Kantz H, Meucci R, Politi A. Eur Phys J D. 2000;10:177–187.

- 61. Györgyi L, Field R J. Nature (London) 1992;355:808–810.

- 62. Ruelle D, Takens F. Commun Math Phys. 1971;20:167–192.

- 63. Matsugu M, Duffin J, Poon C-S. J Comput Neurosci. 1998;5:35–51. doi: 10.1023/a:1008826326829.

- 64. Curry J H, Yorke J A. Lecture Notes in Mathematics. Vol. 668. Berlin: Springer; 1978. pp. 48–66.

- 65. Franceschini V. Physica D. 1983;6:285–304.

- 66. Farmer J D. Physica D. 1982;4:366–393.

- 67. Harrison M A, Lai Y C. Phys Rev E. 1999;59:R3799–R3802.

- 68. Harrison M A, Lai Y C. Int J Bifurcat Chaos. 2000;10:1471–1483.

- 69. Ott E, Grebogi C, Yorke J A. Phys Rev Lett. 1990;64:1196–1199. doi: 10.1103/PhysRevLett.64.1196.

- 70. Shinbrot T, Ott E, Grebogi C, Yorke J A. Phys Rev Lett. 1990;65:3215–3218. doi: 10.1103/PhysRevLett.65.3215.

- 71. Jackson E A, Hübler A. Physica D. 1990;44:407–420.

- 72. Grebogi C, Ott E, Pelikan S, Yorke J A. Physica D. 1984;13:261–268.

- 73. Ditto W L, Spano M L, Savage H T, Rauseo S N, Heagy J, Ott E. Phys Rev Lett. 1990;65:533–536. doi: 10.1103/PhysRevLett.65.533.

- 74. Stone E F. Phys Lett A. 1990;148:434–442.

- 75. Bickel P J, Bühlmann P. Proc Natl Acad Sci USA. 1996;93:12128–12131. doi: 10.1073/pnas.93.22.12128.