Abstract

The response of a neuron to a time-dependent stimulus, as measured in a Peri-Stimulus-Time-Histogram (PSTH), exhibits an intricate temporal structure that reflects potential temporal coding principles. Here we analyze the encoding and decoding of PSTHs for spiking neurons with arbitrary refractoriness and adaptation. As a modeling framework, we use the spike response model, also known as the generalized linear neuron model. Because of refractoriness, the effect of the most recent spike on the spiking probability a few milliseconds later is very strong. The influence of the last spike needs therefore to be described with high precision, while the rest of the neuronal spiking history merely introduces an average self-inhibition or adaptation that depends on the expected number of past spikes but not on the exact spike timings. Based on these insights, we derive a ‘quasi-renewal equation’ which is shown to yield an excellent description of the firing rate of adapting neurons. We explore the domain of validity of the quasi-renewal equation and compare it with other rate equations for populations of spiking neurons. The problem of decoding the stimulus from the population response (or PSTH) is addressed analogously. We find that for small levels of activity and weak adaptation, a simple accumulator of the past activity is sufficient to decode the original input, but when refractory effects become large decoding becomes a non-linear function of the past activity. The results presented here can be applied to the mean-field analysis of coupled neuron networks, but also to arbitrary point processes with negative self-interaction.

Author Summary

How can information be encoded and decoded in populations of adapting neurons? A quantitative answer to this question requires a mathematical expression relating neuronal activity to the external stimulus, and, conversely, stimulus to neuronal activity. Although widely used equations and models exist for the special problem of relating external stimulus to the action potentials of a single neuron, the analogous problem of relating the external stimulus to the activity of a population has proven more difficult. There is a bothersome gap between the dynamics of single adapting neurons and the dynamics of populations. Moreover, if we ignore the single neurons and describe directly the population dynamics, we are faced with the ambiguity of the adapting neural code. The neural code of adapting populations is ambiguous because it is possible to observe a range of population activities in response to a given instantaneous input. Somehow the ambiguity is resolved by the knowledge of the population history, but how precisely? In this article we use approximation methods to provide mathematical expressions that describe the encoding and decoding of external stimuli in adapting populations. The theory presented here helps to bridge the gap between the dynamics of single neurons and that of populations.

Introduction

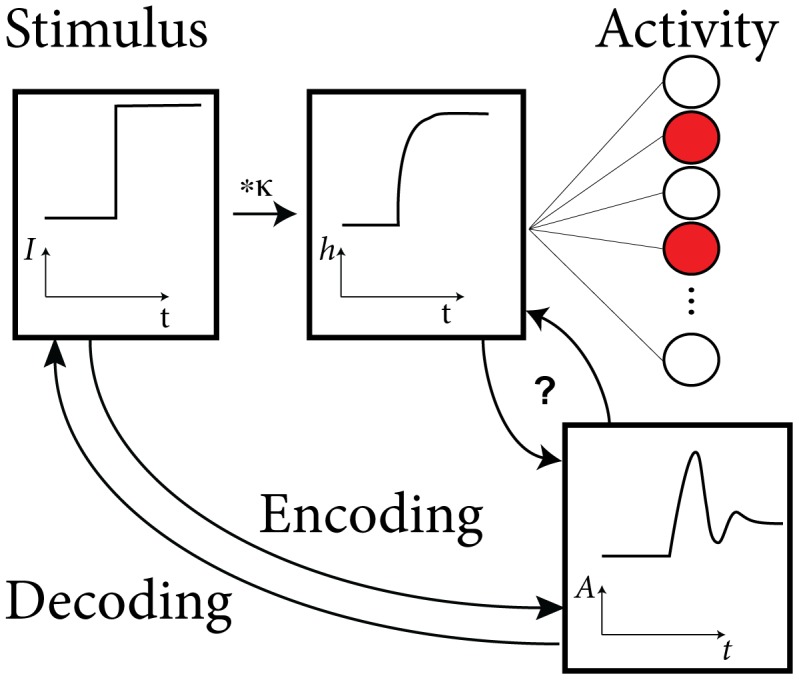

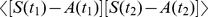

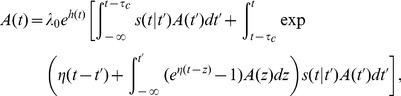

Encoding and decoding of information with populations of neurons is a fundamental question of computational neuroscience [1]–[3]. A time-varying stimulus can be encoded in the active fraction of a population of neurons, a coding procedure that we will refer to as population coding (Fig. 1). Given the need for fast processing of information by the brain [4], population coding is an efficient way to encode information by averaging across a pool of noisy neurons [5], [6] and is likely to be used at least to some degree by the nervous system [7]. For a population of identical neurons, the instantaneous population firing rate is proportional to the Peri-Stimulus Time Histogram (PSTH) of a single neuron driven repeatedly by the same stimulus over many trials.

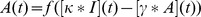

Figure 1. Encoding and Decoding with neuronal populations.

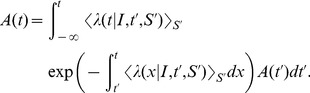

What is the function that relates an arbitrary stimulus to the population activity of adapting neurons? We focus on the problem of relating the filtered input  to the activity

to the activity  . The population activity is the fraction of active neurons (red) in the population of neurons (right). All neurons are identical and receive the same stimulus. One possible stimulus

. The population activity is the fraction of active neurons (red) in the population of neurons (right). All neurons are identical and receive the same stimulus. One possible stimulus  is a step current (left).

is a step current (left).

When driven by a step change in the input, the population of neurons coding for this stimulus responds first strongly but then adapts to the stimulus. To cite a few examples, the activity of auditory nerve fibers adapt to pure tones [8], cells in the retina and the visual cortex adapt to contrast [9], [10] and neurons in the inferior temporal cortex adapt to higher order structures of images [11]. Adaptation is energy-efficient [12] but leads to a potentially ambiguous code because adapting responses generate a population activity which does not directly reflect the momentary strength of the stimuli [13]. Putting the case of sensory illusions aside, the fact that our perception of constant stimuli does not fade away indicates that the adapting responses can be efficiently decoded by the brain areas further down the processing stream. In fact, illusions such as the motion after-effect are believed to reflect errors in decoding the activity of neuronal populations [14]. But what is the correct rule to decode population activity? What elements of the population history are relevant? What are the basic principles?

Synapse- and network-specific mechanisms merge with intrinsic neuronal properties to produce an adapting population response. Here we focus on the intrinsic mechanisms, commonly called spike-frequency adaptation. Spike-frequency adaptation appears in practically all neuron types of the nervous system [15]. Biophysical processes that can mediate spike-frequency adaptation include spike-triggered activation/inactivation of ion-channels [16]–[18] and a spike-triggered increase in the firing threshold [19]–[22]. Neurons adapt a little more each time they emit a spike, and it is the cumulative effect of all previous spikes that sets the level of adaptation. The effect of a single spike on future spiking probability cannot be summarized by a single time constant. Rather, the spike-triggered adaptation unfolds on multiple time scales and varies strongly across cell-types [22], [23].

Mean-field methods were used to describe: attractors [24]–[28], rapid-responses [6], [29] and signal propagation [30]. While adaptation is important to correctly predict the activity of single neurons [22], [31]–[33], it is difficult to include it in mean-field methods. A theory relating spike-frequency adaptation to population dynamics should be general enough to encompass a variety of different spike-triggered adaptation profiles, as observed in experiments. In the literature we find six main approaches to the population coding problem. The first and most simple one formulates the rate of a neuronal population (or the time-dependent rate in a PSTH) as a linear function of the stimulus. This phenomenological model relates to the concept of receptive fields [34] and can be made quantitative using a Wiener expansion [35]. Yet, early experimental tests showed that linear filtering must be complemented with a non-linear function [35], [36]. The linear-non-linear model can thus be considered as the second approach to population coding. In combination with a Poisson spike generator it is called the LNP model for Linear-Nonlinear-Poisson. It makes accurate predictions of experimental measurements for stationary stimulus ensembles, but fails when the stimulus switches either its first or second order statistics. Neural refractoriness is in part responsible for effects not taken into account in this linear-nonlinear model [37]–[40]. In a third approach proposed by Wilson and Cowan [41] the population activity is the solution to a non-linear differential equation. Unfortunately this equation has only a heuristic link to the underlying neuronal dynamics and cannot account for rapid transients in the population response. The fourth approach formulates the population activity in terms of an integral equation [6], [41], [42] which can be interpreted as a (time-dependent) renewal theory. While this renewal theory takes into account refractoriness (i.e. the effect of the most recent spike) and captures the rapid transients of the population response and PSTH, neither this one nor any of the other encoding frameworks mentioned above consider adaptive effects. To include adaptation into previously non-adaptive models, a common approach is to modify the effective input by rescaling the external input with a function that depends on the mean neuronal firing rate in the past [15], [43], [44]. This forms the fifth method. For example, Benda and Herz [15] suggested a phenomenological framework in which the linear-non-linear approach is modified as a function of the past activity while Rauch et al. [43] calculated the effective rate in integrate-and-fire neurons endowed with a frequency-dependent modification of the input current. Finally, there is also a sixth method to determine the population activity of adapting populations. Inspired by the Fokker-Planck approach for integrate-and-fire neurons [27], this last approach finds the population activity by evolving probability distributions of one or several state variables [45]–[49]. Isolating the population activity then involves solving a non-linear system of partial differential equations.

The results described in the present article are based on two principal insights. The first one is that adaptation reduces the effect of the stimulus primarily as a function of the expected number of spikes in the recent history and only secondarily as a function of the higher moments of the spiking history such as spike-spike correlations. We derive such an expansion of the history moments from the single neuron parameters. The second insight is that the effects of the refractory period are well captured by renewal theory and can be superimposed on the effects of adaptation.

The article is organized as follows: after a description of the population dynamics, we derive a mathematical expression that predicts the momentary value of the population activity from current and past values of the input. Then, we verify that the resulting encoding framework accurately describes the response to input steps. We also study the accuracy of the encoding framework in response to fluctuating stimuli and analyze the problem of decoding. Finally, we compare with simpler theories such as renewal theory and a truncated expansion of the past history moments.

Results

To keep the discussion transparent, we focus on a population of unconnected neurons. Our results can be generalized to coupled populations using standard theoretical methods [3], [6], [27].

Encoding Time-dependent Stimuli in the Population Activity

How does a population of adapting neurons encode a given stimulating current  ? Each neuron in the population will produce a spike train, denoted by

? Each neuron in the population will produce a spike train, denoted by  , such that the population can be said to respond with a set of spike trains. Using the population approach, we want to know how the stimulus is reflected in the fraction of neurons that are active at time

, such that the population can be said to respond with a set of spike trains. Using the population approach, we want to know how the stimulus is reflected in the fraction of neurons that are active at time  , that is, the population activity

, that is, the population activity  (Fig. 1). The population activity (or instantaneous rate of the population) is a biologically relevant quantity in the sense that a post-synaptic neuron further down the processing stream receives inputs from a whole population of presynaptic neurons and is therefore at each moment in time driven by the spike arrivals summed over the presynaptic population, i. e. the presynaptic population activity.

(Fig. 1). The population activity (or instantaneous rate of the population) is a biologically relevant quantity in the sense that a post-synaptic neuron further down the processing stream receives inputs from a whole population of presynaptic neurons and is therefore at each moment in time driven by the spike arrivals summed over the presynaptic population, i. e. the presynaptic population activity.

Mathematically, we consider a set of spike trains in which spikes are represented by Dirac-pulses centered on the spike time  :

:  [3]. The population activity is defined as the expected proportion of active neurons within an infinitesimal time interval. It corresponds, in the limit of a large population and small time interval, to the number of active neurons

[3]. The population activity is defined as the expected proportion of active neurons within an infinitesimal time interval. It corresponds, in the limit of a large population and small time interval, to the number of active neurons  in the time interval

in the time interval  divided by the total number of neurons

divided by the total number of neurons  and the time interval

and the time interval  [3]:

[3]:

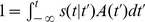

| (1) |

The angular brackets  denote the expected value over an ensemble of identical neurons. Experimentally, the population activity is estimated on a finite time interval and for a finite population. Equivalently the population activity can be considered as an average over independent presentations of a stimulus in only one neuron. In this sense, the population activity is equivalent to both the time-dependent firing intensity and the Peri-Stimulus Time Histogram (PSTH).

denote the expected value over an ensemble of identical neurons. Experimentally, the population activity is estimated on a finite time interval and for a finite population. Equivalently the population activity can be considered as an average over independent presentations of a stimulus in only one neuron. In this sense, the population activity is equivalent to both the time-dependent firing intensity and the Peri-Stimulus Time Histogram (PSTH).

Since the population activity represents the instantaneous firing probability, it is different from the conditional firing intensity,  , which further depends on the precise spiking history, or past spike train

, which further depends on the precise spiking history, or past spike train  . Suppose we have observed a single neuron for a long time (e.g. 10 seconds). During that time we have recorded its time dependent input current

. Suppose we have observed a single neuron for a long time (e.g. 10 seconds). During that time we have recorded its time dependent input current  and observed its firing times

and observed its firing times  . Knowing the firing history

. Knowing the firing history  for

for  and the time-dependent driving current

and the time-dependent driving current  for

for  , the variable

, the variable  describes the instantaneous rate of the neuron to fire again at time

describes the instantaneous rate of the neuron to fire again at time  . Intuitively,

. Intuitively,  reflects a likelihood to spike at time

reflects a likelihood to spike at time  for a neuron having a specific history while

for a neuron having a specific history while  is the firing rate at time

is the firing rate at time  averaged on all possible histories (see Methods):

averaged on all possible histories (see Methods):

| (2) |

Ideally, one could hope to estimate  directly from the data. However, given the dimensionality of

directly from the data. However, given the dimensionality of  and

and  , model-free estimation is not feasible. Instead we use the Spike Response Model (SRM; [6], [50]–[52]), which is an example of a Generalized Linear Model [53], in order to parametrize

, model-free estimation is not feasible. Instead we use the Spike Response Model (SRM; [6], [50]–[52]), which is an example of a Generalized Linear Model [53], in order to parametrize  , but other parametrizations outside the exponential family are also possible. In particular,

, but other parametrizations outside the exponential family are also possible. In particular,  can also be defined for nonlinear neuron models with diffusive noise in the input, even though explicit expressions are not available. The validity of the SRM as a model of neuronal spike generation has been verified for various neuron types and various experimental protocols [22], [31], [32]. In the SRM, the conditional firing intensity

can also be defined for nonlinear neuron models with diffusive noise in the input, even though explicit expressions are not available. The validity of the SRM as a model of neuronal spike generation has been verified for various neuron types and various experimental protocols [22], [31], [32]. In the SRM, the conditional firing intensity  increases with the effective input

increases with the effective input  :

:

| (3) |

where  is the total driving force of the neuron:

is the total driving force of the neuron:

| (4) |

where ‘ ’ denotes the convolution,

’ denotes the convolution,  is the input current convolved with

is the input current convolved with  the membrane filter,

the membrane filter,  encodes the effect of each spike on the probability of spiking,

encodes the effect of each spike on the probability of spiking,  is a scaling constant related to the instantaneous rate at the threshold with units of inverse time (see Methods for model parameters). The link-function

is a scaling constant related to the instantaneous rate at the threshold with units of inverse time (see Methods for model parameters). The link-function  can take different shapes depending on the noise process [3]. Here we will use an exponential link-function since it was shown to fit the noisy adaptive-exponential-integrate-and-fire model [54] as well as experimental data [22], [32], [55]. The exponential link-function:

can take different shapes depending on the noise process [3]. Here we will use an exponential link-function since it was shown to fit the noisy adaptive-exponential-integrate-and-fire model [54] as well as experimental data [22], [32], [55]. The exponential link-function:  corresponds to

corresponds to  after absorbing the scaling parameter

after absorbing the scaling parameter  in the constant

in the constant  and

and  and in the functions

and in the functions  and

and  to make these unit-free.

to make these unit-free.

To see that the function  can implement both adaptation and refractoriness, let us first distinguish these processes conceptually. The characteristic signature of refractoriness is that the interspike interval distribution for constant input is zero or close to zero for very short intervals (e.g. one millisecond) - and in the following we use this characteristic signature as a definition of refractoriness. With this definition, a Hodgkin-Huxley model (with or without noise) or a leaky integrate-and-fire model (with or without diffusive noise) are refractory, whereas a Linear-Nonlinear-Poisson Model is not. In fact, every neuron model with intrinsic dynamics exhibits refractoriness, but Poissonian models do not.

can implement both adaptation and refractoriness, let us first distinguish these processes conceptually. The characteristic signature of refractoriness is that the interspike interval distribution for constant input is zero or close to zero for very short intervals (e.g. one millisecond) - and in the following we use this characteristic signature as a definition of refractoriness. With this definition, a Hodgkin-Huxley model (with or without noise) or a leaky integrate-and-fire model (with or without diffusive noise) are refractory, whereas a Linear-Nonlinear-Poisson Model is not. In fact, every neuron model with intrinsic dynamics exhibits refractoriness, but Poissonian models do not.

While refractoriness refers to the interspike-interval distribution and therefore to the dependence upon the most recent spike, adaptation refers to the effect of multiple spikes. Adaptation is most clearly observed as a successive increase of interspike intervals in response to a step current. In contrast, a renewal model [56], where interspike intervals are independent of each other, does not exhibit adaptation (but does exhibit refractoriness). Similarly, a leaky or exponential integrate-and-fire model with diffusive noise does not show adaptation. A Hodgkin-Huxley model with the original set of parameters exhibits very little adaptation, but addition of a slow ion current induces adaptation.

Conceptually, contributions of multiple spikes must accumulate to generate spike frequency adaptation. In the Spike Response Model, this accumulation is written as a convolution:  . If

. If  for

for  and vanishes elsewhere, the model exhibits absolute refractoriness of duration

and vanishes elsewhere, the model exhibits absolute refractoriness of duration  but no adaptation. If

but no adaptation. If  for

for  and

and  with

with  ms, then the model exhibits adaptation in addition to refractoriness. In all the simulations, we use

ms, then the model exhibits adaptation in addition to refractoriness. In all the simulations, we use  with

with  and

and  , With this choice of

, With this choice of  we are in agreement with experimental results on cortical neurons [22], but the effects of adaptation and refractoriness cannot be separated as clearly as in the case of a model with absolute refractoriness. Loosely speaking, the long time constant

we are in agreement with experimental results on cortical neurons [22], but the effects of adaptation and refractoriness cannot be separated as clearly as in the case of a model with absolute refractoriness. Loosely speaking, the long time constant  causes adaptation, whereas the short time constant

causes adaptation, whereas the short time constant  mainly contributes to refractoriness. In fact, for

mainly contributes to refractoriness. In fact, for  and

and  equal to the membrane time constant, the model becomes equivalent to a leaky integrate-and-fire neuron [3], so that the neuron is refractory and non-adapting. In the simulations,

equal to the membrane time constant, the model becomes equivalent to a leaky integrate-and-fire neuron [3], so that the neuron is refractory and non-adapting. In the simulations,  is longer than the membrane time constant so that, for very strong stimuli, it may also contribute to adaptation. We note that the formalism developed in this paper does not rely on our specific choice of

is longer than the membrane time constant so that, for very strong stimuli, it may also contribute to adaptation. We note that the formalism developed in this paper does not rely on our specific choice of  . We only require (i) causality by imposing

. We only require (i) causality by imposing  for

for  and (ii)

and (ii)  so that the effect of a past spike decreases over time.

so that the effect of a past spike decreases over time.

The effects described by  can be mediated by a dynamic threshold as well as spike-triggered currents [22]. Throughout the remainder of the text we will refer to

can be mediated by a dynamic threshold as well as spike-triggered currents [22]. Throughout the remainder of the text we will refer to  as the effective spike after-potential (SAP). It is, however, important to note that

as the effective spike after-potential (SAP). It is, however, important to note that  has no units, i.e. it relates to an appropriately scaled version of the experimentally measured spike after-potential. A depolarizing (facilitating) SAP is associated with

has no units, i.e. it relates to an appropriately scaled version of the experimentally measured spike after-potential. A depolarizing (facilitating) SAP is associated with  , while a hyperpolarizing (adapting) SAP is associated with

, while a hyperpolarizing (adapting) SAP is associated with  .

.

Quasi-Renewal Theory

In a population of neurons, every neuron has a different spiking history defined by its past spike train  where

where  is the most recent spike,

is the most recent spike,  the previous one and so on. To find the population activity at any given time, we hypothesize that the strong effect of the most recent spike needs to be considered explicitly while the rest of the spiking history merely introduces a self-inhibition that is similar for all neurons and that depends only on the average firing profile in the past. Thus for each neuron we write the past spike train as

the previous one and so on. To find the population activity at any given time, we hypothesize that the strong effect of the most recent spike needs to be considered explicitly while the rest of the spiking history merely introduces a self-inhibition that is similar for all neurons and that depends only on the average firing profile in the past. Thus for each neuron we write the past spike train as  where

where  is the time of the last spike. Our hypothesis corresponds to the approximation

is the time of the last spike. Our hypothesis corresponds to the approximation  , i.e. the last spike needs to be treated explicitly, but we may average across earlier spike times. This approximation is not appropriate for intrinsically bursting neurons, but it should apply well to other cell types (fast-spiking, non-fast-spiking, delayed, low-threshold). According to this hypothesis, and in analogy to the time-dependent renewal theory [3], [42] we find (derivation in Methods):

, i.e. the last spike needs to be treated explicitly, but we may average across earlier spike times. This approximation is not appropriate for intrinsically bursting neurons, but it should apply well to other cell types (fast-spiking, non-fast-spiking, delayed, low-threshold). According to this hypothesis, and in analogy to the time-dependent renewal theory [3], [42] we find (derivation in Methods):

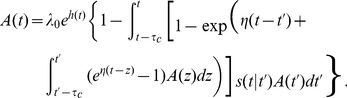

|

(5) |

Unfortunately Eq. 5 remains insolvable, because we do not know  . Using Eqs. 3 and 4 we find:

. Using Eqs. 3 and 4 we find:

| (6) |

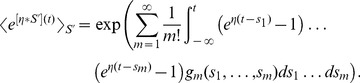

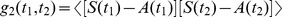

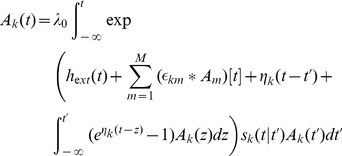

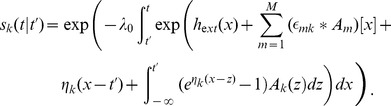

As mentioned above, we hypothesize that the spiking history before the previous spike merely inhibits subsequent firing as a function of the average spiking profile in the past. In order to formally implement such an approximation, we introduce a series expansion [57] in terms of the spiking history moments (derivation in Methods) where we exploit the fact that  is a moment generating function:

is a moment generating function:

|

(7) |

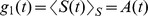

The first history moment  relates to the expected number of spikes at a given time

relates to the expected number of spikes at a given time  . The second history moment considers the spike-spike correlations

. The second history moment considers the spike-spike correlations  and so on for the higher moments.

and so on for the higher moments.

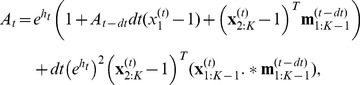

We truncate the series expansion resulting from Eq. 7 at the first order ( ). We can then write Eq. 6 as:

). We can then write Eq. 6 as:

| (8) |

We can insert Eq. 8 in Eq. 5 so as to solve for  as a function of the filtered input

as a function of the filtered input  . The solutions can be found using numerical methods.

. The solutions can be found using numerical methods.

We note that by removing the integral of  from Eq. 8 we return exactly to the renewal equation for population activity (

from Eq. 8 we return exactly to the renewal equation for population activity ( ). Adaptation reduces the driving force by an amount proportional to the average spike density before

). Adaptation reduces the driving force by an amount proportional to the average spike density before  , that is, the average spiking density before the most recent spike. In other words, instead of using the specific spike history of a given neuron, we work with the average history except for the most recent spike which we treat explicitly. We call Eqs. 5 and 8 the Quasi-Renewal equation (QR) to acknowledge its theoretical foundations. It is renewal-like, yet, we do not assume the renewal condition since a new spike does not erase the effect of the previous history (see Methods).

, that is, the average spiking density before the most recent spike. In other words, instead of using the specific spike history of a given neuron, we work with the average history except for the most recent spike which we treat explicitly. We call Eqs. 5 and 8 the Quasi-Renewal equation (QR) to acknowledge its theoretical foundations. It is renewal-like, yet, we do not assume the renewal condition since a new spike does not erase the effect of the previous history (see Methods).

Encoding and Decoding Time-Dependent Stimuli

Let us now assess the domain of validity of the QR theory by comparing it with direct simulations of a population of SRM neurons. To describe the single neurons dynamics, we use a set of parameters characteristic of L2–3 pyramidal cells [22]. The SAP is made of two exponentials: one with a short time constant (30 ms) but large amplitude and another with a long time constant (400 ms) but a small amplitude. The results presented here are representative of results that can be obtained for any other physiological set of parameters. For details on the simulation, see Methods.

The response to a step increase in stimulating current is a standard paradigm to assess adaptation in neurons and used here as a qualitative test of our theory. We use three different step amplitudes: weak, medium and strong. The response of a population of, say, 25,000 model neurons to a strong step increase in current starts with a very rapid peak of activity. Indeed, almost immediately after the strong stimulus onset, most of the neurons are triggered to emit a spike. Immediately after firing at  , the membrane potential of theses neurons is reset to a lower value by the contribution of the SAP;

, the membrane potential of theses neurons is reset to a lower value by the contribution of the SAP;  . The lower membrane potential leads to a strong reduction of the population activity. Neurons which have fired at time

. The lower membrane potential leads to a strong reduction of the population activity. Neurons which have fired at time  are ready to fire again only after the SAP has decreased sufficiently so that the membrane potential can approach again the threshold

are ready to fire again only after the SAP has decreased sufficiently so that the membrane potential can approach again the threshold  . We can therefore expect that a noiseless population of neurons will keep on oscillating with the intrinsic firing frequency of the neurons [6]; however, due to stochastic spike emission of a noisy population the neurons in the population gradually de-synchronize. The damped-oscillation that we see in response to a strong step stimulus (Fig. 2C) is the result of this gradual de-synchronization. Similar damped oscillations at the intrinsic firing frequency of the neurons have also been observed for a Spike Response Model with renewal properties [6], i.e., a model that only remembers the effect of the last spike.

. We can therefore expect that a noiseless population of neurons will keep on oscillating with the intrinsic firing frequency of the neurons [6]; however, due to stochastic spike emission of a noisy population the neurons in the population gradually de-synchronize. The damped-oscillation that we see in response to a strong step stimulus (Fig. 2C) is the result of this gradual de-synchronization. Similar damped oscillations at the intrinsic firing frequency of the neurons have also been observed for a Spike Response Model with renewal properties [6], i.e., a model that only remembers the effect of the last spike.

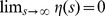

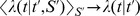

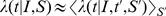

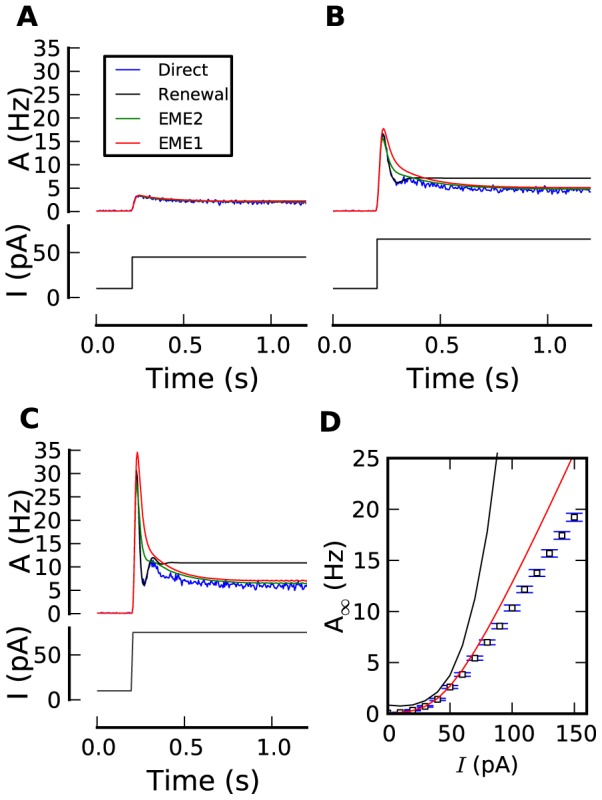

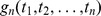

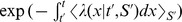

Figure 2. Quasi-renewal theory for step responses with realistic SAP.

(A–C) Population activity responses (top panels; PSTH from 25,000 repeated simulations in blue, quasi-renewal theory in black) to the step current input as shown in bottom panels (black). The input step size was increased from A to C. The mean first and last interspike interval were 458 2 ms and 504

2 ms and 504 2 ms, respectively, in A, 142.1

2 ms, respectively, in A, 142.1 0.4 and 214

0.4 and 214 1 ms in B, 93.5

1 ms in B, 93.5 0.2 and 163.2

0.2 and 163.2 0.5 ms in C. (D) Steady-state activity vs. input current for simulations of 25,000 independent neurons (blue) and quasi-renewal theory (black). The SAP was fixed to

0.5 ms in C. (D) Steady-state activity vs. input current for simulations of 25,000 independent neurons (blue) and quasi-renewal theory (black). The SAP was fixed to  [22]. For other model parameters see Models and Methods.

[22]. For other model parameters see Models and Methods.

In contrast to renewal models (i.e., models with refractoriness but no adaptation), we observe in Fig. 2C that the population activity decays on a slow time scale, taking around one second to reach a steady state. This long decay is due to adaptation in the single-neuron dynamics, here controlled by the slow time constant  ms. The amount of adaptation can be quantified if we compare, for a given neuron its first interspike interval after stimulus onset with the last interspike interval. The mean first interspike interval (averaged over all neurons) for the strong step stimulus is 93 ms while the last interval is nearly twice as long (163 ms), indicating strong adaptation. For smaller steps, the effect of refractoriness is less important so that adaptation becomes the most prominent feature of the step response (Fig. 2A). An appropriate encoding framework should reproduce both the refractoriness-based oscillations and the adaptation-based decay.

ms. The amount of adaptation can be quantified if we compare, for a given neuron its first interspike interval after stimulus onset with the last interspike interval. The mean first interspike interval (averaged over all neurons) for the strong step stimulus is 93 ms while the last interval is nearly twice as long (163 ms), indicating strong adaptation. For smaller steps, the effect of refractoriness is less important so that adaptation becomes the most prominent feature of the step response (Fig. 2A). An appropriate encoding framework should reproduce both the refractoriness-based oscillations and the adaptation-based decay.

The QR equation describes well both the damped oscillation and the adapting tail of the population activity response to steps (Fig. 2). Also, the steady state activity is predicted over a large range (Fig. 2D). We note that an adaptation mechanism that is essentially subtractive on the membrane potential (Eq. 4) leads here to a divisive effect on the frequency-current curve. Altogether, we conclude the QR theory accurately encode the response to step stimulus.

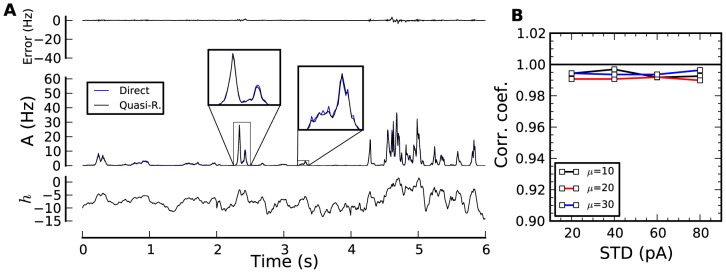

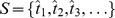

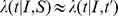

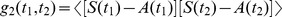

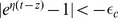

Step changes in otherwise constant input are useful for qualitative assessment of the theory but quite far from natural stimuli. Keeping the same SAP as in Fig. 2, we replace the piecewise-constant input by a fluctuating current (here Ornstein-Uhlenbeck process) and study the validity of QR over a range of input mean and standard deviation (STD), see Fig. 3. As the STD of the input increases, the response of the population reaches higher activities (maximum activity at STD = 80 pA was 89 Hz). The prediction by the QR theory is almost perfect with correlation coefficients consistently higher than 0.98. Note that the correlation coefficient is bounded above by the finite-size effects in estimating the average of the 25,000-neuron simulation. Over the range of input studied, there was no tendency of either overestimating or underestimating the population activity (probability of positive error was 0.5). There was only a weak tendency of increased discrepancies between theory and simulation at higher activity (correlation coefficient between simulated activity and mean square error was 0.25).

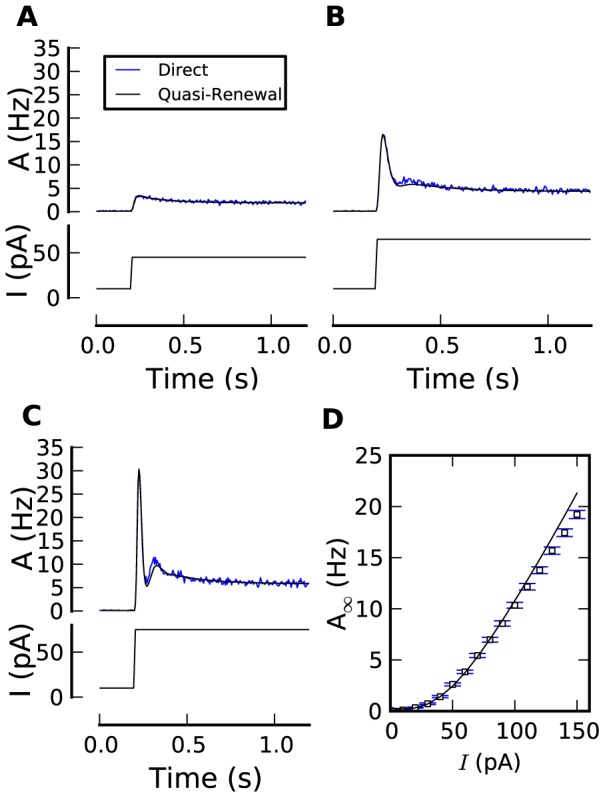

Figure 3. Encoding time-dependent stimuli in the population activity.

(A) Population activity responses (middle panel; PSTH from 25,000 repeated simulations in blue, quasi-renewal theory in black) to the time-dependent stimuli shown in the bottom panel (black). The difference between direct simulation and theory is shown in the top panel. The stimulus is an Ornstein-Uhlenbeck process with correlation time constant of 300 ms, a STD increasing every 2 seconds (20,40,60 pA) and a mean of 10 pA. (B) Correlation coefficients between direct simulation and QR for various STDs and mean (in pA) of the input current.

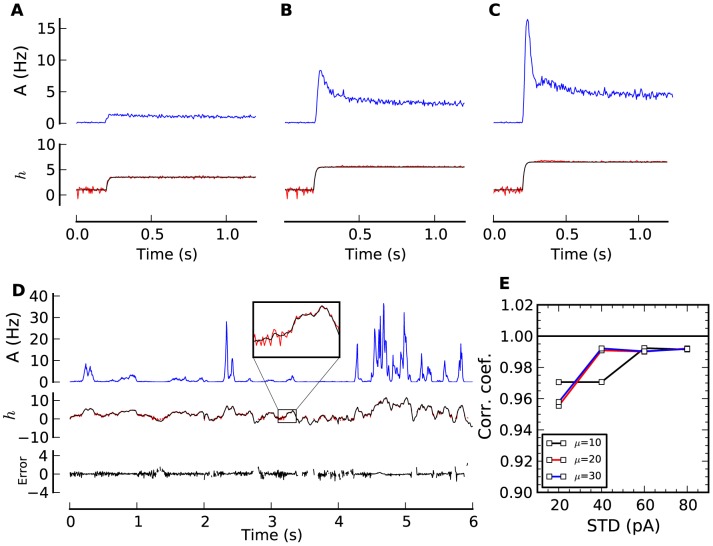

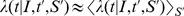

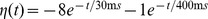

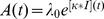

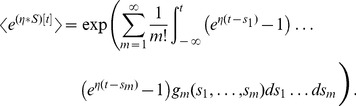

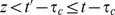

Decoding the population activity requires solving the QR equation (Eq. 5 and 8) for the original input  (see Methods). Input steps can be correctly decoded (Fig. 4A–C) but also fluctuating stimuli (Fig. 4D–E). Again, the input mean does not influence the precision of the decoding (Fig. 4E). The numerical method does not decode regions associated with population activities that are either zero or very small. Accordingly, the correlation coefficient in Fig. 4E is calculated only at times where decoding could be carried out. Note that unless one is to estimate the statistics of the input current and assume stationarity, it is impossible for any decoder to decode at times when

(see Methods). Input steps can be correctly decoded (Fig. 4A–C) but also fluctuating stimuli (Fig. 4D–E). Again, the input mean does not influence the precision of the decoding (Fig. 4E). The numerical method does not decode regions associated with population activities that are either zero or very small. Accordingly, the correlation coefficient in Fig. 4E is calculated only at times where decoding could be carried out. Note that unless one is to estimate the statistics of the input current and assume stationarity, it is impossible for any decoder to decode at times when  . If the size of the population is decreased, the performance of the QR decoder decreases (Fig. S1). Finite size effects limit decoding performance by increasing the error on the mean activity (as can be seen by comparing the effect of filtering the average population activity (Fig. S1A and B)). Another finite-size effect is that at small population sizes there is a greater fraction of time where an estimate of the activity is zero and the decoding cannot be performed (Fig. S1D–F). Also, decoding errors are larger when

. If the size of the population is decreased, the performance of the QR decoder decreases (Fig. S1). Finite size effects limit decoding performance by increasing the error on the mean activity (as can be seen by comparing the effect of filtering the average population activity (Fig. S1A and B)). Another finite-size effect is that at small population sizes there is a greater fraction of time where an estimate of the activity is zero and the decoding cannot be performed (Fig. S1D–F). Also, decoding errors are larger when  is close to zero (Fig. S1C). Nevertheless, for an input with STD = 40 pA and a population of 250 neurons, QR decoding can be performed 55% of the times with a correlation coefficient of 0.92. If the filtering of the population activity is on a longer time scale (20 ms instead of 2 ms) then decoding is possible 82% of the times and the accuracy is roughly the same (Fig. S1).

is close to zero (Fig. S1C). Nevertheless, for an input with STD = 40 pA and a population of 250 neurons, QR decoding can be performed 55% of the times with a correlation coefficient of 0.92. If the filtering of the population activity is on a longer time scale (20 ms instead of 2 ms) then decoding is possible 82% of the times and the accuracy is roughly the same (Fig. S1).

Figure 4. Decoding the stimulus from the population activity.

(A–C) The original (bottom panels, black line) and decoded stimulus (bottom panels, red line; arbitrary units) recovered from the PSTH of 25,000 independent SRM neurons (top panels; blue line) with the QR decoder (Eq. 45). (D) Same as before but for time-dependent input. The decoded waveform of negative input is occasionally undefined and corresponds to input outside the dynamic range. The difference between direct simulation and theory is shown in the bottom panel. (E) Correlation coefficient between original and decoded input as a function of input STD, shown for three distinct mean input ( pA,

pA,  pA, and

pA, and  pA). Decoding based on quasi-renewal theory (Methods).

pA). Decoding based on quasi-renewal theory (Methods).

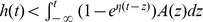

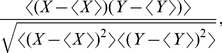

Comparing Population Encoding Theories

We will consider two recent theories of population activity from the literature. Both can be seen as extensions of rate models such as the Linear-Nonlinear Poisson model where the activity of a homogeneous population is  where

where  is a linear filter and

is a linear filter and  some nonlinear function. First, we focus on adaptive formulations of such rate models. For example Benda and Herz [15] have suggested that the firing rate of adapting neurons is a non-linear function of an input that is reduced by the past activity, such that the activity is

some nonlinear function. First, we focus on adaptive formulations of such rate models. For example Benda and Herz [15] have suggested that the firing rate of adapting neurons is a non-linear function of an input that is reduced by the past activity, such that the activity is  where

where  is a self interaction filter that summarizes the effect of adaptation. Second, we compare our approach with renewal theory [3], [42] which includes refractoriness, but not adaptation. How does our QR theory relate to these existing theories? And how would these competing theories perform on the same set of step stimuli?

is a self interaction filter that summarizes the effect of adaptation. Second, we compare our approach with renewal theory [3], [42] which includes refractoriness, but not adaptation. How does our QR theory relate to these existing theories? And how would these competing theories perform on the same set of step stimuli?

To discuss the relation to existing theories, we recall that the instantaneous rate of our model  depends on both the input and the previous spike trains. In QR theory, we single out the most recent spike at

depends on both the input and the previous spike trains. In QR theory, we single out the most recent spike at  and averaged over the remaining spike trains

and averaged over the remaining spike trains  :

:  . There are two alternative approaches. One can keep the most recent spike at

. There are two alternative approaches. One can keep the most recent spike at  and disregard the effect of all the others:

and disregard the effect of all the others:  . This gives rise to the time-dependent renewal theory, which will serve as a first reference for the performance comparison discussed below. On the other hand, one can average over all previous spikes, that is, no special treatment for the most recent one. In this case

. This gives rise to the time-dependent renewal theory, which will serve as a first reference for the performance comparison discussed below. On the other hand, one can average over all previous spikes, that is, no special treatment for the most recent one. In this case

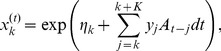

| (9) |

The right-hand side of Eq. 9 can be treated with a moment expansion similar to the one in Eq. 7. To zero order, this gives a population rate  , that is, an instantiation of the LNP model. To first order in an event-based moment expansion (EME1) we find:

, that is, an instantiation of the LNP model. To first order in an event-based moment expansion (EME1) we find:

| (10) |

Therefore, the moment expansion (Eq. 7) offers a way to link the phenomenological framework of Benda and Herz (2003) to parameters of the SRM. In particular, the nonlinearity is the exponential function, the input term is  and the self-inhibition filter is

and the self-inhibition filter is  . We note that Eq. 10 is a self-consistent equation for the population activity valid in the limit of small coupling between the spikes which can be solved using standard numerical methods (see Methods). A second-order equation (EME2) can similarly be constructed using an approximation to the correlation function (see Methods).

. We note that Eq. 10 is a self-consistent equation for the population activity valid in the limit of small coupling between the spikes which can be solved using standard numerical methods (see Methods). A second-order equation (EME2) can similarly be constructed using an approximation to the correlation function (see Methods).

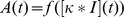

We compare the prediction of EME1, EME2 and renewal theory with the simulated responses to step inputs (Fig. 5). All the encoding frameworks work well for small input amplitudes (Fig. 5A). It is for larger input steps that the different theories can be distinguished qualitatively (Fig. 5C). Renewal theory predicts accurately the initial damped oscillation as can be expected by its explicit treatment of the relative refractory period. The adapting tail, however, is missing. The steady state is reached too soon and at a level which is systematically too high. EME1 is more accurate in its description of the adapting tail but fails to capture the damped oscillations. The strong refractory period induces a strong coupling between the spikes which means that truncating to only the first moment is insufficient. The solution based on EME2 improves the accuracy upon that of EME1 so as to make the initial peak shorter, but oscillates only weakly. We checked that the failure of the moment-expansion approach is due to the strong refractory period by systematically modifying the strength of the SAP (Fig. S2). Similarly, when the SAP is weak, the effect of  will often accumulate over several spikes and renewal theory does not capture the resulting adaptation (Fig. S2).

will often accumulate over several spikes and renewal theory does not capture the resulting adaptation (Fig. S2).

Figure 5. Approximative theories.

(A–C) Population activity responses (top panels; PSTH from 25,000 repeated simulations in blue, renewal theory in black, first order moment expansion (EME1) in red, second order (EME2) in green) to the step current input (bottom panels; black). (D) Activity at the steady state vs. input current as calculated from the direct simulation of 25,000 model neurons (blue squares, error bars show one standard error of the mean), prediction from renewal theory (black), and 1st order moment-expansion (red, Eq. 51).

Fluctuating input makes the population respond in peaks of activity separated by periods of quiescence. This effectively reduces the coupling between the spikes and therefore improves the accuracy of EME1. The validity of EME1 for encoding time-dependent stimulus (Fig. S3) decreases with the STD of the fluctuating input with no clear dependence on the input mean.

Decoding with EME1 is done according to a simple relation:

| (11) |

where the logarithm of the momentary population activity is added to an accumulation of the past activity. The presence of the logarithm reflects the non-linearity for encoding (the link-function in Eq. 3) and leads to the fact that when the instantaneous population activity is zero, the stimulus is undefined but bounded from above:  . Fig. S4 shows the ability of Eq. 11 to recover the input from the population activity of 25,000 model neurons. We conclude that Eq. 11 is a valid decoder in the domain of applicability of EME1.

. Fig. S4 shows the ability of Eq. 11 to recover the input from the population activity of 25,000 model neurons. We conclude that Eq. 11 is a valid decoder in the domain of applicability of EME1.

In summary, the EMEs yield theoretical expressions for the time-dependent as well as steady-state population activity. These expressions are valid in the limit of small coupling between the spikes which corresponds to either large interspike intervals or small SAP. Renewal theory on the other hand is valid when the single-neuron dynamics does not adapt and whenever the refractory effects dominate.

Discussion

The input-output function of a neuron population is sometimes described as a linear filter of the input [41], as a linear filter of the input reduced as a function of past activity [58], [59], as a non-linear function of the filtered input [60], or by any of the more recent population encoding frameworks [47], [48], [61]–[65]. These theories differ in their underlying assumptions. To the best of our knowledge, a closed-form expression that does not assume weak refractoriness or weak adaptation has not been published before.

We have derived self-consistent formulas for the population activity of independent adapting neurons. There are two levels of approximation, EME1 (Eq. 10) is valid at low coupling between spikes which can be observed in real neurons whenever (i) the interspike intervals are large, (ii) the SAPs have small amplitudes or (iii) both the firing rate is low and the SAPs have small amplitudes. The second level of approximation merges renewal theory with the moment-expansion to give an accurate description on all time-scales. We called this approach the QR theory.

The QR equation captures almost perfectly the population code for time-dependent input even at the high firing rates observed in retinal ganglion cells [55]. But for the large interspike intervals and lower population activity levels of in vivo neurons of the cortex [66], [67], it is possible that the simpler encoding scheme of Eq. 10 is sufficient. Most likely, the appropriate level of approximation will depend on the neural system; cortical sparse coding may be well represented by EME, while neuron populations in the early stages of perception may require QR.

We have focused here on the Spike Response Model with escape noise which is an instantiation of a Generalized Linear Model. The escape noise model, defined as the instantaneous firing rate  given the momentary distance between the (deterministic) membrane potential and threshold should be contrasted with the diffusive noise model where the membrane potential fluctuates because of noisy input. Nevertheless, the two noise models have been linked in the past [51], [54], [68]. For example, the interval-distribution of a leaky integrate-and-fire model with diffusive noise and arbitrary input can be well captured by escape noise with instantaneous firing rate

given the momentary distance between the (deterministic) membrane potential and threshold should be contrasted with the diffusive noise model where the membrane potential fluctuates because of noisy input. Nevertheless, the two noise models have been linked in the past [51], [54], [68]. For example, the interval-distribution of a leaky integrate-and-fire model with diffusive noise and arbitrary input can be well captured by escape noise with instantaneous firing rate  which depends both on the membrane potential and its temporal derivative

which depends both on the membrane potential and its temporal derivative  [51]. The dependence upon

[51]. The dependence upon  accounts for the rapid and replicable response that one observes when an integrate-and-fire model with diffusive noise is driven in the supra-threshold regime [68] and can, in principle, be included in the framework of the QR theory.

accounts for the rapid and replicable response that one observes when an integrate-and-fire model with diffusive noise is driven in the supra-threshold regime [68] and can, in principle, be included in the framework of the QR theory.

The decoding schemes presented in this paper (Eq. 11 and 45) reveal a fundamental aspect of population coding with adapting neurons. Namely, the ambiguity introduced by the adaptation can be resolved by considering a well-tuned accumulator of past activity. The neural code of adapting populations is ambiguous because the momentary level of activity could be the result of different stimulus histories. We have shown that resolving the ambiguity requires the knowledge of the activity in the past but to a good approximation does not require the knowledge of which neuron was active. At high population activity for neurons with large SAPs, however, the individual timing of the last spike in the spike trains is required to resolve the ambiguity (compare also Fairhall et al. [13]). Unlike bayesian spike-train decoding [55], [69], [70], we note that in our decoding frameworks the operation requires only knowledge of the population activity history and the single neuron characteristics. The properties of the QR or EME1 decoder can be used to find biophysical correlates of neural decoding such as previously proposed for short term plasticity [71], [72], non-linear dendrites [73] or lateral inhibition [74]. Note that, a constant percept in spite of spike frequency adaptation does not necessarily mean that neurons use a QR decoder. It depends on the synaptic structure. In an over-representing cortex, a constant percept can be achieved even when the neurons exhibit strong adaptation transients [75].

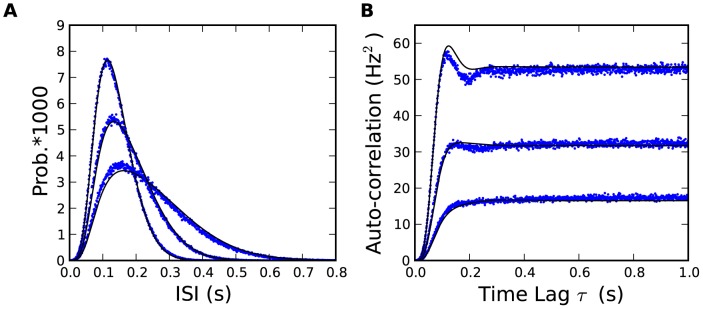

Using the results presented here, existing mean-field methods for populations of spiking neurons can readily be adapted to include spike-frequency adaptation. In Methods we show the QR theory for the interspike interval distribution and the steady-state autocorrelation function (Fig. 6) as well as linear filter characterizing the impulse response function (or frequency-dependent gain function) of the population. From the linear filter and the autocorrelation function, we can calculate the signal-to-noise ratio [3] and thus the transmitted information [1]. The autocorrelation function also gives an estimate of the coefficient of variation [76] and clarifies the role of the SAP in quenching the spike count variability [49], [77], [78]. The finite-size effects [27], [79]–[81] is another, more challenging, extension that should be possible.

Figure 6. Steady-state interspike interval distribution and auto-correlation.

(A)The interspike interval distribution calculated from the 25,000 repeated simulations of the SRM after the steady state has been reached (blue) is compared with the QR theory (Eq. 31; black) for I = 60, 70 and 80 pA. (B) On the same regimen, the autocorrelation function calculated from direct simulations at the steady-state (blue) is compared with the QR prediction (Eq. 33; black). See Methods for model parameters.

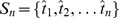

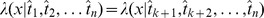

The scope of the present investigation was restricted to unconnected neurons. In the mean-field approximation, it is straight-forward to extend the results to several populations of connected neurons [6]. For instance, similar to EME1, a network made of inter-connected neurons of  cell-types would correspond to the self-consistent system of equation:

cell-types would correspond to the self-consistent system of equation:

|

(12) |

where  is the scaled post-synaptic potential kernel from cell-type

is the scaled post-synaptic potential kernel from cell-type  to cell-type

to cell-type  (following the formalism of Gerstner and Kislter [3]),

(following the formalism of Gerstner and Kislter [3]),  is an external driving force, each subpopulation is characterized by its population activity

is an external driving force, each subpopulation is characterized by its population activity  and its specific spike after potential

and its specific spike after potential  . The analogous equation for QR theory is:

. The analogous equation for QR theory is:

|

(13) |

where  is:

is:

|

(14) |

Since the SAP is one of the most important parameter for distinguishing between cell classes [22], the approach presented in this paper opens the door to network models that take into account the neuronal cell-types beyond the sign of the synaptic connection. Even within the same class of cells, real neurons have slightly different parameters from one cell to the next [22] and it remains to be tested whether we can describe a moderately inhomogeneous population with our theory. Also, further work will be required to see if the decoding methods presented here can be applied to brain-machine interfacing [82]–[84].

Methods

This section is organized in 3 subsections. Subsection A covers the mathematical steps to derive the main theoretical results (Eqs. 2, 5 and 7). It also presents a new approach to the time-dependent renewal equation, links with renewal theory and the derivation of the steady-state interspike interval distribution and auto-correlation. Subsection B covers the numerical methods and algorithmic details and subsection C the analysis methods.

A Mathematical Methods

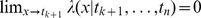

Derivation of Eq. 2

The probability density of a train of  spikes

spikes  in an interval

in an interval  is given by [85]:

is given by [85]:

| (15) |

where we omit writing the dependence on the input  for notational convenience. Here

for notational convenience. Here  is the spike train where

is the spike train where  denotes the most recent spike,

denotes the most recent spike,  the previous one and so on. Instead of

the previous one and so on. Instead of  we can also write

we can also write  . Note that because of causality, at a time

. Note that because of causality, at a time  with

with  ,

,  can only depend on earlier spikes so that

can only depend on earlier spikes so that  . Special care has to be taken because of the discontinuity of

. Special care has to be taken because of the discontinuity of  at the moment of the spike. We require

at the moment of the spike. We require  so that it is excluded that two spikes occur at the same moment in time. By definition, the population activity is the expected value of a spike train:

so that it is excluded that two spikes occur at the same moment in time. By definition, the population activity is the expected value of a spike train:  . Following van Kampen [57] we can integrate over all possible spike times in an ordered or non-ordered fashion. In the ordered fashion, each spike time

. Following van Kampen [57] we can integrate over all possible spike times in an ordered or non-ordered fashion. In the ordered fashion, each spike time  is restricted to times before the next spike time

is restricted to times before the next spike time  . We obtain:

. We obtain:

|

(16) |

where the term  has been eliminated by the fact that

has been eliminated by the fact that  . The notation

. The notation  is intended to remind the reader that a spike happening exactly at time

is intended to remind the reader that a spike happening exactly at time  is included in the integral. In fact only one Dirac-delta function gives a non-vanishing term because only the integral over

is included in the integral. In fact only one Dirac-delta function gives a non-vanishing term because only the integral over  includes the time

includes the time  . After integration over

. After integration over  we have:

we have:

|

(17) |

Note that there are now  integrals and the first integral is over

integrals and the first integral is over  with an upper limit at

with an upper limit at  . The

. The  makes clear that the spike

makes clear that the spike  must be before the spike at

must be before the spike at  . In the ordered notation

. In the ordered notation  . Re-labelling the infinite sum with

. Re-labelling the infinite sum with  , one readily sees that we recover the weighting factor

, one readily sees that we recover the weighting factor  of a specific spike train with

of a specific spike train with  spikes (Eq. 15) in front of the momentary firing intensity

spikes (Eq. 15) in front of the momentary firing intensity  :

:

|

(18) |

Therefore we have shown Eq. 2, which we repeat here in the notation of the present paragraph:

| (19) |

Note that the term with zero spikes in the past ( ) contributes a term

) contributes a term  to the sum.

to the sum.

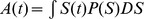

Derivation of Eq. 5

In order to single out the effect of the previous spike, we replace  and group factors in the path integral of Eq. 18:

and group factors in the path integral of Eq. 18:

|

(20) |

The first term contains the probability that no spike was ever fired by the neuron until time  . We can safely assume this term to be zero. The factors in square brackets depend on all previous spike times. However if we assume that the adaptation effects only depend on the most recent spike time

. We can safely assume this term to be zero. The factors in square brackets depend on all previous spike times. However if we assume that the adaptation effects only depend on the most recent spike time  and on the typical spiking history before, but not on the specific spike times of earlier spikes, then the formula in square brackets can be moved in front of the integrals over

and on the typical spiking history before, but not on the specific spike times of earlier spikes, then the formula in square brackets can be moved in front of the integrals over  ,

,  , … We therefore set:

, … We therefore set:

| (21) |

where  is the spike train containing all the spikes before

is the spike train containing all the spikes before  . Thus,

. Thus,  is now only a function of

is now only a function of  but not of the exact configuration of earlier spikes. We use the approximation of Eq. 21 only for the factors surrounded by square brackets in Eq. 20. The path integral Eq. 20 becomes:

but not of the exact configuration of earlier spikes. We use the approximation of Eq. 21 only for the factors surrounded by square brackets in Eq. 20. The path integral Eq. 20 becomes:

| (22) |

where we have used Eq. 17 to recover  .

.

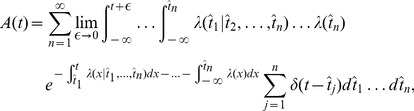

Derivation of Eq. 7

We can recognize in  the moment generating functional for the random function

the moment generating functional for the random function  . This functional can be written in terms of the correlation functions such as

. This functional can be written in terms of the correlation functions such as  [57]. The correlation functions are labeled

[57]. The correlation functions are labeled  as in van Kampen [57] such that the first correlation function is the population activity:

as in van Kampen [57] such that the first correlation function is the population activity:  , the second correlation function is

, the second correlation function is  for

for  , and so on. Then, the generating functional can be written [57]:

, and so on. Then, the generating functional can be written [57]:

|

(23) |

Eq. 23 is called a generating functional because the functional derivatives with respect to  and evaluated at

and evaluated at  yields the correlation functions.

yields the correlation functions.

Derivation of the renewal equation

A derivation of the renewal equation [6], [41], [42] can be obtained by replacing the QR approximation (Eq. 21) by the renewal approximation:

| (24) |

Applying this approximation on the factors in the square bracket of Eq. 20 gives:

| (25) |

Therefore Eqs. 20 and 24 yield a novel path integral proof of the renewal equation (Eq. 25).

The survival function and interval distribution

First consider the expected value in Eq. 2 partitioned so as to first average over the previous spike  and then over the rest of the spiking history

and then over the rest of the spiking history  :

:

| (26) |

where the last equality results from a marginalization of the last spike time.  is the probability to spike at time

is the probability to spike at time  and to survive from

and to survive from  to

to  without spiking. Thus we can write

without spiking. Thus we can write  as the product of the population activity at

as the product of the population activity at  and the probability of not spiking between

and the probability of not spiking between  and

and  that we will label

that we will label  :

:

| (27) |

The function  is the survival function in renewal theory. It depends implicitly on the spiking history. The rate of decay of the survival function depends in general on the precise timing of all previous spikes. The QR approximation means that we approximate this decay by averaging over all possible spike trains before

is the survival function in renewal theory. It depends implicitly on the spiking history. The rate of decay of the survival function depends in general on the precise timing of all previous spikes. The QR approximation means that we approximate this decay by averaging over all possible spike trains before  , so that:

, so that:

| (28) |

which can be integrated to yield:

| (29) |

The survival function in Eq. 27 and Eq. 26 leads to the QR equation (Eq. 5). Following renewal theory [3], the interspike interval distribution:

| (30) |

The factor in Eq. 5 can therefore be interpreted as an approximate expression of the interspike interval distribution of adaptive neurons.

Auto-correlation functions and interspike interval distributions at the steady state

At the steady state with a constant input  , the interspike interval distribution predicted by QR theory is:

, the interspike interval distribution predicted by QR theory is:

| (31) |

where  is the interspike interval,

is the interspike interval,  is the steady-state activity, and

is the steady-state activity, and  is the averaged conditional firing intensity

is the averaged conditional firing intensity  . The latter can be written as:

. The latter can be written as:

| (32) |

From which we recover the auto-correlation function  [3]:

[3]:

| (33) |

where  is the Fourier transform of

is the Fourier transform of  . To solve for the steady-state population activity, we note that the inverse of

. To solve for the steady-state population activity, we note that the inverse of  is also the mean interspike interval at the steady state:

is also the mean interspike interval at the steady state:

| (34) |

B Numerical Methods

All simulations were performed on a desktop computer with 4 cores (Intel Core i7, 2.6 GHz, 24 GB RAM) using Matlab (The Mathworks, Natwick, MA). The Matlab codes to numerically solve the self-consistent equations are made available on the author's websites. The algorithmic aspects of the numerical methods are discussed now.

Direct simulation

All temporal units in this code are given in milliseconds. Direct simulation of Eq. 3 was done by first discretizing time ( was varied between 0.5 and 0.005 ms) and then deciding at each time step whether a spike is emitted by comparing the probability to spike in a time-bin:

was varied between 0.5 and 0.005 ms) and then deciding at each time step whether a spike is emitted by comparing the probability to spike in a time-bin:

| (35) |

to a random number of uniform distribution. Each time a spike is emitted, the firing probability is reduced according to the SRM equation for  because another term

because another term  is added (Eq. 3). Typically 25,000 repeated simulations were required to compute PSTHs on such a fine temporal resolution. The PSTHs were built by averaging the 25,000 discretized spike trains and performing a 2-ms running average unless otherwise mentioned. The dynamics of

is added (Eq. 3). Typically 25,000 repeated simulations were required to compute PSTHs on such a fine temporal resolution. The PSTHs were built by averaging the 25,000 discretized spike trains and performing a 2-ms running average unless otherwise mentioned. The dynamics of  were calculated from the numerical solution of the differential equation corresponding to

were calculated from the numerical solution of the differential equation corresponding to  where

where  and similarly for

and similarly for  .

.

For all simulations, the baseline current was 10 pA (except for time-dependent current where the mean was specified), the baseline excitability was  kHz, the membrane filter

kHz, the membrane filter  was a single exponential with an amplitude

was a single exponential with an amplitude  in units of inverse electric charge and a time constant of

in units of inverse electric charge and a time constant of  10 ms.

10 ms.

Time-dependent input consisted of an Ornstein-Uhlenbeck process which is computed at every time step as:

| (36) |

where  is the mean,

is the mean,  the standard deviation and

the standard deviation and  = 300 ms the correlation time constant.

= 300 ms the correlation time constant.  is a zero mean, unit variance Gaussian variable updated at every time step.

is a zero mean, unit variance Gaussian variable updated at every time step.

Numerical solution of renewal and quasi-renewal equations

We consider the QR equation, Eq. 5, with the averaged conditional intensity of Eq. 8. We choose a tolerance  for truncating the function

for truncating the function  and find the cutoff

and find the cutoff  such that:

such that:  for all

for all  . A typical value for the tolerance,

. A typical value for the tolerance,  , is

, is  . We split the main integral in Eq. 5 in two integrals, one from

. We split the main integral in Eq. 5 in two integrals, one from  to

to  , the other from

, the other from  to

to  to get:

to get:

|

(37) |

where  is called the survival function (see Methods A) and corresponds to

is called the survival function (see Methods A) and corresponds to  . With the same reasoning the lower bound of the innermost integral can be changed from

. With the same reasoning the lower bound of the innermost integral can be changed from  to

to  because

because  for all

for all  . The first term in the square brackets of Eq. 37 are the neurons that have had their last spike a long time back in the past. For this term, we use the conservation equation,

. The first term in the square brackets of Eq. 37 are the neurons that have had their last spike a long time back in the past. For this term, we use the conservation equation,  [3]. This enables us to write the first-order QR equation in terms of an integral from

[3]. This enables us to write the first-order QR equation in terms of an integral from  to

to  or

or  only:

only:

|

(38) |

We define two vectors. First  is made of the exponential in Eq. 38 on the linear grid for

is made of the exponential in Eq. 38 on the linear grid for  , such that the

, such that the  'th element can be written:

'th element can be written:

|

(39) |

where  is the discretized function

is the discretized function  .

.  is the number of time steps

is the number of time steps  needed to cover the time span

needed to cover the time span  defined above. Note that

defined above. Note that  does not depend on

does not depend on  since

since  (because of an absolute refractoriness during the spike). The update of

(because of an absolute refractoriness during the spike). The update of  is the computationally expensive step of our implementation. Adaptive time-step procedures could be applied to improve the efficiency of the algorithm, but we did not do so. The special case where a rapid solution is possible is discussed further below.

is the computationally expensive step of our implementation. Adaptive time-step procedures could be applied to improve the efficiency of the algorithm, but we did not do so. The special case where a rapid solution is possible is discussed further below.

The second vector  corresponds to

corresponds to  for

for  evaluated on the same linear grid as the one used for

evaluated on the same linear grid as the one used for  . This vector traces the probability of having the last spike at

. This vector traces the probability of having the last spike at  . Assuming that there was no stimulation before time

. Assuming that there was no stimulation before time  we can initialize this vector to zero. To update

we can initialize this vector to zero. To update  we note that the firing condition Eq. 35 gives:

we note that the firing condition Eq. 35 gives:

| (40) |

To do so, we see from Eq. 40 that we can evaluate  from

from  calculated at the previous time step. The first bin is updated to the previous population activity:

calculated at the previous time step. The first bin is updated to the previous population activity:

| (41) |

and all the other bins are updated according to

| (42) |

We can therefore calculate the population activity iteratively at each time bin using Eq. 38:

| (43) |

where  and

and  depend on the activity

depend on the activity  for

for  . This algorithm implements a numerical approximation of the QR equation. On our desktop computer and with our particular implementation, solving 1 second of biological time took 36 seconds with a discretization of 0.1 ms for QR and 84–200 seconds for direct simulation of 25,000 neurons, depending on the firing rate. Using the same number of neurons but with a discretization of 0.5 ms it took 1.8 seconds to solve QR and 16–20 seconds to perform the direct simulation. If

. This algorithm implements a numerical approximation of the QR equation. On our desktop computer and with our particular implementation, solving 1 second of biological time took 36 seconds with a discretization of 0.1 ms for QR and 84–200 seconds for direct simulation of 25,000 neurons, depending on the firing rate. Using the same number of neurons but with a discretization of 0.5 ms it took 1.8 seconds to solve QR and 16–20 seconds to perform the direct simulation. If  is the total number of time step, the present numerical methods are

is the total number of time step, the present numerical methods are  . Evaluating the convolution in Eq. 39 with fast Fourier transform gives

. Evaluating the convolution in Eq. 39 with fast Fourier transform gives  . This same convolution can be evaluated with a differential equation with the choice of basis:

. This same convolution can be evaluated with a differential equation with the choice of basis:  , with parameters

, with parameters  and

and  having the constraint of

having the constraint of  . This fast parametrization solves in

. This fast parametrization solves in  .

.

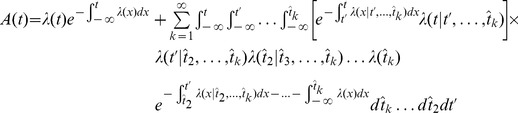

Decoding QR

Isolating the input  from Eq. 43 gives the decoding algorithm:

from Eq. 43 gives the decoding algorithm:

| (44) |

where  is also a function of

is also a function of  . Decoding can be carried out by assuming

. Decoding can be carried out by assuming  in Eq. 44, but this can lead to numerical instabilities when the time step is not very small. Instead we write

in Eq. 44, but this can lead to numerical instabilities when the time step is not very small. Instead we write  as a function of

as a function of  (Eqs. 41 and 42), expand Eq. 42 to first order in

(Eqs. 41 and 42), expand Eq. 42 to first order in  and solve the resulting quadratic equation for

and solve the resulting quadratic equation for  :

:

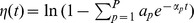

|

(45) |

where  denotes the element by element (array) multiplication.

denotes the element by element (array) multiplication.

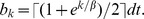

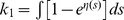

Numerical solution of EME1 and EME2

The structure of EME1 and EME2 allows us to use a nonlinear grid spacing in order to save memory resources. The bins should be small where  varies fast, and larger where

varies fast, and larger where  varies slowly. Since the SAP is approximatively exponential, we choose the size

varies slowly. Since the SAP is approximatively exponential, we choose the size  of bin

of bin  to be given by:

to be given by:  where

where  takes the nearest greater integer and

takes the nearest greater integer and  is the smallest time bin allowed and will be the discretization of the final solution for the population activity. The degree of nonlinearity,

is the smallest time bin allowed and will be the discretization of the final solution for the population activity. The degree of nonlinearity,  , is chosen such that there are

, is chosen such that there are  bins between

bins between  and

and  . To a good approximation,

. To a good approximation,  can be obtained by solving the equation:

can be obtained by solving the equation:  .

.

To perform the numerical integration, we define the vector  made of the function

made of the function  evaluated at the end of each bin

evaluated at the end of each bin  with bin size

with bin size  , the vector

, the vector  with elements

with elements  made of the convolution

made of the convolution  discretized on the uniform grid of length

discretized on the uniform grid of length  with bin size

with bin size  , and on the same grid the vector

, and on the same grid the vector  made of the discretized population activity. Finally, we define the vector

made of the discretized population activity. Finally, we define the vector  made of the population activity in the last

made of the population activity in the last  seconds since time

seconds since time  on the non-linear grid defined by

on the non-linear grid defined by  . Using the rectangle method to evaluate the integral of the first-order self-consistent equation for population activity, we can write:

. Using the rectangle method to evaluate the integral of the first-order self-consistent equation for population activity, we can write:

| (46) |

Such that the population activity is obtained by solving iteratively through time Eq. 46, an operation requiring  .

.

To compute the second order equation, we first build the correlation vector  on the linear grid of the smallest bin size

on the linear grid of the smallest bin size  :

:

| (47) |