Abstract

Major efforts are being made to improve the teaching of human anatomy to foster cognition of visuospatial relationships. The Visible Human Project of the National Library of Medicine makes it possible to create virtual reality-based applications for teaching anatomy. Integration of traditional cadaver and illustration-based methods with Internet-based simulations brings us closer to this goal.

Web-based three-dimensional Virtual Body Structures (W3D-VBS) is a next-generation immersive anatomical training system for teaching human anatomy over the Internet. It uses Visible Human data to dynamically explore, select, extract, visualize, manipulate, and stereoscopically palpate realistic virtual body structures with a haptic device. Tracking the user’s progress through evaluation tools helps customize lesson plans. A self-guided “virtual tour” of the whole body allows labeled virtual dissections to be investigated repeatedly, whenever and wherever the user needs.

One of the most difficult tasks in learning gross anatomy is to comprehend the three-dimensional visuospatial relations of anatomical structures. Thus, creation of realistic three-dimensional structures of human anatomy has been a goal of medical professionals and computer scientists for many years.

Traditionally, cadavers and 2D illustrations with labels to identify structures have been used to cognitively integrate anatomical information for visualizing three-dimensional structures. Our own research in this area is directed towards leveraging advances in information and virtual reality technologies to design an anatomical training information system that allows a user to build three-dimensional interactive simulations “in-front-of-their-eyes” over the Internet.

The design of our system, Web-based three-dimensional Virtual Body Structures (W3D-VBS), is based on the concept of “what you want is what you get” for interactively assembling, and efficiently viewing, exploring, and manipulating, hundreds of organs and their connected (or proximal) structures. Users do not need a medical background to use the system, nor do they have to be medical students to learn from it.

Background

Major efforts initiated by the National Library of Medicine (NLM) have made it possible to take the Visible Humans (VH)1 from raw data to knowledge applications. A great deal of research and development has resulted in innovative information technologies for the VH. This foundation has made it possible to build powerful virtual reality-based simulations that are ideal for computer-based next-generation anatomical training systems.

The Visible Human Project

When NLM initiated the Visible Human Project in 1986, its goal was to build a volumetric digital image library of a complete adult male and female body.

The Visible Human Male (VHM) dataset includes digitized images from CT and MRI scans and 1871 axial images from the cryosections.2 These axial images have a resolution of 2048 × 1216 pixels and were taken at 1.0-mm intervals. The complete male dataset is about 15 GB in size and became available in November 1994. In August 2000, higher-resolution (4096 × 2700 pixels) images were released. The Visible Human Female (VHF) dataset has axial images obtained at 0.33-mm intervals, resulting in 5,189 anatomical images and a dataset of about 40 GB.
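
As a rough check of the sizes quoted above, the sketch below multiplies frame size by slice count. It assumes uncompressed 24-bit RGB (3 bytes per pixel). For the female dataset it also assumes the same 2048 × 1216 frame size as the male cryosections; the text gives only the slice count and total size. Only the cryosection images are counted, not the CT and MRI data.

```python
def dataset_bytes(width, height, n_slices, bytes_per_pixel=3):
    """Uncompressed size of a stack of RGB cryosection images (assumes 24-bit color)."""
    return width * height * bytes_per_pixel * n_slices

GB = 10**9  # decimal gigabytes

vhm = dataset_bytes(2048, 1216, 1871)
vhf = dataset_bytes(2048, 1216, 5189)  # assumption: same frame size as the VHM
print(f"VHM cryosections: {vhm / GB:.1f} GB")
print(f"VHF cryosections: {vhf / GB:.1f} GB")
```

Under these assumptions the cryosections alone come to about 14.0 GB and 38.8 GB. Both figures are in line with the "about 15 GB" (which also includes CT and MRI) and "about 40 GB" totals.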

The VH images make it possible to visualize the body; however, it is the segmented data that provide structural information for each pixel. The combination of pixel-level color information from the images and structural information from the segmented data provides the underlying database for our system. Since the release of the VH datasets, there have been numerous research and development projects to create 3D visualization systems.4–10

Surface and Volumetric Virtual Body Structures

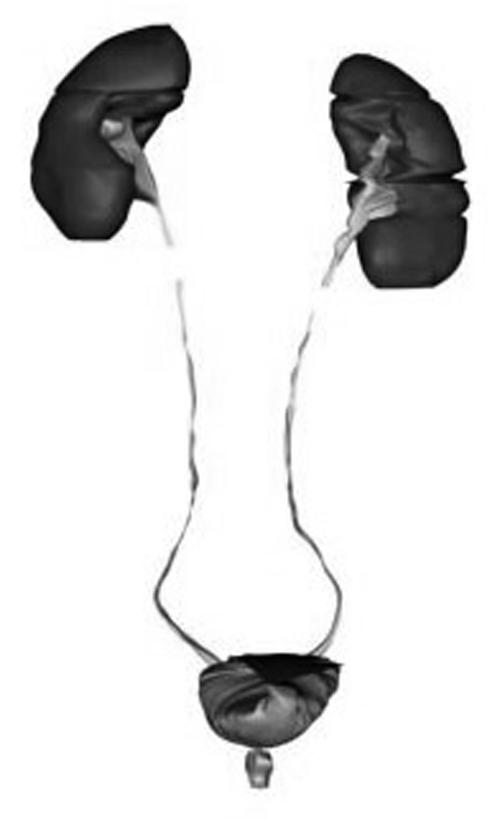

A surface-based Virtual Body Structure (s-VBS) is a 3D geometric model of the exterior of an anatomical structure. These models use polygons to define the surface of the model. To create the s-VBS, we used our s-VBS generator.3 The s-VBS generator allows the user to select anatomical body structures from an axial slice, body region, or anatomical system to create their surface-based 3D models. Figure 1 shows the s-VBS models of the urinary system, which were created in one minute.
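
The text does not say how the s-VBS generator builds its polygons. One simple way to turn segmented voxels into a surface is the "cuberille" approach sketched below. Every voxel face that separates the selected structures from anything else is reported, and each such face would be emitted as one quadrilateral. The label-volume layout (`labels[z][y][x]`), the function name, and the integer structure ids are hypothetical. A production generator would more likely use marching cubes followed by mesh smoothing.

```python
# The six face directions (dz, dy, dx) of a unit voxel.
NEIGHBORS = [(-1, 0, 0), (1, 0, 0), (0, -1, 0), (0, 1, 0), (0, 0, -1), (0, 0, 1)]

def boundary_faces(labels, structure_ids):
    """Return (z, y, x, direction) for every exposed face of the selected structures.

    labels is a nested list indexed labels[z][y][x]. Voxels outside the volume
    count as background, so the resulting surface is always closed. Selecting
    several ids treats them as one merged structure (their union).
    """
    depth, height, width = len(labels), len(labels[0]), len(labels[0][0])
    selected = set(structure_ids)

    def inside(z, y, x):
        return (0 <= z < depth and 0 <= y < height and 0 <= x < width
                and labels[z][y][x] in selected)

    faces = []
    for z in range(depth):
        for y in range(height):
            for x in range(width):
                if not inside(z, y, x):
                    continue
                for dz, dy, dx in NEIGHBORS:
                    # A face is on the surface when the neighbor across it is not selected.
                    if not inside(z + dz, y + dy, x + dx):
                        faces.append((z, y, x, (dz, dy, dx)))
    return faces

# Example: two adjacent voxels of structure 1 touching one voxel of structure 2.
example = [[[1, 1],
            [0, 2]]]
```

Structure 1 alone yields 10 faces, because its two voxels share one face on each side. Structures 1 and 2 together yield 14 faces.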

Figure 1.

s-VBS models of the urinary system.

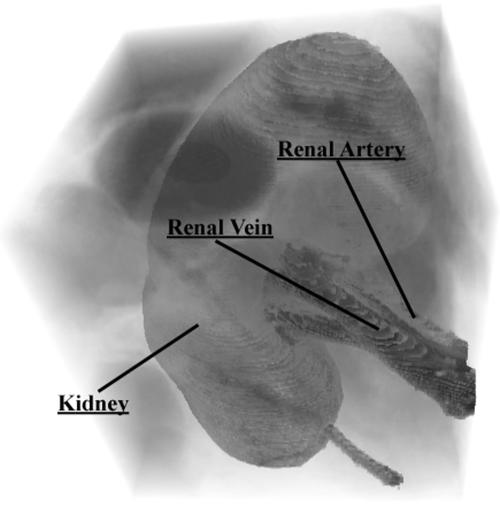

For a volumetric Virtual Body Structure (v-VBS), all internal substructures and external structures within a specific volume of interest (VOI) are completely defined. In an s-VBS, by contrast, internal structures are not defined. Figure 2 shows the VOI for the kidney with the surrounding tissues made semi-transparent to reveal the kidney, renal vein, and artery.

Figure 2.

Volume of Interest for kidney.

Computer Haptics

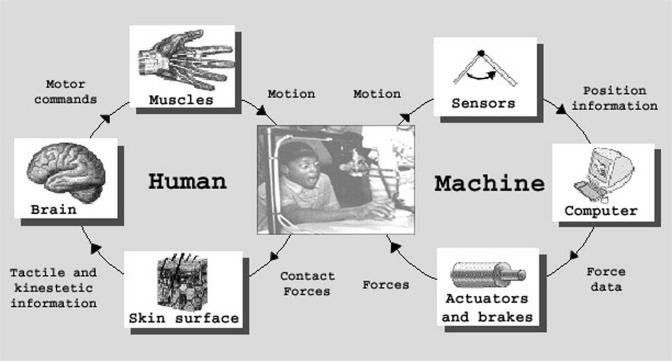

Computer haptics allows a user to touch virtual objects through a haptic device. Force feedback is felt as the user moves the haptic device in the virtual space and touches an object; the force depends on the material properties of the virtual object. Figure 3 shows how the human and machine collaborate in haptics. Most existing haptic applications, including W3D-VBS, use surface-based models; haptics at the volumetric level is still a research area. The systems described below do not provide the ability to palpate anatomical structures.

Figure 3.

Human-machine collaboration.

Computer-based Visible Human Projects

Several computer-based systems have taken novel approaches to create anatomical tools for teaching using surface-based models. The Anatomy Browser4 allows the user to explore certain areas of the body using pre-rendered images of models from fixed viewpoints. The Anatomic VisualizeR5 allows for creation of lesson plans based on anatomical models. The Voxel Man Project6 has created visually sophisticated products for teaching anatomy. Their work extends illustration-based methods to a 3D atlas, providing limited interactivity.

Web-Based Visible Human Projects

This section lists other major Web-based systems that use the VH to provide anatomical information (see Table 2 in the Discussion section).

Table 2.

Feature Comparison Chart

| Feature | W3D-VBS | CSA | VHB | AnatLine* | VHV | Description |
|---|---|---|---|---|---|---|
| Volumetric 3D models | Yes | No | No | No | No | Define and view a volume |
| Haptic capability | Yes | No | No | No | No | Touch models with haptic device |
| Stereoscopic haptics | Yes | No | No | No | No | View and touch the s-VBS models stereoscopically |
| Learning progress evaluation | Yes | No | No | No | No | Track progress of learning anatomy |
| Voice-controlled interface | Yes | No | No | No | No | Use voice commands to control interface |
| Slice views | Axial, coronal, sagittal | Axial | Axial, coronal, sagittal | Axial | Axial, coronal, sagittal | Sagittal: side-to-side; coronal: front-to-back; axial: top-to-bottom |
| Detailed slice navigation | Yes | Yes | No | Yes* | No | Display structure name that mouse is pointing to |
| Show volume of slices | Yes | No | No | Yes* | No | View all slices that contain the specified structure |
| Highlighting | Yes | Yes | No | Yes* | No | Highlight selected structures |
| 3D structure labeling | Yes | No | No | No | No | Display structure name that mouse is pointing to or clicking on at the volumetric level |
| Structures categorized | Yes | Yes | No | Yes | No | Look up structures by functional types and body regions |
| Surface-based 3D models | Yes | No | No | Yes* | No | Select, view, and interact with prebuilt 3D surface models |
| Select a specific slice by slice ID | Yes | No | No | Yes* | No | Specify a slice to view |
| Search engine | Yes | Yes | No | Yes | No | Search for specific structure |
| User authentication | Yes | Yes | No | No | No | Login required |
| Image pixel resolution | 1760 × 1024 | 590 × 343 | Up to 2048 × 1216 | N/A | Up to 1728 × 940 | Image resolution user can see |

*AnatLine’s online browser provides images of some pregenerated 3D surface models. Its detailed slice images are available for download and viewing with its VHDisplay software.

Visible Human Browsers

The Visible Human Browsers (VHBs)7 from the University of Michigan provide basic Visible Human image navigation. The VHBs run within a web browser and allow users to view individual slices at low, medium, and high resolution. The browsers do not provide anatomical information; however, the Regional Browser does separate the slices into five major regions: head and neck, thorax and abdomen, pelvis, upper leg, and lower leg.

Cross-sectional Anatomy

The Cross-sectional Anatomy (CSA) from Gold Standard Multimedia (GSM)8 can be viewed with a plug-in-enabled web browser. This system allows a user to highlight structures on a slice but limits the user to axial slices. A notable feature of this system is its ability to categorize and search the structures by body region and functional system. Highlighting several structures with different colors and alpha blending is a convenient way to identify structures on a slice. GSM also hosts a “Virtual Human Gallery,” in which anyone may submit rendered images and animations for exhibition.

AnatLine

AnatLine9 from the NLM has an online Anatomical Browser and a two-part Anatomical Database: one part for online searching and one for offline use after downloading. The Anatomical Browser provides pre-rendered images of surface-based 3D models that the user can use to navigate through the thorax region of the body. The Anatomical Database lets users search body structure images by name. Search results provide links to files that can be downloaded and viewed using NLM’s VHDisplay software. These files include volume-rendered anatomical images, segmented stacks of image slices, and byte masks for segmenting anatomical structures. Most image files available for download are a few megabytes in size, except for the segmented images, which range from 330 MB to over 900 MB per file. This system offers few online interaction capabilities and requires the user to download the files for viewing.

Visible Human Viewer

The NPAC/OLDA Visible Human Viewer (VHV)10 from the University of Adelaide is an image viewer for the VH slices. VHV relies solely on slice-level navigation in axial, coronal, and sagittal orientations. The user can specify a section within the image for viewing at low, medium, or high resolution.

W3D-VBS System Description

W3D-VBS is a virtual reality-based anatomical training system for teaching human anatomy over the Internet. Users can take a self-guided “virtual tour” of the whole body while exploring and manipulating pixel-labeled slices and a selected VOI.

Navigating the Visible Human

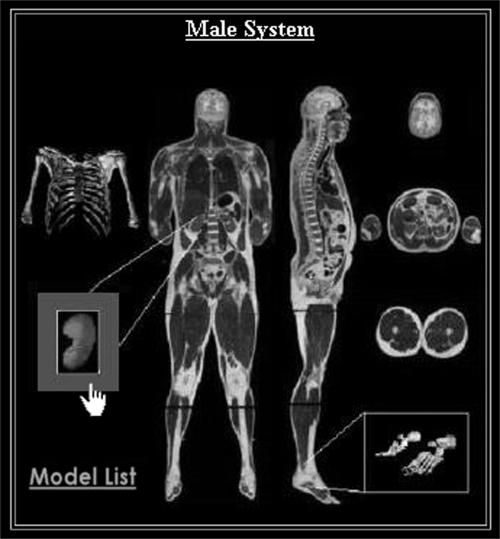

The navigation system, VBS-Explorer, provides user access to W3D-VBS via the Internet using a standard Java-enabled web browser. After the user logs into the system, the Quick Reference page (Figure 4) is loaded. At this stage, a user can navigate at (1) the slice level by activating the VBS-Explorer, (2) the volumetric level by activating the VBS-Volumizer, or (3) the surface level by activating the Haptic Modeler.

Figure 4.

Quick Reference Page.

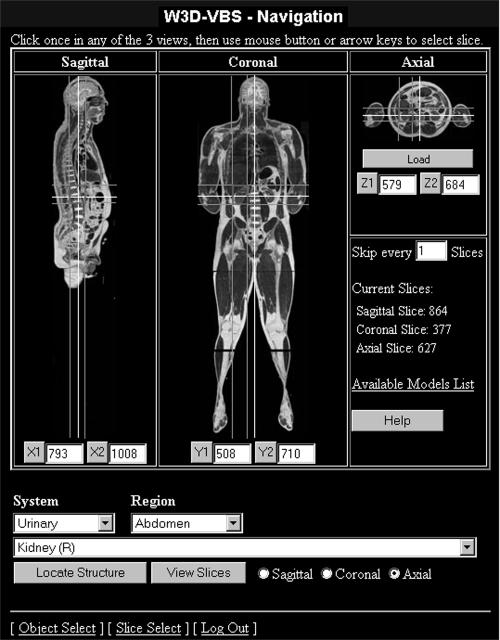

VBS-Explorer—Slice Level Navigation

Activating the VBS-Explorer directs the user to the Navigation page (Figure 5). Horizontal and vertical white reference lines are displayed on each view to indicate the current slice locations of the other two views. For example, the horizontal and vertical reference lines in the coronal view mark the current axial and sagittal slices, respectively. Slice-by-slice navigation is performed by moving the reference lines in any one of the three views; the other two views are updated automatically. To expedite navigation, a user-specified number of slices can be skipped in any direction.
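
The linked views amount to indexing one volume three ways at a shared cursor. The sketch below assumes the volume is stored as `volume[z][y][x]`: z is the axial (top-to-bottom) index, y the coronal (front-to-back) index, and x the sagittal (side-to-side) index. These conventions, the `Cursor` class, and `step` are illustrative and are not taken from W3D-VBS.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Cursor:
    x: int  # sagittal slice index (side-to-side)
    y: int  # coronal slice index (front-to-back)
    z: int  # axial slice index (top-to-bottom)

def axial(volume, c):
    """Axial view: rows are y, columns are x."""
    return volume[c.z]

def coronal(volume, c):
    """Coronal view: rows are z, columns are x.

    Its horizontal reference line sits at row c.z (the axial slice) and its
    vertical line at column c.x (the sagittal slice).
    """
    return [volume[z][c.y] for z in range(len(volume))]

def sagittal(volume, c):
    """Sagittal view: rows are z, columns are y."""
    return [[row[c.x] for row in volume[z]] for z in range(len(volume))]

def step(c, axis, n, shape):
    """Move one reference line n slices along axis ("x", "y" or "z"), clamped.

    shape is (depth, height, width). The other two views simply get
    re-extracted with the returned cursor, which is what keeps them in sync.
    """
    depth, height, width = shape
    limit = {"x": width, "y": height, "z": depth}[axis]
    new_index = min(max(getattr(c, axis) + n, 0), limit - 1)
    return replace(c, **{axis: new_index})
```

Skipping slices corresponds to passing `n` greater than 1. Clamping keeps the reference lines inside the volume.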

Figure 5.

Navigation page.

To help locate structures by name, the system allows selection of hundreds of segmented structures from a drop-down list. Specifying a body region and an anatomical system narrows the list. For example, the list in Figure 5 is reduced to urinary structures that reside in the abdomen, allowing the user to select the left or right kidney, the left or right renal pelvis, or the ureter. The red rectangles in Figure 5 show the location of the right kidney; they can be used to provide the x, y, and z values that define a default VOI containing the right kidney.
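
Narrowing the drop-down list is a filter over per-structure metadata, such as the anatomical system and body region that the Structure Information database stores. The catalog entries below are made-up examples, and the `Structure` fields and `narrow` function are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Structure:
    name: str
    system: str   # anatomical system, e.g. "urinary"
    region: str   # body region, e.g. "abdomen"

def narrow(structures, system=None, region=None):
    """Names of structures matching the chosen system and/or region (None = any)."""
    return sorted(
        s.name for s in structures
        if (system is None or s.system == system)
        and (region is None or s.region == region)
    )

# Illustrative catalog only; the real list holds hundreds of structures.
CATALOG = [
    Structure("left kidney", "urinary", "abdomen"),
    Structure("right kidney", "urinary", "abdomen"),
    Structure("ureter", "urinary", "abdomen"),
    Structure("urinary bladder", "urinary", "pelvis"),
    Structure("liver", "digestive", "abdomen"),
]

print(narrow(CATALOG, system="urinary", region="abdomen"))
```

Leaving both filters unset returns the full list, matching the unfiltered drop-down.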

A high-resolution image with detailed segmentation information allows the user to explore a specific slice in any direction. In the Detailed Slice View page (Figure 6), the navigation window (A) allows the user to zoom into the area of interest shown in the working window (B). The name of the structure under the cursor is displayed, allowing the user to learn anatomy at the slice level. A user can highlight a structure by clicking on it in the working window, which fills the selected structure with the default red color. The user may also highlight multiple structures by selecting their names from the structure drop-down list and assigning a color to each. The small intestine, psoas major, liver, and right kidney are all highlighted in Figure 6.
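
Every pixel of the segmented data carries a structure label. The name under the cursor is therefore a table lookup, and highlighting is a per-pixel blend of the structure's pixels with a chosen color. The sketch assumes a label mask aligned with the RGB image and a label-to-name table. The function names and the 0.5 default alpha are illustrative; the text only says that the default highlight color is red.

```python
RED = (255, 0, 0)

def name_at(labels, names, x, y):
    """Name of the structure under the cursor at column x, row y."""
    return names.get(labels[y][x], "unlabeled")

def highlight(image, labels, selections, alpha=0.5):
    """Blend each selected structure with its color and return a new image.

    image: rows of (r, g, b) tuples; labels: rows of int labels, same shape;
    selections: {label: (r, g, b)}. alpha=1.0 gives a solid fill.
    """
    out = []
    for img_row, lab_row in zip(image, labels):
        new_row = []
        for pixel, label in zip(img_row, lab_row):
            color = selections.get(label)
            if color is None:
                new_row.append(pixel)  # not selected: keep the original color
            else:
                new_row.append(tuple(round((1 - alpha) * p + alpha * c)
                                     for p, c in zip(pixel, color)))
        out.append(new_row)
    return out
```

Highlighting several structures in different colors is a single call with several entries in `selections`.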

Figure 6.

Detailed Slice View page.

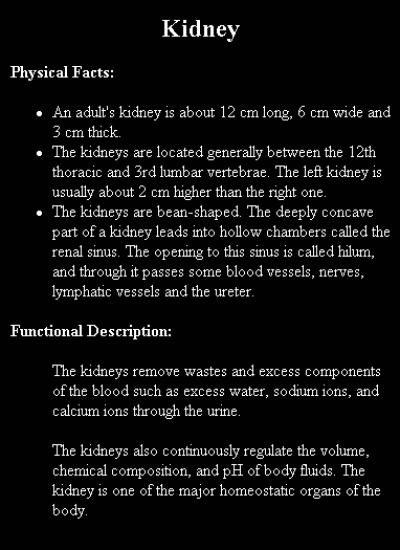

W3D-VBS provides physical and functional information for several anatomical structures (Figure 7). In order to provide textual information for all major structures, we have started to compile a knowledge base of functional and pathological information.

Figure 7.

Structure information for the kidney.

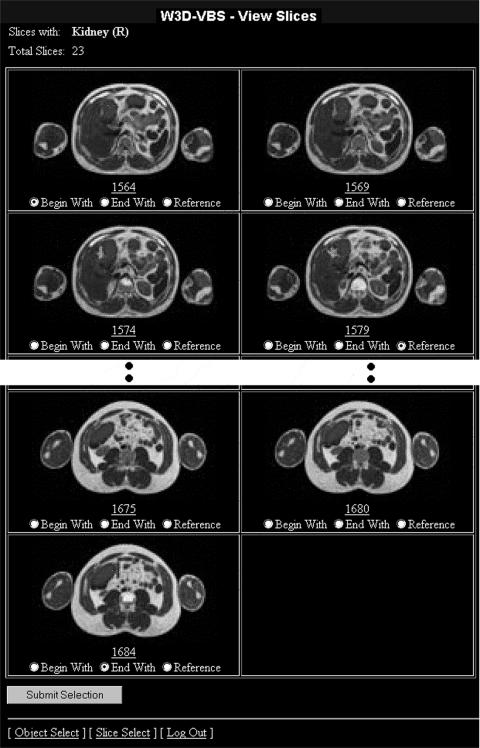

All slices containing the selected structure can be viewed in the View Slices page (Figure 8). A specific slice can be chosen for detailed examination. This slice can also be used as a reference (Figure 9) for specifying a VOI rectangle, and choosing the first and last slices from this page provides the third dimension. The user can thus select any volume of interest around or near the chosen structure. Selecting a v-VBS provides a default VOI that fits tightly around the structure. This VOI specifies both the dimensions of the volume and its location within the VH.
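
A default VOI that fits tightly around a structure can be read as the structure's axis-aligned bounding box. The axial slices listed on the View Slices page are then the slices in which the label occurs. The sketch below derives both from a label volume and crops the VOI. The `labels[z][y][x]` layout is hypothetical, and the optional `margin` stands in for the user enlarging the box to take in nearby structures.

```python
def bounding_box(labels, label):
    """Inclusive (z0, z1, y0, y1, x0, x1) of all voxels with this label, or None."""
    zs, ys, xs = [], [], []
    for z, plane in enumerate(labels):
        for y, row in enumerate(plane):
            for x, value in enumerate(row):
                if value == label:
                    zs.append(z)
                    ys.append(y)
                    xs.append(x)
    if not zs:
        return None
    return min(zs), max(zs), min(ys), max(ys), min(xs), max(xs)

def slices_containing(labels, label):
    """Axial slice indices in which the structure appears."""
    return [z for z, plane in enumerate(labels) if any(label in row for row in plane)]

def crop(volume, box, margin=0):
    """Extract the VOI, growing the box by `margin` voxels and clamping to the volume."""
    z0, z1, y0, y1, x0, x1 = box
    d, h, w = len(volume), len(volume[0]), len(volume[0][0])
    z0, y0, x0 = max(z0 - margin, 0), max(y0 - margin, 0), max(x0 - margin, 0)
    z1, y1, x1 = min(z1 + margin, d - 1), min(y1 + margin, h - 1), min(x1 + margin, w - 1)
    return [[row[x0:x1 + 1] for row in plane[y0:y1 + 1]] for plane in volume[z0:z1 + 1]]

# Tiny example: structure 5 occupies two voxels of a 3 x 3 x 3 volume.
example = [[[0] * 3 for _ in range(3)] for _ in range(3)]
example[1][1][1] = 5
example[2][1][2] = 5
```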

Figure 8.

All axial slices containing kidney.

Figure 9.

Reference slice for kidney.

VBS-Volumizer—Volumetric Level Navigation

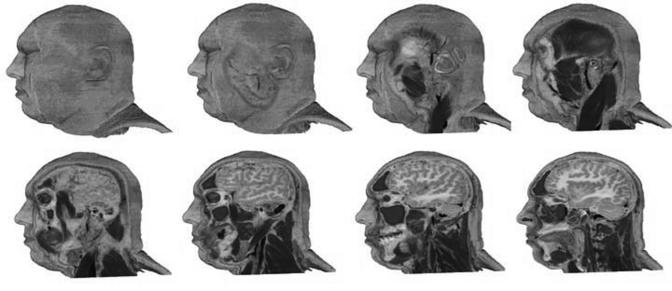

When the VOI is chosen, the VBS-Volumizer11 is activated to render the volume slice by slice. The VBS-Volumizer allows the user to dynamically create, visualize, explore, and manipulate a VOI. Once a VOI is loaded, the user can rotate, pan, zoom in or out, and modify its resolution. VBS-Volumizer is fully interactive: all manipulations are performed in real time. Several manipulations resulting in a partial “walk-through” of the head are shown in Figure 10. The term “walk-through” describes a manipulation of the volume in which the user traverses the VOI from any angle to observe the internal representation of the structures.
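
Two of the manipulations above are easy to state on a voxel array. The walk-through of Figure 10 peels sagittal slices off one side of the VOI, five at a time in the figure. Lowering the resolution keeps every n-th voxel along each axis. VBS-Volumizer's own implementation is not described in the text. The functions below are illustrative and assume the `volume[z][y][x]` layout with x as the sagittal (side-to-side) axis. Plain subsampling, rather than averaging, is a deliberate simplification.

```python
def walk_through(volume, removed, from_left=True):
    """Drop `removed` sagittal slices (x columns) from one side of the volume."""
    if from_left:
        return [[row[removed:] for row in plane] for plane in volume]
    return [[row[:len(row) - removed] for row in plane] for plane in volume]

def walk_through_frames(volume, step=5):
    """Successive views of a walk-through that peels `step` slices per frame."""
    width = len(volume[0][0])
    return [walk_through(volume, k) for k in range(0, width, step)]

def downsample(volume, factor):
    """Keep every factor-th voxel along z, y and x (nearest-neighbour reduction)."""
    return [[row[::factor] for row in plane[::factor]] for plane in volume[::factor]]
```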

Figure 10.

Partial “walk-through” in the sagittal direction by removing five slices at a time.

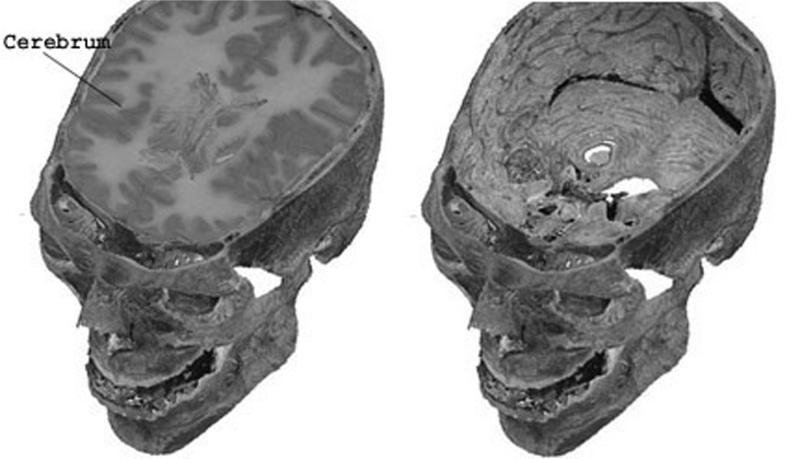

3D highlighting and labeling are included in VBS-Volumizer to help the user identify structures within the VOI. As the user moves the mouse over the rendered image, the name of the structure directly under the cursor is displayed, as illustrated in Figure 11. A selected structure can be highlighted for better visibility during manipulations, and a highlighted structure can be removed or isolated. In Figure 12, the cerebrum and other tissues in the skull were removed to view the skull cavity. Individual v-VBSs can be selected and extracted within the VOI by altering the opacity of individual structures. Figure 13 shows the kidney at 100%, 50%, and 0% opacity, with the surrounding tissues at 0% opacity to reveal internal structures.
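
Per-structure opacity and 3D labeling can share one lookup table keyed by segmentation label. In the sketch below, removing a structure sets its opacity to 0, and isolating one sets every other structure to 0. The label under the cursor is taken as the first voxel along the viewing ray whose opacity is non-zero. The ray is given as a precomputed front-to-back list of labels. The table layout and function names are assumptions for illustration.

```python
def opacity_table(labels, default=1.0, overrides=None):
    """Opacity per label; overrides such as {kidney: 0.5} set individual structures."""
    table = {label: default for label in labels}
    table.update(overrides or {})
    return table

def remove(table, label):
    """Hide one structure."""
    return {**table, label: 0.0}

def isolate(table, label):
    """Hide every structure except one, which keeps its current opacity."""
    return {k: (v if k == label else 0.0) for k, v in table.items()}

def label_under_cursor(ray_labels, table, names):
    """Name of the first visible structure along a front-to-back ray of labels."""
    for label in ray_labels:
        if table.get(label, 0.0) > 0.0:
            return names.get(label, "unlabeled")
    return None  # nothing visible along this ray
```

With skin and skull removed or the cerebrum isolated, the cursor reports the cerebrum rather than the tissue in front of it.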

Figures 11 and 12.

(Left) Cerebrum highlighted and labeled. (Right) Cerebrum and other tissue removed.

Figure 13.

Kidney at 100%, 50%, and 0% opacity.

VBS-Volumizer provides surface, slice, and volumetric modes. The surface mode displays a hollow, textured bounding box of the volume. The surface and slice rendering modes are provided for faster manipulation. Switching to volume rendering provides a greater level of detail and displays the entire volume, interior and exterior.
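
Volume mode shows the interior as well as the exterior because each pixel blends every voxel its viewing ray passes through. The text says the volume is rendered slice by slice, which suggests blending textured slices on the graphics card rather than casting rays in software. Either way, each pixel accumulates color with the same "over" blending. The sketch below shows that blending as textbook front-to-back compositing for a single ray, with early termination. It is not a description of VBS-Volumizer's internals.

```python
def composite_ray(samples, cutoff=0.99):
    """Front-to-back compositing of (r, g, b, alpha) samples along one ray.

    Colors and alphas are in [0, 1]. Each sample contributes in proportion to
    the transparency left in front of it. The loop stops once the accumulated
    opacity reaches `cutoff`, since samples behind can barely show.
    """
    r = g = b = 0.0
    acc_alpha = 0.0
    for sr, sg, sb, sa in samples:
        weight = (1.0 - acc_alpha) * sa
        r += weight * sr
        g += weight * sg
        b += weight * sb
        acc_alpha += weight
        if acc_alpha >= cutoff:
            break
    return r, g, b, acc_alpha
```

Setting a structure's alpha to 0 (as in the opacity edits above) makes it contribute nothing. This is why surrounding tissue can be faded out to reveal what lies behind it.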

Haptic Modeler—Surface Model Level Navigation

The Haptic Modeler (HM) allows stereoscopic visualization and palpation of the s-VBS models using a PHANTOM12 haptic device. A user can select an s-VBS to touch from the Quick Reference, Navigation, or Detailed Slice View page. Once an s-VBS is selected, the system queries a model database to determine whether that structure is available. If a model exists, the system provides a link to load the selected s-VBS into the HM on the client’s computer. A list of the available s-VBS models is also provided for loading the s-VBS of interest.

Default haptic material properties for the structures are loaded from the Haptic Texture library (described in the Architecture section); however, the user may change the feel of anatomical structures. The individual preferences can then be saved in a local Haptic Texture library.

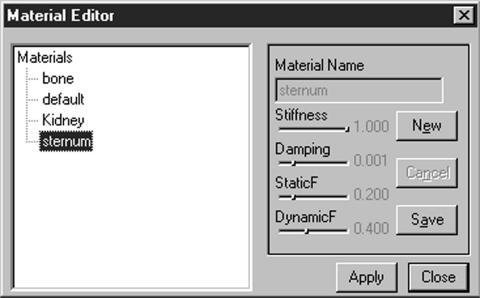

The material editor (Figure 14) is used to change tissue properties that define how an s-VBS should feel while being palpated. This interface allows the user to create, manipulate, and save materials and apply them to 3D anatomical models. Each material consists of a unique combination of stiffness, damping, static friction, and dynamic friction; however, as new descriptors become available, they can be incorporated into the system. Each of the four current components plays a different role in specifying how the material feels. Stiffness determines how hard or soft the object is. Damping gives the object a “spongy” feeling. Static friction provides a constant frictional force, while dynamic friction adds friction that increases or decreases as the user glides over the surface of the object. If haptics is initialized, modifications are felt instantaneously.
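
The four material components map naturally onto a common penalty-based contact model. Stiffness and damping set the normal force from penetration depth and velocity. Coulomb friction, with separate static and dynamic coefficients, acts along the surface. The sketch applies this model to a flat surface at z = 0 with solid material below. It is a standard textbook formulation, not necessarily the one used by W3D-VBS or the PHANTOM software. The class, units, and function names are hypothetical. Static friction is modeled here as the tangential force the surface resists before the probe starts to slide.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class HapticMaterial:
    stiffness: float         # k, N/m (hypothetical units)
    damping: float           # b, N*s/m
    static_friction: float   # mu_s, dimensionless
    dynamic_friction: float  # mu_d, dimensionless

def contact_force(mat, pos, vel, eps=1e-6):
    """Force on the probe from a flat surface z = 0 (solid below)."""
    x, y, z = pos
    vx, vy, vz = vel
    if z >= 0.0:
        return (0.0, 0.0, 0.0)  # not touching
    depth = -z
    # Spring pushes out of the surface; damping resists motion into it
    # (vz < 0 adds force, vz > 0 removes some).
    fn = max(mat.stiffness * depth - mat.damping * vz, 0.0)  # never pulls the probe in
    speed_t = math.hypot(vx, vy)
    if speed_t < eps:
        return (0.0, 0.0, fn)  # not sliding: no dynamic friction
    ft = mat.dynamic_friction * fn  # sliding friction opposes tangential motion
    return (-ft * vx / speed_t, -ft * vy / speed_t, fn)

def sticks(mat, fn, applied_tangential):
    """Coulomb static-friction test: True if the probe stays put on the surface."""
    return applied_tangential <= mat.static_friction * fn
```

A full renderer would also apply an opposing tangential force while `sticks` is true. That force is omitted here to keep the sketch short.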

Figure 14.

Haptic material editor.

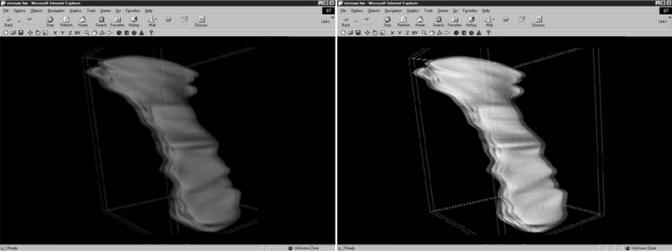

Using the HM, the user can rotate, translate, and scale the structure in any direction. Zooming, panning, and rotating the entire scene are also integral parts of the HM. The system provides several graphics rendering options, such as viewing the model in wire-frame or filled mode and using flat or smooth shading. The model can also be viewed in mono or stereoscopic mode. HM provides two modes of stereoscopic visualization: interlaced and anaglyph (Figure 15). Interlaced stereoscopy interlaces the left- and right-eye images along horizontal scan lines; the image is then displayed using an interlace-capable video card and liquid crystal shutter glasses. Anaglyph images are hardware-independent and require only a pair of glasses with differently colored lenses (e.g., red-blue or red-green). Either mode allows the user to dynamically adjust the perceived depth of the scene by controlling the parallax (the separation between the left- and right-eye images), which determines whether objects appear to recede into the monitor or protrude from it.
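
Both stereo modes combine a left-eye and a right-eye rendering of the same scene. The sketch below builds a red-cyan anaglyph, taking red from the left image and green and blue from the right. It also builds a row-interlaced frame, with even scan lines from the left image and odd lines from the right. A horizontal shift of one image changes the parallax. Images are rows of (r, g, b) tuples. The text specifies neither which eye gets the even lines nor how shifted-out pixels are filled, so both choices here are assumptions. For red-blue or red-green glasses, only the blue or only the green channel would be taken from the right image.

```python
BLACK = (0, 0, 0)

def anaglyph(left, right):
    """Red channel from the left-eye image, green and blue from the right-eye image."""
    return [[(l[0], r[1], r[2]) for l, r in zip(lrow, rrow)]
            for lrow, rrow in zip(left, right)]

def interlace(left, right):
    """Even scan lines from the left eye, odd scan lines from the right eye."""
    return [lrow if y % 2 == 0 else rrow
            for y, (lrow, rrow) in enumerate(zip(left, right))]

def shift(image, dx):
    """Shift an image horizontally by dx pixels (positive = right), padding with black."""
    out = []
    for row in image:
        w = len(row)
        if dx >= 0:
            out.append([BLACK] * min(dx, w) + row[:max(w - dx, 0)])
        else:
            out.append(row[-dx:] + [BLACK] * min(-dx, w))
    return out
```

Shifting the two eye images in opposite directions before combining them changes their offset. This moves the scene's apparent depth relative to the screen plane.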

Figure 15.

Two modes of stereoscopic rendering: anaglyph (left) and interlaced (right).

Evaluation

The evaluation tool is used to track the user’s progress in learning anatomy. It allows users to customize question sets that test their ability to identify structures within a slice or a VOI. There are two types of evaluation: one for self-evaluation and one for use in a course curriculum. In self-evaluation, users create question sets to test their ability to identify structures. During an evaluation, answers are saved to a database for analysis and to help track the user’s progress. Depending on that progress, users can add or delete self-evaluation questions: additional questions about hard-to-learn items can be added and easier ones removed. When the tool is used in a course curriculum, an instructor can build customized lessons and evaluations, basing the customization on previous results to target trouble areas.
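
Saved answers are enough to find trouble areas. The sketch below assumes each stored response is a `(structure, is_correct)` pair and computes per-structure accuracy. Structures below a threshold are listed, worst first, as candidates for extra questions. The record format, the 0.7 threshold, and the minimum number of attempts are arbitrary illustrative choices.

```python
from collections import defaultdict

def accuracy_by_structure(responses):
    """Map structure -> (correct, attempts) from (structure, is_correct) records."""
    tally = defaultdict(lambda: [0, 0])
    for structure, is_correct in responses:
        tally[structure][0] += int(is_correct)
        tally[structure][1] += 1
    return {s: (c, n) for s, (c, n) in tally.items()}

def trouble_areas(responses, threshold=0.7, min_attempts=3):
    """Structures answered correctly less often than `threshold`, worst first.

    Structures with fewer than `min_attempts` answers are skipped, since their
    accuracy is not yet meaningful.
    """
    stats = accuracy_by_structure(responses)
    weak = [(c / n, s) for s, (c, n) in stats.items()
            if n >= min_attempts and c / n < threshold]
    return [s for _, s in sorted(weak)]
```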

DV-UI

Current trends in user interface design suggest that voice recognition and customization will become important features.13 Many products already include voice-controlled interfaces, but they usually lack the flexibility of dynamic customization. For example, Thinkdesign 6.0 requires the user to learn the software’s hard-to-remember command vocabulary to manipulate the model on the screen through speech.14

The Dynamic Voice User Interface (DV-UI) is a tool bundled with VBS-Volumizer and HM that allows interface control through voice commands. When using the HM, for example, the user can say “increase stiffness” to increase the stiffness of the object currently being palpated, or “start rotating” to make the model rotate on the screen. DV-UI also gives users the option of assigning custom phrases to these software functions to suit their own preferences.
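
At its core, a customizable voice interface is a table from recognized phrases to application functions, with user-defined phrases added as aliases. The speech recognizer itself is out of scope here; the sketch assumes it delivers a text string. The `VoiceCommands` class, the stiffness step, and the handler names are hypothetical stand-ins for the Haptic Modeler's functions.

```python
class VoiceCommands:
    """Maps spoken phrases (already converted to text) to actions."""

    def __init__(self):
        self._actions = {}

    def register(self, phrase, action):
        self._actions[phrase.strip().lower()] = action

    def alias(self, custom_phrase, existing_phrase):
        """Let the user say custom_phrase instead of existing_phrase."""
        self._actions[custom_phrase.strip().lower()] = self._actions[existing_phrase.strip().lower()]

    def dispatch(self, heard):
        """Run the action for a recognized phrase; return False if it is unknown."""
        action = self._actions.get(heard.strip().lower())
        if action is None:
            return False
        action()
        return True

# Hypothetical application state standing in for the Haptic Modeler.
state = {"stiffness": 0.5, "rotating": False}

def increase_stiffness(step=0.1):
    state["stiffness"] = min(1.0, state["stiffness"] + step)

def start_rotating():
    state["rotating"] = True

commands = VoiceCommands()
commands.register("increase stiffness", increase_stiffness)
commands.register("start rotating", start_rotating)
commands.alias("harder", "increase stiffness")  # a user's custom phrase
```

Because aliases point at the same function objects, custom phrases can be added or removed at run time without changing the application code.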

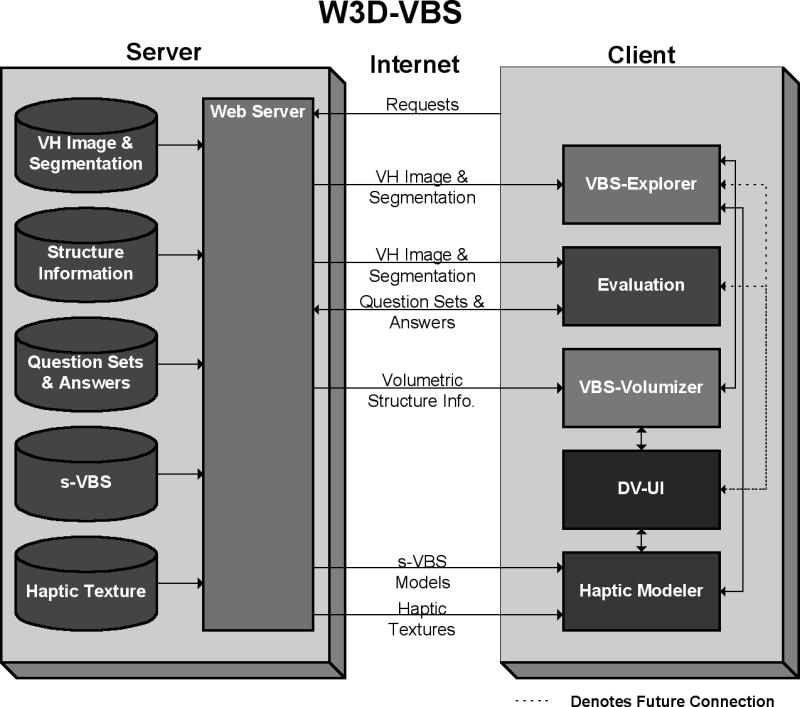

W3D-VBS Architecture

W3D-VBS is a client/server application that is accessible via the Internet using a standard Java-enabled web browser. Figure 16 illustrates the basic communication between the client and server. The server side consists of a web server and several libraries and databases used by the system. Our server runs on a machine with Windows NT Server 4.0 with Service Pack 6.0, a Pentium II 350 MHz processor, and 224 MB of RAM. The web server uses Microsoft Internet Information Server and is primarily used to transmit data and HTML pages to the clients. The client end of the system consists of VBS-Explorer, VBS-Volumizer, Haptic Modeler (HM), Dynamic Voice User Interface (DV-UI), and the evaluation system. Both VBS-Explorer and the evaluation system are HTML-based. VBS-Volumizer and the Haptic Modeler are helper applications that need to be downloaded and installed on the client’s computer prior to use.

Figure 16.

W3D-VBS architecture.

At a client’s request, the server sends data such as HTML pages, Java applets, and anatomical data. HTML pages are generated dynamically using Active Server Pages (ASP).

Server Libraries and Databases

The VH Image and Segmentation database contains the dataset for the Visible Human; the system uses the slices and dataset from the VHM. The Structure Information database contains structure-specific information, including anatomical system, region of the body, and other information derived from the VH dataset (e.g., bounding box information for each structure). The Question Sets and Answers database contains the information needed for the evaluation system: the questions created by the users, their corresponding answers, and the users’ responses. The s-VBS library contains a collection of surface-based 3D models used by W3D-VBS.

We are currently in the process of generating the Haptic Texture Library, using a heuristic method15,16 to obtain values that determine how each structure should feel. In addition to biomechanical factors, the perception of objects by touch is subjective and depends on a number of physiological and psychological factors. However, the sense of touch is an integral part of medical practice, and simulating it, even imperfectly, is essential. Simulating palpation of different tissue types is an enormous hurdle for developers of computer haptics. We hope this heuristic method will allow us to record how medical experts think a structure should feel. These values can then be used as default haptic textures for the s-VBS. Until the library is complete, default textures with maximum stiffness are assigned.
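
The lookup order implied here and in the Haptic Modeler section can be sketched as a chained lookup. A user's saved local preferences come first, then the shared Haptic Texture Library, then a maximum-stiffness default. The material fields mirror the four components of the material editor. The normalized 0-to-1 scale, the JSON file format, the default values other than stiffness, and all names are assumptions.

```python
import json
from dataclasses import dataclass, asdict

@dataclass(frozen=True)
class Material:
    stiffness: float         # normalized: 1.0 = stiffest the device renders (assumption)
    damping: float
    static_friction: float
    dynamic_friction: float

# Placeholder assigned until the library has an entry for a structure.
MAX_STIFFNESS_DEFAULT = Material(stiffness=1.0, damping=0.0,
                                 static_friction=0.0, dynamic_friction=0.0)

def material_for(structure, local_prefs, shared_library):
    """User preference first, then the shared library entry, then the stiff default."""
    if structure in local_prefs:
        return local_prefs[structure]
    return shared_library.get(structure, MAX_STIFFNESS_DEFAULT)

def save_local(local_prefs, path):
    """Persist a user's preferences as JSON (the file format is an assumption)."""
    with open(path, "w") as f:
        json.dump({name: asdict(m) for name, m in local_prefs.items()}, f, indent=2)

def load_local(path):
    """Read preferences written by save_local."""
    with open(path) as f:
        return {name: Material(**fields) for name, fields in json.load(f).items()}
```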

Status Report

Supporting an exploration- and discovery-based learning environment has been our major challenge. This “what you want is what you get” paradigm has made our system dynamic, so that W3D-VBS can support changes in curricular structure to ensure successful learning. Currently, all our systems work as reported in this article. However, we consider W3D-VBS a work in progress and are continuously updating it to add new features.

We are planning to perform tests on the server to see how it handles loads from a large number of simultaneous users. Earlier tests with ten simultaneous users showed that the response times remained consistent; however, the server CPU and memory usage did increase.

A common problem among other popular web-based human anatomy systems is limited network bandwidth. Other systems approach this problem either by reducing the image and segmentation resolution or by asking users to download hundreds of megabytes of data. In our system, the data files residing on the server are compressed, which reduces the time needed to download an entire high-resolution segmented slice from about 30 seconds to about 2 seconds.
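
The text does not say which compression scheme is used. As one illustration of why segmented slices compress well, the sketch run-length encodes a row of structure labels, where long runs of a single label are typical. It also applies general-purpose zlib compression from the Python standard library. The synthetic scan line is made up, and its compression ratio says nothing about the actual W3D-VBS data. Packing one byte per label assumes fewer than 256 structures; a mask with hundreds of structures would need 16-bit labels.

```python
import zlib

def rle_encode(row):
    """Run-length encode a sequence of labels as [(label, count), ...]."""
    runs = []
    for label in row:
        if runs and runs[-1][0] == label:
            runs[-1] = (label, runs[-1][1] + 1)  # extend the current run
        else:
            runs.append((label, 1))  # start a new run
    return runs

def rle_decode(runs):
    out = []
    for label, count in runs:
        out.extend([label] * count)
    return out

# Synthetic 2048-pixel scan line: background, skin, fat, muscle, organ, muscle, background.
row = [0] * 300 + [1] * 40 + [2] * 200 + [3] * 500 + [17] * 400 + [3] * 308 + [0] * 300

packed = bytes(row)                   # one byte per label (assumes labels < 256)
compressed = zlib.compress(packed, 9)
print(len(rle_encode(row)), "runs;", len(packed), "bytes ->", len(compressed), "bytes with zlib")
```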

We are currently working on integrating the VHF data into W3D-VBS.

VBS-Volumizer renders a VOI and allows the user to explore it fully using a moderately priced graphics card instead of the expensive high-end workstations17–20 or specialized hardware21–23 typically used for volume rendering. Our tests addressing real-time issues with the VBS-Volumizer show that we are able to render full-color voxel volumes of 200³, 300³, and 400³ voxels at 60, 20, and 8 frames per second, respectively. The sizes, frame rates, and load times of these volumes are listed in Table 1. Load times increase considerably for larger volumes; however, interactive frame rates are achieved once the volume is loaded. We are exploring optimization schemes to generate and render larger v-VBS at higher frame rates.

Table 1.

VBS-Volumizer Rendering Rates for Different VOI

Test system: Pentium 4, 933 MHz, 512 MB, GeForce2 (64 MB), Windows NT 4.0.

| Volume Size (MB) | Volume Voxel Dimensions | FPS | Load Time (m) |
|---|---|---|---|
| 22.8 | 200 × 200 × 200 | 60 | 1.14 |
| 77.2 | 300 × 300 × 300 | 20 | 7.36 |
| 183.1 | 400 × 400 × 400 | 8 | 13.34 |
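
The Volume Size column of Table 1 is consistent with uncompressed 24-bit color (3 bytes per voxel) and megabytes of 2^20 bytes. The check below reproduces it. The bytes-per-voxel figure is inferred from the numbers, not stated in the text.

```python
MIB = 2 ** 20  # bytes per megabyte, as the table's figures imply

def volume_mib(edge, bytes_per_voxel=3):
    """Size in MiB of a cubic RGB voxel volume with `edge` voxels per side."""
    return edge ** 3 * bytes_per_voxel / MIB

for edge in (200, 300, 400):
    print(f"{edge}^3 voxels: {volume_mib(edge):.1f} MB")
```

The computed sizes are about 22.89, 77.25, and 183.11 MB. The last two match the table after rounding; the table's 22.8 appears to be truncated rather than rounded.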

Discussion

Our next step is to work with medical professionals (see Acknowledgments) to help us develop the knowledge base for the evaluation system, including lesson-building capabilities. The robustness of our system needs to be tested with higher-resolution segmented data for the VH.

Currently, s-VBSs are drawn with a specific color (e.g., bone is white). We are planning to extract image textures for the s-VBS from the VH image slices and the corresponding v-VBS. We are in the process of integrating the s-VBS generator into W3D-VBS so that users can construct s-VBS models.

To provide complete textual information for all major structures, we plan to compile a knowledge base of functional and pathological information. Eventually we hope to incorporate other media sources, such as video and audio, providing the latest functional and pathological information about the anatomical structures.

Visualization of deformation of s-VBS and v-VBS models while using the haptic device is not currently available. Deformation of a simple volumetric haptic model has been accomplished.24 We plan on extending this to work with the s-VBS and v-VBS generated with VBS-Volumizer.

A database of rendered VOIs is currently being compiled. This database will enable users to create libraries of v-VBS for specific lessons. Image-based techniques such as Apple’s QuickTime VR allow the user to manipulate sequences of pre-rendered images.25 Changes in the opacity of the surrounding tissue and rotation of a v-VBS can easily be presented this way, which bypasses the need to regenerate and render the v-VBS every time.

Dynamic Voice User Interface needs to be fully integrated with the entire system. The current system has DV-UI incorporated into the VBS-Volumizer and HM.

The self-evaluation tool can be made more effective by utilizing progress charts based on test results. Another useful feature would be to compare the student’s test results with those of other students in the database. This feature will also help the instructor track individual progress and rank students. Highlighting a named structure and naming a highlighted structure are integral parts of generating tests. Generation of random questions, other than the standard tests prepared by the user or instructor, is also a possibility.
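
Random questions of the two kinds named above can be drawn from the segmented structures visible on each slice. The sketch picks a slice, a structure, and a question type at random. Passing a seeded `random.Random` makes a test reproducible. The question wording, the dictionary format, and the function names are hypothetical.

```python
import random

QUESTION_TYPES = ("name_highlighted", "highlight_named")

def random_question(structures, slice_index, rng=random):
    """Build one question about a structure visible on the given slice."""
    structure = rng.choice(structures)
    kind = rng.choice(QUESTION_TYPES)
    if kind == "name_highlighted":
        prompt = "Name the highlighted structure."  # the structure is shown highlighted
    else:
        prompt = f"Click on the {structure}."       # the user must highlight it
    return {"slice": slice_index, "type": kind, "prompt": prompt, "answer": structure}

def random_test(structures_by_slice, n, rng=random):
    """n questions, each on a randomly chosen slice and structure."""
    slices = sorted(structures_by_slice)
    questions = []
    for _ in range(n):
        s = rng.choice(slices)
        questions.append(random_question(structures_by_slice[s], s, rng))
    return questions
```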

To evaluate the functionality of our system, we have performed a feature comparison (Table 2) between W3D-VBS and the systems described in the Web-based Visible Human Projects section.

Conclusion

Traditional methods of teaching anatomy include the use of cadavers and 2D illustrations with labels to identify structures. Although these techniques have proven effective over many years, computerized techniques can aid in learning anatomy. We have described our Web-based three-dimensional Virtual Body Structures (W3D-VBS) system for teaching human anatomy. This system includes an interface for navigating through the Visible Human, constructing volumetric structures, and touching previously built geometric structures with a PHANTOM haptic device. To create a more natural environment, the system includes a Dynamic Voice User Interface that allows the user to interact with the computer through voice commands. The system also includes a self-evaluation and lesson-building tool for customized learning.

Acknowledgments

The authors thank Dr. John Griswold, Dr. Sammy Deeb, and Dr. John Thomas of the Texas Tech Health Sciences Center and Dr. Parvati Dev, Dr. Leroy Heinrichs, and Dr. Sakti Srivastava of the SUMMIT group at Stanford University for their support and stimulating discussions. We also acknowledge Dr. Donald Jenkins, Dr. Terry Yoo, and Dr. Mike Ackerman of the National Library of Medicine. This work would not have been possible without the data provided by NLM, GSM, Dr. Gabor Szekely of the Swiss Federal Institute of Technology, and Dr. Victor Spitzer of the University of Colorado. We are also grateful for Brad Johnson’s contributions in compressing data. Finally, we express our deep gratitude to Dr. Thomas Krummel of Stanford University and Dr. Richard Satava of Yale University for continuous encouragement, support, and interest. This work is funded in part by the State of Texas Advanced Research Project, grant 003644-0117.

This work was supported in part by grant 003644-0017 from the State of Texas Advanced Research Project.

References

- 1. National Library of Medicine at http://www.nlm.nih.gov.

- 2. Ackerman MJ. The Visible Human Project. Proceedings of the IEEE 86(3):504–11, 1998.

- 3. Temkin B, Stephens B, Acosta E, et al. Haptic Virtual Body Structures. The Third Visible Human Project Conference, sponsored by the National Library of Medicine, Oct 2000. CD-ROM, published with International Standard Serial Number (ISSN) 1524-9008.

- 4. Golland P, Kikinis R, Halle M, et al. AnatomyBrowser: A novel approach to visualization and integration of medical information. Comput Aided Surg 4(3):129–43, 1999.

- 5. Hoffman H, Murray M. Anatomic VisualizeR: Realizing the vision of a VR-based learning environment. Medicine Meets Virtual Reality 7:134–40, 1999.

- 6. Voxel Man Project at http://www.uke.uni-hamburg.de/institute/imdm/idv/forschung/vm/index.en.html.

- 7. Visible Human Browser at http://vhp.med.umich.edu.

- 8. Gold Standard Multimedia at http://imc.gsm.com/demos/xademo/xademo.htm.

- 9. AnatLine at http://anatline.nlm.nih.gov/.

- 10. NPAC/OLDA Visible Human Viewer at http://www.dhpc.adelaide.edu.au/projects/vishuman2/index.html.

- 11. Temkin B, Hatfield P, Griswold JA, et al. Volumetric Virtual Body Structures. Medicine Meets Virtual Reality: 10 Digital Upgrades: Applying Moore’s Law to Health, January 23–26, 2002.

- 12. SensAble Technologies at http://www.sensable.com.

- 13. Dertouzos M. The Unfinished Revolution. New York, HarperCollins, 2001.

- 14. Greco J. Thinkdesign 6. Computer Graphics World, May 2001.

- 15. Acosta E, Temkin B, Griswold JA, et al. Haptic texture generation: A heuristic method for virtual body structures. 14th IEEE Symposium on Computer-Based Medical Systems 2001, July 26–27, pp 395–99.

- 16. Acosta E, Temkin B, Griswold JA, et al. Heuristic haptic texture for surgical simulations. Medicine Meets Virtual Reality: 10 Digital Upgrades: Applying Moore’s Law to Health, January 23–26, 2002.

- 17. Kniss J, McCormick P, McPherson A, et al. Interactive texture-based volume rendering for large data sets. IEEE Computer Graphics and Applications,

- 18. Bro-Nielsen M. Mvox: Interactive 2-4D medical image and graphics visualization software. Proc. Computer Assisted Radiology (CAR’96), pp 335–8, 1996.

- 19. LaMar E, Hamann B, Joy K. Multiresolution techniques for interactive texture-based volume rendering. In Proceedings of IEEE Visualization 99, pp 355–62, October 1999.

- 20. Parker S, Parker M, Livnat Y, et al. Interactive ray tracing for volume visualization. IEEE Transactions on Visualization and Computer Graphics, 1999.

- 21. Ray H, Pfister H, Silver D, Cook T. Ray casting architectures for volume visualization. Accepted to IEEE Transactions on Visualization and Computer Graphics, September 1999, Vol. 5, No. 3.

- 22. Pfister H, Hardenbergh J, Knittel J, et al. The VolumePro real-time ray-casting system. In SIGGRAPH 1999 Conference Proceedings, pp 251–60, 1999.

- 23. Meißner M, Kanus U, Straßer W. VIZARD II: A PCI-card for real-time volume rendering. In Proceedings of the SIGGRAPH/Eurographics Workshop on Graphics Hardware, pp 61–7, Lisbon, Portugal, August 1998.

- 24. Burgin J, Stephens B, Vahora F, et al. Haptic rendering of volumetric soft-bodies objects. The Third PHANTOM User Workshop (PUG 98), Oct 3–6, MIT Endicott House, Dedham, MA.

- 25. Chen SE. QuickTime VR: An image-based approach to virtual environment navigation. SIGGRAPH 95 Conference Proceedings, Annual Conference Series, pp 29–38.