Abstract

Usability evaluations are a powerful tool that can assist developers in their efforts to optimize the quality of their web environment. This underutilized, experimental method can serve to move applications toward true user-centered design. This article describes the usability methodology and illustrates its importance and application by describing a usability study undertaken at the Mayo Clinic for the purpose of improving an academic research web environment. Academic institutions struggling in an era of declining reimbursements are finding it difficult to maintain academic enterprises on the back of clinical revenues. This may result in declining amounts of time that clinical investigators have to spend in non–patient-related activities. For this reason, we have undertaken to design a web environment, which can minimize the time that a clinician-investigator needs to spend to accomplish academic instrumental activities of daily living. Usability evaluation is a powerful application of human factors engineering, which can improve the utility of web-based Informatics applications.

Most systems in health care are built without using the rigorous scientific principles employed by Medical Informatics. This work shows the value of performing a formal usability evaluation of software, which, in order to be effective, must function with a high degree of efficiency and flexibility. A review of such systems is provided by Shackel.1 The cognitive science principles, which are the basis for the usability methodology, are described in an excellent review by Cimino, Patel and Kushniruk.2 Cognitive science provides insight into many problems commonly wrestled with in Medical Informatics development efforts.3 This article describes the findings of a usability experiment designed to evaluate a research web environment developed for academic internists. The study design and the findings of the experiment should be helpful to informaticists interested in building high-quality, user-centered web-based applications.

Background: The Usability Laboratory

A usability study evaluates how a particular process or product works for individuals (Figure 1).4 Optimally, one should test a population of individuals who are a sample of typical users of the type of process or product being tested. It should be stated clearly to participants that the purpose of the study is to evaluate the process or product and not the individual participant.5 Usability sessions are videotaped from multiple angles (including the computer’s screen image), and participants are encouraged to share their thoughts verbally as they progress through the scenarios provided (“think aloud”).6 This helps to define the participants’ behavior in terms of both their intentions and their actions.7 For example, in our study, we had users identify what information they were looking for before they initiated their search. We could monitor what was entered into the program and view the information retrieved. Then we recorded the degree to which clinician-users were satisfied with the information that they had obtained.8

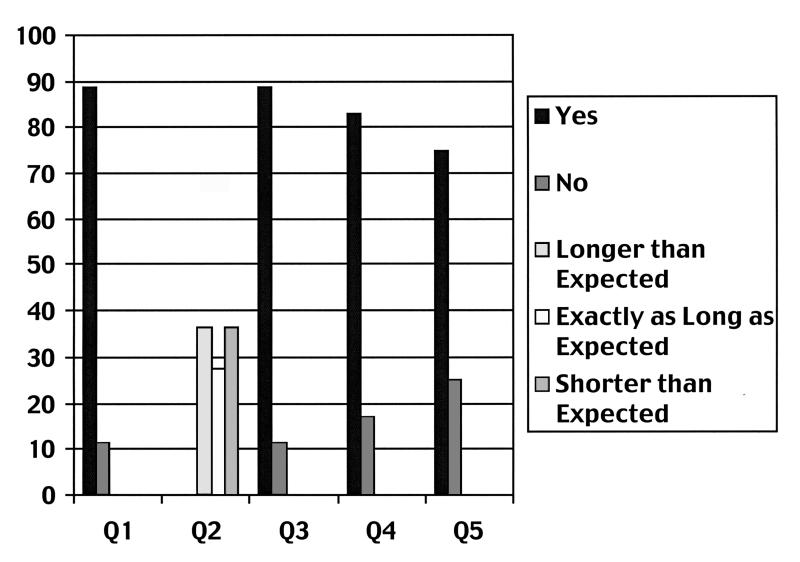

Figure 1.

Some attributes of usefulness, as elucidated by bench testing. Depictions of the axes of usability serve to emphasize the goals and challenges in the design of a well-formed web (hypertext) environment.

To accomplish a valid study, one must follow a specific protocol and have multiple participants (typically 6–12) interact with the system using the same set of scenarios.9 It is important that the design team be able to observe multiple participants if they are to become informed by the study. The scenarios should reflect the way the system being tested is actually going to be utilized.10 The closer the study design can mimic the true end-user environment, the more validity the results of the study will have.11 In this manner, developers ascertain characteristics of their web environment that are functional, need improvement, fit user expectations, miss expectations, fail to function, or are opportunities for development.12

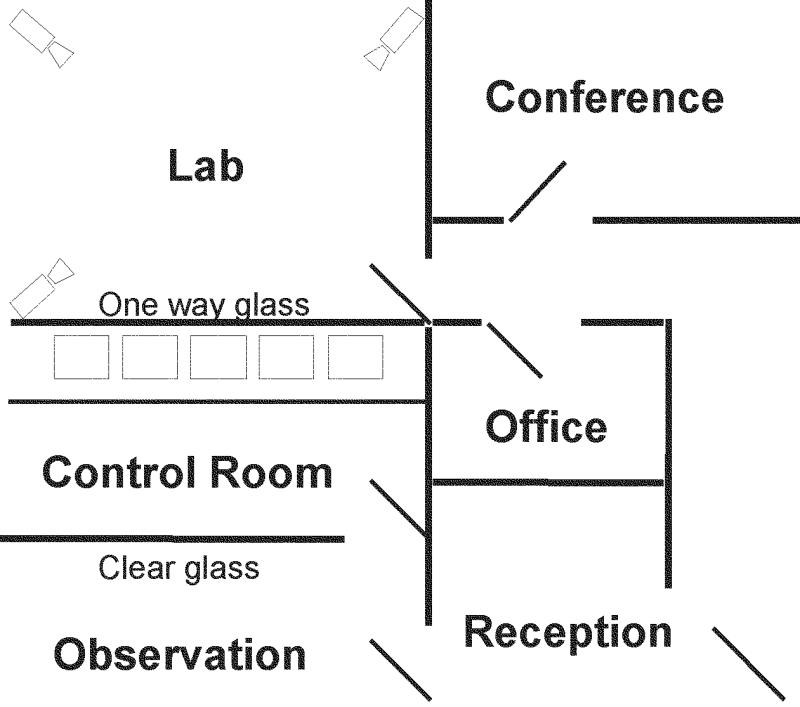

The Usability Laboratory at the Mayo Clinic is a suite of rooms that provide space for study planning, execution, and review. The conference room has white board space for planning and evaluation. The facility utilized for executing the study includes the study lab, a control room and a developer’s observation booth. The study lab includes a desk and chair with a computer and screen, keyboard, and mouse on the desk. There are cameras in each of three corners of the room, and the back wall is a one-way mirror. The user sits in this space and works on the scenarios provided by the study team after a short introduction to the facility and purpose of the study by the study director (who is not part of the development team) (Figure 2). Behind the one-way mirror is a soundproof room with multiple monitors and video recording equipment. The control person directs the videotaping from the available source input (including a video input from the screen). The study director has a microphone, which is used to communicate with the study participant. The development team, if present, sits in a third space separated by a soundproof enclosure, which is located behind the control room. The development team has no contact with the participant but can easily observe the study and gain direct experience with the user’s interaction with the web environment.13

Figure 2.

Typical layout for an evaluation laboratory used for user interface and software evaluation (similar to the Mayo Usability Laboratory). Recording and monitoring equipment is managed from the control room. Cameras and microphones in the lab capture the computer screen as well as the participant’s actions and verbal observations. As noted in the diagram above, each participant sat in front of a computer, at a desk configuration similar to his or her normal work environment, and performed the scenarios outlined in the methods section. Participants in our study used the research web environment to identify information specified in 10 specific scenarios. To avoid bias, developers typically observe from an observation room and do not participate in the usability studies.

This methodology arises from the field of human factors engineering, which applies principles from cognitive science to implementations such as a computer-human interface.14 The interface evaluated in this study is a web-based research environment, which has been developed to support the academic mission of the Department of Internal Medicine at the Mayo Clinic. This study and its approach to critical evaluation of applications, before and after putting them into practice, are integral to the success of our tool set in its support of our academic mission. By critically evaluating the design of our web environment, we move closer to the ideal user-centered design.15

Design Objectives

The web environment was designed to conform to the following philosophy and principles.

Philosophy

To provide resources for members of the department of medicine to facilitate:

Identification of specific applicable extramural and intramural grant offerings

Assistance with the authoring of protocols

Use of previously funded protocols as reference models

Reference for institutional rules (e.g., institutional review board, contracting, human subjects)

Registration of subjects

Publications registry

Registry of trainees looking for projects

Registry of mentors looking for help with projects

Templates for grant writing

Principles

Easy to maintain

Easy to use (verified by usability testing)

The most complete and concise resource for research information for members of the department of medicine

Minimize time to answer a query (number of “clicks”)

Attribution (where does information come from?)

References (given where applicable)

Disclosure (conflicts of interest are disclosed)

Currency (timeliness of information is explicitly represented)

System Description

The environment begins with a graphical navigation bar depicted in Figure 3.16 The focus of the site is to provide clinician-investigators with information regarding funding opportunities, authoring a protocol, the mechanisms for institutional approval of protocols, and ongoing information about protocols. The pages on the site and their content are described below.

Figure 3.

Navigation bar.

Newsletter. We publish a monthly newsletter regarding research and funding opportunities.

Search tools. Extramural and intramural grant search mechanisms are made available.

Application forms. Application forms are available.

National Institutes of Health. Includes links to the NIH home page, ClinicalTrials.gov, and the NIH Guide.

Federal funding sources. Links to other federal funding sources, such as the Department of Defense, the National Science Foundation, and the Health Resource and Services Administration.

Grant alerts. Links to services that provide e-mail alerts that match a registrant’s profile.

Grantsmanship. Includes the institutional guidelines for grant submission.

Activities forum. The Mayo Clinic has an annual activities forum for investigators.

Departmental grants. Links to intramural and specifically intra-departmental funding opportunities.

Links. Miscellaneous links are provided to Mayo internal research resources.

Usability Study Method

Eight physicians (four women and four men) participated in a usability trial of the Department of Medicine’s research web environment. Four physicians were in the 31–40 age range, two in the 41–50 age range, and two in the 51–60 age range. Computer experience ranged from one year to more than ten years. The participants were chosen to include six novice researchers, one nonresearcher, and one senior investigator. The medical specialties of the participants included general medicine, rheumatology, hypertension, gastroenterology, endocrinology, and cardiology. Each participant was given a maximum of five minutes to complete each scenario.

Scenarios for Usability Trial

Using the Department of Medicine’s newsletter, identify the next deadline for a NIH R01 submission. Use the Department of Medicine’s Office for the Advancement of Research (OAR) web environment to find this date.

You are a novice researcher and looking for information about how to move a new protocol toward institutional approval. (If you are an experienced researcher, look to identify web-based information which, in your opinion, junior colleagues would find helpful.)

Find a site where you can download the NIH PHS-398 form.

Think of some information about the authoring or funding of a research protocol, that you would find important, and locate the information using the OAR web page.

Identify a NIH grant funding opportunity for AIDS vaccine research.

Find information to assist you in writing a grant proposal.

Identify whether other colleagues at the Mayo Clinic have an ongoing NIH-funded protocol in your area of research interest.

You are a new investigator. You work with animals and want to make sure that you have submitted the correct information to the appropriate institutional committee along with your new protocol. Find the document that you would need to submit regarding an animal research protocol.

You are a new investigator and decide to propose a study that involves a chart review of medical records. What form must you fill out to get approval?

Check to see whether any current NIH or private foundation grant offerings are available in your area of interest.

After each scenario the participants were asked to respond aloud to a set of five cue card questions. The questions and their answers are given together in the results section.

Statistical Analysis of Usability Study Results

For each cue card question, the dichotomous responses were analyzed by two-way analysis of variance, with the two factors being participant and scenario. The variability between scenarios and participants was assessed using the respective F-tests. Since, for some questions, variability among participants but not scenarios was evident, the mean response was calculated for each participant for each cue card question. Confidence intervals for the overall mean response for each question, as well as tests for whether this response was equal to 50%, were calculated based on the Student t-distribution for the average of the eight participant means.
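The final step described above (averaging the eight participant means and forming a t-based interval) can be sketched as follows. This is a minimal illustration, not the authors' analysis code: the function name `mean_with_ci` is hypothetical, the input is the per-participant "yes" counts for question 1 as listed in Table 1, and the critical value t(0.975, df = 7) ≈ 2.365 is hard-coded to avoid external dependencies. It also assumes that the ± values reported in the results are 95% confidence half-widths.

```python
import math
import statistics

# Two-sided 95% critical value of Student's t with 7 degrees of freedom
# (eight participants). Hard-coded so the sketch needs only the stdlib.
T_CRIT_DF7 = 2.3646


def mean_with_ci(yes_counts, n_scenarios=10, t_crit=T_CRIT_DF7):
    """Return (mean %, 95% CI half-width %) across participant means.

    yes_counts holds one "yes" count per participant; each count is
    converted to that participant's percentage over n_scenarios.
    """
    means = [100.0 * y / n_scenarios for y in yes_counts]
    overall = statistics.mean(means)
    # Standard error of the average of the participant means.
    std_err = statistics.stdev(means) / math.sqrt(len(means))
    return overall, t_crit * std_err


# "Yes" counts for cue card question 1, participants P1..P8 (Table 1).
q1_yes = [9, 10, 10, 10, 9, 9, 6, 8]
q1_mean, q1_half_width = mean_with_ci(q1_yes)
print(f"Q1: {q1_mean:.2f}% +/- {q1_half_width:.2f}%")
```

Under these assumptions the Q1 counts give roughly 88.8% ± 11.3%. The two-way analysis of variance would additionally need the per-scenario responses, which are not tabulated in this article.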

Status Report

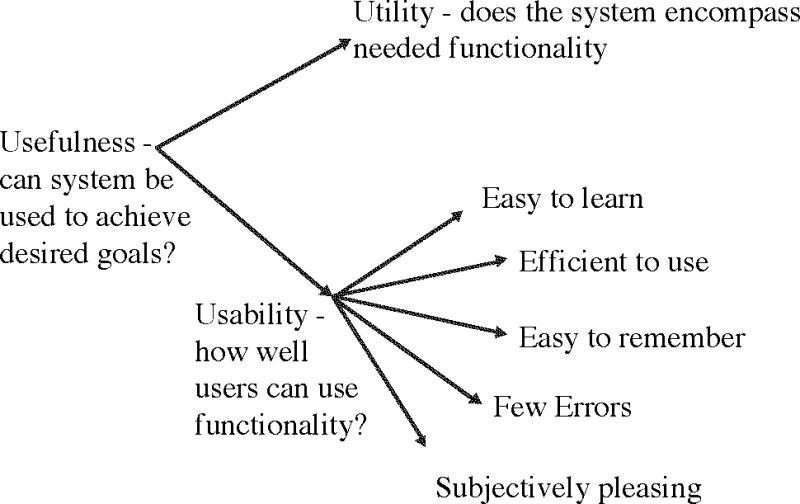

Results of the Usability Study (Table 1 and Figure 4)

Cue Card Questions

Table 1.

Cue Card Question (Q) Data for Each of the Eight Participants (P)

| | P1 | P2 | P3 | P4 | P5 | P6 | P7 | P8 |
|---|---|---|---|---|---|---|---|---|
| Q1 | 9Y 1N | 10Y | 10Y | 10Y | 9Y 1N | 9Y 1N | 6Y 4N | 8Y 2N |
| Q2 | 5L 1E 4S | 3L 1E 6S | 10S | 4L 4E 2S | 4L 2E 4S | 5L 4E 1S | 5L 3E 2S | 3L 7E |
| Q3 | 10Y | 10Y | 10Y | 9Y 1N | 8Y 2N | 9Y 1N | 7Y 3N | 8Y 2N |
| Q4 | 7Y 3N | 9Y 1N | 10Y | 4Y 6N | 10Y | 6Y 4N | 7Y 3N | 6Y 4N |
| Q5 | 9Y 1N | 8Y 2N | 10Y | 4Y 6N | 8Y 2N | 6Y 4N | 7Y 3N | 8Y 2N |

The numbers reflect the participant’s response over the 10 scenarios completed. Y = yes, N = no, S = shorter than expected, E = exactly as long as expected, and L = longer than expected.

Q1: Were you able to adequately find the information that you were asked to identify?

Q2: Was the time needed to perform the task (Shorter than expected, exactly as long as expected, longer than expected)?

Q3: Was the time needed to complete the task short enough to perform this task within the course of your normal workday?

Q4: Was the information, if found, where you expected it to be?

Q5: Were the navigation bars helpful with regard to your ability to complete this task?

Figure 4.

Graphical display of summary data, given in percentages, from Table 1.

These questions are asked of and answered by each participant after each scenario.

Were you able to adequately find the information that you were asked to identify? In 88.8% ± 11.3% of the scenarios participants were able to adequately identify the information for which they were looking. This was significantly better than could randomly be expected (50% effect) (p = 0.006, Student's t-test). For the six novice researchers, the mean was 93.3% ± 8.6%. The inter-reviewer variability trended toward significance (p = 0.084).

Was the time needed to perform the task shorter than expected, exactly as long as expected, longer than expected? In 36.25% of the scenarios the users said that the time to completion was shorter than expected; in 27.5% of the cases it was exactly as long as expected; and in 36.25% it took longer than expected to complete the task. Therefore, in 63.75% ± 14.1% of the scenarios the information was identified in the time expected or faster. For the six novice researchers the mean was 68.3% ± 18.1%. The inter-reviewer variability was insignificant (p = 0.29).

Was the time needed to complete the task short enough to perform this task within the course of your normal workday? The participants said that in 88.8% ± 9.42% of the scenarios it was fast enough to complete the task during the course of their normal workday. This was also significantly better than could be randomly expected (50% effect) (p = 0.002, Student's t-test). For the six novice researchers the mean was 91.7% ± 10.3%. The inter-reviewer variability was insignificant (p = 0.27).

Was the information, if found, where you expected it to be? The information was organized in an intuitive fashion in 83.1% ± 18.3% of the scenarios. For the six novice researchers the mean was 84.5% ± 26.3%. The inter-reviewer variability was insignificant (p = 0.13).

Were the navigation bars helpful with regard to your ability to complete this task? Please elaborate. They were felt to be helpful in 75% ± 15.5% of the scenarios, by all participants in at least four scenarios, and 25% of the participants used the graphical navigation bar as their primary means of browsing. For the novice researchers, the mean was 78.3% ± 21.4%. The inter-reviewer variability trended toward significance (p = 0.058).
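The pooled percentages reported above for the timing question (Q2) can be re-derived from the Table 1 counts. The sketch below is illustrative only: the helper names `tally` and `percentages` are hypothetical, and the cell strings are copied from the Q2 row of Table 1 (L = longer than expected, E = exactly as long as expected, S = shorter than expected).

```python
import re
from collections import Counter

# Q2 cells for participants P1..P8, copied from Table 1.
Q2_CELLS = ["5L 1E 4S", "3L 1E 6S", "10S", "4L 4E 2S",
            "4L 2E 4S", "5L 4E 1S", "5L 3E 2S", "3L 7E"]


def tally(cells):
    """Sum the per-code counts (e.g. '5L 1E 4S') over all cells."""
    totals = Counter()
    for cell in cells:
        # Each token is a count followed by a one-letter response code.
        for count, code in re.findall(r"(\d+)([A-Z])", cell):
            totals[code] += int(count)
    return totals


def percentages(totals):
    """Convert pooled counts to percentages of all responses."""
    n = sum(totals.values())
    return {code: 100.0 * c / n for code, c in totals.items()}


q2_totals = tally(Q2_CELLS)
q2_pct = percentages(q2_totals)
# Share of scenarios completed in the expected time or faster.
on_time_or_faster = q2_pct["S"] + q2_pct["E"]
print(q2_pct, on_time_or_faster)
```

Pooling the 80 responses this way gives 29 "longer", 22 "exactly", and 29 "shorter" answers, which corresponds to the 36.25%, 27.5%, and 36.25% figures and to the combined 63.75%.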

Our summary questions asked at the end of each participant’s time in the lab taught us to build into our design enough redundancy in the placement of web links to anticipate the variation in actual usage. The piggybank icon was not intuitive for the grantsmanship link, and we have changed it to a writing icon. Twenty-five percent of the users used the graphical navigation bar as their primary method of navigation, but all of the participants found it useful. The users suggested creating a form for them to input a basic research budget; such a form is currently being created. Surprisingly, no one had difficulty with disorientation when they left our site for an external website. All users were able to navigate back to our home page without difficulty (even though it was not the browser’s home page). All users felt that the web environment would be helpful to their research careers and specifically would help them to identify funding opportunities more easily.

Discussion

We have implemented this web environment as the Department of Medicine Research Website, with modifications as suggested by participants in our trial. Our intent is to support the academic enterprise of the Department of Medicine by decreasing the barriers to performing research. This support includes faster access to funding information, which is accomplished by presenting the major grant search mechanisms directly on our home page. We also provide information about the method and requirements by which one can move a protocol toward institutional review board (IRB) approval. We provide several online courses on grant writing and provide rapid access to all of the forms needed to apply for a grant. We even provide resources to connect mentors and those needing mentorship. Through these mechanisms we hope to make access to information about research fast enough to be accomplished in the course of a routine clinical day and thus decrease the barriers to an effective research career.

The medical literature has a paucity of data describing usability evaluations. However, most institutions have an Intranet and many have an Internet presence. There exists some literature about usability methods to evaluate electronic medical record systems or their components, and these have invariably led to improvements.12 Therefore, we recommend that academic institutions consider applying the usability methodology and formal usability evaluations where appropriate, to optimally develop their academic web environments.8 This usability trial specifically teaches us to build redundancy into our systems because users have varied habits that affect their interaction with the systems. We further demonstrated the benefit of a graphical navigation bar in addition to a side menu bar. This article has presented an example of a rigorous evaluation of an academic electronic web environment. If used properly, this tool can support the academic enterprise when finding the time to effectively contribute to the academic enterprise is becoming increasingly difficult. Clinicians in this trial felt that they could use this site to answer their research questions in the course of their normal clinical day.

The human factors engineering principles followed in the usability study methodology can assist institutions in employing user-centered design principles when crafting their academic web environment. An emphasis on user-centered design can help to ensure that your institution’s web environment will serve its intended purpose.

References

- 1. Shackel B. People and computers—some recent highlights. Appl Ergonom. 2000;31(6):595–608.

- 2. Cimino JJ, Patel VL, Kushniruk AW. Studying the human-computer-terminology interface. JAMIA. 2001;8(2):163–73.

- 3. Patel VL, Kaufman DR. Medical Informatics and the science of cognition. JAMIA. 1998;5(6):493–502.

- 4. Nielsen J. Usability Engineering. New York, Academic Press, 1993.

- 5. Preece J, Rogers Y, Sharp H, et al. Human-Computer Interaction. New York, Addison-Wesley, 1994.

- 6. Hix D, Hartson HR. Developing user interfaces: ensuring usability through product and process. New York, John Wiley & Sons, 1993.

- 7. Coble JM, Karat J, Orland MJ, Kahn MG. Iterative usability testing: Ensuring a usable clinical workstation. In: Masys DR (ed): Proceedings of the 1997 AMIA Annual Fall Symposium. Philadelphia, Hanley & Belfus, 1997, pp 744–8.

- 8. Kushniruk AW, Patel VL, Cimino JJ. Usability testing in medical informatics: cognitive approaches to evaluation of information systems and user interfaces. Proceedings of the AMIA Annual Fall Symposium. Philadelphia, Hanley & Belfus, 1997, pp 218–22.

- 9. Nielsen J. Estimating the number of subjects needed for a thinking aloud test. Int J Human-Computer Stud. 1994;41:385–97.

- 10. Patel VL, Ramoni MF. Cognitive models of directional inference in expert medical reasoning. In: Feltovich PJ, Ford KM, Hoffman RR (eds): Expertise in Context: Human and Machine. Cambridge, MA, MIT Press, 1997, pp 67–99.

- 11. Weir C, Lincoln MJ, Green J. Usability testing as evaluation: Development of a tool. In: Cimino JJ (ed): Proceedings of the Twentieth Annual Symposium of Computer Applications in Medical Care, 1996, p 870.

- 12. Kushniruk A, Patel V, Cimino JJ, Barrows R. Cognitive evaluation of the user interface and vocabulary of an outpatient information system. In: Cimino JJ (ed): Proceedings of the Twentieth Annual Symposium of Computer Applications in Medical Care, 1996, pp 22–26.

- 13. Elkin PL, Mohr DN, Tuttle MS, et al. Standardized problem list generation, utilizing the Mayo canonical vocabulary embedded within the Unified Medical Language System. In: Masys DR (ed): Proceedings of the 1997 AMIA Annual Fall Symposium. Philadelphia, Hanley & Belfus, 1997, pp 500–4.

- 14. Patel VL, Kushniruk AW. Interface design for health care environments: The role of cognitive science. Proceedings of the AMIA Annual Symposium. Philadelphia, Hanley & Belfus, 1998, pp 29–37.

- 15. Patel VL, Kushniruk AW. Understanding, navigating and communicating knowledge: issues and challenges. Methods Inform Med. 1998;37(4-5):460–70.

- 16. Cimino JJ, Elkin PL, Barnett GO. As we may think: The concept space and medical hypertext. Comput Biomed Res. 1992;25:238–63.