Abstract

Objective

A fundamental problem in the study of human spoken word recognition concerns the structural relations among the sound patterns of words in memory and the effects these relations have on spoken word recognition. In the present investigation, computational and experimental methods were employed to address a number of fundamental issues related to the representation and structural organization of spoken words in the mental lexicon and to lay the groundwork for a model of spoken word recognition.

Design

Similarity neighborhoods were computed for each of the transcriptions in a computerized lexicon of 20,000 words. The variables of interest in the computation of the similarity neighborhoods included 1) the number of words occurring in a neighborhood, 2) the degree of phonetic similarity among the words, and 3) the frequencies of occurrence of the words in the language. The effects of these variables on auditory word recognition were examined in a series of behavioral experiments employing three experimental paradigms: perceptual identification of words in noise, auditory lexical decision, and auditory word naming.

Results

The results of each of these experiments demonstrated that the number and nature of words in a similarity neighborhood affect the speed and accuracy of word recognition. A neighborhood probability rule was developed that adequately predicted identification performance. This rule, based on Luce's (1959) choice rule, combines stimulus word intelligibility, neighborhood confusability, and frequency into a single expression. Based on this rule, a model of auditory word recognition, the neighborhood activation model, was proposed. This model describes the effects of similarity neighborhood structure on the process of discriminating among the acoustic-phonetic representations of words in memory. The results of these experiments have important implications for current conceptions of auditory word recognition in normal and hearing-impaired populations of children and adults.

Since the publication of Oldfield's (1966) seminal article, “Things, Words and the Brain,” a great deal of attention has been devoted to the structural organization of words in the mental lexicon. Most of this research, however, has focused on the structure of higher level aspects of lexical representations, namely the semantic and conceptual organization of lexical items in memory (e.g., Miller & Johnson-Laird, 1976; Smith, 1978). As a consequence, little attention has been directed to the structural organization of the representations of sensory and perceptual information used to gain access to these higher level sources of information. The goal of the present investigation was to explore in detail this structure and its implications for perception of spoken words by normal and hearing-impaired listeners.

In the present set of studies, structure will be defined specifically in terms of similarity relations among the sound patterns of words. Similarity will serve as the primary means by which the organization of acoustic-phonetic representations in memory will be investigated. We assume that similarity relations among the sound patterns of spoken words represent one of the earliest stages at which the structural organization of the lexicon comes into play. The precise aim of the present investigation was to gain a detailed understanding of the lower level relations between stimulus input, activation of phonetic representations, and, subsequently, recognition of spoken words.

The identification of structure with similarity relations among sound patterns of words raises the difficult problem of defining similarity. Similarity, although crucial to the present investigation, is an ill-defined concept in research on speech perception and spoken word recognition, and one that deserves considerably more work in these areas of research (see Mermelstein, Reference Note 7). However, similarity can be approximated by both computational and behavioral predictors of confusion, the approach taken here. Thus, similarity will be defined in terms of a specific computational metric for predicting confusions among phonetic patterns as well as a behavioral, or operational, metric based on the results of a series of perceptual experiments.

Having defined structure as the similarity relations among the sound patterns of words, the question arises: Should the structural organization of representations in memory have consequences for spoken word recognition? Consider a content-addressable memory system in which there is no noise in either the signal or the listener (Kohonen, 1980). In such a system, encoding the acoustic-phonetic information in the stimulus word is tantamount to locating the word in memory. In this case, the structural organization of acoustic-phonetic representations in memory would have no consequences for word identification. Instead, the task of spoken word recognition would be identical to phonetic perception, and one need only study phonetic perception to understand how words are recognized. (By phonetic perception we are referring to the perception of individual segments such as consonants and vowels.)

It is undeniable that phonetic perception is important in spoken word recognition. It is far from certain, however, that the human word recognition system operates as a noiseless content-addressable system or that the acoustic-phonetic signal itself is devoid of noise. To begin with, the signal is very often less than ideal for the purposes of the listener. Words are typically perceived against a background of considerable ambient noise, reverberation, and the voices of other talkers. In addition, coarticulatory effects and segmental reductions and deletions substantially restructure the phonetic information in a myriad of ways (Luce & Pisoni, 1987). Although such effects may indeed be useful to the listener (Church, Reference Note 3; Elman & McClelland, 1986), they also undoubtedly produce considerable ambiguities in the speech signal, making a strictly content-addressable word recognition system based on phonetic encoding unrealistic. In short, both the noise inherent in the signal and the noise against which the signal is perceived make it unlikely that word recognition is accomplished by direct access, based solely on phonetic encoding, to acoustic-phonetic representations in memory.

Not only is the speech signal noisy, so too is the recognition system of the listener. Although the human is clearly well adapted for the perception of spoken language, the system by which language is perceived is by no means a perfect one. In normal listeners, encoding, attentional, and memory demands frequently result in the distortion, degradation, or loss of important acoustic-phonetic information. The data on misperceptions alone attest to the fact that spoken word recognition is less than perfect (Bond & Garnes, 1980). In hearing-impaired listeners, the problems faced by normal listeners are exacerbated by impoverished input representations. Thus, again, a strictly content-addressable system does not suffice as a model of human spoken word recognition.

The alternative to a noiseless content-addressable system is one in which the stimulus input activates a number of similar acoustic-phonetic representations or candidates in memory, among which the system must choose (Marslen-Wilson, 1989; Marslen-Wilson & Welsh, 1978; Treisman, 1978a, b). In this system, a considerable amount of processing activity involves discriminating among the lexical items activated in memory. Indeed, many current models of word recognition subscribe to the view that word recognition is to a great degree a process of discriminating among competing lexical items (Forster, 1979; Luce, Pisoni, & Goldinger, 1990; Marslen-Wilson, 1989; Marslen-Wilson & Welsh, 1978; McClelland & Rumelhart, 1981; McQueen, Cutler, Briscoe, & Norris, 1995; Morton, 1979; Norris, 1994).

Given that one of the primary tasks of the word recognition system involves discrimination among lexical items, the study of the structural organization of words in memory takes on considerable importance, especially if structural relations can be shown to influence the ease or difficulty of lexical discrimination, and, subsequently, word recognition and lexical access. By the same token, under the assumption that word recognition involves discrimination and selection among competing lexical items, variations in the ease or difficulty of discriminating among items in memory can enlighten us as to the structural organization of the sound patterns of words. In short, lexical discrimination and structure are so inextricably tied together that the study of one leads to a further understanding of the other.

Assuming, then, that structural relations among words should influence spoken word recognition via the process of discrimination, it is important to determine whether structural differences among words actually exist. Previous research by Landauer and Streeter (1973) has demonstrated that words vary substantially not only in the number of words to which they are similar, but also in the frequencies of these similar words. These findings suggest that both structural and frequency relations among words may mediate lexical discrimination. Investigation of the behavioral effects of these relations should help us to understand further not only the process of lexical discrimination, but also the organization of the sound patterns of spoken words in memory.

The issue of word frequency takes on an important role in the investigation of the structural organization of the sound patterns of words. Numerous studies over the years (Howes, 1954, 1957; Newbigging, 1961; Savin, 1963; Solomon & Postman, 1952) have demonstrated that the ease with which spoken words are recognized is monotonically related to experienced frequency, as measured by some objective count of words in the language. However, little work has been devoted to detailing the interaction of word frequency and structural relations among words (see, however, Treisman, 1978a, b). If word frequency influences the perceptibility of the stimulus word, it should also influence the degree of activation of similar words in memory. Frequency is important, then, in further specifying the relative competition among the activated items among which the system must discriminate.

The goal of the present investigation was, therefore, to examine the effects of the number and nature of words activated in memory on auditory word recognition. Throughout the ensuing discussion, the term similarity neighborhood will be employed. A similarity neighborhood is defined here as a collection of words that are phonetically similar to a given stimulus word. (The term stimulus word will be used to refer to the word for which a neighborhood is computed.) Similarity neighborhood structure refers to two factors: 1) the number and degree of confusability of words in the neighborhood, and 2) the frequencies of the neighbors. The first factor will be referred to as neighborhood density or neighborhood confusability; the second factor will be called neighborhood frequency. In addition to neighborhood structure, the frequency of the stimulus word itself will be of interest.

Previous Research on the Role of Neighborhood Structure and Frequency in Word Recognition

Neighborhood Structure

Little previous research has been devoted to examining the effects of neighborhood structure, primarily because of the lack of computational tools for determining similarity neighborhoods for a large number of words. One early study of visual word recognition by Havens and Foote (1963) examined the effects of the number of competitors, or neighbors, of words on tachistoscopic identification. The results of this study, although based on a very small number of words and a rather imprecise measure of neighborhood membership, are suggestive. Havens and Foote demonstrated that effects of word frequency could be eliminated if the number of competitors for a given word were controlled. That is, low-frequency words were identified at levels of accuracy equal to those of high-frequency words when the number of competitors was held constant. This result suggests that the effect of frequency on visual word recognition is crucially dependent on the neighborhood in which the word resides in the lexicon.

Similar suggestive evidence was reported in a little-known thesis by Anderson (Reference Note 1). In this study, Anderson examined the effects of the nature and number of alternatives on the intelligibility of spoken words. Although the means for determining alternatives were crude, Anderson demonstrated that the intelligibility of spoken words was affected both by the number of possible confusors and by the frequencies of these confusors. In general, Anderson showed that words with many possible confusors were less intelligible than words with fewer confusors. In addition, he demonstrated that high-frequency confusors tended to depress (i.e., inhibit) identification performance.

Other evidence for the role of neighborhood structure in spoken word recognition was obtained from a reanalysis of a set of data published by Hood and Poole (1980). Hood and Poole examined the intelligibility of words presented in white noise. They found that word frequency failed to correlate consistently with the word intelligibility scores for their data, in apparent contradiction to many previous findings regarding the effects of frequency on noise-masked words (Howes, 1957; Savin, 1963). This finding indicated that factors other than word frequency per se were responsible for the wide range of speech intelligibility scores obtained by Hood and Poole.

To examine the possibility that similarity neighborhood structure was, at least in part, responsible for the differences observed in intelligibility in the Hood and Poole study, we examined their 25 easiest and 25 most difficult words. Similarity neighborhoods were computed (see below) for each of these 50 words. In keeping with Hood and Poole's observation regarding word frequency, no significant difference in frequency was found between the 25 easiest and 25 most difficult words (Pisoni, Nusbaum, Luce, & Slowiaczek, 1985). However, we found that the relationships of easy words to their neighbors differed substantially from the relationships of the difficult words to their neighbors. More specifically, approximately 56% of the words in the neighborhoods of the difficult words were equal to or higher in frequency than the frequencies of the difficult words themselves. For the 25 easy words, however, only approximately 23% of the neighbors of the easy words were of equal or higher frequency. Thus, it appears that the observed differences in intelligibility were due, at least in part, to the frequency composition of the neighborhoods of the easy and difficult words, and were not primarily due to the frequencies of the words themselves.
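The proportion reported above can be sketched in a few lines. The following Python is a minimal illustration, not the original analysis. It assumes that neighborhoods have already been computed, and it pools neighbors across all words in a set, although the text does not say whether the proportions were pooled or averaged per word. The words and frequency counts are hypothetical toy values.

```python
from typing import Dict, List

def pooled_proportion_competitive(
    words: List[str],
    neighborhoods: Dict[str, List[str]],
    freq: Dict[str, int],
) -> float:
    """Share of all neighbors (pooled over `words`) whose frequency is
    equal to or higher than that of the word they neighbor."""
    total = 0
    competitive = 0
    for word in words:
        word_freq = freq[word]
        for neighbor in neighborhoods[word]:
            total += 1
            if freq[neighbor] >= word_freq:
                competitive += 1
    return competitive / total if total else 0.0

# Hypothetical toy data (orthographic labels stand in for transcriptions).
freq = {"cat": 20, "bat": 30, "hat": 50, "cap": 5, "dog": 100, "log": 10, "fog": 2}
neighborhoods = {"cat": ["bat", "hat", "cap"], "dog": ["log", "fog"]}

print(pooled_proportion_competitive(["cat"], neighborhoods, freq))         # 2 of 3 neighbors
print(pooled_proportion_competitive(["cat", "dog"], neighborhoods, freq))  # 2 of 5 neighbors
```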

Taken together, these earlier studies suggest that neighborhood structure may play an important role in word recognition. Furthermore, these studies suggest that the effect of the frequency of the stimulus word itself may be mediated by the structure of its neighborhood of similar-sounding words. The present set of studies was aimed at examining the role of neighborhood structure in spoken word recognition as well as specifying the combined roles of word and neighborhood frequency. Study of the combined effects of neighborhood structure and frequency should, therefore, lead to a better understanding of the effects of both similarity and frequency on word recognition.

Given that so little research has been devoted to these problems, it is hardly surprising that current models of spoken word recognition have had little to say about the structural organization of acoustic-phonetic patterns in the mental lexicon. Only cohort theory (Marslen-Wilson, 1989; Marslen-Wilson & Welsh, 1978) has made any precise claims regarding structural effects, and these have primarily been based on the assumption that words are recognized at the point at which they diverge from all other words in the mental lexicon, a prediction that says little about the structural organization of words in memory. For the most part, therefore, similarity neighborhood structural effects have been ignored in both research and theory on spoken word recognition. As we hope to show, this has been a serious omission in earlier work. Indeed, we will demonstrate here that any adequate theory of word recognition must provide a basic account of the structure of the sound patterns of words in memory as well as how these structural relations affect perceptual processing.

Word Frequency

Although little work has been devoted to the study of neighborhood structure, a voluminous body of data has been published on the effect of word frequency in visual and spoken word recognition (e.g., Glanzer & Bowles, 1976; Glanzer & Ehrenreich, 1979; Gordon, 1983; Howes, 1954, 1957; Howes & Solomon, 1951; Landauer & Freedman, 1968; Morton, 1969; Newbigging, 1961; Rubenstein, Garfield, & Millikan, 1970; Rumelhart & Siple, 1974; Savin, 1963; Scarborough, Cortese, & Scarborough, 1977; Solomon & Postman, 1952; Stanners, Jastrzembski, & Westbrook, 1975; Whaley, 1978). In general, these results have demonstrated numerous processing advantages for high-frequency words. Many theories have also been proposed to account for the advantages associated with increased word frequency. These theories have cited frequency of exposure (Forster, 1976; Morton, 1969), age of acquisition (Carroll & White, 1973a, b), and the time between the present and last encounter with the word (Scarborough, Cortese, & Scarborough, 1977) as the underlying reasons for the processing advantages observed for high-frequency words. Whatever the precise mechanism, it is now widely assumed (Broadbent, 1967; Catlin, 1969; Goldiamond & Hawkins, 1958; Nakatani, Reference Note 9; Newbigging, 1961; Pollack, Rubenstein, & Decker, 1960; Savin, 1963; Solomon & Postman, 1952; Treisman, 1971, 1978a, b) that frequency serves to bias, in some manner, the word recognition system toward choosing high-frequency words over low-frequency words. Although the claim that frequency effects arise from biases is not uncontroversial, many theories of the word frequency effect have espoused such a view (see references cited above). Among these theories are sophisticated guessing theory (Neisser, 1967; Newbigging, 1961; Pollack et al., 1960; Savin, 1963; Solomon & Postman, 1952), criterion-bias theory (Broadbent, 1967), and partial identification theory (Treisman, 1978a, b). Despite considerable debate among the proponents of each of these theories, all assume that word frequency, by some as yet poorly specified processing mechanism, influences the decisions of the word recognition system via some sort of bias (Gordon, 1983; Norris, Reference Note 10).

Although there is some agreement among researchers as to the means by which the processing advantages afforded by high-frequency words arise, there has, as previously mentioned, been little research on the relation between frequency and neighborhood structure, a primary issue in the present set of studies. Only Treisman (1978a, b) has addressed the issue of how neighborhood structure may influence the word frequency effect. The present set of studies is therefore aimed, in part, at examining the role of word frequency in the context of the similarity neighborhoods for words.

Description of the Present Approach

In the present set of studies, similarity neighborhood structure was estimated computationally, using a large, on-line lexicon. This lexicon, based on Webster's Pocket Dictionary (Webster's Seventh Collegiate Dictionary, 1967), contains approximately 20,000 entries. In the version of the lexicon used in the present set of studies, each entry contains: 1) an orthographic representation, 2) a phonetic transcription, 3) a frequency count based on the Kucera and Francis (1967) norms, and 4) a subjective familiarity rating (Nusbaum, Pisoni, & Davis, Reference Note 11).

The phonetic transcriptions, coded in a computer-readable phonetic alphabet, are based on a general American dialect and include syllable boundary and stress markings. Frequency counts, as noted above, were obtained from an on-line version of the Kucera and Francis (1967) corpus. These counts were based on one million words of printed text. Although the study of frequency effects in spoken word recognition would be best served by a count of spoken words, no such count is available that covers the large number of words in Webster's lexicon. (For discussions of frequency and familiarity estimates of printed versus spoken words, see Gaygen & Luce, in press, and Pisoni & Garber, 1990.) Finally, the subjective familiarity ratings for each of the words were obtained in a large-scale study by Nusbaum et al. (Reference Note 11). In this study, groups of college undergraduates were asked to rate the subjective familiarity of each of the words in Webster's lexicon on a seven-point scale, ranging from “don't know the word” (1) to “know the word and know its meaning” (7). The familiarity ratings were obtained from visually presented words.

The general procedure for computing similarity neighborhood structure using the computerized lexicon was as follows: a given phonetic transcription (constituting the stimulus word) was compared with all other transcriptions in the lexicon (which constituted potential neighbors). (The precise methods by which a neighbor was defined varied as a function of the particular experimental paradigm employed. These methods will be described in detail below.) By comparing the phonetic transcription of the stimulus word with all other phonetic transcriptions in the lexicon, it is possible to determine the extent to which a given stimulus word is similar to other words (i.e., neighborhood density or neighborhood confusability). In addition, it is also possible to determine the frequency of the neighbors themselves (i.e., neighborhood frequency), as well as the frequency of the stimulus word. Thus, in each of the studies to be reported, three variables were examined: stimulus word frequency, neighborhood density or neighborhood confusability, and neighborhood frequency.
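As a rough illustration of this comparison procedure, the Python sketch below scans a lexicon for neighbors of a stimulus transcription and reports stimulus frequency, neighborhood density, and mean neighbor frequency. Because the neighbor definition varies by paradigm, the sketch assumes, for illustration only, that a neighbor is any word differing from the stimulus by a single phoneme substitution, addition, or deletion. Summarizing neighborhood frequency as a mean is also an assumption. The phoneme symbols and frequency values in the toy lexicon are hypothetical.

```python
from typing import Dict, Sequence, Tuple

Transcription = Tuple[str, ...]

def is_one_phoneme_neighbor(a: Sequence[str], b: Sequence[str]) -> bool:
    """True if b differs from a by exactly one phoneme substitution,
    addition, or deletion (an assumed neighbor definition)."""
    if len(a) == len(b):
        return sum(x != y for x, y in zip(a, b)) == 1
    if abs(len(a) - len(b)) != 1:
        return False
    shorter, longer = (tuple(a), tuple(b)) if len(a) < len(b) else (tuple(b), tuple(a))
    # Removing one phoneme from the longer form must yield the shorter form.
    return any(longer[:i] + longer[i + 1:] == shorter for i in range(len(longer)))

def neighborhood_stats(stimulus: Transcription, lexicon: Dict[Transcription, float]) -> Dict[str, float]:
    """Compare the stimulus with every other entry and summarize its neighborhood."""
    neighbors = [w for w in lexicon if w != stimulus and is_one_phoneme_neighbor(stimulus, w)]
    density = len(neighbors)
    mean_freq = sum(lexicon[w] for w in neighbors) / density if density else 0.0
    return {
        "stimulus_frequency": lexicon[stimulus],
        "density": density,
        "mean_neighbor_frequency": mean_freq,
    }

# Hypothetical toy lexicon: transcription -> frequency (placeholder values).
lexicon = {
    ("k", "ae", "t"): 10.0,       # cat (stimulus)
    ("b", "ae", "t"): 20.0,       # bat (substitution)
    ("k", "ae", "p"): 30.0,       # cap (substitution)
    ("ae", "t"): 40.0,            # at (deletion)
    ("s", "k", "ae", "t"): 50.0,  # scat (addition)
    ("d", "ao", "g"): 60.0,       # dog (not a neighbor)
}

print(neighborhood_stats(("k", "ae", "t"), lexicon))  # density 4, mean neighbor frequency 35.0
```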

The effects of similarity neighborhood structure on spoken word recognition were first examined in a large-scale experiment involving the identification of words against a background of white noise. Using confusion matrices for all possible initial consonants, vowels, and final consonants, a rule based on Luce's choice rule (1959) was devised to predict the accuracy of identifying words in noise. Basically, this rule—called the neighborhood probability rule (NPR)—takes into account the intelligibility of the stimulus word (estimated from the confusion matrices), the confusability of the neighbors of the stimulus word (also estimated from the confusion matrices), the frequency of the stimulus word, and the frequencies of the neighbors. The performance of this rule was tested against the data for the words presented for identification in noise. Based on the performance of this rule in predicting identification accuracy, the neighborhood activation model (NAM) of spoken word recognition was proposed to account for the perceptual identification of words in noise.
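In the spirit of Luce's biased choice rule, one way to write such a rule is shown below. This is a sketch under stated assumptions rather than the exact expression used here. The symbols are introduced only for illustration: p(S | S) denotes the probability of identifying the stimulus word as itself (its intelligibility), p(N_j | S) denotes the probability of hearing the stimulus as its j-th of n neighbors, and F_S and F_{N_j} are frequency weights, assumed to enter as multiplicative biases on each response alternative.

```latex
p(\mathrm{ID}_S) =
\frac{p(S \mid S)\,F_S}
     {p(S \mid S)\,F_S + \sum_{j=1}^{n} p(N_j \mid S)\,F_{N_j}}
```

Under this form, identification is predicted to be more accurate for intelligible, frequent stimulus words and less accurate for words with many confusable, high-frequency neighbors.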

Two further experiments examined the role of similarity neighborhood structure in spoken word recognition using nondegraded stimuli. In the first of these experiments, words and nonwords varying in frequency and similarity neighborhood structure were presented to listeners in an auditory lexical decision task. In the second experiment, subjects attempted to repeat as quickly as possible auditorily presented words varying in frequency and similarity neighborhood structure. Each of these experiments was performed to answer different questions regarding the role of similarity neighborhood structure in spoken word recognition and to test specific claims of the NAM.

Summary

The present investigation represents an attempt to uncover the precise role of neighborhood structure in spoken word recognition using computational and behavioral techniques. The major hypothesis is that words are recognized in the context of other words in memory. More precisely, it is predicted that the number of words that must be discriminated among in memory will affect the accuracy and time-course of word recognition. It is furthermore hypothesized that the frequencies of the words activated in memory will affect decision processes responsible for choosing among the activated words. Finally, it is proposed that the well-known word frequency effect may be a function of neighborhood frequency and similarity, and not a simple direct function of the number of times the stimulus word has been encountered.

Experiment 1: Evidence from Perceptual Identification

Approximately 900 monosyllabic words were first presented to subjects for identification, and recognition accuracy scores were obtained for each word. Using the computerized lexicon and behavioral measures of similarity based on confusion matrices, NPRs expressing the probability of choosing a word from among its neighbors were computed for each word. The performance of these rules was then evaluated against the obtained identification data.

Method

Stimuli

Nine hundred eighteen words that met the following criteria were selected from Webster's lexicon: 1) All words were three phonemes in length; 2) all were monosyllabic; 3) all were listed in the Brown corpus of frequency counts (Kucera & Francis, 1967); and 4) all had a rated familiarity of 6.0 or above on a seven-point scale. The familiarity ratings were obtained from a previous study by Nusbaum, Pisoni, and Davis (Reference Note 11), in which all words from the Webster's lexicon were presented visually for familiarity ratings. The rating scale ranged from “don't know the word” (1) to “recognize the word but don't know the meaning” (4) to “know the word” (7). The familiarity criterion was established to ensure that the words would be known to the subjects. For the present experiment, only 811 of the 918 words were of interest. These 811 words all had the form consonant-vowel-consonant (CVC). The remaining 107 words, all having forms other than CVC, were included for a separate analysis not directly relevant to the present study (Luce, Reference Note 5).
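The four selection criteria amount to a filter over lexicon entries, followed by a split into the CVC words of interest and the remaining items. The Python below is a hypothetical sketch. The `LexicalEntry` fields, the use of `None` for words absent from the frequency count, and the toy entries are assumptions for illustration, not the structure of the actual lexicon.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class LexicalEntry:
    orthography: str
    phonemes: Tuple[str, ...]    # phonetic transcription, one symbol per segment
    syllables: int
    kf_frequency: Optional[int]  # None if the word is not listed in the frequency count
    familiarity: float           # mean rating on the 1-7 scale
    cv_pattern: str              # consonant/vowel skeleton, e.g. "CVC"

def meets_criteria(e: LexicalEntry) -> bool:
    """Three phonemes, one syllable, listed in the count, familiarity >= 6.0."""
    return (
        len(e.phonemes) == 3
        and e.syllables == 1
        and e.kf_frequency is not None
        and e.familiarity >= 6.0
    )

def select_stimuli(lexicon: List[LexicalEntry]) -> List[LexicalEntry]:
    return [e for e in lexicon if meets_criteria(e)]

def cvc_subset(entries: List[LexicalEntry]) -> List[LexicalEntry]:
    return [e for e in entries if e.cv_pattern == "CVC"]

# Hypothetical toy entries; frequencies and ratings are placeholders.
lexicon = [
    LexicalEntry("cat", ("k", "ae", "t"), 1, 10, 6.9, "CVC"),
    LexicalEntry("ask", ("ae", "s", "k"), 1, 10, 6.8, "VCC"),         # selected, not CVC
    LexicalEntry("vat", ("v", "ae", "t"), 1, 10, 5.5, "CVC"),         # familiarity too low
    LexicalEntry("cast", ("k", "ae", "s", "t"), 1, 10, 6.9, "CVCC"),  # four phonemes
    LexicalEntry("zek", ("z", "eh", "k"), 1, None, 6.5, "CVC"),       # not in the count
]

selected = select_stimuli(lexicon)
print([e.orthography for e in selected])              # ['cat', 'ask']
print([e.orthography for e in cvc_subset(selected)])  # ['cat']
```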

The 918 words were recorded by a male speaker of a Midwestern dialect. The stimuli were recorded in a sound attenuated booth (IAC model 401A) using an Electro-Voice D054 microphone and an Ampex AG-500 tape recorder. The stimuli were then low-pass filtered at 4.8 kHz and digitized via a 12-bit analog-to-digital converter operating at a sampling rate of 10 kHz. Using WAVES, a digital waveform editor (Luce & Carrell, Reference Note 6), each stimulus was spliced from the entire stimulus set and placed in a separate digital file. After editing, all stimulus files were equated for overall RMS amplitude using the program WAVMOD (Bernacki, Reference Note 2). Equating for RMS amplitude ensured that the stimuli were approximately equal in average intensity.
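RMS equating of this kind can be sketched as scaling each waveform by a single gain so that its root-mean-square amplitude matches a common target. The Python below is a generic illustration. It does not reproduce WAVMOD, whose algorithm is not described here, and the choice of target level and the 12-bit clipping check are assumptions.

```python
import math
from typing import List, Sequence

TWELVE_BIT_MIN, TWELVE_BIT_MAX = -2048, 2047  # signed 12-bit sample range

def rms(samples: Sequence[float]) -> float:
    """Root-mean-square amplitude of a waveform."""
    if not samples:
        raise ValueError("empty signal")
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def equate_rms(signals: List[Sequence[float]], target_rms: float) -> List[List[float]]:
    """Scale each waveform by one gain factor so its RMS equals target_rms."""
    scaled = []
    for s in signals:
        level = rms(s)
        if level == 0:
            raise ValueError("cannot rescale a silent signal")
        gain = target_rms / level
        scaled.append([x * gain for x in s])
    return scaled

def exceeds_12_bit(samples: Sequence[float]) -> bool:
    """Flag waveforms that would clip in a 12-bit converter after rescaling."""
    return any(x < TWELVE_BIT_MIN or x > TWELVE_BIT_MAX for x in samples)

# Two toy "stimuli" at different levels, equated to a common RMS of 200.
quiet = [100.0, -100.0, 100.0, -100.0]
loud = [300.0, -300.0]
equated = equate_rms([quiet, loud], target_rms=200.0)
print([round(rms(s), 6) for s in equated])      # [200.0, 200.0]
print(any(exceeds_12_bit(s) for s in equated))  # False
```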

The 918 stimuli were then randomly partitioned into three stimulus set files consisting of 306 words each. From two of the three stimulus sets, two practice lists of 15 words each were selected and placed in separate stimulus set files.
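A seeded random partition into equal-sized sets might look like the following sketch. The seed and the function name are hypothetical, because the text does not describe how the randomization was carried out.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")

def partition_equal(items: Sequence[T], n_sets: int, seed: int) -> List[List[T]]:
    """Shuffle items with a fixed seed and split them into n_sets equal parts."""
    if len(items) % n_sets != 0:
        raise ValueError("items cannot be split into equal-sized sets")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    size = len(shuffled) // n_sets
    return [shuffled[i * size:(i + 1) * size] for i in range(n_sets)]

# 918 stimulus indices -> three sets of 306 (the seed is arbitrary).
stimulus_sets = partition_equal(range(918), n_sets=3, seed=1)
print([len(s) for s in stimulus_sets])  # [306, 306, 306]
```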

Screening

Before conducting the identification experiment proper, each of the 918 words was screened to ensure that no clearly anomalous stimuli were included in the final analysis. Each of the three stimulus set files was presented to a separate group of 10 subjects, resulting in 10 observations per word. For the screening experiment, each word was presented at 75 dB SPL in the absence of masking noise. Except for the manipulation of signal-to-noise (SN) ratio, the procedure for stimulus presentation and data collection was identical to that for the identification-in-noise experiment (see Procedure section below). Only those words identified at a level of 90% correct or above were included in the final analysis. Thirty-six of the original 918 words failed to meet this criterion. Although these words were presented in the identification experiment to maintain equal numbers of stimuli in each of the three stimulus set files, they were eliminated from the final analyses of the data.

Subjects

Ninety subjects participated in partial fulfillment of an introductory psychology course. All subjects were native English speakers, reported no history of speech or hearing disorders, and were able to type.

Design

All stimuli were presented at each of three SN ratios: +15 dB, +5 dB, and –5 dB. SN ratio was manipulated by varying the amplitude of the stimuli against a constant level of white, band-limited, Gaussian noise. The noise level was set at 70 dB SPL, and the noise was low-pass filtered at 4.8 kHz to match the gross spectral range of the stimuli. The stimuli were presented at 85 dB SPL for the +15 dB SN ratio, 75 dB SPL for the +5 dB SN ratio, and 65 dB SPL for the –5 dB SN ratio. Each of the three stimulus set files was presented to three groups of 10 subjects each. Each group of subjects heard one-third of the stimuli at +15 dB, one-third at +5 dB, and one-third at –5 dB; however, the SN ratio varied randomly from trial to trial. For a given stimulus, SN ratio was a between-subjects factor. Altogether, 10 subjects heard each word at each SN ratio.
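The level arithmetic and one possible counterbalancing scheme are sketched below. The stimulus level is simply the noise level plus the SN ratio, which gives 85, 75, and 65 dB SPL for +15, +5, and –5 dB. The rotation used to assign ratios to words is an assumption. It is one way to satisfy the stated constraints that each group hears one-third of its words at each ratio and that the three groups together hear each word at all three ratios, not necessarily the scheme actually used. Trial order would still be randomized separately.

```python
from collections import Counter
from typing import Tuple

NOISE_DB_SPL = 70.0
SN_RATIOS_DB: Tuple[float, ...] = (15.0, 5.0, -5.0)

def stimulus_level(noise_db_spl: float, sn_ratio_db: float) -> float:
    """Signal level (dB SPL) needed for a given SN ratio against fixed noise."""
    return noise_db_spl + sn_ratio_db

def assign_sn_ratio(word_index: int, group: int) -> float:
    """Hypothetical Latin-square rotation: shifting by group means the three
    groups hear any given word at three different SN ratios."""
    return SN_RATIOS_DB[(word_index + group) % len(SN_RATIOS_DB)]

print([stimulus_level(NOISE_DB_SPL, r) for r in SN_RATIOS_DB])  # [85.0, 75.0, 65.0]

# Within one group of a 306-word set, each ratio covers 102 words (one-third).
print(Counter(assign_sn_ratio(w, group=0) for w in range(306)))
```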

Procedure

Stimulus presentation and data collection were controlled on-line in real time by a PDP-11/34 minicomputer. The stimuli were presented via a 12-bit digital-to-analog converter over matched and calibrated TDH-39 headphones. The stimuli and noise were first manually calibrated at 85 dB SPL. Programmable attenuators were then adjusted for each trial to achieve the desired SN ratio.

Subjects were tested in individual booths in a sound-treated room. CRT terminals interfaced to the PDP-11/34 computer were situated in each of the booths. The procedure for an experimental trial was as follows: subjects were first presented with the message “READY FOR NEXT WORD” on their CRT terminals. One sec after the message, white noise at 70 dB SPL was presented over the headphones. One hundred msec after the onset of the noise, a randomly selected stimulus was presented at one of the three attenuation levels. One hundred msec after the offset of the stimulus, the noise was turned off until the beginning of the next trial. After presentation of the stimulus and noise, a prompt appeared on each subject's terminal. Subjects then typed their responses on the terminals and pressed the RETURN key when finished. Subjects were able to see their responses while typing and were able to correct any typing errors before pressing the RETURN key. After all subjects had responded, another trial was initiated. In the event that one or more subjects failed to respond, a new trial was initiated within 30 sec of the offset of the noise. Alphanumeric string responses were collected by the PDP-11/34 and stored in disk files for later analysis.
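The timing of a single trial can be summarized as a schedule of events relative to the warning message. In the Python sketch below, the 500 ms stimulus duration used in the example is hypothetical, and the final entry marks the latest point at which the next trial would begin if a response were missing.

```python
from typing import List, Tuple

READY_TO_NOISE_MS = 1000        # warning message -> noise onset
NOISE_TO_STIMULUS_MS = 100      # noise onset -> stimulus onset
STIMULUS_TO_NOISE_OFF_MS = 100  # stimulus offset -> noise offset
RESPONSE_LIMIT_MS = 30_000      # noise offset -> latest start of next trial

def trial_schedule(stimulus_duration_ms: int) -> List[Tuple[int, str]]:
    """Event times (ms) for one trial, relative to the READY message."""
    noise_on = READY_TO_NOISE_MS
    stim_on = noise_on + NOISE_TO_STIMULUS_MS
    stim_off = stim_on + stimulus_duration_ms
    noise_off = stim_off + STIMULUS_TO_NOISE_OFF_MS
    return [
        (0, "READY FOR NEXT WORD displayed"),
        (noise_on, "noise on (70 dB SPL)"),
        (stim_on, "stimulus on"),
        (stim_off, "stimulus off"),
        (noise_off, "noise off; response prompt shown"),
        (noise_off + RESPONSE_LIMIT_MS, "next trial at the latest if a response is missing"),
    ]

for t, event in trial_schedule(stimulus_duration_ms=500):  # duration is hypothetical
    print(f"{t:>6} ms  {event}")
```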

Subjects were instructed to provide their best guesses for each word they heard. They were also instructed to enter no response (i.e., simply press the RETURN key) only in the event that they were completely unable to identify the word. After the instructions, 15 practice words, each at one of the three SN ratios, were presented. None of the 15 practice words was presented in the main experiment. After the practice list, the instructions were summarized and procedural questions were answered. One of the stimulus set files consisting of 306 words was then presented. Three short breaks were given at equal intervals. An experimental session lasted approximately 1 hr.

Data Analysis

The data files were first combined into a master list consisting of the responses from 10 subjects for each SN ratio (resulting in 30 total responses per word) for each of the 918 words. The 36 words failing to meet the criterion established in the screening experiment were marked and excluded from further analysis, leaving data for 882 words. The 811 CVC words were then selected from the remaining 882 words. (The 71 words that were omitted were not CVC words, e.g., “ask,” “try,” etc.) In total, 24,330 (811 words × 3 SN ratios × 10 observations) subject responses were included in the master data list.

The master list was edited to correct misspellings. Corrections for misspellings were performed by correcting transpositions, deleting single letter insertions, inserting single letter omissions, and correcting single letter substitutions. Single letter substitutions were corrected only when the key of the incorrect letter was within one key of the target letter on the keyboard or when the correct letter would have been produced if the same keystroke had been performed by the opposite hand. Only responses constituting nonwords were corrected in this manner. Approximately 2.5% of the responses were corrected for misspellings. On completion of the editing of the master list, percentages correct (hereafter, “scores”) for each word at each SN ratio were computed. Responses were scored as correct if the phonetic transcription constituted an identical match to the target word or if the response was an inflected form of the target word or a homophone.
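The misspelling criteria above can be sketched as a single-error check between a typed response and a candidate intended word. This is a hypothetical illustration, not the procedure actually used: the approximate QWERTY geometry (`KEY_POS`, with an assumed row stagger), the adjacency threshold, and the function names are our assumptions, and the opposite-hand substitution rule is not modeled. The check that a response is a nonword is left to the caller.

```python
"""Hypothetical sketch of a single-error misspelling check.

Assumptions (not from the source): an approximate lowercase QWERTY layout
with a fixed row stagger; the "opposite hand" substitution rule is omitted.
"""

# Approximate key coordinates as (row, horizontal position); stagger is assumed.
_ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]
_STAGGER = [0.0, 0.5, 1.0]
KEY_POS = {
    ch: (r, i + _STAGGER[r]) for r, row in enumerate(_ROWS) for i, ch in enumerate(row)
}


def keys_adjacent(a: str, b: str) -> bool:
    """True if keys a and b are distinct and within one key of each other."""
    if a == b or a not in KEY_POS or b not in KEY_POS:
        return False
    (ra, xa), (rb, xb) = KEY_POS[a], KEY_POS[b]
    return abs(ra - rb) <= 1 and abs(xa - xb) <= 1.0


def is_single_typo(typed: str, intended: str) -> bool:
    """True if `typed` differs from `intended` by exactly one permitted error."""
    if len(typed) == len(intended):
        diffs = [i for i, (t, w) in enumerate(zip(typed, intended)) if t != w]
        if len(diffs) == 1:
            # Single-letter substitution: allowed only for neighboring keys.
            i = diffs[0]
            return keys_adjacent(typed[i], intended[i])
        if len(diffs) == 2:
            # Transposition of two adjacent letters.
            i, j = diffs
            return j == i + 1 and typed[i] == intended[j] and typed[j] == intended[i]
        return False
    if len(typed) == len(intended) + 1:
        # Single-letter insertion: deleting one typed letter restores the word.
        return any(typed[:i] + typed[i + 1:] == intended for i in range(len(typed)))
    if len(typed) + 1 == len(intended):
        # Single-letter omission: inserting one letter restores the word.
        return any(intended[:i] + intended[i + 1:] == typed for i in range(len(intended)))
    return False


print(is_single_typo("dgo", "dog"), is_single_typo("dop", "dog"))  # True False
```

Under these assumptions "dof" counts as a correctable typo for "dog" (f and g are neighboring keys), whereas "dop" does not.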

Neighborhood Probability Rules (NPRs)

To quantify the effects of similarity neighborhood structure and to devise a single expression that simultaneously takes into account stimulus word intelligibility, stimulus word frequency, neighborhood confusability, and neighborhood frequency, an NPR, based on Luce's general biased choice rule, was devised. However, to devise such a rule for predicting the accuracy of identifying the words in noise, a principled means was required for determining which words in the computerized lexicon constitute neighbors of a given target stimulus word. This is a particularly important problem for a task involving degradation of words by white noise, given that this noise may mask certain speech sounds (e.g., fricatives) more than others (e.g., vowels). This differential masking may produce confusions (i.e., neighbors) that depend, in part, on the spectral properties of the masking noise. Thus, to obtain an independent metric for computing neighborhood confusability, the confusability of individual speech sounds was determined from confusion matrices for all initial consonants, vowels, and final consonants. Details regarding how these confusion matrices were obtained can be found in Luce (Reference Note 5). These confusion matrices were then used to investigate the combined effects of stimulus intelligibility and neighborhood confusability on word identification accuracy.

Having obtained identification scores for the 811 CVC words and confusion matrices for the initial and final consonants and vowels composing these words, the question becomes: How can the segmental intelligibility of the stimulus word, estimated from the confusion matrices, be combined with the segmental confusability of its neighbors, also estimated from the confusion matrices, to provide an index of the identifiability of the stimulus word? One means of accomplishing this goal is to devise an NPR incorporating the probability of identifying the stimulus word and the probabilities of confusing the neighbors with the stimulus word. Stated differently, can an NPR be devised that expresses the probability of identifying the stimulus word given the probabilities of identifying its neighbors? Luce's (1959) choice rule provides a straightforward means of computing such probabilities. Very simply, Luce's choice rule states that the probability of choosing a particular item i is equal to the probability of item i divided by the probability of item i plus the sum of the probabilities of j other items.

The applicability of Luce's general choice rule to the problem at hand is transparent. Specifically, it provides a means for predicting the probability of choosing a stimulus word from among its neighbors and thus provides the formal basis for devising an NPR. Accordingly, an NPR assumes the following general form: the probability of identifying the stimulus word is equal to the probability of the stimulus word divided by the probability of the stimulus word plus the sum of the probabilities of identifying the neighbors. Thus:

$$p(\mathrm{ID}) = \frac{p(S)}{p(S) + \sum_{j} p(N_j)} \tag{1}$$

where p(ID) is the probability of correctly identifying the stimulus word, p(S) is the probability of the stimulus word, and p(Nj) is the probability of the jth neighbor.
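Equation 1 can be expressed directly as a short function. This is a minimal sketch; the name `luce_choice` is hypothetical, and the inputs are assumed to be the already-computed stimulus and neighbor probabilities.

```python
from collections.abc import Sequence


def luce_choice(p_stimulus: float, p_neighbors: Sequence[float]) -> float:
    """Equation 1: p(ID) = p(S) / (p(S) + sum over j of p(N_j))."""
    denominator = p_stimulus + sum(p_neighbors)
    if denominator <= 0:
        raise ValueError("probabilities must not all be zero")
    return p_stimulus / denominator


# A stimulus word with probability 0.5 and two neighbors of 0.25 each.
print(luce_choice(0.5, [0.25, 0.25]))  # 0.5
```

Because neighbor probabilities only enter the denominator, adding neighbors can only lower p(ID); with no neighbors, the rule returns 1.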

Stimulus Word Probabilities (SWPs)

The data from the confusion matrices can be used to compute the probability of the stimulus word and the conditional probabilities of its neighbors. The probability of the stimulus word was computed as follows: for each phoneme in the stimulus word, the conditional probability of that phoneme given itself was obtained from the confusion matrices. Assuming that phonemes are identified independently, these conditional phoneme probabilities can be multiplied. The product yields an SWP based on the probabilities of identifying each of the individual phonemes of the stimulus word. Thus, the SWP can be computed as follows:

$$\mathrm{SWP} = \prod_{i=1}^{n} p(PS_i \mid PS_i) \tag{2}$$

where p(PSi|PSi) is the conditional probability of identifying the ith phoneme of the stimulus word given that phoneme, and n is the number of phonemes in the word. For example, the SWP of the word /dɔg/ (“dog”) is:

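$$\mathrm{SWP}(\text{dɔg}) = p(\text{d} \mid \text{d}) \times p(\text{ɔ} \mid \text{ɔ}) \times p(\text{g} \mid \text{g}) \tag{3}$$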

where, again, the conditional probabilities of the individual phonemes are determined from the confusion matrices for the initial consonants, vowels, and final consonants. Note that the SWP of /dɔg/ can be construed as the conditional probability of the word /dɔg/ given /dɔg/, or p(dɔg|dɔg).
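Equations 2 and 3 amount to a product of diagonal entries of the position-specific confusion matrices. In this sketch, the matrices are nested dictionaries indexed as `matrix[presented][perceived]`, and every probability value is invented for illustration; the actual matrices are described in Luce (Reference Note 5).

```python
from math import prod

# Hypothetical toy confusion matrices, indexed as matrix[presented][perceived].
# The values are invented; each row sums to 1 because entries are conditional.
INITIAL = {"d": {"d": 0.6, "t": 0.2, "b": 0.2}}
VOWEL = {"ɔ": {"ɔ": 0.5, "æ": 0.3, "ʌ": 0.2}}
FINAL = {"g": {"g": 0.8, "d": 0.2}}
CVC_MATRICES = (INITIAL, VOWEL, FINAL)


def stimulus_word_probability(word, matrices=CVC_MATRICES):
    """Equation 2: product over phonemes of p(PS_i | PS_i), assuming independence."""
    return prod(matrix[ph][ph] for ph, matrix in zip(word, matrices))


# Equation 3 for /dɔg/: p(d|d) * p(ɔ|ɔ) * p(g|g) = 0.6 * 0.5 * 0.8
swp_dog = stimulus_word_probability(("d", "ɔ", "g"))
print(round(swp_dog, 3))  # 0.24
```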

Neighbor Word Probabilities (NWPs)

In this manner, conditional probabilities for each neighbor of the stimulus word can also be computed. Thus, the NWP can be computed by finding the conditional probabilities of each of the phonemes of the neighbor given the stimulus word phonemes. Multiplying these probabilities renders an index of the probability of the neighbor, or the NWP. NWP can be computed as follows:

$$\mathrm{NWP} = \prod_{i=1}^{n} p(PN_i \mid PS_i) \tag{4}$$

where PNi is the ith phoneme of the neighbor, PSi is the ith phoneme of the stimulus word, and n is the number of phonemes.

To return to the example of the stimulus word /dɔg/ given above, the NWP for the neighbor /tæg/ can be computed as:

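$$\mathrm{NWP}(\text{tæg}) = p(\text{t} \mid \text{d}) \times p(\text{æ} \mid \text{ɔ}) \times p(\text{g} \mid \text{g}) \tag{5}$$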

which also can be construed to be the probability of identifying /tæg/ given /dɔg/, or p(tæg|dɔg).
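Equations 4 and 5 apply the same product to off-diagonal entries, pairing each neighbor phoneme with the stimulus phoneme in the same position. The sketch below uses the same kind of invented toy matrices as a stand-in for the real ones; the SWP falls out as the special case in which the neighbor is the stimulus word itself, matching the reading of the SWP as p(dɔg|dɔg).

```python
from math import prod

# Hypothetical toy confusion matrices (invented values), matrix[presented][perceived].
INITIAL = {"d": {"d": 0.6, "t": 0.2, "b": 0.2}}
VOWEL = {"ɔ": {"ɔ": 0.5, "æ": 0.3, "ʌ": 0.2}}
FINAL = {"g": {"g": 0.8, "d": 0.2}}
CVC_MATRICES = (INITIAL, VOWEL, FINAL)


def word_probability(perceived, presented, matrices=CVC_MATRICES):
    """Equation 4: product over positions of p(PN_i | PS_i) for position-aligned CVCs."""
    return prod(
        matrix[s][n] for s, n, matrix in zip(presented, perceived, matrices)
    )


stimulus = ("d", "ɔ", "g")
# Equation 5: p(tæg | dɔg) = p(t|d) * p(æ|ɔ) * p(g|g) = 0.2 * 0.3 * 0.8
nwp_tag = word_probability(("t", "æ", "g"), stimulus)
# The SWP is the special case p(dɔg | dɔg).
swp_dog = word_probability(stimulus, stimulus)
print(round(nwp_tag, 3), round(swp_dog, 3))  # 0.048 0.24
```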

Frequency-Weighted Neighborhood Probability Rule (FWNPR)

Given these definitions of the SWP and the NWP, the appropriate substitutions of terms in Equation 1 yield an NPR based on the general choice rule:

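$$p(\mathrm{ID}) = \frac{\left[\prod_{i=1}^{n} p(PS_i \mid PS_i)\right] \times \mathrm{Freq}_S}{\left[\prod_{i=1}^{n} p(PS_i \mid PS_i)\right] \times \mathrm{Freq}_S + \sum_{j=1}^{nn} \left\{\left[\prod_{i=1}^{n} p(PN_{ij} \mid PS_i)\right] \times \mathrm{Freq}_{N_j}\right\}} \tag{6}$$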

where p(PSi|PSi) is the probability of identifying the ith phoneme of the stimulus word, p(PNij|PSi) is the probability of identifying the ith phoneme of the jth neighbor given the ith phoneme of the stimulus word, n is the number of phonemes in the stimulus word and the neighbor, FreqS is the frequency of the stimulus word, FreqNj is the frequency of the jth neighbor, and nn is the number of neighbors. This rule will be referred to as the FWNPR.
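Equation 6 can be sketched by weighting each word probability by its frequency before applying the choice rule. The toy matrices, the neighbor list format, and the frequency values are all hypothetical; raw counts are used as weights, following Equation 6 as written.

```python
from math import prod

# Hypothetical toy confusion matrices (invented values), matrix[presented][perceived].
INITIAL = {"d": {"d": 0.6, "t": 0.2, "b": 0.2}}
VOWEL = {"ɔ": {"ɔ": 0.5, "æ": 0.3, "ʌ": 0.2}}
FINAL = {"g": {"g": 0.8, "d": 0.2}}
CVC_MATRICES = (INITIAL, VOWEL, FINAL)


def word_probability(perceived, presented, matrices=CVC_MATRICES):
    """Product over positions of p(perceived_i | presented_i)."""
    return prod(m[s][n] for s, n, m in zip(presented, perceived, matrices))


def fwnpr(stimulus, freq_s, neighbors, matrices=CVC_MATRICES):
    """Equation 6. `neighbors` is a list of (aligned transcription, frequency) pairs."""
    fwswp = word_probability(stimulus, stimulus, matrices) * freq_s  # numerator
    fwnp = sum(
        word_probability(nb, stimulus, matrices) * freq_nb for nb, freq_nb in neighbors
    )
    return fwswp / (fwswp + fwnp)


dog = ("d", "ɔ", "g")
tag = ("t", "æ", "g")
# Same stimulus and frequency; only the neighbor's (invented) frequency differs.
print(round(fwnpr(dog, 10, [(tag, 20)]), 3))  # 0.714
print(round(fwnpr(dog, 10, [(tag, 5)]), 3))  # 0.909
```

The second call illustrates a property of the rule: with stimulus frequency held fixed, a lower-frequency neighbor raises the predicted probability of identification.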

A number of properties of the NPR are worth mentioning. First, the intelligibility of the phonemes of the stimulus word itself will determine, in part, the role of the neighbors in determining the predicted probability of identification. Stimulus words with high phoneme probabilities (i.e., words with highly intelligible phonemes) will tend to have neighbors with low phoneme probabilities, because all probabilities in the confusion matrices are conditional. Likewise, stimulus words with low phoneme probabilities (i.e., those with less intelligible phonemes) will tend to have neighbors with relatively higher phoneme probabilities. However, the output of the NPR is not a direct function of the SWP. Instead, the output of the rule depends on the existence of lexical items that contain phonemes confusable with the phonemes of the stimulus word. For example, a stimulus word may contain highly confusable phonemes. However, if there are few actual lexical items (i.e., neighbors) that contain phonemes confusable with those of the stimulus word, the sum of the NWPs will be low. The resulting output of the NPR will, therefore, be relatively high. Likewise, if the phonemes of the stimulus word are highly intelligible, but a large number of neighbors contain phonemes confusable with those of the stimulus word, the probability of identification will be reduced. In short, the output of the NPR is contingent on both the intelligibility of the stimulus word and the number of neighbors that contain phonemes confusable with those of the stimulus word. Thus, the intelligibility of the stimulus word, the confusability of the neighbors, and the composition of the lexicon act in concert to determine the predicted probability of identification. In addition, the frequencies of the stimulus word and the neighbors will amplify, to a greater or lesser degree, the word probabilities of the stimulus word and its neighbors.
Note that frequency in this rule is expressed in terms of the relation of the frequency of the target word to the frequencies of its neighbors. Thus, the absolute frequency of the stimulus word may have differential effects on predicted identification performance depending on the frequencies of the word's neighbors. For example, given two stimulus words of equal frequency, the stimulus word with neighbors of lower frequencies will produce a higher predicted probability than the stimulus word with neighbors of higher frequencies. The degree to which the frequencies of the neighbors play a role in determining predicted identification performance will, of course, depend on the NWPs. The frequencies of neighbors with low probabilities of confusion will play less of a role than those of neighbors with high probabilities of confusion. Simply put, this rule predicts that neighborhood structure will affect predicted identification performance through the combined effects of the number and nature of the neighbors, the frequencies of the neighbors, the intelligibility of the stimulus word, and the frequency of the stimulus word.

Predicting Identification Performance Using the NPR

Computation of the Rule

To evaluate the success of the proposed NPR, the data from the perceptual identification study were analyzed in terms of the frequency-weighted rule using the confusion matrix data. Because confusion matrices were obtained only for consonants occurring in initial and final position, only words of the form CVC from the original data set were analyzed. As stated earlier, 811 CVC words were analyzed. In addition, to simplify the computational analysis, only monosyllabic words contained within the Webster's lexicon were used to compute the NPR. Inspection of the error responses revealed that this was not an unreasonable simplification: the large majority of the error responses were, in fact, monosyllabic words, which indicates that subjects typically perceived monosyllabic words. The restriction to monosyllabic neighbors was necessitated by the particular procedure used to determine NWPs (see below).

The general method for computation of the NPR was as follows: the SWP was first determined for a given stimulus word. This probability was computed from the confusion matrices using Equation 2. After computation of the SWP, the transcription of the stimulus word was compared with the transcriptions of all other monosyllabic words in Webster's lexicon with a familiarity rating of 5.5 or higher. This cutoff was imposed on the possible neighbors to ensure that most words in the lexicon unknown to subjects would be excluded from consideration as neighbors. The value of 5.5 was chosen based on inspection of the familiarity ratings of the error responses.
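The neighbor candidate set described above can be sketched as a simple filter. The `LexicalEntry` record, its field names, the inclusive treatment of the 5.5 cutoff, and the sample entries and ratings are all hypothetical illustrations, not the structure of the Webster's lexicon files.

```python
from typing import NamedTuple


class LexicalEntry(NamedTuple):
    """Hypothetical lexicon record; the field names are assumptions."""

    transcription: str
    syllables: int
    familiarity: float  # familiarity rating of the word


def candidate_neighbors(stimulus, lexicon, cutoff=5.5):
    """Other monosyllabic entries at or above the (assumed inclusive) familiarity cutoff."""
    return [
        entry.transcription
        for entry in lexicon
        if entry.transcription != stimulus
        and entry.syllables == 1
        and entry.familiarity >= cutoff
    ]


# Invented entries and ratings for illustration only.
lexicon = [
    LexicalEntry("dɔg", 1, 7.0),
    LexicalEntry("dɪg", 1, 6.8),
    LexicalEntry("dɔgə", 2, 6.0),
    LexicalEntry("dɔk", 1, 3.1),
]
print(candidate_neighbors("dɔg", lexicon))  # ['dɪg']
```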

To compute the NWPs, the vowel of the stimulus word was first aligned with the vowel of the neighbor being analyzed. The conditional probabilities of the vowel and the consonants flanking the vowel for the neighbor were then determined from the appropriate confusion matrices. When the neighbor was a CVC word, the NWP was computed using Equation 4. That is, the conditional probability of the initial consonant of the neighbor given the initial consonant of the stimulus word was determined, as were the conditional probabilities of the vowel and the final consonant.

A problem arises in the treatment of neighbors containing initial consonant clusters, final consonant clusters, or both. In these cases, the transcriptions for the stimulus word and the neighbor were aligned at the vowel. However, when initial and/or final clusters were present in the neighbor word, those consonants not immediately adjacent to the vowel fail to overlap with anything in the stimulus word. For example, if the stimulus word was /kʌt/ and the neighbor was /skɪd/, the /k/ of the stimulus word would align with the /k/ of the neighbor, /ʌ/ would align with /ɪ/, and /t/ would align with /d/. However, the /s/ of the neighbor /skɪd/ would align with no phoneme in the stimulus word. In this event, the probability of the phoneme /s/ was determined by finding the conditional probability of /s/ given the null phoneme from the confusion matrix for initial consonants, or p(s|0), where “0” is the null phoneme. In essence, this is the probability of perceiving /s/ when in fact no phoneme has been presented. The conditional probabilities for the neighbor /skɪd/ would thus be: p(s|0), p(k|k), p(ɪ|ʌ), and p(d|t). The procedure for final consonant clusters was identical, except that the probability of the neighbor phoneme given the null phoneme was computed from the final consonant confusion matrix. This method of dealing with initial and final clusters in the neighbor word makes the simplistic assumption that clusters are phonetically and acoustically equivalent to the sum of their constituent phonemes. However, in the absence of confusion matrices for all possible clusters as well as singletons, this simplification appeared reasonable.

A similar problem arises when the neighbor is shorter than the stimulus word. In these cases, however, the solution is much more straightforward. The stimulus word and neighbor are once again aligned at the vowel. The empty slot in the neighbor is then assumed to contain the null phoneme, in which case the conditional probability for that phoneme can be easily determined from the appropriate confusion matrix. For example, if the stimulus word is /kʌt/ and the neighbor /æt/, the neighbor /æt/ is assumed to have the transcription /0æt/. The conditional probabilities for the neighbor phonemes would then be: p(0|k), p(æ|ʌ), and p(t|t). (See Luce, Reference Note 5, for further discussion regarding the applicability of the rule to items other than CVC words.)
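The vowel-anchored alignment, including the null phoneme for unmatched slots, can be sketched as follows. The vowel inventory is a hypothetical toy subset, `"0"` stands for the null phoneme as in the text, the vowels are written ʌ and ɪ, and each returned triple (position, presented, perceived) is meant to index the matching confusion matrix as p(perceived | presented).

```python
NULL = "0"  # null phoneme, written "0" as in the text
VOWELS = {"ʌ", "ɪ", "æ", "ɔ"}  # hypothetical toy vowel inventory


def split_at_vowel(word):
    """Split a single-vowel transcription into (onset list, vowel, coda list)."""
    for i, ph in enumerate(word):
        if ph in VOWELS:
            return list(word[:i]), word[i], list(word[i + 1:])
    raise ValueError(f"no vowel in {word!r}")


def align_at_vowel(stimulus, neighbor):
    """Return (position, presented, perceived) triples, aligned at the vowel.

    Unmatched slots on either side are filled with the null phoneme, so an extra
    neighbor consonant yields p(C | 0) and a missing one yields p(0 | C).
    """
    s_onset, s_vowel, s_coda = split_at_vowel(stimulus)
    n_onset, n_vowel, n_coda = split_at_vowel(neighbor)

    # Onsets are aligned from the vowel outward, so padding goes on the left.
    width = max(len(s_onset), len(n_onset))
    s_onset = [NULL] * (width - len(s_onset)) + s_onset
    n_onset = [NULL] * (width - len(n_onset)) + n_onset
    triples = [("initial", s, n) for s, n in zip(s_onset, n_onset)]

    triples.append(("vowel", s_vowel, n_vowel))

    # Codas are aligned from the vowel outward, so padding goes on the right.
    width = max(len(s_coda), len(n_coda))
    s_coda = s_coda + [NULL] * (width - len(s_coda))
    n_coda = n_coda + [NULL] * (width - len(n_coda))
    triples.extend(("final", s, n) for s, n in zip(s_coda, n_coda))
    return triples


print(align_at_vowel(("k", "ʌ", "t"), ("s", "k", "ɪ", "d")))
# [('initial', '0', 's'), ('initial', 'k', 'k'), ('vowel', 'ʌ', 'ɪ'), ('final', 't', 'd')]
```

Multiplying the confusion-matrix entries looked up from these triples gives the NWP for neighbors that are not simple CVCs.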

In this manner, NWPs were determined and the NPR was computed for each of the 811 CVC stimulus words. The NPR was computed separately for each SN ratio for each word, using the confusion matrices obtained at that SN ratio to determine the SWPs and NWPs. Thus, three predicted identification scores were computed for each word, one for each of the three SN ratios.

Correlation Analysis

The outputs of the FWNPR were combined with the scores for the 811 CVC words and submitted to correlation analyses. The correlations between identification accuracy and the FWNPR for each of the three SN ratios are shown in Table 1. For comparison, the correlations between identification accuracy and word frequency are also shown. (Note that word frequency is generally considered to be one of the most powerful single predictors of word recognition performance.) All correlations were significant beyond the 0.05 level. The correlation between the FWNPR and identification performance was highest at the intermediate level of stimulus degradation, probably because there was simply more variance to account for at this SN ratio. Furthermore, the FWNPR was superior to word frequency at all but the lowest SN ratio, where the two variables performed virtually identically. (The difference between the correlations at the –5 SN ratio was not significant, p > 0.05.) Given that overall performance was only approximately 14% correct at this SN ratio, there was little variance in accuracy for the FWNPR to account for.
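The text does not name the correlation coefficient; assuming a Pearson product-moment correlation computed separately for each SN ratio, the core computation is short. The function name and the example data below are hypothetical.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Invented example: predicted probabilities vs. proportion correct for five words.
predicted = [0.2, 0.4, 0.5, 0.7, 0.9]
observed = [0.1, 0.5, 0.4, 0.8, 0.9]
print(round(pearson_r(predicted, observed), 2))
```

In the study, this would be run once per SN ratio, pairing each word's FWNPR output with its identification score at that ratio.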

TABLE 1.

Correlations between the frequency-weighted neighborhood probability rule (FWNPR), word frequency, and identification scores for each signal to noise (SN) ratio.

p < 0.05.

The FWNPR produced significant, positive correlations at each of the SN ratios, demonstrating that identification of spoken words in noise is a function of the number and nature of items activated in the similarity neighborhood. A number of factors make the success of the rule impressive. First, the obtained correlations were computed for a large number of stimuli (N = 811), a set that virtually exhausts the entire population of highly familiar CVC words in English. The success of the FWNPR is thus rather remarkable given how many stimuli were examined in this study.

The performance of the rule is even more striking given that no specific information about the idiosyncratic acoustic-phonetic structures of the individual stimuli was included in the rule. Only information concerning the relative intelligibility and confusability of the individual segments was included, and this information was obtained from an independent source of data, namely, the confusion matrices obtained in a separate experiment with different subjects. Thus, the rule was able to achieve the obtained level of performance in the absence of specific measurements of the spectral, durational, and amplitude characteristics of the specific phonetic segments of the individual words.

The FWNPR also incorporates three sources of information that may introduce considerable noise in predicting identification. The first comes from the confusion matrices themselves. The confusion matrices were obtained from a separate pool of subjects and were based on CV and VC syllables. The pattern of confusions obtained from the CV and VC syllables may differ in fundamental ways from the pattern of confusions produced by real words. In particular, response biases frequently observed in confusion matrices of this type may introduce significant sources of noise in predicting confusions among real words (Klatt, 1968; Miller & Nicely, 1955; Wang & Bilger, 1973). Confusion matrices obtained in a task requiring absolute identification of segments in nonsense syllables may, therefore, reflect biases that are inappropriate for predicting confusions among segments in real words. However, this does not mean that the use of confusion matrices to determine SWPs and NWPs was misguided (Moore, Reference Note 8). Indeed, the use of confusion matrices provides the only independent means of assessing stimulus intelligibility and confusability. However, in assessing the performance of the rule, it must be kept in mind that the confusion matrices provide less than perfect estimates of the intelligibility and confusability of real words. In light of these observations, then, the performance of the NPR is even more impressive.

A second possible source of noise in the FWNPR arises from the lexicon used to compute neighborhood structure. Despite the controls placed on the inclusion of words in the neighborhoods of the stimulus words, the lexicon used may either underestimate or overestimate the actual mental lexicons of the subjects themselves. The computer-based lexicon serves as only a very general model of the mental lexicon of the subject, thus introducing a potentially large source of noise in the estimation of neighborhood structure. However, in the absence of well-controlled techniques for estimating the nature and number of lexical items in the mental lexicon of a particular subject, the lexicon used in the present study provides an invaluable tool for determining neighborhood structure (Lewellen, Goldinger, Pisoni, & Greene, 1993). Indeed, before computerized lexicons containing phonetic transcriptions became available, such estimates of neighborhood structure would have been nearly impossible.

A third source of noise in predicting identification may have arisen from the use of the Kucera and Francis frequency counts. These counts are not only somewhat dated, having been obtained in the 1960s, but are also based on printed text. However, given that frequency counts were required for a large number of words, the use of available counts of spoken words was not feasible. Thus, the Kucera and Francis counts, although problematic, provided one of the best estimates of word frequency available for a large number of stimuli.

Once these factors are taken into consideration, the performance of the FWNPR proves to be more than adequate. The results, therefore, clearly demonstrate the role of neighborhood structure in word identification.

Qualitative Analysis

Recall that the FWNPR predicts that identification performance is a function of the intelligibility of the stimulus word, the confusability of its neighbors, and the frequencies of the stimulus word and its neighbors. According to this view, frequency serves to bias the choice of a word from its neighborhood. Note that the effects of frequency are contingent on the nature of the words residing in the similarity neighborhood. As in Treisman's (1978a, b) partial identification theory, frequency effects are assumed in the rule to be relative. For example, high-frequency stimulus words residing in neighborhoods containing high-frequency neighbors are predicted by the rule to be identified at approximately the same level of performance as low-frequency words residing in low-frequency neighborhoods, assuming that stimulus intelligibility and neighborhood confusability are held constant. That is, the frequency of the stimulus word alone will not determine identification performance. Instead, stimulus word frequency must be evaluated in terms of the frequencies of the neighbors of the stimulus word, as well as the confusability of the neighbors. Thus, the rule implies a complex relation between the stimulus word and its neighbors, such that stimulus frequency, neighbor frequency, stimulus intelligibility, and neighborhood confusability all act in combination to determine identification performance.

The rule, therefore, makes a number of important predictions depending on the SWP and the sum of the neighbor probabilities. For simplicity, we will define the sum of the frequency-weighted NWPs, i.e.,

$$\sum_{j=1}^{nn} \left\{\left[\prod_{i=1}^{n} p(PN_{ij} \mid PS_i)\right] \times \mathrm{Freq}_{N_j}\right\}$$

as the overall frequency-weighted neighborhood probability, or FWNP. Inspection of Equation 6 reveals that if the frequency-weighted stimulus word probability (FWSWP) (i.e., SWP × FreqS, the numerator of Equation 6) is held constant, predicted identification will decrease as FWNP (the right-hand term of the denominator of Equation 6) increases. Likewise, if FWNP is held constant, increases in FWSWP will result in corresponding increases in predicted identification. The interesting cases arise, however, when both FWSWP and FWNP are allowed to vary. Consider the four cases in which FWSWP and FWNP can take on either high or low values: 1) FWSWP high-FWNP high, 2) FWSWP high-FWNP low, 3) FWSWP low-FWNP high, and 4) FWSWP low-FWNP low. The predictions of the NPR for these four cases are shown in Table 2.
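Written in terms of its two summary quantities, Equation 6 reduces to FWSWP / (FWSWP + FWNP), which makes the four cases easy to check numerically. The high and low values below are hypothetical; the same pair is used for both quantities.

```python
def predicted_id(fwswp: float, fwnp: float) -> float:
    """Equation 6 expressed through FWSWP (numerator) and FWNP (neighbor sum)."""
    return fwswp / (fwswp + fwnp)


HIGH, LOW = 0.8, 0.2  # hypothetical values, same for both quantities
for swp_label, fwswp in (("high", HIGH), ("low", LOW)):
    for np_label, fwnp in (("high", HIGH), ("low", LOW)):
        print(f"FWSWP {swp_label}, FWNP {np_label}: {predicted_id(fwswp, fwnp):.2f}")
```

This prints 0.50, 0.80, 0.20, and 0.50. The two mixed cells coincide exactly here only because identical high and low values were used for both quantities; in general, the rule guarantees that both fall between the other two cells, not that they are equal.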

TABLE 2.

Predicted identification performance as a function of frequency-weighted stimulus word probability (FWSWP) and frequency-weighted neighborhood probability (FWNP).

| FWNP (rows) / FWSWP (columns) | High | Low |
|---|---|---|
| High | Intermediate | Low |
| Low | High | Intermediate |

As shown in Table 2, the rule predicts the best performance for words with high FWSWPs and low FWNPs. These are high-frequency words that, in a sense, “stand out” in their neighborhoods. The lowest performance is predicted for words with low FWSWPs and high FWNPs. These are low-frequency words that are least distinguishable in their neighborhoods. Interestingly, however, the rule predicts intermediate levels of performance for the remaining two cases. That is, words with high FWSWPs and high FWNPs are predicted to show approximately the same level of identification performance as words with low FWSWPs and low FWNPs. Thus, the rule does not always predict an advantage for high-frequency words over low-frequency words. In addition, the rule predicts that words matched on FWSWP may show different levels of performance depending on the FWNP, or frequency-weighted neighborhood structure. To determine whether the general pattern of predictions outlined in Table 2 holds for the present set of identification data, the following analyses were performed. For the 811 words, median values of the FWSWPs and FWNPs collapsed across SN ratio were determined. These median values were then used to divide the stimulus words into classes having high and low FWSWPs and high and low FWNPs. Altogether, four cells were analyzed (two levels of FWSWP by two levels of FWNP). Mean identification scores, collapsed across SN ratio, were then computed for words falling into each of the four cells. The results for the classification of word scores by FWSWP and FWNP are shown in Table 3. Predicted levels of performance are shown in parentheses.
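The median-split analysis can be sketched as follows. The dictionary field names, the tie rule (values above the median count as "high", values at or below it as "low"), and the toy data are assumptions; the per-word values are assumed to have already been collapsed across SN ratio.

```python
from collections import defaultdict
from statistics import mean, median


def median_split_means(words):
    """Mean score per (FWSWP level, FWNP level) cell.

    `words` is a list of dicts with hypothetical keys "fwswp", "fwnp", and "score".
    Values above the median count as "high"; values at or below it count as "low".
    """
    swp_median = median(w["fwswp"] for w in words)
    np_median = median(w["fwnp"] for w in words)
    cells = defaultdict(list)
    for w in words:
        swp_level = "high" if w["fwswp"] > swp_median else "low"
        np_level = "high" if w["fwnp"] > np_median else "low"
        cells[(swp_level, np_level)].append(w["score"])
    return {cell: mean(scores) for cell, scores in cells.items()}


# Invented toy data, one word per cell.
words = [
    {"fwswp": 4.0, "fwnp": 4.0, "score": 50.0},
    {"fwswp": 1.0, "fwnp": 4.0, "score": 30.0},
    {"fwswp": 4.0, "fwnp": 1.0, "score": 70.0},
    {"fwswp": 1.0, "fwnp": 1.0, "score": 50.0},
]
result = median_split_means(words)
print(result[("high", "low")])  # 70.0
```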

TABLE 3.

Obtained identification performance (percent correct) as a function of frequency-weighted stimulus word probability (FWSWP) and frequency-weighted neighborhood probability (FWNP). Qualitative predictions are in parentheses.

| FWNP (rows) / FWSWP (columns) | High | Low |
|---|---|---|
| High | 50.56 (Intermediate) | 37.76 (Low) |
| Low | 64.03 (High) | 54.73 (Intermediate) |

As shown in this table, the pattern of results predicted by the NPR was clearly present in the identification data. As predicted, words with high FWSWPs and low FWNPs were identified with the highest levels of accuracy; words with low FWSWPs and high FWNPs were identified with the lowest levels of accuracy. The remaining two cases, as predicted, showed intermediate and nearly identical levels of identification performance. Note that words matched on FWSWP were responded to quite differently depending on the FWNP, demonstrating that the effect of stimulus word frequency depends on the neighborhood in which the stimulus word occurs. This is also demonstrated by the cases showing intermediate levels of performance. Although the words in these cells differ substantially in their FWSWPs, they show nearly identical levels of identification performance, owing to the composition of their similarity neighborhoods. In short, the present analysis provides further empirical support for the hypothesis that spoken word recognition is the result of a complex interaction of stimulus word intelligibility, stimulus word frequency, and neighborhood confusability and frequency.

Discussion

Neighborhood Activation Model (NAM)

The NPRs developed above provided the groundwork for the development of a model of spoken word identification that we call the NAM. The basic postulate of the model is that the process of word identification involves discriminating among lexical items in memory that are activated on the basis of stimulus input. This is a fundamental principle in almost every current model of word recognition (e.g., Forster, 1976, 1979; Marslen-Wilson & Welsh, 1978; Paap, Newsome, McDonald, & Schvaneveldt, 1982). Indeed, one of the most important issues in spoken word recognition concerns the processes by which discrimination among lexical items in memory is achieved (Pisoni & Luce, 1987). The present model attempts to specify the factors responsible for the relative ease or difficulty of recognizing words that arise from the processes involved in discriminating among the sound patterns of words. Thus, a second fundamental principle of the model is that discrimination is a function of the number and nature of lexical items activated by the stimulus input. The “nature” of lexical items refers specifically to the acoustic-phonetic similarity among the activated lexical items as well as their frequencies of occurrence. The model is, therefore, concerned with the long-standing issue of word frequency. However, characterizing the effects of word frequency is only one aspect of the present model. More centrally, the model focuses on structural issues concerning the process of lexical discrimination. Word frequency is important in the model only as a factor affecting the structural relationships among lexical items.

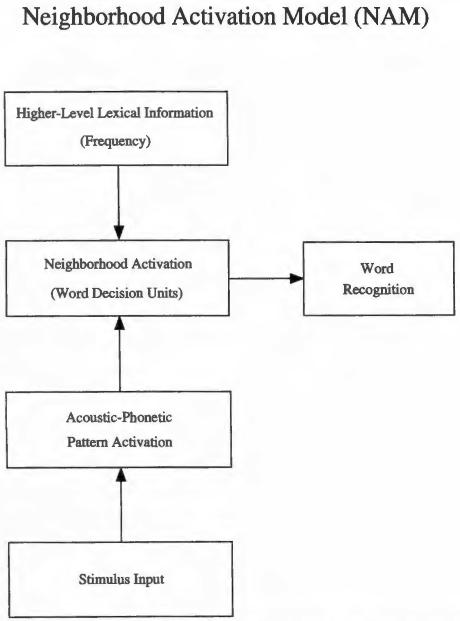

A flow chart of the NAM is shown in Figure 1. On presentation of stimulus input, a set of acoustic-phonetic patterns is activated in memory. It is assumed that all patterns are activated regardless of whether they correspond to real words in the lexicon, an assumption required by the fact that listeners can recognize the acoustic-phonetic form of novel words and nonwords. As in Treisman's (1978a, b) partial identification theory, the acoustic-phonetic patterns are assumed to be activated in a multidimensional acoustic-phonetic space in which the perceptual dimensions correspond to phonetically relevant acoustic differences among the patterns. Specifying the nature of these dimensions poses an important problem for any complete theory of speech perception and spoken word recognition (Luce & Pisoni, 1987). However, the present model is neutral with respect to the dimensions of the space. The only requirement of the model is that the dimensions of the space produce relative activation levels among the acoustic-phonetic patterns that are isomorphic with the dimensions of similarity to which subjects are sensitive.

Figure 1.

Flow chart for the neighborhood activation model.

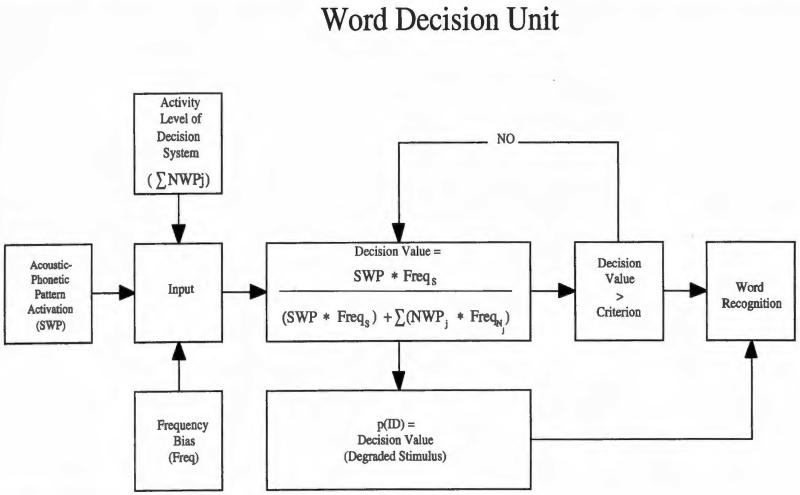

The acoustic-phonetic patterns then activate a system of word decision units tuned to the patterns themselves (Morton, 1969). A diagram of a single decision unit is shown in Figure 2. Only those acoustic-phonetic patterns corresponding to words in memory will activate a word decision unit. Neighborhood activation is assumed to be identical to the activation of the word decision units. Once activated, these decision units monitor the activation levels of the acoustic-phonetic patterns to which they correspond. The activated units also begin monitoring higher level lexical information relevant to the words to which they correspond. Word frequency is included in this higher level lexical information. In addition to monitoring higher level lexical information in long-term memory, the decision units are also assumed to monitor any information in short-term memory that is relevant to making a decision about the identity of a word.

Figure 2.

Diagram of a single word decision unit.

The system of word decision units is a crucial aspect of the NAM. These units serve as the interface between acoustic-phonetic information and higher level lexical information. Acoustic-phonetic information drives the system by activating the word decision units, affording priority to bottom-up information, as in cohort theory (Marslen-Wilson & Welsh, 1978). Higher level lexical information such as frequency is assumed to operate by biasing the decision units. These biases operate by adjusting the activation levels of the acoustic-phonetic patterns represented in the decision units. The biases introduced by higher level lexical information need not be under volitional control nor need they be conscious (Smith, 1980). Instead, these biases are assumed to be a fundamental aspect of word perception that enable optimization of the word recognition process via the employment of a priori probabilities and contextual information.

Each word decision unit is, therefore, responsible for monitoring two sources of information: acoustic-phonetic pattern activation and higher level lexical information. In addition, the decision units are assumed to be interconnected in such a way that each unit can monitor the overall level of activity in the system of units, as well as the activity level of the acoustic-phonetic pattern to which it corresponds (Elman & McClelland, 1986; McClelland & Elman, 1986). As analysis of the stimulus input proceeds, the decision units continuously compute decision values. These values are assumed to be computed via a rule of the type described by the NPR. In the NPR, the stimulus word probability (SWP) corresponds to the activation level of the acoustic-phonetic pattern, and the sum of the neighbor word probabilities (the NWP_j values) corresponds to the overall level of activity in the decision system. Frequency information serves as a bias, as in the FWNPR, by adjusting the activation levels of the acoustic-phonetic patterns represented in the word decision units.
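To make this correspondence concrete, the following is a minimal Python sketch of a decision value computed by a frequency-weighted rule of this type. It assumes the Luce (1959) choice form summarized in the Abstract: the frequency-biased stimulus word probability divided by itself plus the frequency-biased sum of the neighbor word probabilities. The function name `fwnpr` and its arguments are hypothetical, and how frequency is scaled before weighting (raw counts, logarithms, or some other transform) is left to the caller as an assumption rather than taken from this text.

```python
from typing import Sequence

def fwnpr(swp: float, freq_s: float,
          nwps: Sequence[float], freqs_n: Sequence[float]) -> float:
    """Frequency-weighted decision value for one stimulus word (sketch).

    swp:     stimulus word probability (activation of the stimulus pattern)
    freq_s:  frequency weight of the stimulus word (scaling is an assumption)
    nwps:    neighbor word probabilities NWP_j
    freqs_n: frequency weights of the neighbors, aligned with nwps
    """
    if len(nwps) != len(freqs_n):
        raise ValueError("nwps and freqs_n must have the same length")
    # Frequency biases the stimulus pattern's activation.
    target = swp * freq_s
    # Frequency-biased activity of all competing neighbor patterns.
    competition = sum(p * f for p, f in zip(nwps, freqs_n))
    total = target + competition
    if total == 0:
        raise ValueError("no activation in the decision system")
    return target / total
```

For instance, `fwnpr(0.4, 2.0, [0.2, 0.1], [1.0, 2.0])` gives 0.8 / 1.2, or about 0.667. Setting every frequency weight to 1.0 reduces the expression to the unweighted NPR.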

As processing of the stimulus input proceeds, information regarding the match between stimulus input and the acoustic-phonetic pattern accrues. The activation levels of similar patterns drop and the decision values computed by the word decision unit monitoring the pattern of the stimulus steadily increase. Once the output of a given decision unit reaches criterion, all information monitored by that decision unit is made available to working memory. “Word recognition” is accomplished once the word decision unit for a given acoustic-phonetic pattern surpasses criterion (i.e., the acoustic-phonetic pattern is recognized). “Lexical access” occurs when higher level lexical information (i.e., semantic, syntactic, and pragmatic information) is made available to working memory. The term lexical access is actually somewhat misleading in the context of the NAM. Lexical information is monitored by the word decision units once these units are activated. However, this information is used only in the service of choosing among the activated acoustic-phonetic patterns and is, therefore, not available to working memory. Lexical access in the NAM is thus assumed to occur when lexical information is made available for further processing. The word decision units in the model, therefore, serve as gates on the lexical information available to the system (Morton, 1979). In so doing, the units prevent the cognitive system from “over-resonating,” making information available only once a decision is made as to the identity of the stimulus input.
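The time course just described can be illustrated with a toy simulation. This is a sketch under loudly stated assumptions: evidence for the stimulus pattern is assumed to grow by a fixed `gain` per step, competing patterns are assumed to lose a fixed proportion of their activation (`decay`) per step, and the decision value is assumed to take the choice-rule form of the NPR. The function name, parameter names, and values are all hypothetical; they serve only to show a decision unit reaching criterion sooner in a sparse, low-frequency neighborhood than in a dense, high-frequency one.

```python
from typing import Optional, Sequence

def steps_to_criterion(swp0: float, nwps0: Sequence[float],
                       freq_s: float, freqs_n: Sequence[float],
                       criterion: float = 0.9, gain: float = 0.05,
                       decay: float = 0.9, max_steps: int = 100) -> Optional[int]:
    """Return the first step at which the stimulus word's decision value
    reaches criterion, or None if it never does within max_steps.
    gain, decay, and criterion are hypothetical illustration parameters."""
    swp = swp0
    nwps = list(nwps0)
    for step in range(max_steps):
        target = swp * freq_s
        competition = sum(p * f for p, f in zip(nwps, freqs_n))
        decision_value = target / (target + competition)
        if decision_value >= criterion:
            return step  # word recognized: output passes to working memory
        swp = min(1.0, swp + gain)          # match evidence for the stimulus accrues
        nwps = [p * decay for p in nwps]    # activation of similar patterns drops
    return None

sparse = steps_to_criterion(0.3, [0.1], 1.0, [1.0])
dense = steps_to_criterion(0.3, [0.1] * 6, 1.0, [3.0] * 6)
print(sparse, dense)
```

With these illustrative settings the sparse case reaches criterion at step 5, while the dense, high-frequency case takes many more steps.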

The postulation of a system of word decision units is based on the finding that the FWNPR adequately predicted identification performance. Indeed, the system of word decision units is simply a processing instantiation of the NPR. However, by instantiating the NPR in a system of decision units, the NAM makes a number of important claims. First, it is assumed that the word recognition system is, at least initially, completely driven by the stimulus input. Frequency information is thus assumed only to bias the decision units and not to affect the initial encoding of the acoustic-phonetic patterns. That is, frequency information is not treated as an intrinsic part of the activation levels of the acoustic-phonetic patterns, but as a bias that must be interpreted in the context of the frequencies of all other words. If frequency information were intrinsic to the activation levels of the acoustic-phonetic patterns and no decisions were made on the basis of the total activity of the system, low-frequency words would be responded to less accurately than high-frequency words regardless of their neighborhood structures, a prediction clearly contradicted by the data reported above (Luce, Reference Note 5).
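The contrast between intrinsic and relative frequency can be illustrated numerically. The sketch below assumes the choice-rule form of the FWNPR and uses invented values: two words of equal intelligibility, one low in frequency with two weak, low-frequency neighbors, and one high in frequency with ten high-frequency neighbors. If frequency were simply intrinsic to activation, the product of SWP and frequency alone would favor the high-frequency word; under the relative rule the ordering reverses. The function name and all numbers are hypothetical.

```python
def fwnpr(swp, freq_s, nwps, freqs_n):
    """Assumed choice-rule form: biased stimulus / (biased stimulus + biased neighbors)."""
    target = swp * freq_s
    return target / (target + sum(p * f for p, f in zip(nwps, freqs_n)))

# Hypothetical words, both with SWP = 0.5 (equal intelligibility).
low_freq_sparse = fwnpr(0.5, 2.0, [0.1, 0.1], [1.0, 1.0])     # 1.0 / 1.2
high_freq_dense = fwnpr(0.5, 10.0, [0.1] * 10, [20.0] * 10)   # 5.0 / 25.0

# An intrinsic-frequency account would rank the words by SWP * frequency alone.
intrinsic_low, intrinsic_high = 0.5 * 2.0, 0.5 * 10.0

print(f"relative rule:  low={low_freq_sparse:.3f}  high={high_freq_dense:.3f}")
print(f"intrinsic only: low={intrinsic_low:.1f}  high={intrinsic_high:.1f}")
```

Under the relative rule the low-frequency word is the more identifiable of the two (about 0.833 versus 0.200), whereas the intrinsic account ranks it lower (1.0 versus 5.0).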

Having laid out a framework for interpreting neighborhood structural and frequency effects, we will now turn to a discussion of how the NAM accounts for the results of the perceptual identification study. Recall that the NAM, under normal circumstances, recognizes a word once the decision value for a given word exceeds criterion. It is assumed that stimulus degradation affects the word recognition system by impeding complete processing of the stimulus input. That is, only so much information can be obtained from the stimulus input when it is masked by noise. Given imperfect information, then, it is assumed that, in the long run, no decision unit will reach criterion, and a decision will thus be forced based on the available information. The “available information” is the state of the decision system at the point at which processing of the acoustic-phonetic information is completed. In a perceptual identification task, therefore, a response is made on the basis of the values of the decision units at the point at which processing is completed. Thus, the NPR developed above expresses the probability of choosing the stimulus word actually presented. If the stimulus input results in a large number of highly confusable, high-frequency neighbors, the probability of actually recognizing the stimulus word will be low. Likewise, if the stimulus input results in only a few confusable, low-frequency neighbors, the probability of identification will be high.
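The forced-choice account can be sketched as sampling. Assuming, as Luce's (1959) choice rule implies, that a forced response selects each candidate with probability proportional to its frequency-biased activation at the moment processing stops, the long-run proportion of correct identifications should approach the value given by the rule. The function and its arguments are hypothetical; the sampling uses Python's standard `random.Random.choices`.

```python
import random
from typing import Sequence

def simulate_identification(swp: float, freq_s: float,
                            nwps: Sequence[float], freqs_n: Sequence[float],
                            trials: int = 20000, seed: int = 1) -> float:
    """Proportion of simulated trials on which the stimulus word is chosen when a
    response is forced from the final, frequency-biased activations."""
    rng = random.Random(seed)
    candidates = ["stimulus"] + [f"neighbor_{j}" for j in range(len(nwps))]
    # Each candidate's weight is its activation times its frequency bias.
    weights = [swp * freq_s] + [p * f for p, f in zip(nwps, freqs_n)]
    # Luce-style forced choice: sample responses in proportion to the weights.
    responses = rng.choices(candidates, weights=weights, k=trials)
    return responses.count("stimulus") / trials

observed = simulate_identification(0.4, 2.0, [0.2, 0.1], [1.0, 2.0])
expected = (0.4 * 2.0) / (0.4 * 2.0 + 0.2 * 1.0 + 0.1 * 2.0)   # 0.8 / 1.2
print(f"simulated {observed:.3f} vs rule {expected:.3f}")
```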

Note that because the decision units monitor both the activation levels of the acoustic-phonetic patterns to which they correspond and the overall activation of the decision system, the probability of identification will depend neither solely on the intelligibility of the stimulus word nor solely on neighborhood confusability. The model therefore predicts that words of low intelligibility with few confusable neighbors will be identified as accurately as words of high intelligibility with many confusable neighbors. Indeed, as shown above, this prediction was borne out empirically. In short, perceptual identification is a function of the values of the decision units computed at the completion of stimulus processing. Furthermore, the role of stimulus degradation (whether arising from a noisy signal or from a degraded input representation due to an impaired sensory apparatus) is assumed to be one of impeding complete processing of the stimulus input.
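As a worked illustration of this prediction, consider the unweighted NPR with hypothetical values chosen for arithmetic convenience (they are not taken from the stimulus set): one word with SWP = 0.2 whose neighbor word probabilities sum to 0.2, and another with SWP = 0.6 whose neighbor word probabilities sum to 0.6.

$$
p(\mathrm{ID}) = \frac{\mathrm{SWP}}{\mathrm{SWP} + \sum_j \mathrm{NWP}_j},
\qquad
\frac{0.2}{0.2 + 0.2} = 0.5 = \frac{0.6}{0.6 + 0.6}.
$$

Both words receive the same predicted identification probability even though the second is three times as intelligible, because its neighbors are correspondingly more confusable.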

Summary

The NAM provides a conceptual and theoretical framework for instantiating the NPR developed here. To the extent that the NPR predicts identification performance, the model can be deemed an adequate account of the word identification process. Indeed, this rule was shown to make a number of precise predictions about the relative effects of stimulus intelligibility and neighborhood structure that were borne out by the data. In particular, the NPR predicts a complex interrelationship between the stimulus word and its neighbors. In addition, the FWNPR was able to account for the stimulus word frequency effect in terms of the frequency relationships between the stimulus word and its neighbors. The picture that begins to emerge from the present findings is one that emphasizes the complementary roles of discrimination and decision in spoken word recognition. Finally, both the data and the NAM emphasize the degree to which the structure of the mental lexicon influences word identification: precise accounts of the process of spoken word recognition are crucially tied to detailed accounts of the structural relationships among lexical items in memory.

Experiment 2: Evidence from Auditory Lexical Decision

The purpose of the present study was to explore further the effects of neighborhood structure on spoken word recognition. In particular, the lexical decision paradigm was employed to examine these effects. In the lexical decision paradigm, a subject is presented with a real word or a nonsense word, or nonword. The subject's task is to decide as quickly and as accurately as possible whether a given stimulus item is a word or nonword. The lexical decision task has proven quite useful in visual word recognition research in examining the effects of such variables as word frequency (Stanners, Jastrzembski, & Westbrook, 1975; Whaley, 1978; Forster, 1979). In general, it has been shown that high-frequency words tend to be classified as words more quickly than low-frequency words. Indeed, this has been a very robust finding in the literature, although there are numerous, and often conflicting, accounts of frequency effects in lexical decision (Balota & Chumbley, 1984; Glanzer & Ehrenreich, 1979; Gordon, 1983; Paap, McDonald, Schvaneveldt, & Noel, 1986). A spoken analog of the visual lexical decision task thus presents a useful means of examining word frequency effects and the effects of neighborhood structure on spoken word recognition.

The use of the lexical decision task is also attractive for two other reasons. First, the process of spoken word recognition can be investigated in the absence of stimulus degradation. Although the perceptual identification experiment provided useful data regarding the effects of stimulus word frequency and neighborhood structure, a more robust test of these effects hinges on showing that neighborhood structural effects also occur when the stimulus is not degraded. In other words, it is important to demonstrate that the effects of neighborhood structure generalize beyond words that are purposefully made difficult to perceive.

The second advantage of the spoken lexical decision task is the ability to collect reaction time data. The reaction time data may aid in uncovering some of the temporal aspects of the effects of neighborhood structure on spoken word recognition. Furthermore, it is of crucial importance to demonstrate that neighborhood structure affects not only the accuracy of word recognition, but also the time course. Thus, the auditory lexical decision task provides a useful means of corroborating and generalizing the findings from the previous perceptual identification study.

The approach taken in the present study is similar to that in Experiment 1. Similarity neighborhood statistics, computed on the basis of Webster's lexicon, served as independent variables. The statistics of interest were again: 1) the number of words similar to a given stimulus word, or neighborhood density; 2) the mean frequency of the similar words (the neighbors); and 3) the frequency of the stimulus word itself. Neighborhood density and neighborhood frequency were also manipulated for a set of specially constructed nonwords.

The means of computing similarity neighborhood structure in the present study differed somewhat from that in Experiment 1. Because the estimates of the similarity of neighbors to their stimulus words in Experiment 1 were based on confusion matrices for consonants and vowels presented in noise, those measures of similarity are inappropriate for stimuli presented in the clear. Thus, a strictly computational means of estimating similarity neighborhood structure was employed. In this method, a given phonetic transcription (constituting the stimulus word) was again compared with all other transcriptions in the lexicon (which constituted potential neighbors). However, a neighbor was now defined as any transcription that could be converted to the transcription of the stimulus word by a single phoneme substitution, deletion, or addition in any position. For example, the neighbors of the word /kæt/ would include /pæt/, /kIt/, and /kæn/, each of which is derived by a single phoneme substitution. Also included as neighbors would be the words /skæt/ and /æt/, derived by a single phoneme addition or deletion. (Plurals and inflected forms of the stimulus word were not included as neighbors.) The number of such neighbors constitutes the variable of neighborhood density. Neighborhood frequency again refers to the average of the frequencies, based on the Kucera and Francis counts, of the neighbors. Finally, stimulus word frequency refers to the frequency of the stimulus word for which the neighbors were computed (e.g., /kæt/).
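The one-phoneme neighborhood definition translates directly into code. The sketch below represents transcriptions as tuples of phoneme symbols and checks each lexicon entry for a single substitution, deletion, or addition, then computes neighborhood density and mean neighborhood frequency. The toy lexicon and its frequencies are invented for illustration (they are not Kucera and Francis counts), and because the text excludes plurals and inflected forms, those are assumed here to be supplied by hand through the hypothetical `excluded` argument.

```python
from typing import AbstractSet, Dict, List, Tuple

Transcription = Tuple[str, ...]

def one_phoneme_apart(a: Transcription, b: Transcription) -> bool:
    """True if b differs from a by exactly one substitution, deletion, or addition."""
    if len(a) == len(b):
        return sum(x != y for x, y in zip(a, b)) == 1
    if abs(len(a) - len(b)) != 1:
        return False
    shorter, longer = (a, b) if len(a) < len(b) else (b, a)
    # Removing one phoneme from the longer form must yield the shorter form.
    return any(longer[:i] + longer[i + 1:] == shorter for i in range(len(longer)))

def neighborhood_stats(word: Transcription, lexicon: Dict[Transcription, float],
                       excluded: AbstractSet[Transcription] = frozenset()
                       ) -> Tuple[List[Transcription], int, float]:
    """Return (neighbors, neighborhood density, mean neighborhood frequency)."""
    neighbors = [w for w in lexicon
                 if w != word and w not in excluded and one_phoneme_apart(word, w)]
    density = len(neighbors)
    mean_freq = sum(lexicon[w] for w in neighbors) / density if density else 0.0
    return neighbors, density, mean_freq

# Hypothetical toy lexicon: transcription -> invented frequency.
lexicon = {
    ("k", "æ", "t"): 10, ("p", "æ", "t"): 4, ("k", "I", "t"): 6,
    ("k", "æ", "n"): 8, ("s", "k", "æ", "t"): 2, ("æ", "t"): 20,
    ("k", "æ", "t", "s"): 5,   # plural of the stimulus: excluded by hand
    ("d", "ɑ", "g"): 30,       # not a neighbor
}
neighbors, density, mean_freq = neighborhood_stats(
    ("k", "æ", "t"), lexicon, excluded={("k", "æ", "t", "s")})
print(density, mean_freq)
```

Here /kæt/ has five neighbors (/pæt/, /kIt/, /kæn/, /skæt/, /æt/) with a mean frequency of 8.0 in the invented counts.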

This particular algorithm for computing neighborhood membership was based on previous work by Greenberg and Jenkins (1964), Landauer and Streeter (1973), and Sankoff and Kruskal (1983). Clearly, this method of computing neighborhood membership makes certain strong assumptions regarding phonetic similarity. In particular, it assumes that all phonemes are equally similar and that the similarities of phonemes at a given position are equivalent. However, this method of computing similarity neighborhoods provides a computationally simple means of estimating the number and nature of words similar to a given stimulus word presented in the absence of noise.
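Because the criterion is a single edit, the same neighborhoods can be obtained from the standard dynamic-programming edit (Levenshtein) distance: a word is a neighbor exactly when its distance from the stimulus is 1 under unit costs. Writing the costs out also makes the simplifying assumption explicit, since a uniform substitution cost is what treats all phonemes as equally similar. The `sub_cost` and `indel_cost` parameters are hypothetical extension points, not part of the procedure described here.

```python
from typing import Callable, Sequence

def edit_distance(a: Sequence[str], b: Sequence[str],
                  sub_cost: Callable[[str, str], float] = lambda x, y: 0.0 if x == y else 1.0,
                  indel_cost: float = 1.0) -> float:
    """Minimum total cost of substitutions, deletions, and additions turning a into b.
    Uniform costs encode the assumption that all phonemes are equally similar."""
    # At the start of row i, prev[j] is the distance between a[:i-1] and b[:j].
    prev = [j * indel_cost for j in range(len(b) + 1)]
    for i in range(1, len(a) + 1):
        curr = [i * indel_cost] + [0.0] * len(b)
        for j in range(1, len(b) + 1):
            curr[j] = min(prev[j] + indel_cost,                       # delete a[i-1]
                          curr[j - 1] + indel_cost,                   # add b[j-1]
                          prev[j - 1] + sub_cost(a[i - 1], b[j - 1]))  # substitute or match
        prev = curr
    return prev[-1]

cat = ("k", "æ", "t")
print(edit_distance(cat, ("s", "k", "æ", "t")), edit_distance(cat, ("d", "ɑ", "g")))
```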

Because decision units correspond only to words actually occurring in memory, lexical decision in the context of the NAM can be achieved only by accepting or rejecting words. In particular, lexical decisions are assumed to be based, in a majority of cases, on one of two criteria being reached (Coltheart, Davelaar, Jonasson, & Besner, 1976). A word response is executed if a decision unit determines that the activation level of the pattern it is monitoring exceeds the criterion; this is the normal procedure for recognizing a word and depositing its lexical information in working memory. A nonword response, however, is contingent on a lower level criterion: it is executed if the total activation level monitored by the decision units falls below this lower criterion, indicating that no word is consistent with the stimulus input. According to the NAM, therefore, word-nonword classification is based primarily on the activity within the decision system either surpassing the upper criterion or falling below the lower one; these can be referred to as the “word” and “nonword” criterion levels.
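The two-criterion procedure can be sketched as a single classification step applied to the current state of the decision system. The criterion values, the representation of activations as a dictionary keyed by word, and the `None` result for states that reach neither criterion (for example, the minority of cases the two-criterion account does not cover) are all assumptions made for illustration.

```python
from typing import Dict, Optional

def lexical_decision(activations: Dict[str, float],
                     word_criterion: float,
                     nonword_criterion: float) -> Optional[str]:
    """Classify the decision system's state as "word", "nonword", or None.

    activations: hypothetical map from each word decision unit to the
    activation level of the pattern it monitors."""
    # Word response: some unit's monitored activation exceeds the upper criterion.
    if activations and max(activations.values()) > word_criterion:
        return "word"
    # Nonword response: total activity falls below the lower criterion.
    if sum(activations.values()) < nonword_criterion:
        return "nonword"
    return None  # neither criterion reached (yet)

print(lexical_decision({"kæt": 0.95, "pæt": 0.1}, 0.9, 0.2))
print(lexical_decision({"pæt": 0.05, "kIt": 0.05}, 0.9, 0.2))
```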