Abstract

Some children make impulsive choices (i.e., choose a small but immediate reinforcer over a large but delayed reinforcer). Previous research has shown that delay fading, providing an alternative activity during the delay, teaching participants to repeat a rule during the delay, combining delay fading with an alternative activity, and combining delay fading with a countdown timer are effective for increasing self-control (i.e., choosing the large but delayed reinforcer over the small but immediate reinforcer). The purpose of the current study was to compare the effects of various interventions in the absence of delay fading (i.e., providing brief rules, providing a countdown timer during the delay, or providing preferred toys during the delay) on self-control. Results suggested that providing brief rules or a countdown timer during the delay was ineffective for enhancing self-control. However, providing preferred toys during the delay effectively enhanced self-control.

Key words: children, delay, impulsivity, reinforcement, self-control, signals

Some young children exhibit impulsive behavior (i.e., choosing a smaller but immediate reinforcer over a larger but delayed reinforcer), whereas others exhibit self-control (i.e., choosing a larger but delayed reinforcer over a smaller but immediate reinforcer). Establishing or enhancing self-control might be important for several reasons. First, self-control may result in access to more preferred items, activities, and interactions (Hanley, Heal, Tiger, & Ingvarsson, 2007; Mischel, Ebbesen, & Zeiss, 1972; Schweitzer & Sulzer-Azaroff, 1988). For example, exhibiting impulsive behavior by stealing toys may result in only brief access to toys because caregivers are likely to remove the stolen toys and give them back to the child from whom they were stolen. Alternatively, exhibiting self-control and waiting for one's turn may result in extended access to toys because they are less likely to be removed by a caregiver. Second, children often encounter situations throughout the day in which they cannot have immediate access to certain items, activities, or interactions. For example, a child may be required to wait for a teacher's attention until the teacher has finished talking to another person or may be required to wait to go outside until the scheduled recess. These common situations in which reinforcement is delayed may evoke problem behavior (e.g., inappropriate vocalizations). Third, previous research has shown that self-control in early childhood is correlated with academic, social, and coping skills in adolescence (Mischel, Shoda, & Peake, 1988; Mischel, Shoda, & Rodriguez, 1992).

In both basic and applied research, several procedures have been evaluated to enhance self-control. A common method is delay fading, which involves gradually increasing the amount of wait time (Ferster, 1953; Fisher, Thompson, Hagopian, Bowman, & Krug, 2000; Schweitzer & Sulzer-Azaroff, 1988). For example, Schweitzer and Sulzer-Azaroff (1988) initially assessed children's self-control in the absence of intervention and found that children made impulsive choices (i.e., they consistently chose a small but immediate reinforcer over a large but delayed reinforcer). Subsequently, the experimenters implemented delay fading by presenting children with a small and immediate reinforcer and a large and immediate reinforcer and then gradually increasing the delay to the large reinforcer. That is, once a child consistently chose the large reinforcer, the delay to the large reinforcer was increased by 5-s increments. After delay fading, four of the five children tolerated delays that were at least 35 s longer than the delays they tolerated during baseline.

Another method that has been shown to enhance self-control is to provide an alternative activity during the delay (Anderson, 1978; Green & Myerson, 2004; Grosch & Neuringer, 1981; Ito & Oyama, 1996; Mischel et al., 1972). Grosch and Neuringer (1981) demonstrated that adding an alternative response (a disc on the back of the experimental chamber on which the pigeons could peck) resulted in an increase in self-control (i.e., the pigeons began to allocate responding to the larger but delayed reinforcer). In addition, both Mischel et al. (1972) and Anderson (1978) demonstrated that when children were given the option of waiting for a large reinforcer or switching and selecting a small reinforcer that then could be consumed immediately, children waited longer for the large reinforcer if there were toys available during the delay.

In addition to providing toys or other activities, researchers also have demonstrated that teaching children to repeat a rule during delays to reinforcement increases self-control (Hanley et al., 2007; Toner & Smith, 1977). Toner and Smith (1977) found that teaching preschool children to state a rule during delays to reinforcement increased the amount of time they waited for the large but delayed reinforcers. Specifically, children who were instructed to repeat a rule regarding waiting (e.g., “It is good if I wait”) waited longer for delayed reinforcers than did children who were not instructed to repeat a rule or who were instructed to repeat vocalizations related to the delayed reinforcer (e.g., “If I wait, I get the pretzels”). Hanley et al. (2007) extended previous research in two ways. First, they demonstrated that a group of children in a preschool classroom (rather than children in a more controlled setting) could be taught to repeat a rule (i.e., “When I wait, I get what I want”) during delays to reinforcement, which increased self-control. Second, Hanley et al. demonstrated that teaching children to repeat a rule during delays to reinforcement could be effective for decreasing problem behavior during delays imposed by both teachers and peers.

Several studies have shown that delay fading combined with other procedures has been effective for increasing self-control in humans. Delay fading has been combined with (a) providing an alternative activity during the delay (Binder, Dixon, & Ghezzi, 2000; Dixon & Cummings, 2001; Dixon, Rehfeldt, & Randich, 2003), (b) teaching participants to repeat rules during the delay (e.g., “If I wait a little longer, I will get the bigger one”; Binder et al., 2000), (c) providing a brief rule prior to reinforcer choice to increase the likelihood of choice of the larger but delayed reinforcer (e.g., Benedick & Dixon, 2009), or (d) presenting signals during the delay (e.g., Grey, Healy, Leader, & Hayes, 2009; Vollmer, Borrero, Lalli, & Daniel, 1999). For example, Vollmer et al. (1999) demonstrated that delay fading (i.e., a gradual increase from a 10-s to a 10-min delay) and the presentation of a countdown timer during the delay to reinforcement enhanced self-control for a young boy with developmental disabilities who engaged in aggression. Although providing a countdown timer or a brief rule during delays to reinforcement appears to be effective as a means of increasing self-control, it is unclear whether the timer or brief rule would be effective in the absence of delay fading.

Vollmer et al. (1999) suggested that continuous signals such as a countdown timer or other extraneous signals are not likely to be associated with delays to reinforcement in an individual's daily environment. Therefore, continuous signals may result in lower levels of treatment integrity, less acceptability, or both by caregivers. A more typical, and possibly more acceptable, means of signaling delayed reinforcement might be to provide a brief signal during the delay (e.g., saying “wait”; Vollmer et al.). Research has demonstrated that pigeons' responding for delayed reinforcement can be maintained by providing a brief signal during the delay (Schaal & Branch, 1988; Schaal, Schuh, & Branch, 1992); however, little research has evaluated the efficacy of brief signals for enhancing self-control in humans.

The research on self-control suggests that children can be taught to engage in self-control by (a) implementing delay fading; (b) providing an alternative activity during the delay; (c) teaching them to repeat a rule during the delay; or (d) implementing a combination of delay fading with an alternative activity, rule repetition, or a signal during the delay. Currently, it is unclear whether providing a continuous signal during the delay to the large reinforcer in the absence of delay fading is effective. It is also unclear whether a single rule presentation (e.g., “wait”) is effective. The purpose of the current study was to compare the effects of providing a countdown timer, a brief rule, and preferred toys, in the absence of delay fading, on self-control.

METHOD

Participants and Setting

Three typically developing preschool children (Larry, Nancy, and Amanda) who ranged in age from 3 to 5 years participated. All participants had age-appropriate language skills and could follow multistep instructions. Inclusion criteria for participation involved (a) sensitivity to magnitude of reinforcement in which the participant consistently chose a large immediate reinforcer over a small immediate reinforcer (see reinforcer magnitude assessment below) and (b) impulsivity in the absence of any intervention (see delay assessment below) as demonstrated by consistently choosing a small immediate reinforcer over a large delayed (either 2 min or 5 min) reinforcer. Sessions lasted 15 to 30 min and were conducted in a session room that contained a table, chairs, and relevant session materials. Sessions were conducted once or twice per day, 3 to 5 days per week and were separated by at least 30 min.

Preference Assessments

Prior to the first session, a paired-stimulus preference assessment (Fisher et al., 1992) was conducted to identify highly preferred edible items for each participant. The items were ranked based on the percentage of trials in which each was chosen. Prior to each session, the participants chose one edible item from the three most highly preferred to be used during that session. Prior to the beginning of toy sessions, the participants were taken to a toy room and allowed to choose four toys.

Response Measurement and Interobserver Agreement

Dependent variables were large reinforcer choice, small reinforcer choice, no reinforcer choice, and toy reinforcer choice. All dependent variables were scored using a frequency measure. Large reinforcer choice was defined as the participant choosing (touching) the plate that contained four pieces of a preferred food. Small reinforcer choice was defined as the participant touching the plate that contained one piece of a preferred food. No reinforcer choice was defined as the participant touching the plate that contained no food. Toy reinforcer choice was defined as the participant touching the toys.

Data also were collected on independent variables and additional experimenter behavior. Experimenter rules were defined as the experimenter saying, “When you wait, you get four pieces,” and were scored as a frequency measure. Timer delivery was defined as the experimenter starting the countdown timer and placing it in front of the participant and was scored as a frequency measure. Child rules were defined as the participant saying, “When I wait, I get four pieces,” and were scored as a frequency measure. Toy play was defined as the participant engaging with the preferred items or activities that were available following large reinforcer choices and was scored using partial-interval recording with 10-s intervals. Across all participants, the mean percentage of intervals with toy engagement was 97% (range, 91% to 100%). Stimulus delivery was defined as the experimenter providing the participant with an empty plate (no reinforcer delivery), a plate with one edible item (small reinforcer delivery), a plate with four edible items (large reinforcer delivery), or the toys (toy delivery).
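
As an illustration of how partial-interval recording with 10-s intervals yields a percentage of intervals with toy engagement, the following is a minimal sketch. The function name, the representation of engagement as `(start, end)` episodes in seconds, and the example values are assumptions made for illustration; they are not the observers' actual data-collection software or data.

```python
import math


def partial_interval_percentage(episodes, observation_s, interval_s=10):
    """Percentage of intervals in which any engagement occurred.

    episodes: list of (start_s, end_s) engagement episodes (hypothetical format).
    observation_s: total observation time in seconds (e.g., a 300-s delay).
    interval_s: interval length in seconds (10 s in the study).
    """
    if observation_s <= 0 or interval_s <= 0:
        raise ValueError("Durations must be positive.")
    n_intervals = math.ceil(observation_s / interval_s)
    scored = 0
    for k in range(n_intervals):
        start = k * interval_s
        end = min(start + interval_s, observation_s)
        # Partial-interval rule: score the interval if engagement
        # occurred during any part of it.
        if any(ep_start < end and ep_end > start for ep_start, ep_end in episodes):
            scored += 1
    return 100.0 * scored / n_intervals


# Hypothetical 5-min delay with one brief pause in toy play.
example = partial_interval_percentage([(0, 95), (112, 300)], observation_s=300)
print(round(example, 1))
```

In this hypothetical record, only the interval from 100 s to 110 s contains no engagement, so 29 of 30 intervals are scored.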

Trained observers recorded data on handheld computers. Interobserver agreement was assessed by having a second observer simultaneously but independently record data during at least 30% of sessions across participants. Interobserver agreement was calculated on a trial-by-trial basis by comparing what the two observers scored on each trial, adding the number of trials with agreement, dividing by the total number of trials, and converting the quotient to a percentage. Mean agreement scores for the dependent variable for Larry, Nancy, and Amanda were 96% (range, 80% to 100%), 98% (range, 80% to 100%), and 96% (range, 80% to 100%), respectively. Mean agreement scores for other variables (i.e., independent variables and stimulus deliveries) for Larry, Nancy, and Amanda were 97% (range, 40% to 100%), 98% (range, 80% to 100%), and 97% (range, 80% to 100%). The lower range for Larry was due to one session in which there were three trials with a disagreement.
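
The trial-by-trial agreement calculation described above can be sketched as follows. This is an illustrative sketch only; the function name and the example records are hypothetical and are not taken from the study's data.

```python
def trial_by_trial_agreement(primary, secondary):
    """Percentage of trials on which two observers scored the same event."""
    if len(primary) != len(secondary):
        raise ValueError("Both observers must score the same number of trials.")
    if not primary:
        raise ValueError("At least one trial is required.")
    # Count trials with agreement, divide by total trials, convert to a percentage.
    agreements = sum(1 for a, b in zip(primary, secondary) if a == b)
    return 100.0 * agreements / len(primary)


# Hypothetical five-trial session with disagreements on three trials.
observer_1 = ["large", "small", "small", "large", "small"]
observer_2 = ["large", "large", "large", "small", "small"]
ioa = trial_by_trial_agreement(observer_1, observer_2)
print(ioa)  # 40.0
```

Because each session consisted of five trials, three disagreements in one session leave two agreements out of five, or 40%, which corresponds to the lower bound of the range reported for Larry.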

Procedure

During all assessments, a concurrent-operants arrangement was used to evaluate the number of choices for each of three stimuli. Participants first completed a reinforcer magnitude assessment to determine whether their behavior was sensitive to magnitude of reinforcement (i.e., whether they would select four food items over one food item when both were available immediately). Second, participants completed a baseline delay assessment to determine whether they would engage in impulsive behavior (i.e., whether they would select one item immediately over four items after a delay). In addition, the effects of reinforcer presence and absence were evaluated because previous research has demonstrated that organisms may wait longer when reinforcers are absent compared to present during delays to reinforcement (Grosch & Neuringer, 1981; Mischel & Ebbesen, 1970). Third, participants completed an assessment designed to determine whether an experimenter rule during delays to reinforcement or providing a countdown timer during delays to reinforcement would increase self-control. Fourth, participants completed an assessment designed to determine whether a self-stated rule during delays to reinforcement or providing toys during delays to reinforcement would increase self-control. Fifth, participants completed an assessment designed to determine relative preference between the toys provided in the previous assessment and the food items. A multielement design was used to compare the effects of the independent variables on increasing self-control. In addition, for one participant (Larry), a reversal design was used. The order in which the participants experienced the independent variable comparisons was counterbalanced.

General Procedure

For all sessions, the experimenter and participant sat across a table from each other. All sessions consisted of five trials. Each trial began with the experimenter presenting three stimuli. During the reinforcer magnitude assessment, baseline delay assessment, experimenter rule and timer assessment, and child rule and toys assessment, the experimenter placed three plates on the table in front of the participant. One plate contained one edible item (small reinforcer), a second plate contained four edible items (large reinforcer), and a third plate contained no edible items (no reinforcer). During the edible items versus toys assessment, the experimenter placed two plates and preferred toys in front of the participant. One plate contained four edible items; the second plate contained no edible items. Prior to all sessions, the experimenter told the participant the contingencies in place for that session (e.g., during the reinforcer magnitude assessment the experimenter said, “If you pick the empty plate you will not get any [food item]; if you pick the plate with one piece, you will get one piece of [food item] right away; and if you pick the plate with four pieces, you will get four pieces of [food item] right away”). In addition, the participant was given presession exposure to the contingencies for choosing each stimulus. That is, the participant was prompted to choose (by touching a plate or the toys) each of the three stimuli, and the contingencies associated with each stimulus were implemented after the choice (e.g., no food, one piece, or four pieces).

At the start of each trial, the experimenter presented the three stimuli (i.e., three plates in all conditions except for the edibles versus toys condition in which two plates and the toys were presented) and provided the prompt “Pick the one you want.” After the participant made his or her choice (by touching the plate or toys), the consequence associated with the participant's choice was implemented (e.g., if the participant selected the plate with one edible item, he or she was immediately given that plate and was allowed to consume the item). All attempts to access the stimuli before the experimenter provided them were blocked, and as little attention as possible was provided. Once the participant consumed the food, was given brief (i.e., 1 to 3 s) access to the empty plate, or played with the toys, either a new trial began or an intertrial interval (ITI; see below) was implemented prior to the next trial. The placement of the stimuli (i.e., left, center, or right) was determined pseudorandomly (i.e., no stimulus was placed in the same position for more than two consecutive trials).
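
One way to generate a placement order that satisfies the constraint above (no stimulus in the same position for more than two consecutive trials) is sketched below. The rejection-sampling approach, the function name, and the stimulus labels are assumptions for illustration; the source does not describe how the pseudorandom order was actually produced.

```python
import random

POSITIONS = ("left", "center", "right")


def placement_sequence(stimuli, n_trials=5, max_repeats=2, rng=None):
    """Return one layout per trial; layout[i] is the stimulus at POSITIONS[i]."""
    if len(stimuli) < 2:
        raise ValueError("At least two stimuli are needed to vary placement.")
    rng = rng or random.Random()
    sequence = []
    while len(sequence) < n_trials:
        layout = list(stimuli)
        rng.shuffle(layout)
        layout = tuple(layout)
        recent = sequence[-max_repeats:]
        # Reject the layout if any position would hold the same stimulus
        # for more than max_repeats consecutive trials.
        violates = len(recent) == max_repeats and any(
            all(prev[i] == layout[i] for prev in recent)
            for i in range(len(layout))
        )
        if not violates:
            sequence.append(layout)
    return sequence


# Hypothetical five-trial session with the three plates.
session = placement_sequence(("small", "large", "none"), rng=random.Random(1))
for trial, layout in enumerate(session, start=1):
    print(trial, dict(zip(POSITIONS, layout)))
```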

Reinforcer Magnitude Assessment

During these sessions, one plate contained one edible item (small reinforcer), a second plate contained four edible items (large reinforcer), and a third plate contained no edible items (no reinforcer). Choosing the small, large, or no reinforcer plate resulted in immediate access to that plate.

Baseline Delay Assessment

During these sessions, one plate contained one edible item (small reinforcer), a second plate contained four edible items (large reinforcer), and a third plate contained no edible items (no reinforcer). Choosing the plate that contained the small reinforcer (one edible item) or the empty plate (no items) resulted in immediate delivery of the chosen plate. Choosing the plate that contained the large reinforcer resulted in a delay to delivery of the plate. We assessed responding under both 2-min and 5-min delays. Because there was a delay associated with one of the stimuli, an ITI (i.e., a period of time between the start of one trial and the beginning of the next trial) was utilized to hold trial duration constant and to ensure that the participant could not complete a session earlier by choosing the small immediate reinforcer. The ITI across all sessions (regardless of participant choice) was the delay period for that session plus 1 min. For example, if the delay for choosing the large reinforcer was 2 min, then when a participant chose the small- or no-reinforcer plate, the plate was delivered, and 3 min (2 min + 1 min) elapsed before the next trial. However, if the participant chose the large-reinforcer plate, the delay (2 min) elapsed, the plate was delivered, and then 1 additional minute elapsed before the next trial. We chose to calculate the ITI by adding 1 min to the delay because the participants were able to consume the large reinforcer in 1 min or less.
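
The ITI arithmetic described above can be sketched as follows. The function name and the choice labels are hypothetical; the 1-min addition reflects the consumption time stated in the text.

```python
def trial_timeline(choice, delay_s, consumption_s=60):
    """Return (wait before delivery, wait after delivery) in seconds for one trial.

    The ITI equals the session's delay plus 1 min regardless of choice,
    so every trial lasts the same amount of time.
    """
    iti_s = delay_s + consumption_s
    if choice == "large":
        wait_before = delay_s   # delayed delivery of the large reinforcer
    elif choice in ("small", "none"):
        wait_before = 0         # immediate delivery of the chosen plate
    else:
        raise ValueError(f"Unknown choice: {choice!r}")
    return wait_before, iti_s - wait_before


# 2-min delay sessions: each trial totals 180 s whichever plate is chosen.
for choice in ("large", "small", "none"):
    before, after = trial_timeline(choice, delay_s=120)
    print(choice, before, after, before + after)
```

Under this arrangement, five trials take 15 min in 2-min delay sessions and 30 min in 5-min delay sessions, which matches the session durations reported under Participants and Setting.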

Food present

During food-present sessions, the large reinforcer choice resulted in the experimenter removing the small-reinforcer and no-reinforcer plates while the large-reinforcer plate remained in view. After a delay of 2 min, the experimenter delivered the plate that contained the four edible items. If a participant consistently chose the plate with four edible items when the delay was 2 min, the delay was increased to 5 min.

Food absent

Sessions were similar to food-present sessions; however, following a large reinforcer choice, the experimenter removed all plates. After the delay, the experimenter delivered the plate that contained the four edible items.

Experimenter Rule and Timer

During these sessions, a poster board (whose color was correlated with session type) was placed on the table to enhance discrimination of the different session types. A white board was present during baseline sessions, a blue board was present during experimenter-rule sessions, and a yellow board was present during timer sessions. Because participants made similar choices across food-present and food-absent sessions during the baseline delay assessment, we arbitrarily chose to have food present during the delay in this and all subsequent assessments.

Baseline

Sessions were identical to food-present sessions.

Experimenter rule

Sessions were similar to baseline; however, depending on the participant's choice, the experimenter provided a rule. Choosing no reinforcer resulted in the experimenter saying, “You get none,” while delivering the empty plate. Choosing the small reinforcer resulted in the experimenter saying, “You get one piece now,” while delivering the plate that contained one edible item. Choosing the large reinforcer resulted in the experimenter saying, “When you wait, you get four pieces,” and after the delay, the experimenter delivered the plate that contained four edible items.

Timer

Sessions were similar to experimenter-rule sessions except that choosing the large reinforcer resulted in the experimenter saying, “When you wait, you get four pieces,” and a countdown timer was started and placed in front of the participant during the delay. To promote discrimination between experimenter-rule and timer sessions, the timer was placed next to the large reinforcer option during choice trials.

Child Rule and Toys

During these sessions, a poster board (whose color was correlated with session type) was placed on the table to enhance discrimination of the different session types. A white board was present during baseline sessions, a blue board was present during child-rule sessions, and a yellow board was present during toy sessions.

Baseline

Sessions were identical to food-present sessions.

Child rule

Sessions were similar to experimenter-rule sessions except that choosing the large reinforcer resulted in the participant saying, “When I wait, I get four pieces,” once; after the delay, the experimenter delivered the plate that contained four edible items. The experimenter taught the participant to state the rule prior to the start of the first child-rule session by prompting the participant to state the rule until he or she was able to do it independently. That is, the experimenter provided an echoic prompt to state the rule using most-to-least prompting until the participant was able to state the rule in the absence of any experimenter prompts. There was no predetermined number of times the participant had to repeat the rule to be considered independent, and no data were collected on the number of trials required for the participant to state the rule in the absence of experimenter prompts. Before all subsequent sessions, the participant again was prompted to state the rule until he or she was able to do it independently. If the participant did not state the rule independently during presession exposure, he or she was prompted to practice the rule until he or she was able to say it in the absence of any experimenter prompts. On all trials, if the participant did not state the rule after a large reinforcer choice, the experimenter provided the least intrusive prompt necessary to evoke the brief response (e.g., glancing at the participant expectantly or saying “wh–?”). No data were collected on the frequency of prompted child-rule trials; however, anecdotally, participants rarely required prompts to state the rule following a large reinforcer choice.

Toys

Sessions were similar to child-rule sessions except that choosing the large reinforcer resulted in the participant saying, “When I wait, I get four pieces”; also, the experimenter provided access to preferred toys during the delay. After the delay elapsed, the experimenter removed the toys and provided the four edible items. Participants were neither prompted nor required to play with the toys. To enhance discrimination between child-rule and toy sessions, the preferred toys were placed behind the plate that contained the four edible items. As in child-rule sessions, participants were taught to state the rule independently, and they rarely required prompts to state the rule.

Edible Versus Toy Assessment

During toy sessions, the toys were available only after large reinforcer choices. Thus, it is possible that the participant chose the large reinforcer because this was the only option for which toys were available. That is, toys rather than the four edible items may have controlled large reinforcer choices. Therefore, we evaluated whether the toys were more preferred than the large reinforcer when both were available immediately. During these sessions, three stimuli were presented. The stimuli included one plate that contained no edible items (no reinforcer), a second plate that contained four edible items (food reinforcer), and the toys from toy sessions (toy reinforcer). Because participants were provided 5 min of access to preferred items during toy sessions, we continued to provide this amount of access during the current assessment. However, an ITI held trial duration constant and ensured that participants could not complete a session earlier by choosing the food. The ITI was calculated by adding 1 min to the toy access period to keep the ITI the same as in previous phases. Choosing no reinforcer resulted in the experimenter providing the empty plate. Choosing the food reinforcer resulted in the experimenter providing the four edible items immediately. Choosing the toys resulted in the experimenter providing access to the toys for 5 min.

RESULTS

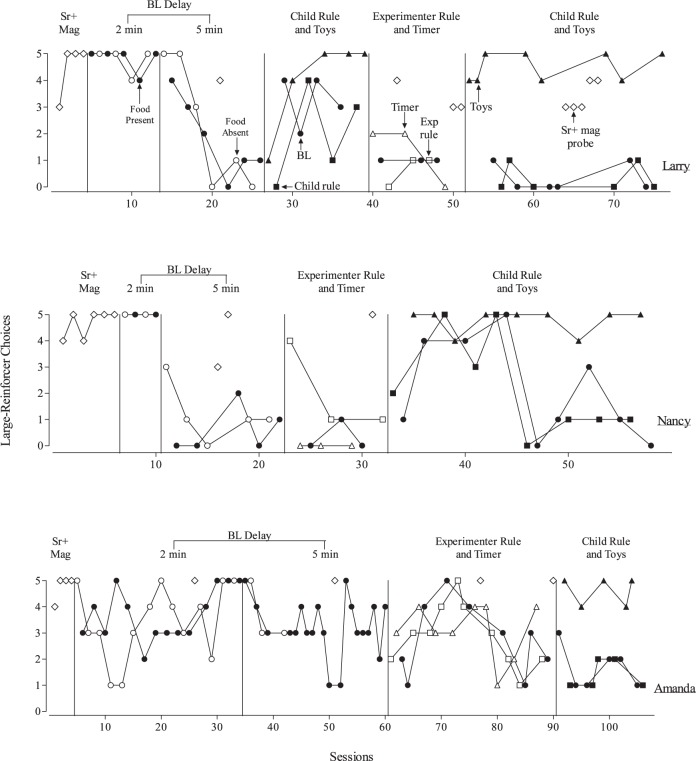

Figure 1 shows large reinforcer choice data from the reinforcer magnitude assessment; baseline delay assessment; experimenter-rule and timer assessment; and child-rule and toy assessment for Larry (top), Nancy (middle), and Amanda (bottom). Only large reinforcer choices are plotted because all three participants seldom chose no reinforcers; therefore, when they did not choose the large reinforcer, they typically chose the small reinforcer. Data on small and no reinforcer choices are available from the second author.

Figure 1.

Larry's, Nancy's, and Amanda's large reinforcer choices during the reinforcer magnitude assessment (Sr+ Mag), the baseline delay assessment (BL delay), the experimenter-rule and timer assessment, and the child-rule and toy assessment.

During the reinforcer magnitude assessment, all participants chose the large reinforcer on the majority of trials (Figure 1). That is, choice was sensitive to different magnitudes of reinforcement. During the baseline delay assessment, all participants exhibited self-control when the delay to the large reinforcer was 2 min. Amanda's choices were variable; however, consistent selection of the larger outcome was evident during the last five sessions. When the delay to the large reinforcer was increased to 5 min, participants exhibited impulsive behavior. (Anecdotally, participants did not engage in problem behavior during the delay to reinforcement.) To ensure that the decrease in participants' choice of the large reinforcer was not due to a decrease in sensitivity to different magnitudes of reinforcement, we conducted reinforcer magnitude assessment probes during the baseline delay assessment. During these probes, participants chose the large immediate reinforcer on the majority of trials, suggesting that their behavior was still sensitive to different magnitudes of reinforcement.

During the experimenter-rule and timer assessment, all participants infrequently chose the large reinforcer across experimenter-rule, timer, and baseline sessions, suggesting that neither the experimenter rule nor the timer was effective for enhancing self-control. During the child-rule and toy assessment, all participants infrequently chose the large reinforcer in child-rule and baseline sessions; however, during toy sessions, all participants chose the large reinforcer more frequently than during baseline or child-rule sessions.

During both the experimenter-rule and timer assessment and the child-rule and toy assessment, we also conducted several reinforcer magnitude assessment probes. Larry selected the large immediate reinforcer four times during the first probe in the experimenter-rule and timer assessment condition, suggesting that his behavior was still sensitive to different magnitudes of reinforcement. However, during the last two probes of this condition, he chose the large immediate reinforcer only three times, suggesting that either his behavior was less sensitive to different magnitudes of reinforcement or something about the rule or timer (e.g., watching a timer) made choice of the large reward aversive. To recapture choice of the large consequence and replicate the effects of the toy sessions, we reversed to the child-rule and toy assessment. During the reversal, Larry again selected the large delayed reinforcer more frequently in toy sessions than in child-rule or baseline sessions. Because of the decrease in the number of selections of the large immediate reinforcer during the reinforcer magnitude probes in the experimenter-rule and timer assessment, we conducted several reinforcer magnitude probes during the child-rule and toy condition. During the first three probes, Larry chose the large immediate reinforcer three times per session; however, in the last two probes, he chose the large immediate reinforcer four times per session, suggesting that his choice behavior was again sensitive to different magnitudes of reinforcement. During the reinforcer magnitude assessment probes in the experimenter-rule and timer assessment and the child-rule and toy assessment, both Nancy and Amanda selected the large reinforcer on all trials, suggesting that their behavior was sensitive to different magnitudes of reinforcement.

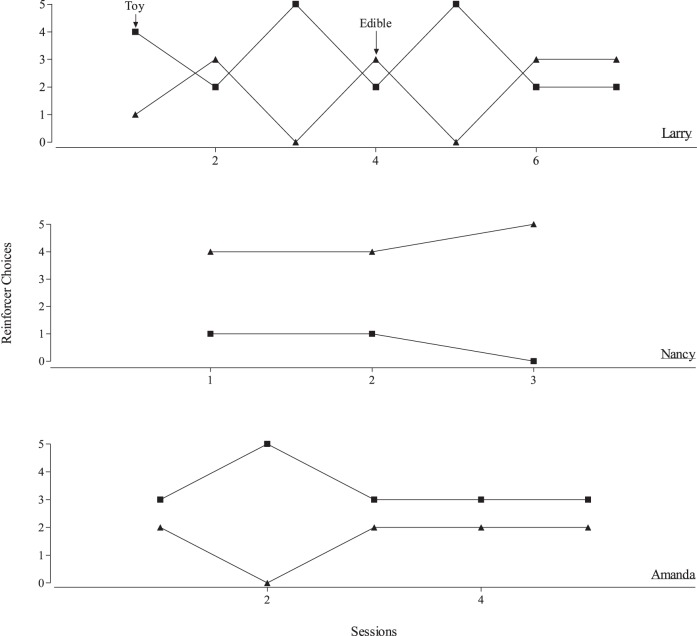

Based on the data from the child-rule and toy assessment for all three participants, it was unclear whether the participants were choosing the large delayed reinforcer during toy sessions because they preferred the toys that were available more than the edible items. Therefore, we evaluated preference for the toys and the food during the edible versus toy assessment. During three of the seven sessions, Larry chose the toys on more trials than the edible items; however, during the other four sessions he chose the toys and the edible items on a similar number of trials (Figure 2, top). These data suggest that Larry's choices during toy sessions may have been influenced by access to toys or the combination of toys and food. Nancy chose the food on more trials than the toys (Figure 2, middle). These data suggest that Nancy's choices of the large delayed reinforcer during toy sessions were not controlled solely by the access to toys that those choices produced. During the second session, Amanda chose the toys on all trials; however, during the other four sessions she chose the toys and edible items on a similar number of trials (Figure 2, bottom). These data suggest that Amanda's choices during toy sessions may have been influenced favorably by access to the toys or the combination of toys and edible items. (Data for no reinforcer choice are not depicted in Figure 2 because none of the participants chose the plate that contained no reinforcer.)

Figure 2.

Larry's, Nancy's, and Amanda's toy and edible reinforcer choices during the edible item versus toy assessment.

DISCUSSION

The purpose of the present study was to compare the effects of providing a countdown timer, a brief rule, and preferred toys in the absence of delay fading on self-control. We found that providing rules (regardless of whether the experimenter or child emitted the rule) and providing a countdown timer during the delay were ineffective for enhancing self-control; this result is somewhat inconsistent with results of previous research (e.g., Anderson, 1978; Binder et al., 2000; Grey et al., 2009; Hanley et al., 2007; Vollmer et al., 1999). Conversely, we found that providing access to toys during the delay effectively enhanced self-control, which is similar to the results of previous research (e.g., Anderson, 1978; Mischel et al., 1972). Finally, we evaluated whether access to toys promoted self-control because toys were more preferred than the edible items or because participants received a larger magnitude of reinforcement (i.e., the delivery of both the toys and the edible items). This additional analysis extends previous research. We found that the results were idiosyncratic across participants. Amanda and Larry chose toys more often than food, whereas Nancy chose food more often than toys. Thus, for Amanda and Larry, self-control may have occurred because of contingent access to preferred toys. For all three participants, self-control may have occurred because it produced access to a combination of toys and food.

Participants in previous studies on the provision of rules for enhancing self-control repeated the rule during the delay, whereas our participants were instructed to state the rule only once after choosing the delayed reinforcer. We chose to evaluate the use of brief rules based on the suggestion of Vollmer et al. (1999) that brief signals may be more akin to the signals provided in the natural environment. In addition, some basic research has demonstrated that brief signals can maintain responding under delayed reinforcement (e.g., Schaal & Branch, 1988; Schaal et al., 1992). However, in those studies, the efficacy of brief signals decreased as the delays to reinforcement increased. Schaal and colleagues suggested this may have occurred because the brief signal was no longer contiguous to the terminal reinforcer as the delay increased (i.e., the signal no longer served as a conditioned reinforcer). Although relatively little research has examined the effects of brief signals with humans, it seems possible that the brief rule was not effective in the current study because it was used during relatively long delays. Therefore, it was unlikely to be a conditioned reinforcer. However, we do not know whether the children repeated the rule covertly during the delay.

Similar to brief rules, providing a countdown timer during the delay to reinforcement was not effective for increasing participants' self-control in the current study. However, the results of Vollmer et al. (1999) and Grey et al. (2009) suggest that providing a countdown timer may enhance self-control. In the current study, we provided a countdown timer during fixed delays to reinforcement, whereas in previous research, the experimenters presented a countdown timer during gradually increasing delays to reinforcement (i.e., delay fading). Thus, there are several possible explanations for the different results. First, it may be that the delay fading used in previous research was the variable that enhanced self-control, and the countdown timer had no effect. Second, it may be that the delay fading enhanced the effects of the countdown timer. During delay fading, the initial delay is often short, which may result in the countdown timer developing greater conditioned reinforcing strength because it initially signals a very short delay to the terminal reinforcer. In fact, the provision of a timer or a rule in our study may have signaled long delays to reinforcement, which resulted in a decrease in self-control responses. That is, the presence of the timer or rule may have functioned similarly to the early signals in an extended chain schedule and led to a decrease in self-control selections (e.g., Jwaideh, 1973). Future research might evaluate the reasons for the difference between the results of the current study and previous research by comparing the effects of a rule (or other brief signals) or timer (or other continuous signals) with delay fading to those of a rule or timer with fixed delays to reinforcement.

Although our results were similar to those of previous research with respect to the effectiveness of providing toys during delays to reinforcement, it is unclear why this might be. One possible explanation is that providing toys during delays to reinforcement mediates the delay to reinforcement (i.e., playing with toys decreases the aversiveness of waiting). Given our procedures (i.e., toys were available only following the choice of the delayed reinforcer), another possible explanation is that the participants received both the large reinforcer (i.e., four pieces of food) and 5 min of access to toys for choosing the large delayed reinforcer. Thus, it is possible that self-control was enhanced due to a magnitude-of-reinforcement effect (i.e., access to a larger amount of reinforcement rather than a small immediate reinforcer). It also is possible that participants made large delayed choices because the toys were valuable (by themselves) and the toys were available only when participants made the large delayed choice. An interesting avenue of future research would be to evaluate systematically the relative preference of the toys provided during the delay to determine their respective impact on response allocation. Provision of low-preference toys may be less effective (or ineffective) in bridging the delay between choice and reinforcer delivery compared to highly preferred toys like those evaluated in the present study.

Finally, it is possible that participants in our study chose the large delayed reinforcer to access the toys because the toys were more preferred than the edible items. To address this possibility, we assessed whether the toys provided during the delay to reinforcement were more preferred than the food and found different results across participants. For Nancy, edible items were more preferred; for Larry, toys and food were similarly preferred; for Amanda, toys were somewhat more preferred. Although this analysis allowed us to determine that toy preference was not the sole reason for choosing the delayed reinforcer, limitations with the procedures did not allow us to determine whether magnitude of reinforcement resulted in preference for the delayed reinforcer. Future research could evaluate this by comparing choices for a small immediate reinforcer followed by access to toys versus access to toys followed by a large delayed reinforcer. Future research also could compare choices for one edible item versus 5-min access to toys, both available immediately. If participants selected the toys over the food, it would demonstrate that the behavior was not self-control but rather indicated a preference for toys over one immediate food item.

In summary, the current results suggest that providing participants with toys to play with during delays to reinforcement increased their choice of large delayed reinforcers, whereas giving participants something to say, giving them a timer to watch, or telling them to wait did not increase choice of large delayed reinforcers. However, it is still unclear why the toys increased choice of the large delayed reinforcer. Regardless of why making toys available during delays to reinforcement increases self-control, it is important to note that it is effective and therefore has clinical implications. For example, when going out to eat, parents could choose a few of their child's preferred toys and provide access to these toys while their child is waiting for dinner to increase self-control and decrease inappropriate behavior before the food is delivered (e.g., Bauman, Reiss, Rogers, & Bailey, 1983).

Footnotes

Matthew H. Newquist is now at Mains'l Services, Inc.

This study is based on a thesis submitted by the first author, under the direction of the second author, to the Department of Applied Behavioral Science at the University of Kansas in partial fulfillment of the requirements of the MA degree.

Action Editor, John Borrero

Special thanks to James Sherman for his suggestions and feedback on an earlier version of this manuscript.

REFERENCES

- Anderson W. H. A comparison of self-distraction with self-verbalization under moralistic versus instrumental rationales in a delay-of-gratification paradigm. Cognitive Therapy and Research. 1978;2:299–303.

- Bauman K. E, Reiss M. L, Rogers R. W, Bailey J. S. Dining out with children: Effectiveness of a parent advice package on pre-meal inappropriate behavior. Journal of Applied Behavior Analysis. 1983;16:55–68. doi: 10.1901/jaba.1983.16-55.

- Benedick H, Dixon M. R. Instructional control of self-control in adults with co-morbid developmental disabilities and mental illness. Journal of Developmental and Physical Disabilities. 2009;21:457–471.

- Binder L. M, Dixon M. R, Ghezzi P. M. A procedure to teach self-control to children with attention deficit hyperactivity disorder. Journal of Applied Behavior Analysis. 2000;33:233–237. doi: 10.1901/jaba.2000.33-233.

- Dixon M. R, Cummings A. Self-control in children with autism: Response allocation during delays to reinforcement. Journal of Applied Behavior Analysis. 2001;34:491–495. doi: 10.1901/jaba.2001.34-491.

- Dixon M. R, Rehfeldt R, Randich L. Enhancing tolerance to delayed reinforcers: The role of intervening activities. Journal of Applied Behavior Analysis. 2003;36:263–266. doi: 10.1901/jaba.2003.36-263.

- Ferster C. B. Sustained behavior under delayed reinforcement. Journal of Experimental Psychology. 1953;45:218–224. doi: 10.1037/h0062158.

- Fisher W, Piazza C. C, Bowman L. G, Hagopian L. P, Owens J. C, Slevin I. A comparison of two approaches for identifying reinforcers for persons with severe and profound disabilities. Journal of Applied Behavior Analysis. 1992;25:491–498. doi: 10.1901/jaba.1992.25-491.

- Fisher W. W, Thompson R. H, Hagopian L. P, Bowman L. G, Krug A. Facilitating tolerance of delayed reinforcement during functional communication training. Behavior Modification. 2000;24:3–29. doi: 10.1177/0145445500241001.

- Green L, Myerson J. A discounting framework for choice with delayed and probabilistic rewards. Psychological Bulletin. 2004;130:769–792. doi: 10.1037/0033-2909.130.5.769.

- Grey I, Healy O, Leader G, Hayes D. Using a Time Timer™ to increase appropriate waiting behavior in a child with developmental disabilities. Research in Developmental Disabilities. 2009;30:359–366. doi: 10.1016/j.ridd.2008.07.001.

- Grosch J, Neuringer A. Self-control in pigeons under the Mischel paradigm. Journal of the Experimental Analysis of Behavior. 1981;35:3–21. doi: 10.1901/jeab.1981.35-3.

- Hanley G. P, Heal N. A, Tiger J. H, Ingvarsson E. T. Evaluation of a classwide teaching program for developing preschool life skills. Journal of Applied Behavior Analysis. 2007;40:277–300. doi: 10.1901/jaba.2007.57-06.

- Ito M, Oyama M. Relative sensitivity to reinforcer amount and delay in a self-control choice situation. Journal of the Experimental Analysis of Behavior. 1996;66:219–229. doi: 10.1901/jeab.1996.66-219.

- Jwaideh A. R. Responding under chained and tandem fixed-ratio schedules. Journal of the Experimental Analysis of Behavior. 1973;19:259–267. doi: 10.1901/jeab.1973.19-259.

- Mischel W, Ebbesen E. B. Attention in delay of gratification. Journal of Personality and Social Psychology. 1970;2:329–337. doi: 10.1037/h0032198.

- Mischel W, Ebbesen E. B, Zeiss A. R. Cognitive and attentional mechanisms in delay of gratification. Journal of Personality and Social Psychology. 1972;21:204–218. doi: 10.1037/h0032198.

- Mischel W, Shoda Y, Peake P. K. The nature of adolescent competencies predicted by preschool delay of gratification. Journal of Personality and Social Psychology. 1988;54:687–696. doi: 10.1037//0022-3514.54.4.687.

- Mischel W, Shoda Y, Rodriguez M. L. Delay of gratification in children. In: Lowenstein G, Elster J, editors. Choice over time. New York, NY: Sage; 1992. pp. 147–164.

- Schaal D. W, Branch M. N. Responding of pigeons under variable-interval schedules of unsignaled, briefly signaled, and completely signaled delays to reinforcement. Journal of the Experimental Analysis of Behavior. 1988;50:33–54. doi: 10.1901/jeab.1988.50-33.

- Schaal D. W, Schuh K. J, Branch M. N. Key pecking of pigeons under variable-interval schedules of briefly signaled delayed reinforcement: Effects of variable-interval value. Journal of the Experimental Analysis of Behavior. 1992;58:277–286. doi: 10.1901/jeab.1992.58-277.

- Schweitzer J. B, Sulzer-Azaroff B. Self-control: Teaching tolerance for delay in impulsive children. Journal of the Experimental Analysis of Behavior. 1988;50:173–186. doi: 10.1901/jeab.1988.50-173.

- Toner I. J, Smith R. A. Age and overt verbalization in delay-maintenance behavior in children. Journal of Experimental Child Psychology. 1977;24:123–128.

- Vollmer T. R, Borrero J. C, Lalli J. S, Daniel D. Evaluating self-control and impulsivity in children with severe behavior disorders. Journal of Applied Behavior Analysis. 1999;32:451–466. doi: 10.1901/jaba.1999.32-451.