In 2008, the impact factor (often abbreviated IF) of the British Journal of Radiology rose from 1.77 to 2.3. I have written this article to help readers understand the importance of that achievement: what the impact factor is, why it matters and how it should, and should not, be used.

The impact factor has a chequered history and has caused great contention in academic circles, where it has been heavily criticised and often derided. Criticisms focus mainly on the validity of the impact factor, possible manipulations of the data reported and its misuse [1–4].

Interestingly, the impact factor calculations could not be reproduced in an independent audit [5], although Thomson Scientific, which now maintains and sells access to the Institute for Scientific Information (ISI) database from which the impact factor is calculated, mounted a robust defence against these criticisms [6]. Despite this controversy, the impact factor is now an important quality driver in academic publication and is commonly used to compare the quality of journals, and to assess the quality of publications from individual academics.

What is the impact factor?

The impact factor was devised as an indicator of the importance of a particular journal in its field. It is a measure of the average number of citations to articles published in science and social science journals [7].

Before the introduction of the impact factor, the success and importance of a journal could be qualitatively assessed by the size of its readership and distribution, its financial success and its affiliation with professional organisations. However, all of these are subjective measures and in 1964 Dr Eugene Garfield, the founder of the ISI, wrote an essay describing the impact factor as an independent method of comparing the scientific quality and success of one journal to another [8].

The ISI began publishing Journal Citation Reports in 1975 and these are now updated yearly. The Web of Knowledge, from which the impact factor is calculated, indexes 9000 science and social science journals from 60 countries. The Journal Citation Reports are a proprietary product of the ISI and are widely, although not freely, available.

How is the impact factor calculated?

In any given year the impact factor of a journal is the average number of citations to papers published in the journal in the previous two years. Thus, for 2008 the impact factor is calculated as:

IF = A/B

where

A = the number of times articles published in 2006 and 2007 were cited by indexed journals during 2008.

B = the total number of “citable items” published in 2006 and 2007.

Importantly, not all published content is considered citable. Citable items include articles, reviews, proceedings or notes, but not editorials or letters to the editor.
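The calculation above can be sketched in a few lines of Python. The function name and the counts used here are hypothetical, chosen only so that the result comes out at 2.3; they are not the BJR's actual figures.

```python
def impact_factor(citations_to_prior_two_years: int, citable_items: int) -> float:
    """Return A / B: citations received this year to items from the previous
    two years, divided by the citable items published in those two years."""
    if citable_items <= 0:
        raise ValueError("citable_items must be positive")
    return citations_to_prior_two_years / citable_items


# Hypothetical journal: 300 citable items published in 2006 and 2007,
# cited 690 times by indexed journals during 2008.
example_if = impact_factor(690, 300)
print(f"{example_if:.2f}")  # prints 2.30
```

Editorials and letters are left out of the second argument, which is why the definition of a "citable item" matters so much to the result.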

What is the impact factor used for?

The original concept of the impact factor was as a marketing tool for publishers, who could charge higher advertising rates and cover costs for highly cited journals. It was also intended to guide librarians on how to select journals that should be included within their catalogue.

However, the use of the impact factor rapidly expanded until it became widely regarded as a shorthand measure of a journal's quality [3]. Inevitably, these gauges of quality came to be used to judge the output of individual researchers, and they are now widely used in academic appointments and as an evaluation tool by promotion and tenure review committees [4].

What is the effect of the impact factor?

There can be no doubt that the impact factor has become extremely important and this importance has, in many ways, changed the way that scientists publish and the way journals operate.

The impact factor has a major impact on scientists. Employers and review committees faced with competing CVs may evaluate scientists on the “quality” of the journals in which they have published. Researchers building their research profile know that they will be judged by the impact factor of the journals in which they publish. Consequently, researchers take the impact factor of a journal into account when deciding where to submit. Researchers shopping for high impact factors are, therefore, less likely to have loyalty to any individual journal, instead submitting to the “best” journal that they think will accept their submission. Some may argue this is a good thing and that the impact factor becomes a self-fulfilling prophecy, since the “best” articles will increasingly appear in the “best” journals.

The impact factor also has major implications for publishers. It is important that they maintain and improve journal quality if they are to maintain income, and continue to attract high impact publications.

With the impact factor now widely regarded as the de facto measure of quality, publishers increasingly have to play “The impact factor game”. Previous reviews have spoken of “unprincipled publishers attempting to manipulate the IF for their journal” [9], but the truth is, if the impact factor is the measure of journal quality that is used, then publishers would be failing in their duty to protect the competitive position of their publication if they did not “play the game” [10]. Like any game, there are rules and these are set by the ISI.

Publishers can modify their publication strategy to increase the numerator (number of citations) or decrease the denominator (number of citable articles) of the impact factor calculation [2]. For example:

It is no longer in the interests of publishers to consider articles which they know are unlikely to be widely cited. This has led to the almost complete death of the clinical case report. Case reports are highly educational for practising clinicians, but are very seldom cited. Consequently, case reports contribute to the denominator, but are unlikely to contribute to the numerator.

Similarly, review articles are widely cited and, interestingly, 10 of the 20 top impact factor journals in 2008 publish only review articles and have the word review in the title. Therefore, inviting or commissioning reviews is increasingly big business and the number of major review articles in many journals has increased.

Editorials, letters to the editor and other non-source materials may attract citations, but do not count in the denominator. What counts as citable is decided by discussion with Thomson Scientific, which owns the ISI. Such negotiations have been reported to result in impact factor variation of more than 300% [11].

No distinction is made between articles cited in the journal itself (self-citations) or in other journals. If editors suggested two appropriate references to each author publishing in the journal, then the citation index would automatically be two.

Overviews and collations of articles published in the journal greatly increase the numerator with little effect on the denominator. In 2007, a specialist journal with an impact factor of 0.66 published an editorial that cited all its articles from 2005 to 2006 in a protest against the absurd use of the impact factor [12]. The large number of citations meant that the impact factor for that journal increased to 1.44. As a result of the increase, the journal was not included in the 2008 Journal Citation Report [13].
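The arithmetic behind these strategies can be illustrated with a short sketch. All of the counts below are hypothetical and describe an imaginary journal, not any title discussed in this article. They show how dropping seldom-cited case reports shrinks the denominator, and how a single collation that cites every citable item once adds exactly one point to the impact factor.

```python
def impact_factor(citations: int, citable_items: int) -> float:
    """Citations to the two-year window divided by citable items in that window."""
    return citations / citable_items


# Hypothetical two-year window for an imaginary journal (not real data).
research_articles = 200
case_reports = 100
cites_to_research = 500
cites_to_case_reports = 20
cites_to_editorials = 30  # editorials attract citations but are not citable items

citable = research_articles + case_reports
all_cites = cites_to_research + cites_to_case_reports + cites_to_editorials

baseline = impact_factor(all_cites, citable)
# Stop publishing case reports: their items and their few citations both disappear.
no_case_reports = impact_factor(all_cites - cites_to_case_reports, research_articles)
# Publish one editorial that cites every citable item once.
with_collation = impact_factor(all_cites + citable, citable)

print(f"baseline: {baseline:.2f}")                     # prints baseline: 1.83
print(f"without case reports: {no_case_reports:.2f}")  # prints without case reports: 2.65
print(f"with collation: {with_collation:.2f}")         # prints with collation: 2.83
```

In this sketch the citations to editorials are counted in the numerator even though editorials never appear in the denominator, which mirrors the asymmetry described above.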

Is the impact factor a good measure of quality?

There have been numerous criticisms of the impact factor and many alternative measures have been proposed [3]. The truth is that, although the impact factor has significant problems, it will continue to be used, if only because of its simplicity and wide availability.

In many ways, the impact factor is a good measure if it is used in the way, and for the purpose for which, it was designed. That is, as direct comparison of the short-term impact of competing journals in a specific research field.

Unfortunately the impact factor is commonly used inappropriately, which is the cause of much of the criticism levelled against it [4]. For example, it is inappropriate to use the impact factor for:

Comparing different journals within different fields with different subject matter. When the impact factor was introduced into the UK research assessment exercise (which compares the quality of research in different universities), one medical school dean wrote to all academics instructing them not to submit papers to any publication with an impact factor of less than seven (personal communication). This caused anxiety, since in many specialist areas with a small number of researchers, like radiology, none of the major journals had an impact factor approaching seven. Indeed, in 2003 the impact factor of Radiology (the top ranked radiology journal in that year) was less than five.

Judging the quality of an individual paper or researcher. BJR remains some way from being the top radiology journal. Nonetheless, Sir Godfrey Hounsfield's original paper describing the invention of CT, published in the BJR, was rather influential and won the Nobel Prize. Sir Peter Mansfield's Nobel Prize-winning work on echo-planar imaging was also partly presented in the BJR (Figure 1). No one, to my knowledge, has so far suggested that these were actually bad papers because they were not published in a better journal. In contrast, papers which make major claims but are later realised to be flawed are commonly cited but, by definition, are not of high quality. Analysis of the citation patterns from individual journals shows that the majority of the citations result from a relatively small proportion of the citable articles; the distribution is not normal. This means that the importance of any given individual publication will be different from, and in most cases less than, the journal average [14]. As a consequence of this, the Higher Education Funding Council for England was urged by the House of Commons Science and Technology Select Committee to remind Research Assessment Exercise panels that they are obliged to assess the quality of the content of individual articles, not the reputation of the journal in which they are published [15].

Measuring the overall impact of a journal on the scientific and medical community. Remember that a journal could have a very high impact factor by publishing a small number of high quality review articles, which are then widely cited. However, it would have actually added nothing new to the state of knowledge and the actual number of citations could be very low. Conversely, a clinical journal such as the BJR may be read by all the members of a clinical community and be highly influential on clinical practice without those clinicians subsequently writing research articles which cite it.
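The point made above about skewed citation distributions can be seen in a small numerical sketch. The citation counts are invented for illustration: a few highly cited papers pull the journal average well above what a typical article receives.

```python
from statistics import mean, median

# Hypothetical citation counts for 20 articles in one journal (not real data).
citations = [0, 0, 0, 0, 1, 1, 1, 1, 2, 2, 2, 2, 3, 3, 4, 4, 5, 9, 30, 50]

journal_average = mean(citations)   # what an impact-factor-style average reports
typical_article = median(citations)  # what a typical article actually receives
below_average = sum(1 for c in citations if c < journal_average)
top3_share = sum(sorted(citations, reverse=True)[:3]) / sum(citations)

print(journal_average)                      # prints 6
print(typical_article)                      # prints 2.0
print(f"{below_average}/{len(citations)}")  # prints 17/20
print(f"{top3_share:.0%}")                  # prints 74%
```

Here 17 of the 20 articles are cited less often than the journal average, so the average says little about any one of them.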

Figure 1.

Sir Godfrey Hounsfield (left), who received the 1979 Nobel Prize, was appointed CBE in 1976 and knighted in 1981, and Sir Peter Mansfield (right), who received the Nobel Prize in 2003. Would they have been more successful if they had published in a journal with a higher impact factor?

One major problem with the impact factor is deciding exactly what represents a comparable journal. The ISI identifies groups of journals with similar focus, and these are often used to rank journals within their speciality. Thus, the BJR appears in the group entitled Radiology, Nuclear Medicine and Medical Imaging. While this appears at first sight to be a sensible grouping, closer examination reveals significant inconsistencies. It is clearly inappropriate to compare citation metrics for the BJR with those of a non-clinical journal such as NeuroImage, a dedicated interventional journal such as Cardiovascular Interventional Radiology or even a major nuclear medicine journal such as the European Journal of Nuclear Medicine and Molecular Imaging.

What other measures of impact are there?

The problems associated with the impact factor have led to the development of a wide range of other metrics, each designed to address some perceived shortcoming of the impact factor. Some of these are also calculated and published by the ISI; others are published by competing providers of bibliographic indexing services [16]. Commonly used measures published by the ISI include:

the immediacy index. The number of citations that the articles published in a journal in a given year receive in that same year, divided by the number of articles published in that year.

the cited half-life. The median age of the articles that were cited in Journal Citation Reports each year. For example, if a journal's half-life in 2005 is five, that means citations to articles published in 2001–2005 make up half of all the citations to that journal in 2005, and the other half of the citations are to articles that precede 2001.

the aggregate impact factor for a subject category. This is calculated taking into account the number of citations to all journals in the subject category and the number of articles from all the journals in the subject category.
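The first two of these measures can be sketched as follows. This is a simplified illustration rather than the ISI's exact procedure: the half-life here is returned as a whole number of years, whereas Journal Citation Reports gives a fractional value, and all function names and counts are hypothetical.

```python
def immediacy_index(cites_this_year_to_this_years_items: int, items_this_year: int) -> float:
    """Citations received in a year to items published in that same year,
    divided by the number of items published in that year."""
    return cites_this_year_to_this_years_items / items_this_year


def cited_half_life(citations_by_pub_year: dict, census_year: int) -> int:
    """Smallest number of years, counting back from the census year, whose
    articles account for at least half of the citations received."""
    total = sum(citations_by_pub_year.values())
    if total <= 0:
        raise ValueError("no citations recorded")
    running = 0
    oldest = min(citations_by_pub_year)
    for age, year in enumerate(range(census_year, oldest - 1, -1), start=1):
        running += citations_by_pub_year.get(year, 0)
        if 2 * running >= total:
            return age
    raise ValueError("citations dated after the census year were supplied")


# Hypothetical citations received in 2005, keyed by the cited article's year.
cites_2005 = {2005: 10, 2004: 20, 2003: 30, 2002: 20, 2001: 20,
              2000: 40, 1999: 30, 1998: 30}
print(cited_half_life(cites_2005, 2005))    # prints 5
print(round(immediacy_index(45, 145), 2))   # prints 0.31
```

In the example, articles from 2001 to 2005 collect 100 of the 200 citations, so the half-life is five years, matching the worked example in the text.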

Other commonly used measures include the Eigenfactor™ score, which is freely available online [17]. The Eigenfactor™ score of a journal is an estimate of the percentage of time that library users spend with that journal. It is calculated by a mathematical algorithm based on a model of reader activity, in which the reader follows chains of citations from journal to journal. Using actual information about the frequency of citations, the model generates direct estimates of which journals are likely to be used, accounting for journal size, number of citations allowed in articles and journal prestige. More detail is available from the eigenfactor.org website (http://eigenfactor.org/map/methods.htm).
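The idea of a reader moving from journal to journal by following citations can be illustrated with a simplified random-walk sketch in the style of PageRank. This is not the published Eigenfactor algorithm, which includes refinements not modelled here; the `damping` parameter, the function name and the three-journal citation matrix are all assumptions made for illustration.

```python
def journal_influence(citations, damping=0.85, iterations=100):
    """Estimate the share of time a random reader spends at each journal.

    citations[i][j] is the number of citations from journal i to journal j.
    At each step the reader follows a citation with probability `damping`,
    and otherwise jumps to a journal chosen uniformly at random.
    """
    n = len(citations)
    # Self-citations are ignored so a journal cannot boost its own score.
    rows = [[0 if i == j else citations[i][j] for j in range(n)] for i in range(n)]
    scores = [1.0 / n] * n
    for _ in range(iterations):
        new_scores = [(1.0 - damping) / n] * n
        for i in range(n):
            out_total = sum(rows[i])
            for j in range(n):
                # A journal that cites nothing sends the reader anywhere.
                share = rows[i][j] / out_total if out_total else 1.0 / n
                new_scores[j] += damping * scores[i] * share
        scores = new_scores
    return scores


# Hypothetical citation counts between three journals A, B and C.
matrix = [
    [0, 10, 0],   # A cites B
    [5, 0, 5],    # B cites A and C equally
    [0, 20, 0],   # C cites B
]
scores = journal_influence(matrix)
for name, score in zip("ABC", scores):
    print(name, round(score, 3))
```

Journal B, which is cited by both of the others, ends up with the largest share, while A and C receive equal shares because B cites them equally.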

What does the increase in the impact factor mean for the BJR?

Having dissected the meaning and effects of the impact factor, we return to the original question as to whether the rise from 1.77 to 2.3 is important. To cut a long story short, the answer is that this represents a real improvement in the importance and impact of the BJR.

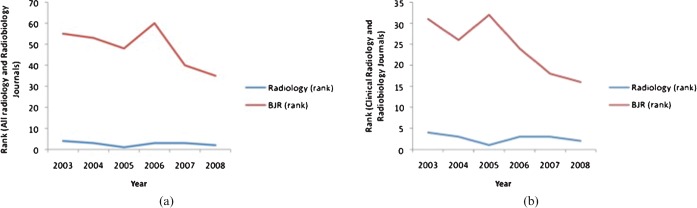

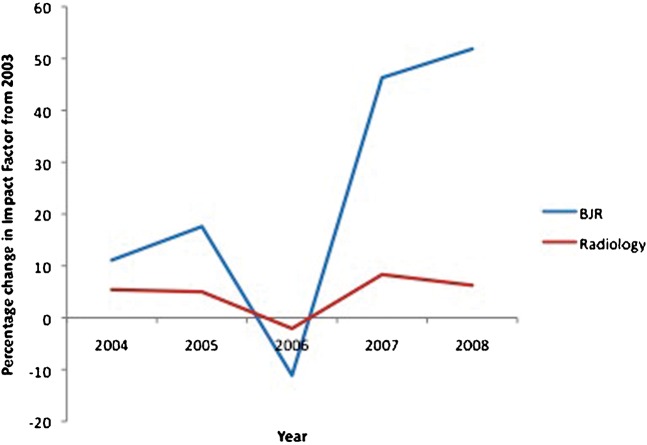

Table 1 shows the 2008 rankings of the BJR for impact factor and other citation metrics. Within the full ISI group of Radiology, Nuclear Medicine and Medical Imaging, the BJR currently ranks 35th, compared with 55th in 2003 (Figure 2a). However, eliminating “non-comparable” journals from the list (dedicated nuclear medicine, research neuroscience and other non-clinical imaging, and dedicated sub-speciality imaging journals) shows the BJR at 16th (Figure 2b). Direct comparison with Radiology, the lead diagnostic radiology journal, shows a genuine improvement in impact factor performance since 2006 (Figure 3).

Table 1. Rank position of the BJR according to data from the ISI. The middle column shows the rank within the overall Radiology, Nuclear Medicine and Medical Imaging group defined by the ISI. The right-hand column shows the rank when compared to journals with comparable subject matter and remit.

| Scoring system | Medical Imaging (Rank) | Clinical Radiology and Radiobiology (Rank) |
| --- | --- | --- |
| Articles (145) | 34 | 19 |
| Total cites (6011) | 19 | 16 |
| Impact factor (2.366) | 35 | 16 |
| Cited half-life (9.2) | 8 | 7 |
| Immediacy index (0.31) | 43 | 24 |
| Eigenfactor (0.0129) | 26 | 16 |
| Article influence (0.63) | 36 | 23 |

Figure 2.

Graphs showing the change in rank of Radiology and the BJR since 2003. (a) shows the overall rankings within the overall Radiology, Nuclear Medicine and Medical Imaging group defined by the ISI. (b) shows the rank when compared to journals with comparable subject matter and remit.

Figure 3.

Graph comparing the proportional improvement in impact factor since 2003 for the BJR and Radiology.

Furthermore, it is of interest that the BJR continues to rank highly in terms of its cited half-life. This shows that half of the articles from the BJR cited in 2008 were from the period 1999–2008. This demonstrates that articles published within the BJR continue to be cited, and to hold their importance, for far longer than those in many comparable journals with higher impact factors. This reflects the publication strategy of the BJR, which favours translational and clinical imaging studies capable of influencing practice over a sustained period of time.

References

- 1. European Association of Science Editors statement on impact factors. http://www.ease.org.uk/statements/EASE_statement_on_impact_factors.shtml. Retrieved 22-11-09.

- 2. Kurmis AP. Understanding the limitations of the journal impact factor. J Bone Joint Surg Am 2003;85-A:2449–54.

- 3. Harter S, Nisonger T. ISI's Impact Factor as Misnomer: A Proposed New Measure to Assess Journal Impact. J Am Soc Inf Tech 1997;48:1146–1148.

- 4. Currie M, Wheat J. Impact Factors in Nuclear Medicine Journals. J Nucl Med 2007;48:1397–1400.

- 5. Rossner M, Van Epps H, Hill E. Show me the data. J Cell Biol 2007;179:1091–2.

- 6. Thomson Scientific Corrects Inaccuracies In Editorial. http://forums.thomsonscientific.com/t5/Citation-Impact-Center/Thomson-Scientific-Corrects-Inaccuracies-In-Editorial/ba-p/717;jsessionid=952C8E7C5F54E2F9208D9E0CB0E299BD#A4. Retrieved 22-11-09.

- 7. Introducing the Impact Factor. http://www.thomsonreuters.com/products_services/science/academic/impact_factor/. Retrieved 22-11-09.

- 8. Garfield E. “Science Citation Index”—A New Dimension in Indexing. Science 1964;144:649–654.

- 9. Fassoulaki A, Papilas K, Paraskeva A, Patris K. Impact factor bias and proposed adjustments for its determination. Acta Anaesthesiologica Scandinavica 2002;46:902–5.

- 10. Monastersky R. The Number That's Devouring Science. The Chronicle of Higher Education 2005. http://chronicle.com/free/v52/i08/08a01201.htm.

- 11. PLoS Medicine Editors. The Impact Factor Game. PLoS Medicine, June 6 2006. doi:10.1371/journal.pmed.0030291. Retrieved 22-11-09.

- 12. Schutte HK, Svec JG. Reaction of Folia Phoniatrica et Logopaedica on the Current Trend of Impact Factor Measures. Folia Phoniatrica et Logopaedica 2007;59:281–285.

- 13. Journal Citation Reports® Notices. http://admin-apps.isiknowledge.com/JCR/static_html/notices/notices.htm. Retrieved 22-11-09.

- 14. Not-so-deep impact. Nature 2005;435:1003–4.

- 15. House of Commons – Science and Technology – Tenth Report. 2004-07-07. http://www.publications.parliament.uk/pa/cm200304/cmselect/cmsctech/399/39912.htm. Retrieved 22-11-09.

- 16. Roth D. The emergence of competitors to the Science Citation Index and the Web of Science. Current Science 2005;89:1531–1536.

- 17. Eigenfactor.org. http://www.eigenfactor.org/