Abstract

A comprehensive mentoring program includes a variety of components. One of the most important is the ongoing assessment of, and feedback to, mentors. Scholars need strong, active mentors who have the expertise, disposition, motivation, and skills to mentor well, along with the ability to accept feedback and adjust their mentoring style. Assessing the effectiveness of a given mentor is no easy task. Variability in learning needs and academic goals among scholars makes it difficult to develop a single evaluation instrument or a standardized procedure for evaluating mentors. Scholars, mentors, and program leaders are often reluctant to conduct formal evaluations because there are no commonly accepted measures. Giving feedback is often difficult, and there are limited empirical data on its efficacy. This article presents a new six‐component approach to mentor evaluation that includes the assessment of mentee training and empowerment, peer learning and mentor training, scholar advocacy, mentee–mentor expectations, mentor self‐reflection, and mentee evaluation of their mentor. Clin Trans Sci 2012; Volume 5: 71–77

Keywords: mentors, evaluation, outcomes

Introduction

There is increasing evidence that new investigators with strong, committed, active mentors are more likely to succeed as independent investigators. 1 This is especially true for new investigators working in clinical translational science. Many of these scholars conduct their research outside the traditional wet-lab setting, analyzing secondary data sets and clinically generated data warehouses. Primary data collection generally takes place in clinical and community settings, where scholars interact with multidisciplinary teams spanning several schools and universities. They often have multiple mentors and supervisors. The days of working in one laboratory at one institution under the supervision of a single scientist are becoming a thing of the past. 2

Clinical translational science has evolved into a very complex multidisciplinary effort that is increasingly conducted with less protected time. Between 2008 and 2011, the average length of funding for KL2 scholars dropped from 4 to 2 years, 3 although the expectations for obtaining extramural funding remain the same. If scholars do not receive mentoring that robustly addresses their scientific and career development needs, the effectiveness of the K mechanism will be diminished. From a programmatic perspective, therefore, evaluating K scholar research mentors has become an important component of a comprehensive mentoring program.

The purpose of this article is to present a new six‐component approach to mentor evaluation that includes the assessment of mentee training and empowerment, scholar advocacy, mentee‐mentor expectations, peer learning and mentor training, mentor self‐reflection, and mentee evaluation of their mentor. This article builds on previously published Clinical and Translational Science Awards (CTSA) mentor working group white papers focused on the various elements of mentoring. 4 , 5 , 6 , 7

Methods

This article is based on several data sources: (1) a review of current evaluation instruments used by CTSAs, available on the CTSA wiki site (http://www.ctsaweb.org/federatedhome.html); (2) focus group interviews with 44 KL2 mentors and 55 KL2 scholars; (3) 46 telephone interviews with KL2 program directors; and (4) discussions over the last 3 years among members of the CTSA mentor working group in face‐to‐face meetings and regular conference calls.

The CTSA evaluation instruments examined were collected in 2009, when Silet et al. conducted semistructured telephone interviews with all 46 KL2 program directors then in place to collect baseline information on KL2 programs and their efforts to support mentoring across CTSA institutions. 3 In addition, a CTSA evaluation working group recently released a mentor evaluation instrument.

The focus group data reported in this article were obtained from a series of meetings with scholars and mentors at four CTSAs: the University of Wisconsin‐Madison, Vanderbilt University, the University of Colorado‐Denver, and the University of North Carolina‐Chapel Hill. The first two institutions had little mentoring infrastructure, such as supports for mentor selection or formal means of aligning expectations or evaluating the mentoring relationship. The other two institutions had made concerted efforts to build programmatic mentoring supports for their K scholars. Similar questions were asked of each focus group. The interviews were taped, transcribed, coded, and entered into NVivo (QSR International, Doncaster, Victoria, Australia). A total of 55 scholars and 44 mentors across the four sites participated.

Foundations for the New Approach

As reported by Silet et al., 65% (30/46) of the CTSAs do not formally evaluate mentoring. 3 Instead, the typical way to assess the health of the mentoring relationship is to look at scholar outcomes. As one mentor noted in the focus group interviews:

“I think if your mentee is publishing papers and gets grants, you've probably done an okay job for that person.”

These outcome measures leave little room for assessing the relationship early on, when it is at its most vulnerable and there is “no pudding yet.” Mentees are also quick to note that

“You can have poor or mediocre mentorship and have the scholar succeed. Or you can have great mentorship and, for whatever reason, either by luck or by just the performance of the scholar themselves, they may not succeed in taking the next step, but that doesn't necessarily mean the mentorship was not good.”

Programs wanting to move beyond scholar outcomes to assess mentor fit have turned to surveys. 6 Most CTSA programs that formally evaluate the mentoring relationship use surveys with open‐ and closed‐ended questions. Some program directors viewed surveys as better able to catch smaller problems in the mentoring relationship, ones not significant enough to raise in the presence of the mentor or other “powers that be,” including the KL2 director.

We examined a sample of instruments used by CTSAs across the United States, including the Center for Clinical and Translational Science Mentor Evaluation Form (UIC), CTSA Mentoring Assessment (Mayo), CTSA KFC Evaluation Group, and Evaluation of CTSA/TRANSFORM Mentor/Mentee (Columbia). The UIC mentoring evaluation instrument includes 33 items that examine the domains of intellectual growth and development, professional career development, academic guidance, and personal communication and role modeling. 8

A working group at the Mayo Clinic developed two instruments, one to evaluate the mentor and one to evaluate the scholar. Both instruments primarily use rating scales, and some include a few fill‐in‐the‐blank statements. The domains evaluated are communication, feedback, relationship with mentor/scholar, professional development, and research support. The instruments were intended to be used annually, from one year after matriculation until program completion. Feedback was given to mentors and mentees through their usual interviews with program leadership, because the institutional review board (IRB) forbade giving mentee responses directly to the mentor. The instruments were used with master's scholars as well as K scholars. 9

Like the Mayo Clinic, Columbia University developed two instruments, one completed by the mentor(s) and one by the scholar, each administered annually. The scholar's evaluation of the mentor collects feedback on 13 statements addressing topics such as the number of mentors, frequency of meetings, quality and satisfaction, availability, communication of expectations, and professional development. The mentee evaluation instrument includes 10 items about research abilities, ability to meet training goals, trainee participation, and professional development. Results are kept confidential and discussed among program directors. When necessary, action plans are developed with the respondent and implemented. In some cases, programs or courses are altered. 10

The Institute for Clinical Research Education at the University of Pittsburgh recently developed two new instruments, both completed by the scholar/trainee: one about their primary mentor, with a few questions about their entire mentoring team (if applicable), and the other about their mentoring team when no one person is identified as the primary mentor. The primary mentor instrument consists of 36 items, including five multiple‐choice questions about the frequency and mode of communication. The measures are administered annually, and there are plans to conduct psychometric testing once a sufficiently large sample has been collected. 11

After reviewing the selected instruments, we categorized the various measures into five domains: (1) meetings and communication, (2) expectations and feedback, (3) career development, (4) research support, and (5) psychosocial support. Table 1 lists key characteristics of each domain, along with suggested questions drawn from the focus group data that matched the domains identified in the instruments.

Table 1.

Key characteristics and suggested questions for five mentoring domains.

| | 1. Meetings and communication | 2. Expectations and feedback | 3. Career development | 4. Research support | 5. Psychosocial support |
|---|---|---|---|---|---|
| Characteristics | Frequency and mode of communication | Timely constructive feedback | Opportunity and encouragement to participate/networking | Assistance with setting research goals and with identifying and developing new research ideas | Balance of personal and professional life |
| | Accessibility | Critique work | Counsel about promotion and career advice | Guidance and feedback through the research process | Trustworthy |
| | Time commitment | Set expectations | Advocate | Guidance in presenting or publishing scholarly work | Thoughtful |
| | Conflict resolution | Set goals | Assist in development of new skills | – | Unselfish |
| | – | – | Serves as a role model | – | Respectful |
| | – | – | Acknowledge contributions | – | Engaged listener |
| | – | – | Challenges | – | Discuss personal concerns or sensitive issues |
| | – | – | Promote self‐reflection | – | Relationship building |
| Questions | When your research yields new data, how long does it typically take your mentor to hear about it? | How timely is your mentor's feedback on emails? Grants? Papers? | What is your interest in staying in academic medicine? | To what extent does your mentor troubleshoot snags in your research? | To what extent do you trust your mentor to prioritize your best interests? |
| | During your mentor meetings, generally what percentage of the time do you talk? Lead the discussion? | How long does it typically take your mentor to respond to emails? Provide feedback on grants and papers? | To what extent do you foresee a long‐term relationship with this mentor? | How excited is your mentor about your research? | To what extent does your mentor help initiate you into a community of practice? |
| | How consistent is your mentor in their directions? | What mechanisms are in place to align expectations between you and your mentor? | To what extent does your mentor actively promote you as a junior faculty member? | – | How comfortable are you managing the interpersonal aspects of your mentoring relationship? |
| | In general, how much do you look forward to your mentor meetings? How well does your mentor take constructive criticism? | – | How informed is your mentor about university guidelines for promotion and tenure (P&T)? In what ways does your mentor actively foster your independence? | – | How well does your mentor know aspects of your life outside of work? |
| | How aware is your mentor of the roles and involvement of each member of your mentoring team? | – | – | – | – |

Questions were generated from the focus group data that matched the domains identified in the instruments; their use is discussed in the Proposed Model section.

Focus group participants doubted that surveys could gather information accurate and deep enough to capture the complexities of the interpersonal relationship, or that they could do so in a time‐effective manner. In addition, because most mentors have few K scholars at any one time, it is difficult to keep responses anonymous. Table 2 summarizes some of these barriers.

Table 2.

Example barriers to evaluating the mentoring relationship, as defined by mentors, mentees, and program directors.

| Mentor | Mentee | Program director |
|---|---|---|
| Power differential | Power differential (blame falls on mentee; can't hold mentor accountable for responding to feedback; difficult to be honest; not appropriate for mentee to tell mentor how they are doing; career interdependence means negative feedback equates to shooting oneself in the head) | Unclear measurables beyond scholar's grants and publications (need to put long‐term outcomes in place) |
| Mentor attitude (data collected not scientifically valuable; only dissatisfied mentees respond; mentors will not recognize their faults; evaluation provokes defense mechanisms; evaluation does not necessarily lead to behavior change) | – | Unclear mechanism |
| – | – | Unclear effective practice for intervention (limited ability to fix if not succeeding; no authority; do not want to offend senior folks) |
| – | – | Low response rate to mentor evaluation surveys |
| Lack of anonymity with small group of scholars | Mentor attitude (mentors will not recognize their faults; mentee will not change mentor; some mentors would be offended if commentary were written down) | How to identify issues early on (prospectively identify issues/real‐time assessment; identify antecedents) |
| No adequate mechanism (formalized process does not lead to truthful responses) | – | How to get honest feedback from scholars |
| Negative feedback is difficult to give | No adequate mechanism (fear of unintended consequences; not a just‐in‐time process; difficult to relay interpersonal issues; unclear measurables; perceived as simply more paperwork) | Hard to assess relationship between scholar's outcomes and effect of mentoring relationship (scholars tend to be independent anyway and select mentors who respect that) |
| Mentee success is measured in the long term, not the short term | Negative feedback is hard to give; culture encourages positivity | Negative feedback is difficult to give (especially considering the time crunch mentors are under and that many programs offer no incentives for mentoring KL2 scholars) |
| – | No explicit expectations upon which to evaluate | – |

In a culture described as promoting positive feedback, focus group participants said that having “difficult conversations” can be challenging. The reverence given to the senior investigators working with K scholars is another cultural factor that influences the mentoring relationship. Although well earned, that reverence can make it difficult for mentees to feel free to express challenges that arise in what is, ultimately, an interdependent and interpersonal relationship in which the mentee's career is seen as very much in the mentor's hands. This exchange from a mentee focus group illustrates the point:

Male Speaker 1: Your mentor can kill your career.

Female Speaker 7: Absolutely.

Male Speaker 1: So if you just say something poorly about them, they can kill your career, point blank.

Female Speaker 7: You're done.

Male Speaker 4: I can think on multiple occasions when I would have probably liked to have made a minor comment about something that my mentor could improve and didn't. I, quite frankly, did not say anything.

Male Speaker 1: There's no way in hell.

Male Speaker 4: There's no way I would.

Male Speaker 1: Why are you shooting yourself in the head? That's painful.

Program directors described a similar lack of authority to question the mentoring provided to K scholars. This hesitation can lead programs to collect evaluation data without sharing it with the mentors themselves, except in extreme cases. Without follow‐through, scholars feel less invested in taking the process seriously. Mentees also worried that if mentors perceived evaluation as simply more paperwork, it would discourage desirable mentors from participating. Indeed, mentors gave cause for such concerns in the focus group interviews:

“I'm just kind of anti‐feedback. I hate all the things we have to do…. It has nothing to do with quality, none of those things. They're all lies and just things that we do to get through because HR says we have to do it. If the NIH comes back and says that somebody's going to have to tell me whether I'm a good mentor, I'm just like ‘I don't care about it.’ I really don't. It doesn't work into making you better.”

Other mentors acknowledged that more formal evaluation processes are bound to raise some mentors' defenses, because judgment is perceived as the intended objective and the data collected are not “scientifically valid.” This bias reinforces Huskins et al.'s recommendation to formally align mentor/mentee expectations, 7 because if expectations for the period being evaluated have not been written down with clear roles assigned, it is much more difficult to measure the health and effectiveness of the relationship later:

“One barrier might be just having a clear definition of expectations. As junior faculty, you're not quite sure what you're entitled to. You don't want to be a whiner. You don't want to be needy. You don't want to know how much is your own fault. And you're not exactly sure what the mentor is supposed to provide.”

As Huskins et al. note, some CTSA programs have introduced contracts to support the alignment process. The University of Alabama at Birmingham CTSA uses a mentoring contract as part of the selection process for its KL2 program; the contract asks: What expectations do the mentors have of the mentees? How often will you meet? What type of assistance does the mentee want from the mentor? If problems arise, how will they be resolved? 12 The Vanderbilt CTSA reports using a mentorship agreement form that includes sections on time commitment, communication, professional development, resources, and scientific productivity. 13

Even with clear expectations between mentor and scholar, the home institution must also be invested if mentor evaluation is to succeed. One scholar stated this concern:

“If their focus is to train people, then there has to be a mechanism to protect those trainees. The CTSA has their best interest in mind. We've got to make sure the mentors are up to that task and holding them accountable because that's the only people who can hold them accountable. As mentees, we can't really hold our mentors accountable. We can try, but we're too vulnerable. We [the institution] have to commit to junior faculty and we have to show that commitment by making our mentors accountable for at least their portion of it.”

Proposed Model

The question remains how to adequately address the fluid, dynamic, and unique nature of the longer‐term mentoring relationship. How can one develop a standard protocol to evaluate varying K scholar goals and needs? Will a mixture of rating scales, forced‐choice items, and open‐ended responses generate the answers needed to evaluate a relationship between two or more professionals? And what about questions addressing each scholar's specific mentoring relationship?

Keeping our focus on the ultimate aim of cultivating engaged professional relationships, our model proposes a multicomponent approach that encourages reflection and conversation around core mentoring domains. If we can agree that, together, these domains make up a well‐rounded mentoring approach (keeping in mind that the primary research mentor may not be the only person supporting the mentee in each domain), can we find ways to reflect on and discuss the effectiveness of our behaviors, supports, and interventions?

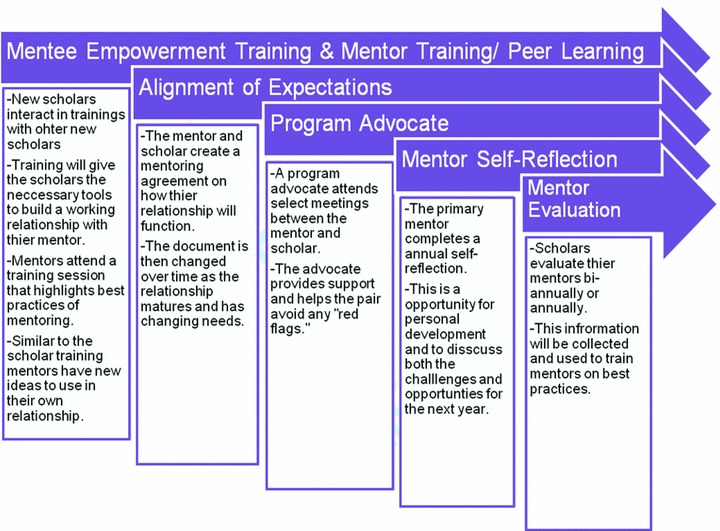

Drawing on the domains from the CTSA evaluation instruments discussed previously and on the focus group data, we propose a new evaluation model that attempts to overcome the barriers identified in the focus groups (power dynamics, mentor attitude, and setting expectations). The new model has six major parts: (1) mentee empowerment and training, (2) peer learning and mentor training, (3) aligning expectations, (4) mentee program advocate, (5) mentor self‐reflection, and (6) mentee evaluation of mentor. Figure 1 outlines each of the six components of the new model; components one and two are combined in the figure.

Figure 1.

New model to evaluate mentoring relationship.

First, we suggest that all mentoring relationships begin by setting clear expectations for the roles and responsibilities of both the scholar and the mentor(s). But how does the scholar know what can be expected? For this reason, we suggest that all scholars attend a mentee empowerment training session before meeting with their mentor. This session could take place during orientation or soon afterward. It would cover what scholars can and should expect from their mentor, best practices in mentoring, what has and has not worked well in past mentoring relationships, communication strategies, how to write expectations, how to build a successful mentoring team, and an overview of the entire evaluation process. Scholars will then have the tools they need to move forward, assess their own relationship, advocate for their needs, give productive feedback to their mentor, and bring best practices into their relationship.

We also think it is important for mentors to have an opportunity to learn about best practices. As the second component, we propose that mentors be invited to an annual session on best practices in mentoring. As in the mentee empowerment session, mentors come together and discuss with peers what has worked, what has not, and what strategies they used. Program directors would share aggregate data gathered from the mentee evaluations of mentors.

Mentor training is an important component, as the following quote from a mentor illustrates:

“…I think a lot of people don't know how to be mentors. We're not trained in how to do that. You get so far along in your career; you've got other things that are competing demands, that, kind of, make the mentoring role minimal. I don't think there is a good resource base for learning how to do this. Maybe that's a better way [than evaluation] to try and get mentors to become better.”

Conducting mentor training through a peer process will likely make it easier for mentors to make changes:

“…because I think sometimes there's something that a mentor does that I've heard about and said, you know that's a really good idea. It's obvious that was viewed pretty positively. I might try that.”

It may be much easier for mentors to change their behavior when feedback comes from a peer.

The third part of the new evaluation model is a formal agreement between the mentor and scholar. The agreements used by the Vanderbilt and Alabama CTSAs are good examples. Mode and frequency of communication, research goals, and project timelines are all appropriate components. The agreement should describe what the relationship should look like and, if there is more than one mentor, define the role of each mentor. For example, one member might mentor only in the research domain, whereas another serves as both the professional development and the psychosocial support mentor.

This step is important because it is difficult to assess a relationship when expectations have not been outlined. 7 The agreement is also a living document, subject to change as the relationship matures or adjusts. It can be used to frame meetings between the scholar and mentor to keep them on track, and as a reference at the scholar's annual progress report to help assess how well the relationship is functioning.

The fourth part of a comprehensive evaluation model is assigning a program advocate to each mentoring pair. Advocates could be past scholars, program staff, or senior mentors; each would be responsible for a number of mentoring pairs, attend biannual or quarterly meetings, and attend at least one meeting at which the entire mentoring team is present. The mentee advocate would informally assess the dynamics between the mentor and scholar, for example, the strength of their communication. Having an advocate present could help avoid potential pitfalls, such as problems in the relationship or tasks not being completed. This information would be given to the program director, who could step in before complications escalate.

The fifth part of the new evaluation model consists of the primary mentor completing a self‐reflection form before the scholar's annual progress meeting. Although the primary mentor may not be responsible for all of the domains, it is essential that they know what is happening in the secondary mentoring relationships. Using the domains presented earlier, the form asks about challenges and opportunities over the past year (see Table 3). For each, the mentor is asked to describe their role, what happened as a result, and whether any further action was taken. We suggest this form because mentors are senior professionals whose prior success might dampen their interest in modifying their mentoring behavior, and because scholars may find it hard to give honest feedback for fear of repercussions. Completing the template stimulates collective discussion during the scholar's annual report meeting and provides an opportunity to revise the mentoring agreement for the following year in a protected environment.

Table 3.

Mentor self‐reflection template.

| Domain | What were the unique challenges and opportunities from the past year? | What was your role? | What happened? What were the results? | Was there any further action? |
|---|---|---|---|---|
| Meetings and communication | | | | |
| + | | | | |
| – | | | | |
| Expectations and feedback | | | | |
| + | | | | |
| – | | | | |
| Career development | | | | |
| + | | | | |
| – | | | | |
| Research support | | | | |
| + | | | | |
| – | | | | |
| Psychosocial support | | | | |
| + | | | | |
| – | | | | |

Upcoming year

What do you want to keep doing?

What would you like to try differently with your mentee in the upcoming year?

What different resources or training would be helpful to you as the mentor?

The final part of the new model is the mentee's evaluation of their mentor. Although other parts of the model also assess the relationship, we believe it is important to have a formal way for mentees to give feedback to their mentor:

“I think we ought to expect the mentees to be constructive and honest and to really talk about good things as well as some suggestions for improvement. I think mentors deserve that kind of feedback.”

This process has three phases. The first phase takes place twice a year for first‐year scholars and annually for advanced scholars. Scholars answer a series of questions about their mentoring relationship (see Table 1), generated from each of the defined mentoring domains. In phase two, program directors collect the responses and identify themes, best practices, and new strategies.

In phase three, the aggregated results are shared at the annual mentee empowerment meeting and the annual mentor training session. Most feedback would be shared in a group setting to generate discussion; however, in some instances a more private meeting with the mentee, mentor, and program director may be appropriate.

We realize that this six‐component strategy is a significant departure from current practice. Because it may not be feasible for a program to adopt everything proposed in this article at once, one strategy would be to implement the components one at a time.

Conclusion

The primary goals of mentor and programmatic evaluation are to (1) increase learning opportunities for scholars, (2) help mentors become stronger mentors, and (3) guide scholar training activities. Prior evaluation work has focused on developing and using surveys and questionnaires. 6 This article presents a more comprehensive approach to evaluation that attempts to connect a number of important elements of a comprehensive mentoring program. Just as ongoing evaluation of clinical performance is becoming the norm in clinical medicine, the training of young investigators deserves the same level of effort and the use of new evaluation models.

Conflicts of interest

The authors have no relevant financial disclosures or other potential conflicts of interest to report.

Acknowledgments

This publication was supported by National Institutes of Health (NIH) Grant Number UL1 RR025011–03S1. Supported by grant UL1 RR025011 (MF). Its contents are solely the responsibility of the authors and do not necessarily represent the official views of the NIH.

References

- 1. Sambunjak D, Straus SE, Marusic A. Mentoring in academic medicine: a systematic review. JAMA. 2006; 296(9): 1103–1115.

- 2. Stokols D, Hall KL, Taylor BK, Moser RP. The science of team science: overview of the field and introduction to the supplement. Am J Prev Med. 2008; 35(2 Suppl): S77–S89.

- 3. Silet KA, Asquith P, Fleming M. Survey of mentoring programs for KL2 scholars. Clin Transl Sci. 2010; 3(6): 299–304.

- 4. Burnham EA, Fleming M. Selection of research mentors for K‐funded scholars. Clin Transl Sci. 2011; 4(2): 87–92.

- 5. Burnham EA, Schiro S, Fleming M. Mentoring K scholars: strategies to support research mentors. Clin Transl Sci. 2011; 4(3): 199–203.

- 6. Meagher E, Taylor LM, Probstfield J, Fleming M. Evaluating research mentors working in the area of clinical translational science: a review of the literature. Clin Transl Sci. 2011; 4(5): 353–358.

- 7. Huskins WC, Silet K, Weber‐Main AM, Begg MD, Fowler V, Hamilton J, Fleming M. Identifying and aligning expectations in a mentoring relationship. Clin Transl Sci. In press.

- 8. Center for Clinical and Translational Science Mentor Evaluation Form, University of Illinois at Chicago. https://www.ctsawiki.org/wiki/display/EdCD/Career+Development+Mentor+Program+Workgroup. Accessed June 15, 2011.

- 9. Huskins C. Personal communication, May 23, 2011; and CTSA Evaluation Assessment. https://www.ctsawiki.org/wiki/display/EdCD/Career+Development+Mentor+Program+Workgroup. Accessed June 15, 2011.

- 10. Li S. Personal communication, June 5, 2011.

- 11. Rubio D. Personal communication, June 1, 2011.

- 12. The University of Alabama at Birmingham Center for Clinical and Translational Science Mentoring Contract. https://www.ctsawiki.org/wiki/display/EdCD/Career+Development+Mentor+Program+Workgroup. Accessed June 15, 2011.

- 13. Vanderbilt University CTSA Mentoring Agreement. https://www.ctsawiki.org/wiki/display/EdCD/Career+Development+Mentor+Program+Workgroup. Accessed June 15, 2011.