Abstract

We describe a system for the automated diagnosis of diabetic retinopathy and glaucoma using fundus and optical coherence tomography (OCT) images. Automated screening can help doctors identify a patient's condition quickly and accurately. The macular abnormalities caused by diabetic retinopathy can be detected by applying morphological operations, filters and thresholds to the patient's fundus images. Glaucoma is detected early by estimating the retinal nerve fiber layer (RNFL) thickness from the patient's OCT images. RNFL thickness estimation uses an active-contour (deformable snake) algorithm to segment the anterior and posterior boundaries of the retinal nerve fiber layer. The algorithm was tested on 89 fundus images, 85 of which showed at least mild retinopathy, and on OCT images of 31 patients, 13 of whom were found to be glaucomatous. The accuracy of optic disk detection was 97.75%. These results indicate that the proposed system is accurate, reliable and robust, and practical to implement.

Keywords: Fundus image, OCT, Diabetic retinopathy, Glaucoma, RNFL, Image processing

Introduction

Diabetic retinopathy (DR) and glaucoma are two of the most common retinal disorders and are major causes of blindness. DR is a consequence of long-standing hyperglycemia, in which retinal lesions (exudates, microaneurysms and hemorrhages) develop that can lead to blindness. An estimated 210 million people worldwide have diabetes mellitus [1-3], of whom about 10-18% have or will develop DR [3-6]. Accurate and early diagnosis of DR is therefore important to prevent DR and eventual vision loss.

Glaucoma is often, but not always, associated with increased intraocular pressure. Glaucoma is becoming an increasingly important cause of blindness as the world's population ages [7,8], and it is believed to be the second leading cause of blindness globally, after cataract. Both DR and glaucoma are known to be more common in people with hyperlipidemia.

Serious efforts are being made to develop automated screening systems that can promptly detect DR and glaucoma, since early detection and diagnosis enable prompt treatment and reduce the visual impairment caused by these conditions [9-15]. Such automated diagnostic tools will be particularly useful in health camps, especially in rural areas of developing countries, where a large population suffering from these diseases goes undiagnosed. We present such an automated system, which accepts fundus images and optical coherence tomography (OCT) images as inputs, diagnoses these diseases automatically and also classifies their severity.

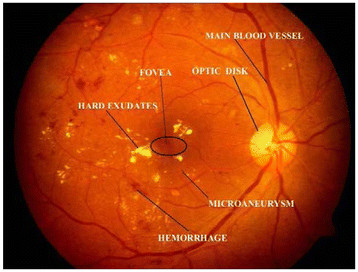

Color fundus images are used by ophthalmologists to study DR. Figure 1 shows a typical retinal image labeled with the various features of DR. Microaneurysms appear as small red dots and may lead to hemorrhages, while hard exudates appear as bright yellow lesions. The spatial distribution of exudates, microaneurysms and hemorrhages, especially in relation to the fovea, is generally used to determine the severity of DR.

Figure 1. Typical fundus retinal image.

Ravishankar et al. [16] and others [17-22] showed that blood vessels, exudates, microaneurysms and hemorrhages can be accurately detected using different image processing algorithms involving morphological operations. These algorithms first detect the major blood vessels and then use their intersection to find the approximate location of the optic disk. The detected optic disk, fovea and blood vessels are then used to extract color information for better lesion detection. However, this optic disk segmentation algorithm is rather complex and time consuming, which reduces the overall efficiency of the system [23]. In contrast, we describe a simple method that uses fundamental image processing techniques such as smoothing and filtering. For this purpose we used the previously described method of dividing the fundus image into ten regions forming fundus coordinates [24], and the presence of lesions in the different coordinates was used to determine the severity of the disease [24-28].

Optical coherence tomography (OCT) is an established medical imaging technique. It is widely used, for example, to obtain high-resolution images of the retina and the anterior segment of the eye, and it provides a straightforward method of assessing axonal integrity. Cardiologists are also developing methods that use frequency-domain OCT to image coronary arteries in order to detect vulnerable lipid-rich plaques [29,30].

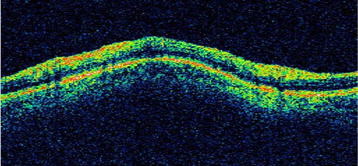

Glaucoma was previously thought to be caused by increased intraocular pressure, but it is now known that glaucoma also occurs in people with normal pressure. Glaucoma may damage the optic nerve, and damage to the retinal nerve fiber layer (RNFL) reduces its thickness. Glaucoma can therefore be diagnosed by estimating the thickness of the RNFL, which appears as the top red-green region in the OCT image shown in Figure 2.

Figure 2. Retinal nerve fibre layer in a typical OCT image.

OCT is a powerful tool for the diagnosis of glaucoma. Time-domain OCT has been superseded by the superior spectral-domain OCT, which has become a well-established technique for imaging the depth profile of various organs [31,32]. Liao et al. [33] used 2D probability density fields to model their OCT images and a level set model to outline the RNFL. They introduced a Kullback-Leibler distance to describe the difference between two density functions; this defined an active contour approach that identifies the inner and outer retinal boundaries, followed by a level set approach that identifies the retinal nerve fiber layer. Although this technique successfully determines the thickness, it additionally requires the inner and outer boundaries of the retina to be extracted before the nerve fiber layer can be identified, and it uses separate circular scans to determine the RNFL thickness. Mishra et al. [34], on the other hand, used a two-step kernel-based optimization scheme to identify the approximate locations of the individual layers, which are then refined to obtain accurate results; however, they tested their algorithms only on retinal images of rodents.
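To make the Kullback-Leibler distance concrete, the following is a minimal sketch of the quantity itself, estimated from two intensity histograms. It is not Liao et al.'s level-set formulation; the function name `kl_divergence`, the bin count, the [0, 1] intensity range and the `eps` smoothing are assumptions chosen for illustration. Note that this divergence is not symmetric; a symmetric distance can be formed as D(P‖Q) + D(Q‖P).

```python
import numpy as np


def kl_divergence(p_samples, q_samples, bins=64, value_range=(0.0, 1.0), eps=1e-10):
    """Kullback-Leibler divergence D(P || Q) between two intensity histograms.

    Hypothetical helper: densities are estimated with fixed-width histograms,
    and eps avoids log(0) and division by zero in empty bins.
    """
    p, _ = np.histogram(p_samples, bins=bins, range=value_range)
    q, _ = np.histogram(q_samples, bins=bins, range=value_range)
    p = p.astype(float) + eps
    q = q.astype(float) + eps
    p /= p.sum()          # normalize counts to probabilities
    q /= q.sum()
    return float(np.sum(p * np.log(p / q)))
```

Two samples drawn from the same region give a divergence of zero, while samples from regions with well-separated intensity distributions (for example, inside and outside a retinal layer) give a large value, which is what lets such a distance drive a contour towards a boundary.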

Speckle noise is inherently present in OCT images and in other medical images such as ultrasound. Because speckle noise is multiplicative, traditional Gaussian and Wiener filtering, although very robust against additive noise, do not help. Median filtering is a well-established technique for de-noising images corrupted with speckle noise; however, for images with a high degree of speckle, median filtering fails to remove the noise completely. Chan et al. [35] used an iterative gradient descent algorithm, based on progressive minimization of energy, to de-noise speckle-corrupted images, and their technique is used in B-mode ultrasound imaging. Wong et al. [36] suggested a method based on a general Bayesian least-squares estimate of the noise-free image, computed with a conditional posterior sampling approach, which was found to be effective for rodent retinal images. Perona and Malik [37] proposed an anisotropic noise suppression technique that deals with this type of noise while also preserving edges, which is of vital importance in medical image processing, where the edges and contours of tissues and organs need to be detected. The smoothing is done locally rather than globally in order to accurately differentiate between the homogeneous regions of the ganglions and the boundaries of the RNFL.
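The practical meaning of "multiplicative" can be illustrated with a short simulation. The sketch below assumes the common unit-mean gamma speckle model (the text does not specify a noise model, and `add_speckle` and `looks` are hypothetical names): the noise spread grows in proportion to the signal, which is why filters designed for additive noise struggle, whereas after a log transform the spread becomes the same in dark and bright regions.

```python
import numpy as np


def add_speckle(clean, looks=4, rng=None):
    """Multiply an image by unit-mean gamma noise, a common speckle model.

    The noise has mean 1 and variance 1/looks, so the standard deviation
    of the output is proportional to the local intensity.
    """
    rng = np.random.default_rng() if rng is None else rng
    noise = rng.gamma(shape=looks, scale=1.0 / looks, size=clean.shape)
    return clean * noise


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    dark = add_speckle(np.full((200, 200), 0.2), rng=rng)
    bright = add_speckle(np.full((200, 200), 0.8), rng=rng)
    # Spread scales with the signal: this ratio is close to 0.8 / 0.2 = 4.
    print(bright.std() / dark.std())
    # After a log transform the noise is additive: this ratio is close to 1.
    print(np.log(bright).std() / np.log(dark).std())
```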

Mujat et al. [38] used an active contour approach to detect the retinal boundaries. Their algorithm uses a multi-resolution deformable snake based on the work of Kass et al. [39]. A snake is a deformable contour that evolves automatically and settles on the boundary to be detected. In the present study, we used the anisotropic noise suppression method to deal with the speckle noise and the greedy snake algorithm [40-43], which is easier to implement in the discrete domain.

Materials and methods

A. DR Detection

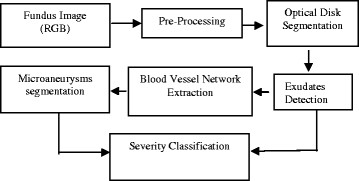

The methodology followed for feature extraction and severity classification in DR detection is shown in Figure 3.

Figure 3. Flow chart for the automated diagnosis of diabetic retinopathy using fundus images.

1) Pre-processing: This step equalizes the illumination and normalizes the background using adaptive histogram equalization.
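A minimal sketch of contrast-limited adaptive histogram equalization, assuming a single channel scaled to [0, 1]. The function name `tile_equalize`, the 8 × 8 tile grid and the clip limit are hypothetical choices, not values from the text. For simplicity each tile is equalized independently, without the bilinear blending between tiles used by full CLAHE implementations such as `skimage.exposure.equalize_adapthist`, so tile seams may be visible.

```python
import numpy as np


def tile_equalize(channel, tiles=(8, 8), clip_limit=0.01, nbins=256):
    """Contrast-limited histogram equalization applied independently per tile.

    channel: 2-D float array with values in [0, 1] (e.g. the green channel).
    Simplification: no interpolation between neighbouring tiles.
    """
    out = np.empty(channel.shape, dtype=float)
    row_groups = np.array_split(np.arange(channel.shape[0]), tiles[0])
    col_groups = np.array_split(np.arange(channel.shape[1]), tiles[1])
    for r in row_groups:
        for c in col_groups:
            block = channel[np.ix_(r, c)]
            hist, _ = np.histogram(block, bins=nbins, range=(0.0, 1.0))
            # Clip tall bins and spread the excess evenly over all bins.
            limit = max(1, int(clip_limit * block.size))
            excess = np.maximum(hist - limit, 0).sum()
            hist = np.minimum(hist, limit) + excess / nbins
            cdf = np.cumsum(hist) / np.sum(hist)
            # Map each pixel through the cumulative histogram of its own tile.
            bins = np.clip((block * nbins).astype(int), 0, nbins - 1)
            out[np.ix_(r, c)] = cdf[bins]
    return out
```

Clipping the histogram limits how much any one intensity range is stretched, which keeps flat background areas from amplifying noise while still raising the contrast of dim regions.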

2) Optic Disk Segmentation and Removal: The optic disk detection algorithm uses the property that the optic disk is the brightest region of the fundus image, so intensity is the criterion used to detect it. Accordingly, the input RGB image is converted to the HSI color space and the I-plane is taken for further processing. Low-pass filtering is applied to the I-plane to smooth the edges, and a threshold is then applied. The threshold is chosen just below the maximum intensity of the fundus image (Imax − 0.02, based on our data set of 89 images). Because more than one region may survive the threshold, a maximum-area criterion is used to remove other artifacts and choose the final optic disk candidate. The region around this candidate is then segmented, and this region is thresholded again to detect the optic disk with its proper boundary.
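The candidate-selection part of this step can be sketched as follows. The sketch assumes an RGB image scaled to [0, 1], so that the Imax − 0.02 offset applies on that scale, and it uses a Gaussian filter as the low-pass filter; the filter type, `blur_sigma` and the name `detect_optic_disk` are assumptions. The final re-thresholding of the surrounding region to refine the disk boundary is omitted.

```python
import numpy as np
from scipy import ndimage


def detect_optic_disk(rgb, offset=0.02, blur_sigma=5.0):
    """Boolean mask of the optic disk candidate in an RGB fundus image.

    rgb: float array of shape (H, W, 3) scaled to [0, 1].
    """
    intensity = rgb.mean(axis=2)                  # HSI I-plane: (R + G + B) / 3
    smooth = ndimage.gaussian_filter(intensity, sigma=blur_sigma)  # low-pass filter
    candidates = smooth > smooth.max() - offset   # threshold just below I_max
    labels, n = ndimage.label(candidates)
    if n == 0:
        return np.zeros(intensity.shape, dtype=bool)
    # Maximum-area criterion: keep the largest connected bright region.
    areas = ndimage.sum(candidates, labels, index=np.arange(1, n + 1))
    return labels == (np.argmax(areas) + 1)
```

Smoothing before thresholding matters here: small bright artifacts lose most of their peak intensity after low-pass filtering, so they fall below the Imax − 0.02 threshold while the large optic disk does not.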

3) Blood Vessel Extraction: Blood vessels are extracted using morphological closing, as described previously [16]. A closing operation is performed on the green channel image using structuring elements (filters) of two different sizes. Subtracting the closed images at the two scales (with S1 and S2 the sizes of the structuring elements B1 and B2) yields the blood vessel segments C of the green channel image. This image is thresholded, and artifacts are removed by eliminating small areas to obtain the final blood vessel structure.
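A minimal sketch of the two-scale closing difference, assuming a green channel scaled to [0, 1] and square flat structuring elements. The sizes `s1` and `s2`, the threshold and `min_area` are hypothetical values, and `extract_vessels` is an illustrative name. Closing fills dark structures narrower than the structuring element, so a dark vessel wider than S1 but narrower than S2 survives the subtraction.

```python
import numpy as np
from scipy import ndimage


def extract_vessels(green, s1=3, s2=11, threshold=0.05, min_area=50):
    """Segment dark vessels from the difference of grey-scale closings."""
    closed_small = ndimage.grey_closing(green, size=(s1, s1))
    closed_large = ndimage.grey_closing(green, size=(s2, s2))
    # Dark structures filled at scale s2 but not at s1 give a positive response.
    response = closed_large - closed_small
    mask = response > threshold
    labels, n = ndimage.label(mask)
    if n == 0:
        return mask
    # Remove small connected components (noise and artifacts).
    areas = ndimage.sum(mask, labels, index=np.arange(1, n + 1))
    keep = np.zeros(n + 1, dtype=bool)
    keep[1:] = areas >= min_area
    return keep[labels]
```

Tiny dark specks narrower than S1 are filled at both scales and therefore cancel in the subtraction, which is what separates vessels from point noise.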

4) Exudate Detection: Morphological dilation is used to detect exudates [16]. Grey-scale dilation enlarges brighter regions and closes small dark regions. Dilation is performed on the green channel at two different scales, S3 and S4, both greater than the scale S2 used for vessel extraction, so the blood vessels do not appear in either dilated result.

Exudates, being bright with sharp edges, respond strongly to dilation. Subtracting the results at the two scales gives the exudate boundaries P. The image P is thresholded to obtain the binary image Pt, and morphological filling of Pt yields the candidate exudate regions, which may include the optic disk. Green-channel intensity is then used to detect the exudates within these regions. Because the optic disk can also be detected as an exudate, the optic disk region is removed to obtain the final exudates.
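The exudate steps can be sketched as below, assuming a green channel in [0, 1] and an optic disk mask from the previous step. The scales `s3` and `s4`, both thresholds and the name `detect_exudates` are hypothetical, and the `min_intensity` test is our interpretation of the green-channel intensity step. The difference of dilations forms a closed ring around each bright lesion, and hole filling turns that ring into a solid candidate region.

```python
import numpy as np
from scipy import ndimage


def detect_exudates(green, od_mask, s3=13, s4=17, threshold=0.1, min_intensity=0.65):
    """Exudate mask from the difference of grey-scale dilations at two scales."""
    dil_small = ndimage.grey_dilation(green, size=(s3, s3))
    dil_large = ndimage.grey_dilation(green, size=(s4, s4))
    boundaries = dil_large - dil_small        # P: ring around bright, sharp lesions
    pt = boundaries > threshold               # binary image Pt
    candidates = ndimage.binary_fill_holes(pt)  # fill the rings into solid regions
    # Keep bright pixels inside the candidates and drop the optic disk region.
    return candidates & (green > min_intensity) & ~od_mask
```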

5) Fovea Detection: The fovea is a dark region located at the center of the macula. It commonly appears in microaneurysm and hemorrhage detection results, much as the optic disk does in exudate detection results. The fovea is detected using the location of the optic disk and the curvature of the main blood vessel, which is taken as the thickest and largest blood vessel emanating from the optic disk. The entire course of the main blood vessel is traced (from the image of the thicker vessels) by following its continuity from the optic disk, and the vessel is modeled as a parabola. The vertex of the parabola is taken as the pixel on the main blood vessel that is closest to the center of the optic disk circular mask. The fovea lies approximately 2 to 3 optic disk diameters (ODD) from the vertex, along the main axis of the modeled parabola, and is taken as the darkest pixel in this region. The fovea region is taken to be within 1 ODD of the detected fovea location.
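A minimal sketch of the parabola-based fovea search. It assumes that the main vessel opens horizontally, so that column is fitted as a quadratic function of row and the parabola's axis runs parallel to the image rows; it also assumes a search band of ±0.5 ODD across the axis. These choices, and the names `locate_fovea` and `half_width`, are hypothetical. As in the text, the vertex is the vessel pixel closest to the optic disk centre.

```python
import numpy as np


def locate_fovea(green, vessel_rc, od_center, odd, half_width=0.5):
    """Darkest pixel 2-3 ODD from the vertex along the fitted parabola's axis.

    vessel_rc: (N, 2) array of (row, col) pixels on the main blood vessel.
    """
    rows = vessel_rc[:, 0].astype(float)
    cols = vessel_rc[:, 1].astype(float)
    a, _, _ = np.polyfit(rows, cols, deg=2)   # col = a*row^2 + b*row + c
    # Vertex: the vessel pixel closest to the optic disk centre.
    d2 = (rows - od_center[0]) ** 2 + (cols - od_center[1]) ** 2
    k = np.argmin(d2)
    v_row, v_col = rows[k], cols[k]
    direction = np.sign(a)                    # parabola opens towards +col if a > 0
    rr, cc = np.mgrid[:green.shape[0], :green.shape[1]]
    along = (cc - v_col) * direction          # distance along the (horizontal) axis
    across = np.abs(rr - v_row)
    region = (along >= 2 * odd) & (along <= 3 * odd) & (across <= half_width * odd)
    if not region.any():
        raise ValueError("search region falls outside the image")
    masked = np.where(region, green, np.inf)  # ignore pixels outside the region
    return np.unravel_index(np.argmin(masked), green.shape)
```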

6) Microaneurysm and Hemorrhage (MAHM) Detection: Microaneurysms are the hardest lesions to detect in retinopathy images. Hemorrhages and microaneurysms are treated as holes (i.e., small dark blobs surrounded by brighter regions), and morphological filling is performed on the green channel to identify them. The unfilled green channel image is subtracted from the filled one, and the result is thresholded in intensity to yield an image (R) containing the microaneurysm patches. The threshold is chosen based on the mean intensity of the retinal image in the red channel. Blood vessels can also appear as noise in microaneurysm and hemorrhage detection because their color and contrast are similar to those of hemorrhages, so the blood vessel coordinates are removed to obtain the final MAHM candidates.
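Grey-scale hole filling is usually implemented as morphological reconstruction by erosion, seeded from the image border; that is the technique sketched below. The threshold factor `k` applied to the mean red-channel intensity is a hypothetical parameter, and `fill_holes_grey` and `detect_mahm` are illustrative names. The plain Python loop is written for clarity; `skimage.morphology.reconstruction(seed, mask, method='erosion')` performs the same reconstruction more efficiently.

```python
import numpy as np
from scipy import ndimage


def fill_holes_grey(img):
    """Grey-scale hole filling by morphological reconstruction by erosion."""
    seed = np.full_like(img, img.max())
    # The seed equals the image on the border and the maximum elsewhere.
    seed[0, :], seed[-1, :] = img[0, :], img[-1, :]
    seed[:, 0], seed[:, -1] = img[:, 0], img[:, -1]
    while True:
        nxt = np.maximum(ndimage.grey_erosion(seed, size=(3, 3)), img)
        if np.array_equal(nxt, seed):
            return nxt
        seed = nxt


def detect_mahm(rgb, vessel_mask, k=0.1):
    """MAHM candidates: filled minus unfilled green channel, vessels removed."""
    green = rgb[..., 1].astype(float)
    filled = fill_holes_grey(green)
    threshold = k * rgb[..., 0].mean()     # scaled mean red-channel intensity
    r = (filled - green) > threshold       # image R: dark blobs enclosed by brighter tissue
    return r & ~vessel_mask                # drop blood-vessel pixels
```

Dark regions connected to the image border are reached by the border seed and are therefore not filled, so only enclosed dark blobs produce a response.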

7) Severity Level Classification: The distribution of the lesions (exudates and MAHM) about the fovea can be used to predict the severity of diabetic macular edema. As suggested previously [17-23], we divided the fundus image into ten sub-regions about the fovea. Lesions in the macular region are more dangerous, and require more immediate medical attention, than those farther away. As proposed previously [27,28], DR is divided into five categories: none, mild, moderate, severe and proliferative, and our system uses these criteria to classify each image into one of these categories. For the automated diagnosis of diabetic retinopathy using fundus images, written informed consent was obtained from the patient for publication of this report and any accompanying images.
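The area features on which the grading relies (total lesion area and lesion area in the fovea region, measured in pixels) can be computed from the binary lesion masks, as in the sketch below. It uses the fovea region defined above (within 1 ODD of the fovea). The text does not give the numeric cut-offs between severity grades or the exact geometry of the ten sub-regions, so the sketch stops at the features; `lesion_features` is a hypothetical name.

```python
import numpy as np


def lesion_features(exudates, mahm, fovea_rc, odd):
    """Lesion areas (pixels) overall and within one ODD of the fovea."""
    rr, cc = np.mgrid[:exudates.shape[0], :exudates.shape[1]]
    fovea_region = (rr - fovea_rc[0]) ** 2 + (cc - fovea_rc[1]) ** 2 <= odd ** 2
    return {
        "exudate_area": int(exudates.sum()),
        "mahm_area": int(mahm.sum()),
        "exudate_area_fovea": int((exudates & fovea_region).sum()),
        "mahm_area_fovea": int((mahm & fovea_region).sum()),
    }
```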

B. Glaucoma Diagnosis

The estimation of the retinal nerve fiber layer (RNFL) thickness can be broken down into three parts: estimating the anterior boundary (top of the RNFL), estimating the posterior boundary (bottom of the RNFL), and computing the distance between the two boundaries. The algorithm employed for this purpose follows previously described methods [38-43].

Noise removal is imperative prior to boundary detection. Any imaging technique based on the detection of coherent waves is affected by speckle noise, and since OCT relies on interferometric detection of coherent optical beams, OCT images contain speckle noise. Speckle noise is multiplicative, which means that the noise is intrinsically combined with the image information rather than simply added to it. The major challenge in reducing speckle is to avoid losing relevant details such as edges, so noise reduction algorithms with edge preservation are the preferred choice. These not only improve the visual appearance of the image but can also improve the performance of the subsequent boundary detection algorithm. In the present study, we employed the anisotropic noise suppression technique [37,38], which smooths the image while preserving edges. The next major step is the estimation of the anterior and posterior boundaries using the deformable snake algorithm [39-43], an iterative process that moves the contour points towards locations of maximum gradient, thereby detecting the boundary.
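The greedy snake idea can be sketched for a layer boundary that has one point per image column. This is a simplified greedy snake in the spirit of Williams and Shah, not the exact formulation of [40-43]: each point moves vertically within a small window to the position that minimizes a weighted sum of a continuity term, a curvature term and a negative normalized edge strength, and the iteration stops when no point moves. The weights, window size and the name `greedy_snake` are hypothetical.

```python
import numpy as np


def greedy_snake(edge_strength, init_rows, alpha=0.5, beta=0.5, gamma=2.0,
                 search=3, max_iter=200):
    """Greedy active contour with one boundary point per image column.

    edge_strength: 2-D array (e.g. gradient magnitude); larger = stronger edge.
    init_rows: initial boundary row in each column.
    """
    rows = np.asarray(init_rows, dtype=int).copy()
    h, w = edge_strength.shape
    e = edge_strength / (edge_strength.max() + 1e-12)   # normalize to [0, 1]
    for _ in range(max_iter):
        moved = 0
        for j in range(w):
            cand = np.arange(max(0, rows[j] - search), min(h - 1, rows[j] + search) + 1)
            prev_r = rows[j - 1] if j > 0 else rows[j]
            next_r = rows[j + 1] if j < w - 1 else rows[j]
            # Continuity: penalize jumps relative to both neighbours.
            continuity = (cand - prev_r) ** 2 + (cand - next_r) ** 2
            # Curvature: discrete second difference along the contour.
            curvature = (prev_r - 2 * cand + next_r) ** 2
            energy = (alpha * continuity / (continuity.max() + 1e-12)
                      + beta * curvature / (curvature.max() + 1e-12)
                      - gamma * e[cand, j])
            best = cand[np.argmin(energy)]
            if best != rows[j]:
                rows[j] = best
                moved += 1
        if moved == 0:   # converged: no point changed position in this pass
            break
    return rows
```

Normalizing each term within the search window, as Williams and Shah do, keeps the weights comparable; the greedy update is easy to implement in the discrete domain because it only compares a handful of candidate positions per point.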

1) Anterior Boundary Estimation

Prior to estimating the anterior boundary, the image is smoothed using a 10 × 10 Gaussian kernel with a standard deviation of 4 and then filtered with a 3 × 3 median filter, which is effective against speckle noise. The next step is to find an initial estimate of the anterior layer, which then evolves according to the snake algorithm [39-43]. The initial estimate is found by binarizing the magnitude of the image gradient and taking the first white pixel from the top in each column. However, there are sometimes holes in the anterior boundary, and the first white pixel identified may not lie on the anterior layer, so some spurious white pixels must be removed. This is done by removing white regions with an area of less than 158 pixels (0.07% of the total image size) [38] and any connected region shorter than 25 pixels. These two morphological operations ensure that the remaining white pixels belong only to the anterior boundary. Finally, the holes in the anterior boundary are filled using cubic polynomial curve fitting: a cubic polynomial is fitted to the points that lie on the anterior boundary and is then evaluated to supply the missing boundary pixel in every column.
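A minimal sketch of the initial anterior-boundary estimate, before snake refinement. The area (158 pixels) and length (25 pixels) limits come from the text; the gradient threshold and the name `anterior_boundary` are hypothetical, and "length" is interpreted as the horizontal extent of a region. SciPy's Gaussian kernels have odd size, so `truncate=1.25` (an 11 × 11 kernel at σ = 4) only approximates the 10 × 10 kernel described. Because the estimate is the first white pixel, it sits a few rows above the true edge; that is the offset the snake is meant to correct.

```python
import numpy as np
from scipy import ndimage


def anterior_boundary(oct_img, grad_threshold=0.3, min_area=158, min_length=25):
    """Initial anterior boundary row for each column of an OCT B-scan."""
    smooth = ndimage.gaussian_filter(oct_img.astype(float), sigma=4, truncate=1.25)
    smooth = ndimage.median_filter(smooth, size=3)     # 3 x 3 median filter
    gy, gx = np.gradient(smooth)
    mag = np.hypot(gx, gy)
    binary = mag / (mag.max() + 1e-12) > grad_threshold
    # Remove regions that are too small in area or too short horizontally.
    labels, n = ndimage.label(binary)
    keep = np.zeros(n + 1, dtype=bool)
    for idx, sl in enumerate(ndimage.find_objects(labels), start=1):
        width = sl[1].stop - sl[1].start
        keep[idx] = (labels[sl] == idx).sum() >= min_area and width >= min_length
    binary = keep[labels]
    has_edge = binary.any(axis=0)
    if has_edge.sum() < 4:
        raise ValueError("too few boundary columns for a cubic fit")
    first = np.argmax(binary, axis=0).astype(float)    # first white pixel from the top
    cols = np.arange(binary.shape[1])
    # Fill holes: fit a cubic to the known points and evaluate it where missing.
    coeffs = np.polyfit(cols[has_edge], first[has_edge], deg=3)
    first[~has_edge] = np.polyval(coeffs, cols[~has_edge])
    return np.round(first).astype(int)
```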

2) Posterior Boundary Estimation: Posterior boundary estimation requires a few more pre-processing steps. First, everything above the anterior boundary is removed. Next, a noise removal technique is applied so that a more accurate estimate of the posterior boundary can be obtained. The joint anisotropic noise suppression algorithm with edge preservation is implemented as suggested by Perona and Malik [37].

The anisotropic diffusion equation of Perona and Malik [37] evolves the image as ∂I/∂t = div(C∇I), where C is the conduction coefficient; this expands to C times the Laplacian of the image plus the inner product of the gradients of C and of the image. The output is an image that is smoothed everywhere except at the boundaries. In the discrete domain, the equation also includes a time step λ, by analogy with the heat diffusion process. It is implemented in the discrete domain as follows:

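$$I_{i,j}^{t+1} = I_{i,j}^{t} + \lambda\left[C_N \nabla_N I + C_S \nabla_S I + C_E \nabla_E I + C_W \nabla_W I + C_{NE} \nabla_{NE} I + C_{NW} \nabla_{NW} I + C_{SE} \nabla_{SE} I + C_{SW} \nabla_{SW} I\right]_{i,j}^{t} \tag{1}$$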

The subscripts N, S, E, W, NE, NW, SE and SW denote the neighboring pixels. Although the original work of Perona and Malik [37] uses only four neighbors, we found the use of eight neighbors to be more effective. The value of λ can be chosen between 0 and 0.25 (the stability range given by Perona and Malik for four neighbors). Here the symbol ∇ denotes the nearest-neighbor difference in the indicated direction, which is calculated in the discrete domain as follows:

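$$\nabla_N I_{i,j} = I_{i-1,j} - I_{i,j} \tag{2}$$

$$\nabla_S I_{i,j} = I_{i+1,j} - I_{i,j} \tag{3}$$

$$\nabla_E I_{i,j} = I_{i,j+1} - I_{i,j} \tag{4}$$

$$\nabla_W I_{i,j} = I_{i,j-1} - I_{i,j} \tag{5}$$

$$\nabla_{NE} I_{i,j} = I_{i-1,j+1} - I_{i,j} \tag{6}$$

$$\nabla_{NW} I_{i,j} = I_{i-1,j-1} - I_{i,j} \tag{7}$$

$$\nabla_{SE} I_{i,j} = I_{i+1,j+1} - I_{i,j} \tag{8}$$

$$\nabla_{SW} I_{i,j} = I_{i+1,j-1} - I_{i,j} \tag{9}$$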

The value of the conduction coefficient C is updated after every iteration, as a function of the image intensity gradient.

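$$C_{N,\,i,j}^{t} = g\left(\left|\nabla_N I_{i,j}^{t}\right|\right) \tag{10}$$

$$C_{S,\,i,j}^{t} = g\left(\left|\nabla_S I_{i,j}^{t}\right|\right) \tag{11}$$

$$C_{E,\,i,j}^{t} = g\left(\left|\nabla_E I_{i,j}^{t}\right|\right) \tag{12}$$

$$C_{W,\,i,j}^{t} = g\left(\left|\nabla_W I_{i,j}^{t}\right|\right) \tag{13}$$

$$C_{NE,\,i,j}^{t} = g\left(\left|\nabla_{NE} I_{i,j}^{t}\right|\right) \tag{14}$$

$$C_{NW,\,i,j}^{t} = g\left(\left|\nabla_{NW} I_{i,j}^{t}\right|\right) \tag{15}$$

$$C_{SE,\,i,j}^{t} = g\left(\left|\nabla_{SE} I_{i,j}^{t}\right|\right) \tag{16}$$

$$C_{SW,\,i,j}^{t} = g\left(\left|\nabla_{SW} I_{i,j}^{t}\right|\right) \tag{17}$$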

Perona and Malik [37] proposed two choices for the function g. The first preserves high-contrast edges over low-contrast ones, while the second preserves wide regions over smaller ones. Since our aim is to detect the boundary, we chose the first function, which is repeated below for convenience:

$$g(\nabla I) = \exp\left(-\left(\frac{\lVert \nabla I \rVert}{K}\right)^{2}\right) \tag{18}$$
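Equations (1)–(18) translate into a compact NumPy loop, sketched below under stated assumptions: intensities are scaled to [0, 1], borders are replicated (zero flux), and the parameters `kappa` (the constant K), `lam` (λ) and `n_iter` are hypothetical values. With eight unweighted neighbors and g ≤ 1, choosing λ ≤ 1/8 keeps every update a convex combination of a pixel and its neighbors; the 0.25 bound applies to the original four-neighbor scheme. Some eight-neighbor implementations also weight the diagonal terms by 1/2, which is not done here.

```python
import numpy as np

# Neighbour offsets (row, col) in the order N, S, E, W, NE, NW, SE, SW.
OFFSETS = [(-1, 0), (1, 0), (0, 1), (0, -1), (-1, 1), (-1, -1), (1, 1), (1, -1)]


def anisotropic_diffusion(img, n_iter=20, kappa=0.1, lam=0.12):
    """Eight-neighbour Perona-Malik diffusion with g(x) = exp(-(x / kappa)^2)."""
    out = img.astype(float).copy()
    h, w = out.shape
    for _ in range(n_iter):
        padded = np.pad(out, 1, mode="edge")        # replicate borders (zero flux)
        update = np.zeros_like(out)
        for di, dj in OFFSETS:
            neighbour = padded[1 + di:1 + di + h, 1 + dj:1 + dj + w]
            diff = neighbour - out                   # nearest-neighbour difference, Eqs. (2)-(9)
            c = np.exp(-(diff / kappa) ** 2)         # conduction coefficient, Eqs. (10)-(18)
            update += c * diff
        out += lam * update                          # Eq. (1)
    return out
```

Across a strong edge the difference is much larger than K, so the conduction coefficient is close to zero and almost no smoothing crosses the boundary, while small speckle differences inside a layer are diffused away.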

The constant K is chosen statistically to give the perceptually best results. Once the noise suppression algorithm has been applied, extracting the posterior boundary becomes fairly simple, because the interior of the RNFL is smoothed and the posterior boundary becomes much more distinct.

An edge field is calculated as the magnitude of the gradient of the smoothed image produced by the joint anisotropic noise suppression algorithm. The edge field is then normalized and binarized using a suitable threshold, which is also set statistically. Unwanted white regions with an area of less than 100 pixels are then removed. Next, the regions below the nerve fiber layer, which consist mainly of the retinal pigment epithelium (RPE), are eliminated, and the anterior boundary is removed completely. Some disconnected fragments of the RPE or the anterior boundary may still remain; these are removed by discarding regions shorter than 25 pixels and regions with an area of less than 70 pixels [38]. The posterior boundary is then estimated as the first white pixel from the top in each column, and the extracted points are passed through a 50-point median filter to remove unwanted spikes. With both the anterior and the posterior boundaries identified, the thickness is determined as the pixel difference between them. The physical size of each pixel depends on the OCT acquisition system; in our case it is 6 μm. The thickness is calculated at each point along the two boundaries and then averaged over the width of the image.
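The posterior-boundary steps and the thickness computation can be sketched as follows, taking the diffusion-smoothed image and the anterior boundary as inputs. The area and length limits (100, 70 and 25 pixels), the 50-point median filter and the 6 μm pixel size come from the text. The edge threshold and the `anterior_margin`/`max_depth` band used to discard anterior-boundary and RPE remnants are assumptions, because the text does not state how those regions are located; `posterior_boundary`, `_remove_small` and `rnfl_thickness_um` are hypothetical names.

```python
import numpy as np
from scipy import ndimage


def _remove_small(mask, min_area, min_length=0):
    """Drop connected regions with too few pixels or too narrow a column extent."""
    labels, _ = ndimage.label(mask)
    keep = np.zeros(labels.max() + 1, dtype=bool)
    for idx, sl in enumerate(ndimage.find_objects(labels), start=1):
        width = sl[1].stop - sl[1].start
        keep[idx] = (labels[sl] == idx).sum() >= min_area and width >= min_length
    return keep[labels]


def posterior_boundary(smoothed, anterior, edge_threshold=0.3,
                       anterior_margin=8, max_depth=60, median_len=50):
    """Posterior RNFL boundary (one row per column) from the smoothed image."""
    gy, gx = np.gradient(smoothed.astype(float))
    mag = np.hypot(gx, gy)
    edges = mag / (mag.max() + 1e-12) > edge_threshold   # normalized, binarized edge field
    edges = _remove_small(edges, min_area=100)
    rows = np.arange(smoothed.shape[0])[:, None]
    ant = np.asarray(anterior)[None, :]
    # Hypothetical band: drop the anterior boundary itself and deeper RPE edges.
    edges &= (rows > ant + anterior_margin) & (rows <= ant + max_depth)
    edges = _remove_small(edges, min_area=70, min_length=25)
    has_edge = edges.any(axis=0)
    if not has_edge.any():
        raise ValueError("no posterior boundary candidates found")
    cols = np.arange(smoothed.shape[1])
    post = np.argmax(edges, axis=0).astype(float)        # first white pixel from the top
    # Columns without an edge are linearly interpolated from their neighbours.
    post[~has_edge] = np.interp(cols[~has_edge], cols[has_edge], post[has_edge])
    # A 50-point median filter along the boundary removes isolated spikes.
    return ndimage.median_filter(post, size=median_len, mode="nearest")


def rnfl_thickness_um(anterior, posterior, pixel_um=6.0):
    """Mean RNFL thickness in micrometres (6 um per pixel in this study)."""
    return float(np.mean(np.asarray(posterior) - np.asarray(anterior)) * pixel_um)
```

For example, a boundary pair 27 pixels apart in every column gives 27 × 6 = 162 μm.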
For the automated glaucoma diagnosis studies using OCT images, written informed consent was obtained from the patient for publication of this report and any accompanying images.

Results and discussion

A. DR Diagnosis

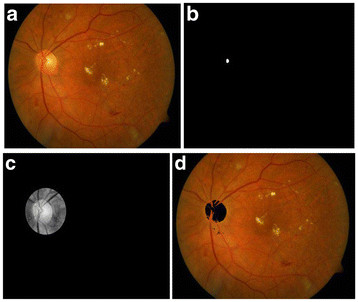

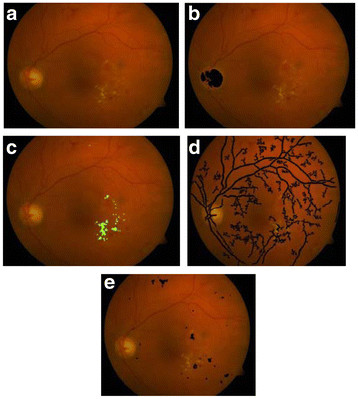

The results were obtained for eighty-nine (89) fundus images [44], which were used for the detection and diagnosis of DR. The individual segmentation modules were developed in MATLAB and later integrated into standalone application software. Microaneurysms, hard exudates, cotton wool spots, the optic disk and the fovea were successfully segmented, and the results show a high degree of accuracy, independent of the different coordinates of the retinal angiogram datasets. Some of the results obtained for the diagnosis of DR are shown in Figures 4, 5, 6, 7 and 8. The total area occupied by exudates and by microaneurysms, and the area they occupy in the fovea region, are calculated from the number of pixels, and the severity level is determined as none, mild, moderate or severe. Figure 9 shows the results of DR diagnosis for a typical patient, based on the fundus image.

Figure 4. Optic disk detection process. (a) Input fundus image, (b) optic disk localization, (c) optic disk region, (d) optic disk detected.

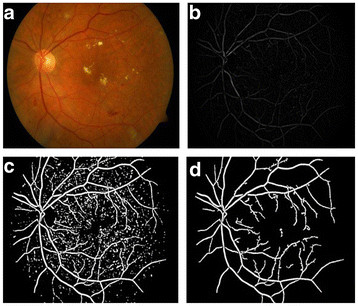

Figure 5. Blood vessel detection process. (a) Input fundus image, (b) fundus gradient image, (c) thresholded fundus gradient image, (d) blood vessels detected.

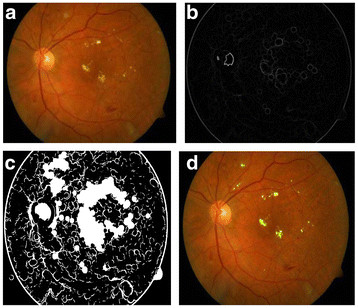

Figure 6. (a) Input fundus image, (b) dilation gradient image, (c) thresholded and filled image, (d) exudates detected.

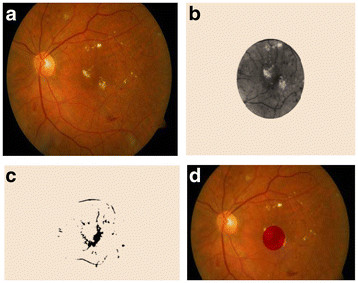

Figure 7. (a) Input fundus image, (b) possible fovea region, (c) thresholded region, (d) fovea detected.

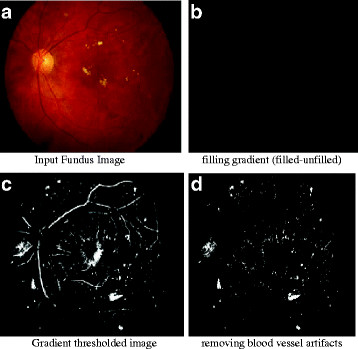

Figure 8. (a) Input fundus image, (b) filling gradient (filled minus unfilled), (c) thresholded gradient image, (d) blood vessel artifacts removed.

Figure 9. The total exudate area for this patient is 5196 pixels, the total MAHM area is 3991 pixels, and there are no exudate or MAHM pixels in the fovea; the DR condition is therefore classified as moderate. (a) Input RGB fundus image, (b) optic disk detected, (c) exudates detected, (d) blood vessel segmentation, (e) MAHM detected.

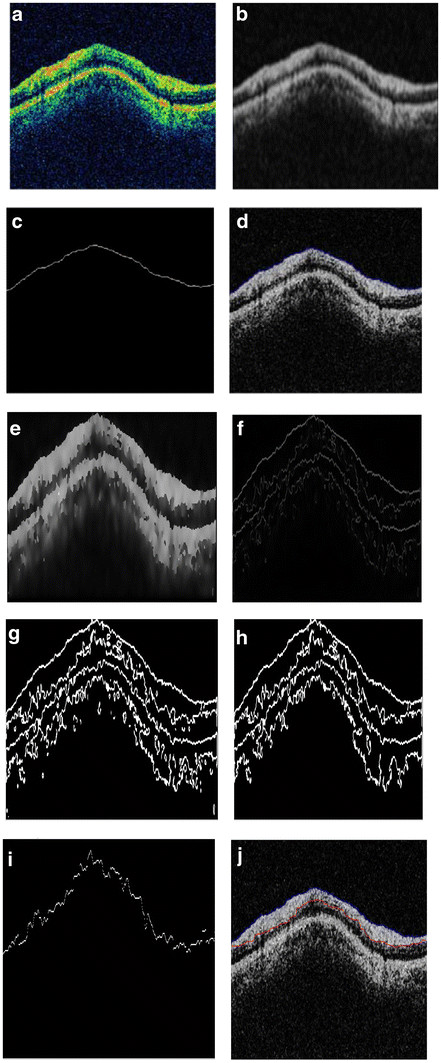

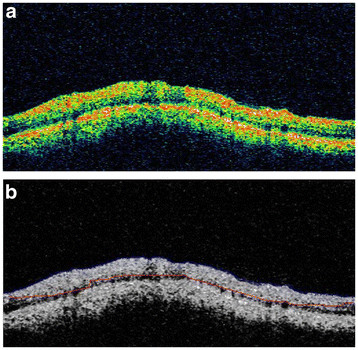

B. Glaucoma Diagnosis

Figure 10 (a-j) shows the glaucoma diagnosis steps described above, from the initial estimate of the anterior boundary to the detection of both boundaries. The algorithm for diagnosing glaucoma by measuring the retinal nerve fiber layer thickness was tested on a set of 186 images of 31 patients, i.e., three images each of the right and the left eye. The mean thickness was calculated for each eye, and the eye was classified as glaucomatous or non-glaucomatous according to whether its nerve fiber layer thickness was less than or greater than 105 μm [45,46]. The images are 329 × 689 pixels. The algorithm was implemented in MATLAB 7.10 on an Intel Core 2 Duo 2.2 GHz machine. Figure 11 shows the input OCT image and the corresponding output image for a typical patient. Of the 31 patients, 13 were found to have glaucoma in at least one eye, i.e., an RNFL thickness of less than 105 μm. The patient shown in Figure 11 has an RNFL thickness of 168.06 μm, indicating a healthy candidate.
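The decision rule described here can be written down in a few lines. This sketch reads the text as averaging the three scans of each eye and flagging a patient as glaucomatous when either eye's mean RNFL thickness falls below the 105 μm cut-off; that reading, and the names `classify_patient` and `RNFL_CUTOFF_UM`, are our assumptions.

```python
import numpy as np

RNFL_CUTOFF_UM = 105.0   # cut-off used in the text [45,46]


def classify_patient(right_eye_um, left_eye_um, cutoff=RNFL_CUTOFF_UM):
    """Average the scans of each eye; flag glaucoma if either eye is below the cutoff."""
    means = {"right": float(np.mean(right_eye_um)), "left": float(np.mean(left_eye_um))}
    glaucomatous = any(m < cutoff for m in means.values())
    return glaucomatous, means
```

A patient whose scans average around 168 μm in both eyes, like the example in Figure 11, is classified as non-glaucomatous.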

Figure 10. (a) Input OCT image; (b) Gaussian-smoothed, median-filtered image; (c) initial estimate of the anterior boundary; (d) accurately detected anterior boundary after applying the snake algorithm; (e) smoothed image with edges preserved using anisotropic diffusion; (f) edge field of the image in 10(e); (g) binarized version of the image in 10(f); (h) areas of less than 100 pixels removed; (i) initial estimate of the posterior boundary; (j) accurately detected posterior boundary.

Figure 11. (a) Input OCT image; (b) anterior and posterior boundaries in blue and red, respectively.

Conclusions

Here we have described a low-cost retinal diagnosis system that can help an ophthalmologist quickly diagnose the various stages of diabetic retinopathy and glaucoma. This novel system accepts both kinds of retinal images (fundus and OCT) and can detect the retinal pathology associated with these two conditions. Such a system can be of significant benefit for mass diagnosis in rural areas, especially in India, where the patient-to-ophthalmologist ratio is as high as 400,000:1 [47]. A major advantage of our algorithm is its optic disk detection accuracy of 97.75%, which in turn improves exudate detection, because the optic disk, which would otherwise be detected as an exudate, is removed from the exudate candidates. Our results show that RNFL thickness measured with the proposed method is concordant with the ophthalmologist's opinion for glaucoma diagnosis. This work can be extended to develop similar diagnostic tools for other ocular diseases, and combining it with telemedicine applications may prove to be of significant benefit for diagnosing retinal diseases in remote, inaccessible and rural areas.

Furthermore, it is relevant to note that the risk of developing both diabetic retinopathy and glaucoma is increased in people with hyperlipidemia [48,49]. This suggests that subjects in whom diabetic retinopathy or glaucoma is detected should also be screened for hyperlipidemia. Thus, early detection of diabetic retinopathy and glaucoma may also form a basis for screening for possible dyslipidemia in these subjects. In this context, type 2 diabetes mellitus, glaucoma and hyperlipidemia are all considered low-grade systemic inflammatory conditions [50,51], which provides yet another reason why patients with DR and glaucoma should be screened for hyperlipidemia.

Competing interest

The authors declare that they have no competing interests.

Authors’ contributions

PA carried out the experimental studies on the automated diagnosis of diabetic retinopathy and glaucoma using fundus and OCT images. PA, UND, TVSPM and RT participated in the algorithm studies, the interpretation of results and the interaction with ophthalmologists, and all authors participated in drafting the manuscript. All authors read and approved the final manuscript.

Contributor Information

Arulmozhivarman Pachiyappan, Email: amvarman@gmail.com.

Undurti N Das, Email: undurti@hotmail.com.

Tatavarti VSP Murthy, Email: tvspmurthy@yahoo.com.

Rao Tatavarti, Email: rtatavarti@gmail.com.

Acknowledgments

Dr. Das was in receipt of a Ramalingaswami Fellowship of the Department of Biotechnology, New Delhi, India, during the tenure of this study. The authors acknowledge the fruitful discussions with, and comments from, the ophthalmologist Dr. R Suryanarayana Raju during the study.

References

- Wild S, Roglic G, Green A, Sicree R, King H. Global prevalence of diabetes: estimates for the year 2000 and projections for 2030. Diabetes Care. 2004;27:1047–1053. doi: 10.2337/diacare.27.5.1047. [DOI] [PubMed] [Google Scholar]

- Day C. The rising tide of type 2 diabetes. Br J Diabetes Vasc Dis. 2001;1:37–43. doi: 10.1177/14746514010010010601. [DOI] [Google Scholar]

- Shaw JE, Sicree RA, Zimmet PZ. Global estimates of the prevalence of diabetes for 2010 and 2030. Diabetes Res Clin Pract. 2010;87:4–14. doi: 10.1016/j.diabres.2009.10.007. [DOI] [PubMed] [Google Scholar]

- Thomas RL, Dunstan F, Luzio SD, Roy Chowdury S, Hale SL, North RV, Gibbins RL, Owens DR. Incidence of diabetic retinopathy in people with type 2 diabetes mellitus attending the diabetic retinopathy screening service for Wales: retrospective analysis. BMJ. 2012;344:e874. doi: 10.1136/bmj.e874. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fox CS, Pencina MJ, Meigs JB, Vasan RS, Levitzky YS, D’Agostino RB. Trends in the incidence of type 2 diabetes mellitus from the 1970s to the 1990s. The Framingham Heart Study. Circulation. 2006;113:2814–2918. doi: 10.1161/CIRCULATIONAHA.106.613828. [DOI] [PubMed] [Google Scholar]

- Raman R, Rani PK, Reddi Rachepalle S, Gnanamoorthy P, Uthra S, Kumaramanickavel G, Sharma TV. Prevalence of diabetic retinopathy in India: sankara nethralaya diabetic retinopathy epidemiology and molecular genetics study report 2. Ophthalmology. 2009;116:311–318. doi: 10.1016/j.ophtha.2008.09.010. [DOI] [PubMed] [Google Scholar]

- Cedrone C, Mancino R, Cerulli A, Cesareo M, Nucci C. Epidemiology of primary glaucoma: prevalence, incidence, and blinding effects. Prog Brain Res. 2008;173:3–14. doi: 10.1016/S0079-6123(08)01101-1. [DOI] [PubMed] [Google Scholar]

- George, Ronnie MSG, Ramesh S, Lingam V. Glaucoma in India: estimated burden of disease. J Glaucoma. 2010;19:391–397. doi: 10.1097/IJG.0b013e3181c4ac5b. [DOI] [PubMed] [Google Scholar]

- Sinthanayothin C, Boyce JF, Williamson TH, Cook HL, Mensah E, Lal S, Usher D. Automated detection of diabetic retinopathy on digital fundus images. Diabet Med. 2002;19:105–112. doi: 10.1046/j.1464-5491.2002.00613.x. [DOI] [PubMed] [Google Scholar]

- Hipwell JH, Strachan F, Olson JA, McHardy KC, Sharp PF, Forrester JV. Automated detection of microaneurysms in digital red-free photographs: a diabetic retinopathy screening tool. Diabet Med. 2000;17:588–594. doi: 10.1046/j.1464-5491.2000.00338.x. [DOI] [PubMed] [Google Scholar]

- Bouhaimed M, Gibbins R, Owens D. Automated detection of diabetic retinopathy: results of a screening study. Diabetes Technol Ther. 2008;10:142–148. doi: 10.1089/dia.2007.0239. [DOI] [PubMed] [Google Scholar]

- Larsen M, Gondolf T, Godt J, Jensen MS, Hartvig NV, Lund-Andersen H, Larsen N. Assessment of automated screening for treatment-requiring diabetic retinopathy. Curr Eye Res. 2007;32:331–336. doi: 10.1080/02713680701215587. [DOI] [PubMed] [Google Scholar]

- Acharya UR, Dua S, Xian D, Vinitha Sree S, Chua CK. Automated diagnosis of glaucoma using texture and higher order spectra features. IEEE Trans Inf Technol Biomed. 2011;15:449–455. doi: 10.1109/TITB.2011.2119322. [DOI] [PubMed] [Google Scholar]

- Shehadeh W, Rousan M, Ghorab A. Automated diagnosis of glaucoma using artificial intelligent techniques. J Commun Comput Eng. 2012;2:35–40. [Google Scholar]

- Nayak J, Acharya RU, Bhat PS, Shetty N, Lim T-C. Automated diagnosis of glaucoma using digital fundus images. J Med Syst. 2009;33:337–346. doi: 10.1007/s10916-008-9195-z. [DOI] [PubMed] [Google Scholar]

- Ravishankar S, Jain A, Mittal A. Automated feature extraction for early detection of diabetic retinopathy in fundus images. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Miami, FL, Human Computer. 2009. pp. 210–217.

- Sinthanayothin C, Boyce JF, Williamson TH, Cook HL, Mensah E, Lal S, Usher D. Automated detection of diabetic retinopathy on digital fundus images. Diabetic Med. 2002;19:105–112. doi: 10.1046/j.1464-5491.2002.00613.x. [DOI] [PubMed] [Google Scholar]

- Faust O, Acharya RU, Ng EYK, Ng K-H, Suri JS. Algorithms for the automated detection of diabetic retinopathy using digital fundus images: a review. J Med Syst. 2012;36:145–157. doi: 10.1007/s10916-010-9454-7.

- Nayak J, Bhat PS, Acharya R, Lim CM, Kagathi M. Automated identification of diabetic retinopathy stages using digital fundus images. J Med Syst. 2008;32:107–115. doi: 10.1007/s10916-007-9113-9.

- Ramaswamy M, Anitha D, Kuppamal SP, Sudha R, Mon SFA. A study and comparison of automated techniques for exudate detection using digital fundus images of human eye: a review for early identification of diabetic retinopathy. Int J Comput Technol Appl. 2011;2:1503–1516.

- Acharya UR, Lim CM, Ng EYK, Chee C, Tamura T. Computer-based detection of diabetes retinopathy stages using digital fundus images. Proc Inst Mech Eng H J Eng Med. 2009;223:545–553. doi: 10.1243/09544119JEIM486.

- Hansen AB, Hartvig NV, Jensen MSJ, Borch-Johnsen K, Lund-Andersen H, Larsen M. Diabetic retinopathy screening using digital non-mydriatic fundus photography and automated image analysis. Acta Ophthalmol Scand. 2004;82:666–672. doi: 10.1111/j.1600-0420.2004.00350.x.

- Abdel-Razak Youssif AAH, Ghalwash AZ, Abdel-Rahman Ghoneim AAS. Optic disc detection from normalized digital fundus images by means of a vessels’ direction matched filter. IEEE Trans Med Imaging. 2008;27:11–18. doi: 10.1109/TMI.2007.900326.

- Hoover A, Kouznetsova V, Goldbaum M. Locating blood vessels in retinal images by piecewise threshold probing of a matched filter response. IEEE Trans Med Imaging. 2000;19:203–210. doi: 10.1109/42.845178.

- Priya R, Aruna P. SVM and Neural Network based Diagnosis of Diabetic Retinopathy. Int J Comput Appl. 2012;41:6–12.

- Polat K, Kara S, Guven A, Gunes S. Comparison of different classifier algorithms for diagnosing macular and optic nerve diseases. Expert Syst. 2009;26:22–34. doi: 10.1111/j.1468-0394.2008.00501.x.

- Nguyen HT, Butler M, Roychoudhry A, Shannon AG, Flack J, Mitchell P. Classification of diabetic retinopathy using neural networks. 18th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. Vision and Visual Perception 5.8.3, Amsterdam; 1996. pp. 1548–1549.

- Wilkinson CP, Ferris FL III, Klein RE, Lee PP, Agardh CD, Davis M, Dills D, Pararajasegaram R, Verdaguer JT. Global Diabetic Retinopathy Project Group. Proposed international clinical diabetic retinopathy and diabetic macular edema disease severity scales. Ophthalmology. 2003;110:1677–1682. doi: 10.1016/S0161-6420(03)00475-5.

- Waxman S, Ishibashi F, Muller JE. Detection and treatment of vulnerable plaques and vulnerable patients. Novel approaches in prevention of coronary events. Circulation. 2006;114:2390–2411. doi: 10.1161/CIRCULATIONAHA.105.540013.

- Low AF, Tearney GJ, Bouma BE, Jang I-K. Technology Insight: optical coherence tomography—current status and future development. Nat Clin Pract Cardiovasc Med. 2006;3:154–162. doi: 10.1038/ncpcardio0482.

- Wojtkowski M, Leitgeb R, Kowalczyk A, Bajraszewski T, Fercher AF. In vivo human retinal imaging by Fourier domain optical coherence tomography. J Biomed Opt. 2002;7:457–463. doi: 10.1117/1.1482379.

- Bajraszewski T, Wojtkowski M, Szkulmowski M, Szkulmowska A, Huber R, Kowalczyk A. Improved spectral optical coherence tomography using optical frequency comb. Opt Express. 2008;16:4163–4176. doi: 10.1364/OE.16.004163.

- Lu Z, Liao Q, Fan Y. A variational approach to automatic segmentation of RNFL on OCT data sets of the retina. 16th IEEE International Conference on Image Processing (ICIP). Cairo, Egypt, Biomedical Image Segmentation. 2009. pp. 3345–3348.

- Mishra A, Wong A, Bizheva K, Clausi DA. Intra-retinal layer segmentation in optical coherence tomography images. Opt Express. 2009;17:23719–23728. doi: 10.1364/OE.17.023719.

- Chan RC, Kaufhold J, Hemphill LC, Lees RS, Karl WC. Anisotropic edge-preserving smoothing in carotid B-mode ultrasound for improved segmentation and intima-media thickness (IMT) measurement. IEEE conference. Computers in Cardiology. 2009. pp. 37–40.

- Wong A, Mishra A, Bizheva K, Clausi DA. General Bayesian estimation for speckle noise reduction in optical coherence tomography retinal imagery. Opt Express. 2010;18:8338–8352. doi: 10.1364/OE.18.008338.

- Perona P, Malik J. Scale-space and edge detection using anisotropic diffusion. IEEE Trans Pattern Anal Mach Intell. 1990;12:629–639. doi: 10.1109/34.56205.

- Mujat M, Chan R, Cense B, Park B, Joo C, Akkin T, Chen T, De Boer J. Retinal nerve fiber layer thickness map determined from optical coherence tomography images. Opt Express. 2005;13:9480–9491. doi: 10.1364/OPEX.13.009480.

- Kass M, Witkin A, Terzopoulos D. Snakes: active contour models. Int J Comput Vision. 1988;1:321–331. doi: 10.1007/BF00133570.

- Kang DJ. A fast and stable snake algorithm for medical images. Pattern Recognit Lett. 1999;20:507–512. doi: 10.1016/S0167-8655(99)00019-7.

- Chesnaud C, Refregier P, Boulet V. Statistical region snake-based segmentation adapted to different physical noise models. IEEE Trans Pattern Anal Mach Intell. 1999;21:1145–1157. doi: 10.1109/34.809108.

- Lean CCH, See AKB, Anandan Shanmugam S. An enhanced method for the snake algorithm. Int Conf Innov Comput Inf Control (ICICIC) 2006;1:240–243.

- Williams DJ, Shah M. A fast algorithm for active contours and curvature estimation. CVGIP: Image Underst. 1992;55:14–26. doi: 10.1016/1049-9660(92)90003-L.

- DIARETDB0: Standard Diabetic Retinopathy Database, Calibration Level 0. IMAGERET, Lappeenranta University of Technology, Lappeenranta, Finland.

- Ramakrishnan R, Mittal S, Ambatkar S, Kader MA. Retinal nerve fibre layer thickness measurements in normal Indian population by optical coherence tomography. Indian J Ophthalmol. 2006;54:11–15. doi: 10.4103/0301-4738.21608.

- Sony P, Sihota R, Tewari HK, Venkatesh P, Singh R. Quantification of the retinal nerve fibre layer in normal Indian eyes with Optical coherence tomography. Indian J Ophthalmol. 2004;52:304–309.

- World Health Organization, Report of a WHO Working Group. Vision 2020 Global initiative for the elimination of avoidable blindness: action plan 2006-2011. Geneva; 2002. pp. 34–44.

- Newman-Casey PA, Talwar N, Nan B, Musch DC, Stein JD. The relationship between components of metabolic syndrome and open-angle glaucoma. Ophthalmology. 2011;118:1318–1326. doi: 10.1016/j.ophtha.2010.11.022.

- Vogelberg KH, Meurers G. Persisting hyperlipidemias as risk factors of diabetic macroangiopathy. Klin Wochenschr. 1986;64:506–511. doi: 10.1007/BF01713057.

- Das UN. Molecular basis of health and disease. Springer, New York; 2011.

- Sawada H, Fukuchi T, Tanaka T, Abe H. Tumor necrosis factor-α concentrations in the aqueous humor of patients with glaucoma. Invest Ophthalmol Vis Sci. 2010;51:903–906. doi: 10.1167/iovs.09-4247.