Abstract

There is much variation in the implementation of the best available evidence into clinical practice. These gaps between evidence and practice are often a result of multiple individual decisions. When a decision is made, so much potentially relevant information is available that it is impossible to know or process it all (so-called ‘bounded rationality’). Usually, a limited amount of information is selected to reach a sufficiently satisfactory decision, a process known as satisficing. There are two key processes used in decision making: System 1 and System 2. System 1 involves fast, intuitive decisions; System 2 is a deliberate analytical approach, used to locate information which is not instantly recalled. Human beings unconsciously use System 1 processing whenever possible because it is quicker and requires less effort than System 2. In clinical practice, gaps between evidence and practice can occur when a clinician develops a pattern of knowledge, which is then relied on for decisions using System 1 processing, without the activation of a System 2 check against the best available evidence from high quality research. The processing of information and decision making may be influenced by a number of cognitive biases, of which the decision maker may be unaware. Interventions to encourage appropriate use of System 1 and System 2 processing have been shown to improve clinical decision making. Increased understanding of decision making processes and common sources of error should help clinical decision makers to minimize avoidable mistakes and increase the proportion of better decisions.

Keywords: decision making, delivery of healthcare, evidence-based practice, judgement, models, theoretical

Introduction

The aim of evidence-based medicine (EBM) is to ensure that decision making in health care incorporates the best available evidence. As Gambrill states, ‘Critical thinking and evidence-informed practice are closely related; both reject authority as a guide (such as someone's status), both emphasize the importance of honouring ethical obligations such as informed consent, and both involve a spirit of inquiry’ [1]. Clinical decision making (including prescribing decisions) involves the judicious use of evidence, taking into account both clinical expertise and the needs and wishes of individual patients [2].

The link between evidence and decision making

The development of EBM has been linked with that of implementation: how to better incorporate the findings from high quality research into routine clinical practice. Despite more than 20 years of work, many variations in care can still be identified [3, 4]. Sometimes there is over-implementation, such as the rapid uptake of long acting insulin analogues for people with type 2 diabetes mellitus, when their clinical effectiveness and cost-effectiveness compared with NPH insulin are highly questionable [5]. Sometimes there is under-implementation. For example, in patients with heart failure admitted to hospital, β-adrenoceptor blockers have been shown to reduce mortality by about one third in the first year after admission, when used at target doses. However, β-adrenoceptor blockers are prescribed in only 60% of eligible patients in England and Wales, and where they are prescribed, 66% of patients receive less than 50% of the target dose [6]. Many gaps between evidence and practice remain, and even when multi-faceted implementation programmes are in place, using, for example, education, audit, data feedback and financial incentives, it is difficult to predict the uptake of evidence [7–9].
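The scale of the β-adrenoceptor blocker shortfall described above can be made explicit with a short calculation. This is a derived illustration rather than a figure reported in the audit as quoted here, and it assumes that the 66% applies only to those patients who are prescribed the drug, as the text states. The proportion of eligible patients receiving at least 50% of the target dose is then:

$$
0.60 \times (1 - 0.66) = 0.60 \times 0.34 = 0.204
$$

On these figures, roughly one in five eligible patients would be receiving at least half of the target dose.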

These gaps are a result of multiple individual decisions. We expect health care practitioners to be good decision makers. Yet it is rare that the evidence on decision making, and how it might be improved, is discussed in mainstream undergraduate or postgraduate curricula. Patient safety data alone indicate that decision making is not always optimal [10].

All decision making is an uncertain enterprise. Mistakes are inevitable even in the best of circumstances and especially when judged with the benefit of hindsight. But even in uncertain practice, some decisions are clearly better than others. Avoiding common mistakes would increase the proportion of decisions that are better, so learning about common sources of error ought to enable the recognition of errors and help develop strategies to minimize avoidable mistakes. The EQUIP study, for example, looked at the causes of prescribing errors in foundation trainees [11]. The study found that a complex mixture of factors was involved, including miscommunication, lack of safety culture, inadequate prescribing training and support, and stressful working conditions. The report supported the development of a core drug list to support prescribing training interventions to reduce prescribing errors [12].

How do we make decisions?

In 1978 Herbert Simon was awarded the Nobel Prize in economic sciences for describing ‘bounded rationality’ [13]. Put simply, this maxim states that there is so much potentially relevant information available to a decision maker that it is impossible for the human brain to know or process it all. This is counter to what we would wish to be the case. Either as clinicians or as patients we would expect high quality decisions to be made as a result of consideration and weighing of large amounts of scientific data, a well-controlled process carefully honed by years of training, experience and dedication. However, it has repeatedly been demonstrated that human beings truncate large volumes of information.

Imagine a patient presenting with a headache. Making a diagnosis might depend upon knowing the anatomy of the structures capable of causing the pain, the pathophysiological processes involved and, of course, human behaviour and its response to pain and illness. The consultation skills to elicit and weigh key symptoms accurately and succinctly and the examination skills to elicit the presence or absence of key signs are also required. Case memories (or illness scripts) of similar patients seen before may be recalled and compared with the current patient to help formulate a decision [14]. Various approaches are employed to enable a decision to be made in the face of such a large and complex volume of information. These usually involve truncating the amount of information used in order to be able to make a ‘good enough’ decision, an approach termed satisficing [13].

The diagnostic decision process is then followed by a decision on management, based on another potentially huge volume of complex evidence-based data. Even when this is summarized into high quality syntheses, the volume is formidable. Consider this analysis of the information required to manage 18 patients admitted in one 24 h on call period in a UK hospital. The patients had a total of 44 diagnoses. The on call physician, if referring only to relevant guidelines provided by the National Institute for Health and Clinical Excellence (NICE), UK Royal Colleges and major societies, would need to have read 3679 pages. If it takes 2 min to read each page, the admitting physician would need to read for 122 h and correctly remember and apply all of that information (for one 24 h on call period) [15].
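The reading time quoted above follows directly from the page count and the assumed reading speed of 2 min per page:

$$
3679 \text{ pages} \times 2 \text{ min/page} = 7358 \text{ min}, \qquad \frac{7358 \text{ min}}{60 \text{ min/h}} \approx 122.6 \text{ h}
$$

That is a little over five times the length of the 24 h on call period itself.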

Dual process theory – System 1 and System 2

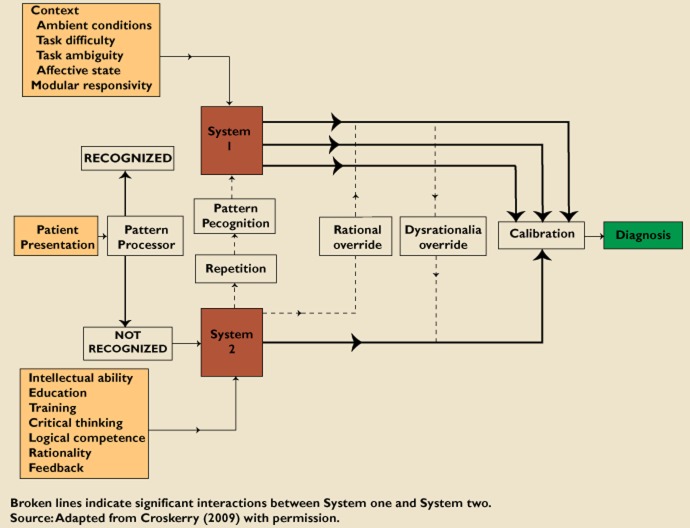

Dual process theory states that humans process information in two ways, termed System 1 and System 2 (Figure 1) [16, 17]. System 1 processing is an ‘intuitive, automatic, fast, frugal and effortless’ process, involving the construction of mental maps and patterns, shortcuts and rules of thumb (heuristics), and ‘mindlines’ (collectively reinforced, internalized tacit guidelines) [17, 18]. These are developed through experience and repetition, usually based on undergraduate teaching, brief written summaries, seeing what other people do, talking to local colleagues and personal experience [18]. System 2 processing involves a careful, rational analysis and evaluation of the available information. This is effortful and time consuming. Data from a variety of environments demonstrate that human beings prefer to use System 1 processing whenever possible [17].

Figure 1.

Schematic model for diagnostic decision making. Reprinted from [17]. Note: as illustrated this describes reaching a diagnosis after seeing a patient's presentation, but it could as easily illustrate a clinical management decision: substitute ‘clinical problem’ for ‘patient presentation’ and ‘drug treatment’ for ‘diagnosis’

Look at Figure 2. What emergency treatment does this seriously ill young person need?

Figure 2.

Picture of glass test in a young person with meningococcal septicaemia

Most health care professionals have little difficulty in determining that the presumed diagnosis is meningococcal septicaemia and part of the emergency response is parenteral antibiotics. Both the diagnostic decision and the management decision are made very quickly by experienced clinicians, using System 1 processing. However, in the same clinical setting the process of diagnostic reasoning by medical students might have to be purposeful and take longer and involve System 2.

Whilst it is appealing to hope that health care professionals can acquire and process all of the high quality evidence relating to diagnosis and management instantly, just when required, the volume of information is completely unmanageable. On most occasions and in most settings human beings make decisions based on a much faster and less intensive process. A clinician faced with a new consultation will either quickly recognize the constellation of symptoms and signs using pattern recognition and be able to make a diagnosis, or not. If the pattern is recognized System 1 operates and if not, then System 2 processing is required.

Imagine that a 28-year-old Indian lady consults with a 2 month history of exertional chest pain when pushing her baby's buggy. She has a past history of type 2 diabetes, hypothyroidism and a BMI of 34.6 [19]. If this patient were a 58-year-old man, System 1 processing would probably lead most physicians effortlessly (through pattern recognition) to a diagnosis of ischaemic chest pain. In this case, however, the key clinical features (exertional chest pain + young woman + post partum + cardiovascular risk factors) do not fit with a well-recognized pattern and require some analytical thinking, within System 2. The doctors caring for this lady employed System 2 processing and arranged a number of investigations to inform their clinical decisions, which led to the discovery of a critical stenosis in her left anterior descending coronary artery.

If a diagnosis becomes obvious during the analytical process (in this case, for example, if the patient's resting or exercise ECG had clearly shown ischaemic changes) then the decision making moves into System 1. Importantly, the model also includes the option of a System 1 decision moving into a more purposeful check by System 2.
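The routing between the two systems described above can be sketched as a small piece of code. This is a deliberately simplified illustration, not part of the published model [16, 17]: the names `Decision`, `decide`, `known_patterns`, `analyse` and `force_check` are all hypothetical, pattern recognition is reduced to an exact lookup, and the move from System 2 back into System 1 when a diagnosis becomes obvious is not modelled.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Decision:
    conclusion: str
    route: str  # which processing route produced the conclusion


def decide(
    features: frozenset[str],
    known_patterns: dict[frozenset[str], str],
    analyse: Callable[[frozenset[str]], str],
    force_check: bool = False,
) -> Decision:
    """Toy routing of a clinical problem between System 1 and System 2."""
    recognised = known_patterns.get(features)
    if recognised is None:
        # No stored pattern matches, so effortful analysis is required.
        return Decision(analyse(features), "System 2")
    if force_check:
        # A recognised pattern is still checked analytically before acting.
        return Decision(analyse(features), "System 1 checked by System 2")
    # The pattern is recognised and accepted without further analysis.
    return Decision(recognised, "System 1")


def work_up(features: frozenset[str]) -> str:
    # Hypothetical stand-in for investigation and analytical reasoning.
    return "arrange investigations"


patterns = {
    frozenset({"exertional chest pain", "58-year-old man"}): "ischaemic chest pain",
}

familiar = frozenset({"exertional chest pain", "58-year-old man"})
unfamiliar = frozenset({"exertional chest pain", "28-year-old woman", "post partum"})

print(decide(familiar, patterns, work_up))
print(decide(unfamiliar, patterns, work_up))
print(decide(familiar, patterns, work_up, force_check=True))
```

In this sketch the familiar presentation is resolved by lookup alone, the unfamiliar one falls through to analysis, and `force_check=True` stands for a System 1 decision being deliberately passed to System 2 for a check.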

The qualitative data describing what happens in real-world decision making in healthcare support the hypothesis that System 1 processing dominates behaviour. If you ask doctors how often they need to search for an answer to manage a consultation, most say about once a week. However, if each consultation is observed and subsequently discussed, a clinical question is identified for every two or three patients seen [20, 21]. Many consultations are therefore managed without the activation of System 2 required to identify uncertainties; unrecognized clinical questions are suppressed, truncating the information required to manage the consultation.

Neither System 1 nor System 2 should be regarded as ‘good’ or ‘bad’. System 1 decision making can provide life-saving decisions very quickly (as in the case of recognizing a meningococcal rash). System 2 decision making can locate information which enables a decision to be made when System 1 is incapable of doing so. However, System 2 processing takes more time and this may not be consistent with the pace required in clinical practice. Gaps between evidence and practice may occur when a clinician develops a pattern of knowledge, which is then relied on for decisions using System 1 processing, without the activation of a System 2 check.

The natural preference all humans have for System 1 thinking makes it hard to modify practice in the light of new information which conflicts with our previous assumptions and knowledge [22]. A major challenge to adoption of innovation is managing information: distinguishing information that is important, relevant and valid, and that requires a change in practice, from information that is not and does not. However, even if important information is identified, an inherent ‘dysrationalia over-ride’ (a preference for System 1 thinking) tends to inhibit a purposeful switch into System 2 thinking (Figure 1). Being aware of this risk should enable engagement of a ‘rational over-ride’ of System 1 thinking. Awareness may be facilitated by computer programmes such as hazard alerts, which have been shown to reduce medication errors significantly and increase safer prescribing by those who choose to read the warnings [23]. At the very least, all practitioners can try to ensure that, when they are appropriately deploying System 1 thinking, they take a moment to check that the decision they have come to is reasonable, a step termed ‘calibration’ in dual process theory (Figure 1).

The development of clinical expertise

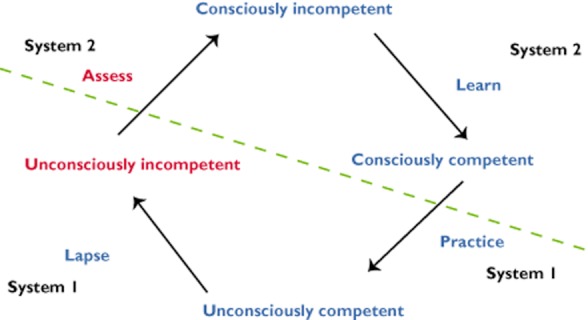

The development of any new skill can be illustrated by the four stage conscious competence model (Figure 3). The origins of this model are obscure, but the US Gordon Training International Organization has played a major role in defining it and promoting its use [24]. The dual process theory can be applied to this well known model of learning to explain how expertise can be developed.

Figure 3.

Four stage conscious competence model of learning

Imagine a prescriber who has never calculated the dose of gentamicin for a patient with impaired renal function. Whatever the task is, if they have never done this before, they will know they cannot do it, a state in this model termed ‘consciously incompetent’. It requires purposeful and conscious learning using System 2 processes to gain the knowledge and skills to carry out the task. Once the individual has mastered it, they may be able to pass an assessment of competence. However, at this stage, they can only perform the task adequately if, cognitively, they address it with their utmost attention and concentration. The individual is still in System 2 but they are now ‘consciously competent’.

With further practice some of the actions required become automated and, at least for part of the time, for part of the task, the individual can operate on ‘automatic pilot’. In this example, the prescriber no longer has to refer to a drug formulary when prescribing gentamicin. System 2 learning has become embedded into a System 1 process and they are now ‘unconsciously competent’. However, if the prescriber stays in System 1, errors may creep in and they may become ‘unconsciously incompetent’; for example, remembering a dose calculation incorrectly. With an effortful, conscious, System 2 assessment activity, they can realize this and become ‘consciously incompetent’ and be able to rectify their actions once again using System 2 as a check on System 1.

Cognitive and affective biases

Affect is inseparable from thinking and is intrinsically linked to our ability to process information and make good decisions [25]. Stress, fatigue, personal problems and other factors would all be expected to result in disturbances of affect and, in turn, decision making.

A cognitive bias is a pattern of deviation in judgment that occurs in particular situations. There is now an empirical body of research which has demonstrated a large number of cognitive biases in decision making, many of which are relevant to clinical decision making (Table 1) [26].

Table 1.

Cognitive biases in clinical practice

| Cognitive bias | Definition | Clinical example |
|---|---|---|
| Anchoring bias | Undue emphasis is given to an early salient feature in a consultation. | Concentrating on the fact that a 58-year-old patient with back pain has a manual job (diagnosis = musculoskeletal pain), and putting less weight on his complaint of hesitancy and nocturia (diagnosis = bony pain from metastatic prostate cancer). |
| Ascertainment bias | Thinking shaped by prior expectation. | A young patient with an unsteady gait in a city centre late on a Saturday night might be expected to be inebriated, rather than having suffered a stroke. |
| Availability bias | Recent experience dominates evidence. | Having recently admitted a patient with multiple sclerosis, this diagnosis comes to mind the next time a patient with sensory symptoms is seen. |
| Bandwagon effect | ‘We do it this way here’, whatever anyone else says or whatever the data say. | Continuing to prescribe diclofenac to patients with cardiovascular risk factors, despite its thrombotic risk profile. |
| Omission bias | Tendency to inaction, as events that occur due to natural disease progression, are preferred to those due to action of physician. | Electing not to have a child vaccinated against an infectious disease because of the risk of harm from that vaccine, without considering the harm caused by the illness itself. |
| Sutton's slip | Going for the obvious diagnosis. | Diagnosing musculoskeletal pain in the 28-year-old lady with chest pain described earlier. |
| Gambler's fallacy | The tendency to think that a run of diagnoses means the sequence cannot continue, rather than taking each case on its merits. | ‘I've seen three people with acute coronary syndrome recently; this can't be a fourth.’ |
| Search satisficing | Having found one diagnosis, other co-existing conditions are not detected. | Missing the second fracture in a trauma patient in whom a fracture has been identified. |
| Vertical line failure | Routine repetitive tasks lead to thinking in silos. | Missing the case of meningitis in the middle of an influenza epidemic. |
| Blind spot bias | ‘Other people are susceptible to these biases but I am not’. | |

Shared decision making

Shared decision making, involving patients and professionals, is increasingly recognized as an essential part of modern health care [27]. NICE guidance on involving patients in decisions about prescribed medicines, and supporting adherence, recommends using symbols and pictures to make information accessible and understandable [28]. This may be done using patient decision aids (PDAs). A Cochrane review found that, compared with usual care, using PDAs led to patients having greater knowledge and lower decisional conflict related to feeling uninformed or unclear about personal values. Their use reduced the proportion of people who were passive in decision making and the proportion of people who remained undecided. Exposure to a decision aid which included probabilities resulted in a higher proportion of people with accurate risk perceptions [29]. Many PDAs are intended to be used by patients to enhance their understanding prior to a discussion with a health professional (examples include the three PDAs available on NHS Direct [30]). The National Prescribing Centre (NPC) has produced PDAs intended for use by health professionals within the consultation (‘shared decision aids’), to support and augment the clinician's presentation of the possible treatment options. Thirty of these PDAs are currently available to download and use [31].

Making decisions better

The purpose of undergraduate and postgraduate programmes for the health care professions is to produce individuals who can make sound clinical decisions. It is therefore surprising that this body of evidence on decision making is not more widely recognized and more explicitly included in the undergraduate and postgraduate education of health care professionals. As one of the leading researchers in this area, Dan Ariely, puts it, ‘Once we understand when and where we may make erroneous decisions, we can try and be more vigilant, force ourselves to think differently about those decisions, or use technology to overcome our inherent shortcomings’ [32].

There has been some success with interventions that encourage dual processing at the time of problem solving. Encouraging the use of both System 1 and System 2 processes produced small but consistent effects in absolute novices learning how to interpret electrocardiograms [33]. Similarly, encouraging System 1 processing has been shown to produce small gains with simple problems, and encouraging System 2 processing leads to improvements with difficult problems [34]. In addition, a number of ‘debiasing’ strategies to improve decision making have been published [35–37].

Conclusions

These are exciting times in the field of ‘getting research into practice’. The EBM movement has now developed a large repository of high quality evidence synthesized into the form of guidelines, and in many healthcare systems this is becoming linked into systems to both support and incentivize evidence-informed decision making. Further research is required to determine the optimal approach to teaching current and future decision makers how human beings make decisions.

Until such research has been performed, clinical decision makers should familiarize themselves with the different processes involved in decision making, and the biases that can affect their decisions. The conscious and appropriate application of these processes, particularly calibration (stopping to think, e.g. ‘Could this be anything else?’; ‘Are there any interactions if I prescribe …?’), and checking for possible bias, should increase the proportion of decisions that are better.

Competing Interests

There are no competing interests to declare.

References

- 1. Gambrill E. Critical Thinking in Clinical Practice: Improving the Quality of Judgments and Decisions. 3rd edn. Hoboken, NJ: John Wiley & Sons; 2012.

- 2. Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn't. BMJ. 1996;312:71–72. doi: 10.1136/bmj.312.7023.71.

- 3. Maskrey N, Greenhalgh T. Getting a better grip on research: the fate of those who ignore history. InnovAiT. 2009;2:619–625.

- 4. NHS Atlas of Variation. NHS Atlas of Variation. 2010. Available at http://www.rightcare.nhs.uk/atlas (last accessed 20 July 2012)

- 5. National Institute for Health and Clinical Excellence. Type 2 diabetes: newer agents. Short clinical guideline 87. 2009. Available at http://www.nice.org.uk/cg87 (last accessed 20 July 2012)

- 6. National Heart Failure Audit. National heart failure audit. 2010. Available at http://www.ic.nhs.uk/services/national-clinical-audit-support-programme-ncasp/audit-reports/heart-disease (last accessed 20 July 2012)

- 7. Greenhalgh T, Robert G, Bate P, Kyriakidou O, Macfarlane F, Peacock R. How to spread good ideas. A systematic review of the literature on diffusion, dissemination and sustainability of innovations in health service delivery and organisation. Report for the National Co-ordinating Centre for NHS Service Delivery and Organisation 2004. Available at http://www.sdo.nihr.ac.uk/files/project/38-final-report.pdf (last accessed 20 July 2012)

- 8. Sheldon TA, Cullum N, Dawson D, Lankshear A, Lowson K, Watt I, West P, Wright D, Wright J. What's the evidence that NICE guidance has been implemented? Results from a national evaluation using time series analysis, audit of patients' notes, and interviews. BMJ. 2004;329:999. doi: 10.1136/bmj.329.7473.999.

- 9. NHS Information Centre. Use of NICE appraised medicines in the NHS in England – Experimental Statistics. 2009. Available at http://www.ic.nhs.uk/statistics-and-data-collections/primary-care/prescriptions/use-of-nice-appraised-medicines-in-the-nhs-in-england–2009-experimental-statistics (last accessed 20 July 2012)

- 10. National Patient Safety Agency. Available at http://www.nrls.npsa.nhs.uk/resources (last accessed 20 July 2012)

- 11. Dornan T, Ashcroft D, Heathfield H, Lewis P, Miles J, Taylor D, Tully M, Wass V. An in depth investigation into causes of prescribing errors by foundation trainees in relation to their medical education. 2009. EQUIP study. Available at http://www.gmc-uk.org/FINAL_Report_prevalence_and_causes_of_prescribing_errors.pdf_28935150.pdf (last accessed 20 July 2012)

- 12. Baker E, Roberts AP, Wilde K, Walton H, Suri S, Rull G, Webb A. Development of a core drug list towards improving prescribing education and reducing errors in the UK. Br J Clin Pharmacol. 2011;71:190–198. doi: 10.1111/j.1365-2125.2010.03823.x.

- 13. Simon HA. Rational decision-making in business organizations. Nobel Memorial Lecture. 8th December 1978. Available at http://nobelprize.org/nobel_prizes/economics/laureates/1978/simon-lecture.pdf (last accessed 20 July 2012)

- 14. Schmidt HG, Norman GR, Boshuizen HP. A cognitive perspective on medical expertise: theory and implication. Acad Med. 1990;65:611–621. doi: 10.1097/00001888-199010000-00001.

- 15. Allen D, Harkins KJ. Too much guidance? Lancet. 2005;365:1768. doi: 10.1016/S0140-6736(05)66578-6.

- 16. Croskerry P. A universal model of diagnostic reasoning. Acad Med. 2009;84:1022–1028. doi: 10.1097/ACM.0b013e3181ace703.

- 17. Croskerry P. Context is everything or how could I have been that stupid? Healthc Q. 2009;12:e171–176. doi: 10.12927/hcq.2009.20945.

- 18. Gabbay J, le May A. Evidence based guidelines or collectively constructed ‘mindlines?’ Ethnographic study of knowledge management in primary care. BMJ. 2004;329:1013. doi: 10.1136/bmj.329.7473.1013.

- 19. Dwivedi G, Hayat SA, Violaris AG, Senior R. A 28-year-old postpartum woman with right sided chest discomfort: case presentation. BMJ. 2006;332:406, 471, 643. doi: 10.1136/bmj.332.7542.643.

- 20. Ely JW, Osheroff JA, Ebell MH, Bergus GR, Levy BT, Chambliss ML, Evans ER. Analysis of questions asked by family doctors regarding patient care. BMJ. 1999;319:358–361. doi: 10.1136/bmj.319.7206.358.

- 21. Covell D, Uman GC, Manning PR. Information needs in office practice: are they being met? Ann Intern Med. 1985;103:596–599. doi: 10.7326/0003-4819-103-4-596.

- 22. Maskrey N, Hutchinson A, Underhill J. Getting a better grip on research: the comfort of opinion. InnovAiT. 2009;2:679–686.

- 23. Schedlbauer A, Prasad V, Mulvaney C, Phansalkar S, Stanton W, Bates DW, Avery AJ. What evidence supports the use of computerized alerts and prompts to improve clinicians' prescribing behaviour? J Am Med Inform Assoc. 2009;16:531–538. doi: 10.1197/jamia.M2910.

- 24. Gordon Training International. Available at http://www.gordontraining.com (last accessed 20 July 2012)

- 25. Croskerry P. Diagnostic failure: a cognitive and affective approach. In: Henriksen K, Battles J, Marks E, Lewin D, editors. Advances in Patient Safety: From Research to Implementation. Rockville, MD: Agency for Healthcare Research and Quality (US); 2005. pp. 240–254.

- 26. Croskerry P. Achieving quality in clinical decision making: cognitive strategies and detection of bias. Acad Emerg Med. 2002;9:1184–1204. doi: 10.1111/j.1553-2712.2002.tb01574.x.

- 27. Marshall M, Bibby J. Supporting patients to make the best decisions. BMJ. 2011;342:d2117. doi: 10.1136/bmj.d2117.

- 28. National Institute for Health and Clinical Excellence. Medicines adherence: involving patients in decisions about prescribed medicines and supporting adherence. NICE Clinical Guideline 76. 2009. Available at http://www.nice.org.uk/CG76 (last accessed 20 July 2012)

- 29. O'Connor AM, Bennett CL, Stacey D, Barry M, Col NF, Eden KB, Entwistle VA, Fiset V, Holmes-Rovner M, Khangura S, Llewellyn-Thomas H, Rovner D. Decision aids for people facing health treatment or screening decisions. Cochrane Database Syst Rev. 2009;(3):CD001431. doi: 10.1002/14651858.CD001431.pub2.

- 30. NHS Direct. Patient decision aids. Available at http://www.nhsdirect.nhs.uk/news/newsarchive/2010/patientdecisionaidslaunched (last accessed 20 July 2012)

- 31. National Prescribing Centre. Patient decision aid directory. Available at http://www.npc.nhs.uk/patient_decision_aids/pda.php (last accessed 20 July 2012)

- 32. Ariely D. Predictably Irrational: The Hidden Forces That Shape Our Decisions. New York: Harper Collins; 2008.

- 33. Eva KW, Hatala R, Leblanc V, Brooks L. Teaching from the clinical reasoning literature: combined reasoning strategies help novice diagnosticians overcome misleading information. Med Educ. 2007;41:1152–1158. doi: 10.1111/j.1365-2923.2007.02923.x.

- 34. Mamede S, Schmidt HG, Penaforte JC. Effects of reflective practice on the accuracy of medical diagnoses. Med Educ. 2008;42:468–475. doi: 10.1111/j.1365-2923.2008.03030.x.

- 35. Croskerry P. The importance of cognitive errors in diagnosis and strategies to minimize them. Acad Med. 2003;78:775–780. doi: 10.1097/00001888-200308000-00003.

- 36. Redelmeier DA. Improving patient care. The cognitive psychology of missed diagnoses. Ann Intern Med. 2005;142:115–120. doi: 10.7326/0003-4819-142-2-200501180-00010.

- 37. Mamede S, Schmidt HG, Rikers R. Diagnostic errors and reflective practice in medicine. J Eval Clin Pract. 2007;13:138–145. doi: 10.1111/j.1365-2753.2006.00638.x.