Abstract

Objectives. In an era of community-based participatory research and increased expectations for evidence-based practice, we evaluated an initiative designed to increase community-based organizations’ data and research capacity through a 3-day train-the-trainer course on community health assessments.

Methods. We employed a mixed-method pre–post course evaluation design. Data collected from 171 participants through multiple sources captured individual and organizational characteristics and pre–post course self-efficacy on 19 core skills, as well as behavior change 1 year later among a subsample of participants.

Results. Before the course, participants reported limited previous experience with data and low self-efficacy in basic research skills. Immediately after the course, participants demonstrated statistically significant increases in data and research self-efficacy. The subsample reported application of community assessment skills to their work and increased use of data 1 year later.

Conclusions. Results suggest that an intensive, short-term training program can achieve large immediate gains in data and research self-efficacy in community-based organization staff. In addition, they demonstrate initial evidence of longer-term behavior change related to use of data and research skills to support their community work.

The data needs of community-based organizations (CBOs) have increased in recent years as a result of funders’ interest in more formalized program accountability and evaluations1–3 and evidence-based decision-making.4–6 Moreover, there is a growing emphasis on community-based participatory research (CBPR) approaches in which CBOs partner with academic or other investigators on research.7,8 Indeed, building CBO research capacity is a core principle of CBPR.9–15 Community organizations are better equipped to participate equitably and with shared control over research processes in their community if they possess adequate knowledge and skills related to research terminology and methodologies.9 Emerging evidence also suggests that there are increases in the quality of research when investigators partner with the communities being studied.15–17 For example, a 2001 review of 60 published CBPR studies found greater participation rates, strengthened external validity, and decreased loss to follow-up as a result of community partnerships.15

Community organizations also have a need for data that will inform their programs, service delivery, and advocacy. Yet CBOs in underserved communities that are rapidly changing because of immigration, residential mobility, and other demographic shifts have difficulty finding secondary data sources that accurately capture the characteristics and experiences of the communities they serve.18–22

Despite these clear needs, little is known about the data or research capacity of CBOs. Initial evidence suggests that CBOs and local health departments fall short of the data and research skills required for service delivery,23,24 program evaluation,1 community assessment,25 or partnering effectively with public health researchers,26 although there is variability in these skills among nonprofits.27 Overall, however, the evidence suggests that their capacity is not keeping pace with increased demand.

Innovative programs that aim to increase CBO research capacity are growing in frequency,28–34 although published evaluations of such capacity-building programs are limited and often appear in the literature as program descriptions or evaluations of program implementation. Moreover, there are few community research capacity programs that focus on the general research capacity of participants independent of a specific health topic (e.g., environmental health35–37) or the aims of a concurrent CBPR or other research project.15

We present evaluation data from Data & Democracy, a community capacity building initiative of the Health DATA (Data. Advocacy. Training. Assistance.) program of the University of California Los Angeles Center for Health Policy Research. The goal of Data & Democracy was to increase the data and research capacity of community-based health organizations by increasing the knowledge and skills of CBO staff to plan and conduct a community health assessment. By “research capacity,” we refer to skills related to the design and methods of collecting primary data. In CBOs this may involve a community needs assessment, program evaluation, or other type of community-placed research. We distinguish this from “data capacity,” a subset of research skills related to finding and using secondary data, as well as data management, analysis, and reporting.

Data & Democracy employed 4 strategies: (1) strong community partnerships that led to trusted endorsements supporting outreach and recruitment of prospective participants; (2) a comprehensive curriculum organized around core data and research skills using adult learning theory and popular education methods38; (3) a train-the-trainer model to ensure diffusion of innovation39 into the community and increase retention of course knowledge and skills for participants as they teach others; and (4) extensive technical assistance and follow-up.

Data & Democracy courses were offered to representatives of CBOs, nonprofits, advocacy networks, and coalitions serving underserved communities, such as low-income, immigrant, homeless, and racial/ethnic minority populations. The purpose of inclusion criteria was to provide capacity-building opportunities to organizations with fewer research training opportunities and limited research infrastructure.

The course curriculum focused on 6 steps for planning and conducting a community health assessment,40 using the assessment framework to teach the terminology and skills of a participatory research process. The first step addressed identifying and engaging key partners to plan and conduct a community assessment, pooling resources and skills, and partnering with researchers. The second step, determining an assessment focus, was designed to assist participants, who were more accustomed to writing program goals and objectives, to choose a focal issue(s) and develop clear research questions and assessment goals and objectives. The course's third step guided participants to determine the data needed to answer their research questions, starting first with identifying appropriate secondary data. The fourth step provided an introduction to data collection methods for collecting primary data when adequate existing data are not available. The fifth step reviewed strategies for basic descriptive analysis of quantitative and qualitative data, including the creation and interpretation of graphs and tables. The sixth step covered communicating data and findings in a strategic way to various audiences. Course material was taught through a combination of didactic learning, interactive exercises, homework, and real-world simulations in which participants applied research terms and methods to a community assessment partnership planning process.

After an initial pilot phase, Data & Democracy was funded for 2 cycles. This article combines evaluation findings from the first cycle (2005–2007), implemented in 6 California counties, and the second cycle (2008–2010), implemented in 4 counties. Program efforts in these 10 cohorts reached a total of 171 course participants (108 in cycle 1 and 63 in cycle 2). Sixty-four percent (n = 105) of participants went on to teach 993 coworkers, community partners, and community members as part of the train-the-trainer model, a proportion considerably higher than previous public health train-the-trainer programs.41

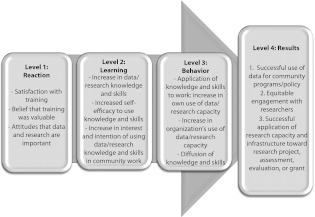

To better understand the broader context of data and research capacity building in which the Data & Democracy training initiative lies, we present a conceptual model in Figure 1, adapted from Kirkpatrick's hierarchical model of training effectiveness.42 The model contains 4 stages of capacity building: reaction, learning, behavior, and results. Kirkpatrick's theory was first developed in 1959 and has arguably become the most widely used model for the evaluation of training and learning.

FIGURE 1—Conceptual model of community-based organization data and research capacity building: Data & Democracy training initiative, University of California, Los Angeles Health DATA (Data. Advocacy. Training. Assistance.) Program.

Source. Conceptual model based on Kirkpatrick's hierarchical model of training effectiveness.42

We focused on components of stages 2 (learning) and 3 (behavior) of the model. The learning stage focuses on increases in capacity, and the behavior stage focuses on the extent of applied learning or implementation back on the job. This study first aims to demonstrate whether a capacity-building program can increase the self-efficacy of CBO staff related to data and research knowledge and skills. The second aim takes a longer-term view with the second cycle of participants to determine if new knowledge and skills were translated into changes in behavior, such as the increased use of data and research in their work and the work of their organization.

METHODS

The Data & Democracy evaluation employed a mixed-method, pre–post course evaluation design. Capacity change was the primary outcome of the learning stage. Drawing from Bandura's social learning theory, we operationalized capacity change as increases in self-efficacy. Bandura stresses that self-efficacy to use knowledge, rather than knowledge alone, is a necessary precursor of action and sustained changes in behavior.43–45 A recent study from a similar capacity-building program tailored to community leaders (in this case focused on policy) demonstrated that a 4-session training was able to foster increases in self-efficacy for policy advocacy.46 Previous studies have also shown that increased self-efficacy following training predicts behavior among health professionals.47,48

Thus, we measured participants’ self-rated self-efficacy,49 or participants’ belief in their capacity to apply and execute the core course skills in their prospective work and community context. The core skills covered in the 6-step course and curriculum (Table 1) consisted of 7 training skills (e.g., understanding adult learning theory and training needs), 11 data and research skills (e.g., identifying good sources of community health data, pros and cons of data collection methods), and 1 skill drawing from both (i.e., training others how to plan and conduct a community assessment).

TABLE 1—Participants’ Changes in Training and Research Capacity Measured as Self-Rated Self-Efficacy in 19 Course Skills: Data & Democracy Surveys, California, Cycle 1 (2005–2007) and Cycle 2 (2008–2010)

| Confidence Measurement Item | Precourse Rating,ᵃ Mean (SD) | Postcourse Rating,ᵃ Mean (SD) | Pre–Post Change |
| --- | --- | --- | --- |
| Training capacity mean scale | 3.12 (0.79) | 4.27 (0.61) | +1.14 (0.81)* |
| Understanding characteristics of an effective trainer | 3.44 (0.94) | 4.42 (0.76) | +0.98 (1.14)* |
| Understanding adult learning theory and training needs | 2.84 (1.04) | 4.40 (0.69) | +1.56 (1.09)* |
| Identifying and using effective training methods | 3.05 (0.96) | 4.30 (0.74) | +1.25 (1.16)* |
| Tailoring new material to a training audience | 3.05 (1.05) | 4.17 (0.74) | +1.13 (1.01)* |
| Using audiovisual aids for training purposes | 3.40 (1.03) | 4.20 (0.81) | +0.80 (1.04)* |
| Developing a workshop training plan | 2.96 (1.00) | 4.15 (0.87) | +1.19 (1.09)* |
| Conducting a community training | 3.14 (1.10) | 4.25 (0.78) | +1.11 (1.08)* |
| Research capacity mean scale | 2.77 (0.81) | 4.16 (0.68) | +1.38 (0.83)* |
| Developing a community partnership to conduct a community assessment | 2.96 (1.16) | 4.18 (0.92) | +1.21 (1.11)* |
| Developing goals and objectives to focus a community assessment | 2.87 (0.95) | 4.32 (0.76) | +1.45 (1.04)* |
| Identifying good sources of health data for community advocacy purposes | 2.77 (1.06) | 4.16 (0.82) | +1.39 (1.13)* |
| Determining when to collect new health data | 2.47 (1.08) | 3.93 (1.01) | +1.46 (1.12)* |
| Identifying pros and cons of various data collection methods | 2.65 (1.05) | 4.22 (0.83) | +1.56 (1.18)* |
| Identifying appropriate data analysis methods for quantitative and qualitative data | 2.40 (1.15) | 4.13 (0.86) | +1.73 (1.25)* |
| Communicating community assessment findings to targeted audiences | 2.94 (1.05) | 4.30 (0.81) | +1.35 (1.18)* |
| Developing a community assessment plan | 2.56 (1.06) | 4.22 (0.79) | +1.67 (1.15)* |
| Conducting a community assessment | 2.56 (1.09) | 4.21 (0.75) | +1.65 (1.17)* |
| Working with other researchers | 3.29 (1.05) | 4.04 (0.90) | +0.75 (1.11)* |
| Telling my community's story in a compelling way to funders and policymakers | 3.03 (1.31) | 4.04 (0.99) | +1.01 (1.30)* |
| Training and research capacity: training others how to plan and conduct a community assessment | 2.58 (1.27) | 4.12 (0.79) | +1.54 (1.28)* |

Note. From pre- and postcourse surveys, n = 142 matched pairs.

ᵃ Respondents rated how confident they felt that day in each skill (scale from 0 to 5, where 5 = “extremely confident”).

*Wilcoxon signed rank test P < .001.

We utilized data from 3 evaluation data collection methods. The first was a brief application survey, taken online via SurveyMonkey (SurveyMonkey.com LLC, Palo Alto, CA) by each applicant to the Data & Democracy course. In addition to questions used to determine applicant course eligibility, further items gathered information about applicants’ organizational characteristics (i.e., target population(s), number of staff, annual budget) and previous research and data experience (i.e., accessing, collecting, and using health data; conducting community assessments).

The second data collection method was a precourse survey administered in person to course participants at the beginning of the course's first day, and a postcourse survey administered at the end of the third (last) day of the course. Participants’ self-efficacy in the 19 core course skills was measured via 19 identical items in both surveys asking, “Please circle the number that indicates how confident you feel today in this skill.” Each item used a 6-point response scale from 0 to 5, where 5 is “extremely confident” and 0 is “not at all confident.” (We used a 6-point scale to eliminate a neutral option and force respondents to attribute a positive or negative rating to the question. Although there are 6 options, the maximum rating is a 5.)

The third data collection method included 2 follow-up surveys administered via SurveyMonkey at 4 months (wave 1) and 1 year (wave 2) after course completion. Course participants were e-mailed invitations with links to participate in the survey. To maximize response rates, Health DATA staff sent at least 2 e-mailed reminders to each of the course participants, as well as a third or fourth contact via e-mail or phone call. Most wave 1 follow-up survey items were related to the implementation, process, and perceived outcomes of trainers’ community workshops, and thus are not presented here. This survey had an 81% response rate. Wave 2 follow-up survey items explored behavior change, measured as the longer-term use and application of course skills and data by both the course participants and their organizations. By the wave 2 survey, the respondent sample had decreased to 36 of the 63 participants (57% response rate) because of attrition. The follow-up data are presented here.

Pre- and postcourse surveys were kept anonymous: each participant's 2 surveys were matched to each other through a randomly generated participant ID. Anonymity was also protected for the follow-up surveys. It was important to the evaluation that participants felt comfortable being candid about the course experience and their workshop completion, despite the fact that Health DATA staff were their trainers, technical assistance providers, and also those requesting evaluative feedback. However, because of this anonymity, data collected from the pre–post surveys could not be linked to application survey or follow-up survey data.

For aim 1, we used univariate analyses to describe the course participants and calculate precourse, postcourse, and pre–post difference scores for each of the participants in each of the 19 course capacities. Participants for whom pre- and postcourse survey data could not be matched were excluded from further analysis. Twenty-nine cases were thus excluded, yielding a sample of 142 course participants for capacity change analysis. (These participants’ pre–post surveys could not be matched because of late arrival, early departure, or unmatchable survey numbers occurring as a result of administrator or respondent error.) An additional 29 participants were missing a pre- or postcourse response for at least 1 of the 19 items. (We conducted a sensitivity analysis to determine if missing responses differed in a systematic way from nonmissing responses, and detected no significant differences in item responses among nonmatched individuals.) We imputed each missing value with the corresponding pre- or postcourse value from the same item, so that the change score for that item equaled zero. This maintained a larger sample size while having a conservative effect on results.
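The imputation rule described above can be illustrated with a minimal sketch. The function names and the sample ratings are hypothetical, and the handling of an item missing at both time points is an assumption, because the text does not describe that case.

```python
def impute_pair(pre, post):
    """Fill a missing rating with the rating from the other time point.

    A filled pair always has a change score of zero, which is why the rule
    is conservative. Returns None when both ratings are missing (assumption:
    the text does not say how such cases were treated).
    """
    if pre is None and post is None:
        return None
    if pre is None:
        pre = post
    if post is None:
        post = pre
    return pre, post


def change_scores(pairs):
    """Return post-minus-pre change scores for one item after imputation."""
    scores = []
    for pre, post in pairs:
        filled = impute_pair(pre, post)
        if filled is not None:
            scores.append(filled[1] - filled[0])
    return scores


# Hypothetical ratings on the 0 to 5 scale; None marks a missing response.
ratings = [(2, 4), (3, None), (None, 5), (1, 3)]
print(change_scores(ratings))  # [2, 0, 0, 2]
```

Because every imputed pair contributes a zero change, the rule can only pull the average change toward zero.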

Because of the nonparametric and dependent nature of the 2 pre- and postcourse scores, we used Wilcoxon signed rank tests to test differences in pre–post capacity (matched Likert scores).50 We created mean scales of the 7 training skills and the 11 data and research skills to determine if there were differences in course participants’ capacity and pre–post capacity change in these 2 areas, as well as to create a more reliable multiitem measure of capacity change.51 To evaluate scale internal consistency, we explored interitem correlations and calculated Cronbach's α.52 Cronbach's α for both scales was greater than or equal to 0.9 (not shown), indicating excellent internal consistency.53
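For readers less familiar with these 2 statistics, the following sketch shows how each is computed from matched ratings. It uses only the Python standard library; the function names and example data are hypothetical and this is not the analysis code used in the evaluation. It stops at the signed rank sums: obtaining a P value requires a reference distribution, for which a statistics package (for example, scipy.stats.wilcoxon) would typically be used.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a scale; rows holds one list of item ratings per respondent."""
    k = len(rows[0])  # number of items in the scale
    item_vars = [variance([row[i] for row in rows]) for i in range(k)]
    total_var = variance([sum(row) for row in rows])  # variance of summed scores
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def signed_rank_sums(pre, post):
    """Return (W+, W-): rank sums of positive and negative pre-post differences.

    Zero differences are dropped and tied absolute differences share the
    average of the ranks they occupy.
    """
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the current run of tied absolute differences.
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 through j+1
        for t in range(i, j + 1):
            ranks[order[t]] = average_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return w_plus, w_minus


# Hypothetical matched ratings for 5 respondents on a single item.
pre_ratings = [2, 3, 1, 4, 3]
post_ratings = [4, 3, 2, 3, 5]
print(signed_rank_sums(pre_ratings, post_ratings))  # (8.5, 1.5)
```

A W+ much larger than W- indicates that increases dominate decreases, which is the pattern a significant positive pre–post change reflects.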

For aim 2, we restricted the analytic sample to the 36 of the 63 second-cycle Data & Democracy participants who completed the wave 2 follow-up survey, because wave 2 surveys were first implemented in the second cycle's evaluation. We computed simple univariate and bivariate descriptive statistics to evaluate reported behavior change.

RESULTS

Maximum enrollment for each course was set at 30 to maximize interaction and personalized attention. There were 238 total applicants for the 6 courses of cycle 1 (averaging 27 waitlisted applicants per course), and 247 applicants for the 4 courses of cycle 2 (averaging 37 waitlisted applicants per course). Priority for admission was given to applicant staff at nonprofit CBOs working in underserved California communities.

Of the 171 total course participants, 144 (84%) completed an application survey. Table 2 demonstrates that participants came to the course with more experience with training than with data. Forty-two percent had previously trained groups “many times.” Yet only 22% reported “a lot of experience” collecting data, and 16% reported “a lot of experience” analyzing data and using it to inform organizational, programmatic, or advocacy decisions. Participants reported more direct experience with conducting a community assessment, as 45% had previously worked on a community assessment. Participants came from organizations typical of those representing and serving underserved communities (i.e., nonprofit, community-based, service delivery, and advocacy organizations). The organizations ranged in size of budget and staff. The participants themselves were a diverse group, as 42% were bilingual, speaking more than 5 languages.

TABLE 2—Participants’ Previous Training and Data Experience and Organizational Characteristics: Data & Democracy Application Survey, California, Cycle 1 (2005–2007) and Cycle 2 (2008–2010)

| Characteristics | Valid % |
| --- | --- |
| Participant characteristics | |
| Previous training experience | |
| I have trained groups many times | 42 |
| I have trained groups a few times | 40 |
| I do not have training experience | 15 |
| Previous experience collecting data | |
| A lot of experience | 22 |
| Some experience | 56 |
| Little or no experience | 21 |
| Previous experience analyzing data | |
| A lot of experience | 16 |
| Some experience | 59 |
| Little or no experience | 23 |
| Previous experience using data in community work | |
| A lot of experience | 16 |
| Some experience | 59 |
| Little or no experience | 24 |
| Previous experience with community assessments | |
| Worked on one myself | 45 |
| Had contact with one conducted by others | 26 |
| Little or no experience | 28 |
| Language capacityᵃ | |
| English only | 59 |
| Spanish only | 20 |
| English–Spanish bilingual | 13 |
| English–other language bilingual | 7 |
| Organizational characteristics | |
| Organization typeᵇ,ᶜ | |
| 501(c)3 status nonprofit | 73 |
| Community-based or grassroots | 46 |
| Municipal or county health or other department | 16 |
| Hospital, clinic, or other service provider | 14 |
| State health or other department | 7 |
| Private or incorporated | 4 |
| Academic institution | 4 |
| Other | 21 |
| Organizational size, no. staff | |
| 1–5 | 17 |
| 6–10 | 14 |
| 11–20 | 15 |
| > 20 | 53 |
| Organizational budget, $/y | |
| < 100 000 | 13 |
| 100 000–1 000 000 | 34 |
| > 1 000 000 | 49 |
| Organization focusᵇ,ᶜ | |
| Health outreach, promotion, or education | 86 |
| Advocacy or public policy | 66 |
| Program planning or implementation | 59 |
| Care or service delivery | 45 |
| Research or evaluation | 32 |
| Volunteer | 23 |
| Consulting | 14 |
| Other | 18 |
| Target population(s)ᵇ | |
| Spanish monolingual | 69 |
| Low-income | 92 |
| Immigrant | 71 |
| Homeless | 56 |
| African American | 65 |
| American Indian | 46 |
| Asian/Pacific Islander | 60 |
| Latino | 80 |
| Other | 32 |

Note. The total sample size was n = 144.

ᵃ Collected from cycle 1 only (n = 88).

ᵇ Multiple replies allowed.

ᶜ Collected from cycle 2 only (n = 56).

Capacity Change

Table 1 summarizes pre–post course capacity on the 19 training and research skills taught in the course. Precourse capacity scores ranged from 2.40 to 3.44 on the scale of 0 to 5, with 5 being “extremely confident.” Participants reported more confidence in training skills than in data and research skills before the course began. In the postcourse survey, average self-efficacy ratings on the 19 course skills ranged from 3.93 to 4.42, and the difference between the mean scores on the training scale and the data and research scale was smaller than before the course.

Pre–post change scores for each of the 19 course skills were positive, on average, demonstrating a statistically significant increase in self-efficacy for each of the skill areas covered in the course. Pre–post changes in the 2 capacity scales showed a 1.14 average improvement in training capacity and a 1.38 average improvement in data and research capacity (both P < .001). Although a single item rather than a scale, the course skill that incorporated both training and research skills (“training others how to plan and conduct a community assessment”) also demonstrated a large improvement (mean = 1.54), exceeding the average change on either scale.

Behavior Change

At the wave 2 follow-up survey (1 year after training), the majority of respondents reported applying the community assessment skills taught in the course to their work. The skills respondents most frequently reported using in their work since the course (Table 3) were developing goals and objectives to focus a community assessment, identifying good sources of health data for community advocacy, communicating findings to targeted audiences, and identifying the pros and cons of various data collection methods. The majority reported that the course gave them skills that enhanced their current work on community assessments. In addition, 1 in 3 reported that the course taught them skills they have applied to other research activities, and nearly a quarter expected to apply the skills to future community assessments. Only a small proportion (6%) reported no change in their approach to community assessment since the course.

TABLE 3—Participants’ Frequency of Use of Data and Application of Community Assessment Skills: Data & Democracy Wave 2 Follow-Up Survey, California, Cycle 2 (2008–2010)

| Participant Skill | No. (Valid %) |
| --- | --- |
| Community assessment skills used most since courseᵃ | |
| Developing a community partnership to conduct assessment | 6 (17) |
| Developing goals and objectives to focus a community assessment | 17 (48) |
| Identifying good sources of health data for community advocacy | 12 (34) |
| Determining when to collect new health data | 5 (14) |
| Identifying pros and cons of various data collection methods | 10 (29) |
| Identifying appropriate data analysis methods | 6 (17) |
| Communicating assessment findings to targeted audiences | 10 (29) |
| Developing community assessment plan | 2 (6) |
| Working with other researchers | 9 (26) |
| Telling my community's story to funders and policymakers | 8 (23) |
| Planning or implementing program or policy change | 8 (23) |
| Changes in approach to assessments since courseᵇ | |
| Approach not changed | 2 (6) |
| Enhanced current work on community assessments | 20 (56) |
| Will apply skills to future community assessments | 8 (22) |
| Applied skills to other research processes | 11 (31) |
| Frequency of use of data since course | |
| Much more frequently | 4 (11) |
| More frequently | 11 (31) |
| About the same frequency | 16 (44) |
| Less frequently | 3 (8) |
| Don't use data in work | 2 (6) |
| Frequency of presenting data since course | |
| More often than before | 11 (31) |
| With about the same frequency | 20 (56) |
| Less frequently | 3 (8) |
| Have not presented data | 0 |
| Organization's frequency of use of data for funding since course | |
| Yes, more often than before | 15 (42) |
| No, frequency the same | 15 (42) |
| Not tried to use data for funding since course | 5 (14) |
| Organization's frequency of use of data for advocacy since course | |
| Yes, more often than before | 15 (42) |
| No, frequency the same | 19 (53) |
| Not tried to use data for advocacy since course | 0 |
| Organization's frequency of use of data for program or policy development since course | |
| Yes, more often than before | 14 (40) |
| No, frequency the same | 13 (37) |
| Not tried to use data for program or policy since course | 6 (17) |

Note. The sample size was n = 36.

ᵃ Respondents could check up to 3 responses.

ᵇ Respondents could check all that apply.

About a third of respondents reported using data “more frequently” in their work since taking the course, with an additional 11% using data “much more frequently.” Forty-four percent reported no change in use, and 8% reported decreased frequency. Nearly one third reported presenting data to others more often than before, such as in grant proposals, newsletters, and presentations.

Table 3 also summarizes reported changes in the use of data in the course participants’ organizations to determine whether there is evidence of organizational, not just participant, behavior change since the course. Approximately 4 in 10 reported that their organization had used data more frequently for funding, for advocacy, or for program or policy development.

DISCUSSION

Proficient use of data is increasingly seen as essential for heightening awareness of health problems and informing strategies to improve health, especially in underserved communities experiencing persistent disparities.1–8 However, little research has focused on understanding how CBOs acquire and use health data or collect primary data within the populations they serve.23–27 Importantly, CBOs have limited opportunities to build their capacity to actively participate in research processes, and few studies have evaluated the capacity-building programs that do exist.

Results from our Data & Democracy program and evaluation demonstrate that there was a high demand for this course, suggesting a need among CBO staff to enhance these skills. Furthermore, the Data & Democracy course was successful in demonstrating evidence of the “learning” phase of capacity building according to our conceptual model: increasing participants’ self-efficacy related to core data and research skills. Before participating in the course, participants had limited previous experience collecting, analyzing, and using health data. Although they reported more experience with community assessments before the course, they nonetheless reported low baseline self-efficacy in the actual skills used in the community assessment process. After course participation, however, participants reported greater improvements in their data and research capacity than in their training capacity, and the gap between their perceived training and data capacity narrowed by the end of the course.

Although our wave 2 sample was relatively small, results provide initial evidence of the behavior phase of capacity building: application of data and research capacity to participants’ community work. Our results demonstrate that, a year after the course, the majority of participants did increase their engagement in the research process, reporting use of the data and research skills taught in the course, as well as application of those skills to community assessments. Approximately 40% were using and presenting data more frequently in their work, and their organizations were using data for funding, advocacy, and program or policy development more frequently since the course. These results suggested that participants were closer to reaching the longer-term “results” stage of capacity building than we had expected.

The application of Kirkpatrick's model to a capacity-building framework is critical because it acknowledges and articulates important measures of the process of taking in and adopting new capacities, regardless of where along the capacity spectrum a trainee begins and ends. In the field of community capacity building, where audiences range significantly in former training, background, and expertise, evaluation of trainee progress must keep in mind this learning progression. Indeed, these results provide evidence that these participants moved forward along a continuum of data and research self-efficacy. Research self-efficacy is important for CBOs using health data to support their work and working in partnership with researchers. Knowledge of terminology and methods is important, but will only go so far in the deliberative process of research design. The community representative must also have the confidence to design or partner in a research process appropriate to their community contexts and organizational needs.

This study had several strengths. First, it included a large sample that participated in courses over a 5-year period, representing diverse communities, organizations, and geographic regions of California. Second, although the change in capacity was not positive for every course participant in every skill area (as might be expected), our analysis yielded significant pre–post capacity changes for both individual items and scaled skill areas. Our main limitation was that, because we prioritized survey confidentiality, we could link only the pre- and postcourse surveys to each other and could not link them to the application or follow-up surveys. This meant that we were unable to explore individual or organizational determinants of self-efficacy, or the role of self-efficacy in behavior a year later. Another limitation was that the application process and our limited evaluation resources did not allow us to randomly assign CBO staff to a course and comparison group. As a result, our follow-up survey questions relied on participant self-report of what had changed in their work as a result of their participation in Data & Democracy.

Nonetheless, our results suggest that an intensive, short-term training program can achieve large immediate gains in data and research self-efficacy in community-based organization staff. In addition, they demonstrate initial evidence of behavior change related to use of data and research skills to support their community work a year later. We argue that providing data and research capacity-building opportunities and resources to CBOs working in community health is both an essential piece of building infrastructure for these organizations’ growing data needs, and a facilitator of more equitable and effective partnerships with researchers. Although the purpose of this 3-day course was not to create researchers, CBOs that are better “consumers” of health data and empowered participants in research processes are more effective in assessing their community's health needs, advocating and planning for needed programs and services, and employing evidence-based practice.

The community research capacity literature has identified the need for validated measures of research capacity to truly understand the impact capacity-building efforts have on CBOs and the community at large.34 Although we did not set out to create validated measures, we hope that these results will inform that effort by providing a conceptual model of the community research capacity–building process, and providing a case study of how this sample of CBOs experienced learning and behavior change. Future research should attempt to capture all stages of community research capacity development to better understand the process and the outcomes of such research capacity–building efforts.

Acknowledgments

This program and the data analysis were supported by The California Endowment, National Institutes of Health (NIH) National Institute of Environmental Health Sciences (NIEHS; grant 5RC1ES018121), National Institute on Aging (NIA; grant P30-AG021684), and National Institute of Mental Health (NIMH; grant T32MH020031).

The authors would like to thank Barbara Masters and Gigi Barsoum, program officers of The California Endowment for the Data & Democracy project. The Health DATA program also acknowledges the support of the numerous community-based organizations who served on the Data & Democracy Advisory Committee, hosted the trainings, and facilitated enrollment outreach, and the many individuals who helped guide the development of the course curriculum and training guides. Advisory board members included Andrea Zubiate (California Department of Health Services Indian Health Program); Crystal Crawford (California Black Women's Health Project); Daniel Ruiz (AltaMed Health Services Corporation); Denise Adams-Simms (California Black Health Network); Gema Morales-Meyer (Los Angeles County Department of Health Services Whittier Health Center/SPA 7); Gloria Giraldo (California Poison Control Center, University of California San Francisco); Jaqueline Tran (Orange County Asian Pacific Islander Community Alliance); Jennifer Cho, Sarkis Semerdjyan, and Linda Jones (L.A. Care Health Plan); Laurie Primavera (Central Valley Health Policy Institute); Melinda Cordero (Network of Promotoras and Community Health Workers); Pamela Flood (California Pan-Ethnic Health Network); Raquel Donoso (Latino Issues Forum); and Wainani Au Oesterle (United Way of San Diego County). 
We also acknowledge the community advisory board members who contributed to the development of the Performing a Community Assessment curriculum: Cheryl Barrit (Long Beach Department of Health and Human Services), Marvin Espinoza (5-a-Day Power Play), Loretta Ford Jones (Healthy African American Families), Neelam Gupta (ValleyCare Community Consortium), Anita Jones (People's Choice Inc), Maria Magallanes (Health Consumer Center of LA), Lee Nattress (Disabled Advocacy Program, Community Health Systems Inc), Donald Nolar (Maternal and Child Access), William Prouty (The EDGE Project), Patricia Salas (Multi-Cultural Area Health Education Center), Valerie Smith (Yucca Loma Family Center), Antony Stately (AIDS Project LA), Michelle Strickland (Greater Beginnings for Black Babies), Orenda Warren (Minority AIDS Project), and Kynna Wright (Vivian Weinstein Child Advocacy and Leadership Program).

Note. The content does not necessarily represent the official views of The California Endowment, the NIH, NIEHS, NIA, or NIMH.

Human Participant Protection

This study consisted of anonymous evaluation data; thus, the UCLA Office of the Human Research Protection Program determined that human participants review was not needed.

References

- 1. Carman JG. Evaluation practice among community-based organizations—research into the reality. Am J Eval. 2007;28(1):60–75.

- 2. LeRoux K, Wright NS. Does performance measurement improve strategic decision making? Findings from a national survey of nonprofit social service agencies. Nonprofit Volunt Sector Q. 2010;39(4):571–587.

- 3. Morley E, Urban Institute. Outcome Measurement in Nonprofit Organizations: Current Practices and Recommendations. Washington, DC: Independent Sector; 2001.

- 4. Glasgow RE, Lichtenstein E, Marcus AC. Why don't we see more translation of health promotion research to practice? Rethinking the efficacy-to-effectiveness transition. Am J Public Health. 2003;93(8):1261–1267.

- 5. Glasgow RE, Emmons KM. How can we increase translation of research into practice? Types of evidence needed. Annu Rev Public Health. 2007;28:413–433.

- 6. Glasgow RE. What types of evidence are most needed to advance behavioral medicine? Ann Behav Med. 2008;35(1):19–25.

- 7. Green LW, Mercer SL. Can public health researchers and agencies reconcile the push from funding bodies and the pull from communities? Am J Public Health. 2001;91(12):1926–1929.

- 8. Leung MW, Yen IH, Minkler M. Community based participatory research: a promising approach for increasing epidemiology's relevance in the 21st century. Int J Epidemiol. 2004;33(3):499–506.

- 9. Israel BA, Schulz AJ, Parker EA, Becker AB. Review of community-based research: assessing partnership approaches to improve public health. Annu Rev Public Health. 1998;19(1):173–202.

- 10. Kretzmann JP, McKnight JL. Building Communities From the Inside Out: A Path Towards Finding and Mobilizing a Community's Assets. Chicago, IL: ACTA Publications; 1993.

- 11. McKnight J. Regenerating community. Soc Policy. 1987;17:54–58.

- 12. Minkler M. Using participatory action research to build healthy communities. Public Health Rep. 2000;115(2-3):191–197.

- 13. Raczynski JM, Cornell CE, Stalker V, et al. Developing community capacity and improving health in African American communities. Am J Med Sci. 2001;322(5):294–300.

- 14. Steuart GW. Social and cultural perspectives: community intervention and mental health. Health Educ Q. 1993;(Suppl 1):S99–S111.

- 15. Viswanathan M, Ammerman A, Eng E, et al. Community-based participatory research: assessing the evidence. Evid Rep Technol Assess (Summ). 2004;(99):1–8.

- 16. Israel BA, Lichtenstein R, Lantz P, et al. The Detroit Community–Academic Urban Research Center: development, implementation, and evaluation. J Public Health Manag Pract. 2001;7(5):1–19.

- 17. Cashman SB, Adeky S, Allen AJ, et al. The power and the promise: working with communities to analyze data, interpret findings, and get to outcomes. Am J Public Health. 2008;98(8):1407–1417.

- 18. Chen W, Petitti D, Enger S. Limitations and potential uses of census-based data on ethnicity in a diverse community. Ann Epidemiol. 2004;14(5):339–345.

- 19. Houghton F. Misclassification of racial/ethnic minority deaths: the final colonization. Am J Public Health. 2002;92(9):1386.

- 20. Krieger N, Chen J, Waterman P, Rehkopf D, Subramanian S. Race/ethnicity, gender, and monitoring socioeconomic gradients in health: a comparison of area-based socioeconomic measures—The Public Health Disparities Geocoding Project. Am J Public Health. 2003;93(10):1655–1671.

- 21. Shavers VL. Measurement of socioeconomic status in health disparities research. J Natl Med Assoc. 2007;99(9):1013–1023.

- 22. Smedley B, Stith AY, Nelson AR, eds. Unequal Treatment: Confronting Racial and Ethnic Disparities in Health Care. Washington, DC: Institute of Medicine; 2002.

- 23. Paschal AM, Kimminau K, Starrett BE. Using principles of community-based participatory research to enhance health data skills among local public health community partners. J Public Health Manag Pract. 2006;12(6):533–539.

- 24. Whitener BL, Van Horne VV, Gauthier AK. Health services research tools for public health professionals. Am J Public Health. 2005;95(2):204–207.

- 25. Oliva G, Rienks J, Chavez GF. Evaluating a program to build data capacity for core public health functions in local maternal child and adolescent health programs in California. Matern Child Health J. 2007;11(1):1–10.

- 26. Lounsbury D, Rapkin B, Marini L, Jansky E, Massie M. The community barometer: a breast health needs assessment tool for community-based organizations. Health Educ Behav. 2006;33(5):558–573.

- 27. Carman JG, Fredericks KA. Evaluation capacity and nonprofit organizations. Am J Eval. 2010;31(1):84–104.

- 28. Bailey J, Veitch C, Crossland L, Preston R. Developing research capacity building for Aboriginal & Torres Strait Islander health workers in health service settings. Rural Remote Health. 2006;6(4):556.

- 29. Braun KL, Tsark JU, Santos L, Aitaoto N, Chong C. Building Native Hawaiian capacity in cancer research and programming. A legacy of ‘Imi Hale. Cancer. 2006;107(8, Suppl):2082–2090.

- 30. Johnson JC, Hayden UT, Thomas N, et al. Building community participatory research coalitions from the ground up: the Philadelphia area research community coalition. Prog Community Health Partnersh. 2009;3(1):61–72.

- 31. May M, Law J. CBPR as community health intervention: institutionalizing CBPR within community based organizations. Prog Community Health Partnersh. 2008;2(2):145–155.

- 32. Paschal AM, Oler-Manske J, Kroupa K, Snethen E. Using a community-based participatory research approach to improve the performance capacity of local health departments: the Kansas Immunization Technology Project. J Community Health. 2008;33(6):407–416.

- 33. Scarinci IC, Johnson RE, Hardy C, Marron J, Partridge EE. Planning and implementation of a participatory evaluation strategy: a viable approach in the evaluation of community-based participatory programs addressing cancer disparities. Eval Program Plann. 2009;32(3):221–228.

- 34. Tumiel-Berhalter LM, McLaughlin-Diaz V, Vena J, Crespo CJ. Building community research capacity: process evaluation of community training and education in a community-based participatory research program serving a predominantly Puerto Rican community. Prog Community Health Partnersh. 2007;1(1):89–97.

- 35. Cook WK. Integrating research and action: a systematic review of community-based participatory research to address health disparities in environmental and occupational health in the USA. J Epidemiol Community Health. 2008;62(8):668–676.

- 36. O'Fallon LR, Dearry A. Commitment of the National Institute of Environmental Health Sciences to community-based participatory research for rural health. Environ Health Perspect. 2001;109(Suppl 3):469–473.

- 37. Parker EA, Chung LK, Israel BA, Reyes A, Wilkins D. Community organizing network for environmental health: using a community health development approach to increase community capacity around reduction of environmental triggers. J Prim Prev. 2010;31(1-2):41–58.

- 38. Freire P. Pedagogy of the Oppressed. New York, NY: Herder and Herder; 1970.

- 39. Rogers EM. Diffusion of Innovations. 4th ed. New York, NY: The Free Press; 1995.

- 40. Carroll AM, Perez M, Toy P. Performing a Community Assessment Curriculum. Los Angeles, CA: UCLA Center for Health Policy Research; September 2004. Available at: http://healthpolicy.ucla.edu/ProgramDetails.aspx?id=51. Accessed December 16, 2010.

- 41. Orfaly RA, Frances JC, Campbell P, et al. Train-the-trainer as an educational model in public health preparedness. J Public Health Manag Pract. 2005;(Suppl):S123–S127.

- 42. Kirkpatrick DL. Evaluation of training. In: Craig RL, ed. Training and Development Handbook. 2nd ed. New York, NY: McGraw-Hill; 1976.

- 43. Bandura A. Self-efficacy: toward a unifying theory of behavioral change. Psychol Rev. 1977;84(2):191–215.

- 44. Bandura A. Self-efficacy mechanism in human agency. Am Psychol. 1982;37(2):122–147.

- 45. Bandura A. Social Foundations of Thought and Action: A Social Cognitive Theory. 1st ed. Englewood Cliffs, NJ: Prentice Hall; 1985.

- 46. Israel BA, Coombe CM, Cheezum RR, et al. Community-based participatory research: a capacity-building approach for policy advocacy aimed at eliminating health disparities. Am J Public Health. 2010;100(11):2094–2102.

- 47. Umble KE, Cervero RM, Yang B, Atkinson WL. Effects of traditional classroom and distance continuing education: a theory-driven evaluation of a vaccine-preventable diseases course. Am J Public Health. 2000;90(8):1218–1224.

- 48. Farel A, Umble K, Polhamus B. Impact of an online analytic skills course. Eval Health Prof. 2001;24(4):446–459.

- 49. Maibach E, Murphy DA. Self-efficacy in health promotion research and practice: conceptualization and measurement. Health Educ Res. 1995;10(1):37–50.

- 50. Roberson PK, Shema SJ, Mundfrom DJ, Holmes TM. Analysis of paired Likert data: how to evaluate change and preference questions. Fam Med. 1995;27(10):671–675.

- 51. Carmines EG, Zeller RA. Reliability and Validity Assessment. Thousand Oaks, CA: Sage Publications Inc; 1986.

- 52. Cronbach L. Coefficient alpha and the internal structure of tests. Psychometrika. 1951;16(3):297–333.

- 53. George D, Mallery P. SPSS for Windows Step by Step: A Simple Guide and Reference: 11.0 Update. Boston, MA: Allyn & Bacon; 2003.