Abstract

Purpose.

We investigated under what conditions humans can make independent slow phase eye movements. The ability to make independent movements of the two eyes generally is attributed to a few specialized lateral-eyed animal species, for example chameleons. In our study, we showed that humans also can move the eyes in different directions. To maintain binocular retinal correspondence, independent slow phase movements of each eye are produced.

Methods.

We used the scleral search coil method to measure binocular eye movements in response to dichoptically viewed visual stimuli oscillating in orthogonal direction.

Results.

Correlated stimuli led to orthogonal slow eye movements, while the binocularly perceived motion was the vector sum of the motion presented to each eye. The importance of binocular fusion for the independence of the movements of the two eyes was investigated with anti-correlated stimuli. Anti-correlated dichoptic stimuli were perceived as a global oblique oscillatory motion and elicited a conjugate oblique motion of the eyes.

Conclusions.

We propose that the ability to make independent slow phase eye movements in humans is used to maintain binocular retinal correspondence. Eye-of-origin and binocular information are used during the processing of binocular visual information, and it is decided at an early stage whether binocular or monocular motion information is used and whether independent slow phase eye movements of each eye are produced during binocular tracking.

Humans can generate slow phase eye movements that differ in direction and frequency under dichoptic viewing conditions. Such movements serve to maintain binocular retinal correspondence and are independent from motion perception.

Introduction

The ability to move the eyes independently of each other in different directions generally is restricted to specialized lateral eyed animal species, for example chameleons. In contrast, humans with frontally placed eyes are considered to have a tight coupling between the two eyes, although there are exceptions.1 Normally, humans coordinate their eye movements in such a way that each eye is aimed at the same point at a given distance in visual space.2 Association of visual inputs derived from corresponding retinal locations provides the brain with a binocular unified image of the visual world.3 From the retinal images of the two eyes a binocular single representation of the visual world is constructed based on binocular retinal correspondence.4 To achieve this, the brain must use the visual information from each eye to stabilize the two retinal images relative to each other. Imagine one is holding two laser pointers, one in each hand, and one must project the two beams precisely on top of each other on a wall.

Eye movements contribute to binocular vision using visual feedback from image motion of each eye and from binocular correspondence. It generally is believed that the problem of binocular correspondence is solved in the primary visual cortex (V1).5,6 Neurons in V1 process monocular and binocular visual information.7 At subsequent levels of processing eye-of-origin information is lost, and the perception of the binocular world is invariant for eye movements and self-motion.8–11

An important question is to what extent binocular and/or monocular visual information is used. Binocular vision relies heavily on disparity, which works only within limits of fusion.12,13 Neurons used for binocular disparity were described first in V1 in the cat,14,15 and later were found in many other visual cortical areas: V1 to V5 (area MT) and in area MST.6,10,11,16–19 Although most is known about the neural substrate of horizontal disparities, there also is evidence for vertical disparity sensitive neurons in the visual cortex.7,20

The variation in sensitivity of cortical areas to specific stimulus attributes also suggests a hierarchical structure for motion processing. First-order motion energy detectors in striate areas are at the basis for initial ocular following responses.21 In area MT cortical neurons not only are tuned to binocular disparity, but also to orientation, motion direction, and speed.16,22 Perception of depth and motion in depth occurs outside area V1.5,9,10,23,24

Although it has been suggested that V1 is responsible for generating input signals for the control of vergence during binocular vision,6,25 and there is evidence for disparity energy sensing,26 it is unknown how visual disparity signals from V1 are connected to oculomotor command centers in the brainstem.

Also at the brainstem level, the monocular or binocular organization of oculomotor signals still is controversial (for a review see the report of King and Zhou27). On one hand, there is strong support for conjugate control using separate version and vergence centers, such as the mesencephalic reticular formation.28 On the other hand, there also are examples of a more independent control.1

Several lines of evidence suggest that at the premotor level abducens burst neurons can be divided in left and right eyes bursters, and thus have a monocular component.29–32

In humans there is behavioral evidence for asymmetrical vergence.8,33 Recently, a dual visual-local feedback model of the vergence movement system was proposed that can account for binocular as well as monocular driven vergence responses.34

To investigate to what extent humans have independent binocular control and which conditions are required for this behavior, we used a two-dimensional dichoptic visual stimulation paradigm. With this paradigm we demonstrated that humans, to sustain binocular vision, can generate slow phase eye movements with independent motion directions, and that the perceived direction of binocular motion can be dissociated from the control of eye movement.

Methods

Subjects

Six subjects participated in the experiments (age 20–52 years). None of them had a history of ocular or oculomotor pathology. Visual acuities varied between 0.8 and 1.0 (Snellen acuity chart). Stereopsis was better than 60 seconds of arc (measured with the TNO test for stereoscopic vision). None of the subjects had ocular dominance (tested at viewing distance of 2 m with Polaroid test). Subjects were naïve to the goal of the experiment with the exception of one of the authors. All experiments were performed according to the Declaration of Helsinki.

Stimulus Presentation

Subjects faced a tangent screen (dimensions 2.5 × 1.8 m) at a distance of 2 m. Visual stimuli were generated by a visual workstation (Silicon Graphics, Fremont, CA) and back-projected on the tangent screen by a high-resolution projector provided with a wide-angle lens (JVC D-ILA projector, contrast ratio 600:1; JVC, Yokohama, Japan).

The viewing angle of the whole stimulus was 60°, whereas each dot subtended 1.2° visual angle. The visual stimulus consisted of two overlaid random dot patterns (Fig. 1, left panels). One pattern oscillated horizontally, the other oscillated vertically. We stimulated with three different frequencies (f = 0.16, 0.32, and 0.64 Hz, with amplitudes of 1.72°, 0.86°, and 0.43°, respectively).
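The three frequency and amplitude pairs listed above halve the amplitude each time the frequency doubles. The following minimal sketch illustrates what that implies, assuming a pure sinusoid and assuming that the stated amplitude is the peak excursion (neither is stated explicitly here). Under those assumptions the peak pattern velocity, 2πfA, is the same in all three conditions. The function names are illustrative only.

```python
import math

# Frequency (Hz) and amplitude (deg) pairs as listed in the text.
CONDITIONS = [(0.16, 1.72), (0.32, 0.86), (0.64, 0.43)]


def pattern_position(t_s, freq_hz, amp_deg):
    """Position (deg) at time t_s of a pattern following A*sin(2*pi*f*t)."""
    return amp_deg * math.sin(2.0 * math.pi * freq_hz * t_s)


def peak_velocity(freq_hz, amp_deg):
    """Peak velocity (deg/s) of A*sin(2*pi*f*t), which equals 2*pi*f*A."""
    return 2.0 * math.pi * freq_hz * amp_deg


peak_velocities = [peak_velocity(f, a) for f, a in CONDITIONS]
for (f, a), v in zip(CONDITIONS, peak_velocities):
    print(f"f = {f:.2f} Hz, A = {a:.2f} deg: peak velocity = {v:.2f} deg/s")
```

With these values each condition gives a peak velocity of about 1.73°/s, so the conditions differ in frequency and excursion but not in peak speed.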

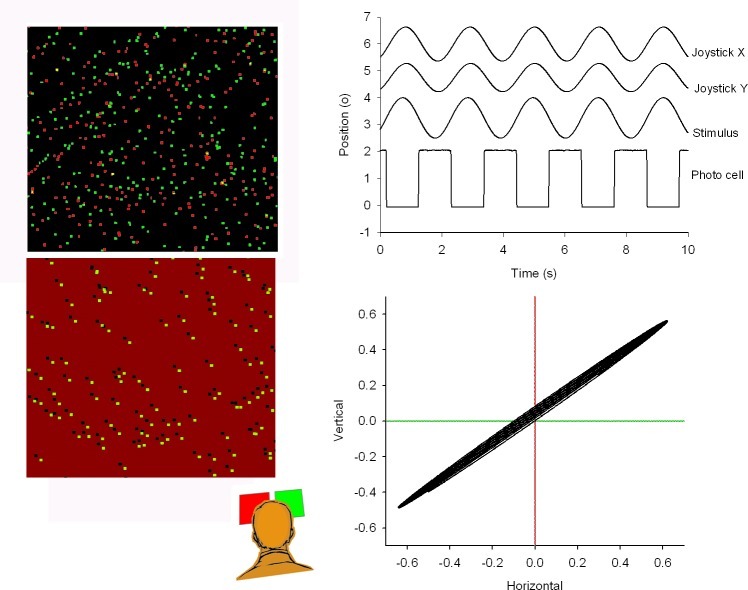

Figure 1.

Left panel: cartoon of the correlated (upper panel) and anti-correlated (lower panel) stimulus displays from a subject's point of view. From behind the red and green filters, the left and right eyes saw the stimulus oscillating between up and down, and between left and right, respectively. Subjects tracked the perceived stimulus motion with a joystick. Amplitudes of the joystick signal were scaled to the actual stimulus amplitude. The upper right panel shows the perceived direction of motion of the pattern in relation to the stimulus motion. The signal labeled “stimulus” was reconstructed from the square wave photocell signal, in which each flank corresponds to a zero crossing of the sinusoidal motion. The lower right panel shows the perceived vectorial motion direction of the stimulus and the actual motion components.

Stimuli were either correlated or anti-correlated random dot patterns. Left and right eye image separation by the filters was better than 99%. In the experiments described in our study, subjects viewed the stimuli under dichoptic (separated by red and green filters) conditions. They were instructed to stare at the presented visual stimuli while trying to maintain binocular fusion. Correlated dot patterns consisted of randomly distributed red and green dots against a black background (Fig. 1, left upper panel). Correlated patterns with 1000 elements in each pattern were presented with identical frequencies in the two eyes as well as with two different frequencies. Standard procedure was to present the horizontal stimulus to the right eye and the vertical stimulus to the left eye. Reversing this order had no effect on the general outcome of the data.

Anti-correlated stimuli consisted of random dot patterns against a red and green background with opposite contrast of the dots (Fig. 1, left lower panel). When viewed through red-green anaglyphic glasses dots appeared as light and dark dots against a grey background. Anti-correlated stereograms have the property that they cannot be fused and do not lead to depth perception,35 although they can evoke vergence eye movements with opposite sign.7,25 Performance between correlated and anti-correlated stimuli was compared using 200 element stimuli.

To synchronize stimulus presentation with the eye movement recordings, we projected a small alternating black and white square in the lower right corner of the screen. The square was covered with a black cardboard at the front side of the screen to make it invisible to the subject. The black-to-white transitions corresponded to the zero crossings of the oscillating patterns. A photodetector, placed directly in front of the black and white square, produced an analog voltage proportional to the luminance of the square. This voltage was sampled together with the eye movement signals (Fig. 1, upper right panel). In this way we were able to synchronize our sampled eye movements with the presentation onset of the stimulus on the screen within 1 ms precision.
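The synchronization scheme can be illustrated with a short sketch that locates the flanks of a sampled photocell signal and rebuilds a sinusoid whose zero crossings coincide with them. This is not the authors' software. The threshold, the mapping of the bright half-cycle to positive position, and all function names are assumptions made for the example, and the photocell trace is synthetic.

```python
import math


def find_flanks(photocell, threshold):
    """Sample indices at which the photocell signal crosses the threshold."""
    flanks = []
    for i in range(1, len(photocell)):
        if (photocell[i - 1] >= threshold) != (photocell[i] >= threshold):
            flanks.append(i)
    return flanks


def reconstruct_stimulus(photocell, amp_deg, threshold=0.5):
    """Rebuild a sinusoid with one half-cycle between consecutive flanks.

    Assumption: a bright square marks the positive half-cycle.
    Samples before the first and after the last flank are left at zero.
    """
    flanks = find_flanks(photocell, threshold)
    stimulus = [0.0] * len(photocell)
    for start, stop in zip(flanks[:-1], flanks[1:]):
        sign = 1.0 if photocell[start] >= threshold else -1.0
        for i in range(start, stop):
            phase = math.pi * (i - start) / (stop - start)
            stimulus[i] = sign * amp_deg * math.sin(phase)
    return flanks, stimulus


def intervals_uniform(flanks, tolerance=1):
    """True if all flank intervals agree within `tolerance` samples."""
    gaps = [b - a for a, b in zip(flanks[:-1], flanks[1:])]
    return max(gaps) - min(gaps) <= tolerance


# Synthetic photocell trace: 1000 Hz sampling, half period of 3125 samples
# (0.16 Hz), bright during even half-cycles.
FS_HZ = 1000.0
HALF_PERIOD = 3125
photocell = [1.0 if (i // HALF_PERIOD) % 2 == 0 else 0.0 for i in range(15000)]

flanks, stimulus = reconstruct_stimulus(photocell, amp_deg=1.72)
estimated_freq_hz = FS_HZ * (len(flanks) - 1) / (2.0 * (flanks[-1] - flanks[0]))
print(flanks, estimated_freq_hz, intervals_uniform(flanks))
```

A check such as `intervals_uniform` is one simple way to flag an irregular flank interval, for example one caused by a skipped frame; the procedure actually used for that verification is not described in the text.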

Eye Movement and Perceived Movement Registration

Binocular eye movements of human subjects were measured using the two-dimensional magnetic search coil method,36 which has a resolution of 20 seconds of arc.

Subjects indicated the perceived motion with a joystick (Fig. 1, bottom right panel).

Analog signals were sampled at 1000 Hz with 16-bit precision by a PC-based data acquisition system (CED1401; Cambridge Electronic Design, Cambridge, UK). Before digitization, signals were fed through a low-pass filter with a cut-off frequency of 250 Hz. The overall noise level was less than 1.5 minutes of arc.

Zero crossings in the sampled photocell signal were determined by the computer, and used to reconstruct the stimulus. The signal also was used to verify that no frames had been skipped. The next step of the analysis consisted of removing saccades from the eye coil signals. Saccades were identified with the following criteria: velocity threshold 12°/s, minimum amplitude 0.2°, and acceleration threshold 1000°/s². They subsequently were removed from the raw eye movement signal (for a detailed description see the report of van der Steen and Bruno37).
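As an illustration of how the three saccade criteria might be combined, the sketch below flags episodes in which eye velocity exceeds the velocity threshold, keeps those that also satisfy the amplitude and acceleration criteria, and removes them by discarding the position change during each episode. The way the criteria are combined and the removal strategy are assumptions made for this example; the published procedure is the one described in the cited report. The test signal is synthetic.

```python
import math


def differentiate(x, fs_hz):
    """Central-difference derivative; the two end samples are set to zero."""
    d = [0.0] * len(x)
    for i in range(1, len(x) - 1):
        d[i] = (x[i + 1] - x[i - 1]) * fs_hz / 2.0
    return d


def detect_saccades(pos, fs_hz, vel_thr=12.0, acc_thr=1000.0, min_amp=0.2):
    """Return (start, stop) sample ranges of candidate saccades."""
    vel = differentiate(pos, fs_hz)
    acc = differentiate(vel, fs_hz)
    events, i, n = [], 0, len(pos)
    while i < n:
        if abs(vel[i]) <= vel_thr:
            i += 1
            continue
        start = i
        while i < n and abs(vel[i]) > vel_thr:
            i += 1
        stop = i  # first sample after the fast episode
        amplitude = abs(pos[min(stop, n - 1)] - pos[start])
        peak_acc = max(abs(a) for a in acc[start:stop])
        if amplitude >= min_amp and peak_acc > acc_thr:
            events.append((start, stop))
    return events


def remove_saccades(pos, events):
    """Rebuild the trace from sample-to-sample steps, skipping saccadic steps."""
    in_saccade = [False] * len(pos)
    for start, stop in events:
        for i in range(start, stop):
            in_saccade[i] = True
    smooth = [pos[0]]
    for i in range(1, len(pos)):
        step = 0.0 if in_saccade[i] else pos[i] - pos[i - 1]
        smooth.append(smooth[-1] + step)
    return smooth


# Synthetic trace: slow 0.16 Hz oscillation plus a 1 deg, 20 ms step at t = 1 s.
FS_HZ = 1000.0
slow = [1.72 * math.sin(2.0 * math.pi * 0.16 * i / FS_HZ) for i in range(3000)]
step = [min(max((i - 1000) * 0.05, 0.0), 1.0) for i in range(3000)]
raw = [s + j for s, j in zip(slow, step)]

events = detect_saccades(raw, FS_HZ)
smooth = remove_saccades(raw, events)
print(events, round(smooth[-1] - slow[-1], 3))
```

Holding the eye position during each flagged episode also discards the small slow-phase displacement that occurred in that interval; a fuller implementation would typically interpolate the slow-phase velocity across the gap.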

Input-output relations between stimulus and smooth eye movement signals, that is, gain and phase, were computed from the cross- and auto-spectral densities of the FFT-transformed signals.38
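The gain and phase computation can be sketched as follows. For brevity, the example evaluates the Fourier component only at the stimulus frequency with a direct sum instead of a full FFT, which gives the same value at that frequency when the record contains a whole number of stimulus cycles. The transfer function is then the cross-spectral density divided by the auto-spectral density of the stimulus. Function names, the sampling rate, and the test signals are assumptions for illustration.

```python
import cmath
import math


def fourier_component(x, freq_hz, fs_hz):
    """Complex Fourier coefficient of x at freq_hz (direct single-bin sum)."""
    w = -2j * math.pi * freq_hz / fs_hz
    return sum(v * cmath.exp(w * n) for n, v in enumerate(x))


def gain_and_phase(stimulus, response, freq_hz, fs_hz):
    """Gain and phase (deg) of response relative to stimulus at freq_hz.

    Positive phase means the response leads the stimulus.
    """
    x = fourier_component(stimulus, freq_hz, fs_hz)
    y = fourier_component(response, freq_hz, fs_hz)
    cross = x.conjugate() * y          # cross-spectral density estimate
    auto = (x.conjugate() * x).real    # auto-spectral density of the stimulus
    h = cross / auto
    return abs(h), math.degrees(cmath.phase(h))


# Synthetic check: four full cycles at 0.16 Hz, response with gain 0.8
# and a 10 deg phase lag.
FS_HZ = 100.0
FREQ_HZ = 0.16
N = 2500
stim = [1.72 * math.sin(2.0 * math.pi * FREQ_HZ * n / FS_HZ) for n in range(N)]
resp = [0.8 * 1.72 * math.sin(2.0 * math.pi * FREQ_HZ * n / FS_HZ
                              - math.radians(10.0)) for n in range(N)]

gain, phase_deg = gain_and_phase(stim, resp, FREQ_HZ, FS_HZ)
print(round(gain, 3), round(phase_deg, 2))
```

The sign convention matches the one used for the phase plots in this paper: positive values indicate a phase lead and negative values a phase lag.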

Results

Orthogonal Stimulation with Correlated Random-Dot Images

All subjects fused the two correlated stimulus patterns without binocular rivalry. The combination of the horizontal pattern motion presented to one eye and vertical pattern motion to the other eye was seen as an oblique sinusoidal movement, corresponding to the vector sum of the motions presented to each eye.

The two orthogonally oscillating visual stimuli elicited smooth tracking eye movements interrupted by small saccades. Figure 2 (left panels) shows an example of the differences in amplitudes of the horizontal and vertical components of each eye. The right eye was tracking the horizontal motion and the left eye the vertical motion. The disconjugacy between left and right eyes was restricted to the smooth components. Saccades were conjugate (differences in saccade amplitude were less than 0.1°) and corrected for drift in the smooth signals (Fig. 2, left panel, traces of left and right eyes before and after saccade removal, labeled “raw” and “smooth”). In all subjects there was an upward drift in one eye and a nasally directed drift in the other eye (Fig. 2, smooth traces), which did not change when we reversed the movement presented to each eye.

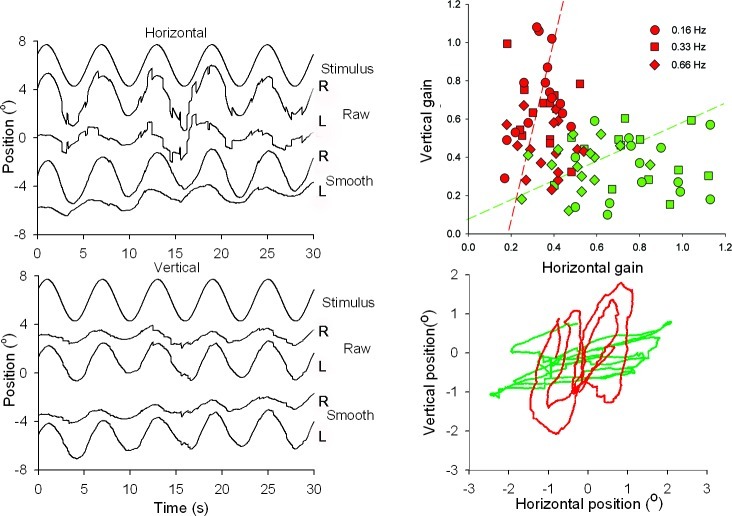

Figure 2.

Eye movements in response to orthogonally oscillating patterns (f = 0.16 Hz, A = 1.72°). Left panels show from top to bottom: stimulus motion, and horizontal and vertical movements of right (R) and left (L) eyes. Positive values of horizontal and vertical eye movement traces correspond to rightward and upward positions, respectively. Traces labeled “Raw” show eye movements with saccades, whereas in the traces labeled “Smooth,” saccades have been removed digitally. The XY-plot at the lower right panel shows an example of the horizontal and vertical excursions of the smooth components of the two eyes. The top right panel shows a scatter plot of the horizontal versus vertical gain of left (red symbols) and right (green symbols) eyes of all six subjects for three different frequencies (circles 0.16 Hz, squares 0.32 Hz, and diamonds 0.64 Hz). Pooled data points for all three frequencies were fitted with an orthogonal fit procedure, minimizing errors in X and Y direction.

The lower right panel of Figure 2 shows examples of motion trajectories of the left and right eyes in one subject. The upper right panel of Figure 2 summarizes the differences in motion direction of left and right eyes for all six subjects. Here, we plotted the gain of the horizontal against vertical smooth eye movements of the left and right eyes for three different frequencies and amplitudes. The movement directions of the left and right eyes were not exactly orthogonal to each other. The orthogonal regression lines fitted through all data points are described by the following functions: and , where VR, VL, HR, and HL are the right and left eye vertical and horizontal gains, respectively. To test if the motion directions of left and right eyes were significantly different, we calculated the horizontal versus vertical amplitude ratio (XY-ratio) of the left and right eyes. We then compared the left and right eye ratios separately for the three stimulus frequencies. For all three frequencies the left and right eye ratios were significantly different (P < 0.001, rank sum test, see box plots in Fig. 3).
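An orthogonal fit differs from ordinary least squares in that it minimizes the perpendicular distance of each point to the line, so errors in both the horizontal and vertical gain are treated alike. The sketch below shows one standard closed-form solution together with the XY-ratio as defined in the text. It does not reproduce the fitted functions or the statistics reported here; the function names and the four test points (placed exactly on a known line) are illustrative assumptions.

```python
import math


def orthogonal_fit(xs, ys):
    """Slope and intercept of the line minimizing perpendicular distances."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxy == 0.0:
        raise ValueError("slope is undefined when x and y are uncorrelated")
    slope = (syy - sxx + math.sqrt((syy - sxx) ** 2 + 4.0 * sxy ** 2)) / (2.0 * sxy)
    return slope, mean_y - slope * mean_x


def xy_ratio(horizontal_amp, vertical_amp):
    """Horizontal versus vertical amplitude ratio of one eye."""
    return horizontal_amp / vertical_amp


# Illustrative points lying exactly on y = 0.5 * x + 0.1 (not measured data).
xs = [0.0, 1.0, 2.0, 3.0]
ys = [0.1, 0.6, 1.1, 1.6]
slope, intercept = orthogonal_fit(xs, ys)
print(round(slope, 3), round(intercept, 3))
```

An eye that moves mainly horizontally has an XY-ratio above 1 and an eye that moves mainly vertically has a ratio below 1, which is why the ratio is a convenient single number for comparing the motion directions of the two eyes.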

Figure 3.

Box plots based on the ratios of horizontal versus vertical amplitudes of left and right eyes. The plot shows three pairs of box plots for three stimulus frequencies alternating for left and right eyes. Note that differences between left and right eye ratios decrease as a function of stimulus frequency.

Orthogonal Stimulation with Anti-Correlated Random-Dot Images

None of the subjects (N = 6) reported suppressing one of the images or experiencing binocular rivalry when viewing the anti-correlated stereograms. When they looked at the global pattern, they perceived an oblique motion, whereas when they shifted their attention to a single dot, only its local horizontal or vertical motion was seen.

An example of eye movements evoked in response to the anti-correlated pattern is shown in Figure 4 (left panels). The lower right panel shows an XY-plot of the right and left eye horizontal and vertical movements. Both eyes oscillated with a diagonal trajectory and were largely conjugate. The amplitude of the response was approximately 50% of that obtained with the correlated stereograms. The upper right panel of Figure 4 shows the orthogonal regression lines fitted through the gain values of the horizontal against vertical smooth eye movements of the left and right eyes for all frequencies and amplitudes.

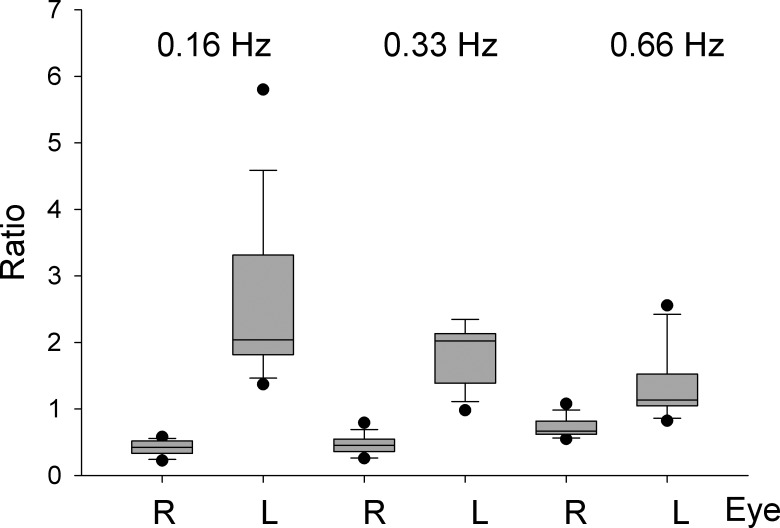

Figure 4.

Eye movements in response to the orthogonally oscillating 200 dot anti-correlated stereogram. Left panels show horizontal (middle panel) and vertical (lower traces) raw and smooth eye movements. Top two traces at each of the two left panels show stimulus motion and perceived movement indicated with a joystick. The XY-plot at the lower right panel shows an example of the horizontal and vertical excursions of the smooth components of the two eyes. Top right panel shows a scatter plot of the horizontal versus vertical gain of left (closed circles) and right (open circles) eyes of all six subjects. Data points are fitted with an orthogonal fit procedure, minimizing errors in X and Y direction.

The orthogonal regression lines are described by the following functions: Right eye , left eye . We also calculated the XY-ratio of the left and right eyes. Motion directions for left and right eye data were not significantly different (P = 0.087, rank sum test).

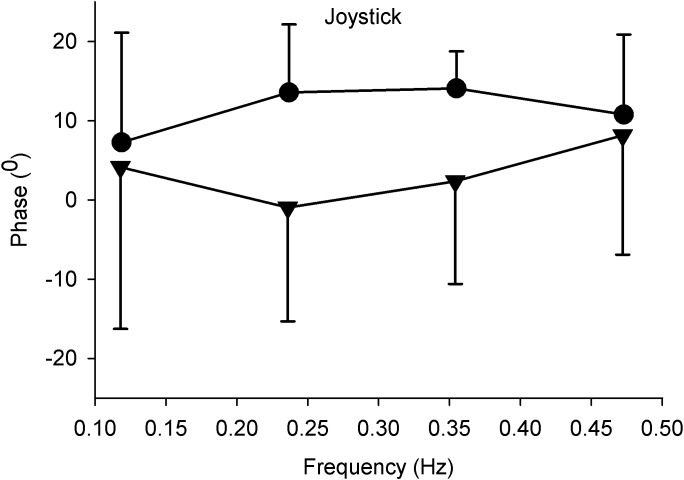

We compared the tracking performance of the perceived motion for correlated and anti-correlated 200 dot stimuli at different frequencies by having our subjects track the direction of perceived motion with a joystick. We concentrated on the timing of the tracking response and not on response amplitude because we expected considerable individual variability in response amplitude due to the subjective scaling. Under both conditions the tracking was in the same direction as the perceived motion. The phase of the tracking responses across frequencies is shown in the right panel of Figure 5 and differed between the two types of stimuli. Correlated stimuli were tracked with a mean phase lead of 3.4° ± 3.8°, whereas anti-correlated stimuli were tracked across frequencies with a mean phase lead of 11.6° ± 3.13°. This difference between correlated and anti-correlated stimuli, however, was not significant (paired t-test, P > 0.05).

Figure 5.

Mean phase and standard deviation of perceived movement as a function of stimulus frequency. Subjects (N = 6) tracked the direction of perceived motion with a joystick. Positive values indicate a phase lead, negative values a phase lag. Closed triangles: correlated stimulus patterns. Closed circles: anti-correlated stimulus patterns.

Frequency Response of Left and Right Eyes during Orthogonal Movements

To estimate the contribution of monocular and binocular slow phase eye movement components to the response, we also determined the frequency response of the left and right eyes in two dimensions.

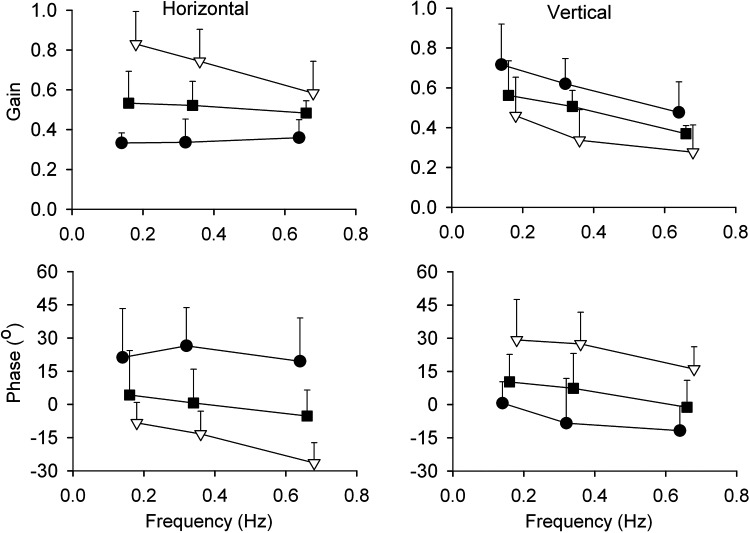

Firstly, we determined the gain and phase characteristics of the monocular (left and right eyes) and binocular (version and vergence) eye movement components. For all six subjects we analyzed the responses to orthogonal stimulation for the three different frequencies (0.16, 0.32, and 0.64 Hz) and amplitudes (1.72°, 0.86°, and 0.43°). Gain and phase were calculated from the fast Fourier transformed stimulus signal and the smooth eye movement components. Average values (N = 6) are shown in Figure 6. Each data point is based on 18 measurements.

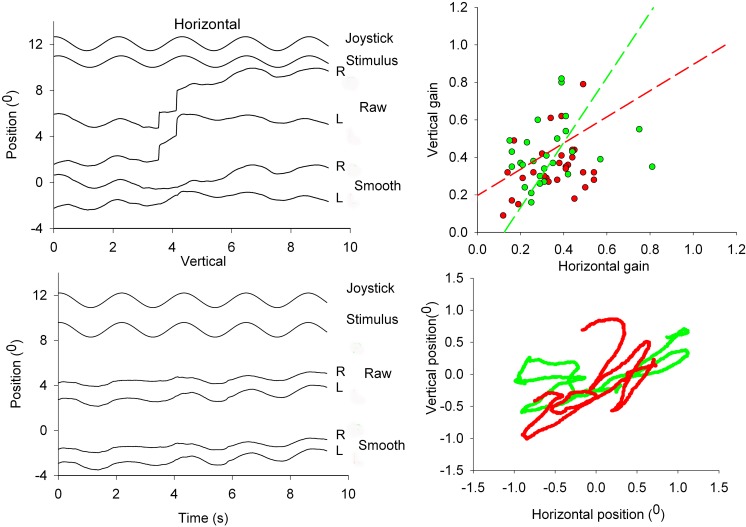

Figure 6.

Gain and phase plots of the smooth left and right eye movements in response to sinusoidal stimulation with three different frequencies. Top panels show the mean (N = 6) gain ± 1 SD of left eye (closed circles), right eye (open triangles), and version signals (closed squares) in horizontal and vertical directions. Lower panels present the phase of the left eye, right eye, and version smooth eye movements. Note the phase lag and phase lead in the lower left panel of the horizontal left and right eyes, respectively. The reverse applies to the vertical eye movements (lower right panel). The individual curves have been shifted along the X-axis with respect to each other to visualize the error bars.

Figure 6 shows that each monocularly presented stimulus evoked not only a movement confined to the direction of the stimulated eye, but in addition a smaller gain component in the other eye (see also Fig. 2). Across all subjects the gain of the movement of the viewing eye in the plane of stimulus motion was significantly larger than in the other eye. With increasing stimulus frequency, gain decreased from 0.83 to 0.58 in horizontal direction. The gain of the vertical component ranged between 0.72 and 0.48. The gain of the non-stimulated eye movement, averaged over subjects and amplitudes, varied from 0.33 to 0.36 for horizontal (Fig. 6, top left panel, closed circles) and from 0.46 to 0.28 for vertical stimulation (Fig. 6, top right panel, open triangles).

The movement components of the left and right eyes in the direction of stimulus motion lagged the stimulus motion (Fig. 6, lower left panel, open triangles and lower right panel, closed circles). Phase lag increased with frequency from −8.3 to −26° for horizontal and from +0.6 to −12° for vertical motion. In contrast, the “cross-talk” horizontal or vertical movement of the other eye had a phase lead that varied between +16 and +29°.

Version gain (Fig. 6, closed squares) ranged between 0.53 and 0.48 for horizontal, and between 0.58 and 0.37 for vertical stimulus motion. Phase was close to zero (mean 0.1° ± 4.8° for horizontal and 5.5° ± 6.0° for vertical).

In summary, this gain and phase analysis shows that each eye produced a movement with a high gain and a frequency-dependent phase lag in the direction of its own stimulus motion. This is in line with the XY-ratio analysis (Fig. 3). In addition, the gain-phase analysis shows that there is a low gain response and a phase lead of the “cross-talk” movement in the other eye. For version, gain equals the mean of left and right eyes. Phase errors of the version signal were close to zero.
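The relation between the monocular and the version responses can be illustrated by treating each response as a phasor (gain and phase combined into one complex number). The sketch below uses the usual definitions of version as the mean of the two eye signals and vergence as their difference; the left-minus-right sign convention is an assumption. The numerical example pairs the lowest reported horizontal gain and phase values of the stimulated and non-stimulated eye, and that pairing is also an assumption made only for illustration.

```python
import cmath
import math


def phasor(gain, phase_deg):
    """Complex representation of a sinusoidal response (gain, phase in deg)."""
    return cmath.rect(gain, math.radians(phase_deg))


def version_and_vergence(left, right):
    """Version is the mean of both eyes; vergence is left minus right."""
    return (left + right) / 2.0, left - right


def gain_phase(z):
    """Gain and phase (deg) of a phasor."""
    return abs(z), math.degrees(cmath.phase(z))


# Illustrative horizontal example: stimulated (right) eye with gain 0.83 and
# an 8.3 deg lag; non-stimulated (left) eye with gain 0.33 and a 16 deg lead.
right_eye = phasor(0.83, -8.3)
left_eye = phasor(0.33, 16.0)

version, vergence = version_and_vergence(left_eye, right_eye)
version_gain, version_phase = gain_phase(version)
print(round(version_gain, 2), round(version_phase, 1))
```

With these illustrative inputs the version phasor has a gain of about 0.57 and a phase of about −1.5°, showing how a phase lag in one eye and a phase lead in the other can largely cancel in the version signal.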

Discussion

In our study we showed that, with orthogonally oscillating dichoptic stimuli, correlated images within limits of fusion are tracked with slow phase eye movements in the direction and at the frequency of the stimulus presented to that eye. The perceived direction of motion was the vector sum of the motion of the two images presented to each eye. We concluded that these disjunctive eye movements help to maximize binocular correspondence and to minimize binocular correspondence errors. In our study, anti-correlated stimuli evoked a similar motion percept as the correlated stimuli, but the elicited eye movements were conjugate and moved obliquely in correspondence with the perceived direction of motion.

Binocular Eye Movement Control

The neural control of binocular eye movements is a controversial issue in oculomotor physiology. Behavioral and electrophysiologic evidence shows that separate version and vergence neuronal control systems exist with different dynamics.28,39–42 Horizontal version signals are mediated by the paramedian pontine reticular formation (PPRF), whereas vergence command centers are located in the mesencephalic region. The main body of the discussion so far has been on whether burst neurons in the PPRF carry conjugate or monocular command signals.27

The oculomotor behavior described in our study implies that the vergence center must be able to send an adjustable distribution of signals to left and right oculomotor neurons. The idea of adjustable distribution was suggested earlier for horizontal binocular eye movements by Erkelens.33 From our data it follows that this property not only applies to horizontal, but also to vertical binocular eye movements. The gain and phase plots we constructed from our data are consistent with simulated data from a recent model on the vergence eye movement system.34

Several studies have suggested that disparity-sensitive cells operate in the binocular servo control of disjunctive eye movements.5,8,43 Disparity-sensitive cells in V1 not only are sensitive to horizontal, but also to vertical disparities.14 We propose that depending on whether the two retinal images are fusible or not, disparity-sensitive cells or monocular-sensitive cells are in the feedback loop to the mesencephalic region. This way V1 not only acts as a gatekeeper for the perception of motion,44 but also controls left and right eye movement-related activity to maintain binocular correspondence. This results in paradoxically independent left and right eye movements to minimize the retinal errors between the two patterns. Such a scheme explains why in the absence of fusion only conjugate motor command signals are generated for both eyes.

Binocular Motion Perception

Maintaining fusion of binocularly perceived images involves sensory fusion mechanisms based on retinal correspondence, as well as oculomotor control mechanisms to keep the retinas of the two eyes within fusional limits.

To our knowledge, Erkelens and Collewijn first showed that large dichoptically presented random-dot stereograms with equal but opposite horizontal motion were perceived as stationary, while these patterns elicited ocular vergence movements.45 They concluded that the visual system strived for a situation in which binocular disparity as well as retinal slip were reduced to a minimum.8 Our data are in line with their findings and showed that this is not restricted to horizontal eye movements, but also applies to combined horizontal and vertical slow phase version and vergence.

The perceived motion directions of our correlated stimuli are in line with evidence that under dichoptic viewing conditions the motion components presented separately to the two eyes are integrated by the visual system into a single perceived motion.46,47

An important question is how the control mechanisms of binocular eye movements and motion perception interact. During the processing of binocular visual information, binocular retinal correspondence already is achieved in V1 by an early stage correlation of the two retinal images. It also has been suggested that binocular visual signals in V1 may be used for vergence control.6 However, several investigators claimed that the control mechanisms for binocular eye movements also have access to eye-of-origin information.33,48 We argue that the decision on the use of eye-of-origin information for eye movement depends on the fusibility of the two images. Although it is unlikely that V1 actually is the place where perception occurs,19 part of the decision process could take place at this level. In V1 disparity detectors exist that are sensitive to absolute local disparities.9 Recent evidence suggests that decisions leading to visual awareness of motion are taken in V1.44 Thus, the function of V1 could be not only to gate visual perception to higher areas, but also to gate signals to the oculomotor system.

How do anti-correlated stimuli fit in with this scheme? Normally, if the visual inputs of the two eyes do not match, a situation of binocular rivalry results. In such conditions, cellular activity in extrastriate areas is related to the perceptually dominating pattern.49,50 Anti-correlated patterns are a special case. Anti-correlated patterns can cause a perception of motion or depth in the reverse direction.51 It has been argued that perceived motion direction depends on spatial frequency.52

In our experiments, the correlated and anti-correlated random-dot patterns we used yielded a global motion percept consistent with the vector sum of the inputs to the two eyes. This suggests a high order grouping of local motion vectors independent from disparity. This global motion integration may occur in extrastriate areas, for example area MT, which is known to have disparity selection and motion selectivity.10,16,22

Footnotes

Supported by ZON-MW Grants 911-02-004 and 912-03-037. The authors alone are responsible for the content and writing of the paper.

Disclosure: J. van der Steen, None; J. Dits, None

References

- 1.MacAskill MR, Koga K, Anderson TJ. Japanese street performer mimes violation of Hering's Law. Neurology. 2011;76:1186–1187 [DOI] [PubMed] [Google Scholar]

- 2.Erkelens CJ, Van der Steen J, Steinman RM, Collewijn H. Ocular vergence under natural conditions. I. Continuous changes of target distance along the median plane. Proc R Soc Lond B Biol Sci. 1989;236:417–440 [DOI] [PubMed] [Google Scholar]

- 3.Howard IP, Rogers BJ. Seeing in Depth. Toronto: Porteous I.; 2002 [Google Scholar]

- 4.Westheimer G. Three-dimensional displays and stereo vision. Proc Biol Sci. 2011;278:2241–2248 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Cumming BG, Parker AJ. Responses of primary visual cortical neurons to binocular disparity without depth perception. Nature. 1997;389:280–283 [DOI] [PubMed] [Google Scholar]

- 6.Cumming BG, Parker AJ. Local disparity not perceived depth is signaled by binocular neurons in cortical area V1 of the Macaque. J Neurosci. 2000;20:4758–4767 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Poggio GF. Mechanisms of stereopsis in monkey visual cortex. Cerebral Cortex. 1995;5:193–204 [DOI] [PubMed] [Google Scholar]

- 8.Erkelens CJ, Collewijn H. Eye movements and stereopsis during dichoptic viewing of moving random-dot stereograms. Vision Res. 1985;25:1689–1700 [DOI] [PubMed] [Google Scholar]

- 9.Cumming BG, Parker AJ. Binocular neurons in V1 of awake monkeys are selective for absolute, not relative, disparity. J Neurosci. 1999;19:5602–5618 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.DeAngelis GC, Cumming BG, Newsome WT. Cortical area MT and the perception of stereoscopic depth. Nature. 1998;394:677–680 [DOI] [PubMed] [Google Scholar]

- 11.Parker AJ, Cumming BG. Cortical mechanisms of binocular stereoscopic vision. Prog Brain Res. 2001;134:205–216 [DOI] [PubMed] [Google Scholar]

- 12.Schor C, Heckmann T, Tyler CW. Binocular fusion limits are independent of contrast, luminance gradient and component phases. Vision Res. 1989;29:821–835 [DOI] [PubMed] [Google Scholar]

- 13.Erkelens CJ. Fusional limits for a large random-dot stereogram. Vision Res. 1988;28:345–353 [DOI] [PubMed] [Google Scholar]

- 14.Barlow HB, Blakemore C, Pettigrew JD. The neural mechanism of binocular depth discrimination. J Physiol. 1967;193:327–342 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Pettigrew JD, Nikara T, Bishop PO. Binocular interaction on single units in cat striate cortex: simultaneous stimulation by single moving slit with receptive fields in correspondence. Exp Brain Res. 1968;6:391–410 [DOI] [PubMed] [Google Scholar]

- 16.DeAngelis GC, Newsome WT. Organization of disparity-selective neurons in macaque area MT. J Neurosci. 1999;19:1398–1415 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Maunsell JH, Van Essen DC. Functional properties of neurons in middle temporal visual area of the macaque monkey. II. Binocular interactions and sensitivity to binocular disparity. J Neurophysiol. 1983;49:1148–1167 [DOI] [PubMed] [Google Scholar]

- 18.Poggio GF, Gonzalez F, Krause F. Stereoscopic mechanisms in monkey visual cortex: binocular correlation and disparity selectivity. J Neurosci. 1988;8:4531–4550 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Backus BT, Fleet DJ, Parker AJ, Heeger DJ. Human cortical activity correlates with stereoscopic depth perception. J Neurophysiol. 2001;86:2054–2068 [DOI] [PubMed] [Google Scholar]

- 20.Durand JB, Zhu S, Celebrini S, Trotter Y. Neurons in parafoveal areas V1 and V2 encode vertical and horizontal disparities. J Neurophysiol. 2002;88:2874–2879 [DOI] [PubMed] [Google Scholar]

- 21.Masson GS, Yang DS, Miles FA. Reversed short-latency ocular following. Vision Res. 2002;42:2081–2087 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.DeAngelis GC, Newsome WT. Perceptual “read-out” of conjoined direction and disparity maps in extrastriate area MT. PLoS Biol. 2004;2:E77. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Uka T, DeAngelis GC. Linking neural representation to function in stereoscopic depth perception: roles of the middle temporal area in coarse versus fine disparity discrimination. J Neurosci. 2006;26:6791–6802

- 24.Neri P, Bridge H, Heeger DJ. Stereoscopic processing of absolute and relative disparity in human visual cortex. J Neurophysiol. 2004;92:1880–1891

- 25.Masson GS, Busettini C, Miles FA. Vergence eye movements in response to binocular disparity without depth perception. Nature. 1997;389:283–286

- 26.Miura K, Sugita Y, Matsuura K, Inaba N, Kawano K, Miles FA. The initial disparity vergence elicited with single and dual grating stimuli in monkeys: evidence for disparity energy sensing and nonlinear interactions. J Neurophysiol. 2008;100:2907–2918

- 27.King WM, Zhou W. New ideas about binocular coordination of eye movements: is there a chameleon in the primate family tree? Anat Rec. 2000;261:153–161

- 28.Mays LE, Gamlin PD. Neuronal circuitry controlling the near response. Curr Opin Neurobiol. 1995;5:763–768

- 29.Zhou W, King WM. Premotor commands encode monocular eye movements. Nature. 1998;393:692–695

- 30.King WM, Zhou W. Neural basis of disjunctive eye movements. Ann N Y Acad Sci. 2002;956:273–283

- 31.King WM, Zhou W. Initiation of disjunctive smooth pursuit in monkeys: evidence that Hering's law of equal innervation is not obeyed by the smooth pursuit system. Vision Res. 1995;35:3389–3400

- 32.Cullen KE, Van Horn MR. The neural control of fast vs. slow vergence eye movements. Eur J Neurosci. 2011;33:2147–2154

- 33.Erkelens CJ. Pursuit-Dependent Distribution of Vergence Among the Two Eyes. New York: Plenum Press; 1999:145–152

- 34.Erkelens CJ. A dual visual-local feedback model of the vergence eye movement system. J Vis. 2011;11:1–14

- 35.Julesz B. Foundations of Cyclopean Perception. Chicago: The University of Chicago Press; 1971

- 36.Collewijn H, Van der Mark F, Jansen TC. Precise recording of human eye movements. Vision Res. 1975;15:447–450

- 37.Van der Steen J, Bruno P. Unequal amplitude saccades produced by aniseikonic patterns: effects of viewing distance. Vision Res. 1995;35:3459–3471

- 38.Van der Steen J, Collewijn H. Ocular stability in the horizontal, frontal and sagittal planes in the rabbit. Exp Brain Res. 1984;56:263–274

- 39.Rashbass C, Westheimer G. Independence of conjugate and disjunctive eye movements. J Physiol. 1961;159:361–364

- 40.Rashbass C, Westheimer G. Disjunctive eye movements. J Physiol. 1961;159:339–360

- 41.Mays LE, Porter JD. Neural control of vergence eye movements: activity of abducens and oculomotor neurons. J Neurophysiol. 1984;52:743–761

- 42.Mays LE, Porter JD, Gamlin PD, Tello CA. Neural control of vergence eye movements: neurons encoding vergence velocity. J Neurophysiol. 1986;56:1007–1021

- 43.Poggio GF, Fischer B. Binocular interaction and depth sensitivity in striate and prestriate cortex of behaving rhesus monkey. J Neurophysiol. 1977;40:1392–1405

- 44.Silvanto J, Cowey A, Lavie N, Walsh V. Striate cortex (V1) activity gates awareness of motion. Nat Neurosci. 2005;8:143–144

- 45.Erkelens CJ, Collewijn H. Motion perception during dichoptic viewing of moving random-dot stereograms. Vision Res. 1985;25:583–588

- 46.Alais D, van der Smagt MJ, Verstraten FA, van de Grind WA. Monocular mechanisms determine plaid motion coherence. Vis Neurosci. 1996;13:615–626

- 47.Andrews TJ, Blakemore C. Form and motion have independent access to consciousness. Nat Neurosci. 1999;2:405–406

- 48.Howard IP, Rogers BJ. Binocular Vision and Stereopsis. Oxford, UK: Oxford University Press; 1995

- 49.Logothetis NK, Schall JD. Neuronal correlates of subjective visual perception. Science. 1989;245:761–763

- 50.Logothetis NK, Leopold DA, Sheinberg DL. What is rivalling during binocular rivalry? Nature. 1996;380:621–624

- 51.Sato T. Reversed apparent motion with random dot patterns. Vision Res. 1989;29:1749–1758

- 52.Read JC, Eagle RA. Reversed stereo depth and motion direction with anti-correlated stimuli. Vision Res. 2000;40:3345–3358