Abstract

Background

Optimizing colorectal cancer (CRC) screening requires identification of unscreened individuals, and tracking screening trends. A recent NIH State of the Science Conference, “Enhancing Use and Quality of CRC Screening,” cited a need for more population data sources for measurement of CRC screening, particularly for the medically underserved. Medical claims data (claims data) are created and maintained by many health systems to facilitate billing for services rendered, and may be an efficient resource for identifying unscreened individuals. The aim of our study, conducted at a safety-net health system, was to determine whether CRC test use measured by claims data matches medical chart documentation.

Methods

We randomly selected 400 patients from a universe of 20,000 patients previously included in an analysis of CRC test use based on claims data 2002–2006 in Tarrant Co, TX. Claims data were compared with medical chart documentation by estimation of agreement and examination of test use over-/under-documentation.

Results

We found agreement on test use was very good for fecal occult blood testing (κ=0.83, 95% CI: 0.75–0.90) and colonoscopy (κ=0.91, 95% CI: 0.85–0.96), and fair for sigmoidoscopy (κ=0.39, 95% CI: 0.28–0.49). Over- and under-documentation of the two most commonly used CRC tests, colonoscopy and FOBT, were rare.

Conclusion

Use of claims data by health systems to measure CRC test use is a promising alternative to measuring CRC test use with medical chart review, and may be used to identify unscreened patients for screening interventions, and track screening trends over time.

Keywords: Colorectal Neoplasms, Early Detection of Cancer, Reproducibility of Results, Mass Screening, Medically Underserved Area

INTRODUCTION

Colorectal cancer (CRC) is the second leading cause of cancer death in the United States1,2. CRC screening can reduce mortality3, but screening remains suboptimal nationwide, particularly among individuals typically served by safety-net health systems, such as the poor, under/uninsured, and minorities2,4.

Public health entities seeking to improve CRC outcomes must first characterize rates of CRC test use and identify those who need to be screened. Presently, there is no national medical record or population-based screening registry to identify the unscreened. Indeed, a recent NIH State of the Science Conference, “Enhancing Use and Quality of CRC Screening,” cited a need for more population data sources for measurement of CRC screening, particularly for the medically underserved5,6.

Currently used approaches for large-scale assessment of CRC test use are generally too expensive, labor intensive, and/or impractical for wide-scale use by public health entities. National telephone surveys such as the Behavioral Risk Factor Surveillance System provide critical population estimates of CRC test use2, but telephone surveys are too expensive and labor intensive for routine use by health systems. Manual medical chart review is another approach to identifying unscreened patients that health systems could readily implement, but it is also time consuming, labor intensive, and impractical on a population basis. Use of electronic medical record data to characterize screening is limited by low rates of electronic record implementation (only 13% nationwide7,8, and particularly low among hospitals serving poor and under/uninsured individuals9).

Medical claims data (hereafter referred to as claims data) may be an alternative resource for individually identifying unscreened patients. Claims data are created and maintained by many health systems to facilitate patient and third-party payor billing for services rendered such as CRC screening. These data may be readily available at low cost because they are required for billing insurance companies, Medicaid, and Medicare, regardless of the overall payer mix of a given health system. Indeed, claims data have been used to measure CRC test use among health maintenance organization members and Medicare beneficiaries, but widespread implementation by health systems such as safety-net health systems has not been reported10–15.

Few studies have examined whether CRC screening test use measured by claims data matches CRC screening test use measured by more expensive and labor-intensive medical chart review12–14,16,17; to our knowledge, none have done so in an urban safety-net health setting. Specific questions include whether claims data agree with medical chart documentation of CRC test use, and whether claims data over- and/or under-count test use. Determining whether claims data match medical chart data is critical because claims data have potential to be used on a widespread basis for resourcing CRC screening interventions and tracking improvements over time.

Our primary aim was to determine whether CRC screening test use measured by claims data readily available at the health system level match CRC test use as documented by medical charts.

METHODS

Data source and sampling

John Peter Smith Health Network (JPS) is the only safety-net health system serving the city of Fort Worth and Tarrant County, TX. The system includes a tertiary care hospital and 44 hospital-based and satellite outpatient clinics, and is the primary provider of care for uninsured and Medicaid patients in Tarrant County. JPS serves over 155,000 unique patients through over 850,000 encounters each year. To serve the uninsured, JPS offers a medical assistance program for uninsured residents of Tarrant County that provides access to primary and specialty care, including CRC screening. At JPS, claims data are routinely created with every patient encounter to facilitate medical billing. These data include date of service, Current Procedural Terminology (CPT) codes for any services or procedures provided, and International Classification of Diseases, 9th revision (ICD-9) codes for diagnoses associated with the encounter. Data are stored in a Siemens enterprise database, and routinely used by billing personnel to bill patients and third-party payors such as insurers.

Previously, we extracted and used these claims data11 to estimate CRC test use from 2002 to 2006 among 20,000 JPS patients age 50–75 seen one or more times in 2006. Patients were classified as being up to date with CRC screening based on a claim with CPT coding consistent with 1) fecal occult blood testing (FOBT) in 2005 or 2006, 2) any flexible sigmoidoscopy in 2002–2006, and/or 3) any colonoscopy in 2002–2006, as previously described11. Though an individual who had a colonoscopy between 1997 and 2006 would be guideline adherent to CRC screening based on current definitions18, the lack of an electronic record of colonoscopy procedures prior to 2002 prevented analysis based on this criterion. Prior to 2002, colonoscopy was not routinely used for screening in this health system, and throughout the study period, neither fecal immunochemical testing nor computed tomographic colonography was available for screening. Distinguishing between tests done for screening and diagnostic purposes was not possible using claims data; thus, our definition captured test use for both purposes.
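The up-to-date definition above can be sketched in a few lines of Python. This is a minimal illustration only, not the code used in the study: the function name `is_up_to_date`, the `(test_type, service_date)` claim layout, and the test-type labels are hypothetical, and each claim is assumed to have already been mapped from its CPT code to a test type.

```python
from datetime import date

# Look-back windows taken from the definition in the text.
# The data layout below is a hypothetical choice for illustration.
FOBT_WINDOW = (date(2005, 1, 1), date(2006, 12, 31))
ENDOSCOPY_WINDOW = (date(2002, 1, 1), date(2006, 12, 31))


def is_up_to_date(claims):
    """Return True if any claim meets the up-to-date definition.

    `claims` is an iterable of (test_type, service_date) pairs, where
    test_type is "FOBT", "sigmoidoscopy", or "colonoscopy".
    """
    for test_type, service_date in claims:
        if test_type == "FOBT":
            start, end = FOBT_WINDOW
        elif test_type in ("sigmoidoscopy", "colonoscopy"):
            start, end = ENDOSCOPY_WINDOW
        else:
            continue  # ignore claims unrelated to CRC testing
        if start <= service_date <= end:
            return True
    return False
```

A patient with only an FOBT claim from 2003 would be classified as not up to date, while a patient with a colonoscopy claim from 2002 would be classified as up to date. Because claims cannot separate screening from diagnostic use, the sketch treats both alike.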

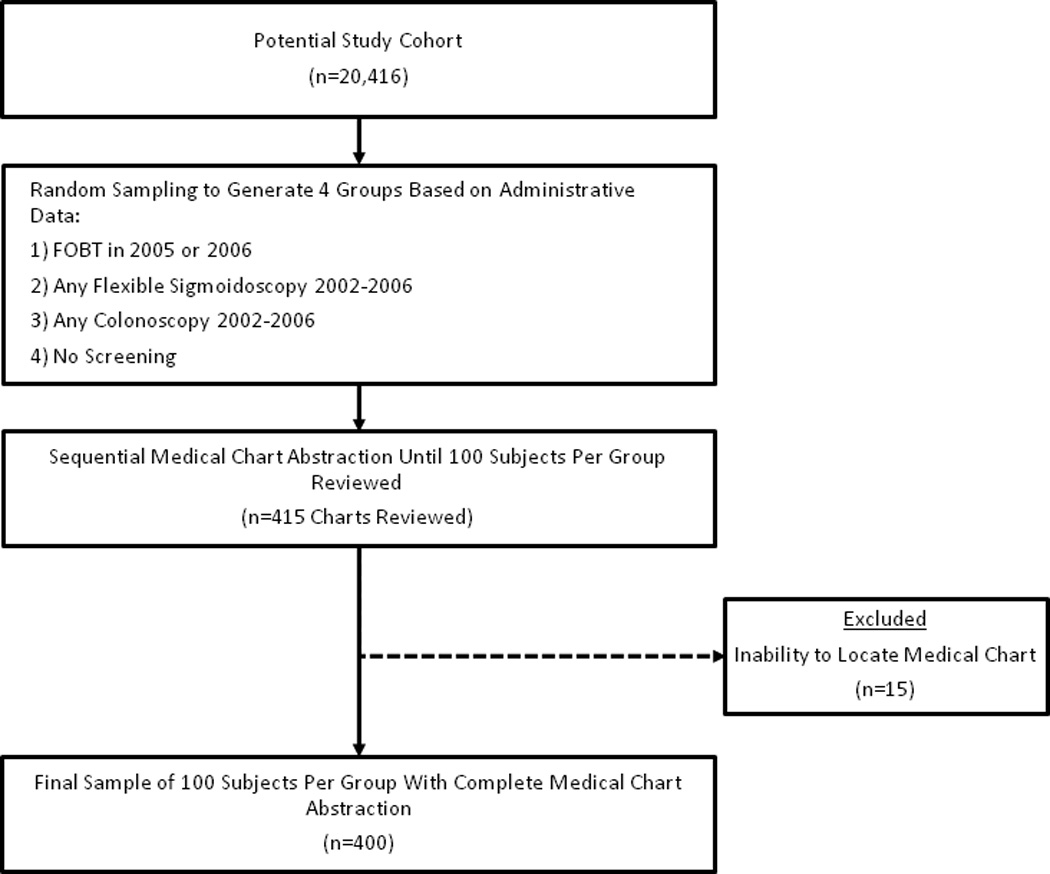

For the current study, our goal was to identify 100 patients with a claim consistent with each of the 3 CRC tests (FOBT, sigmoidoscopy, and colonoscopy), as well as 100 patients with no evidence of a claim consistent with a CRC test (see sample size justification below). To identify these patients, we generated, for each of the 4 categories, a random sample list of 200 individuals fitting exclusively into that category. Within each category, the list of 200 subjects was placed in random order. Medical charts for patients within each group were then ordered, and CRC test use information was abstracted sequentially until 100 patient charts from each category were reviewed. Four hundred fifteen potential study subjects were screened to identify a total of 400 subjects for the study (Figure 1). The 15 exclusions were due to inability to locate medical charts for review. Thus, the final study population consisted of a sample of 400 individuals characterized by claims data as having had one of 3 CRC tests (colonoscopy, FOBT, or sigmoidoscopy), or no CRC test use.

Figure 1. Study Flow.

A stratified random sample of patients from the cohort in our prior analysis of CRC test use (n=20,416) was taken to generate lists of subjects falling into one of four groups based on claims data: one of the 3 CRC tests, or no CRC test use. Patient medical records were sequentially abstracted until 100 medical charts representing each group were reviewed.
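The sampling procedure described above (a randomly ordered candidate list per group, abstracted in sequence until the target is met) might be sketched as follows. The study generated its lists in SAS; this Python version is only an illustration, and the function names, the `chart_available` callback, and the seed are assumptions made for the example.

```python
import random


def draw_candidate_lists(patients_by_group, list_size=200, seed=0):
    """Draw a randomly ordered candidate list for each claims-based group."""
    rng = random.Random(seed)
    return {
        group: rng.sample(list(ids), min(list_size, len(ids)))
        for group, ids in patients_by_group.items()
    }


def abstract_until_target(candidates, chart_available, target=100):
    """Walk the list in order, skipping patients whose chart cannot be located."""
    reviewed, excluded = [], []
    for patient_id in candidates:
        if len(reviewed) == target:
            break
        if chart_available(patient_id):
            reviewed.append(patient_id)
        else:
            excluded.append(patient_id)
    return reviewed, excluded
```

Drawing more candidates than needed (200 for a target of 100) leaves room for exclusions such as charts that cannot be located, without having to re-sample.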

Medical chart review

With permission, we adapted and used a structured tool previously developed to determine accuracy of Medicare claims data for documentation of CRC screening13,14. Medical charts were reviewed to determine presence or absence of any CRC test between 2002 and 2006. Confirmation of CRC test use was based on endoscopy test reports, lab reports, and progress notes. Data were directly entered into a referential, Microsoft Access database. Two cancer research nurses completed all data abstractions and verified abstraction quality.

Comparison of claims data to medical chart data

To compare claims data to medical chart data we used the Kappa (κ) statistic19. As described previously, degree of agreement based on κ was summarized as poor (≤0.20), fair (0.21–0.40), moderate (0.41–0.60), good (0.61–0.80), and very good (0.81–1.0)19. To evaluate rates of CRC test use under- and over-counting by claims data, we computed sensitivity and specificity of claims data for CRC test use, with medical chart review as the reference standard. We defined sensitivity as the probability that claims data pick up a test when medical chart review shows the presence of a test:

Sensitivity = True Positives / (True Positives + False Negatives), where True Positives is the number of patients with CRC test use documented by medical chart review and also identified by claims data, and False Negatives is the number of patients with CRC test use documented by medical chart review but not identified by claims data.

We defined specificity as probability that claims data show no evidence of CRC test use when medical chart review shows absence of CRC testing:

Specificity = True Negatives / (True Negatives + False Positives), where True Negatives is the number of patients with no documentation of CRC test use by medical chart who also have no claim for CRC test use, and False Positives is the number of patients with no documentation of CRC test use by medical chart but with a claim indicating CRC test use.

Matching claims evidence of test use to medical chart findings for FOBT was based on confirmation of FOBT within a 2 year period, and, for sigmoidoscopy and colonoscopy based on confirmation of test use within a 5-year period.
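For readers who want to reproduce these measures from a 2×2 table of claims versus chart findings, the following is a minimal sketch. The function name and argument order are illustrative, the κ computation is the standard two-rater Cohen's κ, and the example counts in the usage note are hypothetical, not study data.

```python
def agreement_stats(tp, fp, fn, tn):
    """Sensitivity, specificity, and Cohen's kappa from a 2x2 table.

    tp: test in chart and in claims     fp: claim but no test in chart
    fn: test in chart but no claim      tn: no test in chart, no claim
    Assumes every row and column of the table is non-empty.
    """
    n = tp + fp + fn + tn
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)

    # Observed agreement: both sources say "test" or both say "no test".
    observed = (tp + tn) / n
    # Agreement expected by chance, from the marginal totals.
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    kappa = (observed - expected) / (1 - expected)
    return sensitivity, specificity, kappa
```

With hypothetical counts of 45 true positives, 5 false positives, 5 false negatives, and 45 true negatives, sensitivity and specificity are both 0.90 and κ is 0.80, because half of the observed 90% agreement would be expected by chance.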

Sample size and ethics

Sample size estimation was conducted using nQuery Advisor 7.0. With a sample of 100 individuals in each of the testing categories, we estimated that a two-sided 95% confidence interval for the κ value would be within 10% of the observed κ value using the large-sample normal approximation. Therefore, a stratified random sample of 100 individuals from each of the four screening/non-screening categories was chosen so that the 95% confidence interval for the κ statistic would lie within ±10% of the observed κ value for each category. SAS version 9.2 was used to generate the random sample lists of patients selected for potential inclusion, and for completion of all analyses. All study procedures were approved by Institutional Review Boards at UT Southwestern Medical Center and John Peter Smith Health Network.
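The idea of a large-sample normal approximation for a κ confidence interval can be illustrated with a short sketch. This does not reproduce the nQuery Advisor calculation: it assumes a commonly used simplified standard error for κ, sqrt(p_o(1 − p_o) / (n(1 − p_e)²)), and all inputs in the usage note are hypothetical.

```python
import math


def kappa_confidence_interval(kappa, observed, expected, n, z=1.96):
    """Approximate two-sided CI for kappa (large-sample normal approximation).

    observed: proportion of pairs in agreement (p_o)
    expected: proportion of agreement expected by chance (p_e)
    n:        number of rated subjects
    Uses a simplified standard error; other variance formulas exist.
    """
    se = math.sqrt(observed * (1 - observed) / (n * (1 - expected) ** 2))
    return kappa - z * se, kappa + z * se
```

For example, with κ = 0.80, observed agreement 0.90, chance agreement 0.50, and n = 100, the standard error is 0.06, so the interval is roughly 0.68 to 0.92. The half-width shrinks in proportion to 1/√n.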

RESULTS

Mean age for the 400 patients with charts abstracted was 64 years; 67% were women, and the population was racially and ethnically diverse (Table 1).

Table 1.

Demographic characteristics, Using medical claims data for assessment of colorectal cancer test use in a safety-net health system 2002–2006, Tarrant Co, TX (n=400).

| Characteristic | Value |
|---|---|
| Age, years, mean (SD) | 63.7 (7.2) |
| Gender (a), % (n) | |
| Women | 66.8 (256) |
| Men | 33.2 (127) |
| Race, % (n) | |
| White | 36.5 (146) |
| African American | 34.5 (138) |
| Hispanic | 22.0 (88) |
| Other | 7.0 (28) |

(a) Gender data were missing for 17 of the 400 subjects; percentages are based on the 383 subjects with gender recorded.

Comparison of claims data to medical chart data for CRC test use

The κ statistic for agreement of claims data and medical chart review indicated very good agreement for FOBT (κ=0.83) and colonoscopy (κ=0.91), and fair agreement for sigmoidoscopy (κ=0.39) (Table 2). Sensitivity of claims data for all types of colon tests was high, ranging from 91 to 99%, but specificity varied widely, from 63 to 93% (Table 2). Overall, 90% of individuals with a claim for a CRC test had confirmation of use of a CRC test on chart review. Cases with lack of agreement were primarily due to limited specificity of administrative data for sigmoidoscopy, such as presence of a CPT code consistent with sigmoidoscopy without corresponding sigmoidoscopy test documentation in the medical record.

Table 2.

Agreement, sensitivity, and specificity of claims data for CRC test use compared to medical chart documentation, 2002–2006, Tarrant Co, TX.

| Test | κ (95% CI) | Sensitivity (95% CI) | Specificity (95% CI) |
|---|---|---|---|
| Colonoscopy | 0.91 (0.85–0.96) | 0.99 (0.98–1.00) | 0.93 (0.89–0.97) |
| FOBT | 0.83 (0.75–0.90) | 0.91 (0.87–0.95) | 0.92 (0.88–0.96) |
| Flexible Sigmoidoscopy | 0.39 (0.28–0.49) | 0.93 (0.89–0.97) | 0.63 (0.56–0.70) |

κ, kappa; CRC, colorectal cancer; CI, confidence interval; FOBT, fecal occult blood test.

DISCUSSION

Options for public health entities seeking to identify individuals not up to date with CRC test use, and to track trends over time on a large scale, are limited to telephone surveys, direct medical chart review, or medical claims data. While telephone surveys and direct medical chart review are both labor intensive and expensive, use of claims data may be highly feasible because most health systems create and maintain such data to facilitate medical billing. As such, the main challenge to using claims data to identify those in need of CRC testing is knowing whether such data are likely to be accurate compared to more labor-intensive approaches such as direct medical chart review.

Our results showed very good agreement between claims for FOBT and colonoscopy use and medical charts, and fair agreement for sigmoidoscopy. Further, particularly for the two most commonly used tests, colonoscopy and FOBT, under- and over-counting of test use by claims data were rare. These findings, in conjunction with our prior use of claims data to characterize CRC test use among 20,000 individuals served by our large safety-net health system11, confirm claims data have potential for measuring CRC test use on a large scale, and for identifying individuals for screening promotion interventions such as mailed invitations to complete screening.

Our findings of high agreement, and rare under- and over-counting of CRC test use by claims data compared to medical chart documentation, are similar to most prior reports. Cooper and colleagues reported excellent agreement between CPT coding for endoscopic procedures and medical chart review16, similar to our estimate for colonoscopy. Quan and colleagues found substantial agreement of ICD-9 procedure code claims for sigmoidoscopy with medical chart documentation (κ=0.67)17. But Schneider and colleagues, who assessed validity of the National Committee for Quality Assurance Health Plan Employer Data and Information Set (HEDIS) measure of CRC screening, which employs claims data aggregated at the health insurance plan level12, found evidence for underestimation of screening by claims data. Discrepant findings may be due to source of claims data. For the HEDIS study, data were captured from claims submitted to insurance companies for reimbursement, which usually requires the claim be created locally (usually by a health provider or lab) and then submitted to the insurance company. Thus, it is possible some CRC tests not present in claims data were due to failure of claim generation locally, and/or failure to submit claims to the insurance company. Our claims data were retrieved directly from the health system, which does require claims be generated by a local health provider or lab, but does not require claims be submitted to an outside insurance company for reimbursement. We postulate that claims data from a stand-alone health system where healthcare to individuals of interest is being provided may be more accurate than claims data from health insurers, because of potential variability in claim submission.

Schenck and colleagues studied validity of claims, medical record, and self-report data for measuring CRC test use among Medicare fee-for-service beneficiaries who self-reported sigmoidoscopy, colonoscopy, or FOBT on survey, using medical chart review as the criterion standard13,14. Sensitivity of Medicare claims was high for sigmoidoscopy and colonoscopy (≥93%) but low for FOBT (62%), with specificity ranging from 77% for FOBT to 95% for colonoscopy. The authors postulated Medicare claims were reliable for characterization of endoscopy, but not FOBT. Our results were similar for endoscopic tests (particularly colonoscopy), but in contrast suggest claims data may also be used to characterize FOBT use. We speculate FOBT capture by claims data in our system may have been facilitated by a high rate of processing and subsequent billing for FOBT in the clinical lab and after “in office” development.

Strengths of our study include systematic abstraction of data by registered nurses, and use of a structured database at point of data review previously developed by other investigators for comparing claims data to medical chart documentation13,14. An additional strength is use of a ‘real world’ database at a large safety-net health system that may increase generalizability of our findings. Limitations include lack of direct patient survey of CRC test use, inability to review records (and associated claims) for subjects treated outside of our health system, and need for assessment of external validity of our findings. Specifically, because it can be debated whether medical charts are a “gold standard” for capture of CRC test use, our estimates of sensitivity and specificity should be interpreted with caution. Inaccuracy in test-use characterization due to lack of direct patient survey and possibility of CRC test use outside of our health system could have contributed bias to our estimates. Indeed, using claims data obtained at the health insurer, rather than health system level, some investigators have found claims data alone underestimate test use compared to patient self-report12,13,20. However, the population under study is predominantly under/uninsured, and generally has no other options than the health system under study, which is the only safety-net public health system for residents of Tarrant County, Texas. We expect that bias due to test use outside the health system, which might have been discovered through patient surveys, may therefore be minimal. Agreement for FOBT and colonoscopy claims with medical chart documentation was very good. However, similar to other studies, we were unable to distinguish between CRC test use for screening vs. diagnostic purposes13. It is unclear whether the purpose for which a CRC test was done impacted accuracy of CRC test claims.

Claims for colonoscopy and FOBT were more accurate than sigmoidoscopy claims. Our prior work suggested that the most commonly used test within the JPS health system was FOBT, and the next most commonly used test was colonoscopy. It is possible that claims for more commonly used tests may be more likely to be accurate, but we have no way of assessing this based on the data available. Finally, we did not test external validity of our approach to assessment and confirmation of CRC test use. This will require validation of our approach by other health systems using similar administrative claims data.

CONCLUSION

The National Institutes of Health State of the Science Conference, “Enhancing Use and Quality of CRC Screening,” cited a need for more population data sources for measurement of CRC screening rates, particularly for the underserved5,6. We suggest claims data may be used for population-based assessments of CRC test use at health systems that routinely compile claims data, including safety-net health systems such as ours. Because our findings suggest that claims data can not only characterize CRC test use, but also individually identify unscreened patients for screening-promotion interventions to improve screening, further study of claims data to improve CRC screening is warranted.

ACKNOWLEDGEMENTS

SOURCE OF FUNDING

Cancer Prevention and Research Institute of Texas Grant PP100039 (Gupta, PI)

National Institutes of Health grant number 1 KL2 RR024983-01, titled “North and Central Texas Clinical and Translational Science Initiative” (Milton Packer, M.D., PI), from the National Center for Research Resources (NCRR), a component of the National Institutes of Health (NIH), and NIH Roadmap for Medical Research. The contents of this publication are solely the responsibility of the authors and do not necessarily represent the official view of the NCRR or NIH. Information on NCRR is available at http://www.ncrr.nih.gov/. Information on Re-engineering the Clinical Research Enterprise can be obtained from http://nihroadmap.nih.gov/clinicalresearch/overview-translational.asp.

We would like to thank Dr. Anna Schenck for providing the validated tool for data abstraction, as well as for her thoughtful comments on the manuscript.

Abbreviations

- CRC: colorectal cancer
- CPT: Current Procedural Terminology
- ICD: International Classification of Diseases
- JPS: John Peter Smith Health Network
- FOBT: fecal occult blood test
- κ: Kappa

Appendix A: Administrative codes used to determine colorectal cancer test use

| Current Procedural Terminology code | Colorectal test type |
|---|---|
| 44388 | Colonoscopy |
| 44391 | Colonoscopy |
| 44392 | Colonoscopy |
| 44394 | Colonoscopy |
| 44397 | Colonoscopy |
| 45355 | Colonoscopy |
| 45378 | Colonoscopy |
| 45379 | Colonoscopy |
| 45380 | Colonoscopy |
| 45381 | Colonoscopy |
| 45382 | Colonoscopy |
| 45383 | Colonoscopy |
| 45384 | Colonoscopy |
| 45385 | Colonoscopy |
| 45386 | Colonoscopy |
| 45387 | Colonoscopy |
| 45391 | Colonoscopy |
| 45392 | Colonoscopy |
| 44389 | Colonoscopy |
| 44390 | Colonoscopy |
| 44393 | Colonoscopy |
| 82270 | FOBT |
| 82271 | FOBT |
| 82272 | FOBT |
| 82273 | FOBT |
| G0107 | FOBT |
| 45330 | Flexible sigmoidoscopy |
| 45331 | Flexible sigmoidoscopy |
| 45332 | Flexible sigmoidoscopy |
| 45333 | Flexible sigmoidoscopy |
| 45334 | Flexible sigmoidoscopy |
| 45335 | Flexible sigmoidoscopy |
| 45336 | Flexible sigmoidoscopy |
| 45337 | Flexible sigmoidoscopy |
| 45338 | Flexible sigmoidoscopy |
| 45339 | Flexible sigmoidoscopy |
| 45340 | Flexible sigmoidoscopy |
| 45345 | Flexible sigmoidoscopy |
| 45341 | Flexible sigmoidoscopy |
| 45342 | Flexible sigmoidoscopy |
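A health system wishing to apply the codes in Appendix A to its own claims extract could start from a lookup like the one below. This is an illustrative sketch rather than the study's extraction code; the names `CODES_BY_TEST`, `TEST_BY_CODE`, and `classify_claim` are hypothetical, and codes are assumed to arrive as strings.

```python
# Codes transcribed from Appendix A, grouped by test type.
CODES_BY_TEST = {
    "colonoscopy": [
        "44388", "44389", "44390", "44391", "44392", "44393", "44394",
        "44397", "45355", "45378", "45379", "45380", "45381", "45382",
        "45383", "45384", "45385", "45386", "45387", "45391", "45392",
    ],
    "FOBT": ["82270", "82271", "82272", "82273", "G0107"],
    "flexible sigmoidoscopy": [
        "45330", "45331", "45332", "45333", "45334", "45335", "45336",
        "45337", "45338", "45339", "45340", "45341", "45342", "45345",
    ],
}

# Invert the grouping so each code maps directly to its test type.
TEST_BY_CODE = {
    code: test for test, codes in CODES_BY_TEST.items() for code in codes
}


def classify_claim(code):
    """Return the CRC test type for a procedure code, or None if unrelated."""
    return TEST_BY_CODE.get(code.strip().upper())
```

Normalizing case and whitespace before the lookup guards against formatting differences between billing exports; any code outside Appendix A returns `None` and is treated as unrelated to CRC testing.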

Footnotes


CONFLICTS OF INTEREST

The authors have no relevant conflicts of interest to declare.

This work was presented in abstract form at Digestive Disease Week 2010, as well as at the John Peter Smith Health Network Research Day 2010.

References

1. Jemal A, Siegel R, Xu J, Ward E. Cancer statistics, 2010. CA Cancer J Clin. 2010 Sep-Oct;60(5):277–300. doi: 10.3322/caac.20073.

2. Vital signs: colorectal cancer screening among adults aged 50–75 years - United States, 2008. MMWR Morb Mortal Wkly Rep. 2010 Jul 9;59(26):808–812.

3. Zauber AG, Lansdorp-Vogelaar I, Knudsen AB, Wilschut J, van Ballegooijen M, Kuntz KM. Evaluating test strategies for colorectal cancer screening: a decision analysis for the U.S. Preventive Services Task Force. Ann Intern Med. 2008 Nov 4;149(9):659–669. doi: 10.7326/0003-4819-149-9-200811040-00244.

4. Trivers KF, Shaw KM, Sabatino SA, Shapiro JA, Coates RJ. Trends in colorectal cancer screening disparities in people aged 50–64 years, 2000–2005. Am J Prev Med. 2008 Sep;35(3):185–193. doi: 10.1016/j.amepre.2008.05.021.

5. Allen JD, Barlow WE, Duncan RP, et al. NIH State-of-the-Science Conference Statement: Enhancing Use and Quality of Colorectal Cancer Screening. NIH Consens State Sci Statements. 2010 Feb 4;27(1).

6. Steinwachs D, Allen JD, Barlow WE, et al. National Institutes of Health state-of-the-science conference statement: Enhancing use and quality of colorectal cancer screening. Ann Intern Med. 2010 May 18;152(10):663–667. doi: 10.7326/0003-4819-152-10-201005180-00237.

7. DesRoches CM, Campbell EG, Rao SR, et al. Electronic health records in ambulatory care--a national survey of physicians. N Engl J Med. 2008 Jul 3;359(1):50–60. doi: 10.1056/NEJMsa0802005.

8. Jha AK, DesRoches CM, Campbell EG, et al. Use of electronic health records in U.S. hospitals. N Engl J Med. 2009 Apr 16;360(16):1628–1638. doi: 10.1056/NEJMsa0900592.

9. Jha AK, DesRoches CM, Shields AE, et al. Evidence of an emerging digital divide among hospitals that care for the poor. Health Aff (Millwood). 2009 Nov-Dec;28(6):w1160–w1170. doi: 10.1377/hlthaff.28.6.w1160.

10. Cooper GS, Doug Kou T. Underuse of colorectal cancer screening in a cohort of Medicare beneficiaries. Cancer. 2008 Jan 15;112(2):293–299. doi: 10.1002/cncr.23176.

11. Gupta S, Tong L, Allison JE, et al. Screening for colorectal cancer in a safety-net health care system: access to care is critical and has implications for screening policy. Cancer Epidemiol Biomarkers Prev. 2009 Sep;18(9):2373–2379. doi: 10.1158/1055-9965.EPI-09-0344.

12. Schneider EC, Nadel MR, Zaslavsky AM, McGlynn EA. Assessment of the scientific soundness of clinical performance measures: a field test of the National Committee for Quality Assurance's colorectal cancer screening measure. Arch Intern Med. 2008 Apr 28;168(8):876–882. doi: 10.1001/archinte.168.8.876.

13. Schenck AP, Klabunde CN, Warren JL, et al. Data sources for measuring colorectal endoscopy use among Medicare enrollees. Cancer Epidemiol Biomarkers Prev. 2007 Oct;16(10):2118–2127. doi: 10.1158/1055-9965.EPI-07-0123.

14. Schenck AP, Klabunde CN, Warren JL, et al. Evaluation of Claims, Medical Records, and Self-report for Measuring Fecal Occult Blood Testing among Medicare Enrollees in Fee for Service. Cancer Epidemiol Biomarkers Prev. 2008 Apr;17(4):799–804. doi: 10.1158/1055-9965.EPI-07-2620.

15. Goodwin JS, Singh A, Reddy N, Riall TS, Kuo YF. Overuse of Screening Colonoscopy in the Medicare Population. Arch Intern Med. 2011 May 9. doi: 10.1001/archinternmed.2011.212.

16. Cooper GS, Schultz L, Simpkins J, Lafata JE. The utility of administrative data for measuring adherence to cancer surveillance care guidelines. Med Care. 2007 Jan;45(1):66–72. doi: 10.1097/01.mlr.0000241107.15133.54.

17. Quan H, Parsons GA, Ghali WA. Validity of procedure codes in International Classification of Diseases, 9th revision, clinical modification administrative data. Med Care. 2004 Aug;42(8):801–809. doi: 10.1097/01.mlr.0000132391.59713.0d.

18. Levin B, Lieberman DA, McFarland B, et al. Screening and surveillance for the early detection of colorectal cancer and adenomatous polyps, 2008: a joint guideline from the American Cancer Society, the US Multi-Society Task Force on Colorectal Cancer, and the American College of Radiology. Gastroenterology. 2008 May;134(5):1570–1595. doi: 10.1053/j.gastro.2008.02.002.

19. Altman DG. Practical Statistics for Medical Research. 7 ed. London: Chapman and Hall; 1991.

20. Pignone M, Scott TL, Schild LA, Lewis C, Vazquez R, Glanz K. Yield of claims data and surveys for determining colon cancer screening among health plan members. Cancer Epidemiol Biomarkers Prev. 2009 Mar;18(3):726–731. doi: 10.1158/1055-9965.EPI-08-0751.