Abstract

The fusiform face area (FFA) is a region of human cortex that responds selectively to faces, but whether it supports a more general function relevant for perceptual expertise is debated. Although both faces and objects of expertise engage many brain areas, the FFA remains the focus of the strongest modular claims and the clearest predictions about expertise. Functional MRI studies at standard-resolution (SR-fMRI) have found responses in the FFA for nonface objects of expertise, but high-resolution fMRI (HR-fMRI) in the FFA [Grill-Spector K, et al. (2006) Nat Neurosci 9:1177–1185] and neurophysiology in face patches in the monkey brain [Tsao DY, et al. (2006) Science 311:670–674] reveal no reliable selectivity for objects. It is thus possible that FFA responses to objects with SR-fMRI are a result of spatial blurring of responses from nonface-selective areas, potentially driven by attention to objects of expertise. Using HR-fMRI in two experiments, we provide evidence of reliable responses to cars in the FFA that correlate with behavioral car expertise. Effects of expertise in the FFA for nonface objects cannot be attributed to spatial blurring beyond the scale at which modular claims have been made, and within the lateral fusiform gyrus, they are restricted to a small area (200 mm2 on the right and 50 mm2 on the left) centered on the peak of face selectivity. Experience with a category may be sufficient to explain the spatially clustered face selectivity observed in this region.

Keywords: neural selectivity, object recognition, individual differences, response reliability, ventral temporal cortex

Category-selective responses in the visual system are found for several categories, including faces, limbs, scenes, words, letters, and even musical notation (1–5). However, a plausible argument can be made for a genetically determined brain system specialized for the representation of faces (6), which includes the fusiform face area (FFA). One of the strongest arguments for modularity of face perception is domain-specificity of FFA responses (4). However, some studies suggest that the FFA also responds to nonface objects of expertise (7–11), supporting a more general account of specialization for faces according to which the FFA is part of the network tuned by experience individuating visually similar objects. However, recent work at high-resolution (HR) (12–13) raises the possibility that all expertise effects for nonface objects are a result of blurring from nonface-selective regions bordering the true FFA. Because expertise studies have only used standard-resolution (SR) functional MRI (fMRI) (8–11, 14, 15) and HR-fMRI studies have not measured expertise (12, 16), we fill a hole in the literature by investigating expertise effects with HR-fMRI.

The FFA is thought to support individuation of faces in concert with other face-selective areas, including the occipital face area (OFA), part of the superior temporal sulcus (STS), and an anterior temporal lobe area (aIT) (17, 18). Similarly, expertise individuating objects recruits a distributed network. In the first fMRI expertise study (8), training with novel objects recruited right FFA, right OFA, and right aIT. In experts with familiar objects, expertise recruited right FFA, right OFA, a small part of the parahippocampal gyrus bilaterally, and a small focus in left aIT (9, 11). One study reported an even more extensive brain network engaged by car experts attending to cars, including V1, parts of the OFA and FFA, and nonvisual areas (14). (The spatial extent of these effects may have been overestimated because of low-level stimulus differences, because even car novices showed significantly more activity to cars than control stimuli in early visual areas. In addition, the correlation with expertise was not tested, making it difficult to assess how much variance was accounted for by expertise in different areas.)

Given that faces and objects of expertise both recruit multiple areas, why is there so much focus on the FFA? The FFA occupies a special position on both sides of this theoretical debate. On the modular side, HR-fMRI reveals that only faces elicit a reliable selective response in the FFA (12, 16, 19). Neurophysiology in the monkey reveals face patches consisting almost solely of face-selective neurons (13). Based on such near-absolute selectivity for faces, authors have concluded against the role of expertise in understanding FFA function (20–23). Thus, FFA responses to objects obtained with SR-fMRI in novices or experts are sometimes attributed to spatial blurring. On the other side of the argument, although perceptual expertise in any domain likely engages a distinct set of processes, one of them, holistic processing [the tendency to process all parts of an object at once (24–26)], has been linked specifically to the FFA. During expertise training, increases in holistic processing correlate with activity in or very near the FFA (8, 27). The FFA has been predicted to be involved in those cases of expertise where individuation depends on holistic processing, as in car expertise (28). Therefore, as the focus of the strongest claims for modularity and the clearest predictions about expertise, FFA responses to objects of expertise are critical in evaluating whether face perception is, as recently put by Kanwisher, a “cognitive function with its own private piece of real estate in the brain” (23).

Our first goal was to assess whether car expertise effects that have been reported in the SR-FFA arise from face-selective voxels, nonselective voxels, or both. Our second goal was to characterize the spatial extent of expertise effects relative to the FFA clusters of activity. A tight spatial overlap of the activations for faces and objects of expertise in the FFA would be inconsistent with the idea that increased attention to objects of expertise results in increased activity in both face-selective and nonselective areas of the extrastriate cortex (14). Although objects of expertise (like faces) may plausibly attract attention, this could not explain the clustering of expertise effects for objects specifically near face-selectivity.

Here, we reveal the fine-grained spatial organization of expertise effects within and around the SR-FFA using HR-fMRI. First, we replicate the finding that the SR-FFA includes voxels that are sensitive to faces and others that are nonsensitive. Second, we show that car expertise effects are obtained not only in nonselective voxels but also in the most face-selective voxels within the FFA. Third, we find that expertise effects are spatially limited within the fusiform gyrus (FG) to the patch observed for face-selectivity. Fourth, this overlap of object expertise effects with face-selective responses is found in the context of similar patterns of activity at a coarser scale within extrastriate areas.

Results

Defining the Classic SR-FFA Effects in Subjects Varying in Car Expertise.

We measured responses to images of different categories with HR-fMRI at 7 Tesla in 25 adults recruited to vary in self-reported car expertise. Perceptual expertise was behaviorally quantified outside the scanner in a sequential matching task with cars (9). Thirteen subjects claimed expertise with cars and outperformed the others on car matching (mean d′ car experts = 2.15, SD = 0.72; car novices = 1.40, SD = 0.51). We used SR-fMRI (2.2 × 2.2 × 2.5 mm) to localize several bilateral regions of interest (ROIs) (Table S1). In addition to defining the FFA as a single area, as has been the standard for the last 15 y, we also defined posterior (FFA1) and anterior (FFA2) portions of the FFA, as recently proposed (21, 29). Of the subjects, 11 of 20 and 14 of 21 had two clear foci corresponding to these regions in the right and left hemispheres, respectively, and for another four (right) and three (left), a single FFA region was large enough to bisect into two FFA parcels (30). We also defined another object-selective area in the medial FG (medFG) (Table S1) found in our HR-slices and where effects of expertise have also been reported (9). While scanning with 24 HR-fMRI slices (1.25-mm isotropic voxels), we measured responses to faces, animals, cars, planes, and scrambled matrices (Fig. S1) during a one-back identity-discrimination task (12, 16).

Replicating Prior Cross-Validation Selectivity for Faces.

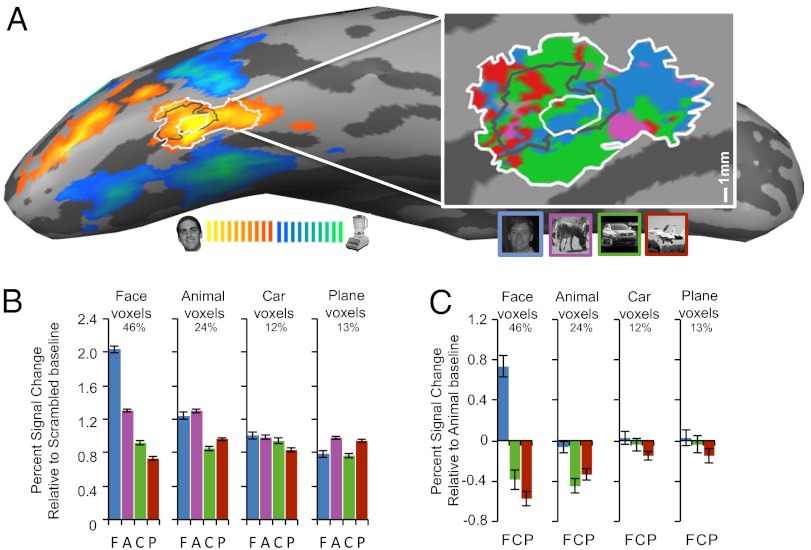

We performed HR-analyses of selectivity for each object category without spatial smoothing and regardless of expertise (12). HR-voxels within SR-FFA were sorted based on maximum response in half of the dataset. Putative face-selective voxels were often interdigitated among putative object-selective voxels (Fig. 1A). We assessed the reliability of the responses to different categories with two different indices, using cross-validation in both cases. First, in each voxel type we measured the response to each category relative to a scrambled baseline using the other half of the dataset (Fig. 1B) (12). Voxels that initially responded most to faces showed stronger responses to faces than other categories (F1,19 = 87.73, P < 0.0001). Voxels that initially responded most to animals responded equally to faces and animals relative to objects (F1,19 = 41.93, P < 0.0001). In line with prior work, putative car or plane voxels showed no category preference (cars: F1,19 = 0.001, not significant, ns; planes: F1,19 = 2.19, ns) (Fig. 1B, and Figs. S2 and S3). The same results can be illustrated relative to an animal baseline, which we use in later analyses as a high-level baseline (Fig. 1C). We also adopted an index sometimes called d′ (12, 29) but more aptly called da (see Methods) (31) to measure neural sensitivity relative to all nonpreferred categories. Again, we found reliable sensitivity for faces in the putative face-sensitive voxels (t = 7.90, P < 0.0001) and for animals in the putative animal sensitive voxels (t = 3.91, P = 0.001), but no reliable preference emerged for the putative car (t = 1.39, ns) or plane voxels (t = 0.73, ns). Thus, both measures replicate prior results that—when expertise is not considered—the SR-FFA includes HR-voxels that respond more to objects than to scrambled images but show no reliable preferences for nonface-object categories. 
Based on these results, we performed most analyses within three subpopulations of voxels: face-sensitive, animal-sensitive, and nonsensitive voxels (broken down into car-sensitive and plane-sensitive in Tables S2 and S3, also for other ROIs).
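As an illustration of the sensitivity index used above, the following minimal sketch assumes the standard definition of da: the difference between the mean responses to the preferred and nonpreferred categories, divided by the root mean square of their two standard deviations. The function name `d_a`, the use of sample (n − 1) variances, and the example values are assumptions made for illustration; the exact computation used in the study is the one described in its Methods.

```python
from math import sqrt
from statistics import mean, variance


def d_a(preferred, nonpreferred):
    """Sensitivity index d_a (assumed standard form).

    d_a = (mean_pref - mean_nonpref) / sqrt((var_pref + var_nonpref) / 2)
    Sample variances (n - 1 denominator) are an assumption of this sketch.
    """
    rms_sd = sqrt((variance(preferred) + variance(nonpreferred)) / 2.0)
    return (mean(preferred) - mean(nonpreferred)) / rms_sd


# Hypothetical held-out responses (percent signal change) for one voxel group:
# responses to the preferred category vs. all nonpreferred categories pooled.
face_psc = [1.9, 2.1, 2.4, 2.0]
other_psc = [1.0, 1.2, 0.9, 1.3, 1.1, 1.0]
print(round(d_a(face_psc, other_psc), 2))
```

Because the denominator combines both variances, the index is unaffected by which of the two distributions is noisier, which is one reason it may be preferred over d′ when variances are unequal.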

Fig. 1.

(A) SR-fMRI Face-Object contrast displayed on the inflated right hemisphere of a representative car expert. A 25-mm2 rFFA ROI was defined around the peak of face-selectivity (innermost white circle). The central black and outermost white outlines depict the 100-mm2 and 300-mm2 ROIs, respectively. (Inset) An enhanced view of the rFFA displayed on the flattened cortical sheet, with the 25-mm2, 100-mm2, and 300-mm2 ROIs. The color map shows the HR voxels sorted as a function of the maximal response in half the dataset. Average PSC to faces, animals, cars, and planes weighted over all HR voxels that were more active for objects than scrambled matrices. HR voxels were grouped by the category that elicited the maximal response in half of the data, and PSC for each category relative to scrambled matrices (B) or animals (C) was plotted for the other half of the data. Error bars show SEM. Percentages represent the average proportion for each kind of voxel. (Expertise for cars was correlated with the proportion of car-sensitive voxels in the 100-mm2 ROI: r = 0.52, P = 0.008 and in the 25-mm2 ROI: r = 0.513, P = 0.03).

Sensitivity of HR-Voxels for Cars Increases with Expertise in the FFA.

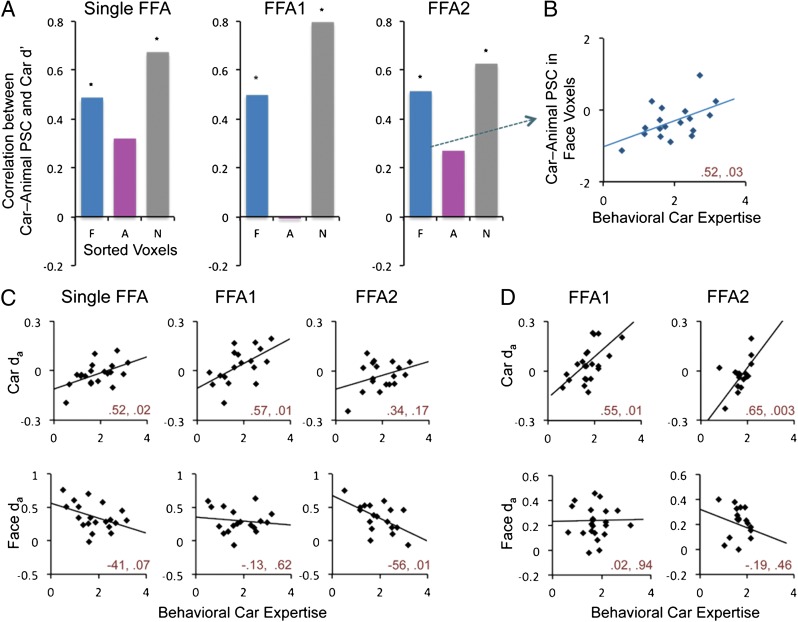

We examined the fine-scale organization of expertise effects in the FFA as a single ROI, and in FFA1 and FFA2. Using cross-validation, we found that car expertise predicted neural sensitivity to cars relative to animals (SI Text) in HR-voxels regardless of the definition of FFA (Fig. 2A). Importantly, not only nonsensitive voxels, but even highly selective face voxels showed an increased response to cars relative to animals with expertise (Fig. 2B).

Fig. 2.

(A) Correlation for behavioral Car d′ with PSC to cars–animals within face, car and nonsensitive voxels (car and plane voxels from Fig. 1) sorted for their maximal response in the other half of the data. Asterisks represent significant correlations at P < 0.05. (B) One exemplar scatterplot is displayed. (C) Scatterplots show the correlation between behavioral car expertise and voxel selectivity (da) across subjects for faces or cars in the single 100-mm2 FFA, or in FFA1 and FFA2. (D) Correlation as in C using data from 26 new subjects in Exp. 2. Pearson’s moment correlation and statistical significance are given (r, P) for each scatterplot.

Neural sensitivity (da) for each category can also be correlated with expertise (Fig. 2C). Because increases in car responses are found in both face-sensitive and nonsensitive voxels, this could lead to increased car da and reduced face da. Car da increased significantly in the single right (r) FFA ROI and in rFFA1, and although it did not reach significance in rFFA2, a second experiment (SI Text) found car da to increase with car expertise in both rFFA subregions (Fig. 2D). We also found evidence for a decrease in face da with car expertise, only reaching significance in rFFA2 in Exp. 1. In addition, we found reduced sensitivity to animals as a function of car expertise in the single FFA ROI (r = −0.646, P = 0.002) (Table S2), but not significantly in rFFA1 (r = −0.334, ns) or rFFA2 (r = −0.243, ns).

In contrast to rFFA, left (l) FFA had not shown expertise effects at SR-fMRI in prior work with real-world experts (9–11). An effect of car expertise was observed in the single lFFA ROI only (but not FFA1/FFA2) for the response to cars–animals in face-sensitive voxels (r = 0.596, P = 0.006) (Table S2). When nonsensitive voxels were split into car and plane voxels, car voxels also showed a significant effect of expertise in the single lFFA ROI (r = 0.548, P = 0.01). We found an increase in sensitivity (da) to cars with car expertise in the single lFFA ROI (r = 0.625, P = 0.003), and in lFFA2 (r = 0.555, P = 0.014). In both the single lFFA ROI and lFFA2, car expertise was also associated with a decrease in da for animals (r = −0.548, P = 0.01 and r = −0.669, P = 0.001, respectively) but not a significant decrease for face da. In Exp. 2, car da increased with car expertise in lFFA1 (r = 0.457, P = 0.049) and marginally so in lFFA2 (r = 0.454, P = 0.058), and face da was not correlated with car expertise (r = 0.244, ns and r = −0.057, ns, respectively). The specific definition of the FFA did not qualitatively change car expertise effects, especially in the right hemisphere, although this study had relatively little power to examine such differences.

Spatial Extent of Expertise Effects in the FFA.

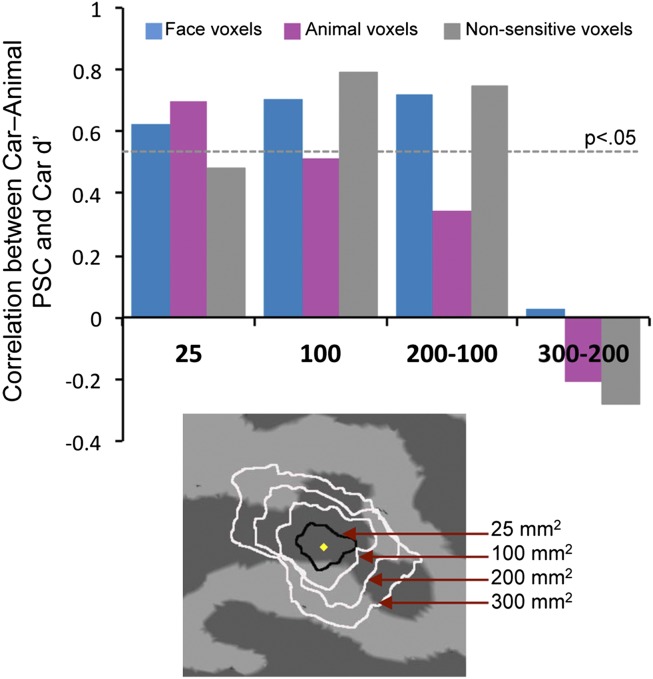

We assessed how car expertise effects vary as a function of distance from the peak of face selectivity in the FFA. This assessment could only be performed on the peak FFA, and not for FFA1/FFA2, because several subjects did not have two clear peaks (Fig. 1A). Moreover, to define ROIs of increasing sizes following the activation landscape of face responses, we were limited to subjects with a large FFA (only 12 had a 300-mm2 rFFA). Concentric ROIs centered on the localizer peak revealed that expertise effects were centered on and spatially limited to the rFFA (Fig. 3). Expertise effects were significant in the center of the FFA (25 mm2) but absent in a ring-ROI on average 8–10 mm from the peak of face selectivity (300–200 mm2). This absence of expertise effects in the outer ring was not because of reduced signal (SI Text). Similar results were obtained in the lFFA (Fig. S4). These results illustrate the failure of a conception of the FFA as an area within which face-sensitive regions are interspersed among nonsensitive regions that are functionally identical to nonsensitive areas outside the FFA. Here, nonsensitive voxels 10 mm from the peak show no expertise effect, but those near the peak do, responding to objects of expertise as highly face-sensitive voxels did.
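The correspondence between ROI areas and distances from the peak can be checked with simple geometry. The sketch below idealizes each ROI as a flat disk centered on the peak, which is an assumption: the ROIs in the study follow the activation landscape on the cortical surface and are not perfect circles. The helper name `equivalent_radius_mm` is hypothetical.

```python
from math import pi, sqrt


def equivalent_radius_mm(area_mm2):
    """Radius of a flat disk with the given area (idealized ROI shape)."""
    return sqrt(area_mm2 / pi)


# Areas of the concentric ROIs, in mm^2
for area in (25, 100, 200, 300):
    print(f"{area:>3} mm^2 -> radius {equivalent_radius_mm(area):.1f} mm")
```

Under this idealization the 200-mm2 and 300-mm2 disks have radii of about 8.0 mm and 9.8 mm, in line with the outer ring lying roughly 8–10 mm from the peak, and the 25-mm2 center has a radius of about 2.8 mm.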

Fig. 3.

Correlations in sorted voxels between PSC for cars–animals with behavioral car expertise, in circular and concentric ring ROIs: 25 mm2, 100 mm2, 200–100 mm2, and 300–200 mm2. The horizontal line represents the threshold for significance.

Partial Correlations for Car and Plane Expertise Effects in Car Experts.

Although 13 of 25 subjects reported being car experts, only three reported higher than average plane expertise. Nonetheless, behavioral performance for cars and planes was correlated (r = 0.65, P = 0.0005). Thus, we calculated partial correlations between expertise and neural responses to cars and planes, regressing out behavioral performance for the other category (Tables S2 and S3). Performance discriminating cars generally predicted FFA responses to cars independently of performance discriminating planes, and vice versa. Thus, the neural expertise effects were not primarily accounted for by variance shared between car and plane performance.
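The logic of the partial correlation can be sketched as follows, assuming a first-order partial correlation computed from the three pairwise Pearson correlations. Whether the study used exactly this form is not stated here, so the functions `pearson_r` and `partial_r` and the toy data are illustrative assumptions only.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def partial_r(x, y, z):
    """Correlation between x and y after removing the linear effect of z."""
    rxy, rxz, ryz = pearson_r(x, y), pearson_r(x, z), pearson_r(y, z)
    return (rxy - rxz * ryz) / sqrt((1 - rxz ** 2) * (1 - ryz ** 2))


# Toy values (not data from the study): x could stand for car d', y for the
# neural response to cars-animals, and z for plane d'.
car_dprime = [1.0, 2.0, 3.0, 4.0]
car_response = [2.0, 1.0, 4.0, 3.0]
plane_dprime = [1.0, -1.0, -1.0, 1.0]
print(partial_r(car_dprime, car_response, plane_dprime))
```

In this toy example the control variable is uncorrelated with both other variables, so the partial correlation equals the simple correlation; when the control variable shares variance with both, the two values diverge.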

Interestingly, although the majority of our subjects reported no special interest in planes, plane expertise effects were similar in magnitude to car expertise effects in right hemisphere ROIs (Tables S2 and S3). This finding reveals that expertise effects depend on performance rather than explicit interest. Although expertise effects for cars were found in both hemispheres, those for planes were observed only in right hemisphere ROIs (Table S3). For example, the partial correlation between car expertise (controlling for plane expertise) and the response to cars–animals in car-responsive voxels within the FFA was no different in the right and left hemispheres (t = 1.21, ns) (Table S2). However, the partial correlation between plane expertise (controlling for car expertise) and the response to planes–animals in plane-responsive voxels within the FFA was larger in the right than in the left hemisphere (t = 5.21, P < 0.001) (Table S3), with the correlation in the left hemisphere not significant (r = 0.078). One possible interpretation for this unexpected result is that because performance for cars, but not planes, was associated with explicit interest, the difference could be driven by greater semantic knowledge for cars than planes. Future work with self-reported plane experts or manipulating visual and semantic expertise could help understand this pattern.

Spatial Extent of Expertise Effects Outside of the FFA.

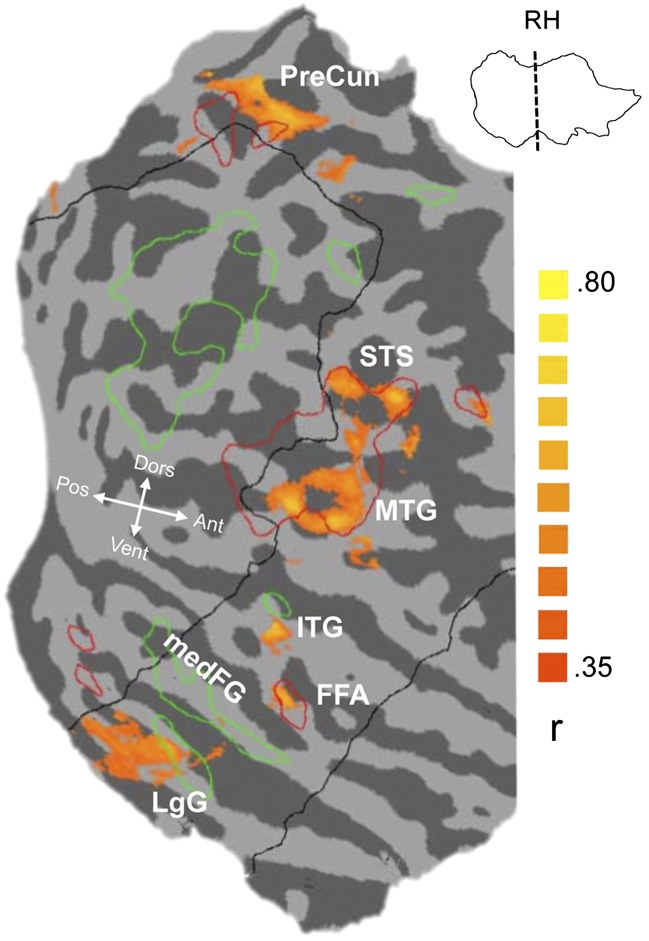

We also mapped the areas that correlated with individual differences for car discrimination at the group level. We smoothed the data from the HR-runs (Methods) and overlaid the group-averaged map of partial correlations of neural activity to cars (relative to animals) with behavioral car d′ after regressing out plane d′ onto the faces > objects SR-localizer map (Fig. 4). Although group-average maps do not easily capture overlap in individuals (e.g., see ref. 32), which is best assessed in our other analyses, we found a focus of activity associated with car expertise overlapping the FFA in both hemispheres (Fig. 4 and Fig. S5). Within the field-of-view (FOV) of our HR-slices, and as in prior work (9, 14), other regions were engaged, such as areas in the precuneus, inferior and right middle temporal gyrus, and STS (Table S4). These are regions that were also more active for faces than objects in our study (although group foci did not necessarily overlap, especially in the left hemisphere), or for the hippocampus, in prior work (e.g., see ref. 32). Despite the similarity of the patterns for car expertise and face responses in the right hemisphere, it should be kept in mind that the face localizer and the correlation map were acquired at different resolutions and that one represents a simple contrast but the other captures variance in neural activity related to individual differences in a discrimination task. Defining the peak FFA based on a functional localizer has proven robust to differences in task difficulty or contrast categories for the FFA (33), but this appears less likely in other regions. Future work comparing correlations with expertise for faces to that of objects in a sample with sufficient variability in behavioral face performance (32) could better test the similarity of these networks.

Fig. 4.

Group-average map of partial correlations between PSC for cars–animals and behavioral car d′ regressing out plane d′, overlaid on an individual flattened right hemisphere. The black outline depicts the approximate borders of the HR field of view. Red and green outlines represent regions activated by faces > objects or objects > faces, respectively, from the group-average SR localizer data at a threshold of P < 0.05 with false-discovery rate correction.

Discussion

Implications for the Interpretation of FFA Function.

When expertise is not taken into account, we replicate other HR-fMRI studies (12, 16): within the classic FFA, there are regions strongly selective to faces, interspersed among regions that show little category preference. These and related results in neurophysiological studies (13, 34) have led to the suggestion that the FFA is a highly domain-specific area, specialized for faces, just as the middle temporal area is specialized for motion (13), and that prior expertise effects at SR-fMRI were a result of spatial blurring (14, 23).

However, when considering individual differences in expertise, evidence of reliable object sensitivity was obtained in the FFA. The response to cars or planes was higher relative to that for other categories in subjects who were better at discriminating other cars or planes outside the scanner. Although HR-fMRI cannot determine whether the same neurons respond to faces and objects of expertise, expertise effects overlap with face-selectivity at a resolution that is finer than the clustering of face responses in the human FFA or face patches in the monkey. These effects are robust even within highly face-selective voxels in the center of the FFA and are restricted to an area 200 mm2 (or 50 mm2 for lFFA) around the peak of face-selectivity. Therefore, even if selectivity for faces and objects was found to depend on nonoverlapping populations of neurons, the tight spatial contiguity of these responses would still suggest commonalities in function. The results are consistent with the idea that, at least with regard to specialization in the FG, faces are one of many domains of expertise in which we have learned to individuate visually similar objects, albeit one where expertise is ubiquitous. Whether early acquisition of face expertise affords a special status to face responses remains unclear.

Expertise or Attention?

Could expertise recruit the FFA simply because experts attend to objects of interest, thereby increasing activity in most regions of extrastriate cortex, including nonsensitive regions interspersed within face-sensitive regions in the FFA (14)? We acknowledge that objects of expertise, including faces, may attract attention and that attention influences visual responses (35). However, attention should amplify existing patterns of category selectivity (36), and so this account would predict expertise effects at least as large in neighboring areas that contain a larger proportion of object-responsive neurons than in nonsensitive voxels within the FFA. Instead, expertise effects in the FG were spatially limited to a small area centered on the peak of face selectivity. Expertise effects in nonsensitive voxels in the center of FFA were as strong as those in face-sensitive voxels and dropped precipitously a short distance from the peak. In addition, expertise effects for planes in the rFFA were of the same magnitude as those for cars, dissociating the role of behavioral performance, which appears to drive the FFA, from that of explicit interest in a domain.

Experience or Preexisting Ability?

To the extent that our results are explained by learning, they are inconsistent with the idea that learning effects are distributed throughout cortex with no relation to face selectivity (22). Nonetheless, it is always possible that in a correlational study of this sort, some of the effects reflect experience-independent variance, whereby the integrity of a network of areas that includes the FFA predicts performance with objects and faces. Two aspects of our findings are more consistent with an experience than an ability account. First, our neural effects of expertise were domain-specific: not only were they obtained for cars or planes relative to baselines of responses to animals or all other categories in the calculation of da, but partial correlations for car and plane expertise allow us to point to neural effects related to domain-specific behavioral advantages, rather than a domain general ability. Second, we found some evidence of trade-offs between responses to objects of expertise and responses to animals or faces in the FFA, consistent with other work where learning in nonface domains competed with face selectivity (1, 37).

Conclusion.

Perceptual expertise as measured here likely occurs in most people as they learn to individuate objects related to professional activities or hobbies. Thus, most people’s FFA likely contains several populations of neurons selective for objects of expertise. Prior studies revealing only face-selective responses in the FFA (12) or face patches (13) relied on object categories for which subjects likely had little experience, and as we show here, even when experts are included in a sample, selectivity for objects is not easily revealed until individual differences are taken into account. Thus, we suggest that claims of strong domain-specific selectivity should be made with more caution.

Our results were generally similar when a single FFA ROI was separated into a more anterior and a more posterior part, with little consistent evidence of a difference between these two areas [indeed, mean Face da was comparable in the three definitions: single = 0.36 (0.05); FFA1 = 0.30 (0.04); FFA2 = 0.35 (0.05)]. This finding is consistent with the current lack of evidence for a clear functional separation between these two subregions of the FFA (30), but the distinction is relatively unique and our understanding of functional maps in the visual system is rapidly changing (38). One implication of our results in the context of such rapid progress is that understanding the principles of organization of ventral extrastriate cortex may be hampered by conceptions of areas anchored in the domains to which they maximally respond, because this framework offers no explanation for why a car or a plane recruits “object areas” in one individual but “face areas” in another. Uncovering what mechanisms account for why an area is a focus of specialization for domains as visually and semantically different as faces and vehicles, or birds (9, 11), chess boards (7), or radiographs (10), is a very different endeavor from studying the role of the same area in face recognition. Any theory that succeeds in only one of these two goals will be severely limited.

Methods

Subjects.

Twenty-five healthy right-handed adults (eight females), aged 22–34 y, participated for monetary compensation. Informed written consent was obtained from each subject in accordance with guidelines of the institutional review board of Vanderbilt University and Vanderbilt University Medical Center. All subjects had normal or corrected-to-normal vision.

Scanning.

Imaging was performed on a Philips Medical Systems 7-Tesla (7T) human magnetic resonance scanner at the Institute of Imaging Science at Vanderbilt University Medical Center (Nashville, TN).

HR anatomical scan.

HR T1-weighted anatomical volumes were acquired using a 3D MP-RAGE–like acquisition sequence (FOV = 256 mm, TE = 1.79 ms, TR = 3.68 ms, matrix size = 256 × 256) to obtain 172 slices of 1-mm3 isotropic voxels. HR anatomical images were used to align sets of functional data, for volume rendering (including gray matter–white matter segmentation for the purposes of inflating and flattening of the cortical surface) and for visualization of functional data.

SR functional scan.

The experimental sequence began with a single-run SR functional localizer for real-time localization of the FFA and optimal positioning of HR slices. We then acquired 30 SR slices (2.2 × 2.2 × 2.5 mm) oriented in the coronal plane. The blood-oxygen level-dependent–based signals were collected using a fast T2*-sensitive radiofrequency-spoiled 3D PRESTO sequence (FOV = 211.2 mm, TE = 22 ms, TR = 21.93 ms, volume repetition time = 2,500 ms, flip angle = 62°, matrix size = 96 × 96). For the final five subjects we achieved full-brain coverage (40 slices; 2.3 × 2.3 × 2.5 mm) in the axial/transverse plane (volume repetition time = 2,000 ms, all other scanning parameters remained the same). Slice prescriptions that incorporated face-responsive voxels (localized on an individual basis using real-time linear regression analysis during the SR functional scan) and also included the FG were selected for the subsequent HR runs.

HR functional scans.

Immediately following the SR scan, we acquired 24 HR coronal slices. We used a radio-frequency–spoiled 3D FFE acquisition sequence with SENSE (FOV = 160 mm, TE = 21 ms, TR = 32.26 ms, volume repetition time = 4,000 ms, flip angle = 45°, matrix size = 128 × 128) to obtain 1.25-mm3 isotropic voxels.

fMRI Display, Stimuli, and Task.

Images were presented on an Apple computer using Matlab (MathWorks) with the Psychophysics Toolbox extension. We used 72 grayscale images (36 faces, 36 objects) to localize face- and object-selective regions in the SR run. Subsequent runs used another 110 grayscale face images, and the same number of modern car, animal, and plane images (Fig. S1).

There were seven functional scans: one SR localizer run followed by six HR experimental runs. All runs used a blocked design using a one-back detection task with subjects indicating immediate repetition of identical images. Each image differed in size relative to the preceding image and every block included one-to-two repeats.

SR Localizer run.

The localizer scan used 20 blocks of alternating faces and common objects (20 images shown for 1 s) with a 10-s fixation at the beginning and end. (In response to scanner upgrades, we adjusted the localizer in the final five subjects to include 15 20-s blocks with 0-s fixation at the beginning and end of the run.) Average accuracy was 0.97 (range 0.69–1.0).

HR experimental runs.

After real-time alignment of the HR slices based on SR data, subjects completed six experimental runs with 20 20-s blocks (four each of faces, animals, cars, planes, and scrambled, with 20 images sequentially presented for 1 s), with 8-s fixation at the beginning and end. Within a run of 20 blocks, each category occurred once every five blocks, and two blocks of the same category never occurred in immediate succession. Average accuracy was 0.97 (range 0.81–0.99). Performance in the scanner was not related to behavioral expertise (P > 0.12).
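The block-ordering constraints described above can be sketched as a simple randomization. The sketch assumes that "once every five blocks" means each consecutive group of five blocks contains every category exactly once; the function `make_block_order` and the reshuffling strategy are hypothetical and are not the stimulus code used in the study.

```python
import random

CATEGORIES = ["faces", "animals", "cars", "planes", "scrambled"]


def make_block_order(n_cycles=4, rng=None):
    """Return a block order for one run (n_cycles x 5 blocks).

    Each cycle of five blocks contains every category once, and the same
    category never appears in two successive blocks across cycle borders.
    """
    if rng is None:
        rng = random.Random()
    order = []
    for _ in range(n_cycles):
        cycle = CATEGORIES[:]
        rng.shuffle(cycle)
        # Reshuffle if this cycle would repeat the category that just ended.
        while order and cycle[0] == order[-1]:
            rng.shuffle(cycle)
        order.extend(cycle)
    return order


order = make_block_order(rng=random.Random(0))
print(order)
```

Passing a seeded `random.Random` instance makes a given run order reproducible, which can help when the same sequence has to be regenerated for analysis.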

Behavioral Expertise Measure and Stimuli.

A behavioral task outside the scanner used a separate set of grayscale images of 56 cars and planes. Subjects performed 12 blocks of 28 sequential matching trials per category. On each trial, the first stimulus appeared for 1,000 ms followed by a 500-ms mask. A second stimulus then appeared and remained visible until the subject made a same or different response or 5,000 ms had elapsed. Subjects judged whether the two images showed cars or planes from the same make and model regardless of year. An expertise sensitivity score was calculated for cars (car d′, range 0.52–3.17) and planes (plane d′, range 0.80–3.13) for each subject.
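For readers unfamiliar with the sensitivity score, a minimal sketch of a d′ computation for a same/different matching task follows. It assumes the standard formula d′ = z(hit rate) − z(false-alarm rate), treats a "same" response on a same trial as a hit, and applies a log-linear correction to avoid rates of 0 or 1. The correction, the function name `d_prime`, and the trial counts are assumptions and are not taken from the study.

```python
from statistics import NormalDist


def d_prime(hits, misses, false_alarms, correct_rejections):
    """d' = z(hit rate) - z(false-alarm rate).

    A log-linear correction (add 0.5 to each count, 1 to each total) keeps
    the rates away from 0 and 1; this correction is an assumption here.
    """
    hit_rate = (hits + 0.5) / (hits + misses + 1)
    fa_rate = (false_alarms + 0.5) / (false_alarms + correct_rejections + 1)
    z = NormalDist().inv_cdf  # inverse of the standard normal CDF
    return z(hit_rate) - z(fa_rate)


# Hypothetical counts for one subject and one category
print(round(d_prime(hits=150, misses=18, false_alarms=30, correct_rejections=138), 2))
```

A subject responding at chance has equal hit and false-alarm rates and therefore a d′ of 0, whereas higher values indicate better discrimination independent of response bias.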

Data Analysis.

The HR T1-weighted anatomical scan was used to create a 3D brain for which translational and rotational transformations ensured a center on the anterior commissure and alignment with the anterior commissure–posterior commissure plane. For post hoc analyses only, the anatomical brain was normalized to Talairach space.

Functional data were analyzed using Brain Voyager (www.brainvoyager.com) and in-house Matlab scripts (www.themathworks.com). Preprocessing included 3D motion correction and temporal filtering (high-pass criterion of 2.5 cycles per run) with linear trend removal. Data from HR functional runs were interpolated from 1.25-mm isotropic voxels to a resolution of 1 mm isotropic using sinc interpolation. No spatial smoothing was applied at any resolution. We used standard general linear model (GLM) analyses to compute the correlation between predictor variables and actual neural activation, yielding voxel-by-voxel activation maps for each condition.

ROI selection.

ROIs were defined in 2D, except where specified. Automatic and manual segmentation were used to disconnect the hemispheres and identify the gray matter–white matter boundary. ROIs were defined from the face-object contrast in the SR localizer run as regions that responded more to faces than to objects (FFA) or the reverse (medFG). First, the center of the ROI was defined at the peak of this contrast; rings were then defined concentrically around this peak on the cortical surface to achieve a fixed area (Table S1). Analyses were performed on the 1-mm³ voxels of a given ROI that responded significantly more to objects than to the scrambled baseline (P < 0.01). Only SR localizer data were transferred to a surface format to define ROIs; all HR analyses were performed on volume data that were not transferred into surface format, avoiding blurring.
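The idea of growing an ROI outward from a peak until it reaches a fixed area can be sketched as follows. The sketch assumes each surface vertex comes with a precomputed distance from the peak and a surface area. The tuple layout, the function name `grow_roi`, and the example values are hypothetical and do not reflect the Brain Voyager procedure.

```python
def grow_roi(vertices, target_area_mm2):
    """Add vertices in order of increasing distance from the peak until
    the accumulated surface area reaches the target.

    vertices: list of (vertex_id, distance_from_peak_mm, area_mm2) tuples
    (a hypothetical representation of the cortical surface).
    Returns the selected vertex ids and the total area covered.
    """
    roi, total_area = [], 0.0
    for vertex_id, _distance, area in sorted(vertices, key=lambda v: v[1]):
        if total_area >= target_area_mm2:
            break
        roi.append(vertex_id)
        total_area += area
    return roi, total_area

# Toy surface patch: four vertices of 10 mm2 each (illustrative values).
patch = [("a", 0.0, 10.0), ("b", 2.0, 10.0), ("c", 1.0, 10.0), ("d", 3.0, 10.0)]
roi_ids, roi_area = grow_roi(patch, target_area_mm2=25.0)
```

Because vertices have finite area, the ROI may slightly overshoot the target; a real implementation would need a rule for the final ring.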

Response amplitudes.

For each voxel, a percent signal-change (PSC) estimate was computed for each category using scrambled blocks as the baseline. Response amplitudes were computed in two steps. First, PSC values from the odd-numbered runs were used to determine the category preference of each voxel based on the category evoking the maximum response. Second, PSC values from the even-numbered runs were used to plot the average response amplitude for all four categories within each type of voxel. The reverse computations were then performed, using data from even-numbered runs to sort voxels and data from odd-numbered runs to calculate response amplitudes, and the two computations were averaged.
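The split-half logic can be sketched as below: voxels are sorted by preferred category using one half of the runs, and amplitudes are read out from the other half, so that sorting and measurement are independent. The data layout (one dict of PSC values per voxel) and the function name are assumptions for illustration, and the example values are invented.

```python
CATEGORIES = ["faces", "animals", "cars", "planes"]

def _mean(values):
    return sum(values) / len(values)

def split_half_amplitudes(psc_odd, psc_even):
    """psc_odd / psc_even: lists (one entry per voxel, same order) of dicts
    mapping category -> PSC averaged over odd or even runs.
    Returns {preferred category: {category: mean PSC}}.
    """
    def one_direction(sort_half, test_half):
        groups = {}
        for sort_voxel, test_voxel in zip(sort_half, test_half):
            # Preference comes from the sorting half only.
            preferred = max(CATEGORIES, key=lambda c: sort_voxel[c])
            groups.setdefault(preferred, []).append(test_voxel)
        # Amplitudes come from the independent half.
        return {pref: {c: _mean([v[c] for v in voxels]) for c in CATEGORIES}
                for pref, voxels in groups.items()}

    odd_sorted = one_direction(psc_odd, psc_even)
    even_sorted = one_direction(psc_even, psc_odd)
    result = {}
    for pref in set(odd_sorted) | set(even_sorted):
        if pref in odd_sorted and pref in even_sorted:
            result[pref] = {c: (odd_sorted[pref][c] + even_sorted[pref][c]) / 2
                            for c in CATEGORIES}
        else:  # voxel type found in only one direction
            result[pref] = odd_sorted.get(pref) or even_sorted.get(pref)
    return result

# Two illustrative voxels (values are not real data).
psc_odd = [{"faces": 2.0, "animals": 1.0, "cars": 0.5, "planes": 0.5},
           {"faces": 0.4, "animals": 0.6, "cars": 1.8, "planes": 0.2}]
psc_even = [{"faces": 1.6, "animals": 0.8, "cars": 0.7, "planes": 0.3},
            {"faces": 0.6, "animals": 0.4, "cars": 1.4, "planes": 0.4}]
amplitudes = split_half_amplitudes(psc_odd, psc_even)
```

Using independent halves for sorting and readout avoids the bias that would arise if the same noise that determined a voxel's preference also inflated its measured response.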

Selectivity of response.

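We quantified selectivity on a voxel-by-voxel basis using the signal detection theory measure $d_a$, with cross validation (29, 31). Selectivity was computed as

$$d_a = \frac{\mu_{\mathrm{preferred}} - \mu_{\mathrm{nonpreferred}}}{\sqrt{\left(\sigma^2_{\mathrm{preferred}} + \sigma^2_{\mathrm{nonpreferred}}\right)/2}},$$

where μ and σ represent the mean and SD, respectively, of responses, computed relative to a scrambled baseline (12): e.g., for a voxel preferring faces,

$$d_a = \frac{\mu_{\mathrm{faces}} - \mu_{\mathrm{nonfaces}}}{\sqrt{\left(\sigma^2_{\mathrm{faces}} + \sigma^2_{\mathrm{nonfaces}}\right)/2}}.$$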

The preferred category for each voxel was the one that evoked the maximum response during odd-numbered runs, and $d_a$ was calculated relative to this category using data from the even-numbered runs. These results were then averaged with results from the reverse computation.
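A minimal sketch of the cross-validated selectivity computation for a single voxel follows. It assumes the common unequal-variance form of the measure (the difference between the mean responses to preferred and nonpreferred categories, divided by the root mean square of the two SDs), pools all nonpreferred categories together, and uses the sample variance. These choices, the data layout, and the example values are assumptions for illustration.

```python
from math import sqrt
from statistics import mean, variance

def d_a(preferred, nonpreferred):
    """Difference in means divided by the root mean square of the two SDs.
    Each argument is a list of at least two responses (relative to the
    scrambled baseline); the pooled variance must be nonzero."""
    pooled = (variance(preferred) + variance(nonpreferred)) / 2
    return (mean(preferred) - mean(nonpreferred)) / sqrt(pooled)

def cross_validated_d_a(odd, even):
    """odd / even: dicts mapping category -> list of responses for one
    voxel in odd or even runs. The preferred category is chosen in one
    half and selectivity is measured in the other; the two directions
    are averaged."""
    def one_direction(sort_half, test_half):
        preferred = max(sort_half, key=lambda c: mean(sort_half[c]))
        nonpreferred = [r for c, rs in test_half.items()
                        if c != preferred for r in rs]
        return d_a(test_half[preferred], nonpreferred)
    return (one_direction(odd, even) + one_direction(even, odd)) / 2

# Illustrative responses for one face-preferring voxel (not real data).
odd = {"faces": [2.1, 1.8, 2.4], "cars": [0.9, 1.2, 0.7], "planes": [0.4, 0.8, 0.6]}
even = {"faces": [1.9, 2.2, 2.0], "cars": [1.0, 0.8, 1.1], "planes": [0.5, 0.7, 0.3]}
selectivity = cross_validated_d_a(odd, even)
```

Because the preferred category is chosen on independent data, a voxel with no true preference yields values near zero on average rather than a positive bias.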

Group-average maps.

We computed group-average brain maps to further compare the network of areas showing car expertise effects with the network of face-sensitive areas identified at the group level in the functional localizer. Data from the HR runs were smoothed with a Gaussian kernel (FWHM = 6 mm) and transformed into Talairach space to combine hemispheres across individuals.

Acknowledgments

We thank Kalanit Grill-Spector for sharing her stimuli and John Pyles, Allen Newton, and Michael Mack for assistance. This work was supported by the James S. McDonnell Foundation and National Science Foundation (Grant SBE-0542013), the Vanderbilt Vision Research Center (Grant P30-EY008126), and the National Eye Institute (Grant R01 EY013441-06A2).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1116333109/-/DCSupplemental.

References

- 1. Dehaene S, et al. How learning to read changes the cortical networks for vision and language. Science. 2010;330:1359–1364. doi: 10.1126/science.1194140.

- 2. Downing PE, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science. 2001;293:2470–2473. doi: 10.1126/science.1063414.

- 3. Epstein R, Kanwisher N. A cortical representation of the local visual environment. Nature. 1998;392:598–601. doi: 10.1038/33402.

- 4. Kanwisher N, McDermott J, Chun MM. The fusiform face area: A module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- 5. Wong YK, Gauthier I. A multimodal neural network recruited by expertise with musical notation. J Cogn Neurosci. 2010;22:695–713. doi: 10.1162/jocn.2009.21229.

- 6. Duchaine B, Germine L, Nakayama K. Family resemblance: Ten family members with prosopagnosia and within-class object agnosia. Cogn Neuropsychol. 2007;24:419–430. doi: 10.1080/02643290701380491.

- 7. Bilalić M, Langner R, Ulrich R, Grodd W. Many faces of expertise: Fusiform face area in chess experts and novices. J Neurosci. 2011;31:10206–10214. doi: 10.1523/JNEUROSCI.5727-10.2011.

- 8. Gauthier I, Tarr MJ, Anderson AW, Skudlarski P, Gore JC. Activation of the middle fusiform ‘face area’ increases with expertise in recognizing novel objects. Nat Neurosci. 1999;2:568–573. doi: 10.1038/9224.

- 9. Gauthier I, Skudlarski P, Gore JC, Anderson AW. Expertise for cars and birds recruits brain areas involved in face recognition. Nat Neurosci. 2000;3:191–197. doi: 10.1038/72140.

- 10. Harley EM, et al. Engagement of fusiform cortex and disengagement of lateral occipital cortex in the acquisition of radiological expertise. Cereb Cortex. 2009;19:2746–2754. doi: 10.1093/cercor/bhp051.

- 11. Xu Y. Revisiting the role of the fusiform face area in visual expertise. Cereb Cortex. 2005;15:1234–1242. doi: 10.1093/cercor/bhi006.

- 12. Grill-Spector K, Sayres R, Ress D. High-resolution imaging reveals highly selective nonface clusters in the fusiform face area. Nat Neurosci. 2006;9:1177–1185. doi: 10.1038/nn1745.

- 13. Tsao DY, Freiwald WA, Tootell RB, Livingstone MS. A cortical region consisting entirely of face-selective cells. Science. 2006;311:670–674. doi: 10.1126/science.1119983.

- 14. Harel A, Gilaie-Dotan S, Malach R, Bentin S. Top-down engagement modulates the neural expressions of visual expertise. Cereb Cortex. 2010;20:2304–2318. doi: 10.1093/cercor/bhp316.

- 15. Gauthier I, Tarr MJ. Unraveling mechanisms for expert object recognition: Bridging brain activity and behavior. J Exp Psychol Hum Percept Perform. 2002;28:431–446. doi: 10.1037//0096-1523.28.2.431.

- 16. Baker CI, Hutchison TL, Kanwisher N. Does the fusiform face area contain subregions highly selective for nonfaces? Nat Neurosci. 2007;10:3–4. doi: 10.1038/nn0107-3.

- 17. Nestor A, Plaut DC, Behrmann M. Unraveling the distributed neural code of facial identity through spatiotemporal pattern analysis. Proc Natl Acad Sci USA. 2011;108:9998–10003. doi: 10.1073/pnas.1102433108.

- 18. Verosky SC, Turk-Browne NB. Representations of facial identity in the left hemisphere require right hemisphere processing. J Cogn Neurosci. 2012;24:1006–1017. doi: 10.1162/jocn_a_00196.

- 19. Weiner KS, Grill-Spector K. Sparsely-distributed organization of face and limb activations in human ventral temporal cortex. Neuroimage. 2010;52:1559–1573. doi: 10.1016/j.neuroimage.2010.04.262.

- 20. Tsao DY, Livingstone MS. Mechanisms of face perception. Annu Rev Neurosci. 2008;31:411–437. doi: 10.1146/annurev.neuro.30.051606.094238.

- 21. Op de Beeck HP, Baker CI. The neural basis of visual object learning. Trends Cogn Sci. 2010;14:22–30. doi: 10.1016/j.tics.2009.11.002.

- 22. McKone E, Kanwisher N, Duchaine BC. Can generic expertise explain special processing for faces? Trends Cogn Sci. 2007;11:8–15. doi: 10.1016/j.tics.2006.11.002.

- 23. Kanwisher N. Domain specificity in face perception. Nat Neurosci. 2000;3:759–763. doi: 10.1038/77664.

- 24. Farah MJ, Wilson KD, Drain M, Tanaka JN. What is “special” about face perception? Psychol Rev. 1998;105:482–498. doi: 10.1037/0033-295x.105.3.482.

- 25. Richler JJ, Cheung OS, Gauthier I. Holistic processing predicts face recognition. Psychol Sci. 2011;22:464–471. doi: 10.1177/0956797611401753.

- 26. Young AW, Hellawell D, Hay D. Configural information in face perception. Perception. 1987;16:747–759. doi: 10.1068/p160747.

- 27. Wong ACN, Palmeri TJ, Rogers BP, Gore JC, Gauthier I. Beyond shape: How you learn about objects affects how they are represented in visual cortex. PLoS ONE. 2009;4:e8405. doi: 10.1371/journal.pone.0008405.

- 28. Bukach CM, Phillips WS, Gauthier I. Limits of generalization between categories and implications for theories of category specificity. Atten Percept Psychophys. 2010;72:1865–1874. doi: 10.3758/APP.72.7.1865.

- 29. Pinsk MA, et al. Neural representations of faces and body parts in macaque and human cortex: A comparative FMRI study. J Neurophysiol. 2009;101:2581–2600. doi: 10.1152/jn.91198.2008.

- 30. Julian JB, Fedorenko E, Webster J, Kanwisher N. An algorithmic method for functionally defining regions of interest in the ventral visual pathway. Neuroimage. 2012;60:2357–2364. doi: 10.1016/j.neuroimage.2012.02.055.

- 31. Mickes L, Wixted JT, Wais PE. A direct test of the unequal-variance signal detection model of recognition memory. Psychon Bull Rev. 2007;14:858–865. doi: 10.3758/bf03194112.

- 32. Furl N, Garrido L, Dolan RJ, Driver J, Duchaine B. Fusiform gyrus face selectivity relates to individual differences in facial recognition ability. J Cogn Neurosci. 2011;23:1723–1740. doi: 10.1162/jocn.2010.21545.

- 33. Berman MG, et al. Evaluating functional localizers: The case of the FFA. Neuroimage. 2010;50:56–71. doi: 10.1016/j.neuroimage.2009.12.024.

- 34. Bell AH, et al. Relationship between functional magnetic resonance imaging-identified regions and neuronal category selectivity. J Neurosci. 2011;31:12229–12240. doi: 10.1523/JNEUROSCI.5865-10.2011.

- 35. McKeeff TJ, McGugin RW, Tong F, Gauthier I. Expertise increases the functional overlap between face and object perception. Cognition. 2010;117:355–360. doi: 10.1016/j.cognition.2010.09.002.

- 36. Puri AM, Wojciulik E, Ranganath C. Category expectation modulates baseline and stimulus-evoked activity in human inferotemporal cortex. Brain Res. 2009;1301:89–99. doi: 10.1016/j.brainres.2009.08.085.

- 37. Behrmann M, Marotta J, Gauthier I, Tarr MJ, McKeeff TJ. Behavioral change and its neural correlates in visual agnosia after expertise training. J Cogn Neurosci. 2005;17:554–568. doi: 10.1162/0898929053467613.

- 38. Wandell BA, Winawer J. Imaging retinotopic maps in the human brain. Vision Res. 2011;51:718–737. doi: 10.1016/j.visres.2010.08.004.
