Abstract

This article summarizes the proceedings of a portion of the Radiation Dose Summit, which was organized by the National Institute of Biomedical Imaging and Bioengineering and held in Bethesda, Maryland, in February 2011. The current understandings of ways to optimize the benefit-risk ratio of computed tomography (CT) examinations are summarized and recommendations are made for priority areas of research to close existing gaps in our knowledge. The prospects of achieving a submillisievert effective dose CT examination routinely are assessed.

© RSNA, 2012

Introduction

Growing concern has been expressed by the public, the media, physicians, and scientists about the increasing exposure of the American population to ionizing radiation derived from computed tomographic (CT) scanners. In addition, it is commonly observed that opportunities exist to (a) pool contemporary scientific and technical knowledge about the potentially adverse effects of low levels of ionizing radiation and (b) examine ongoing technical improvements in CT hardware and software.

In response to these concerns, the National Institute of Biomedical Imaging and Bioengineering convened a “Radiation Dose Summit,” which was held on February 24–25, 2011, in Bethesda, Maryland. More than 100 invited participants, including medical physicists, radiologists, cardiologists, engineers, industry representatives, and patient advocacy groups, met for 2 days to discuss these issues.

The goals of the summit were to (a) issue consensus statements on the state of scientific knowledge about the adverse effects of low doses of ionizing radiation from CT scanners, our ability to generate patient-specific estimates of radiation dose and risk, the potential for technical improvements to prevent accidental overexposures to ionizing radiation, and the potential to implement information technologies to improve the appropriateness of CT use; (b) recommend priority areas for research investment to close gaps in our scientific knowledge; and (c) lay out a research-based pathway toward our goal of generating diagnostic quality CT examinations at effective doses of 1 millisievert or less.

This article reports the consensus scientific observations and recommendations for priority research investments from one major module of the conference curriculum, namely “Radiation Exposure: How to Close Our Knowledge Gaps, Monitor and Safeguard Exposure.” The report is divided into four topical sections. In each, meeting proceedings are summarized, followed by research recommendations.

Section 1: State of Knowledge about Adverse Effects of Low Doses of Ionizing Radiation

The presence and magnitude of adverse effects in humans resulting from low doses of ionizing radiation have been debated among scientists for decades. Recently this debate has gained public visibility owing to the overexposure of patients undergoing CT examinations (1) and also because the number of CT examinations in the United States has increased by about 10% each year over the past decade. In 1980, radiation exposure from medical procedures accounted for about 15% of the total radiation received on average by U.S. residents. Today, about 50% of the average radiation dose to the population comes from medical exposures, with about one-fourth due to CT examinations alone.

The effective dose of ionizing radiation from medical imaging is measured in units called millisieverts, which are calculated to be the absorbed dose of radiation (expressed in milligrays, measuring energy absorbed as joules per kilogram of tissue) multiplied by a radiation weighting factor specific for the type of radiation under consideration and a tissue weighting factor specific for the region or organ receiving the absorbed dose. The radiation weighting factor for x-rays and gamma rays is 1.
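The calculation described above can be written compactly. The symbols below are notational assumptions introduced for illustration, not notation used elsewhere in this report: $D_{T,R}$ is the absorbed dose to tissue or organ $T$ from radiation type $R$, $w_R$ is the radiation weighting factor, and $w_T$ is the tissue weighting factor.

```latex
E \;=\; \sum_{T} w_T \sum_{R} w_R \, D_{T,R}
\qquad\text{and, for x-rays and gamma rays } (w_R = 1):\qquad
E \;=\; \sum_{T} w_T \, D_T
```

With $D_T$ expressed in milligrays, $E$ is obtained in millisieverts. Because the tissue weighting factors are defined to sum to 1, a uniform whole-body absorbed dose of x-rays yields an effective dose numerically equal to that absorbed dose.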

While no direct evidence exists of adverse biologic effects in humans (or vertebrate animals in general) from radiation doses less than 100 mSv to individual organs or the whole body, there is evidence of adverse effects at higher doses. Six decades of study of survivors of the atomic bombs dropped on Hiroshima and Nagasaki in 1945 yields solid evidence of an adverse consequence of exposure at doses greater than 100 mSv. The consequence is an incremental increase in the incidence of various types of cancer compared with incidence of such cancers in the Japanese population of unexposed individuals. This incremental increase is impossible to distinguish at doses lower than approximately 100 mSv. Survivors in Hiroshima and Nagasaki received instantaneous whole-body exposures to a mixture of radiations more complex than the relatively low-energy x-ray beams used in CT. At CT examination, one or only a few organs are exposed, whereas the Japanese survivors received whole-body exposures. All of these factors complicate the extrapolation of the Japanese data to individuals exposed to radiation from medical imaging procedures.

Trying to connect the effects of whole-body instantaneous exposures of the Japanese survivors to patients undergoing CT and other medical examinations is a slippery slope. To compare the radiation doses to a few organs at CT to the whole-body exposures of the Japanese survivors, one must restate the organ doses in terms of the effective dose. Effective dose is defined as the radiation dose in millisieverts that would have to be delivered to the whole body to yield the same biologic consequences (specific for cancer induction risk only) as the dose actually received by the exposed organs. But whether effective dose should be used at all to estimate risk for individual patients undergoing medical imaging procedures is highly controversial. The concept of effective dose was developed to estimate the risk to occupationally exposed individuals (radiation workers) exposed nonuniformly to various types of radiation for the purpose of establishing standards for protection against radiation exposure. It was not developed to reliably estimate risk from medical exposures.

Once the effective dose has been computed, it is interpreted within the framework of the linear nonthreshold (LNT) model as an index of radiation-induced cancer risk. This model posits a straight-line relationship between dose and risk and extends the declining linearly projected risk all the way to zero dose. The LNT model was developed for the purpose of establishing standards for protection of occupationally exposed individuals and is thought to be a conservative model, meaning that in all likelihood it overestimates the incremental risk of cancer at low levels of radiation exposure.
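As a minimal sketch of the straight-line relationship just described, the LNT model can be stated as follows. The symbols are assumptions introduced for illustration: $R(E)$ is the incremental cancer risk attributed to an effective dose $E$, and $\alpha$ is a slope (risk per unit dose) fitted at higher doses.

```latex
R(E) \;=\; \alpha \, E \quad \text{for all } E \ge 0,
\qquad\text{so that}\qquad R(0) = 0 \ \text{ and } \ R(E) > 0 \ \text{ for every } E > 0 .
```

The "nonthreshold" property is the second statement: the model assigns a nonzero risk to any nonzero dose, including doses well below the range in which an effect has been observed directly.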

The LNT model was not intended for use in the manner used by some to predict increased cancer deaths in a population of individuals exposed to medical radiation. These predictions typically involve multiplying a large number of individuals in a population exposed to low-dose radiation by a very small risk value that has been estimated from effective dose computations and the LNT model. Such computations project thousands of hypothetical “cancer deaths” in populations exposed to medical radiation. No hard data support using estimated effective doses and the LNT model in this manner, and predictions of radiation-induced cancer deaths in a population exposed to diagnostic x-ray imaging studies are highly suspect. Such predictions are provocative, garner considerable media attention, create substantial public anxiety, and consequently are potentially dangerous. Reacting to a media-induced fear of radiation, some patients have hesitated or refused to undergo medical imaging procedures. Yet, for almost all patients, the adverse health consequences of refusing a needed medical procedure far outweigh any potential radiation-associated risks or other risks that may be associated with the procedure.

Understanding of Subgroup Radiation Risk is Recommended

With the high natural incidence of cancer in populations worldwide, and the very small incremental increase in cancer that is conceivably attributable to low doses of radiation, it is virtually impossible to detect a few radiation-induced cancers in a population at doses less than 100 mSv, even if such an incremental increase did exist. With our current level of knowledge, efforts to identify such radiation-induced cancers in a general population are problematic, since excess cancers that occur in populations exposed to high levels of radiation (and consequently are likely to be radiation-induced) cannot be differentiated from naturally occurring cancers by even the most sophisticated pathologic techniques. Ultimately, the difficulty of identifying small increases of cancer in irradiated populations may be overcome by two potential approaches: the development of new pathologic criteria for radiation damage based on unique genetic or epigenetic changes induced by low doses or low dose rates of radiation; and much larger epidemiologic studies than were possible with the Japanese survivors, which someday may be possible with national/international patient dose registries.

Small subpopulations of humans appear to show a genetic predisposition to radiation-induced cancer, and this possible effect should be explored and quantified. Further, these explorations might lead to identification of other susceptible population subgroups for which radiation exposure is particularly risky. Individuals in these subgroups would be good candidates for alternate procedures that do not use ionizing radiation. For example, one subgroup that could be studied is people with ataxia telangiectasia (A-T), a rare, genetically mediated neurodegenerative disease that affects many parts of the body and causes severe disability. Some patients with A-T have an increased sensitivity to ionizing radiation that causes irreparable cell mutations (2). Therefore, patients with A-T should be exposed to medical x-rays only when absolutely necessary. Analysis of population subgroups that appear to be hypersensitive to radiation could illuminate a genetic predisposition for the development of cancer following radiation exposure (3). Ultimately this analysis might help to improve the current understanding of radiation sensitivity in general.

Other areas of research that could potentially contribute to evidence-based risk estimates at low radiation doses are studies on cellular and molecular processes that underlie the induction of radiation-induced cancer. For example, detailed characterization of the deficiencies in the cellular repair of radiation-induced DNA damage and of various defects in the integrity of tumor-suppressor genes could yield fundamental knowledge of the cellular and genetic mechanisms underlying radiation-induced cancer. Several areas of research that may contribute to a better understanding of the carcinogenic mechanisms of ionizing radiation are described in the Biologic Effects of Ionizing Radiation (BEIR VII) report of the U.S. National Academy of Sciences (4).

Research Opportunities

The topics and questions discussed above generate research challenges that might be addressed by federally sponsored research support such as:

Further identification of population subgroups that may be particularly sensitive (or insensitive) to ionizing radiation, and delineation of the biologic mechanisms underlying these attributes.

Further research into repair mechanisms in irradiated cells, tissues, and organs and how these mechanisms are influenced by the rates and magnitudes of exposure, to clarify differences between the bioeffects of acute, fractionated, and chronic exposures.

Determination of the population health impact of the anxiety and reluctance of patients recommended for medical imaging procedures caused by predictions of large numbers of cancer deaths induced by such procedures.

Determination of methods to identify specific cancers that are caused by radiation exposure.

Further epidemiologic research into the validity of the LNT hypothesis, beyond what is currently possible with data from bomb survivors and accident victims, by using national or international medical imaging radiation exposure registries.

Conclusion

In the face of uncertainty about the biologic effects of radiation exposure at low doses, the prudent course of action with regard to medical imaging is to keep doses to patients as low as reasonably achievable (the ALARA principle) while ensuring that information is sufficient for accurate diagnoses and the guidance of interventional procedures. This course of action is reflected in international campaigns of radiologic organizations to reduce radiation dose to pediatric patients (Image Gently [5]) and adult patients (Image Wisely [6]). The summit meeting title, “Management of Radiation Dose in Diagnostic Medical Procedures: Toward the Sub-mSv CT Exam,” reflects this course of action to establish goals suitable for today’s needs for CT procedures and patient health.

Section 2: Translating “Machine-derived” Exposure Parameters into Patient-Specific Estimates of Dose to Radiation-Sensitive Organs and Risk for Both Tracking and Patient Management

Within several years of the introduction of CT into clinical practice, a standardized metric of scanner radiation output, the CT dose index (CTDI), was introduced (7,8) and widely adopted, including its incorporation into the Code of Federal Regulations. While that index served a great purpose for many years, technologic developments in CT during the 1990s (eg, the introduction of helical scanning methods, the extension to multidetector scanners) required that this metric be modified. Other versions of this metric (eg, CTDIw, CTDIvol) were developed and standardized.

Further technologic developments in CT during the late 2000s and the introduction of wide-beam multidetector CT systems (eg, the introduction of cone beam and 320–detector row CT systems) again required that CT dose metrics be revised. Work has been underway through the American Association of Physicists in Medicine (AAPM) Task Group 111 (9) to develop new dose metrics that accurately reflect CT scanner output given that CTDI-based metrics were shown to significantly underestimate radiation output because the beam was now wider than either the measurement probe or even the phantom being used to make the measurement. Therefore, as technology has developed and dramatically increased the clinical and diagnostic capabilities of modern CT scanners, so has the need to develop metrics that accurately characterize the radiation output of the scanners themselves.

Recording and Reporting Patient Dose

In light of recent events publicizing radiation dose to patients undergoing CT examinations, many well-meaning efforts to record patient dose have been initiated. Many national and international groups (U.S. Food and Drug Administration, National Institute of Biomedical Imaging and Bioengineering, American College of Radiology [ACR], International Atomic Energy Agency, and National Institutes of Health intramural programs [10–12]) have begun recording “dose,” have required that their equipment report dose, or have encouraged other groups to report and/or record “dose.” In California, a recently passed state law (13) requires (as of July 1, 2012) the reporting of one of the following for each medical CT examination: “The computed tomography index volume (CTDIvol) and dose-length product (DLP), as defined by the International Electrotechnical Commission (IEC) and recognized by the federal Food and Drug Administration (FDA) [or] the dose unit as recommended by the American Association of Physicists in Medicine.”

These reporting/recording efforts are excellent and well meaning; however, the only dose metrics available for reporting are the CTDI metric and measures derived from it, including the DLP. While these CTDI measures are highly standardized and recognized around the world, they are not measures of patient dose (14)—a fact often misunderstood that has led to the misinterpretation of dosimetry values being reported at the scanner.

CTDI measures should not be considered to be equivalent to patient dose for several reasons, of which only four are listed here for illustration purposes. The first reason is that the CTDIvol reported at the scanner is the dose measured in a cylindrical, homogeneous, acrylic object, which is not representative of actual patient anatomy. These objects, known as phantoms, are cylinders of either 16 or 32 cm diameter and are referred to as the standard head and standard body CTDI phantoms, respectively. While these phantoms serve as a standard reference object, they were never intended to be used to represent any patient directly.

The second reason why the CTDI metric does not apply directly to patient dose is that this metric does not take into account the actual size of the patient, which has led to significant confusion when dose is being reported and recorded. Several studies have shown, for the same scanner output (ie, the same CTDIvol), that smaller patients actually absorb more radiation dose than larger patients (15,16). Therefore, if two patients of different size are scanned with the same technical factors, then the scanner would report an identical CTDIvol value, but the actual absorbed dose would be higher for the smaller patient than for the larger patient. This becomes even more confusing when sites appropriately adjust technical factors for patient size (“right sizing” the radiation dose). This typically results in lower CTDIvol values for pediatric patients and small adults and much higher values for larger patients, which in turn might lead many to conclude that much higher doses are being given to larger patients. While it may be true that a site has appropriately lowered its CTDIvol values for pediatric patients, the actual doses to these patients can be two or three times higher than the CTDIvol value reported at the scanner. Conversely, although the CTDIvol values for obese adult patients may be much higher than for standard-sized patients, their actual absorbed dose can be significantly lower than the CTDIvol value reported at the scanner. Therefore, adjustments to CTDIvol values for patient size, such as those now described in the AAPM Task Group 204 report (16), are necessary. Interestingly, as discussed further in Section 3, data extracted from the CT scanner’s “preliminary radiograph” can be used to estimate patient size.

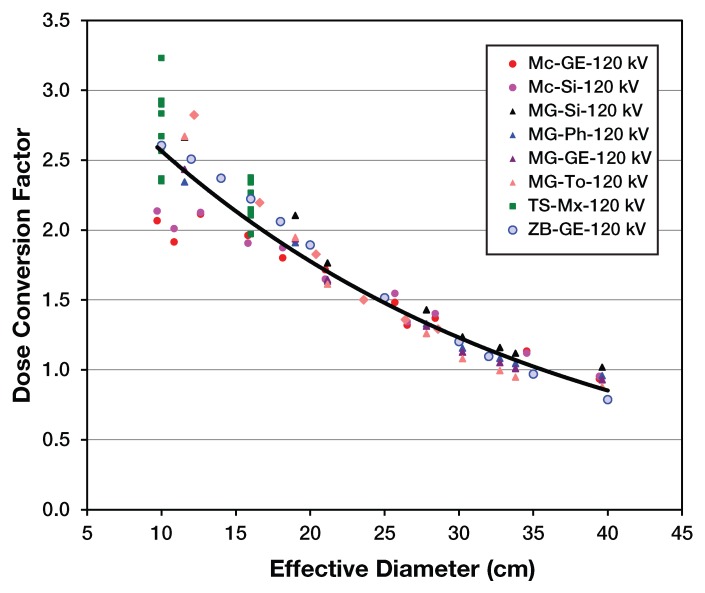

Figure 1 shows the strong dependence of patient dose on patient size. To obtain a size-specific dose estimate for a body scan of a patient, the normalized dose coefficient would be determined from the equivalent diameter, and that coefficient would be multiplied by the CTDIvol (from the 32-cm phantom). Note that if CTDIvol were actually equal to patient dose, then all coefficients would be 1.0. It should also be noted that for very small (0–1-year-old) patients, the CTDI underestimates dose by a factor between 2 and 2.5; for very large patients, the CTDI may actually overestimate patient dose.

Figure 1:

The x-axis represents a patient size metric (effective diameter) and the y-axis represents the normalized dose coefficient for the 32 cm polymethyl methacrylate (PMMA) phantom. Note that the AAPM Task Group 204 report also contains conversions for the 16 cm PMMA phantom. (Reprinted, with permission, from reference 16.)
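The size correction described for Figure 1 can be sketched in a few lines of code. This is an illustrative sketch only, under stated assumptions: the function names are hypothetical, the curve is modeled as an exponential in effective diameter, and the two fit coefficients are the values commonly quoted from AAPM Report 204 for the 32 cm phantom, which should be verified against the report itself before any use. The effective diameter is taken as the diameter of a circle with the same area as an ellipse defined by the anteroposterior and lateral dimensions.

```python
import math

# ASSUMPTION: exponential-fit coefficients for the 32 cm PMMA body phantom,
# as commonly quoted from AAPM Report 204. Verify against the report before use.
A_32CM = 3.704369
B_32CM = 0.03671937  # per cm of effective diameter


def effective_diameter_cm(ap_cm: float, lateral_cm: float) -> float:
    """Diameter of the circle whose area equals that of an ellipse
    with the given anteroposterior and lateral dimensions."""
    return math.sqrt(ap_cm * lateral_cm)


def normalized_dose_coefficient(eff_diam_cm: float,
                                a: float = A_32CM,
                                b: float = B_32CM) -> float:
    """Size-dependent coefficient that multiplies CTDIvol (32 cm phantom)."""
    return a * math.exp(-b * eff_diam_cm)


def size_specific_dose_estimate_mgy(ctdi_vol_mgy: float,
                                    eff_diam_cm: float) -> float:
    """Coefficient times the scanner-reported CTDIvol."""
    return normalized_dose_coefficient(eff_diam_cm) * ctdi_vol_mgy


if __name__ == "__main__":
    # Hypothetical patients scanned with the same reported CTDIvol.
    for ap, lat in [(10.0, 12.0), (22.0, 32.0), (35.0, 48.0)]:
        d = effective_diameter_cm(ap, lat)
        est = size_specific_dose_estimate_mgy(10.0, d)
        print(f"effective diameter {d:.1f} cm -> estimate {est:.1f} mGy")
```

Under these assumptions the coefficient is greater than 1 for small patients and falls below 1 for very large patients, which is the behavior the figure is described as showing.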

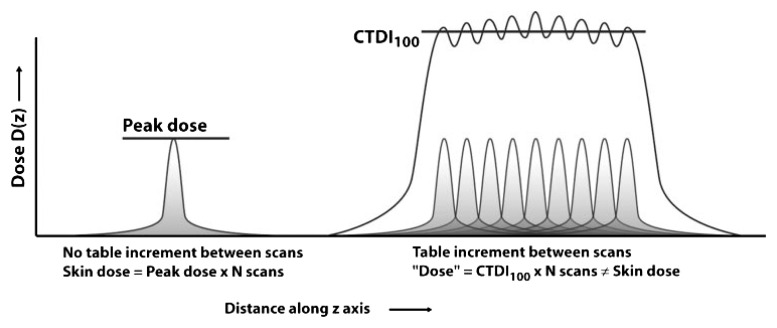

A third reason is that the CTDIvol can be a significant overestimate of dose for scans for which there is no table motion (eg, perfusion scans). CTDI was originally derived to represent the average dose to a small region located along the approximate center of the longitudinal extent of the scan volume that results from a contiguous set of scans along that longitudinal extent. When a volume of the patient is scanned (eg, the table is moved to cover a region of the patient), then this assumption is valid. When the table is not moved (such as when a perfusion scan is performed), then this assumption is not valid; the CTDI actually represents a significant overestimate of the dose, especially when considering peak skin dose. This is illustrated in the accompanying Figure 2 from Bauhs et al (17). Therefore, this overestimation of peak skin dose by CTDI is a necessary consideration when trying to estimate the radiation dose from these types of scans and specifically when trying to assess the deterministic effects of perfusion scans.

Figure 2:

Left: In perfusion or interventional CT (where there is no table movement), the peak skin dose is the relevant dose parameter for deterministic skin effects. The peak skin dose equals the peak dose from one scan times the number of scans. Right: If the peripheral CTDI100 is used as a surrogate for peak dose, the skin dose will be overestimated by up to a factor of two. (Reprinted, with permission, from reference 17.)

The fourth and final reason described here is that the CTDI metric may not provide an accurate reflection of patient dose when radiation dose–saving technologies are used. Most clinical scanning today employs radiation dose reduction methods developed by the manufacturers (eg, tube current modulation, dynamic collimation, angular modulation to reduce dose to anterior organs). However, the actions of these methods may not be accurately reflected in the CTDI metric; the value currently reported at the scanner is generally based on the average value of the tube current taken over the entire scan. Such an average tube current value is usually not a good indicator of the tube current value at any given anatomic location (eg, at the location of the glandular breast tissue or even at the location of the uterus when trying to estimate dose to a fetus). So, while CTDI is a highly standardized and recognized dosimetry metric, for the reasons described in this and the previous paragraphs, it does not reflect dose to a patient.
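The point about average tube current can be illustrated with a short sketch. It assumes that scanner output scales linearly with tube current when tube voltage, rotation time, and pitch are held fixed; the tube current profile, the reported value, and the function name are all hypothetical. The quantity computed is a local scanner output index, not a patient or organ dose.

```python
from statistics import mean


def local_output_ratio(tube_current_ma, position):
    """Ratio of the tube current at one table position to the
    scan-average tube current."""
    return tube_current_ma[position] / mean(tube_current_ma)


# Hypothetical modulated tube current (mA) at successive table positions.
profile_ma = [100, 200, 300, 200, 100]

# Hypothetical scanner-reported value, based on the average tube current.
reported_ctdi_vol_mgy = 9.0

if __name__ == "__main__":
    for i, ma in enumerate(profile_ma):
        local = reported_ctdi_vol_mgy * local_output_ratio(profile_ma, i)
        print(f"position {i}: {ma} mA -> local output index {local:.1f} mGy")
```

In this made-up profile the output at the middle position is well above the scan average while the output at the ends is well below it, so a single averaged value misstates the output at any particular anatomic location.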

According to the national and international reports on radiation dose and risk (5,18–20), the entity we should be trying to estimate, record, and use as the basis for risk estimates is the radiation dose to individual radiosensitive organs. While there are some methods for estimating organ doses that have been utilized for some time (eg, ImPACT, CTExpo, ImpactDose), these still suffer from some of the same issues described above: They do not take into account patient size, variations across scanners, or the use of modern dose reduction methods. Much work must be done here to develop meaningful, robust metrics of patient dose (eg, organ dose) that account for these many factors and attendant complexities.

Dose Registries and Tracking Patient Dose

The ACR has completed a pilot phase of its CT Dose Index Registry and subsequently opened its full registry in the spring of 2011. Rather than tracking individual patient doses, the purpose of this registry is collecting data across a large number of sites for dose index values (specifically CTDI and DLP) to allow participating sites to compare their own values to those observed at other sites and to determine if values typically used are higher or lower than those used by others. The CT Dose Index Registry is utilizing the recently developed Digital Imaging and Communications in Medicine (DICOM) Radiation Dose Structured Report that records CTDI and DLP for each series (or “irradiation event”) in a CT examination. The DICOM hierarchy is used here in which a patient undergoes one or more examinations, an examination consists of one or more studies, a study consists of one or more acquisition series, and a series results in one or more images. As an example, when a patient undergoes a CT perfusion examination, they typically undergo a number of different acquisition series: (a) a planning radiograph, (b) an unenhanced head series, (c) a timing injection series, (d) the perfusion series, and (e) a CT angiography series.
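The hierarchy described above can be sketched as a small data structure. This is an illustration only: the class and field names are hypothetical and are not DICOM attribute names, the structure follows the hierarchy as worded in this report, and the dose index values are placeholders rather than clinical values.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AcquisitionSeries:
    """One irradiation event, carrying the two recorded dose indexes."""
    description: str
    ctdi_vol_mgy: float
    dlp_mgy_cm: float


@dataclass
class Study:
    series: List[AcquisitionSeries] = field(default_factory=list)


@dataclass
class Examination:
    name: str
    studies: List[Study] = field(default_factory=list)

    def irradiation_events(self) -> List[AcquisitionSeries]:
        """Flatten the hierarchy into one list of acquisition series."""
        return [s for study in self.studies for s in study.series]


# Placeholder values only; they do not represent any real protocol.
perfusion_exam = Examination(
    name="CT perfusion",
    studies=[Study(series=[
        AcquisitionSeries("planning radiograph", 0.1, 2.0),
        AcquisitionSeries("unenhanced head", 50.0, 800.0),
        AcquisitionSeries("timing injection", 5.0, 5.0),
        AcquisitionSeries("perfusion", 200.0, 800.0),
        AcquisitionSeries("CT angiography", 30.0, 900.0),
    ])],
)

if __name__ == "__main__":
    for event in perfusion_exam.irradiation_events():
        print(f"{event.description}: CTDIvol {event.ctdi_vol_mgy} mGy, "
              f"DLP {event.dlp_mgy_cm} mGy-cm")
```

Keeping one record per acquisition series mirrors the per-series reporting described above and makes it possible to compare like with like across sites, provided the series descriptions are standardized.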

While the ultimate objective of the CT Dose Index Registry was to allow sites to compare dose index values, the ACR became aware of several issues that make direct comparisons difficult across sites. Some of these issues included: (a) the multiplicity of different names for the same CT examinations in use at different sites and even within a site and the difficulties that these practices create for anyone trying to compare examinations (eg, each practice has different versions of “routine head”); (b) a lack of a universally accepted descriptor of patient size that is recorded reliably in the DICOM data structures (despite the existence of height and weight elements, they are not regularly filled in nor is it clear that they are the parameters that should be desired; for example, Task Group 204 used “equivalent diameter,” lateral diameter, anteroposterior thickness and perimeter as possible descriptors); (c) an inability to determine the cause of observed variations in CTDIvol within a site or between sites, given there is currently no size descriptor. Large CTDIvol values due to appropriate adjustment for a very large patient (or the proper use of tube current modulation) cannot be distinguished from inappropriately high CTDIvol values for pediatric patients. Clearly, these are some of the major issues that prevent a meaningful tracking of radiation dose to individual patients.

Given these difficulties and questions raised by these observations, the lessons learned already include the need for standardized study descriptions (such as those being proposed by the Radiological Society of North America’s [RSNA’s] RadLex® and others) and size descriptions (see next section) and their implementation in the DICOM standard.

When contemplating tracking dose to individual patients, several issues need to be considered. One is that the parameters being recorded should be a reasonably accurate reflection of the radiation dose being absorbed by the patient, and to achieve this requires a resolution of the above issues and implementation of standardizations for study and patient size descriptors. Even if a reasonably accurate representation of patient dose is available, we must answer the key question of how one should use that information. If a patient is referred for a CT examination, should his or her prior history of radiation dose exposure be factored in, and, if so, how? Is there a limit on how many CT scans one should undergo over a certain period? Do radiation dose–related cancer risks accumulate in a linear fashion (to justify simply summing doses over time, for example)? The knowledge gaps here present a research opportunity.

Research Opportunities

The topics and questions discussed above generate research challenges that might be addressed by federally sponsored research support such as:

The development of methods to accurately and robustly convert scanner output metrics (CTDI, DLP, Task Group 111 dose metrics) into reliable estimates of patient dose (eg, organ doses) that account for the anatomic region scanned, patient size, and the dose reduction methods being used. A related initiative would be the development of methods to measure patient size from the CT scanner’s preliminary radiograph and to test the accuracy of this approach for calculating organ doses and effective doses.

The development of methods to integrate improved patient dose estimates into the patient record by means of informatics-based approaches to allow scalable solutions to tracking patient dose. This would include the use of standardized study descriptions (including series elements) to allow meaningful comparisons of patient dose for specific examinations within and across sites, integration with an expanded DICOM Radiation Dose Structured Report (which does not currently support patient dose estimates) and finally methods to track individual patient dose across time (and even across institutions, as many patients undergo imaging at many different medical centers).

Additional research into how this information could be utilized for individual patients, including investigations into how to establish limits (or whether limits are actually appropriate) for exposure to individual patients.

Conclusion

Reasonably accurate estimates of radiation dose to patients and their radiosensitive organs are fundamental to the issues identified in this conference. These estimates need to be robust enough to account for variables such as patient size, scanner factors, and the use of radiation dose reduction methods to allow us to identify where further radiation dose reduction efforts need to be focused. They also need to be integrated into patient medical records in a scalable fashion to allow tracking of patient dose. Finally, investigations need to be made into how this information should be used in the context of patient management and patient safety (eg, decision support).

Section 3: How Can We Ensure Selection of Optimum CT Protocols, Implement “Fail-Safe” Mechanisms to Prevent Accidental Overexposure, and Provide Appropriate Training and Certification to Those Who Are Involved in Protocols and Perform CT Examinations?

To date, CT manufacturers have been reluctant to take direct responsibility for CT protocols, partly because of medicolegal concerns and partly because of a mindset, fairly common among vendor representatives, that they do not want to “practice medicine.” However, recent CT accidents combined with revelations about the large disparity in CT protocol parameters pertaining to radiation dose have led to the realization that consensus-based “best practices” CT protocols should be made available to the CT user community. Furthermore, there is a clear need to allow CT users to transfer protocols from one vendor’s model to a different vendor’s scanner to the extent that this is possible. A working group in the DICOM committee (of the ACR–National Electrical Manufacturers Association working group) has focused on such protocols, but implementation remains years away, if it happens at all. The effort should be reprioritized.

Using the localizer view or a hypothetical low-dose CT prescan, CT scanners have the ability to automatically determine the dimensions of the patient. Although the traditional localizer view (“CT radiograph”) does provide attenuation information about the patient, two views (posteroanterior and lateral) are necessary for a more complete assessment of patient dimensions, and these projection views are subject to error when the patient is not well centered on the gantry. A proposed localizer CT scan (one revolution of the gantry at full detector array width) can be performed at negligible dose yet could be used to accurately assess the two-dimensional profile of the patient automatically. With such a mode enabled on a CT scanner, the technique factors as a function of patient size can be determined with technique charts that would be generated based on “best practices” principles, using a panel of expert CT radiologists and physicists. Such charts could be loaded into the CT scanners at purchase but then modified by the user. It is noted that the proposed localizer CT scan should be reconstructed at the full field of view of the scanner hardware; reducing cut-off of patient anatomy outside the field of view is necessary for accurately computing patient dimensions.

With the CT scanner enabled to estimate (and report) patient size (primarily effective diameter), the dose report that currently relies on CTDIvol and DLP can be made to be far more accurate in terms of CT dosimetry as seen in the AAPM Report 204 (16). Such a feature would have widespread use. First, if an accurate dose estimate were automatically made by the scanner for each patient and each scan series, the types of problems that led to large numbers of patients being overexposed to the point of epilation and erythema could be limited to far fewer patients. Surveillance software could report histograms of doses at the end of each shift or day, and threshold settings would then be useful in identifying overexposure situations. Second, the automatic estimation of patient dose in CT scanners would be useful as state laws are implemented (eg, California’s SB-1237) that require dose reporting in CT. Finally and most important, an institution could use the statistics built up from patient size–dependent dose reporting tools to evaluate its use of radiation dose against national and international norms for specific CT scanning protocols. Dose registries are already being formed by a number of organizations such as the ACR and others, but when size is included in the dose estimates, reference dose values become far more useful.
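The end-of-shift surveillance idea mentioned above can be sketched as follows. This is a minimal illustration under stated assumptions: the function names, series identifiers, dose estimates, bin width, and threshold are all hypothetical, and an appropriate threshold would have to be set per protocol by the institution.

```python
from collections import Counter


def dose_histogram(dose_estimates_mgy, bin_width_mgy):
    """Count dose estimates per bin; each key is the lower edge of a bin."""
    counts = Counter(
        int(d // bin_width_mgy) * bin_width_mgy for d in dose_estimates_mgy
    )
    return dict(sorted(counts.items()))


def flag_possible_overexposures(records, threshold_mgy):
    """Return the (series_id, dose) pairs whose estimate exceeds the threshold."""
    return [(sid, dose) for sid, dose in records if dose > threshold_mgy]


# Hypothetical size-corrected dose estimates (mGy) logged during one shift.
shift_records = [
    ("series-001", 8.5),
    ("series-002", 14.2),
    ("series-003", 11.9),
    ("series-004", 410.0),
]

if __name__ == "__main__":
    doses = [dose for _, dose in shift_records]
    print(dose_histogram(doses, bin_width_mgy=10))
    print(flag_possible_overexposures(shift_records, threshold_mgy=100.0))
```

A report of this kind only becomes meaningful once the logged values are size-corrected estimates rather than raw CTDIvol, since otherwise an appropriately high setting for a large patient cannot be told apart from an inappropriate one.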

Some people have advocated for the use of independent radiation dose monitoring systems in CT scanners that would be designed to protect patients from overexposure. However, modern CT scanners already use calibration procedures such that the x-ray exposure level being used is “known” to the scanner. No known machine malfunction could cause the radiation dose to dramatically and accidentally increase as long as the voltage, amperage, and rotation time are well controlled by the scanner, which they are. The only exception to this statement would be if the filtration assembly (inherent filtration and the beam-shaping filter) were to become mechanically detached from the x-ray tube assembly, which is a highly unlikely scenario. Therefore, the addition of radiation dose monitoring hardware is not advocated here.

Assessment of the benefits from CT imaging is a far more challenging and time-intensive task than estimating risk, because quantifying benefit requires indication-specific analysis over the huge gamut of indications for CT use. Nevertheless, far more research on comparative effectiveness for CT imaging is needed to generate scientifically justifiable appropriate use criteria for CT use, across its wide range of clinical utility.

The reality of CT practice in the United States today is that the vast majority of overexposures occur due to human error. While automatic technique selection based on size-specific metrics as discussed above will reduce mistakes, inappropriate tube current modulation settings were also the culprit in several CT overdose incidents. Therefore, even with the automatic technique selection capabilities of modern CT systems, human error will remain a factor in CT operation. Efforts to increase the level of training for CT operators are necessary and should be a specific requirement of all CT accreditation procedures. In addition to continuing medical education (CME) requirements, testing should be made a part of periodic requirements for CT operators nationwide. Attending CME courses is of course valuable, but participant understanding can be monitored only through frequent (annual or biannual) testing procedures that have actual workplace consequences. The technical sophistication of CT technologists in the United States has unfortunately fallen behind that of other advanced countries; hence, a major educational initiative for improving the understanding of CT operators is needed.

Efficient and safe operation of a CT scanner is also dependent on the user interface that the CT scanner employs, and unfortunately there is wide variability between CT manufacturers in both the user interface and the nomenclature used to describe the operation of the scanner. Because most CT technologists are required to operate CT scanners from two or more vendors at a given institution, the wide variability in scanner control interfaces is a genuine safety concern. This situation needs to change. Competition between the CT manufacturers has resulted in incredible innovation and dramatic improvements in CT scanner capacities and image quality, and this should continue to be encouraged. The creation of a standard interface for the CT operator should be encouraged by professional organizations in radiology such as the ACR, RSNA, and AAPM, and through trade organizations such as the Medical Imaging Technology Alliance. Government regulation and mandates could yield a similar outcome. However, government mandates are often not as useful as improvements developed within the radiology community.

The control consoles do not need to be identical, but their operation should be conceptually similar and the nomenclature should be standardized. There are many brands of automobiles, some with leather seats and wood-grained dashboards, and some without those features, but they all have the accelerator on the right and the brake pedal in the middle—and older readers will remember that the clutch was always on the left. It was recognized long ago in the auto industry that some things needed to be the same for the safe operation of a car—the CT manufacturers need to realize that the same is true in this industry. These important changes cannot take place unless major CT vendors are willing to compromise in terms of the look and feel of their CT console layout—but overdose and other CT accidents due to operator error are likely to continue unless major changes occur that create a more uniform CT control panel.

Research Opportunities

The topics and questions discussed above generate research challenges that might be addressed by federally sponsored research support such as:

The development of CT scanner–based methods to robustly measure patient dimensions and use this information for more accurate radiation dose assessment.

The development of information technology infrastructure tools that would allow any radiology institution to automate the process of estimating radiation dose due to CT procedures at their facility by using computer-based query methods. The output of these tools, for example, would allow the development of radiation dose statistics for each CT procedure (chest, head, abdomen-pelvis), as a function of which CT scanner is used and across a range of patient dimensions.

Research on the assessment of organ dose from CT scans of patients of different size is needed in order that radiation dose data can be properly used to compute patient risk. Both direct measurement and Monte Carlo–based methods are encouraged.

Beyond the need for research to improve our understanding of the risks of radiation from CT exposures for individuals and subgroups, as described in section 1 of this report, the benefit from CT examinations has not been scientifically measured in any breadth with reliable and objective measures. Research that focuses on epidemiologic methods, patient follow-up, or statistical techniques that can lead to quantifiable measures of benefit from CT procedures is encouraged so that the benefit-risk ratio can be determined across a number of clinical settings in which CT is frequently used.

While not research topics per se, more educational opportunities for CT technologists need to be developed and employers need to provide their technologists access to them. CT vendors should work collaboratively and in the interest of patient safety to create reasonable standards for CT control consoles. Manufacturer-specific CT nomenclature should also be standardized across vendor platforms.
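The dose statistics tool named among the research opportunities above, which would report dose for each CT procedure as a function of scanner and patient size, could be sketched as a simple grouping step. The record fields (`procedure`, `scanner`, `eff_diam_cm`, `dose_mgy`), the 5-cm size bins, and the function names are hypothetical and chosen only for illustration; a real tool would draw these values from the institution's own dose records.

```python
from collections import defaultdict
from statistics import median

def size_bin(eff_diam_cm, width_cm=5):
    """Label the size bin that contains an effective diameter, e.g. '25-30 cm'."""
    lo = int(eff_diam_cm // width_cm) * width_cm
    return f"{lo}-{lo + width_cm} cm"

def dose_statistics(records):
    """Group dose records by (procedure, scanner, size bin) and summarize each group."""
    groups = defaultdict(list)
    for r in records:
        key = (r["procedure"], r["scanner"], size_bin(r["eff_diam_cm"]))
        groups[key].append(r["dose_mgy"])
    return {
        key: {"n": len(doses), "median": median(doses), "max": max(doses)}
        for key, doses in groups.items()
    }
```

Summaries of this kind, keyed by protocol and patient size, are the form in which an institution could compare its practice against registry reference values.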

Conclusion

Implementation of CT-derived patient size estimation prior to scanning is thought to be key to reducing CT overexposure. Patient size estimation, currently performed by all CT scanners but in a manner that is opaque to the operator, is key to a number of efforts outlined above that would lead to tighter technique control, reduced misadministration, and better dose-reporting statistics. Advancing the educational requirements of the technologist who operates CT scanners is also thought to be an essential component of radiation dose reduction efforts in CT. Periodic and meaningful testing of CT technologists is believed to be essential to the educational process. CT manufacturers should be encouraged to develop a more common CT console interface, which is thought to be necessary for the safe operation of multiple scanner types by technologists. A common nomenclature in CT terminology at the level of the CT console is also encouraged. Academic and professional societies in radiology, and trade organizations such as the Medical Imaging Technology Alliance, should exert continuous pressure on CT manufacturers until these relatively modest suggestions are incorporated across the installed scanner base. Toward this end, the Food and Drug Administration should consider an expedited approval process to allow CT manufacturers to deploy more uniform control console software in a timely manner.

Section 4: How Can We Use Information Technology to an Advantage to Ensure Optimum Use of CT Scanning?

More effective management of individual and population exposure to ionizing radiation could come through technologic improvements related to CT and the use of sophisticated information technology tools (21). During the conference, several examples of these ideas were presented.

Decision Support Influence on Test Ordering

It has been widely speculated that a fairly large proportion of diagnostic imaging studies performed in the United States contribute little to patient diagnoses and outcomes (22). This “wasteful” imaging could stem from one or more sources. First, referring physicians might be unaware of the true diagnostic contribution of imaging in a given patient with given signs and symptoms and, therefore, may select and order a test with a low yield of helpful findings. Second, referring physicians may be unaware of prior diagnostic imaging studies recently performed in a given patient (either within or outside their own institutions) and order unnecessary repeat testing. Third, the well-known practice of “defensive medicine” may lead physicians to have an extremely low threshold for ordering diagnostic imaging studies if they feel that the performance of such tests reduces their vulnerability to diagnostic error and a subsequent claim of negligence. Additionally, it is well established that physicians who have economic interests in imaging facilities order tests at a far higher rate than their counterparts who do not have such an interest (23) and that these higher utilization rates are wasteful.

The deployment of decision support systems (eg, computer-based expert systems that provide evidence-based advice to physicians about diagnostic test selection for a wide variety of patient presentations) has the potential to substantially mitigate each of these potential sources of wasteful, low-yield, or unnecessary testing. Reports from several institutions (24,25) have indicated that real-time decision support systems coupled with computerized physician order entry tools embedded in an electronic health record may reduce the utilization of advanced imaging tests by approximately 15%. The vast majority of the reduction comes from the elimination of orders for low-yield or duplicate imaging procedures that are often designated as “inappropriate utilization” (25).
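One of the simplest rules such a system might apply at order entry is a check for a recent duplicate examination. The sketch below is illustrative only and does not describe the systems reported in references 24 and 25: the field names, the function name `find_recent_duplicate`, and the 30-day window are assumptions made for this example.

```python
from datetime import date, timedelta

def find_recent_duplicate(order, prior_exams, window_days=30):
    """Return a prior exam that matches the new order within the window, else None.

    order and each prior exam are dicts with 'modality', 'body_region',
    and 'date' (a datetime.date). The window length is a hypothetical choice.
    """
    cutoff = order["date"] - timedelta(days=window_days)
    for exam in prior_exams:
        same_study = (exam["modality"], exam["body_region"]) == (
            order["modality"], order["body_region"])
        if same_study and cutoff <= exam["date"] <= order["date"]:
            return exam
    return None
```

A match would prompt the ordering physician to review the earlier study before proceeding. A rule like this can work only if prior examinations from outside the institution are visible to the system, which is the gap the repository discussion later in this section addresses.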

Decision Support Influence on Protocol Selection

While nonradiologist physicians are most commonly responsible for selecting and ordering diagnostic imaging examinations, it is radiologists who are typically responsible for selecting specific technical imaging protocols (eg, the combination of user-selectable machine parameters, the use and timing of contrast agent administration, the number and timing of image acquisition series) that are most likely to answer the presenting clinical question. Radiologists need to be responsible for selecting (or prescribing) a protocol that will answer the clinical question efficiently while minimizing patient risk (from radiation and/or contrast agent exposure) and cost. An evidence-based decision support system designed to assist radiologists in this task could improve their choices.

While some Web-based protocol selection advice is available, no information is yet known about the effectiveness of this type of decision support.

“Harvesting” Exposure Information for Repositories

Several speakers at the summit conference expressed disappointment that the United States has not yet established a mechanism for harvesting organ-based effective radiation dose information for each patient receiving medical radiation and forwarding that information to a regional or national repository. Despite the ease of using secure, private national databases to manage everything from banking to online purchasing, only very primitive systems exist to make critical medical information, including the images, interpretations, and radiation exposure data readily available to patients, to their physicians, and to secure data repositories. Only very early efforts to communicate estimates of radiation-induced risks to health care providers and patients have been recorded in publications (26). The absence of such information creates inefficiencies in the diagnosis and treatment of a mobile population and can lead to waste and errors. Until recently, there was no significant federal support for the creation of such national medical information repositories, such as national imaging and radiation exposure registries. A partnership between the National Institute of Biomedical Imaging and Bioengineering and RSNA to create such imaging registries is a promising start.

If such registries existed, institutions could compare their doses to those in the acceptable ranges.

Cumulative Exposure as Factor in Algorithms

Several speakers at the summit conference spoke about the potential value of including data on a patient’s cumulative radiation exposure as a factor in decision support rules that established the benefit-risk ratio for ordering the “next” imaging test that involves ionizing radiation. Similarly, several speakers expressed concern that we do not yet have the needed scientific knowledge to ensure that such a practice would be beneficial. Until more is known quantitatively about both the benefits of specific imaging studies in a wide range of clinical settings and the risks of low-level radiation exposure to individuals, it will be very difficult to calculate these benefit-risk trade-offs accurately.

Research Opportunities

Based on the discussion above, several questions warrant investigation through federally sponsored research:

Can we improve and test the value of clinical decision support systems that advise physicians about the best imaging examination in given clinical situations?

Can we develop and test decision support systems that help radiologists select the most appropriate imaging protocol for a given patient that is to undergo an appropriately selected imaging study?

Can we develop and test technical and communication standards that would permit the collection of accurate and meaningful exposure data on individuals and the forwarding of this information to secure “repositories” and to the patient’s electronic medical record?

Can we develop and test new decision support algorithms that can add radiation exposure to the “benefit-risk” calculation used to recommend an imaging test for a given clinical situation?

Conclusion

Information technology may well be as important as CT scanning technology itself in managing and reducing Americans’ exposure to excessive radiation from medical imaging procedures.

Disclosures of Conflicts of Interest: J.M.B. Financial activities related to the present article: none to disclose. Financial activities not related to the present article: consultancy for Varian; NIH grants to institution; textbook royalties from Lippincott Williams and Wilkins; unrelated travel/meeting expenses from NIH Study Section. Other relationships: none to disclose. W.R.H. No relevant conflicts of interest to disclose. M.F.M.G. Financial activities related to the present article: none to disclose. Financial activities not related to the present article: author receives research support from Master Research Agreement to UCLA from Siemens Medical Solutions; author is instructor for CME courses by Medical Technology Management Institute. Other relationships: none to disclose. S.E.S. Financial activities related to the present article: NIBIB sponsored the meeting from which this report is derived. Financial activities not related to the present article: author is President of Brigham Radiology Research and Education Foundation, which is an equity holder in Medicalis, a medical software manufacturer (author has no equity and receives no financial support); grant recipient from Toshiba and Siemens (receives no financial support); member of GERRAF Board of Review, receives travel reimbursement from AUR (no additional financial support). Other relationships: none to disclose.

Advances in Knowledge.

• Imagers can now do a more precise job in translating machine-derived exposure parameters into estimates of dose to radiation-sensitive organs.

• Imagers can now do more to ensure optimum design of CT examination protocols and use of best practices.

• Information technology has become a key tool to optimize the use of CT scanning.

Acknowledgments

We thank Andrew Menard and Lisa Cushman-Daly for their help in manuscript preparation.

Received December 19, 2011; revision requested January 26, 2012; revision received April 2; accepted April 16; final version accepted May 8.

Funding: The meeting from which this report was derived was sponsored by the National Institute of Biomedical Imaging and Bioengineering.

Abbreviations:

- AAPM = American Association of Physicists in Medicine

- ACR = American College of Radiology

- CTDI = CT dose index

- CTDIvol = CTDI volume

- DICOM = Digital Imaging and Communications in Medicine

- DLP = dose-length product

- LNT = linear nonthreshold

- RSNA = Radiological Society of North America

References

- 1. Los Angeles Times. Cedars-Sinai investigated for significant radiation overdoses of 206 patients. http://latimesblogs.latimes.com/lanow/2009/10/cedarssinai-investigated-for-significant-radiation-overdoses-of-more-than-200-patients.html. Published October 9, 2009. Accessed April 17, 2011.

- 2. McKinnon PJ. Ataxia-telangiectasia: an inherited disorder of ionizing-radiation sensitivity in man—progress in the elucidation of the underlying biochemical defect. Hum Genet 1987;75(3):197–208.

- 3. Hricak H, Brenner DJ, Adelstein SJ, et al. Managing radiation use in medical imaging: a multifaceted challenge. Radiology 2011;258(3):889–905.

- 4. National Research Council. Health risks from exposure to low levels of ionizing radiation: BEIR VII–Phase 2. National Academy of Sciences. Washington, DC: National Academies Press, 2006.

- 5. Image Gently. The Alliance for Radiation Safety in Pediatric Imaging. http://www.pedrad.org/associations/5364/ig/. Accessed April 17, 2011.

- 6. Image Wisely: Radiation Safety in Adult Medical Imaging. http://imagewisely.org/. Accessed April 17, 2011.

- 7. Jucius RA, Kambic GX. Radiation dosimetry in computed tomography. Appl Opt Instrum Med 1977;127:286–295.

- 8. Shope TB, Gagne RM, Johnson GC. A method for describing the doses delivered by transmission x-ray computed tomography. Med Phys 1981;8(4):488–495.

- 9. American Association of Physicists in Medicine. Comprehensive Methodology for the Evaluation of Radiation Dose in X-Ray Computed Tomography: Report of AAPM Task Group 111—The Future of CT Dosimetry. Report No 111. New York, NY: American Association of Physicists in Medicine, 2010.

- 10. Neumann RD, Bluemke DA. Tracking radiation exposure from diagnostic imaging devices at the NIH. J Am Coll Radiol 2010;7(2):87–89.

- 11. U.S. Food and Drug Administration. FDA unveils initiative to reduce unnecessary radiation exposure from medical imaging. http://www.fda.gov/NewsEvents/Newsroom/PressAnnouncements/ucm200085.htm. Published February 9, 2010. Accessed April 17, 2011.

- 12. Rehani M, Frush D. Tracking radiation exposure of patients. Lancet 2010;376(9743):754–755.

- 13. California Senate Bill 1237. Senate Bill No. 1237 Chapter 521, Sections 115111, 115112, and 115113 of the California Health and Safety Code. Radiation control: health facilities and clinics—records. http://www.leginfo.ca.gov/pub/09-10/bill/sen/sb_1201-1250/sb_1237_bill_20100929_chaptered.pdf. Published September 29, 2010. Accessed April 17, 2011.

- 14. McCollough CH, Leng S, Yu L, Cody DD, Boone JM, McNitt-Gray MF. CT dose index and patient dose: they are not the same thing. Radiology 2011;259(2):311–316.

- 15. Turner AC, Zhang D, Khatonabadi M, et al. The feasibility of patient size-corrected, scanner-independent organ dose estimates for abdominal CT exams. Med Phys 2011;38(2):820–829.

- 16. American Association of Physicists in Medicine. Size Specific Dose Estimates (SSDE) in Pediatric and Adult CT Examinations. Report of AAPM Task Group 204. AAPM Report No 204. New York, NY: American Association of Physicists in Medicine, 2011.

- 17. Bauhs JA, Vrieze TJ, Primak AN, Bruesewitz MR, McCollough CH. CT dosimetry: comparison of measurement techniques and devices. RadioGraphics 2008;28(1):245–253.

- 18. The 2007 Recommendations of the International Commission on Radiological Protection. ICRP publication 103. Ann ICRP 2007;37(2-4):1–332.

- 19. Smith-Bindman R, Lipson J, Marcus R, et al. Radiation dose associated with common computed tomography examinations and the associated lifetime attributable risk of cancer. Arch Intern Med 2009;169(22):2078–2086.

- 20. Berrington de González A, Mahesh M, Kim KP, et al. Projected cancer risks from computed tomographic scans performed in the United States in 2007. Arch Intern Med 2009;169(22):2071–2077.

- 21. Prevedello LM, Sodickson AD, Andriole KP, Khorasani R. IT tools will be critical in helping reduce radiation exposure from medical imaging. J Am Coll Radiol 2009;6(2):125–126.

- 22. Mecklenburg RS, Kaplan GS. Costs from Inefficient Use of Caregivers. IOM (Institute of Medicine). 2010. The Healthcare Imperative: Lowering Costs and Improving Outcomes: Workshop Series Summary. Washington, DC: The National Academies Press; Page 119. http://www.iom.edu/Reports/2011/The-Healthcare-Imperative-Lowering-Costs-and-Improving-Outcomes.aspx. Published February 24, 2011. Accessed April 17, 2011.

- 23. Levin DC, Rao VM, Kaye AD. Why the in-office ancillary services exception to the Stark laws needs to be changed—and why most physicians (not just radiologists) should support that change. J Am Coll Radiol 2009;6(6):390–392.

- 24. Blackmore CC, Mecklenburg RS, Kaplan GS. Effectiveness of clinical decision support in controlling inappropriate imaging. J Am Coll Radiol 2011;8(1):19–25.

- 25. Sistrom CL, Dang PA, Weilburg JB, Dreyer KJ, Rosenthal DI, Thrall JH. Effect of computerized order entry with integrated decision support on the growth of outpatient procedure volumes: seven-year time series analysis. Radiology 2009;251(1):147–155.

- 26. Sodickson A, Baeyens PF, Andriole KP, et al. Recurrent CT, cumulative radiation exposure, and associated radiation-induced cancer risks from CT of adults. Radiology 2009;251(1):175–184.