Abstract

Threatening faces involuntarily grab attention in socially anxious individuals. It is unclear, however, whether this attention capture comes at the expense of concurrent visual processing. The current study examined the perceptual cost effects of viewing fear-relevant stimuli (threatening faces) relative to a concurrent change-detection task. Steady-state visual evoked potentials (ssVEPs) were used to separate the neural response to two fully overlapping types of stimuli, flickering at different frequencies: Task-irrelevant facial expressions (angry, neutral, happy) were overlaid with a task-relevant Gabor patch stream, which required a response to rare phase reversals. Groups of 17 high and 17 low socially anxious observers were recruited through online prescreening of 849 students. A prominent competition effect of threatening faces was observed solely in elevated social anxiety: When an angry face, relative to a neutral or happy face, served as a distractor, heightened ssVEP amplitudes were seen at the tagging frequency of that facial expression. Simultaneously, the ssVEP evoked by the task-relevant Gabor grating was reliably diminished compared to conditions with neutral or happy distractor faces. Thus, threatening faces capture and hold low-level perceptual resources in viewers symptomatic for social anxiety, at the cost of a concurrent primary task. Importantly, this competition in lower-tier visual cortex was maintained throughout the viewing period and unaccompanied by competition effects on behavioral performance.

Introduction

Facial expressions represent social cues of particular relevance for individuals fearful of social contact. These social cues may convey information about the imminence of social interaction and thus the likelihood of negative evaluation and interpersonal scrutiny. Research in healthy observers has suggested that visual stimuli denoting threat are processed in a prioritized fashion, which may serve an adaptive function, allowing individuals to rapidly detect and respond to danger (Bar-Haim, Lamy, Pergamin, Bakermans-Kranenburg, & van Ijzendoorn, 2007; Öhman, 2005). However, a heightened tendency to direct attention to potentially threatening stimuli is often found in anxious individuals (MacLeod, Mathews, & Tata, 1986; Mathews & Mackintosh, 1998; Mogg & Bradley, 1998), and this bias toward threat cues has been demonstrated in both clinically and non-clinically anxious individuals in a wide variety of tasks. It has been suggested that attentional prioritization styles may integrally contribute to both the development and maintenance of anxiety disorders (for a meta-analysis of attentional biases in anxiety disorders, see Bar-Haim et al., 2007).

In the case of social phobia, attentional biases towards social threat cues have been consistently reported in studies capitalizing on reaction times in the dot-probe paradigm as well as those utilizing eye-tracking technology (Bar-Haim et al., 2007; Schultz & Heimberg, 2008). The dot-probe paradigm entails a spatial cueing procedure in which participants make a speeded response to a probe (e.g., a letter) when it replaces a cue in the same visual hemifield (e.g., angry face). Typically, response latencies to probes are reduced when preceded by fear-relevant (angry or disgusted faces) as opposed to neutral cues and this pattern is enhanced with social anxiety and interpreted as evidence of early hypervigilance (Klumpp & Amir, 2009; Mogg & Bradley, 2002; Mogg, Philippot, & Bradley, 2004; Pishyar, Harris, & Menzies, 2004). Eye-tracking methodology in socially anxious populations has afforded finer temporal resolution about orienting patterns. In particular these studies have indicated that when presented with face pairs, high compared to low socially anxious individuals initially fixate more often on angry (or even happy) relative to neutral expressions (Garner, Mogg, & Bradley, 2006; Wieser, Pauli, Weyers, Alpers, & Mühlberger, 2009) and show impairments when directed to inhibit reflexive orienting towards a given expression (Wieser, Pauli, & Mühlberger, 2009). Furthermore, social phobia patients demonstrate hypervigilant scanning patterns of aversive facial expressions (Horley, Williams, Gonsalvez, & Gordon, 2003, 2004). Taken together, these data have been interpreted in accordance with the so-called vigilance-avoidance hypothesis, such that perception of threat-relevant stimuli in anxious individuals is characterized by initial hypervigilance and subsequent defensive avoidance (Mogg, Bradley, De Bono, & Painter, 1997). 
Importantly, however, findings from the aforementioned paradigms point to hypervigilance for angry faces as a hallmark of social anxiety, whereas objective evidence of reflexive perceptual avoidance is limited (but see Mansell, Clark, Ehlers, & Chen, 1999).

While eye-tracking and dot-probe tasks have reliably implicated altered overt attention to facial expressions in social anxiety, they cannot account for fluctuations in covert attention: It is difficult to disentangle attentional facilitation from impaired disengagement, both of which may underlie the differences observed in behavioral responses (Bögels & Mansell, 2004; Garner et al., 2006; Mogg et al., 1997). In addition, as most theories of attentional biases in anxiety disorders differentiate early and late stages in the processing of threatening stimuli, a more detailed account of the temporal dynamics of the attentional bias and, as a consequence, a continuous measure of attentive processing are warranted. Given its excellent temporal resolution, EEG as a measure of cortical excitation has the potential to provide these measures. In fact, accumulating neurophysiological studies have demonstrated enhanced visual cortical processing of threat cues (angry facial expressions) in social anxiety (Kolassa et al., 2009; Kolassa, Kolassa, Musial, & Miltner, 2007; Kolassa & Miltner, 2006; Mühlberger, Wieser, Hermann, Weyers, Tröger, & Pauli, 2009; Sewell, Palermo, Atkinson, & McArthur, 2008), specifically as reflected in larger occipito-temporal P1, temporo-parietal N1 and associated amplitudes in event-related potentials (ERPs). An important caveat, however, is that ERPs reflect transient spatio-temporal responses that are not stationary spatially and temporally, and thus may not permit examination of sustained attentional shifts (Müller & Hillyard, 2000) proposed to be characteristic of attentional deployment pathognomonic to interpersonal apprehension.

To extend these ERP findings, a recent study utilized steady-state visual evoked potentials (ssVEPs), a continuous measure of visual cortical engagement, to examine attention dynamics in social anxiety (McTeague, Shumen, Wieser, Lang, & Keil, 2011). The ssVEP is an oscillatory response to stimuli modulated in luminance (i.e., flickered), in which the frequency of the electro-cortical response recorded from the scalp equals that of the driving stimulus. Of significant advantage, the oscillatory ssVEP is precisely defined in the frequency domain as well as time-frequency domain. Thus, it can be reliably separated from noise (i.e., all features of the EEG that do not share the frequency characteristic of the stimulus train can be dismissed) and quantified as the evoked spectral power in a narrow frequency range. Importantly, ssVEPs reflect multiple excitations of the visual system with the same stimulus over a brief epoch. Thus, changes in driven neural mass activity can be affected both by initial sensory processing and subsequent re-entrant, top-down modulation of sensory activity via higher order processes (Keil, Gruber, & Müller, 2001; Silberstein, 1995). Facilitated sensory responding as indexed with enhanced ssVEP amplitude is linked to resource allocation of attention to the driving stimulus. For example, enhanced ssVEP amplitudes to visual stimuli are reliably observed as a function of instructed attention (Müller, Malinowski, Gruber, & Hillyard, 2003), fear conditioning (Moratti & Keil, 2005; Moratti, Keil, & Miller, 2006), and emotional arousal (Keil et al., 2003), showing high sensitivity to both intrinsic and extrinsic motivation.

To assess fluctuations in sustained sensory processing in social anxiety, McTeague et al. (2011) recorded ssVEP amplitudes when participants viewed facial expressions (i.e., anger, fear, happy, neutral). Generators of the ssVEP in response to a range of cues have been localized to extended visual cortex (Müller, Teder, & Hillyard, 1997), with strong contributions from V1 and higher-order cortices (Di Russo et al., 2007) and similarly, source estimation indicated an early visual cortical origin of the face-evoked ssVEP. Specifically among those with pervasive social anxiety, the visual cortical ssVEP showed amplitude enhancement for emotional (angry, fearful, happy) relative to neutral expressions, maintained throughout the entire 3500 ms viewing epoch (McTeague et al., 2011). Taken together these data suggest that temporally sustained, heightened perceptual bias towards affective facial cues is associated with generalized social anxiety.

While complementing preceding neurophysiological studies with a continuous and more protracted measure of electrocortical dynamics, the sustained perceptual sensitivity observed by McTeague et al. (2011) was elicited in response to isolated facial displays (i.e., one face at a time), with no alternative or competing stimulus present. This fundamentally limits conclusions on preferential selection versus perceptual avoidance of threat stimuli. From the perspective of biased competition models of attention (Desimone & Duncan, 1995), preference for motivationally relevant cues will be most pronounced when viewing conditions enable competition (Yiend, 2010). Furthermore, the presentation of arrays of facial expressions provides a limited but more ecologically valid approximation of the complex real-world environment in which socially anxious individuals contend with simultaneous cues portending differential interpersonal interactions.

A particular advantage afforded by ssVEP methodology is the feasibility of assigning different frequencies to stimuli simultaneously presented in the visual field (a technique referred to as ‘frequency-tagging’). Thus, signals from spatially overlapping stimuli can be easily separated in the frequency domain (Wang, Clementz, & Keil, 2007) and submitted to time-frequency analyses to provide a continuous measure of the visual resource allocation to a specific stimulus amidst competing cues. Toward this aim, Wieser et al. (2011) employed ssVEP methodology while participants viewed two facial expressions presented simultaneously to the visual hemifields at different tagging frequencies. Regardless of whether happy or neutral facial expressions served as the competing stimulus in the opposite hemifield, high socially anxious participants selectively attended to angry facial expressions during the entire viewing period. Interestingly, this heightened attentional selection for angry faces among the socially anxious did not coincide with competition (cost) effects for the co-occurring happy or neutral expressions. Rather, perceptual resource availability appeared augmented among the socially anxious in response to a cue connoting interpersonal threat.

The notion that overall attentional resources are amplified rather than diverted to contend with personally relevant threat cues has implications for the understanding and treatment of social phobia, particularly with the emergence of cognitive modification programs (Hakamata et al., 2010). Notably, these interventions aim to direct attention away from social threat, in particular static angry facial displays similar to those utilized in this series of studies. From the point of view of the biased competition model, attentional selection is most likely to occur when stimuli engage the same population of neurons (Desimone & Duncan, 1995). To hone the sensitivity for detecting diminution (i.e., cost effects) in the electrocortical response due to selective attention to social threat cues, stimuli were overlaid in the present study rather than spatially separated. In particular, to maximize the likelihood of recruiting the same neural network, facial expressions were shown at the same location as (and overlapping fully with) grating stimuli (Gabor patches) that were associated with a detection task. Neural responses to both stimuli were separated by frequency tagging. We expected that in high socially anxious individuals, angry facial expressions would act as strong competitors, diminishing the response to the task-relevant stimulus. Such a pattern would be associated with reduced ssVEPs in the Gabor stream and potentially a corresponding increase in error rates in the change detection task.

Materials and Methods

Participants

Participants were undergraduate students recruited from General Psychology classes at the University of Florida. They received course credit for participation. Participants were recruited based on responses to the self-report form of the Liebowitz Social Anxiety Scale (LSAS-SR; Fresco et al., 2001) collected during an online screening procedure. From a sample of 849 participants (LSAS-SR total score: M = 37.0, SD = 20.4), individuals scoring in the upper 20% (corresponding to a total score of 53) and lower 10–30% (corresponding to total scores of 11 to 25) were invited to take part in the study to constitute high (HSA) and low socially anxious (LSA) groups, respectively. Thirty-four participants (17 per group) attended the laboratory session. To ensure that the screening procedure was valid, participants completed the LSAS-SR again upon arrival on the day of testing, prior to the experimental session. As expected, the groups differed significantly in total scores of the LSAS-SR, t(32) = 16.4, p < .001, LSA: M = 13.8, SD = 6.9, HSA: M = 66.4, SD = 11.3. The groups did not differ in terms of gender ratio (LSA: 5 males, HSA: 2 males, χ2(1, N = 34) = 1.6, p = .20), ethnicity (LSA: 76.4% Caucasian, 11.8% Asian, 11.8% Hispanic; HSA: 58.8% Caucasian, 11.8% Afro-American, 11.8% Asian, 5.9% other; χ2(4, N = 34) = 3.4, p = .50), or age (LSA: M = 18.7, SD = 1.2, HSA: M = 18.9, SD = 1.0, t(32) = 0.8, p = .43). All participants reported no family history of photic epilepsy, and reported normal or corrected-to-normal vision. All procedures were approved by the institutional review board of the University of Florida, and all participants gave written informed consent.

Design and Procedure

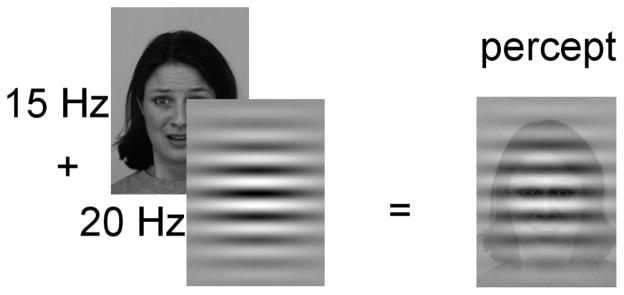

Seventy-two pictures were selected from the Karolinska Directed Emotional Faces (KDEF; Lundqvist, Flykt, & Öhman, 1998; http://www.facialstimuli.com/), showing 24 actors (12 female, 12 male) gazing directly at the viewer and posing angry, happy, and neutral facial expressions. All stimuli were converted to grayscale and displayed against a gray background on a 19-inch computer monitor with a vertical refresh rate of 60 Hz. In each experimental trial, a face picture was presented at the center of the screen for 3000 ms. Faces were shown in a flickering mode at a frequency of either 15 Hz or 20 Hz to evoke ssVEPs. Over this flickering face stimulus, a transparent Gabor patch was superimposed, flickering at 20 Hz or 15 Hz, respectively (Figure 1).

Figure 1.

Schematic layout of the compound stimulus. In each trial, a face flickering at either 15 or 20 Hz was overlaid with a Gabor patch, flickering at the other frequency (20 Hz and 15 Hz, respectively). Occasionally, the Gabor patch was replaced by its anti-phasic counterpart. Overall, the trials included either zero, one, or two phase changes in the Gabor patch stream.

Note that this figure does not veridically depict the temporal nature of the stimulus as seen on the screen. Because both overlapping stimuli flickered rapidly and at different rates, facial expressions were not perceived as being in the background and were easily recognizable.

The driving frequencies of the face stimulus and the Gabor patch were different to ensure distinct frequency-tagging of each stimulus stream. Regarding the detection task, the flickering stream of the Gabor patch either consisted of the same patch throughout the trial (zero change), or was replaced within the stream by its anti-phasic image either once (one change) or twice (two changes) at pseudo-random times (first change between 666 ms and 1333 ms, second change between 1666 and 2333 ms). The compound stimulus stream subtended a horizontal visual angle of 4.9 degrees, and a vertical visual angle of 6.3 degrees. The distance between the screen and the participants’ eyes was 1.0 m.
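The stimulus timing described above can be illustrated with a minimal sketch. It shows how the two tagging frequencies map onto whole video frames at the stated 60 Hz refresh rate, and what on-screen size the stated visual angles imply at a 1.0 m viewing distance. The function names and the one-frame-on duty cycle are assumptions made for illustration; the text does not specify how the flicker was implemented.

```python
import math

REFRESH_HZ = 60  # vertical refresh rate stated in the text


def frames_per_cycle(tag_hz, refresh_hz=REFRESH_HZ):
    """Number of video frames in one flicker cycle (must divide evenly)."""
    frames, remainder = divmod(refresh_hz, tag_hz)
    if remainder:
        raise ValueError(f"{tag_hz} Hz does not divide {refresh_hz} Hz evenly")
    return frames


def flicker_sequence(tag_hz, duration_ms, on_frames=1):
    """Per-frame on/off states; `on_frames` per cycle is an assumed duty cycle."""
    per_cycle = frames_per_cycle(tag_hz)
    n_frames = round(duration_ms / 1000 * REFRESH_HZ)
    return [(i % per_cycle) < on_frames for i in range(n_frames)]


def size_cm(visual_angle_deg, distance_cm=100.0):
    """On-screen extent subtending a given visual angle at a viewing distance."""
    return 2 * distance_cm * math.tan(math.radians(visual_angle_deg) / 2)


face = flicker_sequence(15, 3000)   # 4 frames per cycle
gabor = flicker_sequence(20, 3000)  # 3 frames per cycle
print(frames_per_cycle(15), frames_per_cycle(20))
print(sum(face), sum(gabor))        # number of flicker cycles per 3000 ms trial
print(round(size_cm(4.9), 1), round(size_cm(6.3), 1))
```

Because 15 Hz and 20 Hz both divide 60 Hz evenly, each stream can be driven with an integer number of frames per cycle, which keeps the two tagging frequencies exact.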

Participants were instructed to attend to the superimposed Gabor patch only, and to report upon completion of each trial the number of observed changes (change detection task). One block of stimuli (72 trials) was presented with the faces flickering at 15 Hz and the Gabor patches flickering at 20 Hz, whereas in the other block the tagging frequencies were interchanged; block order was counterbalanced across participants. Prior to the actual experimental procedure, participants completed 16 practice trials. During the practice trials, participants received immediate, trial-specific feedback regarding performance (“Correct”, “Error”, or “Missed”). Once the participant had clearly articulated the task and performed above chance, the experiment proper was started.

After the EEG recording, each participant viewed the 72 different facial expressions again in a randomized order and was asked to rate the respective picture on the dimensions of affective valence and arousal on the 9-point Self-Assessment Manikin (SAM, Bradley & Lang, 1994). In this last block, participants viewed each picture for 6 s (without flickering) before the SAM was presented on the screen for rating.

EEG recording and data analysis

The electroencephalogram (EEG) was continuously recorded from 256 electrodes using an Electrical Geodesics system (EGI, Eugene, OR, USA), referenced to Cz, digitized at a rate of 250 Hz, and online band-pass filtered between 0.1 and 50 Hz. Electrode impedances were kept below 50 kΩ, as recommended for the Electrical Geodesics high-impedance amplifiers. Offline, a low-pass filter of 40 Hz was applied. Epochs of 600 ms pre-stimulus and 3600 ms post-stimulus onset were extracted offline. Artifact rejection was also performed offline, following the procedure proposed by Junghöfer et al. (2000). Using this approach, trials with artifacts were identified based on the distribution of statistical parameters of the extracted EEG epochs (absolute value, standard deviation, maximum of the differences) across time points, for each channel, and, in a subsequent step, across channels. Sensors contaminated with artifacts were replaced by statistically weighted, spherical spline interpolated values. The maximum number of approximated channels in a given trial was set to 20. These criteria also led to exclusion of trials contaminated by vertical and horizontal eye movements. For interpolation and all subsequent analyses, data were arithmetically transformed to the average reference. Artifact-free epochs were averaged separately based on task performance (correct versus incorrect response) for the 6 combinations of facial expressions (i.e., happy, neutral, or angry) and tagging frequency (i.e., 15 or 20 Hz) to obtain ssVEPs containing driving frequencies of both the underlaid (faces) and overlaid stimuli (Gabor patches). Overall, 63.3% of trials were kept. The number of artifact-free trials did not differ between conditions and groups (all Fs < 1.1, ps > .31).

Time-varying amplitudes of ssVEP epochs for each condition were obtained by convolving the EEG time series with complex Morlet wavelets (Bertrand, Bohorquez, & Pernier, 1994). The two driving frequencies (15 and 20 Hz) were used as center frequencies, with wavelets designed to have a ratio m = f0/σf = 14 between the analysis frequency f0 and the width of the wavelet in the frequency domain σf. As a consequence, the width of the wavelets in the frequency domain changes as a function of the analysis frequency f0, resulting in a SD of 1.07 Hz in the frequency domain and a SD of 148 ms in the time domain for the wavelet centered at 15 Hz, and a SD of 1.43 Hz in the frequency domain and a SD of 111 ms in the time domain for the wavelet at 20 Hz. Thus, for each trial, the time-frequency responses were calculated for both frequencies, representing the cortical response to either the Gabor patch or the face stimulus. By fully crossing face conditions with stimulation frequencies, all combinations of faces and Gabor patches were extracted from the compound ssVEP signal. In this way, a time-varying measure of the amount of processing resources devoted to one stimulus in the presence of another stimulus (competitor) was obtained.
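The wavelet widths reported above follow from the ratio m = f0/σf = 14 together with the standard relation σt = 1/(2πσf). The following minimal sketch, in plain Python, recomputes these widths and applies a complex Morlet wavelet to a synthetic 15 Hz sinusoid. The truncation at ±3 SD, the amplitude normalization, and all function names are assumptions chosen for illustration, not details taken from the original analysis.

```python
import cmath
import math

FS = 250.0    # sampling rate in Hz, as stated for the recording
M_RATIO = 14  # ratio f0 / sigma_f given in the text


def wavelet_widths(f0, m=M_RATIO):
    """Return (sigma_f in Hz, sigma_t in s) for a Morlet wavelet centered at f0."""
    sigma_f = f0 / m
    sigma_t = 1.0 / (2.0 * math.pi * sigma_f)
    return sigma_f, sigma_t


def morlet(f0, fs=FS, m=M_RATIO, n_sd=3.0):
    """Complex Morlet wavelet sampled at fs, truncated at +/- n_sd * sigma_t.

    Scaled so that a unit-amplitude sinusoid at f0 yields an envelope near 1
    (an assumed normalization chosen for readability).
    """
    _, sigma_t = wavelet_widths(f0, m)
    half = int(round(n_sd * sigma_t * fs))
    taps = []
    for k in range(-half, half + 1):
        t = k / fs
        gauss = math.exp(-t * t / (2.0 * sigma_t ** 2))
        taps.append(gauss * cmath.exp(2j * math.pi * f0 * t))
    scale = 2.0 / sum(abs(w) for w in taps)
    return [w * scale for w in taps]


def amplitude_at(signal, wavelet, center):
    """Envelope at sample `center`.

    Because w[-k] == conj(w[k]), this sum equals the convolution of the
    signal with the wavelet evaluated at `center`.
    """
    half = len(wavelet) // 2
    segment = signal[center - half:center + half + 1]
    return abs(sum(s * w.conjugate() for s, w in zip(segment, wavelet)))


# Synthetic 4.2 s epoch containing only a 15 Hz oscillation of amplitude 1.
signal = [math.cos(2 * math.pi * 15 * n / FS) for n in range(1050)]
center = 525
amp_15 = amplitude_at(signal, morlet(15), center)
amp_20 = amplitude_at(signal, morlet(20), center)
print(wavelet_widths(15), wavelet_widths(20))
print(round(amp_15, 2), round(amp_20, 2))
```

With these settings the 15 Hz wavelet recovers the full amplitude of the synthetic signal, whereas the 20 Hz wavelet responds only negligibly. This is the property that allows the two overlapping stimulus streams to be separated.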

In order to examine the time course of attentional engagement, ssVEP amplitudes were averaged across time points in three time windows after picture onset: 100–700 ms, 800–1400 ms, 1700–2300 ms. These temporal ranges were selected to capture dynamics related to the target events (i.e. changes in the phase of Gabor patches). In addition, temporally sustained effects were examined using an overall mean ranging between 100 and 3000 ms after the onset of the visual stream, thus capturing the entire viewing epoch. As observed in previous work (Müller, Andersen, & Keil, 2008) amplitudes of the ssVEPs were most pronounced at electrode locations near Oz, over the occipital pole. We therefore averaged all signals spatially, across a medial-occipital cluster comprising site Oz and the 7 nearest neighboring sensors (EGI sensors: 125, 126, 127, 137, 138, 139, 149, 150).
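The spatial and temporal averaging described above can be sketched as follows, assuming each epoch is stored as one amplitude time series per sensor, starting 600 ms before stimulus onset and sampled at 250 Hz. The data layout, the inclusive window boundaries, and the function names are assumptions made for illustration. The synthetic input is constructed so that each window mean equals the window's midpoint in milliseconds.

```python
from statistics import mean

FS = 250       # sampling rate (Hz)
PRE_MS = 600   # pre-stimulus portion of each epoch (ms)
CLUSTER = [125, 126, 127, 137, 138, 139, 149, 150]  # sensors listed in the text
WINDOWS_MS = {
    "early": (100, 700),
    "middle": (800, 1400),
    "late": (1700, 2300),
    "overall": (100, 3000),
}


def ms_to_sample(t_ms):
    """Convert post-stimulus time in ms to a sample index within the epoch."""
    return (t_ms + PRE_MS) * FS // 1000


def cluster_average(amplitudes_by_sensor, sensors=CLUSTER):
    """Average the amplitude time series across the occipital sensor cluster."""
    series = [amplitudes_by_sensor[s] for s in sensors]
    return [mean(values) for values in zip(*series)]


def window_means(time_series, windows=WINDOWS_MS):
    """Mean amplitude per time window (boundaries treated as inclusive)."""
    return {
        name: mean(time_series[ms_to_sample(start):ms_to_sample(end) + 1])
        for name, (start, end) in windows.items()
    }


# Synthetic data: every sensor's "amplitude" equals the post-stimulus time
# in ms, so each window mean should equal the window's midpoint.
n_samples = (PRE_MS + 3600) * FS // 1000
ramp = [i * 1000 // FS - PRE_MS for i in range(n_samples)]
fake_epoch = {sensor: ramp for sensor in CLUSTER}
result = window_means(cluster_average(fake_epoch))
print(result)
```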

Statistical analysis

Mean ssVEP amplitudes (100–3000 ms) were analyzed by repeated-measures ANOVAs containing the between-subject factor group (LSA vs. HSA), and the within-subject factors tagged stimulus (face vs. Gabor patch) and underlaid facial expression (angry vs. neutral vs. happy). To follow the time course of cortical activation more closely, an additional repeated-measures ANOVA was conducted with the same between-subject factor group, the two within-subject factors tagged stimulus and facial expression, and the additional within-subject factor time (100–700 ms, 800–1400 ms, and 1700–2300 ms). Significant effects were followed up by planned ANOVAs and simple planned contrast analyses.

As an additional analysis aiming to directly assess neural competition between the overlapping stimuli, we calculated indices reflecting the relative visual cortical activity evoked by a given face, relative to the concurrent Gabor patch. To this end, individual mean ssVEP amplitudes (100–3000 ms) in response to each face and Gabor patch were T-transformed across the three expression conditions (by calculating the z-transform, then multiplying by 10, and adding a constant of 50), for each participant. This transform results in all positive, standardized values adding convenience for illustration and interpretation. Competition indices were then computed by dividing the T-transformed ssVEP amplitudes in response to the face by the sum of the T-transformed amplitudes in both streams: T(face)/[T(face)+T(Gabor)]. Consequently, a ratio above 0.5 indicates a bias in the ssVEP signal towards the face stream, whereas a ratio below 0.5 indicates a bias in the signal towards the superimposed Gabor stream and associated detection task. Competition indices were analyzed by means of repeated-measures ANOVA with the between-subject factor group (LSA vs. HSA), and the within-subject factor facial expression (angry vs. neutral vs. happy). A significance level of .05 (two-tailed) was used for all analyses. The Greenhouse-Geisser correction for violation of sphericity was used as necessary and the uncorrected degrees of freedom, the corrected p-values, the Greenhouse-Geisser-ε and the partial η2 (ηp2) are reported (Picton et al., 2000).
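A minimal sketch of the competition index for a single participant is given below. It assumes that the T-transform is applied separately to the face and Gabor amplitudes across the three expression conditions and that the sample standard deviation is used for the z-transform; neither detail is stated explicitly in the text. The amplitude values are made up purely for illustration.

```python
from statistics import mean, stdev

EXPRESSIONS = ("angry", "neutral", "happy")


def t_transform(values):
    """z-transform across conditions, then rescale to mean 50 and SD 10."""
    mu, sd = mean(values), stdev(values)  # sample SD is an assumption
    return [50 + 10 * (v - mu) / sd for v in values]


def competition_indices(face_amps, gabor_amps):
    """T(face) / [T(face) + T(Gabor)] for each facial expression."""
    t_face = t_transform(face_amps)
    t_gabor = t_transform(gabor_amps)
    return {
        expr: f / (f + g)
        for expr, f, g in zip(EXPRESSIONS, t_face, t_gabor)
    }


# Hypothetical mean ssVEP amplitudes (arbitrary units) for one participant,
# ordered as angry, neutral, happy.
face_amps = [0.60, 0.50, 0.52]
gabor_amps = [0.70, 0.82, 0.80]
indices = competition_indices(face_amps, gabor_amps)
print({expr: round(value, 3) for expr, value in indices.items()})
```

In this invented example the index for angry faces exceeds 0.5, indicating a bias toward the face stream, whereas the indices for neutral and happy faces fall below 0.5, indicating a bias toward the Gabor stream.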

Response data were averaged for hits (the correct number of detected changes, i.e., 1 or 2), misses, correct rejections (detecting 0 changes when 0 changes were shown), and false alarms (reporting changes when no changes were shown), and compared for group differences with t-tests. SAM ratings were averaged per facial expression and submitted to separate ANOVAs for valence and arousal ratings, containing the between-subject factor Group (LSA vs. HSA), and the within-subject factor facial expression (angry vs. neutral vs. happy).

Results

Steady-state VEPs

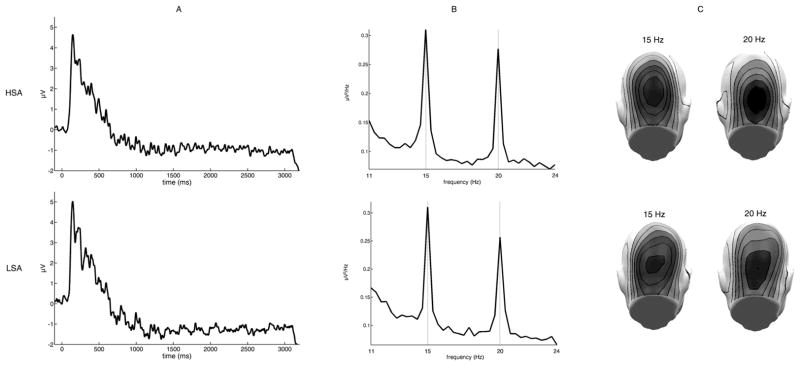

Facial stimuli, as well as the superimposed Gabor task stream, reliably evoked 15 and 20 Hz oscillations. For example, as shown in Figure 2A, the superimposed Gabor patch reliably evoked steady-state responses, as can be seen from the averaged raw ssVEP and its corresponding frequency power spectra obtained by an FFT analysis applied to the EEG data for a time window from 200–3000 ms after stimulus onset. Clear peaks of spectral power were observed for the two driving frequencies, most prominent over occipital sensors (Figure 2B and C).

Figure 2.

Steady-state visual evoked potentials (ssVEP), frequency power spectra and corresponding scalp topographies per group (HSA = high socially anxious, LSA = low socially anxious): A) ssVEP for a representative occipital electrode (Oz), averaged across all conditions, containing both driving frequencies. B) Frequency power spectra as derived by FFT for Oz. Clear peaks of the driving frequencies 15 Hz and 20 Hz are detected. C) The mean scalp topographies of both frequencies show clear medial posterior activity over visual cortical areas.

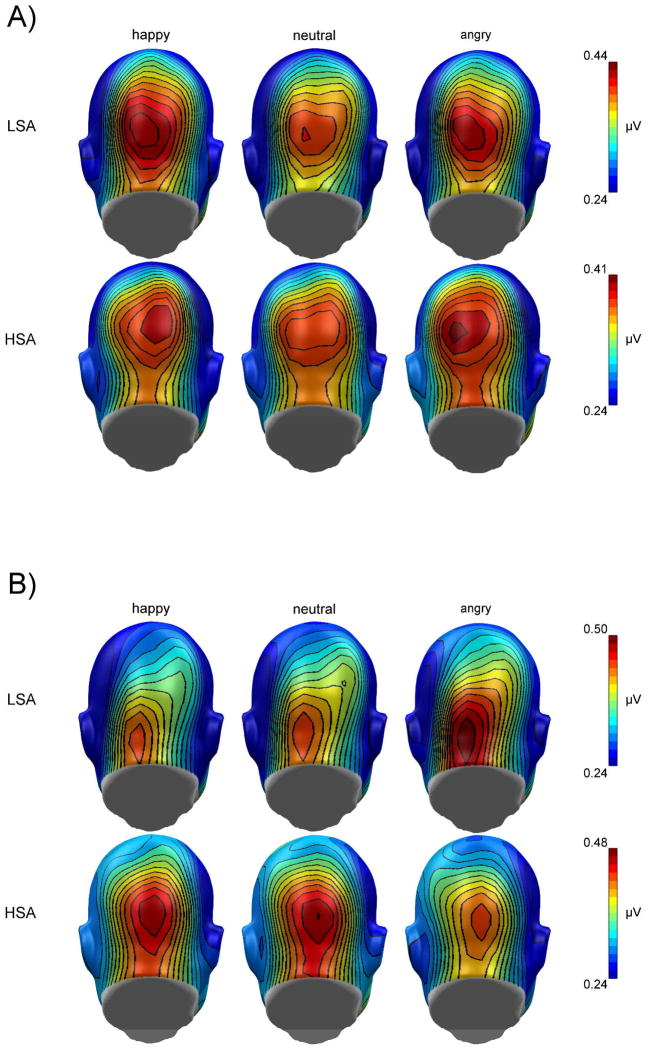

Overall, the mean amplitudes (100–3000 ms) of the ssVEPs were larger for the Gabor patch task stream compared to underlaid facial expressions, F(1,32) = 4.72, p = .037, ηp2 = .13. Furthermore, the ssVEP amplitudes to Gabor patches and faces were differentially modulated by facial expressions in HSA compared to LSA individuals, F(2,64) = 4.75, p = .012, ηp2 = .13. The topographies for the mean ssVEP amplitudes for HSA and LSA by facial expressions and tagged stimulus (Gabor patch vs. face) are given in Figure 3A and B.

Figure 3.

Grand mean topographical distribution of the ssVEP amplitudes (mapping based on spherical spline interpolation) in response to angry, neutral, and happy facial expressions (A) and the Gabor patch stream overlaid on angry, neutral, and happy facial expressions (B), shown separately for high socially anxious (HSA) and low socially anxious (LSA) participants. Grand means are averaged across a time window between 100 and 3000 ms after stimulus onset. Note that the scales used for the two groups differ.

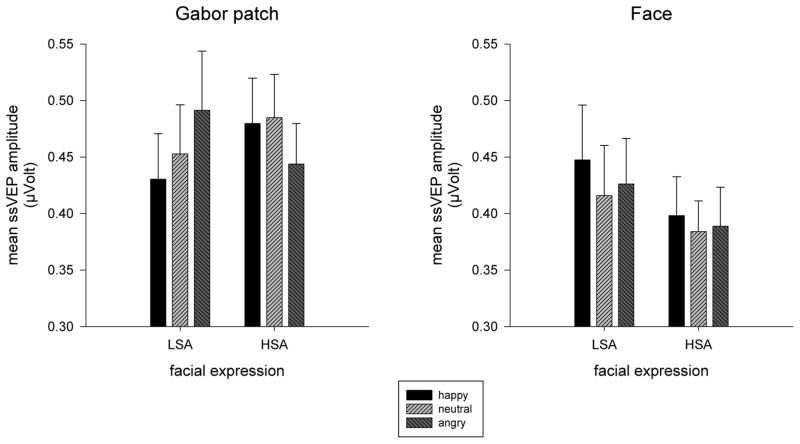

In order to follow up the three-way interaction of tagged stimulus x facial expression x group, repeated-measures ANOVAs were conducted for Gabor patches and faces separately. Irrespective of whether the underlaid facial expression was happy, neutral, or angry, similar ssVEP amplitudes were elicited across groups in response to faces (Figure 4, right panel). In contrast, the ssVEP amplitudes to the Gabor patches superimposed on these faces were modulated by expression type in a pattern that differed between groups, F(2,64) = 8.07, p = .001, ηp2 = .20 (Figure 4, left panel). In LSA, the underlaid expression modulated ssVEP amplitude to the Gabor patch, F(2,32) = 4.76, p = .025, GG-ε = .77, ηp2 = .29, owing foremost to amplitude reduction when the Gabor patches were competing with happy relative to angry faces, F(1,16) = 14.84, p = .001, ηp2 = .48. Although still reliably modulated in HSA, F(2,32) = 3.72, p = .035, ηp2 = .19, the pattern revealed a very different perceptual bias: the amplitudes elicited by the Gabor patch were attenuated when there was an angry face present compared to either happy or neutral competitors, F(1,16) = 5.88, p = .028, ηp2 = .27, and F(1,16) = 4.41, p = .052, ηp2 = .22, respectively. Essentially, whereas LSA showed reduced ssVEP amplitudes to the detection task amidst happy facial displays, HSA showed an opposing attenuation amidst threatening displays.

Figure 4.

Mean ssVEP amplitudes (100 – 3000 ms) and SEM elicited by faces (right panel) and Gabor patches (left panel) in low socially (LSA) and high socially anxious (HSA) participants. No differences were found for faces. The amplitudes elicited by the Gabor patch were significantly attenuated when there was an angry face present compared to either happy or neutral competitors in HSA, whereas in LSA, amplitudes were attenuated when there was a happy face present compared to an angry face.

The analysis of the time course of the cortical responses to the face and the Gabor stream did not reveal any significant differences between groups across time. Parallel to the ANOVA for the whole viewing period the mean amplitudes of the ssVEPs were larger for the Gabor patch task stream compared to underlaid facial expressions, F(1,32) = 6.15, p = .019, ηp2 = .16. The amplitude of the ssVEP differed across the three time windows, F(1,16) = 72.34, GG-ε = .77, p < .001, ηp2 = .69, which was differentially expressed for faces compared to Gabor patch stimuli, F(2,64) = 5.73, GG-ε = .85, p = .008, ηp2 = .15. Again, the significant Facial expression x Group x Tagged stimulus interaction emerged, F(2,64) = 3.88, p = .026, ηp2 = .11.

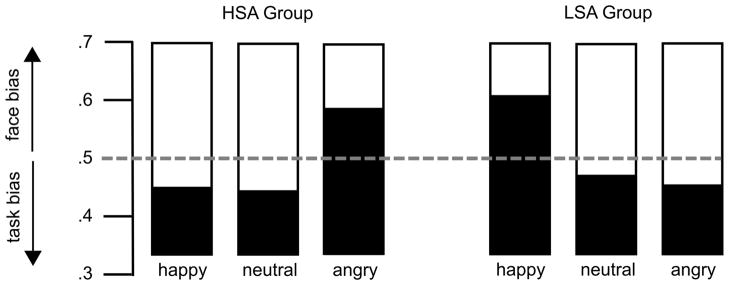

Competition analysis

The analysis of the competition indices (Figure 5), which reflect the amount of signal evoked by the face stimulus relative to the overall signal evoked by Gabor patch and face, revealed a strong Group x Facial expression interaction, F(2,64) = 5.72, p = .005, ηp2 = .15. Separate ANOVAs for each group confirmed the modulation of the competition index in both LSA, F(2,32) = 3.68, p = .037, ηp2 = .19, and HSA, F(2,32) = 3.42, p = .045, ηp2 = .18. These effects were due to a greater competition index for happy compared to neutral facial expressions in LSA, t(16) = 3.39, p = .004, and larger competition indices for angry compared to happy and neutral facial expressions in HSA, t(16) = 2.2, p = .045, and t(16) = 2.18, p = .042, respectively. Taken together, the competition analysis revealed that in HSA angry facial expressions produced the largest cost effect on the processing of the task-relevant target stream, whereas in LSA happy facial expressions were the strongest competitors.

Figure 5.

Competition index for the three facial expressions (angry, neutral, happy), shown separately for high socially anxious (HSA) and low socially anxious (LSA) participants. This index reflects the normalized relative difference between competing stimuli shown at the same time, with values greater than 0.5 indicating a relative bias towards the face stream and values smaller than 0.5 reflecting bias towards the Gabor patch.

Change detection performance

Overall, no group differences were observed in task performance. Furthermore, the facial expression of the competitor stimulus did not affect task performance. Mean task performance parameters are given in Table 1.

Table 1.

Means and standard deviations (SD) of task performance parameters (false alarms, correct rejections, misses, and hits) by trial type in low (LSA) and high socially anxious (HSA) participants.

| | LSA M | LSA SD | HSA M | HSA SD |
|---|---|---|---|---|
| False alarms (0 change trials) | 18.4% | 21.8% | 17.7% | 20.8% |
| Correct rejections (0 change trials) | 81.0% | 23.9% | 81.5% | 21.1% |
| Misses (1 change trials) | 23.5% | 25.7% | 25.9% | 19.7% |
| Misses (2 change trials) | 25.7% | 25.4% | 26.0% | 17.3% |
| Hits (1 change trials) | 67.5% | 29.3% | 65.6% | 23.3% |
| Hits (2 change trials) | 51.6% | 27.8% | 44.0% | 20.8% |

To compare sensitivity and bias of task performance between groups, the discriminability index d′ and the response criterion c (bias) were computed. The groups did not differ in either parameter. Overall, d′ was 2.39 for one target and 1.75 for two targets in LSA, and 2.31 and 1.44, respectively, in HSA, suggesting that participants performed well in an overall challenging task. The response criterion c was .69 for one target and .77 for two targets in LSA, and .76 and .90, respectively, in HSA, indicating that participants showed a bias toward reporting no change.
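For readers unfamiliar with these indices, the following minimal sketch uses the standard equal-variance signal detection formulas, d′ = z(H) − z(FA) and c = −[z(H) + z(FA)]/2. The text does not state which formulas or corrections for extreme rates were used, so this is an assumption. The input rates are illustrative and are not meant to reproduce the reported values.

```python
from statistics import NormalDist


def dprime_and_criterion(hit_rate, false_alarm_rate):
    """Return (d', c) under the equal-variance Gaussian model.

    Rates of exactly 0 or 1 have no finite z-score; how such cases were
    handled in the study is not stated, so they are rejected here.
    """
    if not (0 < hit_rate < 1 and 0 < false_alarm_rate < 1):
        raise ValueError("rates must lie strictly between 0 and 1")
    z = NormalDist().inv_cdf
    z_hit, z_fa = z(hit_rate), z(false_alarm_rate)
    d_prime = z_hit - z_fa
    criterion = -0.5 * (z_hit + z_fa)
    return d_prime, criterion


# Illustrative rates only (not taken from the study's data).
d_prime, criterion = dprime_and_criterion(0.70, 0.10)
print(round(d_prime, 2), round(criterion, 2))
```

Under this convention, a positive criterion corresponds to a conservative response tendency, that is, a bias toward reporting no change.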

SAM ratings

Overall, ratings of emotional arousal varied as a function of facial expression, F(2,64) = 13.07, p < .001, ηp2 = .29. Post-hoc t tests revealed that angry (M = 5.07, SD = 1.45) as well as happy faces (M = 4.60, SD = 1.71) were rated as more arousing than neutral ones (M = 3.87, SD = 1.23), t(33) = 5.17, p < .001, and t(33) = 3.86, p = .001, whereas angry and happy facial expressions did not differ, t(33) = 1.67, p = .11. Interestingly, HSA rated facial stimuli as more arousing compared to LSA, F(1,32) = 4.38, p = .044, ηp2 = .12, M = 4.94, SD = 0.84, and M = 4.09, SD = 1.45, respectively.

Similar to the findings for subjective arousal, pleasantness/unpleasantness ratings varied with facial expression, F(2,64) = 129.74, GG-ε = .64, p < .001, ηp2 = .80. Planned contrasts indicated that happy faces (M = 3.34, SD = 0.87) were rated as more pleasant compared to neutral (M = 5.50, SD = 0.35) and far more so than angry faces (M = 6.40, SD = 0.77), t(33) = 13.39, p < .001, and t(33) = 12.03, p < .001, respectively. Furthermore, angry faces were rated as more unpleasant than neutral faces, t(33) = 6.28, p < .001. No differences emerged between groups.
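The partial eta squared values reported in this section can be checked against the corresponding F statistics and degrees of freedom using the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The following short sketch applies that identity to the rating effects reported above. The function name is illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values and degrees of freedom as reported for the SAM ratings.
reported = [
    ("arousal, facial expression", 13.07, 2, 64),
    ("arousal, group", 4.38, 1, 32),
    ("valence, facial expression", 129.74, 2, 64),
]
for label, f_value, df_effect, df_error in reported:
    print(f"{label}: {partial_eta_squared(f_value, df_effect, df_error):.2f}")
```

Rounded to two decimals, the three results are .29, .12, and .80, in agreement with the reported effect sizes.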

Discussion

This study aimed to examine the cost effects of fear-relevant stimuli (threatening faces) relative to a challenging change-detection task in social anxiety. Frequency tagging of ssVEPs was used to discriminate visual cortical responses to two simultaneously visible, overlapping stimuli: A Gabor patch, changes of which represented the target events, and an underlaid face distractor showing happy, neutral, or angry expressions. With regard to ssVEP amplitudes, a prominent competition effect of threatening faces was observed only in high social anxiety: When the irrelevant distractor was an angry face, the perceptual response to the competing Gabor grating was reliably diminished, whereas the response to the angry face stimulus itself was relatively enhanced. This bias for angry faces as a function of social anxiety was corroborated by the analysis of competition indices, which reflect the proportion of the face-evoked signal relative to the overall signal. In contrast, among individuals low in social anxiety, a perceptual preference for happy faces emerged. Behaviorally, no effects of the task-irrelevant facial expressions were observed, and high and low anxious participants performed equally well in the change detection task. We conclude that among individuals anxious about interpersonal interaction, threatening faces interfere with concurrent attention allocation to a primary task, specifically when both stimuli occupy the same location within the visual field. Importantly, this competition in lower-tier visual cortex is not discernible at the level of behavioral performance.

The observed competition effects are in line with the assumption that emotional stimuli have competitive advantages due to their intrinsic stimulus significance, which has been demonstrated in a number of studies using a wide range of experimental paradigms (e.g., Anderson & Phelps, 2001; Keil & Ihssen, 2004; Öhman, Lundqvist, & Esteves, 2001; Öhman & Mineka, 2001). In contrast to previous studies, in which the competition effect of emotional pictures has been demonstrated to be early, but short-lasting (between 500–1000 ms after stimulus onset) in the processing stream (Hindi Attar, Andersen, & Müller, 2010; Müller et al., 2008), the present study found that attentional preference emerged early and was maintained throughout the viewing period (100–3000 ms). Thus, involuntary perceptual bias to threatening faces in social anxiety seems to appear rapidly and persist for at least three seconds if the threat stimulus remains visible. This temporal pattern is consistent with notions regarding impaired attention disengagement in anxiety (Moriya & Tanno, 2011). It is also in line with recent findings that social anxiety is associated with sustained preferential processing for threatening faces in a spatial competition paradigm (Wieser et al., 2011). As an important difference, this latter study did not uncover any cost effects during sustained processing of threatening faces, even in high socially anxious participants. These authors interpreted the lack of competition/cost effects as reflecting additional availability of resources in the presence of threat. Basic research in the cognitive neuroscience of attention indicates that this is plausible for situations in which competition is implemented across spatially separated stimuli, because observers can allocate spatial selective attention voluntarily across noncontiguous zones of the visual field (Müller et al., 2003).
Such non-contiguous zones (e.g., the left and right visual hemi-fields) are represented in different areas within visual cortex, thus facilitating concurrent, more independent processing. Models of biased competition predict that competitive interactions are strongest when stimuli compete for processing resources in the same cortical areas (Desimone & Duncan, 1995). One goal of the current study was therefore to maximize competition by creating complete spatial overlap between the task-relevant Gabor patch and the task-irrelevant face. Recent efforts to estimate the cortical sources of the face-evoked and Gabor-evoked ssVEP signal elicited by this paradigm suggest that potential generators of both signals are located in the same lower-tier visual areas (calcarine fissure) (Wieser & Keil, 2011). The overlap of neural generators thus maximizes the likelihood of competition amidst concurrent processing. An opposing bias was also revealed in the non-symptomatic group: only happy facial expressions reliably diverted attentional resources from the foreground detection task, an effect not previously observed with single foveal faces (McTeague et al., 2011) or with concurrent but spatially separated facial cues (Wieser et al., 2011).

Interestingly, the competition effects observed on the electrocortical level were not paralleled by similar changes on the behavioral level, which is at odds with recent work showing strong competition effects both in electrocortical and behavioral measures when emotional scenes were used as competitors (Hindi Attar, Andersen et al., 2010; Hindi Attar, Müller, Andersen, Büchel, & Rose, 2010; Müller et al., 2008). The reason for the dissociation between cortical and behavioral level in the current study remains unclear. The withdrawal of attentional resources in response to a secondary threatening face may not be strong enough for the participant to fail in the primary change detection task. Although this account is speculative, a recent study also employing the ssVEP technique with a primary task superimposed on facial expressions yielded similar results: No cost effects of emotional faces on target detection performance, but slowing of reaction times to indicate change (Hindi Attar, Andersen et al., 2010). It is possible that differences in behavioral interference effects secondary to face versus affective picture distractors are due to the lower subjective arousal associated with facial expressions (Bradley, Codispoti, Cuthbert, & Lang, 2001; Britton, Taylor, Sudheimer, & Liberzon, 2006), even among those apprehensive of social cues (McTeague et al., 2011). Additionally, such discordance between physiology and behavior is consistent with reports that although socially anxious individuals gauge their performance in social contexts as inferior to others and indicate greater susceptibility to distraction owing to interpersonal cues and concerns, no such deficits are apparent to observers (Bögels & Mansell, 2004; Rapee & Lim, 1992; Wallace & Alden, 1997). Another possible reason for the invariance of task performance with respect to facial distractors is the limited sensitivity of post-trial report to small changes in attentional control as suggested by the ssVEP data.
For instance, it is conceivable that the distractors affected the speed of the target detection process, but not accuracy. Thus, any cost effects related to facial expression would not be reflected in the way behavioral performance was recorded in the present study. Potentially contributing to this problem, our task was very demanding (about 66% hit rate in both groups), and load was high (Lavie, 2005), yielding a low and variable performance situation in which small cortical differences are not linearly translated into accuracy measures of behavior.

A substantial difference between the current study and earlier work is that the ssVEP technique allowed us to simultaneously assess both the facilitated processing of the task-irrelevant facial expressions and the reduced processing of the task-relevant Gabor patches via frequency tagging. In contrast, earlier studies focused on the neural and/or behavioral response associated with the primary task, whereas the processing of emotional distractors was inferred by the extent to which these distractors interfered with the response to the primary task stimulus (Hindi Attar, Andersen et al., 2010; Müller et al., 2008).
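The logic of frequency tagging can be illustrated with a toy example. The sketch below builds a synthetic signal containing two overlapping oscillations at 15 and 20 Hz (the two tagging frequencies mentioned in the footnote) and recovers the amplitude of each with a single-frequency discrete Fourier transform. The sampling rate, epoch length, amplitudes, and function name are hypothetical, and the snippet is not the analysis pipeline of the present study.

```python
import cmath
import math


def amplitude_at(signal: list[float], sample_rate: float, frequency: float) -> float:
    """Amplitude of one frequency component via a single-bin discrete Fourier transform."""
    n_samples = len(signal)
    projection = sum(
        value * cmath.exp(-2j * math.pi * frequency * index / sample_rate)
        for index, value in enumerate(signal)
    )
    return 2 * abs(projection) / n_samples


# Hypothetical recording parameters: 500 Hz sampling, 1 s epoch.
sample_rate = 500.0
n_samples = 500
times = [index / sample_rate for index in range(n_samples)]

# Two superimposed streams, tagged at 15 Hz and 20 Hz, with assumed amplitudes.
signal = [
    1.0 * math.sin(2 * math.pi * 15.0 * t) + 0.5 * math.sin(2 * math.pi * 20.0 * t)
    for t in times
]

for frequency in (15.0, 20.0):
    print(f"{frequency:.0f} Hz amplitude: {amplitude_at(signal, sample_rate, frequency):.3f}")
```

Because each stream drives the response at its own frequency, the two amplitudes can be read out separately even though the stimuli occupy the same location, which is the property that permits face-evoked and Gabor-evoked responses to be compared within the same trial.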

With regard to the time course of attentional allocation in social anxiety, the present study corroborates earlier findings of preferential and sustained processing of social threat cues (McTeague et al., 2011; Wieser et al., 2011). As observed before, no evidence for perceptual avoidance of threat cues as put forward by the hypervigilance-avoidance theory (Mogg et al., 1997) was found. Even under high task demands, which might facilitate perceptual avoidance of the threatening face, HSA show involuntary, sustained attentional capture by phobia-relevant stimuli along with pronounced difficulty disengaging attention (Fox, Russo, & Dutton, 2002; Georgiou et al., 2005; Schutter, de Haan, & van Honk, 2004). The current findings also support the notion that social anxiety is associated with a continuous monitoring of social threat, which is implicated as an important factor in maintaining the symptoms of the disorder (Heimberg & Becker, 2002).

Potential candidate brain structures underlying the competition effect of threatening facial expressions may comprise sub-cortical as well as cortical structures: Limbic structures such as the amygdala may bias visual cortical processing bottom-up due to the intrinsic salience of the phobic cue for HSA (Sabatinelli, Lang, Bradley, Costa, & Keil, 2009). Deficits in the top-down control of attention in socially anxious individuals (Wieser, Pauli, & Mühlberger, 2009) may indicate dysregulation of attentional top-down bias signals from fronto-parietal cortical areas. Indeed, hemodynamic imaging studies in social anxiety have revealed exaggerated activation of limbic circuitry, particularly the amygdalae, in response to facial expressions (for a review, see Freitas-Ferrari, Hallak, & Trzesniak, 2010). Concurrent hyper-reactivity has also been observed in the extrastriate visual cortex (Evans, Wright, Wedig, Gold, Pollack, & Rauch, 2008; Goldin, Manber, Hakimi, Canli, & Gross, 2009; Pujol et al., 2009; Straube, Kolassa, Glauer, Mentzel, & Miltner, 2004; Straube, Mentzel, & Miltner, 2005), and increased bidirectional functional connectivity between bilateral amygdala and visual cortices was detected in the resting-state functional data of social phobia patients (Liao et al., 2010). In support of a dysfunction of fronto-amygdalar inhibition in anxiety disorders, reduced resting-state functional connectivity between left amygdala and medial orbitofrontal cortex as well as posterior cingulate cortex/precuneus in patients with social anxiety disorder as compared to healthy controls has been recently reported (Hahn et al., 2011). Thus, imbalance of fronto-amygdalar networks in social anxiety could result in the observed competition effects at early sensory levels.

In sum, the present results together with earlier work support the hypothesis that threatening facial expressions are preferentially processed in socially anxious individuals and involuntarily capture and divert attentional resources from simultaneous demands. Even under high task demands, prioritized processing is rapidly instantiated and then maintained throughout the entire presentation of an angry face, concurrently diminishing the electrocortical response to a non-affective detection task – despite the prominence of the latter in the foreground. Importantly, in contrast to earlier work suggesting the capability to enhance overall visual attentional allocation to spatially segregated facial cues, the current study underscores that affective facial expressions compete for available resources when stimuli are perceptually overlaid and presumably recruit the same population of neurons. Notably, the valence of facial cues interacts with social anxiety – happy expressions attract visual attention in non-symptomatic individuals whereas significant interpersonal apprehension is associated with bias to angry displays. Future work may capitalize on these visuo-cortical trade-off effects to objectively quantify therapeutic efforts to retrain maladaptive biases in social anxiety and thus remediate attentional processes presumably maintaining hallmark functional impairment and distress.

Acknowledgments

This research was supported by a grant from NIMH (R01 MH084932 - 02) to AK.

The authors would like to thank Kayla Gurak and Michael Gray for help in data acquisition.

Footnotes

In order to exclude the possibility that the different tagging frequencies (15 and 20 Hz) affected the modulation of the ssVEPs, we also conducted the omnibus ANOVA on the entire time window, including an additional within-subject factor of carrier frequency (15 vs. 20 Hz). This factor had no impact on the experimental effects or interactions reported in this manuscript.

References

- Anderson AK, Phelps EA. Lesions of the human amygdala impair enhanced perception of emotionally salient events. Nature. 2001;411(6835):305–309. doi: 10.1038/35077083.

- Bar-Haim Y, Lamy D, Pergamin L, Bakermans-Kranenburg MJ, van Ijzendoorn MH. Threat-related attentional bias in anxious and nonanxious individuals: A meta-analytic study. Psychological Bulletin. 2007;133(1):1–24. doi: 10.1037/0033-2909.133.1.1.

- Bertrand O, Bohorquez J, Pernier J. Time-frequency digital filtering based on an invertible wavelet transform: an application to evoked potentials. Biomedical Engineering, IEEE Transactions on. 1994;41(1):77–88. doi: 10.1109/10.277274.

- Bögels SM, Mansell W. Attention processes in the maintenance and treatment of social phobia: Hypervigilance, avoidance and self-focused attention. Clinical Psychology Review. 2004;24(7):827–856. doi: 10.1016/j.cpr.2004.06.005.

- Bradley MM, Codispoti M, Cuthbert BN, Lang PJ. Emotion and motivation I: defensive and appetitive reactions in picture processing. Emotion. 2001;1(3):276–298.

- Bradley MM, Lang PJ. Measuring emotion: The Self-Assessment Manikin and the semantic differential. Journal of Behavior Therapy and Experimental Psychiatry. 1994;25:49–59. doi: 10.1016/0005-7916(94)90063-9.

- Britton JC, Taylor SF, Sudheimer KD, Liberzon I. Facial expressions and complex IAPS pictures: Common and differential networks. Neuroimage. 2006;31(2):906–919. doi: 10.1016/j.neuroimage.2005.12.050.

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annual Review of Neuroscience. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- Di Russo F, Pitzalis S, Aprile T, Spitoni G, Patria F, Stella A, et al. Spatiotemporal analysis of the cortical sources of the steady-state visual evoked potential. Human Brain Mapping. 2007;28(4):323–334. doi: 10.1002/hbm.20276.

- Evans KC, Wright CI, Wedig MM, Gold AL, Pollack MH, Rauch SL. A functional MRI study of amygdala responses to angry schematic faces in social anxiety disorder. Depression and Anxiety. 2008;25(6):496–505. doi: 10.1002/da.20347.

- Fox E, Russo R, Dutton K. Attentional bias for threat: Evidence for delayed disengagement from emotional faces. Cognition & Emotion. 2002;16(3):355–379. doi: 10.1080/02699930143000527.

- Freitas-Ferrari MC, Hallak JEC, Trzesniak C. Neuroimaging in social anxiety disorder: A systematic review of the literature. Progress in Neuro-Psychopharmacology and Biological Psychiatry. 2010;34(4):565–580. doi: 10.1016/j.pnpbp.2010.02.028.

- Fresco DM, Coles ME, Heimberg RG, Liebowitz MR, Hami S, Stein MB, et al. The Liebowitz Social Anxiety Scale: A comparison of the psychometric properties of self-report and clinician-administered formats. Psychological Medicine. 2001;31(6):1025–1035. doi: 10.1017/s0033291701004056.

- Garner M, Mogg K, Bradley BP. Orienting and maintenance of gaze to facial expressions in social anxiety. Journal of Abnormal Psychology. 2006;115(4):760–770. doi: 10.1037/0021-843X.115.4.760.

- Georgiou GA, Bleakley C, Hayward J, Russo R, Dutton K, Eltiti S, et al. Focusing on fear: Attentional disengagement from emotional faces. Visual Cognition. 2005;12(1):145–158. doi: 10.1080/13506280444000076.

- Goldin PR, Manber T, Hakimi S, Canli T, Gross JJ. Neural bases of social anxiety disorder: emotional reactivity and cognitive regulation during social and physical threat. Archives of General Psychiatry. 2009;66(2):170–180. doi: 10.1001/archgenpsychiatry.2008.525.

- Hahn A, Stein P, Windischberger C, Weissenbacher A, Spindelegger C, Moser E, et al. Reduced resting-state functional connectivity between amygdala and orbitofrontal cortex in social anxiety disorder. Neuroimage. 2011;56:881–889. doi: 10.1016/j.neuroimage.2011.02.064.

- Hakamata Y, Lissek S, Bar-Haim Y, Britton JC, Fox NA, Leibenluft E, et al. Attention bias modification treatment: A meta-analysis toward the establishment of novel treatment for anxiety. Biological Psychiatry. 2010;68:982–990. doi: 10.1016/j.biopsych.2010.07.021.

- Heimberg RG, Becker RE. Cognitive-behavioral group therapy for social phobia: Basic mechanisms and clinical strategies. New York: The Guilford Press; 2002.

- Hindi Attar C, Andersen SK, Müller MM. Time course of affective bias in visual attention: Convergent evidence from steady-state visual evoked potentials and behavioral data. Neuroimage. 2010;53(4):1326–1333. doi: 10.1016/j.neuroimage.2010.06.074.

- Hindi Attar C, Müller MM, Andersen SK, Büchel C, Rose M. Emotional processing in a salient motion context: integration of motion and emotion in both V5/hMT+ and the amygdala. Journal of Neuroscience. 2010;30(15):5204–5210. doi: 10.1523/JNEUROSCI.5029-09.2010.

- Horley K, Williams LM, Gonsalvez C, Gordon E. Social phobics do not see eye to eye: a visual scanpath study of emotional expression processing. Journal of Anxiety Disorders. 2003;17(1):33–44. doi: 10.1016/s0887-6185(02)00180-9.

- Horley K, Williams LM, Gonsalvez C, Gordon E. Face to face: visual scanpath evidence for abnormal processing of facial expressions in social phobia. Psychiatry Research. 2004;127(1–2):43–53. doi: 10.1016/j.psychres.2004.02.016.

- Junghöfer M, Elbert T, Tucker DM, Rockstroh B. Statistical control of artifacts in dense array EEG/MEG studies. Psychophysiology. 2000;37(4):523–532.

- Keil A, Gruber T, Müller MM. Functional correlates of macroscopic high-frequency brain activity in the human visual system. Neuroscience & Biobehavioral Reviews. 2001;25(6):527–534. doi: 10.1016/s0149-7634(01)00031-8.

- Keil A, Gruber T, Müller MM, Moratti S, Stolarova M, Bradley MM, et al. Early modulation of visual perception by emotional arousal: evidence from steady-state visual evoked brain potentials. Cognitive, Affective, & Behavioral Neuroscience. 2003;3(3):195–206. doi: 10.3758/cabn.3.3.195.

- Keil A, Ihssen N. Identification facilitation for emotionally arousing verbs during the attentional blink. Emotion. 2004;4(1):23. doi: 10.1037/1528-3542.4.1.23.

- Klumpp H, Amir N. Examination of vigilance and disengagement of threat in social anxiety with a probe detection task. Anxiety, Stress and Coping. 2009;22(3):283–296. doi: 10.1080/10615800802449602.

- Kolassa IT, Kolassa S, Bergmann S, Lauche R, Dilger S, Miltner WHR, et al. Interpretive bias in social phobia: An ERP study with morphed emotional schematic faces. Cognition & Emotion. 2009;23(1):69–95.

- Kolassa IT, Kolassa S, Musial F, Miltner WHR. Event-related potentials to schematic faces in social phobia. Cognition and Emotion. 2007;21(8):1721–1744.

- Kolassa IT, Miltner WHR. Psychophysiological correlates of face processing in social phobia. Brain Research. 2006;1118(1):130–141. doi: 10.1016/j.brainres.2006.08.019.

- Lavie N. Distracted and confused?: Selective attention under load. Trends in Cognitive Sciences. 2005;9(2):75–82. doi: 10.1016/j.tics.2004.12.004.

- Liao W, Qiu C, Gentili C, Walter M, Pan Z, Ding J, et al. Altered Effective Connectivity Network of the Amygdala in Social Anxiety Disorder: A Resting-State fMRI Study. PLoS ONE. 2010;5(12):1115–1125. doi: 10.1371/journal.pone.0015238.

- Lundqvist D, Flykt A, Öhman A. Karolinska directed emotional faces (KDEF). Stockholm: Karolinska Institutet; 1998.

- MacLeod C, Mathews A, Tata P. Attentional bias in emotional disorders. Journal of Abnormal Psychology. 1986;95(1):15–20. doi: 10.1037//0021-843x.95.1.15.

- Mansell W, Clark DM, Ehlers A, Chen YP. Social anxiety and attention away from emotional faces. Cognition and Emotion. 1999;13(6):673–690.

- Mathews A, Mackintosh B. A cognitive model of selective processing in anxiety. Cognitive Therapy & Research. 1998;22(6):539–560.

- McTeague LM, Shumen JR, Wieser MJ, Lang PJ, Keil A. Social vision: Sustained perceptual enhancement of affective facial cues in social anxiety. Neuroimage. 2011;54:1615–1624. doi: 10.1016/j.neuroimage.2010.08.080.

- Mogg K, Bradley BP. A cognitive-motivational analysis of anxiety. Behaviour Research and Therapy. 1998;36(9):809–848. doi: 10.1016/s0005-7967(98)00063-1.

- Mogg K, Bradley BP. Selective orienting of attention to masked threat faces in social anxiety. Behaviour Research and Therapy. 2002;40(12):1403–1414. doi: 10.1016/s0005-7967(02)00017-7.

- Mogg K, Bradley BP, De Bono J, Painter M. Time course of attentional bias for threat information in non-clinical anxiety. Behaviour Research and Therapy. 1997;35(4):297–303. doi: 10.1016/s0005-7967(96)00109-x.

- Mogg K, Philippot P, Bradley BP. Selective attention to angry faces in clinical social phobia. Journal of Abnormal Psychology. 2004;113(1):160–165. doi: 10.1037/0021-843X.113.1.160.

- Moratti S, Keil A. Cortical activation during Pavlovian fear conditioning depends on heart rate response patterns: an MEG study. Cognitive Brain Research. 2005;25(2):459–471. doi: 10.1016/j.cogbrainres.2005.07.006.

- Moratti S, Keil A, Miller GA. Fear but not awareness predicts enhanced sensory processing in fear conditioning. Psychophysiology. 2006;43(2):216–226. doi: 10.1111/j.1464-8986.2006.00386.x.

- Moriya J, Tanno Y. The time course of attentional disengagement from angry faces in social anxiety. Journal of Behavior Therapy and Experimental Psychiatry. 2011;42(1):122–128. doi: 10.1016/j.jbtep.2010.08.001.

- Mühlberger A, Wieser MJ, Hermann MJ, Weyers P, Tröger C, Pauli P. Early cortical processing of natural and artificial emotional faces differs between lower and higher socially anxious persons. Journal of Neural Transmission. 2009;116(6):735–746. doi: 10.1007/s00702-008-0108-6.

- Müller MM, Andersen SK, Keil A. Time Course of Competition for Visual Processing Resources between Emotional Pictures and Foreground Task. Cerebral Cortex. 2008;18(8):1892–1899. doi: 10.1093/cercor/bhm215.

- Müller MM, Hillyard S. Concurrent recording of steady-state and transient event-related potentials as indices of visual-spatial selective attention. Clinical Neurophysiology. 2000;111(9):1544–1552. doi: 10.1016/s1388-2457(00)00371-0.

- Müller MM, Malinowski P, Gruber T, Hillyard SA. Sustained division of the attentional spotlight. Nature. 2003;424(6946):309–312. doi: 10.1038/nature01812.

- Müller MM, Teder W, Hillyard SA. Magnetoencephalographic recording of steady-state visual evoked cortical activity. Brain Topography. 1997;9(3):163–168. doi: 10.1007/BF01190385.

- Öhman A. The role of the amygdala in human fear: Automatic detection of threat. Psychoneuroendocrinology. 2005;30:953–958. doi: 10.1016/j.psyneuen.2005.03.019.

- Öhman A, Lundqvist D, Esteves F. The face in the crowd revisited: A threat advantage with schematic stimuli. Journal of Personality and Social Psychology. 2001;80:381–396. doi: 10.1037/0022-3514.80.3.381.

- Öhman A, Mineka S. Fears, phobias, and preparedness: toward an evolved module of fear and fear learning. Psychological Review. 2001;108(3):483–522. doi: 10.1037/0033-295x.108.3.483.

- Picton TW, Bentin S, Berg P, Donchin E, Hillyard SA, Johnson R, Jr, et al. Guidelines for using human event-related potentials to study cognition: recording standards and publication criteria. Psychophysiology. 2000;37(2):127–152.

- Pishyar R, Harris LM, Menzies RG. Attentional bias for words and faces in social anxiety. Anxiety, Stress, and Coping. 2004;17(1):23–36.

- Pujol J, Harrison BJ, Ortiz H, Deus J, Soriano-Mas C, Lopez-Sola M, et al. Influence of the fusiform gyrus on amygdala response to emotional faces in the non-clinical range of social anxiety. Psychological Medicine. 2009;39(07):1177–1187. doi: 10.1017/S003329170800500X.

- Rapee RM, Lim L. Discrepancy between self-and observer ratings of performance in social phobics. Journal of Abnormal Psychology. 1992;101(4):728. doi: 10.1037//0021-843x.101.4.728.

- Sabatinelli D, Lang PJ, Bradley MM, Costa VD, Keil A. The Timing of Emotional Discrimination in Human Amygdala and Ventral Visual Cortex. Journal of Neuroscience. 2009;29(47):14864–14868. doi: 10.1523/JNEUROSCI.3278-09.2009.

- Schultz LT, Heimberg RG. Attentional focus in social anxiety disorder: Potential for interactive processes. Clinical Psychology Review. 2008;28(7):1206–1221. doi: 10.1016/j.cpr.2008.04.003.

- Schutter DJLG, de Haan EHF, van Honk J. Functionally dissociated aspects in anterior and posterior electrocortical processing of facial threat. International Journal of Psychophysiology. 2004;53(1):29–36. doi: 10.1016/j.ijpsycho.2004.01.003.

- Sewell C, Palermo R, Atkinson C, McArthur G. Anxiety and the neural processing of threat in faces. Neuroreport. 2008;19:1339–1343. doi: 10.1097/WNR.0b013e32830baadf.

- Silberstein RB. Steady-state visually evoked potential topography during the Wisconsin card sorting test. Electroencephalography and Clinical Neurophysiology. 1995;96(1):24–35. doi: 10.1016/0013-4694(94)00189-r.

- Straube T, Kolassa IT, Glauer M, Mentzel HJ, Miltner WH. Effect of task conditions on brain responses to threatening faces in social phobics: an event-related functional magnetic resonance imaging study. Biological Psychiatry. 2004;56(12):921–930. doi: 10.1016/j.biopsych.2004.09.024.

- Straube T, Mentzel HJ, Miltner WHR. Common and distinct brain activation to threat and safety signals in social phobia. Neuropsychobiology. 2005;52:163–168. doi: 10.1159/000087987.

- Wallace ST, Alden LE. Social phobia and positive social events: The price of success. Journal of Abnormal Psychology. 1997;106(3):416. doi: 10.1037//0021-843x.106.3.416.

- Wang J, Clementz BA, Keil A. The neural correlates of feature-based selective attention when viewing spatially and temporally overlapping images. Neuropsychologia. 2007;45(7):1393–1399. doi: 10.1016/j.neuropsychologia.2006.10.019.

- Wieser MJ, Keil A. Temporal Trade-Off Effects in Sustained Attention: Dynamics in Visual Cortex Predict the Target Detection Performance during Distraction. The Journal of Neuroscience. 2011;31(21):7784. doi: 10.1523/JNEUROSCI.5632-10.2011.

- Wieser MJ, McTeague LM, Keil A. Sustained Preferential Processing of Social Threat Cues: Bias without Competition? Journal of Cognitive Neuroscience. 2011;23(8):1973–1986. doi: 10.1162/jocn.2010.21566.

- Wieser MJ, Pauli P, Mühlberger A. Probing the attentional control theory in social anxiety: An emotional saccade task. Cognitive, Affective, & Behavioral Neuroscience. 2009;9(3):314–322. doi: 10.3758/CABN.9.3.314.

- Wieser MJ, Pauli P, Weyers P, Alpers GW, Mühlberger A. Fear of negative evaluation and the hypervigilance-avoidance hypothesis: An eye-tracking study. Journal of Neural Transmission. 2009;116:717–723. doi: 10.1007/s00702-008-0101-0.

- Yiend J. The effects of emotion on attention: A review of attentional processing of emotional information. Cognition & Emotion. 2010;24(1):3–47.