Abstract

Objectives

Coding clinical terms with the Systematized Nomenclature of Medicine, Clinical Terms (SNOMED CT) may require a high level of clinical domain knowledge when the terms are complex and polysemous, whereas simpler terms may require less. However, few studies have quantitatively shown the relationship between domain knowledge and coding ability. We therefore examined the relationship between the two.

Methods

We extracted diagnosis and operation names from the electronic medical records of a university hospital for 500 ophthalmology and 500 neurosurgery patients. The coding was performed by one ophthalmologist, one neurosurgeon, and one medical record technician, none of whom had experience with SNOMED coding; access to reference material during coding was not restricted. The coding results were compared with the coders' domain knowledge.

Results

A total of 705 and 576 diagnoses and 500 and 629 operation names from neurosurgery and ophthalmology, respectively, were enrolled. The physicians showed higher coding performance than the medical record technician in all domains, and each specialist physician showed the highest performance in the domains of their own department. All three coders showed statistically better coding rates for diagnoses than for operation names (p = 0.028).

Conclusions

SNOMED coding performance with clinical terms is strongly related to the level of domain knowledge and the complexity of the clinical terms. From the standpoint of coding performance, physicians who generate clinical data are potentially the best candidates to be excellent coders.

Keywords: Systematized Nomenclature of Medicine, Clinical Coding, Diagnosis, Operations, Knowledge

I. Introduction

Systematized Nomenclature of Medicine, Clinical Terms (SNOMED CT) is a comprehensive terminology system that provides clinical content and expressivity in the clinical field [1] and has been promoted as a key terminology standard by various standards organizations [2-4]. The size of the terminology, with over 350,000 concepts, increases the likelihood of finding a correct match [5].

To use SNOMED CT in clinical settings, it must be implemented in Electronic Health Record (EHR) systems, which is a promising way to build the infrastructure for health information functionalities. However, the complexity and polysemy of myriad clinical terms make accurate implementation of the standard terminology a challenge. Coding variation and ambiguity have been issues with SNOMED CT [3], and efforts are under way to reduce them. Qamar et al. [6] selected ambiguous data models and remodeled them to clarify the structure of the data; they reported improved mapping results and agreement among clinical experts. Patrick et al. [7] found moderate variation even when coders were limited to pre-coordinated terms, and claimed that clearer guidelines and structured data entry tools are needed to better manage coding variation.

Some researchers have attributed coding variation to the human side, that is, the coder. Variation may occur when a standard terminology is used by non-experts in terminology [7]. Chiang et al. [8] studied agreement among three physicians who used two SNOMED CT browsers for encoding, and found that physician training is required to promote coding reproducibility. The role of coding experts has also been highlighted in a previous article; however, even coding experts can code the same clinical terms differently in SNOMED CT, with little semantic agreement [4].

In this article, we evaluated the effect of the level of domain knowledge on matching SNOMED CT to clinical data. Although physicians are the main generators of clinical data, the importance of their role in the coding process is uncertain, since coding clinical terms is the field of medical record technicians. However, clinical data carry both a literal meaning and the clinician's intention, and that intention is best understood by the clinicians themselves, who have the most knowledge of the domain in which they generated the data. Post-coordination of clinical concepts requires a high level of domain knowledge to match the clinical data semantically. Accordingly, physicians' domain knowledge seems to play an important role in coding with a standard terminology. With SNOMED CT in particular, the physician's role may be indispensable, since it has a more granular and detailed concept system than any other terminology system. Nevertheless, there are few data that quantitatively show the relationship between domain knowledge and coding ability. We therefore compared the coding results of clinical experts and a non-clinical expert for operation names and diagnoses to reveal the effect of knowledge level on standard terminology coding.

II. Methods

1. Clinical Data

We collected diagnosis and operation name records from 500 ophthalmology and 500 neurosurgery patients. The patients were selected randomly, in order of the first letter of their names, from among patients who underwent an operation during admission to a university hospital from February to October 2010. We extracted the records of diagnosis and operation names from the discharge summaries in the EHR system. They were all free-text data written in English by the physicians and had not been modified by any medical record technician. At the hospital, the corresponding International Classification of Diseases (ICD) codes are stored in a different section of the discharge summary. If the discharge summary contained more than two records of diagnosis or operation names, at most two were selected from each patient. For neurosurgery, however, only one operation name record was allowed per patient, because operation name records in the neurosurgical department tend to consist of more than two operation names (e.g., pterional approach for clipping of right middle cerebral artery [MCA] aneurysm and external ventricular drainage [EVD]). Diagnosis and operation names that were not specific to the ophthalmology or neurosurgery department were not included. For example, hypertension and diabetes mellitus were excluded because the patients were not admitted to the ophthalmology or neurosurgery department mainly for these diseases. Thus, only diagnosis and operation names familiar to the ophthalmologist or the neurosurgeon were included. Abbreviations were expanded to their original terms before the coding process.

2. The Coders

An ophthalmologist, a neurosurgeon, and a medical record technician performed the SNOMED CT coding. None of the three coders had any prior experience of SNOMED CT coding with clinical data, but all had experience in coding diagnosis and operation names into ICD-10 codes. All coders were given instructions on the demo version of a SNOMED CT browser (CliniClue; The Clinical Information Consultancy, Newtown, UK). Books and internet access were allowed without limitation. The coders could look up the meaning of medical terms, medical knowledge, and operation methods on websites including the National Institutes of Health, Wikipedia, and Google. Only communication among the coders during the coding process was prohibited.

3. Parsing and Coding

The narrative text of each diagnosis and operation name was parsed into words. This was done to parse the text into discrete concepts by a uniform methodology. Multiple-word pre-coordinated terms were considered a single concept. For example, "anterior lumbar interbody fusion" was parsed into "lumbar interbody fusion" and "anterior". This process was monitored by a SNOMED expert. The three coders were instructed to find the best-matching SNOMED CT codes for each word or concept of the diagnosis and operation names. The coders were allowed to combine the provided words into concepts in order to find a matching SNOMED CT concept. The CliniClue browser was used to search for and verify coding results. We used the SNOMED CT data file released in July 2009.
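The parsing rule can be illustrated with a small sketch. In the study the parsing was done by people under expert supervision, so the function `parse_concepts` and the term list `KNOWN_TERMS` below are hypothetical and serve only to show how a multiple-word pre-coordinated term is kept as one concept while the remaining words stay separate.

```python
def parse_concepts(text, multiword_terms):
    """Split a narrative term into concepts, keeping known multi-word terms whole."""
    words = text.lower().split()
    # Try longer phrases first so the longest known term wins.
    phrases = sorted((t.lower().split() for t in multiword_terms),
                     key=len, reverse=True)
    used = [False] * len(words)
    concepts = []
    for phrase in phrases:
        n = len(phrase)
        for i in range(len(words) - n + 1):
            if words[i:i + n] == phrase and not any(used[i:i + n]):
                concepts.append(" ".join(phrase))
                for j in range(i, i + n):
                    used[j] = True
    # Words not covered by a multi-word term become single-word concepts.
    concepts.extend(w for w, u in zip(words, used) if not u)
    return concepts


# Hypothetical list of multi-word pre-coordinated terms (an assumption for this sketch).
KNOWN_TERMS = ["lumbar interbody fusion"]

print(parse_concepts("anterior lumbar interbody fusion", KNOWN_TERMS))
# ['lumbar interbody fusion', 'anterior']
```

The sketch only mirrors the worked example in the text; deciding which word groups count as pre-coordinated terms still requires a look-up in SNOMED CT and human judgement.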

4. Evaluation of Coding Results

The coding results were reviewed by all three coders together with a SNOMED expert. When the narrative text of a diagnosis or operation name matched exactly with one SNOMED CT concept or with post-coordinated SNOMED CT concepts, we defined that diagnosis or operation name as successfully coded. If the narrative text was not fully described by the SNOMED CT codes, or if a coded SNOMED CT concept added meaning beyond the text, we defined that diagnosis or operation name as a coding failure. Several different SNOMED CT codings can exist for one narrative text, especially when the text is long, so we evaluated the coding only from the semantic aspect. The number and percentage of successfully coded diagnosis and operation names were compared among the three coders.
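The success criterion can be written down as a short sketch. The record layout `Review`, its field names, and the four example judgements are assumptions made for illustration; in the study the judgement itself was made by the reviewers, not by software.

```python
from dataclasses import dataclass


@dataclass
class Review:
    """Reviewers' judgement of one coded narrative text (hypothetical layout)."""
    fully_described: bool  # the chosen code(s) cover the whole meaning of the text
    adds_meaning: bool     # the chosen code(s) carry meaning the text does not have


def is_success(review):
    """A text counts as successfully coded only if neither failure condition holds."""
    return review.fully_described and not review.adds_meaning


def success_rate(reviews):
    """Return the number and the percentage of successfully coded texts."""
    successes = sum(is_success(r) for r in reviews)
    return successes, 100 * successes / len(reviews)


# Made-up judgements, not study data.
reviews = [Review(True, False), Review(False, False),
           Review(True, True), Review(True, False)]
count, percent = success_rate(reviews)
print(count, percent)  # 2 50.0
```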

5. Statistical Analysis

The successful coding rates for diagnosis and operation names across the 3 coders were compared using the Wilcoxon signed-rank test, a nonparametric test for paired samples. A p-value of <0.05 was considered statistically significant. Statistical analysis was performed using SPSS ver. 12.0 (SPSS Inc., Chicago, IL, USA).

III. Results

1. Clinical Data

Five hundred neurosurgical patients and 500 ophthalmologic patients were enrolled in the study. Seven hundred and five diagnoses and 500 operation names were collected from the neurosurgical department, and 576 diagnoses and 629 operation names from the ophthalmologic department. The average number of parsed words per diagnosis was 4.66 (range, 1 to 12 words) in neurosurgery and 2.92 (range, 1 to 10 words) in ophthalmology. The average number of parsed words per operation name was 7.74 (range, 1 to 28 words) in neurosurgery and 4.88 (range, 1 to 14 words) in ophthalmology.

2. Coding Results

On review by the three coders and one expert coder, we found that only 16 diagnoses and 14 operation names from neurosurgery and 78 diagnoses and 25 operation names from ophthalmology were coded with a single SNOMED CT concept ID (133 in total). Of these 133 narrative texts, 25 could not be found in the CliniClue browser by searching with the original text; they were found after searching with transformed text that kept the same meaning. The other 108 texts were found in the browser with the original text.

All three coders showed statistically better coding rates for diagnoses than for operation names (Wilcoxon signed-rank test, p = 0.028). The medical record technician coded 437 (61.9%) neurosurgical and 479 (83.2%) ophthalmologic diagnoses correctly. However, she coded only 124 (24.8%) neurosurgical and 163 (25.9%) ophthalmologic operation names. The two physicians also showed better coding rates for diagnoses than for operation names. The neurosurgeon successfully coded 597 (84.9%) neurosurgical and 538 (93.4%) ophthalmologic diagnoses. However, he was successful with only 322 (64.4%) neurosurgical and 208 (33.1%) ophthalmologic operation names. The ophthalmologist coded 517 (73.3%) neurosurgical and 555 (96.4%) ophthalmologic diagnoses correctly, and 214 (42.8%) neurosurgical and 355 (56.4%) ophthalmologic operation names. Among the three coders, the two physicians showed higher coding rates than the medical record technician in all domains. The neurosurgeon showed the highest coding rate in the neurosurgical domains and the ophthalmologist showed the highest coding rate in the ophthalmologic domains.
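The text does not state which paired observations entered the Wilcoxon test. As an illustration only, the sketch below assumes that the pairs are the six coder-by-department combinations, each contributing its diagnosis rate and its operation name rate, and it uses the normal approximation without continuity correction. The function name and the pairing are assumptions, not the authors' documented procedure. Under these assumptions the two-sided p-value rounds to 0.028.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Two-sided Wilcoxon signed-rank test, normal approximation.

    Zero differences are dropped; no continuity or tie correction is applied.
    Returns (W_plus, z, p).
    """
    diffs = [a - b for a, b in zip(x, y) if a != b]
    n = len(diffs)
    # Rank the absolute differences, averaging ranks over ties.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return w_plus, z, p


# (successes, total) taken from the counts reported in the text.
# Order: technician, neurosurgeon, ophthalmologist; neurosurgery then ophthalmology.
diagnosis = [(437, 705), (479, 576), (597, 705), (538, 576), (517, 705), (555, 576)]
operation = [(124, 500), (163, 629), (322, 500), (208, 629), (214, 500), (355, 629)]

dx_rates = [s / n for s, n in diagnosis]
op_rates = [s / n for s, n in operation]

w_plus, z, p = wilcoxon_signed_rank(dx_rates, op_rates)
print(f"W+ = {w_plus:.0f}, p = {p:.3f}")
```

Because every pair has a higher diagnosis rate, all six ranks are positive (W+ = 21), which is the most extreme outcome possible with six pairs.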

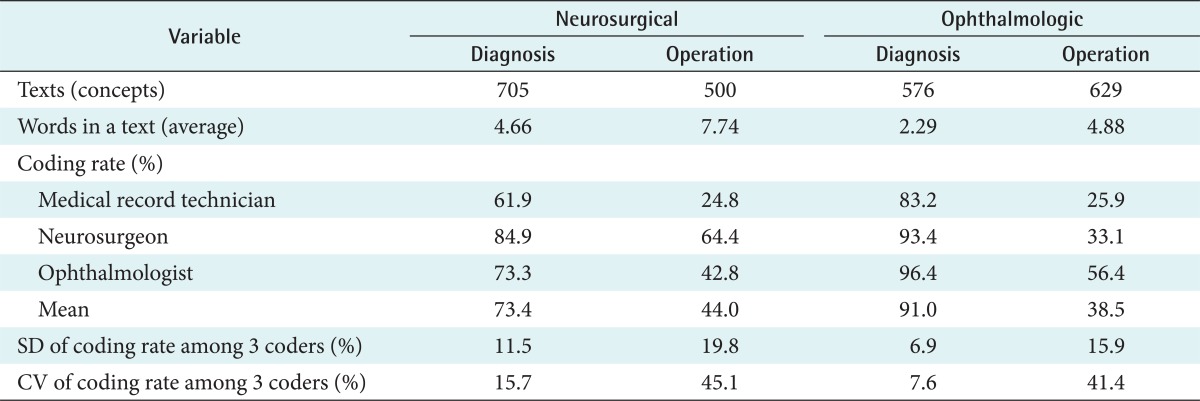

The coefficient of variation (CV) of the coding rate among coders in each medical domain tended to increase as the average number of words in that domain increased (Table 1).

Table 1.

Successful SNOMED CT coding rate among three coders with clinical data

SNOMED CT: Systematized Nomenclature of Medicine-Clinical Terms, SD: standard deviation, CV: coefficient of variation.

The variation in coding rates among the three coders within a domain was highest for neurosurgical operation names (CV, 45.1%) and lowest for ophthalmologic diagnoses (CV, 7.6%). The CVs in the other domains were 15.7% for neurosurgical diagnoses and 41.4% for ophthalmologic operation names.
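The CVs can be recomputed from the coding rates given in the text. The sketch below assumes that the CV is the sample standard deviation (n - 1 denominator) divided by the mean of the three coders' rates. The text does not state which standard deviation was used, but this choice reproduces the reported values to one decimal place. The dictionary and function names are ours.

```python
from statistics import mean, stdev


def coefficient_of_variation(values):
    """CV in percent, using the sample standard deviation (n - 1 denominator)."""
    return 100 * stdev(values) / mean(values)


# Coding rates (%) in the order technician, neurosurgeon, ophthalmologist.
# 96.4 is 555/576, recomputed from the reported count.
rates = {
    "neurosurgical diagnosis": [61.9, 84.9, 73.3],
    "ophthalmologic diagnosis": [83.2, 93.4, 96.4],
    "neurosurgical operation": [24.8, 64.4, 42.8],
    "ophthalmologic operation": [25.9, 33.1, 56.4],
}

# Average number of parsed words per record, as reported in the Results.
avg_words = {
    "neurosurgical diagnosis": 4.66,
    "ophthalmologic diagnosis": 2.92,
    "neurosurgical operation": 7.74,
    "ophthalmologic operation": 4.88,
}

cvs = {domain: round(coefficient_of_variation(r), 1) for domain, r in rates.items()}

# List the domains from fewest to most words per record.
for domain in sorted(rates, key=avg_words.get):
    print(f"{domain}: {avg_words[domain]} words, CV {cvs[domain]}%")
```

Ordered by average word count, the four CVs come out as 7.6, 15.7, 41.4, and 45.1, so the CV rises with every step in word count.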

IV. Discussion

SNOMED CT coding of clinical data starts with reading the local terms literally. The coder then extracts concepts from the local term and enters them into a browser. From the list of results returned by the SNOMED browser, the coder must select the proper SNOMED concept by reasoning with knowledge of the domain. If the local term and the SNOMED description match exactly or very closely, the coder can code the term easily. However, if there is a difference in concept size between the local term and its SNOMED description, the coder needs more domain knowledge to code it correctly. Good coding also requires coding skill and experience, but in this case the coding results can be expected to be influenced much more by the coder's level of domain knowledge. We therefore tried to reveal the effect of domain knowledge level on SNOMED CT coding of clinical data by comparing the coding rates of domain experts and non-experts in an easy-to-code domain (diagnosis) and a hard-to-code domain (operation names).

Although we cannot be sure that diagnoses are generally easier to code than operation names, in our study set the diagnoses contained fewer words than the operation names, which could consist of a combination of multiple concepts. Another explanation for the difference between diagnoses and operation names could be the age of the concepts in a domain. Diagnosis concepts tend to be more general and older than operation names. For example, myopia (a diagnosis) is likely to be better known than the LASIK (laser in-situ keratomileusis) procedure. The diagnosis probably already existed when a neurosurgeon was studying at medical school, whereas he may not have had a chance to learn the concept of LASIK as a student. Since new eye operation methods continue to be published in the literature, they are likely to be better understood by an ophthalmologist than by a neurosurgeon. Because of the speed of scientific advancement, many operation names are not even registered in SNOMED CT. Preferred terms also differ by surgical department, and sometimes a similar operative procedure has different names depending on the department. The suffix "-mileusis" means to "modify the shape" of a certain structure; keratomileusis means "modifying the shape of the cornea". For a neurosurgeon, however, the preferred term for "modifying a certain structure" could be "-plasty", as in "cranioplasty". "Mileusis" is rarely used in neurosurgical terms. If a concept must be understood by the physician who wishes to code it, "mileusis" could be more challenging for a neurosurgeon than for an ophthalmologist.

In this study, none of the three coders had previously coded clinical data with SNOMED CT. The medical record technician had much more experience of ICD-10 coding than the two physicians and would therefore be somewhat more skillful at coding diagnosis or operation names. She had also learned SNOMED in her college curriculum. It is commonly accepted that the two physicians have more knowledge of the clinical domain than a medical record technician and that each physician's knowledge is more specialized in his or her own specialty.

Table 1 shows that the two physicians had higher coding performance than the medical record technician in all domains, and that the neurosurgeon had the best coding rate in the neurosurgical domains and the ophthalmologist in the ophthalmologic domains. This result indicates that the coding rates of the three coders correlated with the levels of domain knowledge that the coders were generally expected to have. Table 1 also shows that the coding rates for diagnoses were higher than those for operation names for all three coders. This may stem not from differences in knowledge level among the coders but mainly from the different complexity and content of the clinical data in diagnoses and operation names, because even the physicians showed 30% lower coding rates for operation names than for diagnoses, and the physicians' average coding rate for operation names was less than 50%.

Interestingly, however, the variation in coding rates within a domain (represented by the CV among coders) was higher for operation names than for diagnoses. The number of words in a clinical record also correlated with increasing variation in coding rates among coders. Operation names had more words per record than diagnoses (average, 6.3 and 3.8 words, respectively). The difference in coding rate variation between diagnoses and operation names therefore seems to be caused by the difference in the number of words per record. In general, common concepts tend to need only one or a couple of words to explain or describe them. Complex or unfamiliar concepts, which cannot be fully described in a few words, require more words to represent their meaning. The fact that more words are needed to explain a concept may imply that more knowledge is required to code it exactly. In this study, there was more coding variation in the domains that had more words per record. This means that there is more coding variation in domains that require a high level of knowledge to code.

To the best of our knowledge, this is the first report on the relationship between domain knowledge and semantically successful coding. However, this study has some drawbacks. Only 3 participants coded all of the clinical data, which may not represent the usual coding process in a hospital setting. Nevertheless, there are several previous reports with a small number of coders. Chiang et al. [8] studied the reliability of SNOMED coding using two different terminology browsers, and only three physicians participated in that study. They concluded that the reliability of SNOMED coding is imperfect and stressed that physician training and browser improvement are required to improve the reproducibility of SNOMED coding.

Since we focused on semantic coding ability according to the coder's knowledge, we did not examine intra-coder agreement rates. We defined successful coding as the case in which the chosen SNOMED CT concept could fully explain the local term semantically. When a different SNOMED CT concept that could fully cover the meaning of the same local term was chosen, it was also counted as successful coding, both within and between coders. It is known that there is little semantic agreement in coding results even between SNOMED coding experts [4]. Moreover, the aim of our study was not to observe inter- or intra-coder agreement but to show the relationship between domain knowledge and coding results.

Physicians who generate clinical data are likely to have a high level of knowledge about what they generated. In Korea, however, coding with standard terminologies is usually performed by medical record technicians. Although medical record technicians are very skillful coders, high-level coding in hard-to-code domains would be a challenge for them because of their limited domain knowledge. When coders know much more about what they want to code, coding performance increases, and the efficiency of coding improves, especially in hard-to-code domains. We conclude that physicians, or others who generate clinical data, should be more actively involved in SNOMED CT coding when SNOMED CT is implemented in a hospital.

Footnotes

No potential conflict of interest relevant to this article was reported.

References

- 1. Price C, Spackman K. SNOMED clinical terms. Br J Healthc Comput Inf Manag. 2000;17(3):27–31.

- 2. Alecu I, Bousquet C, Jaulent MC. A case report: using SNOMED CT for grouping Adverse Drug Reactions Terms. BMC Med Inform Decis Mak. 2008;8(Suppl 1):S4. doi: 10.1186/1472-6947-8-S1-S4.

- 3. Andrews JE, Patrick TB, Richesson RL, Brown H, Krischer JP. Comparing heterogeneous SNOMED CT coding of clinical research concepts by examining normalized expressions. J Biomed Inform. 2008;41(6):1062–1069. doi: 10.1016/j.jbi.2008.01.010.

- 4. Andrews JE, Richesson RL, Krischer J. Variation of SNOMED CT coding of clinical research concepts among coding experts. J Am Med Inform Assoc. 2007;14(4):497–506. doi: 10.1197/jamia.M2372.

- 5. Mantena S, Schadow G. Evaluation of the VA/KP problem list subset of SNOMED as a clinical terminology for electronic prescription clinical decision support. AMIA Annu Symp Proc. 2007:498–502.

- 6. Qamar R, Kola J, Rector AL. Unambiguous data modeling to ensure higher accuracy term binding to clinical terminologies. AMIA Annu Symp Proc. 2007:608–613.

- 7. Patrick TB, Richesson R, Andrews JE, Folk LC. SNOMED CT coding variation and grouping for "other findings" in a longitudinal study on urea cycle disorders. AMIA Annu Symp Proc. 2008:11–15.

- 8. Chiang MF, Hwang JC, Yu AC, Casper DS, Cimino JJ, Starren JB. Reliability of SNOMED-CT coding by three physicians using two terminology browsers. AMIA Annu Symp Proc. 2006:131–135.