Abstract

Information from the vestibular, sensorimotor, or visual systems can affect the firing of grid cells recorded in entorhinal cortex of rats. Optic flow provides information about the rat’s linear and rotational velocity and, thus, could influence the firing pattern of grid cells. To investigate this possible link, we model parts of the rat’s visual system and analyze their capability to estimate linear and rotational velocity. In our model a rat is simulated to move along trajectories recorded from rats foraging on a circular ground platform. Thus, we preserve the intrinsic statistics of real rats’ movements. Visual image motion is analytically computed for a spherical camera model and superimposed with noise in order to model the optic flow that would be available to the rat. This optic flow is fed into a template model to estimate the rat’s linear and rotational velocities, which in turn are fed into an oscillatory interference model of grid cell firing. Grid scores are reported while altering the flow noise, tilt angle of the optical axis with respect to the ground, the number of flow templates, and the frequency used in the oscillatory interference model. Activity patterns are compatible with those of grid cells, suggesting that optic flow can contribute to their firing.

Keywords: Optic flow, Grid cell firing, Entorhinal cortex, Spherical camera, Visual image motion, Gaussian noise model, Self-motion

Optic flow as one cue for self-positioning and self-orientation

Visual input from optic flow provides information about self-motion and, thus, could be one cue for the rat’s grid cell system. To characterize the quality of an optic flow input, we suggest a model for optic flow of the rat’s visual system that estimates linear and rotational velocity. These estimated velocities are fed into an oscillatory interference model for grid cell firing. Firing patterns generated by the concatenation of these two models, the template model and the oscillatory interference model, are evaluated using a grid score measure.

In this modeling effort we exclusively study the optic flow cue and exclude any contributions from other systems for purposes of analysis. However, we are aware of other cues such as landmarks, vestibular, or sensorimotor signals that might have a strong influence on grid cells (Hafting et al. 2005; Barry et al. 2007). Thus, this study serves as a foundation for considering more complex, multi-cue paradigms.

Does the rat’s vision allow for the processing of optic flow?

Low-level processing mechanisms for visual motion exist in rat cortex. The vast majority of neurons in primary visual cortex respond to visual image motion (95 %) and the rest to flashing stimuli (Burne et al. 1984). Motion sensitive neurons show tuning for orientation, spatial frequency, and temporal frequency of a presented grating. The optimal stimulus velocity varies between 10 °/s and 250 °/s, and some neurons are selective to velocities of 700 °/s (Girman et al. 1999). Although a hierarchy of visual processing similar to the one in monkey cortex (Felleman and Van Essen 1991) has been pointed out based on the morphology (Coogan and Burkhalter 1993), the functional mapping of these anatomically identified areas is largely unknown.

Visual cues clearly contribute to the spatial response properties of neurons in the entorhinal cortex and hippocampus, including the responses of grid cells (Hafting et al. 2005; Barry et al. 2007), boundary vector cells (Solstad et al. 2008; Lever et al. 2009), head direction cells (Taube et al. 1990) and place cells (O'Keefe and Nadel 1978; Muller and Kubie 1987). For example, rotations of a white cue card on a circular barrier cause rotations of the firing location of place cells (Muller and Kubie 1987) and grid cells (Hafting et al. 2005) and of the angle of firing of head direction cells (Taube et al. 1990). Compression or expansion of the environment by moving barriers causes compression or expansion of the firing fields of place cells (O'Keefe and Burgess 1996) and grid cells (Barry et al. 2007). These data demonstrate the important role of visual input as one cue for generating spatial responses of grid cells. However, visual input is not the only factor influencing firing: grid cells and place cells can continue to fire in the same location in darkness (O'Keefe and Nadel 1978; Hafting et al. 2005) and on the basis of self-motion cues without visual input (Kinkhabwala et al. 2011). Visual input is therefore just one of many possible influences on the firing of these cells. Experimental data have not yet indicated what type of visual input is essential. Detection of the distance and angle of barriers (Barry et al. 2007) could involve learning of features and landmarks, but could also leverage optic flow. Modeling the potential mechanism for the influence of optic flow on the neural responses of grid cells provides a means to test the possible validity of optic flow as a cue contributing to the firing location of these cells.

In our proposed model for the rat’s processing of optic flow we assume areas of higher-level motion selectivity for the detection of self-motion from optic flow, similar to those found in area MST of the macaque monkey (Duffy and Wurtz 1995; Duffy 1998; Graziano et al. 1994). Visual processing in rats differs from that in monkeys, with major differences in visual acuity and space-variant vision. We assume, however, that the detection of large-field motion patterns does not critically depend on these properties of monkey cortex. In other words, large-field motion patterns can also be detected with uniform sampling and low visual acuity (in our study 40 × 20 samples), and our simulations use neither high visual acuity nor space-variant vision.

Optic flow encodes information about directional linear and rotational velocities, relative depth, and time-to-contact

Brightness variations can occur due to object motion, background motion, or changes in lighting. Optic flow denotes the motion of brightness patterns in the image (Horn 1986, p. 278 ff.). To describe optic flow, models of visual image motion have been developed that denote the 3D motion of points projected into the image. Most models use a pinhole camera (Longuet-Higgins and Prazdny 1980; Kanatani 1993) or a spherical camera (Rieger 1983; Fermüller and Aloimonos 1995; Calow et al. 2004), and in both cases the center of rotation is located in the nodal point of the projection. Along with the camera model, the translation and rotation in 3D of the sensor is described by an instantaneous or differential motion model (Goldstein et al. 2001), which neglects higher order temporal changes like accelerations. As a simplification, we assume here that temporal changes of brightness, optic flow, and visual image motion all describe the same vector field.

In general optic flow contains information of the sensor’s linear and rotational 3D velocities, and relative depth values of objects in the environment. Several algorithms have been proposed for the estimation of linear and rotational velocities from optic flow using models of visual image motion. Different objective functions, e.g. the Euclidean difference between sensed optic flow and modeled visual image motion, have been used to formulate linear (Kanatani 1993; Heeger and Jepson 1992) and non-linear optimization problems (Bruss and Horn 1983; Zhang and Tomasi 1999). Specific objective functions have been directly evaluated by using biologically motivated neural networks (Perrone 1992; Perrone and Stone 1994; Lappe and Rauschecker 1993; Lappe 1998).

With linear velocity and relative depth, both estimated from optic flow, time-to-contact between the sensor and approaching surfaces can be computed (Lee 1976). For instance, plummeting gannets estimate their time to contact with the water surface from radial expanding optic flow, to close their wings at the right time before diving into the water (Lee and Reddish 1981).

Information about linear and rotational velocities estimated from optic flow could contribute to the firing of head direction and place cells in rats. By temporally integrating rotational velocities the head’s direction relative to some fixed direction (e.g. geographical north) can be computed. Thus, this integrated velocity signal could directly serve as input to the head direction cell system. Experiments support such integration. For instance, if visual motion and landmark cues contradict each other, head direction cells often average signals from both cues (Blair and Sharp 1996). Place cells are influenced by visual motion cues as well. Rats in a cylindrical apparatus with textured walls and a textured floor that can be rotated independently show a more reliable update of place field firing if walls and floor have a compatible rotation. Thus, place cell firing is influenced by visual and vestibular signals (Sharp et al. 1995). Border cells (Solstad et al. 2008) could integrate time-to-contact information from walls that give rise to optic flow of high velocity, since the length of flow vectors for translational motion is inversely related to distance. Furthermore, integration of linear velocity and rotational velocity provides a position signal that could serve as an input to the grid cell firing system. However, this temporal integration or path integration typically encounters the problem of error summation. Small errors accumulate over the course of integrating thousands of steps and can potentially lead to large deflections in the animal’s calculated spatial position. In order to study this problem of error summation and its influence on grid cell firing, we propose a model for optic flow in rats and a mechanism for self-motion detection and test its ability to generate grid cell firing.

Methods

A model for optic flow processing in rats and its influence on grid cell firing is developed in the following steps. Linear and rotational velocities are extracted from recorded rat trajectories that are pre-processed to model the rat’s body motion (Section 2.1). Visual image motion is simulated by moving a spherical camera along these pre-processed trajectories (Section 2.2). In order to estimate the linear velocity, the visual image motion model is constrained to points sampled from a horizontal plane (Section 2.3). Optic flow is modeled by adding Gaussian noise to the visual image motion, which accounts for errors that would occur if flow was estimated from spatio-temporally varying patterns of structured light (Section 2.4). A template model estimates linear and rotational velocity from optic flow (Section 2.5). Finally, these estimated velocities are integrated into an oscillatory interference model to study grid cell firing (Section 2.6).

Simulating the rat’s locomotion by linear and rotational velocities

Typically, experiments record only the head movement of rats, not their body or eye movements. The recorded data therefore allow only an approximate calculation of the rat’s retinal flow due to body motion, based on the assumption that the monocular camera that models the rat’s entire field of view is directed forward and tangent to the recorded trajectory. Under this assumption, rotations of nearly 360 ° can occur between two sample points, which corresponds to a rotational velocity of 360 ° · 50 Hz = 18,000 °/s. This seems unrealistically high, especially as visual motion sensitive cells in rat V1 are selective to speeds only up to 700 °/s (Girman et al. 1999). Behaviorally, these high rotational velocities arise from head/sensor movements that are independent of body movements. Here we do not account for either of these movements. In addition, jitter occurs in the recorded position. For these reasons we pre-processed all recorded rat trajectories with an iterative method that is based on three rules. First, data points are added by linear interpolation if the rotation between two samples is above 90 °, or 4,500 °/s for a 50 Hz sampling rate. Second, data points are removed if the displacement between two points is below a threshold of 0.05 cm, or 2.5 cm/s for a 50 Hz sampling rate. Speeds below 2.5 cm/s are considered to indicate that the rat is stationary, as data show that the distribution of recorded speeds peaks around 10 cm/s and tails off toward 50 cm/s. Third, data points are added by linear interpolation between samples that lead to linear velocities above 60 cm/s. This pre-processing changes less than 17 % of the original data points, whereas Kalman filtering of the trajectories would change all data points. After pre-processing we assume that the trajectory is close to the actual body motion of the rat.
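The three rules operate on per-sample linear and rotational velocities. As a minimal sketch, and not the authors' implementation, the following Python code derives both velocities from recorded positions under the tangent-heading assumption and flags the samples that would trigger each rule. The function names and the single-pass flagging are hypothetical; only the thresholds and the 50 Hz rate are taken from the text, and the iterative interpolation and removal steps themselves are not reproduced.

```python
import math

FS = 50.0            # sampling rate in Hz (from the text)
MAX_TURN = 4500.0    # deg/s, i.e. 90 deg per sample
MIN_SPEED = 2.5      # cm/s, i.e. 0.05 cm per sample
MAX_SPEED = 60.0     # cm/s, i.e. 1.2 cm per sample

def velocities(xs, ys, fs=FS):
    """Speeds (cm/s) per segment and turn rates (deg/s) between segments,
    assuming the heading is tangent to the recorded trajectory."""
    speeds, headings = [], []
    for i in range(len(xs) - 1):
        dx, dy = xs[i + 1] - xs[i], ys[i + 1] - ys[i]
        speeds.append(math.hypot(dx, dy) * fs)
        headings.append(math.atan2(dy, dx))
    turns = []
    for i in range(len(headings) - 1):
        d = headings[i + 1] - headings[i]
        d = (d + math.pi) % (2.0 * math.pi) - math.pi   # wrap to [-pi, pi)
        turns.append(math.degrees(d) * fs)
    return speeds, turns

def flag_rules(speeds, turns):
    """Mark where each rule would apply (hypothetical helper)."""
    too_sharp = [abs(t) > MAX_TURN for t in turns]   # rule 1: interpolate
    too_slow = [s < MIN_SPEED for s in speeds]       # rule 2: remove point
    too_fast = [s > MAX_SPEED for s in speeds]       # rule 3: interpolate
    return too_sharp, too_slow, too_fast
```

A right-angle turn between two consecutive 0.2 cm steps, for example, yields a speed of 10 cm/s and a turn rate of 90 ° · 50 Hz = 4,500 °/s, which sits exactly at the first threshold.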

The rat’s enclosure is modeled by a circular ground platform that has a radius of 100 cm. This radius is 15 cm larger than the cage’s radius used in the experiment. We added an annulus of 15 cm in the simulations to allow for sensing visual motion in cases where the camera is close to the boundary and the optical axis points toward the boundary. The recorded positional data can be modeled by two degrees of freedom: A linear velocity parallel to the ground and a rotational velocity around the axis normal to the ground. In our simulation the camera is shifted and rotated, and analytical flow is calculated using a depth map computed by ray-tracing.

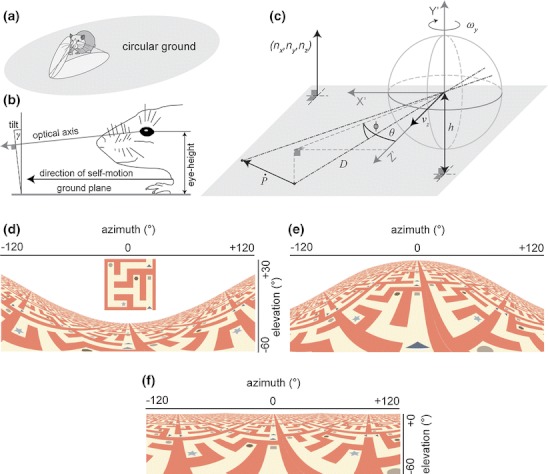

Figure 1(a) illustrates the rat sitting on the ground with the fields of view of its left and right eye, which we model as a single visual field that excludes binocular vision. The nodal point of the modeled camera is at height h above the ground and the y-axis of the camera’s coordinate system has a tilt angle of γ with respect to the ground plane (Fig. 1(b)). Positive tilt angles γ rotate the camera downward, negative ones upward. To model the field of view we use a spherical camera (Fig. 1(c)). Projections of a labyrinth texture tiled on the ground for this spherical camera are shown in Fig. 1(d)-(f). In the next paragraph we define the projection function and a model of visual image motion for this spherical camera model.

Fig. 1.

Our model for visual image motion of a rat. (a) A virtual rat is simulated running along recorded trajectories (Hafting et al. 2005). Optic flow is modeled for the overall visual field, which includes left and right hemisphere. (b) Shows the eye-height h and tilt angle γ of the optical axis with respect to the ground; adapted and redrawn from Adams and Forrester (1968), their Figure 6. (c) Visual image motion is generated for sample points P on the ground as the rat is moving parallel to the ground or rotating around an axis normal to the ground. This drawing is for γ = 0 ° tilt and, thus, the linear velocity is along the optical axis and the rotational velocity around the y-axis (yaw). (d-f) Projections of a regular labyrinth texture (inset in d) tiled on the ground floor within ±120 ° azimuth and ±60 ° elevation using the spherical camera model. The nodal point of the spherical camera is h = 3.5 cm above a 120 × 120 cm ground plane and is placed at the center of the plane. Tilt angle varies, in (d) it is γ = -30 °, in (e) γ = 30 °, and in (f) γ = 0 °. All three images were rendered with Persistence of Vision Pty. Ltd. (2004). Note that the labyrinth texture does not correspond to a maze task and was chosen for visualization purposes only; the simulated rat can move freely on a ground platform.

Modeling the rat’s visual image motion using a spherical camera

Why do we use a spherical camera to model the visual image motion sensed by rats? First, rat eyeballs are more similar to spheres than a plane, as used in a pinhole camera. Second, with a spherical camera we can model a field of view larger than 180 °, impossible with a single pinhole camera.

Visual image motion for a spherical camera with its center of rotation, nodal point, and center of the sphere at the same point is described in spherical coordinates by (Rieger 1983; Calow et al. 2004)¹:

| 1 |

where θ denotes the azimuth angle, ϕ the elevation angle, and γ the tilt angle between optical axis and self-motion vector that is always parallel to the ground (see Fig. 1(b)). Azimuth is measured in the xz-plane from the z-axis that points forward. Elevation is measured from z'-axis in the yz'-plane where z' denotes the z-axis rotated by the azimuth angle. We use a left-handed coordinate system. In this system the z-axis points forward, the y-axis points to the top and the x-axis points to the right (see also Fig. 1(c)). The 3D linear velocity

This model for visual image motion has several properties. First, translational and rotational components are linearly superimposed. Second, only the translational component depends on distance D. Third, this depth dependence is reciprocal. Thus, farther points have lower velocities than closer points in terms of temporal changes of azimuth and elevation. Fourth, the image motion that is denoted in differential changes of azimuth and elevation angles is independent of the radius of the sphere that models the rat’s eyeball.

Based on the data we assume that the rat is moving tangent to the recorded trajectory in the 2D plane. This simplifies the model’s linear velocity to be

| 2 |

In this model self-motions are restricted to the rotational velocity ω y around an axis normal to the ground and the linear velocity v z parallel to the ground. If tilt is unequal to zero, the optical axis of the camera system changes while the self-motion vector remains constant.
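The properties listed for the image motion model can be checked numerically for the restricted self-motion. The Python sketch below is our own derivation for the zero-tilt case (γ = 0 °) from the stated coordinate conventions, with a point at P = D · (sin θ cos ϕ, sin ϕ, cos θ cos ϕ); it is not claimed to be term-by-term identical to Eq. (1). The function name and the sign convention for yaw (positive ω y turns the sensor toward the positive x-axis) are assumptions.

```python
import math

def flow_no_tilt(theta, phi, dist, v_z, omega_y):
    """Angular flow (d_theta/dt, d_phi/dt) in rad/s for a static point at
    azimuth theta, elevation phi (rad) and distance dist (cm), seen by a
    sensor moving forward at v_z (cm/s) and yawing at omega_y (rad/s).
    Assumes zero tilt; positive omega_y turns the sensor toward +x."""
    # Translational part: scales with 1/dist.
    t_theta = v_z * math.sin(theta) / (dist * math.cos(phi))
    t_phi = v_z * math.cos(theta) * math.sin(phi) / dist
    # Rotational part: independent of dist; yaw shifts azimuth only.
    r_theta = -omega_y
    r_phi = 0.0
    return t_theta + r_theta, t_phi + r_phi
```

In this sketch, doubling `dist` halves the translational component while the rotational component stays unchanged, and the two components add linearly, which mirrors the first three properties named above. The expressions become singular at ϕ = ±90 °, outside the ±60 ° elevation range considered here.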

Since we do not explicitly model both eyes but rather the entire visual field, we assume this field to extend ±120 ° horizontally and ±60 ° vertically and 0 ° horizontally is aligned with the optical axis (z-axis). Retinal input projects to lateral geniculate nucleus (LGN) in these ranges in rats (Montero et al. 1968).

Absolute linear velocity (speed) and depth appear to be invariant in Eqs. (1) and (2). Any factor multiplied to both variables

Constraining the linear velocity by sampling from a plane of known distance and spatial orientation in 3D

To resolve the invariance between absolute velocity and depth, visual image motion is constrained to sample points from a plane of known distance and 3D orientation. Evidence for such a constraint is provided from a different species, namely frogs, which use “retinal elevation”, the distance of the eyes above ground, to estimate the distance of prey (Collett and Udin 1988). This plane constrains distance values to be

| 3 |

where

| 4 |

Note that this constrained model of visual image motion is not directly accessed by the retina; rather, it provides a constraint for flow templates that are specific to flow sampled from a ground plane of known distance. Illustrations of constrained spherical motion flows for forward motion, rotational motion, and their combination are shown in Fig. 2. For the model we assume flow templates to be realized by cortical areas in the rat’s brain that are analogous to the middle temporal area (MT) and the medial superior temporal area (MST) in the macaque monkey (Graziano et al. 1994; Duffy and Wurtz 1995; Duffy 1998).
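The ground-plane constraint amounts to intersecting each viewing ray with a horizontal plane at eye-height h below the nodal point. The following Python sketch shows that geometry under our own parameterization; it is an illustration rather than a reproduction of Eqs. (3) and (4). The function name is hypothetical, the platform boundary and the D max limit are ignored, and the tilt is assumed to be a rotation about the camera's x-axis with positive γ pitching the camera downward, as described for Fig. 1(b).

```python
import math

def ground_distance(theta, phi, h=3.5, gamma=0.0):
    """Distance (cm) along the viewing ray (azimuth theta, elevation phi, rad)
    to a horizontal ground plane h cm below the nodal point. gamma is the
    tilt in rad; positive values pitch the camera downward. Returns None if
    the ray never reaches the ground."""
    # Vertical component of the unit ray direction in world coordinates.
    d_y = (math.sin(phi) * math.cos(gamma)
           - math.cos(theta) * math.cos(phi) * math.sin(gamma))
    if d_y >= 0.0:
        return None            # ray is horizontal or points upward
    return h / -d_y
```

With h = 3.5 cm and no tilt, a ray 30 ° below the horizon meets the ground after about 7 cm, whereas rays at or above the horizon return `None` and contribute no flow sample.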

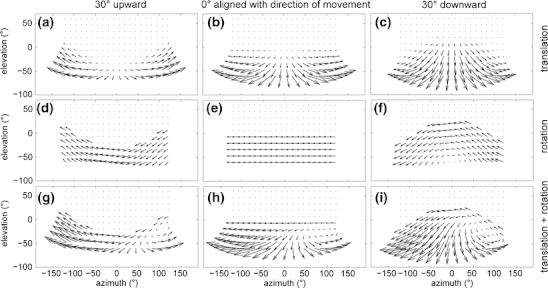

Fig. 2.

Visual motion fields for a spherical camera model for varying tilt and self-motion defined as a linear speed of v z = 2 cm/s and/or a rotational velocity of ω y = 15 °/s. (a-c) Shows motion fields generated by the forward motion, in (a) for γ = -30 °, (b) for γ = 0 °, and (c) for γ = 30 °. (d-f) Shows motion fields generated by a rotation along an axis orthogonal to the ground. Roll is very dominant in the motion field of (f), which is a result of the camera pointing downward while rotating around an axis orthogonal to the ground that is not the vertical axis of the camera. (g-i) Depict motion fields that are the result of adding motion fields from the first and second row. These are examples of motion fields generated by curvilinear motion. The vanishing point in (i) that appears around 90 ° azimuth and -20 ° elevation does not denote the theoretical focus of expansion. Instead it shows the center of motion that is the focus of expansion shifted by the rotational visual motion component. In all plots, the x-axis to y-axis has an aspect ratio of 1:2

Modeling of the rat’s sensed optic flow by applying Gaussian noise to analytically computed visual image motion

Optic flow is typically estimated from changing brightness values and, therefore, contains errors introduced by the sensing and estimation process. Here, we assume that these errors occur in the sensing of spatial and temporal changes in brightness. These errors are modeled by a Gaussian distribution function, where all three variables, two for spatial changes and one for temporal changes, are independent (Simoncelli et al. 1991; their Eq. (7)). Under these assumptions it follows that image velocity estimates obtained with a linear least squares model are again Gaussian distributed. Thus, in order to model the optic flow that is available to the rat, we assume additive Gaussian noise for the temporal changes of azimuth and elevation angle:

| 5 |

where
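As a minimal illustration of this noise model, the Python sketch below adds independent zero-mean Gaussian noise to both components of every flow vector. The function names and the list-of-pairs layout are assumptions; the 50 Hz temporal sampling is the value used elsewhere in the text to convert a standard deviation from °/frame to °/s.

```python
import random

FS = 50.0  # frames per second (temporal sampling used in the text)

def per_frame_to_per_second(sigma_per_frame, fs=FS):
    """Convert a noise level from deg/frame to deg/s."""
    return sigma_per_frame * fs

def add_flow_noise(flow, sigma, rng):
    """Add independent Gaussian noise (std sigma) to each flow component.
    flow is a list of (d_theta, d_phi) pairs in the same units as sigma."""
    return [(dt + rng.gauss(0.0, sigma), dp + rng.gauss(0.0, sigma))
            for dt, dp in flow]

# Usage: 800 flow vectors of a hypothetical field, noise of 25 deg/frame.
rng = random.Random(0)
clean = [(100.0, -20.0)] * 800
noisy = add_flow_noise(clean, per_frame_to_per_second(25.0), rng)
```

Note that the noise in this sketch is independent of the length of the flow vector, so short vectors are affected relatively more strongly than long ones.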

Estimating linear and rotational velocity using a template model

Linear and rotational velocity for the constrained model of visual image motion from Eq. (4) can be estimated using the idea of template models, namely to explicitly evaluate an objective function defined by using neural properties (Perrone 1992; Perrone and Stone 1994). A challenge for template models that estimate self-motion is the 6D parameter space composed of two parameters for the linear velocity direction, one parameter for depth, and three parameters for rotational velocities. All these parameter intervals are sampled explicitly. For instance, 10 samples in each interval result in 10⁶ parameter samples, and the sensed flow field has to be compared to all of these 10⁶ flow templates. This is a computationally expensive approach and, therefore, template models typically use constrained self-motions, e.g. fixating self-motions (Perrone and Stone 1994).

Our constrained model in Eq. (4) has only two parameters: linear and rotational velocity. Instead of sampling these two dimensions jointly, we look for a method of separating the sampling of linear velocity (depth) from that of rotational velocity. Assume that the temporal changes for azimuth and elevation are given by

| 6 |

In this Eq. (6),

| 7 |

Flow templates for all common self-motions are created a priori by using expressions from Eq. (4), like

How do we estimate velocities based on the profiles defined by values about the match between template and input flow? One solution is to return the sampled velocity value that corresponds to the maximum match value in the profile, e.g.

An evaluation of trigonometric functions for the construction of the templates is not directly necessary. Assume that these flow templates with their trigonometric functions from Eq. (4) are ‘hard-wired’ or trained during exposure to visual stimuli. Then coefficients like sin (θ), cos (θ), … can be expressed as weights for pooling local motion signals over the entire visual space. Furthermore, different tilt angles γ and head direction angles φ can activate different template representations.
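The idea of reading a velocity estimate from a profile of match values can be sketched in a few lines of Python. The Gaussian form of the match function, the helper names, and the pure-yaw template are assumptions made for illustration and do not reproduce Eqs. (6) and (7). The velocity samples follow the counts and ranges of Table 1; sampled separately, 451 rotational and 117 linear values add up to 568 templates, which agrees with the largest template count used in the simulations.

```python
import math

# Velocity samples matching the counts and ranges in Table 1.
OMEGA_SAMPLES = [-4500.0 + 20.0 * k for k in range(451)]   # deg/s
V_SAMPLES = [2.0 + 0.5 * j for j in range(117)]            # cm/s

def match(flow, template, sigma):
    """Mean Gaussian similarity between two flow fields (assumed form)."""
    total = 0.0
    for (a, b), (c, d) in zip(flow, template):
        total += math.exp(-((a - c) ** 2 + (b - d) ** 2) / (2.0 * sigma ** 2))
    return total / len(flow)

def estimate(flow, make_template, samples, sigma):
    """Return the sampled velocity whose template matches best,
    together with the full profile of match values."""
    profile = [match(flow, make_template(s), sigma) for s in samples]
    best = max(range(len(samples)), key=profile.__getitem__)
    return samples[best], profile

def yaw_template(omega, n=800):
    """Hypothetical pure-yaw template at zero tilt: the azimuth component
    equals -omega at every one of the n sample points."""
    return [(-omega, 0.0)] * n
```

Calling `estimate(flow, yaw_template, OMEGA_SAMPLES, 25.0)` returns the sampled rotational velocity with the highest match value together with the whole profile, whose peak height can be read as the certainty of the match.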

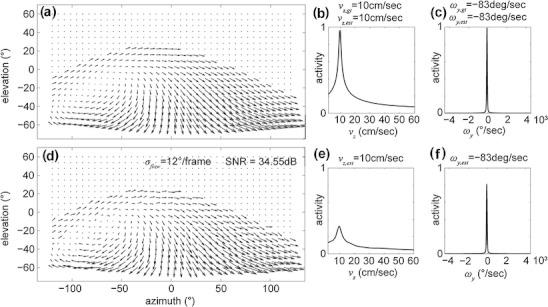

Figure 3 shows example flow fields, response profiles, and velocity estimates of our template model. Gaussian noise superimposed on the image motion reduces the peak height (compare Fig. 3(b) and (c) with (e) and (f)), but the location of the peak remains the same. For stronger noise the peak height decreases even further, and its position might shift as well, especially if the peak height falls below the noise floor. In general, the peak height could be interpreted as the certainty of a match.

Fig. 3.

Values for matches of our template model for analytical visual motion and optic flow modeled by visual motion with superimposed Gaussian noise are shown. (a) Motion field without noise and a tilt angle of γ = 30 °. (b) Profile for matches of linear velocities sampled in the range from 2 cm/s to 60 cm/s. (c) Match values for rotational velocities within ±4,500 °/s. (d) Optic flow defined as the visual motion field from (a) with independent Gaussian noise added to each flow component. (e and f) The profiles of match values for velocities have a decreased peak height but the position of their maximum peak remains. All flow fields are generated taking sample point 10 of the pre-processed rat trajectory ‘Hafting_Fig2c_Trial1’ from Hafting et al. (2005). The aspect ratio between x-axis (azimuth) and y-axis (elevation) is 1:2

The difference in the response profiles for linear and rotational velocity is due to the qualitative difference between the flows they generate. A linear velocity in the interval of 10 cm/s to 60 cm/s produces slower flow vectors, which are more strongly influenced by noise than the larger vectors generated by rotational velocities in the range of ±4,500 °/s. As a result, the profile for linear velocity appears “narrower” than that of rotational velocity. Note that this observation depends on our assumption that noise is independent of the length of flow vectors, see also Eq. (5).

A temporal integration of linear and rotational velocity estimates provides an approximation of the rat’s position with respect to some reference. However, these velocities cannot directly explain the regular firing structure of grid cells. Several models (Fuhs and Touretzky 2006; Burgess et al. 2007; Hasselmo 2008; Burak and Fiete 2009) have been proposed to link 2D velocity vectors in the plane to the firing pattern of grid cells. In the next paragraph we review an oscillatory interference model and show how estimates of linear and rotational velocity are integrated into this model.

Modeling of grid cell firing by temporal integration of estimated velocities within an oscillatory interference model

To solve the problem of dead-reckoning or homing several species rely on path integration (Müller and Wehner 1988). Models for grid-cell firing use this idea and integrate 2D velocity signals. Such a 2D velocity

| 8 |

The rat’s orientation ϕ(t) in our case is calculated by temporally integrating all rotational velocities.² Therefore, the rotational velocity has to be estimated in addition to the linear velocity, since it indicates the orientation of the linear velocity with respect to an external frame of reference, e.g. the middle of the cage. Linear and rotational velocity in Eq. (8) are estimated with the template model described in Section 2.5.
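The role of the two estimates in path integration can be made concrete with a short Python sketch. It assumes simple forward-Euler integration at 50 Hz and a heading measured counter-clockwise from the x-axis of the external frame; both choices, as well as the function names, are illustrative assumptions and not taken from Eq. (8).

```python
import math

def integrate_path(v_z, omega_y_deg, fs=50.0, x0=0.0, y0=0.0, heading0_deg=0.0):
    """Euler integration of speeds (cm/s) and yaw rates (deg/s) sampled at
    fs Hz. Returns a list of (x, y, heading_deg) after each step."""
    x, y, heading = x0, y0, math.radians(heading0_deg)
    dt = 1.0 / fs
    path = []
    for v, w in zip(v_z, omega_y_deg):
        # Rotate the forward speed into the external frame, then step.
        x += v * math.cos(heading) * dt
        y += v * math.sin(heading) * dt
        heading += math.radians(w) * dt
        path.append((x, y, math.degrees(heading)))
    return path

def distance_error(p, q):
    """Euclidean distance (cm) between two positions."""
    return math.hypot(p[0] - q[0], p[1] - q[1])
```

Because every step adds the current estimate to the running position, an error in either velocity is carried forward into all later positions, which is the error summation problem discussed above.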

This 2D velocity estimate from Eq. (8) is temporally integrated within the oscillatory interference model (Burgess et al. 2007; Hasselmo 2008):

| 9 |

This model has two oscillations, the somatic oscillation ω and the dendritic oscillation
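To illustrate how an integrated displacement turns into a grid-like firing pattern, the Python sketch below implements one common form of the oscillatory interference model in the style of Burgess et al. (2007) and Hasselmo (2008): a product over three basis directions of the sum of a somatic and a dendritic oscillation, followed by a threshold. This form is an assumption and may differ in detail from Eq. (9); the parameter values are those listed in Table 1, and the function names are hypothetical.

```python
import math

F = 7.38          # Hz, frequency from Table 1
BETA = 0.00385    # s/cm, parameter beta from Table 1
THETA = 1.8       # spiking threshold from Table 1
BASIS_DEG = (0.0, 120.0, 240.0)   # angles of the basis vectors

def grid_activity(t, x, y, f=F, beta=BETA):
    """Product of three soma-dendrite interference terms at time t (s) for
    an integrated displacement (x, y) in cm (assumed model form)."""
    soma = 2.0 * math.pi * f * t
    g = 1.0
    for ang in BASIS_DEG:
        a = math.radians(ang)
        along = x * math.cos(a) + y * math.sin(a)   # displacement along basis
        dend = soma + 2.0 * math.pi * f * beta * along
        g *= math.cos(soma) + math.cos(dend)
    return g

def spikes(t, x, y):
    """True if the activity exceeds the spiking threshold."""
    return grid_activity(t, x, y) > THETA

def grid_spacing(f=F, beta=BETA):
    """Distance between neighbouring firing fields implied by this form."""
    return 2.0 / (math.sqrt(3.0) * f * beta)
```

In this form the spacing scales with 1/(f · β), so lowering the frequency f at fixed β widens the grid. Feeding the model with displacements integrated from estimated rather than true velocities shifts the phase of the dendritic oscillations and thereby blurs the firing fields.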

Table 1.

Parameters and their values set in simulations for the spherical camera model, template model, and oscillatory interference model

| Description of parameter | Value |
|---|---|
| Pre-processing of simulated trajectories ᵃ | |
| Adds points for rotations above (90 ° · 50 Hz) | 4,500 °/s |
| Removes points for linear velocities below (0.05 cm · 50 Hz) | 2.5 cm/s |
| Adds points for linear velocities above (1.2 cm · 50 Hz) | 60 cm/s |
| Environment of a ground plane | |
| Radius of the circular ground platform ᵇ | 100 cm |
| Number of triangles to represent the ground platform | 32 |
| Spherical camera model | |
| Horizontal field of view | 240 ° |
| Vertical field of view | 120 ° |
| Number of sample points in vertical dimension ᶜ | 40 |
| Number of sample points in horizontal dimension ᶜ | 20 |
| Minimum distance for a sample point | D min = 0 cm |
| Maximum distance for a sample point | D max = 1000 cm |
| Eye-height above ground | h = 3.5 cm |
| Tilt angle of the optical axis with respect to ground | γ = 0 ° |
| Template model | |
| Standard deviation of the objective function for the linear velocity v z | σ v = 10 °/s |
| Standard deviation of the objective function for the rotational velocity ω y | σ ω = 25 °/s |
| Interval of rotational velocities | ω y,k ∈ {±4,500 °/s} |
| Samples for rotational velocities | k = 1…451 |
| Interval for linear velocities | v z,j ∈ {2…60 cm/s} |
| Samples for linear velocities | j = 1…117 |
| Oscillatory interference model | |
| Frequency ᵈ | f = 7.38 Hz |
| Parameter ᵉ | β = 0.00385 s/cm |
| Angles for basis vectors | φ1 = 0 °, φ2 = 120 °, φ3 = 240 ° |
| Threshold value for spiking | Θ = 1.8 |

ᵃ File names of the trajectories included in the simulation are ‘Hafting_Fig2c_Trial1’, ‘Hafting_Fig2c_Trial2’, and ‘rat_10925’ from the Moser lab: http://www.ntnu.no/cbm/gridcell. For the simulations we extracted the longest subsequence that contains no not-a-number values.

ᵇ In the simulation the radius of the circular ground platform was extended by 15 cm with respect to the original radius of 85 cm. This extension provides sample points at the outermost boundary of 85 cm and allows for picking up optic flow there.

ᶜ This refers to an image resolution of 40 × 20 pixels or n = 800. Note that image flow may not be acquired for each pixel because of the tilt angle and because an open cage is simulated, see Figs. 2 and 3.

ᵈ Frequency f has been fitted to subthreshold oscillations of entorhinal cells (Giocomo et al. 2007).

ᵉ Parameter β has been fitted to the measured subthreshold oscillations for neurons and their simulated grid cell spacing (Giocomo et al. 2007).

Results

Data on the rat’s visual input, head-body position, and the functions of higher-order cortical visual motion processing are largely unavailable. Therefore, we assume parameter ranges for these unknowns and simulate them. The extraction of optic flow from visual input is modeled by providing analytical visual motion for a spherical camera superimposed with additive Gaussian noise. In the first simulation, the standard deviation σ flow of this Gaussian noise varies between 0 °/frame and 50 °/frame, or 0 °/s and 2,500 °/s assuming a temporal sampling of 50 Hz. Since the position of the rat’s head is tracked in a 2D plane, the tilt angle γ of the head with respect to the ground is not captured by the recording during the experiment. In the second simulation, we therefore assume a range of tilt angles γ varying from –45 ° to +45 °. In the third simulation, the number of flow templates varies between 10 and 568. In the fourth simulation, we vary the sub-threshold frequency f in the oscillatory interference model, which changes the grid spacing. Table 2 provides a summary of parameter values in these simulations.

Table 2.

Parameter values of our model for the simulations

| Simulation type | σ flow (°/frame) | γ (°) | Templates | Frequency (Hz) |
|---|---|---|---|---|
| Flow noise | 0…50 | 0 | 568 | 7.38 |
| Tilt angle | 25 | –45…+45 | 568 | 7.38 |
| Templates | 25 | 0 | 10…568 | 7.38 |
| Subthreshold frequency | 25 | 0 | 568 | 3.87…7.38 |

Error statistics of the estimated linear and rotational velocity

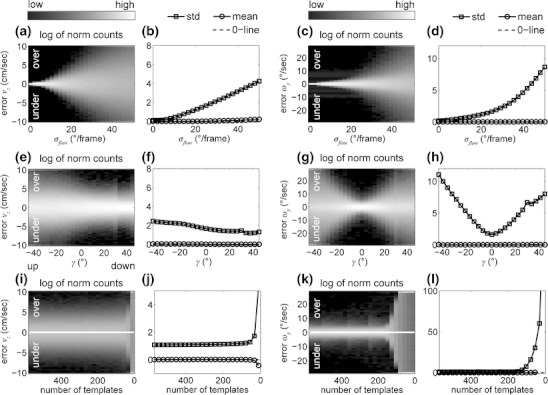

Simulated optic flow deviates from the model of visual image motion due to the Gaussian flow noise. Thus, we expect the template model’s estimates to contain errors. Another source of errors is the finite sampling of the velocity spaces due to interpolation between sample points, although sampling intervals are adapted to the data. But what do the statistics of these errors look like? In three simulations we replay three recorded trajectories to the model and estimate self-motion from optic flow. Figure 4 depicts the error statistics for linear and rotational velocities, pooled over all three trajectories. Errors are defined as the estimate minus the ground-truth value. Thus, errors of positive sign relate to an overestimation and errors of negative sign to an underestimation of velocity. For the first simulation of varying Gaussian flow noise, the standard deviation of the errors in the estimated linear velocity (Fig. 4(a) and (b)) and rotational velocity (Fig. 4(c) and (d)) increases linearly. Varying the tilt also affects the estimation (Fig. 4(e)-(h)). Downward tilts increase the length of azimuth and elevation vectors. This explains the decrease of error for conditions where gaze is directed down toward the ground. The error in rotational velocity estimates is symmetric around 0 ° tilt, where it is smallest (Fig. 4(e)-(h)). The third simulation, in which the number of flow templates is varied, shows large errors in the estimates of linear (Fig. 4(i) and (j)) and rotational velocity (Fig. 4(k) and (l)) for a small number of templates. This error drops to a lower, nearly constant level at around 150 flow templates (Fig. 4(j) and (l)).

Fig. 4.

Errors for self-motion estimation while varying noise, tilt, or the number of flow templates. (a-d) show the errors for varying Gaussian noise, (e-h) for varying tilt angles, and (i-l) for a varying number of templates, with σ flow = 25 °/frame in the latter two cases. Normalized histograms (a, c, e, g, i, k) are individually computed for every parameter value. Normalized counts for each bin are log-enhanced and displayed in gray-values ranging from black to white encoding low and high counts. The standard deviation and mean value of errors are displayed in the curve plots (b, d, f, h, j, l). Note that the intervals for plots (j) and (l) are different from those in previous rows. Legends are printed atop each column. The error statistics include three trajectories.

Position errors for integrating velocity estimates

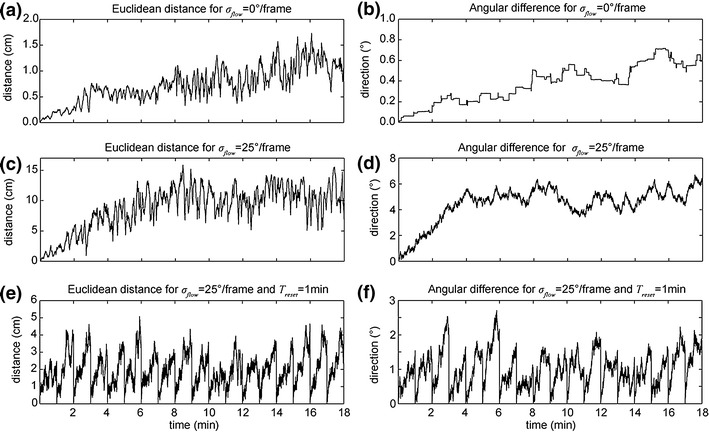

After looking into the error statistics of velocity estimates, we now study the position error that occurs when estimated velocities are temporally integrated. As error measures we report the Euclidean distance and the angle between the ground-truth and estimated position. Figure 5 shows mean distance and angle errors for analytical visual image motion (σ flow = 0 °/frame) computed by using the three trajectories. Errors in distance are within 3 cm and errors in angle within 2 ° when integrating over 18 min or 54,000 individual estimates (Fig. 5(a)-(b)). For Gaussian flow noise of σ flow = 25 °/frame, mean errors stay within 15 cm for the distance and 6 ° for the angle (Fig. 5(c)-(d)). These results look promising and suggest that integration of optic flow into a path integration system can be helpful.
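To make the integration step concrete, the sketch below Euler-integrates a speed and a rotational velocity into a 2D path and compares an estimated path with a ground-truth path. It is an illustration under stated assumptions: the step size of 0.02 s follows from 18 min divided by 54,000 estimates, the function names are hypothetical, and the angle error is read here as the angle between the two position vectors seen from the origin, which may differ from the definition used for Fig. 5.

```python
import numpy as np

def integrate(speed, omega, dt, start=(0.0, 0.0, 0.0)):
    """Euler-integrate speed (cm/s) and rotational velocity (rad/s)."""
    n = len(speed)
    x, y, phi = np.empty(n + 1), np.empty(n + 1), np.empty(n + 1)
    x[0], y[0], phi[0] = start
    for t in range(n):
        x[t + 1] = x[t] + speed[t] * np.cos(phi[t]) * dt
        y[t + 1] = y[t] + speed[t] * np.sin(phi[t]) * dt
        phi[t + 1] = phi[t] + omega[t] * dt
    return x, y, phi

def position_errors(true_x, true_y, est_x, est_y):
    """Euclidean distance (cm) and angle (deg) between true and estimated position."""
    dist = np.hypot(est_x - true_x, est_y - true_y)
    ang = np.arctan2(est_y, est_x) - np.arctan2(true_y, true_x)
    ang = (ang + np.pi) % (2.0 * np.pi) - np.pi  # wrap to [-180, 180) degrees
    return dist, np.degrees(ang)

dt = 18 * 60 / 54000                      # 0.02 s per estimate
true_speed = np.full(100, 10.0)           # hypothetical ground truth: 10 cm/s, straight
est_speed = np.full(100, 11.0)            # hypothetical overestimate by 1 cm/s
zero_turn = np.zeros(100)

tx, ty, _ = integrate(true_speed, zero_turn, dt)
ex, ey, _ = integrate(est_speed, zero_turn, dt)
dist_err, ang_err = position_errors(tx, ty, ex, ey)
print(dist_err[-1], ang_err[-1])
```

Because the errors are integrated, even a small constant bias in speed grows linearly in the distance error, which is why the accumulated error over the full 18 min is the informative quantity.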

Fig. 5.

Position errors of temporally integrated estimates stay within (a) 3 cm Euclidean distance error and (b) 2 ° angle error for analytical image motion and within (c) 15 cm distance error and (d) 6 ° angle error for simulated optic flow that includes Gaussian noise with σ flow = 25 °/frame. (e and f) show the distance and angle error for estimates from optic flow with Gaussian flow noise σ flow = 25 °/frame, where the estimate is reset to the ground-truth position and orientation every T reset = 1 min. Note the difference in intervals for the y-axis between panels.

Grid scores for varying Gaussian flow noise, tilt angles, and number of templates

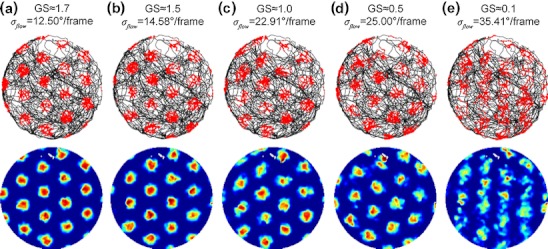

What is the effect of the errors in the velocity estimates on grid cell firing patterns? Estimated velocities are integrated into the velocity controlled oscillatory interference model that was reviewed in Section 2.6. Grid cell firing patterns are shown in Fig. 6. To quantify the grid-likeness of these patterns, a grid score (GS) measure is computed (Langston et al. 2010; their supplement material on page 11). This GS lies within the interval [–2, +2]. Intuitively, the GS is high if the spike pattern matches itself for rotations of 60 ° and multiples of 60 ° (hexagonal grid), and the GS is low if the pattern matches itself for rotations of 30 ° and multiples of 30 ° (e.g. a square grid). We illustrate this GS measure in Fig. 6 by showing examples of grids of different scores. Note that for this data 1.7 is the highest GS value that could be achieved.
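The intuition behind the GS can be sketched in code. The version below assumes one common formulation of a rotational grid score: the minimum correlation of a map with its copies rotated by 60 ° and 120 °, minus the maximum correlation for rotations of 30 °, 90 °, and 150 °, which is bounded by [–2, +2]. Whether this matches every detail of the cited measure (for example the use of a spatial autocorrelogram or an annulus mask) is an assumption. The helpers `rotate_nn` and `cosine_pattern` are hypothetical and use simple nearest-neighbor rotation.

```python
import numpy as np

def rotate_nn(img, deg):
    """Rotate a square image about its center (nearest-neighbor sampling)."""
    n = img.shape[0]
    c = (n - 1) / 2.0
    y, x = np.mgrid[0:n, 0:n]
    t = np.deg2rad(deg)
    xs = np.cos(t) * (x - c) - np.sin(t) * (y - c) + c
    ys = np.sin(t) * (x - c) + np.cos(t) * (y - c) + c
    xi, yi = np.rint(xs).astype(int), np.rint(ys).astype(int)
    valid = (xi >= 0) & (xi < n) & (yi >= 0) & (yi < n)
    out = np.full(img.shape, np.nan)
    out[valid] = img[yi[valid], xi[valid]]
    return out

def grid_score(pattern):
    """min(corr at 60, 120 deg) - max(corr at 30, 90, 150 deg) inside a central disc."""
    n = pattern.shape[0]
    c = (n - 1) / 2.0
    y, x = np.mgrid[0:n, 0:n]
    disc = (x - c) ** 2 + (y - c) ** 2 <= c ** 2

    def corr(deg):
        rot = rotate_nn(pattern, deg)
        ok = disc & ~np.isnan(rot)
        return np.corrcoef(pattern[ok], rot[ok])[0, 1]

    return min(corr(60), corr(120)) - max(corr(30), corr(90), corr(150))

def cosine_pattern(n, period, angles_deg):
    """Sum of cosine gratings centered on the image (toy test pattern)."""
    c = (n - 1) / 2.0
    y, x = np.mgrid[0:n, 0:n]
    k = 2.0 * np.pi / period
    return sum(np.cos(k * ((x - c) * np.cos(a) + (y - c) * np.sin(a)))
               for a in np.deg2rad(angles_deg))

print(grid_score(cosine_pattern(101, 20.0, [0, 60, 120])))  # hexagonal toy pattern
print(grid_score(cosine_pattern(101, 20.0, [0, 90])))       # square toy pattern
```

In this toy setting the hexagonal pattern yields a clearly positive score and the square pattern a clearly negative one, which mirrors the verbal description of the measure.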

Fig. 6.

Examples for firing patterns of varying grid score (GS). The first row shows the different trajectories (black traces) with superimposed locations where a spike occurred (red dots). In the second row these spikes are registered in a 201 × 201 pixels image and are divided by the occupancy in these locations. Both registered spikes and occupancy are convolved with a square Gaussian filter of nine pixels side length and two pixels standard deviation. (a) A moderate amount of Gaussian flow noise (σ flow = 12.5 °/frame) leads to a GS of 1.7. Increasing the amount of noise gives a GS of 1.5 in (b), 1.0 in (c), 0.5 in (d), and 0.1 in (e). All values are for a reset interval of T reset = 16.67 min, and the values of Gaussian flow noise σ flow are given atop each trajectory plot. The simulation uses the trajectory ‘Hafting_Fig2c_Trial1’ from Hafting et al. (2005). Note that the response patterns in the lower row are individually scaled to min (blue) and max (red) values of spikes per second. Regions plotted in white have never been visited, in this example the gap in direction “North”.
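The rate-map construction described in the caption can be sketched as follows. This is a minimal illustration assuming a 201 × 201 grid and a separable 9-tap Gaussian with a standard deviation of two pixels; the function names, the `extent` argument, and the rule for marking unvisited bins (smoothed occupancy equal to zero) are assumptions rather than details taken from the original implementation.

```python
import numpy as np

def gaussian_kernel_1d(length=9, sigma=2.0):
    """Normalized 1D Gaussian; applied along both axes it acts as a 9 x 9 filter."""
    x = np.arange(length) - (length - 1) / 2.0
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def smooth2d(img, kernel):
    """Separable 2D convolution (zero padding at the borders)."""
    rows = np.apply_along_axis(np.convolve, 1, img, kernel, mode="same")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="same")

def rate_map(pos_xy, spike_xy, dt, extent, bins=201):
    """Spikes per second: smoothed spike counts divided by smoothed occupancy time."""
    edges = np.linspace(extent[0], extent[1], bins + 1)
    occ, _, _ = np.histogram2d(pos_xy[:, 0], pos_xy[:, 1], bins=[edges, edges])
    spk, _, _ = np.histogram2d(spike_xy[:, 0], spike_xy[:, 1], bins=[edges, edges])
    kernel = gaussian_kernel_1d()
    occ_s = smooth2d(occ * dt, kernel)   # seconds spent per bin
    spk_s = smooth2d(spk, kernel)        # spikes per bin
    rate = np.full(occ_s.shape, np.nan)  # NaN marks never-visited regions
    visited = occ_s > 0
    rate[visited] = spk_s[visited] / occ_s[visited]
    return rate

# Hypothetical data: random positions in a 100 cm wide arena, every 10th sample spikes.
rng = np.random.default_rng(0)
positions = rng.uniform(-50.0, 50.0, size=(5000, 2))
spikes = positions[::10]
rmap = rate_map(positions, spikes, dt=0.02, extent=(-50.0, 50.0))
print(rmap.shape)
```

Smoothing numerator and denominator separately, as the caption states, avoids dividing by raw occupancy counts that are zero or very small in rarely visited bins.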

For the spike pattern in Fig. 6(a) we estimated the grid distance from the data by clustering the spikes using the k-means algorithm (10 retrials). The mean between-cluster distance for directly neighboring vertices is ≈ 39 cm (see footnote 3), and the theoretical value is calculated as

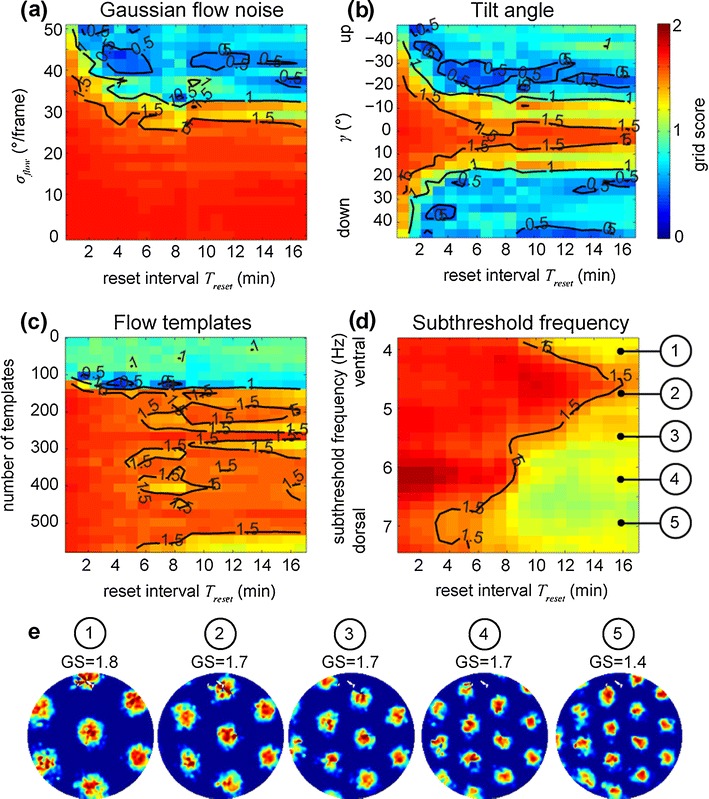

Does a reset mechanism that re-locates the rat with a certain periodicity T reset help to improve the grid cell firing? Besides optic flow, other cues such as visual landmark cues, somatosensory cues, or vestibular cues are available to the rat. Therefore, a reset mechanism that uses these cues is included in the simulation. This mechanism validates the flow-integrated position against the position estimates from other cues. In the simulation the velocity-integrated position is corrected to its ground-truth position using the recorded trajectory. This correction takes place after each temporal period T reset (see footnote 4). In the simulation we vary this temporal period between 0.83 min and 16.67 min in steps of 0.83 min. This gives a 3D plot reporting GS over two varying parameters. Figure 7 shows these 3D plots, using a color code for the GS as third dimension. For varying Gaussian noise (y-axis) and varying reset intervals (x-axis), a transition from high GSs (>1) to low GSs (<1) occurs at a standard deviation of ≈ 35 °/frame, largely independent of the reset interval T reset (Fig. 7(a)). For a tilt angle γ ≈ 0 °, GSs are higher than 1.5 (Fig. 7(b)), mainly due to the low error in rotational velocity (compare with Fig. 4(g)). Grid scores for around 150 flow templates change rapidly from above one to below one (Fig. 7(c)). Different reset intervals have only a small effect, which is visible from the drop being aligned with the x-axis (the reset interval).
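The reset mechanism can be illustrated with a short sketch: velocities are integrated as before, and every `reset_steps` samples the estimate is overwritten by the ground-truth pose. The function name, the `phase` argument (mirroring the onset offsets mentioned in footnote 4), and the toy trajectory are hypothetical; this is a sketch under those assumptions, not the original simulation code.

```python
import numpy as np

def integrate_with_reset(speed, omega, truth, dt, reset_steps, phase=0):
    """Euler path integration with periodic correction to ground truth.

    truth: array of shape (n + 1, 3) holding ground-truth x, y, phi.
    """
    n = len(speed)
    est = np.empty((n + 1, 3))
    est[0] = truth[0]
    for t in range(n):
        x, y, phi = est[t]
        est[t + 1] = (x + speed[t] * np.cos(phi) * dt,
                      y + speed[t] * np.sin(phi) * dt,
                      phi + omega[t] * dt)
        if (t + 1 - phase) % reset_steps == 0:
            est[t + 1] = truth[t + 1]  # correction from other cues
    return est

# Toy example: straight run at 10 cm/s, estimated speed biased to 12 cm/s.
n, dt = 30, 0.1
truth = np.zeros((n + 1, 3))
truth[:, 0] = 10.0 * dt * np.arange(n + 1)
est = integrate_with_reset(np.full(n, 12.0), np.zeros(n), truth, dt, reset_steps=10)
print(np.max(np.abs(est[:, 0] - truth[:, 0])))
```

With a sampling step of 0.02 s, the shortest reset period of 0.83 min would correspond to roughly 2,500 integration steps between corrections, so the error accumulated within one period bounds the position error.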

Fig. 7.

In most cases grid-cell firing could be achieved by integrating optic flow input when assuming a grid score (GS) greater than one. (a) Shows the GS in color (blue 0 and red 2) for different reset intervals (x-axis) and Gaussian flow noise (y-axis). A legend for the color code is printed on the right side. Black contour lines help to identify different regions with similar GS. Subsequent plots use the same color coding and show the GS for a varying tilt angle in (b), for a varying number of flow templates in (c), and for a varying frequency in (d). (e) Examples of firing patterns from the simulation in (d), as indicated by the numbers in circles, for the trajectory ‘Hafting_Fig2c_Trial1’ from Hafting et al. (2005). Note that these grid scores differ from those shown in (d), which are mean values computed by using the three trajectories as noted in footnote ‘a’ in Table 1. For this plot we took the absolute value of the GS before computing the mean for different phases and trajectories.

Varying the frequency parameter of the oscillatory interference model influences the grid spacing. For instance, for f = 7.38 Hz the grid spacing is 41 cm and for f = 3.87 Hz the grid spacing is 77 cm. This varying grid spacing shows an effect coupled to the reset interval T reset (Fig. 7(d)). For a reset interval above T reset = 12 min and a frequency below 5.5 Hz the GS is ≈ 1. This corresponds to the upper right corner in the 2D plot in Fig. 7(d). For frequencies above 5.5 Hz and a reset interval above ≈ 9 min the GS is ≈ 1. Other regions have a GS > 1. Our interpretation is that for low frequencies only a few vertices (around seven) are marginally present and, thus, no full clear cell of a hexagonal grid, consisting of seven vertices, is formed for all trajectories (although it is in the example trajectory shown in Fig. 7(e) 1). This partial occurrence of fewer than seven vertices reduces the GS. For slightly higher frequencies of ≈ 4.5 Hz one full cell of the hexagonal grid is present and, thus, in these cases a higher GS of ≈ 1.5 is achieved. For a further increased frequency of >5 Hz, multiple additional firing fields of the grid are present (Fig. 7(e) 3). All these firing fields have to be accurate and this reduces the GS to ≈ 1.
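As a quick arithmetic check on the two quoted parameter pairs, the product of frequency and grid spacing can be compared. The snippet below only uses the numbers stated above; reading the result as an approximately inverse relation between frequency and spacing is an inference from these two data points, not a formula taken from this section.

```python
# (frequency in Hz, grid spacing in cm) as quoted in the text
pairs = [(7.38, 41.0), (3.87, 77.0)]

products = [f * spacing for f, spacing in pairs]
for (f, spacing), p in zip(pairs, products):
    print(f"f = {f} Hz, spacing = {spacing} cm, product = {p:.2f} Hz*cm")
```

Both products are close to 300 Hz·cm and differ by less than 2 %, which is consistent with the grid spacing scaling roughly as the inverse of the frequency.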

Discussion

We investigated, in a modeling study, the role of optic flow available to rats and its possible influence on grid cell firing. Our model uses a spherical camera to simulate the large visual field of rats and models the sensed optic flow as analytical visual image motion with superimposed Gaussian noise. This sensed flow is compared to flow templates for linear and rotational velocities that are sampled in intervals according to the statistics of these velocities in recorded rat trajectories. Maximum responding templates and their neighbors are used to estimate linear and rotational velocity. These estimates are fed into the velocity controlled oscillator model to produce grid cell firing. Grid scores from this overall model using optic flow as input are above 1.5 even for Gaussian flow noise with a standard deviation up to 35 °/frame. In addition, our model requires at least ≈ 100 flow templates to achieve grid scores above one (GS > 1). The tilt angle of the simulated spherical camera with respect to the ground affects the grid score. Overall, our modeling study suggests optic flow as a possible input to grid cells besides other cues.

Template models for self-motion estimation using optic flow

Various template models have been suggested for self-motion estimation from flow. Perrone (1992) suggested a template model that estimates, first, rotational velocities from the input flow and, second, subtracts the flow constructed by these estimated rotational velocities from the input to estimate the linear velocities of self-motion. Later, Perrone and Stone (1994) modified this model by setting up flow templates for common fixating self-motions for human locomotion. Lappe and Rauschecker (1993) used a subspace of flow constraints that is independent of the depth and rotational velocities. The remaining constraints that depend on linear velocity only are used to set up flow templates. In contrast to these models, we simplified the parameter space by restricting the rat’s body motion to curvilinear path motion that is composed of linear velocity parallel to the ground and a rotational velocity normal to the ground. This reduces the degrees of freedom from six to two that are estimated independently by two 1D spaces of flow templates: One for linear velocities and one for rotational velocities. Similar to Perrone’s (1992) template model, the accuracy of self-motion estimation in the presence of flow noise increases with an increasing number of samples, given that the noise for samples is independent (Perrone 1992; his Fig. 5). For that reason we did not include the number of sampled flow vectors as a parameter of our simulations.

Although sharing this behavioral similarity, our template model is conceptually different from prior ones. It uses a spherical camera model instead of a pinhole camera. It resolves the scaling invariance between linear velocity or speed and absolute depth by assuming points of flow sampled from a ground plane and knowing the distance and angle of the camera with respect to that plane. Our model extends prior ones by allowing for variable tilt of the camera that leaves the direction of self-motion unaffected. In our model a separation of estimating linear and rotational velocity is achieved by multiplying the flow equation by a vector orthogonal to the rotation induced flow (see Eq. (6)). This separation leads to a speed-up of simulation times and requires less storage—or fewer “template neurons”. Rather than testing all combinations of linear and rotational velocities that would lead to a squared complexity in match calculation and storage, we tested linear and rotational velocities separately. This required only linear complexity in the number of samples in each dimension. This “computational trick” was applied for the convenience of the simulations rather than assuming the same trick is being applied in the rat visual system. In sum, our model provides several extensions compared to prior template models.
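The idea of estimating one velocity from a 1D space of templates, using the maximally responding template and its neighbors, can be illustrated with a toy example. The sketch below is not the flow model of this paper: it assumes that the observed flow is simply a fixed pattern (`basis`) scaled by the velocity, uses a Gaussian match function with a hypothetical width `sigma`, and interpolates with a response-weighted mean. All names are illustrative.

```python
import numpy as np

def estimate_1d(observed, basis, samples, sigma=0.1):
    """Estimate a scalar velocity from a 1D bank of templates.

    Template i is samples[i] * basis; the estimate is the response-weighted
    mean over the best-matching template and its direct neighbors.
    """
    templates = samples[:, None] * basis[None, :]
    sq_dist = np.sum((templates - observed[None, :]) ** 2, axis=1)
    resp = np.exp(-sq_dist / (2.0 * sigma ** 2))
    i = int(np.argmax(resp))
    lo, hi = max(i - 1, 0), min(i + 1, len(samples) - 1)
    weights = resp[lo:hi + 1]
    return float(np.sum(weights * samples[lo:hi + 1]) / np.sum(weights))

basis = np.ones(50) / np.sqrt(50.0)      # hypothetical unit-norm flow pattern
samples = np.linspace(0.0, 1.0, 11)      # 11 sampled velocities
observed = 0.37 * basis                  # noise-free flow for a true velocity of 0.37
estimate = estimate_1d(observed, basis, samples)
print(estimate)
```

With two such independent 1D banks of N and M samples, N + M template matches are needed instead of the N · M matches of a joint 2D bank, which is the linear versus squared complexity referred to above.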

Models for grid cell firing integrate linear 2D velocities

Models for grid cell firing temporally integrate a 2D velocity signal (Burgess et al. 2007; Hasselmo 2008; Burak and Fiete 2009; Fuhs and Touretzky 2006; Mhatre et al. 2012). None of these models elaborates on the details of estimating this 2D velocity signal, other than assuming that this signal is split into a head direction signal, assumed to be provided by the head direction cell system, and a linear speed signal, assumed to be provided by the vestibular system. A realistic model would estimate the 2D velocity, which in turn introduces errors that could be characterized by a noise model. However, noise is not assumed in the velocity signal in models of grid cell firing. Instead, noise is assumed in the neural population of attractor models (Fuhs and Touretzky 2006; Burak and Fiete 2009) or in the phase of the oscillatory interference models (Burgess et al. 2007; Zilli et al. 2010).

In our study we elaborated on the modeling of the velocity signal, suggesting optic flow as an alternative cue besides the traditional view that velocities are supported by the vestibular system. This approach naturally leads to errors in the 2D velocity signal, as the velocity signal is estimated from optic flow. Our analysis of errors shows that most parameter settings in our model of self-motion estimation and optic flow allow for path integration that is accurate enough to produce the grid cell’s typical firing pattern.

Many species use optic flow for navigation and depth estimation

Optic flow influences behavior in many species. Pigeons bob their heads during locomotion, which is mainly visually driven. This bobbing supports the stabilization of vision during the period of forward movement. The forward movement is compensated by a backward motion of the head caused by the bird’s neck, zeroing its retinal motion (Friedman 1975). Behavioral data show that honeybees use image motion for navigation in a corridor (Srinivasan et al. 1991). Mongolian gerbils use motion parallax cues and looming cues to estimate the distance between two platforms when their task is to jump from one platform to the other (Ellard et al. 1984). For humans, Gibson (1979; page 111 ff.) highlights the relevance of optic flow during aircraft landing and more generally during locomotion. Humans can judge heading from translational flow within the accuracy of one degree of visual angle (Warren et al. 1988). For flow including simulated or anticipated eye-movements the accuracy is within two degrees of visual angle (Warren and Hannon 1990). Although the resolution of rat vision is poorer than that of humans, we suggest that rats are able to register large flow field motion as generated by self-motion in a stationary environment. In turn, such flow fields encode the rat’s self-motion, which motivates our hypothesis that rats might use optic flow among other cues.

Studies using macaque monkeys reveal the underlying neural mechanisms of optic flow processing and estimation of self-motion. Neurons in area MST show selectivity for the focus of expansion (Duffy and Wurtz 1995; Duffy 1998) and for spiral motion patterns induced by a superposition of forward motion and roll motion of the sensor (Graziano et al. 1994). The selectivity for the focus of expansion is shifted by eye-movements (Bradley et al. 1996). In terms of connectivity, these neurons in area MST integrate local planar motion signals over the entire visual field by spatially distributed and dynamic interactions (Yu et al. 2010). Furthermore, MST neurons responded more strongly if monkeys had a visual steering task compared to passive viewing. This suggests that task driven activation can shape MST neuron responses (Page and Duffy 2007). The activation of MST neurons is influenced by objects moving independently in front of the background (Logan and Duffy 2005). Electrical stimulation of MST neurons during a visual navigation task affected the monkeys’ decision performance, typically leading to random decisions, and these effects were amplified in the presence of eye movements. This suggests that area MST is involved in self-motion estimation with a correction for eye-movements (Britten and Wezel 2002). An overview of mechanisms in area MST, its influence on steering, and the solution of the rotation problem (the segregation of translation and rotation) is given by Britten (2008). No analogue to MST cells has been reported for rats so far. But monkey physiology in particular suggests experimental conditions to probe the optic flow system. Large field motion stimuli of expansion or contraction with a shifted focus of expansion or laminar flows are typical probes (see Figs. 2 and 3). In our model we assumed cells tuned to these flows for the estimation of linear and rotational velocity.

Optic flow cues might not be limited to influence only grid cells

Several cell types could profit from optic flow information. Border cells or boundary vector cells that respond when the animal is close to a border with or without wall (Burgess and O'Keefe 1996; Solstad et al. 2008; Lever et al. 2009; Barry et al. 2006) could receive input from hypothetical cells detecting self-motion patterns which are sensitive to different distributions of image motion speeds. In a related study we propose a model for boundary vector cell firing based on optic flow for rats in cages with and without walls (Raudies and Hasselmo 2012). In the presence of walls the challenge is to achieve a flow-based segmentation of walls from ground, as samples from these different surfaces play behaviorally different roles, as we suggest. Flow samples from the ground allow for the estimation of linear velocity, and thus could facilitate grid cell firing. In contrast, flow samples from walls do not encode velocity above ground; rather, they allow for a distance and direction estimate of walls if the velocity above ground is known. Thus, flow samples from the ground combined with flow samples from walls could facilitate the firing of boundary vector cells that fire dependent upon the boundaries of the environment. Segmentation of flow can also be used for the detection of physical drop-offs at the boundaries of an environment (which activate boundary vector cells). This is challenging in simulations if the rat is far away from the drop-off, because in that case the flow close to the horizon at the drop-off has a small magnitude and the discontinuity in the flow between the edge of the drop-off and the background is hard to detect. In our related study we propose a mechanism for the detection of drop-offs and the conversion into distance estimates of the drop-off. This model builds upon the spherical model proposed here but is different in mechanisms and modeled cell populations. Together, our model of grid cell firing in this paper and the modeling of boundary vector cell firing in the related study support the hypothesis that the firing of these different cells might be facilitated by optic flow in a multi-modal integration with other cues.

Head direction cells (‘compass cells’) could update their direction specific firing in a 2D plane, e.g. for north, irrespective of the rat’s body or head position and rotation by integrating the estimated rotational velocity from optic flow (Taube et al. 1990). Grid cells (Hafting et al. 2005) and place cells (O’Keefe 1976) could fire based on the temporal integration of estimated linear and rotational velocity from optic flow, the latter is suggested by our model.

Self-localization by cues other than optic flow

Experiments show the importance of vestibular and sensorimotor input to head direction cells, which are part of a neural network for rat navigation. Head direction cells in the anterior thalamic nucleus depend on vestibular input, as lesioning the vestibular system drastically reduces the coherence in directional firing of these cells (Stackman and Taube 1997; their Figs. 1 and 4). Selectivity of these head direction cells could be generated by angular head velocity sensitive cells in the lateral mammillary nuclei (Stackman and Taube 1997; Basset and Taube 2001). Persistent directional firing of head direction cells in the anterior dorsal thalamic nucleus and postsubiculum was not maintained while the rat was moved in darkness into another room, a transfer that excludes sensorimotor and visual cues. This shows that vestibular input is not sufficient to maintain firing of head direction cells in all cases (Stackman et al. 2003).

Various scenarios show the importance of landmarks, either directly for behavior or for the firing of cell types. Over-trained rats in a Morris water maze with artificially generated water currents rely on landmarks rather than path integration of sensorimotor cues to find the hidden platform (Moghaddam and Bures 1997). Head direction cells (Taube et al. 1990) receive vestibular signals, visual motion signals, and signals about landmark locations. Furthermore, if visual motion and landmark cues contradict each other, the head direction cells often average signals from both cues (Blair and Sharp 1996). The firing patterns of grid cells rotate with landmarks; thus, grid cell firing is locked to visual landmarks (Hafting et al. 2005; their Fig. 4). If idiothetic cues are in conflict with landmarks, place cells rely largely on landmarks for small mismatches, and the hippocampus forms a new representation for large mismatches (Knierim et al. 1998). Another experiment changes the size of the cage while running the experiment. Grid cell firing showed a rescaling to the environment, leading to a deformation of the hexagonal grid structure. This rescaling is slowly reduced with experience of the newly sized environment, and the grid cell firing pattern is restored to its intrinsic hexagonal structure. This suggests that rats rely on visual cues such as boundaries in familiar environments and that grid cell firing gets deformed if these cues are shifted (Barry et al. 2007). In sum, the vestibular system and visual landmarks play a major role in driving cells upstream of or close to entorhinal cortex and the grid cell system.

Challenges to our flow-hypotheses

The results of our model simulations motivate two hypotheses. First, optic flow among other cues might contribute to the firing of grid cells. This hypothesis can be tested by using a virtual environment setup, similar to the one used by Harvey et al. (2009). In such a setup, the animal is running on a trackball and the corresponding video signal is shown on a display in front of the animal. In this case visual cues that lead to optic flow can be manipulated in two ways. First, optic flow cues can be gradually removed while the animal is still provided with sensorimotor cues. According to our model, less flow would lead to larger estimation errors for linear and rotational body velocities. For our multi-modal integration paradigm this would lead to a decrease in grid score for grid cells. Second, the update of the video can be made inconsistent with the movement of the animal on the trackball, e.g. by displaying a simulated backward motion instead of a simulated forward motion. In this case we again expect a decrease in grid score, as firing induced by optic flow becomes inconsistent with firing induced by, e.g., sensorimotor signals. Our second hypothesis is that the GS depends on the quality of the flow signal. From our simulation results we infer that for up to 30 °/frame standard deviation of Gaussian noise the grid score would still be about one for cells in the experiment, so they would still be classified as grid cells. This can be tested by superimposing noise on the video displayed to the rat, which in turn should affect the grid score of grid cells. Our hypothesis depends largely on the quality of optic flow that is available to the rat.

Optic flow amongst other cues

Optic flow directly provides linear and rotational velocity of the rat’s motion. Note that our model only simulated body motion, not head or eye motion. These velocity estimates for body motion can be temporally integrated to result in self-position information with respect to a reference location. For the same position estimates linear and angular acceleration signals from the vestibular system would have to be temporally integrated twice. Temporal integration at two stages introduces an additional source of error accumulation in comparison to a single temporal integration used for optic flow. Other cues, like visual landmarks, provide a self-position signal without temporal integration and are in that sense at an advantage compared to optic flow. Also optic flow is not available in the dark or in environments that do not provide enough texture to pick up flow. In these cases it becomes very likely that sensorimotor signals and vestibular signals are used for path integration. On the other hand, visual input might cause the observed compression and expansion effects of grid cell firing fields as a result of expanding or shrinking the rat’s cage during the experiment. For instance, optic flow that is generated from an altered cage size would be different and can indicate an expansion or contraction. Optic flow can be used for path integration during the initial phase of exploration before landmarks are learned and the environment is mapped out. In sum, optic flow might be one cue in the multi-modal integration of cues that lead to the firing of grid cells in cases where flow is available by texture together with sufficient lighting.

Acknowledgements

The constructive criticism of two reviewers of the manuscript is gratefully acknowledged. All authors are supported in part by CELEST, a NSF Science of Learning Center (NSF OMA-0835976). FR and EM acknowledge support from the Office of Naval Research (ONR N00014-11-1-0535). MEH acknowledges support from the Office of Naval Research (ONR MURI N00014-10-1-0936). Thanks to the Moser Lab for providing their data online at http://www.ntnu.no/cbm/gridcell.

Open Access

This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

Footnotes

1. Note that these references use different definitions for their coordinate system.

2. As an alternative this signal φ(t) can be provided by the head direction system. Such a signal has been used in Eq. (4) in cases where the ground’s normal vector depends on head orientation. This is the case for non-zero tilt angles.

3. Several runs of the k-means algorithm can give slightly different values due to a randomized initialization of the initial cluster centers.

4. To avoid a correlation effect between reset times and specific velocity configurations in the data, we evaluate ten different phase values, the onset times of the reset mechanism with respect to time zero that are regularly distributed within the duration of two resets.

5. A Matlab (R) implementation of the model is available at http://cns.bu.edu/~fraudies/EgoMotion/EgoMotion.html

References

- Adams AD, Forrester JM. The projection of the rat's visual field on the cerebral cortex. Experimental Psychology. 1968;53:327–336. doi: 10.1113/expphysiol.1968.sp001974.

- Barry C, Lever C, Hayman R, Hartley T, Burton S, O'Keefe J, Jeffery K, Burgess N. The boundary vector cell model of place cell firing and spatial memory. Rev Neuroscience. 2006;17:71–97. doi: 10.1515/revneuro.2006.17.1-2.71.

- Barry Y, Hayman R, Burgess N, Jeffery KJ. Experience-dependent rescaling of entorhinal grids. Nature Neuroscience. 2007;10(6):682–684. doi: 10.1038/nn1905.

- Basset JP, Taube JS. Neural correlates for angular head velocity in the rat dorsal tegmental nucleus. Journal of Neuroscience. 2001;21:5740–5751. doi: 10.1523/JNEUROSCI.21-15-05740.2001.

- Blair HT, Sharp PE. Visual and vestibular influences on head-direction cells in the anterior thalamus of the rat. Behavioral Neuroscience. 1996;110(4):643–660. doi: 10.1037//0735-7044.110.4.643.

- Burgess N, O'Keefe J. Neuronal computations underlying the firing of place cells and their role in navigation. Hippocampus. 1996;6:749–62. doi: 10.1002/(SICI)1098-1063(1996)6:6<749::AID-HIPO16>3.0.CO;2-0.

- Bradley DC, Maxwell M, Andersen RA, Banks MS, Shenoy KV. Mechanisms of heading perception in primate visual cortex. Science. 1996;273:1544–1547. doi: 10.1126/science.273.5281.1544.

- Britten KH. Mechanisms of self-motion perception. Annual Review in Neuroscience. 2008;31:389–401. doi: 10.1146/annurev.neuro.29.051605.112953.

- Britten KH, Wezel RJA. Area MST and heading perception in macaque monkeys. Cerebral Cortex. 2002;12:692–701. doi: 10.1093/cercor/12.7.692.

- Brandon MP, Bogaard AR, Libby CP, Connerney MA, Gupta K, Hasselmo ME. Reduction of theta rhythm dissociates grid cell spatial periodicity from directional tuning. Science. 2011;332:595–599. doi: 10.1126/science.1201652.

- Bruss A, Horn BKP. Passive navigation. Computer Vision, Graphics, and Image Processing. 1983;21:3–20.

- Burak Y, Fiete IR. Accurate path integration in continuous attractor network models of grid cells. PLoS Computational Biology. 2009;5(2):1–16. doi: 10.1371/journal.pcbi.1000291.

- Burgess N, Barry C, O'Keefe J. An oscillatory interference model of grid cell firing. Hippocampus. 2007;17:801–812. doi: 10.1002/hipo.20327.

- Burne RA, Parnavelas JG, Lin C-S. Response properties of neurons in the visual cortex of the rat. Experimental Brain Research. 1984;53:374–383. doi: 10.1007/BF00238168.

- Calow D, Krüger N, Wörgötter F. Statistics of optic flow for self-motion through natural scenes. In: Ilg UJ, Blüthoff HH, Mallot HA, editors. Proceedings of dynamic perception. Berlin: IOS, Akademische Verlagsgesellschaft Aka GmBH; 2004. pp. 133–138.

- Collet TS, Udin SB. Frogs use retinal elevation as a cue to distance. Journal of Comparative Physiology A. 1988;163:677–683. doi: 10.1007/BF00603852.

- Coogan TA, Burkhalter A. Hierarchical organization of areas in rat visual cortex. Journal of Neuroscience. 1993;13(9):3749–3772. doi: 10.1523/JNEUROSCI.13-09-03749.1993.

- Duffy CJ, Wurtz RH. Response of monkey MST neurons to optic flow stimuli with shifted centers of motion. Journal of Neuroscience. 1995;15(7):5192–5208. doi: 10.1523/JNEUROSCI.15-07-05192.1995.

- Duffy CJ. MST neurons respond to optic flow and translational movement. Journal of Neurophysiology. 1998;80:1816–1827. doi: 10.1152/jn.1998.80.4.1816.

- Ellard CG, Goodale MA, Timney B. Distance estimation in the Mongolian gerbil: the role of dynamic depth cues. Behavioural Brain Research. 1984;14:29–39. doi: 10.1016/0166-4328(84)90017-2.

- Fellman D, Essen DV. Distributed hierarchical processing in the primate visual cortex. Cerebral Cortex. 1991;1:1–47. doi: 10.1093/cercor/1.1.1-a.

- Fermüller C, Aloimonos Y. Direct perception of three-dimensional motion from patterns of visual motion. Science. 1995;270:1973–1976. doi: 10.1126/science.270.5244.1973.

- Friedman MB. Visual control of head movements during avian locomotion. Nature. 1975;255:67–69. doi: 10.1038/255067a0.

- Fuhs MC, Touretzky DS. A spin glass model of path integration in rat medial entorhinal cortex. Journal of Neuroscience. 2006;26(16):4266–4276. doi: 10.1523/JNEUROSCI.4353-05.2006.

- Gibson JJ. The ecological approach to visual perception. Boston: Houghton Mifflin; 1979.

- Giocomo LM, Zilli EA, Fransén E, Hasselmo ME. Temporal frequency of subthreshold oscillations scales with entorhinal grid cell firing field spacing. Science. 2007;315:1719–1722. doi: 10.1126/science.1139207.

- Girman SV, Sauve Y, Lund RD. Receptive field properties of single neurons in rat primary visual cortex. Journal of Neurophysiology. 1999;82(1):301–311. doi: 10.1152/jn.1999.82.1.301.

- Goldstein H, Poole C, Safko J. Classical mechanics. 3rd ed. Addison Wesley; 2001.

- Graziano MSA, Andersen RA, Snowden RJ. Tuning of MST neurons to spiral motions. Journal of Neuroscience. 1994;14(1):54–67. doi: 10.1523/JNEUROSCI.14-01-00054.1994.

- Hafting T, Fyhn M, Molden S, Moser M-B, Moser IM. Microstructure of a spatial map in the entorhinal cortex. Nature. 2005;436:801–806. doi: 10.1038/nature03721.

- Harvey CD, Collman F, Dombeck DA, Tank DW. Intracellular dynamics of hippocampal place cells during virtual navigation. Nature. 2009;461:941–946. doi: 10.1038/nature08499.

- Hasselmo ME. Grid cell mechanisms and function: contributions of entorhinal persistent spiking and phase resetting. Hippocampus. 2008;18:1213–1229. doi: 10.1002/hipo.20512.

- Heeger D, Jepson A. Subspace methods for recovering rigid motion, I: algorithm and implementation. International Journal of Computer Vision. 1992;7(2):95–117.

- Horn BKP. Robot vision. Cambridge: MIT Press, McGraw-Hill Book Company; 1986. [Google Scholar]

- Kanatani K. 3-D Interpretation of optical-flow by renormalization. International Journal of Computer Vision. 1993;11(3):267–282. [Google Scholar]

- Kinkhabwala AA, Aronov D, Tank DW. The role of idiothetic (self-motion) information in grid cell firing. Society for Neuroscience Abstract. 2011;37:729.16. [Google Scholar]

- Knierim JJ, Kudrimoti HS, McNaughton BL. Interactions between idiothetic cues and external landmarks in the control of place cells and head direction cells. Journal of Neurophysiology. 1998;80:425–446. doi: 10.1152/jn.1998.80.1.425. [DOI] [PubMed] [Google Scholar]

- Lappe M, Rauschecker J. A neuronal network for the processing of optic flow from self-motion in man and higher mammels. Neural Computation. 1993;5:374–391. [Google Scholar]

- Lappe M. A model of the combination of optic flow and extraretinal eye movement signals in primate extrastriate visual cortex—Neural model of self-motion from optic flow and extraretinal cues. Neural Networks. 1998;11:397–414. doi: 10.1016/s0893-6080(98)00013-6. [DOI] [PubMed] [Google Scholar]

- Langston RF, Ainge JA, Couey JJ, Canto CB, Bjerkens TL, Witter MP, Moser EI, Moser M-B. Development of the spatial representation system in the rat. Science. 2010;328:1576–1580. doi: 10.1126/science.1188210. [DOI] [PubMed] [Google Scholar]

- Lee DN. A theory of visual control of braking based on information about time-to-collision. Perception. 1976;5:437–459. doi: 10.1068/p050437. [DOI] [PubMed] [Google Scholar]

- Lee DN, Reddish PE. Plummeting gannets: a paradigm of ecological optics. Nature. 1981;293:293–294. [Google Scholar]

- Lever C, Burton S, Jeewajee A, O'Keefe J, Burgess N. Boundary vector cells in the subiculum of the hippocampal formation. Journal of Neuroscience. 2009;29:9771–9777. doi: 10.1523/JNEUROSCI.1319-09.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Logan DJ, Duffy CJ. Cortical area MSTd combines visual cues to represent 3-D self-movement. Cerebral Cortex. 2005;16:1494–1507. doi: 10.1093/cercor/bhj082. [DOI] [PubMed] [Google Scholar]

- Longuet-Higgins H, Prazdny K. The interpretation of a moving retinal image. Proceedings of the Royal Society of London. Series B, Biol. Sciences. 1980;208(1173):385–397. doi: 10.1098/rspb.1980.0057. [DOI] [PubMed] [Google Scholar]

- Mhatre H, Gorchetchnikov A, Grossberg S. Grid cell hexagonal patterns formed by fast self-organized learning within entorhinal cortex. Hippocampus. 2012;22(2):320–34. doi: 10.1002/hipo.20901. [DOI] [PubMed] [Google Scholar]

- Moghaddam M, Bures J. Rotation of water in the Morris water maze interferes with path integration mechanisms of place navigation. Neurobiology of Learning and Memory. 1997;68:239–251. doi: 10.1006/nlme.1997.3800. [DOI] [PubMed] [Google Scholar]

- Montero VM, Brugge JF, Beitel RE. Relation of the visual field to the lateral geniculate body of the albino rat. Journal of Neurophysiology. 1968;31(2):221–36. doi: 10.1152/jn.1968.31.2.221. [DOI] [PubMed] [Google Scholar]

- Müller M, Wehner R. Path integration in desert ants. Proceedings of the National Academy of Science USA. 1988;85:5287–5290. doi: 10.1073/pnas.85.14.5287. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Muller RU, Kubie JL. The effects of changes in the environment on the spatial firing of hippocampal complex-spike cells. Journal of Neuroscience. 1987;7(7):1951–68. doi: 10.1523/JNEUROSCI.07-07-01951.1987. [DOI] [PMC free article] [PubMed] [Google Scholar]

- O’Keefe J. Place units in the hippocampus of the freely moving rat. Experimental Neurology. 1976;51:78–109. doi: 10.1016/0014-4886(76)90055-8. [DOI] [PubMed] [Google Scholar]

- O'Keefe J, Burgess N. Geometric determinants of the place fields of hippocampal neurons. Nature. 1996;381:425–428. doi: 10.1038/381425a0. [DOI] [PubMed] [Google Scholar]

- O'Keefe J, Nadel L. The hippocampus as a cognitive map. Oxford: Oxford University Press; 1978. [Google Scholar]

- Page WK, Duffy CJ. Cortical neuronal responses to optic flow are shaped by visual strategies for steering. Cerebral Cortex. 2007;18:727–739. doi: 10.1093/cercor/bhm109. [DOI] [PubMed] [Google Scholar]

- Persistence of Vision Pty. Ltd. (2004). Persistence of vision (TM) Raytracer. Persistence of Vision Pty. Ltd., Williamstown, Victoria, Australia. http://www.povray.org/ (Accessed April 2011).

- Perrone J. Model for the computation of self-motion in biological systems. Journal of the Optical Society of America A. 1992;9(2):177–192. doi: 10.1364/josaa.9.000177. [DOI] [PubMed] [Google Scholar]

- Perrone J, Stone L. A model of self-motion estimation within primate extrastriate visual cortex. Vision Research. 1994;34(21):2917–2938. doi: 10.1016/0042-6989(94)90060-4. [DOI] [PubMed] [Google Scholar]

- Raudies, F., & Hasselmo, M. (2012) Modeling boundary vector cell firing given optic flow as a cue. PLoS Computational Biology (in revision). [DOI] [PMC free article] [PubMed]

- Rieger JH. Information in optical flows induced by curved paths of observation. Journal of the Optical Society of America. 1983;73(3):339–344. doi: 10.1364/josa.73.000339. [DOI] [PubMed] [Google Scholar]

- Sharp PE, Blair HT, Etkin D, Tzanetos DB. Influences of vestibular and visual motion information on the spatial firing patterns of hippocampal place cells. Journal of Neuroscience. 1995;15(1):173–189. doi: 10.1523/JNEUROSCI.15-01-00173.1995. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Simoncelli, E. P., Adelson, E. H., & Heeger, D. J. (1991). Probability distributions of optical flow. In IEEE Conference on Computer Vision and Pattern Recognition, June, Hawaii. 310–315.

- Solstad T, Boccara CN, Kropff E, Moser M-B, Moser EI. Representation of geometric borders in entorhinal cortex. Science. 2008;322:1865–1868. doi: 10.1126/science.1166466. [DOI] [PubMed] [Google Scholar]

- Srinivasan MV, Lehrer M, Kirchner WH, Zhang SW. Range perception through apparent image speed in freely flying honeybees. Visual Neuroscience. 1991;6:519–535. doi: 10.1017/s095252380000136x. [DOI] [PubMed] [Google Scholar]

- Stackman RW, Taube JS. Firing properties of head direction cells in the rat anterior thalamic nucleus: dependence on vestibular input. Journal of Neuroscience. 1997;17(11):4349–4358. doi: 10.1523/JNEUROSCI.17-11-04349.1997. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Stackman RW, Golob EJ, Basset JP, Taube JS. Passive transport disrupts directional path integration by rat head direction cells. Journal of Neurophysiology. 2003;90:2862–2874. doi: 10.1152/jn.00346.2003. [DOI] [PubMed] [Google Scholar]

- Taube JS, Muller RU, Ranck JB., Jr Head direction cells recorded from the postsubiculum in freely moving rats. I. Description and quantitative analysis. Journal of Neuroscience. 1990;10(2):420–435. doi: 10.1523/JNEUROSCI.10-02-00420.1990. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Warren WH, Hannon DJ. Eye movements and optical flow. Journal of the Optical Society of America A. 1990;7(1):160–169. doi: 10.1364/josaa.7.000160. [DOI] [PubMed] [Google Scholar]

- Warren WH, Jr, Morris WM, Kalish M. Perception of translational heading from optical flow. Journal of Experimental Psychology. 1988;14(4):646–660. doi: 10.1037//0096-1523.14.4.646. [DOI] [PubMed] [Google Scholar]

- Yu CP, Page WK, Gaborski R, Duffy CJ. Receptive field dynamics underlying MST neuronal optic flow selectivity. Journal of Neurophysiology. 2010;103:2794–2807. doi: 10.1152/jn.01085.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhang, T., & Tomasi, C. (1999). Fast, robust, and consistent camera motion estimation. In Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, Fort Collins, 164–170.

- Zilli EA, Yoshida M, Tahvildari B, Giocomo LM, Hasselmo ME. Evaluation of the oscillatory interference model of grid cell firing through analysis and measured period variance of some biological oscillators. PLoS Computational Biology. 2010;5(11):1–16. doi: 10.1371/journal.pcbi.1000573. [DOI] [PMC free article] [PubMed] [Google Scholar]