Abstract

Objectives:

This literature review examines the effectiveness of literature searching skills instruction for medical students or residents, as determined in studies that either measure learning before and after an intervention or compare test and control groups. The review reports on the instruments used to measure learning and on their reliability and validity, where available. Finally, a summary of learning outcomes is presented.

Methods:

Fifteen studies published between 1998 and 2011 were identified for inclusion in the review. The selected studies all include a description of the intervention, a summary of the test used to measure learning, and the results of the measurement.

Results:

Instruction generally resulted in improvement in clinical question writing, search strategy construction, article selection, and resource usage.

Conclusion:

Although the findings of most of the studies indicate that the current instructional methods are effective, the study designs are generally weak, there is little evidence that learning persists over time, and few validated methods of skill measurement have been developed.

Highlights

Medical students and residents who participate in a literature searching skills workshop demonstrate improved searching abilities post-intervention.

There is a trend away from stand-alone literature searching skills instruction toward instruction that is integrated into the evidence-based medicine curriculum.

The Fresno test is a validated measurement of the steps of evidence-based practice. In addition to measuring the ability to ask an answerable clinical question, choose appropriate resources, and develop a MEDLINE search strategy, it requires users to demonstrate the ability to think critically about information.

Implications

Additional research is needed to determine whether improvements in literature searching skills are durable over time.

Additional research is needed to determine whether there is a relationship between literature searching skills and practices and patient outcomes.

INTRODUCTION

Librarians have been teaching users how to search online medical literature databases for almost twenty years. In the early years, classes were usually stand-alone sessions on how to access resources and conduct database searches. More recently, literature searching skills have been integrated into the curriculum in undergraduate and graduate medical education, often as part of learning about the practice of evidence-based medicine (EBM). Lessons on literature searching are taught using a variety of pedagogical methods including lectures, hands-on workshops, and online tutorials [1].

As medical education focuses more on self-directed and lifelong learning [2] and as governing associations and accrediting bodies add information literacy and retrieval competencies to their objectives [3–5], the need to measure the success of literature search skills training becomes critical. This literature review focuses on articles that attempt to answer the question “how effective is the training we are offering?”

METHODS

This review summarizes current literature measuring the impact of literature searching skills training in medical education. Relevant literature was identified by searching PubMed for articles on training medical students and residents to search the literature. The initial search strategy used was:

(“evidence-based medicine”[MeSH Terms] AND “education, medical”[MeSH Terms] AND (medline[MeSH Terms] OR medline[Title/Abstract] OR fresno test))
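The Boolean structure of this strategy can be made explicit with a short sketch. The Python below only assembles the query string from its parts: two required MeSH concepts joined with AND, plus a third concept satisfied by any one of three alternatives joined with OR. The helper names `tagged` and `build_query` are hypothetical, and nothing here contacts PubMed.

```python
def tagged(term: str, field: str, quote: bool = False) -> str:
    """Return a PubMed-style field-tagged term, e.g. medline[MeSH Terms]."""
    text = f'"{term}"' if quote else term
    return f"{text}[{field}]"


def build_query() -> str:
    """Assemble the review's initial search strategy as one string."""
    # Two required concepts, quoted as in the published strategy.
    required = [
        tagged("evidence-based medicine", "MeSH Terms", quote=True),
        tagged("education, medical", "MeSH Terms", quote=True),
    ]
    # Any one of these alternatives satisfies the third concept.
    alternatives = [
        tagged("medline", "MeSH Terms"),
        tagged("medline", "Title/Abstract"),
        "fresno test",
    ]
    or_group = "(" + " OR ".join(alternatives) + ")"
    return "(" + " AND ".join(required + [or_group]) + ")"


print(build_query())
```

Keeping the OR group in its own parentheses is what limits the alternatives to the third concept rather than to the whole strategy.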

Additional articles were retrieved by searching for related citations and reviewing the reference lists of relevant articles. The studies selected for inclusion in the review all described (1) literature searching skills instruction provided to medical students or residents, (2) an objective measurement of the impact of the intervention, and (3) the statistical significance of the measured outcomes. Studies reporting self-rated skills or knowledge and those measuring only knowledge without skills were excluded.

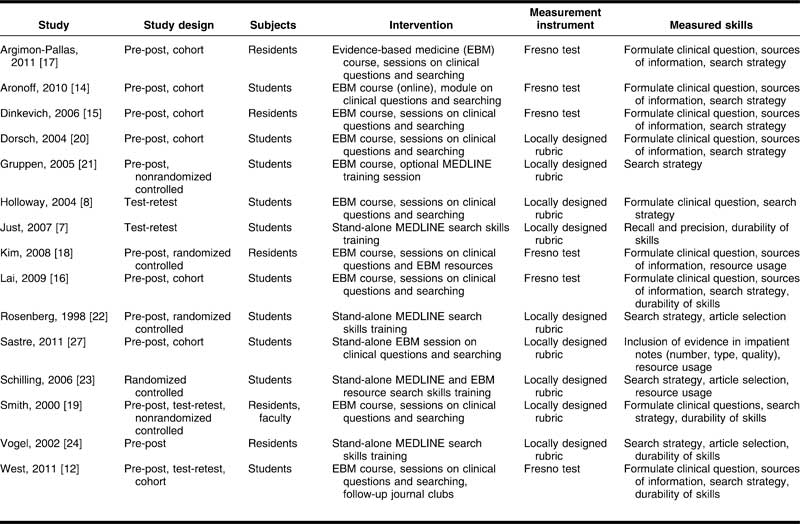

A total of fifteen studies published between 1998 and 2011 are included in the review (Table 1). Twelve of the studies used a cohort design with pre- and post-testing, seven of the studies included a control or comparison group (three of the control groups were randomized), and five studies measured searching skill retention over time.

Table 1.

Summary of study design, subjects, interventions, measurement instrument, and measured skills

RESULTS

Methods of instruction

Interventions represented in the studies included short online searching tutorials, in-person small group hands-on workshops, multi-week courses, and year-long curriculum-integrated instruction. Five of the studies featured stand-alone, hands-on MEDLINE searching or EBM workshop interventions taught by librarians or, in one case, by physicians. Ten studies described intensive, interactive instructional sessions offered over multiple weeks and designed to impart the knowledge and skills required to practice EBM. Unlike the stand-alone sessions, literature searching skills were only a part of the overall curriculum in the EBM-focused sessions and were just as likely to be taught by physicians as by librarians.

Five studies specifically taught Ovid MEDLINE, one taught SilverPlatter MEDLINE, and the rest did not specify the MEDLINE interface used. Evidence-based resources mentioned in the studies included Cochrane Database of Systematic Reviews, DynaMed, InfoPOEMS, FirstConsult, ACP Journal Club, Guidelines.gov, and Tripdatabase.com.

Validated instruments

Most instruments used to measure searching skills were created locally for the purpose of measuring the impact of a locally developed intervention. Therefore, most of the instruments described in this review were used only once, and few efforts were made to test the instruments' reliability or validity. Only one of the instruments included in this review, the Fresno test, was utilized in more than one study. It and four additional instruments or methods reported here included measurements of reliability and validity.

The University of Michigan MEDLINE Search Assessment (UMMSA) tool is a matrix that assigns points for the inclusion of various required search elements [6]. With UMMSA, points are assigned for elements such as identifying appropriate Medical Subject Headings (MeSH), exploding MeSH, adding subheadings, focusing one or more MeSH terms, and limiting properly. To establish reliability and validity, the authors ran several tests. One test established inter-rater reliability among multiple faculty search raters. Construct validity was established by verifying the expected significant differences in scores between novices and experienced searchers and the similarity between experienced searchers and experts. The tool was also analyzed to show internal consistency among the test items. Because this article focused only on validation of the tool and did not include results, it was excluded from the rest of the review.
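A points-per-element rubric of this kind can be sketched in a few lines. The example below is an illustration only: the element names follow the description above, but the one-point weights, the `RUBRIC` table, and the `score_search` function are hypothetical placeholders, not the published UMMSA matrix.

```python
# Hypothetical rubric: element names follow the review's description of UMMSA,
# but the equal one-point weights are placeholders, not the published matrix.
RUBRIC = {
    "appropriate_mesh": 1,
    "explode_mesh": 1,
    "subheadings": 1,
    "focus_mesh": 1,
    "proper_limits": 1,
}


def score_search(elements_present):
    """Return (points earned, points possible) for one graded search."""
    unknown = set(elements_present) - set(RUBRIC)
    if unknown:
        raise ValueError(f"not in rubric: {sorted(unknown)}")
    earned = sum(RUBRIC[name] for name in set(elements_present))
    return earned, sum(RUBRIC.values())


earned, possible = score_search({"appropriate_mesh", "explode_mesh", "proper_limits"})
print(f"{earned}/{possible}")
```

A fixed table like this is what makes inter-rater reliability testable: two raters grading the same search strategy should arrive at the same set of elements and therefore the same score.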

The Literature Searching Skills Assessment (LiSSA) tool measures both the process of searching, by awarding points for required search elements, and the outcome of searching, by awarding points for article selection [7]. LiSSA was also tested for reliability and validity. Inter-rater reliability was measured, and significant correlation between graders was found. Validity was measured by the tool's ability to differentiate between poor, adequate, good, and expert searchers on outcome measures for recall, precision, and F-measure (the harmonic mean of recall and precision). A significant difference was found between all levels of searchers on all measurements of success. Finally, the relationship between the measures of search ability and search outcome was examined, and weak to moderate but significant correlations were found between the two.
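For readers unfamiliar with these outcome measures, the following minimal sketch computes recall, precision, and F-measure for a single search. It assumes the balanced form of the F-measure (equal weight on recall and precision); the review does not state which weighting LiSSA uses. The function name and the article identifiers are made up for illustration.

```python
def recall_precision_f(retrieved, relevant):
    """Return (recall, precision, F-measure) for one search.

    retrieved: set of article IDs the searcher's strategy returned
    relevant:  set of article IDs judged relevant to the question
    """
    hits = len(retrieved & relevant)
    # Recall: share of the relevant articles that the search found.
    recall = hits / len(relevant) if relevant else 0.0
    # Precision: share of the retrieved articles that are relevant.
    precision = hits / len(retrieved) if retrieved else 0.0
    # Balanced F-measure: harmonic mean of recall and precision.
    if recall + precision == 0:
        f_measure = 0.0
    else:
        f_measure = 2 * precision * recall / (precision + recall)
    return recall, precision, f_measure


# Made-up example: 4 relevant articles exist; the search returns 8, of which 2 are relevant.
relevant = {1, 2, 3, 4}
retrieved = {1, 2, 5, 6, 7, 8, 9, 10}
recall, precision, f_measure = recall_precision_f(retrieved, relevant)
print(f"recall={recall:.2f} precision={precision:.2f} F={f_measure:.2f}")
```

Because the harmonic mean is pulled toward the smaller of its two inputs, a search cannot earn a high F-measure through high recall alone if most of what it retrieves is irrelevant.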

UMMSA and LiSSA are designed to measure search strategies. In contrast, Holloway et al. developed an instrument for measuring all of the steps in the EBM process, including but not limited to, search strategies [8]. Reliability and validity of the tool were measured using a variety of methods. Test-retest measures revealed weak to moderate statistically significant correlations between the overall score and the individual sub-scores for asking clinical questions and critically appraising the evidence, but not for MEDLINE search skills. No significant correlations were found between any of the steps in the tool, nor between the tool and the students' self-assessments.

The Holloway, UMMSA, and LiSSA tools were designed to assess search strategies using the Ovid MEDLINE interface. The Ovid interface encourages users to conduct their searches in a logical and discrete sequence of steps. None of these tools would be ideal for grading search strategies from PubMed, where many of the steps that the tools measure happen automatically as part of the PubMed database search algorithm.

The next validated tool, the Fresno test, does not require the use of a particular MEDLINE interface, which allows for greater applicability. It was developed to measure the knowledge and skills required to practice EBM [9]. The tool was designed to measure performance on all steps in the EBM process as described by Sackett et al. [10]. The instrument requires students to complete a series of short-answer questions, and a grading rubric is used to assign scores to each question. The Fresno test includes items that specifically measure the literature searching skills portion of the EBM process, including writing an answerable clinical question, selecting relevant and valid sources of information, and conducting an appropriate MEDLINE search with keywords or MeSH and limits. Verification of the reliability and validity of the Fresno test has been achieved through an analysis of inter-rater reliability, internal reliability through test item comparison, and construct validity through the tool's ability to differentiate between novice and expert searchers [9].

Recall and precision have long been measured by librarians in literature search results. McKibbon et al. studied whether recall and precision rates differed significantly among novice, experienced, and expert searchers [11]. That study found that expert searchers demonstrated significantly better search recall and precision rates than novice searchers and that recall improved with searcher experience. Although precision also increased, performance of experienced users remained suboptimal.

A fifth validated tool was identified and used in the study by West et al. in this review [12]. However, that instrument, the Berlin questionnaire, is a multiple-choice test that measures EBM knowledge [13]. Since the tool does not provide a measurement of skills, it falls outside the scope of this review. Accordingly, the findings related to the Berlin questionnaire reported in the West et al. article are excluded here.

Measurements of learning

Search skills measurement

Searching skills encompass a range of competencies including the ability to form a searchable clinical question; conduct a logical, efficient, and thorough database search; and select the most relevant articles. All of the studies measuring search performance included an examination of at least one of these skills.

Searchable clinical questions

One of the first steps of EBM is developing a well-built clinical question based on the patient case [10]. Accordingly, most of the studies identified for this review included a measurement of the clinical question. Six studies reported question development results using the Fresno test. Of the six Fresno test articles, three reported a significant increase in the ability to form a clinical question [14–16], one reported no significant change [17], and two reported only an overall score [12, 18]. Although the overall scores improved significantly, there was no separate reporting on the question specific to formulating a clinical question. Two additional studies [19, 20] reported significant improvement in clinical question formation following intervention, and one additional study found no change [8].

Search strategies

In studies where search strategies were graded, there was almost universal improvement in skills. Seven studies measured search strategies using locally developed tools [8, 19–24], and six used the Fresno test [12, 14–18].

Only one study reported mixed results [20]. Students demonstrated significant improvement on the overall score of an EBM skills tool, but only two search-related questions—“research methodology, terms, and publication types” and “subheadings, Boolean, and limits”—showed significant improvement. Other search-related questions—“search strategy logical,” “MeSH correlated with question,” and “correct keywords and title words”—showed no significant improvement.

The remainder of the studies using locally developed tools all measured searching success based on the use of various database elements, including using MeSH, exploding, applying subheadings, using Boolean operators, and applying appropriate limits for publication type, language, or age [8, 19, 21–24]. Two studies employed a checklist to identify appropriate search elements [22, 24], and both reported statistically significant increases in skills. One study ranked search skills on a 4-point scale from poor to excellent and reported a significant increase in skill level [23]. Three studies used a point system to measure skills and reported results as percent correct [8, 19, 21]. All three reported significant differences between the first and second test or between the intervention and control groups, ranging from 13.4 percentage points [21] to 31 percentage points [19].

The literature searching skills portion of the Fresno test grading rubric measures some of the same elements as the locally developed tools [25, 26]. For example, points are awarded for the correct use of MeSH and keywords, the inclusion of all relevant search concepts, and the appropriate use of limits and publication types. Additionally, the Fresno test awards points for a skill none of the other instruments measure: critical thinking. To receive an “excellent” rating on the Fresno test MEDLINE search question, test takers must be able to articulate why search choices were made. For example, to receive full credit, a user might choose an appropriate MeSH term and explain why searching with that MeSH term is better than performing a keyword search. All six studies reporting Fresno test scores showed a significant increase in overall scores post-intervention [12, 14–18]. Four of the studies also separately reported performance on the subsections of the test; all four reported significant skill improvement on the MEDLINE searching question [14–17].

Article selection

In addition to asking the right question and performing a good search, students and residents should be able to select the best literature to answer a clinical question. Only two studies reported a measurement of article selection, and results were mixed. In one study, students reported selected articles, and a score was assigned to the highest quality article in the set [22]. Quality was determined by physician experts, based on a predetermined hierarchy. Students conducted a search on one of two predetermined clinical scenarios, then submitted their search strategies and selected articles. The group assigned to clinical scenario one improved their selection significantly following training. The group assigned to scenario two showed no improvement, but the pretest article selection was already high. The authors concluded that the second question was too easy, making it difficult to improve from the high baseline. The second study showed a statistically significant improvement in students' abilities to identify high-quality articles (defined in this study as randomized controlled trials and meta-analyses) from the full set of articles retrieved [23]. This study also revealed a significant positive correlation between MEDLINE searching scores and article selection quality. A third study included two elements related to article selection on the search performance checklist but did not report the findings of those elements in the study results [24].

Durability of searching skills

Five studies examined the retention of searching skills over time. Results from the follow-up studies were mixed, with some showing retention of skills over time and others showing a clear decay. In one study, residents were tested on their EBM skills either six or nine months after the intervention [19]. Both groups maintained their scores, indicating that learning was durable over time.

One study was designed to measure students' skill retention from the first to the fourth year of medical school [7]. Students received training on MEDLINE searching early in their first year and completed a series of three graded assignments throughout their first year of medical school. In a clerkship rotation in their fourth year, students again completed a similar graded search assignment. Comparisons of search skills and article retrieval were made between the scores at the two test points. The study found that the mean search strategy score increased from year one to year four; however, the difference was not statistically significant. There was a significant difference between the year one and year four search output, but the scores for the fourth year were lower than those for the first year. Thus, although students retained their searching skills over time, their ability to retrieve the best articles diminished.

Two studies used the Fresno test to measure skill retention over time. One study was designed to measure skills immediately following and one year post-intervention [12]. During the post-intervention year, students practiced their EBM skills during each clinical rotation and received feedback from instructors. The mean scores increased significantly from pre- to post-intervention, then increased significantly again from immediately post-intervention to one year post-intervention. Students not only maintained their learning, but improved their skills during the year with practice and feedback.

The other Fresno test study measured decay over time indirectly [16]. Students were divided into five groups. Each group participated in an EBM training course, one group a month for five consecutive months. A pretest was administered before the first group training session and a posttest after all groups had completed the training. Depending on the group assignment, the gap between the end of the training and the posttest ranged from zero to five months. Although all groups scored significantly higher on the posttest than on the pretest, the group tested closest to the end of their training scored highest and the groups trained earlier scored lowest. Because the pretest scores were all similar, indicating a consistent baseline, and all groups were taught by the same instructor, the authors attributed the difference in mean score to decaying skills over time.

In another study with a similar design, residents were given a pretest, a posttest, and a third test at the end of the academic year [24]. Because the intervention was offered to different groups throughout the year, the follow-up test was anywhere from one to eleven months post-intervention. Residents scored significantly higher on both the posttests and follow-up tests, compared to the pretests. However, the follow-up results were significantly lower than the posttest scores in some areas. It would appear that at least some skills decayed after the intervention, though they did not return to the pre-intervention level. One notable issue with the study is that the individual group means were not reported; only an overall follow-up mean was provided. It would have been interesting if the authors had plotted the means over time to see if a pattern of skill decay emerged.

Resource usage

Three studies reported the impact of the intervention on actual database usage. One study logged click-throughs from the electronic medical record system to determine how often students were accessing electronic resources to make evidence-based notes in the patient chart [27]. The use of electronic resources increased significantly, from an average of 0.12 click-throughs per week to 0.66 click-throughs per week. Also, prior to the intervention, only 13% of the click-throughs were to critically appraised resources. Following the intervention the percentage increased to 59%. In another study, Ovid search logs were examined to determine how many searches were conducted by students in intervention and control groups over the course of a 6-week clerkship [23]. Logs revealed that students participating in an online EBM course conducted an average of 12.4 MEDLINE and 1.6 Cochrane searches during the clerkship, compared to 2.6 MEDLINE and 0.2 Cochrane searches in the control group. The differences in searching rates of both databases were statistically significant.
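To put the averages reported above on a common scale, the ratios can be computed directly from the quoted figures. This is a descriptive sketch only: `fold_change` is a hypothetical helper, the inputs are the means as reported in this review, and the ratios say nothing about statistical significance.

```python
def fold_change(baseline: float, comparison: float) -> float:
    """Ratio of comparison to baseline, rounded to one decimal place."""
    return round(comparison / baseline, 1)


# Click-throughs per week, before vs. after the intervention [27].
print(fold_change(0.12, 0.66))
# MEDLINE searches per clerkship, control vs. intervention group [23].
print(fold_change(2.6, 12.4))
# Cochrane searches per clerkship, control vs. intervention group [23].
print(fold_change(0.2, 1.6))
```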

The third study measured how often residents would use electronic resources to answer questions on an exam of clinical vignettes [18]. The intervention group participated in formalized EBM training sessions during an elective month, while the control group received no formalized EBM training. Residents were given an exam with fifteen clinical questions and asked to complete the exam without accessing any resources. Then, they were asked to retake the exam, but the second time, they were allowed to consult online resources. Logging software was used to capture how often the residents accessed various online resources. When allowed to access resources, both the intervention and control group consulted online resources with similar frequency (mean of sixteen times for the intervention group and seventeen times for the control group). The intervention group chose evidence-based resources significantly more often than the control group. However, both groups improved their scores from the first test (without access to resources), and the final scores of the intervention and control group were not significantly different, implying that the residents performed better after consulting online resources, regardless of the types of resources accessed.

Patient notes

One study examined the content of patient intake notes in an electronic medical records system to measure whether an EBM workshop would alter students' behavior [27]. In the inpatient portion of an internal medicine clerkship, students wrote history and physical notes for two to three patients per week. All student write-ups were expected to include references to the literature. Notes were evaluated before and after a mandatory EBM resources tutorial to measure impact on the quantity and quality of references included in the notes. No significant differences were found in the number of citations included in the notes pre- and post-intervention. Post-intervention notes showed an increase in citations to evidence-based resources, but the increase was not statistically significant. Although the quantity of citations did not increase, the quality of the notes improved. Students were better able to incorporate the evidence into their notes about the patients' problems.

CONCLUSIONS

All of the studies in this review reported a significant increase in at least one measure of literature searching skills, regardless of the type of intervention provided. However, the differences between the studies make it difficult to draw meaningful conclusions or generalize the findings to other groups and populations. The studies varied considerably in study design, subjects, settings, timing, interventions, and measurements. The lack of articles demonstrating little or no improvement in skills after intervention may be attributable to the fact that studies with negative results are unlikely to be documented for publication.

Most of the studies that measured learning of literature searching skills tested students soon after the intervention, when retention would be highest. The few reported studies that measured the durability of literature searching skills learning over time reported less favorable results, and none of the studies had rigorous experimental design. The need to design literature searching skills interventions that demonstrate durable learning presents an opportunity for future research. Other research opportunities include measuring literature searching and use in physician practice, especially outside of academic medicine, and measuring the impact of literature searching and use on patient care outcomes.

Literature searching skills instruction appears to be moving away from stand-alone workshops that focus on specific MEDLINE interfaces and toward curriculum-integrated instruction that ties literature searching to evidence-based practice. The elements measured by the Fresno test reinforce the idea that literature searching is an important and integral part of a self-directed learning process that ultimately leads to better answers to clinical questions. A recent review of evidence-based practice instructional models and adult learning theories resulted in a proposed hierarchy of evidence-based teaching and learning [28]. Based on the findings of that review, the most effective EBM courses would be interactive and clinically integrated. If librarians want to be part of this type of instruction, we need to redouble our efforts to become embedded members of the clinical team to ensure that the use of information resources is a seamless part of providing clinical care.

REFERENCES

- 1. Brettle A. Evaluating information skills training in health libraries: a systematic review. Health Info Libr J. 2007 Dec;24:18–37. doi: 10.1111/j.1471-1842.2007.00740.x.

- 2. Simon F.A, Aschenbrener C.A. Undergraduate medical education accreditation as a driver of lifelong learning. J Contin Educ Health Prof. 2005 Summer;25(3):157–61. doi: 10.1002/chp.23.

- 3. Learning objectives for medical student education—guidelines for medical schools: report I of the medical school objectives project. Acad Med. 1999 Jan;74(1):13–8. doi: 10.1097/00001888-199901000-00010.

- 4. Accreditation Council for Graduate Medical Education. Program director guide to the common program requirements [Internet]. The Council [cited 30 Mar 2012]. <http://www.acgme.org/acwebsite/navpages/nav_commonpr.asp>.

- 5. Mantas J, Ammenwerth E, Demiris G, Hasman A, Haux R, Hersh W, Hovenga E, Lun K.C, Marin H, Martin-Sanchez F, Wright G; IMIA Recommendations on Education Task Force. Recommendations of the International Medical Informatics Association (IMIA) on education in biomedical and health informatics. First revision. Methods Inf Med. 2010 Jan 7;49(2):105–20. doi: 10.3414/ME5119.

- 6. Rana G.K, Bradley D.R, Hamstra S.J, Ross P.T, Schumacher R.E, Frohna J.G, Haftel H.M, Lypson M.L. A validated search assessment tool: assessing practice-based learning and improvement in a residency program. J Med Libr Assoc. 2011 Jan;99(1):77–81. doi: 10.3163/1536-5050.99.1.013.

- 7. Just M.L. Measuring Ovid MEDLINE information literacy and search skill retention in medical students [dissertation]. [Los Angeles, CA]: University of Southern California; 2007. 121 p.

- 8. Holloway R, Nesbit K, Bordley D, Noyes K. Teaching and evaluating first and second year medical students' practice of evidence-based medicine. Med Educ. 2004 Aug;38(8):868–78. doi: 10.1111/j.1365-2929.2004.01817.x.

- 9. Ramos K.D, Schafer S, Tracz S.M. Validation of the Fresno test of competence in evidence based medicine. BMJ. 2003 Feb 8;326(7384):319–21. doi: 10.1136/bmj.326.7384.319.

- 10. Sackett D.L, Straus S.E, Richardson W.S, Rosenberg W, Haynes R.B. Evidence-based medicine: how to practice and teach EBM. 2nd ed. London, UK: Churchill Livingstone; 2000.

- 11. McKibbon K.A, Haynes R.B, Dilks C.J, Ramsden M.F, Ryan N.C, Baker L, Flemming T, Fitzgerald D. How good are clinical MEDLINE searches? a comparative study of clinical end-user and librarian searches. Comput Biomed Res. 1990 Dec;23(6):583–93. doi: 10.1016/0010-4809(90)90042-b.

- 12. West C.P, Jaeger T.M, McDonald F.S. Extended evaluation of a longitudinal medical school evidence-based medicine curriculum. J Gen Intern Med. 2011 Jun;26(6):611–5. doi: 10.1007/s11606-011-1642-8.

- 13. Fritsche L, Greenhalgh T, Falck-Ytter Y, Neumayer H.H, Kunz R. Do short courses in evidence based medicine improve knowledge and skills? validation of Berlin questionnaire and before and after study of courses in evidence based medicine. BMJ. 2002 Dec 7;325(7376):1338–41. doi: 10.1136/bmj.325.7376.1338.

- 14. Aronoff S.C, Evans B, Fleece D, Lyons P, Kaplan L, Rojas R. Integrating evidence based medicine into undergraduate medical education: combining online instruction with clinical clerkships. Teach Learn Med. 2010 Jul;22(3):219–23. doi: 10.1080/10401334.2010.488460.

- 15. Dinkevich E, Markinson A, Ahsan S, Lawrence B. Effect of a brief intervention on evidence-based medicine skills of pediatric residents. BMC Med Educ. 2006 Jan 10;6:1. doi: 10.1186/1472-6920-6-1.

- 16. Lai N.M, Teng C.L. Competence in evidence-based medicine of senior medical students following a clinically integrated training programme. Hong Kong Med J. 2009 Oct;15(5):332–8.

- 17. Argimon-Pallas J.M, Flores-Mateo G, Jimenez-Villa J, Pujol-Ribera E. Effectiveness of a short-course in improving knowledge and skills on evidence-based practice. BMC Fam Pract. 2011 Jun 30;12:64. doi: 10.1186/1471-2296-12-64.

- 18. Kim S, Willett L.R, Murphy D.J, O'Rourke K, Sharma R, Shea J.A. Impact of an evidence-based medicine curriculum on resident use of electronic resources: a randomized controlled study. J Gen Intern Med. 2008 Nov;23(11):1804–8. doi: 10.1007/s11606-008-0766-y.

- 19. Smith C.A, Ganschow P.S, Reilly B.M, Evans A.T, McNutt R.A, Osei A, Saquib M, Surabhi S, Yadav S. Teaching residents evidence-based medicine skills: a controlled trial of effectiveness and assessment of durability. J Gen Intern Med. 2000 Oct;15(10):710–5. doi: 10.1046/j.1525-1497.2000.91026.x.

- 20. Dorsch J.L, Aiyer M.K, Meyer L.E. Impact of an evidence-based medicine curriculum on medical students' attitudes and skills. J Med Libr Assoc. 2004 Oct;92(4):397–406.

- 21. Gruppen L.D, Rana G.K, Arndt T.S. A controlled comparison study of the efficacy of training medical students in evidence-based medicine literature searching skills. Acad Med. 2005 Oct;80(10):940–4. doi: 10.1097/00001888-200510000-00014.

- 22. Rosenberg W.M, Deeks J, Lusher A, Snowball R, Dooley G, Sackett D. Improving searching skills and evidence retrieval. J R Coll Physicians Lond. 1998 Nov–Dec;32(6):557–63.

- 23. Schilling K, Wiecha J, Polineni D, Khalil S. An interactive web-based curriculum on evidence-based medicine: design and effectiveness. Fam Med. 2006 Feb;38(2):126–32.

- 24. Vogel E.W, Block K.R, Wallingford K.T. Finding the evidence: teaching medical residents to search MEDLINE. J Med Libr Assoc. 2002 Jul;90(3):327–30.

- 25. University of Texas Health Sciences Center San Antonio. Fresno test of evidence based medicine grading rubric [Internet]. Office of Graduate Medical Education [cited 30 Mar 2012]. <http://www.uthscsa.edu/gme/documents/PD%20Handbook/EBM%20Fresno%20Test%20grading%20rubric.pdf>.

- 26. Tilson J.K. Validation of the modified Fresno test: assessing physical therapists' evidence based practice knowledge and skills. BMC Med Educ. 2010 May 25;10:38. doi: 10.1186/1472-6920-10-38.

- 27. Sastre E.A, Denny J.C, McCoy J.A, McCoy A.B, Spickard A III. Teaching evidence-based medicine: impact on students' literature use and inpatient clinical documentation. Med Teach. 2011;33(6):e306–12. doi: 10.3109/0142159X.2011.565827.

- 28. Khan K.S, Coomarasamy A. A hierarchy of effective teaching and learning to acquire competence in evidenced-based medicine. BMC Med Educ. 2006 Dec 15;6:59. doi: 10.1186/1472-6920-6-59.