Abstract

Objectives and Background:

Libraries are increasingly called upon to demonstrate student learning outcomes and the tangible benefits of library educational programs. This study reviewed and compared the efficacy of traditionally used measures for assessing library instruction, examining the benefits and drawbacks of assessment measures and exploring the extent to which knowledge, attitudes, and behaviors actually paralleled demonstrated skill levels.

Methods:

An overview of recent literature on the evaluation of information literacy education addressed these questions: (1) What evaluation measures are commonly used for evaluating library instruction? (2) What are the pros and cons of popular evaluation measures? (3) What are the relationships between measures of skills versus measures of attitudes and behavior? Research outcomes were used to identify relationships between measures of attitudes, behaviors, and skills, which are typically gathered via attitudinal surveys, written skills tests, or graded exercises.

Results and Conclusions:

Results provide useful information about the efficacy of instructional evaluation methods, including showing significant disparities between attitudes, skills, and information usage behaviors. This information can be used by librarians to implement the most appropriate evaluation methods for measuring important variables that accurately demonstrate students' attitudes, behaviors, or skills.

Highlights.

Comparisons of library instruction evaluation measures demonstrate what surveys, written tests, practical exercises, self-assessments, and so on actually do and do not measure.

Students routinely overestimate their information retrieval and information literacy skills.

Students' attitudes about the library and librarians may not correlate with their perceptions of their skills and demonstrated skills.

Research identifies a disconnect between theoretical knowledge and demonstrated skills.

Implications.

Formative assessment is effective not only for collecting baseline information but also for giving students a realistic picture of their true skill levels.

Longitudinal summative assessment of practical skills is the truest measure of learning.

Libraries should implement appropriate performance, affective, and behavioral measures to provide a complete and accurate assessment of learners' information literacy skills and attitudes.

Simple descriptive statistics are not adequate for showing how evaluation measures are interdependent, which have cause-and-effect relationships, and what combinations of measures represent skills, knowledge, and learning most accurately.

INTRODUCTION

In academic and professional environments that are increasingly evidence based and outcomes driven, librarians are likewise called upon to provide tangible evidence that instruction in information literacy (IL) skills is valid and legitimate. Legitimacy is usually quantified through a hierarchy of expected educational standards and outcomes. These include the impact of instruction on students' information retrieval skills, course grades, IL skills, and achievement of program and national standards. The assessment of student learning is important for demonstrating academic achievement and program success, particularly in the context of increasing tuition costs. Measuring student learning outcomes is a process that necessitates sequential and systematic evaluation of library training, workshops, or courses.

Quality educational program evaluation includes both quantitative and qualitative measures. Many commonly used evaluation tools such as self-reported perception surveys focus solely on students' perceptions of their own skills, their knowledge, or the library. These are important areas of investigation, for user satisfaction and self-confidence are significant factors in understanding students' attitudes about libraries and information. At the same time, a balanced approach is ideal, requiring librarians to distinguish between and appropriately apply effective evaluation measures to provide evidence of both attitudes and actual learning outcomes.

The authors surveyed the academic library literature on student learning assessment from 2007 to 2012 to uncover meaningful relationships between various measures of learning for assessing knowledge, skills, attitudes, or behaviors. Several questions related to the evaluation of library instruction were asked:

What evaluation measures are commonly used?

What are the pros and cons of popular evaluation measures?

What are the relationships between measures of skills versus measures of attitudes and behavior?

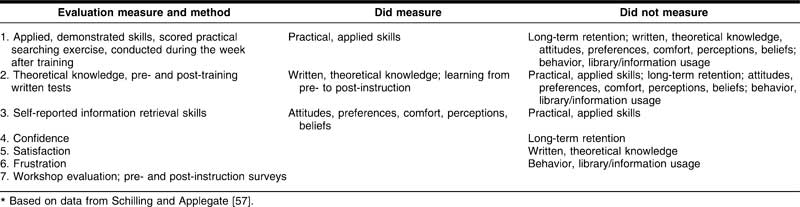

This review includes the articles that specifically address the use of evaluation measurements and instruments to assess IL skills or the impact of IL training on student learning. The review is not limited to the biomedical journal literature, but also includes literature from the arts, humanities, and social sciences. The resulting sample includes articles published since 2007 that report on the use of measures of performance (tests, products, portfolios), attitude (surveys), and behavior (in relation to the use of library resources). These measures are critiqued based on the extent to which they uncover meaningful relationships about learners' skills, knowledge, attitudes, and behaviors. An overview of their pros and cons, benefits, and drawbacks is given. This paper also highlights the literature that makes the best cases for drawing valid conclusions about the tangible impact of library training on student learning and educational outcomes. Table 1 shows the library training evaluation measures that were most frequently represented in the reviewed literature.

Table 1.

Evaluation measures frequently represented in the literature: 2007–2012

FOUNDATIONS OF INFORMATION LITERACY EVALUATION

During the 1990s, many higher education accrediting agencies adopted IL competencies as important criteria for academic success and achievement [1–4]. At the same time, the Association of College and Research Libraries (ACRL) called for an increased emphasis on IL through its Information Literacy Competency Standards for Higher Education [5]. The associated literature described the adoption and practical implications of the ACRL Standards and other IL criteria, as well as subsequent IL tests such as Project SAILS or institution-specific IL tests [6–12]. The library literature has also deliberated the educational viability of stand-alone IL tests, IL courses, and IL workshops; curriculum-integrated instruction; the pros and cons of online training; and the like [13–32]. This literature represents the foundations upon which library-based IL education is typically evaluated.

The Association of Research Libraries commissioned a study to document the demonstrated value of academic libraries to their campuses [33, 34]. In the resulting publications, the authors reviewed existing evaluation measures for training and educational programs and discussed the relevance of library instruction for measuring library impact on student learning (2011) [34]. They described options for measuring skills development (2008) via tests, performance, or evaluation rubrics. The research found, though, that too many libraries did not employ systematic approaches to the assessment of student learning outcomes and IL skills [33]. In fact, many libraries—even those that evaluated their workshops—did not formally evaluate student learning in any way.

This lack of evaluation becomes problematic when libraries must qualify and quantify their impact on educational goals and outcomes. This is an important issue, for without systematic evaluation, libraries do not have adequate information to determine the impact of existing training on students' IL skills and learning needs. Decisions regarding whether educational programs should be continued, expanded, or modified occur in a vacuum. In other cases, libraries may not lack data itself, but may lack a systematic process for using statistically reliable research to make conclusions about the validity of educational programming. This leaves librarians guessing: How do library educational programs impact IL skills development for meaningful student learning outcomes?

TIMING MATTERS: FORMATIVE AND SUMMATIVE EVALUATION

The timing of evaluation activities affects what can be measured, so realistic decisions about formative and summative evaluation are important. Formative and summative evaluation techniques are applied at different points across the educational cycle. Formative evaluation tracks student progress along the way. Summative evaluation represents a point in time, usually either immediately after training or longitudinally. The timing-related pros and cons are discussed here.

Although formative and summative evaluation go hand-in-hand, summative evaluation is more prevalent in the literature. Zhang, Watson, and Banfield analyzed the ERIC literature from 1990 to 2005, finding ten empirical studies that assessed affect, confidence, and attitudes in information seeking [35]. Of these ten, one implemented both pre- (formative) and post-instruction (summative) evaluation. The more current literature includes more articles describing parallel formative and summative evaluation activities like pre- and post-instruction written tests or affective surveys [8, 9, 11, 36–39]. In a well-rounded evaluation system, both formative and summative assessments are essential. Depending too heavily on one or the other may result in skewed perspectives on students and learning, because a complete picture of skills and attitudes is not available.

Formative assessment may be iterative, occurring periodically throughout a training session, course, or workshop series. This allows instruction to be modified on the go. Gilstrap and Dupre described an English composition course in which multiple sections of students (≈600) participated in curriculum-integrated IL training [40]. During each of 4 library instruction sessions, students completed “critical incident questionnaires” about their information needs and skills. Reviews of these reports resulted in the development of a list of recurring themes. When preparing for the next session, librarians reviewed these themes and modified training to address specific problems or areas of confusion.

This iterative approach is advantageous, but not always feasible or practical. For example, it is only practical when there are multiple opportunities to engage with learners across a period of time. However, library instruction may be short in duration (one to three hours) or one time only, limiting opportunities to do formative evaluation and modify training midstream. Time-in-training is another barrier: librarians may sacrifice formative evaluation measures so that more time is available for instruction or hands-on activities.

Summative evaluation is cumulative in nature. It typically occurs immediately after training, which is an obvious window of time to assess students' skills or record their attitudes. Short-term retention should not be used as a substitute for measures of long-term retention, however. Long-term retention is the more accurate indicator of actual learning. Ideally, short-term and longitudinal (weeks, months, semesters later) summative evaluation activities should be strategically implemented to understand more about learners' long-term retention [41]. There is a complex array of benefits and barriers to longitudinal tracking or testing, so finding a balance of measures is important.

Cmor, Chan, and Kong examined literature searching exercises and viewed oral presentations in which students described their search strategies that were recorded immediately post-training and again two weeks after instruction [42]. Content analysis showed that during the 2 weeks immediately following instruction, students reverted to ineffective searching behaviors. Of the 70% of students who showed reasonable competence immediately post-training, “very few” utilized those same effective search techniques just 2 weeks later. The students did not demonstrate learning or retention.

Although longitudinal assessment of retention is highly reliable for measuring actual learning, it presents a variety of challenges, which are perhaps evidenced by the small number of articles on retention [41, 42]. One drawback is logistical: students have a tendency to scatter after training. Potential sources of information may also be lost over time. Zoellner, Samson, and Hines reported that only 33% of their original 426 communications students participated in summative surveys [38]. Researchers were also unable to track responses across time due to loss of identifying data with the posttest survey. Bronshteyn and Baladad measured learning with individual narrative reflections and anonymous surveys, but the two could not be correlated because the anonymous surveys were impossible to track [43]. Cmor, Chan, and Kong indicated that 900 students took a skills test immediately post-instruction, but only 20 student presentations from 2 weeks later were available for analysis [42]. Figa, Bone, and MacPherson reported that post-course surveys had only an 11% response rate [44]. Blummer also reported problems due to data restrictions and the inability to control for a variety of variables [45]. These articles provide evidence that without access to individual learners and artifacts, rigorous research methodologies cannot be implemented. Without adequate formative and summative evidence, librarians cannot make reasonable judgments regarding the value and impact of library instruction. Timing matters.

DIRECT AND INDIRECT MEASURES

Evidence of student attitudes and learning can be collected in many ways, including measures of performance (such as tests, portfolios, or products), attitude (surveys), and behavior (library usage). Popular techniques for evaluating the efficacy of library education programs have included in-process and end-product measures, such as citation analysis, narrative reflection, focus groups, and demonstration portfolios and products [43, 45–47]. Evaluation is either direct or indirect. Direct evaluation documents knowledge, skills, or behavior. Indirect assessment is based on self-reporting of perceived skills, learning, behaviors, or attitudes. The following section provides an overview of the benefits and drawbacks of various commonly cited evaluation approaches. Table 2 provides examples of these evaluation measures.

Table 2.

Overview of evaluation measures

Measures of performance

Performance measures (direct) are based on actual student work. Most often these include tests or scored exercises grounded in clinical questions or real-life simulations or displays such as course products, projects, portfolios, research papers, essays, exhibits, case analysis, and so on. Testing is an obvious way to measure skills or knowledge. Portfolio reviews, citation analysis, product reviews, and so on are also useful for understanding what students have achieved or learned over time. Other approaches include reviewing literature searches to assess students' skills in using explode, focus, subheadings, or limits or skills in creating effective search strategies for answering clinical questions [27, 30, 42, 47–55].

One pro of using performance measures is that students already produce course artifacts, including literature searches. Librarians do not necessarily need to implement separate evaluation activities. A negative is that significant coordination with information technology access policies and teaching faculty may be required to gain access to student materials. Another problem is that available course artifacts may not provide the best evidence for accurately assessing information retrieval and IL skills. Plus, intangible variables such as affect, values, perceptions, and beliefs are not represented. These intangibles influence learning.

Knowledge versus skills

In health sciences libraries, a logical approach to measuring skills or knowledge has been through literature search assessment, practical tests or exercises, or written tests. Not all tests are created equal, though. Written tests reflect theoretical knowledge. Practical exercises or tests demonstrate applied skills [38, 39, 56, 57]. In one experiment, medical students participated in an intervention that included pre- and post-training written tests on literature searching concepts and terminology, types of resources, citation formats, critical analysis, problem solving, and so on. Correlation of written tests with scored MEDLINE searches showed no statistically significant results (r = +0.17 not statistically significant) [57]. Theoretical knowledge did not translate into practical skill. Maneuvering through a sophisticated bibliographic database like MEDLINE to find journal literature to address a clinical question requires a unique set of skills [57].

Measures of attitude

Indirect assessment is affective or attitudinal, or based on learners' perceptions of their skills and learning, including what people think, feel, or believe about the training experience or about their skills. Sources for these reports are surveys or questionnaires completed by the students themselves, librarians, course instructors, internship supervisors, peers, and so on. Attitudes are quantified in terms of satisfaction, confidence, comfort, likes, dislikes, preferences, interests, and so forth. Surveys are often used to evaluate the training itself, the instructors, training resources (blogs, clickers, videos), or the method of instruction (online or face-to-face) [38, 40, 44, 57–60].

Crawford [61] analyzed 215 empirical studies in ERIC from 1970 to 2002, showing that affective surveys were the most frequently used tool for assessing library instruction. In fact, self-reported attitudinal surveys were the most frequently cited method of evaluation in the literature reviewed here [12, 42, 44, 45, 56, 60]. A common “process-implementation” approach is to ask faculty to describe their satisfaction with the quality of student work or products [42]. Although these findings are non-measures in terms of student learning, they are useful for understanding instructor perspectives.

Polkinghorne and Wilton [11] described a political science research skills course in which students' reflections on their information search skills and a variety of course artifacts were reviewed. The analysis of these artifacts provided a window into students' perceptions. One of the more interesting findings was that students focused on end-results and end-products (assignments), rather than on the information retrieval process itself. The authors commented that students, particularly those with weak skills, were not aware of the requirements for improved IL skills [11]. This leads to an important issue in indirect assessment: students may not be accurate judges of their own skills [57]. Even when students acknowledged that their searching methods were chaotic and inefficient, they still felt that they had achieved success and were adept researchers [11]. This is indicative of the “satisfied but inept” phenomenon described by Plutchak [62]. A search may identify only a handful of the available and applicable resources, and searchers may waste time and go about the process in a haphazard and inefficient manner. Yet, because users find something that seems relevant, they are satisfied with their work: thus, the “satisfied but inept” effect.

Related findings were reported in evidence-based information retrieval experiments with first-year and third-year medical students [57, 63]. Triangulation of evaluation results showed students: (1) believed themselves to possess higher IL skills than their test scores indicated, (2) did not know what information resources to use to support their coursework, (3) were not aware of important evidence-based resources, and (4) overestimated their knowledge of those they had used. For example, of the 88% of first-year medical students (n = 128) who reported being very familiar with MEDLINE, only 12% were aware of Medical Subject Headings (MeSH). In fact, students consistently overestimated their own MEDLINE, information retrieval, and IL skills and knowledge (r = −0.07 not statistically significant) [57].

Library and resource usage

Questions about the impact of library training and IL programs are also addressed through library usage data. Two approaches measure library usage: reported usage or actual demonstrated usage. In libraries, resource usage is most often gathered through self-reported surveys [45, 46, 56, 64]. A benefit to this approach is that self-reported library usage behaviors are relevant for understanding attitudes like satisfaction, knowledge of resources, comfort, and so forth. A drawback is that self-reported behavior is based on guessing rather than fact. It may not be a statistically valid marker of actual demonstrated usage [57].

While self-reported library usage behavior does not show actual learning, it may be indicative of the potential for learning. For example, students who are more engaged with library resources have more opportunities to learn to use these resources effectively and efficiently, so perhaps these students will achieve higher IL skills. Unfortunately, the opportunity to learn does not equal actual learning. Another common hypothesis is that students who are more comfortable, satisfied, or familiar with the library are also more likely to use the library. The validity of these claims cannot be determined without real usage data, however, so estimated or reported behaviors are mostly useful for understanding a student's frame of mind.

Demonstrated library usage can be documented through library statistics or data from service areas such as interlibrary loan, circulation, and reserves or from resources including staff, library websites, or bibliographic databases. One benefit of this approach is that it paints a picture of actual resource usage. Another benefit is that usage behavior can be easily tracked longitudinally. One obstacle to measuring user behavior is that it requires tracking of individuals or cohorts. Institutional review board requirements may complicate this issue. In addition, many resources are accessed anonymously, rendering it impossible to track individual users.

When available, library usage data can be correlated with other variables like attitudes, test scores, or student learning outcomes to form a broader picture. For instance, library resource usage by a cohort of first-year medical students (n = 128) was gathered in 2 ways [27]. Immediately post-training, students were surveyed regarding their anticipated use behavior over the next semester. At the end of the semester, students again described their information use during the previous semester. Actual usage across the semester was also captured using students' individual system accounts. These data were then compared across training groups (online versus face-to-face) to determine whether the training method itself affected expected or demonstrated usage. This was important to librarians who wanted students to “get the message” regarding the importance of particular evidence-based course resources. Data analysis revealed no statistically significant differences between training groups. This meant that online instruction and traditional instruction were comparable in terms of their impact on students' anticipated information usage behaviors (P = 0.20) and actual usage behaviors throughout the semester (P = 0.25) [27]. These results eased librarians' concerns about online training. Without library usage statistics, this level of comparison would not have been possible.

Implementing the most efficacious evaluation measures and instruments in any given situation can be challenging. Each approach has its own limitations. At the same time, the online learning example above illustrates that not every important research question needs to be about student learning. Evaluation is useful for informing and driving internal decisions and policies. An excellent program of evaluation combines measures from a variety of sources for a variety of research questions. This approach provides a range of artifacts and data from which to draw evidence.

IN-PROCESS AND END-PRODUCT MEASURES

Information retrieval, information literacy, and learning are processes. Capturing the process itself is important. In-process measures are useful for identifying exactly where a student's skills are weak or strong. The early foundational literature on information-retrieval skills in biomedical education established the groundwork for understanding the information-retrieval process itself, specifically as it relates to end-user searching in MEDLINE [48–55]. This literature also examined behavioral variables like the extent to which prior MEDLINE searching experience impacted subsequent searching skills and behaviors. For instance, a strong relationship between the level of search experience and frequency of searching was demonstrated [51]. In other words, searching begets searching. Research also showed that searching experience and skill levels correlated. Practice makes perfect [51]. Additionally, Pao and colleagues found that clinical knowledge did not translate to bibliographic searching skills, evidence that bibliographic searching requires a unique set of skills [50, 51]. Although the information-retrieval process continues to warrant exploration, a strong foundational body of literature addresses many major questions.

In-process evaluation can also be applied to other student artifacts such as presentations, projects, or portfolios. There are three reasonably effective methods for conducting in-process evaluation:

An assignment given immediately at the end of an instruction session or sequence (summative), particularly one in which students can actually begin their searches (direct), takes minimal course faculty engagement but significant coordination, planning, and grading for librarians. Scored practical exercises are also effective measures of actual skills.

A survey administered after a period of time (summative, indirect) requires coordination with course instructors, and a reasonable guarantee that the students are available and willing to complete and return surveys. Self-reported surveys are not strong measures of actual learning.

A blog, journal, or portfolio captures elements of the writing process such as initial bibliographies, search journals, notes, and so on (formative and/or summative; direct).

The third method requires significant faculty buy-in and willingness to share student work [9, 11, 12, 40–42, 56, 60, 65]. Process portfolios are also usually feasible only when course projects themselves use a developmental approach that requires evidence such as drafts, journals, or milestone notes. Unfortunately, many process measures, including those in which course instructors are surveyed about what should be incorporated into an instructional session, are considered “non-measures” for the purpose of evaluating student learning [66, 67].

One of the most commonly used summative end-product approaches to evaluating student learning is the citation analysis method, in which an academic task culminates in a formally cited paper, project, presentation, and so on [10, 12, 41]. Citations are examined for standards of content and quality. This approach has two main strengths. The first is the idea that the paper or product itself is the ultimate goal, similar to the journal article publication of a research scientist. It is a real, authentic summative measure. Secondly, citation analysis is feasible in many academic settings and may require minimal coordination between the content instructor and library instructor.

Sharma's research at the University of Connecticut described a one-credit, stand-alone IL course that used a portfolio, with requirements to incorporate specific searching skills [12]. The primary course goal was to give students hands-on practice and to provide instructors with detailed information for assessment. Portfolios were evaluated with worksheets for each area covered, a rubric system with dimensions (areas to be evaluated) and defined ratings (needs improvement, good, or excellent). Aggregated results showed that a particularly difficult step in the process was in defining a topic in a way that was conducive to developing an effective search strategy [12]. This phenomenon is routinely observed among librarians who spend considerable time teaching students about appropriate “research questions” and “searchable questions.”

There are two related drawbacks to the end-product evaluation approach. One is that a final product does not provide information about the information retrieval process itself. Library instruction aims at producing searchers who are both effective and efficient. A student who is a poor searcher but a good evaluator, for example, could produce a high-quality citation list only after wading through hundreds of citations. An effective searcher would have achieved the same final results, but with much greater precision and, hence, economy of effort. Citation analysis loses this important information.

Another drawback to end-product citation analysis is the loss of those citations and resources that are used but may not appear on a final bibliography. An anthropological observation study by Edge showed that astronomers read, talked about, and were influenced by far more than just those citations that appeared in their formal papers [68]. Students are likewise influenced by items that they retrieve and read. Citation analysis also does not describe where the search process has failed: poor use of Boolean logic, ineffective application of limit options, poor article evaluation skills, and so on. In short, citation analysis overlooks the searching process and does not measure actual searching behavior or information retrieval skills. Table 3 summarizes the pros and cons of commonly used evaluation measures.

Table 3.

Summary of evaluation pros and cons

ALL MEASURES ARE NOT CREATED EQUAL

It is perhaps most important to distinguish between “what one wants to measure” and “what really is being measured.” While there are many important distinctions between direct testing and affective and behavioral approaches to evaluation, the complex relationships between measures remain unclear. A report in the field of engineering education, for instance, concluded that self-reports demonstrated a “very good relationship” to objective measures [69]. Weisskirch and Silveria reported that higher student confidence in the process (e.g., the ability to evaluate the quality of sources) correlated positively with higher grades on a final project [60]. Kisby reported that college students who rated their “online skills” as high also reported greater satisfaction with library services [64]. Lower self-assessed skills were associated with lower satisfaction and nonuse of services. In contrast to these findings, Schilling and Applegate found that attitudes neither predicted nor equaled skill [57]. Students' (n = 128) MEDLINE and information retrieval skills (graded search exercise) were not significantly correlated with any of the following:

student self-reported satisfaction with their searches (r = +0.12 not statistically significant)

confidence/comfort in their searches (r = +0.14 not statistically significant)

frustration with the information retrieval process (r = −0.09 not statistically significant)

Interestingly, students did know when they had learned something. Those who reported learning more during the training did achieve higher MEDLINE searching skills (r = +0.41 statistically significant) [57]. Contradictory research results leave unanswered questions about the efficacy of affective measures.
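The significance labels attached to these coefficients can be sanity-checked with a short calculation. The following is a minimal sketch, not the method of the cited study: it assumes that the reported values are Pearson correlations computed on all 128 students and that a two-sided test at the 0.05 level applies, and it uses the Fisher z approximation rather than an exact test. The function name `approx_p_value` is hypothetical.

```python
from math import atanh, sqrt
from statistics import NormalDist


def approx_p_value(r: float, n: int) -> float:
    """Approximate two-sided p-value for H0: rho = 0 (Fisher z transform)."""
    # Under H0, atanh(r) * sqrt(n - 3) is approximately standard normal.
    z = atanh(r) * sqrt(n - 3)
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


N = 128  # assumption: every coefficient is based on the full cohort

# Coefficients as reported in the text; labels are shortened for display.
reported = {
    "satisfaction": 0.12,
    "confidence/comfort": 0.14,
    "frustration": -0.09,
    "self-reported learning": 0.41,
}

for label, r in reported.items():
    p = approx_p_value(r, N)
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"{label}: r = {r:+.2f}, approx. p = {p:.3f} ({verdict})")
```

Under these assumptions, the three attitude coefficients fall short of the 0.05 threshold and the +0.41 coefficient clears it, which is consistent with the labels reported above.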

Affective measures are important because user satisfaction and self-confidence play into information behavior and skills development. At the same time, the common affective measures, like questionnaires and self-reporting skills surveys, cannot substitute for reliable measures of skills and knowledge. The challenge is to implement a balanced approach. This requires that librarians distinguish between and systematically apply appropriate non-measures of learning (faculty observations), indirect measures of learning (self-assessment of skills), and direct measures of learning (tests).

Another issue that emerged from the literature review concerns the nature of the statistical analysis. Most of the literature exclusively reports simple descriptive statistics. These statistics describe one variable at a time, independently of the others, and are not particularly effective for revealing complex relationships among multiple variables. Moving beyond simple descriptive analysis to the triangulation of data from multiple sources can show the extent to which different evaluation measures are interdependent, which evaluation measures have cause-and-effect relationships, and, most importantly for learning outcomes, what combinations of measures most accurately represent skills, knowledge, and learning. Librarians need not become statisticians themselves but can periodically consult with statisticians to identify options for uncovering deeper relationships within the myriad data that they may already have on hand. Tables 4 and 5 show statistical results from a previous study that illustrate the efficacy of tests, attitudes, reported behavior, and other variables for assessing learning [57]. These findings revealed relationships among multiple variables for understanding learning itself and attitudes about learning.
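The difference between describing one variable at a time and examining relationships among variables can be sketched in a few lines. The example below is illustrative only: the scores are invented toy numbers for eight hypothetical students, not data from any study, and the measure names (`exercise_score`, `written_test`, `confidence`) are assumptions chosen to echo the kinds of measures discussed here.

```python
import math
from statistics import mean


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


# INVENTED toy scores for eight hypothetical students (illustration only)
measures = {
    "exercise_score": [55, 62, 70, 48, 81, 66, 74, 59],  # graded search exercise
    "written_test": [60, 65, 72, 58, 78, 61, 80, 63],    # written skills test
    "confidence": [4, 5, 3, 5, 4, 2, 4, 5],              # self-rated, 1 to 5
}

# Descriptive statistics: each measure summarized on its own
descriptive = {name: mean(values) for name, values in measures.items()}

# Relational analysis: one coefficient for every pair of measures
names = list(measures)
correlations = {
    (a, b): pearson_r(measures[a], measures[b])
    for i, a in enumerate(names)
    for b in names[i + 1:]
}

for name, avg in descriptive.items():
    print(f"mean {name}: {avg:.1f}")
for (a, b), r in correlations.items():
    print(f"r({a}, {b}) = {r:+.2f}")
```

The means say nothing about whether students who feel confident also search well; only the pairwise coefficients address that question. With real data, a statistician would also need to consider sample size, measurement scale, and significance testing before drawing conclusions.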

Table 4.

Efficacy of evaluation measures*

Table 5.

Results of statistical correlations*

These results and the results of other studies reviewed above indicated that:

Practical exercises were the most efficacious way to document actual applied, practical skills.

Written tests were not the equivalent of a practical searching exercise in terms of measuring applied, practical skills.

Students were poor judges of their own skill levels. In fact, their attitudes evidenced the “satisfied but inept” phenomenon [62].

Students were excellent self-judges of their attitudes, feelings, beliefs, and perceptions. Certain attitudes went hand-in-hand, like confidence and satisfaction or frustration and dissatisfaction.

Attitudes, feelings, beliefs, and perceptions were not indicative of actual knowledge and learning.

This level of statistical analysis provided evidence upon which to make conclusions about the impact of library training on learners' information usage, attitudes, skills, and behavior and, ultimately, on student learning and achievement of educational outcomes.

DISCUSSION AND CONCLUSION

This paper provides an overview of current approaches to evaluating library instruction and students' achievement of educational outcomes, describing the most commonly used evaluation measures among libraries and presenting pros and cons or benefits and barriers of each. This paper also illustrates the significant disparities between measures of skills, knowledge, attitudes, and information usage behaviors. While affective measures are more common, they are not likely to provide meaningful evidence in terms of students' skills, course grades, or learning outcomes. Yet, attitudes do remain important in the larger context. Librarians want students to feel comfortable and confident in the library and to learn to identify their own limitations and learning needs. Academic assessment protocols strongly urge the incorporation of direct testing measures, such as objective tests or expert reviews of performances (searches), artifacts, or portfolios [70]. Although every evaluation measure has value, retention of learning is evidenced most efficaciously through demonstrated skills that are assessed longitudinally. When direct measures, such as tests, are expensive, cumbersome to implement, or difficult to analyze, indirect measures are often accepted as viable alternatives. Evaluators must avoid confusing “material” satisfaction (whether the learner demonstrated effective skills) with “emotional” satisfaction (how the learner felt about his or her skills). One must also consider the validity of measures. This requires a deliberate and strategic approach to evaluation, asking: What questions do we have? What evidence do we need? What can we realistically evaluate? What data can we realistically collect? Through what methods and tools or instruments can these data be realistically collected?

Libraries continue to invest significant time and attention in educational programs and know that systematic and sequential information literacy education is important to problem solving, critical thinking, and lifelong learning. Likewise, librarians must evaluate their training and educational program outcomes systematically and sequentially with rigorous research methods and measures. The goal of effective evaluation remains to implement what is known about the efficacy of evaluation methods, measures, and tools; weigh the pros and cons, benefits and barriers of each; and acknowledge the required compromises for feasibility, practicality, and affordability. The efficacy of the evaluation measures employed will continue to have a major impact on what questions are answered and unanswered, and on what evidence is available to quantify the educational value of library instruction.

Footnotes

This article has been approved for the Medical Library Association's Independent Reading Program <http://www.mlanet.org/education/irp/>.

REFERENCES

- 1. New England Association of Schools and Colleges. Standards for accreditation. Winchester, MA: New England Association of Schools and Colleges, Commission on Institutes of Higher Education; 1992.

- 2. Southern Association of Colleges and Schools. Criteria for accreditation: commission on colleges. Atlanta, GA: Southern Association of Colleges and Schools; 1992.

- 3. Association of American Medical Colleges. Medical School Objectives Project (MSOP): report IV: contemporary issues in medicine: basic science and clinical research [Internet] The Association; 2001 Aug. 24. p. [cited 29 Apr 2012]. <https://www.aamc.org/initiatives/msop>.

- 4. Association of American Medical Colleges. Physicians for the twenty-first century: report of the panel on the General Professional Education of the Physician and college preparation for medicine (GPEP) J Med Educ. 1984;59(11, 2):1–208.

- 5. American Library Association, Association of College and Research Libraries. Information literacy competency standards for higher education: standards, performance indicators, and outcomes [Internet] The Association; 2000. 23 paragraphs. [cited 29 Apr 2012]. <http://www.ala.org/acrl/standards/informationliteracycompetency>.

- 6. Kent State University. Project SAILS (Standardized Assessment of Information Literacy Skills) [Internet] The University; 2000–2012 [cited 29 Apr 2012]. <https://www.projectsails.org>.

- 7. Lym B, Grossman H, Yannotta L, Talih M. Assessing the assessment: how institutions administered, interpreted, and used SAILS. Ref Serv Rev. 2009;38(1):168–86.

- 8. Cameron L, Wise S.L, Lottridge S.M. The development and validation of the information literacy test. Coll Res Lib. 2007 May;68(3):229–36.

- 9. Burkhardt J.M. Assessing library skills: a first step to information literacy. Portal: Lib Acad. 2007;7(1):25–49.

- 10. Scharf D, Elliot N, Huey H.A, Briller V, Joshi K. Direct assessment of information literacy using writing portfolios. J Acad Librariansh. 2007 Jul;33(4):462–77.

- 11. Polkinghorne S, Wilton S. Research is a verb: exploring a new information literacy-embedded undergraduate research methods course. Can J Inf Lib Sci. 2010 Dec;34(4):457–73.

- 12. Sharma S. Perspectives on: from chaos to clarity: using the research portfolio to teach and assess information literacy skills. J Acad Librariansh. 2007 Jan;33(1):127–35.

- 13. Rui W. The lasting impact of a library credit course. Portal: Lib Acad. 2006;6(1):79–92.

- 14. Breivik P.S. Putting libraries back in the information society. Am Lib. 1985 Nov;16:723.

- 15. Breivik P.S. Making the most of libraries in the search for academic excellence. Change. 1987 Jul–Aug;19:46.

- 16. Breivik P.S, Gee E.G. Information literacy: revolution in the library. New York, NY: Macmillan; 1989.

- 17. Mellon C.A. Process not product in course-integrated instruction: a generic model of library research. Coll Res Lib. 1984 Nov;45(6):471–8.

- 18. Mellon C.A, editor. Bibliographic instruction: the second generation. Englewood, CO: Libraries Unlimited; 1987.

- 19. Nowicki S. Information literacy and critical thinking in the electronic environment. J Instr Deliv Syst. 1999 Winter;13(1):25–8.

- 20. Foust J.E, Tannery N.H, Detlefsen E.G. Implementation of a web-based tutorial. Bull Med Lib Assoc. 1999 Oct;87(4):477–9.

- 21. Kelley K.B, Orr G.L, Houck J, Schweber C. Library instruction for the next millennium: two web-based courses to teach distant students information literacy. J Lib Adm. 2001;32(1–2):281–94.

- 22. Lindsay E.B. Distance teaching: comparing two online information literacy courses. J Acad Librariansh. 2004;30:482–7.

- 23. Maughan P.D. Assessing information literacy among undergraduates: a discussion of the literature and the University of California–Berkeley assessment experience. Coll Res Lib. 2001;62(1):71–85.

- 24. Onwuegbuzie A.J, Jiao Q.G. The relationship between library anxiety and learning styles among graduate students: implications for library instruction. Lib Inf Sci Res. 1998;20(3):235–49.

- 25. Ren W.H. Library instruction and college student self-efficacy in electronic information searching. J Acad Librariansh. 2000;26(5):323–8.

- 26. Stamatoplos A, Mackoy R. Effects of library instruction on university students' satisfaction with the library: a longitudinal study. Coll Res Lib. 1998;59(4):323–34.

- 27. Schilling K. Information retrieval skills development in electronic learning environments: the impact of training method on students' learning outcomes, information usage patterns, and attitudes. IADIS Int J WWW/Internet. 2006;4(1):27–42.

- 28. Green B.F, Lin W, Nollan R. Web tutorials: bibliographic instruction in a new medium. Med Ref Serv Q. 2006;25(1):83–91. doi: 10.1300/J115v25n01_08.

- 29. Lindsay E.B, Cummings L, Johnson C.M, Scales B.J. If you build it, will they learn? assessing online information literacy tutorials. Coll Res Lib. 2006;67(5):429–45.

- 30. Shute S.J, Smith P.J. Knowledge-based search tactics. Inf Process Manag. 1993;29(1):29–45.

- 31. Lindsay E.B. Distance teaching: comparing two online information literacy courses. J Acad Librariansh. 2004;30:482–7.

- 32. Fafeita J. The current status of teaching and fostering information literacy in Tafe. Aust Acad Res Lib. 2006;37(2):136–61.

- 33. Oakleaf M. Dangers and opportunities: a conceptual map of information literacy assessment approaches. Portal: Lib Acad. 2008;8(3):233–53.

- 34. Oakleaf M. Are they learning? are we? learning outcomes and the academic library. Lib Q. 2011 Jan;2011(81):61–82.

- 35. Zhang L, Watson E.M, Banfield L. The efficacy of computer-assisted instruction versus face-to-face instruction in academic libraries: a systematic review. J Acad Librariansh. 2007 Jul;33(4):478–84.

- 36. Swoger B.J.M. Closing the assessment loop using pre- and post-assessment. Ref Serv Rev. 2011 May;39(2):244–59.

- 37. Hufford J.R. What are they learning? pre- and post-assessment surveys for LIBR 1100, Introduction to Library Research. Coll Res Lib. 2010 Mar;71(2):139–58.

- 38. Zoellner K, Samson S, Hines S. Continuing assessment of library instruction to undergraduates: a general education course survey research project. Coll Res Lib. 2008 Jul;69(4):370–83.

- 39. Staley S.M, Branch N.A, Hewitt T.L. Standardised library instruction assessment: an institution-specific approach. Info Res [Internet] 2010 Sep;15(3) [cited 12 Jun 2012]. <http://www.informationr.net/ir/15-3/paper436.html>.

- 40. Gilstrap D.L, Dupre J. Assessing learning, critical reflection, and quality educational outcomes: the critical incident questionnaire. Coll Res Lib. 2008 Sep;69(6):407–26.

- 41. Barratt C.C, Nielsen K, Desmet C, Balthazor R. Collaboration is key: librarians and composition instructors analyze student research and writing. Portal: Lib Acad. 2009;9(1):37–46.

- 42. Cmor D, Chan A, Kong T. Course-integrated learning outcomes for library database searching: three assessment points on the path to evidence. Evid Based Libr Inf Pract. 2010;5(1):64–81.

- 43. Bronshteyn K, Baladad R. Librarians as writing instructors: using paraphrasing exercises to teach beginning information literacy students. J Acad Librariansh. 2006;32(5):533–6.

- 44. Figa E, Bone T, MacPherson J.R. Faculty-librarian collaboration for library services in the online classroom: student evaluation results and recommended practices for implementation. J Lib Info Serv Dist Learn. 2009;3(2):67–102.

- 45. Blummer B. Assessing patron learning from an online library tutorial. Community Jr Coll Lib. 2007;14(2):121–38.

- 46. Hernon P. What really are student learning outcomes? pushing the edge: explore, engage, extend. 2009. pp. 28–35. Proceedings of the Fourteenth National Conference of the Association of College and Research Libraries; Seattle, WA.

- 47. Canick S. Legal research [instruction] assessment. Legal Ref Serv Q. 2009;28:201–17.

- 48. Bates M.J. Search and idea tactics. In: White H.D, Bates M.J, Wilson P, editors. For information specialists: interpretations of reference and bibliographic work. Norwood, NJ: Ablex; 1992. pp. 183–200.

- 49. Fidel R. Moves in online searching. Online Rev. 1985;9(1):61–74.

- 50. Pao M.L, Grefsheim S.F, Barclay M.L, Wooliscroft J.O, McQuillan M, Shipman B.L. Factors affecting students' use of MEDLINE. Comput Biomed Res. 1993;26:541–55. doi: 10.1006/cbmr.1993.1038.

- 51. Pao M.L, Grefsheim S.F, Barclay M.L, Wooliscroft J.O, Shipman B.L, McQuillan M. Effect of search experience on sustained MEDLINE usage by students. Acad Med. 1994;69(11):914–9. doi: 10.1097/00001888-199411000-00014.

- 52. Wildemuth B.M, Moore M.E. End user searching of Medline. Final report. Washington, DC: Council on Library Resources; 1993 Aug.

- 53. Wildemuth B.M, Moore M.E. End-user search behaviors and their relationship to search effectiveness. Bull Med Lib Assoc. 1995 Jul;83(3):294–304.

- 54. Rosenberg W.M.C, Deeks J, Lusher A, Snowball R, Dooley G, Sackett D. Improving searching skills and evidence retrieval. J Royal Coll Physicians. 1998;32(6):557–63.

- 55. Burrows S.C, Tylman V. Evaluating medical student searches of MEDLINE for evidence-based information: process and application of results. Bull Med Lib Assoc. 1999 Oct;87(4):471–6.

- 56. Sobel K, Wolf K. Updating your tool belt: redesigning assessments of learning in the library. Ref User Serv Q. 2011;50(3):245–58.

- 57. Schilling K, Applegate R.A. Evaluating library instruction: measures for assessing educational quality and impact. 2007. pp. 206–14. Proceedings of the Thirteenth National Conference of the Association of College and Research Libraries; Baltimore, MD.

- 58. Appelt K.M, Pendell K. Assess and invest: faculty feedback on library tutorials. Coll Res Lib. 2010 May;71(3):245–53.

- 59. Ariew S, Lener E. Evaluating instruction: developing a program that supports the teaching librarian. Res Strateg. 2007;20:506–15.

- 60. Weisskirch R.S, Silveria J.B. The effectiveness of project-specific information competence instruction. Res Strateg. 2007;20:370–8.

- 61. Crawford J. The use of electronic information services and information literacy: a Glasgow Caledonian University study. J Librariansh Inf Sci. 2006;38(1):33–44.

- 62. Plutchak T.S. On the satisfied and inept end user. Med Ref Serv Q. 1989 Spring;8(1):45–8.

- 63. Schilling K, Wiecha J, Polineni D, Khalil S. An interactive web-based curriculum on evidence-based medicine: design and effectiveness. Fam Med. 2006 Feb;38(2):126–32.

- 64. Kisby C.M. Self-assessed learning and user-satisfaction in regional campus libraries. J Acad Librariansh. 2011;37(6):523–31.

- 65. Xin W, Kwangsu C. Computational linguistic assessment of genre differences focusing on text cohesive devices of student writing: implications for library instruction. J Acad Librariansh. 2010 Nov;36(6):501–10.

- 66. Palomba C.A, Banta T.W. Assessment essentials: planning, implementing, and improving assessment in higher education. San Francisco, CA: Jossey-Bass; 1999.

- 67. Applegate R. Models of user satisfaction: understanding false positives. RQ. 1993;32(4):525–39.

- 68. Edge D. Quantitative measures of communication in science: a critical review. History Sci. 1979;17(35):102–34. doi: 10.1177/007327537901700202.

- 69. Lattuca L, Terenzini P.T, Volkwein J.F. Engineering change: a study of the effect of Ec2000: executive summary [Internet] ABET; 2006 [cited 29 Apr 2012]. <http://www.abet.org/uploadedFiles/Publications/Special_Reports/EngineeringChange-executive-summary.pdf>.

- 70. Suskie L. Assessing student learning: a common sense guide. San Francisco, CA: Jossey-Bass; 2009.