INTRODUCTION

Evidence-based medicine (EBM) is an important component of an undergraduate medical education curriculum that promotes lifelong learning and critical thinking [1]. Defined as “the conscientious, explicit, and judicious use of current best evidence in making decisions about the care of individual patients” [2], EBM can be considered another important clinical tool, not unlike the stethoscope. The authors designed and assessed an innovative EBM course for third-year medical students to strengthen the EBM curriculum for undergraduate medical education.

BACKGROUND

Previous studies have advocated real-time teaching of EBM during attending rounds, in a case-based format with role playing or with standardized patients [3–5]. At the same time, brief training in literature searching and critical appraisal skills has been shown to be effective, and interactive workshops have been suggested as an ideal format for medical students [6–8]. At many academic medical centers, including the authors', constraints on resident and student duty hours and increased demands for productivity from clinical teaching faculty have made adding formal curricula to the clinical services problematic. In an effort to combine an interactive learning environment with core topics in EBM, we devised a multidisciplinary, case-based seminar series that is team-taught by clinicians and a librarian and is designed to be interactive, engaging, and clinically relevant.

METHODS

Designed by a medical librarian and a physician (Schardt and Gagliardi), the EBM course is intended to provide an interactive forum for students to develop skills in practicing EBM in the care of patients. The first version of the EBM course was offered as a noncredit elective to 8 third-year medical students in 2006 and consisted of six 90-minute sessions conducted over the course of the spring semester. After experimenting with a 10-session format, we settled on six 120-minute sessions conducted on consecutive Thursday evenings, from 5:00 p.m. to 7:00 p.m.

After its third iteration in 2008, the course became a credit-bearing elective, which required opening it to fourth-year as well as third-year medical students. Since the 2008/09 academic year, the EBM course has attracted approximately forty students per year. The current study, with pre- and post-course assessments of knowledge as well as attitudes, was conducted during the 2008/09 academic year.

The EBM course is designed to optimize student readiness and participation, faculty willingness to teach, and instructional strategies to maximize retention and use of EBM skills, with key features outlined below.

1. Timing in the curriculum

At our institution, medical students complete their required clinical clerkships during the second year. The EBM course is designed for students who are pursuing academic endeavors during the third year, which is dedicated to research. This is optimal timing because students have completed their required clinical clerkships and, therefore, have had some patient care experience along with the opportunity to observe and practice EBM in a variety of settings. Additionally, as they are about halfway through their scholarly research activity year, they are cognizant of some of the logistical and practical issues involved in designing and conducting scientific research.

2. Course organization and faculty

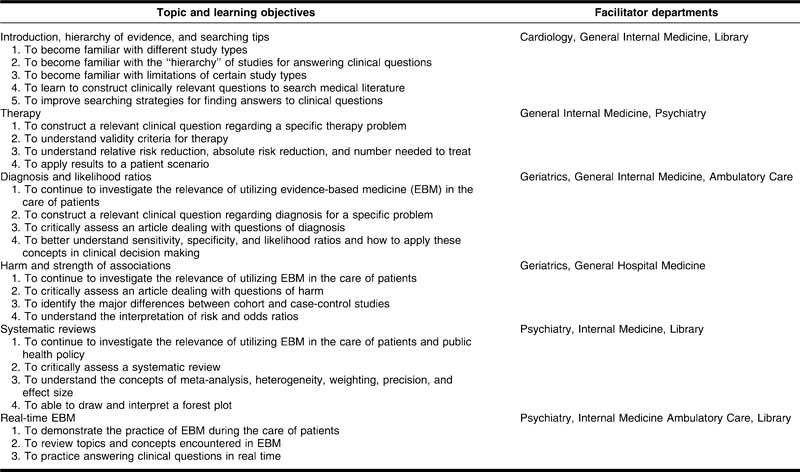

Table 1 shows the course outline. The introductory session provides an overview of the EBM framework and the basics of study design. The subsequent four sessions are case based and teach relevant issues pertaining to the retrieval, appraisal, and application of systematic reviews and of studies of therapy, diagnosis, and harm. The final session is designed to demonstrate the real-time application of EBM skills. Clinicians from the emergency department and in-patient units take students through actual clinical cases they have encountered and demonstrate how they used EBM to contribute to their care of actual patients. Each case usually takes five to fifteen minutes and effectively demonstrates that EBM can be incorporated into clinical practice.

Table 1. Evidence-based medicine course curriculum

We rely on a diverse faculty with a broad spectrum of clinical interests and experience. Facilitators are clinically based and recruited from departments and disciplines in which they are likely to have encountered many of the students in clinical settings. In this way, no one faculty member is responsible for delivering all of the content, students benefit from witnessing the use of EBM skills by a variety of clinicians and role models, and faculty members have remained receptive to repeat solicitations for participation since 2006. The two course directors attend all sessions and provide continuity and clarification throughout the course.

3. Interactive teaching strategies

Research has demonstrated the importance of active participation for optimal learning about EBM [9]. Instructors in our EBM course are encouraged to adopt as many interactive strategies as possible. Instructors are recruited from a group of faculty with interest in and enthusiasm for teaching EBM. All faculty have participated as tutor-trainees and/or tutors in the annual “Duke Evidence-Based Medicine Workshop: Teaching and Leading EBM.” This workshop focuses on developing facilitation and interactive teaching skills to promote the practice of EBM.

Another strategy we use for ongoing faculty development is a team-teaching approach, in which we try to pair experienced teachers with more novice teachers. In these sessions, the junior teacher is encouraged to take a lead role in developing and teaching the session, while the experienced teacher is available for guidance and to model interactive strategies.

One important component is the informal setting and tone instructors adopt with learners, which encourages interactive banter and case-based discussions. We provide food, such as pizza or sandwiches. In the large group setting, the audience response system (TurningPoint) has been useful to project multiple-choice queries that quickly and anonymously assess student knowledge, interject humor, and keep learners engaged. Some session leaders use props, such as two different candies in sealed envelopes to illustrate randomization, shooting basketball hoops or tossing a coin to demonstrate chance and the concepts underlying odds, or throwing darts to illustrate sampling error and the difference between precision and accuracy. Session leaders may call on students to volunteer to participate in role playing or visual demonstrations of study design. Using nonclinical examples is another strategy that keeps the tone interactive and humorous. This allows the instructors to focus on the process of EBM rather than the science of medicine. A cornerstone method of active participation involves breaking the large group into small groups to practice with validity criteria and mathematical calculations or debate about the results and application of a study. After breaking into small groups, the large group reconvenes and small groups report on their discussions.

4. Continuous use of student feedback

Students are requested to complete a written evaluation at the conclusion of each session. The evaluation asks for an overall rating of the session and then asks what is the most important point that the student learned, whether any points are still confusing, what was the most significant weakness of the session, what was the most significant strength of the session, and any other feedback that the student would like to share. One author (Gagliardi) compiles all of the responses to the evaluations in an Excel workbook and provides the compiled responses to the faculty leaders for that session as well as for the upcoming session. This allows course directors to identify and then, during sessions, clarify or expand on points that students found confusing.

5. Course leadership

The EBM course is directed by a medical librarian (Schardt) and a clinician (Gagliardi), who work together to develop the curriculum, identify appropriate faculty, schedule the classes, organize the media and audience response system slides, analyze feedback, attend each session, and co-teach sessions. This effective collaboration developed out of the library's presence and persistence in a variety of clinical education venues and its active role in developing a framework for teaching EBM in the Medical School curriculum [10].

OUTCOMES

Previous studies have assessed the impact of EBM courses on learner perceptions and knowledge [5–7, 11–13]. Our assessment included a feedback survey and the Berlin questionnaire, a validated instrument for measuring knowledge and skills in EBM. Compared with the Fresno test, which emphasizes some of the qualitative aspects of EBM and requires a scoring rubric and some subjective judgment as to the correctness of an individual answer [14], the Berlin questionnaire more closely reflects our curriculum and emphasizes the quantitative aspects of EBM with an objective scoring system [13]. Berlin questionnaire forms A and B are two different fifteen-question, multiple-choice tests of EBM knowledge and skills that have been previously validated in assessments of educational interventions in EBM [13]. Scores obtained on form A are comparable to scores obtained on form B, so administering one form before the course and the other after it minimizes the possibility of bias from learning the instrument. Permission to use the Berlin questionnaires was granted by R. Kunz, one of the developers of the instrument. We also designed survey questions to assess student attitudes and confidence regarding EBM skills (Appendix, online only).

Students were required to complete the survey of their attitudes about EBM along with the Berlin questionnaire form A before the start of the EBM course. At the conclusion of the EBM course, students were required to complete the Berlin questionnaire form B. Five months after the course, students were asked to complete an online, anonymous survey of their attitudes about EBM. SurveyMonkey <www.surveymonkey.com> was used to administer the pre- and post-course questionnaire and survey. Student responses were tracked for purposes of completion, but individual responses were not identifiable. Responses to surveys and questionnaires were not viewed until after completion of the course and submission of grades. Study procedures were approved as exempt from institutional review board review in accordance with Title 45 of the Code of Federal Regulations, Part 46.101(b), given the objective to evaluate a method of educational instruction, and collected data were stripped of any identifiers before analysis.

RESULTS

A total of 30 medical students (17 female, 13 male; 18 fourth-year students, 12 third-year students) signed up to take the course. The median overall percent correct score on the Berlin questionnaire form A, for the 29 students who completed the course, was 53% (minimum 7%, maximum 87%). The median overall percent correct score on the Berlin questionnaire form B, for the 29 students who completed the course, was 80% (minimum 40%, maximum 100%). Five students performed worse on the post-course assessment; 3 students' scores did not change; and 21 students demonstrated improvement on the post-course assessment. Median change scores and median survey scores were compared, and P-values were determined using the Wilcoxon signed rank test with one exception: The domain of meta-analysis was a dichotomous variable and was compared using McNemar's test. The median difference between overall scores from pre- to post-course administrations was 13% (minimum −13%, maximum 73%), which was statistically significant (P<0.001). Scores for individual domains were significantly better for diagnosis, therapy, and prognosis but not for study design or meta-analysis.
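For readers unfamiliar with the paired comparison described above, the following is a minimal sketch of a two-sided Wilcoxon signed rank test in Python. It is an illustration only, not the analysis code used in this study: the function name `wilcoxon_signed_rank` and the example scores are hypothetical, and the sketch assumes a normal approximation without continuity correction, with zero differences dropped and tied ranks averaged.

```python
import math
from collections import Counter


def wilcoxon_signed_rank(pre, post):
    """Two-sided Wilcoxon signed rank test using a normal approximation.

    Assumptions of this sketch: zero differences are dropped, tied
    absolute differences share their average rank, and no continuity
    correction is applied.
    """
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank the absolute differences, averaging ranks within tie groups.
    ordered = sorted(abs(d) for d in diffs)
    rank_of = {}
    i = 0
    while i < n:
        j = i
        while j < n and ordered[j] == ordered[i]:
            j += 1
        rank_of[ordered[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        i = j

    w_plus = sum(rank_of[abs(d)] for d in diffs if d > 0)
    w_minus = sum(rank_of[abs(d)] for d in diffs if d < 0)

    # Normal approximation with a tie correction to the variance.
    mean = n * (n + 1) / 4
    ties = Counter(ordered).values()
    var = n * (n + 1) * (2 * n + 1) / 24 - sum(t**3 - t for t in ties) / 48
    z = (min(w_plus, w_minus) - mean) / math.sqrt(var)
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return w_plus, w_minus, p_value


# Hypothetical numbers of correct answers (out of 15) for six students.
pre_scores = [8, 7, 10, 6, 9, 11]
post_scores = [12, 9, 10, 11, 8, 14]
print(wilcoxon_signed_rank(pre_scores, post_scores))
```

With very small samples such as this hypothetical one, an exact test is generally preferable to the normal approximation; the approximation is used here only to keep the sketch short.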

Five months after taking the course, 16 students returned surveys assessing their attitudes regarding EBM. Among these students, their self-reported confidence had improved in formulating a patient-focused clinical question (P = 0.002); assessing the results of a therapy article (P = 0.02); calculating absolute risk reduction, relative risk reduction, and number needed to treat (P = 0.02); and applying the results of studies to patient care (P = 0.002). Student confidence in searching the literature and using likelihood ratios did not increase.
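The therapy calculations named above can be illustrated with a short worked example. This is a sketch under stated assumptions rather than course material: the function name `treatment_effect` and the trial counts are hypothetical, and exact fractions are used so that the rounding of the number needed to treat is not affected by floating-point error.

```python
import math
from fractions import Fraction


def treatment_effect(control_events, control_total, treated_events, treated_total):
    """Return (ARR, RRR, NNT) from event counts in two trial arms.

    ARR = control event rate - experimental event rate
    RRR = ARR / control event rate
    NNT = 1 / ARR, rounded up; None when the treatment shows no benefit.
    """
    cer = Fraction(control_events, control_total)   # control event rate
    eer = Fraction(treated_events, treated_total)   # experimental event rate
    if cer == 0:
        raise ValueError("control event rate is zero; RRR is undefined")
    arr = cer - eer
    rrr = arr / cer
    nnt = math.ceil(1 / arr) if arr > 0 else None
    return arr, rrr, nnt


# Hypothetical trial: 20 of 100 control patients and 15 of 100 treated
# patients experience the outcome.
arr, rrr, nnt = treatment_effect(20, 100, 15, 100)
print(f"ARR = {float(arr):.2f}, RRR = {float(rrr):.2f}, NNT = {nnt}")
```

In this hypothetical trial the absolute risk reduction is 5 percentage points, the relative risk reduction is 25%, and 20 patients would need to be treated to prevent one additional outcome.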

DISCUSSION

Basic, patient-centered EBM skills were a major focus of the seminar's curriculum, and we were gratified to learn that students had gained not only in their abilities, but also their confidence in applying EBM to their patients. We noted that student confidence in searching the medical literature was not improved. As a result of these findings, we changed the previous self-paced, web-based tutorial in searching the medical literature to a small-group, hands-on, required activity during the orientation to the clinical year. We also noted that student confidence in using likelihood ratios did not increase, and we have focused efforts on enhancing this topic in subsequent courses. During the 2009 EBM course, the course directors were not aware of student answers or scores on any assessment until well after the course was completed. In future years, it may be possible to use the Berlin questionnaire as a diagnostic tool to target relative strengths and weaknesses among students taking the course.
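Because likelihood ratios were a point of lower student confidence, a brief worked example may be useful. The sketch below is illustrative only and is not drawn from the course materials: the function names and the test characteristics are hypothetical, and sensitivity, specificity, and pre-test probability are assumed to lie strictly between 0 and 1.

```python
def likelihood_ratios(sensitivity, specificity):
    """Return (LR+, LR-) for a diagnostic test.

    Assumes both inputs lie strictly between 0 and 1.
    """
    lr_positive = sensitivity / (1 - specificity)
    lr_negative = (1 - sensitivity) / specificity
    return lr_positive, lr_negative


def post_test_probability(pre_test_probability, likelihood_ratio):
    """Convert a pre-test probability to a post-test probability via odds."""
    pre_test_odds = pre_test_probability / (1 - pre_test_probability)
    post_test_odds = pre_test_odds * likelihood_ratio
    return post_test_odds / (1 + post_test_odds)


# Hypothetical test with 90% sensitivity and 80% specificity, applied to a
# patient whose pre-test probability of disease is 25%.
lr_pos, lr_neg = likelihood_ratios(0.90, 0.80)
print(f"LR+ = {lr_pos:.2f}, LR- = {lr_neg:.3f}")
print(f"Post-test probability after a positive result: "
      f"{post_test_probability(0.25, lr_pos):.2f}")
```

The conversion through odds is the step students most often skip: the likelihood ratio multiplies the pre-test odds, not the pre-test probability.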

Though we did not collect formal feedback from faculty members, it is worth noting that we have had enthusiastic repeat participation from many of our instructors, and we have been able to use the course as a venue for faculty to develop their EBM and interactive teaching strategies.

In summary, key innovative features of the EBM course include flexibility, a multidisciplinary faculty that includes a librarian, an after-hours schedule, a goal of maximizing interactivity in every session, and placement in the curriculum after required clerkships to maximize clinical relevance. Medical student performance has been evaluated using the Berlin questionnaire, which demonstrated that EBM knowledge and skills improved after our six-week course. This EBM course can serve as a model for efficient, cost-effective delivery of important clinically relevant EBM content.

Footnotes

A supplemental appendix is available with the online version of this journal.

REFERENCES

- 1. Welch H.G, Lurie J.D. Teaching evidence-based medicine: caveats and challenges. Acad Med. 2000 Mar;75(3):235–40. doi: 10.1097/00001888-200003000-00010.

- 2. Sackett D.L, Rosenberg W.M, Gray J.A, Haynes R.B, Richardson W.S. Evidence based medicine: what it is and what it isn't: it's about integrating individual clinical expertise and the best external evidence. BMJ. 1996 Jan 13;312(7023):71–2. doi: 10.1136/bmj.312.7023.71.

- 3. McGinn T, Seltz M, Korenstein D. A method for real-time, evidence-based general medical attending rounds. Acad Med. 2002 Nov;77(11):1150–2. doi: 10.1097/00001888-200211000-00019.

- 4. Korenstein D, Dunn A, McGinn T. Mixing it up: integrating evidence-based medicine and patient care. Acad Med. 2002 Jul;77(7):741–2. doi: 10.1097/00001888-200207000-00028.

- 5. Davidson R.A, Duerson M, Romrell L, Pauly R, Watson R.T. Evaluating evidence-based medicine skills during a performance-based examination. Acad Med. 2004 Mar;79(3):272–5. doi: 10.1097/00001888-200403000-00016.

- 6. Gruppen L.D, Rana G.K, Arndt T.S. A controlled comparison study of the efficacy of training medical students in evidence-based medicine literature searching skills. Acad Med. 2005 Oct;80(10):940–4. doi: 10.1097/00001888-200510000-00014.

- 7. Norman G.R, Shannon S.I. Effectiveness of instruction in critical appraisal (evidence-based medicine) skills: a critical appraisal. CMAJ. 1998 Jan;158(2):177–81.

- 8. Ellis P, Green M, Kernan W. An evidence-based medicine curriculum for medical students: the art of making focused clinical questions. Acad Med. 2000 May;75(5):528. doi: 10.1097/00001888-200005000-00051.

- 9. Kunz R, Wegscheider K, Fritsche L, Schünemann H.J, Moyer V, Miller D, Boluyt N, Falck-Ytter Y, Griffiths P, Bucher H.C, Timmer A, Meyerrose J, Witt K, Dawes M, Greenhalgh T, Guyatt G.H. Determinants of knowledge gain in evidence-based medicine short courses: an international assessment. Open Med [Internet] 2010;4(1):e3–e10. doi: 10.2174/1874104501004010003. [cited 14 Jun 2012]. <http://www.openmedicine.ca/article/viewArticle/299/291>.

- 10. Schardt C, Powers A, von Isenburg M. Weaving evidence-based medicine into the school of medicine curriculum: the library's role in developing evidence-based clinicians. Presented at MLA '07, the Medical Library Association 107th Annual Meeting; Philadelphia, PA; May 2007.

- 11. Dobbie A.E, Schneider F.D, Anderson A.D, Littlefield J. What evidence supports teaching evidence-based medicine. Acad Med. 2000 Dec;75(12):1184–5. doi: 10.1097/00001888-200012000-00010.

- 12. Kasuya R.T, Sakai D.H. An evidence-based medicine seminar series. Acad Med. 1996 May;71(5):548–9. doi: 10.1097/00001888-199605000-00073.

- 13. Fritsche L, Greenhalgh T, Falck-Ytter Y, Neumayer H.H, Kunz R. Do short courses in evidence based medicine improve knowledge and skills? validation of Berlin questionnaire and before and after study of courses in evidence based medicine. BMJ. 2002 Dec 7;325(7376):1338–41. doi: 10.1136/bmj.325.7376.1338.

- 14. Ramos K.D, Schafer S, Tracz S.M. Validation of the Fresno test of competence in evidence based medicine. BMJ. 2003 Feb 8;326(7384):319–21. doi: 10.1136/bmj.326.7384.319.
