Abstract

Face aftereffects (FAEs) are generally thought of as being a visual phenomenon. However, recent studies have shown that people can haptically recognize a face. Here, I report a haptic, rather than visual, FAE. By using three-dimensional facemasks, I found that haptic exploration of the facial expression of the facemask causes a subsequently touched neutral facemask to be perceived as having the opposite facial expression. The results thus suggest that FAEs can also occur in haptic perception of faces.

Keywords: face aftereffect, haptics

Face adaptation is a powerful paradigm for investigating the neural representations involved in face processing. One measure of such adaptation is the face aftereffect (FAE), in which adaptation to a visually presented face belonging to a facial category, such as expression or identity, causes a subsequently presented neutral face to be perceived as belonging to the opposite facial category (Webster et al 2004). The finding of larger FAEs for upright than for inverted faces is consistent with a locus in the fusiform face area (Rhodes et al 2009). FAEs are likely associated with adaptation of face-selective neurons in higher-level areas of the visual cortex, although this has yet to be demonstrated.

Face adaptation is generally assumed to occur for complex visual attributes that are products of higher-order processing. This assumption appears to be based on the experience that people usually recognize a face through sight. However, recent studies have shown that people are also able to haptically classify facial expressions (eg, Lederman et al 2007), thus suggesting that face processing is not unique to vision. Here, I show that haptic exploration of the facial expression in a three-dimensional facemask can cause significant bias in the perception of a subsequently touched neutral facemask.

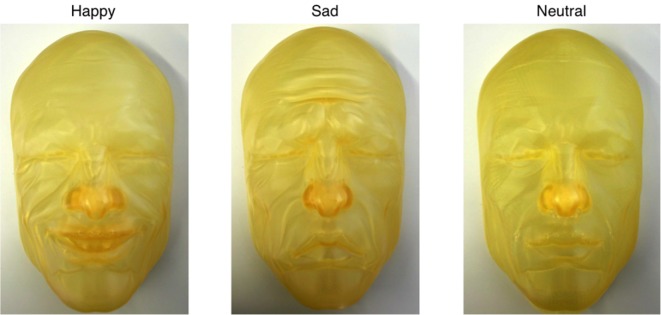

An exemplar of the front view of a male face was taken from a human model included in computer graphics (CG) software. Three types of facial expressions (happy, sad, and neutral) were generated from the exemplar using this CG software, and masks of these faces were made from epoxy-cured resin for use in the experiments (Figure 1).

Figure 1.

Facemasks depicting happy, sad, and neutral faces, respectively.

Twelve male participants aged 22–39 were tested in the experiments.

As a pilot experiment, I tested whether, without learning, the participants could distinguish correctly between two emotional expressions (happiness and sadness) by haptically exploring the facemasks with their eyes closed. The participants were instructed to classify the presented facial expression verbally by using one of two alternatives: happiness or sadness. The pilot test confirmed that all participants could distinguish correctly between the happy and sad facemasks, even without learning. After the trials, the participants were asked to report on the facial regions that they touched during expression recognition. Their answers indicated that the areas around the eyes–eyebrows and mouth–lips were important for haptic judgment of facial expression. These findings are consistent with those of Lederman et al (2007).

Two main experiments were then conducted: one without adaptation and the other with adaptation. In the no-adaptation experiment, participants explored a test facemask for 5 s without prior adaptation. The test facemask was randomly chosen from three facemasks (happy, sad, and neutral). Each participant explored each test facemask once with a 1-min break between trials. The percentage of sad responses from participants was calculated for each test facemask.

In the adaptation experiment, an adaptation facemask was positioned on the left side of a table in front of the participant, and a test facemask was placed on the right. During adaptation, participants haptically explored the adaptation facemask with their eyes closed for 20 s, after which they haptically explored the test facemask for 5 s. Participants were then asked to classify the test facemask as either happy or sad. The adaptation experiment was performed under two adaptation conditions: (1) with adaptation to a happy facemask and (2) with adaptation to a sad facemask. In both cases, the expression of the test facemask was neutral. Each participant explored the test facemask once for each adaptation condition with a 1-min break between tests. The percentage of sad responses from participants was calculated for each adaptation condition.

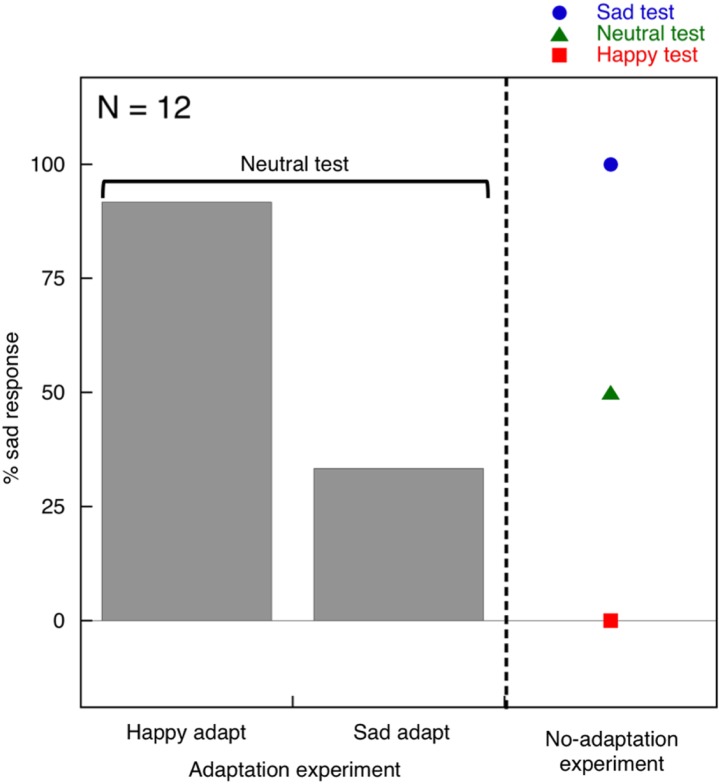

Figure 2 shows the percentage of sad responses for the adaptation and no-adaptation experiments. When the happy or sad facemask was presented in the no-adaptation experiment, all participants identified the emotion correctly for each facemask. However, the percentage of sad responses for the neutral facemask was 50%. In contrast, in the adaptation experiment, the percentage of sad responses was larger after adaptation to the happy facemask than after adaptation to the sad facemask, even though the same neutral facemask was used as the test stimulus under both adaptation conditions. A two-way χ² test revealed a significant difference between the two adaptation conditions (χ²(1) = 8.71, p < .005). Furthermore, the percentage of sad responses increased under the happy facemask adaptation condition compared with that for the neutral test facemask in the no-adaptation experiment, whereas under the sad facemask adaptation condition it showed a decreasing trend relative to the same baseline. These results indicate that adaptation to a haptically explored face with a specific facial expression causes a subsequently touched neutral face to be perceived as having the opposite facial expression, suggesting that FAEs can be observed in haptic perception of faces.

Figure 2.

Percentage of sad responses for the no-adaptation and adaptation experiments. Gray bars represent the performance of the neutral test facemask in the adaptation experiment.
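For readers who want to see how a χ² comparison of the two adaptation conditions works, the following is a minimal sketch of a Pearson χ² test on a 2 × 2 table (adaptation condition by response). It is an illustration only, not the author's analysis script. The function name `pearson_chi2_2x2` and the counts are hypothetical: the text does not report per-condition counts, and the values below were chosen only because, with 12 responses per condition, they give a statistic close to the reported 8.71. The sketch also assumes no continuity correction, which the text does not specify.

```python
import math


def pearson_chi2_2x2(table):
    """Pearson chi-square (no continuity correction) for a 2x2 table of counts.

    Returns the statistic and its upper-tail p value for 1 degree of freedom.
    """
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("every row and column needs at least one observation")
    # Shortcut formula for a 2x2 table: n * (ad - bc)^2 / product of marginals.
    chi2 = n * (a * d - b * c) ** 2 / (
        row_totals[0] * row_totals[1] * col_totals[0] * col_totals[1]
    )
    # With 1 degree of freedom, chi2 is a squared standard normal variable,
    # so the upper-tail probability equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value


# HYPOTHETICAL counts, not taken from the paper.
# Rows: adaptation condition; columns: ("sad" responses, "happy" responses).
hypothetical_counts = [
    [11, 1],  # after adaptation to the happy facemask
    [4, 8],   # after adaptation to the sad facemask
]

chi2, p = pearson_chi2_2x2(hypothetical_counts)
print(f"chi2(1) = {chi2:.2f}, p = {p:.4f}")
```

With samples this small, some expected cell counts fall below 5, so an exact test (for example, Fisher's exact test) would be a reasonable cross-check on the χ² approximation.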

What are the underlying mechanisms of haptic FAEs? Haptic identification of facial expressions is associated with activity in the inferior frontal gyrus, inferior parietal lobe, and superior temporal sulcus (Kitada et al 2010). Haptic FAEs may tap into adaptation occurring at these higher cortical areas. Alternatively, given that imagined faces can induce FAEs (Ryu et al 2008), haptic FAEs could be mediated by visual imagery generated from touching a face. Moreover, unambiguous adaptors can induce a repulsive bias in the perception of a subsequently presented ambiguous stimulus (Daelli 2011). Thus, haptic FAEs may reflect such a categorization bias. As a future investigation, it would be informative to examine these potential causes in detail.

It remains unknown how the haptic system processes facial information. Haptic FAEs will serve as a valuable probe for understanding the mechanisms involved in haptic face processing.

Acknowledgments

I thank Dr Derek H Arnold and the anonymous referee for their helpful comments. This work was supported by a Grant-in-Aid for Scientific Research on Innovative Areas, “Face perception and recognition” from MEXT KAKENHI (23119704) and by the Research Institute of Electrical Communication, Tohoku University Original Research Support Program to KM.

References

- Daelli V. High-level adaptation aftereffects for novel objects: the role of pre-existing representations. Neuropsychologia. 2011;49:1923–1927. doi: 10.1016/j.neuropsychologia.2011.03.019.

- Kitada R, Johnsrude I S, Kochiyama T, Lederman S J. Brain networks involved in haptic and visual identification of facial expressions of emotion: an fMRI study. NeuroImage. 2010;49:1677–1689. doi: 10.1016/j.neuroimage.2009.09.014.

- Lederman S J, Klatzky R L, Abramowicz A, Salsman K, Kitada R, Hamilton C. Haptic recognition of static and dynamic expressions of emotion in the live face. Psychological Science. 2007;18:158–164. doi: 10.1111/j.1467-9280.2007.01866.x.

- Rhodes G, Evangelista E, Jeffery L. Orientation-sensitivity of face identity aftereffects. Vision Research. 2009;49:2379–2385. doi: 10.1016/j.visres.2009.07.010.

- Ryu J J, Borrmann K, Chaudhuri A. Imagine Jane and identify John: face identity aftereffects induced by imagined faces. PLoS ONE. 2008;3:e2195. doi: 10.1371/journal.pone.0002195.

- Webster M A, Kaping D, Mizokami Y, Duhamel P. Adaptation to natural facial categories. Nature. 2004;428:557–561. doi: 10.1038/nature02420.