Abstract

We used a dynamic auditory spatial illusion to investigate the role of self-motion and acoustics in shaping our spatial percept of the environment. Using motion capture, we smoothly moved a sound source around listeners as a function of their own head movements. A lowpass filtered sound behind a listener that moved in the direction it would have moved if it had been located in the front was perceived as statically located in front. The converse effect occurred if the sound was in front but moved as if it were behind. The illusion was strongest for sounds lowpass filtered at 500 Hz and weakened as a function of increasing lowpass cut-off frequency. The signals with the most high-frequency energy were often associated with an unstable location percept that flickered from front to back as self-motion cues and spectral cues for location came into conflict with one another.

Keywords: auditory illusion, front back confusion, head movement, spatial processing, egocentric motion, auditory vestibular interaction

The term “front/back confusion” refers to a common error in sound localization: a mistaken percept of a sound source being behind you when in fact it is in front, or vice versa. If a sound source is located either directly ahead or directly behind, then in both cases the interaural time difference (the difference in arrival time at the two ears) is zero, which creates a fundamental ambiguity. The same ambiguity arises for any pair of front and back sound locations that subtend the same angle off the listener's midline. It is thought that head movements contribute to our ability to resolve this problem (Wightman and Kistler 1999): if a listener turns 10° to the right, then from the perspective of the listener a sound source that was actually in the front will move 10° to the left, whereas a sound source that was actually behind will move 10° to the right. Thus by turning the head and noting the direction in which a sound moves, one can determine whether it is coming from the front or the back.
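The geometry of this ambiguity can be illustrated with a minimal Python sketch. It rests on assumptions that are not part of the study: azimuths are in degrees with positive values to the listener's right, and the sine of the head-relative azimuth stands in for the sign and relative size of the interaural time difference. The function names are hypothetical.

```python
import math


def wrap_deg(angle):
    """Wrap an angle in degrees to the interval (-180, 180]."""
    wrapped = (angle + 180.0) % 360.0 - 180.0
    return 180.0 if wrapped == -180.0 else wrapped


def head_relative(source_deg, head_deg):
    """Source azimuth relative to the head (positive = listener's right)."""
    return wrap_deg(source_deg - head_deg)


def lateral_cue(rel_deg):
    """Simplified stand-in for the interaural time difference.

    Assumption: only the sign and relative size matter here, so the
    sine of the head-relative azimuth is used instead of a head model.
    """
    return math.sin(math.radians(rel_deg))


# Compare a front (0 deg) and a back (180 deg) source as the head turns.
for head in (0.0, 10.0):
    front = lateral_cue(head_relative(0.0, head))
    back = lateral_cue(head_relative(180.0, head))
    print(f"head {head:5.1f} deg: front cue {front:+.3f}, back cue {back:+.3f}")
```

With the head at 0° the two cues are indistinguishable. After a 10° turn to the right the cue for the front source becomes negative (leftward) and the cue for the back source becomes positive (rightward), which is the difference a listener can exploit.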

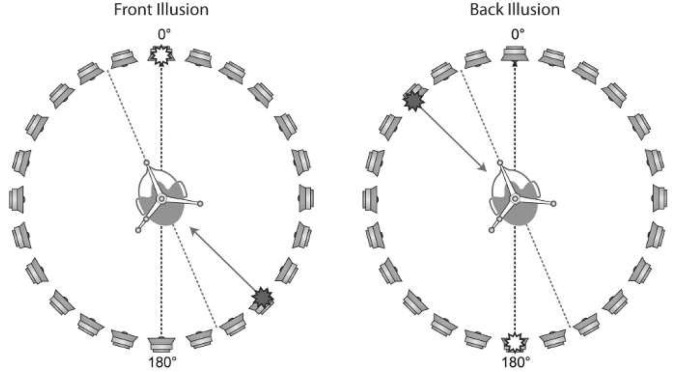

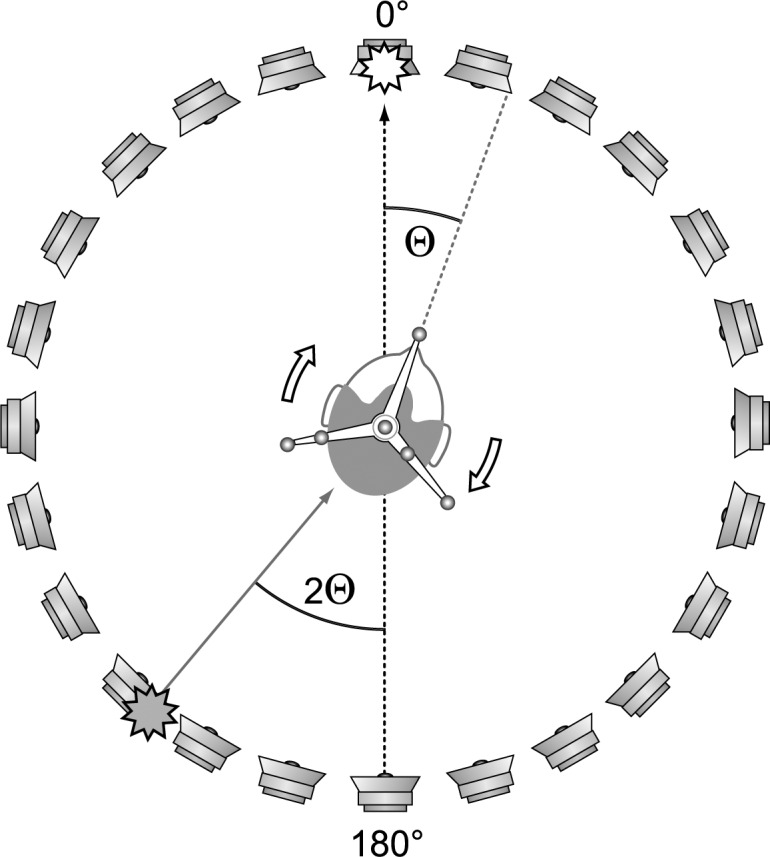

Wallach (1940) showed that it is possible to use head movements to create a front/back confusion. The key is to move a sound source in real time at twice the rotation rate of a listener's head. The geometry is shown in Figure 1: if a listener turns Θ degrees and the sound source is rotated by 2Θ degrees relative to the 180° loudspeaker, then the listener perceives the sound to be statically located at 0°. Wallach's apparatus was a rotary switch attached to the head that would send an audio signal to different loudspeakers as the head turned. Modern advances in motion-tracking technology and computer processing have now made it possible to use software to synchronously and smoothly move a sound source as a real-time function of head movements, thus opening up the phenomenon to systematic investigation. We report here a modernization of Wallach's illusion and a test of how its salience is affected by the spectrum of the signal.

Figure 1.

For the front illusion, the presentation angle of the acoustic signal (filled star) relative to the rear 180° loudspeaker was updated 100 times per second by multiplying the current head angle Θ by two. The percept of this moving sound should be that of a static sound located at 0° (open star).
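The doubling rule can be checked numerically. The sketch below is not the authors' software: it assumes angles in degrees with positive values to the listener's right, and it models the front/back ambiguity as a reflection of the head-relative azimuth across the interaural axis. All function names are hypothetical.

```python
def wrap_deg(angle):
    """Wrap an angle in degrees to the interval (-180, 180]."""
    wrapped = (angle + 180.0) % 360.0 - 180.0
    return 180.0 if wrapped == -180.0 else wrapped


def head_relative(source_deg, head_deg):
    """Source azimuth relative to the head (positive = listener's right)."""
    return wrap_deg(source_deg - head_deg)


def mirror_front_back(rel_deg):
    """Reflect a head-relative azimuth across the interaural axis."""
    return wrap_deg(180.0 - rel_deg)


def front_illusion_angle(head_deg):
    """Presentation angle: twice the head angle, relative to the 180 deg loudspeaker."""
    return wrap_deg(180.0 + 2.0 * head_deg)


def back_illusion_angle(head_deg):
    """Presentation angle: twice the head angle, relative to the 0 deg loudspeaker."""
    return wrap_deg(2.0 * head_deg)


# For each head angle, the front/back mirror image of the moving source
# coincides with a static source at 0 deg (front illusion).
for theta in (-15.0, -5.0, 0.0, 5.0, 15.0):
    moving = head_relative(front_illusion_angle(theta), theta)
    static = head_relative(0.0, theta)
    print(theta, mirror_front_back(moving), static)
```

Under these assumptions the source presented at 180° + 2Θ sits at 180° + Θ relative to the head, and its mirror image is at −Θ, which is exactly where a static source at 0° would appear. The same argument with the front and back loudspeakers exchanged gives the back illusion.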

Animation 1.

This animation demonstrates how the front and back illusions are generated. The signal is presented from the location marked by the filled star but appears to emanate from the location marked by the open star.

We used the output of a motion-tracking system to drive sound presentation in a 1.8 m diameter circular ring of 24 loudspeakers placed at intervals of 15°. Using six infrared motion tracking cameras (Vicon MX3+) running at 100 Hz, we measured the 3-D location of reflective markers attached to a head-mounted crown (Brimijoin et al 2010); this gave the azimuthal angle of the listener's head. Four conditions were run: (1) “static front,” in which a signal was presented at 0°; (2) “static back,” in which a signal was presented at 180°; (3) “front illusion,” in which the presentation angle was varied as described above; and (4) “back illusion,” in which presentation angle was 2Θ relative to the front (0°) loudspeaker. For between-loudspeaker presentation angles, the signal was presented from the two closest loudspeakers using equal power panning. The audio was buffered in segments of 10 ms duration, and the transitions between these segments were crossfaded using 1.5 ms linear ramps.
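As an illustration of presenting a signal between loudspeakers, here is a minimal sketch of equal-power panning on a ring of 24 loudspeakers at 15° spacing. The text does not specify the panning law beyond "equal power", so the sine/cosine law used here is an assumption, as are the loudspeaker indexing (index 0 at 0°, increasing with azimuth) and the function name.

```python
import math

N_SPEAKERS = 24
SPACING_DEG = 360.0 / N_SPEAKERS  # 15 degrees between adjacent loudspeakers


def pan_gains(angle_deg):
    """Return ((index, gain), (index, gain)) for the two nearest loudspeakers.

    Assumption: a sine/cosine law, so the squared gains always sum to 1
    and the total radiated power stays constant as the source moves.
    """
    position = (angle_deg % 360.0) / SPACING_DEG
    lower = int(math.floor(position)) % N_SPEAKERS
    upper = (lower + 1) % N_SPEAKERS
    frac = position - math.floor(position)  # 0 at lower speaker, 1 at upper
    gain_lower = math.cos(frac * math.pi / 2.0)
    gain_upper = math.sin(frac * math.pi / 2.0)
    return (lower, gain_lower), (upper, gain_upper)


# A source halfway between the loudspeakers at 0 and 15 degrees.
(lo_idx, lo_gain), (hi_idx, hi_gain) = pan_gains(7.5)
print(lo_idx, round(lo_gain, 4), hi_idx, round(hi_gain, 4))
```

In a real-time system the gains would be recomputed for every audio segment from the latest head angle; the crossfading between segments described above is not modelled here.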

For stimuli, we used sentences drawn from the Adaptive Sentence List corpus (MacLeod and Summerfield 1987); these were lowpass filtered at 0.5, 1, 2, 4, 8, and 16 kHz. Each possible pair of condition/cutoff frequency was repeated 36 times with condition type and lowpass frequency fully randomized. Seven normal-hearing listeners were recruited; they were seated in the center of the ring and were asked to keep their heads in constant rotational motion between the loudspeakers at ±15°. Listeners used a wireless keypad to report whether a given sentence was ahead or behind them. The experiment was conducted in accordance with procedures approved by the West of Scotland Research Ethics Service.
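The factorial design can be sketched as a randomized trial list. This is an illustration only: it assumes the 36 repetitions apply to each listener's session and that randomization is a simple shuffle of the full list, neither of which is stated in the text. The function name and seed argument are hypothetical.

```python
import itertools
import random

CONDITIONS = ["static front", "static back", "front illusion", "back illusion"]
CUTOFFS_KHZ = [0.5, 1, 2, 4, 8, 16]
REPETITIONS = 36


def build_trial_list(seed=None):
    """Return a shuffled list of (condition, cutoff_khz) trials.

    Assumption: every condition/cutoff pair appears REPETITIONS times
    and the whole list is shuffled once.
    """
    rng = random.Random(seed)
    trials = [
        pair
        for pair in itertools.product(CONDITIONS, CUTOFFS_KHZ)
        for _ in range(REPETITIONS)
    ]
    rng.shuffle(trials)
    return trials


trials = build_trial_list(seed=0)
print(len(trials))  # 4 conditions x 6 cutoffs x 36 repetitions
```

Under these assumptions the list holds 4 × 6 × 36 = 864 trials.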

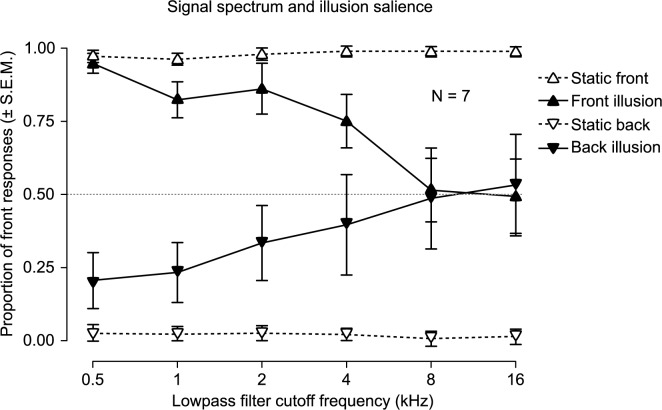

Figure 2 shows the average results across all listeners. We found that the location percepts of the two static conditions were unaffected by lowpass cutoff frequency. The perceived location of the two illusion conditions, however, was strongly dependent on frequency content. Both the front and back illusions were strongest when the sentences were lowpass filtered at a cutoff frequency of 500 Hz. The front illusion was slightly stronger than the back illusion. The effectiveness of both illusions dropped as a function of increasing cutoff frequency.

Figure 2.

Results from the experiment: the proportion of “front” responses made in each condition. Error bars are one standard error of the mean.

The most parsimonious explanation for the frequency-dependent drop in illusion strength is that higher frequencies, particularly those above 4 kHz, contain information on the target location that conflicts with the illusory location. The head and pinnae act as directionally dependent filters, altering the signal spectrum as a function of sound source direction (Blauert 1997). For full-band signals in the illusion conditions, therefore, the way in which the signals move relative to the head indicates the opposite source direction from that indicated by the signal spectrum. For these signals, the proportion of front responses drops to chance (0.5), suggesting that self-motion cues and spectral cues are similarly weighted. Furthermore, listeners often reported that the apparent position of the sentences would fluctuate in a rapid and unstable manner between front and back. Thus the data may be the result of the development of an unstable bimodal percept.

Acknowledgments

All research was funded by the Medical Research Council and the Chief Scientist Office (Scotland). We would like to thank William Whitmer and David McShefferty for invaluable comments on drafts of this manuscript.

Contributor Information

W Owen Brimijoin, MRC Institute of Hearing Research (Scottish Section), Glasgow Royal Infirmary, 16 Alexandra Parade, Glasgow G31 2ER, UK; email: owen@ihr.gla.ac.uk.

Michael A Akeroyd, MRC Institute of Hearing Research (Scottish Section), Glasgow Royal Infirmary, 16 Alexandra Parade, Glasgow G31 2ER, UK; email: maa@ihr.gla.ac.uk.

References

- Blauert J. Spatial hearing. The psychophysics of human sound localization. Cambridge, MA: MIT Press; 1997.

- Brimijoin W O, McShefferty D, Akeroyd M A. Auditory and visual orienting responses in listeners with and without hearing-impairment. The Journal of the Acoustical Society of America. 2010;127:3678–3688. doi: 10.1121/1.3409488.

- MacLeod A, Summerfield Q. Quantifying the contribution of vision to speech perception in noise. British Journal of Audiology. 1987;21:131–141. doi: 10.3109/03005368709077786.

- Wallach H. The role of head movements and vestibular and visual cues in sound localization. Journal of Experimental Psychology. 1940;27:339–367. doi: 10.1037/h0054629.

- Wightman F L, Kistler D J. Resolution of front-back ambiguity in spatial hearing by listener and source movement. The Journal of the Acoustical Society of America. 1999;105:2841–2853. doi: 10.1121/1.426899.