Abstract

Objective

Computerized provider order entry (CPOE) with clinical decision support (CDS) can help hospitals improve care. Little is known about what CDS is presently in use and how it is managed, however, especially in community hospitals. This study sought to address this knowledge gap by identifying standard practices related to CDS in US community hospitals with mature CPOE systems.

Materials and Methods

Representatives of 34 community hospitals, each of which had over 5 years' experience with CPOE, were interviewed to identify standard practices related to CDS. Data were analyzed with a mix of descriptive statistics and qualitative approaches to the identification of patterns, themes, and trends.

Results

This broad sample of community hospitals had robust levels of CDS despite their small size and the independent nature of many of their physician staff members. The hospitals uniformly used medication alerts and order sets, had sophisticated governance procedures for CDS, and employed staff to customize CDS.

Discussion

The level of customization needed for most CDS before implementation was greater than expected. Customization requires skilled individuals who represent an emerging manpower need at this type of hospital.

Conclusion

These results bode well for robust diffusion of CDS to similar hospitals in the process of adopting CDS and suggest that national policies to promote CDS use may be successful.

Keywords: Clinical decision support systems, medical order entry systems

Background and significance

Evidence demonstrates that computerized provider order entry (CPOE) with clinical decision support (CDS) can enhance healthcare quality and efficiency.1–5 Based partly on these results, the meaningful use requirements for Medicare and Medicaid reimbursement specified under the American Recovery and Reinvestment Act include CPOE with escalating amounts of CDS,6 which we define as ‘passive and active referential information as well as computer-based order sets, reminders, alerts, and condition (-specific) or patient-specific data displays that are accessible at the point of care’ (7 p. 524). Only 11.9% of the 5795 US hospitals have either basic or comprehensive electronic records, however, and most are larger, urban academic hospitals, which can mandate use by providers.8 Although 86% of US hospitals are community hospitals,9 only 6.9% of community hospitals have reported having even a basic clinical information system.10 There is some evidence that the use of CDS could have a profound impact on care offered by community hospitals: a recent study of six community hospitals found that these hospitals actually had higher adverse drug event rates than academic hospitals and that a higher proportion appeared to be potentially preventable using CDS.11 Despite the potential for CDS use in community hospitals, little research about CDS availability has been conducted in these settings.12 A few survey studies about community hospital practitioners' views of CDS have been published.13–15 The one survey study about the availability of CDS in hospitals reported in the literature was limited to tertiary hospitals in Korea, indicating that of the hospitals with hospital information systems, only 27.3% also had CDS systems.16

Objectives

Given the potential for CDS to impact patient care in community hospitals, we sought to investigate availability and standard practices for management of CDS in community hospitals in the USA by building on our previous research on CPOE. In 2005, we conducted a telephone interview survey about infusion levels (defined as the extent to which one uses an innovation in a complete and sophisticated manner17) and unintended consequences of CPOE in US acute-care hospitals that reported having CPOE.18 Between 2005 and 2009, we performed ethnographic research focused on CDS in community hospitals19 20 and concluded that major issues for these institutions involved: (1) the role of data as a foundation for CDS; (2) use of measurement and metrics to guide CDS decisions; (3) governance related to decisions about CDS; (4) collaboration among individuals who develop, modify, and manage CDS; and (5) the presence of essential people involved in CDS. Although these results came from seven carefully selected hospitals and thousands of pages of interview and observation data, we did not know if our results could be extended to a broad cross-section of community hospitals with CPOE. Our current study was designed to investigate the applicability of these earlier results to community hospitals.

To discover more about present use and management, which we refer to as standard practices, of CDS in community hospitals, we conducted interviews with CDS experts in US community hospitals with mature CPOE systems to identify standard practices related to CDS in these hospitals. This study is unique in that it is not a study of perceptions of providers, but a survey of what CDS is available and how it is developed and managed.

Materials and methods

Hospital sample

For the 2005 interview survey about the consequences of CPOE, we included all 448 hospitals listed in the HIMSS Analytics database as having CPOE. We added 113 Veterans Affairs (VA) hospitals because we felt there was much to learn from them in terms of the consequences of CPOE. When the 176 responding organizations were compared with other hospitals, there were no statistically significant differences in bed size or teaching hospital status, but there were significant differences in management type (p<0.001), because of the high VA response, and in geography, with the northeast overrepresented (p=0.007).18

For the 2010 survey, we selected only community hospitals and only those that we succeeded in interviewing 5 years ago because we wanted to track their CPOE infusion progress over time in addition to asking questions about CDS. We defined community hospitals as acute care hospitals that are not members of the Association of Teaching Hospitals. Our sample included 49 hospitals. We obtained human subjects approval for the survey from Oregon Health & Science University, the University of Texas, and Kaiser Permanente.

Informant sample

When possible, we contacted via email the same individual we interviewed 5 years ago. If this person was no longer employed at the hospital, we phoned the hospital and asked to speak with the person most knowledgeable about CPOE and CDS. We established contact via phone or email to explain the project and schedule a phone interview. We audiotaped the interview after the participant gave his/her permission.

Interview instrument

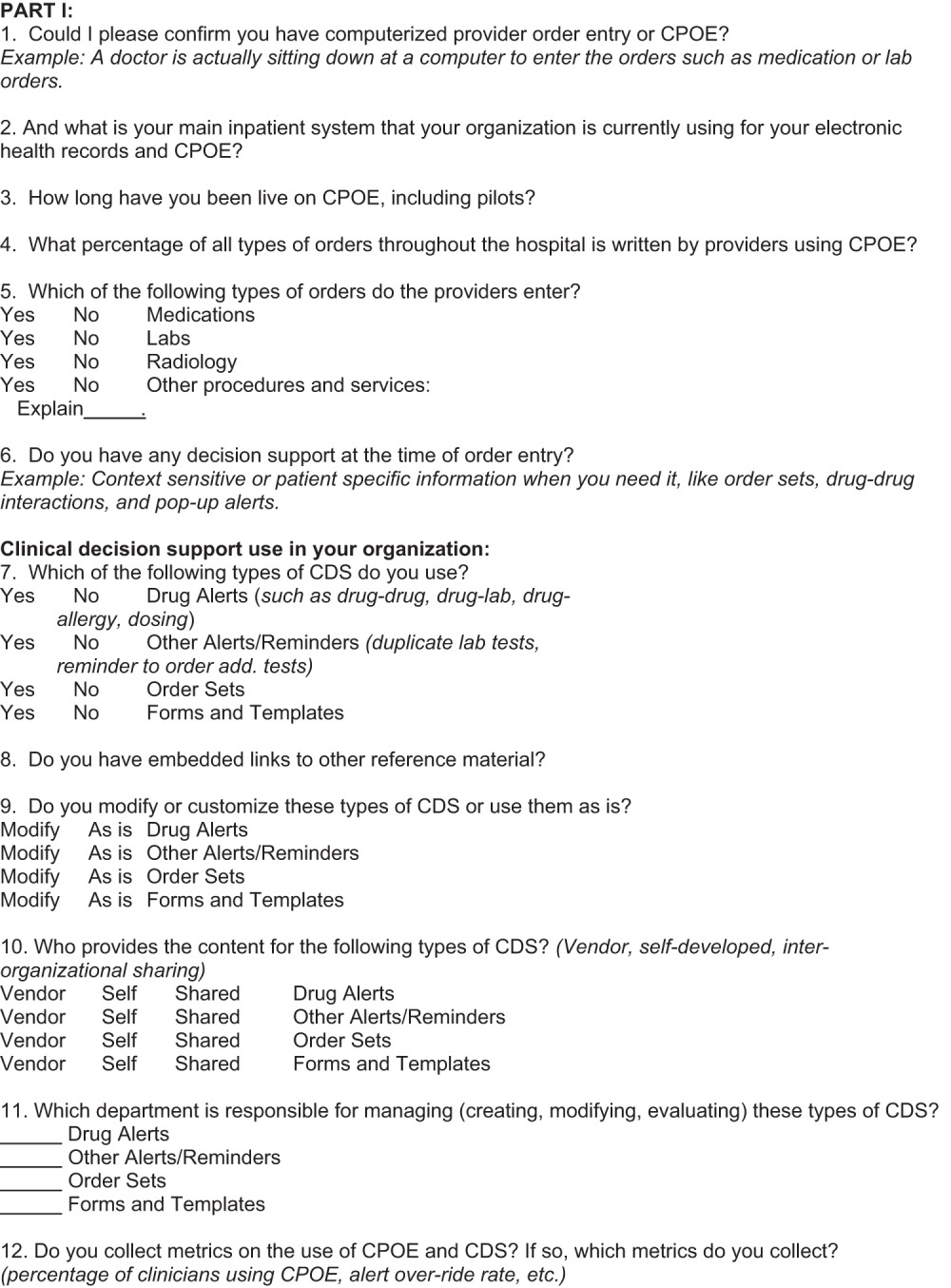

Box 1 shows the interview questions. We formulated our questions based on our qualitative findings, designing them to elicit answers to some fixed-choice questions and some more open-ended questions. We asked several questions about CPOE infusion so that we could compare changes over time (questions 1–6). These included questions about the percentage of orders entered using CPOE, types of orders and existence of CDS. We then asked questions about types of CDS in the hospitals (questions 7–12). The semistructured interview questions (questions 13–20) focused on data, metrics, governance, collaboration, people, and feedback. Because CDS is a requirement for meaningful use, we also included a question asking if the hospital expected to meet these criteria within a year (question 21). We piloted the interview script with three hospitals not included in the survey population because they were not contacted 5 years ago, as their installations were more recent. Experienced interviewers with informatics, anthropology, or clinical backgrounds administered the survey between June and October 2010.

Box 1. Interview survey questions about CDS in community hospitals.

Descriptive statistics and analysis of comments

Using STATA, we compared responding hospitals with non-responding hospitals on bed size, geography, and ownership to find differences. These are generally accepted differentiators.21 We compared changes in infusion for responding hospitals over time. We used descriptive statistics to investigate answers to fixed-choice questions. We analyzed interview transcripts with the assistance of QSR NVivo qualitative data analysis software. We first analyzed answers to each question to find patterns. We then used a grounded theory approach22 to identify overall themes and trends about which we did not directly ask.
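The comparison of responding and non-responding hospitals can be illustrated with a small worked example. The Results report Fisher's exact test for this comparison; the sketch below is not the authors' STATA analysis but a minimal pure-Python version for a 2×2 table. Collapsing ownership into VA versus non-VA is an assumption made here only to obtain a 2×2 table from the counts in Table 1, and the function name `fisher_exact_2x2` is hypothetical.

```python
from math import comb


def fisher_exact_2x2(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Sums the hypergeometric probabilities of every table that shares the
    observed margins and is no more likely than the observed table.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    denom = comb(row1 + row2, col1)

    def prob(x):
        # Probability that the top-left cell equals x, given fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / denom

    lowest = max(0, col1 - row2)
    highest = min(row1, col1)
    p_observed = prob(a)
    # A small relative tolerance guards against floating-point ties.
    total = sum(
        prob(x)
        for x in range(lowest, highest + 1)
        if prob(x) <= p_observed * (1 + 1e-9)
    )
    return min(1.0, total)


# Illustrative collapse of Table 1 ownership counts (an assumption, see above):
# rows = respondents / non-respondents, columns = VA / not VA.
p_va = fisher_exact_2x2(9, 25, 2, 13)
print(f"VA vs non-VA response, two-sided p = {p_va:.3f}")
```

The published p values were computed over the full set of categories for each characteristic, so this 2×2 value is not expected to match them.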

Results

We conducted full interviews with representatives from 34 of the 49 hospitals, for a response rate of 69%. Of respondents, 17 were the same individuals we talked with 5 years ago and 17 were different. One hospital no longer had CPOE and other non-respondents refused to be interviewed either because of the time commitment needed or because their hospitals had policies against survey completion. We transcribed all interviews and analyzed free-text answers using qualitative methods.

Roles of informants and hospital demographics

Interviewees held a wide variety of titles. Most respondents from the VA hospitals (five of nine) were clinical application coordinators who generally had clinical backgrounds, information technology (IT) skills, and CDS management responsibilities. Respondents from other hospitals were two chief medical information officers, six chief information officers, four clinical analysts, four department directors, and others not specified. In the previous study, community hospitals were generally represented by a nurse manager or IT analyst, so there has been a change in the predominant roles of respondents over time as more chief medical information officers and chief information officers have been hired.

Table 1 summarizes hospital characteristics. Using Fisher's exact test, we found no statistically significant differences when we compared respondents with non-respondents on bed size (p=0.154), ownership (p=0.675), and geography (p=0.106), suggesting that our 34 respondents are representative of the 49 US community hospitals with mature CPOE we talked with 5 years ago. Of respondents, 59% came from hospitals with fewer than 200 beds. Nine hospitals were completely independent, nine were VA hospitals, and 17 belonged to hospital systems. Seven were Shriners hospitals. The abundance of VA and Shriners hospitals is likely because they were well represented in the 2005 survey, having been at the forefront of CPOE implementation. In the discussions below, we describe the VA hospitals separately because they are unique in some respects. We also describe the Shriners hospital system separately because fully 50% of US hospitals belong to similarly consolidated hospital systems.9

Table 1.

Hospital demographics

| Characteristic | Respondent | Non-respondent | Total | Response rate (%) |
|---|---|---|---|---|
| Staffed beds | | | | |
| <50 | 4 | 1 | 5 | 80 |
| 50–99 | 8 | 0 | 8 | 100 |
| 100–199 | 8 | 7 | 15 | 53 |
| 200–299 | 3 | 5 | 8 | 38 |
| 300–399 | 7 | 0 | 7 | 100 |
| 400–799 | 3 | 1 | 4 | 75 |
| 800+ | 1 | 1 | 2 | 50 |
| Total | 34 | 15 | 49 | 69 |
| Ownership | | | | |
| Not for profit | 21 | 10 | 31 | 68 |
| VA | 9 | 2 | 11 | 82 |
| District, city, county, State | 3 | 2 | 5 | 60 |
| For profit | 1 | 0 | 1 | 100 |
| Church | 0 | 1 | 1 | 0 |
| Total | 34 | 15 | 49 | 69 |
| System status | | | | |
| Independent | 9 | NA | | |
| Multihospital | 25 | NA | | |
| Total | 34 | | | |
| Geographical location | | | | |
| NE | 16 | 9 | 25 | 64 |
| SE | 5 | 2 | 7 | 71 |
| MW | 2 | 4 | 6 | 33 |
| NW | 3 | 0 | 3 | 100 |
| SW | 8 | 0 | 8 | 100 |
| Total | 34 | 15 | 49 | 69 |

VA, Veterans Affairs.
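The response rates in Table 1 follow directly from the respondent and non-respondent counts. As a check on the arithmetic, the sketch below recomputes them from the counts in the table; the variable and function names are illustrative, and rounding halves up to a whole percentage is an assumption about how the published figures were rounded.

```python
# (respondents, non-respondents) per stratum, copied from Table 1.
TABLE_1 = {
    "Staffed beds": {
        "<50": (4, 1), "50–99": (8, 0), "100–199": (8, 7), "200–299": (3, 5),
        "300–399": (7, 0), "400–799": (3, 1), "800+": (1, 1),
    },
    "Ownership": {
        "Not for profit": (21, 10), "VA": (9, 2),
        "District, city, county, State": (3, 2),
        "For profit": (1, 0), "Church": (0, 1),
    },
    "Geographical location": {
        "NE": (16, 9), "SE": (5, 2), "MW": (2, 4), "NW": (3, 0), "SW": (8, 0),
    },
}


def response_rate(respondents, non_respondents):
    """Response rate as a whole-number percentage, rounding halves up."""
    return int(100 * respondents / (respondents + non_respondents) + 0.5)


# Per-stratum response rates for each characteristic.
rates = {
    group: {label: response_rate(*counts) for label, counts in strata.items()}
    for group, strata in TABLE_1.items()
}

# Column totals per characteristic; each should equal (34, 15).
totals = {
    group: tuple(sum(column) for column in zip(*strata.values()))
    for group, strata in TABLE_1.items()
}

overall_rate = response_rate(34, 15)

# Share of respondents from hospitals with fewer than 200 staffed beds.
beds = TABLE_1["Staffed beds"]
under_200 = sum(beds[label][0] for label in ("<50", "50–99", "100–199"))
under_200_share = int(100 * under_200 / 34 + 0.5)

for group, strata in rates.items():
    print(group, strata)
print("Overall response rate (%):", overall_rate)
print("Respondents with fewer than 200 beds (%):", under_200_share)
```

Under these assumptions the recomputed values agree with the printed table, with the overall 69% response rate, and with the 59% share of respondents from hospitals with fewer than 200 beds.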

Infusion

Answers to our questions about infusion (questions 1–6) were remarkably homogeneous. We verified that all sites had CPOE. The nine VA hospitals used CPRS, and the seven Shriners hospitals and one other hospital used Cerner. Eight hospitals used Eclipsys (now Allscripts), five Siemens, two MEDITECH, and two GE. The average percentage of orders entered using CPOE has increased by 11 percentage points since our last survey, from 72% to 83%. We asked in both surveys if the hospitals had medication orders, lab orders, radiology orders, and/or other types of orders. All hospitals had all types of orders and there was a slight increase in the ‘other’ category since 2005. All respondents replied that they provide CDS at the time of order entry.

Types of CDS in use and customization

Including VA and Shriners hospitals, all hospitals reported that they had order sets and medication alerts. Seven respondents replied that medication alerts are provided by vendors, eight said they self-develop them, and others did not respond to the question. Also, 88% reported having other alerts or reminders in addition to medication alerts. Eleven noted that these additional alerts or reminders are self-developed, with only two reporting that vendors supply them and others not responding to the question. Overall, 68% reported having documentation templates, defined as forms that guide clinicians in the capture of structured or even unstructured data, often with respect to specific clinical conditions, procedures, or administrative tasks. Presumably, the others did not yet have electronic documentation. Table 2 shows details about the level of customization for each type of CDS and the source of CDS content (vendor or self-developed). The appendix (available as a supplementary file online only) provides direct representative quotes from transcripts. The selected quotes illustrate the diversity of answers we received, whereas the following text summarizes trends across all sites.

Table 2.

Responses to the survey questions regarding CDS

| Use of content | Medication alerts | Other alerts | Order sets | Templates |
|---|---|---|---|---|
| As is | 8 | 1 | 0 | 0 |
| Create own | 1 | 0 | 3 | 1 |
| Modify | 25 | 29 | 30 | 22 |
| Not applicable | 0 | 3 | 0 | 9 |
| No response | 0 | 1 | 1 | 2 |
| Total | 34 | 34 | 34 | 34 |
| Source of content | | | | |
| Vendor | 16 | 6 | 6 | 2 |
| Locally developed | 16 | 22 | 24 | 19 |
| Not applicable | 0 | 3 | 0 | 8 |
| No response | 2 | 3 | 4 | 5 |
| Total | 34 | 34 | 34 | 34 |

CDS, clinical decision support.
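The percentages quoted in the text (88% with other alerts, 68% with documentation templates) can be related to the "Use of content" counts in Table 2. The sketch below assumes, as one plausible reading that the authors do not state explicitly, that a hospital counts as having a CDS type when it answered "as is", "create own", or "modify". The dictionary layout and names are illustrative.

```python
TOTAL_HOSPITALS = 34

# "Use of content" counts copied from Table 2.
USE_OF_CONTENT = {
    "Medication alerts": {"as_is": 8, "create_own": 1, "modify": 25,
                          "not_applicable": 0, "no_response": 0},
    "Other alerts": {"as_is": 1, "create_own": 0, "modify": 29,
                     "not_applicable": 3, "no_response": 1},
    "Order sets": {"as_is": 0, "create_own": 3, "modify": 30,
                   "not_applicable": 0, "no_response": 1},
    "Templates": {"as_is": 0, "create_own": 1, "modify": 22,
                  "not_applicable": 9, "no_response": 2},
}


def percent_with_cds(counts, total=TOTAL_HOSPITALS):
    """Percentage of hospitals giving a substantive answer about content use."""
    having = counts["as_is"] + counts["create_own"] + counts["modify"]
    return int(100 * having / total + 0.5)  # round halves up


# Every column in Table 2 should account for all 34 hospitals.
column_sums = {name: sum(counts.values()) for name, counts in USE_OF_CONTENT.items()}

percent_reporting = {
    name: percent_with_cds(counts) for name, counts in USE_OF_CONTENT.items()
}

for name, pct in percent_reporting.items():
    print(f"{name}: {pct}% of {TOTAL_HOSPITALS} hospitals")
```

Under this reading, 30 of 34 hospitals (88%) reported other alerts and 23 of 34 (68%) reported templates, which matches the figures given in the text.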

We asked about embedded links to reference material (question 8), but respondents were frequently confused by the question. We meant links that allow a user to request additional information on a specific item by clicking on a hypertext link that responds with a new window containing additional information, but this was difficult for us to explain and respondents to understand. However, we did learn that all hospitals had some mechanism for accessing literature, either through an intranet to hospital-owned sources or through the internet.

Management of CDS

We asked all participants about how the hospital develops, modifies, and maintains CDS. When asked what department manages CDS, respondents from five of the seven independent hospitals responded that an IT group managed CDS with a great deal of assistance from clinicians. For the other hospitals, which were VA, Shriners or members of a hospital system, an informatics or information services department managed CDS. All but one hospital described a hierarchical committee structure with a high-level oversight committee that dealt with issues beyond IT. The informatics or IT representatives on this committee were also on mid-level committees dealing with clinical systems. Respondents noted there were other multidisciplinary committees of pharmacists, IT, and quality specialists, as well as clinician users. All of these hospitals brought together ad-hoc committees for developing or modifying specific CDS modules. The VA has national, regional, and local committees and staff; Shriners has a national as well as local structure; and the hospitals that were part of consolidated systems had cross-hospital committees in addition to local committees. The one hospital without committees is in the midst of changing vendors and has low CPOE use (less than 1% of orders entered this way).

Measurement and monitoring

Our focus in the area of measurement was on the use of metrics to assess how well CDS is performing. Eleven out of 23 responding to this question noted their hospitals tracked alert override rates to ensure the physicians were not overburdened with alerts. Fifteen of 18 responding hospitals tracked which physicians were using CPOE so they could encourage greater use. Some comments indicated that hospitals also tracked the effectiveness of individual order sets, and the number of times each alert fired. One respondent noted ‘for any new order set that's being created, we require that they identify a metric, that they will measure pre and post’.
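To make the two metrics mentioned here concrete (alert override rates and the number of times each alert fired), the following is a minimal sketch of how they could be tallied. The log format, alert names, and function name are entirely hypothetical; respondents did not describe how their hospitals store or compute these figures.

```python
from collections import Counter

# Hypothetical alert log: one (alert_id, was_overridden) pair per firing.
ALERT_LOG = [
    ("drug-allergy", False),
    ("drug-allergy", True),
    ("drug-drug-interaction", True),
    ("drug-drug-interaction", True),
    ("drug-drug-interaction", False),
    ("duplicate-order", True),
]


def alert_metrics(events):
    """Return {alert_id: (times_fired, override_rate)} for a list of firings."""
    fired = Counter()
    overridden = Counter()
    for alert_id, was_overridden in events:
        fired[alert_id] += 1
        if was_overridden:
            overridden[alert_id] += 1
    return {
        alert_id: (count, overridden[alert_id] / count)
        for alert_id, count in fired.items()
    }


metrics = alert_metrics(ALERT_LOG)
for alert_id, (count, rate) in sorted(metrics.items()):
    print(f"{alert_id}: fired {count} times, overridden {rate:.0%}")
```

A persistently high override rate for one alert is the kind of signal a CDS committee might use when deciding whether to retune or retire it.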

Data as a foundation for CDS

Because accurate and sufficient data are needed to trigger patient-specific CDS, we asked respondents if they trusted the data. Ten out of 17 responding answered ‘yes’, but four were unsure about the quality of data entered by busy clinicians. We also received recommendations that the existence of data quality assurance mechanisms be a prerequisite for creating CDS modules. These are articulated in the list of quotes in the supplementary appendix (available online only).

New roles

Twenty-two out of 34 informants described new job roles that are CDS specific, such as knowledge engineers and analysts. Trends included a move towards new informatics or information services departments, and more clinical analysts and trainers with clinical backgrounds. Four people mentioned the importance of clinical credibility; one respondent noted ‘we created some nursing analyst roles, they work part time just to maintain their clinical credibility with the providers and their fellow nurses’. Many (13/34) also mentioned informatics committees or departments and new positions for chief medical or nursing informatics officers.

Barriers and facilitators

Participants' comments about barriers and facilitators (see box 2) were varied and could prove valuable for those who hope to implement CDS systems. Of the 34 interviewees, 32 replied to the question about facilitators. The most frequently mentioned facilitator was user involvement and the importance of training users (19/32). Many (11/32) noted the value of top-level support and leadership. Respondents said you should try to save clinicians' time (7/32), target the right CDS to the most appropriate clinician (7/32), or link CDS to quality initiatives (6/32) or evidence-based medicine (4/32). When asked about barriers, 29 of the 34 responded. Most mentioned that lack of the aforementioned facilitators would be barriers (22/29). The most noted additional barrier was over-alerting (11/29), leading to alert fatigue.

Box 2. Responses to the survey question regarding barriers and facilitators.

Facilitators (question 18)

I think you have to determine what CDS is relevant to the specific providers, realize quality and not quantity.

You need to make it so that the person that sees the message is the one that it's pertinent to.

If they can prove that outcomes are better by using some kind of CDS, I think that would definitely be a facilitator.

Definitely we are a HIMSS stage 6 analytics hospital so we very much value information management… [we stress that we are] dealing with evidence-based practice.

New risks and warnings come out and we say ‘hey, we need to address this’…. We always look for what can the computer system do to help support any changes in policy.

When we say ‘evidence based medicine’ they [the medical staff] hear ‘cookbook medicine’. I had a conversation today with one of our lead physicians who's always said ‘my patients are unique’, and we were talking about creating a data warehouse where we can go back in and do population-based studies, to look at best practices, so he's even starting to buy into the whole idea.

Physicians, the more they use the computer system, the more they realize that the use of order sets can save them time. Because, literally, with a couple of clicks, they can order a bunch of tests.

It's important that you have certain standards and the ability to normalize the data.

We really spent a fair amount of time upfront looking at what triggers it, when it should be suppressed, who it goes to, what role, just to really do due diligence extensive process.

As we are employing more and more physicians, I think the job is somewhat easier because, as in the military, we tell them ‘this is what you need to do’ and that's going to help us, I think, evolve more quickly.

I would say, having quality involved, the quality arm of your organization, is a facilitator.

Taking into consideration not just your best technological users of the systems but even some of those who aren't as technology facile because sometimes they have some of the best suggestions on how to enhance the system.

The driver behind CDS is always a specific clinical need. We have a very, very broad quality program, which I run, and if there is a specific clinical need, there's a process metric that's off track, or if there's something else that is not right, then that would be the primary driver behind a CDS tool.

Barriers (question 19)

We did it backwards. We started with CPOE instead of starting with results feeder systems like labs and radiology.

Keeping current. My co-worker and I, we try to keep everything current and it's a big part of our job to just try to keep everything up to date.

I'm trying to be politically correct I think… you can have as many CDS systems in place as possible, but it doesn't necessarily mean people use them.

The biggest challenge is to avoid alert overload… the balance is what can you do to get the right information to the right person in a timely manner, and it's pertinent enough that they go, ‘OK, I did need to know this,’ versus ‘you're just botherin' me and you're in my way’.

Managing the order sets once they're in place, and we don't have any internal mechanism to do that other than manually, so we're looking at some third party products.

The struggle we have is when we couple our efforts with quality improvement, people have to understand the capabilities and limitations of CDS… they come to us saying ‘oh, the computer will solve this problem, can you build it?’ And we know at our end that's not gonna work, it's a training issue or it's a workflow issue.

Knowledge management is another barrier that's associated with CDS, just there's so many rules, there's so many alerts out there now, and we really don't have a way to understand which ones are working, which ones are truly evidence based, which ones are most beneficial.

Semantics, what do we mean by this CDS? Just the view of the medical record is a type of decision support that no one really puts much thought into 'cuz they just inherit whatever the vendor gives them, but one of the biggest needs of our users is a concise review of the patient.

Collaboration

We asked an open-ended question, ‘how does your organization approach collaboration between users, vendors, IT administration, and other stakeholders with respect to CPOE and CDS?’ Many respondents (19/34) mentioned that the committees that make decisions about CDS are collaborative in nature. Some (11/34) commented on the importance of vendor relationships, one noting ‘we've found it of benefit to have formal collaboration between vendors and our hospital’, and another mentioning that a contract agreement specified that ‘we sort of both had skin in the game’.

Meaningful use

Although VA hospitals are ineligible for meaningful use incentives, when asked, all VA representatives felt they could meet the criteria regardless. The seven Shriners hospital respondents were sure they would meet these criteria. Of the 18 other hospitals, 12 were certain they could meet criteria, but six were not. Most interviews were held before the final less demanding stage 1 criteria were announced, so these six respondents might answer differently now.

VA profile

We interviewed representatives from nine VA hospitals that are considered community hospitals, all of which used CPRS. These hospitals varied somewhat in length of time using CPRS, with an average of 13 years of use. All claimed that over 88% of orders were entered using CPOE and that providers were using CPOE for medication, lab, radiology, and other kinds of orders. The hospitals have all of the types of CDS we asked about. These systems had embedded links to reference resources and most content is modified locally. The source of the CDS was national (through the central VA), regional, and local. Many types of metrics were collected. Decisions were made at both the regional and local levels in addition to the national level. New roles had been developed. The most common new role was for clinical application coordinators.

Shriners hospitals profile

We spoke with representatives of seven Shriners hospitals. We also interviewed the director of CDS for Shriners Hospital International, which is the central office for the organization, although we have not included this interview in our hospital response rate. We separated these seven hospitals from the general community hospitals for several reasons. First, with 20 hospitals overall, they were representative of consolidated hospital systems in that they are quite centralized from the point of view of clinical systems administration and governance. All but one Shriners hospital we spoke with uses Cerner and most had it in place since 2004. The percentage of orders placed with CPOE varied from 65% to 100% and the central office reported an average of 70% across the 20 hospitals that have CPOE. Providers used CPOE for medication, lab, radiology, and other kinds of orders across the system. CDS was available at the time of order entry in the form of medication alerts, order sets, and forms and templates. All of the represented Shriners hospitals buy medication alerts from vendors. Aside from medication alerts, three buy all CDS from vendors and four self-develop it. Because the hospitals were geographically diverse, order sets required some customization. As one respondent noted: ‘we had some issues in that in some areas they do things differently, different regs, different laws, things like that’. Other types of alerts, such as duplicate test orders, were not yet used. Although reference content was available, there were no embedded links to content. Medication alerts were not modified, but order sets were. Most content was provided through vendors, although some was locally developed. All but the newest installations collected metrics. New roles were created at the central office and at the local level. For example, one hospital hired a risk manager as a result of CPOE and CDS implementation. According to the central office respondent, this group of hospitals would easily meet meaningful use criteria.

Themes and trends

Our informants represented small and medium-sized hospitals, yet their infusion levels, measured partly by the percentage of orders entered using CPOE, were high, with an overall average of 83%, 11 percentage points higher than 5 years earlier. Most of these hospitals belonged to hospital systems, so decision-making about CDS occurred at the hospital system level as well as at the local level. Governance included all levels, but local customization required local involvement in decision-making. This customization effort was labor intensive and involved local clinician effort as well as local informatics staff effort. Trends in staffing included the use of ‘informatics’ as a term, establishment of informatics departments and committees, and the hiring of more CDS-specific analysts and developers. Comments indicated that manpower shortages exist in this area. There was a concern that management of CDS customization and updating would become more demanding, and that development of the required knowledge management practices and staff would be increasingly labor intensive.

Discussion

We found that a broad sample of community hospitals that had used CPOE for at least 5 years had robust levels of CDS despite their small size and the independent nature of many of their physician staff members. Although a survey study that only included tertiary hospitals in Korea found just 27.3% of hospitals with clinical information systems also had CDS systems,16 we found that all of our responding hospitals had CDS.

Our results indicate that many community hospitals belong to larger hospital systems. Small hospitals often benefit from becoming part of larger systems that achieve economies of scale, such as the ability to hire informatics staff specifically to customize CDS. Consolidation represents a trend in the USA that is expected to continue, and health IT will likely be an important driver.23 The VA and Shriners systems were not unique in their move to standardize some CDS across their systems, yet allow local customization when necessary. However, the level of customization needed for most CDS before implementation was greater than we expected. While most electronic health record vendors supplied some CDS, representatives of these hospitals clearly felt they needed more, and while these hospitals tended to purchase medication CDS, they put a great deal of effort into building other types of CDS.

Policy implications

Our findings have a number of policy implications. Under the American Recovery and Reinvestment Act, the country has made an unprecedented investment in health IT. The meaningful use criteria have been developed to increase the likelihood that organizations will implement and use health IT in ways that result in care improvement, and CDS is one of the most important functionalities in this regard. While the meaningful use incentives require decision support, they have been minimally prescriptive regarding CDS. For example, in 2011 they only required that a single rule be in place. Despite that, we found that hospitals appear to be on a good track, at least with respect to the governance needed. However, a substantial level of customization was needed in most institutions. Some of this likely related to the specific workflow at the individual institutions. Customization requires skilled individuals who represent an emerging manpower need for this type of hospital.24

Limitations

This study has a number of limitations. We only surveyed hospitals we were able to talk with 5 years ago rather than the entire population of community hospitals with CPOE in the USA, so one cannot generalize from our results to the entire group. We only talked with one representative at each hospital, and therefore we only captured one person's comments in addition to factual information. Half of the individuals we interviewed this time were different from those responding to the original survey. We did not assess whether or not any specific decision support was in use, so we were unable to identify exactly what rules were in place.

Conclusion

Our survey results suggest that within this community hospital sample, nearly all hospitals had developed a level of sophistication that appeared similar to that of the relatively highly regarded VA system.25 This finding bodes well for hospitals of all sizes and types in the USA that are striving towards meeting the increasingly difficult meaningful use requirements. A hospital just beginning to pilot a CPOE system can expect to look similar to the hospitals in our sample in 5 years' time if it follows the same path these hospitals have pursued. Nonetheless, there is a manpower shortage of people with the key skills, and the needs of community hospitals for skilled staff members who can customize and manage CDS need to be addressed on a continuing basis by the workforce development initiatives of the Office of the National Coordinator for Health Information Technology.

Acknowledgments

The authors would like to thank Caroline Wethern, Joseph Wasserman and Michael Shapiro for assisting with interviewing and Vishnu Mohan for help with qualitative analysis.

Footnotes

Contributors: Conception and design: JSA, DFS, AW, CM, DWB. Analysis and interpretation of data: JLM, AW. Drafting of the paper: JSA, DFS, CM. Critical revision of the paper for important intellectual content: JSA, DFS, AW, CM, DWB. Final approval of the paper: JSA, JLM, DFS, AW, CM, DWB.

Funding: This research was supported by grant LM 06942 from the National Library of Medicine (NLM), National Institutes of Health, and NLM training grant ASMMI0031. NLM had no role in the design or execution of this study, nor in the decision to publish.

Competing interests: None.

Ethics approval: Ethics approval was obtained from the Oregon Health & Science University institutional review board.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Hunt DL, Haynes RB, Hanna SE, et al. Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review. JAMA 1998;280:1339–46

- 2. Devine EB, Hansen RN, Wilson-Norton JL, et al. The impact of computerized provider order entry on medication errors in a multispecialty group practice. J Am Med Inform Assoc 2010;17:78–84

- 3. Kawamoto K, Houlihan CA, Balas EA, et al. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ 2005;330:765

- 4. Garg AX, Adhikari NK, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA 2005;293:1223–38

- 5. Kaushal R, Jha AK, Franz C, et al. Return on investment for a computerized physician order entry system. J Am Med Inform Assoc 2006;13:261–6

- 6. Centers for Medicare and Medicaid Services. Medicare and Medicaid Programs; Electronic Health Record Incentive Program. Baltimore (MD): CMS, 2010. http://cms.gov/ehrincentiveprograms/ (accessed 30 Aug 2011).

- 7. Bates DW, Kuperman GJ, Wang S, et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc 2003;10:523–30

- 8. Jha AK, DesRoches CM, Kralovec PD, et al. A progress report on electronic health records in U.S. hospitals. Health Aff 2010;29:1951–7

- 9. www.aha.org/research/rc/stat-studies/fast-facts.shtml (accessed 30 Aug 2011).

- 10. Jha AK, DesRoches CM, Campbell EG, et al. Use of electronic health records in U.S. hospitals. N Engl J Med 2009;360:1628–38

- 11. Hug BL, Witkowski DJ, Sox CM, et al. Adverse drug event rates in six community hospitals and the potential impact of computerized physician order entry for prevention. J Gen Int Med 2010;25:31–8

- 12. Chaudhry B, Wang J, Wu S, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Int Med 2006;144:742–52

- 13. Sylvia KA, Ozanne EM, Sepucha KR. Implementing breast cancer decision aids in community sites: barriers and resources. Health Expect 2008;11:46–53

- 14. Runyon MS, Richman PB, Kline JA. Emergency medicine practitioner knowledge and use of decision rules for the evaluation of patients with suspected pulmonary embolism: variations by practice setting and training level. Acad Emerg Med 2007;14:53–7

- 15. Brace C, Schmocker S, Huang H, et al. Physicians' awareness and attitudes toward decision aids for patients with cancer. J Clin Oncol 2010;28:2286–92

- 16. Chae YM, Yoo KB, Kim ES, et al. The adoption of electronic medical records and decision support systems in Korea. Health Inform Res 2011;17:172–7

- 17. Sittig DF, Guappone K, Campbell E, et al. A survey of USA acute care hospitals' computer-based provider order entry system infusion levels. Stud Health Technol Inform 2007;129:252–6

- 18. Ash JS, Sittig DF, Poon EG, et al. The extent and importance of unintended consequences related to computerized physician order entry. J Am Med Inform Assoc 2007;14:415–23

- 19. Ash JS, Sittig DF, Dykstra RD, et al. Exploring the unintended consequences of computerized physician order entry. Stud Health Technol Inform 2007;129:198–202

- 20. McMullen CK, Ash JS, Sittig DF, et al. Rapid assessment of clinical information systems in the healthcare setting: an efficient method for time-pressed evaluation. Meth Inform Med 2011;50:299–307

- 21. Longo DR, Hewett JE, Ge B, et al. The long road to patient safety: a status report on patient safety systems. JAMA 2005;294:2858–65

- 22. Crabtree BF, Miller WL, eds. Doing Qualitative Research, 2nd edn. Thousand Oaks, CA: Sage, 1999

- 23. Galloro V. The urge to merge. Mod Healthc 2009;39:6–7; 16

- 24. Osheroff JA, Teich JM, Middleton B, et al. A roadmap for national action on clinical decision support. J Am Med Inform Assoc 2007;14:141–5

- 25. Evans DC, Nichol WP, Perlin JB. Effect of the implementation of an enterprise-wide electronic health record on productivity in the Veterans Health Administration. Health Econ Policy Law 2006;1:163–9
