Abstract

Purpose

To formulate and solve the fluence-map merging procedure of the recently-published VMAT treatment-plan optimization method, called VMERGE, as a bi-criteria optimization problem. Using an exact merging method rather than the previously-used heuristic, we are able to better characterize the trade-off between the delivery efficiency and dose quality.

Methods

VMERGE begins with a solution of the fluence-map optimization problem with 180 equi-spaced beams that yields the “ideal” dose distribution. Neighboring fluence maps are then successively merged, meaning that they are added together and delivered as a single map. The merging process improves the delivery efficiency at the expense of deviating from the initial high-quality dose distribution. We replace the original merging heuristic by considering the merging problem as a discrete bi-criteria optimization problem with the objectives of maximizing the treatment efficiency and minimizing the deviation from the ideal dose. We formulate this using a network-flow model that represents the merging problem. Since the problem is discrete and thus non-convex, we employ a customized box algorithm to characterize the Pareto frontier. The Pareto frontier is then used as a benchmark to evaluate the performance of the standard VMERGE algorithm as well as two other similar heuristics.

Results

We test the exact and heuristic merging approaches on a pancreas and a prostate cancer case. For both cases, the shape of the Pareto frontier suggests that starting from a high-quality plan, we can obtain efficient VMAT plans through merging neighboring fluence maps without substantially deviating from the initial dose distribution. The trade-off curves obtained by the various heuristics are contrasted and shown to all be equally capable of initial plan simplifications, but to deviate in quality for more drastic efficiency improvements.

Conclusion

This work presents a network optimization approach to the merging problem. Contrasting the trade-off curves of the merging heuristics against the Pareto approximation validates that heuristic approaches are capable of achieving high-quality merged plans that lie close to the Pareto frontier.

Keywords: VMAT Treatment Planning, Pareto Efficiency, Network Optimization, Discrete Bi-criteria Optimization

1. Introduction

Volumetric-modulated arc therapy (VMAT) is an emerging radiation treatment modality with the ability to efficiently deliver highly-conformal dose distributions. It is essentially an alternative form of intensity-modulated radiation therapy (IMRT) that can achieve a superior or comparable dose quality compared with fixed-beam IMRT for different treatment sites (see, e.g., [24]). Perhaps the main advantage of VMAT over fixed-beam IMRT is its significantly better delivery efficiency (see, e.g., [20, 25, 28]), which helps reduce the treatment time and increase the patient throughput. However, it has also been reported that to achieve a clinically-acceptable treatment outcome for targets with complex geometry, single-arc VMAT may not be sufficient [13, 3]. This suggests that one may need to slow down the gantry (or alternatively to use multiple arcs) to allow for more field modulation in order to enhance the dose conformity. Hence, there is a trade-off between the VMAT dose quality and its delivery efficiency. In this paper, using a recently-published VMAT planning approach, we develop a modeling framework along with a solution method to quantify this trade-off.

VMAT treatment planning poses a more challenging optimization problem than fixed-beam IMRT since in VMAT the gantry continuously rotates and delivers radiation from every angle around the patient. Furthermore, the leaf motion in Multileaf Collimator (MLC) during the gantry rotation is limited by the finite leaf speed. Finally, the machine dose rate may vary while the gantry rotates. Hence, VMAT treatment planning requires solving a large-scale optimization problem in which gantry speed, leaf motion, and dose rate are all intertwined. Several studies have addressed the VMAT treatment planning problem; in particular, there are two main solution methods to the problem. The first method is a sequential approach consisting of two stages; in the first stage, the fluence-map optimization (FMO) problem is solved for a fine beam grid. In the second stage, the obtained fluence maps are decomposed into a collection of deliverable apertures using an arc-sequencing algorithm (see, e.g., [17, 26, 5]). The second method is a direct aperture optimization (DAO) approach in which an aperture is directly determined for each beam angle while taking the finite leaf speed as well as other MLC deliverability restrictions into account (see, e.g., [19, 18]).

A two-stage method to VMAT treatment plan optimization, termed VMERGE, has been recently proposed in [8]. In the first stage of VMERGE, the 360°-arc around the patient is divided into 2° angular segments, which we call gantry sectors. The FMO problem is then solved to determine the optimal fluence maps for the underlying beam angles. In particular, a multi-criteria optimization (MCO) approach is used to obtain an FMO solution that yields the desired trade-off between different treatment-plan evaluation criteria (see, e.g., [16, 7]). In the second stage, the obtained fluence maps are individually sequenced using the sliding-window technique discussed in [23, 22]. The resulting treatment plan, in principle, can be delivered using a VMAT-deliverable machine so that once the gantry arrives at the beginning of each gantry sector, MLC leaves start from one end of the field and sweep across to modulate the corresponding fluence map. Moreover, the gantry angular velocity as well as the dose rate are adjusted accordingly within each gantry sector to allow MLC leaves to travel across the field in a timely fashion. Finally, the MLC leaf-sweeping direction alternates between consecutive gantry sectors. This results in a high-quality VMAT plan that yields the “ideal” dose distribution. However, delivering such treatment plan requires a long treatment time due to the large number of gantry sectors considered, rendering it clinically impractical. To improve the delivery efficiency of the plan, VMERGE iteratively merges pairs of neighboring fluence maps and delivers the sum of the associated fluence maps over the combined gantry sectors. This reduces the MLC leaf-travel distance and allows the gantry to rotate faster. However, improving the treatment efficiency through merging neighboring fluence maps comes at the expense of dose quality deterioration. 
Searching for the desired trade-off can be cast as an optimization problem, which we call the merging problem, where the goal is to find the optimal merging of the gantry sectors and their corresponding fluence maps so that on the one hand, the delivery efficiency of the treatment plan is improved, and on the other hand, the dose quality does not substantially deteriorate. VMERGE applies a greedy heuristic to the merging problem and successively merges the pair of most similar neighboring fluence maps until the dose quality is not clinically attractive anymore. In this paper, we study exact and heuristic solution approaches to the merging problem and investigate the performance of these techniques on clinical cancer cases. In particular, we formulate the merging problem as a bi-criteria optimization problem and propose a solution method to characterize the associated Pareto-efficient frontier. Using clinical cancer cases, we then contrast the performance of VMERGE, as well as several variants of the algorithm, against the Pareto-frontier representation.

The rest of the paper is organized as follows. In Section 2, we first construct a network-flow model for the merging problem and then formulate the problem as a discrete bi-criteria optimization problem on the network. In Section 3, we discuss different solution methods for quantifying the trade-off between the two criteria and present our customized solution approach. In Section 4, we first discuss how to calculate the network parameters and then test the performance of our solution approach as well as several other heuristics on two clinical cancer cases. Finally, in Section 5, we summarize and conclude the paper.

2. Modeling the Merging Problem

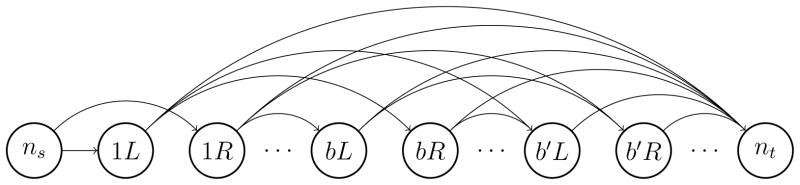

The goal of the merging problem is to find the optimal merging of the neighboring fluence maps as well as the MLC leaf-sweeping direction for modulating the resulting maps. Note that even though the MLC leaf-sweeping direction does not have an impact on the dose quality when modulating a static field, it can be influential when modulating fluence maps corresponding to merged gantry sectors. We represent the merging problem as an uncapacitated network-flow model as illustrated in Figure 1. In this network, nodes correspond to gantry sectors along with their starting MLC leaf positions, and arcs represent how these gantry sectors are merged together. More formally, let B denote the index set of the underlying beam angles. MLC leaves can be initially at the right (R) or left (L) side of the field for each gantry sector. Hence, we define the set of nodes, denoted by N, to be the collection of bL and bR for b ∈ B. For notational convenience, we let a(n) and p(n) represent the beam-angle index and the initial MLC-leaf position associated with node n ∈ N, respectively. Finally, we add dummy nodes ns and nt to N to represent the source and the sink node, respectively. The set of arcs in the network, denoted by A, connects bR to b′L and bL to b′R for every b, b′ ∈ B such that b′ > b. For instance, arc (bL, b′R) represents a merged gantry sector in which the MLC leaves start at the left side, travel across to modulate the sum of the fluence maps corresponding to beam angles b to b′ − 1, and end at the right side of the field. We also connect every node n ∈ N\{ns, nt} to the sink node nt using arc (n, nt) to represent a merged gantry sector that starts from the beginning of a(n) and spans across all the following gantry sectors. Finally, we connect the source node ns to nodes 1L and 1R using two dummy arcs. We then associate cost c(ni,nj) with arc (ni, nj) ∈ A to represent the time it takes to deliver the merged gantry sector (ni, nj). We assign a cost of zero to dummy arcs.
We also let c denote the vector of all arc costs. Similarly, for arc (ni, nj) ∈ A we let d(ni,nj) be the vector of dose deposition from the corresponding merged gantry sector in all voxels v ∈ V, and for dummy arcs we set d to 0. We let D denote the matrix whose columns are the dose-deposition vectors of all arcs.

Figure 1.

The network representation of the merging problem.

(N, A) is a directed acyclic network with O(|B|) nodes and O(|B|^2) arcs. There is a one-to-one correspondence between the ns→nt (source-to-sink) paths in the network and all possible merging patterns. Thus, we model the merging problem as a network-flow problem using the network (N, A). More specifically, let x(ni,nj) ∈ {0, 1} be a binary variable indicating if arc (ni, nj) ∈ A is used in the chosen ns→nt path and x ∈ {0, 1}^|A| be the vector of x(ni,nj)’s. We then use X ⊂ {0, 1}^|A| to represent the collection of all binary vectors forming an ns→nt path on the network. More formally, X can be characterized as X = {x ∈ {0, 1}^|A| : Gx = g} where G is the incidence matrix associated with the network and g is a column vector of size |N| with g1 = 1, g|N| = −1, and gi = 0 (i = 2, …, |N| − 1). Constraints Gx = g are the flow-balance constraints and enforce that exactly one unit of flow is sent from the source node ns and is received at the sink node nt. We refer the interested reader to [1] for a complete review of the network-flow theory and applications. In the context of the merging problem, a merged plan refers to x ∈ X and X is the collection of all possible merged plans. X contains O(2^|N|) merged plans, so their explicit enumeration is computationally intractable.
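The network construction described above can be sketched in a few lines. The following is a minimal illustration, not the authors' implementation: the function names and the node encoding (`"s"`, `"t"`, and `(b, side)` tuples) are assumptions made for this example. It builds the nodes and arcs for a small number of beam angles and counts the source-to-sink paths by dynamic programming over the topological order.

```python
def build_merging_network(num_beams):
    """Return (nodes, arcs) for beam angles 1..num_beams (hypothetical encoding)."""
    source, sink = "s", "t"
    beam_nodes = [(b, side) for b in range(1, num_beams + 1) for side in "LR"]
    nodes = [source] + beam_nodes + [sink]  # listed in topological order
    arcs = [(source, (1, "L")), (source, (1, "R"))]  # two dummy arcs
    opposite = {"L": "R", "R": "L"}
    for b, side in beam_nodes:
        # A sweep that starts on `side` ends on the opposite side at a later angle.
        for b_next in range(b + 1, num_beams + 1):
            arcs.append(((b, side), (b_next, opposite[side])))
        # The final merged sector runs from angle b through the last angle.
        arcs.append(((b, side), sink))
    return nodes, arcs


def count_source_sink_paths(nodes, arcs):
    """Count source-to-sink paths; assumes `nodes` is topologically ordered."""
    successors = {n: [] for n in nodes}
    for tail, head in arcs:
        successors[tail].append(head)
    paths_from = {n: 0 for n in nodes}
    paths_from[nodes[-1]] = 1
    for n in reversed(nodes[:-1]):
        paths_from[n] = sum(paths_from[h] for h in successors[n])
    return paths_from[nodes[0]]


nodes, arcs = build_merging_network(4)
print(len(nodes), len(arcs), count_source_sink_paths(nodes, arcs))
```

Even in this toy setting the number of paths doubles with every additional beam angle, which is why explicit enumeration is not an option for 180 beams.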

To measure the delivery time of a merged plan, we define the function t: X → ℝ+ as

$$t(x) = c^{\top} x, \tag{1}$$

where c is the vector of arc costs. Furthermore, to measure the dose deviation of a merged plan from the ideal dose distribution, we define the function q: X → ℝ+ as

$$q(x) = (Dx - z^*)^{\top}\, W\, (Dx - z^*), \tag{2}$$

where D is the matrix whose columns are the arc dose-deposition vectors, z* is the ideal dose distribution corresponding to the FMO solution prior to merging, and W is a diagonal matrix with diagonal elements wvv representing the relative importance of voxel v ∈ V. Thus, q measures the weighted distance of the dose distribution delivered by the merged plan x from the ideal dose distribution z*. We can then formulate the merging problem as a binary bi-criteria optimization problem as follows:

$$\text{(P)} \qquad \min_{x}\ \{\, t(x),\ q(x) \,\}$$

subject to

$$Gx = g, \tag{3}$$

$$x \in \{0, 1\}^{|A|}. \tag{4}$$

(P) seeks to obtain a merged plan that on the one hand has the minimal treatment delivery time, measured by t, and on the other hand has the least weighted deviation from the ideal dose distribution, measured by q. The notion of Pareto optimality can then be used to characterize the set of merged plans that need to be considered in quantifying the trade-off between the two criteria. In particular, Pareto-optimal solutions are those for which one cannot improve one criterion value unless the other criterion deteriorates. Any other solution is dominated by a Pareto-optimal solution and thus does not need to be considered in the trade-off. For a complete review of Pareto optimality see [11]. In the next section, we discuss a solution approach to obtain the set of Pareto-optimal solutions to (P).
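To make the two criteria and the notion of dominance concrete, the sketch below evaluates a linear delivery time and a weighted quadratic dose deviation for a binary arc vector, and filters a list of (t, q) pairs down to the non-dominated ones. It is only an illustration: the quadratic form of q is an assumption consistent with the BQP description later in the paper, and all function names and data are hypothetical.

```python
def delivery_time(x, c):
    """Linear delivery time: sum of the costs of the arcs used by x."""
    return sum(c_a * x_a for c_a, x_a in zip(c, x))


def dose_deviation(x, D, z_star, w):
    """Weighted squared deviation of the delivered dose from z_star.

    D is a list of rows (one per voxel, one column per arc) and w holds the
    diagonal of W. The quadratic form is an assumption of this sketch.
    """
    total = 0.0
    for row, z_v, w_v in zip(D, z_star, w):
        dose_v = sum(d * x_a for d, x_a in zip(row, x))
        total += w_v * (dose_v - z_v) ** 2
    return total


def pareto_filter(points):
    """Keep the non-dominated (t, q) pairs when both criteria are minimized."""
    frontier = []
    best_q = float("inf")
    for t, q in sorted(set(points)):  # increasing t, ties broken by q
        if q < best_q:  # strictly better q than every faster plan
            frontier.append((t, q))
            best_q = q
    return frontier


print(pareto_filter([(1, 5), (2, 3), (2, 4), (3, 3), (4, 1)]))
```

A plan survives the filter only if every faster plan has a strictly larger dose deviation, which is exactly the dominance relation used to define the Pareto-optimal set.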

3. Solution Approach

The goal of a bi-criteria optimization problem is to quantify the trade-off between the two criteria. To that end, one needs to obtain a representative set of Pareto-optimal solutions to the problem. In particular, the weighted-sum method is a well-known technique to generate Pareto-optimal solutions (see, e.g., [6]). In this approach a family of single-criterion problems are formulated using a linear scalarization of the two criteria where the scalarization weights are the changing parameters. By solving the problem for different nonnegative weights one can obtain all the Pareto-optimal solutions to a convex bi-criteria problem. However, for a discrete bi-criteria problem it may not be possible to obtain all the Pareto-optimal solutions using this method due to the non-convexity of the associated Pareto frontier. More specifically, this approach can only generate the supported Pareto-optimal points. These are the points that lie on the convex envelope of the Pareto frontier. Hence, to obtain a representative set of Pareto-optimal solutions to a discrete bi-criteria problem, other solution techniques that can generate non-supported Pareto-optimal points are required. In particular, the ε-constraint method is a well-known example of such techniques (see, e.g., [6]). In this approach a family of single-criterion problems are formulated by transforming one of the objectives into a constraint with an upper bound of ε where ε is the changing parameter. By solving the problem for different values of ε one can generate all the Pareto-optimal solutions to the problem. Using the ε-constraint method, [21], [14], and [2] proposed so-called box algorithms that yield a representation of the Pareto frontier as a collection of boxes containing all Pareto-optimal solutions. However, applying these techniques to (P) can be computationally very expensive due to the size of the problem. 
We propose a customized box algorithm to obtain the Pareto-frontier representation with less computational effort. In particular, we approximate the Pareto frontier using the concept of weak Pareto-optimality. Weak Pareto-optimal solutions are the ones at which one cannot improve both criteria at the same time; however, improving one criterion without deteriorating the other one may be possible (see, e.g., [11]). We use the ε-constraint method to generate these solutions and use them in our box algorithm. Note that conventional convex-cost flow solution approaches (see, e.g., [1]) are not applicable since q in Equation (2) is not a separable function over the network arcs. Therefore, we use integer-programming solution techniques (see, e.g., [27]). Moreover, to ease the computational burden of solving large-scale binary optimization problems required by the ε-constraint method, we only obtain near-optimal solutions and account for the resulting optimality gap in the Pareto approximation. In the following we first discuss some mathematical results used in our solution approach and then introduce the method.
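The difference between supported and non-supported Pareto-optimal points can be seen on a toy example. The sketch below uses four hypothetical (t, q) points, one of which, (4, 8), is Pareto-optimal but lies above the line joining its neighbors. The brute-force minimization over an explicit point list stands in for the single-criterion solves; it is an illustration of the two scalarizations, not the solution method used in the paper.

```python
def weighted_sum_points(points, num_weights=101):
    """Minimize w*t + (1-w)*q over a grid of weights w in [0, 1]."""
    found = set()
    for k in range(num_weights):
        w = k / (num_weights - 1)
        found.add(min(points, key=lambda p: w * p[0] + (1 - w) * p[1]))
    return sorted(found)


def eps_constraint_points(points):
    """Minimize q subject to t <= eps for every distinct time value eps."""
    found = set()
    for eps in sorted({p[0] for p in points}):
        feasible = [p for p in points if p[0] <= eps]
        # Ties in q are broken by t so the result is Pareto-optimal.
        found.add(min(feasible, key=lambda p: (p[1], p[0])))
    return sorted(found)


# Hypothetical (time, deviation) pairs; (5, 9) is dominated by (4, 8).
points = [(1, 10), (4, 8), (5, 9), (6, 1)]
print(weighted_sum_points(points))    # supported points only
print(eps_constraint_points(points))  # includes the non-supported point
```

On this data the weighted-sum sweep never returns (4, 8), whereas the ε-constraint sweep does, which is the reason the latter underlies the box algorithm.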

Let tmin = min{t(x) : x ∈ X} and tmax = max{t(x) : x ∈ X} be the minimum and maximum delivery time achievable over the set of all possible merged plans X, and let x1 and x2 be the corresponding merged plans. Note that x1 and x2 are the shortest and longest ns→nt paths in the network, respectively, which can be efficiently determined on an acyclic network (see, e.g., [1]). x1 and x2, the so-called anchor points, are both Pareto-optimal solutions to (P). To generate weak Pareto-optimal solutions to (P) using the ε-constraint method, we formulate a family of optimization problems, denoted by (P(ε)), for ε ∈ [tmin, tmax] as follows:

$$\text{(P}(\varepsilon)\text{)} \qquad \min_{x}\ q(x)$$

subject to (3), (4), and

$$t(x) \le \varepsilon, \tag{5}$$

where (3)–(5) characterize the collection of all merged plans whose delivery time is less than or equal to ε. The following proposition holds for the optimal solution to (P(ε)) (see, e.g., Ehrgott 2005).

Proposition 3.1

The optimal solution to (P(ε)) for ε ∈ [tmin, tmax] is a weak Pareto-optimal solution to (P).

Proof

Let x*(ε) be the optimal solution to (P(ε)). Now suppose x*(ε) is not a weak Pareto-optimal solution, then there exists x ∈ X such that t(x) < t(x*(ε)) ≤ ε and q(x) < q(x*(ε)). Hence, x is a feasible solution to (P(ε)) with a strictly smaller objective value than q(x*(ε)), but this is a contradiction since x*(ε) is the optimal solution to (P(ε)).
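The range [tmin, tmax] over which ε is varied comes from the anchor points, i.e., the shortest and longest source-to-sink paths. On a directed acyclic network both can be found in a single pass over the arcs in topological order. The sketch below is a generic illustration on a hypothetical four-node network; the function name and the data are assumptions, not part of the paper.

```python
def extreme_path_times(nodes, arc_cost):
    """Return (t_min, t_max) over all paths from nodes[0] to nodes[-1].

    `nodes` must be listed in topological order and `arc_cost` maps
    (tail, head) pairs to delivery times.
    """
    inf = float("inf")
    position = {n: i for i, n in enumerate(nodes)}
    shortest = {n: inf for n in nodes}
    longest = {n: -inf for n in nodes}
    shortest[nodes[0]] = longest[nodes[0]] = 0.0
    # Relax arcs by increasing tail position, so every arc into a node is
    # processed before any arc leaving it.
    ordered = sorted(arc_cost.items(), key=lambda item: position[item[0][0]])
    for (tail, head), cost in ordered:
        shortest[head] = min(shortest[head], shortest[tail] + cost)
        longest[head] = max(longest[head], longest[tail] + cost)
    return shortest[nodes[-1]], longest[nodes[-1]]


# Hypothetical toy network with source "s" and sink "t".
toy_nodes = ["s", "a", "b", "t"]
toy_costs = {("s", "a"): 2, ("s", "b"): 5, ("a", "b"): 1,
             ("a", "t"): 7, ("b", "t"): 3}
print(extreme_path_times(toy_nodes, toy_costs))
```

Because the delivery time is linear in x, these two solves are cheap compared with the quadratic problems (P(ε)) that follow.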

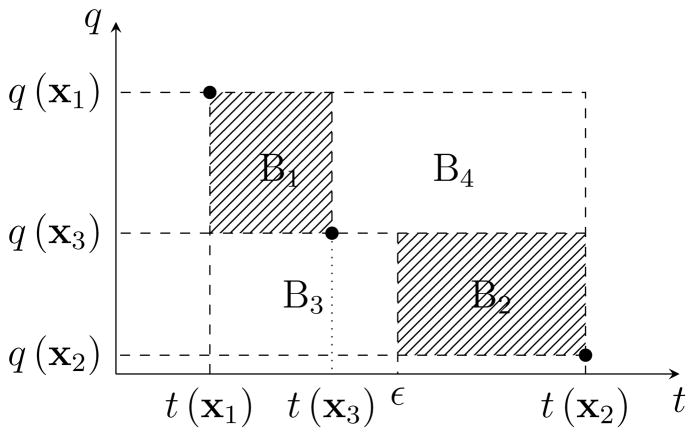

Hence, by solving (P(ε)) for different values of ε ∈ [tmin, tmax] one can generate weak Pareto-optimal solutions to (P). In the rest of this section, we discuss how to obtain an approximation of the Pareto frontier of (P) by solving instances of (P(ε)) for ε ∈ [tmin, tmax]. In particular, we iteratively obtain weak Pareto-optimal solutions to (P) and use them to derive a collection of boxes that contain all Pareto-optimal solutions. Clearly, the anchor points, x1 and x2, are two Pareto-optimal solutions (and therefore weak Pareto-optimal solutions). Starting with the anchor points, we can approximate the Pareto frontier using a box defined by the upper-left and lower-right corner points (t(x1), q(x1)) and (t(x2), q(x2)). Note that all Pareto-optimal solutions to (P) lie in this box. We then let ε = (t(x1) + t(x2))/2 and solve (P(ε)) to obtain the weak Pareto-optimal point x3 and the corresponding treatment time t(x3) and dose distance q(x3). The following proposition shows that all the Pareto optimal solutions to (P) lie either in box B1 with corner points (t(x1), q(x1)) and (t(x3), q(x3)), or box B2 with corner points (ε, q(x3)) and (t(x2), q(x2)) as shown in Figure 2 (see, e.g., Hamacher 2007).

Figure 2.

The new approximation of the Pareto frontier (the shaded area) after obtaining weak Pareto-optimal point x3.

Proposition 3.2

For any given Pareto-optimal solution x to (P), (t(x), q(x)) lies in either box B1 or B2 as shown in Figure 2.

Proof

Clearly, (t(x), q(x)) cannot lie in B4 since in that case it would be dominated by x3. Moreover, (t(x), q(x)) cannot lie in the interior of B3 since in that case it would violate the optimality of x3 for (P(ε)). Finally, all the Pareto-optimal solutions lie in the box defined by anchor points (t(x1), q(x1)) and (t(x2), q(x2)); therefore, (t(x), q(x)) is either in B1 or B2.

Thus, using the weak Pareto-optimal solution x3, we can improve the initial approximation by eliminating parts of it. We use the above procedure iteratively to obtain a fine approximation of the Pareto frontier. More specifically, at iteration n ≥ 2 the Pareto approximation is expressed as a collection of n − 1 boxes, denoted by Bk for k = 1, 2, …, n − 1. We also let (tk1, qk1) and (tk2, qk2) represent the upper-left and lower-right corner points associated with box Bk for k = 1, 2, …, n − 1. To improve the approximation we first choose the box Bk* with the largest smaller side, where the sides are normalized accordingly. In other words,

$$k^* = \arg\max_{k = 1, \dots, n-1}\ \min\left\{ \frac{t_k^2 - t_k^1}{r_t},\ \frac{q_k^1 - q_k^2}{r_q} \right\},$$

where rt and rq are the ranges of change in t and q, respectively, obtained from the anchor points. The choice of k* ensures that the Pareto approximation is composed of boxes that are flat along either axis. We then set ε = (tk*1 + tk*2)/2 and solve (P(ε)) to obtain a new weak Pareto-optimal solution in box Bk*, which is denoted by xn. Using xn we replace box Bk* with two smaller boxes obtained according to Proposition 3.2 and proceed to the next iteration. Finally, we terminate the algorithm when the largest smaller side among all boxes in the collection is less than a user-specified threshold.
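The iteration just described can be sketched on a toy problem in which the feasible plans are given as an explicit list of (t, q) pairs and (P(ε)) is solved exactly by enumeration. This is an illustration under several assumptions: the oracle, the function names, and the data are hypothetical, the lower-right anchor is taken to be the minimum-q point, and a box whose ε-constrained solution falls to the left of the box is simply shrunk rather than split.

```python
def solve_eps_constraint(points, eps):
    """Exact toy oracle for (P(eps)): minimize q subject to t <= eps."""
    feasible = [p for p in points if p[0] <= eps]
    return min(feasible, key=lambda p: (p[1], p[0]))


def box_algorithm(points, tol):
    """Return boxes (t1, q1, t2, q2) that cover all Pareto-optimal points."""
    x1 = min(points, key=lambda p: (p[0], p[1]))  # fastest plan
    x2 = min(points, key=lambda p: (p[1], p[0]))  # smallest deviation
    r_t, r_q = x2[0] - x1[0], x1[1] - x2[1]       # assumed positive

    def smaller_side(box):
        t1, q1, t2, q2 = box
        return min((t2 - t1) / r_t, (q1 - q2) / r_q)

    boxes = [(x1[0], x1[1], x2[0], x2[1])]
    while True:
        k_star = max(range(len(boxes)), key=lambda k: smaller_side(boxes[k]))
        if smaller_side(boxes[k_star]) < tol:
            return boxes
        t1, q1, t2, q2 = boxes.pop(k_star)
        eps = (t1 + t2) / 2
        t_n, q_n = solve_eps_constraint(points, eps)
        if t_n >= t1:
            # Left box between the old upper-left corner and the new point.
            boxes.append((t1, q1, t_n, q_n))
        # Right box: plans slower than eps must improve on q_n.
        boxes.append((eps, q_n, t2, q2))


toy_points = [(1, 10), (3, 9), (4, 8), (5, 9), (6, 1), (8, 0)]
for box in sorted(box_algorithm(toy_points, tol=0.05)):
    print(box)
```

Each split at least halves the time extent of the selected box, so the loop terminates for any positive tolerance, and every Pareto-optimal point of the toy data remains inside one of the returned boxes.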

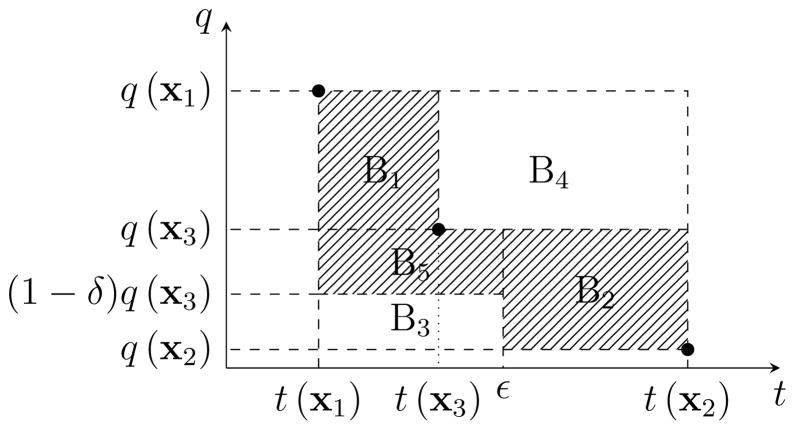

Each instance of (P(ε)) is a large-scale binary quadratic programming (BQP) problem and solving the problem to optimality may require a large computational effort. Thus, the problem is typically solved to a user-specified optimality gap or within a time limit. In either case, we obtain a near-optimal BQP solution with an optimality gap of δ > 0. In the following we discuss the impact of this optimality gap on the approximation of the Pareto frontier. In particular, suppose starting with anchor points x1 and x2, we solve (P(ε)) for ε = (t(x1) + t(x2))/2 and obtain x3 with an optimality gap of δ. The next proposition shows that to account for this optimality gap, the new Pareto approximation contains an additional box B5 with corner points (t(x1), q(x3)) and (ε, (1 − δ) q(x3)) (see Figure 3).

Figure 3.

The approximation of the Pareto frontier (the shaded area) after obtaining x3 with the optimality gap of δ.

Proposition 3.3

In the presence of the optimality gap δ > 0, for any given Pareto-optimal solution x to (P), (t(x), q(x)) lies in either box B1, B2, or B5 as shown in Figure 3.

Proof

Clearly, (t(x), q(x)) cannot lie in B4 since it would then be dominated by x3. Moreover, x3 is the best solution found for (P(ε)) with the corresponding optimality gap of δ > 0. Then, by the definition of the optimality gap, (1 − δ)q(x3) is a lower bound on the optimal objective value of (P(ε)). Therefore, (t(x), q(x)) cannot lie in B3 since in that case x would be a feasible solution to (P(ε)) and would violate the lower bound (1 − δ)q(x3).

Therefore, using Proposition 3.3 one can use near-optimal solutions to (P(ε)) for ε ∈ [tmin, tmax] to obtain a collection of boxes that contain all Pareto-optimal solutions to (P). Finally, if during the course of the box algorithm, (P(ε)) for ε ≤ t(x3), yields a near-optimal solution that lies in the lower bound region (box B5 in Figure 3), then this solution clearly dominates x3, and we can simply replace x3 with the newly-found solution. In the next section we report the results obtained from applying our solution technique to two clinical cancer cases and compare them against those obtained by VMERGE and two other related heuristics.
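The effect of the optimality gap on a single split can be written down directly from the corner points given in Proposition 3.3. The sketch below uses a hypothetical function name and hypothetical numbers, and takes δ to be a relative gap such that (1 − δ) q(x3) is a valid lower bound, as stated in the proof.

```python
def split_box_with_gap(box, eps, incumbent, gap):
    """Split a box after a near-optimal solve of (P(eps)).

    box       : (t1, q1, t2, q2), upper-left and lower-right corners
    incumbent : (t, q) of the best solution found for (P(eps))
    gap       : relative optimality gap delta reported by the solver
    Returns the boxes B1, B2 and the lower-bound box B5.
    """
    t1, q1, t2, q2 = box
    t_n, q_n = incumbent
    b1 = (t1, q1, t_n, q_n)               # between the corner and the incumbent
    b2 = (eps, q_n, t2, q2)               # plans slower than eps
    b5 = (t1, q_n, eps, (1 - gap) * q_n)  # region not excluded by the bound
    return b1, b2, b5


# Hypothetical numbers: times in seconds, deviations in arbitrary units.
b1, b2, b5 = split_box_with_gap((100.0, 5.0, 300.0, 0.0), eps=200.0,
                                incumbent=(180.0, 2.0), gap=0.25)
print(b1, b2, b5)
```

A larger gap makes B5 taller, which is how a loose solver bound translates into a loose lower bound on the Pareto frontier.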

4. Computational Results

We ran our experiments on a pancreas and prostate case with a voxel grid of 2.6×2.6×2.5 and 3×3×2.5 mm³ and a prescription dose of 48 and 79 Gy, respectively. The treatment plans are designed for a single treatment fraction using a prescription dose of 2 Gy per fraction and the resulting dose distribution is scaled up accordingly. We considered gantry sectors of 2° (i.e., [0°,2°], [2°,4°], …, [358°,360°]) and a beamlet grid of 1×1 cm² for the underlying beams. We used the beamlet-based dose calculation algorithm, quadratic infinite beam (QIB), implemented in CERR 3.0 beta 3 [10] to compute the dose-influence matrices for 180 equi-spaced beams centered at the gantry sectors. We solved the 180-beam FMO problem using the MCO approach (FMO formulations for both cases are provided in [8]). Using the obtained fluence maps we then constructed the network model (N, A) described in Section 2. In particular, for every arc (ni, nj) ∈ A, we need to obtain the associated cost c(ni,nj), which is the arc delivery time, as well as the dose deposition vector d(ni,nj). In the following we discuss how these parameters are calculated. Let f*b denote the optimal fluence map corresponding to beam angle b ∈ B determined by solving the FMO problem. We compute the fluence map associated with arc (ni, nj), denoted by F(ni,nj), as follows:

$$F_{(n_i, n_j)} = \sum_{b = a(n_i)}^{a(n_j) - 1} f_b^*,$$

where, for arcs entering the sink node nt, the sum runs through the last beam angle in B.

Note that while the MLC leaves travel across the field to modulate F(ni,nj), the gantry sweeps across the merged gantry sector corresponding to arc (ni, nj). Hence, the arc delivery time c(ni,nj) should allow for the F(ni,nj) modulation time as well as the gantry travel time. To calculate c(ni,nj) we first determine the F(ni,nj) modulation time assuming a maximum leaf speed of 2.5 cm per second and dose rate of 600 MU per minute, and the gantry travel time assuming a maximum gantry speed of 6° per second. To synchronize these two, if the modulation time is longer than the gantry travel time, we slow down the gantry accordingly; otherwise, we reduce the MLC maximum leaf speed. We then sequence F(ni,nj) using the sliding-window technique with the adjusted maximum leaf speed where the MLC leaves start from p(ni) side of the field. c(ninj) is then set to the F(ni,nj) modulation time. Moreover, using the obtained leaf trajectories as well as the adjusted gantry velocity we determine the fluence map delivered at each 2° gantry sector included in the arc (ni, nj) and compute the dose-deposition vector d(ni,nj) (see also [8] for more details). Finally, the W setting in Equation (2) can be used to emphasize one or several particular structures while searching for the merged plan that most closely matches the ideal dose distribution. Note that the VMERGE heuristic does not provide such feature. We assigned an identical nonzero weight to each target voxel in the W matrix while all other voxels receive a weight of zero. This W setting is motivated by the observation that the target coverage is the most susceptible aspect of a treatment plan to the merging process. However, we would like to emphasize that any choice of nonnegative W can be applied.
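The synchronization between fluence modulation and gantry travel amounts to letting the slower of the two set the arc delivery time. The sketch below is a simplified illustration: it assumes that scaling the maximum leaf speed stretches the modulation time proportionally, which is only an approximation of a sliding-window sequencer, and the function name and return values are hypothetical.

```python
MAX_GANTRY_SPEED_DEG_PER_S = 6.0  # maximum gantry speed quoted in the text


def synchronize_arc(modulation_time_s, sector_width_deg):
    """Return (arc_time_s, gantry_speed_deg_per_s, leaf_speed_scale).

    modulation_time_s is the time needed to modulate the merged fluence map
    at the maximum leaf speed and dose rate; sector_width_deg is the angular
    width of the merged gantry sector.
    """
    gantry_time_s = sector_width_deg / MAX_GANTRY_SPEED_DEG_PER_S
    # The slower process determines the delivery time of the arc.
    arc_time_s = max(modulation_time_s, gantry_time_s)
    # If modulation is slower, the gantry is slowed down to match it.
    gantry_speed = sector_width_deg / arc_time_s
    # If the gantry is slower, the leaves are slowed down by this factor
    # (assumed to stretch the modulation time proportionally).
    leaf_speed_scale = modulation_time_s / arc_time_s
    return arc_time_s, gantry_speed, leaf_speed_scale


print(synchronize_arc(4.0, 6.0))   # modulation-limited sector
print(synchronize_arc(0.5, 12.0))  # gantry-limited sector
```

In the first call the gantry is slowed to 1.5° per second, while in the second the leaves run at a quarter of their maximum speed so that both finish together.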

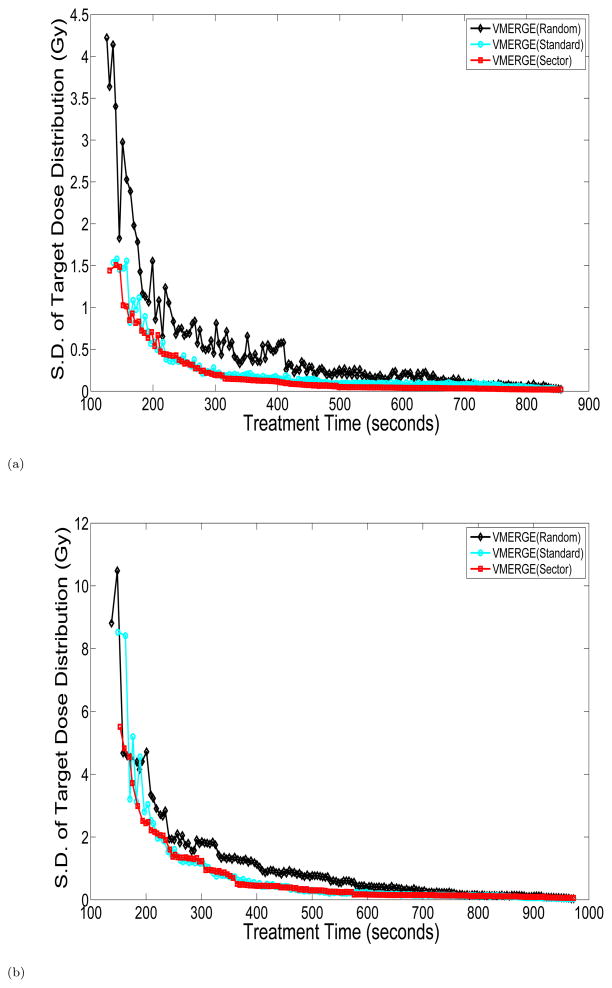

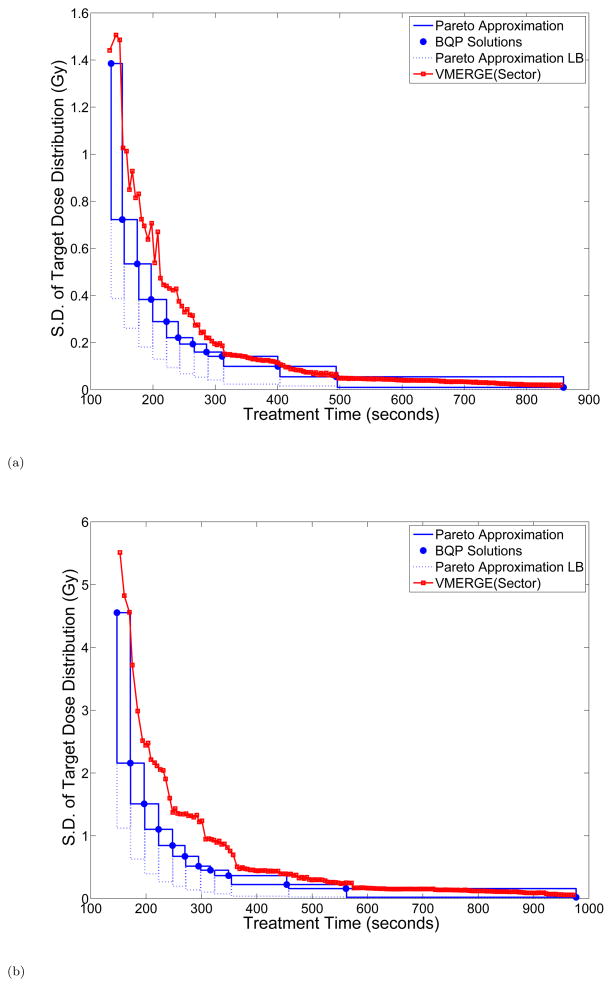

We first ran standard VMERGE as well as two variants of the algorithm and evaluated the treatment delivery time t and the weighted dose distance q after each merge. We considered three different strategies for merging neighboring gantry sectors. In particular, at each iteration of the VMERGE algorithm the pair of merging gantry sectors are chosen (1) randomly (random VMERGE), (2) based on the fluence-map similarity score (standard VMERGE), and (3) based on the length of the merged gantry sector (sector VMERGE). More specifically, sector VMERGE first merges all 2° gantry sectors, then the resulting 4° gantry sectors, and so forth. Figure 4 compares the trade-off curves obtained using strategies (1)–(3) for the pancreas and prostate cases. Clearly, strategy (1) is outperformed by strategies (2) and (3) in both cases. Moreover, the trade-off curves associated with strategies (2) and (3) are somewhat similar; however, strategy (3) shows a more stable performance particularly for shorter treatment-time values.
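The three strategies differ only in how the next pair of neighboring gantry sectors is chosen, so they can share one merging loop. The sketch below illustrates the random and sector-length rules on sector indices alone. The function names are hypothetical, no fluence maps or dose calculations are involved, and the similarity score used by standard VMERGE is not reproduced here because it is not specified in this section.

```python
import random


def merge_sequence(num_sectors, choose_pair, num_merges):
    """Apply num_merges merges; each sector is a list of beam indices."""
    sectors = [[b] for b in range(num_sectors)]
    for _ in range(num_merges):
        k = choose_pair(sectors)  # merge sectors k and k + 1
        sectors[k:k + 2] = [sectors[k] + sectors[k + 1]]
    return sectors


def choose_shortest(sectors):
    """Sector rule: merge the neighboring pair with the shortest combined length."""
    return min(range(len(sectors) - 1),
               key=lambda k: len(sectors[k]) + len(sectors[k + 1]))


def make_random_chooser(seed):
    """Random rule: merge a uniformly chosen neighboring pair."""
    rng = random.Random(seed)
    return lambda sectors: rng.randrange(len(sectors) - 1)


print(merge_sequence(8, choose_shortest, 4))
print(merge_sequence(8, make_random_chooser(0), 4))
```

With the sector rule, all single sectors are paired before any pair is merged further, which matches the description of sector VMERGE above. A similarity-based rule would plug into the same loop as another `choose_pair` function.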

Figure 4.

Trade-off curves associated with merging strategies (1)–(3) in VMERGE for the (a) pancreas and (b) prostate case. S.D.: Standard Deviation.

We next obtained the Pareto-frontier representation for (P) using our solution method described in Section 3. We used IBM ILOG CPLEX solver for mixed-integer quadratic programming to solve each instance of (P(ε)). We warm-started the optimizer with a solution to (P(ε)) provided by a network-flow heuristic, which is designed using our network model. More specifically, this heuristic uses the merging pattern as a surrogate for the dose quality. To that end, we associate a nonnegative weight with each arc in the network to exponentially penalize the number of merges performed within that arc. We then formulate and solve a constrained shortest-path problem to obtain a source-to-sink path on the network whose cost is below ε and has the least cumulative weight. The constrained shortest-path problem is well studied in the literature and several solution approaches using dynamic programming, subgradient method and integer-programming techniques have been proposed for this problem (see, e.g., [12, 9]). Here we employed the CPLEX solver for mixed integer programming, which can solve our constrained shortest path formulation in order of seconds. Solutions provided by this network-flow heuristic are comparable to the ones obtained using sector VMERGE. We prefer this alternative heuristic to warm start the CPLEX solver because it relies solely on the network model and therefore can be performed without requiring the implementation of sector VMERGE.
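The warm-start heuristic solves a constrained shortest-path problem: among all source-to-sink paths whose total time does not exceed ε, find the one with the least cumulative weight. The paper solves this with a mixed-integer programming solver; the sketch below instead uses a label-based dynamic program with dominance pruning, one of the alternative approaches mentioned above, on a hypothetical four-node network. The function name, the arc weights, and the data are assumptions made for illustration.

```python
def constrained_shortest_path(nodes, arcs, eps):
    """Least-weight path from nodes[0] to nodes[-1] with total time <= eps.

    nodes : node list in topological order
    arcs  : {(tail, head): (time, weight)} with nonnegative times
    Returns (time, weight, path) or None if no path meets the time limit.
    """
    outgoing = {n: [] for n in nodes}
    for (tail, head), (time, weight) in arcs.items():
        outgoing[tail].append((head, time, weight))
    labels = {n: [] for n in nodes}  # non-dominated (time, weight, path)
    labels[nodes[0]] = [(0.0, 0.0, [nodes[0]])]
    for tail in nodes:  # labels of `tail` are final when it is reached
        for time_u, weight_u, path_u in labels[tail]:
            for head, time, weight in outgoing[tail]:
                new = (time_u + time, weight_u + weight, path_u + [head])
                if new[0] > eps:
                    continue  # violates the time limit
                if any(l[0] <= new[0] and l[1] <= new[1] for l in labels[head]):
                    continue  # dominated by an existing label
                kept = [l for l in labels[head]
                        if not (new[0] <= l[0] and new[1] <= l[1])]
                labels[head] = kept + [new]
    sink_labels = labels[nodes[-1]]
    return min(sink_labels, key=lambda l: l[1]) if sink_labels else None


toy_nodes = ["s", "a", "b", "t"]
toy_arcs = {("s", "a"): (2, 0), ("a", "b"): (2, 0), ("b", "t"): (2, 0),
            ("s", "b"): (3, 1), ("a", "t"): (2, 1), ("s", "t"): (3, 3)}
print(constrained_shortest_path(toy_nodes, toy_arcs, eps=6))
print(constrained_shortest_path(toy_nodes, toy_arcs, eps=5))
```

In the toy data, arcs that skip more nodes carry larger weights, mimicking a penalty that grows with the number of merges within an arc. Tightening ε from 6 to 5 forces the path to use one such arc.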

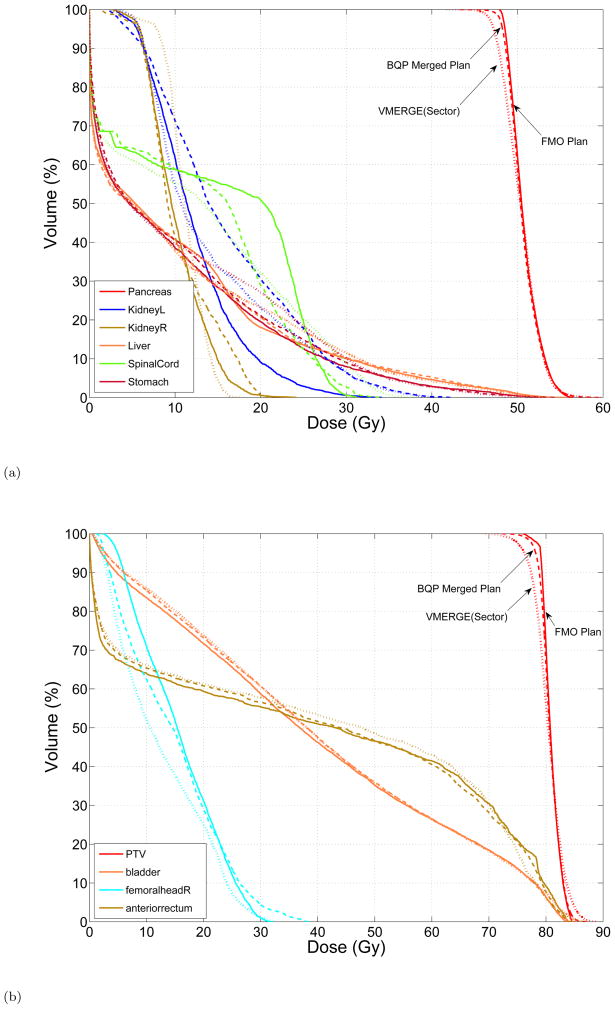

Figure 5 shows the Pareto-frontier representation obtained by the box algorithm for the trade-off between the standard deviation of the target dose distribution and the treatment time. The BQP solutions, obtained from solving different instances of (P(ε)) and used by the box algorithm, are also shown in the figure. We considered a minimum optimality gap and a maximum time limit of 45 minutes as termination conditions while solving each instance of (P(ε)). The CPLEX solver cannot reach this optimality gap within the time limit and therefore terminates with a larger gap. Thus, the optimality gap associated with the BQP solutions is accounted for by adding the Pareto-approximation lower bound (LB) using Proposition 3.3, which appears to be relatively loose. To investigate this further, we let the optimizer run longer for several instances of (P(ε)); however, we only observed marginal improvements in the lower bound. As the shape of the Pareto frontier suggests, initially the treatment time can be significantly reduced through merging the neighboring gantry sectors without deviating dramatically from the ideal dose distribution in the target. However, below a certain treatment time, small improvements in treatment efficiency come at the expense of large deviations from the ideal target dose. The trade-off curve associated with sector VMERGE is also contrasted against the approximated Pareto frontier in Figure 5. In particular, for relatively large treatment times the trade-off curve associated with sector VMERGE lies within the Pareto-frontier approximation, and as the treatment time further decreases it starts deviating from it. Finally, in Figure 6 we compare the DVH curves associated with the initial FMO plan, a BQP merged plan, and a treatment plan obtained using sector VMERGE with the same treatment time. For both cases, the BQP merged plans yield superior DVH curves, particularly for the target coverage.

Figure 5.

The Pareto approximation obtained by the box algorithm and the trade-off curve associated with sector VMERGE for the (a) pancreas and (b) prostate case. S.D.: Standard Deviation.

Figure 6.

DVH curves associated with the initial FMO plan (solid), BQP merged plan (dashed) with the treatment time of (a) 140 seconds for the pancreas and (b) 215 seconds for the prostate case, and the sector VMERGE plan (dotted) with the same treatment time.

5. Discussion and Conclusion

The merging problem has been formulated as a bi-criteria optimization problem on a network-flow model, and the corresponding Pareto-frontier representation was obtained using a customized solution approach. In particular, we developed a box algorithm that sequentially generates weak Pareto-optimal points using the ε-constraint method. These points are then used to identify a collection of boxes that contain all Pareto-optimal solutions to the problem. However, due to the large size of the BQP problems, they can only be solved to within an optimality gap. Hence, our solution method is designed to account for this optimality gap in the Pareto-frontier representation.
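The idea of enclosing the Pareto frontier in boxes built from ε-constraint solves can be sketched on a toy instance. The code below is a generic illustration, not the customized box algorithm or the bound of Proposition 3.3: the candidate plans are made-up (time, deviation) pairs, `solve_eps` is a hypothetical stand-in for one solve of (P(ε)), and the optimality gap is simulated by shrinking the incumbent value by a fixed fraction.

```python
def solve_eps(plans, eps, gap=0.0):
    """Stand-in for one (P(eps)) solve on a finite list of (time, deviation) plans.

    Returns (time, incumbent_deviation, deviation_lower_bound), or None if no
    plan has time <= eps. The lower bound simulates a solver that stops with a
    relative optimality gap `gap`.
    """
    feasible = [p for p in plans if p[0] <= eps]
    if not feasible:
        return None
    dev, time = min((d, t) for t, d in feasible)  # ties broken by shorter time
    return time, dev, (1.0 - gap) * dev


def enclosing_boxes(plans, eps_values, gap=0.0):
    """Boxes ((t_lo, t_hi), (d_lo, d_hi)) covering every Pareto-optimal plan.

    For consecutive budgets e_lo < e_hi, a Pareto-optimal plan with
    e_lo < time <= e_hi has deviation at least the lower bound found at e_hi
    and at most the incumbent deviation found at e_lo.
    """
    solves = [(e, solve_eps(plans, e, gap)) for e in sorted(eps_values, reverse=True)]
    solves = [(e, s) for e, s in solves if s is not None]
    boxes = []
    for (e_hi, s_hi), (e_lo, s_lo) in zip(solves, solves[1:]):
        boxes.append(((e_lo, e_hi), (s_hi[2], s_lo[1])))
    return boxes


if __name__ == "__main__":
    # Hypothetical (treatment time in s, dose deviation) pairs.
    plans = [(300, 1.0), (250, 1.2), (200, 1.5), (150, 2.5), (120, 5.0), (260, 2.0)]
    print(enclosing_boxes(plans, [300, 200, 120]))
    print(enclosing_boxes(plans, [300, 200, 120], gap=0.1))
```

With a nonzero gap the lower edge of each box moves down, so the boxes grow; this mirrors how a loose lower bound widens the Pareto-frontier representation.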

The Pareto-frontier representation provides valuable information about the trade-off between the VMAT dose quality and its treatment efficiency. More specifically, starting from a 180-beam FMO solution that yields the ideal dose distribution, one can substantially improve the treatment efficiency by merging neighboring fluence maps without significantly deteriorating the original dose distribution. However, below a certain treatment time, slight improvements in the treatment efficiency come at the expense of large deviations from the ideal dose distribution. Our exact solution approach to the merging problem provides a platform for evaluating the performance of different merging heuristics, including the standard VMERGE. In particular, contrasting the trade-off curves obtained by merging heuristics against the Pareto-frontier representation shows that a merging heuristic can produce clinically attractive merged plans that lie close to the Pareto frontier. Considering the computational effort required by the exact method, the merging heuristics appear to be a reasonable strategy for approximately solving the merging problem.

It has been shown that the sliding-window technique can modulate a given fluence map with minimal delivery time when the MLC leaves are forced to start from one side of the field and end at the other side of the field (see, e.g., [23]). However, if this restriction is relaxed, the sliding-window technique no longer necessarily yields an optimal delivery time. For instance, in the case of a uni-modal fluence strip (one-dimensional map), the close-in technique clearly has a shorter delivery time than the sliding-window technique [15, 4]. This difference can be even more pronounced in VMAT, where a collection of fluence maps is sequentially modulated. Currently, the merging problem is formulated and solved assuming that all merged fluence maps are modulated using the sliding-window technique. However, further improvement in treatment efficiency can be achieved if a dynamic leaf-sequencing scheme that allows for other forms of leaf trajectories is used. Future research can extend this work by employing a more general leaf-sequencing scheme in the merging problem.
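To make the sliding-window assumption concrete, the following sketch uses a commonly cited simplified model for a single leaf pair sweeping across a one-dimensional fluence strip: the delivery time is the leaf travel time across the field plus the sum of the positive fluence increments divided by the dose rate. This model, the parameter values, and the function name are assumptions made for illustration and are not the delivery-time computation used in this work.

```python
def sliding_window_time(profile, bixel_width_cm, leaf_speed_cm_s, dose_rate_mu_s):
    """Simplified unidirectional sliding-window delivery time (seconds).

    profile: fluence values (MU) per bixel along the leaf travel direction.
    Assumed model: travel time across the strip at maximum leaf speed plus the
    time needed to deliver the sum of positive fluence increments.
    """
    previous = [0.0] + list(profile[:-1])
    rises = sum(max(0.0, b - a) for a, b in zip(previous, profile))
    travel = len(profile) * bixel_width_cm / leaf_speed_cm_s
    return travel + rises / dose_rate_mu_s


if __name__ == "__main__":
    # Two hypothetical neighboring fluence strips and their merged (summed) strip.
    a = [0.0, 2.0, 5.0, 3.0, 1.0]
    b = [1.0, 4.0, 2.0, 2.0, 0.0]
    merged = [x + y for x, y in zip(a, b)]
    params = dict(bixel_width_cm=1.0, leaf_speed_cm_s=2.5, dose_rate_mu_s=10.0)
    separate = sliding_window_time(a, **params) + sliding_window_time(b, **params)
    together = sliding_window_time(merged, **params)
    print(separate, together)
```

Under this model, delivering the merged strip in one sweep never takes longer than delivering the two strips in separate sweeps, because the leaf travel time is paid once and the positive increments of a sum cannot exceed the sum of the positive increments. The price, as discussed above, is the deviation of the merged plan from the ideal dose distribution.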

Acknowledgments

The project described was supported by Award No. R01CA103904 from the National Cancer Institute. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Cancer Institute or the National Institutes of Health. The authors would like to thank Thomas Bortfeld for providing feedback on the manuscript. Thanks to Dualta McQuaid and Wei Chen for their contributions to the computational infrastructure used in this research.

References

1. Ahuja RK, Magnanti TL, Orlin JB. Network Flows: Theory, Algorithms, and Applications. Prentice-Hall; Upper Saddle River NJ: 1993.

2. Bérubé JF, Gendreau M, Potvin JY. An exact ε-constraint method for bi-objective combinatorial optimization problems: Application to the traveling salesman problem with profits. European Journal of Operational Research. 2009;194(1):39–50.

3. Bortfeld T, Webb S. Single-arc IMRT? Physics in Medicine and Biology. 2009;54:N9–N20. doi: 10.1088/0031-9155/54/1/N02.

4. Bortfeld TR, Kahler DL, Waldron TJ, Boyer AL. X-ray field compensation with multileaf collimators. International Journal of Radiation Oncology Biology Physics. 1994;28(3):723–730. doi: 10.1016/0360-3016(94)90200-3.

5. Bzdusek K, Friberger H, Eriksson K, Hårdemark B, Robinson D, Kaus M. Development and evaluation of an efficient approach to volumetric arc therapy planning. Medical Physics. 2009;36:2328–2339. doi: 10.1118/1.3132234.

6. Chankong V, Haimes YY. Multiobjective Decision Making: Theory and Methodology. North-Holland; New York: 1983.

7. Craft DL, Halabi TF, Shih HA, Bortfeld TR. Approximating convex Pareto surfaces in multiobjective radiotherapy planning. Medical Physics. 2006;33:3399–3408. doi: 10.1118/1.2335486.

8. Craft D, McQuaid D, Wala J, Chen W, Salar E, Bortfeld T. Multicriteria VMAT optimization. Medical Physics. 2012;39(2):686–696. doi: 10.1118/1.3675601.

9. Carlyle WM, Royset JO, Wood RK. Lagrangian relaxation and enumeration for solving constrained shortest-path problems. Networks. 2008;52:256–270.

10. Deasy JO, Blanco AI, Clark VH. CERR: a computational environment for radiotherapy research. Medical Physics. 2003;30:979–985. doi: 10.1118/1.1568978.

11. Ehrgott M. Multicriteria Optimization. Vol. 491. Springer-Verlag; Berlin: 2005.

12. Garcia R. PhD Thesis. 2009. Resource constrained shortest paths and extensions.

13. Guckenberger M, Richter A, Krieger T, Wilbert J, Baier K, Flentje M. Is a single arc sufficient in volumetric-modulated arc therapy (VMAT) for complex-shaped target volumes? Radiotherapy and Oncology. 2009;93(2):259–265. doi: 10.1016/j.radonc.2009.08.015.

14. Hamacher HW, Pedersen CR, Ruzika S. Finding representative systems for discrete bicriterion optimization problems. Operations Research Letters. 2007;35(3):336–344.

15. Källman P, Lind B, Eklöf A, Brahme A. Shaping of arbitrary dose distributions by dynamic multileaf collimation. Physics in Medicine and Biology. 1988;33:1291–1300. doi: 10.1088/0031-9155/33/11/007.

16. Küfer KH, Scherrer A, Monz M, Alonso F, Trinkaus H, Bortfeld T, Thieke C. Intensity-modulated radiotherapy–a large scale multi-criteria programming problem. OR Spectrum. 2003;25(2):223–249.

17. Luan S, Wang C, Cao D, Chen DZ, Shepard DM, Yu CX, et al. Leaf-sequencing for intensity-modulated arc therapy using graph algorithms. Medical Physics. 2008;35(1):61–69. doi: 10.1118/1.2818731.

18. Men C, Romeijn HE, Jia X, Jiang SB. Ultrafast treatment plan optimization for volumetric modulated arc therapy (VMAT). Medical Physics. 2010;37:5787–5791. doi: 10.1118/1.3491675.

19. Otto K. Volumetric modulated arc therapy: IMRT in a single gantry arc. Medical Physics. 2008;35:310–317. doi: 10.1118/1.2818738.

20. Rao M, Yang W, Chen F, Sheng K, Ye J, Mehta V, Shepard D, Cao D. Comparison of Elekta VMAT with helical tomotherapy and fixed field IMRT: Plan quality, delivery efficiency and accuracy. Medical Physics. 2010;37:1350–1359. doi: 10.1118/1.3326965.

21. Solanki R. Generating the noninferior set in mixed integer biobjective linear programs: an application to a location problem. Computers and Operations Research. 1991;18(1):1–15.

22. Spirou SV, Chui CS. Generation of arbitrary intensity profiles by dynamic jaws or multileaf collimators. Medical Physics. 1994;21:1031–1041. doi: 10.1118/1.597345.

23. Svensson R, Källman P, Brahme A. An analytical solution for the dynamic control of multileaf collimators. Physics in Medicine and Biology. 1994;39:37–61. doi: 10.1088/0031-9155/39/1/003.

24. Teoh M, Clark CH, Wood K, Whitaker S, Nisbet A. Volumetric modulated arc therapy: a review of current literature and clinical use in practice. British Journal of Radiology. 2011;84(1007):967–996. doi: 10.1259/bjr/22373346.

25. Tsai CL, Wu JK, Chao HL, Tsai YC, Cheng JCH. Treatment and dosimetric advantages between VMAT, IMRT, and helical tomotherapy in prostate cancer. Medical Dosimetry. 2011;36(3):264–271. doi: 10.1016/j.meddos.2010.05.001.

26. Wang C, Luan S, Tang G, Chen DZ, Earl MA, Yu CX. Arc-modulated radiation therapy (AMRT): a single-arc form of intensity-modulated arc therapy. Physics in Medicine and Biology. 2008;53:6291–6304. doi: 10.1088/0031-9155/53/22/002.

27. Wolsey LA. Integer Programming. Wiley-Interscience; 1998.

28. Zhang P, Happersett L, Hunt M, Jackson A, Zelefsky M, Mageras G. Volumetric modulated arc therapy: planning and evaluation for prostate cancer cases. International Journal of Radiation Oncology Biology Physics. 2010;76(5):1456–1462. doi: 10.1016/j.ijrobp.2009.03.033.