Abstract

Localist models of spreading activation (SA) and models assuming distributed representations offer very different takes on semantic priming, a widely investigated paradigm in word recognition and semantic memory research. In the present study we implemented SA in an attractor neural network model with distributed representations and created a unified framework for the two approaches. Our model assumes a synaptic depression mechanism leading to autonomous transitions between encoded memory patterns (latching dynamics), which account for the major characteristics of automatic semantic priming in humans. Using computer simulations, we demonstrated how findings that challenged attractor-based networks in the past, such as mediated and asymmetric priming, are a natural consequence of our present model’s dynamics. Puzzling results regarding backward priming were also given a straightforward explanation. In addition, the current model addresses some of the differences between semantic and associative relatedness and explains how these differences interact with stimulus onset asynchrony in priming experiments.

Keywords: word recognition, semantic priming, neural networks, distributed representations, latching dynamics

1. Introduction

Related concepts tend to elicit one another in semantic memory. This simple and intuitive notion is firmly grounded in day-to-day experience as well as in formal studies of human performance. For example, in free-association tasks, when subjects are instructed to respond with the first word that comes into their mind given a cue word, the response is often related in meaning to the cue (e.g., Deese, 1962); in sentence-verification tests, judgments regarding the semantic relationships between words are usually carried out faster for words sharing close semantic relations compared to words sharing distant relations (Collins & Quillian, 1969); in word recognition studies implementing priming paradigms, subjects respond faster to a target word shortly after being exposed to a related word prime, compared to when the prime is semantically unrelated (Neely, 1991). Such findings have often been interpreted as supporting models of semantic memory in which the organization of knowledge is, at least partially, based on meaning-related neighborhoods.

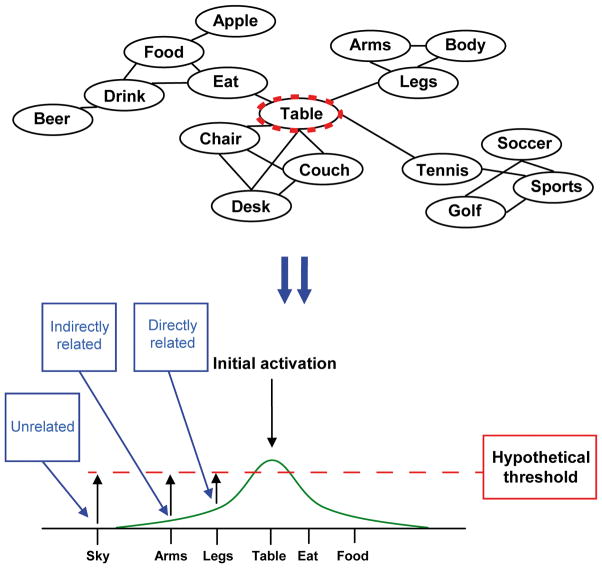

One of the most prominent theories of semantic processing is the Spreading Activation (SA) model (e.g., Anderson, 1983; Collins & Loftus, 1975; Collins & Quillian, 1969). According to this model, concepts are represented by single units (or ‘nodes’), interconnected in a network structure which allows semantic activation to spread from one unit to another. The amount of activation that spreads is determined by the strength of the connection between two units, which represents their semantic/associative relatedness. The more strongly two concepts are related to one another, the stronger the connection between them (e.g., table – chair compared to bed – chair and dog – chair). When a concept is activated (for example, by an external input), the activity level of its corresponding unit is set above a certain threshold, signaling its recognition by the system. The activity building at a particular node propagates to adjacent nodes automatically, thus elevating their activity. The concepts activated by proxy would, in turn, activate their own surroundings and so activity spreads further and further in the semantic space (Fig. 1). If, during this SA process, the activity of a unit reaches its own threshold, its corresponding word becomes consciously perceived and available for evaluation. The SA stops when the original node is no longer externally activated, thus letting the remaining amount of activity in the network diminish with time and distance from origin (‘dissipation of activation’).

Fig. 1.

Spreading activation operates on interconnected nodes within semantic memory. When one node is externally activated, activation spreads to related concepts, thus raising their baseline. Priming occurs when a pre-activated concept is presented as a target.

A fundamentally different approach to modeling semantic memory is given by attractor neural network models (e.g., Masson, 1995; Moss, Hare, Day, & Tyler, 1994; Plaut, 1995; Plaut & Booth, 2000). The common assumption of such models is that concepts in semantic memory are represented by the distributed activity pattern (labeled ‘memory pattern’) of an assembly of “neurons”. During a learning phase in which the concepts are introduced to the network, the connectivity among the neurons gradually changes until the memory patterns corresponding to the learned concepts become attractors in the network dynamics. Semantic relations are expressed in attractor models as correlations between memory patterns. In some models, this correlation is interpreted as feature-overlap: If each neuron represents an explicit feature of a concept (e.g., have 4 legs), all concepts sharing this feature (dogs, cats, etc …) will have similar activity in the corresponding neurons. In other models, however, the correlation between patterns does not indicate distinguished shared features (see Jones, Kintsch, & Mewhort, 2006; Plaut, 1995). When the network is presented with an external cue corresponding to one of its stored memory patterns, the units’ activity is gradually driven to this pattern until the network fully settles on its attractor-state. This convergence represents the identification of the corresponding concept. Since related concepts are correlated, when one concept is ‘activated’ (that is, the network converges on it), its related concepts are partly activated in parallel. The dynamics in these networks is therefore short-lived, and terminates when the steady-state activity of an attractor is reached. SA and attractor models have often been contrasted (e.g., McNamara, 2005; Thompson-Schill, Kurtz, & Gabrieli, 1998).

SA models, being less constrained than attractor networks, are usually more flexible in accounting for various cognitive phenomena and, indeed, have been frequently used to simulate many well-known findings in the semantic memory literature (for a review see McNamara & Holbrook, 2003). However, they are metaphorical models, which do not offer a mechanistic account of the dynamics in question. Hence, the traditional SA models may very well be seen as describing the dynamics in the semantic network (albeit in quantifiable terms) rather than pointing to its underlying (biologically-inspired) sources. Attractor models, in contrast, may be somewhat more limited in their explanatory power due to their reliance on symmetric properties such as pattern-similarity and their lack of emphasis on long-term dynamics beyond the typical convergence process. However, they provide mechanistic accounts of the processes involved and are more biologically oriented than SA, relying on principles such as distributed representations, attractor dynamics and inter-neuronal connectivity driven by biologically-inspired learning rules (e.g., Hebbian learning; Hopfield, 1982). While the biological plausibility of distributed representations was recently put into question (Bowers, 2009), they are, nevertheless, still a consensual view in neuroscience particularly when assuming sparse representations with low correlations between them (see, for example, Plaut & McClelland, 2009; Quian Quiroga & Kreiman, 2010; Waydo, Kraskov, Quiroga, Fried, & Koch, 2006). It is therefore an interesting computational question whether attractor networks can be extended to reach explanatory power comparable to SA while retaining the principles which allow them a certain degree of biological feasibility.

In the current study, we developed a model which attempts to take a step towards unifying the principles of SA and attractor networks into one coherent framework. We did this in the context of semantic priming, one of the frequently used paradigms in word recognition and semantic memory research that confronts many of the fundamental differences between the two approaches. We show how the introduction of biologically-motivated adaptation mechanisms into attractor networks can lead to autonomous hopping between encoded memory patterns, which mimics SA dynamics and, consequently, how such a model can account for some of the basic findings in the priming literature.

An additional motivation for our work was that SA and attractor networks offer distinctively different takes on the general notion of semantic activation, which tap on several key issues in the semantic memory literature; these include the question of representation (local or distributed), degree of semantic activation (focused or spread) and the source of semantic and associative relatedness (static similarities between distributed representations or a product of dynamical changes in the system). Suggesting a model which combines the classical principles of SA and the more biologically-plausible principles of attractor networks, we hope to present some of these issues in a new perspective.

The article has the following structure: We begin by discussing how SA and attractor models explain semantic priming and what the discrepancies between these models are. Then we introduce our model, followed by simulations which demonstrate its basic traits and capability to explain previously reported semantic priming and free-association results in human performance studies. Finally, we discuss some implications of the model and how it may relate to several other theories in the field.

2. Semantic priming

2.1. Basic experimental findings

Since its introduction in the early seventies (Meyer & Schvaneveldt, 1971), semantic priming has been among the most widely investigated phenomena in the research of semantic memory. In a typical priming experiment (Neely, 1977; See Neely, 1991, and McNamara, 2005, for reviews), the participant is presented with two words in succession, the prime and the target, with either a short or a long Stimulus Onset Asynchrony (SOA). Frequently used procedures involve reading the prime silently and either naming the target (pronunciation task), or deciding whether it is a real word or not (lexical decision task). The target could either be semantically related or unrelated to the prime, or a nonword in case of the lexical decision task. The semantic priming effect refers to the finding that the average reaction time (pronouncing the second word or deciding it is a real word) is shorter and error rates are lower when the two words are semantically related to each other, compared to when they are unrelated. Experiments have shown that priming may reflect both facilitation (i.e., a prime accelerates the reaction time to a related target) and inhibition (the prime delays reaction time to an unrelated target) compared to a condition in which a neutral stimulus (such as a row of X’s) takes the role of the prime.

The nature of the relations between primes and targets and how it influences the priming effect has often been the focus of attention in the priming literature. Words can be only semantically related (e.g., trout – salmon), could be episodically associated even without a semantic relationship (e.g., pillar – society), or could be related both semantically and associatively (e.g., dog – cat). Across all three types of relationships, priming is augmented for pairs which are closely related to each other (a “strong” connection) compared to pairs in which the relationship between the words is weaker (Lorch, 1982; Neely, 1991). Priming seems to be stronger for pairs which are both semantically and associatively related (as determined by free association norms; see Nelson, McEvoy, & Schreiber, 2004) compared to pairs which are purely semantically related (the so-called ‘associative boost’ effect; Hutchison, 2003; Lucas, 2000; Moss et al., 1994). When both semantic and associative relationships between prime and target exist, the magnitude of priming tends to increase with SOA (e.g., de Groot, 1984, 1985), whereas when the prime and target are only semantically related priming is less affected by SOA (Lucas, 2000). Priming can also be asymmetric, so that the size of the priming effect changes depending on which of the two words in a pair is the prime and which is the target. This asymmetry is best demonstrated for pairs in which the words are associatively related in one direction (e.g., stork – baby), but not in the opposite direction (e.g., baby – stork). For such pairs, priming could be very effective when the prime and the target preserve the association, while if presented in the backward direction (termed ‘backward priming’) the effect is smaller and may even disappear with sufficiently long SOAs (e.g., Kahan et al., 1999). Finally, word pairs in which the prime is related to the target only indirectly through a mediating word (termed ‘mediated priming’; e.g., lion – stripes, mediated by tiger) were shown to yield priming effects when strategic processes related to decision-making are prevented (Balota & Lorch, 1986; Neely, 1991). These effects, however, are smaller compared to the priming of directly related items.

Generally speaking, models of semantic priming have focused on either automatic or controlled mechanisms contributing to the effect. Controlled mechanisms refer to specific strategies which subjects can intentionally use in attempt to maximize the efficiency of their response to the target, producing either facilitation or inhibition at long prime-target SOAs. Automatic priming, on the other hand, results from the structure, dynamics and connectivity of the semantic storage itself and is allegedly independent of subjects’ strategies. These mechanisms typically contribute to the facilitation of target processing, primarily at short SOAs, without evident inhibitory effects. SA models and attractor networks account mostly for automatic priming.

2.2. The SA account

SA theories explain semantic priming assuming that when the semantic node which represents the prime is activated, the activation spreads automatically to related nodes (for review see Neely, 1991). This wave of activation raises the baseline activity of such nodes and, therefore, reduces the amount of additional activation needed to bring them above threshold when addressed bottom-up (Fig. 1). Hence, SA can facilitate the recognition of targets that follow semantically related primes, as reflected by faster reaction times to such targets. The magnitude of this facilitation is proportional to the strength of the connection between the prime and the target nodes; therefore SA can naturally account for the positive relation between the magnitude of the priming effect and the strength of the semantic connection. Since activation propagates beyond the immediate neighbors of the prime, SA accounts not only for priming based on direct semantic relatedness but also for mediated priming. Moreover, since the level of activation is reduced by distance from origin, SA correctly predicts that the magnitude of the mediated priming effect should be smaller than that of direct priming.

SA theories often do not make a clear distinction between semantic and associative connections and exploit the same mechanism to account for both semantic and associative priming (Lucas, 2000). Since the reciprocal connections between two nodes in the SA network need not be equal, asymmetric priming can readily be produced. However, differences between semantic and associative priming cannot be accounted for by SA theories due to the lack of distinction between these two types of connections. In addition, backward priming, which is evident for items with no prime-to-target relations, could not be easily explained by SA mechanisms because only a unidirectional connection between the corresponding nodes should be present in such cases. Finally, SOA effects on priming are predicted only when assuming that the typical time of activation-spread is in the order of hundreds of milliseconds. If, however, activation spreads very quickly (e.g., Lorch, 1982; Ratcliff & McKoon, 1981), no such effects should exist.
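The SA account above can be illustrated with a toy network. The following is a minimal sketch, not an implementation of any published SA model: the node names, link weights, the `decay` factor, and the two-step spread are all illustrative assumptions. It shows that activation reaching a mediated target (lion to stripes via tiger) is weaker than activation reaching a direct neighbor, and that unequal reciprocal links produce asymmetric activation.

```python
# Toy spreading-activation network; all names and numbers are illustrative.

def spread(links, source, decay=0.5, steps=2):
    """Propagate activation from `source` along weighted, directed links.

    Each hop multiplies the activation by the link weight and by `decay`,
    so activation diminishes with distance from the origin.
    """
    activation = {source: 1.0}
    frontier = {source: 1.0}
    for _ in range(steps):
        next_frontier = {}
        for node, act in frontier.items():
            for neighbor, weight in links.get(node, {}).items():
                passed = act * weight * decay
                # Keep only the strongest activation that reaches a node.
                if passed > activation.get(neighbor, 0.0):
                    activation[neighbor] = passed
                    next_frontier[neighbor] = passed
        frontier = next_frontier
    return activation


links = {
    "lion": {"tiger": 0.8},
    "tiger": {"lion": 0.8, "stripes": 0.6},
    "stork": {"baby": 0.7},   # strong forward association
    "baby": {"stork": 0.1},   # weak backward association
}

from_lion = spread(links, "lion")
direct = from_lion["tiger"]        # one hop from the prime
mediated = from_lion["stripes"]    # two hops, via "tiger"
forward = spread(links, "stork")["baby"]
backward = spread(links, "baby")["stork"]
print(direct, mediated, forward, backward)
```

In this sketch the pre-activation of a target stands in for the reduction in additional input needed to bring it above threshold; reaction times are not modeled.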

2.3. The attractor-network account

Attractor networks account for semantic priming by relying on the fundamental assumption that semantically related concepts have correlated representations (e.g., Masson, 1995; Moss, Hare, Day, & Tyler, 1994; Plaut 1995; Plaut & Booth, 2000). When the prime is presented, the activity pattern of the network begins to converge on its corresponding attractor. Since distributed representations imply, by definition, that neurons are shared among different memory patterns, convergence to the attractor representing the prime necessarily activates some of the neurons included in the memory patterns that represent its related concepts. As a result, when the target is presented and the network begins traveling towards its attractor, fewer neurons will have to change their activation status when the transition is from a prime to a related (correlated) target compared to when it is to an unrelated target. Since the prime pattern constitutes an attractor of the network dynamics, it tends to resist changes in neuronal activation applied by the presentation of the target; therefore, the fewer the neurons which need to change their status during the transition, the less resistance would the transition face and, consequently, the faster the network would converge to the target’s attractor, reflecting its recognition. Hence, neural networks with distributed representations and attractor dynamics can easily account for the acceleration of word recognition in semantic priming experiments1. In addition, if stronger semantic relatedness is interpreted as stronger correlations, the above account can easily explain the dependence of priming on the strength of the prime-target semantic relations.
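The core of this argument, that a transition to a correlated target requires fewer units to change state, can be made concrete with a small sketch. The patterns below are hand-built, hypothetical examples (20 units, 4 active), not the patterns used in the simulations, and the flip count is only a proxy for the resistance the network would face.

```python
# Count how many units must change state when moving from prime to target.

def pattern(active, n=20):
    """Sparse binary pattern of length n with the given active unit indices."""
    return [1 if i in active else 0 for i in range(n)]


def units_to_flip(prime, target):
    """Number of units whose state differs between the two patterns."""
    return sum(a != b for a, b in zip(prime, target))


prime = pattern({0, 1, 2, 3})
related_target = pattern({2, 3, 4, 5})        # shares two active units
unrelated_target = pattern({10, 11, 12, 13})  # shares none

flips_related = units_to_flip(prime, related_target)
flips_unrelated = units_to_flip(prime, unrelated_target)
print(flips_related, flips_unrelated)
```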

Attractor networks, however, struggle to explain several specific features of priming. First, since indirectly related prime-target pairs should have uncorrelated representations (as there are no direct relations between them) mediated priming is not easily accounted for by such models. Second, since the dynamics ends with the convergence on the prime, it places strict limitations on the time window during which SOA can influence priming unless the convergence process is assumed to last for seconds. Third, since priming in such networks depends on correlation, which is a symmetric trait, they cannot easily account for asymmetrical priming. Finally, relying on pattern-correlations as the main explanatory tool, attractor models cannot distinguish between semantic and associative connections.

Some of the above deficiencies have been addressed in various ways. For example, several models (Moss et al., 1994; Plaut, 1995) showed that associative relations can be formed in the network independently of semantic relations. If, while the network is trained, certain concepts are coupled so that they frequently appear in succession, the network may learn this temporal consistency. Later, in a priming simulation, when the same concepts appear as prime and target in the order they were learned, the network would tend to converge on the target faster than when an unassociated pair is used, thus demonstrating associative priming independent of semantic relations. It also allows associative priming to be asymmetric and increase with SOA (Plaut, 1995). However, asymmetry in pure semantic priming, which is still based on correlations in these models, cannot be explained by this mechanism.

An additional attempt to address a weakness of attractor network models of semantic priming regarded the effect of mediated relationships. Some authors postulated that indirectly related word-pairs actually have a weak direct relatedness between them, allowing mediated priming to occur in attractor networks much in the same way as direct priming (e.g., Jones et al., 2006; McKoon & Ratcliff, 1992; Plaut, 1995). This suggestion, however, is challenged by several empirical findings (e.g., Jones, 2012) and its validity is strongly debated in the literature (see, for example, McNamara, 2005). All in all, attractor dynamics seems to lack some of the flexibility that SA dynamics offers and, consequently, falls short in accounting for various priming results which SA models can comfortably address.

3. The current model

Following the traditional separation between levels of processing (e.g., Borowsky & Besner, 1993; Smith, Bentin, & Spalek, 2001), we postulate the existence of three different computational levels, represented by three networks: orthographic, lexical/phonological and semantic. In line with other connectionist models (e.g., Huber & O’Reilly, 2003; McClelland & Rumelhart, 1981; Seidenberg & McClelland, 1989; see also Plaut, 1997) we assume that visual input containing words activates the orthographic layer, where letters are identified. The output of this process is fed into the lexical/phonological network where real words are recognized and fed forward to the semantic network where the word’s meaning is represented. Importantly, these processes are interactive all the way down: the semantic network can influence the lexical network by feedback, and the same holds between the lexical and the orthographic networks.² Top-down effects contribute to semantic priming: When a newly arrived word (the target) is related in some way to a word to which the semantic network is ‘tuned’ (the prime), the lexical network can recognize this target faster than if the prime and the target are not related, because both the bottom-up and the top-down streams contribute to the recognition process. When a neutral stimulus (that is, a stimulus which does not represent a word) is presented to the lexical network, neither the lexical nor the semantic network is activated and no information transfer occurs (see McNamara, 2005, for a similar conceptualization in an interactive-activation model).³

Being concerned here primarily with semantic effects, we fully modeled and simulated only the lexical and semantic networks, as they are directly involved in the manifestation of this phenomenon. All other processes, including the visual input, the activity in the orthographic network and its output were unified to a simple external bottom-up input arriving to the lexical network. The lexical and semantic networks were modeled as attractor neural networks with sparse, binary representations and continuous-time dynamics (see Hopfield 1982, 1984; Tsodyks, 1990). Sparse representations have lately been supported by neurophysiologic evidence and are considered to be the rule in cortical codes (e.g., Waydo et al., 2006).

3.1. The semantic network

3.1.1. Basic properties

The semantic network in our model is a fully connected recurrent network composed of 500 units (‘neurons’). Memory patterns encoded in the network, representing concepts (henceforth labeled ‘concept-patterns’), are binary vectors of size 500, with ‘1’ indicating a maximally active unit, and ‘0’ an inactive one. The representations are sparse (i.e., a small number of units are active in each pattern) with p being the ratio of active units (p ≪ 1, equal for all patterns). When an external input attempts to activate units that are part of a specific memory pattern in the network, the activity of the entire network is driven by the internal connectivity to gradually converge on this pattern. The connectivity matrix between the units assures the patterns’ stability. External inputs are always excitatory.

The units themselves are analog with activity $x_i$ in the range [0,1] and reach binary values when converged on one of the memory patterns. The activity of the i-th unit obeys a logistic transfer function of the form:

$$x_i(t) = \frac{1}{1 + e^{-h_i(t)/T}} \tag{1}$$

Here, $T$ is a gain parameter⁴ and $h_i$ represents a low-pass filtered version of the instantaneous local input to the unit. Following Herrmann, Ruppin, and Usher (1993), the local input obeys the following linear differential equation:

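$$\tau_n \frac{dh_i(t)}{dt} = -h_i(t) + \sum_{j=1}^{N} J_{ij}(t)\, x_j(t) - \lambda \left( \bar{x}(t) - p \right) - \theta + \left[ I_i^{ext}(t) - \theta_{ext} \right]_+ + \eta_i(t) \tag{2}$$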

Here, $\tau_n$ is the time constant of the unit, $x_j$ is the activity of the j-th unit (with $\bar{x}$ indicating the average over all units), $N$ is the number of units (500 in our case), $p$ is the sparseness of the representations mentioned earlier, $\lambda$ is a regulation parameter which maintains stability of mean activation, and $\theta$ is a constant unit-activation threshold, which can also be seen as global inhibition (see Herrmann et al., 1993, for details). The $[\ldots]_+$ symbol indicates a threshold linear function, such that $[x]_+ = 0$ for $x < 0$, and $[x]_+ = x$ otherwise. The use of this function allows the external input to the unit, $I_i^{ext}$, to influence the network activity only if it surpasses some constant external threshold $\theta_{ext}$. Finally, $\eta_i$ is a noise term drawn from a Gaussian distribution with standard deviation $\eta_{amp}$ and temporal correlations $\tau_{corr}$ (see details later). The (maximal) connectivity matrix of the network is determined according to a Hebbian-inspired rule (Tsodyks, 1990):

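$$J_{ij}^{max} = \frac{1}{N p (1 - p)} \sum_{\mu=1}^{P} \left( \xi_i^{\mu} - p \right) \left( \xi_j^{\mu} - p \right) \tag{3}$$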

In (3), $P$ is the total number of memories encoded into the network, and $\vec{\xi}^{\mu}$ is the μ-th memory pattern.
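As an illustration of how such a rule stabilizes stored patterns, the sketch below builds a weight matrix for three small hand-made patterns and checks that the local field they generate is positive on the active units of a stored pattern and negative elsewhere. It assumes the covariance form of the Hebbian rule commonly used for sparse binary patterns, $J_{ij} = \frac{1}{Np(1-p)}\sum_{\mu}(\xi_i^{\mu}-p)(\xi_j^{\mu}-p)$; the network size (20 units), sparseness (0.2), and the patterns themselves are hypothetical and chosen only to keep the arithmetic transparent.

```python
# Covariance-style Hebbian weights for sparse binary patterns (assumed form).

def pattern(active, n=20):
    """Sparse binary pattern of length n with the given active unit indices."""
    return [1 if i in active else 0 for i in range(n)]


def hebbian_weights(patterns, p):
    """J[i][j] = sum over patterns of (xi_i - p)(xi_j - p), divided by N p (1 - p)."""
    n = len(patterns[0])
    norm = n * p * (1 - p)
    return [
        [sum((xi[i] - p) * (xi[j] - p) for xi in patterns) / norm
         for j in range(n)]
        for i in range(n)
    ]


def local_field(weights, state):
    """Recurrent input to each unit when the network sits in `state`."""
    return [sum(w * x for w, x in zip(row, state)) for row in weights]


p = 0.2  # fraction of active units (illustrative; the model assumes p << 1)
patterns = [
    pattern({0, 1, 2, 3}),
    pattern({4, 5, 6, 7}),
    pattern({8, 9, 10, 11}),
]
J = hebbian_weights(patterns, p)
field = local_field(J, patterns[0])

# A stored pattern supports itself: positive field on its active units only.
stable = all((h > 0) == bool(x) for h, x in zip(field, patterns[0]))
print(stable, round(field[0], 3), round(field[4], 3), round(field[19], 3))
```

The sketch omits the threshold, the regulation term, noise, and synaptic depression, so it only shows why the stored patterns are candidate attractors, not the full dynamics.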

Relatedness between concepts is implemented in the model as correlations between memory patterns (reflecting the degree of overlap between them), defined for two patterns, $\vec{\xi}^{\mu}$ and $\vec{\xi}^{\nu}$, as:

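$$C_{\mu\nu} = \frac{1}{N p (1 - p)} \sum_{i=1}^{N} \left( \xi_i^{\mu} - p \right) \left( \xi_i^{\nu} - p \right) \tag{4}$$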

The more closely two concepts are related, the stronger their correlation; unrelated patterns have a correlation near 0.
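A short worked example may help fix the scale of these correlations. The sketch assumes the normalized covariance $\frac{1}{Np(1-p)}\sum_i(\xi_i^{\mu}-p)(\xi_i^{\nu}-p)$ as the correlation measure, which for binary patterns of equal sparseness coincides with the Pearson correlation; the patterns (20 units, 4 active) are hypothetical.

```python
# Correlation between sparse binary patterns of equal sparseness p (assumed form).

def pattern(active, n=20):
    """Sparse binary pattern of length n with the given active unit indices."""
    return [1 if i in active else 0 for i in range(n)]


def correlation(a, b, p):
    """Normalized covariance of two binary patterns with sparseness p."""
    n = len(a)
    return sum((x - p) * (y - p) for x, y in zip(a, b)) / (n * p * (1 - p))


p = 0.2
dog = pattern({0, 1, 2, 3})
cat = pattern({2, 3, 4, 5})      # two shared active units: related
chair = pattern({3, 10, 11, 12}) # one shared unit, close to chance overlap

c_self = correlation(dog, dog, p)
c_related = correlation(dog, cat, p)
c_unrelated = correlation(dog, chair, p)
print(c_self, c_related, c_unrelated)
```

With only 20 units the chance-level overlap is coarse; in a 500-unit network with small p, unrelated patterns would fall much closer to zero.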

3.1.2. Latching dynamics

In most attractor networks which were used to simulate semantic priming, the dynamics lead the network to converge to a certain pattern from which only a new external input could drive it away. A few studies, however, have suggested the possibility of an additional, long-term dynamical process (compared to the relatively short one which governs the convergence phase) based on neuronal adaptation mechanisms. Experimentally, adaptation mechanisms have been assumed to take part in several cognitive functions operating on different levels of processing and a variety of time-scales, ranging from visual mechanisms (e.g., perceptual priming; Huber & O’Reilly, 2003) to lexical mechanisms such as phonetic-to-lexical processing (e.g., the verbal transformation effect; Warren, 1968) as well as lexical-to-semantic processing (as in the semantic satiation effect; Lambert & Jakobovits, 1960; Amster, 1964; Tian & Huber, 2010). Many of these effects were shown to be captured by network models implementing neural adaptation between different computational layers (e.g., Huber & O’Reilly, 2003; see General Discussion for more details). In attractor networks with multiple steady-states, adaptation mechanisms can prevent units from maintaining a constant firing rate and make the network unable to hold its stability for long. Therefore, the network state autonomously leaves the initial attractor and converges to a different one. The process may repeat again and again with the network ‘jumping’ from one attractor to another, simulating what may be seen as free associations. This type of jumping was termed ‘latching dynamics’ by Treves (2005), and was investigated by his group (e.g., Kropff & Treves, 2007; Russo, Namboodiri, Treves, & Kropff, 2008), as well as by others (Herrmann et al., 1993; Horn & Usher, 1989; Kawamoto & Anderson, 1985).
Mechanisms that cause adaptation can range from dynamic thresholds (e.g., Herrmann et al., 1993) to dynamic synapses (e.g., Bibitchkov, Herrmann, & Geisel, 2002). It was also found that there is a greater tendency for network transitions between correlated patterns than between uncorrelated ones. This bias occurs because neurons in attractor networks are typically noisy and hence do not adapt at exactly the same rate. Consequently, when some neurons are already incapable of maintaining their activity due to fast adaptation, other neurons belonging to the same memory pattern may still maintain their activity. As a result, the network leaves the original attractor and settles to a correlated attractor in which the slowly-adapting neurons are still active (see Herrmann et al., 1993).

We implemented adaptation in the semantic network using short-term synaptic depression. This process has been shown to exist in cortical synapses (e.g., Tsodyks & Markram, 1997) and is thought to have several computational advantages (e.g., Pfister, Dayan, & Lengyel, 2010). Other adaptation mechanisms, however, would have led to similar results.

Short-term synaptic depression was modeled according to Tsodyks, Pawelzik and Markram (1998). In line with this model the synaptic efficacy of each unit (i.e., the efficiency of its synaptic transmission to other units) decreases linearly with its activity:

$$\frac{ds_i(t)}{dt} = \frac{1 - s_i(t)}{\tau_r} - U\, x_{max}\, x_i(t)\, s_i(t) \tag{5}$$

Here, $s_i$ is the synaptic efficacy of the i-th unit, $\tau_r$ is the time constant of recovery of the synaptic efficacy and $U$ is the utilization of the available synaptic resources. The term $x_{max}$ refers to a hypothetical maximum firing rate of a unit (e.g., 100 spikes/sec), and was needed because in the original equations (Tsodyks et al., 1998) the firing rate of the units was not bounded by the range [0,1] as it was in our case. The synaptic strength for a given efficacy at a given time is determined as the maximal weight multiplied by the efficacy:

$$J_{ij}(t) = J_{ij}^{max}\, s_j(t) \tag{6}$$

The result of adding short-term synaptic plasticity to the units is that the stability of a pattern cannot be maintained by the network for long. This is because the efficacy of both the excitatory synaptic connections among the active units in the pattern, as well as the inhibitory connections from the active units to silent units, decreases with time. Consequently, after a given time, the network will leave the attractor and converge to a different one. During this time, depleted synapses have the opportunity to recover.
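The depletion described above can be sketched for a single unit held at a fixed activity. The code assumes a depression equation of the form $ds/dt = (1 - s)/\tau_r - U\,x_{max}\,x\,s$, in the spirit of Tsodyks et al. (1998), integrated with a simple Euler scheme. All parameter values (`tau_r`, `U`, `x_max`, `dt`) are illustrative choices, not the values used in the simulations.

```python
# Euler integration of short-term synaptic depression for one unit (assumed form).

def simulate_efficacy(activity, n_steps=2000, dt=0.001,
                      tau_r=0.5, U=0.2, x_max=100.0):
    """Return the trace of synaptic efficacy s for a unit with constant activity.

    activity : unit activity in [0, 1], held fixed for the whole run
    dt, tau_r: seconds; x_max: hypothetical maximal firing rate (spikes/sec)
    """
    s = 1.0  # fully recovered synapse
    trace = []
    for _ in range(n_steps):
        recovery = (1.0 - s) / tau_r
        depletion = U * x_max * activity * s
        s += dt * (recovery - depletion)
        trace.append(s)
    return trace


active_trace = simulate_efficacy(activity=1.0)
silent_trace = simulate_efficacy(activity=0.0)

# Fixed point of the assumed equation: s* = 1 / (1 + tau_r * U * x_max * x)
steady_state = 1.0 / (1.0 + 0.5 * 0.2 * 100.0 * 1.0)
print(active_trace[-1], steady_state, silent_trace[-1])
```

Under these assumptions the efficacy of a maximally active unit drops to roughly a tenth of its initial value, whereas a silent unit keeps full efficacy. Scaling every outgoing weight of an active unit by its efficacy is what eventually undermines the stability of the current attractor.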

3.1.3. Noise

The noise term in our network, ηi, is drawn from a normal distribution with temporal correlations, independently for each unit. Temporal correlations on the order of tens of milliseconds are evident in physiological data and may reflect filtering processes associated with synaptic integration (Zador, 1998). Additionally, synchronous activity of external networks may also lead to temporal correlations in the noise, which may have important computational consequences (Mato, 1999). In our model, the correlations cause occasional ‘drifts’ in the units’ activity consisting of noise-driven sporadic rises or decreases which last for more than a few milliseconds (in contrast to the white noise case, where the lack of temporal correlations allows only instantaneous sporadic changes). These drifts are important since they allow a wide variety of transitions between patterns induced by the latching dynamics. Although typically the network jumps from one memory pattern to a strongly correlated one, it could nevertheless perform occasional transitions to less strongly correlated patterns. If the temporal correlations were set to zero, transitions were almost always from one pattern to the one most correlated to it. In addition, the noise amplitude itself influences the stability of the attractors and, consequently, affects the rate of transitions in the semantic network. Its value was set to allow a transition rate which fits previously published experimental results. Different values can slow or even halt transitions.
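The difference between temporally correlated and white noise can be seen in a small sketch. The text does not specify how the correlated noise was generated; the code below assumes an Ornstein-Uhlenbeck (first-order autoregressive) process, which is one common way to obtain Gaussian noise with a given amplitude and correlation time. The function and parameter names are hypothetical.

```python
import math
import random

def correlated_noise(n_steps, amp, tau_corr, dt, rng):
    """Gaussian noise with stationary std `amp` and correlation time `tau_corr`.

    Uses the exact discretization of an Ornstein-Uhlenbeck process (an assumed
    noise model). Setting tau_corr to 0 yields white noise.
    """
    a = math.exp(-dt / tau_corr) if tau_corr > 0 else 0.0
    scale = amp * math.sqrt(1.0 - a * a)
    eta = rng.gauss(0.0, amp)
    samples = []
    for _ in range(n_steps):
        eta = a * eta + scale * rng.gauss(0.0, 1.0)
        samples.append(eta)
    return samples


def lag1_autocorr(xs):
    """Sample autocorrelation between consecutive time steps."""
    mean = sum(xs) / len(xs)
    num = sum((u - mean) * (v - mean) for u, v in zip(xs, xs[1:]))
    den = sum((u - mean) ** 2 for u in xs)
    return num / den


rng = random.Random(0)
colored = correlated_noise(5000, amp=0.1, tau_corr=0.02, dt=0.001, rng=rng)
white = correlated_noise(5000, amp=0.1, tau_corr=0.0, dt=0.001, rng=rng)

r_colored = lag1_autocorr(colored)  # close to exp(-dt / tau_corr)
r_white = lag1_autocorr(white)      # close to zero
print(r_colored, r_white)
```

A high autocorrelation between successive samples corresponds to the slow ‘drifts’ described above, which last well beyond a single time step.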

3.2. The lexical network

Like the semantic network, the lexical network in our model is fully recurrent and comprises 500 units. We labeled the memory patterns in the lexical network as ‘word patterns’. The equations governing its dynamics are similar to those of the semantic network, with two important changes:

There are no correlations between the word patterns in the lexical network. This is not meant to indicate that there are no lexical relations in natural languages (indeed, such relations obviously exist, at least at the phonological level, e.g. ‘rat’-‘bat’, ‘cable’-‘table’), but merely to ensure that such relations would not add unnecessary noise to our simulations. In fact, typical semantic priming experiments control for such possible confounds by selecting prime-target pairs that bear no lexical/phonological relations within a pair. In any case, this is a simplification which should not influence the average pattern of results.

The lexical network does not implement latching dynamics. This is another simplification, which further reduces the variability in the lexical network to allow emphasizing the effect of the semantic network on lexical convergence. Moreover, from a conceptual point of view, it stands to reason that semantic networks are more associative in nature than lexical networks since, as indicated by association norms (e.g., Nelson et al., 2004), free associations are based more on the meaning of words than on their lexical/phonological properties. In practice, latching dynamics was eliminated in the lexical network by decreasing the rate of the synaptic depression, U, to a very small level. Indeed, although there is still no direct evidence of systematic differences in synaptic depression between brain regions, Tsodyks and Markram (1997) have found that there is a wide variety of synaptic depression rates among neocortical neurons which strongly affects their computational properties.

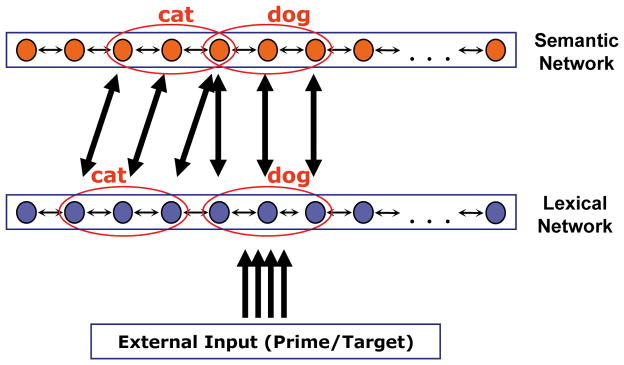

3.3. Connectivity between the networks

The links between the lexical and semantic networks are based on connections between active units in corresponding patterns (Fig. 2). An activated unit belonging to a certain word pattern in the lexical network sends excitatory connections to all active units in the corresponding concept pattern of the semantic network and vice versa. Given the distributed nature of the semantic representations and the correlations in the semantic network, the activation of one word pattern in the lexical network activates, to different extents, all semantically related concept patterns in the semantic network. This partial activation is fed back to the corresponding word patterns in the lexical network and adds to their activation by the bottom-up input from the orthographic network. The bottom-up input is also excitatory and determines to which word pattern the lexical network will converge by influencing only the corresponding active units in this pattern.
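The connectivity rule described above can be sketched as follows. This is a toy illustration under stated assumptions, not the simulation code: the function name, the gain values (chosen only so that the lexical-to-semantic gain is the larger one), and the three tiny example patterns are hypothetical.

```python
import numpy as np

def cross_network_weights(lex_patterns, sem_patterns, g_lex_to_sem=1.0, g_sem_to_lex=0.3):
    """Excitatory links between active units of corresponding patterns.

    lex_patterns: (P, N_lex) binary array; sem_patterns: (P, N_sem) binary array.
    Row k of each array encodes the same word/concept. Gains are assumed values.
    """
    lex = np.asarray(lex_patterns, dtype=float)
    sem = np.asarray(sem_patterns, dtype=float)
    # pair[i, j] counts the word/concept pairs in which semantic unit i
    # and lexical unit j are both active
    pair = sem.T @ lex
    w_lex_to_sem = g_lex_to_sem * pair      # shape (N_sem, N_lex)
    w_sem_to_lex = g_sem_to_lex * pair.T    # shape (N_lex, N_sem)
    return w_lex_to_sem, w_sem_to_lex

# Toy patterns: word patterns are uncorrelated, 'dog' and 'cat' concepts overlap.
lex = np.array([[1, 1, 0, 0, 0, 0],            # 'dog'
                [0, 0, 1, 1, 0, 0],            # 'cat'
                [0, 0, 0, 0, 1, 1]])           # 'table'
sem = np.array([[1, 1, 1, 0, 0, 0, 0, 0],      # dog concept
                [0, 1, 1, 1, 0, 0, 0, 0],      # cat concept (shares two units with dog)
                [0, 0, 0, 0, 0, 1, 1, 1]])     # table concept

w_ls, w_sl = cross_network_weights(lex, sem)
drive = w_ls @ lex[0]          # input to the semantic network when 'dog' is active
support = sem @ drive          # how much of that input falls on each concept pattern
print(support)
```

In this toy case the word ‘dog’ drives the dog concept most strongly, the overlapping cat concept to a lesser degree, and the unrelated concept not at all, which is the graded partial activation described in the text.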

Fig. 2.

The architecture of the model. Patterns representing related concepts are correlated in the semantic network but uncorrelated in the lexical network. Active units of two toy example patterns representing ‘dog’ and ‘cat’ are marked. Connections between networks are from active units of a pattern in one network to all the corresponding active units in the other network. For simplicity, only some of these connections are drawn.

In order to allow some separation in the computational processes within each layer, the semantic and lexical networks respond to external inputs if, and only if, they surpass a certain threshold (see equation 2). Lexical-to-semantic connections are set to be stronger than the semantic-to-lexical connections. The logic for this asymmetry is that in word-recognition experiments, the required behavior is governed by stimulus-dependent processes, which encourage bottom-up information transmission. Top-down processes (like the influence of semantics on lexical access) are not essential and can be readily reduced by scaling down the appropriate connection strength5. This reduction, however, is not absolute and still allows for some top-down semantic influences, such as those causing semantic priming. As a consequence of this asymmetry, the lexical network affects the activity in the semantic network more quickly than the other way around (the threshold could be surpassed more easily due to the stronger connections) and allows it to be influenced by the semantic network only while it is also activated by the bottom-up external input.

In order to further increase the independence of each layer, the lexical-to-semantic connections are also subject to synaptic depression with a slow recovery time (for a similar approach see Huber & O’Reilly, 2003). This causes the bottom-up influence of the lexical network to diminish after a typical time interval, letting the semantic network engage in latching without further disturbance (until a new bottom-up external input arrives and the lexical network converges to a new pattern). Nevertheless, we assume only minimal suppression of semantic-to-lexical connections, as these links are, as described above, weak in the first place. In addition, the bottom-up external input to the lexical network is modeled as constant for as long as a word is assumed to be visible, and diminishes abruptly when the visual word disappears.

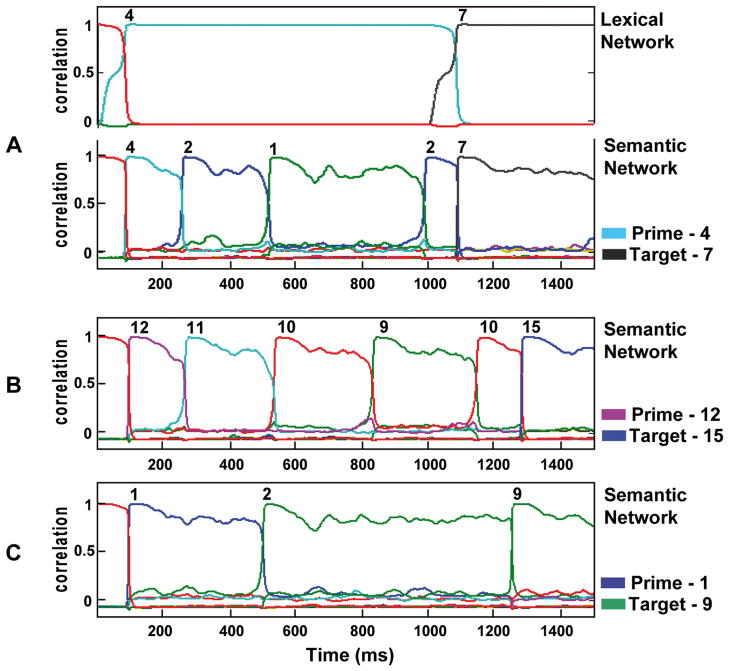

3.4. Basic behavior of the lexical and semantic networks

Fig. 3 shows examples of single-trial activations of the network in a typical semantic priming simulation. The correlation of each network’s activation pattern with each of its stored patterns (including the real memory patterns and the neutral one) over the course of a trial is presented in that figure in different colors, and convergence to a specific pattern is indicated by its number appearing on top. The lexical network follows the external input by converging to the corresponding memory pattern and remaining stable until a new input arrives. In contrast, the semantic network converges to the appropriate memory pattern, only to jump to other attractors in a serial manner, hence presenting latching dynamics. When a new external input arrives, the semantic network stops its transitions and quickly converges to the corresponding new memory pattern a little after the lexical network has done so (its reaction is much quicker than the lexical network’s due to the strong lexical-to-semantic connections).

Fig. 3.

Correlation of the network state with its different memory patterns as a function of time. Each pattern is indicated by a line with a different color (not all correlation lines are visible at all times, as often they coincide). Moment of convergence to a specific pattern is indicated by the corresponding pattern number above the appropriate line. (A) Typical dynamics of the semantic and lexical networks. The semantic network presents latching dynamics, while the lexical network is stable. (B), (C): More examples of the dynamics of the semantic network, showing the stochasticity of transitions.

4. Simulation 1

This simulation examined the free dynamics of the semantic network and its relation to SA.

4.1. Method

The simulation was written in Matlab 8a and run on an Intel Core 2 Quad CPU Q6600 with 2.4 GHz and 2 GB of RAM. In all the numeric simulations, one numeric step represented 0.66 ms.

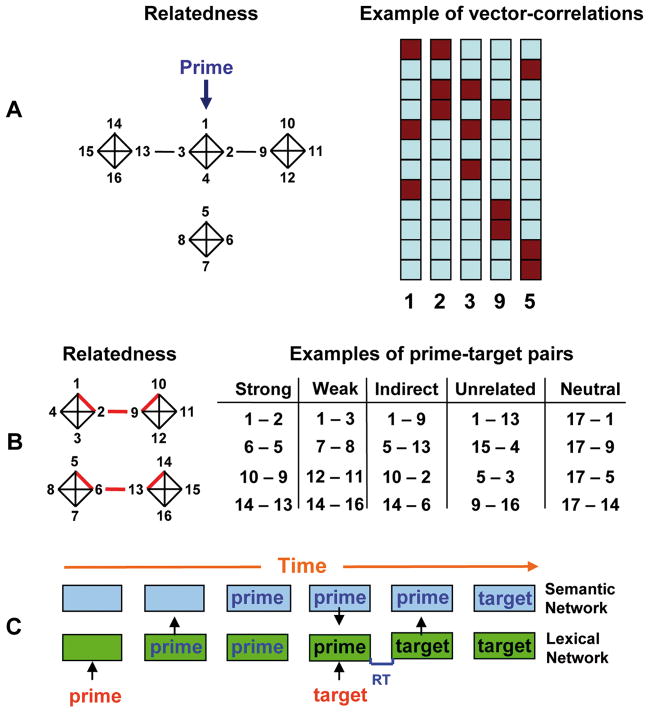

4.1.1 Encoded patterns

Seventeen different memory patterns were encoded in the semantic and lexical networks. Within each network, these patterns comprised binary vectors with equal mean activity and a very sparse representation6. In the semantic network, the basic correlations between patterns were set a priori as follows (Fig. 4A): Four groups, each containing 4 patterns, formed ‘semantic neighborhoods’ (patterns 1–4, 5–8, 9–12 and 13–16), so that each pattern in a neighborhood was correlated with the other patterns in its neighborhood and, with few exceptions (see below), no correlations existed between the neighborhoods7. All correlations within a semantic neighborhood were equally strong. The 17th memory pattern was a ‘baseline’ pattern to which the network was initialized at the beginning of each trial, and was not correlated with any of the other patterns. This baseline ensured that the network would not readily converge to one of the ‘real’ patterns when the trial begins, and its stability allowed the network to maintain activity until the prime began influencing it (see Rolls, Loh, Deco, & Winterer, 2008 for another example of modeling baseline activity as a stable state of the system).
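The basic neighborhood structure can be sketched as follows. The sketch assumes that within-neighborhood correlations are produced by a small set of active units shared by all members of a neighborhood; the function name and the numbers of shared and unique active units are hypothetical, chosen only to give sparse patterns with equal mean activity in a 500-unit network.

```python
import numpy as np

def build_patterns(n_units=500, n_groups=4, group_size=4, n_shared=5, n_unique=15, seed=0):
    """Sparse binary patterns: equal activity, equal overlap inside each neighborhood.

    Returns an array of shape (n_groups * group_size + 1, n_units); the last row
    is the baseline pattern, which shares no active unit with any other pattern.
    """
    n_active = n_shared + n_unique
    needed = n_groups * (n_shared + group_size * n_unique) + n_active
    if needed > n_units:
        raise ValueError("not enough units for non-overlapping assignments")
    rng = np.random.default_rng(seed)
    free = list(rng.permutation(n_units))   # each unit is handed out at most once

    def take(k):
        return [free.pop() for _ in range(k)]

    patterns = []
    for _ in range(n_groups):
        shared = take(n_shared)             # units common to the whole neighborhood
        for _ in range(group_size):
            p = np.zeros(n_units, dtype=int)
            p[shared + take(n_unique)] = 1
            patterns.append(p)
    baseline = np.zeros(n_units, dtype=int)
    baseline[take(n_active)] = 1
    patterns.append(baseline)
    return np.array(patterns)

patterns = build_patterns()
corr = np.corrcoef(patterns)                # 17 x 17 matrix of pattern correlations
print(corr[0, 1], corr[0, 4], corr[0, 16])  # same neighborhood, other neighborhood, baseline
```

Note that patterns without any shared active unit still have a small negative Pearson correlation in a finite network, so "uncorrelated" holds only approximately in a construction of this kind.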

Fig. 4.

(A) Patterns used in Simulation 1. The left column shows the semantic organization of patterns by neighborhood. The right column presents a simplified illustration of the relatedness as represented by vector-correlations in the network for several representative concepts (brown/light blue colors representing values of 1/0). (B) Patterns used in Simulation 2. The left column shows the semantic organization of patterns by neighborhood, with weak relatedness indicated in black lines and strong relatedness by red lines. The right column presents examples of prime-target pairs used in the simulation trials, organized by relatedness condition. (C) Example of expected chain of events in a semantic priming simulation. Lexical network converges to the prime pattern, followed by convergence of the semantic network. When target appears, the lexical network converges to the appropriate target pattern under the influence of the semantic network. No latching dynamics is assumed in the example.

To produce indirect relatedness (in addition to direct relatedness) in the semantic network, we modified the above basic encoding structure so that a correlation was introduced between a pattern in one neighborhood and a pattern in a different neighborhood. This correlation was based on other units than the ones forming the correlations within each neighborhood. For example, whereas patterns 1–4 formed a semantic neighborhood and patterns 9–12 formed a different semantic neighborhood, we slightly changed the encoding of patterns 2 and 9 to introduce a correlation between them (see the vector examples in Fig. 4A). Consequently, patterns 1 and 9 became indirectly related (mediated by pattern 2). Similarly we correlated pattern 3 with pattern 13 which resulted in an indirect relatedness between patterns 1 and 13.

In the lexical network, all 17 patterns were unrelated to each other. The 17th pattern was, again, the initial state for the network, and was not linked through top-down or bottom-up lexical-semantic connections to any of the 17 patterns in the semantic network (thus forming a ‘neutral’ pattern; see Simulation 2 for a more extensive discussion of “neutral” patterns).

4.1.2 Experimental procedure

The simulation comprised 100 trials. Each trial began with the lexical and semantic networks converged on their respective neutral patterns. An external input (always pattern 1) was presented to the lexical network immediately after the trial began. This input was a binary vector corresponding to the appropriate memory pattern of the lexical network (1’s in the to-be-activated units, 0’s in the rest). One hundred milliseconds after trial onset the external input was removed and the network’s activity followed the dynamic equations without further interference, for a total period of 3000 simulated milliseconds (4500 numeric steps). No additional input was presented in the current simulation. Correlation of the momentary state of each network with each pattern, for each time point along each trial, was stored and averaged offline.

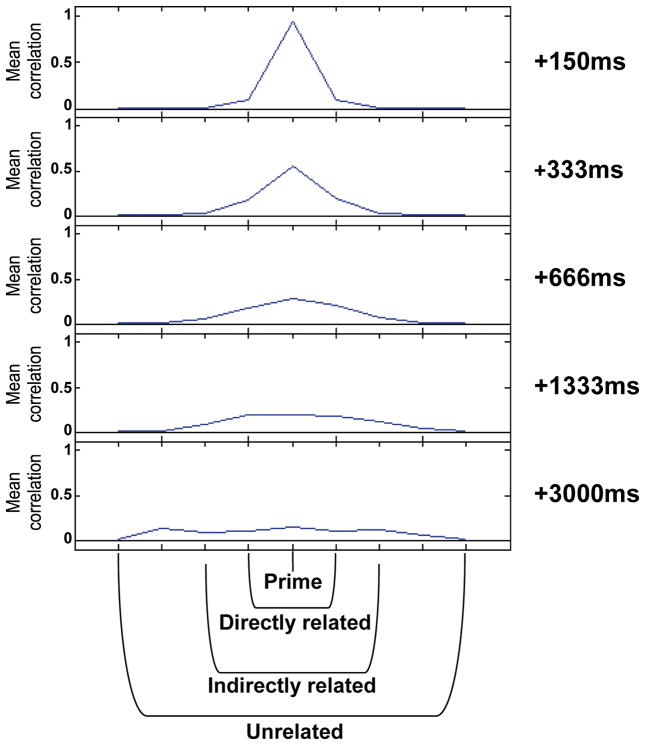

4.2 Results

The mean correlation between the state of the semantic network and each of its encoded memory patterns was computed for each time point over trials. Fig. 5 presents these correlations for five different time points after the prime onset. On the x-axis, the middle point shows the mean correlation with the actually presented prime concept, the two adjacent points to left and right of the center show mean correlations with two of the neighbor patterns (patterns 2 and 3), the two points further to the right and left show the correlation with two indirectly related concepts (patterns 9 and 13), and the two most extreme points show the correlation of the network state with two unrelated concepts (patterns 5 and 6). As can be seen in this figure, the mean correlations followed the principles of spreading activation. Initially, the concept represented by the external input has the strongest activation (correlation), its directly related concepts are activated to a smaller degree, and concepts not related to it are not activated at all. With time, as semantic transitions occur (due to the latching dynamics), the mean activation of the initial concept decreases, while the related concepts are activated more and more. Indirectly related concepts show some activation, with a peak rising later. Unrelated concepts receive no activation at all throughout this period. After 3000 simulated milliseconds, the mean correlation with each of the network’s patterns is distributed more or less equally, corresponding to a nearly deactivated state of the whole network (which can be seen as a statistical implementation over aggregated trials of the dissipation of activation with time which characterizes SA theories).

Fig. 5.

Spreading activation behavior of the semantic network in Simulation 1. Mean correlation of various memory patterns with the state of the network is displayed at five points in time after prime onset. Middle of the x axis corresponds to the prime pattern (pattern 1), to its right and left are two of its related patterns (patterns 2 and 3), and next are the indirectly related patterns (patterns 9 and 13). Further to the right and left are double-indirect patterns (patterns 12 and 16), and on the edges are two unrelated patterns (patterns 5 and 6). Because of finite size effects of the network, unrelated patterns do not yield an exact 0 correlation, and the displayed results are corrected for such bias. The mean correlation presents a ‘spreading out’ behavior: it is initially concentrated on the prime, then spreads to the related and indirectly related patterns, and is finally distributed evenly between many patterns. In very large networks, the final stage is expected to be distributed between many more patterns, yielding an insignificant correlation to each of them, a situation resembling the activation dying out.

5. Simulation 2

As elaborated in the introduction, SA and attractor networks have often been contrasted using the semantic priming paradigm. Whereas semantic priming in SA stems from the existence of connections between related concept nodes, attractor networks mostly attribute priming effects to correlations between patterns. These two explanations differ, among other things, in their prediction about mediated priming: Since mediated items are not related directly, they should not be correlated; therefore, simple attractor networks (without latching dynamics) are unable to account for mediated priming results. SA, on the other hand, allows activation to spread for long distances in semantic space and, therefore, predicts mediated priming. In the present simulation, we tested whether our model yields basic semantic priming effects and how different prime-target relations, including mediated relations, modulate priming. In particular, we explored whether the pattern of priming effects in the simulation corresponds with findings previously reported in human studies.

5.1. Method

The general methods were similar to those used in Simulation 1.

5.1.1. Strong vs. moderate and direct vs. indirect relations

To produce varying degrees of direct relatedness, we changed the encoding of two specific patterns in each neighborhood (e.g., patterns 1 and 2), so that their correlation was higher than all the others within the neighborhood (e.g., the correlations between patterns 1 and 3, 1 and 4, 2 and 3, 2 and 4). Independent units were used to produce this additional correlation so that it would not interact with any already-encoded correlation. This procedure resulted in two levels of relatedness within a neighborhood.

Mediated priming was produced as in Simulation 1. Specifically, we introduced a correlation between patterns 2 and 9, and between patterns 6 and 13. Consequently, patterns 1 and 9 and patterns 5 and 13 became indirectly related (mediated by patterns 2 and 6, respectively; see Fig. 4B).

5.1.2. Experimental procedure

Each trial consisted of the presentation of two inputs, a prime followed by a target, each being one of the pre-encoded lexical patterns. The relatedness between the prime and the target could be strong, moderate, indirect, or they could be unrelated. For example, since patterns 1 and 2 were a strongly correlated pair within the semantic neighborhood of patterns 1–4, and pattern 2 was also correlated outside its neighborhood to pattern 9, then presenting the patterns 1 and 2 as prime and target, respectively, formed a strong and directly related condition, 1 and 3 a moderate and directly related condition, 1 and 9 an indirectly related condition, and 1 and 16 an unrelated condition. (See examples for all experimental conditions in Fig. 4B). In addition, a neutral condition was presented with the prime being pattern 17 and the target being any of the ‘real’ word-patterns (1–16). Since no connections exist between the neutral patterns of either network, this condition was, in fact, equivalent to not presenting the prime at all8. Primes and targets were randomly chosen from within the possible combinations for each condition, with 100 trials in each condition.

Each trial started with the presentation of an external input to the lexical network which served as ‘prime’. After 100 simulated milliseconds this external input was removed, and a new external input corresponding to the target was presented to the lexical network with 250ms SOA (cf., Balota & Lorch, 1986). The reaction time to a target was measured from its onset until the convergence of the lexical network (‘correct’ convergences to the target attractor were always achieved). Convergence was defined as the network’s state reaching a 0.95 correlation with the relevant memory pattern. Fig. 4C presents an example of this chain of events in a non-neutral trial (for simplicity, no semantic transitions were assumed in this example).
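The reaction-time measure can be sketched as follows, assuming that the network state is stored as one row per numeric step and that correlation means the Pearson correlation between the state vector and a stored pattern. The function names and the array layout are hypothetical; the 0.95 criterion and the 0.66 ms step are taken from the text.

```python
import numpy as np

def pattern_correlation(states, pattern):
    """Pearson correlation of each row of `states` (T, N) with one pattern (N,)."""
    states = np.asarray(states, dtype=float)
    pattern = np.asarray(pattern, dtype=float)
    s = states - states.mean(axis=1, keepdims=True)
    p = pattern - pattern.mean()
    denom = np.linalg.norm(s, axis=1) * np.linalg.norm(p)
    # rows with zero variance get a correlation of 0 instead of a division error
    return (s @ p) / np.where(denom > 0, denom, np.inf)

def reaction_time_ms(states, target, onset_step, dt_ms=0.66, threshold=0.95):
    """Time from target onset to the first step at which the correlation with
    the target pattern reaches `threshold`; None if it never does."""
    corr = pattern_correlation(np.asarray(states)[onset_step:], target)
    hits = np.flatnonzero(corr >= threshold)
    return None if hits.size == 0 else float(hits[0] * dt_ms)

# Toy trajectory (not simulation output): the state sits on a prime pattern,
# then moves linearly toward the target pattern after target onset.
prime = np.array([1, 1, 0, 0, 0, 0, 0, 0], dtype=float)
target = np.array([0, 0, 1, 1, 0, 0, 0, 0], dtype=float)
ramp = np.linspace(0.0, 1.0, 11)[:, None]
states = np.vstack([np.tile(prime, (10, 1)), (1 - ramp) * prime + ramp * target])
print(reaction_time_ms(states, target, onset_step=10))
```

Measuring from the target's onset step rather than from trial onset keeps the measure independent of the SOA.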

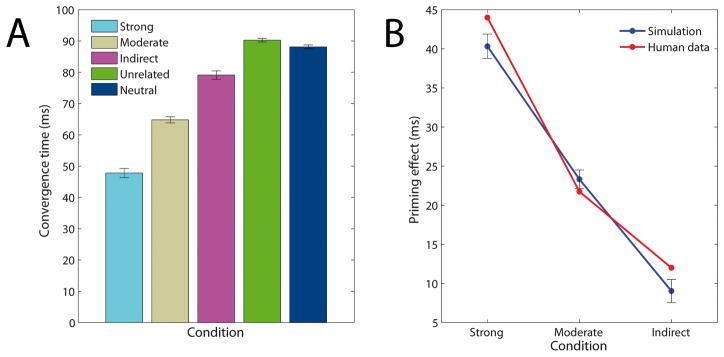

5.2. Results

The lexical network’s Reaction Time (RT) was computed separately for each prime-target relatedness condition (Fig. 6A) 9. Reaction-times were shortest for the strongly related pairs (M = 47.81 simulated milliseconds), followed by the moderately related pairs (M = 64.81ms), the indirectly related pairs (M = 79.1ms) and the unrelated and neutral pairs (M = 90.27ms and M = 88.13ms, respectively). Fig. 6B presents the main facilitation effects (relative to the neutral condition) compared to data from human experiments (taken from Lorch, 1982; Balota & Lorch, 1986). Mirroring the empirical findings in humans, the priming magnitude followed a gradient: it decreased as the semantic relatedness weakened and as the relation became less direct.
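The facilitation effects follow directly from the condition means reported above, as the difference between the neutral mean and each condition mean. The short calculation below uses only those reported means; the variable names are illustrative.

```python
# Mean convergence times (simulated ms) as reported in the text.
mean_rt_ms = {
    "strong": 47.81,
    "moderate": 64.81,
    "indirect": 79.1,
    "unrelated": 90.27,
    "neutral": 88.13,
}

# Facilitation relative to the neutral condition (positive = faster than neutral).
facilitation_ms = {
    cond: round(mean_rt_ms["neutral"] - rt, 2)
    for cond, rt in mean_rt_ms.items()
    if cond != "neutral"
}
print(facilitation_ms)
# {'strong': 40.32, 'moderate': 23.32, 'indirect': 9.03, 'unrelated': -2.14}
```

The ordering strong > moderate > indirect reproduces the gradient described above, while the unrelated condition differs from neutral by about 2 ms.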

Fig. 6.

(A) Mean convergence time of the lexical network for the various conditions of Simulation 2. (B) Facilitation effects in Simulation 2 compared to human experiments. Human results taken from Lorch, 1982 (table 2, 150ms and 300ms SOAs; table 3, 200ms and 400ms SOAs. High and low dominance exemplars representing strong and moderate connections); and Balota and Lorch, 1986 (table 2, 250ms SOA only). Error bars represent ±1 standard error of the mean.

5.3. Discussion of simulations 1 and 2

The results of the first simulation showed how an attractor neural network with latching dynamics can implement spreading activation on average over trials. This pattern is manifested in the semantic network and it is based solely on its characteristics, with no dependence on the characteristics of the lexical network (or even its mere existence). The only condition for activation to ‘spread’ from a particular network pattern to its ‘neighbors’ is that the input to the semantic network would diminish and let the latching dynamics run freely (as was implemented in the present model by postulating short-term synaptic depression in lexical-to-semantic connections). The pace at which the activation spreads depends on the rate of transitions from one attractor to another during the latching dynamics: the faster the transitions, the faster the activation spreads.

As mentioned above, latching may be achieved by various mechanisms. Yet, the general characteristics of the SA as revealed by the present simulation did not depend on the specific adaptation mechanism which has been implemented. Rather, the most important factor which determines where and how quickly the activation spreads is the correlation between the patterns. Transitions occur more frequently between correlated patterns and within a semantic neighborhood than between uncorrelated patterns contained in different neighborhoods (Fig. 3). In other words, the correlations between concept patterns play the role played by the connections’ strength between various word nodes in the original SA theory. However, the probability of the network to jump from one pattern to another is not simply determined by the correlation strength between two concepts. Rather, this probability is determined by the relative strengths of the correlations that a particular concept pattern has with all the other concept patterns. For example, if one pattern (‘pattern A’) has a 0.1 correlation with another pattern (‘pattern B’) and no correlations with any other pattern, there is a high probability that the network would jump from pattern A to pattern B. On the other hand, if pattern A is also correlated with another pattern, C, with a 0.2 correlation, the most probable jump would be from A to C rather than from A to B; the probability of the jump from A to B is reduced even though the strength of the corresponding correlation is the same. This characteristic of our model resonates with Anderson’s model of spreading activation (1983), in which the connection strengths between nodes are scaled by the total amount of connections (a hypothesis which is essentially required to account for other linguistic phenomena, such as the fan-effect; see Anderson, 1974). 
It is also in line with some spreading activation models in which the connection strengths are determined by free association norms (e.g., Spitzer, 1997). In such models the connection strength from dog to cat, for example, is determined by the probability that cat will be the first association of dog in a free association task. The total strength of connections from dog to all of its associates must sum up to 1 (as it represents probability) and, therefore, each of the connections is influenced by all the others. This is exactly what should be expected from the way SA is implemented in our model, which, being expressed on average over trials, provides, in fact, a natural scaling for the connections’ strength. Indeed, one could see the activity of nodes in the original SA model as an average manifestation of both the correlations between the patterns in our semantic network and the probability of associative occurrences obtained from association norms. As will be demonstrated in Simulation 3, the probability of a transition from one pattern to another in our model resembles, in principle, the probability of a corresponding association between two concepts in free association norms.

There is, however, an important distinction between the average performance of the semantic network in our model and that of the original spreading activation model. In our network, spreading is temporarily interrupted by relaxation periods which correspond to the network reaching an attractor. In other words, activation does not spread in a monotonic manner as in the original SA model, but, rather, in jumps which reflect the dynamical transitions from one attractor to another. This implies that at very short SOAs, the ‘spreading’ is actually entirely dependent on the network’s correlations as it relaxes on the prime’s attractor and may, therefore, seem instantaneous with respect to the prime’s immediate neighbors (cf., Plaut, 1995). Only at longer SOAs can transitions participate in the dynamics and allow spreading to carry on. Thus, immediate and distant (i.e., indirect) neighbors in our model have different status in terms of the activation spread, in contrast to the classical SA in which no such distinction exists.

The results of the second simulation demonstrated how the dynamics in the semantic network affects the convergence time of the lexical network. As can be seen in Fig. 6, mimicking semantic priming effects in humans, the time needed for the convergence of the lexical network on the target’s word pattern is shorter if prior to its appearance the semantic network converged on a concept pattern that is correlated (i.e., related) to that target’s concept. This result is achieved because a number of units that are activated in the semantic network are connected to the units that would be activated by the target pattern in the lexical network, and this partial top-down pre-activation facilitates the convergence of the lexical network on the target. Since the magnitude of facilitation is proportional to the number of shared units, the more strongly the prime and the target concept patterns are correlated in the semantic network, the faster the lexical network ‘recognizes’ the target (compare the turquoise and the beige bars in Fig. 6A). Unrelated prime and target patterns do not share any active units and, indeed, did not facilitate each other.

In addition to direct priming, mediated priming effects were also apparent in Simulation 2. These effects stemmed from trials in which the semantic network made a transition from the prime’s pattern to another pattern before target onset. Often, this new pattern is correlated both to the prime and to the upcoming target (as in the example of lion being related to tiger, which is related to stripes). Consequently, when the target appears, the semantic network will already have converged to a pattern correlated to it (even if the original prime is not). This latter correlation yields the observed mediated priming effect.

Since the semantic-to-lexical connections in our model are purely excitatory, we expected only facilitatory effects and, therefore, the unrelated and neutral targets, both representing patterns uncorrelated to the prime, should have yielded equivalent convergence times. This was, indeed, the general observed result of the simulation: Although the unrelated and the neutral conditions were not identical 10, the difference between these two conditions was very small, an order of magnitude smaller than the facilitation effect (Fig. 6A). Therefore, our results, based on our implementation of neutral trials, are in agreement with the view that automatic priming mechanisms primarily contribute to facilitation of related targets.

6. The emergence of asymmetric priming effects and their modulation by SOA

In the previous simulations we showed how the combination of correlation between concept patterns and synaptic adaptation in the semantic network yields a spreading-activation-like dynamics, allowing for both direct and mediated priming to emerge. Next, we will show how these two mechanisms combine in sophisticated ways to account for previously reported asymmetry in associative relations. We will demonstrate how this asymmetry is modulated by SOA and how it can be related to the difference between semantic and associative priming, as well as to backward priming.

There is an important distinction between the correlation of two patterns and the probability of transitions between them. This can be shown by a simple example: Imagine that pattern B is correlated to the same degree to both patterns A and C, whereas neither A nor C is correlated to other patterns. If the network rests on pattern A and then jumps, it will almost definitely jump to pattern B, its only correlated pattern. On the other hand, if it rests on pattern B, jumps to A and C would be equally probable. Therefore, although A and B are symmetrically correlated, the transition probabilities from one of them to the other are asymmetric. This example demonstrates the fundamental characteristic of our network’s dynamics: The transition probabilities from one pattern to another are influenced by the entire structure of pattern correlations encoded in the network11.
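The A, B, C example can be made concrete with a deliberately simplified rule in which the probability of jumping from one pattern to another is proportional to their correlation, normalized over all other patterns. This proportional rule is an assumption used only for illustration; in the model itself the transition probabilities emerge from the noisy latching dynamics, so "almost definitely" becomes exactly 1 here. The function name and the correlation value of 0.2 are hypothetical.

```python
import numpy as np

def transition_probabilities(corr):
    """Row-normalize a symmetric correlation matrix into jump probabilities.

    Assumed rule: P(i -> j) is proportional to corr[i, j] for j != i;
    negative correlations are treated as zero.
    """
    c = np.array(corr, dtype=float)
    np.fill_diagonal(c, 0.0)                 # a jump always leaves the current pattern
    c = np.clip(c, 0.0, None)
    row_sums = c.sum(axis=1, keepdims=True)
    return np.divide(c, row_sums, out=np.zeros_like(c), where=row_sums > 0)

labels = ["A", "B", "C"]
# B is equally correlated with A and C; A and C are uncorrelated with each other.
corr = np.array([[1.0, 0.2, 0.0],
                 [0.2, 1.0, 0.2],
                 [0.0, 0.2, 1.0]])
P = transition_probabilities(corr)
for i, src in enumerate(labels):
    for j, dst in enumerate(labels):
        if i != j:
            print(f"P({src} -> {dst}) = {P[i, j]:.2f}")
```

Although the correlation matrix is symmetric, the resulting jump probabilities are not: under this rule the jump from A to B has probability 1, whereas the jump from B to A has probability 0.5.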

In priming experiments, the transition probabilities can influence reaction time. When the semantic network frequently jumps to the pattern representing the upcoming target (before its actual appearance), the mean reaction time to the target should decrease considerably (and priming effects should consequently increase) since many more units representing the target concept contribute to facilitating the convergence of the lexical network. If, however, transitions are mostly to a pattern other than the target, reaction times may not change or may even increase, depending on where the network jumped to. Asymmetry in priming may therefore arise as a function of the asymmetry in jumps. This asymmetry should interact with SOA: With short SOAs, there are fewer semantic transitions and therefore the asymmetry in probabilities has less impact. With longer SOAs, semantic transitions are probable and, consequently, asymmetry emerges.

A common finding in the priming literature is that the size of the priming effect is modulated by SOA to different degrees, depending on the prime-target relatedness type. Whereas the priming effect for associated pairs increases with SOA (Lorch, 1982; de Groot, 1984, 1985; de Groot, Thomassen, & Hudson, 1986; Neely, 1991), semantic priming for related but unassociated pairs is less influenced by SOA, and may even decrease for backward-related pairs (Lucas, 2000; Kahan et al., 1999). Several authors suggested that this difference between the SOA effects on associated and unassociated pairs may be attributed to episodically learned connections between linguistic items based on co-occurrence. Episodically learned associations could be formed either between concepts in the semantic system (e.g., Herrmann et al., 1993; Silberman, Miikkulainen, & Bentin, 2005) or between words in the lexical system (Fodor, 1983; Lupker, 1984). These connections were suggested to affect priming mostly at long SOAs assuming that time allows more efficient processing of the prime, leading to a greater impact of the learned prime-target associations (Plaut, 1995). Alternatively, other authors explained the SOA-dependent increase in priming between associated pairs relying on controlled mechanisms, which supposedly take time to initiate and therefore contribute to priming only at long SOAs (e.g., Neely, 1977; Neely, 1991).

In our model, SOA is expected to influence the magnitude of priming since transitions in the network cause the focus of semantic activation to change, in a given trial, from the prime to its surrounding neighborhood. This SOA-dependency occurs without assuming any particular connectivity beyond the correlation structure of the encoded patterns and, most importantly, is expected to differ for symmetrically and asymmetrically associated pairs because of their different transition probabilities. The difference between associative and semantic priming may therefore be a product of such asymmetry.

7. Simulation 3

The goal of this simulation was to explore how a more complex structure of network correlations affects the transitional probabilities of the network and the priming effect, as a function of SOA. The correlation structure was determined by the association norms of four specific concepts within one semantic neighborhood (Nelson, McEvoy, & Schreiber, 1998) and was designed to mimic their mutual free association probabilities. First, we showed how such probabilities can be roughly implemented in the semantic network despite its small size and small variety of correlation strengths. Second, we verified that several known characteristics of association response-times (e.g., Schlosberg & Heineman, 1950; Goldstein, 1961) are roughly reproduced by the transition latencies in our model. Third, we examined the priming effect and its modulation by SOA for prime-target pairs that differ in their forward and backward transition probability.

7.1. Method

7.1.1. Encoded patterns

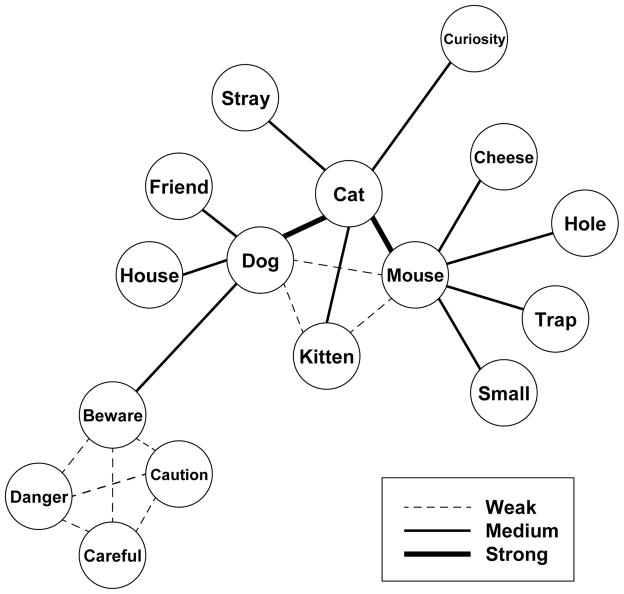

The present simulation focused on a semantic neighborhood consisting of patterns analogous to four animal concepts: dog, cat, mouse and kitten. Based on the human-derived association norms, the “cat” pattern was strongly correlated to both “dog” and “mouse”, and moderately correlated to “kitten”. All other correlations within the neighborhood were weak. Each animal concept, with the exception of kitten, also had idiosyncratic moderate correlations with concepts outside the neighborhood (kitten was the exception because, according to the association norms, it hardly has any significant forward or backward connections outside the neighborhood). Dog, in particular, had an idiosyncratic correlation with the concept beware, which belonged to another semantic neighborhood consisting of beware, danger, caution, and careful, all weakly correlated among themselves. The total number of memory patterns encoded in the network (including the baseline pattern) was 17, as in previous simulations. A summary of the structure is depicted in Fig. 7.

Fig. 7.

Structure of the semantic memory used in Simulation 3. Sixteen memory patterns representing 16 different concepts were used, with three degrees of correlation strengths. Width of the connecting lines represents the strength of the correlations.

7.1.2. Experimental procedure

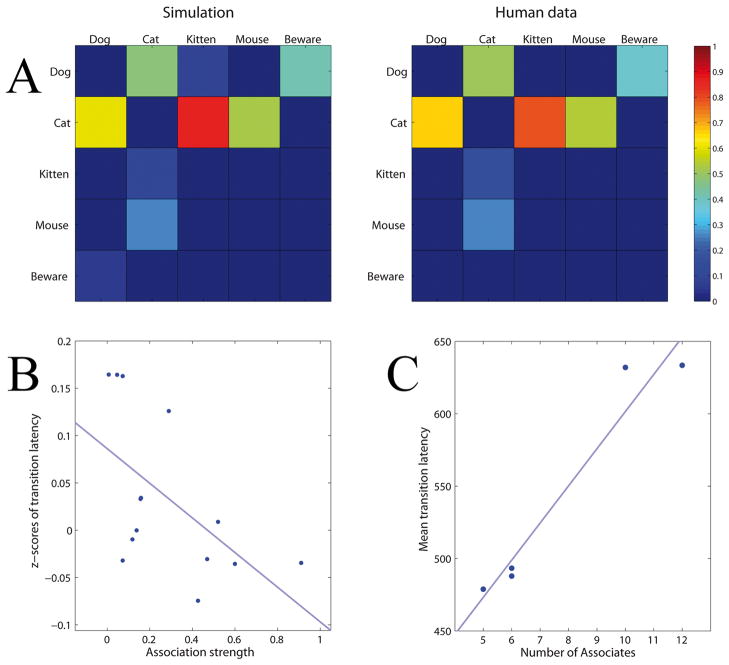

First, we assessed the probability of associations between the animal concepts as reflected by the first transition from each stimulus. This assessment was based on 4000 trials in which each of the four animal concepts was presented 1000 times to the lexical network as a single stimulus. Trial duration was one second, to increase the chance of at least one transition in the semantic network. Likewise, 1000 trials were run with the concept beware, to examine its tendency to jump to the dog concept. The observed probabilities of these transitions are presented in a color-bar graph next to the corresponding values from the human association norms (Fig. 8A). Although not identical, the transition probabilities of the network closely resembled the trends observed in the human data. Specifically, the concept patterns corresponding to dog and cat were associates of each other, while dog was also a moderate associate of beware, but not vice versa. The concept cat had, in addition, some moderate associations with kitten and mouse. Of particular interest, the association to kitten was dramatically asymmetric, with kitten leading to cat almost nine times more often than the other way around.
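The tallying step described above can be sketched as follows. This is a minimal illustration, not the simulation code used in the study: the function name, the outcome encoding, and the trial counts in the example are all hypothetical, and normalizing by the total number of trials (rather than only by trials in which a transition occurred) is an assumption.

```python
from collections import Counter

def first_transition_probabilities(outcomes):
    """Estimate first-transition probabilities for a single cue.

    `outcomes` has one entry per trial: the name of the first pattern the
    network moved to, or None if no transition occurred within the trial.
    Probabilities are normalized by the total number of trials (assumption).
    """
    n_trials = len(outcomes)
    counts = Counter(o for o in outcomes if o is not None)
    return {target: n / n_trials for target, n in counts.items()}

# Made-up outcomes for 1000 hypothetical trials with one cue.
# These numbers are for illustration only and are NOT results from the paper.
example_outcomes = ["cat"] * 620 + ["dog"] * 40 + ["mouse"] * 30 + [None] * 310
probs = first_transition_probabilities(example_outcomes)
print(probs)
```

Running the same tally for a cue and for its associate gives the forward and backward estimates whose ratio expresses the asymmetry of a pair.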

Fig. 8.

Behavior of the model using associative and non-associative pairs in Simulation 3. (A) First-transition probabilities of the five main concepts in the network. Probabilities are indicated by colors ranging from 0 (dark blue) to 1 (red). Columns represent the presented words and rows represent their associations. The simulation results are compared to data taken from human association norms (Nelson, McEvoy, & Schreiber, 1998). (B) Associative strength (measured as frequency of occurrence) vs. standardized transition latency of first-transitions in the network. (C) Number of associates of the five main concepts in the network vs. their mean transition latency.

Next, we calculated the average transition latency of each of the associations produced by the network by computing the time from the appearance of the stimulus until the first transition had concluded, separately for each association. Following this stage, priming was simulated as in Simulations 1 and 2, with a target following a prime at a pre-designated SOA. Several representative prime-target pairs within the animal neighborhood were used, including pairs with high and pairs with low mutual transition probabilities. In addition, a couple of pairs consisting of one concept within the neighborhood and one concept outside it were used. For some of the strongly associated prime-target pairs, priming simulations of the corresponding backward direction (where the prime and target switched roles) were also conducted. Finally, neutral trials (with targets chosen randomly from the memory patterns) were conducted for comparison with the related trials. Each priming pair was run for 100 trials at each of 7 SOAs equally spaced from 150 ms to 450 ms.
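As a rough sketch of this design, the SOA grid and the facilitation measure can be written as below. Two assumptions are made here that the text does not spell out: that both endpoints (150 ms and 450 ms) are included in the seven SOAs, which gives 50 ms steps, and that facilitation is computed as the mean neutral response time minus the mean related response time. The function names and the response times in the example are hypothetical.

```python
def soa_grid(start_ms=150, stop_ms=450, n=7):
    """Return n equally spaced SOAs, endpoints included (assumption)."""
    step = (stop_ms - start_ms) / (n - 1)
    return [start_ms + i * step for i in range(n)]

def facilitation(neutral_rts, related_rts):
    """Priming effect as mean neutral RT minus mean related RT (assumption).

    Positive values mean that related primes sped up target recognition.
    """
    mean_neutral = sum(neutral_rts) / len(neutral_rts)
    mean_related = sum(related_rts) / len(related_rts)
    return mean_neutral - mean_related

soas = soa_grid()
print(soas)

# Made-up response times in ms, for illustration only.
effect = facilitation(neutral_rts=[600, 620], related_rts=[560, 580])
print(effect)
```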

7.2. Results

7.2.1. Association latencies

Experimental research on association norms often finds a negative correlation between association strength (defined in terms of frequency of occurrence for a given cue) and association response-time (e.g., Schlosberg & Heineman, 1950), as well as a positive correlation between the number of associates of a cue and the average latency of all of its associates (Goldstein, 1961; Flekkoy, 1973). In order to examine how well the network associations resemble human data, we examined if these trends exist in our results12.

Association strength (defined here as the observed probability of a particular transition) and association latency were not correlated when the entire spectrum of associations from the five stimuli was considered. However, it was evident that the latencies for a particular stimulus were strongly affected by the total number of active units that stimulus shared with all the other concept patterns. Stimuli that shared a large number of units with other patterns (such as dog, cat and mouse) were less stable and tended to yield considerably faster transitions than stimuli that shared few units (kitten and beware). This difference, which blurs the correlation of interest, might not be as robust in the representations of real concepts in humans and, therefore, is most probably a confound created by the specific encoding structure used in our simulations (see Discussion). We therefore controlled for the difference in the total number of shared units by computing the z-score of each latency, that is, by comparing each raw latency value with the association latencies of its deriving stimulus (e.g., the z-score of cat-kitten was computed in comparison to the average transition latencies stemming from cat, while the z-score of kitten-cat was computed compared to the average latencies stemming from kitten). These latency z-scores are plotted against association strength in Fig. 8B. Corresponding to published findings in humans, there was a significant negative correlation (r = −0.57; p < 0.04) between the measures, indicating that strong associations tended to occur faster than weaker associations.
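The within-stimulus standardization can be illustrated with a short sketch. It assumes that each latency is standardized against the mean and sample standard deviation of all latencies stemming from the same stimulus; whether the sample or population standard deviation was used is not stated in the text. The function names and the latencies in the example are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev

def zscore_within_cue(latencies_by_cue):
    """Standardize each latency against the other latencies from the same cue.

    `latencies_by_cue` maps cue -> {target: mean latency in ms}.
    Uses the sample standard deviation (assumption).
    """
    z = {}
    for cue, latencies in latencies_by_cue.items():
        values = list(latencies.values())
        mu, sd = mean(values), stdev(values)
        for target, value in latencies.items():
            z[(cue, target)] = (value - mu) / sd
    return z

def pearson_r(xs, ys):
    """Plain Pearson correlation coefficient between two equal-length lists."""
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)

# Made-up latencies in ms, for illustration only (not values from the paper).
example = {"cue_a": {"x": 100.0, "y": 200.0, "z": 300.0}}
z_scores = zscore_within_cue(example)
print(z_scores)
```

Because each cue is standardized separately, a cue whose transitions are slow overall no longer dominates the comparison; the z-scores can then be passed to `pearson_r` together with the matching association strengths.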

We also looked at the total number of associates of each of the five patterns as a function of its average association latency (over all the associations stemming from it; naturally, this computation used raw RT values and not z-scores). These data are plotted in Fig. 8C. Again, mirroring findings from human associations, we found a positive correlation between these two measures (r = 0.97). Although only five data points were available in the present data, this correlation was highly significant (p < 0.007).
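As a plausibility check on this significance level, and assuming the standard t-test for a Pearson correlation with n − 2 degrees of freedom (the test used is not stated in the text), the reported values r = 0.97 and n = 5 give:

$$
t = \frac{r\sqrt{n-2}}{\sqrt{1-r^{2}}} = \frac{0.97\sqrt{3}}{\sqrt{1-0.9409}} \approx \frac{1.680}{0.243} \approx 6.91, \qquad \mathrm{df} = n - 2 = 3 .
$$

For 3 degrees of freedom, the two-tailed critical values are approximately 5.84 at p = 0.01 and 7.45 at p = 0.005, so the resulting p-value lies between these bounds, in line with the reported p < 0.007. The exact value depends on the unrounded correlation coefficient.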

7.2.2. Priming effects

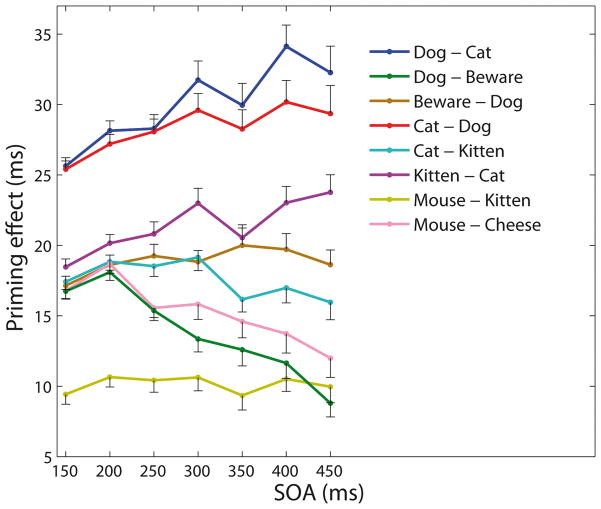

The priming effects of the pre-specified prime-target pairs, as a function of SOA, are presented in Fig. 9. Three notable results are apparent: First, the priming of a target by a strongly associated prime was higher, across SOAs, compared to priming between unassociated pairs (compare the three upper curves to the rest in Fig. 9). Second, the priming effects for strongly associated pairs increased with SOA (Fig. 9, red, blue and purple curves), while the priming for non-associative pairs was unaffected by SOA or even decreased (cyan, yellow, pink and green curves). Third, pairs which were asymmetrically associated to each other, as reflected by their transitional probabilities, yielded asymmetric priming, with the asymmetry growing with SOA (compare purple vs. cyan and brown vs. green curves).

Fig. 9.

Mean facilitation effects as a function of SOA for the prime-target pairs presented to the network in Simulation 3. Error bars represent ±1 standard error of the mean.

7.3. Discussion

The results of Simulation 3 demonstrate the importance of the correlation structure between encoded patterns in determining the transition probabilities between concepts and the consequences of these probabilities for priming. Although the structure of the semantic network in our model is determined by the symmetric correlations between concepts, the transition probabilities resulting from this structure are not symmetric, reflecting directional associative relations as well. This characteristic allows the semantic network in the model to exhibit complex dynamics yielding asymmetrical associative priming as well as an interaction between the effect of associative strength and SOA. The most important conclusion from this simulation is that differences in the association strength between concepts and the resulting differences in their priming patterns can be based solely on the correlation structure of the encoded memories without requiring the formation of explicit associative connections between specific words through designated training (cf. Plaut, 1995).

The observed transition latencies between memory patterns matched several reported characteristics of experimental RTs in free-association tasks (Schlosberg & Heineman, 1950; Flekkoy, 1973). The association strength between concepts was negatively correlated with the z-scores of the transition latencies, though not with the absolute latency values. The lack of correlation with the absolute latency values could be explained by the fact that the concept patterns in our simulation were apparently divided into two distinct groups, one containing patterns that shared a large number of active units with other patterns (dog, cat, mouse) and another containing patterns with few shared units (kitten, beware). The difference in average latencies between the two groups was relatively large (~150 ms) and probably blurred the effect of association strength on latency. Indeed, separate post-hoc examinations of the association strength within each of the two groups in isolation yielded negative correlations with the absolute scores just as for the z-scores of the combined group (though these correlations did not reach statistical significance due to the small number of data points in each group separately). Whether the discrepancy between the two groups is of theoretical interest remains to be explored. It may be that the difference does not have an analog in the representations of real concepts in the brain and, therefore, should be considered an artifact stemming from the relatively small size of our network (which limits the maximum number of encoded concepts and prevents equating the number of shared units across all patterns). Another possibility, however, is that this difference in the number of shared units does reflect, to some extent, real diversity in the way concepts are represented in the semantic system; in that case, a more comprehensive comparison of the associative latencies may require identifying how the total number of shared units of a pattern in the network maps to known characteristics of real concepts (examples of which may include, perhaps, semantic set size and familiarity). However, since the patterns in our network represent real concepts only crudely, we leave further investigation of this issue to future studies in which a more transparent analogy between concepts and their computational representations will be determined.

Another feature of the transition latencies that resembled human associative RT data was the positive correlation between the average association latency from a stimulus and the total number of associates of this stimulus. That is, the more associates a concept has, the longer, on average, the latency of its associates. As shown by Flekkoy (1973), this result cannot be merely a by-product of the negative correlation between association strength and association latency. In our network, this correlation stemmed from the fact that the stimuli tended to produce occasional idiosyncratic transitions (that is, transitions from a stimulus to a concept-pattern not correlated to it directly, such as kitten - beware), and this tendency differed systematically between the five stimuli. The fewer units a stimulus-pattern shared with other concept-patterns, the more idiosyncratic transitions it produced. Since, as discussed above, concepts that shared few units also tended to yield slower transitions, a correlation emerged.