It is thus a major virtue of the hierarchical predictive coding account that it effectively implements a computationally tractable version of the so-called Bayesian Brain Hypothesis. (Clark, in press)

It seems by now to be common wisdom that a brain organized according to the principles of hierarchical predictive coding is a brain capable of efficiently performing full-blown Bayesian inference. The idea is not only common but also of great significance, as it suggests that the hierarchical predictive coding framework may provide a neurally plausible and computationally feasible bridge between theories of neural functioning (Friston, 2005) and theories of cognitive functioning (Chater and Manning, 2006; Baker et al., 2009).

But can predictive brains really be the same as Bayesian brains? Or is the claim merely an informal or imprecise shorthand for something which is formally and factually false? We address these questions by reconsidering the formal specifications of the theory of hierarchical predictive coding, as put forth by Friston (2002, 2005).

In the hierarchical predictive coding framework, it is assumed that the brain represents the statistical structure of the world at different levels of abstraction by maintaining different causal models that are organized on different levels of a hierarchy, where each level obtains input from its subordinate level. In a feed-backward chain, predictions are made for the level below. The error between the model’s predicted input and the observed (for the lowest level) or inferred (for higher levels) input at that level is used (a) in a feed-forward chain to estimate the causes at the level above and (b) to reconfigure the causal models for future predictions. Ultimately, the system stabilizes when it has minimized the overall prediction error.
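
To make the error-driven cause estimation step (a) concrete, here is a minimal Python sketch for the simplest possible case. The model is our assumption, not Friston's full scheme: a single scalar cause $v$, a linear generative mapping $u = w v + \text{noise}$ with Gaussian noise, and a Gaussian prior on $v$ standing in for the top-down prediction from the level above. The names `estimate_cause`, `exact_map`, `w`, `prior_mean`, `var_u`, and `var_v` are hypothetical. The cause estimate is updated by gradient ascent on the log-posterior, whose gradient is a difference of two precision-weighted prediction errors; step (b), updating the model parameters, is not modeled.

```python
# Minimal single-level, scalar, linear-Gaussian predictive coding sketch.
# Assumptions (not from the source): u = w * v + Gaussian noise with variance
# var_u, and the level above supplies the prior v ~ N(prior_mean, var_v).


def estimate_cause(u, w, prior_mean, var_u, var_v, lr=0.05, steps=2000):
    """Gradient ascent on log p(v | u), driven by two prediction errors."""
    v = prior_mean  # start from the top-down prediction
    for _ in range(steps):
        err_input = (u - w * v) / var_u       # precision-weighted bottom-up error
        err_prior = (v - prior_mean) / var_v  # precision-weighted top-down error
        # d/dv log p(v | u) = w * err_input - err_prior
        v += lr * (w * err_input - err_prior)
    return v


def exact_map(u, w, prior_mean, var_u, var_v):
    """Closed-form posterior mode of the same linear-Gaussian model."""
    precision = w * w / var_u + 1.0 / var_v
    return (w * u / var_u + prior_mean / var_v) / precision


if __name__ == "__main__":
    args = dict(u=2.0, w=1.5, prior_mean=0.0, var_u=1.0, var_v=4.0)
    print(estimate_cause(**args), exact_map(**args))
```

For these illustrative arguments both functions return approximately 1.2. Because the log-posterior of this linear-Gaussian model is concave, gradient ascent with a sufficiently small step size reaches the MAP estimate; this is exactly the kind of favorable structure that cannot be taken for granted for richer causal models.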

Here we focus on (a), the cause estimation step in the feed-forward chain. We argue that the predictive coding framework does not yet satisfactorily specify how this step can be both Bayesian and computationally tractable. In the Bayesian interpretation of predictive coding (Friston, 2002), estimating the causes comes down to finding the most probable causes $v_m$ given the input $u$ for that level and the current model parameters $\theta$:

$$v_m = \arg\max_{v} \; p(v \mid u, \theta)$$

Given that $v_m$ is the maximum a posteriori (MAP) estimate, the idea that predictive coding implements Bayesian inference seems to hinge on this step. The idea that hierarchical predictive coding implements *tractable* Bayesian inference in turn hinges on the presumed existence of a tractable computational method for estimating $v_m$. Because computing MAP, whether exactly or approximately, is known to be computationally intractable (NP-hard) for arbitrary causal structures (Shimony, 1994; Abdelbar and Hedetniemi, 1998; Kwisthout, 2011), the existence of a tractable method crucially depends on the structural properties of the brain’s causal models (Kwisthout et al., 2011).¹
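
The naive way to compute $v_m$ exactly makes the source of the difficulty visible. The sketch below is ours, not a method from the cited work: it scores binary causes with a hypothetical pairwise log-linear function (a Markov-random-field-style stand-in for an arbitrary causal structure, with the normalizing constant dropped because it does not affect the argmax) and finds the MAP assignment by enumerating all $2^n$ joint assignments. Exhaustive enumeration is only one algorithm, so its exponential cost does not by itself prove intractability; that no polynomial-time method exists for arbitrary structures (unless P = NP) is what the NP-hardness results cited above establish.

```python
import itertools
import math

# Hypothetical model: n binary causes with unary and pairwise log-potentials.
# The score equals the log-posterior up to an additive constant.


def log_score(v, unary, pairwise):
    s = sum(unary[i] * v[i] for i in range(len(v)))
    s += sum(w * v[i] * v[j] for (i, j), w in pairwise.items())
    return s


def brute_force_map(n, unary, pairwise):
    """Exact MAP by enumerating all 2**n joint assignments."""
    best, best_score, evaluated = None, -math.inf, 0
    for v in itertools.product((0, 1), repeat=n):
        evaluated += 1
        s = log_score(v, unary, pairwise)
        if s > best_score:
            best, best_score = v, s
    return best, evaluated


if __name__ == "__main__":
    unary = [1.0, -0.5, 0.2]
    pairwise = {(0, 1): 2.0, (1, 2): -3.0}
    print(brute_force_map(3, unary, pairwise))
```

The number of evaluated assignments is $2^n$: 1,024 for 10 binary causes and 1,048,576 for 20, doubling with every cause added to the model.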

At present, the hierarchical predictive coding framework makes no stringent commitments as to the nature of the causal models that the brain can represent. Hence, contrary to suggestions by Clark (in press), the framework does not yet have the virtue of effectively implementing tractable Bayesian inference. At this point, three options remain open: either predictive coding does not implement Bayesian inference, or predictive coding is not tractable, or the theory of hierarchical predictive coding is enriched with specific assumptions about the structure of the brain’s causal models.

Assuming that one is committed to the Bayesian Brain Hypothesis, the first two options are out and the third is the only one remaining. Formal analyses expanding on this option are beyond the scope of this commentary (see, e.g., Blokpoel et al., 2010; van Rooij et al., 2011), but Table 1 qualitatively sketches the space of causal models that could (or could not) yield tractable Bayesian cause estimation. We discuss the viability of each option below.

Table 1.

For which types of causal models do there exist methods for cause estimation that are both tractable and Bayesian?

| Structure of causal models | Method used for cause estimation | Bayesian | Tractable |
|---|---|---|---|
| Simple | Heuristic | Yes | Yes |
| Simple | Approximation | Yes | Yes |
| Intermediate | Heuristic | Maybe | Yes |
| Intermediate | Approximation | Yes | Maybe |
| Unconstrained | Heuristic | No | Yes |
| Unconstrained | Approximation | Yes | No |

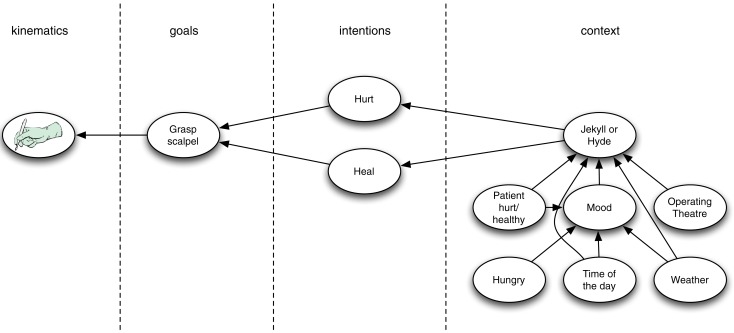

To start, causal models could be assumed to be quite simple, e.g., having a high degree of statistical independence among variables. In this case, heuristic methods, such as those based on gradient ascent (Friston, 2002, p. 13) or a Kalman filter (Rao and Ballard, 1999), may yield tractable Bayesian cause estimation. Let us assume that they do. Then, of course, tractable approximation methods also exist for those simple structures, the heuristics themselves being a case in point. Note, however, that a commitment to such simple causal models may limit the scope of predictive coding theory to simple or low-level forms of perception and cognition. After all, higher-order causal reasoning, such as occurs in Theory of Mind (Kilner et al., 2007), seems to presuppose quite sophisticated causal structures containing complex statistical interdependencies (see Figure 1 for an illustration; cf. Uithol et al., 2011). Complex causal models can give rise to rugged probability landscapes over the possible causes, and heuristic methods can get stuck in local optima that may be arbitrarily far from the true Bayesian (i.e., MAP) solution. For complex causal structures, heuristics are thus not guaranteed to do anything remotely like approximating Bayesian inference.
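
A small example shows how a local search can get stuck on a rugged landscape. Gradient ascent operates on continuous causes, so as a stand-in the sketch below uses its discrete analogue, single-variable hill climbing; the scoring function and all names are hypothetical. Three causes are each unlikely on their own but strongly support one another, so the joint explanation in which all three are present is the MAP solution, yet every single change away from the "no cause" explanation lowers the score.

```python
import itertools

# Hypothetical pairwise score over binary causes (log-posterior up to a constant).


def log_score(v, unary, pairwise):
    s = sum(unary[i] * v[i] for i in range(len(v)))
    return s + sum(w * v[i] * v[j] for (i, j), w in pairwise.items())


def greedy_ascent(v, score):
    """Single-variable-flip hill climbing: stops at the first local optimum."""
    v = list(v)
    current = score(v)
    improved = True
    while improved:
        improved = False
        for i in range(len(v)):
            v[i] = 1 - v[i]  # try flipping variable i
            s = score(v)
            if s > current:
                current, improved = s, True  # keep the flip
            else:
                v[i] = 1 - v[i]  # undo the flip
    return tuple(v), current


def exhaustive_map(n, score):
    """Exact MAP over all 2**n assignments, for comparison."""
    return max(itertools.product((0, 1), repeat=n), key=score)


# Causes that are individually unlikely (negative unary terms) but
# strongly support each other (positive pairwise terms).
UNARY = [-1.0, -1.0, -1.0]
PAIRWISE = {(0, 1): 3.0, (0, 2): 3.0, (1, 2): 3.0}


def score(v):
    return log_score(v, UNARY, PAIRWISE)


if __name__ == "__main__":
    print(greedy_ascent((0, 0, 0), score))  # local search from "no causes"
    best = exhaustive_map(3, score)
    print(best, score(best))                # the true MAP solution
```

Started from (0, 0, 0), `greedy_ascent` stops immediately with score 0.0, while exhaustive search finds (1, 1, 1) with score 6.0. Scaling up the pairwise terms widens this gap without bound, which is one way to see how a local optimum can be arbitrarily far from the MAP solution.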

Figure 1.

An illustration of a hierarchy with complex causal models at higher levels. The illustration builds on the Jekyll and Hyde example used by Kilner et al. (2007), who assumed four different levels and simple mappings between them. For example, if at the higher level one infers that the person grasping the scalpel is Dr. Jekyll (or Mr. Hyde), then at the lower level one predicts that the intention is to heal (or to hurt). The figure illustrates that at higher levels of the hierarchy the causal models within a level can become quite complex. Whether one infers that the person is Jekyll or Hyde can depend on a myriad of interconnected variables, such as the present location, the health status of the patient, the weather, and the person’s mood. Note that this complexity cannot be dissolved by decomposing the complex causal model into simple causal models at higher levels of the hierarchy, because complex models cannot in general be so decomposed. It thus seems that if one wants to use the hierarchical predictive coding framework to explain high-level cognition, then complex models within levels are required.

Given that the hierarchical predictive coding framework seems to aspire to span all levels of cognitive functioning, it probably does not want to commit to simple causal models. The other extreme, i.e., that the brain’s causal models are structurally unconstrained, is also excluded: as explained above, it follows from known intractability results for computing and approximating MAP (Shimony, 1994; Abdelbar and Hedetniemi, 1998; Kwisthout, 2011) that such a brain cannot implement tractable Bayesian inference. We are thus left with the intermediate option: the causal models represented by the brain can be complex, but not arbitrarily so. Because the exact nature of this causal complexity determines whether or not a hierarchical predictive coding architecture can implement tractable Bayesian inference, identifying it seems vital for the viability of the marriage between the predictive coding framework and the Bayesian Brain Hypothesis.
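
One well-known example of what "complex but not arbitrarily so" could mean, offered as an illustration rather than as the constraint the brain actually uses: when the interaction graph of the causes has bounded treewidth, exact MAP can be computed in time that is exponential only in the treewidth. The simplest instance is a chain, in which each cause interacts only with its neighbors. The variables are still statistically interdependent, yet max-product dynamic programming (the Viterbi algorithm) finds the MAP assignment in $O(n k^2)$ time for $n$ causes with $k$ values each. The Python sketch below uses hypothetical names and a log-potential parameterization of our choosing, and checks its result against brute-force enumeration.

```python
import itertools
import random


def chain_score(v, unary, pairwise):
    """Log-score of assignment v: unary[i][a] plus pairwise[i][a][b] terms."""
    return (sum(unary[i][v[i]] for i in range(len(v)))
            + sum(pairwise[i][v[i]][v[i + 1]] for i in range(len(v) - 1)))


def chain_map(unary, pairwise):
    """Exact MAP for a chain v_0 - v_1 - ... - v_{n-1} via max-product DP.

    unary[i][a]       : log-potential of v_i = a
    pairwise[i][a][b] : log-potential of (v_i = a, v_{i+1} = b)
    """
    n, k = len(unary), len(unary[0])
    best = list(unary[0])  # best[a]: max score of v_0..v_i with v_i = a
    back = []              # back-pointers used to recover the argmax
    for i in range(1, n):
        new_best, ptr = [], []
        for b in range(k):
            a_star = max(range(k), key=lambda a: best[a] + pairwise[i - 1][a][b])
            new_best.append(best[a_star] + pairwise[i - 1][a_star][b] + unary[i][b])
            ptr.append(a_star)
        best = new_best
        back.append(ptr)
    # Trace back from the best value of the last cause.
    last = max(range(k), key=lambda a: best[a])
    path = [last]
    for ptr in reversed(back):
        path.append(ptr[path[-1]])
    return tuple(reversed(path)), best[last]


def brute_force_chain_map(unary, pairwise):
    """Reference answer by enumerating all k**n assignments."""
    n, k = len(unary), len(unary[0])
    return max(itertools.product(range(k), repeat=n),
               key=lambda v: chain_score(v, unary, pairwise))


if __name__ == "__main__":
    rng = random.Random(0)
    n, k = 8, 3
    unary = [[rng.uniform(-1, 1) for _ in range(k)] for _ in range(n)]
    pairwise = [[[rng.uniform(-1, 1) for _ in range(k)] for _ in range(k)]
                for _ in range(n - 1)]
    path, score = chain_map(unary, pairwise)
    ref = brute_force_chain_map(unary, pairwise)
    print(path, score, chain_score(ref, unary, pairwise))
```

Both functions find the same optimal score, but only `chain_map` scales linearly in the number of causes. Whether the brain’s causal models satisfy any such structural constraint is, of course, exactly the open question raised here.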

Both the Bayesian Brain Hypothesis and the hypothesis that the brain implements cognition via hierarchical predictive coding have strong appeal. Given that the statistics of the world do not seem to be arbitrarily complex, it is conceivable that the brain has evolved precisely those constraints on its causal models that afford tractable Bayesian inference via hierarchical predictive coding. The open question is what those constraints could be. This question is particularly pressing, yet non-trivial to answer, if the hierarchical predictive coding account aims to apply to all levels of perception and cognition.

Footnotes

¹ We note that, for arbitrary causal structures, having the prediction and the prediction error as input when estimating $v_m$ does not make this estimation computationally tractable.

References

- Abdelbar A. M., Hedetniemi S. M. (1998). Approximating MAPs for belief networks is NP-hard and other theorems. Artif. Intell. 102, 21–38. doi: 10.1016/S0004-3702(98)00043-5

- Baker C. L., Saxe R., Tenenbaum J. B. (2009). Action understanding as inverse planning. Cognition 113, 329–349. doi: 10.1016/j.cognition.2009.07.005

- Blokpoel M., Kwisthout J., van der Weide T. P., van Rooij I. (2010). "How action understanding can be rational, Bayesian and tractable," in Proceedings of the 32nd Annual Conference of the Cognitive Science Society, eds Ohlsson S., Catrambone R. (Austin: Cognitive Science Society), 1643–1648.

- Chater N., Manning C. (2006). Probabilistic models of language processing and acquisition. Trends Cogn. Sci. 10, 335–344. doi: 10.1016/j.tics.2006.05.008

- Clark A. (in press). Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behav. Brain Sci.

- Friston K. (2002). Functional integration and inference in the brain. Prog. Neurobiol. 68, 113–143. doi: 10.1016/S0301-0082(02)00076-X

- Friston K. (2005). A theory of cortical responses. Philos. Trans. R. Soc. Lond. B Biol. Sci. 360, 815–836. doi: 10.1098/rstb.2005.1622

- Kilner J. M., Friston K. J., Frith C. D. (2007). Predictive coding: an account of the mirror neuron system. Cogn. Process. 8, 159–166. doi: 10.1007/s10339-007-0170-2

- Kwisthout J. (2011). Most probable explanations in Bayesian networks: complexity and tractability. Int. J. Approx. Reason. 52, 1452–1469. doi: 10.1016/j.ijar.2011.08.003

- Kwisthout J., Wareham T., van Rooij I. (2011). Bayesian intractability is not an ailment that approximation can cure. Cogn. Sci. 35, 779–784. doi: 10.1111/j.1551-6709.2011.01182.x

- Rao R., Ballard D. (1999). Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects. Nat. Neurosci. 2, 79–87. doi: 10.1038/4580

- Shimony S. (1994). Finding MAPs for belief networks is NP-hard. Artif. Intell. 68, 399–410. doi: 10.1016/0004-3702(94)90072-8

- Uithol S., van Rooij I., Bekkering H., Haselager P. (2011). What do mirror neurons mirror? Philos. Psychol. 24, 607–623. doi: 10.1080/09515089.2011.562604

- van Rooij I., Kwisthout J., Blokpoel M., Szymanik J., Wareham T., Toni I. (2011). Intentional communication: computationally easy or difficult? Front. Hum. Neurosci. 5:52. doi: 10.3389/fnhum.2011.00052