Abstract

Music evokes complex emotions beyond pleasant/unpleasant or happy/sad dichotomies usually investigated in neuroscience. Here, we used functional neuroimaging with parametric analyses based on the intensity of felt emotions to explore a wider spectrum of affective responses reported during music listening. Positive emotions correlated with activation of left striatum and insula when high-arousing (Wonder, Joy) but right striatum and orbitofrontal cortex when low-arousing (Nostalgia, Tenderness). Irrespective of their positive/negative valence, high-arousal emotions (Tension, Power, and Joy) also correlated with activations in sensory and motor areas, whereas low-arousal categories (Peacefulness, Nostalgia, and Sadness) selectively engaged ventromedial prefrontal cortex and hippocampus. The right parahippocampal cortex activated in all but positive high-arousal conditions. Results also suggested some blends between activation patterns associated with different classes of emotions, particularly for feelings of Wonder or Transcendence. These data reveal a differentiated recruitment across emotions of networks involved in reward, memory, self-reflective, and sensorimotor processes, which may account for the unique richness of musical emotions.

Keywords: emotion, fMRI, music, striatum, ventro-medial prefrontal cortex

Introduction

The affective power of music on the human mind and body has captivated researchers in philosophy, medicine, psychology, and musicology for centuries (Juslin and Sloboda 2001, 2010). Numerous theories have attempted to describe and explain its impact on the listener (Koelsch and Siebel 2005; Juslin and Västfjäll 2008; Zentner et al. 2008), and one of the most recent and detailed models, proposed by Juslin and Västfjäll (2008), suggested that several mechanisms might act together to generate musical emotions. However, there is still a dearth of experimental evidence to determine the exact mechanisms of emotion induction by music, the nature of these emotions, and their relation to other affective processes.

While it is generally agreed that emotions “expressed” in the music must be distinguished from emotions “felt” in the listener (Gabrielson and Juslin 2003), many questions remain about the essential characteristics of the complex bodily, cognitive, and emotional reactions evoked by music. It has been proposed that musical emotions differ from nonmusical emotions (such as fear or anger) because they are neither goal oriented nor behaviorally adaptive (Scherer and Zentner 2001). Moreover, emotional responses to music might reflect extramusical associations (e.g., in memory) rather than direct effects of auditory inputs (Konecni 2005). It has therefore been proposed to classify music–emotions as “aesthetic” rather than “utilitarian” (Scherer 2004). Another debate is how to properly describe the full range of emotions inducible by music. Recent theoretical approaches suggest that domain-specific models might be more appropriate (Zentner et al. 2008; Zentner 2010; Zentner and Eerola 2010b), as opposed to classic theories of “basic emotions” that concern fear, anger, or joy, for example (Ekman 1992a), or dimensional models that describe all affective experiences in terms of valence and arousal (e.g., Russell 2003). In other words, it has been suggested that music might elicit “special” kinds of affect, which differ from well-known emotion categories, and whose neural and cognitive underpinnings are still unresolved. It also remains unclear whether these special emotions might share some dimensions with other basic emotions (and which).

The advent of neuroimaging methods may allow scientists to shed light on these questions in a novel manner. However, research on the neural correlates of music perception and music-elicited emotions is still scarce, despite its importance for theories of affect. Pioneering work using positron emission tomography (Blood et al. 1999) reported that musical dissonance modulates activity in paralimbic and neocortical regions typically associated with emotion processing, whereas the experience of “chills” (feeling “shivers down the spine”) (Blood and Zatorre 2001) evoked by one’s preferred music correlates with activity in brain structures that respond to other pleasant stimuli and reward (Berridge and Robinson 1998) such as the ventral striatum, insula, and orbitofrontal cortex. Conversely, chills correlate negatively with activity in ventromedial prefrontal cortex (VMPFC), amygdala, and anterior hippocampus. Similar activation of striatum and limbic regions to pleasurable music was demonstrated using functional magnetic resonance imaging (fMRI), even for unfamiliar pieces (Brown et al. 2004) and for nonmusicians (Menon and Levitin 2005). Direct comparisons between pleasant and scrambled music excerpts also showed increases in the inferior frontal gyrus, anterior insula, parietal operculum, and ventral striatum for the pleasant condition but in amygdala, hippocampus, parahippocampal gyrus (PHG), and temporal poles for the unpleasant scrambled condition (Koelsch et al. 2006). Finally, a recent study (Mitterschiffthaler et al. 2007) comparing more specific emotions found that happy music increased activity in striatum, cingulate, and PHG, whereas sad music activated anterior hippocampus and amygdala.

However, all these studies were based on a domain-general model of emotions, with discrete categories derived from the basic emotion theory, such as sad and happy (Ekman 1992a), or bi-dimensional theories, such as pleasant and unpleasant (Hevner 1936; Russell 2003). This approach is unlikely to capture the rich spectrum of emotional experiences that music is able to produce (Juslin and Sloboda 2001; Scherer 2004) and does not correspond to emotion labels that were found to be most relevant to music in psychology models (Zentner and Eerola 2010a, 2010b). Indeed, basic emotions such as fear or anger, often studied in neuroscience, are not typically associated with music (Zentner et al. 2008).

Here, we sought to investigate the cerebral architecture of musical emotions by using a domain-specific model that was recently shown to be more appropriate to describe the range of emotions inducible by music (Scherer 2004; Zentner et al. 2008). This model (Zentner et al. 2008) was derived from a series of field and laboratory studies, in which participants rated their felt emotional reactions to music with an extensive list of adjectives (i.e., >500 terms). On the basis of statistical analyses of the factors or dimensions that best describe the organization of emotion labels into separate groups, it was found that a model with 9 emotion factors best fitted the data, comprising “Joy,” “Sadness,” “Tension,” “Wonder,” “Peacefulness,” “Power,” “Tenderness,” “Nostalgia,” and “Transcendence.” Furthermore, this work also showed that these 9 categories could be grouped into 3 higher order factors called sublimity, vitality, and unease (Zentner et al. 2008). Whereas vitality and unease bear some resemblance with dimensions of arousal and valence, respectively, sublimity might be more specific to the aesthetic domain, and the traditional bi-dimensional division of affect does not seem sufficient to account for the whole range of music-induced emotions. Although seemingly abstract and “immaterial” as a category of emotive states, feelings of sublimity have been found to evoke distinctive psychophysiological responses relative to feelings of happiness, sadness, or tension (Baltes et al. 2011).

In the present study, we aimed at identifying the neural substrates underlying these complex emotions characteristically elicited by music. In addition, we also aimed at clarifying their relation to other systems associated with more basic categories of affective states. This approach goes beyond the more basic and dichotomous categories investigated in past neuroimaging studies. Furthermore, we employed a parametric regression analysis approach (Büchel et al. 1998; Wood et al. 2008) allowing us to identify specific patterns of brain activity associated with the subjective ratings obtained for each musical piece along each of the 9 emotion dimensions described in previous work (Zentner et al. 2008) (see Experimental Procedures). The current approach thus exceeds traditional imaging studies, which compared strictly predefined stimulus categories and did not permit several emotions to be present in one stimulus, although this is often experienced with music (Hunter et al. 2008; Barrett et al. 2010). Moreover, we specifically focused on felt emotions (rather than emotions expressed by the music).

We expected to replicate, but also extend, previous results obtained for binary distinctions between pleasant and unpleasant music, or between happy and sad music, including differential activations in striatum, hippocampus, insula, or VMPFC (Mitterschiffthaler et al. 2007). In particular, even though striatum activity has been linked to pleasurable music and reward (Blood and Zatorre 2001; Salimpoor et al. 2011), it is unknown whether it activates to more complex feelings that mix dysphoric states with positive affect, as reported, for example, for nostalgia (Wildschut et al. 2006; Sedikides et al. 2008; Barrett et al. 2010). Likewise, the role of the hippocampus in musical emotions remains unclear. Although it correlates negatively with pleasurable chills (Blood and Zatorre 2001) but activates to unpleasant (Koelsch et al. 2006) or sad music (Mitterschiffthaler et al. 2007), its prominent function in associative memory processes (Henke 2010) suggests that it might also reflect extramusical connotations (Konecni 2005) or subjective familiarity (Blood and Zatorre 2001) and thus participate in other complex emotions involving self-relevant associations, irrespective of negative or positive valence. By using a 9-dimensional domain-specific model that spanned the full spectrum of musical emotions (Zentner et al. 2008), our study was able to address these issues and hence reveal the neural architecture underlying the psychological diversity and richness of music-related emotions.

Materials and Methods

Subjects

Sixteen volunteers (9 females, mean 29.9 years, ±9.8) participated in a preliminary behavioral rating study performed to evaluate the stimulus material. Another 15 (7 females, mean 28.8 years, ±9.9) took part in the fMRI experiment. None of the participants of the behavioral study participated in the fMRI experiment. They had no professional musical expertise but reported enjoying classical music. Participants were all native or highly proficient French speakers, right-handed, and without any history of neurological or psychiatric disease. They gave informed consent in accord with the regulations of the local ethics committee.

Stimulus Material

The stimulus set comprised 27 excerpts (45 s) of instrumental music from the last 4 centuries, taken from commercially available CDs (see Supplementary Table S1). Stimulus material was chosen to cover the whole range of musical emotions identified in the 9-dimensional Geneva Emotional Music Scale (GEMS) model (Zentner et al. 2008) but also to control for familiarity and reduce potential biases due to memory and semantic knowledge. In addition, we generated a control condition made of 9 different atonal random melodies (20–30 s) using Matlab (Version R2007b, The MathWorks Inc., www.mathworks.com). Random tone sequences were composed of different sine waves, each with a variable duration (0.1–1 s). This control condition was introduced to allow a global comparison of epochs with music against a baseline of nonmusical auditory inputs (in order to highlight brain regions generally involved in music processing) but was not directly implicated in our main analysis examining the parametric modulation of brain responses to different emotional dimensions (see below).
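As a rough illustration of how such a control stimulus could be built, the following Python sketch concatenates sine tones of random frequency and random duration (0.1–1 s). It is not the Matlab code used in the study: the sampling rate, the frequency range, the seed, and the function name `random_tone_sequence` are assumptions introduced here for illustration only.

```python
import math
import random

def random_tone_sequence(total_s=20.0, sr=8000, f_lo=220.0, f_hi=880.0, seed=0):
    """Concatenate sine tones with random frequency and duration (0.1-1 s).

    sr, f_lo, f_hi and seed are illustrative assumptions, not values
    reported for the original stimuli.
    """
    rng = random.Random(seed)
    target = int(total_s * sr)          # total number of samples requested
    samples = []
    while len(samples) < target:
        dur = rng.uniform(0.1, 1.0)     # tone duration in seconds
        freq = rng.uniform(f_lo, f_hi)  # tone frequency in Hz
        # Truncate the last tone so the sequence has exactly `target` samples.
        n = min(int(dur * sr), target - len(samples))
        samples.extend(math.sin(2 * math.pi * freq * i / sr) for i in range(n))
    return samples
```

Each tone restarts at zero phase, so small discontinuities can occur at tone boundaries; a real stimulus would typically need short onset and offset ramps.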

All stimuli were postprocessed using Cool Edit Pro (Version 2.1, Syntrillium Software Corporation, www.syntrillium.com). Stimulus preparation included cutting and adding ramps (500 ms) at the beginning and end of each excerpt, as well as adjustment of loudness to the average sound level (−13.7 dB) over all stimuli. Furthermore, to account for any residual difference between the musical pieces, we extracted the energy of the auditory signal of each stimulus and then calculated the root mean square (RMS) of energy for successive time windows of 1 s, using a Matlab toolbox (Lartillot and Toiviainen 2007). This information was subsequently used in the fMRI data analysis as a regressor of no interest.
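The windowed energy measure can be sketched as follows. This is a minimal Python illustration of a root mean square computed over successive, non-overlapping 1-s windows. It is not the toolbox routine used in the study, and the function name `windowed_rms` and the handling of a trailing partial window (dropped here) are assumptions.

```python
import math

def windowed_rms(signal, sr, win_s=1.0):
    """RMS of `signal` in successive non-overlapping windows of win_s seconds.

    A trailing partial window is dropped (an assumption of this sketch).
    """
    win = int(sr * win_s)               # window length in samples
    out = []
    for start in range(0, len(signal) - win + 1, win):
        chunk = signal[start:start + win]
        out.append(math.sqrt(sum(x * x for x in chunk) / win))
    return out
```

For a 45-s excerpt this gives 45 values, one per second, which could then be resampled to the scan timing before being entered as a regressor.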

All auditory stimuli were presented binaurally with a high-quality MRI-compatible headphone system (CONFON HP-SC 01 and DAP-center mkII, MR confon GmbH, Germany). The loudness of auditory stimuli was adjusted for each participant individually, prior to fMRI scanning. Visual instructions were seen on a screen back-projected on a headcoil-mounted mirror.

Experimental Design

Prior to fMRI scanning, participants were instructed about the task and familiarized with the questionnaires and emotion terms employed during the experiment. The instructions emphasized that answers to the questionnaires should only concern subjectively felt emotions but not the expressive style of the music (see also Gabrielson and Juslin 2003; Evans and Schubert 2008; Zentner et al. 2008).

The fMRI experiment consisted of 3 consecutive scanning runs. Each run contained 9 musical epochs, each associated with strong ratings on one of the 9 different emotion categories (as defined by the preliminary behavioral rating experiment) plus 2 or 3 control epochs (random tones). Each run started and ended with a control epoch, while a third epoch was randomly placed between musical stimuli in 2 or 3 of the runs. The total length of the control condition was always equal across all runs (60 s) in all participants.

Before each trial, participants were instructed to listen attentively to the stimulus and keep their eyes closed during the presentation. Immediately after the stimulus ended, 2 questionnaires for emotion ratings were presented on the screen, one after the other. The first rating screen asked subjects to indicate, for each of the 9 GEMS emotion categories (Zentner et al. 2008), how strongly they had experienced the corresponding feeling during the stimulus presentation. Each of the 9 emotion labels (Joy, Sadness, Tension, Wonder, Peacefulness, Power, Tenderness, Nostalgia, and Transcendence) was presented for each musical piece, together with 2 additional descriptive adjectives (see Supplementary Table S2) in order to unambiguously particularize the meaning of each emotion category. The selection of these adjectives was derived from the results of a factorial analysis with the various emotion-rating terms used in the work of Zentner and colleagues (see Zentner et al. 2008). All 9 categories were listed on the same screen but had to be rated one after the other (from top to bottom of the list) using a sliding cursor that could be moved (by right or left key presses) on a horizontal scale from 0 to 10 (0 = the emotion was not felt at all, 10 = the emotion was very strongly felt). The order of emotion terms in the list was constant for a given participant but randomly changed across participants.

This first rating screen was immediately followed by a second questionnaire, in which participants had to evaluate the degree of arousal, valence, and familiarity subjectively experienced during the preceding stimulus presentation. For the latter, subjects had to rate on a 10-point scale the degree of arousal (0 = very calming, 10 = very arousing), valence (0 = low pleasantness, 10 = high pleasantness), and familiarity (0 = completely unfamiliar, 10 = very well known) which was felt during the previous stimulus. It is important to note that, for both questionnaires (GEMS and basic dimensions), we explicitly emphasized to our participants that their judgments had to concern their subjectively felt emotional experience, not the expressiveness of the music. The last response on the second questionnaire then automatically triggered the next stimulus presentation. Subjects were instructed to answer spontaneously, but there was no time limit for responses. Therefore, the overall scanning time of a session varied slightly between subjects (average 364.6 scans per run, standard deviation 66.3 scans). However, only the listening periods were included in the analyses, which comprised the same number of scans across subjects.

The preliminary behavioral rating study was conducted in exactly the same manner, using the same musical stimuli as in the fMRI experiment, with the same instructions, but was performed in a quiet and dimly lit room. The goals of this experiment were to evaluate each of our musical stimuli along the 9 critical emotion categories and to verify that similar ratings (and emotions) were observed in the fMRI setting as compared with more comfortable listening conditions.

Analysis of Behavioral Data

All statistical analyses of behavioral data were performed using SPSS software, version 17.0 (SPSS Inc., IBM Company, Chicago). Judgments made during the preliminary behavioral experiment and the actual fMRI experiment correlated highly for every emotion category (mean r = 0.885, see Supplementary Table S3), demonstrating a high degree of consistency of the emotions elicited by our musical stimuli across different participants and listening context. Therefore, ratings from both experiments were averaged across the 31 participants for each of the 27 musical excerpts, which resulted in a 9-dimensional emotional profile characteristic for each stimulus (consensus rating). For every category, we calculated intersubject correlations, Cronbach’s alpha, and intraclass correlations (absolute agreement) to verify the reliability of the evaluations.
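To make the reliability measures concrete, the sketch below computes a consensus rating (mean across raters) and Cronbach's alpha for one emotion category, treating each rater as an "item" and each musical excerpt as a "case". The study used SPSS; this Python version only illustrates the standard formula, and the data layout `ratings[rater][stimulus]` and the function names are assumptions.

```python
from statistics import mean, variance

def consensus(ratings):
    """Mean rating per stimulus; ratings[r][s] is rater r's score for stimulus s."""
    n_stim = len(ratings[0])
    return [mean(r[s] for r in ratings) for s in range(n_stim)]

def cronbach_alpha(ratings):
    """Cronbach's alpha with raters as items and stimuli as cases.

    alpha = k / (k - 1) * (1 - sum of per-rater variances / variance of summed scores)
    """
    k = len(ratings)
    n_stim = len(ratings[0])
    rater_vars = sum(variance(r) for r in ratings)
    totals = [sum(r[s] for r in ratings) for s in range(n_stim)]
    return k / (k - 1) * (1 - rater_vars / variance(totals))
```

With 31 raters and 27 excerpts, `ratings` would be a 31 × 27 table per emotion category, and `consensus` would return the 27 values that form one dimension of each excerpt's emotional profile.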

Because ratings on some dimensions are not fully independent (e.g., pieces rated high on Wonder are inevitably rated higher on Joy but lower on Sadness), the rating scores for each of the 9 emotion categories were submitted to a factor analysis, with unrotated solution, with or without the addition of the 3 other general evaluation scores (arousal, valence, and familiarity). Quasi-identical results were obtained when using data from the behavioral and fMRI experiments separately or together and when including or excluding the 3 other general scores, suggesting a strong stability of these evaluations across participants and contexts (see Zentner et al. 2008).

For the fMRI analysis, we used the same consensus ratings to perform a parametric regression along each emotion dimension. The consensus data (average ratings over 31 subjects) were preferred to individual evaluations in order to optimize statistical power and robustness of correlations, by minimizing variance due to idiosyncratic factors of no interest (e.g., habituation effects during the course of a session, variability in rating scale metrics, differences in proneness to report specific emotions, etc.) (parametric analyses with individual ratings from the scanning session yielded results qualitatively very similar to those reported here for the consensus ratings but generally at lower thresholds). Because our stimuli were selected based on previous work by Zentner et al. (2008) and our own piloting, in order to obtain “prototypes” for the different emotion categories with a high degree of agreement between subjects (see above), using consensus ratings allowed us to extract the most consistent and distinctive pattern for each emotion type. Moreover, it has been shown in other neuroimaging studies using parametric approaches that group consensus ratings can provide more robust results than individual data as they may better reflect the effect of specific stimulus properties (Hönekopp 2006; Engell et al. 2007).

In order to have an additional indicator for the emotion induction during fMRI, we also recorded heart rate and respiratory activity while the subject was listening to musical stimuli in the scanner. Heart rate was recorded using active electrodes from the MRI scanner’s built-in monitor (Siemens TRIO, Erlangen, Germany), and respiratory activity was recorded with a modular data acquisition system (MP150, BIOPAC Systems Inc.) using an elastic belt around the subject’s chest.

FMRI Data Acquisition and Analysis

MRI data were acquired using a 3T whole-body scanner (Siemens TIM TRIO). A high-resolution T1-weighted structural image (0.9 × 0.9 × 0.9 mm³) was obtained using a magnetization-prepared rapid acquisition gradient echo sequence (repetition time [TR] = 1.9 s, echo time [TE] = 2.32 ms, inversion time [TI] = 900 ms). Functional images were obtained using a multislice echo planar imaging (EPI) sequence (36 slices, slice thickness 3.5 + 0.7 mm gap, TR = 3 s, TE = 30 ms, field of view = 192 × 192 mm², 64 × 64 matrix, flip angle: 90°). FMRI data were analyzed using Statistical Parametric Mapping (SPM5; Wellcome Trust Center for Imaging, London, UK; http://www.fil.ion.ucl.ac.uk/spm). Data processing included realignment, unwarping, normalization to the Montreal Neurological Institute space using an EPI template (resampling voxel size: 3 × 3 × 3 mm), spatial smoothing (8 mm full-width at half-maximum Gaussian filter), and high-pass filtering (1/120 Hz cutoff frequency).

A standard statistical analysis was performed using the general linear model implemented in SPM5. Each musical epoch and each control epoch from every scanning run were modeled by a separate regressor convolved with the canonical hemodynamic response function. To account for movement-related variance, we entered realignment parameters into the same model as 6 additional covariates of no interest. Parameter estimates computed for each epoch and each participant were subsequently used for the second-level group analysis (random-effects) using t-test statistics and multiple linear regressions.

A first general analysis concerned the main effect of music relative to the control condition. Statistical parametric maps were calculated from linear contrasts between all music conditions and all control conditions for each subject, and these contrast images were then submitted to a second-level random-effect analysis using one-sample t-tests. Other more specific analyses used a parametric regression approach (Büchel et al. 1998; Janata et al. 2002; Wood et al. 2008) and analyses of variance (ANOVAs) that tested for differential activations as a function of the intensity of emotions experienced during each musical epoch (9 specific plus 3 general emotion rating scores), as described below.

For all results, we report clusters with a voxel-wise threshold of P < 0.001 (uncorrected) and cluster-size >3 voxels (81 mm³), with additional family-wise error (FWE) correction for multiple comparisons where indicated.

We also identified several regions of interest (ROIs) using clusters that showed significant activation in this whole brain analysis. Betas were extracted from these ROIs by taking an 8-mm sphere around the peak coordinates identified in group analysis (12-mm sphere for the large clusters in the superior temporal gyrus [STG]).
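A spherical ROI of this kind can be illustrated with a short Python sketch that lists the centres of all voxels on a regular 3-mm grid lying within a given radius of a peak. The function name `sphere_voxels`, the assumption that the peak falls exactly on a grid point, and the example peak coordinate are all hypothetical. The study's actual extraction was done within its SPM-based pipeline, whose details are not given here.

```python
def sphere_voxels(peak_mm, radius_mm=8.0, voxel_mm=3.0):
    """Centres (in mm) of grid voxels within radius_mm of peak_mm.

    Assumes an isotropic grid with the peak on a grid point; alignment
    to a real image grid is not handled in this sketch.
    """
    px, py, pz = peak_mm
    steps = int(radius_mm // voxel_mm)  # max grid steps to search per axis
    r2 = radius_mm ** 2
    coords = []
    for i in range(-steps, steps + 1):
        for j in range(-steps, steps + 1):
            for k in range(-steps, steps + 1):
                dx, dy, dz = i * voxel_mm, j * voxel_mm, k * voxel_mm
                if dx * dx + dy * dy + dz * dz <= r2:
                    coords.append((px + dx, py + dy, pz + dz))
    return coords

# Hypothetical peak coordinate, for illustration only.
roi = sphere_voxels((12.0, 9.0, -6.0), radius_mm=8.0)
```

The ROI beta for a given regressor would then be the average of the parameter estimates over the returned voxel positions.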

Differential Effects of Emotional Dimensions

To identify the specific neural correlates of relevant emotions from the 9-dimensional model, as well as other more general dimensions (arousal, valence, and familiarity), we calculated separate regression models for these different dimensions using a parametric design similar to the methodology proposed by Wood et al. (2008). This approach has been specifically advocated to disentangle multidimensional processes that combine in a single condition and share similar cognitive features, even when these partly correlate with each other. In our case, each regression model comprised at the first level a single regressor for the music and auditory control epochs, together with a parametric modulator that contained the consensus rating values for a given emotion dimension (e.g., Nostalgia). This parametric modulator was entered for each of the 27 musical epochs; thus, all 27 musical pieces contributed (to different degrees) to determine the correlation between the strength of the felt emotions and corresponding changes in brain activity. For the control epochs, the parametric modulator was always set to zero, in order to isolate the differential effect specific to musical emotions excluding any contribution of (disputable) emotional responses to pure tone sequences and thus ensuring a true baseline of nonmusic-related affect. Overall, the regression model for each emotion differed only with respect to the emotion ratings while other factors were exactly the same, such that the estimation of emotion effects could not be affected by variations in other factors. In addition, we also entered the RMS values as another parametric regressor of no interest to take into account any residual effect of energy differences between the musical epochs. To verify the orthogonality between the emotion and RMS parametric modulators, we calculated the absolute cosine value of the angle between them. These values were close to zero for all dimensions (average over categories 0.033, ±0.002), which therefore implies orthogonality.
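The orthogonality check can be sketched in a few lines of Python: the absolute cosine of the angle between two modulator vectors is 0 when they are orthogonal and 1 when they are collinear. The function name `abs_cosine` is hypothetical, and mean-centring the vectors before computing the cosine is an assumption of this sketch, since the text does not state whether the modulators were centred.

```python
import math

def abs_cosine(u, v, center=True):
    """Absolute cosine of the angle between vectors u and v.

    center=True subtracts each vector's mean first (an assumption here;
    it makes the value equal to the absolute Pearson correlation).
    """
    if center:
        mu, mv = sum(u) / len(u), sum(v) / len(v)
        u = [x - mu for x in u]
        v = [x - mv for x in v]
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return abs(dot) / norm
```

In the present design, `u` would hold the 27 consensus ratings for one emotion category and `v` the corresponding RMS values; a result near zero indicates that the two modulators explain largely separate variance.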

Note that although parametric analyses with multiple factors can be performed using a multiple regression model (Büchel et al. 1998), this approach would actually not allow reliable estimation of each emotion category in our case due to systematic intercorrelations between ratings for some categories (e.g., ratings of Joy will always vary in anticorrelated manner to Sadness and conversely be more similar to Wonder than other emotions). Because parametric regressors are orthogonalized serially with regard to the previous one in the GLM, the order of these modulators in the model can modify the results for those showing strong interdependencies. In contrast, by using separate regression models for each emotion category at the individual level, the current approach was shown to be efficient to isolate the specific contributions of different features along a shared cognitive dimension (see Wood et al. 2008, for an application related to numerical size). Thus, each model provides the best parameter estimates for a particular category, without interference or orthogonality prioritization between the correlated regressors, allowing the correlation structure between categories to be “transposed” to the beta-values fitted to the data by the different models. Any difference between the parameter estimates obtained in different models is attributable to a single difference in the regressor of interest, and its consistency across subjects can then be tested against the null hypothesis at the second level (Wood et al. 2008). 
Moreover, unlike multiple regression performed in a single model, this approach provides unbiased estimates for the effect of one variable when changes in the latter are systematically correlated with changes in another variable (e.g., Joy correlates negatively with Sadness but positively with Wonder). Because parameter estimates are conditional on their covariance and the chosen model, we verified the validity of this approach by comparing our results with those that would be obtained when 3 noncorrelated emotion parameters (Joy, Sadness, and Tension) are simultaneously entered in a single model. As expected, results from both analyses were virtually identical, revealing the same clusters of activation for each emotion, with only small differences in spatial extent and statistical values (see Supplementary Fig. S2). These data indicate that reliable parameter estimates could be obtained and compared when the different emotion categories were modeled separately. Similar results were shown by our analysis of higher order emotion dimensions (see Results section).

Random-effect group analyses were performed on activation maps obtained for each emotion dimension in each individual, using a repeated-measure ANOVA and one-sample t-tests at the second level. The statistical parametric maps obtained by the first-level regression analysis for each emotion were entered into the repeated-measures ANOVA with “emotion category” as a single factor (with 9 levels). Contrasts between categories or different groups of emotions were computed with a threshold of P < 0.05 (FWE corrected) for simple main effects and P < 0.001 (uncorrected) at the voxel-level (with cluster size > 3 voxels) for other comparisons.

Results

Behavioral and Physiological Results

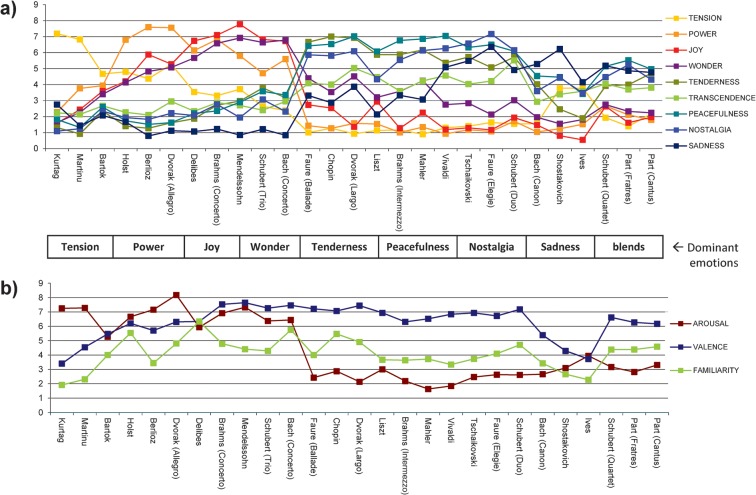

Subjective ratings demonstrated that each of the 9 emotions was successfully induced (mean ratings ≥ 5) by a different subset of the stimuli (see Fig. 1a). Average ratings for arousal, valence, and familiarity are shown in Figure 1b. Familiarity was generally low for all pieces (ratings < 5). The musical pieces were evaluated similarly by all participants for all categories (intersubject correlations mean r = 0.464, mean Cronbach’s alpha = 0.752; see Supplementary Table S4), and there was high reliability between the participants (intraclass correlation mean r = 0.924; see Supplementary Table S4). Results obtained in the behavioral experiment and during fMRI scanning were also highly correlated for each emotion category (mean r = 0.896; see Supplementary Table S3), indicating that the music induced similar affective responses inside and outside the scanner.

Figure 1.

Behavioral evaluations. Emotion ratings were averaged over all subjects (n = 31) in the preexperiment and the fMRI experiment. (a) Emotion evaluations for each of the 9 emotion categories from the GEMS. (b) Emotion evaluations for the more general dimensions of arousal, valence, and familiarity. For illustrative purpose, musical stimuli are grouped according to the emotion category that tended to be most associated with each of them.

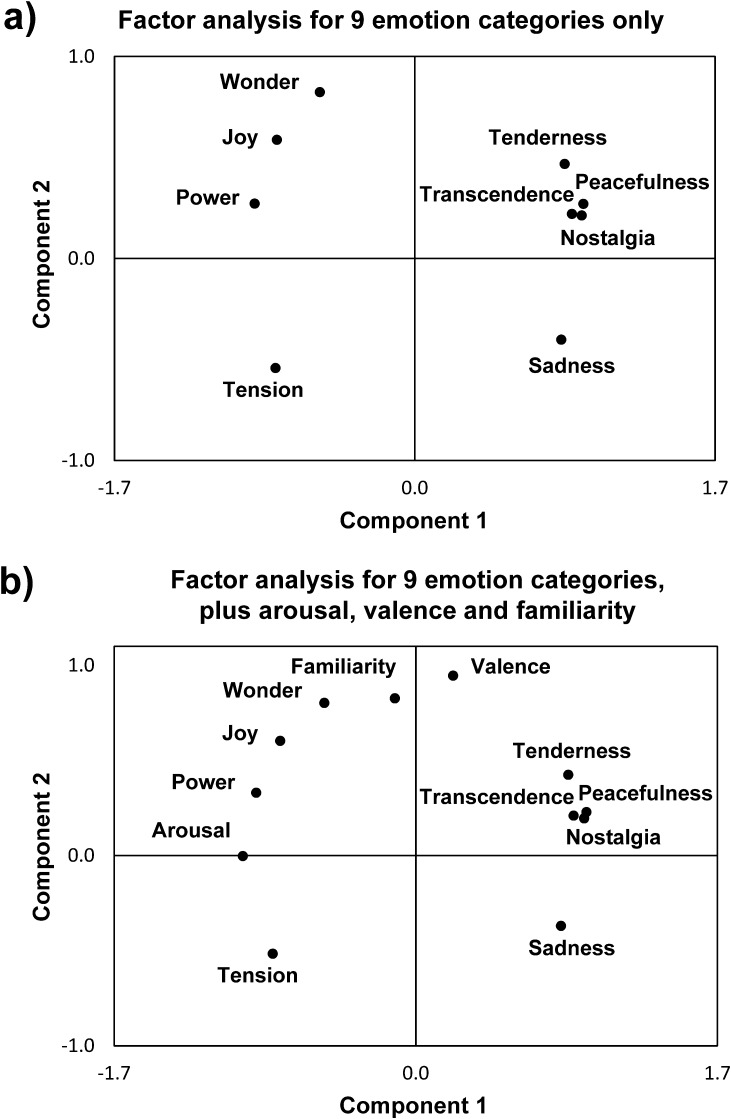

Moreover, as expected, ratings from both experiments showed significant correlations (positive or negative) between some emotion types (see Supplementary Table S5). A factor analysis was therefore performed on subjective ratings for the 9 emotions by pooling data from both experiments together (average across 31 subjects), which revealed 2 main components with an eigenvalue > 1 (Fig. 2a). These 2 components accounted for 92% of the variance in emotion ratings and indicated that the 9 emotion categories could be grouped into 4 different classes, corresponding to each of the quadrants defined by the factorial plot.

Figure 2.

Factorial analysis of emotional ratings. (a) Factor analysis including ratings of the 9 emotion categories from the GEMS. (b) Factor analysis including the same 9 ratings from the GEMS plus arousal, valence, and familiarity. Results are very similar in both cases and show 2 components (with an eigenvalue > 1) that best describe the behavioral data.

This distribution of emotions across 4 quadrants is at first sight broadly consistent with the classic differentiation of emotions in terms of “Arousal” (calm-excited axis for component 1) and “Valence” (positive–negative axis for component 2). Accordingly, adding the separate ratings of Arousal, Valence, and “Familiarity” in a new factorial analysis left the 2 main components unchanged. Furthermore, the position of Arousal and Valence ratings was very close to the main axes defining components 1 and 2, respectively (Fig. 2b), consistent with this interpretation. Familiarity ratings were highly correlated with the positive valence dimension, in keeping with other studies (see Blood and Zatorre 2001). However, importantly, the clustering of the positive valence dimension in 2 distinct quadrants accords with previous findings (Zentner et al. 2008) that positive musical emotions are not uniform but organized in 2 super-ordinate factors of “Vitality” (high arousal) and “Sublimity” (low arousal), whereas a third super-ordinate factor of “Unease” may subtend the 2 quadrants in the negative valence dimension. Thus, the 4 quadrants identified in our factorial analysis are fully consistent with the structure of music-induced emotions observed in behavioral studies with a much larger (n > 1000) population of participants (Zentner et al. 2008).

Based on these factorial results, for our main parametric fMRI analysis (see below), we grouped Wonder, Joy, and Power into a single class representing Vitality, that is, high Arousal and high Valence (A+V+), whereas Nostalgia, Peacefulness, Tenderness, and Transcendence were subsumed into another group corresponding to Sublimity, that is, low Arousal and high Valence (A−V+). The high Arousal and low Valence quadrant (A+V−) contained “Tension” as a unique category, whereas Sadness corresponded to the low Arousal and low Valence category (A−V−). Note that a finer differentiation between individual emotion categories within each quadrant has previously been established in larger population samples (Zentner et al. 2008) and was also tested in our fMRI study by performing additional contrast analyses (see below).
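The grouping of the 9 categories into 4 quadrants, as used for the parametric analysis, can be written down as a simple lookup. This is only an illustrative sketch: the names `QUADRANTS`, `quadrant_label`, and `quadrant_of` are hypothetical, the labels use an ASCII hyphen in place of the minus sign, and treating a score of exactly zero as positive is an arbitrary assumption.

```python
# Quadrant membership as described in the text (Vitality, Sublimity, Tension, Sadness).
QUADRANTS = {
    "A+V+": ("Wonder", "Joy", "Power"),
    "A-V+": ("Nostalgia", "Peacefulness", "Tenderness", "Transcendence"),
    "A+V-": ("Tension",),
    "A-V-": ("Sadness",),
}


def quadrant_label(arousal_score, valence_score):
    """Name the quadrant from the signs of two component scores (zero counts as positive)."""
    a = "A+" if arousal_score >= 0 else "A-"
    v = "V+" if valence_score >= 0 else "V-"
    return a + v


def quadrant_of(emotion):
    """Look up the quadrant to which an emotion category was assigned."""
    for label, members in QUADRANTS.items():
        if emotion in members:
            return label
    raise KeyError(emotion)


print(quadrant_of("Nostalgia"), quadrant_label(0.8, -0.5))
```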

Finally, our recordings of physiological measures confirmed that emotion experiences were reliably induced by music during fMRI. We found significant differences between emotion categories in respiration and heart rate (ANOVA for respiration rate: F11,120 = 5.537, P < 0.001, and heart rate: F11,110 = 2.182, P < 0.021). Post hoc tests showed that respiration rate correlated positively with subjective evaluations of high arousal (r = 0.237, P < 0.004) and heart rate with positive valence (r = 0.155, P < 0.012), respectively.

Functional MRI results

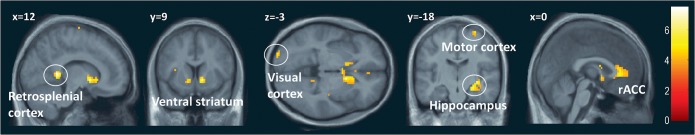

Main Effect of Music

For the general comparison of music relative to the pure tone sequences (main effect, t-test contrast), we observed significant activations in distributed brain areas, including several limbic and paralimbic regions such as the bilateral ventral striatum, posterior and anterior cingulate cortex, insula, hippocampal and parahippocampal regions, as well as associative extrastriate visual areas and motor areas (Table 1 and Fig. 3). This pattern accords with previous studies of music perception (Brown et al. 2004; Koelsch 2010) and demonstrates that our participants were effectively engaged by listening to classical music during fMRI.

Table 1.

Music versus control

| Region | Lateralization | BA | Cluster size | z-value | Coordinates |
| --- | --- | --- | --- | --- | --- |
| Retrosplenial cingulate cortex | R | 29 | 29 | 4.69 | 12, −45, 6 |
| Retrosplenial cingulate cortex | L | 29 | 25 | 4.23 | −12, −45, 12 |
| Ventral striatum (nucleus accumbens) | R | | 60 | 4.35 | 12, 9, −3 |
| Ventral striatum (nucleus accumbens) | L | | 14 | 3.51 | −12, 9, −6 |
| Ventral pallidum | R | | 3 | 3.23 | 27, −3, −9 |
| Subgenual ACC | L/R | 25 | 88 | 3.86 | 0, 33, 3 |
| Rostral ACC | R | 24 | * | 3.82 | 3, 30, 12 |
| Rostral ACC | R | 32 | * | 3.36 | 3, 45, 3 |
| Hippocampus | R | 28 | 69 | 4.17 | 27, −18, −15 |
| Parahippocampus | R | 34 | * | 3.69 | 39, −21, −15 |
| PHG | L | 36 | 8 | 3.62 | −27, −30, −9 |
| Middle temporal gyrus | R | 21 | 19 | 3.82 | 51, −3, −15 |
| Middle temporal gyrus | R | 21 | 3 | 3.34 | 60, −9, −12 |
| Anterior insula | L | 13 | 5 | 3.34 | −36, 6, 12 |
| Inferior frontal gyrus (pars triangularis) | R | 45 | 3 | 3.25 | 39, 24, 12 |
| Somatosensory cortex | R | 2 | 23 | 4.12 | 27, −24, 72 |
| Somatosensory association cortex | R | 5 | 3 | 3.32 | 18, −39, 72 |
| Motor cortex | R | 4 | 16 | 4.33 | 15, −6, 72 |
| Occipital visual cortex | L | 17 | 23 | 3.84 | −27, −99, −3 |
| Cerebellum | L | | 30 | 3.74 | −21, −45, −21 |

Note: ACC, anterior cingulate cortex; L, left; R, right. * indicates that the activation peak merges with the same cluster as the peak reported above.

Figure 3.

The global effect of music. Contrasting all music stimuli versus control stimuli highlighted significant activations in several limbic structures but also in motor and visual cortices. P ≤ 0.001, uncorrected.

Effects of Music-Induced Emotions

To identify the specific neural correlates of emotions from the 9-dimensional model, as well as other more general dimensions (arousal, valence, and familiarity), we calculated separate regression models in which the consensus emotion rating scores were entered as a parametric modulator of the blood oxygen level–dependent response to each of the 27 musical epochs (together with RMS values to control for acoustic effects of no interest), in each individual participant (see Wood et al. 2008). Random-effect group analyses were then performed using repeated-measure ANOVA and one-sample t-tests at the second level.
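The logic of a parametric modulator can be sketched as an ordinary least-squares fit in which the mean-centered rating and the mean-centered RMS value each receive their own regression weight. The code below is a simplified illustration under stated assumptions, not the authors' pipeline: it works on one response value per epoch, omits hemodynamic convolution and temporal filtering, and the names `center`, `solve_linear`, and `fit_parametric` as well as the synthetic numbers are hypothetical.

```python
def center(values):
    """Subtract the mean so the modulator is orthogonal to the constant term."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def solve_linear(a, b):
    """Solve a small square system a @ x = b by Gaussian elimination with pivoting."""
    n = len(b)
    aug = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def fit_parametric(response, rating, rms):
    """Return [intercept, beta_rating, beta_rms] from the normal equations."""
    cols = [[1.0] * len(response), center(rating), center(rms)]
    xtx = [[sum(u * v for u, v in zip(ci, cj)) for cj in cols] for ci in cols]
    xty = [sum(u * v for u, v in zip(ci, response)) for ci in cols]
    return solve_linear(xtx, xty)


# Synthetic, noise-free example with 27 epochs (all values invented).
rating = [float((7 * i) % 10) for i in range(27)]
rms = [0.1 * ((3 * i) % 5) for i in range(27)]
response = [2.0 + 0.5 * r - 1.0 * s for r, s in zip(center(rating), center(rms))]
print([round(b, 3) for b in fit_parametric(response, rating, rms)])
```

In this toy setting the weight on the rating regressor plays the role of the per-participant parametric effect that is carried to the group level, while the RMS weight absorbs variance related to sound energy.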

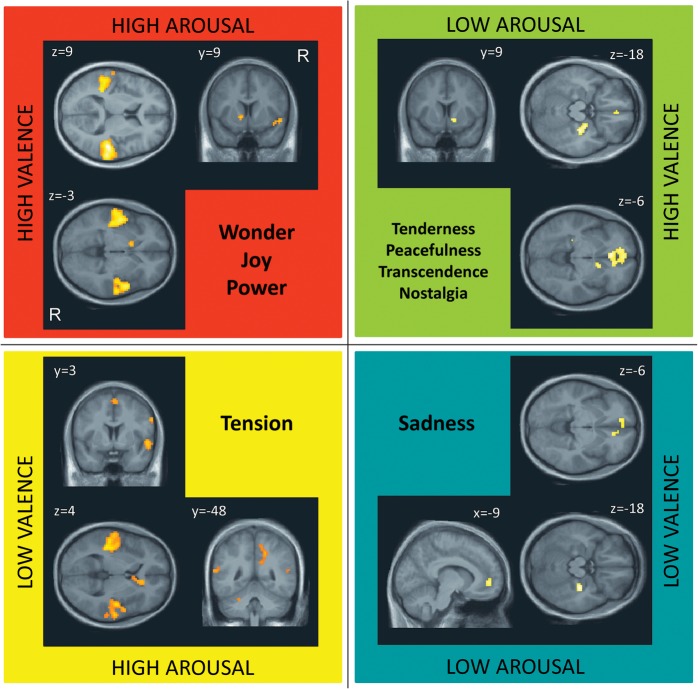

In agreement with the factor analysis of behavioral reports, we found that music-induced emotions could be grouped into 4 main classes that produced closely related profiles of ratings and similar patterns of brain activations. Therefore, we focused our main analyses on these 4 distinct classes: A+V+ representing Wonder, Joy, and Power; A−V+ representing Nostalgia, Peacefulness, Tenderness, and Transcendence; A+V− representing Tension, and A−V− Sadness. We first computed activation maps for each emotion category using parametric regression models in each individual and then combined emotions from the same class together in a second-level group analysis in order to compute the main effect for this class (Wood et al. 2008). This parametric analysis revealed the common patterns of brain activity for emotion categories in each of the quadrants identified by the factorial analysis of behavioral data (Fig. 4). To highlight the most distinctive effects, we retained only voxels exceeding a threshold of P ≤ 0.05 (FWE corrected for multiple comparisons).
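The group-level step can be illustrated, for a single voxel or ROI, as a one-sample t-test on per-participant parametric estimates. The sketch below makes simplifying assumptions that differ from the analysis described above: emotions of a class are combined by plain averaging rather than in a repeated-measure ANOVA, no correction for multiple comparisons is applied, and the names `one_sample_t` and `class_effect` and all numbers are hypothetical.

```python
import math
import statistics


def one_sample_t(values):
    """Return the t statistic (mean against zero) and its degrees of freedom."""
    n = len(values)
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1 denominator)
    return mean / (sd / math.sqrt(n)), n - 1


def class_effect(betas_by_emotion):
    """Average each participant's estimates over the emotions of a class, then test."""
    n_subjects = len(next(iter(betas_by_emotion.values())))
    per_subject = [
        statistics.fmean(betas[s] for betas in betas_by_emotion.values())
        for s in range(n_subjects)
    ]
    return one_sample_t(per_subject)


# Invented parametric estimates for 5 participants and the 3 A+V+ emotions.
example = {
    "Wonder": [0.2, 0.5, 0.1, 0.4, 0.3],
    "Joy": [0.3, 0.6, 0.2, 0.5, 0.1],
    "Power": [0.1, 0.4, 0.3, 0.6, 0.2],
}
t_value, dof = class_effect(example)
print(round(t_value, 2), dof)
```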

Figure 4.

Brain activations corresponding to dimensions of Arousal–Valence across all emotions. Main effects of emotions in each of the 4 quadrants that were defined by the 2 factors of Arousal and Valence. P ≤ 0.05, FWE corrected.

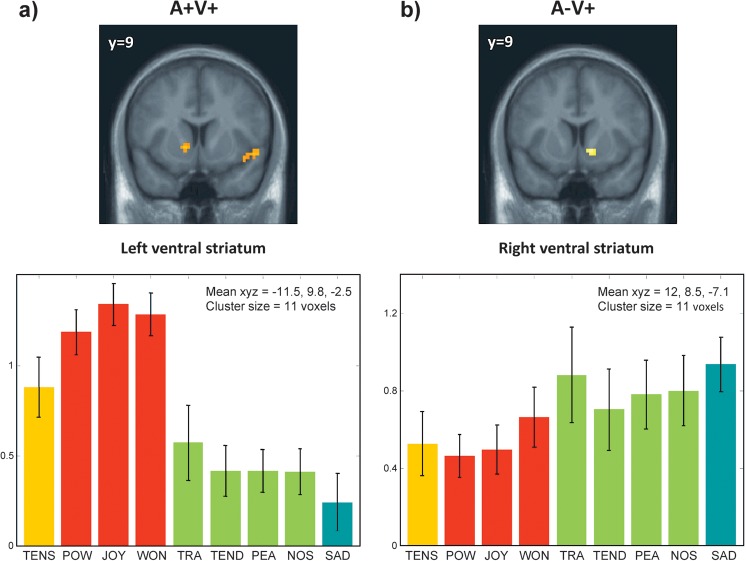

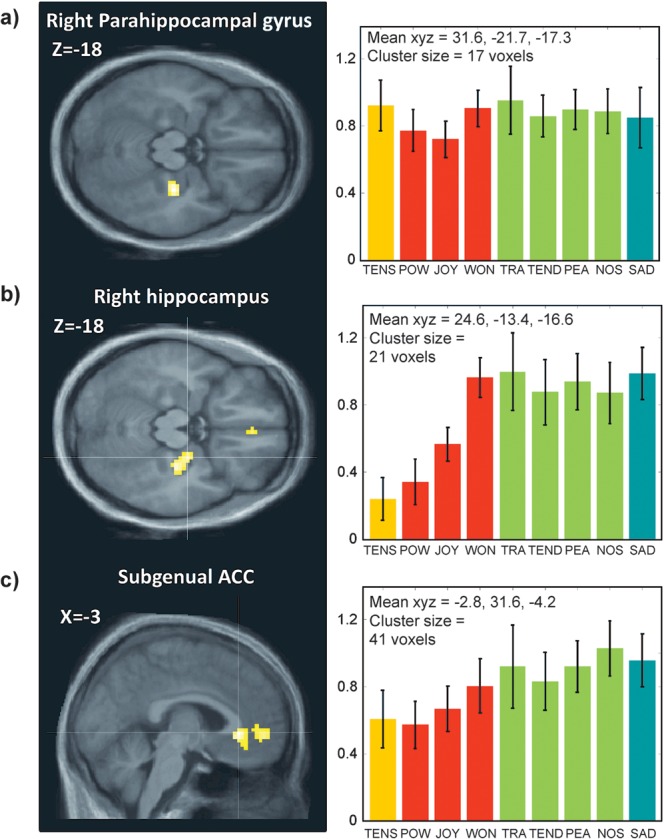

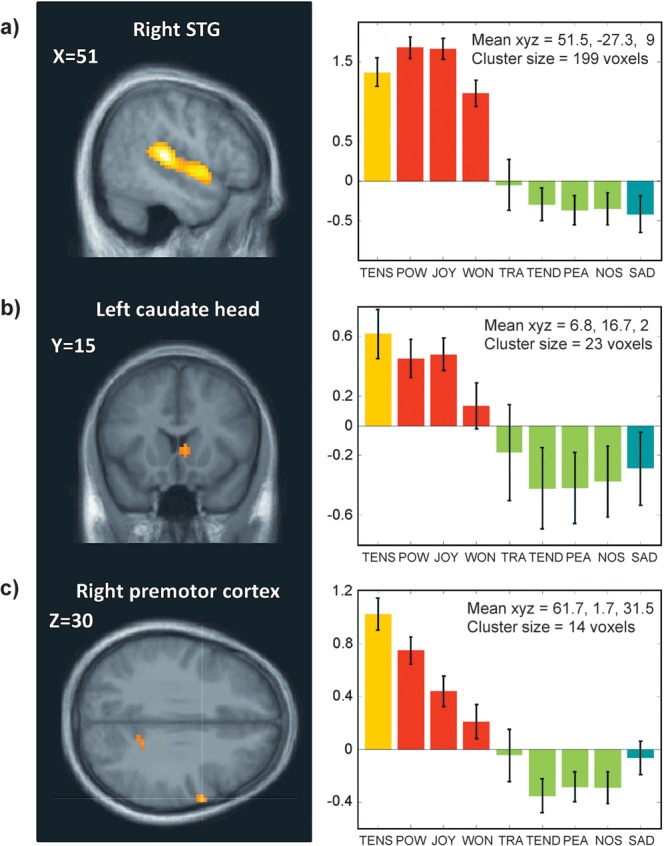

For emotions in the quadrant A+V+ (corresponding to Vitality), we found significant activations in bilateral STG, left ventral striatum, and insula (Table 2 and Fig. 4). For Tension, which was the unique emotion in quadrant A+V−, we obtained similar activations in bilateral STG but also selective increases in right PHG, motor and premotor areas, cerebellum, right caudate nucleus, and precuneus (Table 2 and Fig. 4). No activation was found in ventral striatum, unlike for the A+V+ emotions. By contrast, emotions included in the quadrant A−V+ (corresponding to Sublimity) showed significant increases in the right ventral striatum but also right hippocampus, bilateral parahippocampal regions, subgenual ACC, and medial orbitofrontal cortex (MOFC) (Table 2 and Fig. 4). The left striatum activated by A+V+ emotions was not significantly activated in this condition (Fig. 5). Finally, the quadrant A−V−, corresponding to Sadness, was associated with significant activations in right parahippocampal areas and subgenual ACC (Table 2 and Fig. 4).

Table 2.

Correlation with 4 different classes of emotion (quadrants)

| Region | Lateralization | BA | Cluster size | z-value | Coordinates |
| --- | --- | --- | --- | --- | --- |
| A+V− (Tension) | | | | | |
| STG | R | 41, 42 | 702 | Inf | 51, −27, 9 |
| STG | L | 41, 42 | 780 | 7.14 | −57, −39, 15 |
| Premotor cortex | R | 6 | 15 | 5.68 | 63, 3, 30 |
| Motor cortex | R | 4 | 20 | 5.41 | 3, 3, 60 |
| PHG | R | 36 | 27 | 6.22 | 30, −24, −18 |
| Caudate head | R | | 75 | 5.91 | 12, 30, 0 |
| Precuneus | R | 7, 31, 23 | 57 | 5.55 | 18, −48, 42 |
| Cerebellum | L | | 15 | 5.16 | −24, −54, −30 |
| A+V+ (Joy, Power, and Wonder) | | | | | |
| STG | R | 41, 42 | 585 | Inf | 51, −27, 9 |
| STG | L | 41, 42 | 668 | 7.34 | −54, −39, 15 |
| Ventral striatum | L | | 11 | 5.44 | −12, 9, −3 |
| Insula | R | | | 4.87 | 42, 6, −15 |
| A−V+ (Peacefulness, Tenderness, Nostalgia, and Transcendence) | | | | | |
| Subgenual ACC | L | 25 | 180 | 6.15 | −3, 30, −3 |
| Rostral ACC | L | 32 | * | 5.48 | −9, 48, −3 |
| MOFC | R | 12 | * | 5.04 | 3, 39, −18 |
| Ventral striatum | R | | 11 | 5.38 | 12, 9, −6 |
| PHG | R | 34 | 39 | 5.76 | 33, −21, −18 |
| Hippocampus | R | 28 | * | 5.62 | 24, −12, −18 |
| PHG | L | 36 | 11 | 5.52 | −27, −33, −9 |
| Somatosensory cortex | R | 3 | 143 | 5.78 | 33, −27, 57 |
| Medial motor cortex | R | 4 | 11 | 4.89 | 9, −24, 60 |
| A−V− (Sadness) | | | | | |
| PHG | R | 34 | 17 | 6.11 | 33, −21, −18 |
| Rostral ACC | L | 32 | 35 | 5.3 | −9, 48, −3 |
| Subgenual ACC | R | 25 | 11 | 5.08 | 12, 33, −6 |

Note: ACC, anterior cingulate cortex; L, left; R, right. * indicates that the activation peak merges with the same cluster as the peak reported above.

Figure 5.

Lateralization of activations in ventral striatum. Main effect for the quadrants A+V+ (a) and A−V+ (b) showing the distinct pattern of activations in the ventral striatum for each side. P ≤ 0.001, uncorrected. The parameters of activity (beta values and arbitrary units) extracted from these 2 clusters are shown for conditions associated with each of the 9 emotion categories (average across musical piece and participants). Error bars indicate the standard deviation across participants.

We also performed an additional analysis in which we regrouped the 9 categories into 3 super-ordinate factors of Vitality (Power, Joy, and Wonder), Sublimity (Peacefulness, Tenderness, Transcendence, Nostalgia, and Sadness), and Unease (Tension), each regrouping emotions with partly correlated ratings. The averaged consensus ratings for emotions in each of these factors were entered as regressors in one common model at the subject level, and one-sample t-tests were then performed at the group level for each factor. This analysis revealed activation patterns for each of the 3 super-ordinate classes of emotions that were very similar to those described above (see Supplementary Fig. S1). These data converge with our interpretation for the different emotion quadrants and further indicate that our initial approach based on separate regression models for each emotion category was able to identify the same set of activations despite different covariance structures in the different models.

Altogether, these imaging data show that distinct portions of the brain networks activated by music (Fig. 3) were selectively modulated as a function of the emotions experienced during musical pieces. Note, however, that individual profiles of ratings for different musical stimuli showed that different categories of emotions within a single super-ordinate class (or quadrant) were consistently distinguished by the participants (e.g., Joy vs. Power, Tenderness vs. Nostalgia; see Fig. 2a), as already demonstrated in previous behavioral and psychophysiological work (Zentner et al. 2008; Baltes et al. 2011). It is likely that these differences reflect more subtle variations in the pattern of brain activity for individual emotion categories, for example, a recruitment of additional components or a blend between components associated with different classes of networks. Accordingly, inspection of activity in specific ROIs showed distinct profiles for different emotions within a given quadrant (see Figs 7 and 8). For example, relative to Joy, Wonder showed stronger activation in the right hippocampus (Fig. 7b) but weaker activation in the caudate (Fig. 8b). For completeness, we also performed an exploratory analysis of individual emotions by directly contrasting one specific emotion category against all other neighboring categories in the same quadrant (e.g., Wonder > [Joy + Power]) when there was more than one emotion per quadrant (see Supplementary material). Although the correlations between these emotions might limit the sensitivity of such an analysis, these comparisons should reveal effects explained by one emotion regressor that cannot be explained to the same extent by another emotion even when the 2 regressors do not vary independently (Draper and Smith 1986). For the A+V+ quadrant, both Power and Wonder appeared to differ from Joy, notably with greater increases in the motor cortex for the former and in the hippocampus for the latter (see Supplementary material for other differences). In the A−V+ quadrant, Nostalgia and Transcendence appeared to differ from other similar emotions by inducing greater increases in cuneus and precuneus for the former but greater increases in right PHG and left striatum for the latter.

Figure 7.

Differential effects of emotion categories associated with low arousal. Parameter estimates of activity (beta values and arbitrary units) are shown for significant clusters (P < 0.001) in (a) PHG obtained for the main effect of Sadness in the A−V− quadrant, (b) right hippocampus found for the main effect of emotions in the A−V+ quadrant, and (c) subgenual ACC found for the main effect of emotions in the A−V+ quadrant. The average parameters of activity (beta values and arbitrary units) are shown for each of these clusters. Error bars indicate the standard deviation across participants.

Figure 8.

Differential effects of emotion categories associated with high arousal. Parameter estimates of activity (beta values and arbitrary units) are shown for significant clusters (P < 0.001) in (a) right STG correlating with the main effect of emotions in the A+V+ quadrant, (b) right caudate head, and (c) right premotor cortex correlating with the main effect of Tension in the A+V− quadrant. The average parameters of activity (beta values and arbitrary units) are shown for each of these clusters. Error bars indicate the standard deviation across participants.

Effects of Valence, Arousal, and Familiarity

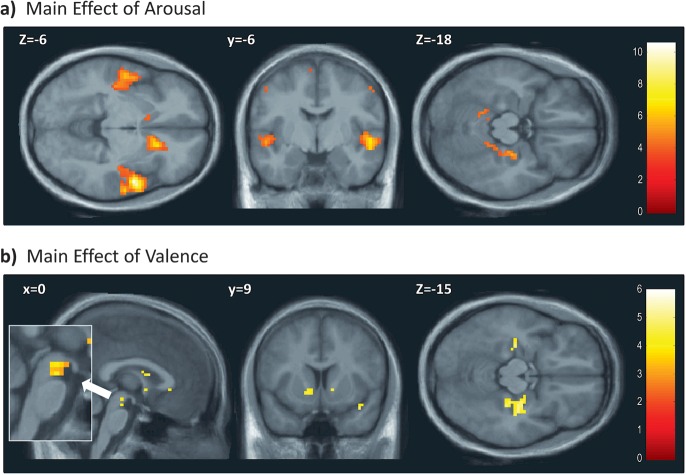

To support our interpretation of the 2 main components from the factor analysis, we performed another parametric regression analysis for the subjective evaluations of Arousal and Valence taken separately. This analysis revealed that Arousal activated the bilateral STG, bilateral caudate head, motor and visual cortices, cingulate cortex and cerebellum, plus right PHG (Table 3, Fig. 6a); whereas Valence correlated with bilateral ventral striatum, ventral tegmental area, right insula, subgenual ACC, but also bilateral parahippocampal gyri and right hippocampus (Table 3 and Fig. 6b). Activity in right PHG was therefore modulated by both arousal and valence (see Fig. 7a) and consistently found for all emotion quadrants except A+V+ (Table 2). Negative correlations with subjective ratings were found for Arousal in bilateral superior parietal cortex and lateral orbital frontal gyrus (Table 3); while activity in inferior parietal lobe, lateral orbital frontal gyrus, and cerebellum correlated negatively with Valence (Table 3). In order to highlight the similarities between the quadrant analysis and the separate parametric regression analysis for Arousal and Valence, we also performed conjunction analyses that essentially confirmed these effects (see Supplementary Table S6).

Table 3.

Correlation with ratings of arousal, valence, and familiarity

| Region | Lateralization | BA | Cluster size | z-value | Coordinates |
| --- | --- | --- | --- | --- | --- |
| ARO+ | | | | | |
| STG | L | 22, 40, 41, 42 | 650 | 5.46 | −63, −36, 18 |
| STG | R | 22, 41, 42 | 589 | 5.39 | 54, −3, −6 |
| Caudate head | R | | 212 | 4.42 | 18, 21, −6 |
| Caudate head | L | | * | 3.98 | −9, 18, 0 |
| PHG | R | 36 | 26 | 3.95 | 27, −18, −18 |
| Posterior cingulate cortex | R | 23 | 6 | 3.4 | 15, −21, 42 |
| Rostral ACC | L | 32 | 5 | 3.37 | −12, 33, 0 |
| Medial motor cortex | L | 4 | 4 | 3.51 | −6, −9, 69 |
| Motor cortex | R | 4 | 4 | 3.39 | 57, −6, 45 |
| Motor cortex | L | 4 | 3 | 3.29 | −51, −6, 48 |
| Occipital visual cortex | L | 17 | 6 | 3.28 | −33, −96, −6 |
| Cerebellum | L | | 21 | 3.59 | −15, −45, −15 |
| Cerebellum | L | | 4 | 3.2 | −15, −63, −27 |
| ARO− | | | | | |
| Superior parietal cortex | L | 7 | 19 | 3.96 | −48, −42, 51 |
| Superior parietal cortex | R | 7 | 12 | 3.51 | 45, −45, 51 |
| Lateral orbitofrontal gyrus | R | 47 | 6 | 3.53 | 45, 42, −12 |
| VAL+ | | | | | |
| Insula | R | 13 | 8 | 3.86 | 39, 12, −18 |
| Ventral striatum (nucleus accumbens) | L | | 8 | 3.44 | −12, 9, −6 |
| Ventral striatum (nucleus accumbens) | R | | 3 | 3.41 | 12, 9, −3 |
| VTA | | | 2 | 3.22 | 0, −21, −12 |
| Subgenual ACC | | 33 | 4 | 3.28 | 0, 27, −3 |
| Hippocampus | R | 34 | 56 | 3.65 | 24, −15, −18 |
| PHG | R | 35 | * | 3.79 | 33, −18, −15 |
| PHG | L | 35 | 14 | 3.61 | −30, −21, −15 |
| Temporopolar cortex | R | 38 | 7 | 3.42 | 54, 3, −12 |
| Anterior STG | L | 22 | 10 | 3.57 | −51, −6, −6 |
| Motor cortex | R | 4 | 15 | 3.39 | 18, −9, 72 |
| VAL− | | | | | |
| Middle temporal gyrus | R | 37 | 3 | 3.35 | 54, −42, −9 |
| Lateral orbitofrontal gyrus | R | 47 | 3 | 3.24 | 39, 45, −12 |
| Cerebellum | R | | 14 | 4.57 | 21, −54, −24 |
| FAM+ | | | | | |
| Ventral striatum (nucleus accumbens) | L | | 16 | 3.88 | −12, 12, −3 |
| Ventral striatum (nucleus accumbens) | R | | 15 | 3.81 | 12, 9, −3 |
| VTA | L/R | | 2 | 3.4 | 0, −21, −18 |
| VTA | L | | 2 | 3.22 | −3, −18, −12 |
| PHG | R | 35 | 56 | 4 | 27, −27, −15 |
| Anterior STG | R | 22 | 14 | 3.9 | 54, 3, −9 |
| Anterior STG | L | 22 | 6 | 3.49 | −51, −3, −9 |
| Motor cortex | R | 4 | 50 | 4.03 | 24, −12, 66 |
| Motor cortex | L | 4 | 9 | 3.72 | −3, 0, 72 |

Note: ACC, anterior cingulate cortex; L, left; R, right. * indicates that the activation peak merges with the same cluster as the peak reported above.

Figure 6.

Regression analysis for arousal (a) and valence (b) separately. Results of second-level one-sample t-tests on activation maps obtained from a regression analysis using the explicit emotion ratings of arousal and valence separately. Main figures: P ≤ 0.001, uncorrected, inset: P ≤ 0.005, uncorrected.

In addition, the same analysis for subjective familiarity ratings identified positive correlations with bilateral ventral striatum, ventral tegmental area (VTA), PHG, insula, as well as anterior STG and motor cortex (Table 3). This is consistent with the similarity between familiarity and positive valence ratings found in the factorial analysis of behavioral reports (Fig. 2).

These results were corroborated by an additional analysis based on a second-level model where regression maps from each emotion category were included as 9 separate conditions. Contrasts were computed by comparing emotions between the relevant quadrants in the arousal/valence space of our factorial analysis. (A similar analysis using the loading of each musical piece on the 2 main axes of the factorial analysis [corresponding to Arousal and Valence] was found to be relatively insensitive, with significant differences between the 2 factors mainly found in STG and other effects observed only at a lower statistical threshold, presumably reflecting improper modeling of each emotion cluster by the average valence or arousal dimensions alone.) As predicted, the comparison of all emotions with high versus low Arousal (regardless of differences in Valence) confirmed a similar pattern of activations predominating in bilateral STG, caudate, premotor cortex, cerebellum, and occipital cortex (as found above when using the explicit ratings of arousal), whereas low versus high Arousal showed effects in bilateral hippocampi and parietal somatosensory cortex only at a lower threshold (P < 0.005, uncorrected). However, when contrasting categories with positive versus negative Valence, regardless of Arousal, we did not observe any significant voxels. This null finding may reflect the unequal comparison made in this contrast (7 vs. 2 categories), but also some inhomogeneity between these 2 distant positive groups in the 2-dimensional Arousal/Valence space (see Fig. 2), and/or a true dissimilarity between emotions in the A+V+ versus A−V+ groups. Accordingly, the activation of some regions such as ventral striatum and PHG depended on both valence and arousal (Figs 5 and 7a).

Overall, these findings converge to suggest that the 2 main components identified in the factor analysis of behavioral data could partly be accounted for by Arousal and Valence, but that these dimensions might not be fully orthogonal (as found in other stimulus modalities, e.g., see Winston et al. 2005) and might instead be more effectively subsumed in the 3 domain-specific super-ordinate factors described above (Zentner et al. 2008). We also examined F maps obtained with our RFX model based on the 9 emotion types, relative to a model based on arousal and valence ratings alone or a model including all 9 emotions plus arousal and valence. These F maps demonstrated more voxels with F values > 1 in the former than in the 2 latter cases (43 506 vs. 40 619 and 42 181 voxels, respectively) and stronger effects in all ROIs (such as STG, VMPFC, etc.). These data suggest that explained variance in the data was larger with a model including 9 distinct emotions.

Discussion

Our study reveals for the first time the neural architecture underlying the complex “aesthetic” emotions induced by music and goes in several ways beyond earlier neuroimaging work that focused on basic categories (e.g., joy vs. sadness) or dimensions of affect (e.g., pleasantness vs. unpleasantness). First, we defined emotions according to a domain-specific model that identified 9 categories of subjective feelings commonly reported by listeners with various music preferences (Zentner et al. 2008). Our behavioral results replicated a high agreement between participants in rating these 9 emotions and confirmed that their reports could be mapped onto a higher order structure with different emotion clusters, in agreement with the 3 higher order factors (Vitality, Unease, and Sublimity) previously shown to describe the affective space of these 9 emotions (Zentner et al. 2008). Vitality and Unease are partly consistent with the 2 dimensions of Arousal and Valence that were identified by our factorial analysis of behavioral ratings, but they do not fully overlap with traditional bi-dimensional models (Russell 2003), as shown by the third factor of Sublimity that constitutes a special kind of positive affect elicited by music (Juslin and Laukka 2004; Konecni 2008), and a clear differentiation of these emotion categories that is more conspicuous when testing large populations of listeners in naturalistic settings (see Scherer and Zentner 2001; Zentner et al. 2008; Baltes et al. 2011).

Secondly, our study applied a parametric fMRI approach (Wood et al. 2008) using the intensity of emotions experienced during different music pieces. This approach allowed us to map the 9 emotion categories onto brain networks that were similarly organized in 4 groups, along the dimensions of Arousal and Valence identified by our factorial analysis. Specifically, at the brain level, we found that the 2 factors of Arousal and Valence were mapped onto distinct neural networks, but some specificities or unique combinations of activations were observed for certain emotion categories with similar arousal or valence levels. Importantly, our parametric fMRI approach enabled us to take into account the fact that emotional blends are commonly evoked by music (Barrett et al. 2010), unlike previous approaches using predefined (and often binary) categorizations that do not permit several emotions to be present in one stimulus.

Vitality and Arousal Networks

A robust finding was that high and low arousal emotions correlated with activity in distinct brain networks. High-arousal emotions recruited bilateral auditory areas in STG as well as the caudate nucleus and the motor cortex (see Fig. 8). The auditory increases were not due to loudness because the average sound volume was equalized for all musical stimuli and entered as a covariate of no interest in all fMRI regression analyses. Similar effects have been observed for the perception of arousal in voices (Wiethoff et al. 2008), which correlates with STG activation despite identical acoustic parameters. We suggest that such increases may reflect the auditory content of stimuli that are perceived as arousing, for example, faster tempo and/or rhythmical features.

In addition, several structures in motor circuits were also associated with high arousal, including the caudate head within the basal ganglia, motor and premotor cortices, and even cerebellum. These findings further suggest that the arousing effects of music depend on rhythmic and dynamic features that can entrain motor processes supported by these neural structures (Chen et al. 2006; Grahn and Brett 2007; Molinari et al. 2007). It has been proposed that distinct parts of the basal ganglia may process different aspects of music, with dorsal sectors in the caudate recruited by rhythm and more ventral sectors in the putamen preferentially involved in processing melody (Bengtsson and Ullen 2006). Likewise, the cerebellum is crucial for motor coordination and timing (Ivry et al. 2002) but is also activated by musical auditory patterns (Grahn and Brett 2007; Lebrun-Guillaud et al. 2008). Here, we found a greater activation of motor-related circuits for highly pleasant and highly arousing emotions (A+V+) that typically convey a strong impulse to move or dance, such as Joy or Power. Power elicited even stronger increases in motor areas as compared with Joy, consistent with the fact that this emotion may enhance the propensity to strike the beat, as when hand clapping or marching synchronously with the music, for example. This is consistent with a predisposition of young infants to display rhythmic movement to music, particularly marked when they show positive emotions (Zentner and Eerola 2010a). Here, activations in motor and premotor cortex were generally maximal for feelings of Tension (associated with negative valence), supporting our conclusion that these effects are related to the arousing nature rather than pleasantness of music. Such motor activations in Tension are likely to reflect a high complexity of rhythmical patterns (Bengtsson et al. 2009) in musical pieces inducing this emotion.

Low-arousal emotions engaged a different network centered on hippocampal regions and VMPFC including the subgenual anterior cingulate. These correspond to limbic brain structures implicated in both memory and emotion regulation (Svoboda et al. 2006). Such increases correlated with the intensity of emotions of pleasant nature, characterized by tender and calm feelings, but also with Sadness that entails more negative feelings. This pattern therefore suggests that these activations were not only specific for low arousal but also independent of valence. However, Janata (2009) previously showed that the VMPFC response to music was correlated with both the personal autobiographical salience and the subjective pleasing valence of songs. This finding might be explained by the unequal distribution of negative valence over the 9 emotion categories and reflect the special character of musically induced sadness, which represents to some extent a rather pleasant affective state.

The VMPFC is typically recruited during the processing of self-related information and autobiographical memories (D'Argembeau et al. 2007), as well as introspection (Ochsner et al. 2004), mind wandering (Mason et al. 2007), and emotion regulation (Pezawas et al. 2005). This region also overlaps with default brain networks activated during resting state (Raichle et al. 2001). However, mental idleness or relaxation alone is unlikely to account for increases during low-arousal emotions because VMPFC was significantly more activated by music than by passive listening to computerized pure tones during control epochs (see Table 1), similar to the findings of Brown et al. (2004).

Our findings are therefore consistent with the idea that these regions may provide a hub for the retrieval of memories evoked by certain musical experiences (Janata 2009) and further demonstrate that these effects are associated with a specific class of music-induced emotions. Accordingly, a prototypical emotion from this class was Nostalgia, which is often related to the evocation of personally salient autobiographical memories (Barrett et al. 2010). However, Nostalgia did not evoke greater activity in these regions as compared with neighboring emotions (Peacefulness, Tenderness, Transcendence, and Sadness). Moreover, we used musical pieces from the classical repertoire that were not well known to our participants, such that effects of explicit memory and semantic knowledge were minimized.

However, a recruitment of memory processes in low-arousal emotions is indicated by concomitant activation of hippocampal and parahippocampal regions, particularly in the right hemisphere. The hippocampus has been found to activate in several studies on musical emotions, but with varying interpretations. It has been associated with unpleasantness, dissonance, or low chill intensity (Blood and Zatorre 2001; Brown et al. 2004; Koelsch et al. 2006, 2007; Mitterschiffthaler et al. 2007) but also with positive connotations (Brown et al. 2004; Koelsch et al. 2007). Our new results for a broader range of emotions converge with the suggestion of Koelsch (Koelsch et al. 2007; Koelsch 2010), who proposed a key role in tender affect, since we found consistent activation of the right hippocampus for low-arousal emotions in the A−V+ group. However, we found no selective increase for Tenderness compared with other emotions in this group, suggesting that hippocampal activity is more generally involved in the generation of calm and introspective feeling states. Although the hippocampus has traditionally been linked to declarative memory, recent evidence suggests a more general role for the formation and retention of flexible associations that can operate outside consciousness and without any explicit experience of remembering (Henke 2010). Therefore, we propose that hippocampus activation to music may reflect automatic associative processes that arise during absorbing states and dreaminess, presumably favored by slow auditory inputs associated with low-arousal music. This interpretation is consistent with “dreamy” being among the most frequently reported feeling states in response to music (Zentner et al. 2008, Table 2). Altogether, this particular combination of memory-related activation with low arousal and pleasantness might contribute to the distinctiveness of emotions associated with the super-ordinate class of Sublimity.

Whereas the hippocampus was selectively involved in low-arousal emotions, the right PHG was engaged across a broader range of conditions (see Fig. 7a). Indeed, activity in this region was correlated with the intensity of both arousal and valence ratings (Table 3) and found for all classes of emotions (Table 2), except Joy and Power (Fig. 7). These findings demonstrate that right PHG is not only activated during listening to music with unpleasant and dissonant content (Blood et al. 1999; Green et al. 2008), or to violations of harmony expectations (James et al. 2008), but also during positive low-arousal emotions such as Nostalgia and Tenderness as well as negative high-arousing emotions (i.e., Tension). Thus, PHG activity could not be explained in terms of valence or arousal dimensions alone. Given a key contribution of PHG to contextual memory and novelty processing (Hasselmo and Stern 2006; Henke 2010), its involvement in music perception and music-induced emotions might reflect a more general role in encoding complex auditory sequences that are relatively unpredictable or irregular, a feature potentially important for generating feelings of Tension (A+V− quadrant) as well as captivation (A−V+ quadrant)—unlike the more regular rhythmic patterns associated with A+V+ emotions (which induced the least activation in PHG).

Pleasantness and Valence Network

Another set of regions activated across several emotion categories included the mesolimbic system, that is, the ventral striatum and VTA, as well as the insula. These activations correlated with pleasant emotion categories (e.g., Joy and Wonder) and positive valence ratings (Table 3 and Fig. 6), consistent with other imaging studies on pleasant musical emotions (Blood et al. 1999; Blood and Zatorre 2001; Brown et al. 2004; Menon and Levitin 2005; Koelsch et al. 2006; Mitterschiffthaler et al. 2007). This accords with the notion that the ventral striatum and VTA, crucially implicated in reward processing, are activated by various pleasures like food, sex, and drugs (Berridge and Robinson 1998).

However, our ANOVA contrasting all emotion categories with positive versus negative valence showed no significant effects, indicating that no brain structure was activated in common by all pleasant music experiences independently of the degree of arousal. This further supports the division of positive emotions into 2 distinct clusters that cannot be fully accounted for by a simple bi-dimensional model. Thus, across the different emotion quadrants, striatum activity was not uniquely influenced by positive valence but was also modulated by arousal. Moreover, we observed a striking lateralization in the ventral striatum: pleasant high-arousal emotions (A+V+) induced significant increases in the left striatum, whereas pleasant low-arousal music (A−V+) preferentially activated the right striatum (see Fig. 5). This asymmetry might explain the lack of common activations to positive valence independent of arousal and further suggests that these 2 dimensions are not totally orthogonal at the neural level. Accordingly, previous work suggested that these 2 emotion groups correspond to distinct higher order categories of Vitality and Sublimity (Zentner et al. 2008). The nature of asymmetric striatum activation in our study is unclear since little is known about lateralization of subcortical structures. Distinct left versus right hemispheric contributions to positive versus negative affect have been suggested (Davidson 1992) but are inconsistently found during music processing (Khalfa et al. 2005) and cannot account for the current segregation “within” positive emotions. As the left and right basal ganglia are linked to language (Crinion et al. 2006) and prosody processing (Lalande et al. 1992; Pell 2006), respectively, we speculate that this asymmetry might reflect differential responses to musical features associated with the high- versus low-arousal positive emotions (e.g., distinct rhythmical patterns or melodic contours) and correspond to hemispheric asymmetries at the cortical level (Zatorre and Belin 2001).

In addition, the insula responded only to A+V+ emotions, whereas an area in MOFC was selectively engaged during A−V+ emotions. These 2 regions have also been implicated in reward processing and positive affect (O'Doherty et al. 2001; Anderson et al. 2003; Bechara et al. 2005). Taken together, these differences between the 2 classes of positive emotions may provide a neural basis for different kinds of pleasure evoked by music, adding support to a distinction between “fun” (positive valence/high arousal) and “bliss” (positive valence/low arousal), as also proposed by others (Koelsch 2010; Koelsch et al. 2010).

The only area uniquely responding to negative valence was the lateral OFC, which was, however, also correlated with low arousal (Table 3). No effect was observed in the amygdala, a region typically involved in processing threat-related emotions, such as fear or anger. This might reflect a lack of music excerpts effectively conveying imminent danger among our stimuli (Gosselin et al. 2005), although feelings of anxiety and suspense induced by scary music were subsumed in our category of Tension (Zentner et al. 2008). Alternatively, the amygdala might respond to brief events in music, such as harmonic transgressions or unexpected transitions (James et al. 2008; Koelsch et al. 2008), which were not captured by our fMRI design.

Emotion Blends

Aesthetic emotions are thought to often occur in blended form, perhaps because their triggers are less specific than the triggers of more basic adaptive responses to events of the real world (Zentner 2010). Indeed, when considering activation patterns in specific regions across conditions, some emotions seemed not to be confined to a single quadrant but showed some elements from adjacent quadrants (see Figs 5–8). For example, as already noted above, Power exhibited the same activations as other A+V+ categories (i.e., ventral striatum and insula) but stronger increases in motor areas similar to A+V− (Tension). By contrast, Wonder (in A+V+ group) showed weaker activation in motor networks but an additional increase in the right hippocampus, similar to A−V− emotions, whereas Transcendence combined effects of positive low arousal (A−V+) with components of high-arousal emotions, including greater activation in left striatum (like A+V+) and right PHG (like A+V−). We also found evidence for the proposal that Nostalgia is a mixed emotion associated with both joy and sadness (Wildschut et al. 2006; Barrett et al. 2010), since this category shared activations with other positive emotions as well as Sadness. However, Nostalgia did not clearly differ from neighboring emotions (Peacefulness and Tenderness) except for some effects in visual areas, possibly reflecting differences in visual imagery.

These findings provide novel support for the notion that musical and other aesthetic emotions may generate blends of simpler affective states (Hunter et al. 2008; Barrett et al. 2010). However, our data remain preliminary, and a direct comparison between neighboring categories using our parametric approach is limited by the correlations between ratings. Nonetheless, it is likely that a more graded differentiation of activations in the neural networks identified in our study underlies the finer distinctions between different categories of music-induced emotions. Employing a finer-grained temporal paradigm might yield a more subtle differentiation between all the emotion categories in further research.
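The limitation imposed by correlated ratings can be illustrated with a small simulation. The sketch below is not the analysis used in this study: it uses synthetic data only, and the function names (`variance_inflation`, `fit_two_regressors`, `beta_spread`) and all parameter values are hypothetical choices made for illustration. It assumes two standardized rating regressors with correlation r, of which only the first drives the simulated signal, and shows how the uncertainty of the estimated effect grows as r approaches 1.

```python
import math
import random
import statistics


def variance_inflation(r):
    """Variance inflation factor for one of two regressors with correlation r."""
    return 1.0 / (1.0 - r * r)


def fit_two_regressors(x1, x2, y):
    """Ordinary least squares (no intercept) for y ~ b1*x1 + b2*x2,
    solved through the 2x2 normal equations."""
    s11 = sum(a * a for a in x1)
    s22 = sum(b * b for b in x2)
    s12 = sum(a * b for a, b in zip(x1, x2))
    s1y = sum(a * c for a, c in zip(x1, y))
    s2y = sum(b * c for b, c in zip(x2, y))
    det = s11 * s22 - s12 * s12
    b1 = (s22 * s1y - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    return b1, b2


def beta_spread(r, n_scans=200, n_sims=300, seed=0):
    """Empirical standard deviation of the estimate for the first regressor
    across repeated synthetic experiments (all values are assumptions)."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_sims):
        x1 = [rng.gauss(0, 1) for _ in range(n_scans)]
        # x2 is built to correlate with x1 at level r (in expectation)
        x2 = [r * a + math.sqrt(1 - r * r) * rng.gauss(0, 1) for a in x1]
        # only x1 contributes to the simulated signal; unit-variance noise
        y = [a + rng.gauss(0, 1) for a in x1]
        estimates.append(fit_two_regressors(x1, x2, y)[0])
    return statistics.stdev(estimates)


if __name__ == "__main__":
    for r in (0.1, 0.5, 0.9):
        print(r, round(variance_inflation(r), 2), round(beta_spread(r), 3))
```

Under these assumptions, the spread of the estimate scales roughly with the square root of the variance inflation factor, so two categories whose ratings correlate strongly are much harder to separate than two weakly correlated ones, even when the underlying responses differ.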

Conclusions

Our study provides a first attempt to delineate the neural substrates of music-induced emotions using a domain-specific model with 9 distinct categories of affect. Our data suggest that these emotions are organized according to 2 main dimensions, which are only partly compatible with Arousal and Valence but more likely reflect a finer differentiation into 3 main classes (such as Vitality, Unease, and Sublimity). Our imaging findings primarily highlight the main higher order groups of emotions identified in the original model of Zentner et al. (2008), while a finer differentiation between emotion categories was found only for a few of them and will need further research to be substantiated.

These higher order affective dimensions were found to map onto brain systems shared with more basic, nonmusical emotions, such as reward and sadness. Importantly, however, our data also point to a crucial involvement of brain systems that are not primarily “emotional” areas, including motor pathways as well as memory (hippocampus and PHG) and self-reflective processes (ventral ACC). These neural components appear to overstep a strictly 2D affective space, as they were differentially expressed across various categories of emotion and showed frequent blends between different quadrants in the Arousal/Valence space. The recruitment of these systems may add further dimensions to subjective feeling states evoked by music, contributing to their impact on memory and self-relevant associations (Scherer and Zentner 2001; Konecni 2008) and thus provide a substrate for the enigmatic power and unique experiential richness of these emotions.

Funding

This work was supported in part by grants from the Swiss National Science Foundation (51NF40-104897) to the National Center of Competence in Research for Affective Sciences; the Société Académique de Genève (Fund Foremane); and a fellowship from the Lemanic Neuroscience Doctoral School.

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/

Acknowledgments

We thank Klaus Scherer and Didier Grandjean for valuable comments and discussions. Conflict of Interest: None declared.

References

- Anderson AK, Christoff K, Stappen I, Panitz D, Ghahremani DG, Glover G, Gabrieli JD, Sobel N. Dissociated neural representations of intensity and valence in human olfaction. Nat Neurosci. 2003;6:196–202. doi: 10.1038/nn1001.

- Baltes FR, Avram J, Miclea M, Miu AC. Emotions induced by operatic music: psychophysiological effects of music, plot, and acting: A scientist’s tribute to Maria Callas. Brain Cogn. 2011;76:146–157. doi: 10.1016/j.bandc.2011.01.012.

- Barrett FS, Grimm KJ, Robins RW, Wildschut T, Sedikides C, Janata P. Music-evoked nostalgia: affect, memory, and personality. Emotion. 2010;10:390–403. doi: 10.1037/a0019006.

- Bechara A, Damasio H, Tranel D, Damasio AR. The Iowa Gambling Task and the somatic marker hypothesis: some questions and answers. Trends Cogn Sci. 2005;9:159–162. doi: 10.1016/j.tics.2005.02.002.

- Bengtsson SL, Ullen F. Dissociation between melodic and rhythmic processing during piano performance from musical scores. Neuroimage. 2006;30:272–284. doi: 10.1016/j.neuroimage.2005.09.019.

- Bengtsson SL, Ullen F, Ehrsson HH, Hashimoto T, Kito T, Naito E, Forssberg H, Sadato N. Listening to rhythms activates motor and premotor cortices. Cortex. 2009;45:62–71. doi: 10.1016/j.cortex.2008.07.002.

- Berridge KC, Robinson TE. What is the role of dopamine in reward: hedonic impact, reward learning, or incentive salience? Brain Res Brain Res Rev. 1998;28:309–369. doi: 10.1016/s0165-0173(98)00019-8.

- Blood AJ, Zatorre RJ. Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proc Natl Acad Sci U S A. 2001;98:11818–11823. doi: 10.1073/pnas.191355898.

- Blood AJ, Zatorre RJ, Bermudez P, Evans AC. Emotional responses to pleasant and unpleasant music correlate with activity in paralimbic brain regions. Nat Neurosci. 1999;2:382–387. doi: 10.1038/7299.

- Brown S, Martinez MJ, Parsons LM. Passive music listening spontaneously engages limbic and paralimbic systems. Neuroreport. 2004;15:2033–2037. doi: 10.1097/00001756-200409150-00008.

- Büchel C, Holmes AP, Rees G, Friston KJ. Characterizing stimulus-response functions using nonlinear regressors in parametric fMRI experiments. Neuroimage. 1998;8:140–148. doi: 10.1006/nimg.1998.0351.

- Chen JL, Zatorre RJ, Penhune VB. Interactions between auditory and dorsal premotor cortex during synchronization to musical rhythms. Neuroimage. 2006;32:1771–1781. doi: 10.1016/j.neuroimage.2006.04.207.

- Crinion J, Turner R, Grogan A, Hanakawa T, Noppeney U, Devlin JT, Aso T, Urayama S, Fukuyama H, Stockton K, et al. Language control in the bilingual brain. Science. 2006;312:1537–1540. doi: 10.1126/science.1127761.

- D'Argembeau A, Ruby P, Collette F, Degueldre C, Balteau E, Luxen A, Maquet P, Salmon E. Distinct regions of the medial prefrontal cortex are associated with self-referential processing and perspective taking. J Cogn Neurosci. 2007;19:935–944. doi: 10.1162/jocn.2007.19.6.935.

- Davidson RJ. Anterior cerebral asymmetry and the nature of emotion. Brain Cogn. 1992;20:125–151. doi: 10.1016/0278-2626(92)90065-t.

- Draper NR, Smith H. Applied regression analysis. New York: John Wiley and Sons; 1986.

- Ekman P. An argument for basic emotions. Cogn Emot. 1992a;6:169–200.

- Engell AD, Haxby JV, Todorov A. Implicit trustworthiness decisions: automatic coding of face properties in the human amygdala. J Cogn Neurosci. 2007;19:1508–1519. doi: 10.1162/jocn.2007.19.9.1508.

- Evans P, Schubert E. Relationships between expressed and felt emotions in music. Musicae Scientiae. 2008;12:57–69.

- Gabrielson A, Juslin PN. Emotional expression in music. In: Davidson RJ, Scherer K, Goldsmith HH, editors. Handbook of affective sciences. New York: Oxford University Press; 2003. pp. 503–534.

- Gosselin N, Peretz I, Noulhiane M, Hasboun D, Beckett C, Baulac M, Samson S. Impaired recognition of scary music following unilateral temporal lobe excision. Brain. 2005;128:628–640. doi: 10.1093/brain/awh420.

- Grahn JA, Brett M. Rhythm and beat perception in motor areas of the brain. J Cogn Neurosci. 2007;19:893–906. doi: 10.1162/jocn.2007.19.5.893.

- Green AC, Baerentsen KB, Stodkilde-Jorgensen H, Wallentin M, Roepstorff A, Vuust P. Music in minor activates limbic structures: a relationship with dissonance? Neuroreport. 2008;19:711–715. doi: 10.1097/WNR.0b013e3282fd0dd8.

- Hasselmo ME, Stern CE. Mechanisms underlying working memory for novel information. Trends Cogn Sci. 2006;10:487–493. doi: 10.1016/j.tics.2006.09.005.

- Henke K. A model for memory systems based on processing modes rather than consciousness. Nat Rev Neurosci. 2010;11:523–532. doi: 10.1038/nrn2850.

- Hevner K. Experimental studies of the elements of expression in music. Am J Psychol. 1936;48:246–268.

- Hönekopp J. Once more: is beauty in the eye of the beholder? Relative contributions of private and shared taste to judgments of facial attractiveness. J Exp Psychol Hum Percept Perform. 2006;32:199–209. doi: 10.1037/0096-1523.32.2.199.

- Hunter PG, Schellenberg EG, Schimmack U. Mixed affective responses to music with conflicting cues. Cogn Emot. 2008;22:327–352.

- Ivry RB, Spencer RM, Zelaznik HN, Diedrichsen J. The cerebellum and event timing. Ann N Y Acad Sci. 2002;978:302–317. doi: 10.1111/j.1749-6632.2002.tb07576.x.

- James CE, Britz J, Vuilleumier P, Hauert CA, Michel CM. Early neuronal responses in right limbic structures mediate harmony incongruity processing in musical experts. Neuroimage. 2008;42:1597–1608. doi: 10.1016/j.neuroimage.2008.06.025.

- Janata P. The neural architecture of music-evoked autobiographical memories. Cereb Cortex. 2009;19:2579–2594. doi: 10.1093/cercor/bhp008.